Abstract

Purpose

To evaluate the test-retest repeatability of a computer-based contrast sensitivity (CS) test, the Spaeth/Richman contrast sensitivity (SPARCS) test, and to determine the effects of age and lens status on CS in normal eyes.

Study design

Prospective cross-sectional study.

Methods

The participants were assessed by use of the SPARCS test in each eye 3 times. The first 2 sessions were supervised, while the third was unsupervised. CS was determined for 5 areas of vision (central, superotemporal, superonasal, inferotemporal, and inferonasal) and combined to provide a total score. The test-retest repeatability was determined using Bland-Altman analysis and the intraclass correlation coefficient (ICC).

Results

Individuals aged between 10 and 87 years with a normal eye examination (n = 205) were enrolled. The total SPARCS scores (maximum possible score = 100) ranged from 86.37 (±1.09) (for those aged 20 to 29 years) to 70.71 (±2.64) (for those aged 80 to 87 years). When the SPARCS scores for the first 2 sessions were compared, the ICC was 0.79, and the repeated tests did not differ significantly (mean difference = −0.29, P = .491). The test-retest 95% limits of agreement (95% LoA) ranged from −11.07 to +11.35. When the supervised sessions were compared with the unsupervised session, the ICC was 0.80, and there was slight improvement in the CS scores during the unsupervised session (mean difference = −1.15, P = .0001). The test-retest 95% LoA ranged from −9.18 to +10.60. The CS declined with advanced age and increased cataract severity (P < .0001).

Conclusion

Strong agreement was found between repeated SPARCS scores. Older age and increased lens opacity were associated with decline in CS in 5 areas of the visual field. The SPARCS test provides reliable and reproducible assessment of CS in normal eyes.

Introduction

Contrast sensitivity (CS) is a valuable measure of a person’s ability to “perceive slight changes in luminance between regions which are not separated by definite borders” (Arden [1]). It is also a valuable predictor of functional vision. A reduction in CS may hinder a person’s ability to perform everyday tasks such as reading, walking, driving, climbing stairs, dialing telephone numbers, or recognizing road signs and faces [2,3,4,5,6]. It is also strongly correlated with self-reported visual status [7]. Patients with ocular disorders such as cataracts, glaucoma, age-related macular degeneration, and diabetic retinopathy often have significantly impaired CS [8,9,10,11,12].

Although visual acuity (VA) and CS correlate, the use of CS in an ophthalmic clinical examination may provide a more valuable assessment of a person’s vision than VA alone [13]. Visual acuity can adequately assess only high spatial frequency CS. A letter on the 20/20 line on a standard Snellen VA chart is equivalent to 30 cycles per degree and tests only central VA [14]. However, many real-world tasks are not performed in high contrast and may be dependent on peripheral visual function. The concept that contrast sensitivity changes with eccentricity has been previously studied [15, 16]. Low spatial frequency CS is an important predictor of a person’s visual function [3,4,5, 13, 17, 18]. If low spatial frequency CS is reduced, measuring the VA alone may underestimate the visual impairment. In fact, CS is better than central VA in predicting ability not only to discriminate real-world targets, such as faces and road signs, but also to perform daily tasks that require orientation and mobility, distance judgment, or reading [3,4,5, 13, 17,18,19]. Therefore, a test of low spatial frequency CS may be a clinically valuable tool to evaluate vision loss, especially in individuals who report poor visual function despite normal VA [20].

There are many validated CS tests that measure CS accurately. These tests commonly use letters or gratings. Letter tests such as the Pelli-Robson test and the Mars test are quick, reliable, and easy to understand [21, 22]. However, the results may be influenced by a person’s level of familiarity with the Latin alphabet, and these tests do not measure peripheral CS [23]. While the currently available grating tests eliminate the confounders of language, they have other flaws. For example, the Vistech and Functional Acuity Contrast Test (FACT) grating tests have poor reproducibility and reliability, presumably owing to having few answer choices [24,25,26]. As also seen in the Cambridge gratings test and Vector Vision’s CSV-1000, having few answer choices increases the likelihood that a participant may guess correctly [27, 28]. The Freiburg Visual Acuity and Contrast Test (FrACT) assesses central vision using a computer program available for download free of charge; the FrACT uses the Landolt C, Sloan letters, or contrast gratings to assess central VA and CS but does not assess peripheral CS [26,27,28,29]. The contrast sensitivity test offers 4 (contrast gratings test) to 10 (Sloan letters test) answer choices and has shown good repeatability, with 95% limits of agreement (LoA) of ±0.15 [30]. Many CS tests are presented in chart form; uneven lighting, surface reflections, and fading over time may impact the test results [26].

The Spaeth/Richman contrast sensitivity (SPARCS) test is an internet-based computer program that ascertains a person’s CS both centrally and peripherally. Because it uses contrast gratings, it does not require literacy or pattern recognition. The SPARCS test evaluates 4 peripheral regions and the central area in an unpredictable order and offers multiple answer choices, decreasing the chance of the individual memorizing the answers or guessing correctly. This test has been studied and validated for the clinical assessment of patients with cataracts, glaucoma, and age-related macular degeneration (AMD) [8,9,10, 31].

The goal of our study was to determine the test-retest repeatability of the SPARCS test, with and without supervision. In addition, we aimed to determine the effect of age and lens status on CS as measured by the SPARCS test. The data from this study will be used to develop a normative database for this test.

Patients and methods

Patients

Two hundred five healthy individuals free of ocular disease were enrolled. Informed consent was obtained from all the participants included in the study.

The participants included patients recruited from the Wills Eye Hospital Cataract and Primary Eye Care Service and volunteers from the Philadelphia area. Approximately 25 participants were recruited per decade of life (ages 10–19, 20–29, 30–39, 40–49, 50–59, 60–69, 70–79, and 80+ years). Patients aged 70 to 79 years or 80 years or older were combined into 1 group, for which 50 participants were recruited.

To ensure a normative database representative of the population in which we believe this test will be used, participants were excluded if they had any ocular disease that affected their VA or visual function such as glaucoma, AMD, or diabetic retinopathy. Additional exclusion criteria included best-corrected visual acuity (BCVA) of 20/50 or worse, cataract grade of 3+ or worse, posterior capsular opacity grade of 3+ or worse, refractive error greater than +6 or −6 D, and incisional eye surgery within 3 months before testing.

Clinical evaluation and CS assessment

The demographic information and systemic and ocular medical history were documented for each participant. All the participants were subsequently given an ophthalmic clinical assessment to determine if they had ocular disease or decreased vision. An ophthalmologist conducted external, biomicroscopic, and funduscopic examinations. The optic nerve was examined to determine the vertical cup-to-disc (C/D) ratio and the Disc Damage Likelihood Score (DDLS) [32].

Spaeth/Richman contrast sensitivity (SPARCS) test

The SPARCS test is accessed through the SPARCS website, https://www.sparcscontrastcenter.com/, which assigns each patient a unique ID and provides instructions for taking the test. The test can be performed on standard web-browsing software, using a monitor with a width of at least 22 cm and a height of at least 26.5 cm that is capable of 1024 × 768 resolution and 256 gray levels [9]. Variations in screen size can be accommodated by adjusting the size of the testing graphic using an integrated calibration function in accordance with the instructions provided.

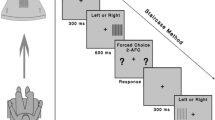

Two parallel horizontal lines and 2 parallel vertical lines divide the computer screen into 9 areas, with a rectangular central area (Fig. 1a). The SPARCS test evaluates CS in 5 regions of the visual field: superotemporal, superonasal, inferotemporal, inferonasal, and central. For each eye, the regions are referred to as the left upper quadrant (LUQ), left lower quadrant (LLQ), right upper quadrant (RUQ), right lower quadrant (RLQ), and central area. Standardization settings in the online test rescale the screen such that the center rectangle is 4.4 cm in width. The correct viewing distance is 50 cm from the screen. At this distance, the entire test area occupies 30° of vision horizontally and 23.5° of vision vertically; the central test area occupies 5° of vision horizontally and 3.5° of vision vertically [9].

Fig. 1 Main screen of the SPARCS test. (a) Two vertical lines and 2 horizontal lines intersect to form 9 rectangles. Contrast sensitivity is tested in the left upper quadrant, left lower quadrant, right upper quadrant, right lower quadrant, and central area. (b) A presentation of darkened vertical square wave gratings in the left upper quadrant during the SPARCS test.
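The viewing geometry described above can be checked with the standard visual-angle relation, angle = 2·atan(size / (2·distance)). The following is a minimal sketch in Python; the function names are hypothetical and are not part of the SPARCS software. It assumes a flat screen viewed head-on with the stimulus centred on the line of sight.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an extent of size_cm
    centred on the line of sight at a viewing distance of distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Extent (cm) on a flat screen that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# Values taken from the text: 4.4 cm central rectangle, 50 cm viewing distance.
central_width_deg = visual_angle_deg(4.4, 50.0)

# On-screen width implied by a 30 degree horizontal test area at 50 cm.
test_area_width_cm = size_for_angle_cm(30.0, 50.0)

print(f"Central rectangle width: {central_width_deg:.2f} degrees")
print(f"Test area width for 30 degrees: {test_area_width_cm:.2f} cm")
```

Under these assumptions, the 4.4 cm central rectangle subtends roughly 5° at 50 cm, which agrees with the horizontal extent quoted for the central test area.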

The nontested eye was covered with an occluder, and appropriate habitual correction was used. Testing was conducted in a room with fluorescent lighting and no windows to minimize glare and reflections. The light level in the room measured 775 lux. At the beginning of the test, the participants were instructed to focus on the screen’s central area and, when ready, to use a computer mouse to select “Right Eye” or “Left Eye” in the central area to indicate the eye being tested. An image of vertical dark bars (with a fixed spatial frequency of 0.4 cycles per degree) appeared at random in 1 of the 5 testing areas for 0.3 seconds, while the other 4 areas remained the same color as the background (Fig. 1b). The participant then selected the area in which the image had appeared by clicking on it. In the versions of the SPARCS test used in previous studies, a technician administered the test and selected (with a computer mouse) the area that the participant identified [9, 10]. In the current study, the version of the SPARCS test used was participant-administered: the participant identified and selected the testing area on his or her own. Before the next image could appear, the participant was prompted to fixate on the central area again by pressing a Click Here button. The next image was presented in 1 of the 5 test areas immediately after this click, ensuring that fixation was central at the time each image was presented. If the participant was unsure where an image had appeared, he or she was instructed to choose an area nevertheless, so that the SPARCS test remained a forced-choice test. The test defines consecutive clicking on the same quadrant more than 4 times as a refusal to guess, in which case the test is automatically terminated, no score is given, and an explanation is provided before retesting. During the course of the test, vertical square waves of varying contrast levels continued to appear in a random pattern.
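The refusal-to-guess rule described above can be illustrated with a short check over the sequence of selected areas. This is only a sketch of the stated rule, not the SPARCS implementation: the function name and the area labels are hypothetical, and it assumes that "more than 4 times" means a run of 5 or more identical consecutive selections.

```python
from typing import Iterable


def is_refusal_to_guess(selections: Iterable[str], max_repeats: int = 4) -> bool:
    """Return True if any area is selected more than max_repeats times in a row.

    selections: area labels in the order they were clicked (hypothetical
    labels such as "LUQ", "LLQ", "RUQ", "RLQ", "C").
    """
    previous = None
    run_length = 0
    for area in selections:
        # Extend the current run if the same area was clicked again,
        # otherwise start a new run of length 1.
        run_length = run_length + 1 if area == previous else 1
        previous = area
        if run_length > max_repeats:
            return True
    return False


print(is_refusal_to_guess(["LUQ", "C", "RLQ", "RLQ", "LLQ"]))
print(is_refusal_to_guess(["RUQ"] * 5))
```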

The SPARCS testing strategy uses a staircase method with reversals, as described by Richman and colleagues, to determine the CS threshold for each testing area, similar to the way in which visual field thresholds are determined. The range of contrast tested is from 100% to 0.45% (log contrast sensitivity 0.00–2.35) [9]. The test is completed once a contrast threshold has been determined for each of the 5 testing areas. The log-based score of each of the 5 testing areas is scaled out of 20, giving a maximum total SPARCS score of 100. SPARCS scores in individual areas can be converted to log CS using the equation: log CS score = (SPARCS score × 2.346353) / 20. Total SPARCS scores can be converted to log CS using the equation: log CS score = (SPARCS score × 2.346353) / 100.
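The two conversion equations above can be written as a small helper. This is a minimal sketch with hypothetical function names. The last function additionally assumes the conventional definition log CS = log10(1 / contrast), which is consistent with the stated range of 100% to 0.45% but is not spelled out in the text.

```python
MAX_LOG_CS = 2.346353  # log CS corresponding to a full score, from the equations above


def area_score_to_log_cs(area_score: float) -> float:
    """Convert a single-area SPARCS score (0 to 20) to log CS."""
    if not 0 <= area_score <= 20:
        raise ValueError("area score must be between 0 and 20")
    return area_score * MAX_LOG_CS / 20


def total_score_to_log_cs(total_score: float) -> float:
    """Convert a total SPARCS score (0 to 100) to log CS."""
    if not 0 <= total_score <= 100:
        raise ValueError("total score must be between 0 and 100")
    return total_score * MAX_LOG_CS / 100


def log_cs_to_contrast_percent(log_cs: float) -> float:
    """Threshold contrast in percent, assuming log CS = log10(1 / contrast)."""
    return 100 * 10 ** (-log_cs)


print(round(total_score_to_log_cs(80.27), 2))
print(round(log_cs_to_contrast_percent(area_score_to_log_cs(20)), 2))
```

For example, a total score of 80.27 corresponds to a log CS of about 1.88, and a full area score of 20 corresponds to a threshold contrast of about 0.45%.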

The participants performed the SPARCS test in each eye 3 times. The first 2 sessions were supervised, whilst the third was unsupervised. Before beginning the first session, a trained technician positioned the patient at the correct testing distance and explained the test. The technician remained in the room and observed the participant completing the first 2 tests. The participant was given a break of 5 to 15 minutes after the supervised tests. The third and final test was performed without observation.

Statistical analysis

The data were analyzed using SAS Analytics Pro software, version 9.2 (SAS Institute). Continuous variables were summarized by age group using means, standard deviations, medians, and ranges. Categorical variables were summarized using frequencies and percentages.

A linear repeated-measures model was used to estimate the differences between the supervised and unsupervised SPARCS scores, adjusting for region, eye, lens status, and age. The fixed effects were the supervision status (supervised vs unsupervised) and eye. An unstructured correlation structure was used to account for correlation among the repeated measurements from the same participant.

The test-retest repeatability was assessed using the intraclass correlation coefficient (ICC) and the Bland-Altman method. The ICC was calculated from a repeated-measures ANOVA model that included terms for the participant, eye, and session. The first ICC measured the agreement between the first and second supervised sessions, and the second ICC measured the agreement between the average of the 2 supervised sessions and the single unsupervised session. The ICC was constructed from the variance components as the ratio (participant + eye) / (participant + eye + session). The Bland-Altman method was used to plot the difference between 2 tests against the average of the 2 tests [33, 34]. This method was used to determine the bias (the average of the differences between the 2 tests), the standard deviation of the differences, and the 95% LoA, which are the lower and upper bounds within which 95% of the differences between repeated sessions are expected to fall.
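The Bland-Altman quantities named above (bias, standard deviation of the differences, and 95% LoA) can be sketched as follows. This is an illustration using the Python standard library, not the SAS analysis used in the study. The function name is hypothetical, the scores are made-up example data, and the 95% LoA are computed with the usual bias ± 1.96 × SD formula.

```python
import statistics
from typing import Dict, List, Sequence


def bland_altman(first: Sequence[float], second: Sequence[float]) -> Dict[str, object]:
    """Bias, SD of differences, and 95% limits of agreement for paired scores."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    # Difference (second minus first) and mean for each pair; these are the
    # y and x coordinates of a Bland-Altman plot.
    diffs: List[float] = [b - a for a, b in zip(first, second)]
    means: List[float] = [(a + b) / 2 for a, b in zip(first, second)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation
    return {
        "bias": bias,
        "sd": sd,
        "loa_lower": bias - 1.96 * sd,
        "loa_upper": bias + 1.96 * sd,
        "means": means,
        "diffs": diffs,
    }


# Made-up total SPARCS scores for 5 hypothetical participants.
session_1 = [80, 78, 85, 90, 72]
session_2 = [82, 77, 85, 88, 75]
result = bland_altman(session_1, session_2)
print(f"bias = {result['bias']:.2f}, SD = {result['sd']:.2f}")
print(f"95% LoA = {result['loa_lower']:.2f} to {result['loa_upper']:.2f}")
```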

The procedures of this prospective cross-sectional study were approved by the institutional review board (IRB) of the Wills Eye Hospital, and the study was conducted in accordance with the Declaration of Helsinki and the Health Insurance Portability and Accountability Act (HIPAA).

Results

Data were analyzed for 410 eyes from 205 participants. Table 1 summarizes the demographic variables and baseline characteristics of the study participants, as well as the mean (SE) overall SPARCS scores, using the least square means from the ANOVA model for each age group studied. The mean duration of all the SPARCS testing sessions conducted was 2 minutes and 59 seconds.

The mean (SD) overall SPARCS scores were 80.27 (7.81) and 80.56 (8.30) for supervised tests 1 and 2, respectively, and 81.42 (7.56) for the unsupervised test. When the overall SPARCS scores were converted to log CS, the mean (SD) scores were 1.88 (0.18) for supervised test 1, 1.89 (0.19) for supervised test 2, and 1.91 (0.18) for the unsupervised test.

The repeated-measures ANOVA results for differences in the overall SPARCS test scores among the 2 supervised test sessions and the 1 unsupervised test session were as follows: the mean difference (95% confidence interval; P value) between supervised tests 1 and 2 was −0.29 (−0.79, 0.21; P = .491); between supervised test 1 and the unsupervised test, −1.15 (−1.70, −0.61; P = .0001); between supervised test 2 and the unsupervised test, −0.86 (−1.41, −0.32; P = .0051); and between the average of supervised tests 1 and 2 vs the unsupervised test, −1.02 (−1.50, −0.53; P < .0001) (Table 2). Table 3 shows the 95% LoA for the test-retest of the individual SPARCS area scores as well as the overall score.

Figure 2 shows the plots for the mean overall SPARCS score by (a) age in years and (b) lens status. The results indicated that CS declines with advanced age and increased cataract severity.

When compared with the 10- to 19-years age group, the overall SPARCS scores were significantly lower for all age groups of 60 years and older (P = .0111 for 60–69 years, P < .0001 for 70–79 years and 80+ years; pairwise post hoc tests). When the 20- to 29-years age group was used as a reference comparison, the overall SPARCS scores were significantly lower for all age groups of 50 years and older (P = .0290 for 50–59 years; P = .0002 for 60–69 years; P < .0001 for 70–79 years and 80+ years; Tukey-adjusted post hoc test).

When compared with the Clear Lens group, significant decreases in CS were found for lens statuses of Trace/1+, 2+, and posterior chamber intraocular lens (PCIOL) (P < .0001 for all, pairwise post hoc test). A significant difference in CS was also found when Trace/1+ was compared with 2+ (P = .0039, Tukey-adjusted post hoc test), whereas the difference between Trace/1+ and PCIOL was of borderline significance (P = .0535, Tukey-adjusted post hoc test). No significant difference was found when PCIOL was compared with 2+ (P = .9808, Tukey-adjusted post hoc test). A Spearman correlation analysis showed a significant correlation between age and increase in cataract severity level (ρ = 0.80, P < .0001, using the more severe cataract/lens status per participant).

Figure 3 summarizes the Bland-Altman method of measuring agreement between the first and second supervised tests (left panel, 95% LoA −11.07 to +11.35) and between the average of the supervised tests and the single unsupervised test (right panel, 95% LoA −9.18 to +10.60). The Bland-Altman plot suggests decreasing dispersion for the supervised tests versus the unsupervised test.

Fig. 3 Plots summarizing the Bland-Altman method of measuring agreement (a) between supervised test 1 and supervised test 2 (the lower and upper limits of agreement are −11.07 and +11.35, respectively) and (b) between the average of the supervised tests and the unsupervised test (the lower and upper limits of agreement are −9.18 and +10.60, respectively) for 1 eye per patient. The average of the 2 methods is presented against the difference between the 2 methods (unsupervised minus supervised, or supervised test 2 minus supervised test 1).

When agreement between the testing sessions was evaluated, the ICC showed good agreement between the testing sessions. When the first and the second supervised sessions were compared, the ICC was 0.79. When the averages of the 2 supervised sessions and the single unsupervised session were compared, the ICC was 0.80.

Discussion

In this study, we established a normative database for an internet-based contrast sensitivity test, the SPARCS test.

The SPARCS test is accessible in an increasing number of locations worldwide. It requires only a computer with internet access, a standard web browser, and a ruler to measure the distance between the patient and the monitor. It does not require special equipment, software, or technical training since the program includes concise instructions. Unlike a chart test, it is not vulnerable to fading print. Because the SPARCS test is delivered from a central server, software updates are instantaneously effective wherever the test is used and do not depend on the end-user’s ability or willingness to install them. No matter where the SPARCS test is used, all the SPARCS data are collected in a central database, with integrated privacy safeguards, thereby opening a path to significant research potential.

Because the SPARCS test assesses contrast using gratings, it is not influenced by the level of the test-taker’s familiarity with the Latin alphabet, as a letter test may be. The program has multiple answer choices, reducing the chance that test-takers will guess correctly, thereby improving its reproducibility and reliability. However, a possible limitation of the test is the testing of CS at a fixed spatial frequency. Although testing CS at multiple spatial frequencies may provide useful information about an individual’s visual function, it is time-consuming, which would particularly affect the SPARCS test because the test already measures CS in 5 distinct areas of the visual field [26, 35].

The Wills Eye Hospital Glaucoma Research Center has previously conducted studies evaluating the reliability of the SPARCS test in populations of patients with eye diseases. In a population of glaucoma patients, Richman and colleagues found a SPARCS score of less than 70 to have a sensitivity of 79.7% and a specificity of 92.8% for identifying glaucoma [9]. Faria and colleagues found the SPARCS test to be a valuable tool in assessing CS in patients with AMD, who had significantly lower scores than those of the controls [10]. A recent longitudinal study has reported that SPARCS scores significantly correlate with retinal nerve fiber layer thickness as measured by optical coherence tomography and work as a predictive tool of structural glaucoma damage [31].

In agreement with previous studies, our study confirmed that CS diminishes with advanced age and increased severity of cataracts (Fig. 2) [8, 36,37,38,39,40]. As seen in Figure 2a, the clustering around the regression line is tight. We also found that pseudophakic participants had lower CS than did participants with cataracts. Since only 7.3% of the participants were pseudophakic, it is possible that these findings may not be confirmed in larger samples. Some previous studies have found that CS improves with extracapsular cataract extraction with implantation of an intraocular lens, whilst others have found that patients with intraocular lens implants have lower CS than those of controls matched by age, sex, and visual acuity [40,41,42]. It is known that CS decreases with age, at least in part owing to retinal changes, which could partly explain the results found in our sample [43, 44]. It is also possible that any posterior capsular opacification graded under 3+ may have contributed to the relatively reduced CS. However, because of the lack of conclusive findings in the literature, CS in pseudophakic eyes is an important aspect to address in further studies.

In this study’s population of normal eyes, we found the SPARCS test to have strong agreement between repeated tests. The total SPARCS score had 95% LoA ranging between ±9.18 and ±10.60 for the repeated tests, which are values corresponding to approximately ±9.2% to ±10.6% (on a SPARCS scale of 0–100). When converting the SPARCS scores back to log-based scores, these LoA are ±0.21 and ±0.24, which are similar to previously reported LoA for the Pelli-Robson test, which ranged from ±0.17 to ±0.19 [30, 45]. The repeatability of both tests seems clinically acceptable.

In addition, no significant difference was found between the first and second supervised tests. Therefore, analysis of the test results demonstrates that with minimal instruction, the SPARCS test can be reliably performed by the patient, suggesting a potential for broader self-administered testing.

The difference between the supervised and unsupervised scores was significant, with better scores for the unsupervised test (81.42 vs 80.40). However, such a small difference is not likely to be clinically significant. The notion that results of visual acuity tests improve after repeated testing has been studied and demonstrated [46]. We hypothesize that the scores may have increased owing to a possible learning curve leading to a better understanding of how to take the test or that the participants may have felt more comfortable taking the test without an observer watching them.

The comparability of the supervised and unsupervised scores therefore supports the usefulness of the SPARCS test outside of a standardized doctor’s office and without the supervision of a trained technician. Previous studies have investigated the benefit of supervision and found that it may not need to be a consistent requirement. Van Coevorden and colleagues found supervision of visual field testing to be necessary only if patients were older than 70 years, had less than a grade-12 educational background, or had previous false-positives or fixation losses [47]. Another study found no difference in the mean deviation, pattern standard deviation, fixation losses, false-positives, or false-negatives between continuous and intermittent monitoring [48]. Patients should be able to assess their visual function and CS using the SPARCS test at their convenience. The improvement in scores should be considered when interpreting the results.

One challenge of performing the SPARCS test is possible patient fatigue. It may take 5 to 10 minutes to test both eyes. In this study, there was minimal fatigue. If the participants felt fatigue, they could restart the test. Another challenge is ensuring that the ambient lighting and monitor luminance levels are adequately uniform in different testing environments. We did not perform a gamma correction to adjust for the low contrast levels or to measure the screen luminance. However, minor variations in luminance may not be significant in basic screening applications, an important target application for the SPARCS test. In addition, requiring users to standardize low levels of contrast undercuts the goal of providing broad accessibility. Our goal is to identify ocular disease using a test that is easy to use and accessible. We do not want to restrict use of this test to standardized testing environments. Where high levels of precision are desired, inexpensive photometers can be used to calibrate light levels. Future studies should assess the effect of luminance on the SPARCS test.

Current clinical care is heavily influenced by tests to help determine whether a patient’s condition is stable or changing. Knowing the test-retest variability of an instrument is essential to know if a change is real and not fluctuation or noise in the test itself. In addition, clinicians need to use judgment regarding the number of times a test has been taken when interpreting the results [49, 50]. For example, if someone took the SPARCS test 4 times with scores of 79, 80, 78, and 78 and then took the test 4 times a year later with scores of 72, 73, 73, and 72, technically, the mean scores are not beyond the 95% test-retest values of the SPARCS test; however, one can be confident the change is real because of the number of times the test was taken.
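The reasoning in the example above can be made concrete with a rough calculation. The sketch below rests on simplifying assumptions that are not taken from the study: measurement errors are treated as independent with equal variance across tests, there is no learning effect, and the single-test 95% LoA half-width is taken as half the span of the reported supervised test-retest LoA (−11.07 to +11.35). Under those assumptions, the limits for a difference between two means of n tests each shrink by a factor of √n. The function name is hypothetical.

```python
import math
import statistics


def loa_half_width_for_means(single_test_half_width: float, n_tests: int) -> float:
    """Approximate 95% LoA half-width for the difference between two means,
    each averaged over n_tests, assuming independent, equal-variance errors."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return single_test_half_width / math.sqrt(n_tests)


baseline = [79, 80, 78, 78]   # scores from the example in the text
follow_up = [72, 73, 73, 72]  # scores one year later

change = statistics.mean(follow_up) - statistics.mean(baseline)

# Half the span of the reported supervised test-retest LoA.
single_half_width = (11.35 - (-11.07)) / 2
adjusted_half_width = loa_half_width_for_means(single_half_width, len(baseline))

exceeds_single = abs(change) > single_half_width
exceeds_adjusted = abs(change) > adjusted_half_width

print(f"change in mean score: {change:.2f}")
print(f"single-test half-width: {single_half_width:.2f}")
print(f"half-width for means of 4 tests: {adjusted_half_width:.2f}")
print(f"exceeds single-test limits: {exceeds_single}; exceeds adjusted limits: {exceeds_adjusted}")
```

With these inputs, the change in the mean score lies within the single-test limits but outside the narrower limits that apply to means of 4 tests, which matches the qualitative point made in the text.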

In conclusion, the present study provides information about a novel test of CS, an essential foundation of visual function. The SPARCS test is a reliable test of CS and, with instruction, can be performed without supervision. Given its intrinsic accessibility and minimal hardware and software requirements, the SPARCS test may be suitable as a standard tool for evaluating and monitoring CS in individuals with either normal eyes or visual impairment at home, in offices, and wherever there is internet access. The establishment of a normative database should assist in differentiating between normal and unhealthy eyes in the context of the clinic and future research.

References

Arden GB. The importance of measuring contrast sensitivity in cases of visual disturbance. Br J Ophthalmol. 1978;62:198–209.

West SK, Rubin GS, Broman AT, Munoz B, Bandeen-Roche K, Turano K. How does visual impairment affect performance on tasks of everyday life? The SEE Project. Salisbury Eye Evaluation. Arch Ophthal. 2002;120:774–80.

Owsley C, Sekuler R, Boldt C. Aging and low-contrast vision: face perception. Invest Ophthalmol Vis Sci. 1981;21:362–5.

Evans DW, Ginsburg AP. Contrast sensitivity predicts age-related differences in highway-sign discriminability. Hum Factors. 1985;27:637–42.

Owsley C, Sloane ME. Contrast sensitivity, acuity, and the perception of ‘real-world’ targets. Br J Ophthalmol. 1987;71:791–6.

Kaleem MA, Munoz BE, Munro CA, Gower EW, West SK. Visual characteristics of elderly night drivers in the Salisbury Eye Evaluation Driving Study. Invest Ophthalmol Vis Sci. 2012;53:5161–7.

El-Gasim M, Munoz B, West SK, Scott AW. Associations between self-rated vision score, vision tests, and self-reported visual function in the Salisbury Eye Evaluation Study. Invest Ophthalmol Vis Sci. 2013;54:6439–45.

Gupta L, Cvintal V, Delvadia R, Sun Y, Erdem E, Zangalli C, et al. SPARCS and Pelli-Robson contrast sensitivity testing in normal controls and patients with cataract. Eye (Lond). 2017;31:753–61.

Richman J, Zangalli C, Lu L, Wizov SS, Spaeth E, Spaeth GL. The Spaeth/Richman contrast sensitivity test (SPARCS): design, reproducibility and ability to identify patients with glaucoma. Br J Ophthalmol. 2015;99:16–20.

Faria BM, Duman F, Zheng CX, Waisbourd M, Gupta L, Ali M, et al. Evaluating contrast sensitivity in age-related macular degeneration using a novel computer-based test, the Spaeth/Richman Contrast Sensitivity test. Retina. 2015;35:1465–73.

Kuyk T, Elliott JL. Visual factors and mobility in persons with age-related macular degeneration. J Rehabil Res Dev. 1999;36:303–12.

Stavrou EP, Wood JM. Letter contrast sensitivity changes in early diabetic retinopathy. Clin Exp Optom. 2003;86:152–6.

Rubin GS, Roche KB, Prasada-Rao P, Fried LP. Visual impairment and disability in older adults. Optom Vis Sci. 1994;71:750–60.

Ginsburg AP, Hendee HW. Quantification of visual capability. In: Hendee WR, Wells P, editors. The Perception of Visual Information. New York, NY: Springer; 1997. p. 57–83.

Rovamo J, Virsu V, Nasanen R. Cortical magnification factor predicts the photopic contrast sensitivity of peripheral vision. Nature. 1978;271:54–6.

Rosen R, Lundstrom L, Venkataraman AP, Winter S, Unsbo P. Quick contrast sensitivity measurements in the periphery. J Vis. 2014;14:3.

Marron JA, Bailey IL. Visual factors and orientation-mobility performance. Am J Optom Physiol Optics. 1982;59:413–26.

Leat SJ, Woodhouse JM. Reading performance with low vision aids: relationship with contrast sensitivity. Ophthalmic Physiol Opt. 1993;13:9–16.

Ginsburg AP, Evans DW, Sekule R, Harp SA. Contrast sensitivity predicts pilots’ performance in aircraft simulators. Am J Optom Physiol Optics. 1982;59:105–9.

Bernth-Petersen P. Visual functioning in cataract patients: methods of measuring and results. Acta Ophthalmol. 1981;59:198–205.

Pelli DG, Robson JG, Wilkins AJ. The design of a new letter chart for measuring contrast sensitivity. Clin Vis Sci. 1988;2:187–99.

Arditi A. Improving the design of the letter contrast sensitivity test. Invest Ophthalmol Vis Sci. 2005;46:2225–9.

Hadavand MB, Heidary F, Heidary R, Gharebaghi R. A modified Middle Eastern contrast sensitivity chart. Med Hypothesis Discov Innov Ophthalmol. 2014;3:17–9.

Reeves BC, Wood JM, Hill AR. Vistech VCTS 6500 charts: within- and between-session reliability. Optom Vis Sci. 1991;68:728–37.

Pesudovs K, Hazel CA, Doran RM, Elliott DB. The usefulness of Vistech and FACT contrast sensitivity charts for cataract and refractive surgery outcomes research. Br J Ophthalmol. 2004;88:11–6.

Richman J, Spaeth GL, Wirostko B. Contrast sensitivity basics and a critique of currently available tests. J Cataract Refract Surgery. 2013;39:1100–6.

Wilkins AJ, Della Sala S, Somazzi L, Nimmo-Smith I. Age-related norms for the Cambridge low contrast gratings, including details concerning their design and use. Clin Vis Sci. 1988;2:201–12.

Kelly SA, Pang Y, Klemencic S. Reliability of the CSV-1000 in adults and children. Optom Vis Sci. 2012;89:1172–81.

Bach M. The Freiburg Visual Acuity Test: variability unchanged by post-hoc re-analysis. Graefes Arch Clin Exp Ophthalmol. 2007;245:965–71.

Kollbaum PS, Jansen ME, Kollbaum EJ, Bullimore MA. Validation of an iPad test of letter contrast sensitivity. Optom Vis Sci. 2014;91:291–6.

Amanullah S, Okudolo J, Rahmatnejad K, Lin SC, Wizov SS, Manzi Muhire RS, et al. The relationship between contrast sensitivity and retinal nerve fiber layer thickness in patients with glaucoma. Graefes Arch Clin Exp Ophthalmol. 2017;255:2415–22.

Spaeth GL, Henderer J, Liu C, Kesen M, Altangerel U, Bayer A, et al. The disc damage likelihood scale: reproducibility of a new method of estimating the amount of optic nerve damage caused by glaucoma. Trans Am Ophthalmol Soc. 2002;100:181–5.

Bland JM, Altman DG. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet. 1986;1:307–10.

Bland JM, Altman DG. Comparing methods of measurement: why plotting difference against standard method is misleading. Lancet. 1995;346:1085–7.

Pelli DG, Bex P. Measuring contrast sensitivity. Vision Res. 2013;90:10–4.

Rubin GS, West SK, Munoz B, Bandeen-Roche K, Zeger S, Schein O, et al. A comprehensive assessment of visual impairment in a population of older Americans: the SEE Study. Salisbury Eye Evaluation Project. Invest Ophthalmol Vis Sci. 1997;38:557–68.

Ross JE, Clarke DD, Bron AJ. Effect of age on contrast sensitivity function: uniocular and binocular findings. Br J Ophthalmol. 1985;69:51–6.

Mateus C, Lemos R, Silva MF, Reis A, Fonseca P, Oliveiros B, et al. Aging of low and high level vision: from chromatic and achromatic contrast sensitivity to local and 3D object motion perception. PLoS One. 2013;8:e55348.

Cheng Y, Shi X, Cao XG, Li XX, Bao YZ. Correlation between contrast sensitivity and the lens opacities classification system III in age-related nuclear and cortical cataracts. Chin Med J. 2013;126:1430–5.

Rubin GS, Adamsons IA, Stark WJ. Comparison of acuity, contrast sensitivity, and disability glare before and after cataract surgery. Arch Ophthalmol. 1993;111:56–61.

McGwin G Jr, Scilley K, Brown J, Owsley C. Impact of cataract surgery on self-reported visual difficulties: comparison with a no-surgery reference group. J Cataract Refract Surg. 2003;29:941–8.

Mela EK, Gartaganis SP, Koliopoulos JX. Contrast sensitivity function after cataract extraction and intraocular lens implantation. Doc Ophthalmol. 1996;92:79–91.

Dagnelie G. Age-related psychophysical changes and low vision. Invest Ophthalmol Vis Sci. 2013;54:88–93.

Salvi SM, Akhtar S, Currie Z. Ageing changes in the eye. Postgrad Med J. 2006;82:581–7.

Lovie-Kitchin JE, Brown B. Repeatability and intercorrelations of standard vision tests as a function of age. Optom Vis Sci. 2000;77:412–20.

Otto J, Michelson G. Repetitive tests of visual function improved visual acuity in young subjects. Br J Ophthalmol. 2014;98:383–6.

Van Coevorden RE, Mills RP, Chen YY, Barnebey HS. Continuous visual field test supervision may not always be necessary. Ophthalmology. 1999;106:178–81.

Johnson LN, Aminlari A, Sassani JW. Effect of intermittent versus continuous patient monitoring on reliability indices during automated perimetry. Ophthalmology. 1993;100:76–84.

Vesti E, Johnson CA, Chauhan BC. Comparison of different methods for detecting glaucomatous visual field progression. Invest Ophthalmol Vis Sci. 2003;44:3873–9.

Lee AC, Sample PA, Blumenthal EZ, Berry C, Zangwill L, Weinreb RN. Infrequent confirmation of visual field progression. Ophthalmology. 2002;109:1059–65.

Acknowledgements

This work was supported by the Partridge Foundation (grant # PRT13001).

Ethics declarations

Conflicts of interest

L. Gupta, None; M. Waisbourd, None; C. T. Sanvicente, None; M. Hsieh, None; S. S. Wizov, None; E. E. Spaeth, P (Spaeth/Richman contrast sensitivity test (SPARCS), No. 8,042,946); J. Richman, P (Spaeth/Richman contrast sensitivity test (SPARCS), No. 8,042,946); G. L. Spaeth, P (Spaeth/Richman contrast sensitivity test (SPARCS), No. 8,042,946).

Additional information

Corresponding author: Sheryl S. Wizov

About this article

Cite this article

Gupta, L., Waisbourd, M., Sanvicente, C.T. et al. Establishment of a normative database and evaluation of the test-retest repeatability of the Spaeth/Richman contrast sensitivity test. Jpn J Ophthalmol 63, 73–81 (2019). https://doi.org/10.1007/s10384-018-0640-3

DOI: https://doi.org/10.1007/s10384-018-0640-3