Abstract

In this article, we first present a unified scheme for applying nonlinear dynamic time integration methods. The unified scheme covers many existing time integration methods as special cases. The time integration methods investigated in this paper include the Newmark, Wilson, Houbolt, and \(\rho _{\infty }\)-Bathe methods. We then implement multi-point methods as the nonlinear solution schemes within the direct time integration methods in nonlinear dynamic analysis. A unified scheme for applying single-point and multi-point methods is also presented. Finally, we demonstrate with numerical examples that the unified scheme provides a framework for comparing direct time integration methods, and we investigate in detail the performance of multi-point methods as nonlinear solution methods.

1 Introduction

Direct time integration methods constitute a well-established component of computational structural mechanics [1,2,3,4]. The importance of accurate dynamic analysis has led to a variety of time integration methods in recent decades. These methods are classified into two types: explicit and implicit. A variety of explicit methods for dynamic analysis have been proposed. Explicit methods are conditionally stable and require a very small time step to achieve accurate results in most analyses. Most implicit methods are unconditionally stable, and the time step in these methods depends on the characteristics of the problem. Hence, the implicit methods can be much more effective for many problems, especially for structural frequency analysis. Following the Houbolt method [5] and the Newmark method [6], a series of single-step methods such as the Wilson method [7] was introduced. These single-step methods are second-order accurate and have controllable numerical damping [8,9,10,11,12,13]. Spatial discretization creates spurious high-frequency modes, and the numerical dissipation of implicit methods removes the effect of these modes. Numerical dissipation also stabilizes the method when it is applied to nonlinear systems. On the other hand, improving the dissipation capability reduces accuracy [14,15,16,17,18,19]; therefore, high-order time-marching procedures have been developed for dynamic analysis [20,21,22]. Implicit methods are widely used in the nonlinear dynamic analysis of systems. Initially, nonlinear dynamic analyses were performed using implicit time integration methods without inner iterative loops, and the tangential stiffness matrices were calculated only at the beginning of each time step. Later, the importance of using iterative loops to achieve an appropriate response was identified [23].
The iterative process is the method of choice to find the solution for nonlinear systems [1]. The single-point and multi-point methods are two categories of iterative procedures to solve the nonlinear systems [24]. The single-point methods use one evaluated point to approximate the next point. The multi-point methods do not throw away information about previously computed points. Multi-point methods are a family of efficient root-finding techniques for nonlinear problems [25]. In recent decades, due to the ability of multi-point methods to overcome the theoretical limits of single-point methods, interest has been prompted in applying and developing these techniques. The multi-point methods have been utilized to solve the nonlinear systems that arise in many problems, such as approximation of artificial satellites’ preliminary orbits [26], plasticity problems [27], power flow equations [28], fracture analysis [29], and path-following methods [30,31,32,33].

Finding a tight path of dynamic equilibrium in discrete time steps by iteration can be very important [23]. In studies on nonlinear dynamic analysis of structures, the Newton–Raphson method is widely used for establishing dynamic equilibrium at the time steps. This study focuses on decreasing the number of iterations required by the time integration methods at each time step by raising the order of convergence of the iteration method. Therefore, we replace the Newton–Raphson method (order of convergence two) with multi-point methods (order of convergence higher than two) and evaluate the performance of the time integration methods with iteration methods of different orders of convergence. Numerous techniques have emerged from the development of direct time integration methods for nonlinear dynamic analysis. To compare established and emerging methods, the problem must be modeled with each time integration method. In this study, we propose a unified scheme that avoids repeating the modeling process for each method.

The paper is organized as follows: Several implicit time integration methods are reviewed in Sect. 2. Several iteration methods are presented in Sect. 3. A unified scheme for the implicit time integration methods and a unified scheme for nonlinear solvers in dynamic analysis are presented in Sect. 4. We investigate the application of direct time integration methods along with multi-point methods using numerical examples in Sect. 5. Finally, we summarize and draw conclusions in Sect. 6.

2 Implicit direct time integration methods

The equation of motion of a nonlinear system under the force vector \({{{\mathbf {P}}}^t}\) can be expressed as:

where \({{{\mathbf {M}}}}\) is the mass matrix, \({{{\mathbf {C}}}}\) is the damping matrix, \({{{\mathbf {U}}}}\) is the vector of nodal displacements/rotations and \({{\mathbf {F}}}\) denotes the vector of nodal forces corresponding to the element internal stresses.

The exact analytical solution of Eq. (1) is difficult or almost impossible for most practical problems. Direct time integration is a method for solving nonlinear dynamic problems. In these methods, the equilibrium equations are satisfied at discrete time points. The dynamic equations at discrete time points are similar to static equations. Therefore, all the solution techniques used in the static analysis can also be applied effectively in the time integration methods.

2.1 Newmark method

Newmark [6] developed step-by-step methods based on the following equations for the approximation of velocity and displacement for finding the dynamic response of a system [1, 2]:

\(\alpha\) provides a linearly varying weighting between the influence of the initial and the final accelerations on velocity, and \(\beta\) similarly adjusts the effect of the initial and final accelerations on the displacement [4]. We can substitute Eqs. (2) and (3) in the equation of motion in the linear case (Eq. (1)) and compute displacement at time \(t+\Delta t\) as follows:

where \({\hat{{\mathbf {K}}}}^{t+\Delta {t}}\) and \({\hat{{\mathbf {P}}}}^{t+\Delta {t}}\) are the effective stiffness matrix and effective load vector at the time \({t+\Delta {t}}\), respectively. They are calculated as follows:

\({{\mathbf {K}}}^{t+\Delta {t}}\) is stiffness matrix of the structure at the time \({t+\Delta {t}}\), and is constant in linear equations. \({\varvec{\eta }}\) and \({\varvec{\delta }}\) are dynamic components of the effective load vector and effective stiffness matrix, respectively. These dynamic components are affected by the mass and damping of the structure and are computed by the corresponding time integration method. The dynamic components are considered in the Newmark method as follows:

Once \({ {\mathbf {U}}}^{{t}+\Delta {t}}\) is known, the velocity \({\dot{{\mathbf {U}}}}^{{t}+\Delta {t}}\) and acceleration \({\ddot{{\mathbf {U}}}}^{{t}+\Delta {t}}\) can be computed by Eqs. (2) and (3).

The average acceleration and linear acceleration methods are two special cases of the Newmark method, illustrated in Fig. 1. The average acceleration method (Fig. 1a) is obtained from the Newmark equations (Eqs. (2) and (3)) with \(\alpha =\frac{1}{2}\) and \(\beta =\frac{1}{4}\), and it is unconditionally stable for all values of \(\frac{\Delta t}{T}\). The linear acceleration method (Fig. 1b) is obtained by setting \(\alpha =\frac{1}{2}\) and \(\beta =\frac{1}{6}\) in the Newmark equations. In contrast to the average acceleration method, the linear acceleration method is conditionally stable: it is stable only for \(\frac{\Delta t}{T}\leqslant \frac{\sqrt{3}}{\pi }\approx 0.551\) [3].
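The stability contrast between the two special cases can be checked numerically. The following is a minimal sketch for a linear single-degree-of-freedom system, not the implementation used in this study. It assumes the standard Newmark update rules (stated in the docstring) in the effective stiffness and effective load form of Eqs. (4)–(6); the function name `newmark_linear_sdof` and the test system (unit mass, \(T=1\), undamped, unit static response) are hypothetical choices for illustration.

```python
import math


def newmark_linear_sdof(m, c, k, load, dt, n_steps, alpha=0.5, beta=0.25):
    """Displacement history of a linear SDOF system, starting from rest.

    Assumed (standard) Newmark relations:
        v1 = v0 + dt * ((1 - alpha) * a0 + alpha * a1)
        u1 = u0 + dt * v0 + dt**2 * ((0.5 - beta) * a0 + beta * a1)
    """
    u, v = 0.0, 0.0
    a = (load(0.0) - c * v - k * u) / m  # initial acceleration from equilibrium
    # dynamic component of the effective stiffness, then the effective stiffness
    delta = m / (beta * dt**2) + alpha * c / (beta * dt)
    k_eff = k + delta
    history = [u]
    for n in range(1, n_steps + 1):
        # dynamic component of the effective load, built from the state at time t
        eta = (m * (u / (beta * dt**2) + v / (beta * dt) + (0.5 / beta - 1.0) * a)
               + c * (alpha * u / (beta * dt) + (alpha / beta - 1.0) * v
                      + dt * (0.5 * alpha / beta - 1.0) * a))
        u_new = (load(n * dt) + eta) / k_eff
        a_new = ((u_new - u) / (beta * dt**2) - v / (beta * dt)
                 - (0.5 / beta - 1.0) * a)
        v_new = v + dt * ((1.0 - alpha) * a + alpha * a_new)
        u, v, a = u_new, v_new, a_new
        history.append(u)
    return history


# Hypothetical test system: undamped, T = 1, step load with static response 1.
T = 1.0
k = (2.0 * math.pi / T) ** 2


def step_load(t):
    return k


average_06 = newmark_linear_sdof(1.0, 0.0, k, step_load, 0.6 * T, 200)
linear_06 = newmark_linear_sdof(1.0, 0.0, k, step_load, 0.6 * T, 200,
                                alpha=0.5, beta=1.0 / 6.0)
print(max(abs(u) for u in average_06), max(abs(u) for u in linear_06))
```

With \(\Delta t = 0.6\,T\), which exceeds the limit \(\sqrt{3}/\pi\), the average acceleration response stays bounded while the linear acceleration response grows without bound.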

2.2 Wilson method

Wilson [7] proposed a time integration technique similar to the linear acceleration method. As shown in Fig. 2, Wilson assumed that the acceleration varies linearly over an extended interval (Eq. (9)). In the Wilson method, the parameter \(\theta\) must be greater than 1.37 for unconditional stability, and the optimal value of \(\theta\) is 1.4 [3]. In this study, we consider two values of \(\theta\): 1.4 and 2.

The fundamental relations used in the Wilson method are similar to the linear acceleration method. The main difference is to replace \(\Delta t\) in the linear acceleration method with \(\theta \Delta t\) to obtain the Wilson method.

Equations (5) and (6) calculate the effective stiffness matrix and effective load vector at the time \(t+\theta \Delta {t}\). The dynamic components in the Wilson method are as follows:

The exciting force is assumed to vary linearly over the same time interval. Thus, the force vector at time \(t+\theta \Delta {t}\) is computed as:

\({{\mathbf {U}}}^{t+\theta \Delta t}\) can be obtained by Eq. (4), and then, \({{\mathbf {U}}}^{t+ \Delta t}\) is calculated as follows:

The velocity and acceleration are calculated by setting \(\alpha =\frac{1}{2}\) and \(\beta =\frac{1}{6}\) in Eqs. (2) and (3).

2.3 Houbolt method

The Houbolt method [5] uses a four-point finite difference expression to approximate the acceleration and velocity components. It employs the following backward difference equations (Fig. 3):

Equations (5) and (6) calculate the effective stiffness and effective load vector, and the dynamic components in the Houbolt method can be expressed:

\({{\mathbf {U}}}^{t+\Delta {t}}\) is calculated by Eqs. (4)–(6), (16) and (17), which requires knowledge of \({{\mathbf {U}}}^{t}\), \({{\mathbf {U}}}^{t-\Delta {t}}\) and \({{\mathbf {U}}}^{t-2\Delta {t}}\). Therefore, \({{\mathbf {U}}}^{0}\), \({\dot{{\mathbf {U}}}}^{0}\) and \({\ddot{{\mathbf {U}}}}^{0}\) are required to start the Houbolt method. However, it is more accurate to calculate \({{\mathbf {U}}}^{\Delta {t}}\) and \({{\mathbf {U}}}^{2\Delta {t}}\) by one of the other methods described; we applied the average acceleration method to start the Houbolt method. The Houbolt method is implicit and unconditionally stable [1].

2.4 \(\rho _{\infty }\)-Bathe method

In the \(\rho _{\infty }\)-Bathe method [15, 16] the unknown displacements, velocities, and accelerations are calculated by considering the time step to consist of two sub-steps \(\Delta {t}=\Delta t_{1}+\Delta t_{2}\). The first sub-step is \(\Delta t_{1}=\gamma \Delta t\) and the second sub-step is \(\Delta t_{2}=(1-\gamma ) \Delta t\). In the first sub-step, the trapezoidal rule is used for the equilibrium at time \(t+\Delta t_{1}\):

In the second sub-step, the following relations are used for the equilibrium at time \(t+\Delta t\):

where \(q_{0}\), \(q_{1}\), \(q_{2}\), \(s_{0}\), \(s_{1}\), \(s_{2}\) and \(\gamma\) could be computed in the \(\rho _{\infty }\)-Bathe method to achieve unconditional stability in linear analysis and second-order accuracy as follows [15]:

A relation is defined to assign the amount of numerical dissipation in the high-frequency range as follows [15]:

where

The \(\rho _{\infty }\)-Bathe method has two free parameters \(\gamma\) and \(\rho _{\infty }\) in Eqs. (22) and (23). This scheme provides the same effective stiffness matrix for each sub-step by \(\rho _{\infty }\in [0,1]\). The local maximum of amplitude decay and the global minimum of period elongation are calculated as follows [16]:

The effective stiffness matrices and the effective load vectors in the first and second time sub-steps could be computed by Eqs. (5) and (6), and the dynamic components in the \(\rho _{\infty }\)-Bathe method can be expressed:

Noh and Bathe [15, 16] investigated three characteristics of the \(\rho _{\infty }\)-Bathe method: period elongation, amplitude decay and spectral radius. According to these studies, the \(\rho _{\infty }\)-Bathe method has the minimum period elongation for \(\rho _{\infty }\rightarrow 1\) and \(\gamma =\gamma _{0}\), and the least amplitude decay for \(\rho _{\infty }\rightarrow 1\) and \(\gamma \rightarrow 0\). Selecting \(\rho _{\infty }\rightarrow 0\) and \(\gamma \rightarrow 0\) gives a spectral radius close to one at low frequencies and zero at high frequencies, and selecting \(\gamma =\gamma _{0}\) gives a response with a reasonable period elongation.

3 Solutions of nonlinear equations in dynamic analysis

In nonlinear analysis, F as the resisting force is a nonlinear function of unknown U. A nonlinear solution method should be used during the analysis. This is a typical requirement of the direct time integration methods. Here, we first develop the Newton–Raphson method of iteration for nonlinear dynamic analysis. Then, we clarify how to implement the multi-point methods as the nonlinear solution method in dynamic analysis.

In dynamic analysis of a one degree of freedom system, we intend to determine the response quantities \({U^{t+\Delta {t}}}\), \({{{\dot{U}}}^{t+\Delta {t}}}\) and \({{\ddot{U}}}^{t+\Delta {t}}\) at time \({t+\Delta {t}}\) that satisfy Eq. (1), which can be expressed as:

where

According to Eq. (31), the effective resisting force \({{\hat{F}}}\) in dynamic analysis includes the inertia and damping forces, and F corresponds to the element internal stresses. In fact, the dynamic analysis Eq. (30) at the time \({t+\Delta {t}}\) is similar to the static analysis. Therefore, Eq. (30) can be solved by the Newton–Raphson method for the multi-degree of freedom systems [2]:

\({\hat{{\mathbf {K}}}_{T}}^{t+\Delta {t}}\) is the effective tangent stiffness matrix in nonlinear analysis, and \({\hat{{\mathbf {R}}}^{t+\Delta {t}}_{(j)}}\) is the effective residual force vector of the jth iteration at time \(t+\Delta {t}\). The residual force can be calculated by substituting Eq. (31) in the right side of Eq. (32):

\({\varvec{\eta }}\) and \({\varvec{\delta }}\) are dynamic components of the effective load vector and effective stiffness matrices, respectively, which are computed according to the time integration methods in the Sect. 2. The residual force vector can be rewritten by combining Eq. (6) with Eq. (33) as follows:

Now, we can use the left side of Eq. (32) to obtain the value of \({\Delta {{\mathbf {U}}}_{(j)}^{t+\Delta {t}}}\), and the response will be determined in the next iteration as:

The iteration process continues until the solution error is less than or equal to the tolerance threshold. In this study, we consider the Euclidean norm of \((\Delta {{\mathbf {U}}}_{(n)}^{t+\Delta t})^{T}{\hat{{\mathbf {R}}}}_{(n)}^{t+\Delta t}\) for measuring the solution error, and \(\epsilon =10^{-20}\) as the tolerance threshold. Figure 4 provides a geometric interpretation of the Newton–Raphson method as a nonlinear solution method. This method converges at a quadratic rate, as reported in Table 2.
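The Newton–Raphson loop inside one time step can be sketched for a one degree of freedom system as follows. This is an illustrative sketch under stated assumptions, not the authors' code: it assumes the standard Newmark kinematic relations, an effective residual of the form \(P-F(U)-M{\ddot{U}}-C{\dot{U}}\), and the scalar version of the energy-type error measure described above. The hardening spring \(F(u)=ku+ku^{3}\) and the names `newmark_newton_step` and `integrate` are hypothetical.

```python
import math


def newmark_newton_step(m, c, f_int, k_tan, p_new, u, v, a, dt,
                        alpha=0.5, beta=0.25, tol=1e-20, max_iter=50):
    """Advance one step t -> t + dt; returns (u, v, a, iterations)."""

    def kinematics(u_trial):
        # assumed Newmark relations, solved for acceleration and velocity
        a_trial = ((u_trial - u) / (beta * dt**2) - v / (beta * dt)
                   - (0.5 / beta - 1.0) * a)
        v_trial = v + dt * ((1.0 - alpha) * a + alpha * a_trial)
        return v_trial, a_trial

    # dynamic component added to the tangent stiffness
    delta = m / (beta * dt**2) + alpha * c / (beta * dt)
    u_trial = u
    for it in range(1, max_iter + 1):
        v_trial, a_trial = kinematics(u_trial)
        # effective residual: external load minus internal, inertia, damping forces
        residual = p_new - f_int(u_trial) - m * a_trial - c * v_trial
        du = residual / (k_tan(u_trial) + delta)
        u_trial += du
        if abs(du * residual) <= tol:  # scalar form of the energy-type criterion
            break
    v_new, a_new = kinematics(u_trial)
    return u_trial, v_new, a_new, it


def integrate(m, c, f_int, k_tan, load, dt, n_steps):
    """Integrate from rest; returns displacement history and iterations per step."""
    u, v = 0.0, 0.0
    a = (load(0.0) - c * v - f_int(u)) / m
    displacements, iterations = [u], []
    for n in range(1, n_steps + 1):
        u, v, a, it = newmark_newton_step(m, c, f_int, k_tan, load(n * dt),
                                          u, v, a, dt)
        displacements.append(u)
        iterations.append(it)
    return displacements, iterations


# Hypothetical hardening spring under a step load.
k = (2.0 * math.pi) ** 2
displacements, iterations = integrate(
    1.0, 0.0,
    lambda u: k * u + k * u**3,       # internal force F(u)
    lambda u: k + 3.0 * k * u**2,     # tangent stiffness dF/du
    lambda t: k, 0.01, 200)
print(max(displacements), max(iterations))
```

Each iteration re-evaluates the tangent stiffness, which is what distinguishes this loop from the early non-iterative schemes mentioned in Sect. 1.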

3.1 Multi-point methods

Multi-point methods are another established class of methods for solving systems of nonlinear equations. They show higher-order convergence than the Newton–Raphson method, and their important characteristic is that they achieve this higher-order convergence rate without requiring higher-order derivatives. In this paper, we implement four multi-point methods as the nonlinear solution technique to improve the convergence rate of the iterative process of the nonlinear direct time integration method: (1) the Weerakoon–Fernando method [34], (2) the Homeier method [35], (3) the Jarratt method [36] and (4) the Darvishi–Barati method [37]. The algorithmic implementations of these four techniques are described in detail in the following sections so that they are easy to extend to other multi-point methods. The Weerakoon–Fernando and Homeier methods have two stages at each iteration and third-order convergence. The Jarratt and Darvishi–Barati methods are fourth-order techniques.

Let \(f(x)=0\) be a nonlinear equation, where \(f'(x)\) is the first derivative of f(x). Weerakoon and Fernando proposed the following relation for the solution of the nonlinear equation:

This method was derived by applying numerical integration [34], although this cubic scheme was initially proposed by Traub [38].

Another cubic method as a solver of nonlinear equations was presented by Homeier [35]. This two-point method is given as:

This method was first developed for the univariate case [39] and then applied to find the roots of multivariate problems [35].

Another implemented iterative method in this paper is Jarratt procedure [36] which is defined as follows:

Jarratt presented this method for the univariate case, and it was later applied to many multivariate problems [40, 41].

The Darvishi–Barati method is defined as follows:

This fourth-order method is a modification of the third-order methods proposed by Frontini and Sormani [42]. The abbreviations of the iteration methods are listed in Table 1.
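For a scalar equation, the two-stage structure of these methods can be sketched as follows. The update formulas in this sketch are the forms commonly given in the literature for the Weerakoon–Fernando, Homeier and Jarratt methods and are an assumption here, since they are written from memory rather than copied from Eqs. (36)–(39); the Darvishi–Barati method is omitted. The test function \(e^{x}-1\) (root at 0, initial guess 0.5) and all function names are hypothetical.

```python
import math


def newton(f, df, x):
    # single-point reference method (second order)
    return x - f(x) / df(x)


def weerakoon_fernando(f, df, x):
    # stage 1: Newton predictor; stage 2: derivative averaged over both points
    y = x - f(x) / df(x)
    return x - 2.0 * f(x) / (df(x) + df(y))


def homeier(f, df, x):
    # stage 1: half Newton step; stage 2: derivative taken at that half-step point
    y = x - 0.5 * f(x) / df(x)
    return x - f(x) / df(y)


def jarratt(f, df, x):
    # stage 1: two-thirds Newton step; stage 2: weighted derivative combination
    fx, dfx = f(x), df(x)
    y = x - (2.0 / 3.0) * fx / dfx
    dfy = df(y)
    return x - 0.5 * (3.0 * dfy + dfx) / (3.0 * dfy - dfx) * fx / dfx


def solve(step, f, df, x0, tol=1e-12, max_iter=50):
    """Iterate until |f(x)| <= tol; returns (root, number of iterations)."""
    x = x0
    for n in range(1, max_iter + 1):
        x = step(f, df, x)
        if abs(f(x)) <= tol:
            return x, n
    raise RuntimeError("no convergence within max_iter iterations")


steps = {"newton": newton, "weerakoon_fernando": weerakoon_fernando,
         "homeier": homeier, "jarratt": jarratt}
# Hypothetical test problem: f(x) = exp(x) - 1, root at x = 0, start at 0.5.
results = {name: solve(step, math.expm1, math.exp, 0.5)
           for name, step in steps.items()}
for name, (root, n_iter) in results.items():
    print(name, root, n_iter)
```

Each multi-point iteration costs one extra derivative evaluation, so a lower iteration count does not by itself imply a lower total cost.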

In solving nonlinear equations, increasing the order of convergence is one of the topics that has gained much attention. However, the derivatives of order greater than one are usually difficult to evaluate, especially for multivariate cases. The iteration methods can achieve higher convergence by the first derivative. The order of convergence in different methods can be calculated by the information of the last three iterations. The order of convergence (\(r_{\text {c}}\)) for a method is obtained using the following expression [27, 29]:

The order of convergence of the iteration methods provided in Eqs. (36), (37), (39) and (41) is determined by finding a single root of Eq. (43), where the iteration process can be started by an initial guess \(x_{0}=0.5\), and the root of the equation is \(x_{root}=0\) [27].

Table 2 shows the logarithm of the function value at every iteration and the order of convergence for the iteration methods.
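The idea of estimating the order of convergence from three consecutive iterates can be sketched as follows. The error-ratio formula used here is a common estimator and is an assumption; it may differ in detail from Eq. (42). The test function \(e^{x}-1\) with root 0 and initial guess 0.5 is a hypothetical stand-in for Eq. (43), and the names `estimate_order` and `newton_errors` are illustrative.

```python
import math


def estimate_order(errors):
    """Estimate the order from the last three errors e0 > e1 > e2.

    Assumed estimator: r = ln(e2 / e1) / ln(e1 / e0).
    """
    e0, e1, e2 = errors[-3:]
    return math.log(e2 / e1) / math.log(e1 / e0)


def newton_errors(f, df, x0, root, n_iter):
    """Run n_iter Newton-Raphson iterations and record |x - root| after each."""
    x, errors = x0, []
    for _ in range(n_iter):
        x = x - f(x) / df(x)
        errors.append(abs(x - root))
    return errors


# Hypothetical test problem: f(x) = exp(x) - 1, root at x = 0, start at 0.5.
errors = newton_errors(math.expm1, math.exp, 0.5, 0.0, 4)
order = estimate_order(errors)
print(order)
```

The estimate is only meaningful while the errors are well above machine precision, which is why the sketch stops after four iterations.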

3.1.1 Weerakoon–Fernando method of iteration in dynamic analysis

According to Eq. (36), the Weerakoon–Fernando method is used to find the solution to Eq. (30) as follows:

We move the sub-iteration index behind each quantity to reduce clutter. Therefore, \(^{(1)} ( {\hat{{\mathbf {K}}}_{T}})\) and \(^{(1)} ( {\hat{{\mathbf {R}}}})\) are the effective tangent stiffness matrix and the effective residual force vector at the first stage of the iteration method, respectively. The solution of linearized Eq. (44) \(\left( ^{(1)}\Delta {{\mathbf {U}}} \right)\) is used to make a better estimation of displacement as:

The effective tangent stiffness is calculated by \(^{(2)}{{\mathbf {U}}}^{t+\Delta {t}}_{(j)}\) for solving the second linearized equation as follows:

where \(({\hat{{\mathbf {K}}}_{\text {sec}}})_{(j)}^{t+\Delta {t}}\) is the effective secant stiffness matrix and is an equivalent approximation of the effective tangent stiffness matrices:

The final solution, according to the Weerakoon–Fernando method, is estimated as:

The iterative process of the Weerakoon–Fernando method for a system is illustrated in Fig. 5.

3.1.2 Homeier method of iteration in dynamic analysis

The process of solving the Homeier method is similar to the Weerakoon–Fernando method, except that the force vector in the Homeier method is half the force vector in the Weerakoon–Fernando method. In other words, in Eqs. (44) and (46), \(\left[ \frac{1}{2}\left( {^{(1)}{\hat{{\mathbf {R}}}}^{{t+\Delta {t}}}_{(j)}} \right) \right]\) and \(\left[ {^{(1)} {\hat{{\mathbf {R}}}}^{t+\Delta {t}}_{(j)}} \right]\) are used instead of \(\left[ {^{(1)} {\hat{{\mathbf {R}}}}^{t+\Delta {t}}_{(j)}} \right]\) and \(\left[ 2\left( {^{(1)}{\hat{{\mathbf {R}}}}^{{t+\Delta {t}}}_{(j)}} \right) \right]\), respectively. Also, the approximation of the effective secant stiffness matrix in the Homeier method is \(({\hat{{\mathbf {K}}}_{\text {sec}}})_{(j)}^{t+\Delta {t}} \cong \left( ^{(2)}({\hat{{\mathbf {K}}}_{T}})^{t+\Delta {t}}_{(j)} \right)\). Figure 6 illustrates the details of the iterative process of the Homeier method for a system.

3.1.3 Jarratt method of iteration in dynamic analysis

The iterative process of the Jarratt method is the same as the Weerakoon–Fernando method and the Homeier method. There are only differences in the effective force vector and the effective secant stiffness matrix. For using the Jarratt method, the effective force vector should be changed from \(\left[ {^{(1)} {\hat{{\mathbf {R}}}}^{t+\Delta {t}}_{(j)}} \right]\) and \(\left[ 2\left( {^{(1)}{\hat{{\mathbf {R}}}}^{{t+\Delta {t}}}_{(j)}} \right) \right]\) to \(\left[ \frac{2}{3}\left( {^{(1)}{\hat{{\mathbf {R}}}}^{{t+\Delta {t}}}_{(j)}} \right) \right]\) and \(\left[ \frac{1}{2}\left( {^{(1)}{\hat{{\mathbf {R}}}}^{{t+\Delta {t}}}_{(j)}} \right) \right]\) in Eqs. (44) and (46), respectively. Also, the approximation of the effective secant stiffness matrix in the Jarratt method is as follows:

Figure 7 provides a geometric interpretation of the iterative process of the Jarratt method for a system.

3.1.4 Darvishi–Barati method of iteration in dynamic analysis

The process of solving the Darvishi–Barati method in each iteration consists of three sub-steps, which has one sub-step more than the previous multi-point iteration methods. The first and second sub-steps for the Darvishi–Barati method can be obtained using Eqs. (44)–(46) and (48) in which Eq. (46) should be changed as follows:

\({^{(2)} {\hat{{\mathbf {R}}}}^{t+\Delta {t}}_{(j)}}\) is computed by \(^{(2)}{{\mathbf {U}}}^{t+\Delta {t}}_{(j)}\). The approximation of effective secant stiffness matrix in the third sub-iteration is as follows:

The approximated matrix is employed to make a better estimation of the displacement vector as follows:

The final sub-step of iteration according to the Darvishi–Barati method is estimated as:

The iterative process of Darvishi–Barati method for a system is illustrated in Fig. 8.

4 Unified scheme

In this study, the four time integration methods and the five iteration methods are comparatively studied for satisfying the equilibrium equations at discrete time intervals. Each of the time integration methods is coupled with the iteration methods to evaluate their performance, which requires a significant number of models. Therefore, a unified scheme is provided to avoid repeated modeling, save time, and switch between the methods. In the unified scheme, the iteration methods are subroutines in the main program (time integration method). This section derives a unified scheme from which the different methods are recovered by changing the coefficients, without re-programming. The main advantages of the unified scheme are comprehensive programming and improved software efficiency, especially in commercial applications.

4.1 A unified scheme for direct time integration methods

Each time integration method includes a set of coefficients, vectors, and matrices. These sets have differences and similarities with each other. A unified set can be obtained by the intersection of the sets' similarities and the union of the sets' differences and can be introduced as a unified scheme. The unified scheme (Algorithm 1) is obtained in the following two main steps:

- Unifying the time sub-steps in each time step

We considered the time sub-steps as a unified loop because time integration methods have at least one time sub-step. The number of time sub-steps (nss) equals the number of loop repetitions. The Newmark method, the Wilson method, and the Houbolt method are called the simple methods (\({\text {nss}}=1\)), and the \(\rho _{\infty }\)-Bathe method is considered as a composite method (\({\text {nss}}=2\)). The repetition loops are connected in series as follows:

$$\begin{aligned} h_{\text {ssi}}=\sum _{i=0}^{\text {ssi}}\Delta t_{i}\,;\,\,\,\,\Delta t_{0}=0\,, \end{aligned}$$(54)

where ssi is the current sub-step number, \(\Delta t_{i}\) is the time interval of the ith sub-step and \(h_{\text {ssi}}\) is the sum of the time intervals of the sub-steps (\(h_{\text {nss}}=\Delta t\)). In time integration methods, an adequate time step \(\Delta t\) ensures an accurate response for structural modes. The value of \(\Delta t\) is a multiple of T (\(\Delta t=\Omega T\)), where T is the structure's natural period, which is calculated from the stiffness matrix and mass matrix. Selecting a low value of \(\Omega\) increases the accuracy of the time integration method but also increases the computational cost. Therefore, the value of \(\Omega\) should be determined according to the required accuracy of the problem. Some time integration methods are conditionally stable, and for them \(\Omega\) should be less than the stability limit of the method.
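As a small illustration of Eq. (54), the accumulated sub-step times can be computed for a simple method (\({\text {nss}}=1\)) and a composite method (\({\text {nss}}=2\)). This is a sketch only; the function name `substep_times` and the values \(\Delta t=0.01\) and \(\gamma =0.5\) are hypothetical.

```python
from itertools import accumulate
import math


def substep_times(dt, fractions):
    """Return [h_0, h_1, ..., h_nss] for sub-steps of size fractions[i] * dt.

    The leading 0.0 plays the role of Delta t_0 = 0 in Eq. (54).
    """
    increments = [0.0] + [fraction * dt for fraction in fractions]
    return list(accumulate(increments))


dt = 0.01      # hypothetical time step
gamma = 0.5    # hypothetical splitting ratio of the composite method

simple = substep_times(dt, [1.0])                    # nss = 1
composite = substep_times(dt, [gamma, 1.0 - gamma])  # nss = 2
print(simple, composite)
```

In both cases the last entry equals \(\Delta t\), which is the condition \(h_{\text {nss}}=\Delta t\) stated above.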

- Unifying coefficients and parameters due to each method

The response of the time integration methods is calculated step by step, where the outputs of each time step are counted as inputs for the next step. Therefore, since the response in the time integration methods is obtained by sequences (effective loads, displacements, velocities, and accelerations) described in Sect. 2, we attempted to formulate the methods in as similar a pattern as possible. In these sequences, coefficients (integration constants) are obtained by special relations of the time integration methods. For example, in the Newmark method, the coefficients are obtained by relations in terms of variable parameters (\(\alpha\), \(\beta\)). For unifying coefficients, all variable parameters of the methods (\(\alpha\), \(\beta\), \(\rho _{\infty }\), \(\gamma\), \(\theta\)) should be placed in a unified relation. Choosing any of the methods for analysis causes the effect of the variable parameters of other methods to be neutralized in the unified relation. For example, if the Newmark method is used as the analysis method, the variable parameters of the other methods (\(\rho _{\infty }\), \(\gamma\), \(\theta\)) are used as neutral constant parameters, and the unified relation is obtained only in terms of the variable parameters of this method (\(\alpha\), \(\beta\)). In the unified scheme, the parameter \(\rho _{\infty }\) for the composite method is a variable parameter, whereas the values of \(\rho _{\infty }\) do not affect the coefficients in other methods.

The variable and constant parameters in Algorithm 1 are defined in Table 3. As a sample, how to obtain coefficient \(a_{0}\) in the unified scheme is described in Appendix 1. This process can be generalized to obtain other coefficients of Algorithm 1.

4.2 A unified scheme for methods of iteration

The iteration methods were introduced in Sect. 3 as the nonlinear solvers in dynamic analysis. Algorithm 2 presents a unified scheme for iteration methods that allows the single-point and multi-point methods to be used easily with time integration methods. Another important feature of the unified scheme is that it can be extended to other iteration methods. Table 4 is used to select the desired iteration method at the start of Algorithm 2. The number of sub-steps of the algorithm equals the number of points of the iteration method (NP) used to find the equilibrium path. NP differs between iteration methods, and the corresponding value for each method is presented in Table 4.
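The idea of selecting an iteration method through a table of coefficients can be sketched for a scalar effective residual as follows. This is not Algorithm 2 or Table 4; it is a hedged illustration in which the table `SCHEMES`, its keys and the function `iterate` are hypothetical. The load factors follow the descriptions in Sects. 3.1.1–3.1.3, while the secant stiffness rules are assumed scalar forms consistent with the commonly cited versions of these methods (the Darvishi–Barati method, with three points, is left out).

```python
# Hypothetical table: name -> (NP, stage-1 load factor, stage-2 load factor,
#                              secant stiffness rule from the two tangents)
SCHEMES = {
    "newton": (1, 1.0, None, None),
    "weerakoon_fernando": (2, 1.0, 2.0, lambda k1, k2: k1 + k2),
    "homeier": (2, 0.5, 1.0, lambda k1, k2: k2),
    "jarratt": (2, 2.0 / 3.0, 0.5,
                lambda k1, k2: k1 * (3.0 * k2 - k1) / (3.0 * k2 + k1)),
}


def iterate(method, residual, tangent, u, tol=1e-12, max_iter=50):
    """Solve residual(u) = 0 with the selected scheme; returns (u, iterations)."""
    n_points, c1, c2, secant = SCHEMES[method]
    for j in range(1, max_iter + 1):
        r1, k1 = residual(u), tangent(u)
        du = c1 * r1 / k1                    # first point: linearized solve
        if n_points == 2:
            k2 = tangent(u + du)             # tangent at the predicted point
            du = c2 * r1 / secant(k1, k2)    # second point: secant solve
        u += du
        if abs(residual(u)) <= tol:
            return u, j
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical effective problem: hardening internal force against a fixed load.
def residual(u):
    return 150.0 - (100.0 * u + 10.0 * u**3)


def tangent(u):
    return 100.0 + 30.0 * u**2


solutions = {name: iterate(name, residual, tangent, 1.0) for name in SCHEMES}
for name, (u, n_iter) in solutions.items():
    print(name, u, n_iter)
```

Adding another two-point method then amounts to adding one row to the table, which mirrors the extensibility claimed for the unified scheme. For matrix problems the secant rules would have to be written with linear solves instead of scalar divisions.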

5 Numerical examples

We conduct a comparison study that shows the performance of time integration methods along with multi-point methods as solvers of nonlinear equations. A variety of time integration methods have been employed for solving numerical examples. Each technique is abbreviated in Table 5 for a more compact presentation. In this study, the numerical experiments were run on a computer with a Core i5 processor running at 2.5 GHz and 4 GB of RAM.

Toggle truss [43] and space truss [43, 44] were used to analyze the proposed methods. In the first example, the toggle truss is excited by a step load. In the second and third examples, the trusses are subjected to the El-Centro earthquake load [43].

5.1 Toggle truss under step load

The toggle truss, shown in Fig. 9, is subjected to a vertical step load of \(P = 20\) KPa at node 2. The moment of inertia, the modulus of elasticity and the cross-sectional area of members are \(19630\,\text {cm}^{4}\), \(2\times 10^{5}\) MPa and \(314\,\text {cm}^{2}\), respectively. The lumped mass of each node is \(5\times 10^{4}\,\text {Ns}^{2}\)/mm [43].

The exact solution of the truss under the step load is expressed in Eq. (55) [2]. The time integration methods obtain the dynamic response, and the results are compared with the exact solution in Fig. 10.

Fig. 9 Toggle truss [43]

where T, \(\xi\) and \(u_{\text {st}}\) are the natural period, damping and static response of the truss, respectively.

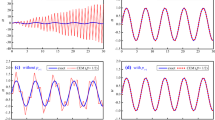

In Fig. 10, the dynamic response fluctuates around the static response of the truss, which is half of the maximum dynamic response (\(u_{\text {st}}= 0.5u_{\text {max}}\)). The SM4 and SM5 methods exhibit significant numerical damping compared to the other methods. These methods are matched at the start of the analysis, but as time passes, the gap between the responses of methods gradually widens. Figure 10b, d illustrate that decreasing the time step improves the response of time integration methods. Tables 6 and 7 provide information on the maximum displacement of the time integration methods. It is revealed that the CMs frequently have more accurate results than the SMs. Also, the SM2 method in simple methods and the CM3 method in composite methods provide more accurate responses for the maximum displacement.

We plot the maximum displacement (\(u_{\text {max}}\)) against \(\frac{\Delta t}{T}\) in Figs. 11 and 12 to investigate the sensitivity of the time integration methods to the variation of \(\frac{\Delta t}{T}\). As can be seen, the response of SM2 loses stability at high values of \(\frac{\Delta t}{T}\) and exists only for \(\frac{\Delta t}{T}\le 0.551\). Therefore, SM2 is a conditionally stable time integration method. The rest of the time integration methods give acceptable responses for the various values of \(\frac{\Delta t}{T}\), which indicates that these methods are stable regardless of \(\frac{\Delta t}{T}\), i.e., unconditionally stable. According to Figs. 11 and 12, the numerical results are very close to the exact response at low values of \(\frac{\Delta t}{T}\). On the other hand, as \(\frac{\Delta t}{T}\) increases, the maximum responses of SM5 and CM2 deviate from the exact solution sooner than those of the other methods, whereas CM3 deviates later. At high values of \(\frac{\Delta t}{T}\), the Wilson method gives a response closer to the exact solution, although the deviation from the exact response increases when \(\theta\) changes from 1.4 (SM3) to 2 (SM4).

The step load applied to the toggle truss is then polluted with considerable random noise, as shown in Fig. 13. The toggle truss was excited by the noisy load for 50 s. In Fig. 14, the responses of the truss were calculated by the SM1 method with a time step of \({\Delta t}=0.01\) T, and the responses were normalized so that the effects of noise on acceleration, velocity, and displacement can be compared. The noise intensity in the velocity and displacement is reduced by the integration involved in computing these responses, so the acceleration contains more noise than the velocity and displacement. In Fig. 15, the maximum vertical acceleration of node 2 of the truss was evaluated by the time integration methods with time steps \({\Delta t}\) = 0.01 T, 0.1 T and 0.6 T. It can be seen that the response of the truss under random noise diverges (loss of stability) for the SM2 method with time step \({\Delta t}=0.6\) T, whereas the responses of the other time integration methods remain stable and converge appropriately.

5.2 Toggle truss under earthquake loading

The toggle truss is subjected to a horizontal seismic load at nodes 1 and 3. The El-Centro earthquake record shown in Fig. 16 is used as the seismic input. The viscous damping ratio of the truss is equal to 0.05 [43]. The mechanical properties of the truss are those stated in the previous example.

Fig. 16 El-Centro earthquake record [43]

In this example, the time integration methods with time step \({\Delta t}=0.01\) T and the iteration methods are used for the nonlinear dynamic analysis. The responses in Fig. 17a, b were validated against the response reported in reference [43].

The iteration methods were applied within the time integration methods to investigate their performance as nonlinear solution methods in dynamic analysis. Combining the time integration methods with the iteration methods results in 125 procedures for nonlinear dynamic analysis. The numerical results based on these procedures are shown in Tables 8, 9, 10, 11, 12. In these tables, \({\text {SUM}}_{\text {iter}}\) represents the total number of iterations for each analysis, and \(\mu _{\text {iter}}\) is the average number of iterations, which is equal to the total number of iterations divided by the number of time steps. According to Table 8, in the simple time integration methods the SP method converges with the maximum number of iterations, whereas the MP4 method converges with the minimum number of iterations. \({\text {SUM}}_{\text {iter}}\) and \(\mu _{\text {iter}}\) of the other iteration methods lie between those of the SP and MP4 methods.
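One way to arrive at the 125 procedures is to pair each of five simple methods with one of the five iteration methods (25 procedures) and each of four composite methods with one iteration method per time sub-step (100 procedures). This decomposition is an inference from the method names used in this section. The sketch below enumerates the combinations and evaluates \({\text {SUM}}_{\text {iter}}\) and \(\mu _{\text {iter}}\) for a list of per-step iteration counts. The function names and the sample counts are hypothetical.

```python
from itertools import product

SIMPLE_METHODS = ["SM1", "SM2", "SM3", "SM4", "SM5"]
COMPOSITE_METHODS = ["CM1", "CM2", "CM3", "CM4"]
ITERATION_METHODS = ["SP", "MP1", "MP2", "MP3", "MP4"]


def enumerate_procedures() -> list[tuple[str, ...]]:
    """List every (time integration method, iteration method(s)) combination."""
    simple = list(product(SIMPLE_METHODS, ITERATION_METHODS))
    # Composite methods use one iteration method per time sub-step.
    composite = list(product(COMPOSITE_METHODS, ITERATION_METHODS, ITERATION_METHODS))
    return simple + composite


def iteration_statistics(iterations_per_step: list[int]) -> tuple[int, float]:
    """Return (SUM_iter, mu_iter) for one analysis."""
    sum_iter = sum(iterations_per_step)
    mu_iter = sum_iter / len(iterations_per_step)
    return sum_iter, mu_iter


if __name__ == "__main__":
    print(len(enumerate_procedures()))
    # Made-up iteration counts for four time steps, for illustration only.
    print(iteration_statistics([3, 2, 3, 2]))
```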

In the composite methods, the iteration methods are applied as the loops for solving the nonlinear equations in each sub-step of the CMs. In the CM1 (Table 9), the minimum number of iterations is achieved by applying the MP4 method in the first time sub-step and the MP2 method in the second time sub-step. The minimum number of iterations in the CM3 (Table 11) occurs when the MP4 method is applied in the first time sub-step and the MP1 or MP3 method in the second time sub-step. The iteration methods perform nearly identically in the CM2 and CM4, as shown in Tables 10 and 12. In the CM2 and CM4 methods, the minimum number of iterations occurs when the MP3 and MP4 methods are applied as the nonlinear solution methods in both time sub-steps. The maximum number of iterations for convergence in the CMs occurs when the SP method is used in both time sub-steps. The MP methods reduce the number of iterations significantly when \(\gamma =\gamma _{0}\) (Eq. (25)) is chosen in the CMs.

Section 3 states that the error in each iteration is crucial for the convergence of the response. Therefore, the error analysis of the proposed method is presented in Figs. 18 and 19. As a sample, the CM2 method with various iteration methods is considered. SP-SP in the figure means that the SP method is used in both time sub-steps of the time integration method. For a tolerance of \(\epsilon =10^{-20}\), Fig. 18 shows that each time sub-step converges within at most three iterations with the SP, MP1 and MP2 methods, and within at most two iterations with the MP3 and MP4 methods. It can also be seen that the average error at the third iteration for the SP, MP1 and MP2 methods, and at the second iteration for the MP3 and MP4 methods, is less than the tolerance threshold. Therefore, a given tolerance threshold is satisfied by decreasing or increasing the number of iterations. Figure 19 analyzes the error of the proposed method in terms of the maximum number of iterations in the time steps. Reducing the tolerance threshold (reducing the admissible error) increases the maximum number of iterations in the time steps. With the MP4 method, the maximum number of iterations in the time steps is lower than with the other methods, which indicates that convergence is achieved sooner. The SP method, on the other hand, shows the opposite behavior. The breaks in the curves of Fig. 19 can be interpreted as the sensitivity of the error of the iteration methods to the tolerance threshold. The SP method has the highest sensitivity, and the MP4 method has the lowest.
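The effect of the tolerance threshold on the iteration count can be illustrated on a scalar problem. The sketch below compares a single-point Newton-Raphson update with the two-point, third-order update of Weerakoon and Fernando [33]. It is only a stand-in: the definitions of MP1 to MP4 are not reproduced in this section, and the cubic residual, the starting point, and all function names are hypothetical.

```python
def residual(x: float) -> float:
    """Hypothetical scalar residual with a cubic (hardening) term."""
    return x**3 + x - 1.0


def tangent(x: float) -> float:
    """Derivative of the residual (the scalar analogue of a tangent stiffness)."""
    return 3.0 * x**2 + 1.0


def single_point_step(x: float) -> float:
    """Newton-Raphson update: one residual and one tangent evaluation."""
    return x - residual(x) / tangent(x)


def two_point_step(x: float) -> float:
    """Weerakoon-Fernando update: the tangent is averaged over x and a Newton predictor y."""
    y = x - residual(x) / tangent(x)
    return x - 2.0 * residual(x) / (tangent(x) + tangent(y))


def iterations_to_converge(step, x0: float, tol: float, max_iter: int = 50):
    """Apply `step` until |residual| <= tol; return (solution, iteration count)."""
    x = x0
    for k in range(1, max_iter + 1):
        x = step(x)
        if abs(residual(x)) <= tol:
            return x, k
    raise RuntimeError("no convergence within max_iter iterations")


if __name__ == "__main__":
    for tol in (1e-2, 1e-6, 1e-12):
        _, k_sp = iterations_to_converge(single_point_step, 1.0, tol)
        _, k_mp = iterations_to_converge(two_point_step, 1.0, tol)
        print(f"tol={tol:g}: single-point {k_sp} iterations, two-point {k_mp} iterations")
```

Each two-point iteration needs an additional tangent evaluation, so a lower iteration count does not by itself imply a lower computational cost.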

5.3 Space truss under earthquake loading

The space truss is a three-story structure with 12 free nodes. The elastic modulus of its members is 200 GPa, and the lumped mass at the node is 50 \(\text {N{s}}^2\)/mm. The El-Centro earthquake load is applied to nodes 1, 2, 3, and 4 of the truss [43, 44] (Fig. 20).

Fig. 20 Space truss [43]

This example examines four time integration methods (SM1, SM3, SM5, and CM2) with the five iteration methods. The response of the roof nodes in the x-direction obtained by these methods is compared with reference [43] in Fig. 21.

The number of iterations required for convergence of the iteration methods is obtained for various time steps of the time integration methods, and the weighted average of iterations (\({\overline{\text {SUM}}}_{\text {iter}}\)) is calculated over the values of \(\frac{\Delta t}{T}\). In Eq. (56), twenty-two values (\(n=22\)) of \(\frac{\Delta t}{T}\) between 0.01 and 0.1 are considered as samples, which are obtained through an arithmetic progression.

The common difference and the first term of the sequence are \(d=0.004\) and \(\left( \frac{\Delta t}{T} \right) _{0}=0.01\), respectively. \(\left( \frac{\Delta t}{T} \right) _{i}\) is the ith term of the arithmetic progression. The weighted average of iterations (\({\overline{\text {SUM}}}_{\text {iter}}\)) is then calculated for the time sub-steps of the methods as follows:

Here, \(({\text {SUM}}_{\text {iter}})_{i}\) is the number of iterations of the methods corresponding to \(\left( \frac{\Delta t}{T} \right) _{i}\). As can be seen in Fig. 22, the MP4 and MP3 methods give the minimum value of \({\overline{\text {SUM}}}_{\text {iter}}\) in the time integration methods. On the other hand, the SP method gives the maximum value of \({\overline{\text {SUM}}}_{\text {iter}}\).
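The sampling and averaging described above can be sketched as follows. The equations themselves are not reproduced in this text, so two points are assumptions: the samples start at the first term and grow by \(d\), and the weights of the average are the \(\frac{\Delta t}{T}\) samples. The function names are hypothetical.

```python
def time_step_ratios(first: float = 0.01, d: float = 0.004, n: int = 22) -> list[float]:
    """Arithmetic progression of dt/T samples: first, first + d, ..., first + (n - 1) * d."""
    return [first + i * d for i in range(n)]


def weighted_average_iterations(ratios: list[float], sum_iter: list[float]) -> float:
    """Weighted average of SUM_iter, using the dt/T samples as weights (an assumption)."""
    if len(ratios) != len(sum_iter):
        raise ValueError("ratios and sum_iter must have the same length")
    return sum(r * s for r, s in zip(ratios, sum_iter)) / sum(ratios)


if __name__ == "__main__":
    ratios = time_step_ratios()
    # Made-up iteration totals that decrease as dt/T grows, for illustration only.
    totals = [2000.0 - 50.0 * i for i in range(len(ratios))]
    print(weighted_average_iterations(ratios, totals))
```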

The unified scheme makes it possible to switch the solution method quickly without duplicating the model for each method, which saves time when a problem is evaluated with various methods. We now compare the computational efficiency of the unified scheme and the conventional methods by obtaining the response of the space truss under earthquake loading. For this comparison, the time integration and iteration methods are the same in both cases. As a sample, the SM1 method is selected with the MP3 method. Figure 23 shows that the response of the SM1 inserted into the unified scheme (SM1-IIUS) agrees with reference [43] and with the SM1 extracted from the unified scheme (SM1-EFUS). The computational costs of the SM1-IIUS and SM1-EFUS methods, shown in Table 13, differ only slightly from each other.

6 Conclusion

The first part of this study described a unified scheme for efficiently implementing various implicit time integration methods and iteration methods. For the first time, we also provided a framework for multi-point iteration methods in the nonlinear dynamic analysis of structures. The unified scheme is designed so that other methods can be added to it in the future. It made it possible to implement new procedures quickly and to compare the performance of nonlinear implicit time integration methods. We observed, using numerical tests, that the composite time integration methods could be more robust than the simple time integration methods in maintaining stability. Many nonlinear analyses are inefficient because of the high number of iterations required by single-point methods. Using multi-point procedures as the solution algorithm in nonlinear direct time integration methods reduces the number of iterations required to reach convergence. The numerical results show that applying the MP4 method as a nonlinear solver in the simple time integration methods causes the response to converge with the least number of iterations. The reduction of iterations in the composite time integration methods is determined by how the iteration methods are placed in the time sub-steps and by the interval of the time sub-steps, in addition to the type of iteration method. Using \(\gamma =\gamma _{0}\) in the composite time integration method allows the multi-point iteration methods to reduce the number of iterations significantly. The convergence of a nonlinear dynamic response becomes problematic when a high accuracy is required for convergence. However, the multi-point methods can reduce the difficulty of achieving convergence. The type of time integration method may also affect the number of iterations required for convergence. The MP3 and MP4 methods have the lowest weighted averages of iterations and are more stable than the other iteration methods in reducing the number of iterations across the various time integration methods. We anticipate that a unified scheme of the existing methods provides a practically helpful framework for nonlinear dynamic analysis, allowing easy implementation and helping to ensure efficiency and robustness in structural problems beyond the benchmark scale. Whether the unified scheme described in this article can be extended to include other time integration methods remains to be seen.

References

1. Bathe KJ (2006) Finite element procedures. Klaus-Jurgen Bathe, Berlin

2. Chopra AK (2015) Dynamics of structures: international edition. Pearson Higher Ed, New York

3. Humar J (2012) Dynamics of structures. CRC Press, Boca Raton

4. Clough RW, Penzien J (1975) Dynamics of structures. McGraw-Hill, New York

5. Houbolt JC (1950) A recurrence matrix solution for the dynamic response of elastic aircraft. J Aeronaut Sci 17(9):540–550

6. Newmark NM (1959) A method of computation for structural dynamics. J Eng Mech Div 85(3):67–94

7. Wilson E, Farhoomand I, Bathe KJ (1972) Nonlinear dynamic analysis of complex structures. Earthq Eng Struct Dynam 1(3):241–252

8. Hilber HM, Hughes TJ, Taylor RL (1977) Improved numerical dissipation for time integration algorithms in structural dynamics. Earthq Eng Struct Dynam 5(3):283–292

9. Wood W, Bossak M, Zienkiewicz O (1980) An alpha modification of Newmark’s method. Int J Numer Meth Eng 15(10):1562–1566

10. Zienkiewicz O, Wood W, Hine N, Taylor R (1984) A unified set of single step algorithms. Part 1: general formulation and applications. Int J Numer Meth Eng 20(8):1529–1552

11. Chung J, Hulbert G (1993) A time integration algorithm for structural dynamics with improved numerical dissipation: the generalized-\(\alpha\) method. J Appl Mech 60(2):371–375

12. Hulbert GM, Chung J (1996) Explicit time integration algorithms for structural dynamics with optimal numerical dissipation. Comput Methods Appl Mech Eng 137(2):175–188

13. Zhou X, Tamma KK (2004) Design, analysis, and synthesis of generalized single step single solve and optimal algorithms for structural dynamics. Int J Numer Meth Eng 59(5):597–668

14. Bathe KJ, Noh G (2012) Insight into an implicit time integration scheme for structural dynamics. Comput Struct 98:1–6

15. Noh G, Bathe KJ (2019) The Bathe time integration method with controllable spectral radius: the \(\rho _{\infty }\)-Bathe method. Comput Struct 212:299–310

16. Noh G, Bathe KJ (2019) For direct time integrations: a comparison of the Newmark and \(\rho _{\infty }\)-Bathe schemes. Comput Struct 225:106079

17. Kim W (2019) An accurate two-stage explicit time integration scheme for structural dynamics and various dynamic problems. Int J Numer Meth Eng 120(1):1–28

18. Kim W (2019) A new family of two-stage explicit time integration methods with dissipation control capability for structural dynamics. Eng Struct 195:358–372

19. Ji Y, Xing Y (2020) A two-sub-step generalized central difference method for general dynamics. Int J Struct Stab Dyn 20(07):2050071

20. Soares Jr D (2020) A straightforward high-order accurate time-marching procedure for dynamic analyses. Eng Comput:1–19

21. Soares Jr D (2020) Efficient high-order accurate explicit time-marching procedures for dynamic analyses. Eng Comput:1–15

22. Soares Jr D (2021) Three novel truly-explicit time-marching procedures considering adaptive dissipation control. Eng Comput:1–18

23. Bathe KJ, Wilson EL (1974) NONSAP: a nonlinear structural analysis program. Nucl Eng Des 29(2):266–293

24. Petković M, Neta B, Petković L, Džunić J (2012) Multipoint methods for solving nonlinear equations. Academic Press, New York

25. Petković MS, Neta B, Petković LD, Džunić J (2014) Multipoint methods for solving nonlinear equations: a survey. Appl Math Comput 226:635–660

26. Arroyo V, Cordero A, Torregrosa JR (2011) Approximation of artificial satellites’ preliminary orbits: the efficiency challenge. Math Comput Model 54(7–8):1802–1807

27. Kiran R, Li L, Khandelwal K (2015) Performance of cubic convergent methods for implementing nonlinear constitutive models. Comput Struct 156:83–100

28. Derakhshandeh SY, Pourbagher R (2016) Application of high-order Newton-like methods to solve power flow equations. IET Gen Transmiss Distrib 10(8):1853–1859

29. Kiran R, Khandelwal K (2018) On the application of multipoint root-solvers for improving global convergence of fracture problems. Eng Fract Mech 193:77–95

30. Maghami A, Shahabian F, Hosseini SM (2018) Path following techniques for geometrically nonlinear structures based on multi-point methods. Comput Struct 208:130–142

31. Maghami A, Shahabian F, Hosseini SM (2019) Geometrically nonlinear analysis of structures using various higher order solution methods: a comparative analysis for large deformation. Comput Model Eng Sci 121(3):877–907

32. Maghami A, Schillinger D (2020) A stiffness parameter and truncation error criterion for adaptive path following in structural mechanics. Int J Numer Meth Eng 121(5):967–989

33. Maghami A, Hosseini SM (2021) Intelligent step-length adjustment for adaptive path-following in nonlinear structural mechanics based on group method of data handling neural network. Mech Adv Mater Struct:1–28

34. Weerakoon S, Fernando T (2000) A variant of Newton’s method with accelerated third-order convergence. Appl Math Lett 13(8):87–93

35. Homeier H (2004) A modified Newton method with cubic convergence: the multivariate case. J Comput Appl Math 169(1):161–169

36. Jarratt P (1966) Some fourth order multipoint iterative methods for solving equations. Math Comput 20(95):434–437

37. Darvishi M, Barati A (2007) A fourth-order method from quadrature formulae to solve systems of nonlinear equations. Appl Math Comput 188(1):257–261

38. Traub JF (1982) Iterative methods for the solution of equations, vol 312. American Mathematical Society

39. Homeier H (2003) A modified Newton method for rootfinding with cubic convergence. J Comput Appl Math 157(1):227–230

40. Sharma JR, Sharma R (2010) Modified Jarratt method for computing multiple roots. Appl Math Comput 217(2):878–881

41. Sharma JR, Gupta P (2014) An efficient fifth order method for solving systems of nonlinear equations. Comput Math Appl 67(3):591–601

42. Frontini M, Sormani E (2004) Third-order methods from quadrature formulae for solving systems of nonlinear equations. Appl Math Comput 149(3):771–782

43. Thai HT, Kim SE (2011) Nonlinear inelastic time-history analysis of truss structures. J Constr Steel Res 67(12):1966–1972

44. Blandford GE (1996) Large deformation analysis of inelastic space truss structures. J Struct Eng 122(4):407–415

Acknowledgements

The authors would like to express their gratitude to anonymous reviewers for their in-depth comments that significantly strengthened this paper.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.


Appendix 1: Unifying \(a_{0}\) in the unified scheme


The coefficient \(a_{0}\) in the unified scheme is as follows:

where is used in Eq. (59):

The unified scheme includes the four time integration methods, so we obtain the coefficient \(a_{0}\) according to the time integration methods:

1.1 Unifying \(a_{0}\) in \(\rho _{\infty }\)-Bathe method

The \(\rho _{\infty }\)-Bathe method is a composite method. In this method, the values of dynamic components \(\varvec{\delta } _{1}\) and \(\varvec{\delta } _{2}\) are as follows:

where

By comparing Eqs. (60) and (61) with Eq. (59), the following can be expressed:

Therefore, \(a_{0}=\frac{1}{(q_{2} \Delta t)^{2}}\) can be considered as the general form of the coefficient \(a_{0}\) when \(q_{2}\) is expressed as follows:

We now need to obtain a unified equation for \(q_{2}\) in terms of ssi that satisfies Eq. (65):

By comparing Eq. (65) with Eq. (66), we can express a unified equation for \(q_{1}\) in terms of y. Therefore, Eq. (63) is rewritten as follows:

1.2 Unifying \(a_{0}\) in Newmark method

The Newmark method is a simple method. Therefore, \(\delta _{1}\) in the unified scheme for the Newmark method is as follows:

The dynamic component of \(\delta _{1}\) is compared between the \(\rho _{\infty }\)-Bathe and Newmark methods, and it is observed that the coefficient \(a_{0}\) in the Newmark method has no \(\gamma\). Therefore, we consider \(\gamma\) as a constant and neutral parameter in the Newmark method (\(\gamma\) = 1). The coefficient \(a_{0}\) obtained in the previous step is written as follows to include both methods:

1.3 Unifying \(a_{0}\) in Wilson method

The Wilson method is a simple method and is similar to the linear acceleration method (\(\alpha =\frac{1}{2},\,\beta =\frac{1}{6}\)). \(\delta _{1}\) in the unified scheme for the Wilson method is as follows:

Equation (69) can be rewritten by comparing Eqs. (59) and (69) with Eq. (70):

1.4 Unifying \(a_{0}\) in Houbolt method

The Houbolt method is a simple method. \(\delta _{1}\) in the unified scheme for the Houbolt method is as follows:

By choosing the Houbolt method for analysis in the unified scheme, the variables of other methods should be considered as constant and neutral parameters (\(\gamma =1,\,\theta =1,\,\alpha =\frac{1}{2},\,\beta =\frac{1}{4}\)). Therefore, Eq. (71) can be rewritten by comparing Eqs. (59) and (71) with Eq. (72):

In the unified scheme, the Houbolt method is selected by setting \(H=1\), which activates the coefficients of the Houbolt method.
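The idea of a single coefficient with neutral parameters can be sketched with the textbook values of the mass coefficient \(a_{0}\). This sketch does not reproduce Eqs. (59) to (72), which are missing from this text. It assumes the standard coefficients \(\frac{1}{\beta \Delta t^{2}}\) (Newmark), \(\frac{6}{(\theta \Delta t)^{2}}\) (Wilson), \(\frac{2}{\Delta t^{2}}\) (Houbolt), and \(\frac{4}{(\gamma \Delta t)^{2}}\) (first sub-step of the composite method). The function name and the `houbolt` flag, which plays the role of the switch \(H\), are hypothetical.

```python
def unified_a0(
    dt: float,
    *,
    beta: float = 0.25,
    gamma: float = 1.0,
    theta: float = 1.0,
    houbolt: bool = False,
) -> float:
    """Mass coefficient a0 for the first (sub-)step, using neutral defaults.

    Neutral values (gamma = 1, theta = 1, beta = 1/4) leave the expression
    unchanged for methods that do not use the corresponding parameter.
    """
    if houbolt:
        # The Houbolt coefficient does not fit the product form below,
        # so it is activated by a separate switch.
        return 2.0 / dt**2
    return 1.0 / (beta * (gamma * theta * dt) ** 2)


if __name__ == "__main__":
    dt = 0.1
    print(unified_a0(dt))                             # Newmark, beta = 1/4
    print(unified_a0(dt, beta=1.0 / 6.0, theta=1.4))  # Wilson, theta = 1.4
    print(unified_a0(dt, gamma=0.5))                  # composite method, first sub-step
    print(unified_a0(dt, houbolt=True))               # Houbolt
```

Only the first sub-step of the composite method is covered here; the second sub-step needs the additional coefficients of the \(\rho _{\infty }\)-Bathe method.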

Cite this article

Shahraki, M., Shahabian, F. & Maghami, A. A unified scheme for nonlinear dynamic direct time integration methods: a comparative study on the application of multi-point methods. Engineering with Computers 39, 3229–3248 (2023). https://doi.org/10.1007/s00366-022-01743-1
