Abstract

Atherosclerosis, one of the leading causes of death in the USA and worldwide, begins with a lesion in the intima of the arterial wall, allowing LDL to penetrate into the intima, where it is oxidized. The immune system considers this oxidized LDL a dangerous substance and tasks the macrophages to attack it; incapacitated macrophages become foam cells and lead to the formation of a plaque. As the plaque continues to grow, it progressively restricts the blood flow, possibly triggering heart attack or stroke. Because blood vessels tend to be circular, a two-space dimensional cross-section model is a good approximation, and two-space dimensional models are studied in Friedman et al. (J Differ Equ 259(4):1227–1255, 2015) and Zhao and Hu (J Differ Equ 288:250–287, 2021). It is interesting to see whether a true three-space dimensional stationary solution can be developed. We shall establish a three-space dimensional stationary solution for the mathematical model of the initiation and development of atherosclerosis which involves LDL and HDL cholesterols, macrophages and foam cells. The model is a highly nonlinear and coupled system of PDEs with a free boundary, the interface between the plaque and the blood flow. We establish infinitely many branches of symmetry-breaking stationary solutions which bifurcate from the annular stationary solution in the longitudinal direction.

1 Introduction

Atherosclerosis is a chronic inflammatory disease in which a plaque builds up in the innermost layer of the artery. As the plaque grows, it progressively hardens and narrows the arteries, thereby increasing the shear force of blood flow. The increased shear force may cause rupture of the plaque, which leads to thrombus formation in the lumen and may then block downstream arteries (Friedman and Hao 2015; Hao and Friedman 2014). Plaque rupture in the cerebral artery results in a stroke, while a coronary thrombus causes myocardial infarction, i.e., a heart attack. Every year about 900,000 people in the USA and 13 million people worldwide die of heart attack or stroke (Hao and Friedman 2014; Friedman and Hao 2015).

Mathematical models describing the growth of a plaque in the arteries have been introduced (e.g., Calvez et al. 2009; Cohen et al. 2014; Friedman and Hao 2015; Friedman et al. 2015; Hao and Friedman 2014; McKay et al. 2005; Mukherjee et al. 2019). All of these models include the interaction of the “bad” cholesterols, low density lipoprotein (LDL), and the “good” cholesterols, high density lipoprotein (HDL), in determining whether a plaque will grow or shrink.

A series of events happens when lesions develop in the inner surface of the arterial wall (Friedman and Hao 2015) (see also Friedman 2018, Chapters 7 and 8): “LDL and HDL move from the blood into the arterial intima through those endothelial lesions and get oxidized by free radicals which are continuously released by biochemical reactions within the body. The immune system considers oxidized LDL (ox-LDL) as a dangerous substance, hence a chain of immune response is triggered. Sensing the presence of ox-LDL, endothelial cells begin to secret monocyte chemoattractant protein (MCP-1), which attracts monocytes circulating in the blood to penetrate into the intima. Once in the intima, these monocytes are converted into macrophages. The macrophages endocytose the ox-LDL and are eventually turned into foam cells. These foam cells have to be removed by the immune system, and at the same time they trigger a chronic inflammatory reaction: they secrete pro-inflammatory cytokines (e.g., TNF-\(\alpha \), IL-1) which increase endothelial cells activation to recruit more new monocytes. Smooth muscle cells (SMCs) are attracted from the media into intima by chemotactic forces due to growth factors secreted by macrophages and T-cells. ECM is remodeled by matrix metalloproteinase (MMP) which is released by a variety of cell types including SMCs, and is inhibited by tissue inhibitor of metalloproteinase (TIMP) produced by macrophages and SMCs. Interleukin IL-12, secreted by macrophages and foam cells, activates T-cells to promote the growth of a plaque. The activated T-cells secrete interferon IFN-\(\gamma \), which in turn enhance activation of macrophages in the intima. 
The effect of oxidized LDL on plaque growth can be reduced by the good cholesterols, HDL: HDL can remove harmful bad cholesterol out from the foam cells and convert foam cells into anti-inflammatory macrophages; moreover, HDL also competes with LDL on free radicals, decreasing the amount of radicals that are available to oxidize LDL.”

In Calvez et al. (2009), oxidized LDL, macrophages and foam cells were modeled on a rectangular two-space dimensional domain, and the growth speed of the lesion was modeled using conservation and the assumption of incompressibility. An ODE system was formulated in Cohen et al. (2014); when only LDL and macrophages are in the system, a phase-plane analysis was carried out. In McKay et al. (2005), more realistic variables such as chemo-attractant, monocytes, T-cells, proliferation factors and smooth muscle cells are introduced in addition to LDL, HDL, macrophages and radicals; both ODE models and PDE models are proposed. A simple reaction-diffusion system describing the early onset of atherosclerotic plaque formation was introduced in Mukherjee et al. (2019).

In Hao and Friedman (2014) and Friedman and Hao (2015), a more sophisticated reaction-diffusion free boundary model was introduced. The model includes the interactions of the variables LDL, oxidized LDL, HDL, inflammatory macrophages, anti-inflammatory macrophages, foam cells, radicals, IL-12 (Interleukin-12), MCP-1 (Monocyte Chemoattractant Protein-1), MMP (matrix metalloproteinase), smooth muscle cells and T-cells. This resulted in 17 equations in the system, plus the boundary and free boundary conditions. Numerical simulations were carried out.

It is extremely challenging to analyze a reaction-diffusion free boundary problem with 17 equations. Friedman et al. (2015) considered a simplified model involving LDL and HDL cholesterols, macrophages and foam cells. As the blood vessel is a long and thin tube, it is a good approximation to assume that the artery is a radially symmetric infinite cylinder. They further simplified the problem by considering the cross section only, which reduces the problem to a two-space dimensional problem. Rigorous mathematical analysis was carried out to prove that for any \(H_0\) and any small \(\varepsilon >0\), there exists a unique \(L_0\) such that there is a unique \(\varepsilon \)-thin stationary plaque; this is a reasonable requirement representing a balance of “good” and “bad” cholesterols. Necessary and sufficient conditions were found to characterize situations where a small initial plaque would shrink and disappear or persist for all time. Since it is not reasonable to assume that plaques have a strictly radially symmetric shape, Zhao and Hu (2021, 2022) systematically investigated symmetry-breaking bifurcations utilizing the Crandall-Rabinowitz theorem. But verifying the hypotheses of the Crandall-Rabinowitz theorem is a great challenge because the system admits no explicit smooth solutions. A number of sharp estimates were established in Zhao and Hu (2021, 2022) to overcome this difficulty. The result, however, represents bifurcations in the cross-section direction and therefore is two-space dimensional. It would be interesting to see whether bifurcations would occur in the longitudinal direction, which is a three-space dimensional problem. This is the goal of this paper.

The structure of this paper is as follows. In Sect. 2 we present our mathematical model, followed by the main result of the problem. In Sect. 3, we collect some well-known results which will be needed in the sequel. After the establishment of a variety of estimates for our PDE system, the Crandall-Rabinowitz theorem is applied to prove our main result in Sects. 4 and 5. Section 6 covers the conclusion.

2 Mathematical model

For the reader’s convenience, we shall briefly describe the model derived in Friedman et al. (2015) and (Friedman 2018, Chapters 7 and 8). We consider a PDE model consisting of LDL and HDL cholesterols, macrophages and foam cells. The simplified model lumps all LDL into the variable L, whether oxidized or not. Likewise, all HDL are lumped into the variable H, whether oxidized or not. The inflammatory macrophages and anti-inflammatory macrophages are lumped together into the variable M. The domain under consideration is the evolving plaque region \(\{\Omega (t),t>0\}\) with a moving boundary \(\Gamma (t)\), \(\Gamma (t)\subset \{ r<1\}\times \{-\infty<z<\infty \}\), and the fixed boundary \(\partial B_1\times {{\mathbb {R}}}=\{ r=1 \}\times \{-\infty<z<\infty \}\) representing the blood vessel wall.

The LDL satisfies, in \(\Omega (t)\),

where we have normalized the diffusion rate to 1, \(\Delta =\frac{1}{r}\frac{\partial }{\partial r}\Big (r\frac{\partial }{\partial r}\Big ) + \frac{1}{r^2}\frac{\partial ^2}{\partial \theta ^2} + \frac{\partial ^2}{\partial z^2}\) in the cylindrical domain, and the term \(-k_{1} \frac{M L}{K_{1}+L}\) is of Michaelis-Menten type and is a result of inflammatory macrophages ingesting oxidized LDL. Here the inflammatory macrophages and oxidized LDL consist of a portion of the total macrophages and LDL, respectively, and the proportion factor is absorbed into \(k_1\). The positive constant \(\rho _1\) is the natural rate of elimination of LDL.
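As a small illustration of the Michaelis-Menten term described above, the following Python sketch evaluates \(k_{1} \frac{M L}{K_{1}+L}\) in its two limiting regimes. The numerical values of `k1`, `K1` and `M` are hypothetical and chosen only for illustration; they are not parameters taken from the model.

```python
def ldl_uptake(L, M, k1, K1):
    """Michaelis-Menten uptake k1*M*L/(K1+L); it enters the LDL equation with a minus sign."""
    return k1 * M * L / (K1 + L)

# Hypothetical values, for illustration only.
k1, K1, M = 2.0, 0.5, 1.0

low = ldl_uptake(1e-4, M, k1, K1)   # L << K1: nearly linear, about (k1*M/K1)*L
half = ldl_uptake(K1, M, k1, K1)    # L == K1: exactly half of the saturation level k1*M
high = ldl_uptake(1e4, M, k1, K1)   # L >> K1: saturates just below k1*M

print(low, half, high)
```

The uptake is roughly proportional to L when LDL is scarce and levels off at \(k_1 M\) when LDL is abundant, which is the saturation behavior the term is meant to capture.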

Likewise, HDL satisfies, in \(\Omega (t)\),

where the term \(-k_{2} \frac{H F}{K_{2}+F}\) represents the amount of HDL consumed to remove harmful bad cholesterol out from the foam cells and revert foam cells into anti-inflammatory macrophages. The positive constant \(\rho _2\) is the natural rate of elimination of HDL.

The macrophages and foam cells satisfy, in \(\Omega (t)\),

where the positive constants \(\rho _3, \rho _4\) denote the natural death rates of M and F, respectively. The extra term \(\lambda \frac{ML}{\gamma +H}\) describes the effect that oxidized LDL attracts inflammatory macrophages while HDL decreases this impact by competing with LDL for free radicals.

The combined density of macrophages and foam cells in the plaque lies in a relatively small range, so it is assumed to be a constant \(M_0\), i.e.,

It is further assumed (Friedman 2018) that the plaque texture is of a porous medium type, and Darcy’s law is invoked,

By adding the two Eqs. (2.3) and (2.4) and using Darcy’s law, we derive (2.10) below. Replacing \(\mathbf {v}\) with \(-\nabla p\), the equation for F can be written in the form of (2.9) below and the equation for M can be eliminated. In summary, we have the following system of equations in the plaque region \(\{\Omega (t),t>0\}\),

Next we proceed to derive boundary conditions. By continuity of the velocity field, we immediately have the free boundary condition

where \(V_n\) is the velocity of the free boundary \(\Gamma (t)\) in the outward normal direction \({\varvec{n}}\). Naturally, there is no exchange through the blood vessel wall (\(r=1\)) for any of the variables and the velocity is zero:

On the free boundary,

where \(L_0\) and \(H_0\) in the flux boundary conditions (2.13) and (2.14) represent the concentrations of L and H in the blood, respectively, with \(\beta _1>0\) and \(\beta _2>0\) being the transfer rates. There are, of course, no foam cells in the blood. Finally, the adhesiveness of the plaque yields the equation:

where \(\kappa \) is the mean curvature in the direction \({\varvec{n}}\) for \(\Gamma (t)\).

Since the main interest is the free boundary, we could also consider a finite domain within \(\{ 0<z< T\}\). Setting the time derivatives to be zero, the corresponding stationary version of the system (2.7)-(2.16) in the finite cylinder \(\Omega \) with inner boundary \(\Gamma \) and fixed outer boundary \(\Gamma _0=\{r=1\}\times [0,T]\) is

As in Zhao and Hu (2021), we shall use \(\mu = \frac{1}{\epsilon }[\lambda L_0-\rho _3(\gamma +H_0)]\) as our bifurcation parameter. And we will keep all parameters fixed except \(L_0\) and \(\rho _4\) so that \(\mu \) varies by changing \(L_0\). Even though \(\epsilon \) appears in the denominator, \(\mu \) is of order O(1), since the balance of LDL and HDL is required for the existence of a stationary solution, i.e., \(\lambda L_0-\rho _3(\gamma +H_0)\) is of order \(O(\epsilon )\).
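The scaling of the bifurcation parameter can be made concrete with a short Python sketch. It evaluates \(\mu = \frac{1}{\epsilon }[\lambda L_0-\rho _3(\gamma +H_0)]\) and inverts it for \(L_0\); the function names and all numerical values are hypothetical and serve only to illustrate that an O(1) value of \(\mu \) corresponds to an \(O(\epsilon )\) deviation of \(\lambda L_0\) from the balance value \(\rho _3(\gamma +H_0)\).

```python
def bifurcation_parameter(L0, H0, lam, rho3, gamma, eps):
    """mu = (lam*L0 - rho3*(gamma + H0)) / eps, as defined in the text."""
    return (lam * L0 - rho3 * (gamma + H0)) / eps

def L0_from_mu(mu, H0, lam, rho3, gamma, eps):
    """Invert the definition of mu for L0, all other parameters held fixed."""
    return (eps * mu + rho3 * (gamma + H0)) / lam

# Hypothetical parameter values, for illustration only.
lam, rho3, gamma, H0 = 2.0, 1.0, 0.5, 1.5
mu_target = 3.0

for eps in (1e-1, 1e-2, 1e-3):
    L0 = L0_from_mu(mu_target, H0, lam, rho3, gamma, eps)
    imbalance = lam * L0 - rho3 * (gamma + H0)   # equals eps * mu_target
    print(eps, L0, imbalance)
```

As \(\epsilon \) decreases with \(\mu \) held fixed, \(L_0\) approaches the balance value \(\rho _3(\gamma +H_0)/\lambda \).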

The existence of a radially symmetric stationary solution can be found in Friedman et al. (2015) and Zhao and Hu (2021). To be precise, the existence and uniqueness results of Friedman et al. (2015) and Zhao and Hu (2021) are for a solution in two dimensions (independent of the variable z). It is clear that it is also a three-space dimensional solution, modulo the fact that the two-space dimensional and three-space dimensional mean curvatures differ by a factor of \(\frac{1}{2}\) even for a cylindrical domain and its cross section (\(\frac{1}{n-1} =1\) when \(n=2\) and \(\frac{1}{n-1}=\frac{1}{2}\) when \(n=3\)). But that does not have a material adverse impact on the existence and uniqueness proofs. To be rigorous, we need to show that the solution in three dimensions is also unique in the class of three-space dimensional solutions, and hence the two-space dimensional solution must also be the unique three-space dimensional solution.

As in Zhao and Hu (2021), we let

We state the following analog of (Zhao and Hu 2021, Theorem 2.1). The existence is already obtained in Zhao and Hu (2021). The uniqueness proof boils down to a maximum principle, which is apparently also valid in this domain, and hence the proof of the uniqueness is omitted.

Theorem 2.1

For every \(\mu ^{*}>\mu _{c}\) and \(\mu _{c}<\mu <\mu ^{*}\), we can find a small \(\varepsilon ^{*} =\varepsilon ^{*}(\mu ^*)>0\), and for each \(0<\varepsilon <\varepsilon ^{*}\), there exists a unique \(\rho _{4}\) such that the system (2.17)–(2.25) admits a unique solution \(\left( L_{*}(r), H_{*}(r), F_{*}(r), p_{*}(r)\right) \) with \(0 \le L_*(r) \le L_0, \; 0\le H_*(r) \le H_0, \; 0\le F_*(r) \le M_0\).

A slight modification of the maximum principle would imply that the uniqueness is also valid if the solution is considered in the infinite domain \(\{1-\epsilon<r<1\}\times \{ -\infty<z<\infty \}\).

The result of this paper is summarized in the following theorem.

Theorem 2.2

For each integer n satisfying

we can find a small \(E>0\) such that for each \(0<\epsilon <E\) there exists a unique \(\mu ^n(\epsilon )\), whose relationship with T is given by

such that if \(\mu ^n(\epsilon ) > \mu _c\) (\(\mu _c\) is defined in (2.26)), then \(\mu =\mu ^n(\epsilon )\) is a bifurcation point of the symmetry-breaking stationary solution of the system (2.17) – (2.25). Moreover, the free boundary of this bifurcation solution is of the form

Remark 2.1

The assumption (2.27) requires \(n\ne \frac{T}{2\pi }\). It is also clear that if \(n> \frac{T}{2\pi }\), then (2.27) is automatically satisfied. As a matter of fact, (2.27) is a very weak assumption and is satisfied by all but some isolated values of n.

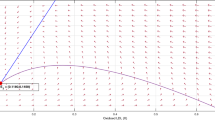

To the best of our knowledge, this is the first paper producing stationary solutions of small plaques as in Fig. 1.

3 Preliminaries

3.1 Estimates on stationary solution

We now collect various estimates on \(L_{*}(r),\ H_{*}(r),\ F_{*}(r),\ p_{*}(r)\) which are already obtained in (Zhao and Hu 2021, (2.11)–(2.13), (2.18), (4.47)–(4.49), (4.3), (4.4), (2.16), (2.17)).

Lemma 3.1

(see Zhao and Hu 2021) Let \( \mu _c<\mu <\mu ^*\). Then

The following estimate holds for first derivatives,

The estimates of the second derivatives at the boundary \(r=1-\epsilon \) are given by

For the function \(p_*(r)\),

where the function \(J_1(\mu ,\rho _4(\mu ))\) satisfies

with C independent of \(\epsilon \). And for the parameter \(\rho _4\),

3.2 The Crandall-Rabinowitz theorem

Next, we state the Crandall-Rabinowitz theorem, which is critical in studying bifurcation.

Theorem 3.2

(see Crandall and Rabinowitz 1971, Theorem 1.7) Let X, Y be real Banach spaces and \(F(x,\mu )\) a \(C^{p}\) map, \(p\ge 3\), of a neighborhood \((0,\mu _0)\) in \(X\times {\mathbb {R}}\) into Y. Suppose

(i) \(F(0,\mu )=0\) for all \(\mu \) in a neighborhood of \(\mu _0\);

(ii) \(\text{ Ker }[F_x(0,\mu _0)]\) is a one-dimensional space, spanned by \(x_0\);

(iii) \(\text{ Im }[F_x(0,\mu _0)]=Y_1\) has codimension 1;

(iv) \([F_{\mu x}](0,\mu _0)x_0\notin Y_1\).

Then \((0,\mu _0)\) is a bifurcation point of the equation \(F(x,\mu )=0\) in the following sense: in a neighborhood of \((0,\mu _0)\) the set of solutions of \(F(x,\mu )=0\) consists of two \(C^{p-2}\) smooth curves \(\Gamma _1\) and \(\Gamma _2\) which intersect only at the point \((0,\mu _0)\); \(\Gamma _1\) is the curve \((0,\mu )\) and \(\Gamma _2\) can be parameterized as follows:

3.3 A continuation lemma

To compute the Fréchet derivatives, we need sharp estimates of the unknown functions; these are based on the following continuation lemma.

Lemma 3.3

(see Zhao and Hu 2021, Lemma 5.1) Let \(\{ \mathbf {Q}_\delta ^{(i)}\}_{i=1}^N\) be a finite collection of real vectors, and define the norm of the vector by \(|\mathbf {Q}_\delta |_{\max }=\max \limits _{1\le i \le N}|\mathbf {Q}_\delta ^{(i)}|\). Suppose that \(0<C_1<C_2\), and

(i) \(|\mathbf {Q}_0|_{\max }\le C_1\);

(ii) For any \(0< \delta \le 1\), if \(|\mathbf {Q}_\delta |_{\max }\le C_2\), then \(|\mathbf {Q}_\delta |_{\max }\le C_1\);

(iii) \(\mathbf {Q}_\delta \) is continuous in \(\delta \).

Then \(|\mathbf {Q}_\delta |_{\max }\le C_1\) for all \(0< \delta \le 1\).
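The mechanism behind the continuation lemma can be illustrated on a scalar toy example. In the Python sketch below, the relation \(Q = c + \delta Q^2/4\), the constant `C_SMALL` and the bounds `C1`, `C2` are all hypothetical choices made for illustration and do not come from the paper. The branch of solutions that is continuous down to \(\delta = 0\) stays below \(C_1\), because it cannot jump across the gap between \(C_1\) and \(C_2\).

```python
import math

C_SMALL = 0.5        # hypothetical constant c in the toy relation Q = c + delta*Q**2/4
C1, C2 = 0.7, 0.8    # hypothetical bounds with 0 < C1 < C2

def Q(delta):
    """Branch of Q = c + delta*Q**2/4 that is continuous on [0, 1] with Q(0) = c."""
    if delta == 0.0:
        return C_SMALL
    return 2.0 * (1.0 - math.sqrt(1.0 - delta * C_SMALL)) / delta

def improved_bound(assumed_bound, delta):
    """If Q <= assumed_bound, the relation itself yields Q <= c + delta*assumed_bound**2/4."""
    return C_SMALL + delta * assumed_bound ** 2 / 4.0

deltas = [i / 1000 for i in range(1001)]

hypothesis_i = Q(0.0) <= C1                                        # condition (i)
hypothesis_ii = all(improved_bound(C2, d) <= C1 for d in deltas)   # condition (ii)
conclusion = all(Q(d) <= C1 for d in deltas)                       # claim of the lemma

print(hypothesis_i, hypothesis_ii, conclusion)
```

The other root of the same quadratic relation also satisfies the implication in (ii) vacuously, since it exceeds \(C_2\), but it is not continuous at \(\delta = 0\); this is why conditions (i) and (iii) cannot be dropped.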

3.4 The Taylor’s expansion of the vector function

In the process of computing the Fréchet derivatives, we shall also use the following Taylor’s expansion for the vector function. Let \(f:\ {\mathbb {R}}^N\rightarrow {\mathbb {R}}^M\) be a \(C^2\) function.

Lemma 3.4

(see Zhao and Hu 2021, Lemma 3.3) For any \(y_*\), y and \(y_1\),

where the error term is estimated by

3.5 A supersolution

As in Friedman et al. (2015), we use the function

repeatedly when we apply the maximum principle. Recall that \(\xi \) satisfies

Taking

we easily verify that

Let \(\Vert S(z)\Vert _{C^{4+\alpha }([0,T])}\le 1\), then using (3.12), we derive the following useful inequality at \(r=1-\epsilon + \tau S\) with \(|\tau |\ll \epsilon \):

4 Bifurcations - The Fréchet derivatives

We shall work with the Crandall-Rabinowitz theorem on the spaces

Remark 4.1

The functions in \(X_1^{l+\alpha }\) automatically extend to periodic functions for \(z\in (-\infty , \infty )\) with period T. It is clear that for \(S\in X_1^{l+\alpha }\) with \(l\ge 1\), we have \(S'(0)=S'(T)=0\).
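The two properties stated in this remark can be checked numerically for a finite cosine series. The Python sketch below assumes, for illustration only, that S is a finite sum of modes \(\cos (\frac{2\pi n}{T} z)\); the coefficients and the helper `cosine_series` are hypothetical.

```python
import math

def cosine_series(coeffs, T):
    """Return S(z) = sum_n a_n cos(2*pi*n*z/T) and its derivative S'(z)."""
    def S(z):
        return sum(a * math.cos(2 * math.pi * n * z / T)
                   for n, a in enumerate(coeffs))

    def dS(z):
        return sum(-a * (2 * math.pi * n / T) * math.sin(2 * math.pi * n * z / T)
                   for n, a in enumerate(coeffs))

    return S, dS

T = 3.0
S, dS = cosine_series([0.2, 0.5, -0.1, 0.05], T)   # hypothetical coefficients

print(dS(0.0), dS(T))          # both vanish up to rounding: zero flux at z = 0 and z = T
print(S(0.7), S(0.7 + T))      # equal up to rounding: T-periodic extension
```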

A solution which is T-periodic in z and bifurcates from the \(\cos (\frac{2\pi n}{T} z)\) branch automatically satisfies the zero flux boundary conditions at \(z=0\) and \(z=T\). So rather than studying the original problem, we shall look for bifurcating solutions which are periodic in z. Consider a family of perturbed domains \(\Omega _{\tau }=\{1-\varepsilon +{\widetilde{R}}<r<1, 0< z< T\}\), where \({\widetilde{R}}=\tau S(z)\), S(z) is T-periodic in z, \(|\tau | \ll \varepsilon \) and \(\Vert S\Vert _{C^{4+\alpha }([0,T])} \le 1\), and denote the corresponding one-period inner boundary by \(\Gamma _{\tau }\). Let (L, H, F, p) be the solution of

We need to ensure the existence and uniqueness of the solution to the problem (4.1)-(4.7). Before showing this fact, we shall first derive an asymptotic formula for the mean curvature.

Lemma 4.1

If \(S\in C^2(-\infty ,\infty )\) and \(\Vert S\Vert _{C^2([0,T])}\le 1\), then

Proof

We use the notation \(\mathbf {e}_{r}, \mathbf {e}_{\theta }, \mathbf {e}_{z}\) to denote the unit vectors in the \(r, \theta , z\) directions, respectively. Then, written in the rectangular coordinates in \({\mathbb {R}}^{3}\),

and the gradient is given by

For the surface \(r=1-\epsilon +\tau S(z)\), or, alternatively, \(\xi (r,\theta , z)=0\) where \(\xi (r, \theta , z)=r-(1-\epsilon )-\tau S(z)\), the normal vector is

and the mean curvature is then \(-\left. \frac{1}{2} {\text {div}} \frac{\nabla _{x} \xi }{\left| \nabla _{x} \xi \right| }\right| _{\xi =0}\), or

By direct computations,

Using \({\text {div}}(f \mathbf {g})=f{\text {div}}\mathbf {g}+\nabla _{x} f \cdot \mathbf {g}\), we obtain

Since

then the formula (4.8) follows. \(\square \)
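The curvature computation in this proof can be sanity-checked numerically. The Python sketch below evaluates \(-\frac{1}{2} {\text {div}} \frac{\nabla _{x} \xi }{\left| \nabla _{x} \xi \right| }\) for the axisymmetric surface \(r=f(z)\) with \(f=1-\epsilon +\tau S\), and compares it with a first-order expansion in \(\tau \). The closed-form expressions in both functions were worked out for this sketch directly from the definition used in the proof, under the assumption that the surface is independent of \(\theta \); they are not quoted from (4.8), and the choices \(S(z)=\cos (\frac{2\pi n}{T}z)\), `eps`, `T` and `n` are hypothetical.

```python
import math

def mean_curvature(f, fp, fpp):
    """-(1/2) div(grad xi/|grad xi|) on r = f(z), where xi = r - f(z) and fp, fpp are f', f''."""
    w = math.sqrt(1.0 + fp * fp)
    return -0.5 * (1.0 / (f * w) - fpp / w ** 3)

def linearized_curvature(eps, tau, S, Spp):
    """First-order expansion in tau around the cylinder r = 1 - eps (derived for this sketch)."""
    R = 1.0 - eps
    return -0.5 / R + 0.5 * tau * (S / R ** 2 + Spp)

eps, T, n = 0.1, 2.0, 1          # hypothetical values
k = 2.0 * math.pi * n / T

def gap(tau, z):
    """Distance between the exact curvature and its linearization at height z."""
    S = math.cos(k * z)
    Sp = -k * math.sin(k * z)
    Spp = -k * k * math.cos(k * z)
    exact = mean_curvature(1.0 - eps + tau * S, tau * Sp, tau * Spp)
    return abs(exact - linearized_curvature(eps, tau, S, Spp))

# Halving tau should reduce the gap by a factor close to 4 if the remainder is O(tau^2).
print(gap(1e-3, 0.3) / gap(5e-4, 0.3))
```

For \(\tau =0\) the expression reduces to \(-\frac{1}{2(1-\epsilon )}\), the mean curvature of the unperturbed cylinder under this sign convention.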

We now establish the existence and uniqueness of the solution to the problem (4.1)-(4.7).

Lemma 4.2

Let \(S\in C^{4+\alpha }(-\infty ,\infty )\), periodic with period T, \(S'(0)=S'(T)=0\), and \(\Vert S\Vert _{C^{4+\alpha }([0,T])}\le 1\). For sufficiently small \(\epsilon \) and \(|\tau |\ll \epsilon \), the problem (4.1)-(4.7) admits a unique solution (L, H, F, p).

Proof

We shall prove this lemma by using the contraction mapping principle. Take

For each \((L,H,F)\in {\mathscr {M}}\), we solve the following linear equations:

Define a map \({\mathscr {L}}:(L,H,F)\rightarrow ({\widehat{L}},{\widehat{H}},{\widehat{F}})\), then we shall prove that \({\mathscr {L}}\) maps \({\mathscr {M}}\) into itself and is a contraction, which indicates that the unique fixed point of \({\mathscr {L}}\) is the unique classical solution of the system (4.1)-(4.7).

Step 1. \({\mathscr {L}}\) maps \({\mathscr {M}}\) into itself.

By the maximum principle, we clearly have

We now establish the estimate for \({\widehat{p}}\). Since L, H, F are all bounded, the right-hand side of (4.13) is bounded under supremum norm, i.e.,

where C is independent of \(\epsilon \) and \(\tau \). Here and hereafter we shall use the notation C to denote various different positive constants independent of \(\epsilon \) and \(\tau \). Also, we use the mean curvature formula (4.8) and the Taylor’s expansion to derive that

It follows from (4.18) and (4.19) that \(C(\xi (r)+\epsilon )\) is a supersolution for \({\widehat{p}}+\frac{1}{2}\), then

where \(\xi (r)\) is defined in Sect. 3.5. Next we are going to estimate \(\Vert {\widehat{p}}\Vert _{C^1(\Omega _\tau )}\) and show that it is actually independent of \(\epsilon \) and \(\tau \). Introduce the following transformation:

It maps \(r=1-\epsilon +\tau S(z)\) into \({\widetilde{r}}=\frac{1}{2}\). Notice that our function \({{\widehat{p}}}\) is independent of \(\theta \). Let \({\widetilde{p}}({\widetilde{r}}, {\widetilde{z}})={\widehat{p}}(r, z)+\frac{1}{2}\), then \({\widetilde{p}}\) satisfies

where coefficients \(A_1,A_2,A_3,A_4\in C^{3+\alpha }([0,T])\), \(A_5\) and \(A_6\) are bounded, \(A_j = O(\epsilon )\) for \(|\tau |\ll \epsilon \ll 1\) (\(1\le j\le 6\)), and \({\widetilde{f}}=\frac{4r}{M_0}\Big [\lambda \frac{(M_0-F)L}{\gamma +H}-\rho _3(M_0-F) - \rho _4 F\Big ]\) is also bounded based on (4.9). Applying the interior sub-Schauder estimates (Theorem 8.32, Gilbarg and Trudinger 1983) on the region \(\Omega _{i_0}: ({\widetilde{r}}, {\widetilde{z}})\in [\frac{1}{2},1]\times [z_{i_0}-2,z_{i_0}+2]\), recalling also (4.19) and (4.20), we obtain

We use a series of sets \([\frac{1}{2},1]\times [z_{i_0}-1,z_{i_0}+1]\) to cover the whole region \([\frac{1}{2},1]\times [0,\frac{T}{2\epsilon }]\), as a result,

We then relate \({\widetilde{p}}\) with \({\widehat{p}}\) to derive

and hence

Recalling equation (4.12) and the boundary conditions of \({\widehat{F}}\) in (4.14)-(4.15), we obtain, by the maximum principle,

We further claim that this bound for \({\widehat{F}}\) can be improved. By (4.9) and (4.22), the right-hand side of equation (4.12) is bounded, i.e.,

According to (4.21), (4.23) and Sect. 3.5, \(C(\xi (r)+c_1(\beta _2,\epsilon )+c_2(\beta _2,\tau ))\) is a supersolution for \({\widehat{F}}\), so that

Then in a similar way as we did for \({\widehat{p}}\), we derive

Above, we have shown that \(({\widehat{L}}, {\widehat{H}}, {\widehat{F}})\in {\mathscr {M}}\), which implies that \({\mathscr {L}}\) maps \({\mathscr {M}}\) into itself. We shall next prove that \({\mathscr {L}}\) is a contraction.

Step 2. \({\mathscr {L}}\) is a contraction.

Suppose that \(({\widehat{L}}_j, {\widehat{H}}_j, {\widehat{F}}_j)={\mathscr {L}}(L_j, H_j, F_j)\) for \(j=1,2\), and set

Recalling (4.10)-(4.13), (4.21) and (4.24), we get, for some constant \(C^*\) independent of \(\epsilon \) and \(\tau \),

The function \(C^*({\mathscr {A}}+{\mathscr {B}})(\xi (r) + c_1(\beta ,\epsilon ) + c_2(\beta ,\tau ))\) defined in Sect. 3.5 clearly serves as a supersolution and therefore by the maximum principle,

which leads to

where \(C^{**}\) is independent of \(\epsilon \) and \(\tau \). The above inequalities imply that

By taking \(\epsilon \) sufficiently small and \(|\tau |\ll \epsilon \), we have

so that \({\mathscr {L}}\) is a contraction mapping. Therefore, the proof is complete. \(\square \)
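The structure of this argument, a map that sends a bounded set into itself and becomes a contraction once a small parameter is fixed, can be illustrated on a finite-dimensional toy problem. The Python sketch below is not the map \({\mathscr {L}}\) of the proof: `toy_map` is a hypothetical map on \({\mathbb {R}}^2\) whose Lipschitz constant in the maximum norm is at most `eps`, so the iteration converges to a unique fixed point when `eps` is less than 1.

```python
import math

def toy_map(state, eps):
    """Hypothetical map on R^2; its Lipschitz constant in the max norm is at most eps."""
    x, y = state
    return (1.0 + eps * math.sin(y), 0.5 + eps * math.cos(x))

def iterate(eps, start=(0.0, 0.0), tol=1e-12, max_iter=200):
    """Fixed-point iteration; returns the approximate fixed point and the iteration count."""
    state = start
    for count in range(1, max_iter + 1):
        new = toy_map(state, eps)
        step = max(abs(new[0] - state[0]), abs(new[1] - state[1]))
        state = new
        if step < tol:
            return state, count
    raise RuntimeError("fixed-point iteration did not converge")

fixed_a, steps_a = iterate(0.1, start=(0.0, 0.0))
fixed_b, steps_b = iterate(0.1, start=(5.0, -3.0))
print(fixed_a, steps_a)
print(fixed_b, steps_b)   # same fixed point from a different starting guess
```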

With p being uniquely determined in the system (4.1)-(4.7), we define \({\mathscr {F}}\) by

where \(\mu \) is our bifurcation parameter defined earlier, then (L, H, F, p) is a symmetry-breaking stationary solution if and only if \({\mathscr {F}}(\tau S,\mu )=0\).
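To illustrate the type of solution structure that the Crandall-Rabinowitz theorem (Theorem 3.2) produces, the Python sketch below uses a scalar toy map \(G(x,\mu )=(\mu -\mu _0)x-x^3\) with \(X=Y={\mathbb {R}}\). The map, the value `MU0` and the helper names are hypothetical and unrelated to \({\mathscr {F}}\); the trivial branch \(x=0\) plays the role of the radially symmetric solution and the curve \(\mu =\mu _0+x^2\) plays the role of the symmetry-breaking branch.

```python
MU0 = 1.0  # hypothetical bifurcation value

def G(x, mu):
    """Toy map with the trivial branch G(0, mu) = 0 for every mu."""
    return (mu - MU0) * x - x ** 3

def nontrivial_branch(s):
    """Curve (x(s), mu(s)) = (s, MU0 + s**2); it passes through (0, MU0) with x'(0) = 1."""
    return s, MU0 + s * s

def dG_dx(x, mu, h=1e-6):
    """Central-difference approximation of the derivative of G with respect to x."""
    return (G(x + h, mu) - G(x - h, mu)) / (2.0 * h)

# G_x(0, MU0) is approximately 0: the kernel is all of R (one-dimensional)
# and the image is {0} (codimension 1).
kernel_check = dG_dx(0.0, MU0)

# G_{mu x}(0, MU0) applied to x_0 = 1 is approximately 1, which is not in the image {0}.
d = 1e-3
transversality = (dG_dx(0.0, MU0 + d) - dG_dx(0.0, MU0 - d)) / (2.0 * d)

residuals = [abs(G(*nontrivial_branch(s))) for s in (-0.2, -0.1, 0.05, 0.3)]
print(kernel_check, transversality, max(residuals))
```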

To apply the Crandall-Rabinowitz theorem, we need to compute the Fréchet derivatives of \({\mathscr {F}}\). For a fixed small \(\epsilon \), we formally write (L, H, F, p) as

Substituting (4.26)-(4.29) into the equations (4.1)-(4.7) and dropping the \(O(\tau ^2)\) terms, we obtain the following linearized system in \(\Omega _*=\{1-\epsilon<r<1, 0< z< T\}\):

where \(\Gamma _1=\{r=1-\epsilon \}\times [0,T]\), and

We shall show that the formal expansions (4.26)-(4.29) are actually rigorous.

Remark 4.2

The functions \((L_*, H_*, F_*, p_*)\) are defined in \(\Omega _*\), a domain which is different from \(\Omega _\tau \), so we first need to extend them to a bigger domain. Since these are functions of r only, the equations they satisfy form a system of second-order ODEs. Therefore we can extend \((L_*, H_*, F_*, p_*)\) from \(1-\epsilon<r<1\) to \(1-2\epsilon<r<1\) by solving a nonlinear initial value problem for second-order ODEs with the right-hand side taken from (2.17)–(2.20) and keeping the values at \(r=1-\epsilon \) together with their first-order derivatives. Using these equations we then find that derivatives of all orders are continuous across the boundary \(r=1-\epsilon \). Thus we produce a smooth solution, denoted again by the same notation \((L_*, H_*, F_*, p_*)\), satisfying (2.17)–(2.20) in \(\{1-2\epsilon<r<1\}\) while satisfying the boundary conditions at \(r=1\) and \(r=1-\epsilon \) (rather than \(r=1-2\epsilon \)).

In the remainder of this paper, we assume that \((L_*, H_*, F_*, p_*)\) is the extended solution.

4.1 First-order \(\tau \) estimates

Lemma 4.3

Fix \(\epsilon \) sufficiently small, if \(|\tau |\ll \epsilon \) and \(\Vert S\Vert _{C^{4+\alpha }([0,T])}\le 1\), then we have

where C is independent of \(\epsilon \) and \(\tau \).

Proof

Combining (4.1) and the equation (2.17) that \(L_*\) satisfies, we obtain the following equation for \(L-L_*\),

where \(b_1=b_1(r,z)\) and \(b_2=b_2(r)\) are both bounded since \(0\le L_*,L\le L_0\) and \(0\le F \le M_0\) based on Lemma 4.2 and Lemma 3.1 in Friedman et al. (2015). In addition, the boundary conditions for \(L-L_*\) are

Since \(L_*, H_*, F_*\) are all bounded and \(|L_*'|\le C\epsilon \) by (3.2), we find from the equation (2.17) that \(|L_*''|\) is bounded with a bound independent of \(\epsilon \) and \(\tau \). Hence by the Taylor’s expansion, we have

where \(\widetilde{C}\) does not depend upon \(\epsilon \) and \(\tau \). Similarly, \(H-H_*\), \(F-F_*\) and \(p-p_*\) satisfy

where \(b_i=b_i(r,z)\), \(i=3,4,\ldots ,10\) are all bounded and the last inequality is based on the formula of \(\kappa \) in (4.8). It is shown earlier that \(\Vert \nabla F\Vert _{L^\infty (\Omega _\tau )}\) and \(\Vert \nabla p\Vert _{L^\infty (\Omega _\tau )}\) are bounded; for simplicity, we use the same constant \(\widetilde{C}\) to control \(\Vert \nabla F\Vert _{L^\infty (\Omega _\tau )}\) and \(\Vert \nabla p\Vert _{L^\infty (\Omega _\tau )}\), namely,

We shall use the idea of continuation (Lemma 3.3) to complete the rest of the proof. Multiplying the right-hand sides of (4.43)-(4.47) by \(\delta \) with \(0\le \delta \le 1\), we then combine the proofs for the case \(\delta = 0 \) as well as the case \(0<\delta \le 1\).

In the case \(\delta >0\), we assume that, for some \(M_1>0\) to be determined later on,

where \(\widetilde{C}\) is from (4.44), (4.49)-(4.52), and \(C_s\) is a scaling factor which comes from applying the \(C^{1+\alpha }\) Schauder estimate as we did in Lemma 4.2; both \(\widetilde{C}\) and \(C_s\) are independent of \(\epsilon \) and \(\tau \). It follows from (4.53) that the right-hand side of (4.47) is bounded, i.e.,

Let

where \(\widetilde{C}\) is defined above. By a direct computation, we obtain

Fix \(\epsilon \) sufficiently small such that the right-hand side of (4.57) is smaller than \(-\Delta \phi _1\), i.e.,

Moreover, notice that \(\cos 1\approx 0.54>1/2\), then by (4.51) and the boundary condition for \(\phi _1\), we derive

Hence, by the maximum principle, we have

As in the proof of (4.21), we further get

We shall next consider \(L-L_*\), \(H-H_*\) and \(F-F_*\). It follows from (4.43), (4.45) and the assumption (4.53) that

where C is some universal constant. Recalling also (4.52) and (4.59), we have the following estimate for \(F-F_*\),

Let

where we set \(M_1\) as

We now proceed to prove that \(\phi _2(r)\) is a supersolution for \(L-L_*\), \(H-H_*\) and \(F-F_*\). Indeed, by a simple computation, we derive

Since \(\sin x \le x\) and \(\cos x\ge 1 - \frac{x^2}{2}\) for \(x\ge 0\), we have, for \(0<|\tau |\ll \epsilon \) and \(\epsilon \) small,

It follows that

It is clear that \(\phi _2'(1)=0\). For the boundary condition at \(\Gamma _\tau : r=1-\epsilon +\tau S\),

Noticing that the leading order term in \(-\Delta \phi _2\) is \(\frac{1}{\epsilon }\), we can take \(\epsilon \) small such that

where (4.60) is used. Hence, \(\phi _2\) is a supersolution for \(L-L_*\) as well as for \(H-H_*\). For \(F-F_*\), by our choice of \(M_1\) and \(M_2\),

and \(\frac{1}{D} \nabla p_*\cdot \nabla \phi _2\) is of order \(O(1/\sqrt{\epsilon })\), then we obtain

which implies that \(\phi _2\) is also a supersolution for \(F-F_*\). Hence, by the maximum principle,

where \(M_1\) is independent of \(\epsilon \) and \(\tau \). Using a scaling argument as before, we further have

Combining the above analysis, we find that condition (ii) of Lemma 3.3 is satisfied for the vector \(\Big \{ \frac{1}{M_1}\Vert L-L_*\Vert _{L^\infty }, \frac{1}{M_1}\Vert H-H_*\Vert _{L^\infty }, \frac{1}{M_1}\Vert F-F_*\Vert _{L^\infty }, \frac{\epsilon }{M_1 C_s}\Vert \nabla (F-F_*)\Vert _{L^\infty }, \frac{1}{2\widetilde{C}}\Vert p-p_*\Vert _{L^\infty }, \frac{\epsilon }{2 C_s\widetilde{C}}\Vert \nabla (p- p_*)\Vert _{L^\infty } \Big \}\). Moreover, these estimates are also valid for the case \(\delta =0\) without the assumptions (4.53)–(4.56) since the right-hand sides are all zero in this case, so that condition (i) of Lemma 3.3 is satisfied. Condition (iii) is obvious, thus the proof is complete. \(\square \)

Based on Lemma 4.3, we further get, by applying the Schauder estimates on the equations for \(L-L_*\), \(H-H_*\), \(F-F_*\) and \(p-p_*\), the following lemma.

Lemma 4.4

Let \(\epsilon \) be sufficiently small. For \(|\tau |\ll \epsilon \) and \(\Vert S\Vert _{C^{4+\alpha }([0,T])}\le 1\),

where C is independent of \(\tau \), but is dependent upon \(\epsilon \).

4.2 Second-order \(\tau \) estimates

We now derive second-order \(\tau \) estimates. Notice that \(L_1\), \(H_1\), \(F_1\) and \(p_1\) are all defined in \(\Omega _*\), while \(L-L_*\), \(H-H_*\), \(F-F_*\) and \(p-p_*\) are defined in \(\Omega _\tau \), so we first need to transform the domain of \(L_1\), \(H_1\), \(F_1\) and \(p_1\) from \(\Omega _*\) to \(\Omega _\tau \). All our functions are independent of \(\theta \), and we introduce a transformation \(Y_\tau \):

We point out that \(Y_\tau \) maps \(\Omega _*\) onto \(\Omega _\tau \) and the inverse transformation \(Y_\tau ^{-1}\) maps \(\Omega _\tau \) onto \(\Omega _*\). Set

then \({\overline{L}}_1\), \({\overline{H}}_1\), \({\overline{F}}_1\), \({\overline{p}}_1\) and \(L-L_*\), \(H-H_*\), \(F-F_*\), \(p-p_*\) are all defined in the same domain \(\Omega _\tau \) so that we can establish the second-order \(\tau \) estimates.

For the equations (4.30)-(4.42), using the same techniques as in the proof of Lemma 4.3 and recalling Lemma 4.4, we can show that \(L_1\), \(H_1\), \(F_1\in C^{4+\alpha }(\Omega _*)\) and \(p_1\in C^{2+\alpha }(\Omega _*)\) (or, equivalently, \({\overline{L}}_1,\ {\overline{H}}_1,\ {\overline{F}}_1\in C^{4+\alpha }(\Omega _\tau )\) and \({\overline{p}}_1\in C^{2+\alpha }(\Omega _\tau )\)). Their Schauder estimates may depend on \(\epsilon \), but it is crucial that the \(L^\infty \) estimates are independent of \(\epsilon \) and \(\tau \).

Lemma 4.5

Let \(\epsilon \) be sufficiently small. For \(|\tau |\ll \epsilon \) and \(\Vert S\Vert _{C^{4+\alpha }([0,T])}\le 1\), the following estimates hold:

where C is independent of \(\epsilon \) and \(\tau \).

Proof

We take the derivation of the estimate for \(F-F_*-\tau {\overline{F}}_1\) as an example. The estimates for \(L-L_*-\tau {\overline{L}}_1\), \(H-H_*-\tau {\overline{H}}_1\) and \(p-p_*-\tau {\overline{p}}_1\) are similar and are actually easier.

The first step in deriving the second-order \(\tau \) estimate is to calculate the equation for \(F-F_*-\tau {\overline{F}}_1\). Recalling the transformation \(Y_\tau \) in (4.64), \({\overline{F}}_1(r,z)=F_1(Y_\tau ^{-1}(r,z))\) and (4.32), we obtain the equation for \({\overline{F}}_1\) in \(\Omega _\tau \),

where \({\overline{f}}^F\) comes from various terms of the transformation \(Y_\tau \) and it involves at most second order derivatives of \(\tau S\), hence

Combining (4.3), the equation (2.19) for \(F_*\) and (4.67), we derive that \(F-F_*-\tau {\overline{F}}_1\) satisfies

where by Lemma 3.4, I is written as, for bounded functions \(b_{11}(r), b_{12}(r),\) and \(b_{13}(r)\),

and II is bounded by \(|(L-L_*,H-H_*,F-F_*)|^2\), hence

here we have used Lemma 4.3.

In order to estimate \(F-F_*-\tau {\overline{F}}_1\), we rewrite the gradient terms of the left-hand side of (4.68) as

then (4.68) yields

By Lemma 4.3,

Furthermore, it follows from (4.33), (4.38) and \({\overline{p}}_1(r,z)=p_1(Y_\tau ^{-1}(r,z))\) in (4.66) that \({\overline{p}}_1\) satisfies

where \({\overline{f}}^p\) is generated after applying the transformation \(Y_\tau \), hence as \({\overline{F}}_1\),

Then we derive

as \(S\in C^{4+\alpha }\); using the same technique as in Lemma 4.2, we shall get

hence

for a constant C which is independent of \(\epsilon \) and \(\tau \). Together with (4.70), we obtain

Combining with the estimates we derived before, we have

Notice that the above inequality has a structure similar to that of (4.61), and the presence of \(\epsilon \) in the denominator does not cause a problem, since we gain the extra factor \(\frac{1}{\epsilon }\) on the right-hand side when we apply our operator to our supersolutions. Hence we can use the same technique and a similar supersolution to establish

and

Therefore, our proof is complete. \(\square \)

Following Lemma 4.4, we further have

Lemma 4.6

Fix \(\epsilon \) sufficiently small. If \(|\tau |\ll \epsilon \) and \(\Vert S\Vert _{C^{4+\alpha }([0,T])}\le 1\), then

where C is independent of \(\tau \), but is dependent on \(\epsilon \).

Remark 4.3

The estimates (4.71)-(4.74) are uniformly valid for \(|\tau |\) small and \(\Vert S \Vert _{C^{4+\alpha }([0,T])}\le 1\), which implies that the expansions in (4.26)-(4.29) are rigorous. By now, we are ready to compute the Fréchet derivatives of \({\mathscr {F}}\). As in the proof of (Zhao and Hu 2021, Lemma 3.6), we can derive that the Fréchet derivatives of \({\mathscr {F}}({\widetilde{R}},\mu )\) at the point \((0,\mu )\) are given by

By (4.75), we find that the mapping \({\mathscr {F}}(\cdot ,\mu ): X^{4+\alpha }_1 \rightarrow X^{1+\alpha }_1\) is continuous with continuous first-order Fréchet derivatives, and the same argument shows that this is also true for Fréchet derivatives of any order. The formula (4.75) accomplishes the following: (a) the mapping \({\mathscr {F}}\) is Fréchet differentiable at \({{\widetilde{R}}}=0\), and (b) the Fréchet derivative at \({{\widetilde{R}}}=0\) is given explicitly by the formula on the right-hand side. Since \(\mu \) is a scalar, the Fréchet derivatives in \(\mu \) coincide with the regular derivatives in \(\mu \), which are much simpler to derive rigorously. Notice that the above argument shows that differentiability is eventually reduced to the regularity of the corresponding PDEs, and an explicit formula is not needed if we are only interested in differentiability. Therefore, a similar argument shows that this mapping is Fréchet differentiable in \(({\widetilde{R}},\mu )\); furthermore, \({\mathscr {F}}_{{\widetilde{R}}}({\widetilde{R}},\mu )\) (or \({\mathscr {F}}_\mu ({\widetilde{R}},\mu )\)) is obtained by solving a linearized problem about \(({\widetilde{R}},\mu )\) with respect to \({\widetilde{R}}\) (or \(\mu \)). By using the Schauder estimates we can then further obtain the differentiability of \({\mathscr {F}}({\widetilde{R}},\mu )\) to any order.

5 Bifurcations - Proof of Theorem 2.2

In this section, we shall employ the Fréchet derivatives of \({\mathscr {F}}\) obtained in (4.75) and (4.76) to verify the four conditions of the Crandall-Rabinowitz theorem and complete the proof of Theorem 2.2. Since \(p_1\) cannot be solved explicitly, we need to derive sharp estimates for it. Note that the estimate on \(p_*\) is given by (3.4) and (3.5).

5.1 Estimates for \(p_1\)

Set \(S(z)=\cos \Big (\frac{2\pi n}{T} z\Big )\) in the linearized system (4.30)-(4.38). It is clear that \(S'(0)=S'(T)=0\). Using a separation of variables, we seek a solution of the form

Substituting (5.1) and (5.2) into the equations (4.30)-(4.38), we derive that \((L_1^n(r),H_1^n(r),F_1^n(r),p_1^n(r))\) satisfies

where \(f_i\) \((i=1,2,3,4)\) are defined in (4.39)-(4.42). It has been shown earlier that the solution \((L_1^n(r),H_1^n(r),F_1^n(r),p_1^n(r))\) to the system (5.3)-(5.11) is unique. We now proceed to determine the structure of the solution and derive the estimates needed to complete our proof of bifurcation.

For simplicity of the computation, we make the boundary conditions (5.8)-(5.10) homogeneous by setting

Accordingly, \({\widetilde{L}}_1^n(r)\), \({\widetilde{H}}_1^n(r)\), \({\widetilde{F}}_1^n(r)\) satisfy the following equations:

where

and \(p_1^n\) is defined by (5.6) and (5.11).

From now on we shall write \(m = \frac{2\pi n}{T}\), where \(n=0,1,2,\cdots \). Thus m takes values \(0, \frac{2\pi }{T}, \frac{4\pi }{T}, \frac{6\pi }{T}, \ldots \).

Lemma 5.1

Let \(\psi \) be a solution of

where \(\eta \) is a constant and \(m\ge 0\) is defined as above. Then the solution is given by

with

and

The special solution Q[f](r) satisfies

and

where C is independent of \(\varepsilon \) and m.

Proof

Recall from (10.25.1) of Frank et al. (2010) that \(I_0(\xi )\) and \(K_0(\xi )\) are two independent solutions of the equation \(\frac{d^2w}{d\xi ^2} + \frac{1}{\xi }\frac{dw}{d\xi } - w =0\), and they also satisfy ((10.29.3), (10.28.2) of Frank et al. (2010))

Using these identities, we find that \(I_0(m r)\) and \(K_0(m r)\) are our solutions to the homogeneous problem and Q[f](r) is a solution for the non-homogeneous problem. Moreover, the identities ((10.29.2) of Frank et al. (2010))

or,

are also very useful.
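The Bessel-function facts used here can be checked numerically. The following is a minimal Python sketch and not part of the original analysis: the helper names `bessel_i`, `bessel_k`, `derivative` and `ode_residual`, as well as the sample values of T, n and r, are assumptions made for illustration. It evaluates \(I_\nu \) by its power series and \(K_\nu \) by the integral representation \(K_\nu (x)=\int _0^\infty e^{-x\cosh t}\cosh (\nu t)\,dt\), and then tests the standard identities \(I_0'=I_1\), \(K_0'=-K_1\), the Wronskian relation \(I_0K_1+I_1K_0=1/x\), and the fact that \(I_0(mr)\) and \(K_0(mr)\) satisfy \(\psi ''+\frac{1}{r}\psi '-m^2\psi =0\), which follows from the quoted equation for w by substituting \(\xi =mr\).

```python
import math

def bessel_i(nu, x):
    """I_nu(x) for nu in {0, 1}, x >= 0: sum_k (x/2)^(2k+nu) / (k! (k+nu)!)."""
    term = 1.0 if nu == 0 else 0.5 * x       # k = 0 term of the series
    total, k = term, 0
    while abs(term) > 1e-17 * abs(total):
        k += 1
        term *= 0.25 * x * x / (k * (k + nu))
        total += term
    return total

def bessel_k(nu, x, h=0.05):
    """K_nu(x) for moderate x > 0: trapezoid rule for
    int_0^inf exp(-x cosh t) cosh(nu t) dt."""
    if x <= 0:
        raise ValueError("x must be positive")
    total, k = 0.5 * math.exp(-x), 1         # t = 0 endpoint has weight 1/2
    while x * math.cosh(k * h) < 700.0:      # later terms are negligible
        t = k * h
        total += math.exp(-x * math.cosh(t)) * math.cosh(nu * t)
        k += 1
    return h * total

def derivative(f, x, h=1e-4):
    """Central finite-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

def ode_residual(psi, r, m, h=1e-3):
    """Finite-difference residual of psi'' + psi'/r - m^2 psi at r."""
    d1 = (psi(r + h) - psi(r - h)) / (2 * h)
    d2 = (psi(r + h) - 2 * psi(r) + psi(r - h)) / (h * h)
    return d2 + d1 / r - m * m * psi(r)

if __name__ == "__main__":
    T, n = 2.0, 1                            # hypothetical period and mode number
    m = 2 * math.pi * n / T
    x, r = 1.5, 0.95                         # hypothetical sample points
    print("I0' - I1      :", derivative(lambda s: bessel_i(0, s), x) - bessel_i(1, x))
    print("K0' + K1      :", derivative(lambda s: bessel_k(0, s), x) + bessel_k(1, x))
    print("Wronskian-1/x :", bessel_i(0, x) * bessel_k(1, x)
          + bessel_i(1, x) * bessel_k(0, x) - 1 / x)
    print("residual I0   :", ode_residual(lambda s: bessel_i(0, m * s), r, m))
    print("residual K0   :", ode_residual(lambda s: bessel_k(0, m * s), r, m))
```

All printed quantities should be close to zero, up to finite-difference and quadrature error. The pure-Python helpers are chosen only to keep the sketch dependency-free; a library implementation of the modified Bessel functions could be substituted.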

For \(m\ne 0\), it follows from (5.28), (5.33) and the last property of (5.32) that

Throwing away the negative terms, we obtain

Recall from (10.30.4) and (10.25.3) in Frank et al. (2010) that, for fixed real \(\nu \ge 0\),

then there exists a large \(m_0\) such that for \(m>m_0\),

where C is independent of \(\varepsilon \) and m. For \(m=\frac{2\pi }{T},\frac{4\pi }{T},\cdots , \frac{4\pi }{T}\Big (\Big [\frac{m_0 T}{4\pi }\Big ]+1\Big )\), we clearly have, for \(1-\epsilon \le r\le 1\),

Combining (5.36), (5.38) and (5.39), we derive, for \(m\ne 0\),

Since for fixed \(\xi >0\) (see (10.37) of Frank et al. (2010)),

then together with (5.28), (5.32) and (5.33), a direct computation shows, for \(m\ne 0\),

where we utilized the last property of (5.32) in deriving the last inequality. Using a method similar to that in (5.38) and (5.39), we also obtain

This completes all the estimates for the case \(m\ne 0\). The case \(m=0\) is similar and is actually easier. \(\square \)
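The large-m step of the proof relies on the leading-order behaviour of the modified Bessel functions for large argument, namely \(I_\nu (\xi )\approx e^{\xi }/\sqrt{2\pi \xi }\) and \(K_\nu (\xi )\approx \sqrt{\pi /(2\xi )}\,e^{-\xi }\), which we take to be the content of the handbook formulas cited above. The following Python sketch illustrates how quickly these approximations become accurate; the function names and the sample arguments are hypothetical and are not taken from the paper.

```python
import math

def bessel_i(nu, x):
    """I_nu(x) for nu in {0, 1}, x >= 0, by its power series."""
    term = 1.0 if nu == 0 else 0.5 * x       # k = 0 term of the series
    total, k = term, 0
    while abs(term) > 1e-17 * abs(total):
        k += 1
        term *= 0.25 * x * x / (k * (k + nu))
        total += term
    return total

def bessel_k(nu, x, h=0.05):
    """K_nu(x) for moderate x > 0: trapezoid rule for
    int_0^inf exp(-x cosh t) cosh(nu t) dt."""
    if x <= 0:
        raise ValueError("x must be positive")
    total, k = 0.5 * math.exp(-x), 1         # t = 0 endpoint has weight 1/2
    while x * math.cosh(k * h) < 700.0:      # later terms are negligible
        t = k * h
        total += math.exp(-x * math.cosh(t)) * math.cosh(nu * t)
        k += 1
    return h * total

def scaled_ratios(nu, x):
    """Ratios of I_nu(x) and K_nu(x) to their leading-order asymptotics."""
    ratio_i = bessel_i(nu, x) * math.sqrt(2 * math.pi * x) * math.exp(-x)
    ratio_k = bessel_k(nu, x) * math.sqrt(2 * x / math.pi) * math.exp(x)
    return ratio_i, ratio_k

if __name__ == "__main__":
    for x in (5.0, 10.0, 20.0, 40.0):        # hypothetical sample arguments
        for nu in (0, 1):
            ri, rk = scaled_ratios(nu, x)
            print(f"x={x:5.1f} nu={nu}  I-ratio={ri:.5f}  K-ratio={rk:.5f}")
        # The product I_1 K_1 behaves like 1/(2x) for large x.
        print(f"         2x*I1*K1 = {2 * x * bessel_i(1, x) * bessel_k(1, x):.6f}")
```

Both ratios tend to 1 as the argument grows, which is the mechanism that allows a threshold \(m_0\) to be chosen independently of \(\epsilon \); the finitely many remaining modes are then handled separately, as in the proof.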

It is straightforward to verify:

Lemma 5.2

If we further assume the solution of (5.25) and (5.26) satisfies \(\psi (1-\epsilon ) = G\), then, for \(m\ne 0\),

and for \(m=0\),

Lemma 5.3

Define, for \(1-\epsilon \le r\le 1\), \(m = \frac{2\pi n}{T}\), \(n=0,1,2,\cdots \),

Then the following estimates hold:

where the constant M is independent of \(\epsilon \) and m (or n).

Proof

Since \(I_1(\xi )\) is increasing and \(K_1(\xi )\) is decreasing (see (10.37) of Frank et al. (2010)), we have \(I_1(mr)K_1(m) - I_1(m)K_1(mr) < I_1(m)K_1(m) - I_1(m)K_1(m) = 0\) for \(r<1\). It follows that

By (5.37), we obtain that for \(m = \frac{2\pi n}{T}\), \(n>n_0\) (\(n_0\) large enough),

For each \(n\in [0,n_0]\), there exists \(C_n\), which is independent of \(\epsilon \), such that

Let \(M=\max \Big \{C_0,C_1,\cdots ,C_{n_0}, 2\Big \}\). Then by the above analysis, we have

where M is independent of \(\epsilon \) and n.

For \(n>n_0\) (\(n_0\) large enough), it follows from (5.37) that, for \(1-\epsilon \le r\le 1\),

For each \(n\in [0,n_0]\), it is obvious that \(|W_2(r,m)| \le {\widetilde{C}}_n\) for \(1-\epsilon \le r\le 1\). Therefore, our proof is complete. \(\square \)
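The sign argument at the start of the proof of Lemma 5.3 can be illustrated numerically. The sketch below, written under stated assumptions, evaluates \(I_1(mr)K_1(m)-I_1(m)K_1(mr)\) on a grid in \([1-\epsilon ,1)\) for the first few modes \(m=\frac{2\pi n}{T}\) with \(n\ge 1\). The values \(T=1\) and \(\epsilon =0.1\), the grid size and all function names are hypothetical choices for illustration only.

```python
import math

def bessel_i(nu, x):
    """I_nu(x) for nu in {0, 1}, x >= 0, by its power series."""
    term = 1.0 if nu == 0 else 0.5 * x       # k = 0 term of the series
    total, k = term, 0
    while abs(term) > 1e-17 * abs(total):
        k += 1
        term *= 0.25 * x * x / (k * (k + nu))
        total += term
    return total

def bessel_k(nu, x, h=0.05):
    """K_nu(x) for moderate x > 0: trapezoid rule for
    int_0^inf exp(-x cosh t) cosh(nu t) dt."""
    if x <= 0:
        raise ValueError("x must be positive")
    total, k = 0.5 * math.exp(-x), 1         # t = 0 endpoint has weight 1/2
    while x * math.cosh(k * h) < 700.0:      # later terms are negligible
        t = k * h
        total += math.exp(-x * math.cosh(t)) * math.cosh(nu * t)
        k += 1
    return h * total

def bracket_difference(r, m):
    """I_1(m r) K_1(m) - I_1(m) K_1(m r); it vanishes at r = 1."""
    return bessel_i(1, m * r) * bessel_k(1, m) - bessel_i(1, m) * bessel_k(1, m * r)

def sample_differences(eps=0.1, T=1.0, modes=range(1, 6), points=20):
    """Values of the difference on a grid in [1 - eps, 1), for m = 2 pi n / T."""
    values = []
    for n in modes:
        m = 2 * math.pi * n / T
        for j in range(1, points + 1):
            r = 1.0 - eps * j / points       # grid point strictly below 1
            values.append(bracket_difference(r, m))
    return values

if __name__ == "__main__":
    vals = sample_differences()
    print("largest sampled value:", max(vals))   # negative if the sign claim holds
```

On this grid every sampled value is negative, in agreement with the monotonicity of \(I_1\) and \(K_1\) used in the proof. The mode \(n=0\) is excluded here because \(K_1\) is singular at the origin.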

We are ready to establish the following estimates.

Lemma 5.4

For sufficiently small \(\epsilon \), the following estimates hold:

where the constant C does not depend on \(\epsilon \) and n, but may depend on T.

Proof

We again use the idea of continuation (Lemma 3.3) to prove this lemma. To do that, we multiply the right-hand sides of (5.15)-(5.17) as well as (5.6) by \(\delta \).

Case I: \(\delta =0\). By the maximum principle, we derive that

and then (5.48) clearly holds. Moreover, \(p_1^n\) satisfies, \(m= \frac{2\pi n}{T},\; n=0,1,2,\ldots ,\)

By Lemmas 5.1 and 5.2, we find for \(n\ge 1\),

Hence, by (5.32),

It follows from Lemma 5.3 that

where C is independent of \(\epsilon \) and n, so that (5.49) holds. For the case \(n=0\), \(p_1^n(r)=\frac{1}{2(1-\epsilon )^2}\), which implies (5.49). Thus, condition (i) of Lemma 3.3 is true.

Case II: \(0<\delta \le 1\). We first assume that

where M is from Lemma 5.3.

By the definition of \({\widetilde{f}}_1\) in (5.22) and the assumption (5.54), we clearly have

Set

It is easily shown that \(\varphi _1(r)\) is a supersolution for \({\widetilde{L}}_1^n(r)\) when \(n\ge 1\), so that by (5.30),

where C is independent of n and \(\epsilon \). For the case \(n=0\), the function \(\big (\sup |{\widetilde{f}}_1|+1\big )\big [\xi (r) + c_1(\beta _1,\epsilon )\big ]\), where \(\xi (r)\) and \(c_1(\beta _1,\epsilon )\) are defined in Sect. 3.5, is a supersolution for \({\widetilde{L}}_1^n(r)\), so that \(|{\widetilde{L}}_1^n(r)|\le C\epsilon \).

Similarly, we get \( |{\widetilde{H}}_1^n(r)| \le C(n^2+1)\epsilon \). Now we establish the estimate for \({\widetilde{F}}_1^n\). It follows from (3.2), the assumptions (5.54) and (5.55) that

then recalling that Q is a linear operator and using the respective estimates in the minimum expression of (5.30), we obtain

Let \(\varphi _2(r)\) be defined by

By (3.2), i.e., \(p_*'(r) = O(\epsilon )\), we find that \(\varphi _2(r)\) is a supersolution for \(\widetilde{F}^n_1(r)\) when \(n\ge 1\) and \(\epsilon \) small, hence

For \(n=0\), \(\frac{1}{D}\big (\sup |{\widetilde{f}}_3|+1\big )\big [\xi (r) + c_1(\beta _2,\epsilon ) + c_2(\beta _2,\tau )\big ]\), where \(\xi (r)\), \(c_1(\beta ,\epsilon )\) and \(c_2(\beta ,\tau )\) are defined in Sect. 3.5, is a supersolution for \({\widetilde{F}}_1^n(r)\), then \(|{\widetilde{F}}_1^n(r)|\le C\epsilon \). Finally, we estimate \((p^n_1)'\). Recall that \(p^n_1\) satisfies

It follows from (5.54) and (5.12)-(5.14) that

then together with (5.30) and (5.31), we have

For \(n\ge 1\), taking \(\eta =0\) in Lemma 5.1 and \(G = \frac{1}{2}\Big [\frac{1}{(1-\varepsilon )^2}-m^2\Big ]\) in Lemma 5.2, we solve

where A and B are defined in Lemma 5.2, namely,

Differentiating \(p_1^n(r)\) in r, we obtain, for \(\epsilon \) sufficiently small,

where we have used Lemma 5.3 and (5.57). For the case \(n=0\), by Lemmas 5.1 and 5.2, we solve

then it follows from (5.57) that \(|(p_1^n)'|\le |Q[f_4]'(r)| \le C\epsilon \le \frac{3}{2} M \Big (\frac{2\pi }{T}\Big )^3\) for \(\epsilon \) small. Hence, condition (ii) of Lemma 3.3 is satisfied.

Since condition (iii) of Lemma 3.3 is obvious, the proof is complete. \(\square \)

We have already established the estimate (5.49) for \(\frac{\partial p_1^n(1-\epsilon )}{\partial r}\). However, this estimate is not sufficient for verifying the four conditions of the Crandall-Rabinowitz theorem. We need to make the estimate on \(\frac{\partial p_1^n}{\partial r}\) more precise at \(r=1-\epsilon \) by extracting the dominant terms in this expression. Indeed, based on (5.48) and (5.49), we have the following more delicate estimate for \(\frac{\partial p_1^n(1-\epsilon )}{\partial r}\).

Lemma 5.5

For small \(0<\epsilon \ll 1\) and \(m=\frac{2\pi n}{T},\) \(n=0,1,2,\ldots ,\) the following estimates hold:

and

where \(G=\frac{1}{2}\Big [\frac{1}{(1-\varepsilon )^2}-m^2\Big ]\), and C is independent of \(\epsilon \) and m (or n).

Proof

We know from (5.6), (5.7) and (5.11) that \(p_1^n\) satisfies

The following computation has been carried out in (Zhao and Hu 2021, (4.53)):

which is based on the estimate (5.48). Taking \(\eta = \frac{\mu }{\gamma +H_0}\) and \(f(r) = f_4 - \eta \) in Lemma 5.1, we obtain

and then

By Lemmas 5.1 and 5.2, we can explicitly solve \(p_1^n\) as

where A and B are defined in Lemma 5.2. For \(\psi _1(r)\), it follows from (5.29) that

and

When \(n\ne 0\), combining (5.42), (5.43) and (5.64), we compute the first derivative of \(p_1^n\) at \(r=1-\varepsilon \),

where \(W_1(r,m)\) and \(W_2(r,m)\) are defined in Lemma 5.3. Then by Lemma 5.3 and (5.61), we derive

which implies (5.58). For the case \(n=0\), it follows from (5.63), (5.65) and (5.61) that

hence, (5.59) holds. \(\square \)

Denote

Then

As in the proof of (Zhao and Hu 2021, Lemma 4.8), we can establish the following lemma.

Lemma 5.6

There exists a constant C which is independent of \(\epsilon \) and n such that

5.2 Proof of Theorem 2.2

In this subsection, we shall derive some estimates that are essential in the proof of our bifurcation theorem and complete the proof of this theorem. The rest of the discussion is for \(n\ne 0\).

Lemma 5.7

The function

satisfies, uniformly for all \(0<\epsilon <1\) and all \(m>0\),

Proof

As in the proof of Lemma 5.3, we have

Using (5.32) and (5.34), we derive, by a direct computation,

If \(f(1-\epsilon , m) \ge \frac{1}{2}\), then the conclusion holds immediately.

If \(f(1-\epsilon , m) < \frac{1}{2}\), then by the ODE comparison theorem, we have \(f(x, m) < \frac{1}{2}\) for all \(1-\epsilon \le x \le 1\). Therefore by the mean value theorem, for some \(1-\epsilon<y < 1\),

This completes the proof. \(\square \)

This lemma implies that

Based on the preceding preliminaries, we are finally ready to prove our main result, Theorem 2.2.

Proof of Theorem 2.2

Substituting (5.2) into (4.75), we obtain the Fréchet derivative of \({\mathscr {F}}({\widetilde{R}},\mu )\) in \({\widetilde{R}}\) at the point \((0,\mu )\), namely,

then we combine the above formula with (3.4) and (5.66) to derive

where \(J_1=J_1(\mu ,\rho _4(\mu ))\) and \(J_2^n=J_2^n(\mu ,\rho _4(\mu ))\) are respectively estimated by (3.5) and (5.67), and f is defined by (5.68).

The expression \( [{\mathscr {F}}_{{\widetilde{R}}}(0,\mu )] \cos (mz)\) is equal to zero if and only if

i.e., for \(m=\frac{2\pi n}{T}\), \(\mu ^n(\epsilon )\) satisfies the equation

By (5.71), we find that

The above limit is uniformly valid for all bounded m. Therefore, by the implicit function theorem, we obtain that for some \(\epsilon ^{**} = \epsilon ^{**}(n)\) and \(0<\epsilon <\epsilon ^{**}\), there exists a unique solution \(\mu ^n(\epsilon )\) for the equation (5.73). Notice that \( \epsilon ^{**}(n)\) may shrink to 0 as \(n\rightarrow \infty \).

Now we proceed to verify the four assumptions of the Crandall-Rabinowitz theorem to show that for \(\epsilon \) sufficiently small, \(\mu =\mu ^n(\epsilon )>\mu _c\) with the assumption (2.27) is a bifurcation point for the system (2.17)-(2.25). To begin with, we shall

and verify the conditions for this fixed \(n=n_0\). This would allow the estimates below to depend on \(n_0\).

By Theorem 2.1, it is obvious that for each \(\mu ^n(\epsilon ) > \mu _c\), we can find a small \(\epsilon ^*>0\) such that for \(0<\epsilon <\epsilon ^*\), there exists a unique solution \(\left( L_{*}(r), H_{*}(r), F_{*}(r), p_{*}(r)\right) \), i.e., \({\mathscr {F}}(0,\mu ^n(\epsilon ))=0\). Hence, the assumption (i) is satisfied. Next we shall verify the assumptions (ii) and (iii) for a fixed small \(\epsilon \). It suffices to show that for every k,

or equivalently,

where

Case I: \(k> k_0\) for some large \(k_0\). By (3.5) and (5.67), there exists a constant C which does not depend on \(\epsilon \) and k such that

Substituting (5.79) and (5.71) into (5.78), we derive

Since the leading order term is \(-\frac{1}{2}k^4\), we can easily find a bound for \(\epsilon \), denoted by \(E_1\), such that for \(0<\epsilon <E_1\) and \(k>k_0=k_0(n_0)\),

Case II: \(k\le k_0\). For this case, the proof of (5.77) is similar to that of (Zhao and Hu 2021, Page 283, Case (iii)), but we need to verify that the limiting values of \(\mu ^n(\epsilon )\) as \(\epsilon \rightarrow 0\) are all distinct from the one we are considering, namely,

With the definition from (5.74), it is easily verified that (5.80) is equivalent to the assumption (2.27), which is assumed in our theorem. This assumption is satisfied for all values of T and \(n_0\) apart from isolated exceptions. In particular, (2.27) is obviously valid if \(\frac{2\pi n_0}{T}>1\).

Combining these two cases, we obtain, for the mapping \({\mathscr {F}}: X_1^{4+\alpha }\rightarrow X_1^{1+\alpha }\),

and

which implies

Hence, the assumptions (ii) and (iii) are satisfied for a fixed small \(\epsilon \).

To finish the whole proof, it remains to show the last assumption. Differentiating (5.72) in \(\mu \), we have

It follows from (3.5) and (5.67) that there exists a constant \(C>0\), which is independent of \(\epsilon \) and \(n_0\), such that

By Lemma 5.7 and (5.81), we can choose \(E_2\) to be small such that for \(0<\epsilon <E_2\),

Therefore,

namely, the assumption (iv) is satisfied.

Taking \(E=\min \big (E_1,E_2\big )\), we derive that for \(0<\epsilon <E\), the four assumptions of the Crandall-Rabinowitz theorem are satisfied. Hence, the proof of Theorem 2.2 is complete. \(\square \)

6 Conclusion

Even though the plaque model is simplified into a reaction-diffusion free boundary system of four equations, the problem is still very challenging. It is certainly more complex than the classical Stefan problem. Through mathematical analysis, results have been established that confirm the biological phenomena. It was established in Friedman et al. (2015) that the stability of the plaque depends heavily on the balance between the “good” cholesterol and the “bad” cholesterol, and that more “good” cholesterol (or less “bad” cholesterol) induces stability or shrinkage of the plaque, a biological observation known for a long time. The result of Friedman et al. (2015) is restricted to the radially symmetric case only. The shape of the plaque, however, is unlikely to be radially symmetric, so the question of non-radially symmetric solutions arises naturally. Just as for the classical Stefan problem, non-radially symmetric solutions of the general free boundary problem are extremely challenging. As a sub-problem, it is quite reasonable to study whether non-radially symmetric stationary solutions exist, and there are plenty of biological examples of the non-radially symmetric case. In this direction, non-radially symmetric stationary solutions were produced in the cross-section direction in Zhao and Hu (2021, 2022) through a bifurcation approach. In the current paper we extend the study of non-radially symmetric stationary solutions, again through bifurcation, to the longitude direction, with the shape given in Fig. 1.

References

Calvez V, Ebde A, Meunier N, Raoult A (2009) Mathematical modelling of the atherosclerotic plaque formation. In: ESAIM: proceedings, vol 28, pp 1–12. EDP Sciences

Cohen A, Myerscough MR, Thompson RS (2014) Athero-protective effects of high density lipoproteins (HDL): an ODE model of the early stages of atherosclerosis. Bull Math Biol 76(5):1117–1142

Crandall MG, Rabinowitz PH (1971) Bifurcation from simple eigenvalues. J Funct Anal 8(2):321–340

Frank RFB, Olver WJ, Lozier Daniel W, Clark CW (2010) NIST handbook of mathematical functions. Cambridge University Press, Cambridge

Friedman A (2018) Mathematical biology, vol 127. American Mathematical Soc, New York

Friedman A, Hao W (2015) A mathematical model of atherosclerosis with reverse cholesterol transport and associated risk factors. Bull Math Biol 77(5):758–781

Friedman A, Hao W, Hu B (2015) A free boundary problem for steady small plaques in the artery and their stability. J Differ Equ 259(4):1227–1255

Gilbarg D, Trudinger N (1983) Elliptic partial differential equations of second order. Springer, New York

Hao W, Friedman A (2014) The LDL-HDL profile determines the risk of atherosclerosis: a mathematical model. PLoS ONE 9(3):e90497

McKay C, McKee S, Mottram N, Mulholland T, Wilson S, Kennedy S, Wadsworth R (2005) Towards a model of atherosclerosis. University of Strathclyde, pp 1–29

Mukherjee D, Guin LN, Chakravarty S (2019) A reaction-diffusion mathematical model on mild atherosclerosis. Model Earth Syst Environ 2:1–13

Zhao XE, Hu B (2021) Bifurcation for a free boundary problem modeling a small arterial plaque. J Differ Equ 288:250–287

Zhao XE, Hu B (2022) On the first bifurcation point for a free boundary problem modeling small arterial plaque. Math Methods Appl Sci 2:7666

Acknowledgements

The first author is supported by China Postdoctoral Science Foundation (Grant No. 2020M683014).

Additional information

Dedicated to Professor Avner Friedman on the occasion of his 90th birthday.

About this article

Cite this article

Huang, Y., Hu, B. Symmetry-breaking longitude bifurcations for a free boundary problem modeling small plaques in three dimensions. J. Math. Biol. 85, 58 (2022). https://doi.org/10.1007/s00285-022-01827-y
