Abstract

In advance of grasping a visual object embedded within fins-in and fins-out Müller-Lyer (ML) configurations, participants formulated a premovement grip aperture (GA) based on the size of a neutral preview object. Preview objects were smaller, veridical, or larger than the size of the to-be-grasped target object. As a result, premovement GA associated with the small and large preview objects required significant online reorganization to appropriately grasp the target object. We reasoned that such a manipulation would provide an opportunity to examine the extent to which the visuomotor system engages egocentric and/or allocentric visual cues for the online, feedback-based control of action. It was found that the online reorganization of GA was reliably influenced by the ML figures (i.e., from 20 to 80% of movement time), regardless of the size of the preview object, albeit the small and large preview objects elicited more robust illusory effects than the veridical preview object. These results counter the view that online grasping control is mediated by absolute visual information computed with respect to the observer (e.g., Glover in Behav Brain Sci 27:3–78, 2004; Milner and Goodale in The visual brain in action 1995). Instead, the impact of the ML figures suggests a level of interaction between egocentric and allocentric visual cues in online action control.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

An overwhelming body of evidence has shown that goal-directed reaching and grasping movements executed with continuous vision (so-called visually guided actions) are structured to take maximal use of that information for the online, feedback-based control of action (e.g., Berthier et al. 1996; Carlton 1981; Churchill et al. 2000; Connolly and Goodale 1999; Gentilucci et al. 1994; Heath 2005; Heath et al. 2004a, 2005b; Jakobson and Goodale 1991; Keele 1968; Khan et al. 2002; Meyer et al. 1988; Wing et al. 1986; Woodworth 1899; see Elliott et al. 2001 for extensive review). Moreover, it has been proposed that visually based movement corrections rely on visual information (Goodale and Westwood 2004), or a visual representation (Glover 2004), which is refractory to the context-dependent properties of pictorial illusions (e.g., Müller-Lyer [ML] or Ebbinghaus/Titchener figures). Indeed, a number of studies have shown that visually guided grasping movements are mostly immune to the illusion-evoking properties of pictorial illusions (e.g., Aglioti et al. 1995; Brenner and Smeets 1996; Heath et al. 2005a, b; Jackson and Shaw 2000; Westwood et al. 2000a, b; Westwood and Goodale 2003)Footnote 1. This finding has frequently been framed within the theoretical tenets of the perception/action model (PAM: Milner and Goodale 1995) and the assertion that real-time movement planning and online control are mediated by dedicated visuomotor mechanisms residing in the dorsal visual pathway that compute absolute (i.e., Euclidean) object metrics with respect to the observer (so-called egocentric frame of reference). It has also been proposed that movement planning and movement control are mediated by distinct visual representations that are differentially influenced by pictorial illusions (planning/control model, PCM: Glover 2004). The PCM states that the initial planning of an action is supported by a context-dependent “visual planning representation” that is sensitive to the cognitive properties of pictorial illusions. As the action unfolds, however, the PCM asserts that a context-independent “ visual control representation” gradually assumes command of the unfolding action, thus rendering the later stages of the action refractory to pictorial illusions (see Glover and Dixon 2001a, b, c, 2002). Importantly, both the PAM and PCM assert that visually based movement corrections are computed using an egocentric frame of reference that is immune to the illusion-evoking properties of pictorial illusions.

It is, however, important to note that some studies have reported that pictorial illusions influence visually guided actions. For instance, Aglioti et al’s (1995) seminal work, which is frequently cited as explicit evidence that visually guided actions are refractory to pictorial illusions, showed that the Titchener circles illusion produced an illusory effect on action that was approximately 60% of that of their perceptual judgment task. Similar results on maximum grip aperture have been linked to the ML figures (Daprati and Gentilucci 1997; Westwood et al. 2001; see also Heath et al. 2004a). Moreover, visually guided as well as memory guided pointing movements executed along the shaft of the ML figures show endpoint bias’ consistent with the perceptual effects of the illusion (Elliott and Lee 1995; de Grave et al. 2004; Gentilucci et al. 1996; Glazebrook et al. 2005). Most interestingly, Meegan et al. (2004) reported that the illusory effects of ML figures on ultimate movement endpoints were not attenuated by visually based movement corrections. Those findings suggest that online corrections do not unfold entirely on the basis of absolute visual information; rather, it appears that online limb adjustments entail collaboration between egocentric and allocentric (i.e. scene-based) visual frames of reference.

The view that egocentric and allocentric visual frames interact to support the online, feedback-based control of action is congruent with evidence showing that geometric objects or contextual features—apart from illusory arrays—surrounding a target facilitate reaching/grasping movements. For example, Conti and Beaubaton (1980) and Velay and Beaubaton (1986) reported that visually guided reaching movements were more accurate when actions were directed to a target embedded in a structured visual background (i.e., a grid-like pattern surrounding the target) than when reaching movements were completed to a target in an otherwise empty or neutral visual background. More recent research has suggested that contextual features surrounding a target enhance the accuracy and effectiveness of visually guided reaching/grasping movements due to the evocation of improved feedback-based limb corrections (Krigolson and Heath 2004; see also Coello and Grealy 1997). As well, it has been shown that cognitive knowledge concerning the allocentric location of an object in peripersonal space can be used to support online action control (Carrozzo et al. 2002). In other words, converging evidence suggests that allocentric visual information surrounding a target can be explicitly identified by a performer and used in combination with vision of the moving limb (i.e., egocentric visual cue) to facilitate online limb adjustments.

The present investigation sought to determine whether the online reorganization of an initially biased grasping posture is based on absolute visual information specified in an egocentric visual frame of reference, or whether online corrections are influenced by the interaction of egocentric and allocentric visual frames of reference. To address this question, we asked participants to formulate a premovement grip aperture (GA) based on the perceived size of a preview object presented in a neutral (i.e., empty) visual background. The preview object was 2 cm smaller, veridical, or 2 cm larger than the to-be-grasped target object. As participants maintained their premovement GA, the preview object was replaced with a target object embedded within the fins-in or fins-out ML configuration. Participants were instructed to reach out and grasp the target object coincident with its visual presentation. In this scenario, two of the initial response sets elicited a premovement GA that was “too small” or “too large” to grasp the target object; hence, appropriate grasping of the target object required online reorganization of grip aperture. Notably, previous research has shown that when grasping an object presented in a neutral visual background, the visuomotor system is able to quickly reorganize an altered premovement GA based on extrinsic task characteristics (i.e., object size). For instance, Saling et al. (1996) and Timmann et al. (1996) reported that when thumb and finger are maximally separated prior to movement onset, GA is rapidly brought into a position consistent with a normal starting position (i.e., thumb-finger together). Those results show that visual feedback permits substantial reorganization of GA during the early stages of action based on visual object properties (see also Meulenbroek et al. 2001).

In the present investigation, however, the initially “too small” and “too large” premovement GA required online reorganization on the basis of a target object presented in an illusory background (i.e., fins-in and fins-out ML figures). In this context, a reasonable prediction arising from the PAM is that metrical visual information specified in an egocentric visual frame is used to support the online reorganization of grasping kinematics, thus resulting in a grasping movement that is entirely impervious to the illusion-inducing elements of the ML figures (Milner and Goodale 1995; see also Goodale and Westwood 2004). If, however, the PCM is correct, then one would predict the independent use of allocentric and egocentric visual cues at different stages in the grasping trajectory. More specifically, the PCM predicts a dynamic illusion effect such that an early intrusion of the ML figures on action is monotonically reduced during the later stages of the response (Glover 2004). Last, a prediction drawn from the visual background literature asserts that egocentric and allocentric visual cues interact to support GA reorganization, thus rendering the early and late stages of action entirely susceptible to the ML figures (e.g., Krigolson and Heath 2004).

Methods

Participants

Participants were 15 undergraduate students from the Indiana University community (seven men, eight women) ranging in age from 20 to 22 years. All participants had normal or corrected-to-normal vision and were right-handed as determined by a modified version of the University of Waterloo Handedness Questionnaire (Bryden 1977). All participants signed consent forms approved by the Human Subjects Committee, Indiana University, and this study was conducted in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki.

Apparatus

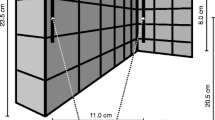

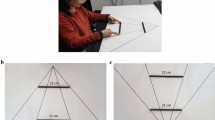

Visual stimuli were preview objects presented in a neutral visual background and target objects embedded within fins-in and fins-out ML configurations (30° fin angles). The preview objects were graspable 3, 5, 7 and 9 cm long rectangular bars (0.7 cm height x 0.7 cm width) painted flat black and centered on individual sheets of white paper (15x9 cm). The target objects were graspable 5 and 7 cm long (0.7 cm height x 0.7 cm width) rectangular bars painted flat black and placed over the horizontal shaft of appropriately sized fins-in and fins-out ML figures (i.e., the length of the horizontal shaft between ML vertices was 5 and 7 cm). ML figures were printed in black ink and centered on individual sheets of white paper (15x9 cm). The long axis of preview and target objects was oriented perpendicular to the midline of participants and presented on a blackened table surface at a distance of 35 cm from a home position (i.e., a telegraph key located 5 cm from the front edge of the table top). Grasping of the target object required 35 cm of limb displacement in the depth plane. Vision of the grasping environment was controlled via liquid-crystal shutter goggles (PLATO Translucent Technologies, Toronto, ON, Canada) interfaced to Eprime software (ver 1.0). The ML configurations used in this investigation have been shown to reliably influence manual estimates of object size (Heath and Rival 2005; Heath et al. 2004a; Westwood et al. 2000a, b, 2001).

Procedure

Participants stood during the duration of the experiment and grasped the target object “as quickly and accurately as comfortable” along its long axis using thumb and index finger. In advance of each trial, the shutter goggles were set in their opaque state until the appropriate preview object could be positioned on the tabletop. During this time, participants rested the medial surface of their right hand (i.e., the grasping hand) on the home position with thumb and index finger pinched lightly together. Once a preview object was positioned (i.e., 3, 5, 7 or 9 cm), the goggles were placed in their translucent state for a 2000 ms preview period. During the preview period, participants were instructed to formulate a stable grip aperture (SGA) based on the perceived size of the preview object by adjusting the separation between their thumb and index finger. At the end of the preview period, the goggles reverted to their opaque state for a 1500 ms “exchange period”. The exchange period was used so that the preview object could be replaced with one of the four target object arrays (i.e., fins-in 5 cm, fins-out 5 cm, fins-in 7 cm, fins-out 7 cm). Importantly, participants were instructed to maintain SGA during the exchange period. Following the exchange period, the goggles were set to their translucent state and participants were concurrently cued (via auditory tone) to grasp the newly presented target object.

The 3, 5, and 7 cm preview objects were presented in advance of grasping the 5 cm target object in each ML configuration (i.e., fins-in 5 cm and fins-out 5 cm). Similarly, the 5, 7, and 9 cm preview objects were presented in advance of grasping the 7 cm target object in each ML configuration (i.e., fins-in 7 cm and fins-out 7 cm). This combination of preview and target object allowed that SGA was based on an object 2 cm smaller, veridical, or 2 cm larger than size of the to-be-grasped target object. Participants completed 8 trials for each of the preview object, target object and ML background combinations specified above for a total of 96 experimental trials. Because the 5 and 7 cm preview objects were presented in advance of grasping both the 5 and 7 cm target objects, those preview objects were presented with greater frequency (i.e., 32 trials each) than the 3 and 9 cm preview objects (i.e., 16 trials each). The presentation of preview object, target object and ML configuration was ordered using the Eprime randomization protocol.

Data collection and reduction

We placed infrared-emitting diodes (IREDs) on the lateral edge of the index finger, the medial edge of the thumb, and the styloid process of the right wrist. The three-dimensional IRED position data were captured using an OPTOTRAK 3020 motion analysis system (NDI, Waterloo, ON, Canada) sampling at 200 Hz. Offline, we filtered displacement data via a second-order dual-pass Butterworth filter employing a low-pass cut-off frequency of 15 Hz. Subsequently, we differentiated displacement data using a three-point central finite difference algorithm to obtain instantaneous velocities. Movement onset was marked as the first sample frame in which resultant wrist velocity exceeded 50 mm/s for ten consecutive frames (50 ms). Similarly, movement offset was determined by the first sample frame in which resultant wrist velocity dropped below a value of 50 mm/s for ten consecutive frames (50 ms).

Dependent variables and statistical analyses

The dependent variables used in this investigation included: reaction time (RT: time from response cuing to movement onset) and movement time (MT: time from movement onset to movement offset). In line with other recent work (e.g., Danckert et al. 2002; Franz 2003; Glover and Dixon 2001a, b, c, 2002; Heath et al. 2004a, b, 2005a, b), we computed grip aperture (GA: absolute distance between thumb and index finger) at discrete points in the reaching trajectory to examine the extent to which the premovement manipulation of grasping posture influenced unfolding grasping control. Specifically, we measured GA at six time-points: a premovement interval (i.e., SGA formulation) and at 0, 20, 40, 60 and 80% of MTFootnote 2.

For all statistical analyses, an alpha level of 0.05 was set, and where appropriate, F-statistics were corrected for violations of the sphericity assumption using the appropriate Huynh-Feldt correction (corrected degrees of freedom reported to one decimal place). Simple effects analyses and, in some cases, examination of power polynomials were used to decompose significant main effects and interactions. Note that in most analyses (see below), we refer to a ‘small preview object’ if the size of the preview object was 2 cm less than the target object. Similarly, we use the term ‘veridical preview object’ if the size of the preview object matched the target object. Lastly, we use the term ‘large preview object’ to represent a preview object that was 2 cm more than the target object.

Results

The first analysis was designed to address two questions: first, to determine whether SGA remained stable from initial formulation until the time of response cuing, and second, to determine whether participants scaled their initial grasp posture in relation to the different sized preview objects. To that end, GA values for the preview objects (3, 5, 7 and 9 cm) were computed during the initial preview period (i.e., at SGA) and the time of response cuing (i.e., 0% of MT) and were subjected to 2 Time (SGA, 0% of MT) by 4 Preview Object (3, 5, 7, and 9 cm) repeated measures ANOVA. As expected, premovement GA scaled in relation to the size of the preview object, F(3, 42)=724.94, P<.0001. The 3 cm preview object produced the smallest SGA (39±1 mm), followed by step-wise increases for the 5 cm (56±1 mm), 7 cm (74±1 mm) and 9 cm (94±2) preview objects (only linear effect significant: F(1,14)=924.27, P<.0001). We did not observe a significant effect of Time (F<1), thus demonstrating that participants were able to maintain a constant premovement grasping posture from SGA until response cueing (see Figs. 1 and 2).

Average grip aperture (mm) data for an exemplar participant at stable grip aperture (SGA) and eleven normalized time-points (i.e., 0, 10...90, 100% of MT) as a function of small (top panel), veridical (middle panel), and large (bottom panel) preview objects and the 5 and 7 cm target objects embedded within fins-out and fins-in ML configurations. Error bars represent the within-participant SEM

The effect of the fins-out and fins-in ML configurations on absolute grip aperture (mm) averaged across participants as a function of stable grip aperture (SGA), 0, 20, 40, 60, and 80% of MT for the different preview objects (small = hatched and dotted line, veridical = solid line, large = dotted line). The offset bar graph represents illusion effects (fins-out minus fins-in) at 0, 20, 40, 60, and 80% of MT for the different preview objects. Error bars represent between-participant SEM

Figure 1 presents averaged GA profiles for an exemplar participant in each preview object, target object and ML background combination. A number of important elements can be gleaned from this figure. First, Fig. 1 shows that the different sized preview objects influenced premovement GA formulation as well as the early and middle stages of the unfolding grasping trajectory. It can be noted, however, that the later stage of the grasping trajectory was reorganized to meet the characteristics of the to-be-grasped target object (cf. Saling et al. 1996). Second, the exemplar participant demonstrates that the ML figures influenced the early stages of grasping (i.e., between 20 and 40% of MT) and remained present for upwards of 90% of MT. Of course, at the end of the grasping response (i.e., 100% of MT), the participant had physically touched the target object, thereby removing the impact of the illusion.

GA data were submitted to 5 Time (0, 20, 40, 60, and 80% of MT) by 3 Preview Object (small, veridical, large) by 2 Illusion (fins-in, fins-out) by 2 Target Size (5 cm, 7 cm) repeated measures ANOVA. Results for GA elicited significant effects for Time, F(2.4, 34.3)=20.90, P<.001; Preview Object, F(2, 28)=177.47, P<.001; Illusion, F(1, 14)=49.56, P<.001; and Target Size, F(1, 14)=442.62, P< .001, as well as interactions involving Time by Preview Object, F(2.9, 41.9)=181.43, P<.001; Time by Illusion, F(3.3, 46.6)=14.96, P<.001; Preview Object by Illusion, F(2, 28)=4.37, P<.03; and Time by Preview Object by Illusion, F(8, 112)=2.05, P<.04. As expected, the Target Size effect indicated that the 5 cm target yielded smaller GA values than the 7 cm target. In terms of the Time by Preview Object interaction, a clear separation between small, veridical, and large preview objects was observed at 0% (F (2,28)=822.58, P<.001), 20% (F (2, 28)=305.58, P<.001), and 40% (F(2, 28)=44.39, P<.001) of MT. At 60 and 80% of MT, GA values for the different preview objects no longer differed (60% of MT: F(2, 28)=1.99, P>.05; 80% of MT: F(2, 28)=1.54, P>.05) (Fig. 2). Examination of the Time by Illusion interaction showed that GA for the ML figures did not differ at 0% of MT (t(14)=1.02, P>.05); however, at 20% (t(14)=4.32, P<.01), 40% (t(14)=7.08, P< .001), 60% (t(14)=6.93, P<.001) and 80% (t (14)=6.82, P<.001) of MT, the fins-in figure elicited smaller GA values than the fins-out figure. In terms of the Time by Preview Object by Illusion interaction, we elected to decompose this interaction without including 0% of MT because our analysis above showed that the ML figures did not influence grasping at this time point. In terms of 20% through 80% of MT, we found that the fins-out ML figure produced larger GA values than the fins-in figure, regardless of whether a small, veridical, or large preview object was presented, ts(14)=6.84, 4.78, 5.62, respectively, ps<.001 (Fig. 2).

We elected to further examine the Time by Illusion and Preview Object by Illusion interactions noted above via examination of illusion effects (GA for the fins-out ML figure minus GA for the fins-in ML figure). Illusion effects were examined via 4 Time (20, 40, 60, 80% of MT) by Preview Object (small, veridical, large) by Target Size (5 cm, 7 cm) repeated measures ANOVA. We did not use 0% of MT in this analysis because we previously established (see above) null illusion effects at this time point. The illusion effects data elicited significant effects for Time, F(2.2, 31.1)=5.65, P<.01, and Preview Object, F(2, 28)=3.71, P<.04. The magnitude of illusion effects increased from 20 to 40% of MT and then reversed to asymptote at 60% through 80% of MT (significant third-order polynomial: F(1, 14)=10.57, P<.01) (Fig. 2). The effect for Preview Object indicated that illusion effects for the veridical preview objects were less than the small (t(14)=2.75, P<.02) or the large (t(14)=2.67, P<.04) preview objects (which did not differ) (t(14)=0.79, P>.43) (Fig. 2).

RT and MT data were subjected to 3 Preview Object (small, veridical, large) by 2 Illusion (fins-in, fins-out) by Target Size (5 cm, 7 cm) repeated measures ANOVA. The analyses of RT (Grand Mean = 259±12 ms) and MT (Grand Mean = 656±38 ms) did not yield significant effects or interactions.

Discussion

We found that SGA (a manual estimate of object size) scaled in relation to the size of the preview object and influenced the early stages of the grasping response (i.e., 20–40% of MT). As the action unfolded, however, the differently sized preview objects no longer influenced grasp parameters (i.e., 60–80% of MT). Moreover, and in spite of the altered premovement GA used here, Figs. 1 and 2 demonstrate the characteristic attainment of maximum GA at 60–70% of MT (see Jeannerod 1984). Thus, and in accordance with other work, substantial online reorganization of the altered premovement GA was undertaken as the hand approached the target object (Saling et al. 1996; Timmann et al. 1996). Most interestingly, we observed that the online reorganization was influenced by the ML figures (20–80% of MT), regardless of the size of the preview object, albeit with the small and large preview objects eliciting more robust illusory-effects than the veridical preview object.

Recall the PAM’s assertion that the real-time planning (e.g., Danckert et al. 2002; Haffenden et al. 2001; Haffenden and Goodale 1998; Hu and Goodale 2000; Hu et al. 1999) and online control (Westwood et al. 2000a, b) of visually guided action is mediated by dorsal stream visuomotor mechanisms that process absolute object metrics in an egocentric visual frame of reference (see Goodale and Westwood 2004 for recent review). Hence, a reasonable prediction arising from the PAM is that online GA reorganization is based on visual feedback that is immune to the illusion-inducing elements of the ML figures because dorsal stream mechanisms operate without the influence of the top–down contextual information driving cognitive illusions. Notably, this assertion is consistent with the previous work from the NeuroBehavioral Laboratory at Indiana University showing that consecutively executed visually guided grasping movements initiated with a typical start posture (i.e. thumb and finger together) are entirely immune (i.e., 20–80% of MT) to the same ML figures used in the present research (Heath et al. 2005a, b). The present findings however, showed that the altered premovement GA postures elicited reliable illusory effects for upwards of 80% of MT, a finding that is not consistent with the PAM.

In terms of the two-component PCM, a context-dependent “visual planning representation” and a context-independent “visual control representation” are thought to mediate the early and later stages of action resulting in a dynamic illusion effect (Glover 2004). In support of that position, Glover and Dixon (2002) showed that the Titchener circles illusion had an early effect on GA that was continuously reduced as the hand approached the target (i.e., 40–100% of MT) (see also Glover and Dixon 2001a, b, c). In keeping with the PCM, the present results showed a dynamic illusion effect such that an early increase in the illusion on action (i.e., from 20 and 40% of MT) was reduced at 60% of MT. It is, however, important to note that the reduction of the illusion was not continuous. Indeed, a reliable and constant impact of the ML figures was observed from 60 to 80% of MT, thus suggesting that the early and late stages of GA reorganization were influenced, albeit asymmetrically, by the context-dependent properties of the stimulus array.

The important question that remains is why the online reorganization of GA was influenced by the ML figures. One possibility is that the instantiation of a premovement GA disrupted the normally online operation of the visuomotor system and resulted in a grasping trajectory specified primarily offline via perception-based visual information. This argument stems from recent work by Heath and colleagues wherein participants adopted a premovement GA based on the perceived size of to-be-grasped target objects embedded with ML figures (Heath and Rival 2005; Heath et al. 2004a). In that paradigm, premovement GA was biased in a direction consistent with the perceptual effects of the ML figures. Notably, the biased premovement GA influenced ensuing motor output such that GA values associated with the fins-out ML figure were larger than GA values associated with the fins-in ML figure for upwards of 80% of MT. It was reasoned that advance scaling of GA based on the size of a target object embedded within the ML configuration encouraged participants’ use of perception-based (Milner and Goodale 1995) or offline (Glover 2004) visual information to support not only premovement GA but also the spatiotemporal characteristics of GA during the response. This explanation, however, does not appear to adequately account for the present results for at least two reasons. First, the current investigation presented the target object to participants only at the time of response cuing, thus precluding advance perception-based or extensive offline planning of the response (see Heath 2005; Heath et al. 2004b). In other words, even though the occlusion period used in this investigation mandated participants’ maintenance of a premovement GA based on either a stored sensory representation of the neutral preview object (Heath and Westwood 2003) or a stored postural value (Rosenbaum et al. 1993)—evidence from the extant literature suggests that unfolding grasping trajectories were specified on the basis of visual information available to the performer at the time of response cuing and not before (so-called real time control hypothesis; Klapp 1975, Westwood and Goodale 2003; see Goodale and Westwood 2004 for recent review). Second, because the small and large preview objects produced premovement GA values that were either “too small” or “too large” to appropriately grasp the target object, participants were required to engage real-time visual information for the online reorganization of GA.

A more tenable explanation of the present findings pertains to the interaction of egocentric and allocentric visual frames in online grasping control. Support for this proposal stems from some pictorial illusion studies as well as literature examining the impact of background visual cues (apart from illusory arrays) on visually guided actions. Concerning evidence from the pictorial illusions literature, it has been reported that single measures of visually guided grasping and pointing movements are influenced by the context-dependent properties of the ML figures. For example, Daprati and Gentilucci (1997) reported that grasping an object embedded with the ML figures reliably influenced maximum GA (see also Westwood et al. 2001). As well, the endpoints of visually guided pointing movements frequently demonstrate bias to the ML figures (Elliott and Lee 1995; Gentilucci et al. 1996). Most recently, Meegan et al. (2004) showed that in spite of feedback-based movement corrections, the spatial trajectory and ultimate movements endpoints of visually guided reaching movements were influenced by the ML figures. Concerning evidence from research investigating the influence of non-illusory background structure, it has been repeatedly shown that geometric features or contextual cues surrounding a target enhance the accuracy and effectiveness of visually guided actions (Conti and Beaubaton 1980; Coello and Grealy 1997; Velay and Beaubaton 1986). For instance, Krigolson and Heath (2004) found that reaches to a visual or remembered target surrounded by a structured visual background were controlled more online than reaches directed to a target in an empty or otherwise neutral visual background. That finding, in concert with other research (e.g., Lemay et al. 2004), has been taken as evidence that visual structure surrounding a target (i.e., allocentric frame of reference) is combined with online vision of the moving limb (i.e., egocentric frame of reference) to support highly accurate reaching and grasping movements. In other words, there is sufficient evidence from the extant literature to suggest that egocentric and allocentric visual cues interact to influence the online control of action.

One could argue that the degree to which egocentric and allocentric visual cues interact varies with the required level of online movement reorganization. This view is predicated on findings from the present investigation showing that the small and large preview objects produced more robust illusory effects than the veridical preview objects. Although dissociation between conscious perception and online (e.g., Goodale et al. 1986) and offline (Gentilucci et al. 1995) movement reorganization has been reported, the manipulation of premovement GA in the present investigation may have necessitated explicit awareness of the need for online reorganization, thus favoring putative interactions between egocentric and allocentric visual frames. More specifically, the degree to which participants are aware of the need for online reorganization might influence movement-related set (Schluter et al. 1999) and thus favor direct and/or indirect interactions that exist between the visual systems supporting egocentric and allocentric visual frames (see below). Such an explanation provides a congruent framework for understanding why the present work, but not our previous work employing a neutral premovement grasping posture (Heath et al. 2005a, b), showed visuomotor susceptibility to the ML figures. Indeed, it is entirely possible that explicit awareness of the need for online movement reorganization enhanced a cognitive allocentric representation of peripersonal space (Carrrozzo et al. 2002) and facilitated the interaction of multiple visual coordinate systems (Previc 1998; Schoumans et al. 2000).

Of course, it is possible that the visual system computes a single representation of 3D space that is used by the motor system to support actions (e.g., Franz et al. 2001). In other words, a single and context-dependent visual representation of 3D space may serve as the only source of visual information available to the visuomotor system. This idea, however, is countered by extensive neuroimaging and neuropsychology evidence indicating that anatomically distinct cortical regions mediate the computation of egocentric and allocentric space. Indeed, the lateral occipital complex, a structure in the ventral visual pathway has been shown to be involved in object recognition and scene-based tasks (i.e. allocentric-based frame of reference) (e.g., James et al. 2003). In contrast, regions within the intraparietal sulcus, and the extensive visuomotor networks of the dorsal visual pathway have been shown to play a pivotal role coding an egocentric-based representation of 3D space (e.g., Binkofski et al. 1998; Culham et al. 2003; Desmurget et al. 1999). Importantly, although allocentric visual cues are thought to be processed independently within the ventral visual pathway, relative size information may influence visuomotor control via the extensive interconnections between dorsal and ventral streams (Merigan and Maunsell 1993), or by bypassing the dorsal stream entirely and exerting influence on motor areas indirectly through projections to the prefrontal cortex (Lee and van Donkelaar 2002; Ungerleider et al. 1998). This level of direct and/or indirect connectivity between egocentric and allocentric visual maps might account for the reliable illusory effects observed in the present investigation.

Conclusions

Participants’ online reorganization of GA was reliably influenced by the illusion-evoking properties of the ML figures for upwards of 80% of MT. These findings are not in line with the PAM or PCM’s assertion that online control is supported by absolute visual information, or an absolute visual representation, which is refractory to pictorial illusions. Instead, we propose that conscious awareness of the need to reorganize altered premovement GA parameters facilitated the interaction between egocentric and allocentric visual maps, resulting in grasping movements “tricked” by the ML figures.

Notes

Scaled illusion effects are not reported in the present investigation. Our previous work employing absolute and scaled illusion measures has not shown a monotonic reduction in illusory effects when context-dependent visual information intrudes into the motor response (Heath and Rival 2005; Heath et al. 2004a, 2005a, b; see also Westwood 2004).

References

Aglioti S, DeSouza JFX, Goodale MA (1995) Size-contrast illusions deceive the eye but not the hand. Curr Biol 5:679–685

Berthier NE, Clifton RE, Gullapalli V, McCall DD, Robin DJ (1996) Visual information and object size in the control of reaching. J Mot Behav 28:187–197

Binkofski F, Dohle C, Possee S, Stephan KM, Hefter H, Seitz RJ, Freund HJ (1998) Human anterior intraparietal area subserves prehension: a combined lesion and functional MRI activation study. Neurology 50:1253–1259

Brenner E, Smeets JBJ (1996) Size illusion influences how we lift but not how we grasp an object. Neuropsychologia 111:473–476

Bryden MP (1977) Measuring handedness with questionnaires. Neuropsychologia 15:617–624

Carlton LG (1981) Visual information: the control of aiming movements. Q J Exp Psychol 33A:87–93

Carrozzo M, Stratta F, McIntyre J, Lacquaniti F (2002) Cognitive allocentric representations of visual space shape pointing errors. Exp Brain Res 147:426–436

Churchill A, Hopkins B, Rönnqvist, Vogt S (2000) Vision of the hand and environmental context in human prehension. Exp Brain Res 134:81–89

Coello Y, Grealy MA (1997) Effect of size and frame of visual field on the accuracy of an aiming movement. Percept 26:287–300

Connolly JD, Goodale MA (1999) The role of visual feedback of hand position in the control of manual prehension. Exp Brain Res 125:281–286

Conti P, Beaubaton D (1980) Role of structured visual field and visual reafference in accuracy of pointing movements. Percept Mot Skills 50:239–244

Culham JC, Danckert SL, Souza JF, Gati JS, Menon RS, Goodale MA (2003) Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Exp Brain Res 153:180–189

Danckert JA, Sharif N, Haffenden AM, Schiff KC, Goodale MA (2002) A temporal analysis of grasping in the Ebbinghaus illusion: planning versus online control. Exp Brain Res 144:275–280

Daprati E, Gentilucci M (1997) Grasping an illusion. Neuropsychologia 35:1577–1582

De Grave DD, Brenner E, Smeets JBJ (2004) Illusions as a tool to study the coding of pointing movments. Exp Brain Res 155:56–62

Desmurget M, Epstein CM, Turner RS, Prablanc C, Alexander GE, Grafton ST (1999) Role of the posterior parietal cortex in updating reaching movements to a visual target. Nat Neurosci 2:563–567

Eliott D, Helsen WF, Chua R (2001) A century later: Woodworth’s (1899) two-component model of goal-directed aiming. Psychol Bull 127:342–357

Elliott D, Lee TD (1995) The role of target information on manual aiming bias. Psychol Res 58:2–9

Franz VH, Fahle M, Bulthoff HH, Gegenfurtner KR (2001). Effects of visual illusions on grasping. J Exp Psychol Hum Percept Perform 27:1124–1144

Gentilucci M, Toni I, Chieffi S, Pavesi G (1994) The role of proprioception in the control of prehension movements: a kinematic study in a peripherally deafferented patient and in normal subjects. Exp Brain Res 90:483–500

Gentilucci M, Daprati E, Toni I, Chieffi S, Saetti MC (1995) Unconscious updating of grasp motor programs. Neuropsychologia 105:291–303

Gentilucci M, Chieffi S, Daprati E, Saetti MC, Toni I (1996) Visual illusion and action. Neuropsychologia 34:369–376

Glazebrook CM, Shillon VP, Keetch KM, Lyons J, Amazeen E, Weeks DJ, Elliott D (2005) Perception-action and the Müller-Lyer illusion: amplitude or endpoint bias? Exp Brain Res 160:71–78

Glover S (2004) Separate visual representations in the planning and control of action. Behav Brain Sci 27:3–78

Glover S, Dixon P (2001a) Dynamic illusion effect in a reaching task: evidence for separate visual representations in the planning and the control of reaching. J Exp Psychol Hum Percept Perform 27:560–572

Glover S, Dixon P (2001b) Motor adaptation to an optical illusion. Exp Brain Res 137:254–258

Glover S, Dixon P (2001c) The role of vision in the on-line correction of illusion effects on action. Can J Exp Psychol 55:96–103

Glover S, Dixon P (2002) Dynamic effects of the Ebbinghaus illusion in grasping: support for a planning/control model of action. Percept Psychophys 64:266–278

Goodale MA, Pelisson D, Prablanc C (1986) Large adjustments in visually guided reaching do not depend on vision of the hand or perception of target displacement. Nature 20:748–750

Goodale MA, Westwood DA (2004) An evolving view of duplex vision: separate but interacting cortical visual pathways for perception and action. Curr Opin Neurobiol 14:203–211

Haffenden AM, Schiff KC, Goodale MA (2001) The dissociation between perception and action in the Ebbinghaus illusion: nonillusory effects of pictorial cues on grasp. Curr Biol 11:177–181

Haffenden AM, Goodale MA (1998) The effect of pictorial illusion on prehension and perception. J Cogn Neurosci 10:122–136

Heath M, Rival C, Binsted G. (2004a) Can the motor system resolve a premovement bias in grip aperture: online analysis of grasping the Műller-Lyer illusion. Exp Brain Res 158:378–384

Heath M, Westwood DA, Binsted G (2004b) The control of memory-guided reaching movements in peripersonal space. Motor Control 8:76–106

Heath M (2005) Role of limb and target vision in the online control of memory-guided reaches. Motor Control (in press)

Heath M, Rival C, Neely KA (2005a) Visual feedback schedules influence visuomotor resistant to the Műller-Lyer illusion. Exp Brain Res (in press)

Heath M, Rival C, Westwood, DA, Neely KA (2005b) Time course analysis of closed- and open-loop grasping of the Müller-Lyer illusion. J Mot Behav (in press)

Heath M, Rival C (2005) Role of the visuomotor system in on-line attenuation of a premovement illusory bias in grip aperture. Brain Cogn 57:111–114

Heath M, Westwood DA (2003) Can a visual representation support the online control of memory-dependent reaching? Evidence from a variable spatial mapping paradigm. Motor Control 7:346–361

Hu Y, Eagleson R, Goodale MA (1999) The effects of delay on the kinematics of grasping. Exp Brain Res 126:109–116

Hu Y, Goodale MA (2000) Grasping after a delay shifts size-scaling from absolute to relative metrics. J Cogn Neurosci 12:856–868

Jackson SR, Shaw A (2000) The Ponzo illusion affects grip-force but not grip-aperture scaling during prehension movements. J Exp Psychol Hum Percept Perform 26:418–423

Jakobson LS, Goodale MA (1991) Factors affecting higher-order movement planning: a kinematic analysis of human prehension. Exp Brain Res 86:199–208

James TW, Culham J, Humphrey K, Milner AD, Goodale MA (2003) Ventral occipital lesions impair object recognition but not object-directed grasping: an fMRI study. Brain 126:2463–2475

Jeannerod M (1984) The timing of natural prehension movements. J Mot Behav 16:235–254

Keele SW (1968) Movement control in skilled motor performance. Psychol Bull 70:387–403

Khan MA, Elliott D, Coull J, Chua R, Lyons D (2002) Optimal control strategies under different feedback schedules: kinematic evidence. J Mot Behav 32:227–240

Krigolson O, Heath M (2004) Background visual cues and memory-guided reaching. Hum Mov Sci 23:861–877

Lee J, van Donkelaar P (2002) Dorsal and ventral stream contributions to perception-action interactions during pointing. Exp Brain Res 143:440–446

Lemay M, Bertram CP, Stelmach GE (2004) Pointing to allocentric and egocentric remembered target. Motor Control 8:16–32

Meegan DV, Glazebrook CM, Dhillon VP, Tremblay L, Welsh TN, Elliott D (2004) The Műller-Lyer illusion affects the planning and control of manual aiming movements. Exp Brain Res 155:37–47

Merigan WH, Maunsell JH (1993) How parallel are primate visual pathways? Ann Rev Neurosci 16:369–402

Meulenbroek RGJ, Rosenbaum DA, Jansen C, Vaughan J, VoMT S (2001) Multijoint grasping movements: simulated and observed effects of object location, object size, and initial aperture. Exp Brain Res 138:219–234

Meyer DA, Abrams RA, Kornblum S, Wright CE, Smith JEK (1988) Optimality in human motor performance: ideal control of rapid aimed movements. Psychol Rev 95:340–370

Milner AD, Goodale MA (1995) The visual brain in action. Oxford University Press, Oxford

Previc FH (1998) The neuropsychology of 3–D space. Psychol Bull 124:123–164

Rosenbaum DA, Engelbrecht SE, Bushe MM, Loukopoulos LD (1993) Knowledge model for selecting and producing movements. J Mot Behav 25:217–277

Saling M, Mescheriakov S, Molokanova E, Stelmach GE, Berger M (1996) Grip reorganization during wrist transport: the influence of an altered aperture. Exp Brain Res 108:493–500

Schluter ND, Rushworth MF, Mills KR, Passingham RE (1999) Signal-, set-, and movement-related activity in the human premotor cortex. Neuropsychologia 37:233–243

Schoumans N, Koenderink JJ, Kappers AM (2000) Change in perceived spatial directions due to context. Percept Psychpophys 62:532–539

Timmann D, Stelmach GE, Bloedel JR (1996) Grasping component alterations and limb transport. Exp Brain Res 108:486–492

Ungerleider LG, Courtney SM, Haxby JV (1998) A neural system for human visual working memory. Proc Natl Acad Sci USA 95:883–890

Velay JL, Beaubaton D (1986) Influence of visual context on pointing movement accuracy. Cahiers de Psychologie Cognitive 6:447–456

Westwood DA (2004) Planning and control and the illusion of explanation: a reply to Glover. Behav Brain Sci 27:54–55

Westwood DA, Goodale MA (2003) Perceptual illusion and the real-time control of action. Spat Vis 16:243–254

Westwood DA, Chapman CD, Roy EA (2000a) Pantomimed actions may be controlled by the ventral visual stream. Exp Brain Res 130:545–548

Westwood DA, Heath M, Roy EA (2000b) The effect of a pictorial illusion on closed-loop and open-loop prehension. Exp Brain Res 134:456–463

Westwood DA, McEachern T, Roy EA (2001) Delayed grasping of a Müller-Lyer figure. Exp Brain Res 141:166–173

Wing AM, Turton A, Fraser C (1986) Grasp size and accuracy of approach in reaching. J Mot Behav 18:245–260

Woodworth RS (1899) The accuracy of voluntary movement. Psychol Rev 3:1–114

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Heath, M., Rival, C., Neely, K. et al. Müller-Lyer figures influence the online reorganization of visually guided grasping movements. Exp Brain Res 169, 473–481 (2006). https://doi.org/10.1007/s00221-005-0170-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00221-005-0170-3