Abstract

We propose a new nonconforming finite element algorithm to approximate the solution to the elliptic problem involving the fractional Laplacian. We first derive an integral representation of the bilinear form corresponding to the variational problem. The numerical approximation of the action of the corresponding stiffness matrix consists of three steps: (1) apply a sinc quadrature scheme to approximate the integral representation by a finite sum where each term involves the solution of an elliptic partial differential equation defined on the entire space, (2) truncate each elliptic problem to a bounded domain, (3) use the finite element method for the space approximation on each truncated domain. The consistency error analysis for the three steps is discussed together with the numerical implementation of the entire algorithm. The results of computations are given illustrating the error behavior in terms of the mesh size of the physical domain, the domain truncation parameter and the quadrature spacing parameter.

1 Introduction

We consider a nonlocal model on a bounded domain involving the Riesz fractional derivative (i.e., the fractional Laplacian). For theory and numerical analysis of general nonlocal models, we refer to the review paper [24] and references therein. In particular, several applications are modeled by partial differential equations involving the fractional Laplacian: obstacle problems from symmetric \(\alpha \)-stable Lévy processes [18, 34, 40]; image denoising [27]; fractional kinetics and anomalous transport [45]; fractal conservation laws [5, 23]; and geophysical fluid dynamics [16, 17, 19, 30].

In this paper, we consider a class of fractional boundary problems on bounded domains where the fractional derivative comes from the fractional Laplacian defined on all of \({\mathbb {R}}^d\). The motivation for these problems is illustrated by an evolution equation considered by Mueller [38] of the form:

Here D is a convex polygonal domain in \({{\mathbb {R}}}^d\), \(D^c\) denotes its complement and

with \({\widetilde{u}} \) denoting the extension of u by zero to \({{\mathbb {R}}}^d\). This fractional Laplacian on \({{\mathbb {R}}}^d\) is defined using the Fourier transform \({{\mathcal {F}}}\):

The formula (3) defines an unbounded operator \((-\varDelta )^s\) on \(L^2({{{\mathbb {R}}}^d})\) with domain of definition

It is clear that the Sobolev space

is a subset of \(D((-\varDelta )^s)\) for any \(s\ge 0\). Note that \((-\varDelta )^sv\) for \(s=1\) and \(v\in H^2({{{\mathbb {R}}}^d})\) coincides with the negative Laplacian applied to v.
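As a rough numerical illustration of the Fourier definition (3), the following sketch applies the multiplier \(|\zeta |^{2s}\) with a discrete Fourier transform on a large periodic interval. It assumes the standard form \({{\mathcal {F}}}((-\varDelta )^s f)(\zeta )=|\zeta |^{2s}{{\mathcal {F}}}(f)(\zeta )\) and a function v that decays fast enough for the periodization to be harmless. The function name, the grid size and the interval length are hypothetical choices; this is not the method developed in this paper.

```python
import numpy as np

def fractional_laplacian_fft(v, dx, s):
    """Apply the Fourier multiplier |zeta|^(2s) to samples v on a uniform periodic grid."""
    zeta = 2.0 * np.pi * np.fft.fftfreq(v.size, d=dx)  # angular frequencies
    return np.real(np.fft.ifft(np.abs(zeta) ** (2.0 * s) * np.fft.fft(v)))

# Hypothetical test function: a Gaussian, negligible at the ends of (-20, 20).
n, half_width = 1024, 20.0
x = np.linspace(-half_width, half_width, n, endpoint=False)
dx = x[1] - x[0]
v = np.exp(-x**2)

# For s = 1 the result should match -v'' = (2 - 4 x^2) exp(-x^2).
exact_s1 = (2.0 - 4.0 * x**2) * v
err_s1 = np.max(np.abs(fractional_laplacian_fft(v, dx, 1.0) - exact_s1))

# Applying the order-1/2 operator twice should reproduce the order-1 operator.
twice_half = fractional_laplacian_fft(fractional_laplacian_fft(v, dx, 0.5), dx, 0.5)
err_compose = np.max(np.abs(twice_half - exact_s1))
```

The check with \(s=1\) mirrors the remark above that \((-\varDelta )^s v\) coincides with the negative Laplacian of v in that case.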

The term \(-\widetilde{\varLambda }_s\) along with the “boundary condition” (2) represents the generator of a symmetric s-stable Lévy process which is killed when it exits D (cf. [38]). The f(u) term in (1) involves white noise and will be ignored in this paper.

The goal of this paper is to study the numerical approximation of solutions of partial differential equations on bounded domains involving the fractional operator \(\widetilde{\varLambda }_s\) supplemented with the boundary conditions (2). As finite element approximations to parabolic problems are based on approximations to the elliptic part, we shall restrict our attention to the elliptic case, namely,

The above system is sometimes referred to as the “integral” fractional Laplacian problem.

We note that the variational formulation of (4) can be defined in terms of the classical spaces \({\widetilde{H}}^s(D)\) consisting of the functions defined in D whose extensions by zero are in \(H^s({{{\mathbb {R}}}^d})\). This is to find \(u\in {\widetilde{H}}^s(D)\) satisfying

where

with \({\widetilde{u}}\) and \(\widetilde{\phi }\) denoting the extensions by 0. We refer to Sect. 8.1 for the description of model problems. The bilinear form \(a(\cdot ,\cdot )\) is obviously bounded on \({{\widetilde{H}}^s}(D)\times {{\widetilde{H}}^s}(D)\) and, as discussed in Sect. 2, it is coercive on \({{\widetilde{H}}^s}(D)\). Thus, the Lax–Milgram theory guarantees existence and uniqueness.

We consider finite element approximations of (5). The use of standard finite element approximation spaces of continuous functions vanishing on \(\partial D\) is the natural choice. The convergence analysis is classical once the regularity properties of solutions to problem (5) are understood (regularity results for (5) have been studied in [1, 41]). However, the implementation of the resulting discretization suffers from the fact that, for \(d>1\), the entries of the stiffness matrix, namely, \(a(\phi _i,\phi _j)\), with \(\{\phi _k\}\) denoting the finite element basis, cannot be computed exactly.

When \(d=1\), \(s\in (0,1/2)\cup (1/2,1)\) and, for example, \(D=(-1,1)\), the bilinear form can be written in terms of Riemann–Liouville fractional derivatives (cf. [33]), namely,

Here \((\cdot ,\cdot )_D\) denotes the inner product on \(L^2(D)\) and for \(t\in (0,1)\) and \(v\in H^1_0(D)\), the left-sided and right-sided Riemann–Liouville fractional derivatives of order t are defined by

and

Note that the integrals in (8) and (9) can be easily computed when v is a piecewise polynomial, i.e., when v is a finite element basis function. The computation of the stiffness matrix in this case reduces to a coding exercise.

A representation of the fractional Laplacian for \(d\ge 1\) is given by [44]:

where \({\mathcal {S}}\) denotes the Schwartz space of rapidly decreasing functions on \({{{\mathbb {R}}}^d}\), PV denotes the principal value and \(c_{d,{s}}\) is a normalization constant. It follows that for \(\eta ,\theta \in {\mathcal {S}}\),

A density argument implies that the stiffness entries are given by

where again \(\widetilde{\phi }\) denotes the extension of \(\phi \) by zero outside D. It is possible to apply the techniques developed for the approximation of boundary integral stiffness matrices [42] to deal with some of the issues associated with the approximation of the double integral above, namely, the application of special techniques for handling the singularity and quadratures. However, (12) requires additional truncation techniques as the non-locality of the kernel implies a non-vanishing integrand over \({{{\mathbb {R}}}^d}\). These techniques are used to approximate (12) in [1, 21]. In particular, Acosta and Borthagaray [1] use their regularity theory to do a priori mesh refinement near the boundary to develop higher order convergence under the assumption of exact evaluation of the stiffness matrix.

The method to be developed in this paper is based on a representation of the underlying bilinear form given in Sect. 4, namely, for \(s\in (0,1)\), \(0\le r \le s\), \(\eta \in H^{s+r}({{{\mathbb {R}}}^d})\) and \(\theta \in H^{s-r}({{{\mathbb {R}}}^d})\),

where \((\cdot ,\cdot )\) denotes the inner product on \(L^2({{{\mathbb {R}}}^d})\) (see also [3]). We note that for \(t>0\), \(( I-t^2\varDelta )^{-1}\) is a bounded map of \(L^2({{{\mathbb {R}}}^d})\) into \(H^2({{{\mathbb {R}}}^d})\) so that the integrand above is well defined for \(\eta ,\theta \in L^2({{{\mathbb {R}}}^d})\). In Theorem 4.1, we show that for \(\eta \in H^{s+r}({{{\mathbb {R}}}^d})\) and \(\theta \in H^{s-r}({{{\mathbb {R}}}^d})\), the formula (13) holds and the right hand side integral converges absolutely. It follows that the bilinear form \(a(\cdot ,\cdot )\) is given by

There are three main issues needed to be addressed in developing numerical methods for (5) based on (14):

(a) The infinite integral with respect to t must be truncated and approximated by numerical quadrature;

(b) At each quadrature node \(t_j\), the inner product term in the integrand involves an elliptic problem on \({{{\mathbb {R}}}^d}\). This must be replaced by a problem with vanishing boundary condition on a bounded truncated domain \(\varOmega ^M(t_j)\) (defined below);

(c) Using a fixed subdivision of D, we construct subdivisions of the larger domain \(\varOmega ^M(t_j)\) which coincide with that on D. We then replace the problems on \(\varOmega ^M(t_j)\) of (b) above by their finite element approximations.

We address (a) above by first making the change of variable \(t^{-2}=e^y\) which results in an integral over \({{\mathbb {R}}}\). We then apply a sinc quadrature obtaining the approximate bilinear form

where k is the quadrature spacing, \(y_j=kj\), and \(N^-\) and \(N^+\) are positive integers. Theorem 5.1 shows that for \(\theta \in {{\widetilde{H}}^s}(D)\) and \(\eta \in \widetilde{H}^\delta (D)\) with \(\delta \in (s,2-s]\), we have

where \(0<\mathsf {d}<\pi \) is a fixed constant. Balancing of the exponentials gives rise to an \(O(e^{-2\pi \mathsf {d}/k})\) convergence rate with the relation \({N^+}+{N^-}=O(1/k^2)\).
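To make step (a) concrete, the sketch below applies the same change of variable and an equally spaced (sinc) quadrature to a scalar analogue, in which the operator \(-\varDelta \) is replaced by a positive number \(\lambda \) (at the symbol level, \(\lambda =|\zeta |^2\)). It relies on the classical identity \(\lambda ^s=\frac{\sin (\pi s)}{\pi }\int _{-\infty }^{\infty } e^{sy}\lambda (e^y+\lambda )^{-1}\,dy\) for \(\lambda >0\) and \(s\in (0,1)\). The function name and the parameter values are illustrative assumptions, and the operator-valued quadrature (15) itself is not reproduced.

```python
import math

def sinc_power(lam, s, k, n_minus, n_plus):
    """Approximate lam**s by k * sum_j g(y_j), with y_j = j*k and j = -n_minus..n_plus."""
    total = 0.0
    for j in range(-n_minus, n_plus + 1):
        y = j * k
        # Scalar counterpart of e^{s y} (-Delta)(e^y I - Delta)^{-1}.
        total += math.exp(s * y) * lam / (math.exp(y) + lam)
    return math.sin(math.pi * s) / math.pi * k * total

lam, s = 5.0, 0.3  # hypothetical sample values
coarse = sinc_power(lam, s, k=1.0, n_minus=100, n_plus=100)
fine = sinc_power(lam, s, k=0.25, n_minus=400, n_plus=400)
err_coarse = abs(coarse - lam**s)
err_fine = abs(fine - lam**s)
```

Both runs cover the same range \(|y_j|\le 100\), so the difference between them reflects the quadrature spacing k rather than the truncation of the sum.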

The size of the truncated domain \(\varOmega ^M(t_j)\) in (b) is determined by the decay of \((e^{y_j}I-\varDelta )^{-1} f\) for functions f supported in D. For technical reasons, we first extend D to a bounded convex (star-shaped with respect to the origin) domain \(\varOmega \) and set (with \(t_j=e^{-y_j/2}\))

Let \(\varDelta _t\) denote the unbounded operator on \(L^2(\varOmega ^M(t))\) corresponding to the Laplacian on \(\varOmega ^M(t)\) supplemented with vanishing boundary condition. We define the bilinear form \(a^{k,M}(\eta ,\theta )\) by replacing \((-\varDelta )(e^{y_j}I-\varDelta )^{-1}\) in (15) by \((-\varDelta _{t_j}) (e^{y_j}I -\varDelta _{t_j})^{-1}\). Theorem 6.2 guarantees that for sufficiently large M, we have

Here c and C are positive constants independent of M and k. This addresses (b).

Step (c) consists in approximating \((-\varDelta _{t_j}) (e^{y_j}I -\varDelta _{t_j})^{-1}\) using finite elements. To this end, we associate to a subdivision of \(\varOmega ^M(t_j)\) the finite element space \({\mathbb {V}}_h^M(t_j)\) and the restriction \(a^{k,M}_h(\cdot ,\cdot )\) of \(a^{k,M}(\cdot ,\cdot )\) to \({\mathbb {V}}_h^M(t_j) \times {\mathbb {V}}_h^M(t_j)\). As already mentioned, the subdivisions of \(\varOmega ^M(t_j)\) are constructed to coincide on D. Denoting by \({\mathbb {V}}_h(D)\) the set of finite element functions restricted to D and vanishing on \(\partial D\), our approximation to the solution of (5) is the function \(u_h\in {\mathbb {V}}_h(D)\) satisfying

Lemma 7.2 guarantees the \({\mathbb {V}}_h(D)\)-coercivity of the bilinear form \(a^{k,M}_h(\cdot ,\cdot )\). Consequently, \(u_h\) is well defined again from the Lax–Milgram theory. Moreover, given, for every \(t_j\), a sequence of quasi-uniform subdivisions of \(\varOmega ^M(t_j)\), we show (Theorem 7.5) that for v in \({\widetilde{H}}^\beta (D)\) with \(\beta \in (s,3/2)\) and for \(\theta _h\in {\mathbb {V}}_h(D)\),

Here C is a constant independent of M, k and h, and \(v_h\in {\mathbb {V}}_h(D)\) denotes the Scott–Zhang interpolation or the \(L^2\) projection of v depending on whether \(\beta \in (1,3/2)\) or \(\beta \in (s,1]\).

Strang’s Lemma implies that the error between u and \(u_h\) in the \({{\widetilde{H}}^s}(D)\)-norm is bounded by the error of the best approximation in \({{\widetilde{H}}^s}(D)\) and the sum of the consistency errors from the above three steps (see Theorem 7.7).

The outline of the paper is as follows. Section 2 introduces notation for Sobolev spaces, followed by Sect. 3, which introduces the dotted spaces associated with elliptic operators. The alternative integral representation of the bilinear form is given in Sect. 4. Based on this integral representation, we discuss the discretization of the bilinear form and the associated consistency error in three steps (Sects. 5–7). The energy error estimate for the discrete problem is given in Sect. 7. A discussion on the implementation aspects of the method together with results of numerical experiments illustrating the convergence of the method are provided in Sect. 8. We leave to the Appendix the proof of a technical result regarding the stability and approximability of the Scott–Zhang interpolant in nonstandard norms.

2 Notations and preliminaries

2.1 Notation

We use the notation \(D\subset {{{\mathbb {R}}}^d}\) to denote the polygonal domain with Lipschitz boundary in problem (5) and \(\omega \subset {{{\mathbb {R}}}^d}\) to denote a generic bounded Lipschitz domain. For a function \(\eta {:}\,\omega \rightarrow {{\mathbb {R}}}\), we denote by \(\tilde{\eta }\) its extension by zero outside \(\omega \). We do not specify the domain \(\omega \) in the notation \(\tilde{\eta }\) as it will be always clear from the context.

2.2 Scalar products

We denote by \((\cdot ,\cdot )_{\omega }\) the \(L^2(\omega )\)-scalar product and by \(\Vert \cdot \Vert _{L^2(\omega )}:= (\cdot ,\cdot )_{\omega }^{1/2}\) the associated norm. The \(L^2({{{\mathbb {R}}}^d})\)-scalar product is denoted \((\cdot ,\cdot )_{{{\mathbb {R}}}^d}\). To simplify the notation, we write in short \((\cdot ,\cdot ):=(\cdot ,\cdot )_{{{\mathbb {R}}}^d}\) and \(\Vert \cdot \Vert :=\Vert \cdot \Vert _{L^2({{{\mathbb {R}}}^d})}\).

2.3 Sobolev spaces

For \(r>0\), the Sobolev space of order r on \({{{\mathbb {R}}}^d}\), \(H^r({{{\mathbb {R}}}^d})\), is defined to be the set of functions \(\theta \in L^2({{{\mathbb {R}}}^d})\) such that

In the case of bounded Lipschitz domains, \(H^r(\omega )\) with \(r \in (0,1)\), stands for the Sobolev space of order r on \(\omega \). It is equipped with the Sobolev–Slobodeckij norm, i.e.

where

When \(r\in (1,2)\) instead, the norm in \(H^r(\omega )\) is given by

where \(\Vert w\Vert _{H^1(\omega )}:=(\Vert w\Vert _{L^2(\omega )}^2+\Vert |\nabla w|\Vert _{L^2(\omega )}^2)^{1/2}\). In addition, \(H^1_0(\omega )\) denotes the set of functions in \(H^1(\omega )\) vanishing at \(\partial \omega \), the boundary of \(\omega \). Its dual space is denoted \(H^{-1}(\omega )\). We note that when we replace \(\omega \) with \({{{\mathbb {R}}}^d}\) and \(r\in [0,2)\), the norms using the double integral above are equivalent with those in (17) (see e.g. [35, 37]).

2.4 The spaces \({{\widetilde{H}}^r}(D)\)

For \(r\in (0,2)\), the set of functions in D whose extensions by zero are in \(H^r({{{\mathbb {R}}}^d})\) is denoted \({\widetilde{H}}^r(D)\). The norm of \({{\widetilde{H}}^r}(D)\) is given by \(\Vert \tilde{\cdot }\Vert _{H^r({{{\mathbb {R}}}^d})}\). Note that for \(r\in (0,1)\), (10) implies that for \(\phi \) in the Schwartz space \({{\mathcal {S}}}\),

Thus, we prefer to use

as equivalent norm on \({\widetilde{H}}^r(D)\) for \(r\in (0,1)\). This is justified upon invoking a variant of the Peetre–Tartar compactness argument on \({{\widetilde{H}}^r}(D) \subset H^r(D)\).

2.5 Coercivity

Since \(C_0^\infty (D) \) is dense in \({{\widetilde{H}}^s}(D)\) for \(s\in (0,1)\) [28], (11) and a density argument imply that for \(\eta ,\theta \in {{\widetilde{H}}^s}(D)\), we have

In turn, from the definition (20) of the \({\widetilde{H}}^s(D)\) norm, we directly deduce the coercivity of \(a(\cdot ,\cdot )\) on \({{\widetilde{H}}^s}(D)\)

2.6 Dirichlet forms

We define the Dirichlet form on \(H^1(\omega )\times H^1(\omega )\) to be

On \(H^1({{{\mathbb {R}}}^d})\times H^1({{{\mathbb {R}}}^d})\) we write

3 Scales of interpolation spaces

We now introduce another set of functions instrumental in the analysis of the finite element method described in Sects. 6 and 7. In this section \(\omega \) stands for a bounded domain of \({{{\mathbb {R}}}^d}\).

Given \(f\in L^2(\omega )\), we define \(\theta \in H^1_0(\omega )\) to be the unique solution to

and define \(T_{\omega }{:}\,L^2({\omega })\rightarrow H^1_0({\omega }) \) by

As discussed in [32], this defines a densely defined unbounded operator on \(L^2({\omega })\), namely \(L_{\omega } f:=T_{\omega }^{-1} f\) for f in

The operator \(L_{\omega }\) is self-adjoint and positive so its fractional powers define a Hilbert scale of interpolation spaces, namely, for \(r\ge 0\),

with \(D(L_{\omega }^r)\) denoting the domain of \(L_{\omega }^r\). These are Hilbert spaces with norms

The space \(\dot{H}^{1}({\omega })\) coincides with \(H^1_0({\omega })\) while \(\dot{H}^{0}(\omega )\) with \(L^2(\omega )\), in both cases with equal norms. Hence for \(r\in [0,1]\), we have

where \((L^2({\omega }),H^1_0({\omega }))_{r,2}\) denotes the interpolation spaces defined using the real method.

Another characterization of these spaces stems from Corollary 4.10 in [14], which states that for \(r\in [0,1]\), the spaces \({{\widetilde{H}}^r}(\omega )\) are interpolation spaces. Since \({\widetilde{H}}^1(\omega )=H^1_0(\omega )\) and \({\widetilde{H}}^0(\omega )=L^2(\omega )\), \({{\widetilde{H}}^r}(\omega )\) coincides with \(\dot{H}^{r}(\omega )\). In particular, we have

for a constant C only depending on \(\omega \).

The intermediate spaces can also be characterized by expansions in the \(L^2({\omega })\) orthonormal system of eigenvectors \(\{\psi _i\}\) for \(T_{\omega }\), i.e.,

Here \(\lambda _i=\mu _i^{-1}\) where \(\mu _i\) is the eigenvalue of \(T_{\omega }\) associated with \(\psi _i\). In this case, we find that

and for \(r\in (0,1)\), (see e.g., [13])

Here

Note that if \({\omega }' \subset {\omega }\) then since the extension of a function \(\phi \) in \(H^1_0({\omega }')\) by zero is in \(H^1_0({\omega })\), the K-functional identity implies that for all \(r\in [0,1]\),

where \(\widetilde{\phi }\) denotes the extension by zero of \(\phi \) outside \(\omega '\).

The operator \(T_{\omega }\) extends naturally to \(F\in H^{-1}({\omega })\) by setting \(T_{\omega } F=u\) where \(u\in H^1_0({\omega })\) is the solution of (22) with \((f,\phi )_{\omega }\) replaced by \(\langle F,\phi \rangle \). Here \(\langle \cdot ,\cdot \rangle \) denotes the functional-function pairing. Identifying \(f\in L^2({\omega })\) with the functional \(\langle F,\phi \rangle := (f,\phi )_{\omega }\), we define the intermediate spaces for \(r\in (-1,0) \) by

and set \(\dot{H}^{-1}:= H^{-1}(\omega )\). Since \(T_{\omega }\) maps \(H^{-1}({\omega })\) isomorphically onto \(\dot{H}^{1}({\omega })\) and \(L^2({\omega })\) isomorphically onto \(\dot{H}^{2}({\omega })\), \(T_{\omega }\) maps \(\dot{H}^{-r}({\omega })\) isometrically onto \(\dot{H}^{2-r}(\omega )\) for \(r\in [0,1]\).

Functionals in \(H^{-1}({\omega })\) can also be characterized in terms of the eigenfunctions of \(T_{\omega }\), indeed, \(H^{-1}({\omega })\) is the set of linear functionals F for which the sum

is finite. Moreover,

for all \( F\in H^{-1}({\omega })\). This implies that for \(r\in [-\,1,0]\),

and

Remark 3.1

(Norm equivalence for Lipschitz domains) For \(r\in (1,3/2)\), it is known that \({{\widetilde{H}}^r}(\omega )=H^r(\omega )\cap H^1_0(\omega )\). On the other hand, we note that when \(\partial \omega \) is Lipschitz, \(-\,\varDelta \) is an isomorphism from \(H^r(\omega )\cap H^1_0(\omega )\) to \({\dot{H}}^{r-2}(\omega )\); see Theorem 0.5(b) of [31]. We use this regularity result in Proposition 4.1 of [8] to obtain \(H^r(\omega )\cap H^1_0(\omega )={\dot{H}}^r(\omega )\). So the norms of \({{\widetilde{H}}^r}(\omega )\) and \({\dot{H}}^r(\omega )\) are equivalent for \(r\in [0,3/2)\) and the equivalence constant may depend on \(\omega \). In what follows, we use \({{\widetilde{H}}^r}(D)\) to describe the smoothness of functions defined on D. When functions are defined on a larger domain (see Sects. 6, 7), we will use these interpolation spaces separately so that we can investigate the dependency of constants.

We end the section with the following lemma:

Lemma 3.1

Let a be in [0, 2] and b be in [0, 1] with \(a+b\le 2\). Then for \(\mu \in (0,\infty )\), we have

Proof

Let \(\phi \) be in \(\dot{H}^{a}({\omega })=D(L_\omega ^{a/2})\). Setting \(\theta :=L_{\omega }^{a/2} \phi \in L^2(\omega )\), it suffices to prove that

The operator \(T_{\omega }\) and its fractional powers are symmetric in the \(L^2({\omega })\) inner product. Therefore, we have

Inequality (26) follows from Young’s inequality

\(\square \)

4 An alternative integral representation of the bilinear form

The goal of this section is to derive the integral expression (13) and some of its properties.

Theorem 4.1

(Equivalent representation) Let \(s\in (0,1)\) and \(0\le r\le s\). For \(\eta \in H^{s+r}({{{\mathbb {R}}}^d})\) and \(\theta \in H^{s-r} ({{\mathbb {R}}}^d)\),

where

Proof

Let \(I(\eta ,\theta )\) denote the right hand side of (27). Parseval’s theorem implies that

and so

In order to invoke Fubini’s theorem, we now show that

Indeed, the change of variable \(y=t|\zeta |\) and the definition (28) of \(c_s\) imply that the above integral is equal to

which is finite for \(\eta \in H^{s+r}({{{\mathbb {R}}}^d})\) and \(\theta \in H^{s-r} ({{\mathbb {R}}}^d)\). We now apply Fubini’s theorem and the same change of variable \(y=t|\zeta |\) in (30) to arrive at

This completes the proof. \(\square \)
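At the level of Fourier symbols, the change of variable \(y=t|\zeta |\) used in the proof reduces to a one-line scalar computation. The display below is a sketch under the assumption that the integrand in (27) has the symbol \(t^{1-2s}|\zeta |^2/(1+t^2|\zeta |^2)\), which is the power of t needed for \(-\varDelta (I-t^2\varDelta )^{-1}\) to produce \(|\zeta |^{2s}\), and that \(c_s\) normalizes the resulting integral in y. The closed form of that integral is the classical Beta-function evaluation.

$$\begin{aligned} \int _0^\infty t^{1-2s}\,\frac{|\zeta |^2}{1+t^2|\zeta |^2}\,dt = |\zeta |^{2s}\int _0^\infty \frac{y^{1-2s}}{1+y^2}\,dy = |\zeta |^{2s}\,\frac{\pi }{2\sin (\pi s)},\qquad s\in (0,1). \end{aligned}$$

Under this assumption the normalization would be \(c_s=2\sin (\pi s)/\pi \). The integral in y converges only because \(1-2s>-1\) at the origin and \(1-2s-2<-1\) at infinity, which is where the restriction \(s\in (0,1)\) enters.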

Theorem 4.1 above implies that for \(\eta ,\theta \) in \({{\widetilde{H}}^s}(D)\),

where for \(\psi \in L^2({{{\mathbb {R}}}^d})\)

Examining the Fourier transform of \(w(\psi ,t)\), we realize that \(w(t):=w(\psi ,t):=\psi +v(\psi ,t)\) where \(v(t):=v(\psi ,t) \in H^1({{{\mathbb {R}}}^d}) \) solves

The integral in (31) is the basis of a numerical method for (5). The following lemma, instrumental in our analysis, provides an alternative characterization for the inner product appearing on the right hand side of (31).

Lemma 4.2

Let \(\eta \) be in \(L^2({{{\mathbb {R}}}^d})\). Then,

Proof

Let \(\eta \) be in \(L^2({{{\mathbb {R}}}^d})\). We start by observing that for any positive t and \(\zeta \in {{{\mathbb {R}}}^d}\),

solves the minimization problem

and so

We denote by \(\phi \) the inverse Fourier transform of \(\hat{\phi }\). Note that \(\phi \) is in \(H^1({{{\mathbb {R}}}^d})\) [actually, \(\phi \) is in \( H^2({{{\mathbb {R}}}^d})\)].

Applying the Fourier transform, we find that

Now, \(\phi \) is the pointwise minimizer of the integrand in (35) and since \(\phi \in H^1({{{\mathbb {R}}}^d})\), it is also the minimizer of (33). In addition, (34), (35) and (29) imply that

This completes the proof of the lemma. \(\square \)
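The pointwise minimization used in the proof can be checked numerically for a single frequency. The sketch assumes that the integrand in (35) has the form \(|\hat{\eta }-\hat{\phi }|^2+t^2|\zeta |^2|\hat{\phi }|^2\) (the usual K-functional between \(L^2\) and \(H^1\), written here for real scalars). The function and variable names are illustrative.

```python
import numpy as np

def pointwise_minimizer(eta_hat, t, zeta_norm):
    """Minimizer and minimum of p -> (eta_hat - p)^2 + t^2 |zeta|^2 p^2 over real p."""
    c = (t * zeta_norm) ** 2
    p_star = eta_hat / (1.0 + c)             # symbol of (I - t^2 Delta)^{-1} applied to eta
    min_value = c / (1.0 + c) * eta_hat**2   # symbol of -t^2 Delta (I - t^2 Delta)^{-1}
    return p_star, min_value

eta_hat, t, zeta_norm = 1.7, 0.6, 2.5  # hypothetical sample values
p_star, min_value = pointwise_minimizer(eta_hat, t, zeta_norm)

# Brute-force comparison on a fine grid of candidate values p.
c = (t * zeta_norm) ** 2
p_grid = np.linspace(-3.0, 3.0, 60001)
objective = (eta_hat - p_grid) ** 2 + c * p_grid**2
grid_min = objective.min()
grid_argmin = p_grid[objective.argmin()]
```

The closed-form minimum is the multiplier \(t^2|\zeta |^2/(1+t^2|\zeta |^2)\) applied to \(|\hat{\eta }|^2\), which is how the minimization connects to the resolvent appearing in the integral representation.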

Remark 4.1

(Relation with the vanishing Dirichlet boundary condition case) The above lemma implies that for \(\eta \in {{\widetilde{H}}^s}(D)\),

It is observed in the Appendix of [13] that for any bounded domain \(\omega \), and \(\eta \in (L^2(\omega ),H^1_0(\omega ))_{s,2}\), the real interpolation space between \(L^2(\omega )\) and \(H^1_0(\omega )\), we have

where

Let \(\{\psi _i^0\}\subset H^1_0(\omega )\) denote the \(L^2(\omega )\)-orthonormal basis of eigenfunctions satisfying

Arguing as in the proof of Lemma 4.2, but using the expansion in the above eigenfunctions, it is not hard to see that

with \(w_{{\omega }}(\eta ,t)=\eta +v\) and \(v \in H^1_0({\omega })\) solving

This means that if \(\eta \in L^2(\omega )\), \(K(\widetilde{\eta },t)\le K_\omega ^0(\eta ,t)\) and hence

5 Exponentially convergent sinc quadrature

In this section, we analyze a sinc quadrature scheme applied to the integral (31). Notice that the analysis provided in [6] does not strictly apply in the present context.

5.1 The quadrature scheme

We first use the change of variable \(t^{-2} = e^y\) so that (31) becomes

Given a quadrature spacing \(k>0\) and two positive integers \({N^-}\) and \({N^+}\), set \(y_j:=j k\) so that

and define the approximation of \(a(\eta ,\theta )\) by

5.2 Consistency bound

The convergence of the sinc quadrature depends on the properties of the integrand

More precisely, the following conditions are required:

(a) \(g(\cdot ;\eta ,\theta )\) is an analytic function in the band

$$\begin{aligned} B=B(\mathsf {d}):=\left\{ z=y+iw \in {\mathbb {C}}{:}\, |w|< \mathsf {d}\right\} , \end{aligned}$$

where \(\mathsf {d}\) is a fixed constant in \((0, \pi )\);

(b) There exists a constant C independent of \(y\in {{\mathbb {R}}}\) such that

$$\begin{aligned} \int _{-\mathsf {d}}^{\mathsf {d}} |g(y+iw;\eta ,\theta )|\, dw\le C; \end{aligned}$$

(c) \(\displaystyle N(B):=\int _{-\infty }^\infty \left( |g(y+i\mathsf {d};\eta ,\theta )|+|g(y-i\mathsf {d};\eta ,\theta )| \right) dy < \infty .\)

In that case, there holds (see Theorem 2.20 of [36])

In our context, this leads to the following estimates for the sinc quadrature error.

Theorem 5.1

(Sinc quadrature) Suppose \(\theta \in {{\widetilde{H}}^s}(D)\) and \(\eta \in \widetilde{H}^\delta (D)\) with \(\delta \in (s,2-s]\). Let \(a(\cdot ,\cdot )\) and \(a^k(\cdot ,\cdot )\) be defined by (5) and (39), respectively. Then we have

where \(c(\mathsf {d}):=\frac{1}{\sqrt{(1+\cos \mathsf {d})/2}}\).

Proof

We start by showing that the conditions (a), (b) and (c) hold. For (a), we note that \(g(\cdot ;\eta ,\theta )\) is analytic on B if and only if the operator mapping \(z \mapsto (e^{z}I-\varDelta )^{-1}\) is analytic on B. To see the latter, we fix \(z_0 \in B\) and set \(p_0 := e^{z_0}\). Clearly, \(p_0 I-\varDelta \) is invertible from \(L^2({{{\mathbb {R}}}^d})\) to \(L^2({{{\mathbb {R}}}^d})\). Let \(M_0:= \Vert (p_0 I -\varDelta )^{-1} \Vert _{L^2({\mathbb {R}}^d) \rightarrow L^2({\mathbb {R}}^d)}\). For \(p \in {\mathbb {C}}\), we write

so that the Neumann series representation

is uniformly convergent provided \(\Vert (p-p_0) (p_0I-\varDelta )^{-1}\Vert _{L^2({\mathbb {R}}^d) \rightarrow L^2({\mathbb {R}}^d)} < 1\) or

Hence \((pI-\varDelta )^{-1}\) is analytic in an open neighborhood of \(p_0=e^{z_0}\) for all \(z_0 \in B\) and (a) follows.
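The Neumann series argument can be illustrated in finite dimensions. In the sketch below a small symmetric tridiagonal matrix stands in for \(\varDelta \), and the series is written as \(\sum _{j\ge 0}(-1)^j(p-p_0)^j(p_0I-\varDelta )^{-(j+1)}\), which is the expansion obtained from \(pI-\varDelta =(p_0I-\varDelta )\,[I+(p-p_0)(p_0I-\varDelta )^{-1}]\). The matrix, the point \(z_0\) and the number of terms are hypothetical choices made for illustration only.

```python
import numpy as np

n = 6
# Stand-in for the Laplacian: symmetric tridiagonal matrix with negative eigenvalues.
lap = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
identity = np.eye(n)

z0 = 0.5 + 1.0j                      # a point with |Im z0| < pi
p0 = np.exp(z0)
r0 = np.linalg.inv(p0 * identity - lap)
m0 = np.linalg.norm(r0, 2)           # operator 2-norm, plays the role of M_0

p = p0 + 0.5 / m0 * np.exp(0.3j)     # chosen so that |p - p0| * M_0 = 0.5 < 1

# Partial sum of sum_j (-1)^j (p - p0)^j (p0 I - lap)^{-(j+1)}.
term = r0.copy()
series = np.zeros_like(r0)
for _ in range(60):
    series += term
    term = -(p - p0) * (r0 @ term)

direct = np.linalg.inv(p * identity - lap)
neumann_error = np.linalg.norm(series - direct, 2)
```

With the ratio \(|p-p_0|M_0=1/2\), the terms of the series decay geometrically, so sixty terms are far more than needed to reach rounding error in this example.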

To prove (b) and (c), we first bound \(g(z;\eta ,\theta )\) for z in the band B. Assume \(\eta \in \widetilde{H}^\beta (D)\) and \(\theta \in {{\widetilde{H}}^s}(D)\) with \(\beta >s\). For \(z\in B\), we use the Fourier transform and estimate |g| as follows

where \(c(\mathsf {d})=\frac{1}{\sqrt{(1+\cos \mathsf {d})/2}}\) and upon noting that

If \(\mathfrak {Re}z<0\), we deduce that

Instead, when \(\mathfrak {Re}z\ge 0\), we write

Whence, Young’s inequality guarantees that

Gathering the above two estimates (43) and (44) gives

and N(B) in (41) satisfies

Estimates (45) and (46) prove (b) and (c) respectively.

Having established (a), (b), and (c), we can use the sinc quadrature estimate (41). In addition, from (43) and (44) we also deduce that

Combining (41) with (46) and (47) shows (42) and completes the proof. \(\square \)

Remark 5.1

(Choice of \(N^-\) and \(N^+\)) Balancing the three exponentials in (42) leads to the following choice

Hence, given the quadrature spacing \(k>0\), we set

With this choice, (42) becomes

where

6 Truncated domain approximations

To develop further approximation to problem (5) based on the sinc quadrature approximation (39), we replace (32) with problems on bounded domains.

6.1 Approximation on bounded domains

Let \(\varOmega \) be a convex bounded domain containing D and the origin. Without loss of generality, we assume that the diameter of \(\varOmega \) is 1. This auxiliary domain is used to generate suitable truncation domains to approximate the solution of (32). We introduce a domain parameter \(M>0\) and define the dilated domains

The approximation of \(a^k(\cdot ,\cdot )\) in (39) reads

with \(t_j :=t(y_j)=e^{-y_j/2}\), according to (38), and

where \(v^M(t):=v^M(\widetilde{\eta },t)\) solves

compare with (32). The domains \(\varOmega ^M(t_j)\) are constructed for the truncation error to be exponentially decreasing as a function of M. This is the subject of the next section.

6.2 Consistency

The main result of this section provides an estimate for \(a^k-a^{k,M}\). It relies on decay properties of \(v(\widetilde{\eta },t)\) satisfying (32). In fact, Lemma 2.1 of [2] guarantees the existence of universal constants c and C such that

provided \(\eta \in L^2(D)\) and \(v(t):= v(\widetilde{\eta },t)\) is given in (32). Here

so that the minimal distance between points in \(D\subset \varOmega \) and \(B^M(t)\) is greater than \(M\max (1,t)\). An illustration of the different domains is provided in Fig. 1.
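The role of the scaling \(M\max (1,t)\) can be seen on a one-dimensional model for which the whole-space solution is explicit. The sketch takes \(D=(-1,1)\) and the indicator function of D as data, and evaluates the solution u of \(u-t^2u''=\mathbb {1}_D\) on \({{\mathbb {R}}}\) in closed form; outside D it equals \(\sinh (1/t)\,e^{-|x|/t}\). This model problem, the function name and the parameter values are assumptions made for illustration, and the constants c and C of (55) are not computed.

```python
import math

def resolvent_of_indicator(x, t):
    """Closed form of u solving u - t^2 u'' = 1 on (-1, 1) and 0 elsewhere, on the real line."""
    ax = abs(x)
    if ax <= 1.0:
        # 1 - exp(-1/t) cosh(x/t), written to avoid overflow for small t
        return 1.0 - 0.5 * (math.exp((ax - 1.0) / t) + math.exp(-(ax + 1.0) / t))
    # sinh(1/t) exp(-|x|/t), written to avoid overflow for small t
    return 0.5 * (1.0 - math.exp(-2.0 / t)) * math.exp(-(ax - 1.0) / t)

M = 4.0  # hypothetical truncation parameter
# Value of u at distance M * max(1, t) from D, for several values of t.
values = {t: resolvent_of_indicator(1.0 + M * max(1.0, t), t) for t in (0.05, 0.5, 1.0, 5.0)}
bound = 0.5 * math.exp(-M)
```

In this model the value of u at distance \(M\max (1,t)\) from D is at most \(e^{-M}/2\) for every \(t>0\), which is the kind of decay, uniform in t, that the truncated domains are designed to exploit.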

Lemma 6.1

(Truncation error) Let \(\eta \in L^2(D)\), \(e(t):=v(\tilde{\eta }, t)-v^M(\tilde{\eta },t)\) and c be the constant appearing in (55). There is a positive constant C not depending on M and t satisfying

Proof

In this proof C denotes a generic constant only depending on \(\varOmega \). Note that e(t) satisfies the relations

Let \(\chi (t) \ge 0\) be a bounded cut off function satisfying \(\chi (t)=1\) on \(\partial \varOmega ^M(t)\) and \(\chi (t)=0\) on \(\varOmega ^M(t){\setminus } B^M(t)\). Without loss of generality, we may assume that \(\Vert \nabla \chi (t)\Vert _{L^\infty ({{{\mathbb {R}}}^d})} \le C/t\). This implies

Here we use the decay estimate (55) for the last inequality above. Now, setting \(e(t):=\chi (t) v(t)+\zeta (t)\), we find that \(\zeta (t)\in H^1_0(\varOmega ^M(t))\) satisfies

for all \(\phi \in H^1_0(\varOmega ^M(t))\). Taking \(\phi =\zeta (t)\), we deduce that

Thus, combining the estimates for \(\zeta (t)\) and \(\chi (t)v(t)\) completes the proof. \(\square \)

Lemma 6.1 above is instrumental in deriving an exponentially decaying consistency error as \(M\rightarrow \infty \). Indeed, we have the following theorem.

Theorem 6.2

(Truncation error) Let c be the constant appearing in (55) and assume \(M>2(s+1)/c\). Then, there is a positive constant C depending on neither M nor k satisfying

Proof

In this proof C denotes a generic constant only depending on \(\varOmega \). Let \(\eta ,\theta \) be in \(L^2(D)\). It suffices to bound

with \(v(t)=v(\widetilde{\eta },t)\) defined by (32) and \(v^M(t)=v^M(\widetilde{\eta },t)\) defined by (54). We estimate \(E_1\) and \(E_2\) separately, starting with \(E_1\).

From the definition \(t_j=e^{-y_j/2}\), we deduce that when \(j<0\), \(t_j>1\) so that (56) gives

Similarly, for \(j\ge 0\), i.e. \(t_j<1\), using (56) again, we have

where we have also used the property \(cM/2-s>1\) guaranteed by the assumption \(M> 2(s+1)/c\). \(\square \)

6.3 Uniform norm equivalence on convex domains

Since the domains \(\varOmega ^M(t)\) are convex, we know that the norms in \(\dot{H}^{r}(\varOmega ^M(t))\) are equivalent to those in \(H^r(\varOmega ^M(t)) \cap H^1_0(\varOmega ^M(t))\) for \(r \in [1,2]\), see e.g. [8]. However, as we mentioned in Remark 3.1, the equivalence constants depend a-priori on \(\varOmega ^M(t)\) and therefore on M and t. We show in this section that they can be bounded uniformly independently of both parameters.

To simplify the notation introduced in Sect. 3, we shall denote \(T_{\varOmega ^M(t)}\) by \(T_t\), \(L_{{\varOmega ^M(t)}}\) by \(L_t\) and \(\dot{H}^{s}({\varOmega ^M(t)})\) by \(\dot{H}^{s}\). We recall that \(\varOmega ^M(t)\) is a dilatation of the convex and bounded domain \(\varOmega \) containing the origin, see (51). We then have the following lemma.

Lemma 6.3

(Elliptic regularity on convex domains) Let \(f\in L^2({\varOmega ^M(t)})\). Then \(\theta :=T_t f\) is in \(H^2(\varOmega ^M(t))\cap H^1_0(\varOmega ^M(t))\) and satisfies

where C is a constant independent of t and M.

Proof

It is well known that the convexity of \(\varOmega \), and hence that of \({\varOmega ^M(t)}\), implies that the unique solution \(\theta \) of (22) with \(\omega \) replaced by \({\varOmega ^M(t)}\) is in \(H^2(\varOmega ^M(t))\cap H^1_0(\varOmega ^M(t))\). Therefore, the crucial point is to show that the constant in (59) does not depend on M or t. To see this, recall that \(H^2\) elliptic regularity on convex domains implies that if \(\hat{\theta } \in H^1_0(\varOmega )\) satisfies \(\varDelta \hat{\theta } \in L^2(\varOmega )\), then \(\hat{\theta } \in H^2(\varOmega )\) and there is a constant C only depending on \(\varOmega \) such that

Here \(|\cdot |_{H^2(\varOmega )}\) denotes the \(H^2(\varOmega )\) seminorm. Let \(\gamma \) be such that \({\varOmega ^M(t)}=\{\gamma x,\ x\in \varOmega \} \) [see (51)] and \(\hat{\theta }(\hat{x}) = \theta (\gamma \hat{x})\) for \(\hat{x}\in \varOmega \). Once scaled back to \(\varOmega ^M(t)\), estimate (60) gives

Now (22) immediately implies that \(\Vert \theta \Vert _{H^1({\varOmega ^M(t)})}\le \Vert f\Vert _{L^2({\varOmega ^M(t)})}\) and (59) follows by the triangle inequality and obvious manipulations. \(\square \)
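The scaling step in the proof above can be spelled out as follows. This is only a sketch, written under the assumption that (60) is the seminorm bound \(|\hat{\theta }|_{H^2(\varOmega )}\le C\Vert \varDelta \hat{\theta }\Vert _{L^2(\varOmega )}\); it uses the change of variables \(x=\gamma \hat{x}\), for which \(dx=\gamma ^d\,d\hat{x}\).

\[
\begin{aligned}
\partial _{\hat{x}_i}\partial _{\hat{x}_j}\hat{\theta }(\hat{x}) &= \gamma ^2\,(\partial _{x_i}\partial _{x_j}\theta )(\gamma \hat{x}),\\
|\hat{\theta }|^2_{H^2(\varOmega )} &= \gamma ^{4-d}\,|\theta |^2_{H^2(\varOmega ^M(t))},\qquad
\Vert \varDelta \hat{\theta }\Vert ^2_{L^2(\varOmega )} = \gamma ^{4-d}\,\Vert \varDelta \theta \Vert ^2_{L^2(\varOmega ^M(t))},\\
|\theta |_{H^2(\varOmega ^M(t))} &\le C\,\Vert \varDelta \theta \Vert _{L^2(\varOmega ^M(t))}.
\end{aligned}
\]

Both sides of the seminorm bound pick up the same factor \(\gamma ^{(4-d)/2}\), which cancels, so the constant C on \(\varOmega ^M(t)\) is the one on \(\varOmega \) and carries no dependence on \(\gamma \), hence none on M or t.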

Remark 6.1

(Intermediate spaces) Lemma 6.3 implies that \(D(L_t)=\dot{H}^{2}=H^2({\varOmega ^M(t)})\cap H^1_0({\varOmega ^M(t)})\) with norm equivalence constants independent of M and t. As \(D(L_t^{1/2}) = \dot{H}^{1} =H^1_0({\varOmega ^M(t)})\), for \(s \in [1,2]\)

with norm equivalence constants independent of M and t.

Lemma 6.4

(Norm equivalence) For \(\beta \in [1,3/2)\), let \(\theta \) be in \(\dot{H}^{\beta }\) and \(\widetilde{\theta }\) denote its extension by zero outside of \({\varOmega ^M(t)}\). Then \(\widetilde{\theta }\) is in \(H^\beta ({{{\mathbb {R}}}^d})\) and

with C not depending on t or M.

Proof

Given \(\theta \in H^1({\varOmega ^M(t)})\), we denote by \(R\theta \) the elliptic projection of \(\theta \) into \(H^1_0({\varOmega ^M(t)})\), i.e., \(R\theta \in H^1_0({\varOmega ^M(t)})\) is the solution of

It immediately follows that

Also, if \(\theta \in H^2({\varOmega ^M(t)})\), Lemma 6.3 (see also Remark 6.1) implies

with C not depending on t or M. Hence, it follows by interpolation that

Now when \(\theta \in \dot{H}^{\beta }\subset H^1(\varOmega ^M(t))\), \(R\theta = \theta \) so that in view of (62), it remains to show that

for a constant C independent of M and t. To see this, note that \(\widetilde{\theta }\) is in \(H^1({{{\mathbb {R}}}^d})\) and the extension of \(\nabla \theta \) by zero is in \(H^{\beta -1}({{{\mathbb {R}}}^d})\) for \(\beta < 3/2\). We refer to Theorem 1.4.4.4 of [28] for a proof when \(d=1\) and the techniques used in Lemma 4.33 of [22] for the extension to the higher dimensional spaces. This implies that \(\widetilde{\theta }\) belongs to \(H^\beta ({{{\mathbb {R}}}^d})\). Moreover, the restriction operator is simultaneously bounded from \(H^j({{{\mathbb {R}}}^d})\) to \(H^j({\varOmega ^M(t)})\) for \(j=1,2\). Hence, by interpolation again, we have that

This completes the proof of the lemma. \(\square \)

7 Finite element approximation

In this section, we turn our attention to the finite element approximation of each subproblem (54) in \(a^{k,M}(\cdot ,\cdot )\). Throughout this section, we omit the subscript j in \(t_j\) when no confusion is possible, i.e. we consider a generic t, keeping in mind that the subsequent statements only hold for \(t=t_j\) with \(j=-N^-,\ldots ,N^+\). We also make the additional non-restrictive assumption that the domain \(\varOmega \) used to define \(\varOmega ^M(t)\) [see (51)] is polygonal. In turn, so are all the dilated domains \(\varOmega ^M(t)\).

7.1 Finite element approximation of \(a^{k,M}(\cdot ,\cdot )\)

For any polygonal domain \(\omega \), let \(\{\mathcal {T}_h(\omega ) \}_{h>0}\) be a sequence of conforming subdivisions of \(\omega \) made of simplices of maximal diameter \(h\le 1\). We use the notation \({\mathcal {T}}^M_h(t):=\mathcal {T}_h(\varOmega ^M(t))\) for \(t=t_j\), \(j=-N^-,\ldots ,N^+\), given by (38). We assume that the subdivisions on D are shape-regular and quasi-uniform. This means that there exist universal constants \(\sigma ,\rho >0\) such that

where \(\text {diam}(T)\) stands for the diameter of T and r(T) for the radius of the largest ball contained in T. We also assume that these conditions hold as well for \({\mathcal {T}}^M_h(t_j)\) with constants \(\sigma ,\rho \) not depending on j. We finally require that all the subdivisions match on D, i.e.

for each j. We discuss in Sect. 8 how to generate subdivisions meeting these requirements.

Define \(\mathbb {V}_h(\omega )\subset H^1_0(\omega )\) to be the space of continuous piecewise linear finite element functions associated with \(\mathcal {T}_h(\omega )\) with \(\omega =D\) or \(\varOmega ^M(t)\). Also, we use the short notation \({\mathbb {V}}_h^M(t):={\mathbb {V}}_h(\varOmega ^M(t))\).

We are now in a position to define the fully discrete/implementable problem. For \(\eta _h\) and \(\theta _h\) in \({\mathbb {V}}_h{(D)}\), the finite element approximation of \(a^{k,M}(\cdot ,\cdot )\) given by (52) is

with

and where \(v^M_h(t) \in {\mathbb {V}}_h^M(t)\) solves

Remark 7.1

Two critical properties follow from (65). On the one hand, our analysis below relies on the fact that the extension by zero \({\widetilde{v}}_h\) of \(v_h \in {\mathbb {V}}_h {(D)}\) belongs to all \({\mathbb {V}}_h^M(t)\). On the other hand, this property greatly simplifies the computation of \((w_h^M(\widetilde{\eta }_h,t_j),\theta _h)_{ {D}}\) in (66).

The finite element approximation of the problem (4) is to find \(u_h\in \mathbb {V}_h {(D)}\) so that

Analogous to Lemma 4.2, we have the following representation using the K-functional. The proof of the lemma is similar to that of Lemma 4.2 and is omitted.

Lemma 7.1

(K-functional formulation on the discrete space) For \(\eta _h \in {\mathbb {V}}_h{(D)}\), there holds

where

We emphasize that for \(\eta _h \in {\mathbb {V}}_h^M(t)\), its extension by zero \(\tilde{\eta }_h\) belongs to \(H^1({{{\mathbb {R}}}^d})\) and therefore

This property is critical in the proof of the next theorem, which ensures the \({\mathbb {V}}_h(D)\)-ellipticity of the discrete bilinear form \(a_h^{k,M}\). Before describing this next result, we recall that according to (49)

with \(\delta \) between s and \(\min (2-s,3/2)\) (since \({\mathbb {V}}_h(D)\subset {\widetilde{H}}^{3/2-\epsilon }(D)\) for any \(\epsilon >0\)) and \(\gamma (k) \sim Ce^{-2\pi \mathsf {d}/k}\). Also, we note that from the quasi-uniform (63) and shape-regular (64) assumptions, there exists a constant \(c_I\) only depending on \(\sigma \) and \(\rho \) such that for \(r^- \le r^+ < 3/2\), there holds

Theorem 7.2

(\({\mathbb {V}}_h{(D)}\)-ellipticity) Let \(\delta \) in Theorem 5.1 be between s and \(\min (2-s,3/2)\), k be the quadrature spacing and \(c_I\) be the inverse constant in (71). We assume that the quadrature parameters \(N^-\) and \(N^+\) are chosen according to (48). Let \(\gamma (k)\) be given by (50) and assume that k is chosen sufficiently small so that

Then, there is a constant c independent of h, k and M such that

Proof

Let \(\eta _h \in {\mathbb {V}}_h^M(t)\) so that \(\widetilde{\eta }_h\in H^1({{{\mathbb {R}}}^d})\). We use the equivalence relations provided by Lemmas 4.2 and 7.1 together with the monotonicity property (70) to write

The quadrature consistency bound (49) supplemented by an inverse inequality (71) yields

The desired result follows from assumption \(c_I \gamma (k) h^{s-\delta }<1 \) and the coercivity of \(a(\cdot ,\cdot )\), see (21). \(\square \)

7.2 Approximations on \(\varOmega ^M(t)\)

The fully discrete scheme (69) requires finite element approximations on the domains \(\varOmega ^M(t)\). Standard finite element arguments would lead to estimates with constants depending on \(\varOmega ^M(t)\) and therefore on M and t. In this section, we exhibit results where this is not the case due to the particular definition (51) of \(\varOmega ^M(t)\).

We can use interpolation to develop approximation results for functions in the intermediate spaces with constants independent of M and t. The Scott–Zhang interpolation construction [43] gives rise to an approximation operator \(\pi ^{sz}_h{:}\,H^1_0({\varOmega ^M(t)})\rightarrow {\mathbb {V}}_h^M(t)\) satisfying

for all \(\eta \in H^1_0({\varOmega ^M(t)})=\dot{H}^{1}\) and

for all \(\eta \in H^2({\varOmega ^M(t)})\cap H^1_0({\varOmega ^M(t)})=\dot{H}^{2}\). The Scott–Zhang argument is local so the constants appearing above depend on the shape regularity of the triangulations but not on t or M. Interpolating the above inequalities shows that for all \(r\in [0,1]\)

with C not depending on t or M.

Let \(T_{t,h}\) denote the finite element approximation to \(T_t\) given by (23), i.e., for \(F\in \dot{H}^{-1}\), \(T_{t,h}F:=w_h\) with \(w_h\in {\mathbb {V}}_h^M(t)\) being the unique solution of

The approximation result (72) and standard finite element analysis techniques imply that for any \(r\in [0,1]\),

where the last inequality follows from interpolation since \(\Vert T_t F\Vert _{H^1({\varOmega ^M(t)})}\le \Vert F\Vert _{H^{-1}({\varOmega ^M(t)})}\) and (59) hold.

For \(f\in L^2(\varOmega ^M(t))\), we define the operator

satisfying

and let \(S_{t,h} f\in {\mathbb {V}}_h^M(t)\) denote its finite element approximation; compare with \(T_t\) and \(T_{t,h}\). Although the Poincaré constant depends on the diameter of \({\varOmega ^M(t)}\), we still have the following lemma.

Lemma 7.3

There is a constant C independent of h, t, or M satisfying

Proof

For \(f\in L^2({\varOmega ^M(t)})\), set \(e_h:=(S_t -S_{t,h}) f\). The elliptic regularity estimate (61) on convex domains and Céa’s lemma imply

where C is a constant independent of h, t and M. Galerkin orthogonality and the above estimate give

Combining the above two inequalities and obvious manipulations completes the proof of the lemma. \(\square \)

We shall also need norm equivalency on discrete scales. Let \(({{{\mathbb {V}}}_h^M(t)},\Vert \cdot \Vert _{L^2({\varOmega ^M(t)})})\) and \(({{{\mathbb {V}}}_h^M(t)},\Vert \cdot \Vert _{H^1({\varOmega ^M(t)})})\) denote \({{{\mathbb {V}}}_h^M(t)}\) normed with the norms in \(L^2({\varOmega ^M(t)})\) and \(H^1({\varOmega ^M(t)})\), respectively. We define \(\Vert \cdot \Vert _{\dot{H}^{r}_h(\varOmega ^M(t))}\), or simply \(\Vert \cdot \Vert _{\dot{H}^{r}_h}\), to be the norm in the interpolation space

For \(r\in [0,1]\), as the natural injection is a bounded map (with bound 1) from \({{{\mathbb {V}}}_h^M(t)}\) into \(L^2({\varOmega ^M(t)})\) and \(H^1_0({\varOmega ^M(t)})\), respectively, \(\Vert v_h\Vert _{\dot{H}^{r}} \le \Vert v_h\Vert _{\dot{H}^{r}_h}\), for all \(v_h\in {{{\mathbb {V}}}_h^M(t)}\). For the other direction, one needs a projector into \({{{\mathbb {V}}}_h^M(t)}\) which is simultaneously bounded on \(L^2({\varOmega ^M(t)})\) and \(H^1_0({\varOmega ^M(t)})\). In the case of a globally quasi-uniform mesh, it was shown by Bramble and Xu [12] that the \(L^2({\varOmega ^M(t)})\) projector \(\pi _h\) satisfies this property. Their argument is local, utilizing the inverse inequality (71), and hence leads to constants depending on those appearing in (63) and (64) but not on t, h, or M. Interpolating these results gives, for \(r\in [0,1]\),

where c is a constant independent of h, M and t. The spaces for negative r are defined by duality and the stability of the \(L^2(\varOmega ^M(t))\)-projection \(\pi _h\) yields

We finally note that a discrete version of Lemma 3.1 holds. Its proof is essentially the same and is omitted for brevity.

Lemma 7.4

Let a be in [0, 2] and b be in [0, 1] with \(a+b\le 2\). Then for any \(\mu \in (0,\infty )\),

7.3 Consistency

The next step is to estimate the consistency error between \(a^{k,M}(\cdot ,\cdot )\) and \(a_{h}^{k,M}(\cdot ,\cdot )\) on \({\mathbb {V}}_h(D)\). Its decay depends on a parameter \(\beta \in (s,3/2)\), which will be related later to the regularity of the solution u to (4).

Theorem 7.5

(Finite element consistency) Let \(\beta \in (s,3/2)\). We assume that the quadrature parameters \(N^-\) and \(N^+\) are chosen according to (48). There exists a constant C independent of h, k and M satisfying

for all \(\eta _h, \theta _h\in \mathbb {V}_h {(D)}\).

Proof

In this proof, C denotes a generic constant independent of h, M, k and t.

Fix \(\eta _h \in {\mathbb {V}}_h(D)\) and denote by \(\tilde{\eta }_h\) its extension by zero outside D. We first observe that for \(\theta _h\in \mathbb {V}_h(D)\) and \(\tilde{\theta }_h\) its extension by zero outside D, we have

where \(\pi _h\) denotes the \(L^2\) projection onto \({\mathbb {V}}_h(\varOmega ^M(t))\). Using the above identity and recalling that \(t_j=e^{-y_j/2}\), we obtain

We bound the two terms separately and start with the latter.

\(\boxed {1}\) In view of the definitions (53) of \(w^M(t)\) and (67) of \(w^M_h(t)\), we have

We recall that \(T_t = T_{\varOmega ^M(t)}\) and \(S_t\) are defined by (23) and (74) respectively. Using these operators and the relations satisfied by \(v^M(t)\) and \(v_h^M(t)\) [see (54) and (68)], we arrive at

Thus,

Here we have used \(\Vert \cdot \Vert \) to denote the operator norm of operators from \(L^2({\varOmega ^M(t)})\) to \(L^2({\varOmega ^M(t)})\). Combining

and Lemma 7.3 gives

Whence,

\(\boxed {2}\) We now focus on \(E_1\) which requires a finer analysis using intermediate spaces. Also, we argue differently for \(\beta \in (1,3/2)\) and for \(\beta \in (s,1]\). In either case, we define

and note that

with c depending on s but not h.

When \(\beta \in (1,3/2)\), we invoke (79) again to deduce

We set \(\mu (t) := t^{-2}-1\) and compute

which is now estimated in three parts. Lemma 3.1 guarantees that

where we recall that \(\dot{H}^{s}\) stands for \(\dot{H}^{s}(\varOmega ^M(t))\). For the second part, the error estimate (73) with \(1+r = \beta \) reads

We estimate the last term of the product in the right hand side of (83) by

Thus, Lemma 7.4, the inverse estimate and (81) yield

Note that for \(t\in (0,1/2]\), \(0<t^2\le \mu (t)^{-1} \le \frac{4}{3} t^2 \le \frac{1}{3}\) so that

Combining the above estimates with (83) gives

Since \(t_j=e^{-y_j/2}\),

Estimates (82), (84) and (75) then yield

\(\boxed {3}\) We bound the norms on \(\varOmega ^M(t)\) by norms on D using (25) with \(r=s\) and Lemma 6.4 to arrive at

Applying the norm equivalence (24) gives

\(\boxed {4}\) When \(\beta \in (s,1]\), we bound (83) using different norms. In fact, we have

and by Lemma 7.4,

These estimates lead to (84), and hence to (85) as well, when \(\beta \in (s,1]\). The remainder of the proof is the same as in the case \(\beta \in (1,3/2)\) except that the norm equivalence (24) is invoked in place of Lemma 6.4.

\(\boxed {5}\) The proof of the theorem is complete upon combining the estimates for \(E_1\) and \(E_2\). \(\square \)

7.4 Error estimates

Now that the consistency error between \(a(\cdot ,\cdot )\) and \(a_h^{k,M}(\cdot ,\cdot )\) is obtained, we can apply Strang’s lemma to deduce the convergence of the approximation \(u_h\) towards u in the energy norm. To achieve this, we need a result regarding the stability and approximability of the Scott–Zhang interpolant \(\pi ^{sz}_h\) [43] in the fractional spaces \(\widetilde{H}^\beta (D)\).

This is the subject of the next lemma. Its proof is somewhat technical and given in “Appendix A”.

Lemma 7.6

(Scott–Zhang interpolant) Let \(\beta \in (1,3/2)\). Then, there is a constant C independent of h such that

and for \(s\in [0,1]\),

for all \(v \in {\widetilde{H}}^\beta (D)\).

We note that the above lemma holds for \(\beta \in (0,1)\) and \(s\in (0,\beta )\) provided that \(\pi ^{sz}_h\) is replaced by \(\pi _h\), the \(L^2\) projection onto \({\mathbb {V}}_h(D)\); see e.g. Lemma 5.1 of [9]. In order to consider both cases simultaneously in the following proof, we set \(\Pi _h = \pi _h \) when \(\beta \in [0,1]\) and \(\Pi _h =\pi ^{sz}_h \) when \(\beta \in (1,3/2)\).

Theorem 7.7

Assume that the solution u of (5) belongs to \({\widetilde{H}}^\beta (D)\) for \(\beta \in (s,3/2)\). Let \(\delta :=\min (2-s,\beta )\) be as in Theorem 5.1, k be the quadrature spacing and \(c_I\) be the inverse constant in (71). We assume that the quadrature parameters \(N^-\) and \(N^+\) are chosen according to (48). Let \(\gamma (k)\) be given by (50) and assume that k is chosen sufficiently small so that

Moreover, let \(u_h \in {\mathbb {V}}_h(D)\) be the solution of (69). Then there is a constant C independent of h, M and k satisfying

Proof

In our context, the first Strang lemma (see e.g. Theorem 4.1.1 in [15]) reads

where C is a constant independent of h, k and M. From the consistency estimates (49), (58) and (77), we deduce that

The desired estimate follows from the approximability and stability of \(\Pi _h\). \(\square \)

8 Numerical implementation and results

In this section, we present the details of the numerical implementation used to solve the following model problems.

8.1 Model problems

One of the difficulties in developing numerical approximations to (5) is that there are relatively few examples where analytical solutions are available. One exception is the case when D is the unit ball in \({{{\mathbb {R}}}^d}\). In that case, the solution to the variational problem

is radial and given by (see [21])

It is also possible to compute the right hand side corresponding to the solution \(u(x)=1-|x|^2\) in the unit ball. The corresponding right hand side can be derived by first computing the Fourier transform of \({\widetilde{u}}\), i.e.,

where \(J_n\) is the Bessel function of the first kind. When \(0<s<1\), we obtain

where \({}_2F_1\) is the Gaussian or ordinary hypergeometric function.

Remark 8.1

(Smoothness) Even though the solution \(u(x)=1-|x|^2\) is infinitely differentiable on the unit ball, the right hand side f has limited smoothness. Note that f is the restriction of \((-\varDelta )^s {\widetilde{u}}\) to the unit ball. Now \({\widetilde{u}}\in H^{3/2-\epsilon }({{{\mathbb {R}}}^d})\) for \(\epsilon >0\) but is not in \(H^{3/2}({{{\mathbb {R}}}^d})\). This means that \((-\varDelta )^s {\widetilde{u}}\) is only in \(H^{3/2-2s-\epsilon }({{{\mathbb {R}}}^d})\) and hence f is only in \(H^{3/2-2s-\epsilon }(\varOmega )\). This is in agreement with the singular behavior of \( {}_2F_1\left( d/2+s, s-1,d/2,t\right) \) at \(t=1\) (see [39], Section 15.4). In fact,

This implies that for \(s\ge 1/2\), the trace on \(|x|=1\) of f(x) given by (92) fails to exist (as for generic functions in \(H^{3/2-2s}({{{\mathbb {R}}}^d})\)). This singular behavior affects the convergence rate of the finite element method when the finite element data vector is approximated using standard numerical quadrature (e.g. Gaussian quadrature).

8.2 Numerical implementation

Based on the notation of Sect. 6, we set \(\varOmega =D\) to be either the unit disk in \({{\mathbb {R}}}^2\) or \(D=(-1,1)\) in \({{\mathbb {R}}}\). Let \(\varOmega ^M(t)\) be the corresponding dilated domains. In the one-dimensional case, we consider \({\mathcal {T}}_h(D)\) to be a uniform mesh and \({\mathbb {V}}_h(D)\) to be the continuous piecewise linear finite element space. In the two-dimensional case, \({\mathcal {T}}_h(D)\) is a regular (in the sense of p. 247 in [15]) subdivision made of quadrilaterals. In this case, \({\mathbb {V}}_h(D)\) is the set of continuous piecewise bilinear functions.

8.2.1 Non-uniform Meshes for \({\varOmega ^M(t)}\)

We extend \({\mathcal {T}}_h(D)\) to non-uniform meshes \({\mathcal {T}}^M_h(t)\), thereby violating the quasi-uniformity assumption. For \(t\le 1\), we use a quasi-uniform mesh on \(\varOmega ^M(t)=\varOmega ^M(1)\) with the same mesh size h. When \(t>1\) and \(D=(-1,1)\), we use an exponentially graded mesh outside of D, i.e. the mesh points are \(\pm \,e^{ih_0}\) for \(i=1,\ldots ,\lceil M/h\rceil \) with \(h_0=h(\ln {\gamma })/M\), where \(\gamma \) is the radius of \({\varOmega ^M(t)}\) [see (51)]. Therefore, we maintain the same number of mesh points for all \(\varOmega ^M(t)\). When D is the unit disk in \({{\mathbb {R}}}^2\), we start with a coarse subdivision of \({\varOmega ^M(t)}\) as in the left panel of Fig. 2 (the coarse mesh of D is shown in grey). Note that all vertices of a square have the same radial coordinates. We also point out that the positions of the vertices along the radial direction and outside of D follow the same exponential distribution as in the one-dimensional case. Then we refine each cell in D by connecting the midpoints of opposite edges. For the cells outside of D, we consider the same refinement in the polar coordinate system \((\ln r,\theta )\) with \(r>1\) and \(\theta \in [0,2\pi ]\). This guarantees that mesh points along the same radial direction still follow the exponential distribution after global refinements and that the number of mesh points in \({\mathcal {T}}^M_h(t)\) is the same for all \(t>0\). The right panel of Fig. 2 shows the exponentially graded mesh after three global refinements.
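The one-dimensional construction described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the implementation used for the reported computations: the function name `graded_mesh_1d` is hypothetical, the radius `gamma` of \(\varOmega ^M(t)\) is taken as an input rather than computed from (51), and 1/h is assumed to be an integer so that the uniform mesh fits D exactly.

```python
import math
import numpy as np

def graded_mesh_1d(h, M, gamma):
    """Mesh points of a 1D mesh on (-gamma, gamma): uniform with size h on
    D = (-1, 1), exponentially graded outside of D.

    Assumptions (illustration only): 1/h is an integer and gamma > 1 is the
    radius of the truncated domain, supplied by the caller.
    """
    if gamma <= 1.0:
        raise ValueError("gamma must exceed 1 for the graded part to exist")
    n_in = round(1.0 / h)                      # cells on (0, 1)
    inner = np.linspace(-1.0, 1.0, 2 * n_in + 1)
    n_out = math.ceil(M / h)                   # graded points on each side
    h0 = h * math.log(gamma) / M               # spacing in the variable ln|x|
    outer = np.exp(h0 * np.arange(1, n_out + 1))
    # Mirror the graded points to the left of D and keep the list sorted.
    return np.concatenate((-outer[::-1], inner, outer))
```

The number of points depends on h and M but not on `gamma`, which reflects the statement that the same number of mesh points is kept for all \(\varOmega ^M(t)\); when M/h is an integer the last point is exactly `gamma`.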

8.2.2 Matrix aspects

To express the linear system to be solved, we denote by U the coefficient vector of \(u_h\) and by F the coefficient vector of the \(L^2\) projection of f onto \(\mathbb {V}_h(D)\). Let \(M_h(t)\) and \(A_h(t)\) be the mass and stiffness matrices in \(\mathbb {V}^M_h(t)\). Denote by \(M_{D,h}\) the mass matrix in \({\mathbb {V}}_h(D)\). The linear system is given by

with \(y_i=ik\) and \(t_i=e^{-y_i/2}\). Here \({M_{D,h}},\ U\) and F are all extended by zeros so that the dimension of the system is equal to the dimension of \(\mathbb {V}^M_h(t)\).

8.2.3 Preconditioner

Since the linear system is symmetric, we apply the Conjugate Gradient method to solve it. Due to the norm equivalence between \(( L^2(D),H^1_0(D))_{s,2}\) and \(\widetilde{H}^s(D)\), the condition number of the system matrix is bounded by \(Ch^{-2s}\). In order to reduce the number of iterations in the one-dimensional case, we use fractional powers of the discrete Laplacian \(L_{D,h}\) as a preconditioner, where \(L_{D,h}{:}\,H^1_0(\varOmega )\rightarrow L^2(D)\) is defined by

This can be computed by the discrete sine transform, similarly to the implementation discussed in [7]. More precisely, the matrix representation of \(L_{D,h}\) is given by \({(M_{D,h})}^{-1}{A_{D,h}}\), where \(A_{D,h}\) is the stiffness matrix in \({\mathbb {V}}_h(D)\). The eigenvalues of \(A_{D,h}\) and \(M_{D,h}\) (for the same eigenvectors) are \(a_j:=(2-2\cos (j\pi h))/h\) and \(m_j:=h(4+2\cos (j\pi h))/6\) for \(j=1,\ldots ,\dim ({\mathbb {V}}_h(D))\), respectively. Therefore, the eigenvalues of \(L_{D,h}\) are given by \(\lambda _{j,h}:=a_j/m_j\). We use

as a preconditioner, where \(S_{ij}:= \sqrt{ 2 h}\sin ({ij \pi h})\) and \(\varLambda \) is the diagonal matrix whose diagonal entries are \(\lambda _{j,h}^{-s}/m_j\). We also note that \(S^{-1}=S\).
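The sine-transform structure of this preconditioner can be checked numerically with a small NumPy sketch. It is an illustration under stated assumptions, not the authors' code: the helper names `p1_matrices` and `sine_preconditioner` are hypothetical, the mesh is normalized so that \(h=1/(n+1)\) with n interior nodes (which is what makes the arguments \(j\pi h\) and the identity \(S^{-1}=S\) consistent), the stiffness eigenvalues are taken as \((2-2\cos (j\pi h))/h\), the standard values for the tridiagonal matrix with stencil \((-1,2,-1)/h\), and the product \(S\varLambda S\) is formed with dense matrices rather than a fast sine transform.

```python
import numpy as np

def p1_matrices(n):
    """P1 stiffness and mass matrices on a uniform 1D mesh with n interior
    nodes and homogeneous Dirichlet conditions (assumed h = 1/(n+1))."""
    h = 1.0 / (n + 1)
    off = np.eye(n, k=1) + np.eye(n, k=-1)
    A = (2.0 * np.eye(n) - off) / h            # stencil (-1, 2, -1)/h
    M = h * (4.0 * np.eye(n) + off) / 6.0      # stencil h*(1, 4, 1)/6
    return h, A, M

def sine_preconditioner(n, s):
    """Dense version of S * Lambda * S with Lambda_jj = lambda_j^{-s} / m_j."""
    h = 1.0 / (n + 1)
    j = np.arange(1, n + 1)
    a = (2.0 - 2.0 * np.cos(j * np.pi * h)) / h         # eigenvalues of A
    m = h * (4.0 + 2.0 * np.cos(j * np.pi * h)) / 6.0   # eigenvalues of M
    lam = a / m                                         # eigenvalues of M^{-1} A
    S = np.sqrt(2.0 * h) * np.sin(np.outer(j, j) * np.pi * h)
    B = S @ np.diag(lam ** (-s) / m) @ S
    return B, S
```

Two sanity checks follow from the eigen-decomposition: for s = 1 the matrix B coincides with the inverse of the stiffness matrix, and for s = 0 with the inverse of the mass matrix. In practice one would apply S with a fast discrete sine transform instead of a dense product.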

In the two-dimensional case, we use the multilevel preconditioner advocated in [11].

8.3 Numerical illustration for the non-smooth solution

We first consider the numerical experiments for the model problem (90) and study the behavior of the \(L^2(D)\) error.

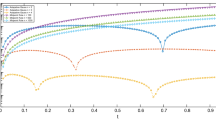

The above figures report the \(L^2(D)\)-error behavior when \(D=(-1,1)\). The left one shows the error as a function of the quadrature spacing k for a fixed mesh size (\(h=1/8192\)) and domain truncation parameter (\(M=20\)). The right plot reports the error as a function of the domain truncation parameter M with fixed mesh size (\(h=1/8192\)) and quadrature spacing \((k=0.2)\). The spatial error dominates when k is small (left) and M is large (right).

8.3.1 Influence from the sinc quadrature and domain truncation

When \(D=(-\,1,1)\), we approximate the solution on a fixed uniform mesh with mesh size \(h=1/8192\). The domain truncation parameter M is also fixed to be 20. Thus, h is small enough and M is large enough so that the \(L^2(D)\)-error is dominated by the sinc quadrature spacing k. The left part of Fig. 3 shows that the \(L^2(D)\)-error quickly converges to the error dominated by the Galerkin approximation when k approaches zero. Similar results are observed in the right part of Fig. 3 when the domain truncation parameter M increases. In this case, the mesh size is \(h=1/8192\) and the quadrature step size is \(k=0.2\).

8.3.2 Error convergence from the finite element approximation

We implement the numerical algorithm for the two-dimensional case using the deal.II library [4] and we invert the matrices in (93) using the direct solver from UMFPACK [20]. Figure 4 shows the approximated solutions for \(s=0.3\) and \(s=0.7\), respectively. Table 1 reports errors \(\Vert u-u_h\Vert _{L^2(D)}\) and rates of convergence with \(s=0.3, 0.5\) and 0.7. Here the quadrature spacing (\(k=0.25\)) and the domain truncation parameter (\(M=4\)) are fixed so that the finite element discretization dominates the error.

We note that Theorem 7.1 together with Theorem 5.4 in [29] (see also Proposition 2.7 in [10]) guarantees that when \(\partial D\) is of class \(C^\infty \) and f is in \(L^2(D)\), the solution of (5) is in \({\widetilde{H}}^{s+\alpha ^-}(D)\) where

and \(\alpha ^-\) denotes any number strictly smaller than \(\alpha \). This indicates that the expected rate of convergence in the \(L^2(D)\) norm should be \(\beta +\alpha ^--s\) if the solution u is in \({\widetilde{H}}^\beta (D)\). Since the solution u is in \(H^{s+1/2-\epsilon }(D)\) (see [1] for a proof), Table 1 matches the expected rate of convergence \(\min (1,s+1/2)\).
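For readers who wish to reproduce rate columns of this kind, observed convergence rates are commonly obtained from errors on successive meshes by the formula \(\log (e_i/e_{i+1})/\log (h_i/h_{i+1})\). The sketch below is a generic helper written under that assumption; the function names are hypothetical and the example numbers suggested afterwards are synthetic, not values from Table 1.

```python
import numpy as np

def observed_rates(h, err):
    """Observed convergence rates between consecutive mesh sizes.

    h and err are sequences of mesh sizes and matching errors; entry i of the
    result is log(err[i]/err[i+1]) / log(h[i]/h[i+1]).
    """
    h = np.asarray(h, dtype=float)
    err = np.asarray(err, dtype=float)
    return np.log(err[:-1] / err[1:]) / np.log(h[:-1] / h[1:])

def expected_l2_rate(s):
    """Expected L2(D) rate min(1, s + 1/2) quoted in the text for the
    non-smooth model solution."""
    return min(1.0, s + 0.5)
```

As a usage check, feeding synthetic errors of the form \(3h^{0.8}\) on \(h=1/4,1/8,1/16\) returns rates equal to 0.8 up to rounding, which is the value of `expected_l2_rate(0.3)`.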

Approximated solutions of (91) for \(s=0.3\) (left) and \(s=0.7\) (right) on the unit disk

8.4 Numerical illustration for the smooth solution

When the solution is smooth, the finite element error (assuming the exact computation of the stiffness entries, i.e. no consistency error) satisfies

where \(\alpha \) is given by (94). In contrast, because of the inherent consistency error, our method only guarantees (cf. Theorem 7.7)

Table 2 reports \(L^2(D)\)-errors and rates for the problem (5) with the smooth solution \(u(x)=1-|x|^2\) and the corresponding right hand side data (92) in the unit disk. To see the error decay, here we choose the quadrature step size \(k=0.2\) and the domain truncation parameter \(M=5\). The observed decay in the error does not match the expected rate (95). We think this loss of accuracy may be due either to the deterioration of the shape regularity constant in generating the subdivisions of \(\varOmega ^M(t)\) (see Sect. 8.2) or to the imprecise numerical integration of the singular right hand side in (92).

To illustrate this, we consider the one dimensional problem. Instead of using (92) to compute the right hand side vector, similar to (7), we compute

with \(D=(-1,1)\). We note that when \(s< 1/2\), the fractional derivative with the negative power \(2s-1\) still makes sense for the local basis function \(\phi _j\). The right hand side of (96) can now be computed exactly.

We illustrate the convergence rate for the one dimensional case in Table 3 when the \(L^2(D)\)-projection of right hand side is computed from (96). In this case, we compute at \(s=0.3,0.4,0.7\) as the expression in (96) is not valid for \(s=0.5\). We also fix \(k=0.2\) and \(M=6\). In all cases, we observe the predicted rate of convergence \(\min (3/2,2-s)\), see (95).

References

Acosta, G., Borthagaray, J.P.: A fractional Laplace equation: regularity of solutions and finite element approximations. SIAM J. Numer. Anal. 55(2), 472–495 (2017). https://doi.org/10.1137/15M1033952

Auscher, P., Hofmann, S., Lewis, J.L., Tchamitchian, P.: Extrapolation of Carleson measures and the analyticity of Kato’s square-root operators. Acta Math. 187(2), 161–190 (2001). https://doi.org/10.1007/BF02392615

Bacuta, C.: Interpolation Between Subspaces of Hilbert Spaces and Applications to Shift Theorems for Elliptic Boundary Value Problems and Finite Element Methods. ProQuest LLC, Ann Arbor, MI (2000). http://gateway.proquest.com/openurl?url_ver=Z39.88-2004&rft_val_fmt=info:ofi/fmt:kev:mtx:dissertation&res_dat=xri:pqdiss&rft_dat=xri:pqdiss:9994203. Thesis (Ph.D.)—Texas A&M University

Bangerth, W., Hartmann, R., Kanschat, G.: deal.II—a general-purpose object-oriented finite element library. ACM Trans. Math. Softw. 33(4), 24 (2007). https://doi.org/10.1145/1268776.1268779

Biler, P., Karch, G., Woyczyński, W.A.: Critical nonlinearity exponent and self-similar asymptotics for Lévy conservation laws. Ann. Inst. H. Poincaré Anal. Non Linéaire 18(5), 613–637 (2001). https://doi.org/10.1016/S0294-1449(01)00080-4

Bonito, A., Lei, W., Pasciak, J.E.: On sinc quadrature approximations of fractional powers of regularly accretive operators. J. Numer. Math. (2018). https://doi.org/10.1515/jnma-2017-0116

Bonito, A., Lei, W., Pasciak, J.E.: The approximation of parabolic equations involving fractional powers of elliptic operators. J. Comput. Appl. Math. 315, 32–48 (2017). https://doi.org/10.1016/j.cam.2016.10.016

Bonito, A., Pasciak, J.E.: Numerical approximation of fractional powers of elliptic operators. Math. Comput. 84(295), 2083–2110 (2015). https://doi.org/10.1090/S0025-5718-2015-02937-8

Bonito, A., Pasciak, J.E.: Numerical approximation of fractional powers of regularly accretive operators. IMA J. Numer. Anal. 37(3), 1245–1273 (2017). https://doi.org/10.1093/imanum/drw042

Borthagaray, J.P., Del Pezzo, L.M., Martínez, S.: Finite element approximation for the fractional eigenvalue problem. J. Sci. Comput. 77(1), 308–329 (2018). https://doi.org/10.1007/s10915-018-0710-1

Bramble, J.H., Pasciak, J.E., Vassilevski, P.S.: Computational scales of Sobolev norms with application to preconditioning. Math. Comput. 69(230), 463–480 (2000). https://doi.org/10.1090/S0025-5718-99-01106-0

Bramble, J.H., Xu, J.: Some estimates for a weighted \(L^2\) projection. Math. Comput. 56(194), 463–476 (1991). https://doi.org/10.2307/2008391

Bramble, J.H., Zhang, X.: The analysis of multigrid methods. In: Ciarlet, P.G., Lions, J.L. (eds.) Handbook of Numerical Analysis, vol. VII, pp. 173–415. North-Holland, Amsterdam (2000)

Chandler-Wilde, S.N., Hewett, D.P., Moiola, A.: Interpolation of Hilbert and Sobolev spaces: quantitative estimates and counterexamples. Mathematika 61(2), 414–443 (2015). https://doi.org/10.1112/S0025579314000278

Ciarlet, P.G.: The finite element method for elliptic problems. In: Classics in Applied Mathematics, vol. 40. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA (2002). https://doi.org/10.1137/1.9780898719208. Reprint of the 1978 original [North-Holland, Amsterdam; MR0520174 (58 #25001)]

Constantin, P.: Energy spectrum of quasigeostrophic turbulence. Phys. Rev. Lett. 89(18), 184501 (2002)

Constantin, P., Majda, A.J., Tabak, E.: Formation of strong fronts in the \(2\)-D quasigeostrophic thermal active scalar. Nonlinearity 7(6), 1495–1533 (1994)

Cont, R., Tankov, P.: Financial Modelling with Jump Processes. Chapman & Hall/CRC Financial Mathematics Series. Chapman & Hall/CRC, Boca Raton (2004)

Córdoba, A., Córdoba, D.: A maximum principle applied to quasi-geostrophic equations. Commun. Math. Phys. 249(3), 511–528 (2004). https://doi.org/10.1007/s00220-004-1055-1

Davis, T.A.: UMFPACK Version 5.2.0 User Guide. University of Florida, Gainesville (2007)

D’Elia, M., Gunzburger, M.: The fractional Laplacian operator on bounded domains as a special case of the nonlocal diffusion operator. Comput. Math. Appl. 66(7), 1245–1260 (2013). https://doi.org/10.1016/j.camwa.2013.07.022

Demengel, F., Demengel, G.: Functional spaces for the theory of elliptic partial differential equations. Universitext. Springer, London; EDP Sciences, Les Ulis (2012). https://doi.org/10.1007/978-1-4471-2807-6. Translated from the 2007 French original by Reinie Erné

Droniou, J.: A numerical method for fractal conservation laws. Math. Comput. 79(269), 95–124 (2010). https://doi.org/10.1090/S0025-5718-09-02293-5

Du, Q., Gunzburger, M., Lehoucq, R.B., Zhou, K.: Analysis and approximation of nonlocal diffusion problems with volume constraints. SIAM Rev. 54(4), 667–696 (2012). https://doi.org/10.1137/110833294

Dupont, T., Scott, R.: Polynomial approximation of functions in Sobolev spaces. Math. Comput. 34(150), 441–463 (1980). https://doi.org/10.2307/2006095

Faermann, B.: Localization of the Aronszajn–Slobodeckij norm and application to adaptive boundary element methods. II. The three-dimensional case. Numer. Math. 92(3), 467–499 (2002). https://doi.org/10.1007/s002110100319

Gatto, P., Hesthaven, J.S.: Numerical approximation of the fractional Laplacian via \(hp\)-finite elements, with an application to image denoising. J. Sci. Comput. 65(1), 249–270 (2015). https://doi.org/10.1007/s10915-014-9959-1

Grisvard, P.: Elliptic problems in nonsmooth domains. In: Classics in Applied Mathematics, vol. 69. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA (2011). Reprint of the 1985 original [MR0775683], With a foreword by Susanne C. Brenner

Grubb, G.: Fractional Laplacians on domains, a development of Hörmander’s theory of \(\mu \)-transmission pseudodifferential operators. Adv. Math. 268, 478–528 (2015). https://doi.org/10.1016/j.aim.2014.09.018

Held, I.M., Pierrehumbert, R.T., Garner, S.T., Swanson, K.L.: Surface quasi-geostrophic dynamics. J. Fluid Mech. 282, 1–20 (1995). https://doi.org/10.1017/S0022112095000012

Jerison, D., Kenig, C.E.: The inhomogeneous Dirichlet problem in Lipschitz domains. J. Funct. Anal. 130(1), 161–219 (1995). https://doi.org/10.1006/jfan.1995.1067

Kato, T.: Fractional powers of dissipative operators. J. Math. Soc. Jpn. 13, 246–274 (1961). https://doi.org/10.2969/jmsj/01330246

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. North-Holland Mathematics Studies, vol. 204. Elsevier Science B.V., Amsterdam (2006)

Levendorskiĭ, S.Z.: Pricing of the American put under Lévy processes. Int. J. Theor. Appl. Finance 7(3), 303–335 (2004). https://doi.org/10.1142/S0219024904002463

Lions, J.L., Magenes, E.: Non-Homogeneous Boundary Value Problems and Applications, vol. II. Springer, New York-Heidelberg (1972). Translated from the French by P. Kenneth, Die Grundlehren der mathematischen Wissenschaften, Band 182

Lund, J., Bowers, K.L.: Sinc Methods for Quadrature and Differential Equations. Society for Industrial and Applied Mathematics (SIAM), Philadelphia (1992). https://doi.org/10.1137/1.9781611971637

McLean, W.: Strongly Elliptic Systems and Boundary Integral Equations. Cambridge University Press, Cambridge (2000)

Mueller, C.: The heat equation with Lévy noise. Stoch. Process. Appl. 74(1), 67–82 (1998). https://doi.org/10.1016/S0304-4149(97)00120-8

Olver, F., Lozier, D., Boisvert, R., Clark, C.: NIST digital library of mathematical functions. Online companion to [65]: http://dlmf.nist.gov (2010)

Pham, H.: Optimal stopping, free boundary, and American option in a jump-diffusion model. Appl. Math. Optim. 35(2), 145–164 (1997). https://doi.org/10.1007/s002459900042

Ros-Oton, X., Serra, J.: The Dirichlet problem for the fractional Laplacian: regularity up to the boundary. J. Math. Pures Appl. (9) 101(3), 275–302 (2014). https://doi.org/10.1016/j.matpur.2013.06.003

Sauter, S.A., Schwab, C.: Boundary Element Methods. Springer Series in Computational Mathematics, vol. 39. Springer, Berlin (2011). https://doi.org/10.1007/978-3-540-68093-2. Translated and expanded from the 2004 German original

Scott, L.R., Zhang, S.: Finite element interpolation of nonsmooth functions satisfying boundary conditions. Math. Comput. 54(190), 483–493 (1990). https://doi.org/10.2307/2008497

Stinga, P.R., Torrea, J.L.: Extension problem and Harnack’s inequality for some fractional operators. Commun. Partial Differ. Equ. 35(11), 2092–2122 (2010). https://doi.org/10.1080/03605301003735680

Zaslavsky, G.M.: Chaos, fractional kinetics, and anomalous transport. Phys. Rep. 371(6), 461–580 (2002). https://doi.org/10.1016/S0370-1573(02)00331-9

Andrea Bonito and Wenyu Lei were supported in part by the National Science Foundation through Grant DMS-1254618, while Wenyu Lei and Joseph E. Pasciak were supported in part by the National Science Foundation through Grant DMS-1216551.

A. Proof of Lemma 7.6

The proof of Lemma 7.6 requires the following auxiliary localization result. We refer to [26] for a similar result in two-dimensional space.

Lemma A.1

For \(r\in (0,1/2)\), let v be in \(H^r(D)\) and \({\widetilde{v}}\) denote the extension by zero of v to \({{{\mathbb {R}}}^d}\). There exists a constant C independent of h such that

with a constant C independent of h.
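The restriction \(r<1/2\) is what allows the extension by zero to remain in \(H^r({{{\mathbb {R}}}^d})\). As a sketch of why, and assuming the unnormalized Aronszajn–Slobodeckij seminorm (the normalization used elsewhere in the paper may differ by a constant factor), take \(d=1\), \(D=(0,1)\) and \(v\equiv 1\), so that \({\widetilde{v}}\) is the indicator function of (0, 1). Only pairs (x, y) with exactly one point in (0, 1) contribute, and by symmetry

\[
|{\widetilde{v}}|_{H^r({\mathbb {R}})}^2
= \int_{{\mathbb {R}}}\int_{{\mathbb {R}}} \frac{({\widetilde{v}}(x)-{\widetilde{v}}(y))^2}{|x-y|^{1+2r}}\,dy\,dx
= 2\int_0^1\left( \int_{-\infty }^0 \frac{dy}{(x-y)^{1+2r}} + \int_1^{\infty } \frac{dy}{(y-x)^{1+2r}}\right) dx
= \frac{1}{r}\int_0^1 \left( x^{-2r}+(1-x)^{-2r}\right) dx
= \frac{2}{r(1-2r)}.
\]

The right-hand side is finite exactly when \(r<1/2\) and blows up as \(r\rightarrow 1/2\), which is consistent with the range of r assumed in the lemma.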

Proof

Let \(\widetilde{\mathcal {T}}_h(D)\) be any quasi-uniform mesh (satisfying (63) and (64)) which extends \({\mathcal {T}_h(D)}\) beyond a unit size neighborhood of D. Fix \(\delta >0\) and for \(\tau \in {\mathcal {T}_h(D)}\) set

Let

and let \(\widetilde{\mathcal {T}}_h(D^\delta _h)\) denote the set of \(\tau \in \widetilde{\mathcal {T}}_h(D)\) contained in \(D_h^\delta \). Finally, for \(\tau \in \widetilde{\mathcal {T}}_h(D^\delta _h){\setminus } {\mathcal {T}_h(D)}\), set

Fix \(v\in H^r(D)\). Since \({\widetilde{v}}\) vanishes outside of \(D_h^\delta \),

The second integral above is bounded by

Expanding the first integral gives

Applying the arithmetic-geometric mean inequality gives

As in (97),

Now,

Using this and Fubini’s Theorem gives

Thus \(J_4\le 4 J_5\), and hence \(J_4\) is bounded by the right-hand side of (100).

For \(J_3\), we clearly have

For any element \(\tau ' \in \widetilde{\mathcal {T}}_h(D^\delta _h)\), let \(v_{\tau '}\) denote \({\widetilde{v}}\) restricted to \(\tau '\) and extended by zero outside. As \(r\in (0,1/2)\), \(v_{\tau '} \in H^r({{{\mathbb {R}}}^d})\) and satisfies

The constant C above only depends on Lipschitz constants associated with \(\tau '\) (see [22, 28]), which in turn only depend on the constants appearing in (63). We use the triangle inequality to get

and hence a Cauchy–Schwarz inequality implies that

with \(N_\tau \) denoting the number of elements in \({\widetilde{\tau }}\). As the mesh is quasi-uniform, \(N_\tau \) can be bounded independently of h. In addition, the mesh quasi-uniformity condition also implies that each \(\tau ' \in {\mathcal {T}_h(D)}\) is contained in at most a fixed number (independent of h) of \({\widetilde{\tau }}\) (with \(\tau \in \widetilde{\mathcal {T}}_h(D^\delta _h)\)). Thus,

Combining the estimates for \(J_2,J_3\) and \(J_4\) completes the proof of the lemma. \(\square \)
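For reference, the Cauchy–Schwarz step used in the estimate of \(J_3\) is, in its elementary form, the following inequality for a finite sum of nonnegative numbers \(a_1,\ldots ,a_N\). Reading N as \(N_\tau \) and the \(a_i\) as the seminorms of the pieces \(v_{\tau '}\) is our interpretation of the argument, not a formula quoted from the proof.

\[
\Big( \sum _{i=1}^{N} a_i\Big) ^2
= \Big( \sum _{i=1}^{N} 1\cdot a_i\Big) ^2
\le \Big( \sum _{i=1}^{N} 1^2\Big) \Big( \sum _{i=1}^{N} a_i^2\Big)
= N\sum _{i=1}^{N} a_i^2 .
\]

Since \(N_\tau \) is bounded independently of h, the factor N can be absorbed into the generic constant C.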

Proof of Lemma 7.6

In this proof, C denotes a generic constant independent of h and of the index j introduced below. The inequality (4.1) of [43] guarantees that for \(\tau \in {{\mathcal {T}}}_h\), we have

for any linear polynomial p and \(v\in H^{1}(S_\tau )\). Here \(S_\tau \) denotes the union of \(\tau ^\prime \in {{\mathcal {T}}}_h\) with \(\tau \cap \tau ^\prime \ne \emptyset \).

Now, we map \(\tau \) to the reference element using an affine transformation. The mapping takes \(S_\tau \) to \({\widehat{S}}_\tau \). Our aim is to take advantage of the averaged Taylor polynomial constructed in [25], which requires the domain to be star-shaped with respect to a ball (of uniform diameter). The patch \({\widehat{S}}_\tau \) may not satisfy this property. However, it can be written as the (overlapping) union of domains \({\widehat{D}}_j\) with each \( {\widehat{D}}_j\) consisting of the union of pairs of elements of \({\widehat{S}}_\tau \) sharing a common face. These \({\widehat{D}}_j\) are star-shaped with respect to balls of diameter depending on the shape regularity constant of the subdivision, which is uniform thanks to (63). Hence, the averaged Taylor polynomial \({{\mathcal {Q}}}_j\) constructed in [25] satisfies (see Theorem 6.1 of [25]), for all \(v\in H^{\beta }({\widehat{S}}_j)\),

Taking \(\Vert \cdot \Vert _{{\widehat{D}}_j}\) to be \(\Vert \cdot \Vert _{L^2({\widehat{D}}_j)} \) or \(\Vert \cdot \Vert _{H^1({\widehat{D}}_j)}\) and \(|\cdot |_{{\widehat{D}}_j} = |\cdot |_{H^\beta ({\widehat{D}}_j)}\) in Theorem 7.1 of [25] implies that (102) holds with \({\widehat{D}}_j\) replaced by \({\widehat{S}}_j\). This, (101) and a Bramble–Hilbert argument imply that for \(v\in H^\beta (D)\cap H^1_0(D)\),

Inequality (88) follows from (103) and interpolation.

We cannot use Theorem 7.1 of [25] to derive (87) because of the non-locality of the norm \(|\cdot |_{H^\beta (D)}\). Instead, we apply Lemma A.1, (103), and the fact that \(|\pi _h^{sz}v|_{H^\beta (\tau )}=0\) to obtain, for \(v\in H^\beta (D)\cap H^1_0(D)\),

The norms in (87) can be replaced by \(\Vert \cdot \Vert _{H^\beta (D)}\) and hence (87) follows from (103) and (104). \(\square \)

Cite this article

Bonito, A., Lei, W. & Pasciak, J.E. Numerical approximation of the integral fractional Laplacian. Numer. Math. 142, 235–278 (2019). https://doi.org/10.1007/s00211-019-01025-x
