Abstract

In system reliability, practitioners may be interested in testing the homogeneity of the component lifetime distributions based on system lifetimes from multiple data sources for various reasons, such as identifying the component supplier that provides the most reliable components. In this paper, we develop distribution-free hypothesis testing procedures for the homogeneity of the component lifetime distributions based on system lifetime data when the system structures are known. Several nonparametric test statistics based on the empirical likelihood method are proposed for testing the homogeneity of two or more component lifetime distributions. The computational approaches to obtain the critical values of the proposed test procedures are provided. The performance of the proposed empirical likelihood ratio test procedures is evaluated and compared to that of the nonparametric Mann–Whitney U test and some parametric test procedures. The simulation results show that the proposed test procedures provide comparable power performance under different sample sizes and underlying component lifetime distributions, and they are powerful in detecting changes in the shape of the distributions.

1 Introduction

In reliability studies involving systems, practitioners may be interested in comparing the lifetime characteristics of different systems and the lifetime characteristics of the components that make up those systems. For example, one wants to compare the lifetime characteristics of single-cell cylindrical dry batteries (i.e., components) when they are used in different devices (i.e., systems). The problem of comparing the lifetime characteristics of the components that make up the systems becomes challenging when the system structures are different, especially when the comparisons can only be made based on system lifetime data. For instance, the lifetimes of single-cell cylindrical dry batteries can be very different when used in high-drain devices (e.g., digital cameras and radio-controlled toys) compared to those used in low-drain devices (e.g., clocks and remote controls). In this case, the life testing experiments can be done only when the batteries are used in the devices. Therefore, if researchers want to obtain information about the lifetime of the cylindrical dry batteries, the only accessible data is the system lifetime data. Another example is when the life test involves fielded systems where data are gathered when systems are deployed to the field after the prototype stage. The information on which component leads to the system failure cannot usually be accessed because the experimenters often do not have the need or capability to measure the failed components one by one or the whole system may be discarded after failure. In this paper, the problem of interest is that, given the system structure and system lifetime data, we test if the components from different systems follow the same distribution. 
The lack of homogeneity of the component lifetime distributions in two different systems could be a consequence of the working conditions in both systems (i.e., the components can be identically distributed a priori, but they are not identically distributed when they are placed in systems with different structures).

Our study is of practical interest, as it will apply directly to the many situations in which the i.i.d. assumption is deemed reasonable, and the notion of system signature proposed by Samaniego (1985) can be applied. Statistical inference for the lifetime distribution of components based on system lifetimes is of interest and has been developed in the past decades. Early literature on this topic is based on “masked data”, which assumes that only partial information is available on the component failures that lead to the system’s failure. Under this framework, Meilijson (1981) and Bueno (1988) identified the component lifetime distribution estimation based on system failure times together with autopsy statistics on the components in the system. Miyakawa (1984) discussed parametric and nonparametric estimation methods for component reliability in the 2-component series system under competing risks with incomplete data. Boyles and Samaniego (1987) derived the nonparametric maximum likelihood estimate (MLE) of component reliability based on nomination sampling in parallel systems. Usher and Hodgson (1988) explored a general method for estimating component reliabilities from a J-component series system lifetime data. Guess et al. (1991) extended Miyakawa’s work and treated a broader class of estimation problems based on masked data. Given the coherent systems with known system structures described by signature, numerous papers were published for component lifetime distributions based on complete system lifetime data. More recent work includes the paper by Bhattacharya and Samaniego (2010) in which the authors estimated the component reliability from system failure data. Eryilmaz et al. (2011) discussed reliability properties of m-consecutive-k-out-of-n: F systems with exchangeable components. Balakrishnan et al. 
(2011a, b) developed the exact nonparametric method to measure characteristics of the component lifetime distribution based on the lifetimes of coherent systems with known signatures for complete and censored data, respectively. Navarro et al. (2012) developed a general method for inference on the scale parameter of the component lifetime distribution from the system lifetimes. Ng et al. (2012) discussed parametric statistical inference for the component lifetime distributions when they follow a proportional hazard rate model and the system signature is known. Chahkandi et al. (2014) constructed prediction intervals for the lifetime of a coherent system with known signature. In contrast, while most existing works dealt with system lifetimes from systems with the same signature, Hall et al. (2015) developed a novel nonparametric estimator of component reliability function by maximizing the combined system likelihood function when the systems have different system signatures. Then, Jin et al. (2017) extended the work by Hall et al. (2015) to the case that the system signatures are unknown. For testing the homogeneity of the component lifetime distributions based on system lifetime data, Zhang et al. (2015) developed a nonparametric test and several parametric tests under the exponential distribution and studied the power performance of these test procedures.

In this paper, we develop nonparametric test procedures for testing the homogeneity of component lifetime distributions using the empirical likelihood ratio. The empirical likelihood was first proposed by Owen (1988). It is known that the empirical likelihood ratio behaves like an ordinary parametric likelihood ratio (Wilks 1938) in that, for example, the empirical likelihood ratio test statistic has an asymptotic chi-square distribution, and it has advantages over other nonparametric methods. The book by Owen (2001) provides an excellent theoretical background on empirical likelihood and its applications. The idea of empirical likelihood has been applied to different areas of statistics such as time series analysis (Nordman and Lahiri 2014), survival analysis (Zhou 2019), longitudinal data analysis (Nadarajah et al. 2014), and regression analysis (Chen and Keilegom 2009). Zhang (2002) proposed a new parameterization approach to construct goodness-of-fit tests based on the empirical likelihood ratio and showed that the proposed tests are more powerful than the Kolmogorov–Smirnov, Cramér–von Mises, and Anderson–Darling tests. Later on, Zhang (2006) proposed a more powerful nonparametric statistic for testing the homogeneity of statistical distributions based on the empirical likelihood ratio. In addition to empirical likelihood ratio tests, another nonparametric statistic that can be considered for testing the homogeneity of distributions is the rank-based Mann–Whitney-type statistic (also known as the U statistic) based on the work by Mann and Whitney (1947) and Wilcoxon (1992). The hypothesis test using the U statistic is a rank-sum test, and it can be used for comparing two unpaired groups of data.

In this paper, based on the empirical likelihood ratio, we propose two nonparametric test procedures for testing the homogeneity of components’ lifetime distributions given the system lifetimes and the system signatures. The rest of the paper is organized as follows. In Sect. 2, we introduce the mathematical notations and formulate the homogeneity test as a statistical hypothesis testing problem. We also review the existing parametric and nonparametric testing procedures for the homogeneity of component lifetime distributions based on system-level data. In Sect. 3, we introduce the two proposed test procedures based on the empirical likelihood ratio. Then, the computational approach to obtain the null distributions of the nonparametric test statistics by means of the Monte Carlo method is described in Sect. 4. In Sect. 5, a numerical example is used to illustrate the methodologies proposed in this paper. Monte Carlo simulation studies are used to evaluate the performances of those parametric and nonparametric test procedures in Sect. 6. Finally, concluding remarks and future research directions are provided in Sect. 7.

2 Tests for homogeneity based on system lifetime data

In this section, we introduce the mathematical notations and formulate the homogeneity test as a statistical hypothesis testing problem. Then, we review several existing parametric and nonparametric test procedures.

Suppose there are two different coherent systems, System 1 and System 2, with \(n_{1}\) and \(n_{2}\) i.i.d. components following the same absolutely continuous lifetime distribution, respectively. The system lifetime data are obtained by putting \(M_{1}\) System 1 and \(M_{2}\) System 2 on a life test. We denote the system lifetimes for System i as \({\varvec{T}}_{i} = (T_{i1}, T_{i2}, \ldots , T_{iM_{i}})\), and the lifetimes of the \(n_{i}\) components in the j-th system of System i (i.e., the system with lifetime \(T_{ij}\)) as \({\varvec{X}}_{ij} = (X_{ij1}, X_{ij2}, \ldots , X_{ijn_{{i}}})\), \(i = 1, 2\), \(j = 1, 2, \ldots , M_{i}\). The cumulative distribution function (CDF), survival function (SF), and probability density function (PDF) of the lifetimes of System i (i.e., \(T_{ij}\)) are denoted by \(F_{T_i}\), \({\bar{F}}_{T_i}\), and \(f_{T_i}\), for \(i = 1, 2\), and the CDF, SF, and PDF of the lifetimes of the components in System i (i.e., \(X_{ijk}\)) are denoted by \(F_{X_{i}}\), \({\bar{F}}_{X_i}\), and \(f_{X_i}\), for \(i = 1, 2\).

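To describe the structure of a coherent system, we consider the system signature introduced by Samaniego (1985). With \(X_{i,1:n_i}< X_{i,2:n_i}< \cdots < X_{i,n_i:n_i}\) denoting the ordered lifetimes of the \(n_i\) components of a system of type i, the signature \({\textbf{s}}_i=(s_{i1}, \ldots , s_{in_i})\) of the coherent system i (\(i = 1, 2\)) is defined as

$$\begin{aligned} s_{ik} = \Pr (T_{i} = X_{i,k:n_{i}}), \quad k = 1, 2, \ldots , n_{i}, \end{aligned}$$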

where \(s_{i k}\), \(k = 1, 2, \ldots , n_{i}\), are non-negative real numbers in [0, 1] that do not depend on the component lifetime distribution \(F_{X_{i}}\) with \(\sum _{k = 1}^{n_{i}} s_{i k} = 1.\) From Kochar et al. (1999) (see also, Samaniego 2007), the SF of the system lifetime \(T_{i}\) can be written in terms of the SF of the component lifetime \(X_{ijk}\) as

$$\begin{aligned} {\bar{F}}_{T_{i}}(t) = \sum _{k=1}^{n_{i}} s_{ik} \sum _{j=0}^{k-1} \binom{n_{i}}{j} [1-p_{i}(t)]^{j}\,[p_{i}(t)]^{n_{i}-j} = h_{i}(p_{i}(t)), \end{aligned}$$(2)

where \(p_{i}(t) = {{{\bar{F}}}}_{X_{i}}(t)\) and \(h_{i}(\cdot )\) is a polynomial function that can be obtained from the system signature or the minimal signature. We further denote the inverse function of \(h_{i}(\cdot )\) as

$$\begin{aligned} p_{i}(t) = h_{i}^{-1}(q_{i}(t)), \end{aligned}$$

where \(q_{i}(t) = {{{\bar{F}}}}_{T_{i}}(t).\) Navarro et al. (2011) stated that the polynomial h(p) is strictly increasing for \(p \in (0, 1)\), with \(h(0)=0\) and \(h(1) = 1\); hence, its inverse function \(h^{-1}\) exists on (0, 1) and is also strictly increasing, with \(h^{-1}(0) = 0\) and \(h^{-1}(1) = 1\). Moreover, the PDF of the system lifetime \(T_{i}\) can be expressed in terms of \(f_{X_{i}}(t)\) and \(p_{i}(t) = {\bar{F}}_{X_{i}}(t)\) as

$$\begin{aligned} f_{T_{i}}(t) = f_{X_{i}}(t)\, h_{i}'(p_{i}(t)), \end{aligned}$$(3)

where \(h_{i}'(\cdot )\) denotes the derivative of \(h_{i}(\cdot )\).

In addition to the system signatures, Navarro et al. (2007) noted that the SF of the system lifetime \(T_i\) can be expressed in terms of the SF of k-component series system lifetimes, \(X_{i,1:k} = \min (X_{i1}, X_{i2}, \ldots , X_{ik})\), \(k = 1, 2, \ldots , n_{i}\):

$$\begin{aligned} {\bar{F}}_{T_{i}}(t) = \sum _{k=1}^{n_{i}} a_{ik}\, {\bar{F}}_{X_{i}}^{k}(t), \end{aligned}$$

where \({{{\bar{F}}}}_{X_{i}}^{k}(t)\) is the SF of a k-component series system lifetime and \(a_{i1}, a_{i2}, \ldots , a_{in_{i}}\) are integers (possibly negative) that do not depend on the component lifetime distribution and satisfy \(\sum _{k = 1}^{n_{i}} a_{ik} = 1\) (see also, Ng et al. 2012). The vector \({\varvec{a}}_{i} = (a_{i1}, a_{i2}, \ldots , a_{in_{i}})\) is called the minimal signature of System i. The values of the minimal signature are the coefficients of the polynomial function \(h_{i}(\cdot )\) defined in Eq. (2), that is, \(h_{i}(p) = \sum _{k=1}^{n_{i}} a_{ik} p^{k}\) (see, for example, Navarro 2022; Navarro and Rubio 2009).
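As a small illustration of how the signature and the minimal signature relate, the following sketch expands the order-statistic representation of the system SF into powers of p. The function names `minimal_signature` and `h` are illustrative choices, not notation from the paper, and the expansion assumes i.i.d. components as in the text.

```python
from fractions import Fraction
from math import comb


def minimal_signature(signature):
    """Return (a_1, ..., a_n) such that h(p) = sum_k a_k * p**k.

    Uses h(p) = sum_{j=0}^{n-1} S_j * C(n, j) * (1-p)**j * p**(n-j), where
    S_j = s_{j+1} + ... + s_n is the chance the system survives j failures.
    """
    s = [Fraction(x) for x in signature]
    n = len(s)
    coeffs = [Fraction(0)] * (n + 1)          # coeffs[d] multiplies p**d
    for j in range(n):
        survive_j = sum(s[j:], Fraction(0))
        for r in range(j + 1):                # binomial expansion of (1-p)**j
            coeffs[n - j + r] += survive_j * comb(n, j) * comb(j, r) * (-1) ** r
    return coeffs[1:]


def h(a, p):
    """Evaluate the structure polynomial h(p) for minimal signature a."""
    return sum(a_k * p ** k for k, a_k in enumerate(a, start=1))


parallel4 = minimal_signature([0, 0, 0, 1])      # 4-component parallel system
series3 = minimal_signature([1, 0, 0])           # 3-component series system
print([int(c) for c in parallel4], [int(c) for c in series3])
# prints [4, -6, 4, -1] [0, 0, 1]
```

Exact rational arithmetic is used so that signatures with fractional entries (passed as strings such as `"1/4"`) give exact integer coefficients.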

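Based on the system lifetime data \({\varvec{T}}_{i}\), \(i = 1, 2\), we are interested in testing the homogeneity of the component lifetime distributions, which can be formulated as the hypothesis testing problem

$$\begin{aligned} H_{0}: F_{X_{1}}(t) = F_{X_{2}}(t) \text { for all } t \quad \text {versus}\quad H_{1}: F_{X_{1}}(t) \ne F_{X_{2}}(t) \text { for some } t. \end{aligned}$$(4)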

2.1 Parametric test procedures

For comparative purposes, we consider two parametric tests—an asymptotic test under the assumption of exponentially distributed component lifetimes and a parametric likelihood ratio test—for testing the hypotheses in Eq. (4) based on system lifetime data \({\varvec{T}}_{i}\), \(i = 1, 2\).

2.1.1 Asymptotic tests under exponentially distributed component lifetimes assumption

We consider the asymptotic parametric test developed in Zhang et al. (2015) based on the assumption that the underlying lifetimes of components follow an exponential distribution. Specifically, we assume that the lifetimes of components from System i \((i = 1, 2)\) follow an exponential distribution with scale parameter \(\theta _i > 0\) (denoted as \({\textit{Exp}}(\theta _i))\) with PDF

$$\begin{aligned} f_{X_{i}}(t; \theta _{i}) = \frac{1}{\theta _{i}} \exp \left( -\frac{t}{\theta _{i}}\right) , \quad \text {for}\quad t > 0. \end{aligned}$$(5)

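To estimate the scale parameter \(\theta _{i}\) using the system lifetime data \({\varvec{T}}_{i}\), \(i = 1, 2\), we use the method of moments estimator (MME) (Ng et al. 2012) defined as

$$\begin{aligned} {\tilde{\theta }}_{i} = \frac{1}{M_{i} \Delta _{i}} \sum _{j=1}^{M_{i}} T_{ij}, \end{aligned}$$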

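where \(T_{ij}\) is the j-th system lifetime of System i and \(\Delta _{i} = \sum _{k=1}^{n_{i}} (a_{ik}/k)\). Ng et al. (2012) showed that the MME \({\tilde{\theta }}_{i}\) is an unbiased estimator and they derived the variance of \(\tilde{\theta }_{i}\) as

$$\begin{aligned} {\textit{Var}}({\tilde{\theta }}_{i}) = \frac{\theta _{i}^{2} \left( 2\Delta _{i}^{(2)} - \Delta _{i}^{2}\right) }{M_{i} \Delta _{i}^{2}}, \end{aligned}$$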

where \(\Delta _i^{(2)} = \sum _{k=1}^{n_{i}} (a_{ik}/k^2).\) For other properties of the MME \(\tilde{\theta }_{i}\) and its exact distribution, one can refer to Ng et al. (2012) and Zhang et al. (2015). Under the assumption of exponentially distributed component lifetimes, testing the hypotheses of homogeneity in Eq. (4) is equivalent to testing

$$\begin{aligned} H_{0}^{*}: \theta _{1} = \theta _{2} \quad \text {versus}\quad H_{1}^{*}: \theta _{1} \ne \theta _{2}. \end{aligned}$$(7)

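To test the hypotheses in Eq. (7), Zhang et al. (2015) developed different test procedures based on the MME \({\tilde{\theta }}_{i}\), \(i = 1,2\), and they recommend the test using the logarithm transformation of \(R = \tilde{\theta }_1/\tilde{\theta }_2\) based on the power performance in a simulation study. Therefore, we consider the test statistic

$$\begin{aligned} Z_{L} = \frac{\ln {\tilde{\theta }}_{1} - \ln {\tilde{\theta }}_{2}}{\sqrt{{\textit{Var}}(\ln {\tilde{\theta }}_{1}) + {\textit{Var}}(\ln {\tilde{\theta }}_{2})}}, \end{aligned}$$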

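where \({\textit{Var}}(\ln \tilde{\theta }_i)\) can be approximated by the delta method as (see, for example, Section C.2. of Meeker et al. 2022)

$$\begin{aligned} {\textit{Var}}(\ln {\tilde{\theta }}_{i}) \approx \frac{{\textit{Var}}({\tilde{\theta }}_{i})}{\theta _{i}^{2}} = \frac{2\Delta _{i}^{(2)} - \Delta _{i}^{2}}{M_{i} \Delta _{i}^{2}}, \end{aligned}$$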

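for \(i=1,2\). Note that the approximated \({\textit{Var}}(\ln {{\tilde{\theta }}})\) can also be obtained using the delta method for approximating moments described in Theorem 5.3.1 and Corollary 5.3.2 of Bickel and Doksum (2001) by considering the system lifetime \(T_{ij}\) with PDF

$$\begin{aligned} f_{T_{i}}(t) = \sum _{k=1}^{n_{i}} a_{ik} \frac{k}{\theta _{i}} \exp \left( -\frac{k t}{\theta _{i}}\right) , \quad \text {for}\quad t > 0, \end{aligned}$$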

and the function of the sample mean \({{{\bar{T}}}}_{i} = \frac{1}{M_{i}} \sum _{j=1}^{M_{i}} T_{ij}\) as

The details of the approximation based on the results in Bickel and Doksum (2001) are provided in the Supplementary Materials.

Under the null hypothesis \(H_{0}^{*}\) in Eq. (7), the test statistic \(Z_{L}\) asymptotically follows the standard normal distribution. Therefore, the p-value of the asymptotic test based on the test statistic \(Z_L\) can be calculated as \(2(1-\Phi (|Z_L|))\), where \(\Phi (\cdot )\) is the CDF of the standard normal distribution.
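The asymptotic test of this subsection can be sketched in a few lines. The sketch assumes the delta-method approximation \({\textit{Var}}(\ln {\tilde{\theta }}_{i}) \approx (2\Delta _{i}^{(2)} - \Delta _{i}^{2})/(M_{i}\Delta _{i}^{2})\), which follows from the first two moments of an exponential-component system lifetime written through the minimal signature; the function names are illustrative and not taken from Zhang et al. (2015).

```python
import math


def delta_sums(a):
    """Return (Delta, Delta^(2)) = (sum a_k/k, sum a_k/k^2) for minimal signature a."""
    d1 = sum(a_k / k for k, a_k in enumerate(a, start=1))
    d2 = sum(a_k / k ** 2 for k, a_k in enumerate(a, start=1))
    return d1, d2


def mme(times, a):
    """Method of moments estimate of the exponential scale parameter."""
    d1, _ = delta_sums(a)
    return sum(times) / (len(times) * d1)


def var_log_mme(m, a):
    """Delta-method approximation of Var(ln theta_tilde) for m system lifetimes."""
    d1, d2 = delta_sums(a)
    return (2 * d2 - d1 ** 2) / (m * d1 ** 2)


def z_l_test(t1, a1, t2, a2):
    """Return (Z_L, two-sided p-value) for H0: theta_1 = theta_2."""
    diff = math.log(mme(t1, a1)) - math.log(mme(t2, a2))
    z = diff / math.sqrt(var_log_mme(len(t1), a1) + var_log_mme(len(t2), a2))
    p_value = math.erfc(abs(z) / math.sqrt(2))   # equals 2 * (1 - Phi(|z|))
    return z, p_value
```

For a 3-component series system, `mme([1.0, 2.0, 3.0], (0, 0, 1))` returns three times the sample mean, since the system lifetime is then exponential with scale \(\theta /3\).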

2.1.2 Parametric likelihood ratio test

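Suppose that the component lifetime distributions for the components in System 1 and System 2 follow the same parametric family of distributions with PDF \(f_{X_{i}}(t; {\varvec{\theta }}_{i})\), \(i =1, 2\), with parameter vectors \({\varvec{\theta }}_{1}\) and \({\varvec{\theta }}_{2}\). Then, testing the hypotheses of homogeneity in Eq. (4) is equivalent to testing

$$\begin{aligned} H_{0}^{**}: {\varvec{\theta }}_{1} = {\varvec{\theta }}_{2} \quad \text {versus}\quad H_{1}^{**}: {\varvec{\theta }}_{1} \ne {\varvec{\theta }}_{2}. \end{aligned}$$(9)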

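From Eq. (3), the likelihood function based on System i lifetime data \(\varvec{T}_i=(T_{i1}, T_{i2}, \ldots , T_{iM_i})\), \(i = 1, 2\), is

$$\begin{aligned} L_{i}({\varvec{\theta }}_{i} \mid \varvec{T}_{i}) = \prod _{j=1}^{M_{i}} f_{X_{i}}(T_{ij}; {\varvec{\theta }}_{i}) \sum _{k=1}^{n_{i}} k\, a_{ik} \left[ {\bar{F}}_{X_{i}}(T_{ij}; {\varvec{\theta }}_{i})\right] ^{k-1}. \end{aligned}$$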

The MLE of \({\varvec{\theta }}_{i}\) based on \(\varvec{T}_i=(T_{i1}, T_{i2}, \ldots , T_{iM_i})\) alone, denoted as \({\varvec{{\hat{\theta }}}}_{i}\), can be obtained by maximizing \(L_{i}({\varvec{\theta }}_i \mid \varvec{T}_i)\) with respect to \({\varvec{\theta }}_{i}\).

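Under the null hypothesis \(H_{0}^{**}: {\varvec{\theta }}_{1} = {\varvec{\theta }}_{2} = {\varvec{\theta }}\) in Eq. (9), the likelihood function based on \((\varvec{T}_1, \varvec{T}_2)\) can be expressed as

$$\begin{aligned} L_{12}({\varvec{\theta }}\mid \varvec{T}_{1}, \varvec{T}_{2}) = L_{1}({\varvec{\theta }}\mid \varvec{T}_{1})\, L_{2}({\varvec{\theta }}\mid \varvec{T}_{2}), \end{aligned}$$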

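and the MLE of \({\varvec{\theta }}\) based on the pooled data \((\varvec{T}_1, \varvec{T}_2)\), denoted as \({\varvec{{\hat{\theta }}}}\), can be obtained by maximizing \(L_{12}({\varvec{\theta }}\mid \varvec{T}_1, \varvec{T}_2)\) with respect to \({\varvec{\theta }}\). The parametric likelihood ratio statistic is defined as

$$\begin{aligned} \lambda _{LR} = -2 \ln \left[ \frac{L_{12}({\varvec{{\hat{\theta }}}} \mid \varvec{T}_{1}, \varvec{T}_{2})}{L_{1}({\varvec{{\hat{\theta }}}}_{1} \mid \varvec{T}_{1})\, L_{2}({\varvec{{\hat{\theta }}}}_{2} \mid \varvec{T}_{2})}\right] . \end{aligned}$$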

By Wilks' theorem (Wilks 1938), the asymptotic distribution of the likelihood ratio statistic \(\lambda _{LR}\) under the null hypothesis is chi-square with \(\nu\) degrees of freedom, where \(\nu\) is the difference between the dimensions of \(({\varvec{\theta }}_{1}, {\varvec{\theta }}_{2})\) and \({\varvec{\theta }}\). The p-value of the parametric likelihood ratio test based on the test statistic \(\lambda _{LR}\) can be approximated as \(\Pr ( \chi ^{2}_{\nu } > \lambda _{LR})\), where \(\chi ^{2}_{\nu }\) is a random variable following the chi-square distribution with \(\nu\) degrees of freedom.

2.2 Nonparametric Mann–Whitney U Statistic

To test the hypotheses in Eq. (4), Zhang et al. (2015) proposed a nonparametric test procedure based on the Mann–Whitney U test, also called the Mann–Whitney-Wilcoxon test (Mann and Whitney 1947; Wilcoxon 1992), which is a nonparametric test for comparing two component lifetime distributions. Based on the system lifetime data from System 1 and System 2, we define the indicator function between the two systems as

for \(j_1=1,2,\ldots ,M_1\) and \(j_2=1,2,\ldots ,M_2\). The U statistic for testing the homogeneity of component lifetime distributions is defined as

where \(R_j\) is the number of ordered observations from sample \({\varvec{T}}_1\) which are in between the \((j-1)\)-th and j-th order statistics from sample \(\varvec{T}_2\). The support of the statistic U is \(\{0, 1, \ldots , M_{1}M_{2}\}\) and the null hypothesis in Eq. (4) is rejected if U is too large or too small. Here, we reject the null hypothesis in Eq. (4) at \(\alpha\) level if \(U \le c_{U1}\) or \(U \ge c_{U2}\), where \(c_{U1}\) and \(c_{U2}\) are critical values and can be determined by \(\Pr (U \le c_{U1}\mid H_{0}) \le \alpha /2\) and \(\Pr (U \ge c_{U2}\mid H_{0}) \le \alpha /2\), respectively. The exact p-value can be calculated as presented in Zhang et al. (2015). Since the computation of the exact p-value requires the numerical evaluation of integration which can be computationally intensive, we consider using the Monte Carlo method to obtain the null distribution of U under different scenarios in this paper. The procedure to get the simulated null distribution of U is described in Sect. 4 below.

3 Proposed test procedures based on empirical likelihood ratio

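In this section, we develop test procedures based on the empirical likelihood ratio to test the hypotheses in Eq. (4) nonparametrically. From Hall et al. (2015), the empirical likelihood function for System i based on \(\varvec{T}_{i}\) only (\(i = 1, 2\)) can be expressed as

$$\begin{aligned} L_{T_{i}}(t) = \left[ h_{i}(p_{i}(t))\right] ^{Y_{i}(t)} \left[ 1 - h_{i}(p_{i}(t))\right] ^{M_{i} - Y_{i}(t)}, \end{aligned}$$(10)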

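where \(Y_i(t)=\sum _{j=1}^{M_i}I_{(t, \infty )}(T_{ij}), \; i = 1, 2\), and \(I_{(t, \infty )}(T_{ij})\) is the indicator function defined as

$$\begin{aligned} I_{(t, \infty )}(T_{ij}) = {\left\{ \begin{array}{ll} 1, &{} \text {if } T_{ij} > t, \\ 0, &{} \text {otherwise}. \end{array}\right. } \end{aligned}$$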

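The empirical likelihood function \(L_{T_{i}}(t)\) in Eq. (10) is maximized with respect to \(p_{i}(t) \in (0, 1)\) at

$$\begin{aligned} {\hat{p}}_{i}(t) = h_{i}^{-1}\left( 1 - {\hat{F}}_{T_{i}}(t)\right) , \end{aligned}$$(11)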

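where \({{\hat{F}}}_{T_{i}}(t)\) is the empirical CDF of \(F_{T_{i}}(t)\) defined as

$$\begin{aligned} {\hat{F}}_{T_{i}}(t) = 1 - \frac{1}{M_{i}} \sum _{j=1}^{M_{i}} I_{(t, \infty )}(T_{ij}) = 1 - \frac{Y_{i}(t)}{M_{i}}. \end{aligned}$$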

That is, based on \({\varvec{T}}_{i}\),

Under the null hypothesis that \(F_{X_{1}}(t) = F_{X_{2}}(t)\) (or equivalently \(p_{1}(t) = p_{2}(t) = p(t)\)), we can pool the samples from System 1 and System 2 into an ordered sample of size \(M = M_{1} + M_{2}\), denoted as \(\varvec{T}^{*} = (T^*_{(1)}< T^*_{(2)}< \cdots < T^*_{(M)})\). Following Hall et al. (2015), the empirical likelihood function based on the pooled data is

$$\begin{aligned} L^{*}_{T}(t) = \prod _{i=1}^{2} \left[ h_{i}(p(t))\right] ^{Y_{i}(t)} \left[ 1 - h_{i}(p(t))\right] ^{M_{i} - Y_{i}(t)}. \end{aligned}$$(13)

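The nonparametric MLE of p(t), denoted as \({{\hat{p}}}(t)\), can be obtained by maximizing the pooled empirical likelihood in Eq. (13) with respect to \(p(t) \in (0, 1)\), i.e.,

$$\begin{aligned} {\hat{p}}(t) = \mathop {\arg \max }\limits _{p \in (0, 1)} \prod _{i=1}^{2} \left[ h_{i}(p)\right] ^{Y_{i}(t)} \left[ 1 - h_{i}(p)\right] ^{M_{i} - Y_{i}(t)}. \end{aligned}$$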

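Because the inverse of the function h(p) may not be available in explicit form, a closed-form solution for \({{\hat{p}}}(t)\) may not exist, and the maximization can be carried out by numerical methods such as the Newton–Raphson method. The nonparametric MLE \({{\hat{p}}}(t)\) can be written as

$$\begin{aligned} {\hat{p}}(t) = {\left\{ \begin{array}{ll} 1, &{} t< T^*_{(1)}, \\ q_{j}, &{} T^*_{(j)} \le t < T^*_{(j+1)}, \; j = 1, 2, \ldots , M-1, \\ 0, &{} t \ge T^*_{(M)}, \end{array}\right. } \end{aligned}$$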

where \(q_j\) maximizes the likelihood function in Eq. (13) for t in \([T^*_{(j)}, T^*_{(j+1)})\), \(j = 1, 2, \ldots , M-1\). For the initial values of \(q_{j}\) (denoted as \(q_{j}^{(0)}\)) of the iterative maximization algorithm and \(t \in [T^*_{(j)}, T^*_{(j+1)})\), we consider a weighted function of \({{\hat{p}}}_{i}(t)\), \(i = 1, 2\), in Eq. (11)

The empirical likelihood ratio at time t is

Then, we obtain the log empirical likelihood ratio as

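Based on the log empirical likelihood ratio, we define

$$\begin{aligned} G_{t}^{2} = 2\sum _{i=1}^{2} \left\{ Y_{i}(t) \ln \left[ \frac{h_{i}({\hat{p}}_{i}(t))}{h_{i}({\hat{p}}(t))}\right] + (M_{i} - Y_{i}(t)) \ln \left[ \frac{1 - h_{i}({\hat{p}}_{i}(t))}{1 - h_{i}({\hat{p}}(t))}\right] \right\} , \end{aligned}$$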

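which is a function of time t. We consider two functions based on \(G_{t}^{2}\):

$$\begin{aligned} \sup _{t \in (0, \infty )} \left[ G_{t}^{2}\, w(t)\right] \end{aligned}$$(14)

and

$$\begin{aligned} \int _{0}^{\infty } G_{t}^{2}\, d w(t), \end{aligned}$$(15)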

where w(t) is a weight function. Following Zhang (2006), we propose two test statistics for testing the hypotheses in Eq. (4) with different weight functions:

1. Taking \(w(t)=1\) in Eq. (14), we obtain the test statistic

$$\begin{aligned} Z_K&\overset{\Delta }{=}\ \sup _{t\in (0,\infty )}[G^2_t]\\&= \max _{1\le k\le M} \left\{ 2\sum _{i=1}^2 Y_i(T^*_{(k)})\ln \left[ \frac{h_i({{\hat{p}}}_i(T^*_{(k)}))}{h_i({{\hat{p}}}(T^*_{(k)}))} \right] \right. \\&\quad \left. +(M_i-Y_i(T^*_{(k)})) \ln \left[ \frac{1-h_i({{\hat{p}}}_i(T^*_{(k)}))}{1-h_i({{\hat{p}}}(T^*_{(k)}))} \right] \right\} . \end{aligned}$$

2. We denote the maximum likelihood estimator of the component CDF for the pooled data as \(\hat{F}_{X}(t)\) and define \({{\hat{F}}}_X(T^*_{(0)})=0\). Then, \(\hat{F}_X(t)=1-\hat{p}(t)\). In Eq. (15), we take the weight function

$$\begin{aligned} d w(t) =\frac{1}{\hat{F}_X(t)(1-\hat{F}_X(t))}d\hat{F}_X(t). \end{aligned}$$

Then, the test statistic can be written as

$$\begin{aligned} Z_A&\overset{\Delta }{=} \int _0^{\infty }G^2_t\frac{1}{\hat{F}_X(t)(1-\hat{F}_X(t))}d\hat{F}_X(t)\\&= 2\sum _{k=1}^M\frac{\hat{p}(T^*_{(k)})-\hat{p}(T^*_{(k-1)})}{\hat{p}(T^*_{(k)})(1-\hat{p}({T^*_{(k)}}))} \\&\quad \times \left\{ \sum _{i=1}^2\left[ Y_i(T^*_{(k)})\ln \frac{h_i(\hat{p}_i(T^*_{(k)}))}{h_i(\hat{p}(T^*_{(k)}))} +(M_i-Y_i(T^*_{(k)}))\ln \frac{1-h_i(\hat{p}_i(T^*_{(k)}))}{1-h_i(\hat{p}(T^*_{(k)}))}\right] \right\} . \end{aligned}$$

Large values of \(Z_{K}\) and \(Z_A\) support the alternative hypothesis in Eq. (4), which leads to the rejection of the null hypothesis in Eq. (4).
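A minimal sketch of the computation of \(Z_K\) follows. It assumes that the separate maximizers satisfy \(h_i({\hat{p}}_i(t)) = Y_i(t)/M_i\) (the binomial-type maximum), uses the convention \(0 \ln 0 = 0\), and replaces the Newton–Raphson step mentioned in the text with a plain grid search followed by a ternary-search refinement. All function names are illustrative.

```python
import math


def h(a, p):
    """Structure polynomial h(p) = sum_k a_k p^k (a = minimal signature)."""
    return sum(a_k * p ** k for k, a_k in enumerate(a, start=1))


def binom_loglik(y, m, q):
    """y*ln(q) + (m - y)*ln(1 - q), using the convention 0*ln(0) = 0."""
    total = 0.0
    for count, prob in ((y, q), (m - y, 1.0 - q)):
        if count > 0:
            if prob <= 0.0:
                return -math.inf
            total += count * math.log(prob)
    return total


def pooled_max_loglik(ys, ms, sigs, grid=2000, iters=60):
    """Maximize the pooled log-likelihood over a common p in [0, 1]."""
    def loglik(p):
        return sum(binom_loglik(y, m, min(max(h(a, p), 0.0), 1.0))
                   for y, m, a in zip(ys, ms, sigs))

    best = max(range(grid + 1), key=lambda j: loglik(j / grid))
    lo, hi = max(best - 1, 0) / grid, min(best + 1, grid) / grid
    for _ in range(iters):                     # ternary search inside the bracket
        left, right = lo + (hi - lo) / 3, hi - (hi - lo) / 3
        if loglik(left) < loglik(right):
            lo = left
        else:
            hi = right
    return max(loglik(best / grid), loglik((lo + hi) / 2))


def g_squared(t, samples, sigs):
    """G_t^2: twice the gap between separate and pooled maximized log-likelihoods."""
    ms = [len(s) for s in samples]
    ys = [sum(x > t for x in s) for s in samples]          # Y_i(t)
    separate = sum(binom_loglik(y, m, y / m) for y, m in zip(ys, ms))
    return max(0.0, 2.0 * (separate - pooled_max_loglik(ys, ms, sigs)))


def z_k(samples, sigs):
    """Z_K: maximum of G_t^2 over the pooled ordered system lifetimes."""
    pooled = sorted(x for s in samples for x in s)
    return max(g_squared(t, samples, sigs) for t in pooled)
```

Here `samples` is a pair of lists of system lifetimes and `sigs` the corresponding pair of minimal signatures, for example `z_k([t1, t2], [(4, -6, 4, -1), (0, 0, 1)])`. The grid search is a deliberately simple choice that avoids derivative computations; a Newton-type update could replace it when speed matters.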

4 Null distributions of \(Z_{K}\), \(Z_{A}\), and U based on Monte Carlo method

Since the distributions of \(Z_{K}\) and \(Z_{A}\) are intractable theoretically in general, even under the null hypothesis, we rely on the Monte Carlo method to obtain the null distributions of \(Z_{K}\) and \(Z_{A}\). We simulate the lifetimes of \(M_{1}\) systems for System 1 and the lifetimes of the \(M_{2}\) systems for System 2 from any component lifetime distribution \(F_{X}\) with \(F_{X_{1}} = F_{X_{2}} = F_{X}\) for given \(n_{1}\), \(n_{2}\), \(M_{1}\), \(M_{2}\), and system signatures \({\textbf{s}}_{1}\) and \({\textbf{s}}_{2}\). The statistics \(Z_{K}\) and \(Z_{A}\) are computed from the simulated lifetimes.

In practice, we can simulate the null distributions of \(Z_{K}\), \(Z_{A}\), and U by choosing \(F_X\) as a distribution that is easy to simulate (e.g., standard exponential distribution, \({\textit{Exp}}(1)\)) with a large number of Monte Carlo simulations (say, 1,000,000 times). For example, the simulated 90-th, 95-th, and 99-th percentage points for \(Z_{K}\) and \(Z_{A}\) with \({\textbf{s}}_1 = (0, 0, 0, 1)\) (i.e., \(n_{1} = 4\), with minimal signature \({\varvec{a}}_{1} = (4, -6, 4, -1)\)) and \({\textbf{s}}_2 = (1, 0, 0)\) (i.e., \(n_{2} = 3\), with minimal signature \({\varvec{a}}_{2} = (0, 0, 1)\)) based on 1,000,000 simulations with \(F_{X} \sim {\textit{Exp}}(1)\) are presented in Table 1.
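The Monte Carlo step can be sketched as follows for the U statistic. The sketch draws each system lifetime as the K-th order statistic of n i.i.d. \({\textit{Exp}}(1)\) component lifetimes, with K drawn from the signature. It also assumes that U counts the pairs with \(T_{1j_1} < T_{2j_2}\); this direction is inferred from the description of \(R_j\) in Sect. 2.2 and is not quoted from the paper. The replication count is kept small here purely for speed.

```python
import random


def system_lifetime(signature, rng):
    """Draw one system lifetime with Exp(1) components.

    The system fails at the K-th component failure, where K follows the
    signature and is independent of the ordered component lifetimes.
    """
    n = len(signature)
    k = rng.choices(range(1, n + 1), weights=signature)[0]
    return sorted(rng.expovariate(1.0) for _ in range(n))[k - 1]


def mann_whitney_u(t1, t2):
    """Number of pairs with T_1j < T_2j' (assumed direction of the indicator)."""
    return sum(x < y for x in t1 for y in t2)


def simulate_null_u(sig1, sig2, m1, m2, reps, seed=0):
    """Simulate U under H0 (common Exp(1) component distribution)."""
    rng = random.Random(seed)
    draws = []
    for _ in range(reps):
        t1 = [system_lifetime(sig1, rng) for _ in range(m1)]
        t2 = [system_lifetime(sig2, rng) for _ in range(m2)]
        draws.append(mann_whitney_u(t1, t2))
    return draws


def percentile(draws, q):
    """Empirical q-th percentage point (0 < q < 100) of simulated draws."""
    ordered = sorted(draws)
    index = min(len(ordered) - 1, int(q / 100 * len(ordered)))
    return ordered[index]


null_u = simulate_null_u((0, 0, 0, 1), (1, 0, 0), m1=10, m2=10, reps=4000, seed=1)
print(sum(u == 0 for u in null_u) / len(null_u))   # simulated Pr(U <= 0 | H0)
print(percentile(null_u, 97.5))                    # simulated upper 2.5% point
```

The same loop yields the null distributions of \(Z_K\) and \(Z_A\) once `mann_whitney_u` is replaced by a function computing those statistics from the two simulated samples.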

For the null distribution of the Mann–Whitney U statistic, since U is discrete and the number of possible values of U can be small when the sample sizes \(M_{1}\) and \(M_{2}\) are small, the actual probability that U is less than or equal to the critical value might be much larger than the nominal significance level. Therefore, instead of using the exact distribution presented in Zhang et al. (2015), we propose to use a Monte Carlo simulation method to obtain the approximate percentage points of the null distribution and use a randomization procedure to obtain a test procedure based on U with the required significance level. To illustrate the procedure for obtaining the critical values, we consider the following example in which System 1 is a 4-component parallel system, and System 2 is a 3-component series system with system signatures \({\textbf{s}}_1=(0,0,0,1)\) and \({\textbf{s}}_2=(1,0,0)\), respectively. In Table 2, we present the critical values of the Mann–Whitney statistic U and the corresponding simulated probability that U is more extreme than or equal to the critical value for different significance levels and sample sizes. The values in Table 2 are generated based on 1,000,000 simulations. For illustrative purposes, we consider the case of \(M_1=M_2=10\) to demonstrate how to conduct the hypothesis test at the 5% significance level.

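From Table 2, for \(\alpha = 0.05\), the critical values are \(c_{U1} = 0\) and \(c_{U2} = 12\), and we have the following probabilities:

$$\begin{aligned} \Pr (U \le 0 \mid H_{0}) = 0.3254 \quad \text {and}\quad \Pr (U \ge 12 \mid H_{0}) = 0.0252. \end{aligned}$$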

If we use the critical values \(c_{U1} = 0\) and \(c_{U2} = 12\) directly, the significance level will be \(0.3254 + 0.0252 = 0.3506\), which is much higher than the 5% level. Therefore, we use the simulated null distribution with a randomization procedure to control the significance level of the test based on U. For example, to control \(\Pr ({\text{ reject }}\;H_{0}\) when U is too small \(\mid H_{0}) \le 0.025\), we do not reject \(H_{0}\) if the observed value of U is greater than 0 and less than 12. If the observed value of U is 0, we reject \(H_{0}\) with probability \(0.025/0.3254 \approx 0.0768\).

Similarly, to control \(\Pr ({\text{ reject }}\;H_{0}\) when U is too large \(\mid H_{0}) \le 0.025\), we do not reject \(H_{0}\) if the observed value of U is less than 12 and reject \(H_{0}\) if the observed value of U is larger than 12. If the observed value of U is 12, we reject \(H_{0}\) with probability \((0.025-\Pr (U>12))/\Pr (U=12) = (0.025 - (\Pr (U\ge 12)-\Pr (U=12)))/\Pr (U=12)=0.9737\).

Obviously, when \(\Pr (U \le c_{U1}) = 0.025\) and \(\Pr (U \ge c_{U2}) = 0.025\), no randomization procedure is needed. Following this procedure, we can control the significance level of the two-sided U test under \(H_0\) for \(\alpha = 0.01, 0.05, 0.1\) using the critical values \(c_{U1}\) and \(c_{U2}\) and the corresponding values of \(\Pr (U \le c_{U1})\) and \(\Pr (U \ge c_{U2})\) when these probabilities are not equal to \(\alpha /2\).
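The randomization rule can be written as a short routine that takes a null probability mass function of U and returns, for each tail, the critical value and the rejection probability at that value. In the usage example, the masses 0.3254 at \(U = 0\) and 0.0252 on \(U \ge 12\) come from the text, whereas the split of the upper tail into 0.0076 and 0.0176 and the remaining lump at \(U = 6\) are illustrative assumptions chosen only so that the example reproduces the stated probabilities. Function names are hypothetical.

```python
import random


def lower_tail_rule(pmf, level):
    """Return (c, gamma): reject if U < c; if U == c, reject with probability gamma."""
    cum = 0.0
    for u in sorted(pmf):
        if cum + pmf[u] > level:
            return u, (level - cum) / pmf[u]
        cum += pmf[u]
    raise ValueError("level must be smaller than the total probability")


def upper_tail_rule(pmf, level):
    """Return (c, gamma): reject if U > c; if U == c, reject with probability gamma."""
    cum = 0.0
    for u in sorted(pmf, reverse=True):
        if cum + pmf[u] > level:
            return u, (level - cum) / pmf[u]
        cum += pmf[u]
    raise ValueError("level must be smaller than the total probability")


def randomized_reject(u_obs, pmf, alpha, rng=random):
    """Two-sided randomized test of size alpha based on the null pmf of U."""
    c1, gamma1 = lower_tail_rule(pmf, alpha / 2)
    c2, gamma2 = upper_tail_rule(pmf, alpha / 2)
    if u_obs < c1 or u_obs > c2:
        return True
    if u_obs == c1:
        return rng.random() < gamma1
    if u_obs == c2:
        return rng.random() < gamma2
    return False


# Toy null pmf (partly assumed, see the note above).
toy_pmf = {0: 0.3254, 6: 0.6494, 12: 0.0076, 13: 0.0176}
print(lower_tail_rule(toy_pmf, 0.025))   # critical value 0, probability about 0.0768
print(upper_tail_rule(toy_pmf, 0.025))   # critical value 12, probability about 0.9737
```

In practice the pmf would be the relative frequencies of U obtained from the Monte Carlo simulation of Sect. 4.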

5 Illustrative example

To illustrate the test procedures developed in this paper, we analyze a data set based on the example presented in Yang et al. (2016) and Frenkel and Khvatskin (2006). The example given in Yang et al. (2016) and Frenkel and Khvatskin (2006) described the phosphor acid filter system as a real-life prototype of a consecutive 2-out-of-n system. For a consecutive 2-out-of-n system, the system fails when any two adjacent components fail. For illustrative purposes, we consider that System 1 is a consecutive 2-out-of-8 system with system signature \({\textbf{s}}_1=(0,1/4,11/28,2/7,1/14,0,0,0)\) (minimal signature \({\varvec{a}}_{1} = (0, 7, -6, 0, 0, 0, 0, 0)\)) and component lifetimes follow a Birnbaum–Saunders distribution (Birnbaum and Saunders 1969) with CDF

$$\begin{aligned} F_{X}(t) = \Phi \left[ \frac{1}{a}\left( \sqrt{\frac{t}{b}} - \sqrt{\frac{b}{t}}\right) \right] , \quad \text {for}\quad t > 0, \end{aligned}$$

where the shape parameter is \(a = 1\) and the scale parameter is \(b = 1\), and System 2 is a 4-component system with system signature \({\textbf{s}}_2=(1/4,1/4,1/2,0)\) (minimal signature \({\varvec{a}}_{2} = (0, 3, -3, 1)\)) and component lifetimes follow a \({\textit{Weibull}}(3, 2)\) distribution. The system lifetime data for System 1 and System 2 with sample sizes \(M_{1} = M_{2} = 20\) are presented in Table 3. A hypothesis test is conducted to determine if the components from the two different systems follow the same lifetime distribution.

The nonparametric MLE of the SF of the component lifetime distribution \(F_{X_{1}}\) based on \(\varvec{T}_{1}\) from Eq. (12) (denoted as \({\hat{{{\bar{F}}}}}_{X_{1}}\)), the nonparametric MLE of the SF of the component lifetime distribution \(F_{X_{2}}\) based on \(\varvec{T}_{2}\) from Eq. (12) (denoted as \({\hat{{{\bar{F}}}}}_{X_{2}}\)), the average of \({\hat{\bar{F}}}_{X_{1}}\) and \({\hat{{{\bar{F}}}}}_{X_{2}}\), and the nonparametric MLE of the component lifetime distribution based on the pooled data (\(\varvec{T}_{1}\), \(\varvec{T}_{2}\)) under \(H_{0}: F_{X_{1}} = F_{X_{2}}\) by maximizing Eq. (13), are plotted in Fig. 1.

Fig. 1 The nonparametric MLE of the SF of the component lifetime distribution \(F_{X_{1}}\) based on \(\varvec{T}_{1}\) from Eq. (12) (denoted as \({\hat{{{\bar{F}}}}}_{X_{1}}\)), the nonparametric MLE of the SF of the component lifetime distribution \(F_{X_{2}}\) based on \(\varvec{T}_{2}\) from Eq. (12) (denoted as \({\hat{{{\bar{F}}}}}_{X_{2}}\)), the average of \({\hat{\bar{F}}}_{X_{1}}\) and \({\hat{{{\bar{F}}}}}_{X_{2}}\), and the nonparametric MLE of the component lifetime distribution based on the pooled data (\(\varvec{T}_{1}\), \(\varvec{T}_{2}\)) under \(H_{0}: F_{X_{1}} = F_{X_{2}}\) by maximizing Eq. (13)
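For a single system type, the nonparametric estimate of the component SF at a time t can be sketched by inverting the structure polynomial numerically. The sketch assumes that the estimator is \(h_i^{-1}(Y_i(t)/M_i)\), i.e., the inverse of \(h_i\) applied to the empirical system SF, and uses bisection, which is valid because \(h_i\) is strictly increasing on (0, 1). The function names are illustrative.

```python
def h(a, p):
    """Structure polynomial h(p) = sum_k a_k p^k (a = minimal signature)."""
    return sum(a_k * p ** k for k, a_k in enumerate(a, start=1))


def h_inverse(a, q, tol=1e-12):
    """Solve h(p) = q for p in [0, 1] by bisection (h is increasing)."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if h(a, mid) < q:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def component_sf_estimate(times, a, t):
    """Estimate the component SF at t from system lifetimes of one system type."""
    surviving = sum(x > t for x in times)      # Y_i(t)
    return h_inverse(a, surviving / len(times))


# Four system lifetimes from a 3-component series system; half survive t = 2.
print(component_sf_estimate([1.0, 2.0, 3.0, 4.0], (0, 0, 1), 2.0))
```

Evaluating `component_sf_estimate` on a grid of t values gives a step function of the kind plotted for each system in Fig. 1.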

To test the hypotheses in Eq. (4) using the nonparametric Mann–Whitney U test and the two proposed empirical likelihood ratio tests at the 1% level of significance for the data set in Table 3, we obtain the critical values based on the procedures described in Sect. 4 with 1,000,000 simulations. The critical values for the empirical likelihood ratio tests based on \(Z_{K}\) and \(Z_{A}\) are 12.6394 and 18.4978, respectively, and the 0.5th and 99.5th percentiles of the Mann–Whitney U statistic are 172 and 314, respectively.

For the data presented in Table 3, we obtain the test statistics \(Z_K=13.5608\), \(Z_A=22.0917\), and \(U=334\). All of these test statistics are larger than their corresponding critical values at the 1% level of significance. Therefore, we reject the null hypothesis in Eq. (4) at the 1% level with a p-value of \(2 \times 10^{-6}\) based on the test statistics \(Z_A\) and \(Z_K\) and a p-value of 0.01 based on the test statistic U. These results agree with our expectation since the component lifetimes in System 1 are simulated from a Birnbaum–Saunders distribution, and the component lifetimes in System 2 are simulated from a Weibull distribution.

6 Monte Carlo simulation studies

In this section, Monte Carlo simulation studies are used to evaluate the performance of the parametric and nonparametric test procedures described in Sects. 2 and 3 for testing the hypotheses in Eq. (4). In these simulation studies, we simulate the system lifetimes for systems with component lifetimes following the statistical distributions listed below:

1. Exponential distribution: The exponential distribution with mean \(\theta\), denoted as \({\textit{Exp}}(\theta )\), has the PDF in Eq. (5).

2. Gamma distribution: The gamma distribution with shape parameter \(\alpha > 0\) and rate parameter \(\beta > 0\), denoted as \({\textit{Gamma}}(\alpha ,\beta )\), has PDF

$$\begin{aligned} f_{X}(t)=\frac{\beta ^{\alpha }}{\Gamma (\alpha )}t^{\alpha -1}e^{-\beta t}, \quad \text {for}\quad t\ge 0, \end{aligned} \tag{16}$$

where \(\Gamma (\alpha ) = \int _{0}^{\infty } x^{\alpha -1} \exp (-x) dx\) is the gamma function.

3. Weibull distribution: The Weibull distribution with scale parameter \(\lambda > 0\) and shape parameter \(\gamma > 0\), denoted as \({\textit{Weibull}}(\lambda ,\gamma )\), has PDF

$$\begin{aligned} f_{X}(t)=\frac{\gamma }{\lambda }\left( \frac{t}{\lambda }\right) ^{\gamma -1} \exp {\left[ -\left( \frac{t}{\lambda }\right) ^{\gamma }\right] }, \quad \text {for}\quad t>0. \end{aligned} \tag{17}$$

4. Lognormal distribution: The lognormal distribution with scale parameter \(\exp (\mu ) > 0\) and shape parameter \(\sigma > 0\), denoted as \({\textit{Lognormal}}(\mu ,\sigma ^2)\), has PDF

$$\begin{aligned} f_{X}(t) = \frac{1}{t\sigma \sqrt{2\pi }}\exp \left[ -\frac{(\ln t -\mu )^2}{2\sigma ^2}\right] , \quad \text {for}\quad t>0. \end{aligned} \tag{18}$$

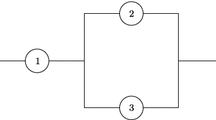

In the first simulation study, we conduct the parametric tests to examine how the Type-I error rates vary when the underlying distributions are misspecified. We apply the asymptotic parametric test presented in Sect. 2.1.1, which is developed under the assumption of exponentially distributed component lifetimes, to cases in which the component lifetimes follow the different statistical distributions described above. The PDFs of the distributions considered in the first simulation study are plotted in Fig. 2. We consider that System 1 is a 4-component parallel system and System 2 is a 3-component series system with system signatures \({\textbf{s}}_1 = (0, 0, 0, 1)\) and \({\textbf{s}}_2 = (1, 0, 0)\), respectively, with different sample sizes \(M_{1} = M_{2} = 10\), 15, 20, 30, and 50. The simulated rejection rates of the asymptotic parametric test at the 5% level of significance, based on 10,000 simulations under the null hypothesis that the components in System 1 and System 2 have the same distribution, are presented in Table 4.
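To make the data-generating step concrete, the following is a minimal sketch of how system lifetimes could be simulated from a known signature with independent and identically distributed components. It is not the authors' R program. It relies on the standard signature representation: the system lifetime has the same distribution as the \(i\)-th ordered component lifetime selected with probability \(s_i\). The function name `simulate_system_lifetime`, the seed, and the sampler dictionary are illustrative assumptions.

```python
import random


def simulate_system_lifetime(signature, draw_component, rng):
    """Draw one system lifetime for a system with i.i.d. component lifetimes.

    signature[i - 1] is the probability that the i-th ordered component
    failure is the one that causes the system to fail.
    """
    n = len(signature)
    ordered = sorted(draw_component(rng) for _ in range(n))
    # Choose which ordered failure brings the system down, with weights s_1..s_n.
    index = rng.choices(range(n), weights=signature, k=1)[0]
    return ordered[index]


# Component samplers from Python's standard library. Note the parametrizations:
# expovariate takes the rate 1/theta; gammavariate takes (shape, scale = 1/rate);
# weibullvariate takes (scale, shape); lognormvariate takes (mu, sigma).
samplers = {
    "Exp(1)": lambda r: r.expovariate(1.0),
    "Gamma(5, 2)": lambda r: r.gammavariate(5, 1 / 2),
    "Weibull(2.5, 5)": lambda r: r.weibullvariate(2.5, 5),
    "Lognormal(0, 1)": lambda r: r.lognormvariate(0, 1),
}

rng = random.Random(2024)      # arbitrary seed, for reproducibility only
s1 = (0, 0, 0, 1)              # 4-component parallel system
s2 = (1, 0, 0)                 # 3-component series system
M1 = M2 = 10                   # number of observed systems of each type

# Under H0 both systems use the same component lifetime distribution.
T1 = [simulate_system_lifetime(s1, samplers["Exp(1)"], rng) for _ in range(M1)]
T2 = [simulate_system_lifetime(s2, samplers["Exp(1)"], rng) for _ in range(M2)]
```

With \({\textbf{s}}_1 = (0, 0, 0, 1)\) the function always returns the largest of the four component lifetimes, and with \({\textbf{s}}_2 = (1, 0, 0)\) it always returns the smallest of the three, matching the parallel and series structures.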

Table 4 shows that the asymptotic parametric test developed under the exponential assumption inflates the simulated Type-I error rates when the underlying component lifetime distributions deviate from the exponential, such as \({\textit{Gamma}}(5, 2)\), \({\textit{Weibull}}(2.5, 5)\), and \({\textit{Lognormal}}(1, 2)\) (see Fig. 2). On the other hand, the simulated Type-I error rates are close to the nominal level of 5% when the underlying component lifetime distributions are exponential or similar to the exponential distribution, such as \({\textit{Gamma}}(1.1, 1)\), \({\textit{Weibull}}(1, 1.1)\) and \({\textit{Lognormal}}(0, 1)\) (see Fig. 2). These results indicate that those parametric tests for homogeneity of component lifetime distributions may not be appropriate, especially when the underlying distribution is unknown.

In the second simulation study, we evaluate the power performances of the proposed empirical likelihood ratio tests \(Z_{K}\) and \(Z_{A}\), and the Mann–Whitney U test and compare them with the parametric tests by assuming that the underlying distributions agree with the distributions the data are generated from.

For the parametric likelihood ratio test with a two-parameter distribution, we assume that both \(F_{X_{1}}\) and \(F_{X_2}\) are from the same class of distributions and that the two parameters are unknown, but that one of the parameters in \(F_{X_{1}}\) and \(F_{X_2}\) is the same under the alternative hypothesis. For example, in the simulation where the component lifetimes follow the Weibull distribution, so that \(F_{X_{1}}\) and \(F_{X_2}\) are \({\textit{Weibull}}(\lambda _1,\gamma _1)\) and \({\textit{Weibull}}(\lambda _2,\gamma _2)\), respectively, we use the hypotheses

Because the first simulation study shows that the asymptotic parametric test developed under the assumption of exponentially distributed components may not be appropriate for distributions other than the exponential distribution, we only consider this test when the data are generated from an exponential distribution. In this simulation study, we consider the following settings for the system structures of System 1 and System 2 (Navarro et al. 2007):

- [S1]: System 1: 4-component parallel system with system signature \({\textbf{s}}_1 = (0, 0, 0, 1)\) (minimal signature \({\varvec{a}}_{1} = (4, -6, 4, -1)\));

  System 2: 3-component series system with system signature \({\textbf{s}}_2 = (1, 0, 0)\) (minimal signature \({\varvec{a}}_{2} = (0, 0, 1)\)).

- [S2]: System 1: 3-component system with system signature \({\textbf{s}}_1 = (0, 2/3, 1/3)\) (minimal signature \({\varvec{a}}_{1} = (1, 1, -1)\));

  System 2: 4-component system with system signature \({\textbf{s}}_2 = (1/4, 1/4, 1/2, 0)\) (minimal signature \({\varvec{a}}_{2} = (0, 3, -3, 1)\)).

- [S3]: System 1: 3-component system with system signature \({\textbf{s}}_1 = (0, 2/3, 1/3)\) (minimal signature \({\varvec{a}}_{1} = (1, 1, -1)\));

  System 2: 4-component system with system signature \({\textbf{s}}_2 = (0, 1/2, 1/4, 1/4)\) (minimal signature \({\varvec{a}}_{2} = (1, 0, 1, -1)\)).
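The minimal signatures listed in [S1]–[S3] can be recovered from the system signatures. The sketch below assumes independent and identically distributed components, so that the system survival function is \(\sum _{i} s_i P(X_{i:n} > t)\) and the minimal signature collects the coefficients of the powers of the component survival function. The function name `minimal_signature` is illustrative and is not taken from the paper.

```python
from fractions import Fraction
from math import comb


def minimal_signature(signature):
    """Convert a system signature (s_1, ..., s_n) to the minimal signature (a_1, ..., a_n).

    With i.i.d. components and component survival function S(t),
        P(X_{i:n} > t) = sum_{j=0}^{i-1} C(n, j) (1 - S)^j S^(n - j),
    and expanding (1 - S)^j by the binomial theorem gives a polynomial in S.
    The coefficient of S^m in the system survival function is a_m.
    """
    n = len(signature)
    coeffs = [Fraction(0)] * (n + 1)          # coeffs[m] multiplies S^m
    for i, s_i in enumerate(signature, start=1):
        s_i = Fraction(s_i)
        for j in range(i):                    # j components failed by time t
            for k in range(j + 1):            # term (-1)^k C(j, k) S^k of (1 - S)^j
                coeffs[n - j + k] += s_i * comb(n, j) * comb(j, k) * (-1) ** k
    return coeffs[1:]                         # the constant term is always zero


# Settings [S1]-[S3] from the text.
print(minimal_signature([0, 0, 0, 1]))                          # 4-component parallel system
print(minimal_signature([1, 0, 0]))                             # 3-component series system
print(minimal_signature([0, Fraction(2, 3), Fraction(1, 3)]))   # System 1 in [S2] and [S3]
print(minimal_signature([Fraction(1, 4), Fraction(1, 4), Fraction(1, 2), 0]))
print(minimal_signature([0, Fraction(1, 2), Fraction(1, 4), Fraction(1, 4)]))
```

Exact rational arithmetic is used so that the results can be compared directly with the integer minimal signatures quoted in the settings.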

For comparative purposes, we also consider the nonparametric test procedures based on complete component-level data, i.e., all \(n_k M_k\) component lifetimes are observed for System k. This is equivalent to considering System 1 and System 2 to be 1-component systems such that the lifetime of the component is equal to the lifetime of the system. Since this is an ideal scenario with complete information on the component lifetimes, we denote this case as “Full data”. Note that the power performance of the test procedures based on complete component-level data can serve as a benchmark for the power comparisons since, in this case, all the \(n_1 M_1 + n_2 M_2\) component lifetimes are observed. The critical values of the nonparametric tests are obtained from the simulation procedures described in Sect. 4 based on 1,000,000 simulations.
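For reference, the classical two-sample Mann–Whitney count on which the U test is built can be sketched as follows. The counting direction (pairs in which the first-sample value is larger) and the half-weight for ties are assumptions made for illustration; the convention used in the paper is not restated in this section.

```python
def mann_whitney_u(sample1, sample2):
    """Count pairs (x, y), x from sample1 and y from sample2, with x > y.

    Ties contribute 1/2 each, so that the counts in the two directions
    always add up to len(sample1) * len(sample2).
    """
    u = 0.0
    for x in sample1:
        for y in sample2:
            if x > y:
                u += 1.0
            elif x == y:
                u += 0.5
    return u


# Small illustration with made-up numbers (not data from the paper).
x = [1.0, 2.0, 3.0]
y = [0.0, 2.5]
print(mann_whitney_u(x, y), mann_whitney_u(y, x))
```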

The component lifetimes are generated from the following distribution settings:

- [D1]: Exponential distributions with changes in the scale parameter:

  System 1: \({\textit{Exp}}(\theta _1)\) with \(\theta _1 = 1\) (i.e., \(\ln \theta _{1} = 0\));

  System 2: \({\textit{Exp}}(\theta _2)\) with \(\ln \theta _{2}\) varies from \(-1.6\) to 1.6 with increment 0.1 (denoted by \(-1.6\ (0.1)\ 1.6\));

- [D2]: Weibull distributions with changes in the shape parameter:

  System 1: \({\textit{Weibull}}(\lambda _1, \gamma _1)\) with \(\lambda _1 = 1\) and \(\gamma _{1} = 1\);

  System 2: \({\textit{Weibull}}(\lambda _2, \gamma _{2})\) with \(\lambda _2 = 1\) and \(\gamma _{2}\) varies from 0.5 to 1.5 with increment 0.1 (denoted by 0.5 (0.1) 1.5);

- [D3]: Lognormal distributions with changes in the standard deviation on the log-scale (i.e., change in the shape parameter):

  System 1: \({\textit{Lognormal}}(\mu _1, \sigma _1)\) with \(\mu _1 = 0\) and \(\sigma _{1} = 2\);

  System 2: \({\textit{Lognormal}}(\mu _2, \sigma _{2})\) with \(\mu _{2}=0\) and \(\sigma _2\) varies from 1 to 3 with increment 0.1 (denoted by 1 (0.1) 3);

- [D4]: Weibull distributions with changes in the scale parameter:

  System 1: \({\textit{Weibull}}(\lambda _1, \gamma _1)\) with \(\lambda _1 = 2.5\) and \(\gamma _{1} = 5\);

  System 2: \({\textit{Weibull}}(\lambda _2, \gamma _{2})\) with \(\lambda _{2}\) varies from 1.5 to 3.5 with increment 0.1 (denoted by 1.5 (0.1) 3.5) and \(\gamma _2 = 5\);

- [D5]: Gamma distributions with changes in the shape parameter:

  System 1: \({\textit{Gamma}}(\alpha _1, \beta _1)\) with \(\alpha _1 = 5\) and \(\beta _{1} = 2\);

  System 2: \({\textit{Gamma}}(\alpha _2, \beta _{2})\) with \(\alpha _{2}\) varies from 3 to 7 with increment 0.2 (denoted by 3 (0.2) 7) and \(\beta _2 = 2\);

- [D6]: Gamma distributions with changes in the rate parameter:

  System 1: \({\textit{Gamma}}(\alpha _1, \beta _1)\) with \(\alpha _1 = 5\) and \(\beta _{1} = 2\);

  System 2: \({\textit{Gamma}}(\alpha _2, \beta _{2})\) with \(\alpha _2 = 5\) and \(\beta _{2}\) varies from 1 to 3 with increment 0.1 (denoted by 1 (0.1) 3);

- [D7]: Lognormal distributions with changes in the mean on the log-scale (i.e., change in the scale parameter):

  System 1: \({\textit{Lognormal}}(\mu _1, \sigma _1)\) with \(\mu _1 = 0\) and \(\sigma _{1} = 1\);

  System 2: \({\textit{Lognormal}}(\mu _2, \sigma _{2})\) with \(\mu _{2}\) varies from \(-1.6\) to 1.6 with increment 0.1 (denoted by \(-1.6\) (0.1) 1.6) and \(\sigma _2 = 1\).
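The "start (increment) end" notation in settings [D1]–[D7] translates directly into parameter grids for System 2. The following sketch builds those grids; the helper name `grid` and the dictionary layout are illustrative. In every setting the middle grid point coincides with the System 1 value, so the centre of each power curve corresponds to the null hypothesis.

```python
def grid(start, step, stop):
    """Return the values start, start + step, ..., stop (inclusive)."""
    n_steps = round((stop - start) / step)
    # Rounding removes floating-point noise such as 0.30000000000000004.
    return [round(start + i * step, 10) for i in range(n_steps + 1)]


# Varying System 2 parameter in each distribution setting from the text.
system2_grids = {
    "D1": grid(-1.6, 0.1, 1.6),  # ln(theta_2), exponential scale
    "D2": grid(0.5, 0.1, 1.5),   # gamma_2, Weibull shape
    "D3": grid(1, 0.1, 3),       # sigma_2, lognormal shape
    "D4": grid(1.5, 0.1, 3.5),   # lambda_2, Weibull scale
    "D5": grid(3, 0.2, 7),       # alpha_2, gamma shape
    "D6": grid(1, 0.1, 3),       # beta_2, gamma rate
    "D7": grid(-1.6, 0.1, 1.6),  # mu_2, lognormal scale on the log-scale
}

for name, values in system2_grids.items():
    middle = values[len(values) // 2]
    print(name, len(values), "grid points; centre (null) value:", middle)
```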

The rejection rates at the 5% significance level under different settings are estimated based on 10,000 simulations. To save space, we present the simulated power curves for setting [D1] with [S1] in Figure 3, for setting [D2] with [S1] in Figure 4, for setting [D3] with [S1] in Figure 5, and for setting [D4] with [S1], [S2], and [S3] in Figures 6–8, respectively, in the Supplementary Materials. Each figure shows the simulated power curves for the sample sizes \(M_1 = M_2 = 10\), 15, 20, 30, and 50. The simulated power curves for other settings, including [D5]–[D7] with system structures [S1]–[S3], are presented in Figures 11–25 in the Supplementary Materials.

The simulated power curves are centered at the simulated rejection rates under the null hypothesis (i.e., \(F_{X_1} = F_{X_{2}}\)), which are expected to be close to the nominal significance level of 5%. When the differences between the parameters increase (i.e., moving away from the center), we expect the simulated power values to increase. The closer the power values are to one, the better the performance of the test procedure. As mentioned above, the simulated power curves with “Full data” can serve as benchmarks for comparisons, as the power values based on complete component-level data are expected to be larger than those based on system-level data. Moreover, we expect the power values for the parametric likelihood ratio tests under the correct model specification of the underlying distributions to be larger than those of the nonparametric tests.

From Figures 3 and 4 in the Supplementary Materials, we observe that for the extreme setting [S1], with System 1 being a parallel system and System 2 being a series system, the power values of the nonparametric tests are low when the sample sizes are small (say, \(M_1 = M_2 \le 20\)) and the mean lifetime of components in System 1 is smaller than the mean lifetime of components in System 2 (i.e., the left-hand side of the power curve). As pointed out by Zhang et al. (2015), this is due to the nature of the problem, since we are comparing the worst component in one system to the best component in another system to determine if the lifetime characteristics of the components are the same.

From Figures 3–8 in the Supplementary Materials, the power values of the nonparametric tests with complete component-level data and of the parametric tests under the correct specification of the underlying component lifetime distribution are larger than the power values of the nonparametric tests based on system-level data. We observe that the proposed empirical likelihood ratio tests provide comparable power performance to the Mann–Whitney U test in most cases. After considering the upper bound of the Monte Carlo error \(\sqrt{(0.5)(1-0.5)/10{,}000} = 0.005\), the proposed empirical likelihood ratio tests are more powerful for small sample sizes on the right-hand side of the power curves. Between the two empirical likelihood ratio tests, the test based on \(Z_{A}\) tends to have better power performance than the test based on \(Z_{K}\) in most cases.

For comparative purposes, the differences between the simulated power values of the test procedures based on U statistic and \(Z_K\), and the test procedures based on U statistic and \(Z_A\) for Weibull distribution with \(M_1 = M_2 = 30\) and for lognormal distribution with different sample sizes are plotted in Figures 9 and 10 in the Supplementary Materials, respectively. Negative values of the differences indicate that the proposed tests based on \(Z_{A}\) and \(Z_{K}\) provide better power performance than the Mann–Whitney U test. We also included the lines for plus and minus three Monte Carlo errors (\(\pm 3 {\textit{MCE}}\)) to indicate if the differences are significant.
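The Monte Carlo error bound quoted above and the \(\pm 3 {\textit{MCE}}\) band follow from the binomial standard error of a simulated rejection rate. The sketch below reproduces that arithmetic for 10,000 simulations; the function name is illustrative, and the use of the single-proportion worst-case bound for the band is an assumption, since the exact construction of the band in the figures is not spelled out in the text.

```python
from math import sqrt


def monte_carlo_error(p, n_sim):
    """Standard error of a rejection rate p estimated from n_sim independent runs."""
    return sqrt(p * (1 - p) / n_sim)


n_sim = 10_000
mce_upper = monte_carlo_error(0.5, n_sim)     # worst case, attained at p = 0.5
mce_nominal = monte_carlo_error(0.05, n_sim)  # at the nominal 5% level
band = 3 * mce_upper                          # half-width of a +/- 3 MCE band

print(f"upper bound of MCE: {mce_upper:.4f}")
print(f"MCE at the nominal level: {mce_nominal:.4f}")
print(f"+/- 3 MCE band: {band:.3f}")
```

Under this bound, a difference in simulated power smaller than about 0.015 in absolute value lies within the band and would not be regarded as significant.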

When the mean lifetime of the component lifetime distribution of System 2 is larger than that of System 1, the proposed empirical likelihood ratio test based on \(Z_{A}\) provides the highest power among the three nonparametric tests considered here. The advantage of the test based on \(Z_{A}\) is more pronounced when the two systems are extremely different (i.e., setting [S1]). When the mean lifetime of the component lifetime distribution of System 2 is smaller than that of System 1, the Mann–Whitney U test provides the highest power among the three nonparametric tests considered here.

The proposed nonparametric tests outperform the Mann–Whitney U test when the shapes of the component lifetime distributions are different (i.e., settings [D2] and [D3]). For example, the top left panels of Figures 9 and 10 in the Supplementary Materials show that the proposed empirical likelihood ratio tests provide better power performance than the Mann–Whitney U test in detecting changes in the shape parameters. Despite the alteration of the shape parameter in setting [D5] (see Figures 11, 17, and 23 in the Supplementary Materials), the proposed tests do not outperform the U test, which may be due to the minor deviations in the shape of the gamma distributions.

7 Concluding remarks

In this manuscript, we studied the problem of testing the homogeneity of component lifetime distributions based on system-level data, which can be applied to many practical situations in life testing procedures involving systems with known structures. We showed that the existing parametric test procedures might suffer from inflated Type-I error rates when the underlying probability distributions of the component lifetimes are misspecified. To address this issue, we focused on developing nonparametric statistical test procedures for the homogeneity of component lifetime distributions based on system-level data. We proposed two empirical likelihood ratio tests based on the empirical likelihood ratio and the nonparametric estimation of component lifetime distributions. We provided the computational algorithms for obtaining the null distributions of the test statistics using the Monte Carlo method. Our simulation results show that the proposed nonparametric procedures provide power values comparable to those of the existing tests. The proposed test procedures have advantages in power performance when the two systems are very different. The computer programs to execute the test procedures presented in this manuscript are written in R (R Core Team 2022), and they are available from the authors upon request.

For future research, since censoring and truncation are common in life testing procedures, one can consider extending the test procedures discussed in this paper to the cases in which some system lifetimes are censored. In addition, the system structures are assumed to be known in this work. This assumption may not be realistic in some situations in which the systems of interest are black boxes. Therefore, it will be interesting to develop test procedures for the homogeneity problem when complete information about the system structure is unavailable. In this situation, we can consider that auxiliary data, such as the number of failed components at the time of system failure, are available along with the system lifetimes (Jin et al. 2017).

Another possible research direction is to investigate the use of the homogeneity tests for detecting dependence between the component lifetimes. The homogeneity tests of component lifetime distributions based on system lifetime data studied here can, in principle, also be used for this purpose. However, when the null hypothesis in Eq. (4) is rejected, it may be due to the difference between the lifetime distributions of the components in the two systems or to the dependence between the component lifetimes in the two systems, and these effects may not be distinguishable based on the current test procedures. It will be interesting to develop statistical procedures that detect the lack of independence between the component lifetimes using the homogeneity tests.

References

Balakrishnan N, Ng HKT, Navarro J (2011) Exact nonparametric inference for component lifetime distribution based on lifetime data from systems with known signatures. J Nonparametric Stat 23(3):741–752

Balakrishnan N, Ng HKT, Navarro J (2011) Linear inference for type-II censored lifetime data of reliability systems with known signatures. IEEE Trans Reliab 60(2):426–440

Bhattacharya D, Samaniego FJ (2010) Estimating component characteristics from system failure-time data. Naval Res Logist (NRL) 57(4):380–389

Bickel PJ, Doksum KA (2001) Mathematical statistics—basic ideas and selected topics, vol I, 2nd edn. Prentice-Hall, Upper Saddle River

Birnbaum ZW, Saunders SC (1969) A new family of life distributions. J Appl Probab 6(2):319–327

Boyles R, Samaniego F (1987) On estimating component reliability for systems with random redundancy levels. IEEE Trans Reliab 36(4):403–407

Bueno VC (1988) A note on the component lifetime estimation of a multistate monotone system through the system lifetime. Adv Appl Probab 20(3):686–689

Chahkandi M, Ahmadi J, Baratpour S (2014) Non-parametric prediction intervals for the lifetime of coherent systems. Stat Pap 55(4):1019–1034

Chen SX, Keilegom IV (2009) A review on empirical likelihood methods for regression. TEST 18:415–447

Eryilmaz S, Koutras MV, Triantafyllou IS (2011) Signature based analysis of m-consecutive-k-out-of-n: F systems with exchangeable components. Naval Res Logist (NRL) 58(4):344–354

Frenkel I, Khvatskin L (2006) Cost–effective maintenance with preventive replacement of oldest components. W SKRÓCIE, p 37

Guess FM, Usher JS, Hodgson TJ (1991) Estimating system and component reliabilities under partial information on cause of failure. J Stat Plan Inference 29(1–2):75–85

Hall P, Jin Y, Samaniego FJ (2015) Nonparametric estimation of component reliability based on lifetime data from systems of varying design. Statistica Sinica 1313–1335

Jin Y, Hall PG, Jiang J, Samaniego FJ (2017) Estimating component reliability based on failure time data from a system of unknown design. Statistica Sinica 479–499

Kochar S, Mukerjee H, Samaniego FJ (1999) The “signature” of a coherent system and its application to comparisons among systems. Naval Res Logist (NRL) 46(5):507–523

Mann HB, Whitney DR (1947) On a test of whether one of two random variables is stochastically larger than the other. Ann Math Stat 50–60

Meeker WQ, Escobar LA, Pascual FG (2022) Statistical methods for reliability data, 2nd edn. Wiley, New York

Meilijson I (1981) Estimation of the lifetime distribution of the parts from the autopsy statistics of the machine. J Appl Probab 18(4):829–838

Miyakawa M (1984) Analysis of incomplete data in competing risks model. IEEE Trans Reliab 33(4):293–296

Nadarajah T, Variyath AM, Loredo-Osti JC (2014) Empirical likelihood based longitudinal data analysis. Open J Stat 10:611–639

Navarro J (2022) Introduction to system reliability theory. Springer, Cham

Navarro J, Rubio R (2009) Computations of signatures of coherent systems with five components. Commun Stat Simul Comput 39(1):68–84

Navarro J, Ruiz JM, Sandoval CJ (2007) Properties of coherent systems with dependent components. Commun Stat Theory Methods 36(1):175–191

Navarro J, Samaniego FJ, Balakrishnan N (2011) Signature-based representations for the reliability of systems with heterogeneous components. J Appl Probab 48(3):856–867

Navarro J, Ng HKT, Balakrishnan N (2012) Parametric inference for component distributions from lifetimes of systems with dependent components. Naval Res Logist (NRL) 59(7):487–496

Ng HKT, Navarro J, Balakrishnan N (2012) Parametric inference from system lifetime data under a proportional hazard rate model. Metrika 75(3):367–388

Nordman DJ, Lahiri SN (2014) A review of empirical likelihood methods for time series. J Stat Plan Inference 155:1–18

Owen AB (1988) Empirical likelihood ratio confidence intervals for a single functional. Biometrika 75(2):237–249

Owen AB (2001) Empirical likelihood. CRC Press, Boca Raton

R Core Team (2022) R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria

Samaniego FJ (1985) On closure of the IFR class under formation of coherent systems. IEEE Trans Reliab 34(1):69–72

Samaniego FJ (2007) System signatures and their applications in engineering reliability, vol 110. Springer, Berlin

Usher JS, Hodgson TJ (1988) Maximum likelihood analysis of component reliability using masked system life-test data. IEEE Trans Reliab 37(5):550–555

Wilcoxon F (1992) Individual comparisons by ranking methods. Breakthroughs in statistics. Springer, Berlin, pp 196–202

Wilks SS (1938) The large-sample distribution of the likelihood ratio for testing composite hypotheses. Ann Math Stat 9(1):60–62

Yang Y, Ng HKT, Balakrishnan N (2016) A stochastic expectation-maximization algorithm for the analysis of system lifetime data with known signature. Comput Stat 31(2):609–641

Zhang J (2002) Powerful goodness-of-fit tests based on the likelihood ratio. J R Stat Soc Ser B (Stat Methodol) 64(2):281–294

Zhang J (2006) Powerful two-sample tests based on the likelihood ratio. Technometrics 48(1):95–103

Zhang J, Ng HKT, Balakrishnan N (2015) Tests for homogeneity of distributions of component lifetimes from system lifetime data with known system signatures. Naval Res Logist (NRL) 62(7):550–563

Zhou M (2019) Empirical likelihood method in survival analysis. Chapman and Hall/CRC, Boca Raton

Acknowledgements

The authors would like to thank the Editor, the Associate Editor, and the referees for their valuable comments, which helped to improve the quality of this article. We especially thank the Associate Editor for assisting us in addressing the reviewers’ comments.


Cite this article

Qu, J., Ng, H.K.T. & Moon, C. Empirical likelihood ratio tests for homogeneity of component lifetime distributions based on system lifetime data. Comput Stat 39, 3007–3029 (2024). https://doi.org/10.1007/s00180-023-01421-w
