Abstract

This article describes a new adaptive Kriging method combined with adaptive importance sampling approximating the optimal auxiliary by iteratively building a Gaussian mixture distribution. The aim is to iteratively reduce both the modeling and sampling errors simultaneously, thus avoiding limitations in cases of very rare failure events. At each iteration, a near optimal auxiliary Gaussian distribution is defined and new samples are drawn from it following the scheme of adaptive multiple importance sampling (MIS). The corresponding estimator is provided as well as its variance. A new learning function is developed as a generalization of the U learning function for MIS populations. A stopping criterion is proposed based on both the modeling error and the variance of the estimator. Results on benchmark problems show that the method exhibits very good performances on both efficiency and accuracy.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Industrial projects are subjected to uncertainties regarding the state of systems and their forecasted evolution. In turn, they induce uncertainties on the performances of the system and eventually the occurrence of a failure event. The probabilistic framework approaches such a problem by modeling input uncertainties as random variables defined by a joined probability distribution. Considering a black-box input–output relationship representing the system (called performance function), the output performance is simply a random variable, defined as the image of the input distributions through the performance function. Given a failure criterion associated with the random output variable(s), the failure probability is comprehensively defined as the (unknown) probability of occurrence of this event. The problem associated with the evaluation of such a probability is the central problem of structural reliability.

Computation methods for structural reliability problems stem in two main branches, the simulation and approximation methods. Sampling methods aim at estimating the failure probability by estimating the performance of a dedicated population of samples. Among the most famous simulation methods are the Monte Carlo method as well as advanced sampling methods such as: Importance sampling (Melchers 1990), Subset-simulations (Au and Beck 2001), Line sampling (Pradlwarter et al. 2005; Schuëller et al. 2004) or Directional sampling (Ditlevsen et al. 1986; Nie and Ellingwood 2000).

The approximation methods reduce the computation cost by resolving a simplified problem calibrated to minimize the approximation error, well known examples are the first and second order reliability methods (FORM and SORM) (Hasofer and Lind 1974; Lee et al. 2012; Lemaire 2013). For the interested readers, a general overview and discussion on FORM/SORM and their variants can be found in the work of Rackwitz (2001). The surrogate modeling approach is a type of approximation aiming at calibrating an emulator of the system which predicts its behavior without simulating the physics, and thus with only a fraction of the computation burden. The calibration of a surrogate model requires the definition and evaluation of a calibration dataset often called design of experiment (DoE). The advantage of this approach is the ability to estimate the performance of any population with the expense of evaluating the physical model only for the samples of the DoE. This general framework regroups many types of surrogate strategies, among the most popular are: support vector machines (Cortes and Vapnik 1995), polynomial chaos (Crestaux et al. 2009; Sudret 2008), Neural networks (Hurtado and Alvarez 2001) and Kriging (Matheron 1973; Bichon et al. 2008). In the context of structural reliability, Kriging-based methods have become increasingly popular in the past decade. This popularity can be related to one of their most appealing features, i.e., the estimation of their own localized prediction uncertainty in the shape of the posterior standard deviation. It allowed the development of the so-called adaptive Kriging approach which combine a sampling reliability method with an adaptive scheme to iteratively improve the calibration of the surrogate.

In an adaptive Kriging scheme both the sampling method and the surrogate induce an error, respectively a sampling and modeling error. The main goal is then to iteratively build a near-optimal DoE minimizing the modeling error. Almost all well-known reliability method has been combined with adaptive Kriging and the Literature of existing methods is very extensive. Among the most successful are Markov Chain Monte Carlo (MCMC, see Zhao et al. 2015; Song et al. 2019; Wei et al. 2019) and importance sampling. This overview is limited to the most common sampling-based strategies with a focus on importance-sampling approaches. For a wider overview of existing methods the reader can refer to Sudret (2012), Teixeira et al. (2021) and Moustapha et al. (2021). Most method that fall in that family minimize the modeling and sampling errors independently, for example by generating beforehand a suitable population, assumed to carry a negligible variance of the estimator. Well known examples of such methods are AK-MCS (Echard et al. 2011) or AK-IS (Echard et al. 2013).

Fewer methods propose to actively reduce both errors simultaneously. Dubourg et al. (2013) proposed to use the Kriging model to approximate the optimal auxiliary density, using MCMC sampling and K-means clustering in method called with Meta-IS. In a related method called metaAK-IS2 Cadini et al. (2014), proposed to combine the work of Echard et al. (2013) and Dubourg et al. (2013). Several of these methods have in common to iteratively approximate the optimal auxiliary importance sampling distribution using the Kriging model. Balesdent et al. (2013) proposed to do so using the NAIS method (Morio 2011), Liu et al. (2020) used the adaptive-linked importance sampling method, Razaaly and Congedo (2018) using a Gaussian mixture calibrated using K-mean clustering, Yang et al. (2018) with kernel-density-estimation or Zhang and Taflanidis (2018) using intermediate condition distribution similar to subset simulation. Those methods usually then require advanced sampling strategies such as MCMC to draw sample from the auxiliary. Other related work combining adaptive Kriging with advanced importance sampling include Xiong and Tan (2018), Yun et al. (2018), Zhao et al. ( 2015), and Zhang et al. (2020).

In this work a related method is proposed where the optimal importance sampling distribution is iteratively approximated with a Gaussian mixture distribution using a scheme that can be related to the adaptive importance sampling (AMIS) and thus avoiding the limitations associated with advanced sampling techniques. The Gaussian mixture is refined by iteratively adding new normal distributions defined as a near-optimal from a variance-reduction point of view. The population is also built iteratively by simply drawing and adding samples from the last Gaussian distribution. For convenience, the proposed method will be referred to as AK-AMIS for adaptive-Kriging and adaptive multiple importance sampling (MIS).

The paper starts describing the method with the failure probability estimator in Sect. 2. A dedicated learning function is developed followed by the description of the iterative auxiliary distribution, defined as a near-optimal from a variance reduction perspective. Two stopping parameters are proposed based respectively on the expected error and the expected variance of the estimator and are combined in a single stopping criterion. The method is then validated in Sect. 3 through three academic benchmark problems and an industrial one highlighting its performances and limitations. The results are discussed, and the performances of AK-AMIS are compared to well established and closely related adaptive reliability methods. The paper finally concludes in Sect. 4 with some lead to further improve the performances.

2 The AK-AMIS method

One limitation of most sampling-based adaptive Kriging approaches (such as AK-MCS or AK-IS) is that they rely on a given population of samples. The method then adaptively minimizes the surrogate modeling error associated with the estimator on that given population. Considering that surrogate approaches combined with sampling techniques induce two types of error, respectively the modeling and sampling error, the simple adaptive Kriging scheme focuses on minimizing only the first. The second is considered given and comparatively negligible if the population is large enough. However, the validity of this hypothesis is usually impossible to evaluate beforehand.

One way to tackle this limitation is, instead of reasoning with a given population and distribution, to iteratively refine them according to the kriging surrogate. Several methods discussed in the previous section proposed schemes to do so, focusing on approximating the optimal distribution and then sampling with advanced sample generation techniques. The approaches described in this work is a new one falling into that category, where the optimal distribution is approximated with a Gaussian mixture and the population is generated simultaneously. The auxiliary distribution is built iteratively, starting from the standard Gaussian distribution at the first iteration and adding a new one every iteration. The method then relies on iteratively selecting both the best sample to enrich the DoE and the best Gaussian distribution to enrich the auxiliary mixture distribution.

The Gaussian mixture auxiliary requires to use a dedicated estimator of the failure probability, see Sect. 2.1. The sequential DoE relies on the definition of a dedicated learning function and is discussed in Sect. 2.2. The enrichment of the population is based on the scheme of the AMIS as proposed by Cornuet et al. (2012), see Sect. 2.3. Finally, each part can be associated with a stopping criterion, two are proposed and briefly discussed in Sect. 2.4.

2.1 Multi-IS estimator

In the proposed approach, the auxiliary population is adaptively refined, meaning that the population consists of an aggregate of sub-populations each from a different auxiliary distribution. The failure probability could be estimated with each sub-population directly using the IS estimator. If the auxiliary distributions are strictly identical the overall estimator would be the average of the individual IS estimators and if the distributions are strictly non-overlapping it would be the sum. However, in the general case, the MIS estimator requires a dedicated definition. Such an estimator has been proposed by Veach and Guibas (1995) and popularized by Owen and Zhou (2000). The estimator used in AK-AMIS and detailed in this section is a particular case the first and is referred to as the multi-sample model (with the balance heuristic) in the field of computer graphics (Víctor Elvira et al. 2019; Sbert et al. 2016). Notice that in the context of AMIS, the set of auxiliary distribution is built sequentially. Therefore, each time a set of new samples is generated, the weight all samples should be updated, not only the weight of the new samples. The procedure could thus be seen as a single importance sampling where the auxiliary population is drawn from a mixture made of the sum of the auxiliary distributions (Cornuet et al. 2012).

Consider a d-dimensional input vector X with a joint probability distribution function f. The reliability problem can be formulated as the estimation of the probability \({P}_{f}=P\left(g\left(\mathbf{X}\right)\le 0\right)\) with \(g: {\mathbb{R}}^{d}\to {\mathbb{R}}\) the performance function of the system such that a negative value indicates the failure event. After k iteration(s), the population \({S}_{k}\) is composed of \(\sum_{i=1}^{k}{n}_{i}\ne 0\) samples, each set of \({n}_{i}\) samples are drawn from an auxiliary distribution \({h}_{i}: {\mathbb{R}}^{d}\to {\mathbb{R}}^{*+}\). The failure probability can then be defined as follows:

with I the classification function such that \(I\left({\varvec{X}}\right)=1\) if \(g\left({\varvec{X}}\right)\le 0\), i.e., \({\varvec{X}}\) is failing and \(I\left({\varvec{X}}\right)=0\) otherwise. Each integral can be approximated by a sum using the Monte Carlo estimator to define the following MIS estimator:

with \({{\varvec{X}}}_{j,i}\) the jth sample drawn for the ith distribution, \({N}_{k}=\) \({\sum }_{i=1}^{k}{n}_{i}\) the total number of samples in the population and \({w}_{k}\left({\varvec{X}}\right)=\frac{1}{{N}_{k}}{\sum }_{i=1}^{k}{{n}_{i}h}_{i}\left({\varvec{X}}\right)\) the mixture of the auxiliary distributions. This latest expression can be simplified further into the classical IS estimator by noticing that the aggregated population is equivalent to a population of the same size directly drawn from the mixture \({w}_{k}\) (see, e.g., Cornuet et al. 2012; Victor Elvira et al. 2015):

This formulation holds for any set of (non-zero) auxiliary distribution \({h}_{i}\). In the context of the AK-AMIS method, we will adopt the simple scheme of the mean-shift importance sampling and only consider Gaussian distributions with unit standard deviation such that \({h}_{k}=\varphi ({\rm M}_{k},1)\) with \(\varphi\) the probability distribution function of the standard normal distribution. In addition, the set of auxiliary distributions starts with the actual joint distribution of the input parameters, i.e., \({h}_{1}=f\).

The variance of this estimator is defined as follows:

thus:

2.2 Definition of the learning function

In the context of reliability analysis, the only modeling errors that have an influence on the failure probability estimator are the misclassification of samples. The posterior probability of misclassification \(\Phi \left(\frac{-\left|\widehat{\mu }\left({\varvec{X}}\right)\right|}{ \widehat{\sigma }\left({\varvec{X}}\right)}\right)\) is a good indicator of the individual contribution of each sample to the overall modeling error, which is base principle behind U learning function of the AK-MCS algorithm.

In the framework of the AK-AMIS method, the learning function should also reflect that each sample is associated with a weight that affects its contribution to the estimated failure probability. It is thus proposed to consider another related quantity of interest which is more suited for the MIS population previously described: the expected absolute failure probability error induced by the Kriging misclassifications: \(E\left(\left|\Delta {P}_{f}\right|\right)\).

In this section, we are only interested in reducing the modeling error, let us then consider a given population \({S}_{k}= \left\{{{\varvec{X}}}_{i}, i=\left[1,\dots ,{N}_{k}\right], {N}_{k}= {\sum }_{i=1}^{k}{n}_{i}\right\}\). For each sample, the Kriging-based classification function \(\widehat{I}\left({{\varvec{X}}}_{i}\right)\) is considered as a random variable holding the uncertainty related to the Kriging prediction, i.e.:

The expected absolute prediction error can then be developed as follows:

with \({\widehat{P}}_{ \mathrm{krig}}^{\mathrm{MIS}}\) the MIS estimator associated with the Kriging surrogate classification function. Notice that since the population is given, the Kriging posterior is the only random variable in Eq. (7). Unfortunately, this quantity does not allow us to capture the contribution of each individual sample to the overall error, which is the goal of the learning function. It is therefore proposed to consider instead the following upper bound of the absolute error:

with \({L}_{k}\left({\varvec{X}}\right)=\frac{f\left({\varvec{X}}\right)}{{w}_{k}\left({\varvec{X}}\right)}\Phi \left(\frac{-\left|\widehat{\mu }\left({\varvec{X}}\right)\right|}{\widehat{\sigma }\left({\varvec{X}}\right)}\right)\) the proposed learning function for the AK-AMIS algorithm.

This expression is however prone to overly prioritize areas of small posterior variance. This behavior is due to the observed tendency of Kriging models to underestimate (respectively overestimate) the variance in regions of high (respectively low) variance in noise-free problems. In methods considering a given population this behavior doesn’t affect the performances significantly since the method eventually eliminates all affected samples. However, in the proposed approach, new samples are iteratively added which could lead the algorithm to focus only on areas already well calibrated (exploitation) and ignore areas poorly calibrated (exploration). The solution chosen to counteract this behavior is to introduce a hyperparameter \(p\) which is simply a power affected to the posterior standard deviation in the expression of the learning function:

From the authors experience, the value \(p=3\) yields satisfactory results and is the value used in the applications presented in Sect. 3. As any hyperparameter unrelated to interpretable metrics, the choice of p is at least partly arbitrary and any hypothetical optimal value would be problem dependent. The choice proposed in this article is based on the limited empirical knowledge available to the authors, a short presentation of them and their interpretation is proposed in Appendix 1.

The best candidate for enriching the DoE \({{\varvec{X}}}^{\boldsymbol{*}}\) is then defined as the sample satisfying:

2.3 Adaptive multiple importance sampling strategy

As previously mentioned, this algorithm approaches the optimal IS auxiliary by iteratively building a Gaussian mixture, however the proposed framework is presented in a broader context and would apply for a mixture of any distribution. The goal is to incrementally reduce the variance of the MIS estimator as described in Eq. (5). The purpose of this section is thus to find a way to iteratively define a set of near-optimal auxiliary distributions from a variance reduction perspective without additional calls to the performance function. The proposed AK-AMIS method uses the general scheme described in Cornuet et al. (2012) which reflects in the way the estimator is updated as described in Sect. 2.1. However, the sequential definition of the auxiliary distribution presented in this section differs from the AMIS method (Cornuet et al. 2012) and instead of minimizing a statistical distance metrics, it is proposed to directly minimize the variance of the estimator associated with the Kriging surrogate.

Considering k previously computed iterations, the goal is to estimate the next near-optimal auxiliary distribution \({h}_{k+1}\). Based on the expression of the variance as given in Eq. (5), the optimal mixture \({w}_{k+1}\) is reached when

thus

One solution for an optimal \({w}_{k+1}\) is thus (see, e.g., Morio 2011):

By definition of the mixture distribution, we have:

thus, the optimal new auxiliary \({h}_{k+1}^{*}\) can be defined as:

Or

Defining such an optimal distribution faces the same issue as the classical importance sampling method, which is that neither \({P}_{f}\) nor \(I\) are known. It is then proposed to chose \({h}_{k+1}^{*}\) as a near-optimal solution in a similar way as the classical mean-shift importance sampling, i.e., a normal distribution with unit standard deviation and mean \({\rm M}_{k+1}\) such that:

Considering that \({w}_{k}\), \({N}_{k}\) and \({n}_{k+1}\) are strictly positive, we reach the following equivalent expression:

In order to avoid extra evaluations of the performance function, the classification function and failure probability are replaced by their surrogate counterpart:

However, doing so induces a major issue that needs to be addressed: because of the behavior of \(\widehat{I}\), all samples outside of the failure domain have a zero-value and cannot be sorted. Therefore, at the initial stage, when the Kriging model is badly calibrated, \(\widehat{I}\) may be equivalent to the null function over \({\mathbb{R}}^{d}\) thus preventing the algorithm from converging. To overcome this limitation, the classification function is replaced by the function \(\Phi \left(\frac{-\widehat{\mu }\left({\varvec{X}}\right)}{\widehat{\sigma }\left({\varvec{X}}\right)}\right)\), which can be interpreted as the probability, according to the Kriging posterior, that the sample falls in the failure domain. In this case, it acts as a sigmoid-like function following the trend of the classification function while providing a sortable output value. A related issue occurs with \({\widehat{P}}_{\mathrm{krig}}^{\mathrm{MIS}}\) which may be equal to zero at initial stages, the same strategy of replacing \(\widehat{I}\) with \(\Phi \left(\frac{-\widehat{\mu }\left({\varvec{X}}\right)}{\widehat{\sigma }\left({\varvec{X}}\right)}\right)\) is therefore used in the \({\widehat{P}}_{\mathrm{krig}}^{\mathrm{MIS}}\) estimator. \({\mathrm{\rm M}}_{k+1}\) is finally defined by solving the optimization problem defined in Eq. (20).

It is further proposed to choose the last sample added to the DoE (\({{\varvec{X}}}^{\boldsymbol{*}}\)) as the starting point of the optimization algorithm. The reason being that, because of the computation error, the term \(\Phi \left(\frac{-\widehat{\mu }\left({\varvec{X}}\right)}{\widehat{\sigma }\left({\varvec{X}}\right)}\right)\) may be equal to zero for all samples in areas that are both far from the limit-state and well calibrated. The optimization process might then be unable to start if the initial point of the optimization algorithm is chosen in such an area. By the definition of the learning function, \({{\varvec{X}}}^{\boldsymbol{*}}\) is a good starting point candidate to avoid this issue. In practice the optimization problem is solved using a global optimization strategy and the sequential quadratic programming algorithm.

The behavior of \(\Phi \left(\frac{-\widehat{\mu }\left({\varvec{X}}\right)}{\widehat{\sigma }\left({\varvec{X}}\right)}\right)\) is illustrated in Fig. 1, in the context of application of the algorithm to the 4-modes benchmark problem presented in Sect. 3.1, for the early and late stages of the algorithm. Choosing this function instead of an actual sigmoid function or any other analytical equivalent allows us to avoid defining additional hyper-parameters.

Finally, it is necessary to define the number of new samples drawn from the last auxiliary distribution: \({n}_{k+1}\). The development presented in this paper did not focus on defining an adaptive population size for the iterative enrichment. It is proposed to use the simple scheme of iteratively adding a fixed number of new samples \({n}_{1}=\dots ={n}_{k+1}=n\). The choice of this parameter has an influence on the performance of the method, but it is found that this influence quickly decreases as the number of samples increases. Choosing a relatively low number of samples is more conservative since it ensures the variance-based stopping criterion (see Sect. 2.4) does not converge prematurely but can needlessly delay the termination of the algorithm. Increasing this number may in turn result in a significant computation time especially for the calibration of the Kriging model and the evaluation of the estimator. A few thousands of samples per dimension seems a good compromise for all the problems the method has been tested on.

2.4 Two stopping criteria

The enrichment of the DoE and the population are aimed at respectively minimizing the modeling error and the variance of the estimator. It is thus proposed to define a stopping criterion for each process and stop the algorithm when both are satisfied simultaneously.

The first criterion is directly related to the learning function. The most intuitive way of introducing such a criterion would be to set a threshold \({L}_{\mathrm{stop}}\) for the learning function and consider that the algorithm converged if \(L({{\varvec{X}}}^{\boldsymbol{*}})\le {L}_{\mathrm{stop}}\). However, the convergence of this parameter is usually quite noisy and may lead to premature termination. Therefore, the proposed criterion uses another related and more stable quantity: \(E\left(\left|\Delta {P}_{f}\right|\right)\), as defined in Eq. (8). The first stopping criterion can then be rephased as follows:

with \({E}_{\mathrm{stop}}\) a stopping threshold that can then be adjusted to a desired level of precision. This criterion is however affected by the inaccuracies mentioned in Sect. 2.2 associated with regions of high and low posterior variance. It is therefore proposed to use the conservative approach of evaluating both the expected error related to the unaltered Kriging surrogate and considering the power hyperparameters. The error-based criterion is then only satisfied when both errors are below the threshold:

The second termination criterion is based on the variance of the estimator as given by Eq. (5). However, since the classification function I is unknown is it replaced by the Kriging surrogate \(\widehat{I}\):

The related stopping criterion is then simply satisfied when the corresponding coefficient of variation crosses a threshold value \({\mathrm{CoV}}_{\mathrm{stop}}\) corresponding to a desired level of confidence, i.e.

Using both criteria simultaneously provides a much more robust termination of the algorithm than using them individually. The reason is that unexpected termination of the error-based criterion can occur when very few samples exist in badly calibrated regions. While premature termination of the variance-based criterion requires the Kriging surrogate to significantly underestimate the actual variance associated with the given population. Since the variance is estimated empirically this event requires that a significant part of the population falls in badly calibrated areas. These events are clearly mutually exclusive, therefore, if one is fooled the other one has to “work as intended”. Since these events are expected to occur only at early stages when the Kriging model is very badly calibrated, we can expect the later to acknowledge either that the modeling error is too high or that the population is too scarce to terminate. With this stopping strategy the complete flowchart of the algorithm can be drawn as illustrated in Fig. 2.

As noticed by Wang et al. (2019) estimators directly based on the Kriging model as the one proposed above usually do not directly correlate with the quantity they are intended to measure. This is the reason why they are associated with very conservative thresholds. Even though this has not been the scope of this work, more sophisticated stopping criteria such as the ESC (Wang and Shafieezadeh 2019; Yi et al. 2020) may improve significantly the robustness of the proposed method.

3 Applications

For the validation of the proposed approach the results will be compared with related methods from the literature namely AK-MCS (Echard et al. 2011) or AK-IS (Echard et al. 2013) when applicable, meta-AK-IS2 (Cadini et al. 2014) and MetaAL-OIS (Razaaly and Congedo 2018). The first three are chosen because their efficiency have been thoroughly proven, meta-AK-IS2 being one of the most popular method combining Kriging surrogate with adaptive importance sampling. The last is selected because it is more recent, exhibits challenging performances on the proposed examples and it is closely related to the proposed approach. In addition to the main comparative study, additional performance comparison are provided in Appendix 2 considering two other reference methods form the literature: AK-ALIS (Liu et al. 2020) and AKOIS (Zhang et al. 2020).

The performances are then compared over four test cases, three are challenging analytical examples which are standard in the literature of comparable methods. They are also the three most challenging of the five examples used for the performance validation of MetaAL-OIS, allowing a fair comparison between the two methods. These analytical examples are also included in the generalized benchmark for active learning from Moustapha et al. (2021), which aims at providing a broad overview of the performances of adaptive-reliability methods and facilitating performance comparison in the literature. The last example is an engineering problem in the context of radioactive waste storage and associated with extremely rare failure event.

The number of new samples drawn per iteration \(n\) was set equal to \({10}^{4}\). The stopping criterion is as discussed in Sect. 2.4 with \({E}_{\mathrm{stop}}=5\%\), and \({\mathrm{CoV}}_{\mathrm{stop}}=0.4\%\). It is assumed that no primary knowledge is available regarding the behavior of the performance functions and the deterministic part of the Kriging model is defined as a constant function. The covariance function chosen is anisotropic squared exponential. Since the population grows iteratively, its size can become an issue for the most challenging cases (e.g., requiring several hundred of iterations) and can significantly slow down the evaluation of the Kriging model. Therefore, the population is capped to \({10}^{6}\) samples per dimensions in the following application, once the cap is reached only the DoE keeps being refined.

In all the following applications, the initial DoE \({n}_{\mathrm{ini}}\) is defined using a Latin hypercube design of size equal to the dimension of the problem plus one. As the following section aims at demonstrating, the proposed approach is very efficient at exploring the input space and does not require an extensive initial training set. A sparse initial training set is therefore chosen to illustrate that advantage.

The results presented are the number of calls to the performance function (number of iterations + number of samples in the initial DoE), the estimated failure probability, the corresponding relative error and the coefficient of variation of the estimator. The results are given for the AK-AMIS method in terms of average and extrema over 100 applications of the algorithm, with the relative error considering the Monte Carlo estimation as a reference.

3.1 A four failure modes function

A well-known 2-D series system with four failure modes specifically designed to challenge methods based on location a single “design point”. The performance function is the following (Razaaly and Congedo 2018):

with \({x}_{1}\) and \({x}_{2}\) independent standard Gaussian random variables and \({k}_{1}\), \({k}_{2}\) parameters affecting the failure probability. Two cases are considered to observe how the algorithm performances scale with the failure probability; the first (\({k}_{1}=3\), \({k}_{2}=7\)) is associated with reasonable failure probability and the second (\({k}_{1}=5.5\), \({k}_{2}=11\)) induces extremely low failure probability). The first case has been extensively studied in the literature and the comparison with reference methods will be conducted on this case. The performances of the chosen reference methods have not been published for the second case and is therefore only used as an illustration of performance evolution of the proposed method.

Figure 3 illustrates how the surrogate approximate the limit-state on a case of multiple failure regions on both cases. It can be observed how the algorithm efficiently spends more iterations refining areas based on how significant they are for the overall failure probability. This behavior leads to observable inaccuracies in areas of low interest but represents a good compromise allowing the algorithm to quickly terminate while reaching the desired precision level.

Figure 4 illustrates the convergence of the algorithm with respect to the failure probability, the error-based criterion and the variance-base criterion. The modeling error seem to be the limiting criterion on this example, with the variance-based one being satisfied much earlier. These results can be related to the complicated shape of the limit-state and the fact that the failure probability is not very low. In addition, we can observe that both sampling strategies efficiently and quickly converge toward the correct failure probability. This figure also illustrates the necessity of using both of the proposed stopping criteria with the one considering only the covariance of the estimator leading to premature termination.

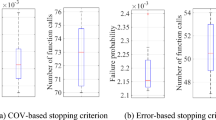

The results of AK-AMIS are given in Table 1 and compared with MCS, AK-MCS, MetaAK-IS2 and MetaAL-OIS. The variation of performances over the one hundred applications of the algorithm are further illustrated on Fig. 5. On that example, “design point” based methods such as AK-IS would fail at providing an accurate estimation of the failure probability, AK-MCS is therefore used as an alternative for reference. The proposed method converges significantly quicker than the selected references, with a reduction of about forty percent of the number of call compared to MetaAL-OIS. However, the precision of the estimation is also lower and interestingly reaches on average the error threshold (4%). The error amplitude for both cases in Table 1 and histogram on Fig. 5 show that some outliers exist in the performances with respectively ten and three applications terminating with an error above 10%. While the results illustrate how efficiently the algorithm converges, these outliers highlight that there is room for improvement with respect to the stopping criteria. The performance evolution between case one and two highlights how efficiently the method scales with decreasing failure probabilities with the number of calls being increased by a factor of less than two for a decrease in failure probability from an order \({10}^{-3}\) to \({10}^{-8}\).

3.2 The modified Rastrigin function

A standard 2-D analytical function with highly non-linear behavior known for involving non-convex and isolated failure domains. The shape of its limit-state makes this example particularly challenging. It is associated with a quite high failure probability (\({P}_{f}\sim 7\times {10}^{-2}\)). Considering the high probability of failure this case is not the kind of application AK-AMIS is designed for, allowing us to observe the limits of its applicability. The performance function reads as follows (Razaaly and Congedo 2018):

\({x}_{1}\) and \({x}_{2}\) are independent standard Gaussian random variables. Figure 6 illustrates that the algorithm successfully discovers and refine the calibration around the complex limit state. It is also worth noticing that the mean of the Gaussian components stayed quite close to the origin, suggesting that there is not a great added value from the population refinement scheme.

The limiting criterion in this application is the modeling error as illustrated in Fig. 7 illustrating a second time that relying solely on the covariance-based criterion would have led to a premature termination of the algorithm. The modeling error successfully converges in all applications of the method but the amplitude of variation is quite large and noisy even after a large number of iterations, which is probably linked to the complexity of the limit state geometry. Convergence of the other two parameters is overall steady but quite slow.

The results, given in Table 2 and illustrated in Fig. 8, show that the number of calls before convergence varies significantly between 297 and 562 with an average of 390. The error stays at satisfactory levels with a maximum of 8.14% and an average of 4.44. Compared with the reference methods the performances are mixed with slower convergence and higher error than MetaAL-OIS, but comparable performances as AK-MCS. This result is not particularly surprising since this application was designed to challenge the proposed method on cases it is not designed for. On this application there is very little benefit from the population refinement scheme since the limit-state is already well covered by a reasonable Monte Carlo population. Under those circumstances the proposed method simply behaves as AK-MCS and exhibit relatively similar performances.

3.3 Dynamic response of a non-linear oscillator

The last standard function is a dimension six problem with a relatively smooth limit-state and reasonable failure probability. The performance function corresponds to non-linear undamped single degree of freedom system an reads as (Razaaly and Congedo 2018):

with \({\omega }_{0}=\sqrt{\frac{{c}_{1}+{c}_{2}}{m}}\). The six input parameters are independent Gaussian random variables with parameters as detailed in Table 3. The random variables are not standard Gaussian; however, the problem is solved in the standard space. This example is not extremely challenging but allows us to compare the performances on a slightly higher dimension case.

The convergence plots in Fig. 9 illustrate how fast the algorithm converges to the failure probability in this example with a very narrow amplitude over all applications after only twenty iterations. On this example the limiting stopping criterion is the one related to the covariance of the estimator meaning that relying only on the error-based on would have led to termination of the algorithm before convergence of the failure probability. This result is the opposite of what was observed in the two last example highlighting the necessity of both criteria. The results in Table 4 and histograms in Fig. 10 highlight very good performances compared to the reference methods. It converges on average twice as fast as AK-MCS and MetaAL-OIS while providing very satisfactory accuracy with an average error of 2.8%. The histograms of performance metrics show that these results are quite consistent, event tough a few outliers exist with 2 of the 100 simulations reaching an error above 10%.

3.4 An industrial case with extremely rare failure event

The last example is an industrial example based on a finite-element model in dimension five and associated with an extremely rare failure event. The system is a low-alloy steel overpack for radioactive waste storage designed for the French deep geological repository project in collaboration with the French national radioactive waste management agency (Andra). The main characteristics of the system are discussed below, for more details the reader can refer to Persoons et al. (2021).

The overpack is the external part sealing the waste and isolating it from the environment during the first few centuries of storage. The part is near cylindrical and is stored in horizontal tunnels supported by a cylindrical steel lining as illustrated in Fig. 11. The overpack is subjected to uncertain and time-dependent corrosion and uncertain and time-dependent mechanical loading. Water can accumulate in the tunnel by diffusion through the rock environment, creating a time-dependent water level \({H}_{\mathrm{w}}\) in the tunnel and affecting the corrosion process. The mechanical loading is composed of a constant and deterministic external fluid pressure \({F}_{\mathrm{f}}\) and, after buckling of the liner, a contact pressure with second order pressure profile characterized by a time-dependent contact angle α.

Five uncertain parameters control the system, four describe the corrosion process and one additional for the mechanical loading:

-

\({C}_{\mathrm{a}}^{\mathrm{i}}\): initial stage (first century) atmospheric corrosion rate (μm/year).

-

\({C}_{\mathrm{a}}^{\mathrm{f}}\): long-term stabilized atmospheric corrosion rate (μm/year).

-

\({C}_{\mathrm{w}}^{\mathrm{f}}\): long term stabilized corrosion rate in water (μm/year).

-

\({C}_{\mathrm{lin}}\): corrosion rate at the outer surface of the liner (μm/year). This parameter is related to the buckling date of the liner and the start of the external mechanical contact pressure applied to the overpack.

-

\({P}_{\mathrm{c}}\): nominal contact pressure at the center of the pressure profile (MPa).

Each parameter is associated with independent lognormal distributions calibrated from expert’s judgement elicitation. The details about the distributions and their parameters are detailed in Table 5.

The overpack is considered as failing if it loses its sealing before 500 years either by plastic fracture or corrosion. The mechanical behavior of the system is studied through a finite element model with elastic–plastic behavior associated with a Gurson’s plastic degradation model (calibrated experimentally). The corrosion is simply considered as a time-dependent and non-homogeneous reduction of the thickness of the overpack. The continuous variation of the geometry though time is handled by iteratively solving static problems (one per century) corresponding to the state of the system at the given date.

Figure 12 illustrates the convergence of the method on this case. Due to the high computation time of the model, the method has only been applied 5 times which is why the convergence plots are much noisier than the previous examples. We can observe that the failure probability estimate clearly converged before termination of the algorithm. On that case the variance-based criterion is the limiting one, which is explained by the extremely low failure probability.

On the original study of the system AK-MCS was unable to estimate the failure probability because no-reasonably sized Monte Carlo population contained failing samples. The computation time associated with the model makes it impossible to apply classical simulation methods. The results are therefore only compared with FORM, see Table 6. It is worth mentioning that, in order to save computation time, the FORM analysis was initiated with a known failing sample, previously identified with the AK-AMIS method. The number of evaluations of the performance function therefore doesn’t account for the extra few iterations generally necessary to locate one when no information is available about the system.

We can observe that AK-AMIS provides a failure probability estimation compatible the FORM approximation for a reasonable computation cost (about 50% more evaluations than the search for the design point from FORM). The FORM estimation is smaller and very close (\(\sim 6\%\) difference) with those of AK-AMIS, which suggest that the limit-state is concave and quite linear around a unique design point.

From previous study of the system, we know that the failure is mainly driven by the corrosion process, and from observing the results in the 2D space \({C}_{\mathrm{a}}^{\mathrm{i}}\), \({C}_{\mathrm{w}}^{\mathrm{f}}\) we can see a rough estimation of the limit-state in the standard space (Fig. 13a) and in the physical space (Fig. 13b). The system exhibits two failure regions associated with a case of extremely fast early stage atmospheric corrosion and extremely fast late-stage corrosion under water. Both can be precisely located by solving an optimisation problem and their respective local failure probabilities can be estimated using FORM as \(2.96\times {10}^{-10}\) and \(4.12\times {10}^{-13}\). The figure illustrates that AK-AMIS managed to locate both failure modes by efficiently exploring the input space.

4 Conclusions and discussion

This article aims at describing a new adaptive Kriging method combined with adaptive importance sampling, reducing both the modeling and sampling errors dynamically. The solution developed with the AK-AMIS method is to adaptively build a near optimal auxiliary Gaussian mixture distribution simultaneously as enriching the DoE. To do so, AK-AMIS relies on a new scheme combining Adaptive Kriging with adaptive multiple importance sampling in which a sampling distribution is defined at each iteration and new samples are drawn from it before being added to the mixture population.

The proposed strategy exhibits very good performances especially on cases of low failure probability where it significantly outperforms comparable references methods, while still providing accurate and reasonably fast estimation on challenging cases it is not designed for. Two appealing results exhibited are first that even with a very sparse initial training dataset, the method is able to efficiently explore the input space and converge toward the correct failure probability. Second, the approach scales very efficiently with decreasing failure probabilities. Overall, the optimization-based sampling strategy performed better on the illustrated examples on rare failure events; efficiently exploring the input space and providing a comprehensible compromise between accuracy and computation time. The method is finally tested on an actual engineering case with extremely rare failure event, where it managed to efficiently explore the input space an locate two failure modes.

The proposed method could still benefit from improvements, the first main limitation being the cases of very high dimension, which is a well-known limitation of the Kriging models. For these cases, since the covariance function is based on Euclidean distance, the posterior standard deviation tends to be very high and roughly equal for all samples of the population. This result prevents the learning function from providing useful weights for the samples and in turn prevents the algorithm from efficiently converging toward the limit-state. Some methods exist in the literature to tackle this limitation the Kriging models such as additive Gaussian process regression models and are planned to be explored in future development. Second, the stopping criteria proposed are quite basic and, even though their combined usage provide satisfactory performances, they are not reliably correlated with the quantity they are intended to measure. More sophisticated error-based criteria have been explored and might provide improved robustness of the result.

References

Au S-K, Beck JL (2001) Estimation of small failure probabilities in high dimensions by subset simulation. Probab Eng Mech 16(4):263–277. https://doi.org/10.1016/S0266-8920(01)00019-4

Balesdent M, Morio J, Marzat J (2013) Kriging-based adaptive importance sampling algorithms for rare event estimation. Struct Saf 44(September):1–10. https://doi.org/10.1016/j.strusafe.2013.04.001

Bichon BJ, Eldred MS, Swiler LP, Mahadevan S, McFarland JM (2008) Efficient global reliability analysis for nonlinear implicit performance functions. AIAA J 46(10):2459–2468. https://doi.org/10.2514/1.34321

Cadini F, Santos F, Zio E (2014) An improved adaptive Kriging-based importance technique for sampling multiple failure regions of low probability. Reliab Eng Syst Saf 131(November):109–117. https://doi.org/10.1016/j.ress.2014.06.023

Cornuet J-M, Marin J-M, Mira A, Robert CP (2012) Adaptive multiple importance sampling. Scand J Stat 39(4):798–812. https://doi.org/10.1111/j.1467-9469.2011.00756.x

Cortes C, Vapnik V (1995) Support-vector networks. Mach Learn 20(3):273–297. https://doi.org/10.1007/BF00994018

Crestaux T, Le Maître O, Martinez J-M (2009) Polynomial chaos expansion for sensitivity analysis. Reliab Eng Syst Saf 94(7):1161–1172. https://doi.org/10.1016/j.ress.2008.10.008

Ditlevsen O, Olesen R, Mohr G (1986) Solution of a class of load combination problems by directional simulation. Struct Saf 4(2):95–109. https://doi.org/10.1016/0167-4730(86)90025-1

Dubourg V, Sudret B, Deheeger F (2013) Metamodel-based importance sampling for structural reliability analysis. Probab Eng Mech 33(July):47–57. https://doi.org/10.1016/j.probengmech.2013.02.002

Echard B, Gayton N, Lemaire M (2011) AK-MCS: an active learning reliability method combining Kriging and Monte Carlo simulation. Struct Saf 33(2):145–154. https://doi.org/10.1016/j.strusafe.2011.01.002

Echard B, Gayton N, Lemaire M, Relun N (2013) A combined importance sampling and Kriging reliability method for small failure probabilities with time-demanding numerical models. Reliab Eng Syst Saf 111(March):232–240. https://doi.org/10.1016/j.ress.2012.10.008

Elvira V, Martino L, Luengo D, Bugallo MF (2015) Efficient multiple importance sampling estimators. IEEE Signal Process Lett 22(10):1757–1761. https://doi.org/10.1109/LSP.2015.2432078

Elvira V, Martino L, Luengo D, Bugallo MF (2019) Generalized multiple importance sampling. Stat Sci 34(1):129–155. https://doi.org/10.1214/18-STS668

Hasofer AM, Lind NC (1974) Exact and invariant second-moment code format. J Eng Mech Div 100(1):111–121

Hurtado JE, Alvarez DA (2001) Neural-network-based reliability analysis: a comparative study. Comput Methods Appl Mech Eng 191(1–2):113–132. https://doi.org/10.1016/S0045-7825(01)00248-1

Lee I, Noh Y, Yoo D (2012) A novel second-order reliability method (SORM) using noncentral or generalized chi-squared distributions. J Mech Des 134(10):100912. https://doi.org/10.1115/1.4007391

Lemaire M (2013) Structural-reliability. Wiley, Hoboken

Liu F, Wei P, Zhou C, Yue Z (2020) Reliability and reliability sensitivity analysis of structure by combining adaptive linked importance sampling and Kriging reliability method. Chin J Aeronaut 33(4):1218–1227. https://doi.org/10.1016/j.cja.2019.12.032

Matheron G (1973) The intrinsic random functions and their applications. Appl Probab Trust 5(3):439–46831

Melchers RE (1990) Radial importance sampling for structural reliability. J Eng Mech 116(1):189–203. https://doi.org/10.1061/(ASCE)0733-9399(1990)116:1(189)

Morio J (2011) Non-parametric adaptive importance sampling for the probability estimation of a launcher impact position. Reliab Eng Syst Saf 96(1):178–183. https://doi.org/10.1016/j.ress.2010.08.006

Moustapha M, Marelli S, Sudret B (2021) A generalized framework for active learning reliability: survey and benchmark, June. ArXiv: 2106.01713 [Stat], http://arxiv.org/abs/2106.01713

Nie J, Ellingwood BR (2000) Directional methods for structural reliability analysis. Struct Saf 22(3):233–249. https://doi.org/10.1016/S0167-4730(00)00014-X

Owen A, Zhou Y (2000) Safe and effective importance sampling. J Am Stat Assoc 95(449):135–143

Persoons A, Serveaux J, Beaurepaire P, Labergere C, Chateauneuf A, Saanouni K, Bumbieler F (2021) “Reliability analysis of the ductile fracture of overpacks for high-level radioactive waste in repository conditions. ASCE–ASME J Risk Uncertain Eng Syst A 7(2):04021010. https://doi.org/10.1061/AJRUA6.0001121

Pradlwarter HJ, Pellissetti MF, Schenk CA, Schueller GI, Kreis A, Fransen S, Calvi A, Klein M (2005) Realistic and efficient reliability estimation for aerospace structures. Comput Methods Appl Mech Eng 194:1597–1617

Rackwitz R (2001) Reliability analysis—a review and some perspectives. Struct Saf 23(4):365–395. https://doi.org/10.1016/S0167-4730(02)00009-7

Razaaly N, Congedo PM (2018) Novel algorithm using active metamodel learning and importance sampling: application to multiple failure regions of low probability. J Comput Phys 368(September):92–114. https://doi.org/10.1016/j.jcp.2018.04.047

Sbert M, Havran V, Szirmay-Kalos L (2016) Variance analysis of multi-sample and one-sample multiple importance sampling. Comput Graph Forum 35(7):451–460. https://doi.org/10.1111/cgf.13042

Schuëller GI, Pradlwarter HJ, Koutsourelakis PS (2004) A critical appraisal of reliability estimation procedures for high dimensions. Probab Eng Mech 19(4):463–474. https://doi.org/10.1016/j.probengmech.2004.05.004

Song K, Zhang Y, Yu X, Song B (2019) A new sequential surrogate method for reliability analysis and its applications in engineering. IEEE Access 7:60555–60571. https://doi.org/10.1109/ACCESS.2019.2915350

Sudret B (2008) Global sensitivity analysis using polynomial chaos expansions. Reliab Eng Syst Saf 93(7):964–979. https://doi.org/10.1016/j.ress.2007.04.002

Sudret B (2012) Meta-models for structural reliability and uncertainty quantification. arXiv. http://arxiv.org/abs/1203.2062.

Teixeira R, Nogal M, O’Connor A (2021) Adaptive approaches in metamodel-based reliability analysis: a review. Struct Saf 89(March):102019. https://doi.org/10.1016/j.strusafe.2020.102019

Veach E, Guibas LJ (1995) Optimally combining sampling techniques for Monte Carlo rendering. In: Proceedings of the 22nd annual conference on computer graphics and interactive techniques—SIGGRAPH ’95. ACM Press, pp 419–428. https://doi.org/10.1145/218380.218498

Wang Z, Shafieezadeh A (2019) ESC: an efficient error-based stopping criterion for Kriging-based reliability analysis methods. Struct Multidisc Optim 59(5):1621–1637. https://doi.org/10.1007/s00158-018-2150-9

Wei P, Tang C, Yang Y (2019) Structural reliability and reliability sensitivity analysis of extremely rare failure events by combining sampling and surrogate model methods. Proc Inst Mech Eng O 233(6):943–957. https://doi.org/10.1177/1748006X19844666

Xiong B, Tan H (2018) A robust and efficient structural reliability method combining radial-based importance sampling and Kriging. Sci China Technol Sci 61(5):724–734. https://doi.org/10.1007/s11431-016-9068-1

Yang X, Liu Y, Fang X, Mi C (2018) Estimation of low failure probability based on active learning Kriging model with a concentric ring approaching strategy. Struct Multidisc Optim 58(3):1175–1186. https://doi.org/10.1007/s00158-018-1960-0

Yi J, Zhou Q, Cheng Y, Liu J (2020) Efficient adaptive Kriging-based reliability analysis combining new learning function and error-based stopping criterion. Struct Multidisc Optim 62(5):2517–2536. https://doi.org/10.1007/s00158-020-02622-3

Yun W, Lu Z, Jiang X (2018) An efficient reliability analysis method combining adaptive Kriging and modified importance sampling for small failure probability. Struct Multidisc Optim 58(4):1383–1393. https://doi.org/10.1007/s00158-018-1975-6

Zhang J, Taflanidis AA (2018) Adaptive Kriging stochastic sampling and density approximation and its application to rare-event estimation. ASCE–ASME J Risk Uncertain Eng Syst A 4(3):04018021. https://doi.org/10.1061/AJRUA6.0000969

Zhang X, Wang L, Sørensen JD (2020) AKOIS: an adaptive Kriging oriented importance sampling method for structural system reliability analysis. Struct Saf 82(January):101876. https://doi.org/10.1016/j.strusafe.2019.101876

Zhao H, Yue Z, Liu Y, Gao Z, Zhang Y (2015) An efficient reliability method combining adaptive importance sampling and Kriging metamodel. Appl Math Model 39(7):1853–1866. https://doi.org/10.1016/j.apm.2014.10.015

Acknowledgements

The authors gratefully acknowledge the support of the Research Foundation Flanders (FWO) under Grant GOC2218N (A. Persoons). In addition, we acknowledge the European Union’s Horizon 2020 Research and Innovation Program GREYDIENT under Grant Agreement n° 955393.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Replication of Results

The results related to applications 1–3 have been generated using the codes included as Supplementary Material. The results and model of application 4 are proprietary in nature, their availability is handled by Andra. Requests should be addressed to Dr. Bumbieler (frederic.bumbieler@ andra.fr).

Additional information

Responsible Editor: Zhen Hu

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Discussion of the p hyperparameter

As mentioned in Sect. 2.2 the choice of the p hyperparameter is at least partly arbitrary since it does not directly relate to an interpretable performance metric. In order to choose an adequate value one can only rely on empirical evidences. A good basis for such evidence is to study the sensitivity of the performances with respect to the parameter. The results of such a study are presented in this Appendix where the method is applied on three analytical examples (examples one and three from Sects. 3.1 and 3.2 and example four from Appendix 2) with varying values of p.

Thirty values of p are tested in the interval \(p\in [1, 6]\). For each, the method is applied ten times to observe the variation of performances (the same ten random number regenerator seeds are used for each value of p). The results are illustrated in Figs. 14 and 15 presenting respectively the mean and amplitude of number of calls to the performance function and relative error.

Average and extrema of the number of calls to the performance function as a function of the p hyperparameter for a example 1 (case 2), b example 3, and c example 4 (see) Appendix 2

Average and extrema of the failure probability error as a function of the p hyperparameter for a example 1 (case 2), b example 3, and c example 4 (see) Appendix 2

It can be observed from Fig. 14 that, in terms of computation time, there is a tendency for very high values of p to perform poorly, especially for the first two examples (a) and (b). For p between one and four no strong tendency is observable for the last two examples (b) and (c). For the first example (a) there seem to be an optimal value between \(p=3\) and \(p=4\).

In Fig. 15 it can be observed that for the first example (a) small values of p induce a higher variance of performance. In contrast, on the second example (b), the same tendency is observed for high values of p. No strong tendency can be observed from example three (c). From Fig. 15a the optimal value seem to be between \(p=3\) and \(p=5\), while for Fig. 15b the optimal value seem to be between \(p=1\) and \(p=3\)

From these results, the choice of \(p=3\) as proposed in Sect. 2.2 appears as a reasonable compromise providing satisfying performances and computation time on all tested examples.

Appendix 2: Additional performance comparison

In addition to the four examples presented in Sect. 3 the performances of the proposed approach are compared to two other relevant methods form the literature, i.e., AK-ALIS (Liu et al. 2020) and AKOIS (Zhang et al. 2020). The study is limited to the analytical example function presented in the respective articles and the reference results are reported as published. The performances of the proposed AK-AMIS method are studied as described in Sect. 3 and a hundred application have been performed on each application.

Overall the proposed AK-AMIS method performs very well on these four additional examples and reaches satisfying precision on all of them. AK-AMIS converges around 25% quicker than AK-ALIS while consistently staying below 2.4% error (Table 7). On examples six and seven AK-AMIS successfully converges but requires about 25% more evaluations of the performance function than AK-OIS (Tables 8 and 9). On example 8 (Table 10) AK-AMIS converges around 50% faster than AK-OIS while inducing a slightly higher error of about 2%.

Case 5: An analytical function from Liu et al. (2020),

Case 6: A bivariate example (Zhang et al. 2020),

Case 7: A polynomial function with multiple MPPs (Zhang et al. 2020),

Case 8: A polynomial function with multiple MPPs (Zhang et al. 2020).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Persoons, A., Wei, P., Broggi, M. et al. A new reliability method combining adaptive Kriging and active variance reduction using multiple importance sampling. Struct Multidisc Optim 66, 144 (2023). https://doi.org/10.1007/s00158-023-03598-6

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00158-023-03598-6