Abstract

Engineering computer codes are often computationally expensive. To lighten this load, we exploit new covariance kernels to replace computationally expensive codes with surrogate models. For input spaces with large dimensions, using the kriging model in the standard way is computationally expensive because a large covariance matrix must be inverted several times to estimate the parameters of the model. We address this issue herein by constructing a covariance kernel that depends on only a few parameters. The new kernel is constructed based on information obtained from the Partial Least Squares method. Promising results are obtained for numerical examples with up to 100 dimensions, and significant computational gain is obtained while maintaining sufficient accuracy.

1 Introduction and main contribution

In recent decades, as simulation models have striven to represent the true physics of phenomena more accurately, computational tools in engineering have become ever more complex and computationally expensive. Such models often involve a large number of input design variables, for example to describe the geometry. Analyzing the sensitivity of these input variables, or searching for the best point of a physical objective under certain physical constraints (i.e., global optimization), therefore requires a large number of simulation runs, which is impractical when each run is expensive. This is the main reason that surrogate modeling techniques have been growing in popularity in recent years. Surrogate models, also called metamodels, are vital in this context and are widely used as substitutes for time-consuming high-fidelity models. They are mathematical tools that approximate the simulation code from a few well-chosen evaluations, which form the design of experiments. The main role of surrogate models is to describe the underlying physics of the phenomena in question. Different types of surrogate models can be found in the literature, such as regression, smoothing splines (Wahba and Craven 1978; Wahba 1990), neural networks (Haykin 1998), radial basis functions (Buhmann 2003), and Gaussian-process modeling (Rasmussen and Williams 2006).

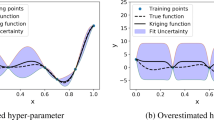

In this article, we focus on the kriging model because it provides an estimate of the prediction error. This model is also referred to as the Gaussian-process model (Rasmussen and Williams 2006) and was first presented in geostatistics (see, e.g., Cressie 1988 or Goovaerts 1997) before being extended to computer experiments and machine learning (Schonlau 1998; Sasena 2002; Jones et al. 1998; Picheny et al. 2010). The kriging model has become increasingly popular due to its flexibility in accurately imitating the dynamics of computationally expensive simulations and its ability to estimate the error of the predictor. However, it suffers from some well-known drawbacks in high dimension. First, the size of the covariance matrix of the kriging model may increase dramatically if the model requires a large number of sample points, and inverting this matrix is then computationally expensive. Second, estimating the hyper-parameters of the covariance matrix is itself an optimization subproblem that requires inverting the covariance matrix several times. Some recent works have addressed the drawbacks of high-dimensional Gaussian processes (Hensman et al. 2013; Damianou and Lawrence 2013; Durrande et al. 2012) or of the large-scale sampling of data (Sakata et al. 2004). One way to reduce CPU time when constructing a kriging model is to reduce the number of hyper-parameters, but this approach assumes that the kriging model exhibits the same dynamics in all directions (Mera 2007).

Thus, because estimating the kriging parameters can be time consuming, especially in dimensions as large as 100, we present herein a new method that combines the kriging model with the Partial Least Squares (PLS) technique to obtain a fast predictor. Like principal component analysis (PCA), the PLS technique reduces dimension, but it also reveals how the output depends on the inputs. PLS is used in this work because PCA only exposes dependencies between inputs. The information given by PLS is integrated into the covariance structure of the kriging model to reduce the number of hyper-parameters. The combination of kriging and PLS is abbreviated KPLS; it yields a fast kriging model because it requires fewer hyper-parameters in its covariance function, all without eliminating any input variables from the original problem. In general, the number of kriging hyper-parameters equals the number of dimensions; with our approach, it is reduced to at most 4 parameters.

The KPLS method has been applied to many academic and industrial verification cases, and promising results have been obtained for problems with up to 100 dimensions. The cases used in this paper do not exceed 100 input variables, which should be sufficient for most engineering problems. Problems with more than 100 inputs may lead to memory difficulties with the Scikit-learn toolbox (version 0.14), on which our KPLS implementation is based.

This paper is organized as follows: Section 2 summarizes the theoretical basis of the universal kriging model, recalling the key equations. The proposed KPLS model is then described in detail in Section 3 by using the kriging equations. Section 4 compares and analyzes the results of the KPLS model with those of the kriging model when applied to classic analytical examples and some complex engineering examples. Finally, Section 5 concludes and gives some perspectives.

2 Universal kriging model

To understand the mathematics of the proposed methods, we first review the kriging equations. The objective is to introduce the notation and to briefly describe the theory behind the kriging model. Assume that we have evaluated a costly deterministic function at n points $x^{(i)}$ (i = 1, …, n) with

$$x^{(i)} = \left[x_1^{(i)}, \dots, x_d^{(i)}\right] \in B \subset \mathbb{R}^d,$$

and we denote by X the matrix $[x^{(1)t}, \dots, x^{(n)t}]^t$. For simplicity, B is taken to be a hypercube, i.e., the Cartesian product of one interval per direction, $B = \prod_{j=1}^{d} [a_j, b_j]$, where $a_j, b_j \in \mathbb{R}$ with $a_j \le b_j$ for j = 1, …, d. Simulating these n inputs gives the outputs $y = [y^{(1)}, \dots, y^{(n)}]^t$ with $y^{(i)} = y(x^{(i)})$ for i = 1, …, n. We use $\hat{y}(x)$ to denote the prediction of the true function y(x), which is considered as a realization of a stochastic process Y(x) for all x ∈ B. For the universal kriging model (Roustant et al. 2012; Picheny et al. 2010), Y is written as

$$Y(x) = \sum_{j=1}^{m} \beta_j f_j(x) + Z(x),$$

where, for j = 1, …, m, the $f_j$ are known, linearly independent basis functions, the $\beta_j \in \mathbb{R}$ are unknown parameters, and Z is a zero-mean stochastic process with covariance function k, i.e., Z(x) ∼ (0, k), where k is stationary and is also called a covariance kernel. The kernel k can be written as

$$k(x, x') = \sigma^2 r(x, x') = \sigma^2 r_{xx'},$$

where σ² is the process variance and $r_{xx'}$ is the correlation function between x and x′. The correlation function r depends on hyper-parameters θ, which are assumed to be known for now. We also denote the n × 1 vector $r_{xX} = [r_{xx^{(1)}}, \dots, r_{xx^{(n)}}]^t$ and the n × n correlation matrix $R = [r_{x^{(1)}X}, \dots, r_{x^{(n)}X}]$.

2.1 Derivation of prediction formula

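Under the hypothesis above, the best linear unbiased predictor for y(x), given the observations y, is

$$\hat{y}(x) = f(x)^t \hat{\beta} + r_{xX}^t R^{-1} \left(y - F\hat{\beta}\right), \tag{3}$$

where $f(x) = [f_1(x), \dots, f_m(x)]^t$ is the m × 1 vector of basis functions, $F = [f(x^{(1)}), \dots, f(x^{(n)})]^t$ is the n × m matrix, and $\hat{\beta}$ is the vector of generalized least-squares estimates of $\beta = [\beta_1, \dots, \beta_m]^t$, which is given by

$$\hat{\beta} = \left(F^t R^{-1} F\right)^{-1} F^t R^{-1} y. \tag{4}$$

Moreover, the universal kriging model provides an estimate of the variance of the prediction, which is given by

$$s^2(x) = \hat{\sigma}^2 \left[1 - r_{xX}^t R^{-1} r_{xX} + u^t \left(F^t R^{-1} F\right)^{-1} u\right], \qquad u = F^t R^{-1} r_{xX} - f(x), \tag{5}$$

with

$$\hat{\sigma}^2 = \frac{1}{n} \left(y - F\hat{\beta}\right)^t R^{-1} \left(y - F\hat{\beta}\right). \tag{6}$$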
For more details of the derivation of the prediction formula, see, for instance, Sasena (2002) or Schonlau (1998). The theory of the proposed method is expressed in the same way as for the universal kriging model. The numerical examples in Section 4 use the ordinary kriging model, which is the special case of the universal model with f(x) = 1 (and m = 1). For the ordinary kriging model, (3), (4), and (6) are then replaced by the equations given in Appendix A.
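To make the ordinary kriging case (f(x) = 1) of (3), (5), and (6) concrete, the following NumPy sketch evaluates the predictor and its variance for a fixed, known θ with a Gaussian correlation. It is an illustration under our own assumptions, not the Scikit-learn implementation used in Section 4: the function names, the small nugget added to R for numerical stability, and the use of a Cholesky factorization instead of an explicit inverse are our choices.

```python
import numpy as np

def gaussian_corr(A, B, theta):
    """Gaussian correlation r(a, b) = prod_i exp(-theta_i (a_i - b_i)^2) between rows of A and B."""
    diff = A[:, None, :] - B[None, :, :]              # shape (len(A), len(B), d)
    return np.exp(-np.sum(theta * diff ** 2, axis=2))

def ordinary_kriging_predict(X, y, x_new, theta, nugget=1e-10):
    """Ordinary kriging (f(x) = 1) predictor and variance for a fixed, known theta."""
    n = X.shape[0]
    R = gaussian_corr(X, X, theta) + nugget * np.eye(n)  # nugget: our assumption, for conditioning
    L = np.linalg.cholesky(R)                             # R = L L^t, no explicit inverse

    def solve(b):
        """Return R^{-1} b using the Cholesky factor."""
        return np.linalg.solve(L.T, np.linalg.solve(L, b))

    ones = np.ones(n)
    Rinv_1 = solve(ones)
    beta = (ones @ solve(y)) / (ones @ Rinv_1)            # GLS estimate of the constant trend
    resid = y - beta
    Rinv_resid = solve(resid)
    sigma2 = resid @ Rinv_resid / n                       # ML estimate of the process variance

    r = gaussian_corr(np.atleast_2d(x_new), X, theta)     # shape (m, n); row j is r_{xX}^t
    Rinv_r = solve(r.T)                                   # shape (n, m)
    y_hat = beta + r @ Rinv_resid
    u = ones @ Rinv_r - 1.0                               # u = F^t R^{-1} r_{xX} - f(x) with F = 1
    s2 = sigma2 * (1.0 - np.sum(r.T * Rinv_r, axis=0) + u ** 2 / (ones @ Rinv_1))
    return y_hat, np.maximum(s2, 0.0)                     # clip tiny negative round-off
```

As expected from an interpolating model, the sketch returns (up to the nugget) the observed values and a near-zero variance at the training points, and a positive variance elsewhere.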

Note that the assumption of a known covariance with known hyper-parameters θ is unrealistic in practice. For this reason, the covariance function is typically chosen from among a parametric family of kernels. Table 12 in Appendix B gives some examples of typical stationary kernels. The number of hyper-parameters to be estimated is typically greater than (or equal to) the number of input variables. In the following, we use the Gaussian exponential kernel

$$k(x, x') = \sigma^2 \prod_{i=1}^{d} \exp\!\left[-\theta_i \left(x_i - x'_i\right)^2\right], \quad \theta_i \in \mathbb{R}^+.$$
By applying some elementary operations to existing kernels, we can construct new kernels. In this work, we use the property that the tensor product of covariances is a covariance kernel in the product space. More details are available in Rasmussen and Williams (2006), Durrande (2011), Bishop (2007), Liem and Martins (2014).
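The Gaussian kernel above is itself a tensor product of one-dimensional Gaussian kernels, one per coordinate. The NumPy sketch below (function names are ours, for illustration only) evaluates it both ways and checks on random points that the resulting Gram matrix is positive semi-definite, as any valid covariance kernel requires.

```python
import numpy as np

def gaussian_kernel(x, x_prime, theta, sigma2=1.0):
    """Anisotropic Gaussian kernel sigma^2 * exp(-sum_i theta_i (x_i - x'_i)^2)."""
    x, x_prime, theta = (np.asarray(v, dtype=float) for v in (x, x_prime, theta))
    return sigma2 * np.exp(-np.sum(theta * (x - x_prime) ** 2))

def tensor_product_kernel(x, x_prime, theta, sigma2=1.0):
    """Same kernel written as a tensor product of one-dimensional Gaussian kernels."""
    value = sigma2
    for xi, xpi, ti in zip(x, x_prime, theta):
        value *= np.exp(-ti * (xi - xpi) ** 2)   # 1D kernel acting on coordinate i only
    return value

# The Gram matrix of a valid covariance kernel is positive semi-definite.
rng = np.random.default_rng(0)
points = rng.uniform(-1.0, 1.0, size=(8, 3))
theta = np.array([1.0, 2.0, 0.5])
gram = np.array([[gaussian_kernel(a, b, theta) for b in points] for a in points])
```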

2.2 Estimation of hyper-parameters 𝜃

The key point of the kriging approximation is how it estimates the hyper-parameters 𝜃, so its main steps are recalled here, along with some mathematical details.

One of the major challenges when building a kriging model is the complexity and difficulty of estimating the hyper-parameters θ, in particular for problems with many dimensions or a large number of sampling points. In fact, making a kriging prediction with (3) requires inverting an n × n matrix, which typically costs $\mathcal{O}(n^3)$ operations, where n is the number of sampling points (Braham et al. 2014). The hyper-parameters are estimated from the observations by maximum likelihood (ML) or cross-validation (CV). Bachoc (2013) compared the ML and CV techniques and concluded that, in most of the cases studied, the CV variance is larger. The ML method is widely used to estimate the hyper-parameters θ, and it is also used in this paper. In practice, the following log-likelihood is often used:

$$\ln L(\beta, \sigma^2, \theta) = -\frac{1}{2}\left[n \ln\left(2\pi\sigma^2\right) + \ln\det R(\theta) + \frac{1}{\sigma^2}\left(y - F\beta\right)^t R(\theta)^{-1}\left(y - F\beta\right)\right]. \tag{7}$$

Inserting $\hat{\beta}$ and $\hat{\sigma}^2$ given by (4) and (6), respectively, into (7), we get the following so-called concentrated likelihood function, which depends only on the hyper-parameters θ:

$$\begin{aligned} \ln L(\theta) &= -\frac{1}{2}\left[n \ln\left(2\pi\hat{\sigma}^2(\theta)\right) + \ln\det R(\theta) + n\right] \\ &= -\frac{n}{2} \ln \hat{\sigma}^2(\theta) - \frac{1}{2} \ln\det R + \text{const}. \end{aligned} \tag{8}$$
To facilitate reading, R(𝜃) has been replaced by R in the last line of (8).

Maximizing (8) is very computationally expensive in high dimensions and with a large number of sample points because the (n × n) matrix R(θ) in (8) must be inverted at every evaluation of the likelihood. The maximization problem is often solved using genetic algorithms (see Forrester and Sobester 2008 for more details). In this work, we use the derivative-free optimization algorithm COBYLA developed by Powell (1994). COBYLA is a sequential trust-region algorithm that uses linear approximations of the objective and constraint functions.
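As an illustration of what the optimizer repeatedly evaluates, the sketch below computes the concentrated log-likelihood (8) for ordinary kriging with a Gaussian correlation, dropping the additive constant. It is a minimal sketch under our own assumptions: θ is parameterized in log10 scale to keep it positive, a small nugget stabilizes the Cholesky factorization, and $\ln\det R$ is read off the diagonal of the Cholesky factor.

```python
import numpy as np

def concentrated_log_likelihood(log10_theta, X, y, nugget=1e-10):
    """Concentrated log-likelihood of ordinary kriging, up to an additive constant:
    -(n/2) ln(sigma2_hat) - (1/2) ln det R, with a Gaussian correlation."""
    theta = 10.0 ** np.asarray(log10_theta, dtype=float)   # log scale keeps theta > 0
    n = X.shape[0]
    diff = X[:, None, :] - X[None, :, :]
    R = np.exp(-np.sum(theta * diff ** 2, axis=2)) + nugget * np.eye(n)
    try:
        L = np.linalg.cholesky(R)                          # one O(n^3) factorization per call
    except np.linalg.LinAlgError:
        return -np.inf                                     # R numerically not positive definite

    def solve(b):
        return np.linalg.solve(L.T, np.linalg.solve(L, b))

    ones = np.ones(n)
    beta = (ones @ solve(y)) / (ones @ solve(ones))        # (4) with F = 1
    resid = y - beta
    sigma2 = resid @ solve(resid) / n                      # (6)
    log_det_R = 2.0 * np.sum(np.log(np.diag(L)))           # ln det R from the Cholesky factor
    return -0.5 * (n * np.log(sigma2) + log_det_R)
```

Since COBYLA minimizes, one could, for instance, pass `lambda t: -concentrated_log_likelihood(t, X, y)` to `scipy.optimize.minimize(..., method="COBYLA")`; this pairing is our suggestion and is not the toolbox setup used in Section 4.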

Figure 1 recalls the principal stages of building a kriging model, and each step is briefly outlined below:

1. The user must provide the initial design of experiments (X, y) and the parametric family of the covariance function k.

2. To derive the prediction formula, the kriging algorithm assumes that all parameters of k are known.

3. Under the hypothesis of the kriging algorithm, we estimate hyper-parameters θ from the concentrated likelihood function given by (8) and by using the COBYLA algorithm.

4. Finally, we calculate the prediction (3) and the associated estimation error (5) after estimating all hyper-parameters of the kriging model.

3 Kriging model combined with Partial Least Squares

As explained above, maximizing the concentrated likelihood (8) can be time consuming when the number of covariance parameters is large, which typically occurs in high dimension. Solving this problem can be accelerated by combining the PLS method and the kriging model. The θ parameters of the kriging model represent the range in each spatial direction. If, for instance, certain input variables are less significant for the response, then the corresponding $\theta_i$ (i = 1, …, d) will be very small compared with the other θ parameters. The PLS method is a well-known tool for high-dimensional problems; it maximizes the variance by projecting onto a lower-dimensional space while monitoring the correlation between the input variables and the output variable. In this way, the PLS method reveals the contribution of all variables, and the idea is to use this information to scale the θ parameters.

In this section we propose a new method that can be used to build an efficient kriging model by using the information extracted from the PLS stage. The main steps for this construction are as follows:

1. Use PLS to define weight parameters.

2. To reduce the number of hyper-parameters, define a new covariance kernel by using the PLS weights.

3. Optimize the parameters.

The key mathematical details of this construction are explained in the following.

3.1 Linear transformation of covariance kernels

Let x be a vector in the hypercube B. We define the linear map

$$F: B \to B', \quad x \mapsto \left[\alpha_1 x_1, \dots, \alpha_d x_d\right],$$

where $\alpha_1, \dots, \alpha_d \in \mathbb{R}$ and B′ is a hypercube included in $\mathbb{R}^d$ (B′ can be different from B). Let k be an isotropic covariance kernel with $k: B' \times B' \to \mathbb{R}$. Since k is isotropic, the covariance kernel $k(F(\cdot), F(\cdot))$ depends on a single parameter, which must be estimated. If $\alpha_1, \dots, \alpha_d$ are well chosen, $k(F(\cdot), F(\cdot))$ can therefore be used in place of an anisotropic kernel with d parameters: the linear transformation F allows us to approach the isotropic case (Zimmerman and Homer 1991). In the present work, we choose $\alpha_1, \dots, \alpha_d$ based on information extracted from the PLS technique.
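For the Gaussian kernel, this effect of F can be checked numerically: applying an isotropic kernel with one parameter θ to the rescaled inputs F(x) gives exactly an anisotropic kernel with per-direction parameters $\theta \alpha_i^2$. In the sketch below, the coefficients `alpha` and the points are arbitrary hypothetical values chosen for illustration.

```python
import numpy as np

def isotropic_gaussian(u, v, theta):
    """Isotropic Gaussian kernel: a single range parameter theta for all directions."""
    return np.exp(-theta * np.sum((np.asarray(u) - np.asarray(v)) ** 2))

def anisotropic_gaussian(u, v, thetas):
    """Anisotropic Gaussian kernel: one range parameter per direction."""
    return np.exp(-np.sum(np.asarray(thetas) * (np.asarray(u) - np.asarray(v)) ** 2))

alpha = np.array([2.0, 0.5, 0.1])     # hypothetical scaling coefficients alpha_i
theta = 0.7                            # the single parameter left to estimate
x, xp = np.array([0.3, -1.2, 4.0]), np.array([0.1, 0.4, -2.0])

lhs = isotropic_gaussian(alpha * x, alpha * xp, theta)   # k(F(x), F(x'))
rhs = anisotropic_gaussian(x, xp, theta * alpha ** 2)    # equivalent per-direction parameters
```

The design choice is visible here: only θ has to be optimized, while the relative importance of each direction is carried by the fixed coefficients $\alpha_i$.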

3.2 Partial Least Squares

The PLS method is a statistical method that finds a linear relationship between input variables and the output variable by projecting input variables onto a new space formed by newly chosen variables, called principal components (or latent variables), which are linear combinations of the input variables. This approach is particularly useful when the original data are characterized by a large number of highly collinear variables measured on a small number of samples. Below, we briefly describe how the method works. For now, suffice it to say that only the weighting coefficients are central to understanding the new KPLS approach. For more details on the PLS method, please see Helland (1988), Frank and Friedman (1993), Alberto and González (2012).

The PLS method is designed to search out the best multidimensional direction in X space that explains the characteristics of the output y. After centering and scaling the (n × d)-sample matrix X and the response vector y, the first principal component $t^{(1)}$ is computed by seeking the best direction $w^{(1)}$ that maximizes the squared covariance between $t^{(1)} = X w^{(1)}$ and y:

$$w^{(1)} = \underset{w}{\arg\max}\; w^t X^t y\, y^t X w \quad \text{such that} \quad w^t w = 1. \tag{10}$$

The optimization problem (10) is maximized when $w^{(1)}$ is the eigenvector of the matrix $X^t y y^t X$ corresponding to the eigenvalue with the largest absolute value; the vector $w^{(1)}$ contains the X weights of the first component. The largest eigenvalue of problem (10) can be estimated iteratively, e.g., by power iteration (see Lanczos 1950).
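A short NumPy sketch of (10) for a single output: it builds $X^t y y^t X$ from centered and scaled data and takes the eigenvector of largest absolute eigenvalue with `numpy.linalg.eigh`, a dense eigensolver that we swap in here for the power iteration mentioned above. Because this matrix has rank one for a single output, that eigenvector is simply $X^t y$ normalized, which the sketch also returns for comparison. The function name is ours.

```python
import numpy as np

def first_pls_weight(X, y):
    """First PLS weight vector w^(1) for a single output, from the eigen-decomposition
    of X^t y y^t X after centering and scaling; also returns X^t y normalized."""
    Xs = (X - X.mean(axis=0)) / X.std(axis=0)
    ys = (y - y.mean()) / y.std()
    M = Xs.T @ np.outer(ys, ys) @ Xs             # d x d, symmetric, rank one
    eigvals, eigvecs = np.linalg.eigh(M)          # eigenvalues in ascending order
    w = eigvecs[:, np.argmax(np.abs(eigvals))]
    v = Xs.T @ ys                                 # closed form for one output
    if w @ v < 0:                                 # eigenvectors are defined up to sign
        w = -w
    return w, v / np.linalg.norm(v)
```

On synthetic data where y depends mostly on $x_1$, the largest entry of $w^{(1)}$ in absolute value corresponds to $x_1$, which is the kind of importance information the KPLS kernel reuses.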

Next, the residuals of $X = X^{(0)}$ and $y = y^{(0)}$ are computed; they are denoted $X^{(1)}$ and $y^{(1)}$, respectively:

$$\begin{aligned} X^{(1)} &= X - t^{(1)} p^{(1)}, \\ y^{(1)} &= y - c_1 t^{(1)}, \end{aligned} \tag{11}$$

where $p^{(1)}$ (a 1 × d vector) contains the regression coefficients of the local regression of X onto the first principal component $t^{(1)}$, and $c_1$ is the regression coefficient of the local regression of y onto $t^{(1)}$. System (11) is thus the local regression of X and y onto the first principal component.

Next, the second principal component, which is orthogonal to the first, can be computed sequentially by replacing X by $X^{(1)}$ and y by $y^{(1)}$ in problem (10). The same approach is used to iteratively compute the other principal components. To illustrate this process, a simple three-dimensional (3D) example with two principal components is given in Fig. 2. In the following, we use h to denote the number of principal components retained.

The principal components represent the new coordinate system obtained by rotating the original system with axes $x_1, \dots, x_d$ (Alberto and González 2012). For l = 1, …, h, $t^{(l)}$ can be written as

$$t^{(l)} = X^{(l-1)} w^{(l)} = X w_*^{(l)}.$$

This important relationship is used for coding the method. The matrix $W_* = [w_*^{(1)}, \dots, w_*^{(h)}]$ is given by (Manne 1987)

$$W_* = W \left(P^t W\right)^{-1},$$

where $W = [w^{(1)}, \dots, w^{(h)}]$ and $P = [p^{(1)t}, \dots, p^{(h)t}]$.

The vector $w^{(l)}$ corresponds to the principal direction in X space that maximizes $w^t X^{(l-1)t} y^{(l-1)} y^{(l-1)t} X^{(l-1)} w$, i.e., it is the leading eigenvector of $X^{(l-1)t} y^{(l-1)} y^{(l-1)t} X^{(l-1)}$. If h = d, the matrix $W_* = [w_*^{(1)}, \dots, w_*^{(d)}]$ rotates the coordinate space $(x_1, \dots, x_d)$ to the new coordinate space $(t^{(1)}, \dots, t^{(d)})$, which follows the principal directions $w^{(1)}, \dots, w^{(d)}$.
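The sequential construction, deflation (11), and the rotation $W_*$ can be sketched together for a single output as follows. This is a NIPALS-style illustration written for this text (the function name and the assumption that X and y are already centered and scaled are ours); it checks that the components computed from the deflated matrices coincide with $X W_*$ and are mutually orthogonal.

```python
import numpy as np

def pls1(X, y, h):
    """Sequential PLS for one output (X, y assumed already centered and scaled).
    Returns the weights W, loadings P, rotation W_* = W (P^t W)^{-1}, and scores T."""
    n, d = X.shape
    Xl, yl = X.copy(), y.copy()
    W, P, T = np.zeros((d, h)), np.zeros((d, h)), np.zeros((n, h))
    for l in range(h):
        w = Xl.T @ yl
        w /= np.linalg.norm(w)            # w^(l): leading eigenvector of Xl^t yl yl^t Xl
        t = Xl @ w                        # principal component t^(l)
        p = Xl.T @ t / (t @ t)            # p^(l): regression of Xl onto t^(l)
        c = (yl @ t) / (t @ t)            # c_l: regression of yl onto t^(l)
        Xl = Xl - np.outer(t, p)          # deflation as in system (11)
        yl = yl - c * t
        W[:, l], P[:, l], T[:, l] = w, p, t
    W_star = W @ np.linalg.inv(P.T @ W)   # rotation back to the original coordinates
    return W, P, W_star, T
```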

As mentioned in the introduction, the PLS method is chosen instead of the PCA method because the PLS method shows how the output variable depends on the input variables, whereas the PCA method focuses only on how the input variables depend on each other. In fact, the hyper-parameters 𝜃 for the kriging model depend on how each input variable affects the output variable.

3.3 Construction of new kernels for KPLS models

Let B be a hypercube included in $\mathbb{R}^d$. As seen in the previous section, the vector $w_*^{(1)}$ is used to build the first principal component $t^{(1)} = X w_*^{(1)}$, whose covariance with y is maximized. The scalars $w_{*1}^{(1)}, \dots, w_{*d}^{(1)}$ can then be interpreted as measuring the importance of $x_1, \dots, x_d$, respectively, for constructing this first principal component. Likewise, the hyper-parameters $\theta_1, \dots, \theta_d$ (see Table 12 in Appendix B) can be interpreted as measuring how strongly the variables $x_1, \dots, x_d$, respectively, affect the output y. Thus, we define a new kernel $k_{kpls1}: B \times B \to \mathbb{R}$ given by $k_1(F_1(\cdot), F_1(\cdot))$, with $k_1: B \times B \to \mathbb{R}$ being an isotropic stationary kernel and

$$F_1: B \to B, \quad x \mapsto \left[w_{*1}^{(1)} x_1, \dots, w_{*d}^{(1)} x_d\right].$$

$F_1$ maps B to B because it only works in the new coordinate system obtained by rotating the original coordinate axes $x_1, \dots, x_d$. Through the first component $t^{(1)}$, the elements of the vector $w_*^{(1)}$ reflect how y depends on x. However, this information is generally insufficient, so the elements of $w_*^{(1)}$ are supplemented by the information given by the other principal components $t^{(2)}, \dots, t^{(h)}$. Thus, we build a new kernel $k_{kpls1:h}$ sequentially by taking the tensor product of all kernels $k_{kplsl}$, which accounts for all this information in a single covariance kernel:

$$k_{kpls1:h}(x, x') = \prod_{l=1}^{h} k_l\left(F_l(x), F_l(x')\right), \tag{14}$$

with $k_l: B \times B \to \mathbb{R}$ and

$$F_l: B \to B, \quad x \mapsto \left[w_{*1}^{(l)} x_1, \dots, w_{*d}^{(l)} x_d\right].$$

Applying this approach to the Gaussian kernel, we get

$$k_{kpls1:h}(x, x') = \sigma^2 \prod_{l=1}^{h} \prod_{i=1}^{d} \exp\!\left[-\theta_l \left(w_{*i}^{(l)} x_i - w_{*i}^{(l)} x'_i\right)^2\right], \quad \theta_l \in \mathbb{R}^+.$$

Table 13 in Appendix B presents new KPLS kernels based on the examples of Table 12 (also in Appendix B); they contain h hyper-parameters instead of d, i.e., far fewer because h ≪ d. The number of principal components is fixed by the following leave-one-out cross-validation method:

(i) We build KPLS models based on h = 1, then h = 2, … principal components.

(ii) We choose the number of components that minimizes the leave-one-out cross-validation error.
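The two-step selection of h can be sketched as a brute-force leave-one-out loop. Here `fit_predict(X_train, y_train, X_test, h)` is a hypothetical user-supplied callable standing in for "fit a KPLS model with h components and predict"; the sketch does not assume any particular KPLS implementation and uses the mean squared leave-one-out error as the criterion, which is our choice.

```python
import numpy as np

def choose_n_components(X, y, fit_predict, h_max):
    """Return the h in 1..h_max that minimizes the leave-one-out mean squared error,
    together with the list of errors. `fit_predict` is a hypothetical callable."""
    n = X.shape[0]
    errors = []
    for h in range(1, h_max + 1):
        sq_err = 0.0
        for i in range(n):
            mask = np.arange(n) != i                        # leave point i out
            pred = fit_predict(X[mask], y[mask], X[i:i + 1], h)
            sq_err += float((pred[0] - y[i]) ** 2)
        errors.append(sq_err / n)
    best_h = int(np.argmin(errors)) + 1                     # components are counted from 1
    return best_h, errors
```

Each candidate h costs n refits, so in practice a closed-form leave-one-out formula for kriging may be preferable; the loop above simply makes the criterion explicit.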

The flowchart given in Fig. 3 shows how information flows through the algorithm, from the sample data through the PLS algorithm and the kriging hyper-parameters to the final predictor. With the same definitions and equations, almost all the steps for constructing the KPLS model are similar to the original steps for constructing the ordinary kriging model. The exception is the third step, which is highlighted in the solid-red box in Fig. 3. This step uses the PLS algorithm to define the new parametric kernel $k_{kpls1:h}$ as follows:

a. initialize the PLS algorithm with l = 1;

b. if l ≠ 1, compute the residuals $X^{(l-1)}$ and $y^{(l-1)}$ by using system (11);

c. compute the X weights for iteration l;

d. define a new kernel $k_{kpls1:h}$ by using (14);

e. if the number of iterations is reached, return to step 3, otherwise continue;

f. update the data, setting l = l + 1.
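With Gaussian kernels $k_l$, step d amounts to evaluating the Gaussian KPLS kernel between two sets of points. The sketch below is our own illustration: it assumes the matrix `W_star` (shape d × h) has already been produced by the PLS iterations, and takes one θ per principal component. In the usage check, `W_star` is a random placeholder rather than a real PLS output.

```python
import numpy as np

def kpls_gaussian_kernel(A, B, W_star, theta, sigma2=1.0):
    """Gaussian KPLS kernel between the rows of A and B:
    sigma^2 * prod_l prod_i exp(-theta_l * (w*_i^(l) * (a_i - b_i))^2).
    W_star has shape (d, h); theta holds one value per principal component."""
    diff2 = (A[:, None, :] - B[None, :, :]) ** 2            # (nA, nB, d)
    K = np.zeros(diff2.shape[:2])
    for l in range(W_star.shape[1]):
        # sum over i of (w*_i^(l))^2 (a_i - b_i)^2, scaled by theta_l
        K += theta[l] * (diff2 @ W_star[:, l] ** 2)
    return sigma2 * np.exp(-K)
```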

Note that if the kernels $k_l$ are separable, the new kernel given by (14) is also separable. In particular, if all kernels $k_l$ are of the exponential type (e.g., all Gaussian exponentials), the new kernel given by (14) will be of the same type as $k_l$. The proof is given in Appendix C.
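For Gaussian kernels $k_l$, the claim can be checked directly by regrouping the exponents, using $\left(w_{*i}^{(l)} x_i - w_{*i}^{(l)} x'_i\right)^2 = \left(w_{*i}^{(l)}\right)^2 \left(x_i - x'_i\right)^2$. The short derivation below is our own illustration and is not a reproduction of Appendix C.

```latex
\begin{aligned}
k_{kpls1:h}(x, x')
&= \sigma^2 \prod_{l=1}^{h} \prod_{i=1}^{d}
   \exp\!\left[-\theta_l \left(w_{*i}^{(l)}\right)^2 \left(x_i - x'_i\right)^2\right] \\
&= \sigma^2 \prod_{i=1}^{d}
   \exp\!\left[-\left(\sum_{l=1}^{h} \theta_l \left(w_{*i}^{(l)}\right)^2\right) \left(x_i - x'_i\right)^2\right] \\
&= \sigma^2 \prod_{i=1}^{d} \exp\!\left[-\eta_i \left(x_i - x'_i\right)^2\right],
\qquad \eta_i = \sum_{l=1}^{h} \theta_l \left(w_{*i}^{(l)}\right)^2 .
\end{aligned}
```

The result is an ordinary anisotropic Gaussian kernel whose d range parameters $\eta_i$ are determined by only h free hyper-parameters $\theta_l$, weighted by the PLS coefficients.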

4 Numerical examples

We now present a few analytical and engineering examples to verify the proper functioning of the proposed method. The ordinary kriging model with a Gaussian kernel provides the benchmark against which the results of the proposed combined approach are compared. The Python toolbox Scikit-learn v0.14 (Pedregosa et al. 2011) is used to implement these numerical tests; this toolbox provides the ordinary kriging model and the estimation of its hyper-parameters. The computations were done on an Intel® Celeron® CPU 900 2.20 GHz desktop PC. For the proposed method, we combined an ordinary kriging model with a Gaussian kernel with the PLS method, using one to three principal components.

4.1 Analytical examples

We use two academic functions and vary the characteristics of these test problems to cover most of the difficulties faced in the field of surrogate models. The first function is g07 (Michalewicz and Schoenauer 1996) with 10 dimensions, which is close to what is required by industry in terms of dimensions:

$$y_{g07}(x) = x_1^2 + x_2^2 + x_1 x_2 - 14x_1 - 16x_2 + (x_3 - 10)^2 + 4(x_4 - 5)^2 + (x_5 - 3)^2 + 2(x_6 - 1)^2 + 5x_7^2 + 7(x_8 - 11)^2 + 2(x_9 - 10)^2 + (x_{10} - 7)^2 + 45, \quad -10 \le x_i \le 10.$$

For this function, we use experiments based on a Latin hypercube design with 100 data points to fit models.
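A vectorized NumPy version of the g07 objective, written for illustration (the function name is ours). Only the objective is needed as a test function here; the constraints of the original g07 benchmark are ignored. As a sanity check, the objective at the best-known constrained optimum of g07 is approximately 24.306.

```python
import numpy as np

def g07(x):
    """g07 objective (Michalewicz and Schoenauer 1996); x has shape (..., 10), x_i in [-10, 10]."""
    x = np.asarray(x, dtype=float)
    x1, x2, x3, x4, x5, x6, x7, x8, x9, x10 = np.moveaxis(x, -1, 0)  # split the last axis
    return (x1 ** 2 + x2 ** 2 + x1 * x2 - 14.0 * x1 - 16.0 * x2
            + (x3 - 10.0) ** 2 + 4.0 * (x4 - 5.0) ** 2 + (x5 - 3.0) ** 2
            + 2.0 * (x6 - 1.0) ** 2 + 5.0 * x7 ** 2 + 7.0 * (x8 - 11.0) ** 2
            + 2.0 * (x9 - 10.0) ** 2 + (x10 - 7.0) ** 2 + 45.0)
```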

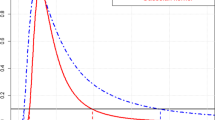

The second function is the Griewank function (Regis and Shoemaker 2013), which is used because of its complexity, as illustrated in Fig. 4 for the two-dimensional (2D) case. The function is

$$y_{Griewank}(x) = \sum_{i=1}^{d} \frac{x_i^2}{4000} - \prod_{i=1}^{d} \cos\!\left(\frac{x_i}{\sqrt{i}}\right) + 1.$$

Two types of experiments are done with this function. The first is defined over the interval [−600, 600] and has varying dimensions (2, 5, 7, 10, 20, 60). This experiment serves to verify the effectiveness of the proposed approach in both low and high dimensions. It is based on the Latin hypercube design and uses n data points to fit models, as listed in Table 1.
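The Griewank function can be written in NumPy as below (an illustrative sketch; the function name is ours). It accepts a single point or a batch of points as rows and returns one value per row. Its global minimum is 0 at the origin, and it is nonnegative everywhere because the cosine product never exceeds 1.

```python
import numpy as np

def griewank(x):
    """Griewank function evaluated on each row of x (a single point is treated as one row)."""
    x = np.atleast_2d(np.asarray(x, dtype=float))
    i = np.arange(1, x.shape[1] + 1)                 # indices 1..d used in sqrt(i)
    return np.sum(x ** 2, axis=1) / 4000.0 - np.prod(np.cos(x / np.sqrt(i)), axis=1) + 1.0
```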

The second type of experiment is defined over the interval [−5, 5], where the Griewank function is more complex than for the first type of experiment (cf. Figs. 4 and 5). Over this reduced interval, experiments are done with 20 and 60 dimensions (20D and 60D) and with 50, 100, 200, and 300 sampling points. To analyze the robustness of the method, ten experiments, each with a different Latin hypercube design, are used for this case.
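The ten different designs can be obtained by seeding a random Latin hypercube sampler differently for each experiment. The sketch below is a basic random Latin hypercube written for illustration (the paper does not specify its sampler; names and seeds are our assumptions): each axis is cut into n equal strata, one point is drawn in each stratum, and the strata are shuffled independently per axis.

```python
import numpy as np

def latin_hypercube(n, d, lower, upper, seed=None):
    """Random Latin hypercube design with n points in [lower, upper]^d:
    each axis is cut into n equal strata and each stratum holds exactly one point."""
    rng = np.random.default_rng(seed)
    u = (np.arange(n)[:, None] + rng.random((n, d))) / n   # one random point per stratum
    for j in range(d):
        u[:, j] = u[rng.permutation(n), j]                 # shuffle strata independently per axis
    return lower + (upper - lower) * u

# Ten independent 50-point designs in [-5, 5]^20, one per seed.
designs = [latin_hypercube(50, 20, -5.0, 5.0, seed=s) for s in range(10)]
```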

To compare the models on the three test cases (i.e., the g07 function and the Griewank function over the intervals [−600, 600] and [−5, 5]), 5000 random points are computed and the results are stored in a database. The following relative error is used to compare the performance of the ordinary kriging model with the KPLS model:

$$RE = \frac{\|\hat{Y} - Y\|_2}{\|Y\|_2} \times 100, \tag{16}$$

where $\|\cdot\|_2$ represents the usual $L^2$ norm, and $\hat{Y}$ and $Y$ are the vectors containing the predicted and the true values at the random points, respectively. The CPU time required to fit the models is also reported ("h" refers to hours, "min" to minutes, and "s" to seconds).
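The relative error (16), expressed in percent, is a one-liner in NumPy; the helper name below is ours.

```python
import numpy as np

def relative_error(y_pred, y_true):
    """Relative error RE = 100 * ||y_pred - y_true||_2 / ||y_true||_2, in percent."""
    return 100.0 * np.linalg.norm(y_pred - y_true) / np.linalg.norm(y_true)
```

For example, predictions that are uniformly 10 % too large give RE = 10.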

4.1.1 Comparison with g07 function

The results listed in Table 2 show that the proposed KPLS surrogate model is more accurate than the ordinary kriging model when more than one component is used. Using just one component gives almost the same accuracy as the ordinary kriging model. In this case, only a single θ hyper-parameter of the spatial correlation needs to be estimated, compared with ten θ hyper-parameters for the ordinary kriging model. Increasing the number of components improves the accuracy of the KPLS surrogate model. Although the PLS method treats only linear relationships between input and output variables, this example shows that the KPLS model can treat nonlinear problems. This result is not contradictory because (23) shows that the KPLS model is equivalent to a kriging model with specific hyper-parameters.

4.1.2 Comparison with complex Griewank function over interval [−600, 600]

Table 3 compares the ordinary kriging model and the KPLS model in two dimensions.

If two components are used for the KPLS model, we expect the two approaches to give the same accuracy and time cost because, with a Gaussian kernel, the two models differ only by a transformation of the coordinates of the search space (the space in which the θ hyper-parameters lie). In this case, the KPLS model with only one component degrades the accuracy of the solution.

Tables 4, 5, and 6, show the results for 5, 7, and 10 dimensions, respectively.

Varying the number of principal components does not significantly affect the accuracy of the model. The CPU time is reduced whenever the KPLS model is used: increasing the number of principal components increases the CPU time for constructing the KPLS model, but it remains lower than for ordinary kriging. For these three examples, the combined approach with only one PLS component offers sufficient accuracy with a CPU time reduced 35-fold for 10 dimensions (i.e., 21 s for the ordinary kriging model and 0.6 s for the combined model).

In the 20-dimension (20D) example (Table 7), using KPLS with only one principal component leads to a poor relative error (10.15 %) compared with other models. In this case, two principal components are required to build the combined model. The CPU time remains less than that for the ordinary kriging model (11.7 s vs 107 s).

The results in Table 8 for the KPLS model with 60 dimensions (60D) show that this model is faster than the ordinary kriging model. Compared with the kriging model, the CPU time is reduced 42-fold when one principal component is used and over 17-fold when three principal components are used.

Thus, for the Griewank function over the interval [−600, 600] and at the highest dimensions, the majority of the results obtained for the analytical examples are better when using the KPLS model than when using the ordinary kriging model. The proposed method thus appears interesting, particularly in terms of saving CPU time while maintaining good accuracy.

4.1.3 Comparison with complex Griewank function over interval [−5, 5]

As shown in Fig. 4, over the interval [−600, 600] the Griewank function looks like a parabolic function. This is because, over this interval, the cosine part of the Griewank function does not contribute significantly compared with the sum of $x_i^2/4000$, especially since the cosine part is a product of factors each bounded by one in absolute value. If we reduce the interval from [−600, 600] to [−5, 5], we can see why the Griewank function is widely used as a multimodal test function with a very rugged landscape and a large number of local optima (see Fig. 5). The opposite happens over [−5, 5]: the cosine part dominates, at least for moderate dimensions where the product contains few factors. For this case, which seems very difficult, we consider 20 and 60 input variables. For each problem, ten experiments based on the Latin hypercube design are built with 50, 100, 200, and 300 sampling points. The mean and the standard error are given in Tables 14 and 15 in Appendix D. To better visualize the results, boxplots of the CPU time and of the relative error RE are shown in Figs. 7, 8, 9, 10 and 11 in Appendix D.
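The change of regime between the two intervals can be checked by evaluating the quadratic part $\sum_i x_i^2/4000$ and the cosine product separately. The sketch below (our own illustration, with an arbitrary seed and 5000 uniform random points in 20 dimensions) prints their typical magnitudes: the quadratic part is in the hundreds over [−600, 600] but only a few hundredths over [−5, 5], while the cosine product always stays within [−1, 1].

```python
import numpy as np

def griewank_parts(x):
    """Return the quadratic part and the cosine product of the Griewank function, row by row."""
    x = np.atleast_2d(np.asarray(x, dtype=float))
    i = np.arange(1, x.shape[1] + 1)
    quadratic = np.sum(x ** 2, axis=1) / 4000.0
    cosine = np.prod(np.cos(x / np.sqrt(i)), axis=1)
    return quadratic, cosine

rng = np.random.default_rng(0)
for half_width in (600.0, 5.0):
    X = rng.uniform(-half_width, half_width, size=(5000, 20))
    quad, cos_part = griewank_parts(X)
    print(f"[-{half_width:g}, {half_width:g}]: mean quadratic part {quad.mean():.3g}, "
          f"mean |cosine product| {np.abs(cos_part).mean():.3g}")
```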

For 20 input variables and 50 sampling points, the KPLS model gives a more accurate solution than the ordinary kriging model, as shown in Fig. 7a. The rate of improvement with respect to the number of sampling points is lower for the KPLS model than for the kriging model (cf. Fig. 7b–d). Nevertheless, the results shown in Fig. 8 indicate that the KPLS model leads to a large reduction in CPU time for the various numbers of sampling points.

Similar results occur for the 60D Griewank function (Fig. 9). The mean RE obtained with the ordinary kriging model improves from approximately 1.39 to 0.65 % upon increasing the number of sampling points from 50 to 300 (cf. Fig. 9a, d). However, the KPLS model yields a very large reduction in CPU time, as shown in Fig. 10. The CPU time required for the KPLS model is hardly visible in that figure because it is far smaller than that required by the ordinary kriging model. We thus show in Fig. 11 the CPU time required by the KPLS model alone, to compare the various configurations (KPLS1, KPLS2, and KPLS3). For the Griewank function over the interval [−5, 5], the KPLS method seems to perform well when the number of observations is small compared with the dimension d. In this case, the standard separable covariance function of the ordinary kriging model is almost impossible to use because the number of parameters to be estimated is too large compared with the number of observations. Thus, the KPLS method seems more efficient in this case.

4.2 Industrial examples

The following engineering examples are based on results of numerical experiments done at SNECMA on multidisciplinary optimization. The results are stored in tables.

Aerospace turbomachinery consists of numerous blades that transfer energy between the air and the rotor. The disks with compressor blades are particularly important because they must satisfy the dual criteria of aerodynamic performance and mechanical stress. Blades are mechanically and aerodynamically optimized by searching parameter space for the aerodynamic shape that ensures the best compromise while satisfying a set of constraints. The blade, which is a 3D entity, is first divided into a number of radial 2D profiles located at given percentages of the distance from the hub to the shroud (see Fig. 6).

A new 3D blade is constructed starting from the 2D profiles, which are then exported to various meshing tools before any specific analysis. The calculation is integrated into the Optimus platform (Noesis Solutions 2009), which makes it possible to integrate multiple engineering software tools (computational structural mechanics, computational fluid dynamics, …) into a single automated workflow. Optimus, an industrial software package for screening variables, optimizing design, and analyzing sensitivity and robustness, explores and analyzes the results of the workflow to optimize product design. Toward this end, it uses high-fidelity codes or a reduced model of these codes. It also exploits a wide range of approximation models, including the ordinary kriging model.

Input variables designate geometric parameters at different percentages of the blade height, and outputs are related to aerodynamic efficiency, vibration criteria, mechanical stress, geometric constraints, and aerodynamic stress. Three numerical experiments are considered:

(i) The first experiment is denoted tab1 and contains 24 input variables and 4 output variables. It has 99 training points and 52 validation points. The outputs are denoted $y_1$, $y_2$, $y_3$, and $y_4$.

(ii) The second experiment is denoted tab2 and contains 10 input variables and only 1 output variable. It has 1295 training points and 500 validation points.

(iii) The third experiment is denoted tab3 and contains 99 input variables and 1 output variable. It has 341 training points and 23 validation points.

Points used in tab1, tab2, and tab3 come from previous computationally expensive computer experiments done at SNECMA, which means that the split between training points and validation points was imposed by SNECMA. The goal is to compare the ordinary kriging model implemented in the Optimus platform with the proposed KPLS model. The relative error given by (16) and the CPU time required to fit the model are reported in Tables 9–11.

The relative errors for the four models are very similar: the KPLS model slightly improves the accuracy for outputs $y_1$, $y_2$, $y_4$ from tab1, $y_1$ from tab2, and $y_1$ from tab3, but slightly degrades output $y_3$ from tab1. The main improvement offered by the proposed model relates to the time required to fit the model, particularly for a large number of training points. Table 10 shows that, with only one principal component, the CPU time is drastically reduced compared with the Optimus model. More precisely, for tab2, the ordinary kriging model requires 1 h 30 min whereas the KPLS1 model requires only 11 s and provides better accuracy. In addition, the results for the KPLS2 and KPLS3 models applied to a 99D problem are very promising (see Table 11).

Another major advantage of the proposed method is its natural compatibility with sequential enrichment techniques such as the efficient global optimization (EGO) strategy (see Jones et al. 1998).
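In an EGO loop, the surrogate supplies a prediction ŷ(x) and a standard deviation s(x) at each candidate point, and the next point to evaluate maximizes the expected improvement criterion of Jones et al. (1998). A minimal sketch of that criterion for minimization follows; the function name and signature are illustrative, and a KPLS model is assumed to provide ŷ and s exactly as an ordinary kriging model would.

```python
import math

def expected_improvement(y_hat, s, y_min):
    """Expected improvement for minimization at a single point.

    y_hat: surrogate prediction, s: surrogate standard deviation,
    y_min: best objective value observed so far.
    """
    if s <= 0.0:
        # No predictive uncertainty: the improvement is deterministic.
        return max(y_min - y_hat, 0.0)
    z = (y_min - y_hat) / s
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))        # standard normal CDF
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)  # standard normal PDF
    return (y_min - y_hat) * cdf + s * pdf
```

The first term rewards points predicted to be better than y_min and the second rewards uncertain points, which is why a surrogate that provides an error estimate fits naturally into this enrichment strategy.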

4.3 Dimensional limits

This project is financed by SNECMA, and most of its design problems do not exceed 100 input variables. In addition, the Scikit-learn toolbox (version 0.14) may run into memory problems when a very large number of input variables is considered. Problems with more than 100 input variables are therefore not investigated in this work. However, this limit could be raised by optimizing memory access and storage.
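One plausible source of this memory growth: to our understanding, the legacy scikit-learn `GaussianProcess` class precomputed the componentwise differences between all pairs of training points, an array of n(n − 1)/2 × d floats. The hypothetical helper below estimates that footprint, assuming 8-byte floats and assuming this array dominates storage; it is a back-of-the-envelope sketch, not a measurement of version 0.14.

```python
def cross_distance_bytes(n, d, bytes_per_float=8):
    """Approximate memory (bytes) of an array holding the componentwise
    differences between all distinct pairs of n training points in d dimensions."""
    n_pairs = n * (n - 1) // 2
    return n_pairs * d * bytes_per_float

# tab3: 341 training points, 99 input variables
print(cross_distance_bytes(341, 99))  # 45912240 bytes, about 46 MB
```

The estimate grows linearly in d but quadratically in n, so problems combining many input variables with many training points are the first to hit memory limits.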

5 Conclusion and future work

Engineering problems that require integrating surrogate models into an optimization process are receiving increasing interest within the multidisciplinary optimization community. Computationally expensive design problems can be solved efficiently by using, for example, a kriging model, which is well suited to approximating and replacing high-fidelity codes, largely because it provides an estimate of its own prediction error, which is valuable for guiding the optimization. The major drawback lies in the construction of the kriging model, and in particular in the large number of hyper-parameters that must be estimated in high dimensions. In this work, we develop a new covariance kernel for handling such higher-dimensional problems (up to 100 dimensions). Estimating 𝜃 is often difficult and computationally expensive when the number of input variables exceeds 10, whereas the PLS method requires very little computation time and reduces the number of hyper-parameters to estimate. The proposed KPLS model was tested on two analytic functions and on three industrial databases. The comparison highlights the efficiency of this model for up to 99 dimensions. The advantage of the KPLS models lies not only in the reduced CPU time but also in the fact that they revert to the kriging model when the number of observations is small relative to the dimension of the problem. Before using the KPLS model, however, several numbers of principal components should be tested to find a good balance between accuracy and CPU time.

An interesting direction for future work is to study how the design of experiments (e.g., factorial designs) affects the KPLS model. Other test functions and other types of kernels could also be considered. In all the cases studied herein, the first results obtained with the proposed method show significant gains in computation time while maintaining good accuracy for design problems with up to 100 dimensions. Implementing the proposed KPLS method requires only minimal modifications of the classic kriging algorithm, and the method offers further advantages that can be exploited by enrichment-based optimization methods.

Abbreviations

Matrices and vectors are in bold type.

| Symbol | Meaning |
|---|---|
| $\det$ | Determinant of a matrix |
| $\lvert\cdot\rvert$ | Absolute value |
| $\mathbb{R}$ | Set of real numbers |
| $\mathbb{R}^+$ | Set of positive real numbers |
| $n$ | Number of sampling points |
| $d$ | Number of dimensions (input variables) |
| $h$ | Number of principal components retained |
| $\mathbf{x}$ | $1 \times d$ vector |
| $x_j$ | $j$th element of a vector $\mathbf{x}$ |
| $\mathbf{X}$ | $n \times d$ matrix containing the sampling points |
| $\mathbf{y}$ | $n \times 1$ vector containing the simulation outputs at $\mathbf{X}$ |
| $\mathbf{x}^{(i)}$ | $i$th training point, for $i = 1,\dots,n$ (a $1 \times d$ vector) |
| $\mathbf{w}^{(l)}$ | $d \times 1$ vector containing the $\mathbf{X}$ weights given by the $l$th PLS iteration, for $l = 1,\dots,h$ |
| $\mathbf{X}^{(0)}$ | $\mathbf{X}$ |
| $\mathbf{X}^{(l-1)}$ | Matrix containing the residual of the inner regression of the $(l-1)$st PLS iteration, for $l = 1,\dots,h$ |
| $k(\cdot,\cdot)$ | Covariance function |
| $\mathcal{GP}(0, k(\cdot,\cdot))$ | Distribution of a Gaussian process with mean function 0 and covariance function $k(\cdot,\cdot)$ |
| $\mathbf{x}^t$ | Transpose of the vector $\mathbf{x}$ (superscript $t$ denotes transposition) |

References

Alberto P, González F (2012) Partial Least Squares regression on symmetric positive-definite matrices. Rev Col Estad 36(1):177–192

Bachoc F (2013) Cross Validation and Maximum Likelihood estimation of hyper-parameters of Gaussian processes with model misspecification. Comput Stat Data Anal 66:55–69

Bishop CM (2007) Pattern recognition and machine learning (information science and statistics). Springer

Braham H, Ben Jemaa S, Sayrac B, Fort G, Moulines E (2014) Low complexity spatial interpolation for cellular coverage analysis. In: 2014 12th international symposium on modeling and optimization in mobile, ad hoc, and wireless networks (WiOpt). IEEE, pp 188–195

Buhmann MD (2003) Radial basis functions: theory and implementations, vol 12. Cambridge University Press, Cambridge

Cressie N (1988) Spatial prediction and ordinary kriging. Math Geol 20(4):405–421

Damianou A, Lawrence ND (2013) Deep Gaussian processes. In: Proceedings of the sixteenth international conference on artificial intelligence and statistics, AISTATS 2013, Scottsdale, pp 207–215

Durrande N (2011) Covariance kernels for simplified and interpretable modeling. A functional and probabilistic approach. PhD thesis, Ecole Nationale Supérieure des Mines de Saint-Etienne

Durrande N, Ginsbourger D, Roustant O (2012) Additive covariance kernels for high-dimensional Gaussian process modeling. Ann Fac Sci Toulouse Math 21(3):481–499

Forrester A, Sobester A, Keane A (2008) Engineering design via surrogate modelling: a practical guide. Wiley, New York

Frank IE, Friedman JH (1993) A statistical view of some chemometrics regression tools. Technometrics 35:109–148

Goovaerts P (1997) Geostatistics for natural resources evaluation (applied geostatistics). Oxford University Press, New York

Haykin S (1998) Neural networks: a comprehensive foundation, 2nd edn. Prentice Hall PTR, Upper Saddle River

Helland I (1988) On structure of partial least squares regression. Commun Stat - Simul Comput 17:581–607

Hensman J, Fusi N, Lawrence ND (2013) Gaussian processes for big data. In: Proceedings of the twenty-ninth conference on uncertainty in artificial intelligence, Bellevue

Jones DR, Schonlau M, Welch WJ (1998) Efficient global optimization of expensive black-box functions. J Glob Optim 13(4):455–492

Lanczos C (1950) An iteration method for the solution of the eigenvalue problem of linear differential and integral operators. J Res Natl Bur Stand 45(4):255–282

Liem RP, Martins JRRA (2014) Surrogate models and mixtures of experts in aerodynamic performance prediction for mission analysis. In: 15th AIAA/ISSMO multidisciplinary analysis and optimization conference, Atlanta, GA, AIAA-2014-2301

Manne R (1987) Analysis of two Partial-Least-Squares algorithms for multivariate calibration. Chemom Intell Lab Syst 2(1–3):187–197

Mera NS (2007) Efficient optimization processes using kriging approximation models in electrical impedance tomography. Int J Numer Methods Eng 69(1):202–220

Michalewicz Z, Schoenauer M (1996) Evolutionary algorithms for constrained parameter optimization problems. Evol Comput 4:1–32

Noesis Solutions (2009) OPTIMUS. http://www.noesissolutions.com/Noesis/optimus-details/optimus-design-optimization

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V et al (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

Picheny V, Ginsbourger D, Roustant O, Haftka RT, Kim NH (2010) Adaptive designs of experiments for accurate approximation of a target region. J Mech Des 132(7):071008

Powell MJ (1994) A direct search optimization method that models the objective and constraint functions by linear interpolation. In: Advances in optimization and numerical analysis. Springer, pp 51–67

Rasmussen C, Williams C (2006) Gaussian processes for machine learning. Adaptive computation and machine learning. MIT Press, Cambridge

Regis R, Shoemaker C (2013) Combining radial basis function surrogates and dynamic coordinate search in high-dimensional expensive black-box optimization. Eng Optim 45(5):529–555

Roustant O, Ginsbourger D, Deville Y (2012) DiceKriging, DiceOptim: two R packages for the analysis of computer experiments by kriging-based metamodeling and optimization. J Stat Softw 51(1):1–55

Sakata S, Ashida F, Zako M (2004) An efficient algorithm for Kriging approximation and optimization with large-scale sampling data. Comput Methods Appl Mech Eng 193(3):385–404

Sasena M (2002) Flexibility and efficiency enhancements for constrained global design optimization with Kriging approximations. PhD thesis, University of Michigan

Schonlau M (1998) Computer experiments and global optimization. PhD thesis, University of Waterloo

Wahba G (1990) Spline models for observational data, CBMS-NSF regional conference series in applied mathematics, vol 59. Society for Industrial and Applied Mathematics (SIAM), Philadelphia

Wahba G, Craven P (1978) Smoothing noisy data with spline functions. Estimating the correct degree of smoothing by the method of generalized cross-validation. Numer Math 31:377–404

Zimmerman DL, Homer KE (1991) A network design criterion for estimating selected attributes of the semivariogram. Environmetrics 2(4):425–441

Acknowledgments

The authors thank the anonymous reviewers for their insightful and constructive comments. We also extend our grateful thanks to A. Chiplunkar from ISAE SUPAERO, Toulouse and R. G. Regis from Saint Joseph’s University, Philadelphia for their careful correction of the manuscript and to SNECMA for providing the tables of experiment results. Finally, B. Kraabel is gratefully acknowledged for carefully reviewing the paper prior to publication.


Appendices

Appendix A: Equations for ordinary kriging model

The expression (3) for the ordinary kriging model is transformed into (see Forrester et al. 2008)

where 1 denotes an n-vector of ones and

In addition, (6) is written as
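As a reference for the ordinary kriging equations of this appendix, the sketch below implements the standard textbook predictor ŷ(x) = β̂ + rₓᵗ R⁻¹ (y − 1β̂), with β̂ = (1ᵗR⁻¹1)⁻¹ 1ᵗR⁻¹y, and its mean squared error σ̂²[1 − rₓᵗR⁻¹rₓ + (1 − 1ᵗR⁻¹rₓ)² / (1ᵗR⁻¹1)], as given for instance by Forrester et al. (2008). It assumes a Gaussian correlation with fixed θ; the function names and the small nugget added for numerical stability are our own choices, and in practice θ would be estimated by maximizing the likelihood.

```python
import numpy as np

def gaussian_corr(A, B, theta):
    """Gaussian correlation r(a, b) = exp(-sum_j theta_j (a_j - b_j)^2)."""
    diff = A[:, None, :] - B[None, :, :]                 # shape (m, n, d)
    return np.exp(-np.einsum("ijk,k->ij", diff**2, theta))

def ordinary_kriging(X, y, theta, nugget=1e-10):
    """Fit ordinary kriging for fixed hyper-parameters theta; return a predictor."""
    n = X.shape[0]
    R = gaussian_corr(X, X, theta) + nugget * np.eye(n)
    L = np.linalg.cholesky(R)                            # R = L L^t

    def solve(b):
        # R^{-1} b via two triangular-structured solves
        return np.linalg.solve(L.T, np.linalg.solve(L, b))

    ones = np.ones(n)
    Rinv_1 = solve(ones)
    beta = ones @ solve(y) / (ones @ Rinv_1)             # generalized least-squares mean
    resid = y - beta * ones
    Rinv_res = solve(resid)
    sigma2 = resid @ Rinv_res / n                        # process variance estimate

    def predict(Xnew):
        r = gaussian_corr(Xnew, X, theta)                # shape (m, n)
        mean = beta + r @ Rinv_res
        Rinv_r = solve(r.T)                              # shape (n, m)
        u = 1.0 - ones @ Rinv_r
        mse = sigma2 * (1.0 - np.sum(r.T * Rinv_r, axis=0) + u**2 / (ones @ Rinv_1))
        return mean, np.maximum(mse, 0.0)               # clip round-off negatives

    return predict
```

At the training points the predictor interpolates the data and the estimated error vanishes, which is the property exploited by enrichment strategies.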

Appendix B: Examples of kernels

Table 12 presents the most popular examples of stationary kernels. Table 13 presents the new KPLS kernels based on the examples given in Table 12.
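A minimal sketch of the Gaussian KPLS kernel, assuming the form k(x, x′) = ∏ₗ exp(−θₗ Σᵢ (w*ᵢ⁽ˡ⁾xᵢ − w*ᵢ⁽ˡ⁾x′ᵢ)²), where w*⁽ˡ⁾ are the PLS rotation vectors (columns of W(PᵗW)⁻¹) that express the principal components directly in terms of X. The PLS1 step is written out with NIPALS so that the example is self-contained; scikit-learn's `PLSRegression` exposes comparable vectors as `x_rotations_`. The function names, data, and θ values are illustrative.

```python
import numpy as np

def pls1_rotations(X, y, h):
    """PLS1 (NIPALS) on centered data; returns W_star (d, h) and scores T (n, h).

    W_star = W (P^t W)^{-1} expresses the scores in terms of X: T = Xc @ W_star.
    """
    Xk = X - X.mean(axis=0)
    yk = y - y.mean()
    n, d = X.shape
    W, P, T = np.zeros((d, h)), np.zeros((d, h)), np.zeros((n, h))
    for l in range(h):
        w = Xk.T @ yk
        w /= np.linalg.norm(w)          # X weights of the l-th PLS iteration
        t = Xk @ w                      # l-th principal component (score)
        p = Xk.T @ t / (t @ t)          # X loadings
        c = yk @ t / (t @ t)            # inner regression coefficient
        Xk = Xk - np.outer(t, p)        # residual matrix passed to the next iteration
        yk = yk - c * t
        W[:, l], P[:, l], T[:, l] = w, p, t
    return W @ np.linalg.inv(P.T @ W), T

def kpls_gaussian_kernel(A, B, W_star, theta):
    """k(a, b) = prod_l exp(-theta_l * sum_i (w*_il a_i - w*_il b_i)^2)."""
    K = np.ones((A.shape[0], B.shape[0]))
    for l in range(W_star.shape[1]):
        FA, FB = A * W_star[:, l], B * W_star[:, l]   # F_l scales each coordinate
        sq = np.sum((FA[:, None, :] - FB[None, :, :]) ** 2, axis=2)
        K *= np.exp(-theta[l] * sq)
    return K

# Illustrative data: 40 points in 20 dimensions, standardized inputs
rng = np.random.default_rng(0)
X = rng.uniform(-5.0, 5.0, size=(40, 20))
y = X[:, 0] + 2.0 * X[:, 1] ** 2
Xs = (X - X.mean(axis=0)) / X.std(axis=0)
W_star, T = pls1_rotations(Xs, y, h=2)
theta = np.array([0.01, 0.01])    # only h = 2 hyper-parameters instead of d = 20
K = kpls_gaussian_kernel(Xs, Xs, W_star, theta)
```

Only the h values θ₁, …, θₕ remain to be estimated by maximum likelihood, which is where the CPU savings reported in Tables 9–11 come from.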

Appendix C: Proof of equivalence kernel

For $l = 1,\dots,h$, the kernels $k_l$ are separable (i.e., $d$-dimensional tensor products) and of the same type, so there exist $\phi_{l1},\dots,\phi_{ld}$ such that

where $F_l(\mathbf{x})_i$ is the $i$th coordinate of $F_l(\mathbf{x})$. If we insert (20) in (14), we get

with

corresponding to a one-dimensional kernel. Hence, $k_{\mathrm{kpls}1:h}$ is a separable kernel. In particular, if we consider a generalized exponential kernel with $p_1 = \dots = p_h = p \in [0, 2]$, we obtain

with

We thus obtain
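The separability argument can be checked numerically. Assuming the KPLS generalized exponential kernel has the form ∏ₗ ∏ᵢ exp(−θₗ |w*ᵢ⁽ˡ⁾(xᵢ − x′ᵢ)|ᵖ), the identity |w(xᵢ − x′ᵢ)|ᵖ = |w|ᵖ |xᵢ − x′ᵢ|ᵖ turns it into a standard generalized exponential kernel with ηᵢ = Σₗ θₗ |w*ᵢ⁽ˡ⁾|ᵖ. The sketch below compares both forms at random points; the weights are random stand-ins rather than PLS output, and the function names are illustrative.

```python
import numpy as np

def kpls_genexp(a, b, W_star, theta, p):
    """Product over components l and coordinates i of exp(-theta_l |w*_il (a_i - b_i)|^p)."""
    total = 0.0
    for l in range(W_star.shape[1]):
        total += theta[l] * np.sum(np.abs(W_star[:, l] * (a - b)) ** p)
    return np.exp(-total)

def equivalent_genexp(a, b, W_star, theta, p):
    """Standard generalized exponential kernel with eta_i = sum_l theta_l |w*_il|^p."""
    eta = (np.abs(W_star) ** p) @ theta
    return np.exp(-np.sum(eta * np.abs(a - b) ** p))

rng = np.random.default_rng(1)
d, h, p = 5, 2, 1.5
W_star = rng.normal(size=(d, h))
theta = np.array([0.3, 0.7])
a, b = rng.normal(size=d), rng.normal(size=d)
print(np.isclose(kpls_genexp(a, b, W_star, theta, p),
                 equivalent_genexp(a, b, W_star, theta, p)))  # True
```

The equivalence means a KPLS model can reuse an existing generalized exponential kernel implementation: only the mapping from the h parameters θₗ to the d parameters ηᵢ changes.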

Appendix D: Results of Griewank function in 20D and 60D over interval [−5, 5]

In Tables 14 and 15, the mean and standard deviation (std) of the numerical experiments with the Griewank function are given for 20 and 60 dimensions, respectively. To better visualize the results, boxplots are used in Figs. 7–11.
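For readers reproducing these experiments, the standard Griewank test function is sketched below, together with a random training sample drawn uniformly over [−5, 5]ᵈ. The sample size and seed are placeholders, not the settings used for Tables 14 and 15.

```python
import numpy as np

def griewank(x):
    """Griewank function f(x) = sum_i x_i^2 / 4000 - prod_i cos(x_i / sqrt(i)) + 1."""
    x = np.asarray(x, dtype=float)
    i = np.arange(1, x.size + 1)           # indices start at 1
    return np.sum(x**2) / 4000.0 - np.prod(np.cos(x / np.sqrt(i))) + 1.0

# Hypothetical training sample over [-5, 5]^20 (size and seed are placeholders)
rng = np.random.default_rng(42)
X_train = rng.uniform(-5.0, 5.0, size=(200, 20))
y_train = np.array([griewank(x) for x in X_train])
```

The global minimum is f(0) = 0; over [−5, 5] the cosine product creates many local oscillations, which makes the function a demanding test for surrogate accuracy.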


About this article

Cite this article

Bouhlel, M.A., Bartoli, N., Otsmane, A. et al. Improving kriging surrogates of high-dimensional design models by Partial Least Squares dimension reduction. Struct Multidisc Optim 53, 935–952 (2016). https://doi.org/10.1007/s00158-015-1395-9


DOI: https://doi.org/10.1007/s00158-015-1395-9