Abstract

This paper considers the parameter estimation problem for dual-frequency signals disturbed by stochastic noise. The signal model is highly nonlinear in the frequencies and phases, so the gradient method alone cannot give accurate parameter estimates. Based on the Newton search, we derive an iterative algorithm for estimating all parameters, including the unknown amplitudes, frequencies, and phases. Furthermore, using parameter decomposition, we propose a hierarchical least squares and gradient-based iterative algorithm to improve the computational efficiency. A gradient-based iterative algorithm is given for comparison. Numerical examples are provided to demonstrate the validity of the proposed algorithms.


1 Introduction

Signal models are often encountered in scientific disciplines and engineering applications. Parameter estimation for signal models is important, and many estimation approaches have been presented [32, 41, 42]. As a classical approach, Fourier analysis plays a key role in signal processing [48, 50]. However, it has certain limitations because the frequencies of the Fourier transform are just a collection of the individual frequencies of the periodic signals. Another classical method is the phase-locked loop technique, which automatically adjusts the frequency by locking the phase between the reference and the internal sine waves. Additionally, a self-correlation phase method [4] has been developed to estimate the frequencies. Parameter estimation methods can be applied to many areas [2, 3, 5, 51].

Many methods can estimate the parameters of signal models. For example, Stoica et al. [37] used the weighted least squares method to estimate the amplitudes of sinusoidal signals. The amplitude and frequency of a sine signal were estimated with the property of global convergence in [20]. The amplitude and frequency estimation problem of multiple sine signals was investigated based on the adaptive identifier [21]. Recently, Hou [22] presented a solution for estimating the parameters of biased multiple sinusoids using a combination of a frequency identifier and gradient algorithms. This paper is devoted to identifying the parameters of dual-frequency signals, including the unknown amplitudes, frequencies, and phases.

Iterative methods play an important role not only in finding the solutions of nonlinear matrix equations, but also in deriving parameter estimation algorithms for signal models. Combined with optimization tools, iterative methods can be applied to estimate the parameters of nonlinear systems [9, 34]. For example, Xu [40] derived a Newton iterative algorithm for the parameter estimation of dynamical systems. Ding et al. [12] studied iterative parameter identification for pseudo-linear systems with ARMA noise using the filtering technique.

As a classical optimization tool, the Newton method is a root-finding algorithm that uses the first few terms of the Taylor series of a function in the neighborhood of a suspected root. The Newton method has long received a great deal of attention and has many applications, such as minimization and maximization problems, transcendental equations, and the numerical verification of solutions of nonlinear equations [30, 35, 49].

The previous work in [29, 43] considered the Newton iterative (NI) algorithm for nonlinear parameter estimation problems. The NI algorithm gives high parameter estimation accuracy, but its computational cost is large because the inverse of the Hessian matrix, whose components are second-order derivatives, must be computed at each iteration. To reduce the computational cost, this paper presents a hierarchical least squares and gradient-based iterative (HI) algorithm using parameter decomposition. The key is to decompose the parameter vector into three parts and to estimate the parameters of each part separately, so that the dimension of each estimation problem is reduced and the computational efficiency and the parameter estimation accuracy are improved.

The rest of this paper is organized as follows. Sections 2 and 3 derive two iterative algorithms based on the gradient search and the Newton search, respectively. Section 4 presents the HI algorithm. Section 5 gives two numerical examples to illustrate the effectiveness of the proposed algorithms. Finally, some conclusions are drawn in Sect. 6.

2 The Gradient-Based Iterative Algorithm

Sine and cosine signals are typical periodic signals, whose waveforms are the sine and cosine curves in mathematics. Many complex signals can be decomposed into numerous sinusoidal signals with different frequencies and amplitudes by the Fourier series [1]. The sine signal differs from the cosine signal by \(\pi /2\) in the initial phase. This paper derives the parameter estimation algorithms for dual-frequency cosine signals; the same algorithms can also be applied to sine signals.

Consider the following dual-frequency cosine signal model:

\[ y(t)=a\cos (\omega _1t+\phi )+b\cos (\omega _2t+\psi )+v(t), \]

where \(a>0\) and \(b>0\) are the amplitudes, \(\omega _1>0\) and \(\omega _2>0\) are the angular frequencies, \(\phi \) and \(\psi \) are the initial phases, t is a continuous-time variable, y(t) is the observation, and v(t) is a stochastic disturbance with zero mean and variance \(\sigma ^2\).

Actual signals are always corrupted by various stochastic noises. In practical engineering, only discrete observed data are available. Suppose that the sampled data are \(y(t_i)\), \(i=1,2,3,\ldots ,L\), where \(t_i\) is the sampling time and L denotes the data length.

Use the observed data \(y(t_i)\) and the model output to construct the criterion function

The criterion function represents the sum of the squared errors between the model outputs and the observed data. The goal is to make this criterion function as small as possible, that is, to minimize \(J_1({\varvec{\theta }})\) and thereby obtain the estimates of the parameter vector \({\varvec{\theta }}:=[a,b,\omega _1,\omega _2,\phi ,\psi ]^{\tiny \text{ T }}\in {{\mathbb {R}}}^6\).
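As a concrete illustration of the sampled model and the criterion function, the following Python sketch generates noisy samples of a dual-frequency cosine signal and evaluates the sum of squared errors. The parameter values, the noise level, the helper names `model_output` and `criterion`, and the factor 1/2 in front of the sum are assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

def model_output(theta, t):
    """Noise-free model a*cos(w1*t + phi) + b*cos(w2*t + psi)."""
    a, b, w1, w2, phi, psi = theta
    return a * np.cos(w1 * t + phi) + b * np.cos(w2 * t + psi)

def criterion(theta, t, y):
    """Sum of squared errors; the factor 1/2 is an assumed convention."""
    e = y - model_output(theta, t)
    return 0.5 * float(e @ e)

# Hypothetical parameters [a, b, w1, w2, phi, psi], for illustration only.
theta_true = np.array([1.5, 2.0, 0.7, 1.9, 0.3, 0.8])
L = 200                                   # data length
t = 0.1 * np.arange(1, L + 1)             # sampling times t_i = i*T, T = 0.1 s
rng = np.random.default_rng(0)
sigma = 0.5                               # assumed noise standard deviation
y = model_output(theta_true, t) + sigma * rng.standard_normal(L)

J_true = criterion(theta_true, t, y)      # cost at the true parameters
J_zero = criterion(np.zeros(6), t, y)     # cost at an all-zero guess
```

At the true parameters the criterion does not vanish because of the noise, but it should be much smaller than at a poor guess such as the zero vector.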

Taking the partial derivative of \(J_1({\varvec{\theta }})\) with respect to \({\varvec{\theta }}\) gives

Define the stacked observed data \({\varvec{Y}}(L)\) and the stacked information matrix \({\varvec{\varPhi }}({\varvec{\theta }},L)\) as

Define the information vector

Let \(f(t_i):=a\cos (\omega _1t_i+\phi )+b\cos (\omega _2t_i+\psi )\in {{\mathbb {R}}}\), and define the stacked model output vector

Then, the gradient vector can be expressed as

Let \(k=1,2,3,\ldots \) be an iterative variable and \({\hat{{\varvec{\theta }}}}_k\) be the estimate of \({\varvec{\theta }}\) at iteration k. The partial derivatives at \({\varvec{\theta }}={\hat{{\varvec{\theta }}}}_{k-1}\) are given by

Minimizing \(J_1({\varvec{\theta }})\) and selecting an iterative step size \(\mu _k\geqslant 0\), we can get the gradient-based iterative (GI) algorithm for dual-frequency signal models:

Here the step size satisfies \(\mu _k\leqslant \lambda _{\max }^{-1}[{\varvec{\varPhi }}^{\tiny \text{ T }}({\hat{{\varvec{\theta }}}}_{k-1},L){\varvec{\varPhi }}({\hat{{\varvec{\theta }}}}_{k-1},L)]\), where \(\lambda _{\max }[{\varvec{\varPhi }}^{\tiny \text{ T }}({\hat{{\varvec{\theta }}}}_{k-1},L){\varvec{\varPhi }}({\hat{{\varvec{\theta }}}}_{k-1},L)]\) denotes the maximum eigenvalue of the matrix \({\varvec{\varPhi }}^{\tiny \text{ T }}({\hat{{\varvec{\theta }}}}_{k-1},L){\varvec{\varPhi }}({\hat{{\varvec{\theta }}}}_{k-1},L)\). The procedure of the GI algorithm in (2)–(9) for dual-frequency signals is as follows.

1. Let \(k=1\), give a small number \(\varepsilon \) and an integer L, and set the initial value \({\hat{{\varvec{\theta }}}}_0=\mathbf{1}_6/p_0\), where \(p_0\) is generally taken as a large positive number, for example, \(p_0=10^6\).

2. Collect the observed data \(y(t_i)\), \(i=1,2,\ldots ,L\), and form \({\varvec{Y}}(L)\) by (3).

3. Form the information vector \({\varvec{\varphi }}({\hat{{\varvec{\theta }}}}_{k-1},t_i)\) by (6) and the stacked information matrix \({\varvec{\varPhi }}({\hat{{\varvec{\theta }}}}_{k-1},L)\) by (4).

4. Form \(f({\hat{{\varvec{\theta }}}}_{k-1},t_i)\) by (7) and the stacked model output vector \({\varvec{F}}({\hat{{\varvec{\theta }}}}_{k-1},L)\) by (5).

5. Select the largest \(\mu _k\) satisfying (8), and update the estimate \({\hat{{\varvec{\theta }}}}_k\) by (9).

6. Compare \({\hat{{\varvec{\theta }}}}_k\) with \({\hat{{\varvec{\theta }}}}_{k-1}\): if \(\Vert {\hat{{\varvec{\theta }}}}_k-{\hat{{\varvec{\theta }}}}_{k-1}\Vert \geqslant \varepsilon \), then increase k by 1 and go to Step 3; otherwise, record the iteration count k and the estimate \({\hat{{\varvec{\theta }}}}_k\) and terminate the procedure.
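The steps above can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: it assumes the criterion \(J_1=\frac{1}{2}\sum e^2(t_i)\), uses the Jacobian of the model output as the stacked information matrix (the paper's sign convention may differ), and takes the step size exactly equal to \(\lambda _{\max }^{-1}\). The function names and default tolerances are hypothetical.

```python
import numpy as np

def model_output(theta, t):
    """f(t) = a*cos(w1*t + phi) + b*cos(w2*t + psi)."""
    a, b, w1, w2, phi, psi = theta
    return a * np.cos(w1 * t + phi) + b * np.cos(w2 * t + psi)

def jacobian(theta, t):
    """L x 6 matrix; row i is the derivative of f(t_i) w.r.t. theta."""
    a, b, w1, w2, phi, psi = theta
    s1, s2 = np.sin(w1 * t + phi), np.sin(w2 * t + psi)
    return np.column_stack([np.cos(w1 * t + phi), np.cos(w2 * t + psi),
                            -a * t * s1, -b * t * s2, -a * s1, -b * s2])

def cost(theta, t, y):
    """Assumed criterion: half the sum of squared errors."""
    e = y - model_output(theta, t)
    return 0.5 * float(e @ e)

def gi_estimate(t, y, theta0, eps=1e-8, max_iter=500):
    """Gradient-based iteration with step size 1/lambda_max(Phi^T Phi)."""
    theta = np.asarray(theta0, dtype=float)
    for k in range(1, max_iter + 1):
        Phi = jacobian(theta, t)                       # Step 3
        e = y - model_output(theta, t)                 # Step 4
        lam_max = np.linalg.eigvalsh(Phi.T @ Phi)[-1]  # largest eigenvalue
        mu = 1.0 / lam_max                             # Step 5
        theta_new = theta + mu * (Phi.T @ e)           # -grad J = Phi^T e
        if np.linalg.norm(theta_new - theta) < eps:    # Step 6
            return theta_new, k
        theta = theta_new
    return theta, max_iter
```

Because the criterion is not convex in the frequencies and phases, this sketch is only expected to behave well when the initial value is reasonably close to the true parameters.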

Remark 1

In fact, the one-dimensional search for the best step size \(\mu _k\) requires solving a complicated nonlinear equation at each iteration, so no algebraic solution for \(\mu _k\) can be obtained from Eq. (8). To solve this problem, we can use the trial-and-error method or the Newton iterative method to obtain an approximation. In what follows, we derive a Newton iterative algorithm for estimating the parameters.

3 The Newton Iterative Algorithm

The gradient method requires only the first derivative, whereas the Newton method also uses the second derivative and is quadratically convergent. To improve the parameter estimation accuracy, we calculate the second partial derivative of the criterion function \(J_1({\varvec{\theta }})\) with respect to \({\varvec{\theta }}\), which gives the Hessian matrix

For the sake of brevity, the derivation results of all \(h_{mn}\), \(1=n<m\leqslant 6\) are not listed here, and the rest are presented in (10)–(39). Let \({\hat{{\varvec{\theta }}}}_k:=[{\hat{a}}_k,{\hat{b}}_k,{\hat{\omega }}_{1,k},{\hat{\omega }}_{2,k},{{\hat{\phi }}}_k,{{\hat{\psi }}}_k]^{\tiny \text{ T }}\) be the estimate of \({\varvec{\theta }}\) at iteration k. Based on the Newton search, we can derive the Newton iterative (NI) algorithm for dual-frequency signal models:

The steps of the NI algorithm for computing \({\hat{{\varvec{\theta }}}}_k\) are listed as follows.

1. To initialize, let \(k=1\), \({\hat{{\varvec{\theta }}}}_0=\mathbf{1}_6/p_0\), and give a small number \(\varepsilon \).

2. Collect the observed data \(y(t_i)\), \(i=1,2,\ldots ,L\), and form \({\varvec{Y}}(L)\) by (11).

3. Form the information vector \({\hat{{\varvec{\varphi }}}}_k(t_i)\) using (14) and the stacked information matrix \({\hat{{\varvec{\varPhi }}}}_k\) using (12).

4. Form \({\hat{f}}_k(t_i)\) using (15) and the stacked model output vector \({\hat{{\varvec{F}}}}_k\) using (13).

5. Compute \(h_{mn}({\hat{{\varvec{\theta }}}}_{k-1})\) by (17)–(38), \(1=n<m\leqslant 6\), and form the Hessian matrix \({\varvec{H}}({\hat{{\varvec{\theta }}}}_{k-1})\) by (16).

6. Update the estimate \({\hat{{\varvec{\theta }}}}_k\) by (39).

7. Compare \({\hat{{\varvec{\theta }}}}_k\) with \({\hat{{\varvec{\theta }}}}_{k-1}\): if \(\Vert {\hat{{\varvec{\theta }}}}_k-{\hat{{\varvec{\theta }}}}_{k-1}\Vert >\varepsilon \), then increase k by 1 and go to Step 3; otherwise, obtain the estimate \({\hat{{\varvec{\theta }}}}_k\) and terminate the procedure.
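The Newton steps above can be sketched in Python as follows. The sketch assumes the criterion \(J_1=\frac{1}{2}\sum e^2(t_i)\), for which the Hessian is the Gram matrix of the model Jacobian minus the residual-weighted second derivatives of the model output; the entries are derived here directly from the cosine model and are not copied from the paper's equations. The function names are hypothetical, and a linear solve replaces the explicit matrix inverse.

```python
import numpy as np

def model_output(theta, t):
    a, b, w1, w2, phi, psi = theta
    return a * np.cos(w1 * t + phi) + b * np.cos(w2 * t + psi)

def jacobian(theta, t):
    """Row i is the derivative of f(t_i) w.r.t. [a, b, w1, w2, phi, psi]."""
    a, b, w1, w2, phi, psi = theta
    s1, s2 = np.sin(w1 * t + phi), np.sin(w2 * t + psi)
    return np.column_stack([np.cos(w1 * t + phi), np.cos(w2 * t + psi),
                            -a * t * s1, -b * t * s2, -a * s1, -b * s2])

def gradient(theta, t, y):
    """Gradient of J = 0.5*sum(e^2) with e = y - f."""
    return -jacobian(theta, t).T @ (y - model_output(theta, t))

def hessian(theta, t, y):
    """Hessian of J: Jac^T Jac - sum_i e_i * (second derivative of f(t_i))."""
    a, b, w1, w2, phi, psi = theta
    c1, s1 = np.cos(w1 * t + phi), np.sin(w1 * t + phi)
    c2, s2 = np.cos(w2 * t + psi), np.sin(w2 * t + psi)
    e = y - model_output(theta, t)
    S = np.zeros((6, 6))                     # upper triangle of sum_i e_i * d2f
    S[0, 2], S[0, 4] = -np.sum(e * t * s1), -np.sum(e * s1)
    S[2, 2], S[2, 4] = -a * np.sum(e * t**2 * c1), -a * np.sum(e * t * c1)
    S[4, 4] = -a * np.sum(e * c1)
    S[1, 3], S[1, 5] = -np.sum(e * t * s2), -np.sum(e * s2)
    S[3, 3], S[3, 5] = -b * np.sum(e * t**2 * c2), -b * np.sum(e * t * c2)
    S[5, 5] = -b * np.sum(e * c2)
    S = S + np.triu(S, 1).T                  # mirror to the lower triangle
    Jac = jacobian(theta, t)
    return Jac.T @ Jac - S

def ni_estimate(t, y, theta0, eps=1e-10, max_iter=50):
    """Newton iteration: theta_k = theta_{k-1} - H^{-1} grad."""
    theta = np.asarray(theta0, dtype=float)
    for k in range(1, max_iter + 1):
        step = np.linalg.solve(hessian(theta, t, y), gradient(theta, t, y))
        theta = theta - step
        if np.linalg.norm(step) <= eps:
            break
    return theta, k
```

The Newton iteration is only locally convergent, so in this sketch the initial value is assumed to lie close to the true parameter vector.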

Remark 2

The NI algorithm is an effective parameter estimation algorithm; however, it must calculate the inverse of the Hessian matrix at each iteration, which is computationally expensive. In what follows, we derive a hierarchical least squares and gradient-based iterative algorithm, which improves both the estimation accuracy and the computational efficiency.

4 The Hierarchical Iterative Algorithm

Inspired by the hierarchical control for large-scale systems based on the decomposition–coordination principle, we derive a hierarchical identification algorithm for the parameter estimation problem in this paper. By means of parameter decomposition, the original optimization problem is transformed into a combination of a nonlinear optimization problem and a linear optimization problem. In this way, the computational complexity is greatly reduced. The hierarchical method can be applied to signal processing [44, 47], nonlinear systems [26, 31, 52,53,54], and other fields [10, 11, 25].

Define three parameter vectors \({\varvec{{\alpha }}}:=[a,b]^{\tiny \text{ T }}\in {{\mathbb {R}}}^2\), \({\varvec{\omega }}:=[\omega _1,\omega _2]^{\tiny \text{ T }}\in {{\mathbb {R}}}^2\) and \({\varvec{\beta }}:=[\phi ,\psi ]^{\tiny \text{ T }}\in {{\mathbb {R}}}^2\). The information vector with respect to \({\varvec{{\alpha }}}\) can be written as

Define the information matrix with respect to \({\varvec{{\alpha }}}\) as

Define three criterion functions:

where \(e({\varvec{\omega }},t_i)=e({\varvec{\beta }},t_i):=y(t_i)-a\cos (\omega _1t_i+\phi )-b\cos (\omega _2t_i+\psi )\). Letting the first-order derivative of \(J_2({\varvec{{\alpha }}})\) with respect to \({\varvec{{\alpha }}}\) be zero, we have

The least squares (LS) solution is given by

Unfortunately, \({\varvec{\varPhi }}_{\alpha }(L)\) contains the unknown parameters \(\omega _1\), \(\omega _2\), \(\phi \), and \(\psi \), so the estimate of \({\varvec{{\alpha }}}\) cannot be computed directly from this expression.
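To illustrate why the amplitude subproblem is linear, the following Python sketch solves for the amplitudes by least squares under the assumption that the frequencies and phases are known. The function name `amplitude_ls` and the choice of the cosine regressors as the columns of the information matrix are assumptions made for illustration; the paper's sign convention for the information matrix may differ.

```python
import numpy as np

def amplitude_ls(t, y, omega, beta):
    """Least squares amplitudes [a, b] for known frequencies and phases."""
    # Columns are the two cosine regressors evaluated at the sampling times.
    Phi_alpha = np.column_stack([np.cos(omega[0] * t + beta[0]),
                                 np.cos(omega[1] * t + beta[1])])
    # lstsq minimizes ||y - Phi_alpha @ alpha||^2 without forming an inverse.
    alpha, *_ = np.linalg.lstsq(Phi_alpha, y, rcond=None)
    return alpha
```

In the hierarchical algorithm the known frequencies and phases are replaced by their estimates from the preceding iteration, so the same linear solve can be reused at every step.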

Taking the first-order derivative of \(J_3({\varvec{\omega }})\) with respect to \({\varvec{\omega }}\) gives

Taking the first-order derivative of \(J_4({\varvec{\beta }})\) with respect to \({\varvec{\beta }}\) gives

Let \({\hat{{\varvec{\omega }}}}_k:=[{\hat{\omega }}_{1,k},{\hat{\omega }}_{2,k}]^{\tiny \text{ T }}\) be the estimate of \({\varvec{\omega }}\) at iteration k and \({\hat{{\varvec{\beta }}}}_k:=[{\hat{\phi }}_k,{\hat{\psi }}_k]^{\tiny \text{ T }}\in {{\mathbb {R}}}^2\) be the estimate of \({\varvec{\beta }}\) at iteration k. The gradient-based iterative (GI) algorithm for estimating the parameter vector \({\varvec{\omega }}\) is given as follows:

and the GI parameter estimation algorithm for estimating the parameter vector \({\varvec{\beta }}\) is given as follows:

where the two step sizes \(\nu _k\) and \(\lambda _k\) can be determined by a one-dimensional search. Here, we replace the unknown parameter vectors \({\varvec{{\alpha }}}\), \({\varvec{\omega }}\), and \({\varvec{\beta }}\) in (40), (41), and (42) with their previous estimates and obtain the hierarchical least squares and gradient-based iterative (HI) algorithm for the dual-frequency signals:

The steps of the HI algorithm in (43)–(53) for dual-frequency signals are as follows.

1. To initialize, let \(k=1\), \({\hat{{\varvec{{\alpha }}}}}_0=\mathbf{1}_2/p_0\), let \({\hat{{\varvec{\omega }}}}_0\) and \({\hat{{\varvec{\beta }}}}_0\) be small real vectors, and give a small number \(\varepsilon \).

2. Collect the observed data \(y(t_i)\), \(i=1,2,\ldots ,L\), and form \({\varvec{Y}}(L)\) by (46).

3. Form the information vector \({\hat{{\varvec{\varphi }}}}_{\alpha ,k-1}(t_i)\) using (48) and the stacked information matrix \({\hat{{\varvec{\varPhi }}}}_{\alpha ,k-1}(L)\) using (47).

4. Compute \(\frac{\partial J_3({\hat{{\varvec{\omega }}}}_{k-1})}{\partial \omega _i}\), \(i=1,2\), using (49) and form \(\mathrm{grad}[J_3(\hat{\varvec{\omega }}_{k-1})]\).

5. Compute \(\frac{\partial J_4(\hat{\varvec{\beta }}_{k-1})}{\partial \phi }\) and \(\frac{\partial J_4(\hat{\varvec{\beta }}_{k-1})}{\partial \psi }\) using (51) and form \(\mathrm{grad}[J_4({\hat{{\varvec{\beta }}}}_{k-1})]\).

6. Select the step sizes \(\nu _k\) by (50) and \(\lambda _k\) by (52).

7. Update the estimate \({\hat{{\varvec{{\alpha }}}}}_k\) by (43), the estimate \({\hat{{\varvec{\omega }}}}_k\) by (44), and the estimate \({\hat{{\varvec{\beta }}}}_k\) by (45). Form \({\hat{{\varvec{\theta }}}}_k\) using (53).

8. If \(\Vert {\hat{{\varvec{\theta }}}}_k-{\hat{{\varvec{\theta }}}}_{k-1}\Vert >\varepsilon \), then increase k by 1 and go to Step 3; otherwise, obtain the estimate \({\hat{{\varvec{\theta }}}}_k\) and terminate the procedure.
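The hierarchical procedure above can be sketched in Python as follows. Several choices here are assumptions rather than the paper's method: all three criterion functions are taken as \(\frac{1}{2}\sum e^2(t_i)\), the one-dimensional search for the step sizes is replaced by simple step halving, and each sub-step uses the most recently updated estimates, which may differ from the ordering in (43)–(45). All function names are hypothetical.

```python
import numpy as np

def residual(alpha, omega, beta, t, y):
    """e(t_i) = y(t_i) - a*cos(w1*t_i + phi) - b*cos(w2*t_i + psi)."""
    return y - np.cos(np.outer(t, omega) + beta) @ alpha

def cost(alpha, omega, beta, t, y):
    e = residual(alpha, omega, beta, t, y)
    return 0.5 * float(e @ e)

def grad_omega(alpha, omega, beta, t, y):
    """dJ/dw_j = alpha_j * sum_i e_i * t_i * sin(w_j*t_i + beta_j)."""
    s = np.sin(np.outer(t, omega) + beta)
    return ((residual(alpha, omega, beta, t, y) * t) @ s) * alpha

def grad_beta(alpha, omega, beta, t, y):
    """dJ/dbeta_j = alpha_j * sum_i e_i * sin(w_j*t_i + beta_j)."""
    s = np.sin(np.outer(t, omega) + beta)
    return (residual(alpha, omega, beta, t, y) @ s) * alpha

def backtrack(f, x, g, step, shrink=0.5, max_halvings=30):
    """Return x - step*g for the first trial step that lowers f, else x."""
    f0 = f(x)
    for _ in range(max_halvings):
        x_new = x - step * g
        if f(x_new) < f0:
            return x_new
        step *= shrink
    return x

def hi_estimate(t, y, omega0, beta0, eps=1e-8, max_iter=200):
    omega = np.asarray(omega0, dtype=float)
    beta = np.asarray(beta0, dtype=float)
    alpha = np.ones(2) / 1e6                 # alpha_0 = 1_2 / p_0
    history = []                             # cost after each iteration
    for k in range(1, max_iter + 1):
        theta_old = np.concatenate([alpha, omega, beta])
        # Least squares update of the amplitudes for fixed omega and beta.
        Phi_alpha = np.cos(np.outer(t, omega) + beta)
        alpha = np.linalg.lstsq(Phi_alpha, y, rcond=None)[0]
        a2 = max(float(alpha @ alpha), 1e-12)
        # Gradient updates of the frequencies and phases with step halving.
        omega = backtrack(lambda w: cost(alpha, w, beta, t, y), omega,
                          grad_omega(alpha, omega, beta, t, y),
                          1.0 / (a2 * float(t @ t)))
        beta = backtrack(lambda b: cost(alpha, omega, b, t, y), beta,
                         grad_beta(alpha, omega, beta, t, y),
                         1.0 / (a2 * t.size))
        history.append(cost(alpha, omega, beta, t, y))
        theta = np.concatenate([alpha, omega, beta])
        if np.linalg.norm(theta - theta_old) <= eps:
            break
    return theta, history
```

With these choices no sub-step can increase the criterion, so the recorded cost is non-increasing over the iterations; whether the iteration reaches the true parameters still depends on the initial frequencies and phases.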

5 Examples

Example 1

Consider the following dual-frequency cosine signal model:

The parameter vector to be estimated is

In the simulation, a white noise signal v(t) with variance \(\sigma ^2=1.00^2\) is added to the cosine combination signal. Set the sampling period \(T=0.01\,{\hbox {s}}\), \(t=nT\), \(n=1,2,3,\ldots \), the number of iterations \(k=500\), and the data length \(L=2000\). Apply the GI algorithm and the NI algorithm to estimate the parameter vector \({\varvec{\theta }}\). The parameter estimation errors \(\delta :=\Vert {\hat{{\varvec{\theta }}}}_k-{\varvec{\theta }}\Vert /\Vert {\varvec{\theta }}\Vert \times 100\%\) versus k are illustrated in Fig. 1, and the parameter estimates and their estimation errors are exhibited in Table 1.
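For reference, the relative estimation error \(\delta \) defined above can be computed as in the following short Python sketch. The function name and the numbers in the usage note are illustrative assumptions, not results from the paper.

```python
import numpy as np

def relative_error_percent(theta_hat, theta):
    """delta = ||theta_hat - theta|| / ||theta|| * 100 (in percent)."""
    theta_hat = np.asarray(theta_hat, dtype=float)
    theta = np.asarray(theta, dtype=float)
    return np.linalg.norm(theta_hat - theta) / np.linalg.norm(theta) * 100.0
```

For example, with a made-up true vector [3, 4] and estimate [3, 4.5], the error norm is 0.5 and the norm of the true vector is 5, so \(\delta =10\%\).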

From the comparison of the GI algorithm and the NI algorithm, we can draw the following conclusions:

- The parameter estimation errors obtained by the GI algorithm and the NI algorithm gradually decrease as the iteration k increases.

- The NI parameter estimates approach their true values within only dozens of iterations.

- Table 1 and Fig. 1 show that the NI algorithm gives more accurate parameter estimates than the GI algorithm under the same conditions.

Example 2

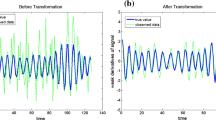

Consider the signal model in Example 1. In this simulation, the sampling period is 0.1 s and the number of iterations is \(k=100\); v(t) is noise with zero mean and variances \(\sigma ^2=0.50^2\) and \(\sigma ^2=1.00^2\), respectively. The distribution of the measured data is shown in Fig. 2.

Apply the HI algorithm in (43)–(53) to estimate the parameter vectors \({\varvec{{\alpha }}}\), \({\varvec{\omega }}\), and \({\varvec{\beta }}\) of the dual-frequency signal model. The parameter estimates and errors \(\delta \) are shown in Table 2 and Fig. 3. To test the effectiveness of the estimated signal, the actual signal and its estimate are drawn in Fig. 4, where the solid line denotes the actual signal and the dotted line denotes its estimate.

From Fig. 3, we can see that the parameter estimation errors \(\delta \) obtained by the HI algorithm decrease as k increases. As the noise level decreases, the HI algorithm gives more accurate parameter estimates; see the parameter estimation errors in the last column of Table 2 and the error curves in Fig. 3.

6 Conclusions

This paper presents a hierarchical least squares and gradient-based iterative algorithm to estimate all parameters of dual-frequency signal models from the observed data. The simulation results indicate that the proposed algorithm can improve the parameter estimation accuracy and reduce the computational burden. The idea can be extended to estimate the parameters of multi-frequency signals and applied to signal detection and reconstruction, as well as to signal processing. The proposed algorithms in this paper can be combined with other methods such as the neural network methods [8, 27] and the kernel methods [16, 24] to study parameter identification of different systems [6, 7, 13,14,15, 18, 19, 23, 28, 38, 45, 58] and can be applied to other fields [17, 33, 36, 39, 46, 55,56,57].

References

[1] B. Boashash, Estimating and interpreting the instantaneous frequency of a signal—part 1: fundamentals. Proc. IEEE 80(4), 520–538 (1992)

[2] Y. Cao, P. Li, Y. Zhang, Parallel processing algorithm for railway signal fault diagnosis data based on cloud computing. Future Gener. Comput. Syst. 88, 279–283 (2018)

[3] Y. Cao, L.C. Ma, S. Xiao et al., Standard analysis for transfer delay in CTCS-3. Chin. J. Electron. 26(5), 1057–1063 (2017)

[4] Y. Cao, G. Wei, F.J. Chen, An exact analysis of modified covariance frequency estimation algorithm based on correlation of single-tone. Signal Process. 92(11), 2785–2790 (2012)

[5] Y. Cao, Y. Wen, X. Meng, W. Xu, Performance evaluation with improved receiver design for asynchronous coordinated multipoint transmissions. Chin. J. Electron. 25(2), 372–378 (2016)

[6] G.Y. Chen, M. Gan, C.L.P. Chen et al., A regularized variable projection algorithm for separable nonlinear least squares problems. IEEE Trans. Autom. Control. 64(2), 1 (2019). https://doi.org/10.1109/TAC.2018.2838045

[7] G.Y. Chen, M. Gan, F. Ding et al., Modified Gram–Schmidt method-based variable projection algorithm for separable nonlinear models. IEEE Trans. Neural Netw. Learn. Syst. 1, 1 (2018). https://doi.org/10.1109/TNNLS.2018.2884909

[8] M.Z. Chen, D.Q. Zhu, A workload balanced algorithm for task assignment and path planning of inhomogeneous autonomous underwater vehicle system. IEEE Trans. Cogn. Dev. Syst. PP, 1 (2018). https://doi.org/10.1109/TCDS.2018.2866984

[9] J.L. Ding, Recursive and iterative least squares parameter estimation algorithms for multiple-input–output-error systems with autoregressive noise. Circuits Syst. Signal Process. 37(5), 1884–1906 (2018)

[10] J.L. Ding, The hierarchical iterative identification algorithm for multi-input–output-error systems with autoregressive noise. Complexity (2017) https://doi.org/10.1155/2017/5292894 (Article ID 5292894)

[11] F. Ding, H.B. Chen, L. Xu et al., A hierarchical least squares identification algorithm for Hammerstein nonlinear systems using the key term separation. J. Frankl. Inst. 355(8), 3737–3752 (2018)

[12] F. Ding, L. Xu, F.E. Alsaadi, T. Hayat, Iterative parameter identification for pseudo-linear systems with ARMA noise using the filtering technique. IET Control Theory Appl. 12(7), 892–899 (2018)

[13] M. Gan, C.L.P. Chen, G.Y. Chen et al., On some separated algorithms for separable nonlinear squares problems. IEEE Trans. Cybern. 48(10), 2866–2874 (2018)

[14] M. Gan, H.X. Li, H. Peng, A variable projection approach for efficient estimation of RBF-ARX model. IEEE Trans. Cybern. 45(3), 462–471 (2015)

[15] Z.W. Ge, F. Ding, L. Xu, A. Alsaedi, T. Hayat, Gradient-based iterative identification method for multivariate equation-error autoregressive moving average systems using the decomposition technique. J. Frankl. Inst. 356, 1–19 (2019). https://doi.org/10.1016/j.jfranklin.2018.12.002

[16] F.Z. Geng, S.P. Qian, An optimal reproducing kernel method for linear nonlocal boundary value problems. Appl. Math. Lett. 77, 49–56 (2018)

[17] P.C. Gong, W.Q. Wang, F.C. Li et al., Sparsity-aware transmit beamspace design for FDA-MIMO radar. Signal Process. 144, 99–103 (2018)

[18] Y. Gu, F. Ding, J.H. Li, States based iterative parameter estimation for a state space model with multi-state delays using decomposition. Signal Process. 106, 294–300 (2015)

[19] Y. Gu, J. Liu, X. Li, State space model identification of multirate processes with time-delay using the expectation maximization. J. Frankl. Inst. 356, 1–17 (2019). https://doi.org/10.1016/j.jfranklin.2018.08.030

[20] M. Hou, Amplitude and frequency estimator of a sinusoid. IEEE Trans. Autom. Control 50(6), 855–858 (2005)

[21] M. Hou, Estimation of sinusoidal frequencies and amplitudes using adaptive identifier and observer. IEEE Trans. Autom. Control 52(3), 493–499 (2007)

[22] M. Hou, Parameter identification of sinusoids. IEEE Trans. Autom. Control 57(2), 467–472 (2012)

[23] Y.B. Hu, Q. Zhou, H. Yu, Z. Zhou, F. Ding, Two-stage generalized projection identification algorithms for controlled autoregressive systems. Circuits Syst. Signal Process. 38, 1–17 (2019). https://doi.org/10.1007/s00034-018-0996-0

[24] X.Y. Li, B.Y. Wu, A new reproducing kernel collocation method for nonlocal fractional boundary value problems with non-smooth solutions. Appl. Math. Lett. 86, 194–199 (2018)

[25] F.B. Li, L.G. Wu, P. Shi, C.C. Lim, State estimation and sliding mode control for semi-Markovian jump systems with mismatched uncertainties. Automatica 51, 385–393 (2015)

[26] J.H. Li, W. Zheng, J.P. Gu, L. Hua, A recursive identification algorithm for Wiener nonlinear systems with linear state-space subsystem. Circuits Syst. Signal Process. 37(6), 2374–2393 (2018)

[27] X. Li, D.Q. Zhu, An adaptive SOM neural network method for distributed formation control of a group of AUVs. IEEE Trans. Ind. Electron. 65(10), 8260–8270 (2018)

[28] Q.Y. Liu, F. Ding, Auxiliary model-based recursive generalized least squares algorithm for multivariate output-error autoregressive systems using the data filtering. Circuits Syst. Signal Process. 38, 1–21 (2019). https://doi.org/10.1007/s00034-018-0871-z

[29] S.Y. Liu, L. Xu, F. Ding, Iterative parameter estimation algorithms for dual-frequency signal models. Algorithms 10(4), 1–13 (2017). https://doi.org/10.3390/a10040118

[30] P.P. Liu, S.G. Zhang, Newton’s method for solving a class of nonlinear matrix equations. J. Comput. Appl. Math. 255, 254–267 (2014)

[31] P. Ma, F. Ding, Q.M. Zhu, Decomposition-based recursive least squares identification methods for multivariate pseudolinear systems using the multi-innovation. Int. J. Syst. Sci. 49(5), 920–928 (2018)

[32] M. Nafar, G.B. Gharehpetian, T. Niknam, Using modified fuzzy particle swarm optimization algorithm for parameter estimation of surge arresters model. Int. J. Innov. Comput. Inf. Control 8(1B), 567–581 (2012)

[33] J. Pan, W. Li, H.P. Zhang, Control algorithms of magnetic suspension systems based on the improved double exponential reaching law of sliding mode control. Int. J. Control Autom. Syst. 16(6), 2878–2887 (2018)

[34] J. Pan, H. Ma, X. Jiang, et al., Adaptive gradient-based iterative algorithm for multivariate controlled autoregressive moving average systems using the data filtering technique. Complexity (2018). https://doi.org/10.1155/2018/9598307 (Article ID 9598307)

[35] H. Ramos, M.T.T. Monteiro, A new approach based on the Newton’s method to solve systems of nonlinear equations. J. Comput. Appl. Math. 318, 3–13 (2017)

[36] Z.H. Rao, C.Y. Zeng, M.H. Wu et al., Research on a handwritten character recognition algorithm based on an extended nonlinear kernel residual network. KSII Trans. Internet Inf. Syst. 12(1), 413–435 (2018)

[37] P. Stoica, H.B. Li, J. Li, Amplitude estimation of sinusoidal signals: survey, new results, and an application. IEEE Trans. Signal Process. 48(2), 338–352 (2000)

[38] L.J. Wan, F. Ding, Decomposition-based gradient iterative identification algorithms for multivariable systems using the multi-innovation theory. Circuits Syst. Signal Process. 38, 1–20 (2019). https://doi.org/10.1007/s00034-018-1014-2

[39] Y. Wang, Y. Si, B. Huang, S.X. Ding, Survey on the theoretical research and engineering applications of multivariate statistics process monitoring algorithms: 2008–2017. Can. J. Chem. Eng. 96(10), 2073–2085 (2018)

[40] L. Xu, Application of the Newton iteration algorithm to the parameter estimation for dynamical systems. J. Comput. Appl. Math. 288, 33–43 (2015)

[41] L. Xu, The parameter estimation algorithms based on the dynamical response measurement data. Adv. Mech. Eng. 9(11), 1–12 (2017). https://doi.org/10.1177/1687814017730003

[42] L. Xu, F. Ding, Iterative parameter estimation for signal models based on measured data. Circuits Syst. Signal Process. 37(7), 3046–3069 (2018)

[43] L. Xu, F. Ding, Parameter estimation for control systems based on impulse responses. Int. J. Control Autom. Syst. 15(6), 2471–2479 (2017)

[44] L. Xu, F. Ding, Y. Gu et al., A multi-innovation state and parameter estimation algorithm for a state space system with d-step state-delay. Signal Process. 140, 97–103 (2017)

[45] H. Xu, F. Ding, E.F. Yang, Modeling a nonlinear process using the exponential autoregressive time series model. Nonlinear Dyn. (2019). https://doi.org/10.1007/s11071-018-4677-0

[46] G.H. Xu, Y. Shekofteh, A. Akgul et al., A new chaotic system with a self-excited attractor: entropy measurement, signal encryption, and parameter estimation. Entropy 20(2), 1–23 (2018). https://doi.org/10.3390/e20020086

[47] L. Xu, W.L. Xiong et al., Hierarchical parameter estimation for the frequency response based on the dynamical window data. Int. J. Control Autom. Syst. 16(4), 1756–1764 (2018)

[48] C. Yang, G. Wei, A noniterative frequency estimator with rational combination of three spectrum lines. IEEE Trans. Signal Process. 59(10), 5065–5070 (2011)

[49] C. Yin, S.S. Wu et al., Design and stability analysis of multivariate extremum seeking with Newton method. J. Frankl. Inst. 355(4), 1559–1578 (2018)

[50] Q.G. Zhang, The Rife frequency estimation algorithm based on real-time FFT. Signal Process. 25(6), 1002–1004 (2009)

[51] Y.Z. Zhang, Y. Cao, Y.H. Wen, L. Liang, F. Zou, Optimization of information interaction protocols in cooperative vehicle-infrastructure systems. Chin. J. Electron. 27(2), 439–444 (2018)

[52] X. Zhang, F. Ding, F.E. Alsaadi, T. Hayat, Recursive parameter identification of the dynamical models for bilinear state space systems. Nonlinear Dyn. 89(4), 2415–2429 (2017)

[53] X. Zhang, F. Ding, L. Xu et al., State filtering-based least squares parameter estimation for bilinear systems using the hierarchical identification principle. IET Control Theory Appl. 12(12), 1704–1713 (2018)

[54] X. Zhang, L. Xu, F. Ding et al., Combined state and parameter estimation for a bilinear state space system with moving average noise. J. Frankl. Inst. 355(6), 3079–3103 (2018)

[55] W.H. Zhang, L. Xue, X. Jiang, Global stabilization for a class of stochastic nonlinear systems with SISS-like conditions and time delay. Int. J. Robust Nonlinear Control 28(13), 3909–3926 (2018)

[56] N. Zhao, R. Liu, Y. Chen et al., Contract design for relay incentive mechanism under dual asymmetric information in cooperative networks. Wirel. Netw. 24(8), 3029–3044 (2018)

[57] D. Zhao, Y. Wang, Y. Li, S.X. Ding, H-infinity fault estimation for two-dimensional linear discrete time-varying systems based on Krein space method. IEEE Trans. Syst. Man Cybern. Syst. 48(12), 2070–2079 (2018)

[58] Z.P. Zhou, X.F. Liu, State and fault estimation of sandwich systems with hysteresis. Int. J. Robust Nonlinear Control 28(13), 3974–3986 (2018)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 61873111) and the 111 Project (B12018).


Liu, S., Ding, F., Xu, L. et al. Hierarchical Principle-Based Iterative Parameter Estimation Algorithm for Dual-Frequency Signals. Circuits Syst Signal Process 38, 3251–3268 (2019). https://doi.org/10.1007/s00034-018-1015-1


DOI: https://doi.org/10.1007/s00034-018-1015-1