Abstract

In this paper, a weak derivatives (WD) criterion is introduced to solve the frequency estimation problem for multi-sinusoidal signals corrupted by noise. The problem is modeled as a new least squares optimization task combined with WD. To overcome the potential basis mismatch effect caused by discretization of the frequency parameters, a modified orthogonal matching pursuit algorithm is proposed to solve the optimization problem by coupling it with a novel multi-grid dictionary training strategy. The proposed algorithm is validated on simulated datasets with white noise and stationary colored noise. The simulation studies show that the proposed algorithm achieves more accurate and robust estimation than state-of-the-art algorithms.

1 Introduction

The frequency estimation of multi-sinusoidal signals in additive noise arises in diverse areas, such as direction-of-arrival estimation of narrowband source signals, power supply systems, speech, radar, and sonar signal processing, and biomedical engineering [6, 14, 17, 19, 26, 28]. For white additive noise, a variety of approaches have been proposed, and they differ in estimation accuracy and computational complexity. These approaches include the fast Fourier transform (FFT), modified iteration scheme algorithms, characteristic polynomial-based methods, and subspace-based methods [4, 5, 15, 17, 18, 26, 27, 29]. Colored additive noise is frequently encountered in practice [10, 22]. Computational methods developed for this scenario include higher-order statistics [7, 22, 23], prefiltering-based ESPRIT [10], and parametric least squares estimators [11, 12, 13, 24]. The least squares (LS) method [2, 3, 20] is the most widely used among these methods, because it is simple and does not require significant prior knowledge of the signal statistics. However, like most existing state-of-the-art methods, the LS algorithm degrades at medium or low signal-to-noise ratio (SNR).

Multi-sinusoidal signals generally have a parsimonious structure consisting of a small number of unit-norm sinusoids. Therefore, sparse representation theory is commonly used in the frequency estimation of multi-sinusoidal signals to obtain the best sparse approximation of the observed signals. For efficient implementation, the orthogonal matching pursuit (OMP) algorithm, a greedy algorithm, iteratively identifies the sinusoidal atom in the dictionary that yields the greatest improvement in approximation quality. The dictionary is generally designed by discretizing the frequency parameter on a grid. When the frequency is coarsely discretized, the dictionary is small, and OMP becomes computationally attractive for identifying the best atom at each iteration. However, the true underlying frequencies are then more likely to lie off the grid. This is called the “basis mismatch” or “off-grid” effect, which significantly compromises the reconstruction performance. More recent methods, such as gradient-based local search and Newton-based cyclic refinement [16], have been developed to alleviate the problem.

To enhance the robustness of frequency estimation for noisy signals, this paper introduces a weighted LS optimization with a penalty term based on the weak derivative (WD). Mathematically, WD generalizes the concept of the derivative to non-differentiable functions, and it has the ability to suppress noise. The LS criterion is a commonly used criterion that minimizes the sum of the squared residuals between the observed and the fitted values. However, the LS method ignores the connections among the data points, which matter especially for time series signals: the individual data points are connected with each other through the derivatives of the underlying continuous-time function. Ignoring this connection information may easily lead to overfitting. For this reason, derivatives have been used in some system identification tasks [1, 21], and WD has been successfully applied to the identification of nonlinear dynamic systems, where it achieved more accurate approximation [8]. Thus, to some extent, WD can prevent the overfitting caused by LS optimization and can suppress the noise. The proposed LS + WD optimization model is solved by a modified OMP algorithm. In addition, a novel multi-grid dictionary learning (MGDL) strategy is applied to alleviate the possible basis mismatch effect. Each MGDL iteration ranks the atoms by OMP and then inserts new samples around the frequencies corresponding to the top atoms. The proposed method is validated using simulated data with both white and colored noise at different SNRs. Compared with state-of-the-art algorithms, the proposed algorithm obtains more accurate estimates with higher computational efficiency.

2 Background

2.1 LS Frequency Estimation for Multi-sinusoidal Signals Model

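We use \( y(n) \) to denote the observed p-component multi-sinusoidal signal, which is modeled as

$$ y(n) = \sum\limits_{i = 1}^{p} {x_{i}^{ls} \sin (\omega_{i} nt_{s} )} + e(n),\quad n = 1, \ldots ,N, \tag{1} $$

where the parameters \( x_{i}^{ls} \) and \( \omega_{i} \) are, respectively, the amplitude and frequency of the i-th component, \( t_{s} \) is the sampling period, \( e(n) \) denotes the additive noise process, and \( N \) is the number of samples.

Let

$$ {\mathbf{G}} = [{\mathbf{g}}_{1} \cdots {\mathbf{g}}_{p} ],\quad {\mathbf{g}}_{i} = [\begin{array}{*{20}c} {\sin (\omega_{i} \cdot t_{s} )} & {\sin (\omega_{i} \cdot 2t_{s} )} & \cdots & {\sin (\omega_{i} \cdot Nt_{s} )} \\ \end{array} ]^{\text{T}} ,\quad {\mathbf{x}}^{ls} = [\begin{array}{*{20}c} {x_{1}^{ls} } & \cdots & {x_{p}^{ls} } \\ \end{array} ]^{\text{T}} , \tag{2} $$

then the LS frequency estimation can be achieved by minimizing the following loss function

$$ \left\| {{\mathbf{y}} - {\mathbf{Gx}}^{ls} } \right\|_{2}^{2} , \tag{3} $$

where \( {\mathbf{y}} = [\begin{array}{*{20}c} {y(1)} & {y(2)} & \cdots & {y(N)} \\ \end{array} ]^{\text{T}} \).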
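For a fixed set of candidate frequencies, the LS fit reduces to a linear problem in the amplitudes. The following is a minimal sketch of that step, assuming zero phases and the sinusoidal atoms \( {\mathbf{g}}_{i} \) given in Sect. 4. The function names `sine_dictionary` and `ls_amplitudes` and the noise-free example values are illustrative assumptions, not part of the original method description.

```python
import numpy as np

def sine_dictionary(freqs, n_samples, ts):
    """Columns are g_i = [sin(w_i*ts), sin(w_i*2*ts), ..., sin(w_i*N*ts)]^T."""
    n = np.arange(1, n_samples + 1)
    return np.sin(np.outer(n * ts, freqs))

def ls_amplitudes(y, freqs, ts):
    """Least squares amplitudes for a fixed set of candidate frequencies."""
    G = sine_dictionary(freqs, len(y), ts)
    x, *_ = np.linalg.lstsq(G, y, rcond=None)
    return x

# Noise-free two-component example (values chosen for illustration only).
ts, N = 0.1, 256
true_freqs = np.array([0.6, 1.7])
y = sine_dictionary(true_freqs, N, ts) @ np.array([1.0, 2.0])
x_hat = ls_amplitudes(y, true_freqs, ts)
```

When the frequencies are unknown, the same fit has to be repeated over candidate frequency sets, which is what motivates the dictionary-based search in the later sections.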

2.2 Weak Derivation

Let \( z \) be a function in the Lebesgue space \( L^{1} ([a,\;b]) \), and let \( \varphi (t) \in C_{0}^{\infty } ([a,b]) \) be a smooth test function with compact support on \( [a,b] \). Then \( D(z(t)) \) is a weak derivative of \( z(t) \) if \( \int_{a}^{b} {z(t)D(\varphi (t)){\text{d}}t} = - \int_{a}^{b} {\varphi (t)D(z(t)){\text{d}}t} \) holds for every such \( \varphi \), and \( D( \cdot ) \) is called the weak derivation operator. An efficient method to calculate \( D(\, \cdot \,) \) was derived in [8].

Here, the filter \( \varphi (n) \) is set as a Gaussian function since it is widely used to achieve smoothing effect on the observed signals. \( \frac{\text{d}}{{{\text{d}}n}}\varphi (n) \) is calculated using the first-order difference to approximate the derivative of \( \varphi (n) \).
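One possible discrete realization of the operator is sketched below. It assumes the form \( Dy(n) = - \sum\nolimits_{k} {y(n + k)\varphi^{\prime } (k)} \), a Gaussian window whose standard deviation is one sixth of the support (an arbitrary choice), and a first-order difference of the zero-padded window. All function names are hypothetical, and the exact formula of [8] may differ in indexing or scaling.

```python
import numpy as np

def gaussian_test_function(n0):
    """Gaussian window with n0 samples, normalised to unit sum (assumed form)."""
    k = np.arange(n0)
    sigma = n0 / 6.0  # assumption: the window spans roughly +/- 3 sigma
    phi = np.exp(-0.5 * ((k - (n0 - 1) / 2.0) / sigma) ** 2)
    return phi / phi.sum()

def weak_derivative(y, phi):
    """Dy(n) = -sum_k y(n+k) * phi'(k); the output has len(y) - len(phi) samples."""
    # First-order difference of phi, zero-padded so that phi vanishes
    # outside its support and the differences sum to zero.
    dphi = np.diff(np.concatenate(([0.0], phi, [0.0])))
    return -np.correlate(np.asarray(y, dtype=float), dphi, mode="valid")

N, n0 = 256, 90
phi = gaussian_test_function(n0)
d_ramp = weak_derivative(3.0 * np.arange(N), phi)   # slope-3 ramp
d_const = weak_derivative(np.ones(N), phi)          # constant signal
```

With this construction a constant maps to zero and a linear ramp maps to its slope, which is the behavior expected of a smoothed derivative.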

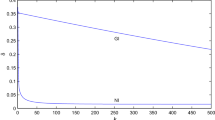

To illustrate the ability of the operator D to suppress noise, Fig. 1 shows an example with a mixture of three sinusoidal components corrupted by additive Gaussian noise. We investigate the noise effect on the signal before and after transformation by the operator D. The left panel indicates that, before transformation, the noise effect is evident and the discrepancy between the true values and the noisy observations is large. In contrast, the discrepancy is largely reduced after both the true values and the noisy signal are transformed by the operator D. These results indicate that the weak derivatives are capable of reducing the noise effect.

3 The Modified OMP Algorithm

3.1 The Weighted Criterion

To enhance the robustness of the algorithm, an LS + WD criterion is introduced to modify the objective function in (3):

The first term measures the local approximation error by calculating the difference between the observed and reconstructed signals, while the second term characterizes a more general divergence between the trend of the observed and reconstructed signals. The tradeoff parameter \( \mu \) is applied to balance the importance of the “local error” and the “general error” in the training phase, which can be selected by cross-validation.

Based on the definition of the weak derivatives, minimizing the improved weighted criterion (6) is equivalent to solving a new least squares problem
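The reduction to a single least squares problem can be sketched as follows. It is assumed here that the weights enter as \( \lambda \) and \( \mu \) on the residual and on its weak derivative, matching the stacked vector used in the initialization of Sect. 4. The symbols \( {\mathbf{A}} \) and \( {\mathbf{b}} \) are introduced for illustration only. Because \( D \) is linear, \( D({\mathbf{Gx}}) = (D{\mathbf{G}}){\mathbf{x}} \), so the two squared norms combine into one:

$$ \lambda^{2} \left\| {{\mathbf{y}} - {\mathbf{Gx}}} \right\|_{2}^{2} + \mu^{2} \left\| {D{\mathbf{y}} - (D{\mathbf{G}}){\mathbf{x}}} \right\|_{2}^{2} = \left\| {{\mathbf{b}} - {\mathbf{Ax}}} \right\|_{2}^{2} ,\quad {\mathbf{b}} = \left[ {\begin{array}{*{20}c} {\lambda {\mathbf{y}}} \\ {\mu D{\mathbf{y}}} \\ \end{array} } \right],\quad {\mathbf{A}} = \left[ {\begin{array}{*{20}c} {\lambda {\mathbf{G}}} \\ {\mu D{\mathbf{G}}} \\ \end{array} } \right]. $$

Under this assumption, any solver for ordinary least squares problems, including OMP, can be applied to the stacked pair \( ({\mathbf{A}},{\mathbf{b}}) \) without modification.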

3.2 Modified Orthogonal Matching Pursuit

The classic OMP is an efficient scheme to iteratively solve the LS-type optimization problems by constructing the model term by term. Given discrete observations of the multi-sinusoidal signal \( y(n),n = 1, \ldots ,N \) and the original dictionary \( g_{i} (n),\;n = 1, \ldots ,N \), the discrete forms of (4) and (5) can be written as

where \( \varphi^{\prime } ( \cdot ) = \frac{\text{d}}{{{\text{d}}n}}\varphi ( \cdot ) \), \( n_{0} \) is the support of the function \( \varphi ( \cdot ) \), and \( n = 1, \ldots ,N - n_{0} \).

The matrix form of (7) can be written as

where

It follows that a classic OMP can be applied to solve (8) by minimizing the loss function (6).
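As a hedged illustration, the sketch below runs a plain OMP on a stacked system built from the signal and an assumed discrete weak derivative. The weights `lam` and `mu`, the Gaussian window shape, the grid, the noise level, and all function names are assumptions made for this example, not values or definitions taken from the paper.

```python
import numpy as np

def sine_dictionary(freqs, n_samples, ts):
    """Columns are sin(w_i * n * ts) for n = 1..N."""
    n = np.arange(1, n_samples + 1)
    return np.sin(np.outer(n * ts, freqs))

def wd_matrix(n_samples, n0):
    """Matrix form of an assumed discrete weak derivative (Gaussian window)."""
    k = np.arange(n0)
    phi = np.exp(-0.5 * ((k - (n0 - 1) / 2.0) / (n0 / 6.0)) ** 2)
    phi /= phi.sum()
    dphi = np.diff(np.concatenate(([0.0], phi, [0.0])))  # length n0 + 1
    D = np.zeros((n_samples - n0, n_samples))
    for r in range(n_samples - n0):
        D[r, r:r + n0 + 1] = -dphi
    return D

def omp(A, b, n_atoms):
    """Plain OMP: pick the column most correlated with the residual, refit by LS."""
    norms = np.linalg.norm(A, axis=0)
    norms[norms == 0] = np.inf          # all-zero columns are never selected
    support, coef, residual = [], np.zeros(0), b.copy()
    for _ in range(n_atoms):
        score = np.abs(A.T @ residual) / norms
        if support:
            score[support] = -np.inf    # do not pick the same atom twice
        support.append(int(np.argmax(score)))
        coef, *_ = np.linalg.lstsq(A[:, support], b, rcond=None)
        residual = b - A[:, support] @ coef
    return support, coef

rng = np.random.default_rng(0)
ts, N, n0, lam, mu = 0.1, 256, 90, 1.0, 0.3
grid = 0.1 * np.arange(1, 32)           # candidate frequencies 0.1, ..., 3.1
G = sine_dictionary(grid, N, ts)
y = sine_dictionary(np.array([0.6, 1.7]), N, ts) @ np.array([1.0, 2.0])
y = y + 0.2 * rng.standard_normal(N)
D = wd_matrix(N, n0)
A = np.vstack([lam * G, mu * (D @ G)])  # stacked dictionary
b = np.concatenate([lam * y, mu * (D @ y)])
support, coef = omp(A, b, n_atoms=2)
est_freqs = np.sort(grid[support])
```

In this example both true frequencies lie on the grid, so the off-grid effect discussed in the next subsection does not arise.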

3.3 Multi-grid Dictionary Training Strategy

In the classic OMP, the candidate parameters are typically sampled uniformly on a grid. However, it is rather challenging to select a proper sampling rate. A low-resolution grid with a small sampling rate is likely to cause a serious off-grid effect and lead to poor reconstruction performance. On the other hand, a high-resolution dictionary with a large number of atoms results in a heavy computational burden. Therefore, instead of defining a large dictionary, we propose a multi-grid scheme to adaptively refine a coarse dictionary. The coarse dictionary \( {\mathbf{G}}^{(0)} \) is constructed by discretizing the frequency parameters with a sampling rate of 10 within the frequency range of the signal. Table 1 describes the procedure of updating the dictionary \( {\mathbf{G}}^{(l - 1)} \) to \( {\mathbf{G}}^{(l)} \). At steps 1 and 2, OMP is used to select the index set \( {\text{ind}}_{l} \) of \( {\mathbf{G}}^{(l - 1)} \), whose size is \( nf_{l}^{\text{cand}} \). At step 3, the active frequency set \( \varOmega_{l} \) is formed as the subset of the frequency sampling set \( {\text{fus}}_{l - 1} \) indexed by \( {\text{ind}}_{l} \). At step 4, the new frequency set \( {\text{fus}}_{l} \) is obtained by inserting new samples in the neighborhood of \( \varOmega_{l} \). Finally, the new dictionary \( {\mathbf{G}}^{(l)} \) is constructed from the frequency set \( {\text{fus}}_{l} \) based on Eqs. (2) and (10). The iteration terminates when the maximum level is reached.
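The insertion step can be sketched as below. The rule used here, placing `n_insert` evenly spaced samples between each active frequency and its immediate grid neighbours, is a hypothetical choice for illustration, and the function name `refine_grid` is likewise an assumption.

```python
import numpy as np

def refine_grid(freqs, active_idx, n_insert=1):
    """Insert n_insert evenly spaced samples between each active frequency
    and its immediate neighbours. `freqs` is assumed sorted ascending."""
    freqs = np.asarray(freqs, dtype=float)
    # Collect each neighbouring segment once, even if both of its ends are active.
    segments = {(min(i, j), max(i, j))
                for i in active_idx for j in (i - 1, i + 1)
                if 0 <= j < len(freqs)}
    new = [f for lo, hi in sorted(segments)
           for f in np.linspace(freqs[lo], freqs[hi], n_insert + 2)[1:-1]]
    return np.unique(np.concatenate([freqs, new]))

coarse = [0.5, 0.6, 0.7, 0.8]
refined = refine_grid(coarse, active_idx=[1], n_insert=1)
```

Only the neighborhood of the selected atom is densified, so the dictionary grows by a few atoms per level instead of doubling in size.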

4 The Modified OMP (MOMP) Algorithm

The modified OMP algorithm for the frequency estimation of multi-sinusoidal signals is illustrated in Fig. 2.

Initialization: Set hyper-parameters \( \mu \), Gaussian function \( \varphi (n) \) with support \( n_{0} \), original dictionary \( {\mathbf{G}}^{(0)} \) built from the initial frequency set \( {\text{fus}}_{0} = \{ \omega_{1} ,\omega_{2} , \ldots ,\omega_{{nf_{0} }} \} \).

Step 1 Given the current dictionary \( {\mathbf{G}}^{(0)} \), use OMP to obtain a solution \( {\mathbf{x}}^{(0)} \) and \( {\text{fus}}_{1} \).

(1) Let \( l = 1 \). For the dictionary \( {\mathbf{G}}^{(0)} \), use the test function to get the new dictionary

$$ {\tilde{\mathbf{G}}}^{(0)} = [{\tilde{\mathbf{g}}}_{1} \cdots {\tilde{\mathbf{g}}}_{{nf_{0} }} ] = \left[ {\begin{array}{*{20}c} {{\mathbf{g}}_{1} } & \cdots & {{\mathbf{g}}_{{nf_{0} }} } \\ {D{\mathbf{g}}_{1} } & \cdots & {D{\mathbf{g}}_{{nf_{0} }} } \\ \end{array} } \right], $$

with \( {\mathbf{g}}_{i} = [\begin{array}{*{20}c} {\sin (\omega_{i} \cdot t_{s} )} & {\sin (\omega_{i} \cdot 2t_{s} )} & \cdots & {\sin (\omega_{i} \cdot Nt_{s} )} \\ \end{array} ]^{\text{T}} \).

(2) For \( {\mathbf{r}}_{0} = {\mathbf{y}} \), \( {\mathbf{Y}}_{{I - {\text{LS}}}}^{0} = [{\tilde{\mathbf{r}}}_{0} ] = \left[ {\begin{array}{*{20}c} {\lambda {\mathbf{r}}_{0} } \\ {\mu D{\mathbf{r}}_{0} } \\ \end{array} } \right] \); therefore, we obtain

$$ l_{1} = \mathop {\arg \hbox{max} }\limits_{{1 \le j \le n_{M} }} \{ C({\tilde{\mathbf{r}}}_{0} ,{\tilde{\mathbf{g}}}_{j} )\} , $$

where the function \( C(\; \cdot \;,\; \cdot \;) \) denotes the correlation coefficient. Then, the first orthogonal regressor can be chosen as \( {\mathbf{h}}_{1} = {\mathbf{g}}_{{l_{1} }} \), and the residual vector can be updated as

$$ {\mathbf{r}}_{1} = {\mathbf{r}}_{0} - \frac{{{\mathbf{r}}_{0}^{\text{T} } {\mathbf{h}}_{1} }}{{{\mathbf{h}}_{1}^{\text{T} } {\mathbf{h}}_{1} }}{\mathbf{h}}_{1} . $$

(3) In general, let us assume that at the (m − 1)-th step, a subset \( {\mathbf{G}}_{m - 1}^{(0)} \) consisting of \( (m - 1) \) significant bases \( {\mathbf{g}}_{{l_{1} }} ,{\mathbf{g}}_{{l_{2} }} , \ldots ,{\mathbf{g}}_{{l_{m - 1} }} \) has been determined, and these bases have been transformed into a new group of orthogonal bases \( {\mathbf{h}}_{1} , \ldots ,{\mathbf{h}}_{m - 1} \). Let

$$ {\mathbf{h}}_{j}^{(m)} = {\mathbf{g}}_{j} - \sum\limits_{k = 1}^{m - 1} {\frac{{{\mathbf{g}}_{j}^{\text{T} } {\mathbf{h}}_{k} }}{{{\mathbf{h}}_{k}^{\text{T} } {\mathbf{h}}_{k} }}} {\mathbf{h}}_{k} , $$

$$ l_{m} = \mathop {\arg \hbox{max} }\limits_{{j \ne l_{k} ,1 \le k \le m - 1}} \left\{ {C\left( {{\tilde{\mathbf{r}}}_{m - 1} ,\left[ {\begin{array}{*{20}c} {{\mathbf{h}}_{j}^{(m)} } \\ {D{\mathbf{h}}_{j}^{(m)} } \\ \end{array} } \right]} \right)} \right\}. $$

The m-th significant basis can then be chosen as \( {\mathbf{h}}_{m} = {\mathbf{h}}_{{l_{m} }}^{(m)} \). The residual vector is given by

$$ {\mathbf{r}}_{m} = {\mathbf{r}}_{m - 1} - \frac{{{\mathbf{r}}_{m - 1}^{\text{T} } {\mathbf{h}}_{m} }}{{{\mathbf{h}}_{m}^{\text{T} } {\mathbf{h}}_{m} }}{\mathbf{h}}_{m} . $$

The iteration terminates when the maximum number of candidates \( nf_{1}^{\text{cand}} \) is reached. Then, \( \varOmega_{1} = \{ \omega_{{l_{1} }} ,\omega_{{l_{2} }} , \ldots ,\omega_{{l_{{nf_{1}^{\text{cand}} }} }} \} \), and \( {\text{fus}}_{1} \) is obtained by inserting new samples in the neighborhood of \( \varOmega_{1} \).

Step 2 Update \( {\mathbf{G}}^{(l)} \) using MGDL strategy in Table 1. Obtain the MOMP solution \( {\mathbf{x}}^{(l)} \) and \( {\text{fus}}_{l + 1} \) at the current dictionary \( {\mathbf{G}}^{(l)} \).

Step 3 If \( l < L - 1 \), use MOMP to update the dictionary; otherwise, terminate the algorithm. At the last iteration, p components are extracted by OMP, and their corresponding frequencies \( {\text{fus}}_{L} \) are the estimated frequencies output by the algorithm.
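The selection and deflation operations of Step 1 can be sketched as follows. In this sketch, \( C( \cdot , \cdot ) \) is taken to be the absolute cosine similarity, and a plain first-difference matrix stands in for the weak derivation operator. Both are assumptions made for illustration, as are the function names, the weights, and the small example dictionary.

```python
import numpy as np

def corr(u, v):
    """Absolute cosine similarity, a stand-in for C(.,.); 0 if either vector is zero."""
    den = np.linalg.norm(u) * np.linalg.norm(v)
    return 0.0 if den < 1e-12 else abs(float(u @ v)) / den

def deflated_selection(G, y, D, lam, mu, n_select):
    """Greedy atom selection with Gram-Schmidt deflation of the candidates."""
    r = np.asarray(y, dtype=float).copy()
    H, chosen = [], []
    for _ in range(n_select):
        r_aug = np.concatenate([lam * r, mu * (D @ r)])   # stacked residual
        best_j, best_score, best_h = None, -1.0, None
        for j in range(G.shape[1]):
            if j in chosen:
                continue
            g = G[:, j]
            # Remove the components of g along the already chosen h_k.
            h = g - sum(((g @ hk) / (hk @ hk)) * hk for hk in H)
            score = corr(r_aug, np.concatenate([h, D @ h]))
            if score > best_score:
                best_j, best_score, best_h = j, score, h
        if best_h is None or best_h @ best_h < 1e-12:
            break                                         # nothing left to add
        chosen.append(best_j)
        H.append(best_h)
        r = r - ((r @ best_h) / (best_h @ best_h)) * best_h
    return chosen, r

N, ts = 256, 0.1
freqs = np.array([0.3, 0.6, 0.9, 1.2, 1.5, 1.8])
G = np.sin(np.outer(np.arange(1, N + 1) * ts, freqs))
y = 2.0 * G[:, 3] + 1.0 * G[:, 1]          # noise-free mixture of two atoms
D = np.diff(np.eye(N), axis=0)             # first-difference stand-in for D
chosen, r = deflated_selection(G, y, D, lam=1.0, mu=0.5, n_select=2)
```

Because the chosen \( {\mathbf{h}}_{k} \) are mutually orthogonal, each residual update is a single projection and no joint refit is needed.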

5 Simulation Results

We use simulation studies to evaluate the performance of the proposed MOMP method against five state-of-the-art methods: MUSIC, OW-HMUSIC [29], OMP, Newtonized OMP (NOMP), and ZZB [18]. The simulation results show that MOMP outperforms these methods for signals with low SNR. All the phases are set to zero in these studies.

5.1 Frequency Estimation of Sinusoidal Signal with White Additive Noise

MOMP is compared with the other five methods in the frequency estimation of two-component sinusoidal signals corrupted by white Gaussian noise:

$$ y(n) = a_{1} \sin (\omega_{1} nt_{s} ) + a_{2} \sin (\omega_{2} nt_{s} ) + v(n). \tag{11} $$

The frequency and amplitude parameters are set as \( \omega_{1} = 0.6,\;\;\omega_{2} = 1.7,\;\;a_{1} = 1,\;\;{\text{and}}\;\;a_{2} = 2 \), respectively. The additive noise \( v(n) \) is Gaussian with zero mean and standard deviation \( \sigma \), and the support of the Gaussian test function \( \varphi (n) \) is \( n_{0} = 90 \). The sampling interval is \( t_{s} = 0.1 \). A total of 256 data points are generated according to (11). In the MOMP algorithm, the trade-off parameter \( \mu \) is selected from the candidate set \( \{ 0.15,0.2,0.25, \ldots ,0.5\} \) using cross-validation. For MOMP, OMP, and NOMP, the dictionary is constructed by discretizing the frequency parameters with a sampling rate of 10 within \( [0,\pi ] \), and thus the dictionary is of size \( 62 \times 256 \). In ZZB, the stopping criterion parameter \( \tau \) is set as \( \sigma^{ 2} \log N - \sigma^{ 2} \log \log \left( {\frac{1}{{1 - p_{0} }}} \right) \) with \( p_{0} = 10^{ - 2} \). In OW-HMUSIC, the parameter M is set to 100.

To evaluate the robustness of different methods against noise, signals with different SNRs are obtained by properly scaling the noise variance \( \sigma^{ 2} \). The SNR is defined as

We assess the detection result of a method using detection acceptance condition (DAC), which means that we accept a detection only if all the frequencies have been accurately estimated, i.e.,

Finally, the detection rate is defined as

The detection rate is a value in [0, 1], with 1 indicating best accuracy. All the presented simulation results are averaged over 100 independent experiments.
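The evaluation protocol can be sketched as below, assuming that a detection is accepted when the number of estimated frequencies matches and every estimate lies within a tolerance `tol` of its true value. The tolerance, the function names, and the trial data are hypothetical.

```python
import numpy as np

def detection_accepted(est, true, tol):
    """Accept a trial only if all frequencies are recovered within tol."""
    est = np.sort(np.asarray(est, dtype=float))
    true = np.sort(np.asarray(true, dtype=float))
    return est.shape == true.shape and bool(np.all(np.abs(est - true) <= tol))

def detection_rate(trials, true, tol):
    """Fraction of trials whose estimates satisfy the acceptance condition."""
    return float(np.mean([detection_accepted(e, true, tol) for e in trials]))

# Four made-up trials: two accepted, one with a wrong frequency, one with a missed component.
trials = [[0.6, 1.7], [1.71, 0.59], [0.6, 1.9], [0.6]]
rate = detection_rate(trials, true=[0.6, 1.7], tol=0.05)
```

Sorting both sets before comparison makes the check independent of the order in which the components were extracted.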

The detection rates of MOMP and the other five methods at varying SNRs are shown in Fig. 3. ZZB and the proposed MOMP are the only two methods that achieve a 100% detection rate at SNR = −1 or 0 dB. However, at lower SNR (< −5 dB), the accuracy of ZZB, MUSIC, and OW-HMUSIC decreases greatly, and all of their detection rates are below 40%. The detection rate of MOMP is nearly 10% higher than that of the other methods when SNR < −5 dB. These results demonstrate the superiority of the proposed MOMP method over the other methods, especially when the SNR is relatively low.

5.2 Frequency Estimation of Sinusoidal Signal with Stationary Colored Additive Noise

The proposed MOMP algorithm can also be used to improve frequency estimation in the presence of stationary colored additive noise. It has been proved that if the additive noise satisfies a certain assumption [11, 12, 13, 24], strong consistency or asymptotic normality results can be obtained [12], even if the noise does not satisfy the standard sufficient condition (Jennrich [9] or Wu [25]) for the least squares estimators to be consistent.

In this experiment, the sinusoidal signal is polluted by colored additive noise, and the two frequency parameters are very close with \( \omega_{1} = 0.63 \) and \( \omega_{2} = 0.66 \). The amplitude parameters are set as \( a_{1} = 1,a_{2} = 2 \). The noise \( v(n) \) is a random variable following the exponential distribution:

where \( s_{1} = 2.8,{\kern 1pt} \;s_{2} = 0.2 \), and 256 sample points are generated with sample rate 10. The values of \( \mu \) and \( n_{0} \) are the same as in experiment 1. With the stationary colored additive noise, both MUSIC and OW-HMUSIC can extract only one harmonic component when the two frequencies to be estimated have very close true values. That is, the frequency resolution rates of both methods are significantly decreased with the colored additive noise. Moreover, ZZB cannot detect any harmonic component in this case, because ZZB is specially designed for data with the Gaussian white noise. Therefore, we compare the detection rate of MOMP with that of OMP and NOMP in this experiment.

The MOMP algorithm coupled with the multi-grid approach is applied to estimate the frequency of the sinusoidal signal with 256 samples embedded in the colored additive noise with different SNRs. Here, the SNR is defined as

Because the frequency parameters in this experiment are not initially in the dictionary, we use the multi-grid procedure presented in Sect. 3.3. Figure 4 illustrates the detection rates of the MOMP, OMP, and NOMP algorithms. All three methods are able to distinguish the two frequency parameters when the SNR is 5 dB. However, when the SNR is decreased to 2 dB, only MOMP is still able to estimate the two frequencies with 100% accuracy, while the accuracy of the other two methods decreases to 95%. MOMP estimates the frequencies with a detection rate higher than 80% for SNRs above −1 dB, while the rate of both OMP and NOMP is almost 60%.

6 Conclusion

Sparse representation is an effective way to solve the frequency estimation problem for multi-sinusoidal signals. However, when the signals are corrupted by additive noise, the commonly used least squares criterion cannot reconstruct the signal well. To address this issue, a new, stricter measurement of the residuals is proposed to improve the frequency estimation performance. The weak derivatives of the observed data and of the model-predicted values are combined with the classic least squares criterion via a weighting parameter to construct an improved weighted criterion.

The MOMP algorithm is proposed to efficiently detect the true underlying frequencies. A multi-grid dictionary training strategy is used to assist the selection of the dictionary and improve the efficiency of sparse reconstruction. The idea behind the multi-grid approach is to refine the dictionary over several levels of resolution. Simulation results show that the new algorithm significantly improves the accuracy of frequency estimation.

Despite the good performance of the proposed MOMP algorithm in the simulations, a mathematical proof is still needed to guarantee its effectiveness. In future work, we will analyze the theoretical properties and potential extensions of MOMP.

References

1. D. Brewer, M. Barenco, R. Callard, M. Hubank, J. Stark, Fitting ordinary differential equations to short time course data. Philos. Trans. R. Soc. A 366, 519–544 (2008)

2. A.E. Brito, C. Villalobos, S.D. Cabrera, Interior-point methods in l(1) optimal sparse representation algorithms for harmonic retrieval. Optim. Eng. 5, 503–531 (2004)

3. C. Cai, K. Zeng, L. Tang, D. Chen, W. Peng, J. Yan, X. Li, Towards adaptive synchronization measurement of large-scale non-stationary non-linear data. Future Gener. Comput. Syst. 43–44, 110–119 (2015)

4. C. Candan, Analysis and further improvement of fine resolution frequency estimation method from three DFT samples. IEEE Signal Process. Lett. 20, 913–916 (2013)

5. P. Dash, S. Hasan, A fast recursive algorithm for the estimation of frequency, amplitude, and phase of noisy sinusoid. IEEE Trans. Ind. Electron. 58, 4847–4856 (2011)

6. S. Djukanovic, An accurate method for frequency estimation of a real sinusoid. IEEE Signal Process. Lett. 23, 915–918 (2016)

7. M. Geng, H. Liang, J. Wang, Research on methods of higher-order statistics for phase difference detection and frequency estimation, in 2011 4th International Congress on Image and Signal Processing (IEEE 2011), pp. 2189–2193

8. Y.Z. Guo, L.Z. Guo, S.A. Billings, H.L. Wei, Ultra-orthogonal forward regression algorithms for the identification of non-linear dynamic systems. Neurocomputing 173, 715–723 (2016)

9. R. Jennrich, Asymptotic properties of the nonlinear least squares estimators. Ann. Math. Stat. 40, 633–643 (1969)

10. T. Jin, S. Liu, R. Flesch, Mode identification of low-frequency oscillations in power systems based on fourth-order mixed mean cumulant and improved TLS-ESPRIT algorithm. IET Gener. Transm. Dis. 11, 3737–3748 (2017)

11. D. Kundu, A. Mitra, Genetic algorithm based robust frequency estimation of sinusoidal signals with stationary errors. Eng. Appl. Artif. Intell. 23, 321–330 (2010)

12. D. Kundu, S. Nandi, Parameter estimation of chirp signals in presence of stationary noise. Stat. Sin. 18, 187–201 (2008)

13. D. Kundu, S. Nandi, Determination of discrete spectrum in a random field. Stat. Neerl. 57, 258–283 (2003)

14. S. Liu, Y. Zhang, T. Shan, R. Tao, Structure-aware Bayesian compressive sensing for frequency-hopping spectrum estimation with missing observations. IEEE Trans. Signal Process. 66, 2153–2166 (2018)

15. B. Mamandipoor, R. Dinesh, M. Upamanyu, Frequency estimation for a mixture of sinusoids: A near-optimal sequential approach, in IEEE Global Conference on Signal and Information Processing (IEEE, 2015), pp. 205–209

16. B. Mamandipoor, D. Ramasamy, U. Madhow, Newtonized orthogonal matching pursuit: frequency estimation over the continuum. IEEE Trans. Signal Process. 64, 5066–5081 (2016)

17. U. Orguner, C. Candan, A fine-resolution frequency estimator using an arbitrary number of DFT coefficients. Signal Process. 105, 17–21 (2014)

18. D. Ramasamy, S. Venkateswaran, U. Madhow, Compressive parameter estimation in AWGN. IEEE Trans. Signal Process. 62, 2012–2027 (2014)

19. P. Rodriguez, A. Tionbus, R. Teodorescu, M. Liserre, F. Blaabjerg, Flexible active power control of distributed generation systems during grid faults. IEEE Trans. Ind. Electron. 54, 2583–2592 (2007)

20. S. Sahnoun, E.H. Djermoune, C. Soussen, D. Brie, Sparse multidimensional modal analysis using a multigrid dictionary refinement. EURASIP J. Adv. Signal Process. 12, 1–10 (2012)

21. M. Schmidt, H. Lipson, Distilling free-form natural laws from experimental data. Science 324, 81–85 (2009)

22. Z. Shi, F. Fairman, Harmonic retrieval via state space and fourth-order cumulants. IEEE Trans. Signal Process. 42, 1109–1119 (1994)

23. R. van Vossen, H. Naus, A. Zwamborn, High-resolution harmonic retrieval using the full fourth-order cumulant. Signal Process. 90, 2288–2294 (2010)

24. A. Walker, On the estimation of a harmonic component in a time series with stationary independent residuals. Biometrika 58, 21–36 (1971)

25. C. Wu, Asymptotic theory of the nonlinear least squares estimation. Ann. Stat. 9, 501–513 (1981)

26. S. Yang, H. Li, Estimation of the number of harmonics using enhanced matrix. IEEE Signal Process. Lett. 14, 137–140 (2007)

27. P. Yannis, O. Rosec, S. Yannis, Iterative estimation of sinusoidal signal parameters. IEEE Signal Process. Lett. 17, 461–464 (2010)

28. M. Zhang, L. Fu, H. Li, G. Wang, Harmonic retrieval in complex noises based on wavelet transform. Digit. Signal Process. 18, 534–542 (2008)

29. Z. Zhou, S. Cheung, F. Chan, Optimally weighted music algorithm for frequency estimation of real harmonic sinusoids, in IEEE International Conference on Acoustics, Speech and Signal Processing (IEEE, 2012), pp. 3537–3540

Acknowledgements

This work is supported by the open research project of The Hubei Key Laboratory of Intelligent Geo-Information Processing with Grants KLIGIP2016A01 and KLIGIP2016A02, the specific funding for education science research by self-determined research funds of CCNU from the colleges’ basic research and operation of MOE with Grants 230-20205160288 and CCNU15A05022.

Cite this article

Fu, L., Zhang, M., Liu, Z. et al. Robust Frequency Estimation of Multi-sinusoidal Signals Using Orthogonal Matching Pursuit with Weak Derivatives Criterion. Circuits Syst Signal Process 38, 1194–1205 (2019). https://doi.org/10.1007/s00034-018-0906-5
