Abstract

Damage investigation and economic loss assessment for seismic events, as estimated by risk analysis, are serious concerns of safety managers. There are two types of risk analysis: conventional performance-based seismic analysis, which employs conditional probability, and reliability-based risk analysis, which implements the unified reliability analysis. Although reliability-based risk analysis requires further development in certain areas, it offers distinct advantages over conventional risk analysis. This study proposes a modification of the reliability-based risk analysis for simulating the Poisson point process, in which the unified reliability analysis is reformulated based on the fast integration analysis. By employing a point estimation procedure, this reformulation eliminates the simulation process of the sampling-based approach and yields a limited number of component reliability problems that can be solved by numerical nonlinear programming optimization. Another part of this study defines the different parts of the unified reliability analysis, such as modeling the ground motion by artificial earthquake generation; these tools aim to enhance the functionality of the reliability-based risk analysis. The performance and robustness of the proposed unified reliability analysis are investigated through a comprehensive numerical example involving a linear dynamic analysis of a finite-element model of a concrete circular tunnel. The results demonstrate the successful performance of the proposed framework in achieving accurate results with a significant decrease in computational cost.

1 Introduction

Tunnels, as essential parts of civil infrastructure, have many applications, such as roadway and railway tunnels in transportation and utility systems (Spyridis and Proske xxxx; Jin and Jin 2022; Ekmen and Avci 2023). According to several reports on earthquake events over the past three decades, tunnels have suffered severe damage, and special attention has since been paid to their seismic behavior (Çakır and Coşkun xxxx). Many studies report the damage incurred by underground structures during strong earthquakes, including the 1923 Kanto earthquake (Okamoto 1984), the 1995 Kobe earthquake (Asakura and Sato 1998), the 1999 Chi-Chi earthquake (Wang et al. 2001), the 1999 Kocaeli earthquake (Brandl and Neugebauer 2002), the 2004 Mid Niigata Prefecture earthquake (Jiang et al. 2010), and the 2008 Wenchuan earthquake (Wang et al. 2009). Hence, risk assessment is an important issue for tunnel engineers in seismically active areas (Huang et al. xxxx; Liu et al. 2023; Abed and Rashid 2023; Choudhuri and Chakraborty 2023).

A robust risk analysis is necessary to obtain a reasonable estimate of possible damage and probable costs. The conventional risk analysis approach proposed by the Pacific Earthquake Engineering Research (PEER) Center (Moehle and Deierlein 2004) applies conditional probability functions to estimate the exceedance probability. These functions include the seismic loss distribution conditioned on damage measures; the damage measures, known as fragility functions, conditioned on structural responses; the structural responses conditioned on earthquake intensity (Vamvatsikos and Cornell 2002); and a function that models earthquake intensity. Damage classification is a major part of conventional risk analysis; it is used to estimate the severity of damage and depends on the seismic source, the site, and the structural behavior (Wang and Zhang 2013; Zhao et al. 2021). A damage classification of tunnels might involve several parameters, such as the functionality and the extent of damage, including crack width and length (Wang and Zhang 2013; Qiu et al. 2021). In early studies, empirical fragility curves were derived from the damage records of past earthquakes (Dowding and Rozan 1978; Jing-Ming and Litehiser 1985). If the seismic damage records are inadequate, numerical approaches are considered as alternative sources for deriving fragility curves (Mohammadi-Haji and Ardakani 2020).

On the other hand, the reliability-based risk analysis proposed by Haukaas (2008) is a risk analysis focused on structural damage in earthquake engineering, with a unified limit state formulation expressed in terms of seismic loss rather than the traditional forms representing demand and capacity. An early application was the seismic loss assessment of a building in Vancouver, Canada, which concentrated on losses due to repair costs and used simple sine curves to estimate the damage (Koduru and Haukaas 2010). Later, Mahsuli and Haukaas (2013) released an updated version of the reliability-based risk analysis that included three levels of refinement: the component, building, and region levels. They computed the loss exceedance probabilities using generic models that only required the observable data of a region or a portfolio of buildings. Recently, Aghababaei and Mahsuli (2018, 2019) developed a new paradigm of reliability-based risk analysis that included many interacting predictive models to simulate the hazards, structural responses, damage, and economic and social losses. In particular, they presented the next level of reliability-based risk analysis by adding a detailed risk analysis at the component level. Their study addressed various loss assessment features that can be found in FEMA P-58 (Michael Mahoney et al. xxxx). They also generated the probabilistic damage models by means of a Bayesian regression methodology.

In the modified format of the unified reliability analysis (Mahsuli and Haukaas 2013), an attempt is made to reduce the computational burden by combining Monte Carlo simulation (Naess and Bo xxxx) with a search-based reliability method such as the first-order reliability method (FORM) (Arabaninezhad and Fakher 2021). Specifically, the Monte Carlo simulation generates timeline samples, each containing several time points whose number is governed by the mean occurrence rate of the event. The search-based algorithm then estimates the failure probability at each time point of a timeline. If all the time points in a timeline are in the safe state, the timeline sample is labeled as safe. In other words, the difference between this modified approach and the pure sampling approach is that the failure probability is estimated by search-based algorithms instead of sampling simulation (Haukaas 2008; Mahsuli and Haukaas 2013; Aghababaei and Mahsuli 2018, 2019). Although this modification reduces the computational effort compared to the pure sampling approach, a very large number of component reliability problems must still be solved because of the large number of timeline simulations.

To decrease the computational effort, Lu et al. (2019) proposed the fast integration algorithm for time-dependent reliability analysis, which estimates the failure probability by the point-estimate method. This method discretizes the time domain and the random-variate space based on Gauss–Legendre quadrature and the point-estimate method, respectively. As a result, the algorithm generates a limited number of reliability problems, determined by the product of the numbers of points defined in the two discretizations. The fast integration method makes it possible to choose the internal solver for the component reliability problems. This solver can be a search-based method such as the first-order reliability method. Another option is numerical nonlinear programming optimization, such as trust-region sequential quadratic programming (trust-region SQP) (Luo et al. 2021; Yamashita et al. 2020), which is a powerful method for solving nonlinear constrained optimization problems. Consequently, the accuracy and computational cost of an analysis depend on the internal solver of the fast integration method.

This article proposes a modified version of the unified reliability analysis based on the fast integration method in order to eliminate the time-consuming sampling approach from the algorithm. To this end, the fast integration method is reformulated to show how the implicit limit state function can be expressed in terms of loss cost instead of demand and capacity. After that, the different components of the proposed unified reliability analysis are discussed, including the ground motion model, structural response model, damage model, and loss model. Next, the proposed framework is applied to a circular concrete tunnel structure. The main advantages of the proposed method are a significant reduction in computational cost together with the accuracy obtained. The rest of this article is structured as follows: Sect. 2 first addresses the theoretical background of the unified reliability analysis, followed by the proposed fast integration method for time-dependent reliability analysis (i.e., the reliability-based risk analysis). The occurrence simulation, including the model library of seismic magnitude, intensity, tunnel response, etc., is presented in another part of Sect. 2. In Sect. 3, the functionality of the proposed unified reliability analysis based on the fast integration method is investigated in detail using a numerical example. This section is followed by concluding remarks in Sects. 4 and 5.

2 Unified Reliability Analysis Based on Fast Integration Method

In this section, the principles of the unified reliability analysis are introduced, and it is shown how the probability models can be employed in this framework. The conventional reliability problem can be expressed by Eq. (1) (Ditlevsen and Madsen 2005).

\[ p_{f} = \int_{g(\mathbf{u}) \le 0} f(\mathbf{u})\, \mathrm{d}\mathbf{u} \qquad (1) \]

where pf is the probability sought; f(u) is the joint probability distribution of the random variables u; and g(u) is the limit state function. In modern structural reliability analysis, the limit state function is often an implicit function of the random variables and requires a structural analysis in which the random variables are used as input parameters. In the unified reliability approach, the limit state function is expressed in terms of structural loss to estimate the probability of exceedance. Equation (2) shows this limit state function.

where lo is the structural loss related to a specific event, which is an implicit function of the random variables, and lp is the threshold value. Estimating a realization of lo requires a series of probabilistic models. Figure 1 schematically illustrates the steps for estimating the limit state function (structural loss). Generally, these models operate on realizations of the input random variables and yield deterministic outputs.

It can be seen from Fig. 1 that the realizations of the random variables and the deterministic outputs of the models are used to estimate the ground motion, structural response, damage ratio, and loss cost. The next step is to transfer the loss value to the reliability analysis module. Other approaches employ conditional probability distributions, known as fragility curves, to estimate damage and loss values. Although fragility curves have the advantage of incorporating empirical information from observed earthquake damage, they are not exactly probabilistic models. Moreover, fragility curves often take a single structural response parameter to estimate the damage. In contrast, the implicit models of the unified reliability analysis can use one or more structural responses in a damage model. Furthermore, any type of random variable can be used as an input to any or all of the models, with the correlation coefficients between them taken into account. A significant benefit of this framework is that it considers both aleatory and epistemic uncertainties. The final result of the unified reliability analysis can be more trustworthy because a synthetic probabilistic ground motion model can be simulated instead of using scaled records. In other words, the unified reliability analysis captures the probabilistic nature of the loading condition better than other approaches.

The main drawback of the unified reliability analysis is its computational cost: the method employs a random sampling algorithm to simulate timelines, each consisting of several samples, and the safe or failed state of every sample must be evaluated.
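
For orientation, the timeline-sampling baseline can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation of the cited studies: occurrences follow a homogeneous Poisson process with a hypothetical rate `rate`, and a fixed, hypothetical per-event failure probability `p_event` stands in for the component reliability analysis performed at each time point.

```python
import math
import random

def sample_timeline_failure(rate, horizon, p_event, rng):
    """Simulate one timeline; return True if any event in it causes failure."""
    t = 0.0
    while True:
        # Exponential inter-arrival times generate a homogeneous Poisson process.
        t += rng.expovariate(rate)
        if t > horizon:
            return False  # every time point in the timeline was safe
        # Hypothetical stand-in for a component reliability analysis at time t.
        if rng.random() < p_event:
            return True

def timeline_failure_probability(rate, horizon, p_event, n_timelines, seed=0):
    """Fraction of simulated timelines that contain at least one failure."""
    rng = random.Random(seed)
    failures = sum(
        sample_timeline_failure(rate, horizon, p_event, rng)
        for _ in range(n_timelines)
    )
    return failures / n_timelines

# Assumed illustrative values (not taken from the numerical example).
estimate = timeline_failure_probability(
    rate=0.1, horizon=60.0, p_event=0.05, n_timelines=20000
)
# Closed form for this simplified case (thinned Poisson process).
exact = 1.0 - math.exp(-0.1 * 60.0 * 0.05)
```

Even in this simplified setting, thousands of timelines are needed for a stable estimate, and each event in each timeline would require its own component analysis in a realistic problem.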

In the current study, the fast integration method is employed instead of the sampling approach to estimate the failure probability related to a specific cost threshold. Therefore, the use of the fast integration method is investigated first; after that, the other parts of the unified reliability method are discussed as the occurrence model.

2.1 Reformulation of the Fast Integration Method for Time-Dependent Reliability Analysis Based on Loss Cost

In order to evaluate the time-dependent reliability of a structure over the time interval (0, T], the time-varying limit state function can be defined as Eq. (3) (Mori and Ellingwood 1993), which is the original version of the limit state function utilized in the fast integration method.

where R is the structural resistance and S is the load effect; both are implicit functions of the random variables, which are separated into two categories. The vector u contains the random variables. This limit state function can be expanded and rewritten in terms of loss cost as Eq. (4), which, in comparison with Eq. (3), makes the implicit loss function and the loss threshold explicit.

This implicit limit state function shows that the employed loss function, lo, contains both the structural resistance and the load effect. Therefore, the probability of failure within the given time interval (0, T] can be estimated with the remaining calculations.

If it is assumed that the duration of a load event is very short and the load intensity remains constant during the event, the load can be modeled as a sequence of randomly occurring pulses with random intensity Si (i = 1, 2, …, n) at time ti and short duration τ during the time interval (0, T]. Under these assumptions, the resistance can be treated as constant during a load event. If n independent events occur within the time interval (0, T], the structural time-dependent failure probability can be expressed as Eq. (5) (Li et al. 2015).
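
As a small illustration of the independence assumption, the sketch below combines per-event failure probabilities into a failure probability over the interval. It assumes that the per-event probabilities `p_events` are already known and that the structure survives the interval only if it survives every event; the function name and the numbers are hypothetical.

```python
import math

def failure_probability_independent(p_events):
    """Return 1 - prod(1 - p_i) for independent load events."""
    survival = math.prod(1.0 - p for p in p_events)
    return 1.0 - survival

# Three hypothetical events with increasing per-event failure probability.
pf = failure_probability_independent([0.01, 0.02, 0.05])
```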

The parameters of the structural reliability analysis can be divided into time-independent and time-dependent random variables. The time-independent random variables, such as dead load, initial material strength, and geometric parameters, can be expressed as a vector X and remain constant during the time interval (Hong 2000). The time-dependent random variables, such as live load, earthquake load, environmental actions, and corrosion, can be expressed as a vector Y(t) and are generated randomly at arbitrary time instants. The time-dependent limit state function of a structure can then be written as G [X, Y(t), t, lp], where G [X, Y(t), t, lp] > 0 denotes the safe domain. Under the assumptions that the correlation between X and Y(t) at a specified time point is considered and that the occurrence of Y(t) in time is modeled as a Poisson point process, the general form of the failure probability within a given time interval (0, T] can be estimated by Eq. (6) (Hohenbichler and Rackwitz 1981; Liu and Kiureghian xxxx; Lu et al. 2017).

where T−1(.) is the inverse transformation, e.g., the Nataf transformation (Li et al. 2008); ΩUx is the domain of X in the standard normal space; \(\lambda_{y}\) is the mean occurrence rate of the load; UX and UY are independent standard normal random vectors; and ux and uy are the realizations of UX and UY, respectively. According to Eq. (6), the time-dependent reliability analysis involves a multi-dimensional integral over the time domain and the random-variate space. Generally, if the limit state function is implicit, highly nonlinear, or includes multiple random variables, evaluating the failure probability by direct integration is impractical. In the fast integration algorithm, Gauss–Legendre quadrature and the point-estimate method are used to discretize the integrals over the time domain and the random-variate space, respectively (Lu et al. 2019).

In the fast integration method, the first step is to discretize the integral over the time domain using Gauss–Legendre quadrature. Then, the point-estimate method is used to discretize the integral over the random variables. Equation (7) presents the time-dependent failure probability of the fast integration method after this discretization (Lu et al. 2019).

where E(.) denotes the expectation and PY|X,t (X, T, lp) is the conditional probability associated with the time-domain discretization; τk and wk are the abscissas and corresponding weights for Gauss quadrature with weight function 1, respectively; mT is the number of abscissas; UX is an nx-dimensional independent standard normal random vector; and nx is the number of random variables in X (Lu et al. 2019).
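
The time-domain step can be sketched with NumPy's Gauss–Legendre rule. The sketch is illustrative only: it assumes that, for a fixed realization of X, the failure probability takes the thinned-Poisson form 1 − exp(−∫ λ(t) p(t) dt), and the functions `rate` and `p_fail` are hypothetical placeholders for the mean occurrence rate and the per-event conditional failure probability.

```python
import numpy as np

def time_integral_gauss_legendre(rate, p_fail, horizon, m_t=5):
    """Approximate the integral of rate(t) * p_fail(t) over (0, horizon]."""
    # Abscissas and weights on [-1, 1] for weight function 1.
    nodes, weights = np.polynomial.legendre.leggauss(m_t)
    t_k = 0.5 * horizon * (nodes + 1.0)  # map abscissas to (0, horizon)
    w_k = 0.5 * horizon * weights        # scale weights by the Jacobian
    return float(np.sum(w_k * rate(t_k) * p_fail(t_k)))

def conditional_failure_probability(rate, p_fail, horizon, m_t=5):
    """Assumed thinned-Poisson form: 1 - exp(-integral)."""
    integral = time_integral_gauss_legendre(rate, p_fail, horizon, m_t)
    return 1.0 - float(np.exp(-integral))

# Hypothetical inputs: a slowly increasing occurrence rate and a per-event
# failure probability that grows with time.
rate = lambda t: 0.05 + 0.001 * t
p_fail = lambda t: 0.02 + 0.0005 * t
integral_60 = time_integral_gauss_legendre(rate, p_fail, 60.0)
pf_60 = conditional_failure_probability(rate, p_fail, 60.0)
```

Only `m_t` evaluations of the conditional failure probability are needed, one per abscissa, which is the source of the savings relative to timeline sampling.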

The bivariate dimension-reduction method (Xu et al. 2004; Zhao and Ono 2000) can be adopted to approximate Eq. (7) and reduce the computational burden. For details on how to determine the abscissas and weights of the Gauss–Legendre quadrature and the point-estimate method, the computations required by the bivariate dimension-reduction method, and other mathematical details, see Lu et al. (2019).

Finally, Eqs. (8) to (10) should be calculated to obtain the probability of failure.

where ui and pi are the estimating points and corresponding weights in the standard normal space, respectively; nX is the number of independent standard normal random variables; mX is the number of estimating points in the one-dimensional case; and h0 (0, …, 0, …, 0) is the value of the limit state function at time tk when all random variables are equal to zero in the standard normal space. For details on how to determine the estimating points and their corresponding weights, see Eqs. (21) and (22) in Lu et al. (2019). Equation (7) can be evaluated using traditional structural reliability analysis methods, including first-order reliability methods (search-based), sampling methods, etc., where the two parameters ui and τk are fixed in PY|X,t (X, T, lp).
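
A one-dimensional version of the point estimate in the standard normal space can be sketched as follows. The sketch assumes that the estimating points and weights coincide with a Gauss–Hermite rule for the standard normal density, which is one common way to construct them; the bivariate dimension-reduction step is not shown, and the function names are hypothetical.

```python
import numpy as np

def standard_normal_points(m_x=7):
    """Estimating points u_i and weights p_i for a standard normal variable."""
    # hermegauss uses the weight function exp(-u**2 / 2).
    u, w = np.polynomial.hermite_e.hermegauss(m_x)
    p = w / np.sqrt(2.0 * np.pi)  # normalize so that the weights sum to 1
    return u, p

def expectation_1d(h, m_x=7):
    """Approximate E[h(U)] for U ~ N(0, 1) as sum_i p_i * h(u_i)."""
    u, p = standard_normal_points(m_x)
    return float(np.sum(p * h(u)))

u, p = standard_normal_points()
second_moment = expectation_1d(lambda x: x**2)  # exact value is 1
fourth_moment = expectation_1d(lambda x: x**4)  # exact value is 3
```

In the fast integration setting, `h` would be the conditional failure probability evaluated at a fixed time abscissa, so each estimating point corresponds to one component reliability problem.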

This framework has been reformulated from the original version of the fast integration method so that it can be employed in the unified reliability analysis. Consequently, the sampling approach is no longer necessary for estimating the time-dependent failure probability. The fast integration method has both advantages and disadvantages. Its most significant advantage is the reduction in computational cost, which is achieved by converting the initial time-dependent problem into a limited number of time-independent problems using a point estimation procedure. Therefore, there is no need for a complete simulation procedure, and the time-independent component problems are easier to solve. However, the accuracy of the approximated failure probability depends on the number of points in the point estimation procedure. Five points for discretizing the time domain and seven points for the random-variate space are suggested based on previous studies (Lu et al. 2019; Zhao and Ono 2000; Ghorbanzadeh and Homami 2023).

Similar to the simulation methods, the fast integration method cannot provide some valuable data, including the importance and sensitivity vectors. However, the mean values obtained from the component problems can be considered as an approximation of these two vectors. Moreover, the method needs to be run separately for each time interval, and the result for one time interval cannot be reused for another.

2.2 Occurrence Model

This section presents the different parts of the unified reliability analysis shown in Fig. 1. In other words, the aim is to show how a realization of the structural loss is estimated from a sequence of probabilistic models adapted to the numerical example of the current study. To this end, the ground motion model, the structural response model, the damage model, and the structural loss model must be defined. Each part has its own calculations and branches; for example, the ground motion simulation consists of the seismic magnitude model and the seismic intensity model. These steps are the same for both the sampling approach and the proposed fast integration method. The difference lies in the number of calculations: the sampling approach repeats these steps for each sample in each timeline, whereas the reformulated fast integration method performs them only at specific points, which are defined by the discretization of the given time domain and the random-variate space.

Therefore, the following subsections present the two steps of modeling the ground motion (the seismic magnitude and seismic intensity models), the structural response model (the tunnel response model), and the three steps of modeling the structural loss (the tunnel direct damage model, the tunnel loss model, and the discounting model).

2.2.1 Seismic Magnitude Model

The first step of the unified reliability analysis is modeling the ground motion. Seismic regression models, in which both the magnitude and the intensity are modeled with a single function, are well known; however, these two parts can be separated to capture more detail. Therefore, a double-truncated exponential distribution is selected to model the magnitude of the seismic source (McGuire 2004). Using the probability-preservation principle, the magnitude, m, can be related to an auxiliary standard normal variable, θm (Mahsuli and Haukaas 2013; Aghababaei and Mahsuli 2018), which leads to the realization of m shown in Eq. (11), where θm and b are considered as model input random variables.

where b is the coefficient describing the relative occurrence of earthquakes of different magnitudes; Mmin and Mmax are the lower-bound and upper-bound magnitudes that the source can produce, respectively; and Φ is the cumulative distribution function of a standard normal random variable.
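
The mapping from θm to a magnitude can be sketched as below. The closed form used here is an assumption: it is the inverse cumulative distribution function of a double-truncated exponential distribution evaluated at Φ(θm), and it is not guaranteed to match Eq. (11) term by term (for instance, whether b already includes a factor of ln 10 is not specified here). The input values are hypothetical.

```python
import math

def std_normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def magnitude(theta_m, b, m_min, m_max):
    """Map a standard normal realization theta_m to a magnitude in [m_min, m_max]."""
    p = std_normal_cdf(theta_m)
    # Assumed inverse CDF of a double-truncated exponential distribution.
    scale = 1.0 - math.exp(-b * (m_max - m_min))
    return m_min - math.log(1.0 - p * scale) / b

# Hypothetical source parameters.
m_low = magnitude(-10.0, b=2.0, m_min=5.0, m_max=8.0)   # approaches m_min
m_mid = magnitude(0.0, b=2.0, m_min=5.0, m_max=8.0)     # median magnitude
m_high = magnitude(10.0, b=2.0, m_min=5.0, m_max=8.0)   # approaches m_max
```

Because small magnitudes are far more frequent under an exponential law, the median lies much closer to the lower bound than to the upper bound.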

2.2.2 Seismic Intensity Model

The second step of modeling the ground motion is the simulation of the seismic intensity. The seismic intensity model uses earthquake and wave propagation characteristics to specify the characteristics of the ground movement at the site. Several models and intensity measures have been presented in the literature. The model presented by Rezaeian and Der Kiureghian (Rezaeian and Der 2010), which generates an artificial ground motion time history, is implemented in this study. Moreover, it considers both aleatory and epistemic uncertainties in the ground motion. This model is the most important source of uncertainty in the present study. The response of a linear filter with time-varying parameters to a white-noise process is used to simulate an acceleration process, x(t), via Eq. (12).

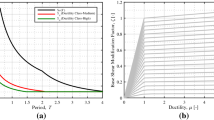

where q (t, ag) is the time-modulating function, in which ag is a parameter controlling the shape that describes the temporal characteristics, intensity, and duration of the motion; σh(t) is the standard deviation of the integral process; ω is a white-noise process; and h [t − τ, λ(τ)] is the impulse response function (IRF) of the filter, which describes the spectral characteristics of the process with time-varying parameters λ(τ) and depends on six key characteristics of the ground motion. These key characteristics are \(\overline{I}_{a}\), the Arias intensity; D5-95, the effective duration of the motion; tmid, the time at the middle of the strong motion phase; ωmid, the filter frequency at tmid; \(\omega'\), the rate of change of the filter frequency with time; and \(\zeta_{f}\), the filter damping. The realization of the seismic model with four required inputs is shown in Fig. 2. Although this model is able to generate acceleration, velocity, and displacement time history records, only the displacement time history is used in the subsequent calculation, as shown in Fig. 2.

There is an alternative method for reducing the computational cost. This method uses the distribution of the peak ground displacement (PGD), obtained by a simulation procedure followed by random variable fitting. For this purpose, a sufficient number of random parameters is generated and the probability distribution of the PGD is obtained. This probability distribution can be employed instead of simulating the seismic magnitude model and the seismic intensity model in order to reduce the computational burden. Figure 3 illustrates an example of this process in which a lognormal probability distribution is employed. Accordingly, given the average occurrence rate and the probability distribution of the PGD, it is possible to determine the input for the finite element model that is investigated in the following section.
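
The fitting step can be sketched as follows. The sketch assumes a lognormal model fitted through the sample mean and standard deviation of ln(PGD); the array `simulated_pgd` is a hypothetical stand-in for PGD values that would come from the full ground motion simulation, and its parameters are arbitrary.

```python
import numpy as np

def fit_lognormal(samples):
    """Return (mu, sigma) of ln(PGD) estimated from positive samples."""
    logs = np.log(np.asarray(samples, dtype=float))
    return float(logs.mean()), float(logs.std(ddof=1))

def sample_pgd(mu, sigma, size, rng):
    """Draw PGD realizations from the fitted lognormal distribution."""
    return rng.lognormal(mean=mu, sigma=sigma, size=size)

rng = np.random.default_rng(1)
# Hypothetical stand-in for the output of the magnitude and intensity models.
simulated_pgd = rng.lognormal(mean=-3.0, sigma=0.6, size=5000)
mu_hat, sigma_hat = fit_lognormal(simulated_pgd)
# Cheap replacement draws for use in the downstream tunnel response model.
new_pgd = sample_pgd(mu_hat, sigma_hat, size=10, rng=rng)
```

Once fitted, the distribution replaces the two upstream models, so each downstream evaluation needs only one random draw instead of a full time history simulation.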

2.2.3 Tunnel Response Model

After the ground movement has been modeled, its output can be used as an input for the structural response model. In this study, the model is required to be compatible with the dominant condition. If ground failure, including liquefaction, slope instability, and fault displacement, is disregarded, the design codes for underground structures concentrate on the transient ground deformation generated by seismic wave passage. These deformations involve three primary modes: compression-extension, longitudinal bending, and ovalling deformation (Saleh Asheghabadi and Rahgozar 2019; Zhang et al. 2021; Wang et al. 2019). Among these three modes, ovalling deformation is the critical case for a circular tunnel lining during a seismic event. Ovalling deformation emerges when waves propagate perpendicular to the tunnel axis (Çakır and Coşkun xxxx; Hashash et al. xxxx). The simplest way to compute ovalling deformation is to assume that the deformation of the circular tunnel lining is identical to the free-field deformation of the surrounding ground. This leads to a first-order estimate of the structural deformation and may overestimate or underestimate the deformations, depending on the rigidity of the structure. Another method for estimating ovalling deformation is based on the lining-ground interaction. Figure 4 shows the plan view of the tunnel model with the surrounding ground and the ovalling deformation under seismic load. The tunnel involves a main structure, a support system for installing tools inside the tunnel, and installation tools, which include pipes and instruments for moving materials, etc. Ovalling deformation is the deformation imposed on the main structure of the tunnel during shear wave propagation.

A finite element model is employed to compute the linear response (elastic behaviour) of the tunnel. The soil and the tunnel structure components are shown in Fig. 5. Given that a pushover analysis is implemented, the top-left node in Fig. 5 is taken as the control node for checking the target displacement. The left, right, and bottom sides of the finite element model are constrained against vertical movement. The nodes on the upper side are pushed until the target displacement (the output of the PGD model) is reached. The tunnel has an inner diameter of 5 m and a concrete lining with a thickness of 0.6 m, and it is built at a depth of 20 m measured from the surface to the center of the circular tunnel. For simplicity, the effects of the reinforcement rebars are ignored.

Any finite element software package, such as OpenSees (PEER 1999), can be used to model the tunnel structure. After the load is assigned and the model is pushed to the target deformation, the horizontal and vertical deflections can be obtained. The realization of the output produced by the finite element method is shown in Fig. 6. The displacement of the node located at 45 degrees on the main structure of the tunnel is recorded as the output of the finite element model, which is the input for the damage model described in the next section.

2.2.4 Tunnel Direct Damage Model

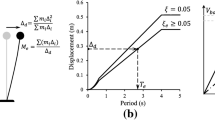

The final output of the structural response model is an input for the loss computation, which begins with the tunnel direct damage model and is followed by the tunnel loss model and the discounting model, respectively. There are various ways to model the damage of a given structure, including the cumulative distribution function of a probability distribution and linear or nonlinear polynomials, provided that the model is an increasing function that returns a value between two numbers related to the no-damage and full-damage conditions, respectively. In this article, a simple damage model based on a sinusoidal function is employed, in which the received node displacement acts as the input. This function is shown in Eq. (13).

where Δdlining is the target node displacement obtained by the finite element model; Δmin and Δmax are the minimum and maximum thresholds associated with the no-damage and full-damage states, respectively; and εη is the model error, assumed to be a normal random variable with zero mean and a standard deviation of 0.001. Δmin and Δmax take different values depending on the damage type. Figure 7 shows Eq. (13) with the realization of random variables in ovalling deflection, where Δmin and Δmax are 0.5 mm and 1.5 mm, respectively.
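
A sinusoidal damage model of this kind can be sketched as below. The functional form is an assumption chosen to satisfy the stated requirements (increasing, zero at Δmin, one at Δmax) and may differ from Eq. (13) in detail; clipping the result to [0, 1] after adding the model error is also an assumption of this sketch.

```python
import math

def damage_ratio(delta, delta_min, delta_max, eps=0.0):
    """Sinusoidal damage ratio rising smoothly from 0 at delta_min to 1 at delta_max."""
    if delta <= delta_min:
        base = 0.0
    elif delta >= delta_max:
        base = 1.0
    else:
        x = (delta - delta_min) / (delta_max - delta_min)
        base = 0.5 * (1.0 + math.sin(math.pi * x - 0.5 * math.pi))
    # eps is a realization of the model error; clipping is an added assumption.
    return min(1.0, max(0.0, base + eps))

# Thresholds in millimetres, as quoted for the ovalling deflection.
eta_none = damage_ratio(0.5, 0.5, 1.5)
eta_half = damage_ratio(1.0, 0.5, 1.5)
eta_full = damage_ratio(2.0, 0.5, 1.5)
```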

2.2.5 Tunnel Loss Model

The next step of loss computing is to estimate the cost of the damage using the damage ratio and other necessary parameters. Once the general damage ratio is estimated by Eq. (13), the tunnel loss model can be computed by Eq. (14).

where L is the length of the investigated tunnel and C is the replacement cost of the tunnel structure per unit length. If other damage, such as that from an indirect damage model, is considered, this equation has to be converted into a summation form.

2.2.6 Discounting Model

Discounting the future loss to a present loss value is the final step of loss computing that can be expressed by Eq. (15).

where lp is the present loss value; lf is the future loss; r is the effective annual interest rate; and t is the time variable. The interest rate in this study is taken as 0.5% for all types of economic losses. This discounting model is useful for decision making about investments such as retrofit actions.
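
The last two steps of the loss computation can be sketched together. Both functions are assumptions: the loss is taken as the product of the damage ratio, the tunnel length, and the unit replacement cost, and the discounting uses annual compounding, which is one reading of an "effective annual interest rate" (a continuous form would be equally plausible). All numerical values are hypothetical.

```python
def tunnel_loss(damage_ratio, length, unit_cost):
    """Future loss as damage ratio times replacement cost of the whole tunnel."""
    return damage_ratio * length * unit_cost

def present_value(future_loss, rate, years):
    """Discount a future loss to the present, assuming annual compounding."""
    return future_loss / (1.0 + rate) ** years

# Hypothetical tunnel: 100 m long, replacement cost of 2000 per metre.
l_future = tunnel_loss(damage_ratio=0.5, length=100.0, unit_cost=2000.0)
l_now = present_value(l_future, rate=0.005, years=0.0)
l_in_30 = present_value(l_future, rate=0.005, years=30.0)
```

With an interest rate of 0.5%, a loss incurred 30 years from now is discounted by roughly 14%, so late events contribute somewhat less to the present loss than early ones.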

3 Numerical Examples

This section addresses the practical use of the reformulated unified reliability analysis based on the fast integration method to simulate earthquake events as sources of risk. All applied models were defined in the previous section, and the required parameters are shown in Fig. 8. This example represents the entire procedure of probabilistic loss assessment based on the reliability-based risk analysis. Figure 8 shows how the proposed fast integration analysis implements different scenarios to compute the limit state function, g. As illustrated in Fig. 8, computing g requires a chain of interacting probabilistic models, as mentioned in the previous section.

The inputs of these models involve random variables, constant variables, and the outputs of upstream models. First, an occurrence model generates an event, for which the algorithm generates a time variable, t. As mentioned before, the sampling approach and the proposed fast integration method differ in this step: the time variable is obtained by a random process in the sampling approach, whereas it is a deterministic value determined by the discretization of the time domain in the proposed fast integration algorithm. Then, the magnitude model, which requires four inputs, estimates the magnitude and sends it to the tunnel response model and the seismic intensity model. At this level, the seismic intensity model considers the fault and site characteristics to generate the peak ground displacement. This part can be replaced by the random variable fitting procedure to reduce the computational cost. Once the required inputs of the tunnel response model are available, the tunnel deflection, Δdlining, can be computed. Subsequently, the damage ratio is calculated using the damage model and its parameters. The damage ratio is inserted into the corresponding economic loss model to obtain the future loss value. Finally, the discounting model is used to convert the future loss to the present loss value before the limit state function is evaluated. All characteristics of the models are summarized in Table 1, together with a description of the role of each parameter. These parameters pertain to the tunnel lining structure, the seismic sources, and the damage-dependent models.

The limit state function is formulated as g = l0 − lp, where l0 is the loss threshold and lp is the present value of the loss. Four abscissas and weights are used for the Gauss–Legendre quadrature, and seven points and weights are used for the point-estimate method in the standard normal space. Substituting the obtained results into Eq. (7) gives the time-dependent failure probability, Pf(60). Figure 9 shows the time-dependent failure probability obtained from the fast integration method based on trust-region SQP for the four seismic sources over the next 60 years, with 30% of the construction cost chosen as the threshold.
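As a minimal sketch of the discretization step, the abscissas and weights can be generated with NumPy. It is assumed here that the four Gauss–Legendre nodes are mapped linearly from [-1, 1] to the interval from 0 to 60 years, and that the seven estimating points in the standard normal space coincide with the Gauss–Hermite (probabilists') nodes; the function names are illustrative and not taken from the paper.

```python
import numpy as np

def time_nodes(t_end, n=4):
    """Gauss-Legendre abscissas and weights mapped from [-1, 1] to [0, t_end]."""
    x, w = np.polynomial.legendre.leggauss(n)
    t = 0.5 * t_end * (x + 1.0)
    return t, 0.5 * t_end * w

def normal_points(n=7):
    """Estimating points and probability weights in standard normal space.

    Assumption: the points are the probabilists' Gauss-Hermite nodes; the raw
    weights integrate against exp(-u^2 / 2), so they are normalized by
    sqrt(2 * pi) to sum to one.
    """
    u, w = np.polynomial.hermite_e.hermegauss(n)
    return u, w / np.sqrt(2.0 * np.pi)

t, wt = time_nodes(60.0)   # four deterministic time instants in (0, 60)
u, pu = normal_points()    # seven points with weights summing to 1
```

Each pair of a time node and a standard normal point defines one deterministic scenario, which is why the time variable is no longer sampled at random.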

The first insight from this outcome is that the different assumptions made for the four seismic sources, such as the type of mean occurrence rate and the horizontal distance to the top edge of rupture, give each source its own dominant region. For instance, source number 4, characterized by a time-variant mean occurrence rate, exhibits the highest failure probability after approximately 50 years. Conversely, source number 1, with a constant and large mean occurrence rate as well as maximum and minimum magnitude values that differ from those of source number 4, dominates the initial segment of the diagram, from 5 to nearly 20 years. Consequently, this diagram makes it possible to rank the seismic sources according to their influence on the structural loss within the specified time frame. It also indicates the best timing for decisions to enhance structural performance: if a specific performance target for a structure is identified and must be consistently met, the diagram forecasts the time at which that target will be reached. A further observation from this time-dependent reliability analysis is that the probability of failure grows as time progresses. Each of the four seismic sources exhibits a higher probability of failure towards the end of the 60 years, because the likelihood of failure due to severe earthquakes increases significantly compared to the initial stages of the time frame. It is important to note that these insights do not require additional analyses; they are all derived from the same analysis.

The primary outcome of the risk analysis is the exceedance probability. Figure 10 illustrates the contribution of the main structure and the other components of the tunnel lining to the overall losses, and it also displays the cumulative total loss over 60 years. The vertical axes are presented on logarithmic scales to emphasize the tail probabilities. The main structure loss plays a crucial role in the total loss. It is important to note that the tail end of the diagrams corresponds to events with lower probabilities of occurrence but higher intensities. The markers indicate the l0 thresholds in the equation g = l0 − lp. In other words, in this diagram the time is fixed at 60 years, while the loss threshold is varied to illustrate the impact of different levels of structural loss. The purpose of this example, as outlined in the Abstract and Introduction, is to demonstrate the functionality of the modified framework. However, further insights can be obtained from the analysis by modeling the components in more detail. For instance, dividing the main structure into multiple parts would allow a more comprehensive investigation.

Figure 11 shows the outcomes of two variants of the proposed fast integration analysis. One uses the optimization technique and the other employs importance sampling as an interval solver. The diagram also shows the results of the sampling-based approach, Monte Carlo simulation (MCS), for simulating the Poisson point process within the time interval (0, 60]. The results show reasonable agreement between MCS and the two variants of the proposed fast integration method. A slight difference is observed in the upper tail on the right side, which corresponds to severe earthquake events. Although this difference is small enough to be neglected, it can be reduced by using more points to discretize the time domain and the random-variate space in the proposed fast integration method. Consequently, combining the fast integration method with importance sampling is unnecessary, because the fast integration method with the optimization technique is more convenient and straightforward and has nearly the same accuracy. Although the accuracy of all the methods is acceptable, the computational cost of determining the exceedance probability varies. The Monte Carlo simulation requires 5 million samples to obtain the complete diagram in Fig. 11. The fast integration method based on importance sampling completes this process with 1.25 million simulations. The fast integration method based on the proposed optimization method, however, obtains the final result with 250,000 calls of the limit state function, about 20 times fewer than MCS and 5 times fewer than the importance sampling variant.
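For comparison, the sampling-based reference solution can be sketched as follows. This is a simplified illustration rather than the analysis of the paper: it assumes a single source with a constant (homogeneous) occurrence rate, a hypothetical lognormal loss per event in place of the full model chain, and continuous discounting; all numerical values are placeholders.

```python
import numpy as np

def simulate_total_present_loss(rate, horizon, n_samples, rng, discount_rate=0.03):
    """Monte Carlo samples of the discounted total loss over (0, horizon]."""
    totals = np.zeros(n_samples)
    # Number of events in the horizon for a homogeneous Poisson process.
    counts = rng.poisson(rate * horizon, size=n_samples)
    for i, k in enumerate(counts):
        if k == 0:
            continue
        # Given the count, arrival times are independent and uniform.
        times = rng.uniform(0.0, horizon, size=k)
        # Hypothetical loss per event (fraction of construction cost).
        losses = rng.lognormal(mean=-3.0, sigma=1.0, size=k)
        totals[i] = np.sum(losses * np.exp(-discount_rate * times))
    return totals

def exceedance_probability(totals, thresholds):
    """Empirical probability that the total loss exceeds each threshold l0."""
    totals = np.asarray(totals)
    return np.array([(totals > l0).mean() for l0 in thresholds])

rng = np.random.default_rng(0)
totals = simulate_total_present_loss(rate=0.05, horizon=60.0, n_samples=20000, rng=rng)
thresholds = np.array([0.05, 0.1, 0.3, 0.5])
p_exceed = exceedance_probability(totals, thresholds)
```

Every sample costs at least one evaluation of the model chain per event, and resolving small tail probabilities requires many samples, which is the cost that the point-estimate discretization is meant to avoid. A time-variant occurrence rate would need a different arrival-time generator than the uniform draw used here.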

Table 2 summarizes the computational effort of the different methods for all the labeled points in Fig. 11. For Monte Carlo, the number of function calls equals the number of iterations. For the fast integration-importance sampling and fast integration-TRSQP methods, however, the number of function calls differs from the number of iterations. The fast integration-importance sampling method first uses the first-order reliability method to find the design point and then starts the simulation. The fast integration-TRSQP method differentiates the limit state function during the computation, which results in more function calls than iterations. The difference in computational burden is evident, while the final results are close to each other. This is one of the main reasons why the conventional framework of reliability-based risk analysis needs to be developed further and equipped with robust tools and methods.
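The gap between iterations and function calls can be illustrated with a small counter. The sketch below assumes that derivatives are approximated by forward finite differences, which is a common choice but is not confirmed by the text; the limit state function used is an arbitrary stand-in.

```python
import numpy as np

class CountedLimitState:
    """Wraps a limit state function and counts how often it is evaluated."""

    def __init__(self, g):
        self.g = g
        self.calls = 0

    def __call__(self, x):
        self.calls += 1
        return self.g(np.asarray(x, dtype=float))

def forward_difference_gradient(g, x, h=1e-6):
    """Returns g(x) and its gradient using n + 1 evaluations of g."""
    x = np.asarray(x, dtype=float)
    g0 = g(x)
    grad = np.empty_like(x)
    for i in range(x.size):
        step = x.copy()
        step[i] += h
        grad[i] = (g(step) - g0) / h
    return g0, grad

# Arbitrary three-variable limit state function, for illustration only.
g = CountedLimitState(lambda x: 3.0 - x[0] - 0.5 * x[1] ** 2 + 0.1 * x[2])
value, grad = forward_difference_gradient(g, [1.0, 1.0, 1.0])
```

With three random variables, one gradient evaluation already costs four calls of the limit state function, so an optimizer that needs a gradient at every iteration makes several calls per iteration.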

4 Discussion

A reformulation of the unified reliability analysis based on the fast integration method was proposed. The algorithm was then applied to a tunnel structure, and its results for three types of loss models were reported. To this end, several probabilistic models were defined, including seismic magnitude, seismic intensity, tunnel response, tunnel damage, and loss models. First, the failure probability due to the occurrence of each earthquake source was estimated over a time interval of 60 years, as shown in Fig. 9. Owing to the different occurrence rates of the seismic sources, the site distances from the sources, and similar factors, source number 4, with its time-variant mean occurrence rate, reached the maximum value at the end of the 60 years. Moreover, this curve made it possible to monitor the variation of the total performance index and to find the best timing for decisions on strengthening structural members (see Fig. 9). Then, the loss curves based on the three loss models were approximated, as depicted in Fig. 10. These curves give the total exceedance probability associated with different loss thresholds and account for the impact of all the seismic occurrences. The exceedance probability of the main structure loss was higher than that of the support system and installation tools because of the assumptions made for the case study.

The main aim of this paper was to develop the risk analysis framework so that it saves time while maintaining accuracy. Although the fast integration-TRSQP method needed to estimate derivatives, its number of function calls was significantly smaller than that of the simulation methods. Moreover, the fast integration-TRSQP method was equipped with numerical nonlinear programming optimization, which allows the algorithm to be used for highly nonlinear problems. However, for some specific time-dependent problems with complicated conditions and high accuracy requirements, the simulation method is still the best option.

5 Conclusion

In the present study, a reliability-based risk methodology, the unified reliability analysis based on the fast integration method, was formulated and applied to a linear concrete circular tunnel structure under seismic conditions. The first novelty was the use of the proposed fast integration algorithm to simulate the Poisson point process instead of a complete simulation with Monte Carlo family methods. Because the sampling approach was replaced by the point estimation process, the expected result, a considerable decrease in computational cost, was obtained. The second achievement was the use of numerical nonlinear programming optimization instead of search-based reliability methods. This change provided a stable and powerful analysis method for solving the component reliability problems produced by disassembling the time domain and the random-variate space. The accuracy of the final result supports the combination of the fast integration method with numerical nonlinear programming optimization, in comparison with both a complete, time-consuming simulation procedure and the combination of the fast integration method with importance sampling. It is noted that the fast integration method needs the results of all component reliability problems to estimate the failure probability of a time-dependent problem. Another development in this framework was showing how sequential probability models can be used within risk analysis problems. Moreover, it was shown that a variety of probability models can be implemented depending on the modeling conditions. The seismic intensity model is the most important probability model in this paper, as it considers both aleatory and epistemic uncertainties in the ground motion. In addition, four seismic sources were implemented, and the probability of exceedance was shown for each one separately and for all of them jointly.

In conclusion, further development of reliability-based risk analysis is strongly encouraged, particularly in the probabilistic models for earthquake intensity and structural damage and in the analysis tools for solving the internal problems.

Data Availability

Some or all data, models, or codes that support the findings of this study are available from the corresponding author upon reasonable request.

References

Abed HR, Rashid HA (2023) A new risk assessment model for construction projects by adopting a best-worst method–fuzzy rule-based system coupled with a 3D risk matrix. Iran J Sci Technol Trans Civ Eng. https://doi.org/10.1007/s40996-023-01105-x

Aghababaei M, Mahsuli M (2018) Detailed seismic risk analysis of buildings using structural reliability methods. Probab Eng Mech 53:23–38. https://doi.org/10.1016/j.probengmech.2018.04.001

Aghababaei M, Mahsuli M (2019) Component damage models for detailed seismic risk analysis using structural reliability methods. Struct Saf 76:108–122. https://doi.org/10.1016/j.strusafe.2018.08.004

Arabaninezhad A, Fakher A (2021) A practical method for rapid assessment of reliability in deep excavation projects. Iran J Sci Technol Trans Civ Eng 45:335–357. https://doi.org/10.1007/s40996-020-00499-2

Asakura T, Sato Y (1998) Mountain tunnels damage in the 1995 Hyogoken-Nanbu earthquake. Q Rep RTRI (railw Tech Res Inst) 39:9–14

Brandl J, Neugebauer E (2002) Turkish motorway network–challenges to tunnel design. Felsbau 20:24–33

Çakir Ö, Coşkun N (2022) Dispersion of Rayleigh surface waves and electrical resistivities utilized to invert near surface structural heterogeneities. J Human, Earth, Future 3:1–16. https://doi.org/10.28991/HEF-2022-03-01-01

Choudhuri K, Chakraborty D (2023) Risk assessment of three-dimensional bearing capacity of a circular footing resting on spatially variable sandy soil. Iran J Sci Technol Trans Civ Eng. https://doi.org/10.1007/s40996-023-01129-3

Ditlevsen O, Madsen HO (2005) Structural reliability methods, vol 178. Wiley, New York

Dowding CH, Rozan A (1978) Damage to rock tunnels from earthquake shaking. J Geotech Eng Div 104:175–191. https://doi.org/10.1061/AJGEB6.0000580

Ekmen AB, Avci Y (2023) Artificial intelligence-assisted optimization of tunnel support systems based on the multiple three-dimensional finite element analyses considering the excavation stages. Iran J Sci Technol Trans Civ Eng 47:1725–1747. https://doi.org/10.1007/s40996-023-01109-7

Ghorbanzadeh M, Homami P (2023) An object-oriented computer program for structural reliability analysis (BI): components and methods. Iran J Sci Technol Trans Civ Eng 2023:1–12. https://doi.org/10.1007/S40996-023-01244-1

Hashash YMA, Hook JJ, Schmidt B, I-Chiang Yao J (2001) Seismic design and analysis of underground structures. Tunn Undergr Sp Technol 16:247–293. https://doi.org/10.1016/S0886-7798(01)00051-7

Haukaas T (2008) Unified reliability and design optimization for earthquake engineering. Probab Eng Mech 23:471–481. https://doi.org/10.1016/j.probengmech.2007.10.008

Hohenbichler M, Rackwitz R (1981) Non-normal dependent vectors in structural safety. ASCE J Eng Mech Div 107:1227–1238

Hong HP (2000) Assessment of reliability of aging reinforced concrete structures. J Struct Eng 126:1458–1465. https://doi.org/10.1061/(asce)0733-9445(2000)126:12(1458)

Huang Z, Argyroudis S, Zhang D, Pitilakis K, Huang H, Zhang D (2022) Time-dependent fragility functions for circular tunnels in soft soils. ASCE-ASME J Risk Uncertain Eng Syst Part A Civ Eng. https://doi.org/10.1061/AJRUA6.0001251

Jiang Y, Wang C, Zhao X (2010) Damage assessment of tunnels caused by the 2004 Mid Niigata prefecture earthquake using Hayashi’s quantification theory type II. Nat Hazards 53:425–441. https://doi.org/10.1007/s11069-009-9441-9

Jin H, Jin X (2022) Performance assessment framework and deterioration repairs design for highway tunnel using a combined weight-fuzzy theory: a case study. Iran J Sci Technol Trans Civ Eng 46:3259–3281. https://doi.org/10.1007/s40996-021-00734-4

Jing-Ming W, Litehiser JJ (1985) The distribution of earthquake damage to underground facilities during the 1976 Tang-Shan earthquake. Earthq Spectra 1:741–757. https://doi.org/10.1193/1.1585291

Koduru SD, Haukaas T (2010) Probabilistic seismic loss assessment of a vancouver high-rise building. J Struct Eng 136:235–245. https://doi.org/10.1061/(ASCE)ST.1943-541X.0000099

Li HS, Lü ZZ, Yuan XK (2008) Nataf transformation based point estimate method. Chin Sci Bull 53:2586–2592. https://doi.org/10.1007/s11434-008-0351-0

Li Q, Wang C, Ellingwood BR (2015) Time-dependent reliability of aging structures in the presence of non-stationary loads and degradation. Struct Saf 52:132–141. https://doi.org/10.1016/j.strusafe.2014.10.003

Liu P-LL, Der Kiureghian A (1986) Multivariate distribution models with prescribed marginals and covariances. Probab Eng Mech 1:105–112. https://doi.org/10.1016/0266-8920(86)90033-0

Liu W, Dong S, Yin S, Dai Z, Xu B, Soltanian MR et al (2023) A new approach for quantitative definition of asymmetrical loading tunnels. Iran J Sci Technol Trans Civ Eng 47:457–468. https://doi.org/10.1007/s40996-022-00958-y

Lu Z-H, Cai C-H, Zhao Y-G (2017) Structural reliability analysis including correlated random variables based on third-moment transformation. J Struct Eng 143:04017067. https://doi.org/10.1061/(asce)st.1943-541x.0001801

Lu ZH, Leng Y, Dong Y, Cai CH, Zhao YG (2019) Fast integration algorithms for time-dependent structural reliability analysis considering correlated random variables. Struct Saf 78:23–32. https://doi.org/10.1016/j.strusafe.2018.12.001

Luo X, Lv J, Xiao H (2021) Explicit continuation methods with L-BFGS updating formulas for linearly constrained optimization problems. ArXiv Prepr ArXiv210107055 2021

Mahsuli M, Haukaas T (2013) Seismic risk analysis with reliability methods, part I: models. Struct Saf 42:54–62. https://doi.org/10.1016/j.strusafe.2013.01.003

McGuire RK (2004) Seismic hazard and risk analysis. Earthquake Engineering Research Institute

Michael Mahoney PO, Robert D, Hanson (2012) Technical Monitor Washington DC. FEMA P-58-2 seismic performance assessment of buildings—implementation Guide. Fema P-58-2. 2:357

Moehle JP, Deierlein G (2004) A framework methodology for performance-based earthquake engineering

Mohammadi-Haji B, Ardakani A (2020) Numerical prediction of circular tunnel seismic behaviour using hypoplastic soil constitutive model. Int J Geotech Eng 14:428–441. https://doi.org/10.1080/19386362.2018.1438152

Mori Y, Ellingwood BR (1993) Reliability-based service-life assessment of aging concrete structures. J Struct Eng 119:1600–1621. https://doi.org/10.1061/(asce)0733-9445(1993)119:5(1600)

Naess A, Bo HS (2018) Reliability of technical systems estimated by enhanced monte Carlo simulation. ASCE-ASME J Risk Uncertain Eng Syst Part A Civ Eng. https://doi.org/10.1061/AJRUA6.0000937

Okamoto S (1984) Introduction to earthquake engineering. University of Tokyo press

(PEER). Opensees, The open system for earthquake engineering simulation. Copyr @ 1999,2000 Regents Univ California All Rights Reserv 2000

Qiu D, Liu Y, Xue Y, Su M, Zhao Y, Cui J et al (2021) Prediction of the surrounding rock deformation grade for a high-speed railway tunnel based on rough set theory and a cloud model. Iran J Sci Technol Trans Civ Eng 45:303–314. https://doi.org/10.1007/s40996-020-00486-7

Rezaeian S, Der KA (2010) Simulation of synthetic ground motions for specified earthquake and site characteristics. Earthq Eng Struct Dyn 39:1155–1180. https://doi.org/10.1002/eqe.997

Saleh Asheghabadi M, Rahgozar MA (2019) Finite element seismic analysis of soil-tunnel interactions in clay soils. Iran J Sci Technol Trans Civ Eng 43:835–849. https://doi.org/10.1007/s40996-018-0214-0

Spyridis P, Proske D (2021) Revised comparison of tunnel collapse frequencies and tunnel failure probabilities. ASCE-ASME J Risk Uncertain Eng Syst Part A Civ Eng 7(2):04021004. https://doi.org/10.1061/AJRUA6.0001107

Vamvatsikos D, Cornell CA (2002) Incremental dynamic analysis. Earthq Eng Struct Dyn 31:491–514. https://doi.org/10.1002/eqe.141

Wang ZZ, Zhang Z (2013) Seismic damage classification and risk assessment of mountain tunnels with a validation for the 2008 Wenchuan earthquake. Soil Dyn Earthq Eng 45:45–55. https://doi.org/10.1016/j.soildyn.2012.11.002

Wang WL, Wang TT, Su JJ, Lin CH, Seng CR, Huang TH (2001) Assessment of damage in mountain tunnels due to the Taiwan Chi-Chi earthquake. Tunn Undergr Sp Technol 16:133–150. https://doi.org/10.1016/S0886-7798(01)00047-5

Wang Z, Gao B, Jiang Y, Yuan S (2009) Investigation and assessment on mountain tunnels and geotechnical damage after the Wenchuan earthquake. Sci China Ser E Technol Sci 52:546–558

Wang L, Wu C, Li Y, Liu H, Zhang W, Chen X (2019) Probabilistic risk assessment of unsaturated slope failure considering spatial variability of hydraulic parameters. KSCE J Civ Eng 23:5032–5040. https://doi.org/10.1007/s12205-019-0884-6

Xu H, Rahman S, Xu H (2004) A generalized dimension-reduction method for multidimensional integration in stochastic mechanics. Int J Numer Methods Eng 61:1992–2019. https://doi.org/10.1002/nme.1135

Yamashita H, Yabe H, Harada K (2020) A primal-dual interior point trust-region method for nonlinear semidefinite programming. Optim Methods Softw 2020:1–33. https://doi.org/10.1080/10556788.2020.1801678

Zhang D, Sun W, Wang C, Yu B (2021) Reliability analysis of seismic stability of shield tunnel face under multiple correlated failure modes. KSCE J Civ Eng 25:3172–3185. https://doi.org/10.1007/s12205-021-2174-3

Zhao Y-G, Ono T (2000) New point estimates for probability moments. J Eng Mech 126:433–436. https://doi.org/10.1061/(asce)0733-9399(2000)126:4(433)

Zhao Y, Shi Y, Yang J (2021) Study of the concrete lining cracking affected by adjacent tunnel and oblique bedded rock mass. Iran J Sci Technol Trans Civ Eng 45:2853–2860. https://doi.org/10.1007/s40996-021-00710-y

Acknowledgements

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Funding

No funds, grants, or other support was received.

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Cite this article

Ghorbanzadeh, M., Homami, P. & Shahrouzi, M. A Modified Framework for Reliability-Based Risk Analysis of Linear Concrete Circular Tunnel. Iran J Sci Technol Trans Civ Eng 48, 3467–3482 (2024). https://doi.org/10.1007/s40996-024-01497-4

DOI: https://doi.org/10.1007/s40996-024-01497-4