Abstract

In this paper, a one-step new iterative method (OSNIM) is introduced to obtain the exact solution of the Bagley–Torvik fractional differential equation. The suggested method, OSNIM, is a modification of a well-known iterative method called the new iterative method (NIM). This modification enables us to obtain the exact solution of linear as well as nonlinear fractional differential equations. The Bagley–Torvik fractional differential equation arises naturally in the description of the motion of a rigid plate immersed in a Newtonian fluid. The convergence of the method is also discussed. Several examples are presented, and their solutions are compared with those obtained by other well-known methods, showing the accuracy and fast convergence of the proposed method.

1 Introduction

Fractional calculus makes it possible to take real-number powers of the differential and integral operators. This generalized calculus has been a major step forward in describing and modeling many phenomena in science and engineering. In mechanics, for instance, fractional-order derivatives have been used successfully to model damping forces with memory effects, and they have also been used to describe state feedback controllers (Hemeda and Al-Luhaibi 2014; Kilbas et al. 2006; Mahdy and Mukhtar 2017; Manafian et al. 2014; Ramadan and Al-luhaibi 2015; Saadatmandi and Mehdi 2010; Samko et al. 1993; Sontakke and Shaikh 2016; Zaslavsky 2005). This is because a physical phenomenon that depends not only on the current time instant but also on its previous time history can be modeled realistically with fractional calculus. Such calculus is also called non-integer-order calculus, and its origins can be traced back to the genesis of integer-order differential calculus itself. Although G.W. Leibniz made some remarks on the meaning and possibility of a fractional derivative of order \(\frac{1}{2}\) in the late seventeenth century, the first rigorous investigation was carried out by Liouville in a series of papers from 1832 to 1837, in which he first defined an operator of fractional integration. Today, fractional calculus generalizes the derivative and antiderivative operations of differential and integral calculus from integer orders to arbitrary real or complex orders. Many examples and techniques for solving fractional differential equations can be found in the work of S. Kumar et al. (Sunil et al. 2016, 2017; Sunil 2014; Sunil and Mohammad 2014; Sunil and Amit 2018). There are several approaches to generalizing the notion of differentiation to fractional orders, e.g., the Riemann–Liouville, Grünwald–Letnikov, Caputo and generalized-function approaches.
The Riemann–Liouville fractional derivative is mostly used by mathematicians, but it is not well suited to real-world physical problems because it requires fractional-order initial conditions, which have no clear physical interpretation yet. Caputo introduced an alternative definition that has the advantage of allowing integer-order initial conditions for fractional-order differential equations. Unlike the Riemann–Liouville approach, which derives its definition from repeated integration, the Grünwald–Letnikov formulation approaches the problem from the derivative side; it is mostly used in numerical algorithms. In this work, we study a well-known fractional differential equation, namely the Bagley–Torvik equation (BTE)

where \(1 \le n\), \(n \in {\mathbb{N}}\), \(r - 1 < \alpha \le r\), the constants \(B \ne 0\) and \(A, C \in {\mathbb{R}}\), \(\delta_{i}\) are the initial values given in the problem, and \(f : [0, 1] \times {\mathbb{R}} \to {\mathbb{R}}\) is a given continuous function. This equation arises, for example, in modeling the motion of a rigid plate immersed in a Newtonian fluid. It was originally proposed by Torvik and Bagley in 1984 and is discussed thoroughly in (Raja et al. 2011). In (Mahdy and Mukhtar 2017), the inhomogeneous BTE was studied and an analytical solution was proposed. Since then, several works have addressed the BTE, starting with numerical procedures for the BTE reformulated as a system of functional differential equations of order \(\frac{3}{2}\). Among numerical approaches, a generalization of Taylor's and Bessel's collocation methods (Daftardar-Gejji and Jafari 2006; Ramadan and Al-luhaibi 2014) and the use of evolutionary computation (Mainardi 2010) have provided solutions that are acceptable from an engineering point of view. To obtain a unique solution of the BTE, homogeneous initial conditions are assumed. Here, \(D_{t}^{q}\) denotes the fractional differential operator of order \(q \notin {\mathbb{N}}\) in the sense of Caputo, defined by

\[
D_{t}^{q} y(t) = J^{m - q} D^{m} y(t) = \frac{1}{\Gamma(m - q)} \int_{0}^{t} (t - \tau)^{m - q - 1} y^{(m)}(\tau)\, d\tau, \qquad t > 0,
\]

where m is the integer defined by the relation \(m - 1 < q < m\) and \(J^{\alpha }\) is the Riemann–Liouville fractional integral operator of order \(\alpha > 0\),

\[
J^{\alpha} y(t) = \frac{1}{\Gamma(\alpha)} \int_{0}^{t} (t - \tau)^{\alpha - 1} y(\tau)\, d\tau, \qquad t > 0.
\]
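Two standard consequences of these definitions are what make term-by-term iteration practical, since every iterate below is a sum of powers of \(t\). They are textbook facts (see, e.g., Podlubny 1999), stated here for reference and not results of this paper, for \(p > -1\), \(\alpha > 0\), integer \(k \ge 0\) and \(m - 1 < q < m\):

\[
J^{\alpha} t^{p} = \frac{\Gamma(p+1)}{\Gamma(p+1+\alpha)}\, t^{p+\alpha},
\qquad
D_{t}^{q} t^{k} =
\begin{cases}
0, & k = 0, 1, \ldots, m-1,\\[4pt]
\dfrac{\Gamma(k+1)}{\Gamma(k+1-q)}\, t^{k-q}, & k \ge m .
\end{cases}
\]

For example, \(D_{t}^{3/2}\) annihilates \(1\) and \(t\), while \(D_{t}^{3/2} t^{2} = \frac{\Gamma(3)}{\Gamma(3/2)}\, t^{1/2} = \frac{4}{\sqrt{\pi}}\, t^{1/2}\).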

2 Different Approaches to the New Iterative Method (NIM)

Since its introduction, many mathematicians have developed the NIM further and proposed several approaches, so that it can handle ordinary as well as partial differential equations. In the last few years, however, they have concentrated on extending the NIM to all types of fractional differential equations. We discuss each approach in detail and then introduce our approach, which yields the exact solution, rather than an approximate one, for some special problems.

2.1 First Approach

To describe the idea of the first approach of the NIM (Manafian et al. 2014; Podlubny 1999; Raja et al. 2011; Ramadan and Al-luhaibi 2014, 2015), consider the following general functional equation

where N is the nonlinear operator and g is a known function. We seek a solution y in the series form

The operator N can be decomposed as follows:

We define the following recurrence relation

The k-term series solution of the general Eq. (2) takes the following form:
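For reference, the scheme of Daftardar-Gejji and Jafari (2006) is usually written in the following standard form. It is given here as a summary of the well-known method, not as a reproduction of the numbered displays of this section: the functional equation, its series solution, the decomposition of N, the recurrence and the k-term approximation read

\[
y = g + N(y), \qquad y = \sum_{i=0}^{\infty} y_{i},
\]
\[
N\Big(\sum_{i=0}^{\infty} y_{i}\Big) = N(y_{0}) + \sum_{i=1}^{\infty} \Big[ N\Big(\sum_{j=0}^{i} y_{j}\Big) - N\Big(\sum_{j=0}^{i-1} y_{j}\Big) \Big],
\]
\[
y_{0} = g, \qquad y_{1} = N(y_{0}), \qquad y_{m+1} = N(y_{0} + \cdots + y_{m}) - N(y_{0} + \cdots + y_{m-1}), \quad m \ge 1,
\]
\[
y \approx y_{0} + y_{1} + \cdots + y_{k-1}.
\]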

2.2 Second Approach

The basic mathematical theory of the second approach to the NIM is described as follows. This approach is preferred for nonlinear problems. Let us consider the following nonlinear equation:

where \(\varepsilon\) and N are linear and nonlinear operators acting on \(y\left( t \right)\), respectively, and \(g\left( t \right)\) is a known function. We seek a solution y in the series form

The linear operator \(\varepsilon\) can be decomposed as follows:

The nonlinear operator N can be decomposed as follows:

We define the following recurrence relation

The k-term series solution of the general Eq. (7) takes the following form:
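When N in the first approach is linear, its recurrence collapses to \(y_{m+1} = N(y_{m})\), because \(N(y_{0} + \cdots + y_{m}) - N(y_{0} + \cdots + y_{m-1}) = N(y_{m})\). The following is a minimal Python sketch under that assumption. The test problem \(D_{t}^{\alpha} y = -y\), \(y(0) = 1\), \(0 < \alpha \le 1\) (rewritten as \(y = 1 - J^{\alpha} y\)) and all function names are hypothetical illustrations, not taken from this paper. Functions are stored as sums of powers of \(t\) so that \(J^{\alpha}\) can be applied exactly, term by term.

```python
import math
from typing import Callable, Dict, List

# A function of t stored as {exponent p: coefficient c}, meaning sum(c * t**p).
PowerSum = Dict[float, float]


def rl_integral(f: PowerSum, alpha: float) -> PowerSum:
    """Riemann-Liouville integral J^alpha, term by term:
    J^alpha t^p = Gamma(p + 1) / Gamma(p + 1 + alpha) * t^(p + alpha), for p > -1."""
    return {p + alpha: c * math.gamma(p + 1) / math.gamma(p + 1 + alpha) for p, c in f.items()}


def evaluate(f: PowerSum, t: float) -> float:
    """Evaluate a power sum at a point t >= 0."""
    return sum(c * t ** p for p, c in f.items())


def nim_terms_linear(g: PowerSum, op: Callable[[PowerSum], PowerSum], k: int) -> List[PowerSum]:
    """First k NIM terms for y = g + N(y) with N linear: y_0 = g, y_{m+1} = N(y_m)."""
    terms = [g]
    for _ in range(k - 1):
        terms.append(op(terms[-1]))
    return terms


# Hypothetical test problem: D^alpha y = -y, y(0) = 1, i.e. y = 1 - J^alpha y.
ALPHA = 0.5


def n_op(f: PowerSum) -> PowerSum:
    """N(y) = -J^alpha y for the test problem."""
    return {p: -c for p, c in rl_integral(f, ALPHA).items()}


terms = nim_terms_linear({0.0: 1.0}, n_op, k=40)
t = 0.5
approx = sum(evaluate(term, t) for term in terms)
# For ALPHA = 0.5 the exact solution E_{1/2}(-t^{1/2}) equals exp(t) * erfc(sqrt(t)).
exact = math.exp(t) * math.erfc(math.sqrt(t))
print(f"40-term NIM: {approx:.12f}  exact: {exact:.12f}")
```

The iterates are \(y_{k} = (-1)^{k} t^{k\alpha} / \Gamma(k\alpha + 1)\), i.e. the series of the Mittag-Leffler function \(E_{\alpha}(-t^{\alpha})\); for \(\alpha = 1/2\) this has the closed form used in the comparison. Exact exponent bookkeeping is a deliberate choice here: it keeps each term symbolic, so the pattern of the iterates can be read off directly.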

Example 2.1

Consider the following nonlinear initial value problem (Yuzbas 2013)

Isolating the unknown function

by using Eq. (4), we get

We get only an approximate solution of the form

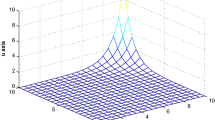

The figure above shows the graph of this approximate solution.

The same problem will be solved later on in this paper using the newly introduced method.

Example 2.2

Consider the time-dependent one-dimensional heat conduction equation (Torvik and Bagley 1984) as follows:

Isolating the unknown function

by using the second approach of the NIM in Eq. (10), suppose that

We obtain the exact solution in the form

where \(E_{\alpha ,1} \left( t \right)\) is the Mittag-Leffler function.
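The two-parameter Mittag-Leffler function \(E_{\alpha,\beta}(z) = \sum_{k=0}^{\infty} z^{k} / \Gamma(\alpha k + \beta)\), which reduces to \(e^{z}\) for \(\alpha = \beta = 1\), can be evaluated for moderate \(|z|\) by truncating its series. The sketch below is a minimal illustration; the function name and tolerances are our own choices, and for large negative arguments the plain series loses accuracy through cancellation, so specialized algorithms should be preferred there.

```python
import math


def mittag_leffler(z: float, alpha: float, beta: float = 1.0,
                   tol: float = 1e-16, max_terms: int = 1000) -> float:
    """Truncated series E_{alpha,beta}(z) = sum_k z**k / Gamma(alpha*k + beta).

    Assumes alpha > 0 and beta > 0, so every Gamma argument is positive.
    Suitable for moderate |z| only: for large negative z the alternating
    series suffers catastrophic cancellation.
    """
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs_z = math.log(abs(z))
    total = 0.0
    for k in range(max_terms):
        # Work in log space so that Gamma(alpha*k + beta) never overflows.
        magnitude = math.exp(k * log_abs_z - math.lgamma(alpha * k + beta))
        term = -magnitude if (z < 0 and k % 2 == 1) else magnitude
        total += term
        # Stop once the terms no longer change the sum at double precision.
        if k > 0 and magnitude < tol * max(1.0, abs(total)):
            break
    return total
```

As a hypothetical usage example, the solution \(E_{\alpha,1}(-t^{\alpha})\) of the relaxation-type problem \(D_{t}^{\alpha} y = -y\), \(y(0) = 1\), at \(t = 0.5\) would be `mittag_leffler(-(0.5 ** alpha), alpha)`. Two classical special cases give quick sanity checks: \(E_{1,1}(z) = e^{z}\) and \(E_{2,1}(-x^{2}) = \cos x\).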

3 One-Step New Iterative Method (OSNIM)

A variety of problems in physics, chemistry, biology and engineering can be formulated in terms of the nonlinear functional equation

where f is a given function and N is a nonlinear operator. Equation (18) covers integral equations, ordinary differential equations (ODEs), partial differential equations (PDEs), fractional-order differential equations, systems of ODEs/PDEs and so on. Methods such as the Laplace and Fourier transforms and Green's function method have been used to solve linear equations; for nonlinear equations, however, one has to resort to numerical or iterative methods. The Adomian decomposition method (ADM) has proved to be a useful tool for solving the functional Eq. (18) (Adomian 1988, 1994; Daftardar-Gejji and Jafari 2005). Although the study of fractional differential equations (FDEs) in general, and of the BTE in particular, has been hindered by the lack of efficient and accurate techniques, deriving approximate solutions of FDEs remains an active research topic that calls for skillful and robust schemes. Daftardar-Gejji and Jafari proposed an iterative method, called the new iterative method (NIM), for finding approximate solutions of differential equations (Al-Luhaibi 2015; Samreen et al. 2018). Unlike ADM, the NIM does not require computing tedious Adomian polynomials for the nonlinear terms; unlike the variational iteration method (VIM), it does not require determining a Lagrange multiplier; and unlike numerical methods, it does not require discretization. The method handles linear and nonlinear equations in an easy and straightforward way. Recently, it has been extended to differential equations of fractional order (Al-Luhaibi 2015; Kazem 2013; Podlubny 1999). It yields solutions in the form of rapidly converging infinite series, which can be approximated effectively by computing only the first few terms.

In the present study, we implement the NIM to find the approximate solution of the fractional-order BTE, and we generalize the algorithm so that the BTE can be solved more easily. To describe the idea of the new generalized algorithm for the NIM, consider the following general Bagley–Torvik equation (BTE)

then by isolating the fractional derivative term

where \(f_{1} \left( t \right) = \frac{f\left( t \right)}{B},\;A_{1} = \frac{A}{B}\) and \(C_{1} = \frac{C}{B}\), and then

Suppose we divide this equation into two parts as follows

where

where N is, in general, a nonlinear operator from a Banach space \(B\) into itself (for the BTE, however, it acts on y linearly), and \(g\left( t \right)\) is a known function defined as

and we seek a solution \(y\left( t \right)\) of Eq. (22) in the series form

The operator N can be decomposed as follows:

Computing the successive terms \(y_{i}\),

from this, we can deduce the following

then, the k-term series solution will be in the form
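To make the splitting concrete, consider the classical form \(A y''(t) + B D_{t}^{\alpha} y(t) + C y(t) = f(t)\) with \(1 < \alpha < 2\); this is an assumption for illustration (Torvik and Bagley's original model has \(\alpha = 3/2\)). Isolating \(D_{t}^{\alpha} y\), applying \(J^{\alpha}\) and using the standard Caputo property \(J^{\alpha} D_{t}^{\alpha} y(t) = y(t) - \sum_{i=0}^{m-1} y^{(i)}(0)\, t^{i}/i!\) (with \(m - 1 < \alpha < m\)) gives one natural choice of g and N. It is offered as a sketch and is not necessarily identical to the splitting used above:

\[
D_{t}^{\alpha} y = f_{1}(t) - A_{1} y''(t) - C_{1} y(t)
\;\Longrightarrow\;
y(t) = \underbrace{\delta_{0} + \delta_{1} t + J^{\alpha} f_{1}(t)}_{g(t)}
\;\underbrace{-\,J^{\alpha}\big[A_{1} y''(t) + C_{1} y(t)\big]}_{N(y)},
\]

with \(\delta_{0} = y(0)\) and \(\delta_{1} = y'(0)\). For \(0 < \alpha \le 1\) only the \(\delta_{0}\) term remains, which is consistent with the remark in Examples 5.4 and 5.5 that the second initial condition is applied only when \(\alpha > 1\).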

4 Convergence and Error Analysis

In this section, we prove that the method converges for the BTE and that the error becomes negligible, following the approach of A. A. Hemeda (Enesiz et al. 2010). Using Eq. (27), we define the following recurrence relation

Let \(e = u^{*} - u\), where \(u^{*}\) is the exact solution, u is the approximate solution and e is the error in the solution of Eq. (22); clearly, e satisfies the relation obtained from Eq. (22), that is,

the recurrence relation in Eq. (30) becomes

If

Thus, \(e_{n + 1} \to 0\) as \(n \to \infty ,\) which proves the convergence of the new iterative method for solving the general functional Eq. (22).
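The step behind this conclusion can be made explicit. If the iterates follow the NIM recurrence \(y_{0} = g\), \(y_{1} = N(y_{0})\), \(y_{m+1} = N(y_{0} + \cdots + y_{m}) - N(y_{0} + \cdots + y_{m-1})\), then summing it telescopes, so the partial sums \(u_{n} = y_{0} + \cdots + y_{n}\) satisfy \(u_{n+1} = g + N(u_{n})\), while the exact solution satisfies \(u^{*} = g + N(u^{*})\). Under the contraction condition below (an assumption on N that has to be checked for the problem at hand), the error \(e_{n} = u^{*} - u_{n}\) obeys

\[
e_{n+1} = N(u^{*}) - N(u_{n}), \qquad
\|N(x) - N(z)\| \le K \|x - z\|, \; 0 < K < 1
\;\Longrightarrow\;
\|e_{n+1}\| \le K \|e_{n}\| \le \cdots \le K^{n+1} \|e_{0}\| \to 0 .
\]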

5 Illustrating Examples

In this section, we apply the algorithm presented in Sect. 3 to some special types of linear and nonlinear fractional differential equations. The results show that the method is convenient and efficient.

Example 5.1

Consider the following BTE (Abu and Maayah 2018)

subject to

where the exact solution is \(y\left( t \right) = 1 + t.\) By using Eq. (28), we get

from which it is easy to see that the exact solution takes the form

It is worth mentioning that A. A. Hemeda (Raja et al. 2011) solved the same problem in 2013 with a slight difference: he chose a different \({\text{y}}_{0}\), which led to an approximate solution. His 6-term approximation took the form

Here, by contrast, this slight modification yields the exact solution.

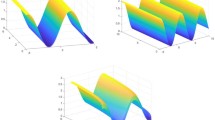

Example 5.2

Consider the following BTE (Abu and Maayah 2018)

subject to

where the exact solution is \(y\left( t \right) = t^{2} .\) By using Eq. (28), we get

Computing the higher-order terms, we observe that many noise terms appear. After canceling these noise terms, it is easy to see that the exact solution takes the form

Example 5.3

Consider the following BTE (Abu and Maayah 2018)

subject to

where the exact solution is \(y\left( t \right) = t^{2} + 1.\) By using Eq. (28), we get

Computing the higher-order terms, we observe that many noise terms appear. After canceling these noise terms, it is easy to see that the exact solution takes the form
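Exact polynomial solutions such as those of Examples 5.1–5.3 can be checked by substituting them back into the equation with the Caputo power rule. The sketch below assumes the classical form \(A y'' + B D_{t}^{\alpha} y + C y = f(t)\) with \(A = B = C = 1\) and \(\alpha = 3/2\); these coefficients are an illustrative assumption, since the equations of the examples are not reproduced in this text, and the function names are hypothetical. It returns the forcing term f as a map from exponents of \(t\) to coefficients.

```python
import math
from typing import Dict, Sequence

# {exponent p: coefficient c} represents sum(c * t**p).
PowerSum = Dict[float, float]


def caputo_monomial(k: int, q: float) -> PowerSum:
    """Caputo derivative of t**k (integer k >= 0) of non-integer order q, m - 1 < q < m."""
    m = math.ceil(q)
    if k < m:
        return {}  # polynomials of degree < m are annihilated
    return {float(k) - q: math.gamma(k + 1) / math.gamma(k + 1 - q)}


def bte_forcing(poly: Sequence[float], A: float = 1.0, B: float = 1.0,
                C: float = 1.0, alpha: float = 1.5) -> PowerSum:
    """f = A y'' + B D^alpha y + C y for the polynomial y(t) = sum(poly[k] * t**k)."""
    f: PowerSum = {}

    def add(p: float, c: float) -> None:
        f[float(p)] = f.get(float(p), 0.0) + c

    for k, a in enumerate(poly):
        if a == 0:
            continue
        if k >= 2:
            add(k - 2, A * a * k * (k - 1))      # second-derivative term
        for p, c in caputo_monomial(k, alpha).items():
            add(p, B * a * c)                    # Caputo fractional term
        add(k, C * a)                            # zeroth-order term
    return {p: c for p, c in f.items() if c != 0.0}


f1 = bte_forcing([1.0, 1.0])        # y = 1 + t
f2 = bte_forcing([0.0, 0.0, 1.0])   # y = t^2
f3 = bte_forcing([1.0, 0.0, 1.0])   # y = t^2 + 1
print(f1, f2, f3)
```

Under these assumptions, \(y = 1 + t\) requires \(f(t) = 1 + t\) (the derivative of order 3/2 annihilates \(1\) and \(t\)), while \(y = t^{2}\) requires \(f(t) = t^{2} + 2 + 4\sqrt{t/\pi}\).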

Example 5.4

Consider the following BTE (Hemeda 2013)

subject to

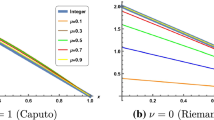

the second condition is only applied when \(\alpha > 1.\) In this problem, \(A_{1} = f_{1} \left( t \right) = 0.\) By using Eq. (28), we get

and so on. Computing further higher-order terms shows that the series continues in the same pattern, from which we deduce that

which is the exact solution.
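The pattern can be written out explicitly. With \(A_{1} = f_{1}(t) = 0\), the isolated equation reads \(D_{t}^{\alpha} y = -C_{1} y\). Assuming the splitting \(g(t) = \delta_{0} + \delta_{1} t\) and \(N(y) = -C_{1} J^{\alpha} y\), with the \(\delta_{1} t\) term present only when \(\alpha > 1\) (the specific values of \(C_{1}\), \(\delta_{i}\) and \(\alpha\) in this example are not reproduced here), the linear recurrence \(y_{m+1} = N(y_{m})\) and the rule \(J^{\alpha} t^{p} = \Gamma(p+1)\, t^{p+\alpha} / \Gamma(p+1+\alpha)\) give

\[
y_{m}(t) = (-C_{1})^{m} \left[ \delta_{0} \frac{t^{\alpha m}}{\Gamma(\alpha m + 1)} + \delta_{1} \frac{t^{\alpha m + 1}}{\Gamma(\alpha m + 2)} \right],
\qquad
y(t) = \sum_{m=0}^{\infty} y_{m}(t) = \delta_{0} E_{\alpha,1}\!\left(-C_{1} t^{\alpha}\right) + \delta_{1}\, t\, E_{\alpha,2}\!\left(-C_{1} t^{\alpha}\right),
\]

where \(E_{\alpha,\beta}(z) = \sum_{k \ge 0} z^{k} / \Gamma(\alpha k + \beta)\) is the two-parameter Mittag-Leffler function.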

Example 5.5

Consider the following BTE (Hemeda 2013)

subject to

the second condition is only applied when \(\alpha > 1.\) In this problem, \(A_{1} = 0.\) By using Eq. (28), we get

Computing the higher-order terms, we observe that many noise terms appear in a certain pattern. After canceling these noise terms, it is easy to see that the exact solution takes the form

Example 5.6

Consider the following nonlinear initial value problem (Torvik and Bagley 1984)

Isolating the unknown function and using Eq. (27)

by using Eq. (28), we get

Computing the higher-order terms, we notice that they all vanish owing to the use of the one-step NIM. It is then easy to see that the exact solution takes the form

We should also point out that applying the usual NIM to the same problem yields only an approximate solution of the form

the graph of this solution.

6 Conclusion

The aim of this article is to modify the NIM in order to obtain the exact solution of the Bagley–Torvik equation. The suggested modification is called the one-step new iterative method. In this article, we have presented a successful algorithm for solving the BTE. The introduced algorithm gave the exact solution in all examples studied in this paper, which indicates that the method is efficient, accurate and reliable when used to solve linear and nonlinear fractional differential equations, in particular the BTE.

References

Abu AO, Maayah (2018) Solutions of Bagley–Torvik and Painlevé equations of fractional order using iterative reproducing kernel algorithm with error estimates. Neural Comput Appl 29:1465–1479

Adomian G (1988) A review of the decomposition method in applied mathematics. J Math Anal Appl 135:501–544

Adomian G (1994) Solving Frontier problems of physics: the decomposition method. Fundamental theories of physics. Kluwer Academic Publishers, Dordrecht

Al-Luhaibi MS (2015) New iterative method for fractional gas dynamics and coupled Burger’s equations. Sci World J 2015:153124

Daftardar-Gejji V, Jafari H (2005) Adomian decomposition: a tool for solving a system of fractional differential equations. J Math Anal Appl 301:508–518

Daftardar-Gejji V, Jafari H (2006) An iterative method for solving nonlinear functional equations. J Math Anal Appl 316:753–763

Enesiz YC, Keskin Y, Kurnaz A (2010) The solution of the Bagley–Torvik equation with the generalized Taylor collocation method. IEEE Eng Appl Math 347:452–466

Hemeda AA (2013) New iterative method: an application for solving fractional physical differential equations. Abstr Appl Anal 2013:49–52

Hemeda AA, Al-Luhaibi MS (2014) New iterative method for solving gas dynamic equation. Int J Appl Math Res 3:190–195

Kazem S (2013) Exact solution of some linear fractional differential equations by Laplace transform. Int J Nonlinear Sci 16:3–11

Kilbas A, Srivastava H, Trujillo J (2006) Theory and applications of fractional differential equations. Elsevier, Amsterdam

Mahdy AMS, Mukhtar NAH (2017) New iterative method for solving nonlinear partial differential equations. J Progress Res Math 11:1701–1711

Mainardi F (2010) Fractional calculus and waves in linear viscoelasticity. Imperial College Press, London

Manafian J, Guzali A, Jalali J (2014) Application of homotopy analysis method for solving nonlinear fractional partial differential equations. Asian J Fuzzy Appl Math 2:1–14

Podlubny I (1999) Fractional differential equations. Academic Press, San Diego

Raja MA, Khan JA, Qureshi IM (2011) Solution of fractional order system of Bagley-Torvik equation using evolutionary computational intelligence. Math Probl Eng 2011:675075

Ramadan MA, Al-luhaibi MS (2014) New iterative method for solving the Fornberg–Whitham equation and comparison with homotopy perturbation transform method. Br J Math Comput Sci 4:1213–1227

Ramadan MA, Al-luhaibi MS (2015) New iterative method for Cauchy problems. J Math Comput Sci 5:826–835

Saadatmandi A, Mehdi D (2010) A new operational matrix for solving fractional-order differential equations. Comput Math Appl 59:1326–1336

Samko SG, Kilbas AA, Marichev OI (1993) Fractional integrals and derivatives theory and applications. Gordon and Breach, New York

Samreen F, Rashid N, Khan MJ, Javed I (2018) New iterative method for the solution of fractional damped Burger and fractional Sharma-Tasso-Olver equations. Complexity 5:1–7

Sontakke BR, Shaikh A (2016) Approximate solutions of time fractional Kawahara and modified Kawahara equations by fractional complex transform. Commun Numer Anal 2016:218–229

Sunil K (2014) A new analytical modeling for fractional telegraph equation via Laplace transform. Appl Math Model 38:3154–3163

Sunil K, Amit K (2018) A modified analytical approach for fractional discrete KdV equations arising in particle vibrations. Natl Acad Sci 8:95–106

Sunil K, Mohammad MR (2014) New analytical method for gas dynamics equation arising in shock fronts. Comput Phys Commun 185:1947–1954

Sunil K, Amit K, Dumitru B (2016) Two analytical methods for time-fractional nonlinear coupled Boussinesq-Burger’s equations arise in propagation of shallow water waves. J Nonlinear Dyn 85:699–715

Sunil K, Amit K, Argyros IK (2017) A new analysis for the Keller-Segel model of fractional order. Numer Algorithms 75:213–228

Torvik PJ, Bagley RL (1984) On the appearance of the fractional derivative in the behavior of real materials. J Appl Mech 51:294–298

Yuzbas S (2013) Numerical solution of the Bagley–Torvik equation by the Bessel collocation method. Math Methods Appl Sci 36:300–312

Zaslavsky GM (2005) Hamiltonian chaos and fractional dynamics. Oxford University Press, Oxford

Acknowledgements

The authors would like to thank the reviewers and the editor for their careful revision of this paper and their valuable remarks, which improved the work.


Cite this article

Ramadan, M.A., Moatimid, G.M. & Taha, M.H. One-Step New Iterative Method for Solving Bagley–Torvik Fractional Differential Equation. Iran J Sci Technol Trans Sci 43, 2493–2500 (2019). https://doi.org/10.1007/s40995-019-00727-z


DOI: https://doi.org/10.1007/s40995-019-00727-z

Keywords

- Bagley–Torvik equation

- Fractional differential equation

- New iterative technique

- One-step new iterative method