Abstract

This study overviews how to strengthen railway embankments using a geo-grid in the cohesive soil embankment layer and the Bishop method to determine a safety factor using Geo-Studio software. The primary goal of this study is to show the relationship between an embankment’s safety factor with and without a geo-grid. These parameters, such as angle of internal friction, cohesion value, and unit weight for both the subsoil and embankment layer, respectively, pull-out resistance, and tensile capacity for geo-grid, have been used as input in this test. The safety factor has increased continually after altering its features and incorporating a geo-grid into the embankment layer. Based on the Geo-Studio results, the ideal choice was to strengthen the railway embankment by adding a geo-grid to the embankment layer and employing a reliable computational technique to analyse the corridor’s probabilistic slope stability for heavy-duty freight trains. The current method, which has been utilised to undertake a probabilistic study of a high embankment of 12.29 m taken by the Ministry of Indian Railways for a heavy-haul freight corridor, consists of four model analyses: convolutional neural networks (CNN), deep neural networks (DNN), artificial neural networks (ANN), and multiple linear regression (MLR). Performance indicators assessed the models’ performance, such as R2, RMSE, RSR, WI, MAE, NS, and PI. According to the analysis of the results, the CNN model outperformed DNN, ANN, and MLR. CNN is, therefore, a trustworthy soft computing technique for determining the safety of a railway embankment slope.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The development of its infrastructure facilities significantly influences the growth and advancement of a nation. Infrastructure, including trains, roads, bridges, and airports, is a crucial factor in the country’s economic growth and helps to reduce poverty. There has been a constant need for engineering and building research and innovation since industrialisation and urbanisation are accelerating. Recently, transportation engineering has witnessed significant changes in how infrastructure facilities are built (Huang et al. 2020; Démurger 2001; Straub 2008).

Most nations rely mostly on rail and road transit for public transportation. With 8.25 billion passengers and over nine hundred eighty million metric tonnes of goods transported annually, India had the world’s second-largest and busiest road network in 2015. India’s rail network will be the second busiest and fourth largest globally by 2020, carrying 8.1 billion people and 1.2 billion metric tonnes of freight annually. Railways utilise wheeled vehicles that move along tracks to transport people and cargo, making them a form of public transportation. Constructors commonly use steel rails to build tracks, placing them over ballast cushions and securing them to sleepers. The Permanent Way is the prepared foundation for the entire track system (P-way). When creating P-ways, it is essential to adhere to specified standards to ensure equal distribution of train load and proper passage to the sub-soils. Engineers commonly make P-ways using either earthwork in formation and cutting or a combination of the two. Cutting and forming procedures comply with the specifications for cross-sectional and longitudinal gradients (Sushma et al. 2022; Kabongo Booto et al. 2020). A large railway project requires significant earthwork due to the frequent construction of P-ways on naturally existing ground surfaces. The building of a rail track system also requires a substantial financial investment. Carefully evaluating the stability and dependability of a railway embankment is crucial for successfully operating the entire procedure.

Ground enhancement improves the soil’s engineering qualities and is primarily used for embankments, retaining walls, and other structures. The stability of the soil mass is one of the most crucial factors to consider when designing and building systems that support the soil. The shear strength of the soil directly affects its bearing capacity and stability against retaining structures. The demand for ecologically benign, practical, and cost-effective geotechnical systems is rising worldwide. There has been a negative environmental impact from the removal of aggregate. There has been a tendency to employ cohesive soil as a building material to lessen this impact and make it more affordable. Despite being one of the least expensive building materials, cohesive soil is widely available. However, due to its reduced frictional strength, it should not be utilised directly as a backfill material for embankment construction due to its pore water pressure and compatibility.

Reinforcing components like geosynthetics can expand their application. Geosynthetics are employed due to their quick construction, adaptability to various site conditions, flexibility, durability, and cost-effectiveness. To transfer stress from the soil to the reinforcement to improve the behaviour of cohesive soil, adequate clay-geogrid interaction must be achieved. Adding geogrid improves the cohesive soil’s stability while providing sideways drainage and preventing excessive pore water pressure during saturation. Using geosynthetic materials as filters and for material separation is well-recognised (Faure et al. 2006). For a safe and economically sensible design of soil reinforcement, it is crucial to understand the dynamics between the soil and the reinforcement (Giroud 1986). The effectiveness of adding geogrids to thin layers of sand has been shown to increase the reactivity of clay soils significantly. However, the interactions between cohesive soils and geosynthetics have only been the subject of a few investigations (Bergado et al. 1991; Abdi et al. 2009; Chen et al. 2007; Das et al. 1998).

Researchers have developed numerous techniques for analysing slope stability. Practices often employed include the limit analysis method, the strength reduction approach, and the limit equilibrium method (LEM) (Assefa et al. 2017; Reale et al. 2015). The factor of safety (FOS) is used by the deterministic LEM and SRM techniques to estimate the slope’s stability of the soil using fixed values for several soil properties (Reale et al. 2015; Das et al. 2022). Despite repeatedly proven ineffective, the FOS-based approach generates a careful analysis (Zhao 2008). The soil’s uncertainty parameter consists of pore-water pressures, angle of internal friction (φ), cohesion (c), bulk density (γ), and external loads, which have not been expressed explicitly in the FOS, which is a significant drawback of a deterministic method (Zhao 2008). In addition, it is usual to use the evaluated value of the factor of safety for a specific type of application, such as long-term slope stability, regardless of the magnitude of the calculational error. However, using the same FOS value in situations with vastly different levels of uncertainty is not reasonable. The main things that complicate soil materials are how they react to non-linear stress–strain relationships, how they change over time in response to stress and strain, and how they behave when loaded and unloaded (Majedi et al. 2021; Afrazi and Yazdani 2021; Rezamand et al. 2021; Shariati et al. 2020a). A thorough investigation of geotechnical parameters is required to consider the uncertainty in slope stability estimations. In this case, reliability analysis (RA) is a beneficial technique.

Geotechnical assessments can logically account for the uncertainty in soil parameters with the help of probability theory and statistics (Cao et al. 2017). Engineers have evaluated the performance of geotechnical structures based on this approach. Probabilistically calculated reliability index (R) and probability of failure (POF or P_f) are often used. The likelihood that the performance standards have not been met is the POF (Cao et al. 2017; Phoon and Ching 2015). Numerous techniques, including the first-order second-moment method, have been developed during the last few decades to perform RA of geotechnical structures (FOSM) (Cao et al. 2017), the first-order reliability method (FORM) (Cao et al. 2017; Phoon and Ching 2015), and the direct Monte Carlo simulation (MCS) method (Cao et al. 2017; Phoon and Ching 2015; Baecher and Christian 2005). The abovementioned techniques return β and P_f of predetermined probabilistic estimates of soil characteristics and subsurface stratigraphy as inputs for developing geotechnical structures (Cao et al. 2017). However, there has not been much focus on using these techniques to address issues with railway slope stability (Assefa et al. 2017).

The most effective slope RA utilises the FOSM. The FOSM requires the computation of performance functions and their partial derivatives for the fundamental random variables at each iteration step. As a result, researchers must calculate the performance functions and their partial products numerous times (Zhao 2008). When the performance functions are defined directly concerning the fundamental variables, they can effectively perform such computations. On the other hand, these calculations require additional work and may take a while when the performance functions are implicit. Implicit performance functions are frequently encountered when using LEMs for slope stability analysis (Zhao 2008; Deng 2006; Deng et al. 2005).

There are a few established methods for handling implicit performance functions. The response surface approach is one of them (RSM) (Zhao 2008; He et al. 2020). To estimate the implicit performance functions, RSM employs a polynomial function. A reasonably good approximation of β will be constructed if the designated polynomial closely fits the limit state (Zhao 2008). Two other techniques that can help improve the accuracy of the results are the multi-tangent plane surface method and the multi-plane surface method. However, in non-linear dynamics, they can only be used with limit-state surfaces that are concave or convex (Zhao 2008).

Researchers have performed RA of soil slopes. Examples of machine learning (ML) techniques include the use of least-square SVM, artificial neural network (ANN), multivariate adaptive regression splines (MARS), relevance vector machine (RVM), extreme learning machine (ELM), and support vector regression (SVR) (Deng 2006; Deng et al. 2005; He et al. 2020; Cho 2009; Kumar et al. 2019). Because they are good at non-linear modelling, ML algorithms can simulate slope reliability problems well by getting close to the implicit performance functions (Zhang et al. 2022; Shariati et al. 2020b, 2021; Safa et al. 2020).

In the present work, a railway embankment of a height of 12.29 (Bardhan and Samui 2022) is assumed, in which the embankment layer is made up of cohesive soil, and the parameter that affects the railway embankment is based on a factor of safety criteria. After selecting parameters, find the range of parameters in which they vary. Generate 100 datasets using these parameters to calculate the safety factor for the actual data set. These actual data sets are utilised to run on the Geo-Studio software using the Bishop, a method to acquire the trend chart of a safety factor. A soft computing model consisting of ANN, CNN, DNN, and MLR was used to calculate the RA of a 12,293-m-tall Indian railway embankment. The FOSM was used to map the soil’s uncertainty by applying probability theory and statistics. The reliability and the POF were exclusively computed during non-seismic situations to analyse the influence of soil parameter errors.

2 Methodology

2.1 Deterministic Analysis

The ratio along a potential slope surface of resistance to disturbance is what LEM refers to as a slope’s FOS (Reale et al. 2015). There are many documented techniques for calculating the FOS, and many of them are based on the slice technique (Reale et al. 2015). Using the simplified Bishop’s method of slices (Bishop 1955), a typical profile of a given soil slope’s FOS is depicted in Fig. 1.

Ci and \({\phi }_{i}\) have been defined as cohesion and angle of internal friction at the base of the ith slice of soil; xi and Wi are the ith slice’s width and weight, respectively; ui is the ith slice of the pore water pressure; and αi is the ith slice tangential angle of the base. N stands for the total number of slices. It should be mentioned that optimisation or trial-and-error methods are used to determine the minimum safety factor. Theoretically, a stable slope has a factor of safety greater than 1. But in practice, the stability level is rarely considered sufficient unless the safety factor is far greater than 1. It is mainly done to make up for the cumulative effect of parameter uncertainty in the slope stability analysis. The Research Designs and Standards Organization (RDSO) of IR recommends that a factor of safety of 1.4 be used as a standard measure of protection against slope failure. Smaller embankments up to 4 m in a seismic-free environment should have a minimum safety factor of 1.6 for slope stability (RDSO, GE:IRS-0004 2020).

2.2 Probabilistic Analysis

Numerous uncertainties might affect a slope stability analysis, including the natural geographic variability of soil characteristics, unknowns regarding subsurface stratigraphy, and modelling flaws (He et al. 2020; Cho 2009). Using Statistics and the theory of probability, it is possible to logically incorporate the uncertainties, including the intrinsic soil spatial variability in soil properties, into geotechnical designs and analysis; minimising these uncertainties in slope stability analysis requires a balanced strategy (He et al. 2020; Cho 2009). The dependability of a soil slope is quantified to POF or P_f, which, as previously stated, is specified as the chance that the performance requirements will not be met. In other words, P_f = P (FOS < 1) means that the POF is the likelihood that the minimum safety factor is less than unity. Below is a presentation of the completed methodology of applied FOSM. Suppose \(g(\underline{x})\) is the deterministic performance function or model utilised in the stability of slope study to calculate the factor of safety, where \(g(\underline{x})\) has a set of random variables \(\underline{x}\) = [\({x}_{1},{x}_{2},{x}_{3}, \dots .., {x}_{k}\)], representing uncertain model parameters. Therefore, the \(\beta\) can be determined as follows (Cao et al. 2017; Baecher and Christian 2005):

where \({\mu }_{{\text{FOS}}}\) and \({\sigma }_{{\text{FOS}}}\) are the mean and standard deviation of the factor of safety. The \({\mu }_{{\text{FOS}}}\) denotes the value of \(g(\underline{x})\) determined at mean values of \({\mu }_{1}, {\mu }_{2},{\mu }_{3}, \dots .., {\mu }_{{\text{k}}}\) of random variables \({x}_{1}, {x}_{2},{x}_{3}, \dots .., {x}_{{\text{k}}}\) and can be written as follows:

and the \({\sigma }_{{\text{FOS}}}\) can be described as follows:

where \({\sigma }_{i}\) (\(i=\mathrm{1,2},3, \dots .., k\)) is the random variables’ standard deviation \({x}_{i}\); \({\rho }_{ij}\) is the coefficient of correlation between two different uncertain variables, \({x}_{i}\) and \({x}_{j}\); \(\frac{\delta g}{\delta {x}_{i}}\) is the performance function’s partial derivative about \({x}_{i}\). Therefore, the \({\text{POF}}\) of slope can be depicted as follows:

where \(\Phi\) is depicted as a function of cumulative standard distribution with a standard deviation of one and mean 0, usually expressed as \(z=\upbeta \sim N(0,{1}^{2})\) (Cao et al. 2017). The calculation of probabilistic slope stability using FOSM is simple. However, an analytical model for slope stability analysis is required.

3 Theoretical Details of Soft Computing Methods Used

This section explains how all soft computing techniques operate. The building method of all models is illustrated in completing probabilistic studies of a heavy-haul railway embankment’s soil slope, which is 12.435 m high in an IR dedicated freight corridor (DFCC).

3.1 Artificial Neural Network

The human brain, specifically how our nervous system’s neurons may retain information from the past, is the inspiration for ANNs, who frequently use machine learning algorithms (Golafshani et al. 2020; Bardhan et al. 2021). Artificial neural networks (ANNs) can conclude data and respond with predictions or classifications like humans. One or more hidden layers, one input layer, and one output layer comprise an ANN (as shown in Fig. 2). In contrast to computational neurons performing linear and non-linear computations in the hidden and output layers, non-computational neurons collect data in the input layer. Remember that weighted linkages connect each neuron in the input, hidden, and output layers.

Furthermore, the biases in the hidden and output layers are proportional to the number of neurons. Inverse relationships exist between the counts of the respective input and output variables and the number of neurons in those layers. On the other hand, the quantity of neurons in the hidden layers varies from issue to issue and can be established through experimentation.

In ANN, for every neuron i, the individual input dataset \({p}_{1}, .., { p}_{r}\) are multiplied with the corresponding values of weights\({w}_{i,1}, .., { w}_{i,r}\), and the weighted values are fed into the summation function’s junction, where the dot product (\(p.{\text{W}}\)) of the input vector \(p={\left[{p}_{1}, .., { p}_{r}\right]}^{T}\) and the weight vector \(W=\left[{w}_{i,1}, .., {w}_{i,r}\right]\) is generated. The bias value, b, is added to the dot-product to form the net input n, which is the activation function’s argument.

In ANN, given a set of inputs, a non-linear activation function, also known as the transfer function, determines the output. The most well-known activation activities are listed below.

The appropriate values of biases and weights are established iteratively to reduce the error between calculated and actual values (Zhu et al. 2021). The root mean square error (RMSE) index frequently serves as the fitness function. Many training methods, such as gradient descent, conjugate gradient, and Levenberg–Marquardt functions, start with random biases and weights and iteratively advance to the best possible result. In the literature, ANNs are used extensively to address engineering issues (Cho 2009; Golafshani et al. 2020; Bardhan et al. 2021; Le et al. 2019).

3.2 Convolutional Neural Network

Researchers primarily developed deep learning algorithms that use convolutional neural networks for image and video processing. It uses photos as inputs, extracts and learns about the qualities of the pictures, and then, categorises the images using the features it has discovered. The visual cortex, a component of the human brain responsible for processing external visual input, was used to construct this application. The visual cortex in the human brain is responsible for processing external visual input. Each level serves a distinct function; for instance, each layer extracts data from an image or other graphic. After combining the data from those layers, the image or visual undergoes evaluation or classification. The CNN model has two steps: feature extraction and classification. The CNN model applies various filters and layers to the images during feature extraction to extract their information and features. The CNN model then forwards the photos to the classification step and categorises them based on the problem’s target variable. The CNN model has an input layer, a convolution layer, an activation function, a polling layer, and a fully connected layer (Sabri et al. 2023), as shown in Fig. 3.

3.3 Deep Neural Network

An ANN with several hidden layers (more than one) between the input and output layers is known as a deep neural network (DNN). DNNs may represent complex non-linear interactions similar to shallow ANNs (Kumar et al. 2023). When dealing with practical issues like categorisation, a neural network’s primary operation is to take in a set of inputs, analyse those inputs using progressively complex computations, and then, output the results.

As the diagram below indicates, a deep neural network has a flow of sequential data, an input, and an output. Figure 4 depicts the basic structure of DNN.

3.4 Multiple Linear Regression

Regression analysis is a statistical technique used to forecast the nature of relationships between several variables (Fig. 5). Learning more about the link between several independent or predictive factors, together with a dependent variable (Raja et al. 2021), is the primary goal of MLR. This method frequently forecasts landslides and slope failures (Pradhan et al. 2012). In multiple regressions, the criterion is predicted by two or more variables, unlike simple linear regression, which indicates a single criterion value from one predictor variable. So, examining many independent variables and the dependent variable correlations is the focus of multiple regressions. The general equation of MLR is as follows:

where Y is the dependent variable, x1, x2…..xn is the independent variable, and b1, b2…….,bn is the regression coefficient.

4 Data collection and Analysis

4.1 Material Properties

The values of the various layers’ material attributes are shown in Table 1 (Bardhan and Samui 2022) and Fig. 6 for the filling of earthworks. Information on soil parameters, such as cohesion (c in kN/m2), angle of internal friction (ɸ in °), and unit weight of soil (γ in kN/m3), was gathered for this inquiry. Information on the properties of the embankment fill material prepared, sub-grade, blanket layer, and sleeper was also acquired. The blanket layer is 600 mm thick, the prepared subgrade is 1000 mm wide, and the varying height of the embankment fill that makes up the embankment’s overall height should be noted.

Table 1 shows the material properties of various layers. Note that the constant material properties of sleepers (\(c\) = 50 kN/m2, ɸ = 40°, and \(\gamma\) = 24 kN/m3), ballast (\(c\) = 0 kN/m2, ɸ = 40°, and \(\gamma\) = 18 kN/m3), and blanket layer (\(c\) = 0 kN/m2, ɸ = 32°, and \(\gamma\) = 19 kN/m3) were taken into account. The parameter \(c\) has a value of 17.25 kN/m2 for the sub-soils (layers 1 and 2) and 62 kN/m2 for the prepared sub-grade and embankment fill. The four levels are prepared sub-grade, embankment fill, sub-soil layer-1, and sub-soil layer-2. The parameters ɸ and \(\gamma\) have the values of 26°, 10°, 15.5°, and 17 kN/m3 to 20.89 kN/m3, 39.5 kN/m3, respectively. We used Table 1 data to generate random samples for the slope stability experiment. We used tri-axial and direct shear tests to determine the soil shear strength values for the undisturbed and disturbed samples.

4.2 12.29-m-High Embankment Details

For the probabilistic analysis, the embankment’s maximum height in this study, 12.29 m, has been considered. Figure 7 shows the geometry of the slope. We selected the study’s critical cross-section based on the embankment’s maximum fill height.

After measuring this material property, we measured the pull-out resistance as 75 kN/m2 and the tensile capacity as 180 kN of geogrid to analyse the data collected while comparing the factor of safety with and without encapsulating the geogrid as a thin layer in the cohesive soil embankment.

4.3 Computational Modelling for Slope Stability Analysis

The workflow for the whole methodology utilised to create ML models for the estimation of slope stability of the 12.29 m soil slope is presented in Fig. 8. The procedure consists of two phases. Slope stability analysis was carried out in the first phase using various combinations of input parameters, including soil parameters (c, ϕ, and γ) for both embankment fill and subsoil layer and geogrid parameters (PR and TC). We fed each combination, representing a single sample of data, into the GeoStudio Slope/W module and used Bishop’s approach to determine the FOS of the slope. The output FOS values and the associated input parameters (c, ϕ, γ, PR, and TC) were used to create the primary dataset. For slope stability analysis, random samples were generated with coefficient of variation values of 0.10 for c, 0.05 for \(\phi\), 0.04 for \(\gamma\), 0.135 for PR, and 0.1 for TC. In particular, 100 datasets were developed considering the values of c, \(\phi\), \(\gamma\), PR, and TC. We normalised and divided the entire dataset into subgroups for training and testing to construct and validate the employed computational models.

4.4 AI-Based Modelling for Probabilistic Analysis

There are two parts to creating AI-based models for the soil slope of 12.29 m using probabilistic analysis. In the first step, a slope stability study was conducted utilising several input parameter combinations, specifically (a) soil parameters (c, ɸ, γ, PR, and TC). We applied Bishop’s method to these combinations to calculate the FOS of the slope. Each combination represents a single data sample submitted to the Geo-Studio Slope/W module. The primary dataset was created by combining the output FOS values with their associated input parameters (i.e. c, ɸ, γ, PR, and TC). The values of c, ɸ, γ, PR, and TC for three soil layers (i.e. embankment fill, sub-soil layer-1, and sub-soil layer-2) were combined to create 100 datasets. The entire dataset was then standardised and split into subgroups for training and testing. We randomly selected 70% of the overall dataset as the training subset, and the remaining 30% was designated as the testing subset. The testing subgroup evaluated the models’ capacity to generalise, while the training subgroup built the models outlined above. The best model for forecasting the soil slope’s safety factor was chosen (based on the testing subset’s performance), utilising many performance indices.

5 Results and Discussion

The results of slope stability analyses, with or without using an encapsulating geo-grid as a thin layer in the embankment fill of cohesive soil, are presented. Additionally, an assessment of the performances of the constructed models is included. This section presents a probabilistic study using the top-performing model with a soil slope of 12.29 m.

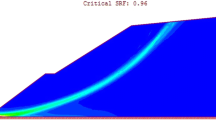

5.1 Slope Stability Analysis

As previously mentioned, geotechnical engineering utilises the factor of safety as a standard index to evaluate the safety of a slope. The FOS exhibits the smallest ratio of resistive and overturning moments among all possible sliding surfaces. We completed the deterministic slope stability analysis using the SLOPE/W module of the Geo-Studio 2016 program. We randomly generated one hundred datasets using the mean values of the soil parameters and entered them into the SLOPE/W module to determine the FOS of the specified soil slope. Each dataset’s FOS has been estimated. Figure 9 shows the results of slope stability calculations with or without geogrid. Annexure provides the data on these safety factors with or without encapsulating geogrid.

5.2 Computational Modelling

The soil parameters (c, ɸ, and γ) and the geogrid parameter (PR and TC) were utilised as the input variables, and the derived FOS values were produced as FOS as (clay + geogrid) was used as the output variable. Table 2 lists the specific input and output parameters when building models. This study looked into the number of hidden layers of NH, which ranged from 5 to 20. We also examined Levenberg–Marquardt back-propagation and tan-sigmoid activation functions to establish the optimal topology of neural networks (NNs). The model utilised eight input parameters throughout.

5.3 Performance Assessment

We identified and evaluated multiple performance indices to assess the built models’ performance in calculating the safety factor of the specified soil slope. We computed the following metrics using the corresponding mathematical formulas: the terms root mean square error (RMSE), performance index (PI), Nash–Sutcliffe efficiency (NS), mean absolute error (MAE), determination coefficient (R2) (Sabri et al. 2023), Willmott’s index of agreement(WI), and weighted mean absolute percentage error (WMAPE) (Ahmad et al. 2023) are some of those used in statistics.

where \({y}_{i}\) and \({\widetilde{y}}_{i}\) define actual and modelled ith value, \(n\) is the sample numbers, \({y}_{{\text{avg}}}\) is the mean of the actual values and the variance account factor (VAF). It is important to note that for a perfect model, these indices’ values should match their ideal (Kumar et al. 2019; Bardhan et al. 2021; Raja et al. 2021; Raja and Shukla 2021a, 2020, 2021b). Furthermore, selecting various indices allows for estimating the model’s performance regarding multiple factors, such as variance, accuracy, and the error between estimated and actual values.

Table 3 displays the prediction outcomes of the developed models. Table 3 exclusively presents information on the performance of the models created using training data. The RMSE and R2 figures demonstrate that the CNN maintained the expected accuracy throughout the training phase (RMSE = 0.00208 and R2 = 0.99992). Furthermore, the R2 value indicates that every model achieved greater than 90% accuracy, demonstrating an excellent fit to the gathered dataset. For the best models, CNN (RMSE = 0.00208), DNN (RMSE = 0.0079), ANN (RMSE = 0.0283), and MLR (RMSE = 0.0307), regressions of the measured and predicted FOS values are provided in Fig. 10 to illustrate the fit further.

After developing the models, a testing subset of the new dataset was used to evaluate the generalisation potential. We established the performance indicators to gauge model performance during the training phase for the testing subset. Table 4 displays the performance of the testing dataset, where it can be observed that the suggested CNN consistently achieved the highest precision (R2 = 0.9746 and RMSE = 0.0342). The ANN model was determined to be the least efficient, with R2 = 0.9430 and RMSE = 0.0558. Figure 11 shows scatterplots for the top three models, MLR (RMSE = 0.0387), CNN (RMSE = 0.0342), and DNN (RMSE = 0.0398), based on their RMSE values.

5.4 Accuracy Matrix

This section explores using accuracy matrices as a novel graphical method for analysing efficiency using a heat map. The matrices incorporate multiple statistical factors, enabling the estimation of predictive accuracy in testing and training datasets. During the training and testing phases, Fig. 10 displays the accuracy matrices of the models related to FOS. The accuracy matrix compares the performance factor accuracy outcomes to the ideal accuracy in the following ways:

where pe represents the acquired values for RMSE, MAE, RSR, and WMAPE; pa represents the obtained values for R2, WI, NSE, and PI; and it means the ideal value for R2, WI, NSE, and PI. We used Eq. (17) to evaluate the accuracy of the created models in terms of RMSE, MAE, RSR, and WMAPE indices. On the other hand, Eq. (18) assessed the predictive precision of the developed models in terms of R2, WI, NSE, and PI indices.

For instance, the RMSE value of the CNN model was determined to be 0.0017135; consequently, the accuracy of (1 − 0.0017135) \(\times\) 100 = 0.9983 \(\times\) 100 = 99.83% as depicted in Fig. 12a for the CNN model vs. the RMSE index. The R2 value of the CNN model was determined to be 0.99996, resulting in an accuracy of (0.99996/1) × 100 = 0.99996 × 100 = 100%, as depicted in Fig. 12a.

5.5 Reliability Analysis

The reliability index is a comparative indicator of the state of things and offers a qualitative structural performance assessment. Structures with a relatively high-reliability index are assumed to work well. In this section, we compute the reliability index independently for the measured and predicted neural network training and testing models. The reliability index must be greater than one for a safe and dependable situation. We use the first-order reliability (FORM) method to calculate the reliability index. The proposed model exhibits an extremely low failure probability, indicating a very high level of safety. We compare the reliability index values to the actual values and select the model with the slightest divergence between the actual and model values. In the training and testing phase, CNN performed the best, followed by DNN, ANN, and MLR. We thoroughly calculated the POF by estimating the high-speed heavy-haul freight corridor’s 12.29-m-high soil slope, as indicated in Annexure. Tables 5and 6 report the reliability index values of the actual and suggested models for training and testing, respectively. Figure 13 compares these values.

6 Summary and Conclusion

This research utilised convolutional neural networks (CNN), deep neural networks (DNN), artificial neural networks (ANN), and multiple linear regression (MLR) to examine the reliability analysis of railway embankments located on clay, focusing on a factor of safety criteria. These models were comprehensively evaluated, employing diverse fitness measures, resulting in enlightening comparisons. The reliability index demonstrates that all four models had reliable prediction skills. CNN had a notably higher level of performance in comparison to other fitness-based models when it came to predicting the safety factor of railway embankments. The strategy is a reliable soft computing method for accurately forecasting the slope factor of safety for railway embankments composed of clay.

Furthermore, this technique shows promise for applying to various slope scenarios in embankments. The built models demonstrated a high degree of generalizability, increasing their potential for practical use. Therefore, the existing models exhibit potential in forecasting the dependability index of embankment slope safety.

The proposed convolutional neural network (CNN) presents several benefits from a computational modelling perspective. These advantages encompass enhanced accuracy in predicting outcomes during training and testing stages, accelerated convergence, and enhanced ability to generalise to new data. However, a limitation of the suggested CNN model is the challenge of determining the search space for CNN parameters, which imposes restrictions on the movement of particles. The investigation of alternate sets of mechanisms of action (MOAs) can potentially improve the performance of convolutional neural networks (CNNs), given the study’s exclusive emphasis on supervised learning methods. In addition, a thorough probabilistic examination of soil slope should include pertinent factors such as pore-water pressure, the width of the embankment at its upper and lower sections, the angle of the side slope, and the height of the embankment. By adopting a more inclusive methodology, the slope reliability assessment can be conducted by utilising a well-established machine learning-based model. Moreover, the prospective utilisation of a hybrid machine learning paradigm in forthcoming research exhibits possibilities for additional progressions.

Data Availability

The author confirms that the data supporting the findings of this study are available from the corresponding author upon reasonable request from the reader.

References

Abdi, M.R., Sadrnejad, A., Arjomand, M.A.: Strength enhancement of clay by encapsulating geogrids in thin layers of sand. Geotext. Geomembranes. 27, 447–455 (2009)

Afrazi, M., Yazdani, M.: Determination of the effect of soil particle size distribution on the shear behavior of sand. J. Adv. Eng. Comput. 5, 125–134 (2021)

Ahmad, F., Samui, P., Mishra, S.S.: Probabilistic analysis of slope using Bishop method of slices with the help of subset simulation subsequently aided with hybrid machine learning paradigm. Indian Geotech. J. 1–21 (2023)

Assefa, E., Lin, L.J., Sachpazis, C.I., Feng, D.H., Shu, S.X., Xu, X.: Slope stability evaluation for the new railway embankment using stochastic finite element and finite difference methods. Electron. J. Geotech. Eng. 22, 51–79 (2017)

Baecher, G.B., Christian, J.T.: Reliability and statistics in geotechnical engineering, John Wiley & Sons (2005)

Bardhan, A., Manna, P., Kumar, V., Burman, A., Žlender, B., Samui, P.: Reliability analysis of piled raft foundation using a novel hybrid approach of ANN and equilibrium optimizer. Comput. Model. Eng. Sci. 128 (2021). https://doi.org/10.32604/cmes.2021.015885

Bardhan, A., Samui, P.: Probabilistic slope stability analysis of heavy-haul freight corridor using a hybrid machine learning paradigm. Transp. Geotech. 37, 100815 (2022). https://doi.org/10.1016/j.trgeo.2022.100815

Bergado, D.T., Sampaco, C.L., Shivashankar, R., Alfaro, M.C., Anderson, L.R., Balasubramaniam, A.S.: Performance of a welded wire wall with poor quality backfills on soft clay. In: Geotech. Eng. Congr., pp. 909–922 (1991)

Bishop, A.W.: The use of the slip circle in the stability analysis of slopes. Géotechnique. 5, 7–17 (1955). https://doi.org/10.1680/geot.1955.5.1.7

Cao, Z., Wang, Y., Li, D.: Probabilistic approaches for geotechnical site characterization and slope stability analysis, Springer (2017)

Chen, H.-T., Hung, W.-Y., Chang, C.-C., Chen, Y.-J., Lee, C.-J.: Centrifuge modeling test of a geotextile-reinforced wall with a very wet clayey backfill. Geotext. Geomembranes. 25, 346–359 (2007)

Cho, S.E.: Probabilistic stability analyses of slopes using the ANN-based response surface. Comput. Geotech. 36, 787–797 (2009)

Das, B.M., Khing, K.H., Shin, E.C.: Stabilization of weak clay with strong sand and geogrid at sand-clay interface. Transp. Res. Rec. 1611, 55–62 (1998)

Das, G., Burman, A., Bardhan, A., Kumar, S., Choudhary, S.S., Samui, P.: Risk estimation of soil slope stability problems. Arab. J. Geosci. 15, 1–16 (2022)

Démurger, S.: Infrastructure development and economic growth: an explanation for regional disparities in China? J. Comp. Econ. 29, 95–117 (2001)

Deng, J.: Structural reliability analysis for implicit performance function using radial basis function network. Int. J. Solids Struct. 43, 3255–3291 (2006)

Deng, J., Gu, D., Li, X., Yue, Z.Q.: Structural reliability analysis for implicit performance functions using artificial neural network. Struct. Saf. 27, 25–48 (2005)

Faure, Y.H., Baudoin, A., Pierson, P., Plé, O.: A contribution for predicting geotextile clogging during filtration of suspended solids. Geotext. Geomembranes. 24, 11–20 (2006). https://doi.org/10.1016/j.geotexmem.2005.07.002

Giroud, J.P.: From geotextiles to geosynthetics: a revolution in geotechnical engineering--3rd International Conference on Geotextiles (1986)

Golafshani, E.M., Behnood, A., Arashpour, M.: Predicting the compressive strength of normal and High-Performance Concretes using ANN and ANFIS hybridized with Grey Wolf Optimizer. Constr. Build. Mater. 232, 117266 (2020). https://doi.org/10.1016/j.conbuildmat.2019.117266

He, X., Xu, H., Sabetamal, H., Sheng, D.: Machine learning aided stochastic reliability analysis of spatially variable slopes. Comput. Geotech. 126, 103711 (2020)

Huang, Y., Chen, C., Su, D., Wu, S.: Comparison of leading-industrialisation and crossing-industrialisation economic growth patterns in the context of sustainable development: lessons from China and India. Sustain. Dev. 28, 1077–1085 (2020)

KabongoBooto, G., Run Vignisdottir, H., Marinelli, G., Brattebø, H., Bohne, R.A.: Optimizing road gradients regarding earthwork cost, fuel cost, and tank-to-wheel emissions. J. Transp. Eng. Part A Syst. 146, 4019079 (2020)

Kumar, V., Samui, P., Himanshu, N., Burman, A.: reliability-based slope stability analysis of Durgawati Earthen Dam considering steady and transient state seepage conditions using MARS and RVM. Indian Geotech. J. 49, 650–666 (2019). https://doi.org/10.1007/s40098-019-00373-7

Kumar, D.R., Samui, P., Burman, A., Wipulanusat, W., Keawsawasvong, S.: Liquefaction susceptibility using machine learning based on SPT data. Intell. Syst. with Appl. 20, 200281 (2023)

Le, L.T., Nguyen, H., Dou, J., Zhou, J.: A comparative study of PSO-ANN, GA-ANN, ICA-ANN, and ABC-ANN in estimating the heating load of buildings’ energy efficiency for smart city planning. Appl. Sci. 9, 2630 (2019)

Majedi, M.R., Afrazi, M., Fakhimi, A.: A micromechanical model for simulation of rock failure under high strain rate loading. Int. J. Civ. Eng. 19, 501–515 (2021)

Phoon, K.-K., Ching, J.: Risk and reliability in geotechnical engineering. CRC Press, Boca Raton (2015)

Pradhan, B., Chaudhari, A., Adinarayana, J., Buchroithner, M.F.: Soil erosion assessment and its correlation with landslide events using remote sensing data and GIS: a case study at Penang Island, Malaysia. Environ. Monit. Assess. 184, 715–727 (2012). https://doi.org/10.1007/s10661-011-1996-8

Raja, M.N.A., Shukla, S.K.: An extreme learning machine model for geosynthetic-reinforced sandy soil foundations. Proc. Inst. Civ. Eng. Eng. 1–21 (2020)

Raja, M.N.A., Shukla, S.K.: Multivariate adaptive regression splines model for reinforced soil foundations. Geosynth. Int. 1–23 (2021)

Raja, M.N.A., Shukla, S.K., Khan, M.U.A.: An intelligent approach for predicting the strength of geosynthetic-reinforced subgrade soil, Int. J. Pavement Eng. 0, 1–17 (2021). https://doi.org/10.1080/10298436.2021.1904237.

Raja, M.N.A., Shukla, S.K.: Predicting the settlement of geosynthetic-reinforced soil foundations using evolutionary artificial intelligence technique. Geotext. Geomembranes. (2021a). https://doi.org/10.1016/j.geotexmem.2021.04.007

RDSO/GE:IRS-0004.: Comprehensive guidelines and specifications for railway formation, research designs and standards organisation, Lucknow. (2020)

Reale, C., Xue, J., Pan, Z., Gavin, K.: Deterministic and probabilistic multi-modal analysis of slope stability. Comput. Geotech. 66, 172–179 (2015). https://doi.org/10.1016/j.compgeo.2015.01.017

Rezamand, A., Afrazi, M., Shahidikhah, M.: Study of convex corners’ effect on the displacements induced by soil-nailed excavations. J. Adv. Eng. Comput. 5, 277–290 (2021)

Sabri, M.S., Ahmad, F., Samui, P.: Slope stability analysis of heavy-haul freight corridor using novel machine learning approach. Model. Earth Syst. Environ. 1–19 (2023)

Safa, M., Sari, P.A., Shariati, M., Suhatril, M., Trung, N.T., Wakil, K., Khorami, M.: Development of neuro-fuzzy and neuro-bee predictive models for prediction of the safety factor of eco-protection slopes. Phys. A Stat. Mech. Its Appl. 550, 124046 (2020)

Shariati, M., Azar, S.M., Arjomand, M.-A., Tehrani, H.S., Daei, M., Safa, M.: Evaluating the impacts of using piles and geosynthetics in reducing the settlement of fine-grained soils under static load. Geomech. Eng. 20, 87–101 (2020a)

Shariati, M., Mafipour, M.S., Haido, J.H., Yousif, S.T., Toghroli, A., Trung, N.T., Shariati, A.: Identification of the most influencing parameters on the properties of corroded concrete beams using an Adaptive Neuro-Fuzzy Inference System (ANFIS). Steel Compos. Struct. 34, 155 (2020b)

Shariati, M., Davoodnabi, S.M., Toghroli, A., Kong, Z., Shariati, A.: Hybridization of metaheuristic algorithms with adaptive neuro-fuzzy inference system to predict load-slip behavior of angle shear connectors at elevated temperatures. Compos. Struct. 278, 114524 (2021)

Straub, S.: Infrastructure and growth in developing countries: recent advances and research challenges, World Bank Policy Res. Work. Pap. (2008)

Sushma, M.B., Roy, S., Maji, A.: Exploring and exploiting ant colony optimization algorithm for vertical highway alignment development. Comput. Civ. Infrastruct. Eng. (2022).

Zhang, W., Li, H., Tang, L., Gu, X., Wang, L., Wang, L.: Displacement prediction of Jiuxianping landslide using gated recurrent unit (GRU) networks. Acta Geotech. 1–16 (2022)

Zhao, H.: Slope reliability analysis using a support vector machine. Comput. Geotech. 35, 459–467 (2008)

Zhu, W., Rad, H.N., Hasanipanah, M.: A chaos recurrent ANFIS optimized by PSO to predict ground vibration generated in rock blasting. Appl. Soft Comput. 108, 107434 (2021)

Author information

Authors and Affiliations

Contributions

FA: research methodology, resources, software, validation, visualisation, original draft, review, and editing writing; PS: guidance. SSM: guidance.

Corresponding authors

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Annexure

Annexure

Calculating the reliability index (β) and the failure probability (POF).

-

1.1.

Determination of β and POF

Steps: The following steps can be followed:

-

a)

Generation of random values of \(c\) 1, ɸ 1, \(\gamma\) 1, \(c\) 2, ɸ 2, \(\gamma\) 2, PR, and TC

-

b)

Calculation of FOS using CNN, DNN, ANN, and MLR.

-

c)

Calculate \({\mu }_{{\text{FOS}}}\) and \({\sigma }_{{\text{FOS}}}\).

-

d)

Calculation of \(\beta\) and POF as per Eq. (2) and Eq. (5), respectively.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ahmad, F., Samui, P. & Mishra, S.S. Probabilistic Slope Stability Analysis on a Heavy-Duty Freight Corridor Using a Soft Computing Technique. Transp. Infrastruct. Geotech. 11, 2090–2113 (2024). https://doi.org/10.1007/s40515-023-00365-4

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40515-023-00365-4