Abstract

This article investigates the finite-time synchronization (FTS) of memristive neural networks (MNNs) with leakage and mixed delays by using state feedback and adaptive control techniques. The solutions of all the systems are obtained in the Filippov sense using the theories of differential inclusions and set-valued maps. To assure the synchronization of memristive neural networks, a few sufficient conditions are derived from the Filippov solution and the Lyapunov functional technique rather than from the finite-time stability theorem. In order to achieve synchronization within finite time, a discontinuous state feedback controller is constructed, and the settling time is determined explicitly. A novel adaptive controller is constructed to reduce the control gains. Numerical examples confirm the efficacy of the theoretical results.


1 Introduction

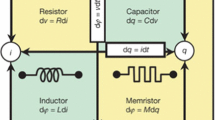

The last few decades have witnessed growing attention from researchers toward the study of neural networks, owing to their wide range of applications. Memristors have become useful devices in electronic appliances because of their non-volatile characteristics, i.e., their ability to preserve memory without power. A memristor is an electronic device that regulates the flow of electric current in a circuit and remembers the charge that has previously flowed through it. The term memristor is a contraction of “memory resistor”; the device was first postulated by Chua [1] in 1971. Chua demonstrated the relation between magnetic flux and electric charge and concluded that the memristor would be the fourth essential component of a circuit. However, because nanotechnology was still nascent at that time, its physical implementation was quite challenging. In addition, synapses are essential components of neural networks, since they may store information and execute computation in a variety of ways; to do so, they must remember how they have previously operated with respect to presynaptic input and postsynaptic actions. In 2008, Stanley Williams and his team developed the practical memristor [2]. The most intriguing feature of the memristor is that it remembers the direction in which electric charge has previously passed through it. Thus, the memristor may closely replicate the synaptic function in artificial neural networks (ANNs) [2].

The human brain is a highly complex, nonlinear, and self-organized system that effectively exploits complicated dynamics to carry out its “computations”. The only way to accurately imitate its extensive capability is to improve the hardware emulated. The most promising options to create bio-realistic neuromorphic systems are artificial neurons constructed with active memristors, nonlinear memory components with programmable resistance.

Since the memristor mimics the forgetting and remembering (memory) processes of the human brain, it is widely recognized as having potential uses in powerful brain-like computers and future computers. There are various biological reasons for including an inductance term in a neurological system. When inductance is added, the membrane develops electrical tuning and filtering behaviors. For instance, comparable circuits with an inductance can be used to model the membrane of a hair cell in the semicircular canals of some animals [3, 4].

The ordinary resistors that realize the self-feedback connection weights and the connection weights of primitive neural networks are replaced by memristors in order to emulate the neural networks of the human brain more accurately. Further, memristive neural networks (MNNs) are of particular significance in the study of human brain simulation. It is also observed that MNNs are more sensitive to the initial states than other neural networks, which leads to more complex chaotic trajectories for MNNs. Therefore, results on the synchronization of MNNs have been widely applied in science and engineering. In 2008, the Hewlett-Packard research team produced the first memristor prototype [5, 6]. The stability of a neural network is an essential condition for applications and has received considerable attention from researchers [7, 8]. However, neurons experience delays during signal transmission because of their finite processing speed, which may cause divergence, oscillation, and even instability of neural networks [9]. Therefore, time delay should be considered when examining the dynamics of MNNs. Under some conditions, there could be either a distribution of propagation delays over a period of time or a dispersion of conduction velocities along the pathways, which could lead to distributed delays in neural networks [10]. Some remarkable results on the stability analysis of delayed MNNs have been published in recent years [11,12,13].

In 2003, Li and Tian [14] developed a continuous state feedback controller to solve the synchronization problem between two chaotic systems with the help of a finite-time control method. Mei et al. [15] derived some effective criteria to analyze the FTS of complex neural networks (CNNs) with delayed and non-delayed coupling through an impulsive periodically intermittent control technique. Yan et al. [16] discussed finite-time synchronization using a decomposition method and explained some of the associated complications with the help of the Mittag-Leffler function and inequalities.

Time delay is a phenomenon in which the future state of a system depends on both its past and current states. It arises in various fields, including biological and economic systems. In neural networks, time delays occur in the dynamical behavior of the network and in the processing and storage of information. Consequently, it makes sense to take time delay into account when modeling dynamical networks [17].

Further, the dynamics of delayed MNNs have been examined by several researchers [18,19,20,21,22]. The FTS of neural networks (NNs) without a settling time was examined by Yang [23]. However, finite-time results without a settling time are of limited use to engineers in practical applications.

Cao et al. [24] discussed the synchronization problem for delayed memristive neural networks (MNNs) with mismatched parameters via event-triggered control. They used the matrix measure approach and a generalized Halanay inequality to derive criteria for quasi-synchronization. Wang et al. [25] considered the finite-time synchronization of MNNs with multi-directional associative memory and constructed nonlinear chaotic models for this purpose. Owing to its wide range of possible applications in information science and secure communication, synchronization is a critical dynamical characteristic of neural networks and is as important as the stability of the system [26,27,28]. Miaadi and Li [29] investigated fixed-time stabilization for uncertain impulsive neural networks with distributed delays and derived some new criteria to deal with the impulsive effect on fixed-time stabilization. Stability criteria for delayed MNNs have been examined by Li and Cao [30]. Further, event-triggered sampled control was used in [31] to stabilize MNNs with communication delays and achieve global asymptotic stability.

Generally, the switching parameters of an MNN are state-dependent and differ whenever the initial conditions of the systems differ. Therefore, MNNs cannot be synchronized using the typical robust analytical approaches or classical analytical procedures for robust synchronization of neural networks with mismatched unknown parameters [32, 33]. Consequently, many researchers are interested in overcoming the hurdle of mismatched parameters and achieving synchronization of MNNs, i.e., stability of the error system. It should also be emphasized that the majority of published results on the synchronization of delayed MNNs are asymptotic, and none of them takes into account the finite-time synchronization of MNNs with mixed and leakage delays and mismatched switching parameters. In general, designed controllers should be straightforward and should keep control costs low. Designing an appropriate controller to achieve finite-time synchronization of MNNs with mixed and leakage delays is a difficult task.

In the Cauchy problem \(\dot{x}(t) = g(x(t))\), \(\forall \,t\, \ge 0\), \(x(0) = x_{0}\), the measurability of the function g is not enough to guarantee the existence of solutions. This can be fixed by substituting the function g with its Filippov regularization G, since the differential inclusion \(\dot{x}(t) \in G(x(t))\) is always solvable. Set-valued maps are said to be Filippov representable if they can be obtained as the Filippov regularization of a single-valued measurable function. If the function g is continuous, then G is single-valued and identical to g. The Krasovskii and Filippov regularization approaches were introduced to obtain solutions of discontinuous differential equations.

The above discussion has motivated our work to examine the finite-time synchronization of memristive neural networks with mixed and leakage delays along with mismatched switching parameters. The main contributions of this paper are as follows:

(a) Derivation of sufficient conditions to assure the synchronization of MNNs with the help of the Filippov solution and the Lyapunov functional technique, without utilizing the finite-time stability theorem.

(b) Design of a discontinuous state feedback controller to guarantee the synchronization of MNNs within a finite settling time, and consideration of an adaptive control technique to ensure FTS of master–slave MNNs with lower control gains.

(c) Explicit estimation of the settling time.

Finally, based on the addressed elaborations, the finite-time synchronization of memristive neural networks with mixed and leakage delays via state feedback controller and adaptive controller has been achieved. The mixed and leakage delays make the manuscript more complex and comprehensive than previous works. To the best of the authors’ knowledge, this work has not been done before.

The rest of the manuscript is organized as follows: some basic concepts, viz. finite-time control technique, synchronization error system and model description, have been incorporated in Sect. 2. The required definitions and conventions are also provided in Sect. 2. The finite-time synchronization of MNNs with mixed and leakage delays is detailed in Sect. 3. The theoretical outcomes are authenticated with numerical simulations in Sect. 4. A brief conclusion has been incorporated into Sect. 5.

2 Preliminaries

In this article, the solutions of the MNNs are considered in Filippov’s sense. Throughout the paper, \(R\) represents the set of real numbers, \(R^{n}\) stands for the real vector space of dimension n, and \(R^{n \times m}\) stands for the collection of \(n \times m\) matrices; \(D^{ + } ( \cdot )\) denotes the upper right Dini derivative. \(\overline{co} [\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{\Theta } ,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{\Theta } ]\) denotes the closure of the convex hull generated by \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{\Theta }\) and \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{\Theta }\), where \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{\Theta } ,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{\Theta } \in R\). \(A^{T}\) represents the transpose of the matrix A, and \(\left\| \cdot \right\|_{1}\) denotes the standard 1-norm of a vector or a matrix. Let \(C_{\gamma } = C([ - \gamma ,\,0],\,R^{n} )\) be the Banach space of continuous functions \(\hbar :[ - \gamma ,\,0] \to R^{n}\) with norm \(\left\| \hbar \right\| = \sup_{s \in [ - \gamma ,\,0]} \left\| {\hbar (s)} \right\|\), where \(\gamma = \max \left\{ {\delta ,\,\tau ,\,\sigma } \right\}\).

We first present some basic concepts of set-valued analysis and functional differential inclusions. Consider the Lebesgue measurable space \(\left( {[0,\omega ],L} \right)\) and the n-dimensional real Euclidean space \(\left( {R^{n} ,\,\left\| \cdot \right\|} \right)\), \(n > 1\), with the induced Euclidean norm \(\left\| \cdot \right\|\). For \(Z \subseteq R^{n}\), we introduce the following notations:

For convenience, we sometimes denote \(2^{Z} = \wp_{0} (Z)\). For given \(C \in \wp_{g} (Z)\) and \(z \in Z\), the distance function is defined as \({\text{dist}}(z,C) = \inf \left\{ {\left\| {z - c} \right\|:c \in C} \right\}\). The Hausdorff metric on \(\wp_{g} (Z)\) is defined as [34]

\[d_{H} (C,D) = \max \left\{ {\beta (C,D),\,\beta (D,C)} \right\},\]

where \(\beta (C,D) = \sup \left\{ {{\text{dist}}(x,D):x \in C} \right\}\) and \(\beta (D,C) = \sup \left\{ {{\text{dist}}(y,C):y \in D} \right\}\).
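For finite point sets, the distance function and the Hausdorff metric can be evaluated directly. The following is a minimal sketch, assuming the standard definition \(d_{H} (C,D) = \max \{ \beta (C,D),\,\beta (D,C)\}\) and the Euclidean norm; the function names `dist`, `beta` and `hausdorff` and the sample sets are illustrative choices, not part of the original text.

```python
import numpy as np

def dist(z, C):
    """dist(z, C) = inf{ ||z - c|| : c in C } for a finite set C (one point per row)."""
    C = np.atleast_2d(np.asarray(C, dtype=float))
    z = np.asarray(z, dtype=float)
    return float(np.min(np.linalg.norm(C - z, axis=1)))

def beta(C, D):
    """One-sided excess beta(C, D) = sup{ dist(x, D) : x in C }."""
    C = np.atleast_2d(np.asarray(C, dtype=float))
    return max(dist(x, D) for x in C)

def hausdorff(C, D):
    """Hausdorff distance d_H(C, D) = max{ beta(C, D), beta(D, C) }."""
    return max(beta(C, D), beta(D, C))

# Illustrative sets in R^2 (assumed data): D is a subset of C.
C = [[0.0, 0.0], [1.0, 0.0]]
D = [[0.0, 0.0]]
print(beta(C, D), beta(D, C), hausdorff(C, D))
```

The example shows that \(\beta\) is not symmetric: every point of D lies in C, so \(\beta (D,C)\) vanishes, while the point of C outside D makes \(\beta (C,D)\) positive. Taking the maximum of the two excesses restores symmetry.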

It is known that \(\left( {\wp_{g} (Z),d_{H} } \right)\) is a complete metric space and that \(\wp_{g(c)} (Z)\) is a closed subset of it. Suppose \(Z \subseteq R^{n}\). If there exists a non-empty set \(G(z) \subset R^{n}\) for each \(z \in Z\), then the map \(z \mapsto G(z)\) is said to be a set-valued map \(Z\)↪\(R^{n}\). A set-valued map G with non-empty values is said to be upper semi-continuous (USC) at \(z_{0} \in Z\) if \(\beta (G(z),\,G(z_{0} )) \to 0\) as \(z \to z_{0}\). \(G(z)\) is said to have a closed (compact, convex) image if, for each \(z \in Z\), G(z) is closed (compact, convex). A set-valued map \(G:\,[0,\,\omega ] \to \wp_{g} (R^{n} )\) is said to be measurable if, for all \(z \in R^{n}\), the non-negative real-valued function \(t \mapsto {\text{dist}}(z,G(t))\) is measurable; in this case, \({\text{Graph}}\;(G) = \left\{ {(t,\upsilon ) \in [0,\omega ] \times R^{n} :\upsilon \in G(t)} \right\} \in L \times B(R^{n} )\), where \(L\) stands for the Lebesgue σ-field of \([0,\,\omega ]\) and \(B(R^{n} )\) represents the Borel σ-field of \(R^{n}\).

3 Model description

Consider a class of MNNs with mixed and leakage delay defined as

where \(\eta_{p} (t) \in R\) is the state of the \(p{\text{th}}\) neuron and \(f_{q} (\, \cdot )\) is the activation function. The time delays \(\delta_{p} (t)\), \(\tau_{q} (t)\) and \(\sigma_{q} (t)\) represent the leakage delay, the time-varying delay and the distributed delay, respectively, and satisfy \(0 \le \delta_{p} (t) \le \delta\), \(\dot{\delta }_{p} (t) \le h_{\delta }\), \(0 \le \tau_{p} (t) \le \tau\), \(\dot{\tau }_{p} (t) \le h_{\tau }\), \(0 \le \sigma_{p} (t) \le \sigma\), \(\dot{\sigma }_{p} (t) \le h_{\sigma }\), where \(\delta ,\,h_{\delta } ,\,\tau ,\,h_{\tau } ,\,\sigma\) and \(h_{\sigma }\) are positive constants. \(J_{p}\) is the external input, and the synaptic connection weights of the memristive neural network can be expressed as:

where \(a_{p} > 0,\,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{b}_{pq} ,\,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{b}_{pq} ,\,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{c}_{pq} ,\,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{c}_{pq} ,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{d}_{pq}\) and \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{d}_{pq}\) are constants such that \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{b}_{pq} \ne \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{b}_{pq} ,\,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{c}_{pq} \ne \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{c}_{pq}\) and \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{d}_{pq} \ne \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{d}_{pq}\), \(p,\,q = 1,\,2,\,...,n\). The initial condition for network (1) is given as

\(\eta_{p} (s) = \varphi_{p} (s),\,\,s \in \left[ { - \gamma ,\,0} \right],\,\,p = 1,\,2,\,...,n\), where \(\varphi_{p} \in C\left( {[ - \gamma ,\,0],\,R} \right)\), \(\gamma = \max \left\{ {\delta ,\,\tau ,\,\sigma } \right\}\) and \(0 < \delta_{p} (t) \le \delta\), \(0 < \tau_{p} (t) \le \tau\), \(0 < \sigma_{p} (t) \le \sigma\), \(p = 1,\,2,\,...,n\).

Now, consider network (1) as the drive system; the corresponding controlled response system can be expressed as

Here, \(u_{p} (t)\) is the control input, and the initial condition of network (2) is given as

\(\xi_{p} (s) = \psi_{p} (s),\,\,s \in [ - \gamma ,\,0],\,\,p = 1,\,2,\,...,n\), where \(\psi_{p} \in C\left( {[ - \gamma ,\,0],\,R} \right)\), \(\gamma = \max \left\{ {\delta ,\,\tau ,\,\sigma } \right\}\) and \(0 < \delta_{p} (t) \le \delta\), \(0 < \tau_{p} (t) \le \tau\), \(0 < \sigma_{p} (t) \le \sigma\), \(p = 1,\,2,\,...,n\).

A memristive neural network is a state-dependent nonlinear family of systems that exhibits nonlinear behavior, coexisting solutions, jumping solutions and transient chaos. Consequently, systems (1) and (2) are discontinuous, and the existence of a solution cannot be guaranteed in the traditional manner. Thus, the theories of differential inclusions and set-valued maps, along with the Filippov framework, are used to transform systems (1) and (2) into traditional neural networks.

Definition 1 (Filippov regularization [35])

The Filippov regularization of a measurable function \(g\) at \(\eta \in R^{n}\), where \(g\) is allowed to be discontinuous, can be expressed as:

\[G(\eta ) = \bigcap\limits_{\lambda > 0} {\bigcap\limits_{\mu (N) = 0} {\overline{co} \left[ {g\left( {B(\eta ,\,\lambda )\backslash N} \right)} \right]} } ,\]

where \(B(\eta ,\,\lambda ) = \{ z:\left\| {z - \eta } \right\| \le \lambda \}\) and \(\mu\) is the Lebesgue measure. Suppose \(\overline{a} = \max \left( {a_{1} ,a_{2} ,a_{3} , \ldots ,a_{n} } \right)\), \(\tilde{b}_{pq} = \min \left\{ {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{b}_{pq} ,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{b}_{pq} } \right\}\), \(\overline{b}_{pq} = \max \left\{ {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{b}_{pq} ,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{b}_{pq} } \right\}\), \(\tilde{c}_{pq} = \min \left\{ {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{c}_{pq} ,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{c}_{pq} } \right\}\), \(\overline{c}_{pq} = \max \left\{ {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{c}_{pq} ,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{c}_{pq} } \right\}\), \(\tilde{d}_{pq} = \min \left\{ {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{d}_{pq} ,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{d}_{pq} } \right\}\) and \(\overline{d}_{pq} = \max \left\{ {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{d}_{pq} ,\,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{d}_{pq} } \right\}\).

The idea behind the Filippov regularization is that the relaxed dynamics should not depend on the values of the function on sets of measure zero. The theories of differential inclusions and Filippov regularization yield:

The measurable selection theorem [36, 37] implies that there exist measurable functions \(\Gamma_{pq}^{{\eta_{01} }} \in \overline{co} \left[ {\tilde{b}_{pq} ,\overline{b}_{pq} } \right]\), \(\Gamma_{pq}^{{\eta_{02} }} \in \overline{co} \left[ {\tilde{c}_{pq} ,\overline{c}_{pq} } \right]\), \(\Gamma_{pq}^{{\eta_{03} }} \in \overline{co} \left[ {\tilde{d}_{pq} ,\overline{d}_{pq} } \right]\) as

Similarly, for network (2) there exist measurable functions \(\Gamma_{pq}^{{\xi_{01} }} \in \overline{co} \,[\tilde{b}_{pq} ,\overline{b}_{pq} ]\),\(\Gamma_{pq}^{{\xi_{02} }} \in \overline{co} [\tilde{c}_{pq} ,\overline{c}_{pq} ]\), \(\Gamma_{pq}^{{\xi_{03} }} \in \overline{co} \,[\tilde{d}_{pq} ,\,\overline{d}_{pq} ]\) such that:

Now, the synaptic weights of the MNNs (1) and (2) have been converted into state-independent switching parameters with the help of the Filippov regularization [35] and the measurable selection theorem [36, 37]. Bounded and Lipschitz continuous feedback functions are commonly used for MNNs in electronic applications.
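The effect of the Filippov regularization on a switching weight can be illustrated numerically. The sketch below is only a heuristic: it assumes a hypothetical scalar weight that takes one value when the state magnitude is at most a threshold and another value otherwise (the threshold `THRESHOLD` and the values `B_HAT`, `B_CHECK` are invented for illustration), and it approximates the closed convex hull of the weight over a small ball by the interval between the sampled minimum and maximum.

```python
import numpy as np

# Hypothetical switching rule (an assumption, not taken from the text):
# the weight equals B_HAT for |x| <= THRESHOLD and B_CHECK otherwise.
B_HAT, B_CHECK, THRESHOLD = 0.8, -0.5, 1.0

def weight(x):
    """State-dependent (discontinuous) memristive weight."""
    return B_HAT if abs(x) <= THRESHOLD else B_CHECK

def filippov_interval(g, eta, radius=1e-6, samples=2001):
    """Approximate the Filippov set of a scalar function g at eta.

    For a scalar function the closed convex hull of g over the ball
    B(eta, radius) is an interval, estimated here by sampling.
    """
    z = np.linspace(eta - radius, eta + radius, samples)
    vals = [g(zi) for zi in z]
    return min(vals), max(vals)

# Away from the switching point the set collapses to a single value;
# at the switching point it becomes the whole interval [min, max].
print(filippov_interval(weight, 0.0))
print(filippov_interval(weight, THRESHOLD))
```

At the switching point the estimated set is the interval spanned by the two weight values, which is the kind of set \(\overline{co} [\tilde{b}_{pq} ,\overline{b}_{pq} ]\) from which the measurable selections are drawn.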

Consider a vector function \(f \in C(R^{n} ,\,R^{n} ),\,\) where \(f(\eta ) = (f_{1} (\eta_{1} ),f_{2} (\eta_{2} ),f_{3} (\eta_{3} ), \ldots ,f_{n} (\eta_{n} ))\) and \(\eta = (\eta_{1} ,\,\,\eta_{2} ,\,...,\eta_{n} )\).

(A1): The bounded activation function can be considered as:

where \(P_{p} ,\;\;\;p = 1,2,...,n\) are the saturation constants.

(A2): The Lipschitz-type activation function can be considered as

\(\wp : = \Big\{ g( \cdot ):g_{p} \in C(R,R),\exists l_{p} > 0,\big| {g_{p} (\eta_{p} ) - g_{p} (\xi_{p} )} \big| \le l_{p} \big| {\eta_{p} - \xi_{p} } \big|,\;\;\;\forall \eta_{p} ,\xi_{p} \in R,\;\;\;p = 1,2,...,n \Big\}\), where \(l_{p} ,\;\;p = 1,2,...,n\) are the Lipschitz constants.
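Both assumptions can be checked numerically for a candidate activation function. The following sketch assumes that (A1) means \(\left| {f_{p} (x)} \right| \le P_{p}\) and tests the two conditions on a finite grid only, so it can refute but not prove them; the helper name `check_activation`, the grid and the tolerance are illustrative choices.

```python
import numpy as np

def check_activation(f, bound, lipschitz, lo=-5.0, hi=5.0, n=401):
    """Grid check of boundedness |f(x)| <= bound and of the Lipschitz
    condition |f(x) - f(y)| <= lipschitz * |x - y| for all grid pairs."""
    x = np.linspace(lo, hi, n)
    fx = f(x)
    bounded = bool(np.all(np.abs(fx) <= bound))
    dx = np.abs(x[:, None] - x[None, :])    # pairwise |x - y|
    df = np.abs(fx[:, None] - fx[None, :])  # pairwise |f(x) - f(y)|
    lipschitz_ok = bool(np.all(df <= lipschitz * dx + 1e-12))
    return bounded, lipschitz_ok

# tanh is bounded by 1 and Lipschitz with constant 1.
print(check_activation(np.tanh, bound=1.0, lipschitz=1.0))
# Scaling by 2 violates both conditions for the same constants.
print(check_activation(lambda x: 2.0 * np.tanh(x), bound=1.0, lipschitz=1.0))
```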

4 Finite-time synchronization

In our context, synchronization means convergence of the states of the response system to those of the drive system. Generally, two kinds of synchronization occur: infinite-time synchronization, such as asymptotic or exponential synchronization, and finite-time synchronization. In contrast to asymptotic or exponential synchronization, finite-time synchronization requires the trajectories of the drive and response systems to coincide exactly after a finite amount of time, known as the “settling time”.
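The difference between the two notions can be seen on a toy scalar error system, which is far simpler than the error system of networks (1) and (2). The sketch below, under these simplifying assumptions, compares the discontinuous feedback \(\dot{e} = - k\,{\text{sgn}} (e)\), whose solution reaches zero at time \(\left| {e(0)} \right|/k\), with the linear feedback \(\dot{e} = - ke\), whose solution decays exponentially but never vanishes. The gain `k`, the step size and the clipping rule used to avoid numerical chattering are illustrative choices.

```python
import math

def simulate(e0, k, dt=1e-3, t_end=2.0):
    """Forward-Euler comparison of finite-time and exponential convergence."""
    n = int(round(t_end / dt))
    e_ft, e_exp = e0, e0
    t_settle = None
    for i in range(n):
        # Discontinuous feedback de/dt = -k*sgn(e); clip to zero once the
        # error is within one Euler step to avoid chattering around zero.
        if abs(e_ft) <= k * dt:
            e_ft = 0.0
        else:
            e_ft -= k * dt * math.copysign(1.0, e_ft)
        if e_ft == 0.0 and t_settle is None:
            t_settle = (i + 1) * dt
        # Linear feedback de/dt = -k*e (exponential convergence).
        e_exp -= k * dt * e_exp
    return t_settle, e_ft, e_exp

t_settle, e_ft, e_exp = simulate(e0=1.0, k=2.0)
print(t_settle, e_ft, e_exp)
```

In this toy setting the numerical settling time agrees with \(\left| {e(0)} \right|/k\) up to the step size, while the exponentially converging error is still positive at the end of the simulation.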

Here, we have constructed two different kinds of controllers in such a way that the drive and response networks are synchronized in finite time. Firstly, a state feedback controller has been used to handle the finite-time synchronization problem, and then, an adaptive controller has been constructed to moderate the control gains. Meanwhile, a few mathematical arguments have been derived that yield certain adequate conditions for the synchronization between memristive neural networks (1) and (2) in finite time.

Definition 2

The network (2) is said to be finite-time synchronized with network (1) if, under a suitable controller \(u_{p} (t),\,p = 1,\,2,...,n\), there exists a time instant \(t^{ * }\) (depending on the initial conditions) such that \(\mathop {\lim }\limits_{{t \to t^{*} }} \left\| {\xi (t) - \eta (t)} \right\|_{1} = 0\) and \(\left\| {\xi (t) - \eta (t)} \right\|_{1} \equiv 0\) for \(t > t^{ * }\), where \(\eta (t) = (\eta_{1} (t),\eta_{2} (t),...,\eta_{n} (t))^{T}\) and \(\xi (t) = (\xi_{1} (t),\xi_{2} (t),...,\xi_{n} (t))^{T}\).

Defining the error \(e_{p} (t) = \xi_{p} (t) - \eta_{p} (t)\), \(p = 1,2,...,n\), yields

where \(g_{q} (e_{q} ( \cdot )) = f_{q} (\xi_{q} ( \cdot )) - f_{q} (\eta_{q} ( \cdot )),\,\,q = 1,2,...,n.\)

The networks (1) and (2) are highly sensitive to the initial conditions due to which \(\Gamma_{pq}^{{\xi_{01} }} = \Gamma_{pq}^{{\eta_{01} }}\), \(\Gamma_{pq}^{{\xi_{02} }} = \Gamma_{pq}^{{\eta_{02} }}\) and \(\Gamma_{pq}^{{\xi_{03} }} = \Gamma_{pq}^{{\eta_{03} }}\) need not be true. Thus, we have to construct an appropriate controller to overcome the hurdle of mismatched parameters. Now, define a controller function such as

Theorem 1

Suppose that conditions (A1) and (A2) hold. Then the controlled network (2) can be synchronized with network (1) in finite time under controller (6) if the control gains \(r_{p}\) and \(\kappa_{p}\) satisfy the following conditions:

and

where \(\varsigma\) is a positive constant, \(\left| {\overline{b}_{pq} } \right| = \left| {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{b}_{pq} - \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{b}_{pq} } \right|\), \(\left| {\overline{c}_{pq} } \right| = \left| {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{c}_{pq} - \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{c}_{pq} } \right|\), \(\left| {\overline{d}_{pq} } \right| = \left| {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{d}_{pq} - \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{d}_{pq} } \right|\) and the settling time can be estimated as

where \(\dot{\delta }_{p} (t) \le h_{\delta }\), \(\dot{\tau }_{p} (t) \le h_{\tau }\), \(\dot{\sigma }_{p} (t) \le h_{\sigma }\), \(\gamma = \max \left\{ {\delta ,\,\tau ,\,\sigma } \right\}\), \(\delta_{p} (t) \le \delta\), \(\tau_{p} (t) \le \tau\), \(\sigma_{p} (t) \le \sigma\), and

Proof

Let us consider a Lyapunov functional candidate as:

\[V(t) = v_{1} (t) + v_{2} (t) + v_{3} (t) + v_{4} (t),\]

where \(v_{1} (t) = \sum\limits_{p = 1}^{n} {\left| {e_{p} (t)} \right|}\), \(v_{2} (t) = \sum\limits_{p = 1}^{n} {\frac{{a_{p} }}{{1 - h_{\delta } }}\int\limits_{{t - \delta_{p} (t)}}^{t} {\left| {e_{p} (s)} \right|} \,ds}\), \(v_{3} (t) = \sum\limits_{p = 1}^{n} {\sum\limits_{q = 1}^{n} {\frac{{\overline{c}_{pq} }}{{1 - h_{\tau } }}} } \int\limits_{{t - \tau_{q} (t)}}^{t} {\left| {g_{q} (e_{q} (s))} \right|} \,ds\), \(v_{4} (t) = \sum\limits_{p = 1}^{n} {\sum\limits_{q = 1}^{n} {\frac{{\overline{d}_{pq} }}{{1 - h_{\sigma } }}\int\limits_{{ - \sigma_{q} (t)}}^{0} {\int\limits_{t + \theta }^{t} {\left| {g_{q} (e_{q} (s))} \right|} } } \,ds\,d\theta }\) and \(\dot{\delta }_{p} (t) \le h_{\delta }\), \(\dot{\tau }_{p} (t) \le h_{\tau }\), \(\dot{\sigma }_{p} (t) \le h_{\sigma }\).

The “signum function” is defined as

\[{\text{sgn}} (x) = \begin{cases} 1, & x > 0, \\ 0, & x = 0, \\ - 1, & x < 0. \end{cases}\]

The upper right Dini derivative of Lyapunov functional \(V\left( t \right)\) can be expressed as

Now the upper right Dini derivative of \(v_{1} \left( t \right)\) can be expressed as:

Assumption (A2) yields:

Let us suppose

Assumption (A1) yields:

Combining the above inequalities with (11), one has

Let us define an indicator function as follows:

By combining (12) and (13), \(D^{ + } \left( {v_{1} \left( t \right)} \right)\) in terms of indicator function can be expressed as

\(\dot{v}_{2} \left( t \right)\) can be expressed as

\(\dot{v}_{3} \left( t \right)\) can be expressed as

\(\dot{v}_{4} \left( t \right)\) can be expressed as

Combining inequalities (13)–(17), we obtain

Let \(\omega_{p} = \frac{{\overline{a}_{p} }}{{1 - h_{\delta } }} + \sum\limits_{q = 1}^{n} {\left( {\overline{b}_{pq} + \frac{{\overline{c}_{pq} }}{{1 - h_{\tau } }} + \frac{{\overline{d}_{pq} \,\sigma }}{{1 - h_{\sigma } }}} \right)} \,l_{q}\). Applying condition (8), one can express \(D^{ + } \left( {V\left( t \right)} \right)\) as

If \(\left\| {e(t)} \right\|_{1} \ne 0\) and condition (7) holds, then the inequality (19) can be expressed as

From above inequality (20) and (9), there exists a non-negative constant \(V^{*}\) as follows:

The integration of Eq. (21) from \(0\;{\text{to}}\;\infty\) yields

Now, two possibilities may arise, either \(\left\| {e(t)} \right\|_{1} = 0\) or \(\left\| {e(t)} \right\|_{1} \ne 0\).

Case I when \(\left\| {e(t)} \right\|_{1} = \sum\limits_{p = 1}^{n} {\left| {e_{p} (t)} \right|} = 0\), \(\forall \,t \ge 0\), there is nothing to prove and the finite-time synchronization has been established between networks (1) and (2).

Case II when \(\left\| {e(t)} \right\|_{1} = \sum\limits_{p = 1}^{n} {\left| {e_{p} (t)} \right|} \ne 0\), there exists \(p_{0} \in \{ 1,\,2,\,...,\,n\}\) such that \(\left| {e_{{p_{0} }} (t)} \right| > 0\), \(\forall \,t \ge 0\), which implies that \(- \,\varsigma \sum\limits_{p = 1}^{n} {\mu_{p} } < 0\) and \(\mathop {\lim }\limits_{t \to \infty } V(t) = - \infty\).

This contradicts Eq. (21), so there exists \(t^{*} \in (0,\,\infty )\) such that

Now, we have to prove that \(\left\| {e(t)} \right\|_{1} = 0\), \(\forall \,t \ge t^{*}\). Firstly, we claim that \(\left\| {e(t^{*} )} \right\|_{1} = 0\). Since the norm \(\left\| \cdot \right\|_{1}\) is a non-negative continuous function, if \(\left\| {e(t^{*} )} \right\|_{1} \ne 0\), then there exists a constant \(\lambda > 0\) such that \(\left\| {e(t)} \right\|_{1} > 0\) for \(t \in [t^{*} ,\,t^{*} + \lambda ]\), which implies that there exists at least one \(p_{01} \in \{ 1,\,2,\,...,\,n\}\) such that \(\left| {e_{{p_{01} }} (t)} \right| > 0\), \(\forall \,t \in [t^{*} ,\,t^{*} + \lambda ]\). We can conclude from the previous analysis that the derivative of the Lyapunov functional is negative, i.e., \(\dot{V}(t) < 0\), \(\forall \,t \in [t^{*} ,\,t^{*} + \lambda ]\), which contradicts Eq. (21). Hence \(\left\| {e(t^{*} )} \right\|_{1} = 0\).

Now, it remains to prove that \(\left\| {e(t)} \right\|_{1} = 0\), \(\forall \,t > t^{*}\). Suppose, to the contrary, that \(\left\| {e(t_{1} )} \right\|_{1} \ne 0\) for some \(t_{1} > t^{*}\). Let us define \(t_{\alpha } = \sup \left\{ {t \in [t^{*} ,\,t_{1} ]:\,\left\| {e(t)} \right\|_{1} = 0} \right\}\); then there exists a \(t_{2} \in (t_{\alpha } ,\,t_{1} ]\) such that \(\left\| {e(t)} \right\|_{1}\) is monotonically increasing \(\forall \,t \in (t_{\alpha } ,\,t_{2} ]\). This implies that the Lyapunov functional is also monotonically increasing, i.e., \(\dot{V}(t) > 0\). On the other hand, since \(\left\| {e(t)} \right\|_{1} > 0\), \(\forall \,t \in (t_{\alpha } ,\,t_{2} ]\), there is some \(p_{02} \in \{ 1,\,2,\,...,\,n\}\) with \(\left| {e_{{p_{02} }} (t)} \right| > 0\), \(\forall \,t \in (t_{\alpha } ,\,t_{2} ]\). This again implies that \(\dot{V}(t) < 0\), \(\forall \,t \in (t_{\alpha } ,\,t_{2} ]\), which is a contradiction. Therefore, finite-time synchronization has been established between networks (1) and (2) through the controller (6), and condition (23) holds.

5 Settling time

For the settling time, we have to show that \(V^{*} = 0\). Suppose that \(V^{*} > 0\); then \(v_{k} (t^{*} ) > 0\) for some \(k \in \{ 1,\,2,\,3,\,4\}\). If \(v_{1} (t^{*} ) > 0\), then there exists \(p_{03} \in \{ 1,\,2,\,...,n\}\) such that \(\left| {e_{{p_{03} }} (t)} \right| > 0\) and \(\dot{V}(t) < 0\), which contradicts Eq. (21).

If \(v_{2} (t^{*} ) > 0\), i.e., \(\sum\limits_{p = 1}^{n} {\frac{{a_{p} }}{{1 - h_{\delta } }}\int\limits_{{t^{*} - \delta_{p} (t^{*} )}}^{{t^{*} }} {\left| {e_{p} (s)} \right|} \,{\text{d}}s} > 0\), there exist \(t_{4} \in [t^{*} - \delta ,\,t^{*} ]\) and a constant \(\beta_{1} > 0\) such that \(\left\| {e(t)} \right\|_{1} > 0\), \(\forall \,t \in [t_{4} - \beta_{1} ,\,t_{4} + \beta_{1} ]\), which contradicts Eq. (21); hence \(v_{2} (t^{*} ) = 0\).

If \(v_{3} (t^{*} ) > 0\), i.e., \(\sum\limits_{p = 1}^{n} {\sum\limits_{q = 1}^{n} {\frac{{\overline{c}_{pq} }}{{1 - h_{\tau } }}} } \int\limits_{{t^{*} - \tau_{q} (t^{*} )}}^{{t^{*} }} {\left| {g_{q} (e_{q} (s))} \right|} \,{\text{d}}s > 0\), there exist \(t_{5} \in [t^{*} - \tau ,\,t^{*} ]\) and a constant \(\beta_{2} > 0\) such that \(\left\| {g(e(t))} \right\|_{1} > 0\), \(\forall \,t \in [t_{5} - \beta_{2} ,\,t_{5} + \beta_{2} ]\). Now assumption (A1) yields \(\left\| {e(t)} \right\|_{1} > 0\), \(\forall \,t \in [t_{5} - \beta_{2} ,\,t_{5} + \beta_{2} ]\), which contradicts Eq. (21); hence \(v_{3} (t^{*} ) = 0\).

If \(v_{4} (t^{*} ) > 0\), i.e., \(\sum\limits_{p = 1}^{n} {\sum\limits_{q = 1}^{n} {\frac{{\overline{d}_{pq} }}{{1 - h_{\sigma } }}\int\limits_{{ - \sigma_{q} (t^{*} )}}^{0} {\int\limits_{{t^{*} + \theta }}^{{t^{*} }} {\left| {g_{q} (e_{q} (s))} \right|} } } \,{\text{d}}s\,{\text{d}}\theta } > 0\), then applying assumption (A1) to this inequality shows that there exist \(t_{6} \in [t^{*} - \sigma ,\,t^{*} ]\) and a constant \(\beta_{3} > 0\) such that \(\left\| {e(t)} \right\|_{1} > 0\), \(\forall \,t \in [t_{6} - \beta_{3} ,\,t_{6} + \beta_{3} ]\). This violates Eq. (21). Hence, \(V^{*} = 0\).

From the above discussion, we can conclude that if \(\left\| {e(t^{*} - \gamma )} \right\|_{1} = 0\), then \(\left\| {e(t^{*} )} \right\|_{1} = 0\), where \(\gamma = \max \left\{ {\delta ,\,\tau ,\,\sigma } \right\}\).

In order to estimate the settling time, suppose that when \(\left\| {\,e(t)\,} \right\|_{1} \ne 0\), then

The integration of the above inequality (24) from 0 to \(\,t^{*} \,\) yields

Therefore, \(\left\| e(T) \right\|_{1} = 0\) and \(\left\| e(t) \right\|_{1} = 0\) for all \(t > T\), where \(T = t^{*} - \gamma\), \(T \le \frac{V(0)}{\varsigma} - \gamma\), and \(\gamma = \max\{\delta, \tau, \sigma\}\).
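As a hedged sketch of how this bound can arise, assume that inequality (24) takes the form \(D^{+} V(t) \le -\varsigma\) whenever \(\left\| e(t) \right\|_{1} \ne 0\). This form is an assumption inferred from the stated bound and is not reproduced from the original equation. Integrating from 0 to \(t^{*}\) and using \(V(t^{*}) = V^{*} = 0\) gives

\[
0 - V(0) = V(t^{*}) - V(0) \le -\varsigma\, t^{*}
\quad\Longrightarrow\quad
t^{*} \le \frac{V(0)}{\varsigma},
\qquad
T = t^{*} - \gamma \le \frac{V(0)}{\varsigma} - \gamma .
\]

Under this assumption, the bound decreases as \(\varsigma\) increases and grows with the initial value \(V(0)\) of the functional.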

Note 1 It can be observed that the controlled MNN (2) is synchronized with MNN (1) within the estimated settling time through a discontinuous state feedback controller. The settling time \(T = t^{*} - \gamma < t^{*}\) is more applicable and efficient from a practical perspective.

Note 2 It should be observed that the parameter \(\eta_{p}\) of the controller (6) is used to eliminate the parameter mismatch between the networks, and the length of the settling time can be scaled via the parameter \(\varsigma\) [see (25)]. We can therefore conclude that the larger the value of the scaling factor \(\varsigma\), the faster the synchronization (as shown in Fig. 2).

Generally, the adaptive control technique reduces the control gains through an adaptive law, as compared to state feedback control. Therefore, some criteria are suggested for constructing adaptive controllers that ensure the synchronization between MNNs (1) and (2). The adaptive controller is designed as follows:

Here, \(\mu_{p}\) is the indicator function defined in the previous theorem, \(\kappa_{p}\) and \(\delta_{p}\) are positive constants, and \({\text{sgn}}\left( e_{p}(t) \right)\) stands for the signum function for \(p = 1, 2, \ldots, n\).

Theorem 2

The synchronization between memristive neural networks (1) and (2) can be achieved through the adaptive controller (26) within a finite time, provided assumptions (A1) and (A2) hold.

Proof

Consider the following Lyapunov–Krasovskii functional:

where \(v_{1}(t) = \sum\nolimits_{p = 1}^{n} \left| e_{p}(t) \right| + \sum\nolimits_{p = 1}^{n} \left[ \frac{(r_{p}(t) - \zeta_{p})^{2}}{2\upsilon_{p}} + \frac{(\kappa_{p}(t) - {\mathchar'26\mkern-10mu\lambda}_{p})^{2}}{2\delta_{p}} \right]\), \(v_{2}(t) = \sum\nolimits_{p = 1}^{n} \frac{a_{p}}{1 - h_{\delta}} \int\nolimits_{t - \delta(t)}^{t} \left| e_{p}(s) \right| {\text{d}}s\), \(v_{3}(t) = \sum\nolimits_{p = 1}^{n} \sum\nolimits_{q = 1}^{n} \frac{\overline{c}_{pq}}{1 - h_{\tau}} \int\nolimits_{t - \tau(t)}^{t} \left| g_{q}(e_{q}(s)) \right| {\text{d}}s\) and \(v_{4}(t) = \sum\nolimits_{p = 1}^{n} \sum\nolimits_{q = 1}^{n} \frac{\overline{d}_{pq}}{1 - h_{\sigma}} \int\nolimits_{-\sigma}^{0} \int\nolimits_{t + \theta}^{t} \left| g_{q}(e_{q}(s)) \right| {\text{d}}s\,{\text{d}}\theta\).

Here, \({\mathchar'26\mkern-10mu\lambda}_{p}\) and \(\zeta_{p}\) for \(p = 1, 2, \ldots, n\) are unknown constants to be determined. After a few mathematical steps, the upper right Dini derivative of \(\overline{V}(t)\) can be given as

Let us suppose

and

Substituting the values of \(\zeta_{p}\) and \({\mathchar'26\mkern-10mu\lambda}_{p}\) in Eq. (28) yields:

The remainder of the proof is the same as that of Theorem 1.

Finite-time synchronization has many practical applications, and from our perspective the adaptive control technique is particularly useful and easy to apply. In short, a variety of synchronization techniques is available, and the choice among them can be made according to the requirements at hand.

Note 3 It is observed that the control gain has been reduced by the adaptive controller (26) as compared to controller (6). Theorems 1 and 2 have been derived without using any published theorems.

Note 4 The settling time of the adaptive controller depends on the initial values of the system parameters, the adaptive law parameters, and the synchronization error. However, the settling time cannot be estimated explicitly, since it is difficult to quantify the time interval over which the adaptive law parameters grow from their initial values to their theoretical values.

6 Numerical results and discussion

This section validates the theoretical results through numerical simulation. The numeric values of the parameters used in MNNs (1) and (2) are as follows: \(a_{1} = 3.5\), \(a_{2} = 5.1\), \(\delta_{1}(t) = \delta_{2}(t) = 0.2 - 0.1\cos(t)\), \(\tau_{1}(t) = \tau_{2}(t) = 0.5 - 0.1\sin(t)\), \(\sigma_{1}(t) = \sigma_{2}(t) = 0.5 - 0.1\sin(t)\), \(\delta = 0.2\), \(\tau = \sigma = 0.5\), \(\gamma = 0.5\), \(h_{\delta} = h_{\tau} = h_{\sigma} = 0.1\), \(J_{1} = \exp(-t) + 0.7\cos(t)\), \(J_{2} = 0.5\exp(-t) - 0.3\sin(t)\), \(f_{p}(x_{p}) = \tanh(x_{p})\), \(p = 1, 2\).

It can be noted that assumptions (A1) and (A2) are satisfied with \(P_{1} = P_{2} = l_{1} = l_{2} = 1\). The numerical simulations have been carried out via the forward Euler method using MATLAB.
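To illustrate how a forward Euler scheme handles a time-varying delay together with a discontinuous feedback term, the following is a minimal Python sketch. It is not the authors' MATLAB code and does not implement MNNs (1) and (2): it uses a hypothetical scalar drive-response pair with a single discrete delay, and the names `simulate_delayed_sync`, `b`, `r` and `kappa`, the gain values, and the nearest-grid-point lookup of the delayed state are all assumptions made for illustration. Only \(a_{1} = 3.5\), the delay \(0.5 - 0.1\sin(t)\), the input \(J_{1}\) and the activation \(\tanh\) are borrowed from the parameter list above.

```python
import math


def simulate_delayed_sync(t_end=5.0, dt=1e-3, a=3.5, b=1.0,
                          r=2.0, kappa=1.5, x0=-0.8, y0=-2.0):
    """Forward Euler for a toy scalar drive-response pair with delay.

    drive:    x'(t) = -a x(t) + b tanh(x(t - tau(t))) + J(t)
    response: y'(t) = -a y(t) + b tanh(y(t - tau(t))) + J(t) + u(t)
    control:  u(t)  = -r e(t) - kappa sgn(e(t)),  e = y - x
    All gains here are hypothetical, not the values of Example 1.
    """
    tau_max = 0.6                          # upper bound of 0.5 - 0.1 sin(t)
    n_hist = int(round(tau_max / dt))      # grid points covering the history
    n_steps = int(round(t_end / dt))
    # Index i corresponds to time (i - n_hist) * dt; constant initial history.
    x = [x0] * (n_hist + n_steps + 1)
    y = [y0] * (n_hist + n_steps + 1)
    abs_err = []
    for k in range(n_steps):
        i = n_hist + k
        t = k * dt
        # Delayed state read at the nearest grid point (an approximation).
        d = int(round((0.5 - 0.1 * math.sin(t)) / dt))
        xd, yd = x[i - d], y[i - d]
        e = y[i] - x[i]
        sgn = (e > 0) - (e < 0)            # signum with sgn(0) = 0
        u = -r * e - kappa * sgn           # discontinuous state feedback
        j_in = math.exp(-t) + 0.7 * math.cos(t)
        x[i + 1] = x[i] + dt * (-a * x[i] + b * math.tanh(xd) + j_in)
        y[i + 1] = y[i] + dt * (-a * y[i] + b * math.tanh(yd) + j_in + u)
        abs_err.append(abs(e))
    return abs_err


if __name__ == "__main__":
    err = simulate_delayed_sync()
    print("initial |e| =", err[0], " final |e| =", err[-1])
```

In a discretized simulation the signum term makes the error chatter around zero with an amplitude of roughly `kappa * dt`, so "synchronized" is best read as "within a small tolerance" rather than exactly zero.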

The phase portraits of the MNNs for different initial conditions are shown in Fig. 1, which shows that the nature of the trajectories is different for different initial conditions. Hence, Fig. 1 validates the sensitive dependence of the MNNs on initial conditions.

Example 1

Here, our goal is to validate the findings of Theorem 1. A few calculations yield the gains \(r_{1} = 2.2\), \(r_{2} = 4.15\) and \(\kappa_{1} = 2.7 + \varsigma\), \(\kappa_{2} = 2.2 + \varsigma\) for all \(\varsigma > 0\), so MNNs (1) and (2) can be synchronized in finite time using controller (6) for the initial conditions \(\eta(t) = (-0.8, 0.6)^{T}\) and \(\xi(t) = (-2.0, 2.2)^{T}\), respectively. The drive and response systems are presented in panels (a) and (b) of Fig. 1. The trajectory of the synchronization error \(\left\| e(t) \right\|_{1}\) under the controller (6) for different values of \(\varsigma\) is shown in Fig. 2, which validates the discussion of Note 2, i.e., the larger the value of \(\varsigma\), the faster the synchronization. If we choose \(\varsigma = 1.5\), then the estimated settling time is \(T = 2.8534\). It can be observed from Fig. 2 that the MNNs synchronize at time instant \(t = 2.21\), i.e., synchronization is achieved prior to the estimated settling time, which confirms the validity of the theoretical results.

Fig. 2 The phase portrait of synchronization error \(\left\| e(t) \right\|_{1}\) subjected to the controller (6) for \(\varsigma\) = 1.0 (blue) and \(\varsigma\) = 1.5 (red)
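The arithmetic behind the settling-time estimate of Example 1 can be checked with a short Python sketch of the bound \(T \le V(0)/\varsigma - \gamma\). The function names are hypothetical, and the value of \(V(0)\) is not reported in the text: it is backed out here from the reported \(T = 2.8534\) with \(\varsigma = 1.5\) and \(\gamma = 0.5\), treating the bound as an equality, which is an assumption.

```python
def settling_time_bound(v0, varsigma, gamma):
    """Upper bound T <= V(0)/varsigma - gamma on the settling time."""
    if varsigma <= 0:
        raise ValueError("varsigma must be positive")
    return v0 / varsigma - gamma


def implied_v0(t_bound, varsigma, gamma):
    """V(0) implied by a reported bound, assuming the bound is an equality."""
    return (t_bound + gamma) * varsigma


if __name__ == "__main__":
    # Reported in Example 1: T = 2.8534 for varsigma = 1.5, gamma = 0.5.
    v0 = implied_v0(2.8534, 1.5, 0.5)      # inferred, not stated in the text
    print("implied V(0):", v0)
    # Assumes V(0) does not depend on varsigma (not confirmed by the text).
    print("bound for varsigma = 1.0:", settling_time_bound(v0, 1.0, 0.5))
    print("observed 2.21 within bound:", 2.21 < settling_time_bound(v0, 1.5, 0.5))
```

Under these assumptions the implied value is \(V(0) \approx 5.03\), and the observed synchronization time \(t = 2.21\) lies below the estimate, in line with the discussion of Example 1.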

Example 2

This example is concerned with the adaptive control mechanism and validates Theorem 2. In order to synchronize the MNNs via the adaptive control technique, the numeric values of the parameters are taken as \(\upsilon = (0.1, 0.15)^{T}\) and \(\delta = (0.12, 0.1)^{T}\), along with the initial values \(r(t) = (0.3, 0.3)^{T}\) and \(\kappa(t) = (0.2, 0.2)^{T}\); the remaining parameters are the same as in Example 1. The trajectories of the synchronization error are depicted in Fig. 3.

Fig. 3 The phase portrait of synchronization error \(\left\| e(t) \right\|_{1}\) subjected to the adaptive controller (26)

The trajectories of the control gains \(r_{p}(t)\) and \(\kappa_{p}(t)\) for \(p = 1, 2\) are illustrated in Fig. 4. Note that the control gains obtained with the adaptive control technique are reduced, while the synchronization time is increased as compared to Fig. 2. This verifies the earlier discussion in Note 3.

Fig. 4 The trajectories of control gains of the adaptive controller (26): (a) \(r_{1}(t)\) and \(r_{2}(t)\); (b) \(\kappa_{1}(t)\) and \(\kappa_{2}(t)\)
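The qualitative behaviour of adaptive gains, starting small and growing only while the error is nonzero, can be reproduced with a minimal Python sketch on a hypothetical scalar error system. This is not controller (26), which is not reproduced here. The update laws \(\dot{r} = \upsilon \left| e \right|\) and \(\dot{\kappa} = \delta \mu\) are an assumed form chosen to be consistent with the quadratic gain terms in \(v_{1}(t)\). The mismatch term `w`, the dead-zone tolerance `tol` that replaces the exact test \(e \ne 0\), and the function name are illustrative assumptions as well. The values \(\upsilon_{1} = 0.1\), \(\delta_{1} = 0.12\), \(r_{1}(0) = 0.3\) and \(\kappa_{1}(0) = 0.2\) are taken from Example 2.

```python
import math


def simulate_adaptive(t_end=10.0, dt=1e-3, a=3.5, e0=-1.2,
                      r0=0.3, kappa0=0.2, upsilon=0.1, delta=0.12,
                      tol=5e-3):
    """Forward Euler for a toy error system e' = -a e + w(t) + u(t).

    control:    u = -r(t) e - kappa(t) sgn(e)
    adaptation: r' = upsilon |e|,  kappa' = delta * mu   (assumed laws)
    mu is 1 while |e| > tol and 0 otherwise (numerical dead zone).
    """
    n_steps = int(round(t_end / dt))
    e, r, kappa = e0, r0, kappa0
    history = []                           # rows of (t, |e|, r, kappa)
    for k in range(n_steps):
        t = k * dt
        w = 0.5 * math.sin(t)              # hypothetical bounded mismatch
        sgn = (e > 0) - (e < 0)            # signum with sgn(0) = 0
        mu = 1.0 if abs(e) > tol else 0.0  # indicator with dead zone
        u = -r * e - kappa * sgn
        e_next = e + dt * (-a * e + w + u)
        r += dt * upsilon * abs(e)         # gain grows with the error size
        kappa += dt * delta * mu           # gain grows only outside dead zone
        history.append((t, abs(e), r, kappa))
        e = e_next
    return history


if __name__ == "__main__":
    hist = simulate_adaptive()
    t, abs_e, r, kappa = hist[-1]
    print("final |e| =", abs_e, " r =", r, " kappa =", kappa)
```

In this toy setting \(\kappa(t)\) stops growing once it is large enough to dominate the mismatch, which mirrors the trade-off described above: smaller gains than a fixed worst-case choice, at the cost of a longer transient.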

7 Conclusion

This article deals with the finite-time synchronization of MNNs with mixed and leakage delays. Three important goals have been achieved. First, in order to achieve finite-time synchronization, a state feedback controller has been derived, and an adaptive controller has been constructed to minimize the control gains. Second, some sufficient conditions have been derived to assure the synchronization of MNNs with the help of the Filippov solution and the Lyapunov functional technique, without utilizing the finite-time stability theorem. Third, the settling time, which depends on the initial condition, has been estimated explicitly. Finally, the effectiveness of the proposed approach has been validated by numerical simulation: synchronization between the MNNs has been achieved prior to the estimated settling time. In our future work, we will study the impact of impulses on the stability and synchronization of MNNs with mixed and leakage delays, along with a settling time that is independent of the initial conditions.

Availability of data and materials

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Chua LO (1971) Memristor the missing circuit element. IEEE Trans Circuit Theory 18(5):507–519

Strukov DB, Snider GS, Stewart DR, Williams RS (2008) The missing memristor found. Nature 453(7191):80–83

Jagger DJ, Ashmore JF (1999) The fast activating potassium current, IK,f, in guinea-pig inner hair cells is regulated by protein kinase A. Neurosci Lett 263:145–148

Ospeck M, Eguiluz VM, Magnasco MO (2001) Evidence of a Hopf bifurcation in frog hair cells. Biophys J 80:2597–2607

Li B, Zhou W (2021) Exponential synchronization of memristive neural networks with discrete and distributed time-varying delays via event-triggered control. Discret Dyn Nat Soc 2021(5575849):1–15

Tour M, He T (2008) The fourth element. Nature 453(7191):42–43

Wen S, Zeng Z, Huang T (2012) Exponential stability analysis of memristor-based recurrent neural networks with time varying delays. Neurocomputing 97:233–240

Wang H, Duan S, Huang T, Li C, Wang L (2016) Novel stability criteria for impulsive memristive neural networks with time-varying delays. Circuits Syst Signal Process 35(11):3935–3956

Zhang F, Zeng Z (2018) Multistability and instability analysis of recurrent neural networks with time-varying delays. Neural Netw 97:116–126

Li H, Gao H, Shi P (2010) New passivity analysis for neural networks with discrete and distributed delays. IEEE Trans Neural Netw 21(11):1842–1847

Wu A, Zeng Z (2014) Lagrange stability of memristive neural networks with discrete and distributed delays. IEEE Trans Neural Netw Learn Syst 25(4):690–703

Zhang G, Zeng Z (2018) Exponential stability for a class of memristive neural networks with mixed time-varying delays. Appl Math Comput 321:544–554

Zhou Y, Li C, Chen L, Huang T (2018) Global exponential stability of memristive Cohen–Grossberg neural networks with mixed delays and impulse time window. Neurocomputing 275:2384–2391

Li S, Tian YP (2003) Finite-time synchronization of chaotic systems. Chaos Solitons Fractals 15(2):303–310

Mei J, Jiang M, Xu W, Wang B (2013) Finite-time synchronization control of complex dynamical networks with time delay. Commun Nonlinear Sci Numer Simul 18(9):2462–2478

Yan H, Qiao Y, Duan L, Miao J (2022) New inequalities to finite-time synchronization analysis of delayed fractional-order quaternion-valued neural networks. Neural Comput Appl 34(12):9919–9930

Cao J, Rakkiyappan R, Maheswari K, Chandrasekar A (2016) Exponential H∞ filtering analysis for discrete-time switched neural networks with random delays using sojourn probabilities. Sci China Technol Sci 59:387–402

Rakkiyappan R, Premalatha S, Chandrasekar A, Cao J (2016) Stability and synchronization analysis of inertial memristive neural networks with time delays. Cogn Neurodyn 10(5):437–451

Xiong X, Tang R, Yang X (2019) Finite-time synchronization of memristive neural networks with proportional delay. Neural Process Lett 50:1139–1152

Yang X, Ho DWC, Lu J, Song Q (2015) Finite-time cluster synchronization of T–S fuzzy complex networks with discontinuous subsystems and random coupling delays. IEEE Trans Fuzzy Syst 23(6):2302–2316

Wu H, Li R, Zhang X, Yao R (2015) Adaptive finite-time complete periodic synchronization of memristive neural networks with time delays. Neural Process Lett 42(3):563–583

Wu H, Zhang X, Li R, Yao R (2015) Finite-time synchronization of chaotic neural networks with mixed time-varying delays and stochastic disturbance. Memet Comput 7(3):231–240

Yang X (2014) Can neural networks with arbitrary delays be finite-timely synchronized. Neurocomputing 143(16):275–281

Cao J, Wang J (2005) Global asymptotic and robust stability of recurrent neural networks with time delays. IEEE Trans Circuits Syst I 52:417–426

Wang W, Jia X, Luo X, Kurths J, Yuan M (2019) Fixed-time synchronization control of memristive MAM neural networks with mixed delays and application in chaotic secure communication. Chaos Solitons Fractals 126:85–96

Lakshmanan S, Prakash M, Lim CP, Rakkiyappan R, Balasubramaniam P, Nahavandi S (2018) Synchronization of an inertial neural network with time-varying delays and its application to secure communication. IEEE Trans Neural Netw Learn Syst 29(1):195–207

Alimi AM, Aouiti C, Assali EA (2019) Finite-time and fixed-time synchronization of a class of inertial neural networks with multi-proportional delays and its application to secure communication. Neurocomputing 332:29–43

Kalpana M, Ratnavelu K, Balasubramaniam P, Kamali MZM (2018) Synchronization of chaotic-type delayed neural networks and its application. Nonlinear Dyn 93(2):543–555

Miaadi F, Li X (2021) Impulsive effect on fixed-time control for distributed delay uncertain static neural networks with leakage delay. Chaos Solitons Fractals 142:110389

Li R, Cao J (2016) Stability analysis of reaction-diffusion uncertain memristive neural networks with time-varying delays and leakage term. Appl Math Comput 278:54–69

Zhang R, Zeng D, Zhong S, Yu Y (2017) Event-triggered sampling control for stability and stabilization of memristive neural networks with communication delays. Appl Math Comput 310:57–74

Yan JJ, Hung ML, Chiang TY, Yang YS (2006) Robust synchronization of chaotic systems via adaptive sliding mode control. Phys Lett A 356(3):220–225

Liang J, Wang Z, Liu Y, Liu X (2008) Robust synchronization of an array of coupled stochastic discrete time delayed neural networks. IEEE Trans Neural Netw 19(11):1910–1921

Arscott FM (1988) Differential equations with discontinuous right hand sides. Kluwer, Dordrecht

Aubin JP, Cellina A (1986) Differential inclusions: set-valued maps and viability theory. Acta Appl Math 6(2):215–217

Bao H, Ju HP, Cao J (2015) Matrix measure strategies for exponential synchronization and anti-synchronization of memristor-based neural networks with time-varying delays. Appl Math Comput 270:543–556

Cai Z, Huang L, Wang D, Zhang L (2015) Periodic synchronization in delayed memristive neural networks based on Filippov systems. J Frankl Inst 352:4638–4663

Boonsatit N, Rajchakit G, Sriraman R, Lim CP, Agarwal P (2021) Finite-/fixed-time synchronization of delayed Clifford-valued recurrent neural networks. Adv Difference Equ 2021(1):1–25

Acknowledgements

The authors wish to thank Prof. Subir Das for his valuable suggestions on preparing the manuscript.

Funding

This work has not been supported by any funding agency.

Author information

Contributions

The authors confirm contribution to the paper as follows: The presented idea was conceived by VKS and AF. Drafting of the manuscript was performed by MCJ and PKM. Theory and computations were performed by VKS, AF and MCJ. Analysis and interpretation of results of this work were done by MCJ and PKM. All authors discussed the results and approved the final version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Shukla, V.K., Fekih, A., Joshi, M.C. et al. Study of finite-time synchronization between memristive neural networks with leakage and mixed delays. Int. J. Dynam. Control 12, 1541–1553 (2024). https://doi.org/10.1007/s40435-023-01252-z

DOI: https://doi.org/10.1007/s40435-023-01252-z