Abstract

This paper is concerned with the problem of stability and pinning synchronization of a class of inertial memristive neural networks with time delay. In contrast to general inertial neural networks, the synchronization and stability behaviors of inertial memristive neural networks are studied here by taking into account the physical properties of memristors and using differential inclusion theory. By choosing an appropriate variable transformation, the original system is transformed into first-order differential equations. Then, several sufficient conditions for the stability of inertial memristive neural networks are derived using the matrix measure and the Halanay inequality. The obtained criteria reduce the computational burden of the theoretical analysis. In addition, pinning synchronization of an array of linearly coupled inertial memristive neural networks is investigated, and a sufficient condition is derived using the matrix measure strategy. Finally, two numerical simulations are presented to show the effectiveness of the theoretical results.


Introduction

In 1971, Chua discovered the fourth basic element of circuit theory and named it the memristor (short for memory resistor). Chua showed mathematically that the memristor relates magnetic flux and electric charge (Chua 1971). Almost 40 years later, in May 2008, Stanley Williams and his group realized a practical memristor (Strukov et al. 2008). A rapid variation of the voltage at certain instants leads to an abrupt change of the memristance, similar to the switching behavior of a dynamical system. The most fascinating trait of the memristor is that it remembers the direction of past charge flow. It therefore behaves like the forgetting and remembering (memory) processes of the human brain, and it is widely regarded as having potential applications in next-generation computers and powerful brain-like computers. To better emulate the human brain with artificial neural networks, the conventional resistors that implement the self-feedback connection weights and the connection weights of the original neural networks are replaced by memristors.

Time delay is a common phenomenon in which the future state of a system depends not only on its present state but also on its past states. It is often encountered in fields such as engineering, biology and economics. In practice, time delays arise from the finite switching speed of amplifiers in electronic neural networks or from the finite signal propagation time in biological networks. In neural networks, the inherent delays that occur in information storage and transmission affect the dynamical behavior of the networks, so it is reasonable to include time delay when modeling dynamical networks (Cao et al. 2016). In the neural network literature, there is far less work on second-order states than on first-order states. Second-order states arise from an inertial term, that is, from the influence of an inductance. There is some biological motivation for including an inductance term in a neural system. For example, the membrane of a hair cell in the semicircular canals of some animals can be described by equivalent circuits that contain an inductance (Jagger and Ashmore 1999; Ospeck et al. 2001). The inductance gives the membrane electrical tuning, filtering and related behaviors. Inertia can also be a useful tool for generating chaos in neural systems. Regarding inertial neural networks, bifurcation in a single inertial neuron model is discussed in He et al. (2012), Li et al. (2004) and Liu et al. (2009), and the stability of an inertial two-neuron system is discussed in Wheeler and Schieve (1997). Furthermore, the stability of Bidirectional Associative Memory (BAM) inertial neural networks is discussed in Cao and Wan (2014), Yunkuan and Chunfang (2012) and Zhang et al. (2015), while Ke and Miao (2013) deals with inertial Cohen–Grossberg type neural networks.

The stability analysis of neural networks with time delay has received considerable attention, and notable results have been reported by various authors. For instance, in Yunkuan and Chunfang (2012), the stability of an inertial BAM neural network with time delay is discussed by constructing a suitable Lyapunov functional. The stability of an inertial BAM neural network with time delay has also been investigated by matrix measure theory in Cao and Wan (2014). Qi et al. (2014) and Rakkiyappan et al. (2015) deal with the stability of a class of memristor-based recurrent neural networks with time delays using the Lyapunov method and the Banach contraction principle. In Chen et al. (2015), the global asymptotic stability of fractional-order memristor-based delayed neural networks is discussed by employing the comparison theorem for fractional-order linear systems with time delay.

Synchronization of complex networks is a fascinating phenomenon observed in physical, biological, chemical and technological systems. It has potential applications in biological systems, chemical reactions, secure communication, image processing and so on (Pan et al. 2015; Yang and Cao 2014, 2012). Synchronization of coupled inertial neural networks means that multiple neural networks reach a common trajectory, such as a common equilibrium, limit cycle or chaotic trajectory. Dai et al. (2016) analyzed neutral-type coupled neural networks with Markovian switching parameters by placing adaptive controllers on part of the nodes. They derived sufficient conditions for exponential synchronization with the help of Lyapunov stability theory, stochastic analysis and matrix theory. Outer synchronization of partially coupled dynamical networks via a pinning impulsive controller is discussed in Lu et al. (2015). Global exponential synchronization of coupled neural networks with stochastic perturbations and mixed time-varying delays is discussed in Wang et al. (2015), where synchronization criteria are derived based on multiple Lyapunov theory. Two types of coupled neural networks with reaction–diffusion terms are considered in Wang et al. (2016), where a general criterion ensuring network synchronization is derived by pinning a small fraction of nodes with adaptive feedback controllers. A sufficient condition for exponential synchronization of fractional-order complex networks via pinning impulsive control is derived in Wang et al. (2015) using a Lyapunov function and the Mittag-Leffler function. In Yang et al. (2015), exponential synchronization of neural networks with discontinuous activations and mixed delays is discussed by combining state feedback control and impulsive control techniques.
Moreover, pinning synchronization of coupled inertial delayed neural networks has been studied in Hu et al. (2015) using the matrix measure and a Lyapunov–Krasovskii functional. To the best of our knowledge, no work in the literature has so far addressed the stability and synchronization problems for inertial memristive neural networks (MNNs) with time delay using the matrix measure and the Halanay inequality.

Motivated by the above discussion, in this paper we study the stability of inertial memristive neural networks and the pinning synchronization of coupled inertial memristive neural networks. The main contributions of this paper are as follows: (1) Inertial MNNs are analyzed. (2) The stability of inertial MNNs is studied using the matrix measure strategy. (3) The existing literature covers the stability of memristor-based first-order and fractional-order systems, but not of inertial MNNs. Further, the synchronization of inertial MNNs is considered here, and pinning feedback control is used to synchronize the coupled inertial MNNs to the target trajectory. In general, the matrix measure method makes fuller use of the information in the matrix elements, especially the diagonal elements.

The rest of the paper is organized as follows. The model description and preliminaries are presented in the “Problem formulation” section. The stability of the equilibrium point is investigated in the “Stability analysis” section, and the pinning synchronization of inertial MNNs is analyzed in the “Synchronization analysis” section. In the “Numerical simulation” section, examples are given to show the validity of our results. Finally, conclusions are drawn in the “Conclusion” section.

Notations

Throughout this paper, \(\mathbb {R}^{n}\) and \(\mathbb {R}^{n\times n}\) denote the n-dimensional Euclidean space and the set of all \(n \times n\) real matrices, respectively. \(\overline{a}_{ij}=\max \{\hat{a}_{ij}, \check{a}_{ij}\}\), \(\underline{a}_{ij}=\min \{\hat{a}_{ij}, \check{a}_{ij}\}\), \(\overline{b}_{ij}=\max \{\hat{b}_{ij}, \check{b}_{ij}\}\), \(\underline{b}_{ij}=\min \{\hat{b}_{ij}, \check{b}_{ij}\}\) for \(i,j=1,2,\ldots ,n\). \(\overline{a}_{rs}=\max \{\hat{a}_{rs}, \check{a}_{rs}\}, \underline{a}_{rs}=\min \{\hat{a}_{rs}, \check{a}_{rs}\}, \overline{b}_{rs}=\max \{\hat{b}_{rs}, \check{b}_{rs}\}, \underline{b}_{rs}=\min \{\hat{b}_{rs}, \check{b}_{rs}\}\) for \(r,s=1,2,\ldots ,n\). \(\overline{A}=(\overline{a}_{rs})_{n\times n}\), \(\underline{A}=(\underline{a}_{rs})_{n\times n}\), \(\overline{B}=(\overline{b}_{rs})_{n\times n}\), \(\underline{B}=(\underline{b}_{rs})_{n\times n}\). co\(\{u,v\}\) denotes the closure of the convex hull generated by the real numbers u and v or by the real matrices u and v. \(AC^{1}([0,1],\mathbb {R}^{n})\) denotes the space of differentiable functions \(x:[0,1]\rightarrow \mathbb {R}^{n}\) whose first derivative \(x^{'}\) is absolutely continuous. \(I_{n}\) denotes the \(n \times n\) identity matrix and \(diag\{\alpha _{1},\alpha _{2},\ldots ,\alpha _{n}\}\) the \(n \times n\) diagonal matrix with diagonal entries \(\alpha _{1},\alpha _{2},\ldots ,\alpha _{n}\). \(I_{Nn}\) stands for the identity matrix of dimension Nn. For arbitrary matrices A and B, \(A \otimes B\) denotes their Kronecker product.

Problem formulation

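In this paper, we consider the following inertial memristive delayed neural networks:

\[ s_{i}''(t)=-d_{i}s_{i}'(t)-c_{i}s_{i}(t)+\sum \nolimits _{j=1}^{n}a_{ij}(s_{i}(t))f_{j}(s_{j}(t))+\sum \nolimits _{j=1}^{n}b_{ij}(s_{i}(t))f_{j}(s_{j}(t-\tau (t)))+I_{i},\quad i=1,2,\ldots ,n, \tag{1}\]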

where \(s_{i}(t)\) is the state of the ith neuron, and \(d_{i}>0\) and \(c_{i}>0\) are constants. \(c_{i}\) denotes the rate at which the ith neuron resets its potential to the resting state in isolation, that is, when disconnected from the network and external inputs. The second derivative of \(s_{i}(t)\) is called the inertial term of system (1). \(a_{ij}(s_{i}(t))\) and \(b_{ij}(s_{i}(t))\) are the memristive connection weights, which change according to the features of the memristor and its current–voltage characteristic. They are given as,

for \(i,j=1,2,\ldots ,n\), in which the switching jumps \(T_{i}>0\) and \(\hat{a}_{ij}\), \(\check{a}_{ij}\), \(\hat{b}_{ij}\), \(\check{b}_{ij}\) are known constants related to the memristance. \(f_{i}\) denotes the nonlinear activation function of the ith neuron, \(I_{i}\) is the external input of the ith neuron, and \(\tau (t)\ge 0\) is the time delay of system (1). The initial conditions of system (1) are given as

\[ s_{i}(\chi )=\Phi _{i}(\chi ),\quad s_{i}'(\chi )=\Psi _{i}(\chi ),\quad -\tau \le \chi \le 0,\quad i=1,2,\ldots ,n, \tag{3}\]

where \(\Phi =(\Phi _{1},\ldots ,\Phi _{n})^{T}\) and \(\Psi =(\Psi _{1},\ldots ,\Psi _{n})^{T}\) belong to \(\mathrm {C}^{(1)}([-\tau ,0],\mathbb {R}^n)\), the set of all n-dimensional continuously differentiable functions defined on the interval \([-\tau ,0]\), with \(\tau =\text{ sup }_{t\ge 0}\{\tau (t)\}\).

To proceed, the following assumption, definitions and lemmas are given.

Assumption 1

The activation function \(f_{i}\) satisfies the Lipschitz condition, i.e., there exist constants \(l_{i}>0\) such that, for all \(x,y \in \mathbb {R}\),

\[ |f_{i}(x)-f_{i}(y)|\le l_{i}|x-y|,\quad i=1,2,\ldots ,n. \]

Definition 1

(Benchohra et al. 2010) A function \(s_{i} \in \mathrm {AC^{1}}([0,1],\mathbb {R}^{n})\) is said to be a solution of (1), (3) if \(s_{i}''(t)+d_{i}s_{i}'(t) \in F(t,s_{i}(t))\) almost everywhere on [0, 1], where \(d_{i}>0\), and \(s_{i}\) satisfies the initial conditions (3). For each \(s_{i} \in \mathrm {C}([0,1],\mathbb {R}^{n})\), the set of selections of F is defined by

\[ S_{F,s_{i}}=\{v\in L^{1}([0,1],\mathbb {R}^{n}) : v(t)\in F(t,s_{i}(t)) \text{ for a.e. } t\in [0,1]\}. \]

In Definition 1, \(d_{i}>0\) and \(F(t,s_{i}(t))=-c_{i}s_{i}(t)+\sum \nolimits _{j=1}^na_{ij}(s_{i}(t))f_{j}(s_{j}(t))+\sum \nolimits _{j=1}^nb_{ij}(s_{i}(t))f_{j}(s_{j}(t-\tau (t)))+I_{i}\).

Applying the theory of set-valued maps and differential inclusions to system (1), as in Definition 1, system (1) can be written as the following differential inclusion:

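or, equivalently, for \(i,j=1,2,\ldots ,n\) there exist measurable functions \(\tilde{a}_{ij}(s_{i}(t)) \in co[\underline{a}_{ij},\overline{a}_{ij}]\) and \(\tilde{b}_{ij}(s_{i}(t)) \in co[\underline{b}_{ij},\overline{b}_{ij}]\) such that

\[ s_{i}''(t)+d_{i}s_{i}'(t)=-c_{i}s_{i}(t)+\sum \nolimits _{j=1}^{n}\tilde{a}_{ij}(s_{i}(t))f_{j}(s_{j}(t))+\sum \nolimits _{j=1}^{n}\tilde{b}_{ij}(s_{i}(t))f_{j}(s_{j}(t-\tau (t)))+I_{i}, \tag{5}\]

where \(i=1,2,\ldots ,n\).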
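To make the role of \(co[\underline{a}_{ij},\overline{a}_{ij}]\) concrete, here is a minimal Python sketch. It rests on an assumption: the switching law is taken to be the form common in this literature, \(a_{ij}(s_{i})=\hat{a}_{ij}\) for \(|s_{i}|\le T_{i}\) and \(\check{a}_{ij}\) otherwise. Under that law, the sketch shows how the set-valued map replaces the jump at \(|s_{i}|=T_{i}\) by the interval \([\underline{a}_{ij},\overline{a}_{ij}]\). The function names are hypothetical.

```python
from typing import Tuple

# ASSUMPTION (hypothetical switching law): a_ij(s_i) = a_hat if |s_i| <= T_i, else a_check.


def memristive_weight(s_i: float, T_i: float, a_hat: float, a_check: float) -> float:
    """Single-valued, discontinuous memristive weight a_ij(s_i)."""
    return a_hat if abs(s_i) <= T_i else a_check


def weight_set(s_i: float, T_i: float, a_hat: float, a_check: float) -> Tuple[float, float]:
    """Convexified (set-valued) weight, returned as an interval (lower, upper).

    Off the switching surface |s_i| = T_i the set is the single current value;
    on the surface both branches meet and the set is co{a_hat, a_check}.
    """
    if abs(s_i) == T_i:
        return (min(a_hat, a_check), max(a_hat, a_check))
    w = memristive_weight(s_i, T_i, a_hat, a_check)
    return (w, w)


def magnitude_bound(a_hat: float, a_check: float) -> float:
    """max{|a_hat|, |a_check|}, i.e. the constant a^u_ij that appears in Lemma 1."""
    return max(abs(a_hat), abs(a_check))


print(memristive_weight(0.5, 1.0, 0.2, -0.1))  # inside the threshold
print(weight_set(1.0, 1.0, 0.2, -0.1))         # on the switching surface
```

Any measurable selection \(\tilde{a}_{ij}(s_{i}(t))\) taking values in these intervals yields a concrete right-hand side of the form (5).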

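Consider the following variable transformation of system (5):

\[ p_{1i}(t)=s_{i}(t),\qquad p_{2i}(t)=s_{i}'(t)+s_{i}(t). \]

Then system (5) becomes

\[ \left\{ \begin{array}{l} p_{1i}'(t)=-p_{1i}(t)+p_{2i}(t),\\ p_{2i}'(t)=-\mathbf {c}_{i}p_{1i}(t)-\mathbf {d}_{i}p_{2i}(t)+\sum \nolimits _{j=1}^{n}\tilde{a}_{ij}(p_{1i}(t))f_{j}(p_{1j}(t))+\sum \nolimits _{j=1}^{n}\tilde{b}_{ij}(p_{1i}(t))f_{j}(p_{1j}(t-\tau (t)))+I_{i}, \end{array}\right. \tag{6}\]

where \(\mathbf {c}_{i}=c_{i}+1-d_{i}\) and \(\mathbf {d}_{i}=d_{i}-1\), with the initial values \(p_{1i}(\chi )=\Phi _{i}(\chi )\) and \(p_{2i}(\chi )=\Psi _{i}(\chi )+\Phi _{i}(\chi ), -\tau \le \chi \le 0\).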
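The coefficients \(\mathbf {c}_{i}=c_{i}+1-d_{i}\) and \(\mathbf {d}_{i}=d_{i}-1\) come from substituting the transformation \(p_{1i}=s_{i}\), \(p_{2i}=s_{i}'+s_{i}\) into (5). This is the form implied by the initial values \(p_{2i}=\Psi _{i}+\Phi _{i}\). The worked step below writes \(R_{i}(t)\) for the weight, activation and input terms on the right-hand side of (5). \(R_{i}\) is a shorthand introduced here, not the paper's notation.

```latex
\begin{aligned}
p_{1i}'(t) &= s_i'(t) = -p_{1i}(t) + p_{2i}(t),\\
p_{2i}'(t) &= s_i''(t) + s_i'(t) = -(d_i-1)\,s_i'(t) - c_i\,s_i(t) + R_i(t)\\
           &= -(d_i-1)\bigl(p_{2i}(t)-p_{1i}(t)\bigr) - c_i\,p_{1i}(t) + R_i(t)\\
           &= -\underbrace{(c_i+1-d_i)}_{\mathbf{c}_i}\,p_{1i}(t)
              - \underbrace{(d_i-1)}_{\mathbf{d}_i}\,p_{2i}(t) + R_i(t),\\
R_i(t)     &= \sum_{j=1}^{n}\tilde a_{ij}(s_i(t))\,f_j(s_j(t))
              + \sum_{j=1}^{n}\tilde b_{ij}(s_i(t))\,f_j(s_j(t-\tau(t))) + I_i .
\end{aligned}
```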

Definition 2

Consider the differential inclusion \(\dot{p}_{i}(t) \in f(p_{i})\). If \(0 \in f(0)\), then \(p_{i}=0\) is an equilibrium point of the inclusion.

Definition 3

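(He and Cao 2009) The matrix measure of a real square matrix \(W=(w_{ij})_{n\times n}\) is defined as

\[ \mu _{p}(W)=\lim _{h\rightarrow 0^{+}}\frac{\Vert I_{n}+hW\Vert _{p}-1}{h}, \]

where \(\Vert \cdot \Vert _{p}\) is an induced matrix norm on \(\mathbb {R}^{n\times n}\), \(I_{n}\) is the identity matrix and \(p=1,2,\infty ,\omega\). When the matrix norm is

\[ \Vert W\Vert _{1}=\max _{j}\sum _{i=1}^{n}|w_{ij}|,\quad \Vert W\Vert _{2}=\sqrt{\lambda _{max}(W^{T}W)},\quad \Vert W\Vert _{\infty }=\max _{i}\sum _{j=1}^{n}|w_{ij}|,\quad \Vert W\Vert _{\omega }=\max _{i}\sum _{j=1}^{n}\frac{\omega _{j}}{\omega _{i}}|w_{ij}|, \]

we obtain the matrix measures

\[ \mu _{1}(W)=\max _{j}\Big (w_{jj}+\sum _{i\ne j}|w_{ij}|\Big ),\quad \mu _{2}(W)=\frac{1}{2}\lambda _{max}(W^{T}+W),\quad \mu _{\infty }(W)=\max _{i}\Big (w_{ii}+\sum _{j\ne i}|w_{ij}|\Big ),\quad \mu _{\omega }(W)=\max _{i}\Big (w_{ii}+\sum _{j\ne i}\frac{\omega _{j}}{\omega _{i}}|w_{ij}|\Big ), \]

where \(\lambda _{max}(\cdot )\) represents the maximum eigenvalue of the matrix \((\cdot )\) and \(\omega _{i} > 0\), \(i=1,2,\ldots ,n\), are arbitrary constants.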
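The closed-form matrix measures can be computed directly. The NumPy sketch below implements them and cross-checks them against the limit definition using a small step h. The function names and the test matrix are illustrative choices, not taken from the paper. For \(\mu _{\omega }\), the sketch assumes the weighted row-sum form \(w_{ii}+\sum _{j\ne i}(\omega _{j}/\omega _{i})|w_{ij}|\).

```python
import numpy as np


def mu_1(W: np.ndarray) -> float:
    """Column version: max_j (w_jj + sum_{i != j} |w_ij|)."""
    d = np.diag(W)
    return float(np.max(d + np.abs(W).sum(axis=0) - np.abs(d)))


def mu_inf(W: np.ndarray) -> float:
    """Row version: max_i (w_ii + sum_{j != i} |w_ij|)."""
    d = np.diag(W)
    return float(np.max(d + np.abs(W).sum(axis=1) - np.abs(d)))


def mu_2(W: np.ndarray) -> float:
    """Largest eigenvalue of the symmetric part (W + W^T) / 2."""
    return float(np.max(np.linalg.eigvalsh((W + W.T) / 2.0)))


def mu_omega(W: np.ndarray, omega) -> float:
    """Weighted row version: entries rescaled to w_ij * omega_j / omega_i, then mu_inf."""
    omega = np.asarray(omega, dtype=float)
    return mu_inf(W * omega[np.newaxis, :] / omega[:, np.newaxis])


def mu_from_limit(W: np.ndarray, ord, h: float = 1e-7) -> float:
    """Finite-h approximation of lim_{h->0+} (||I + hW||_p - 1) / h."""
    return float((np.linalg.norm(np.eye(W.shape[0]) + h * W, ord) - 1.0) / h)


W = np.array([[-3.0, 1.0], [2.0, -4.0]])
print(mu_1(W), mu_2(W), mu_inf(W))   # all negative, unlike any norm of W
print(mu_omega(W, [1.0, 2.0]))       # -1.0 for this choice of weights
```

All three measures of this W are negative, while every norm of W is positive. Remark 1 points out exactly this difference.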

Remark 1

\(\mu _{p}(W)\) is the one-sided directional derivative of the mapping \(\Vert \cdot \Vert _{p} : \mathbb {R}^{n \times n}\rightarrow \mathbb {R}_{+}\) at the point \(I_{n}\) in the direction of W. A matrix norm \(\Vert \cdot \Vert _{p}\) is always non-negative, whereas \(\mu _{p}(\cdot )\) is not restricted to non-negative values. It can be negative because it retains more information about the diagonal elements of the matrix, including their signs.

Definition 4

(Ke and Miao 2013) The equilibrium point \(s^{*}\) of the system (1) is said to be globally exponentially stable, if there exist constants \(\eta > 0\) and \(M > 0\) such that

where \(i=1,\cdots ,n\), \(s(t)=(s_{1}(t),s_{2}(t),\ldots ,s_{n}(t))^{T}\) is a solution of system (1) with the initial value (3).
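Definition 4 can be illustrated numerically. The sketch below integrates system (1) with forward Euler and a constant delay, and checks that the state decays towards the equilibrium \(s^{*}=0\). It rests on several assumptions. All parameter values are hypothetical. The switching law \(a_{ij}(s_{i})=\hat{a}_{ij}\) for \(|s_{i}|\le T_{i}\) and \(\check{a}_{ij}\) otherwise is assumed. The activation is \(f=\tanh\), so \(l_{i}=1\) and \(f(0)=0\). The input is \(I_{i}=0\). This is an illustration, not the paper's simulation.

```python
import numpy as np

# Hypothetical parameters (n = 2); none of these values come from the paper.
n = 2
d = np.array([3.0, 3.0])                       # d_i > 0
c = np.array([2.0, 2.0])                       # c_i > 0
T = np.array([1.0, 1.0])                       # switching thresholds T_i (assumed)
a_hat = np.array([[0.10, -0.05], [0.05, 0.10]])
a_chk = np.array([[0.08, -0.02], [0.03, 0.06]])
b_hat = np.array([[-0.10, 0.05], [0.04, -0.08]])
b_chk = np.array([[-0.05, 0.02], [0.02, -0.04]])
I_ext = np.zeros(n)                            # zero input, so s* = 0 is an equilibrium
tau, dt, t_end = 0.5, 1e-3, 20.0               # constant delay, step size, horizon
f = np.tanh                                    # Lipschitz with l_i = 1


def weights(s):
    """Row i of A and B switches on |s_i| <= T_i (assumed switching law)."""
    inside = (np.abs(s) <= T)[:, None]         # shape (n, 1), broadcast over row i
    return np.where(inside, a_hat, a_chk), np.where(inside, b_hat, b_chk)


def simulate(phi, psi):
    """Forward Euler for s'' = -d s' - c s + A(s) f(s) + B(s) f(s(t - tau)) + I."""
    lag, steps = int(round(tau / dt)), int(round(t_end / dt))
    s_hist = np.zeros((lag + steps + 1, n))
    s_hist[: lag + 1] = phi                    # constant history s = phi on [-tau, 0]
    v = np.array(psi, dtype=float)             # velocity s'(0) = psi
    for k in range(lag, lag + steps):
        s, s_del = s_hist[k], s_hist[k - lag]
        A, B = weights(s)
        acc = -d * v - c * s + A @ f(s) + B @ f(s_del) + I_ext
        s_hist[k + 1] = s + dt * v
        v = v + dt * acc
    return s_hist[lag:]                        # trajectory on [0, t_end]


traj = simulate(phi=[1.0, -0.8], psi=[0.0, 0.5])
print(np.abs(traj[-1]).max())                  # tiny: the state has decayed towards 0
```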

Lemma 1

(Chandrasekar et al. 2014) Under Assumption 1, if \(f_{j}(\pm T_{j})=0\) \((j=1,2,\ldots ,n)\) then

for \(i,j=1,2,\ldots ,n\), i.e., for any \(\eta _{ij}(u_{j})\in K(a_{ij}(u_{j})), \eta _{ij}(v_{j})\in K(a_{ij}(v_{j})),\)

for \(i,j=1,2,\ldots ,n\), where \(\tilde{a}_{ij}^{u}=\text{ max }\{|\hat{a}_{ij}|,|\check{a}_{ij}|\}\).

Lemma 2

If the activation function \(f_{i}\) is bounded, i.e., \(|f_{i}(x)|\le M_{i}\) for each \(i=1,2,\ldots ,n\), then for any given input \(I=(I_{1},\ldots ,I_{n})^{T}\), system (1) has an equilibrium.

Proof

It is clear that \(s^{*}\) is an equilibrium of (1) if and only if it is a solution of the following equation

By applying the theories of set-valued maps and differential inclusion, we have

or equivalently, for \(i,j=1,2,\ldots ,n\), there exist measurable functions \(\tilde{a}_{ij}(s_{i}^{*}(t)) \in co[\underline{a}_{ij},\overline{a}_{ij}], \tilde{b}_{ij}(s_{i}^{*}(t)) \in co[\underline{b}_{ij},\overline{b}_{ij}]\), such that

Since \(c_{i}>0\) and \(|f_{i}(x)| \le M_{i}\) for all \(i=1,2,\ldots ,n\), we obtain

Thus the function \(h(s)=(h_{1},h_{2},\ldots ,h_{n})^{T}\) maps \(D=[-D_{1},D_{1}]\times \ldots \times [-D_{n},D_{n}]\) into itself. By Brouwer’s fixed point theorem, an equilibrium exists. \(\square\)

Lemma 3

(Vidyasagar 1993) Let \(\Vert \cdot \Vert _{p}\) be an induced matrix norm on \(\mathbb {R}^{n\times n}\) and \(\mu _{p}(\cdot )\) be the corresponding matrix measure. Then \(\mu _{p}(\cdot )\) has the following properties:

1. For each \(W \in \mathbb {R}^{n \times n}\), the limit indicated in Definition 3 exists and is well-defined;
2. \(-\Vert W\Vert _{p} \le \mu _{p}(W) \le \Vert W\Vert _{p}\);
3. \(\mu _{p}(\alpha W)=\alpha \mu _{p}(W)\), \(\forall\) \(\alpha \ge 0\), \(\forall W \in \mathbb {R}^{n\times n}\) and in general, \(\mu _{p}(W) \not = \mu _{p}(-W)\);
4. \(\max \{\mu _{p}(A)-\mu _{p}(-B),\mu _{p}(B)-\mu _{p}(-A)\} \le \mu _{p}(A+B)\le \mu _{p}(A)+\mu _{p}(B)\), \(\forall\) \(A,B \in \mathbb {R}^{n \times n}\);
5. \(\mu _{p}(\cdot )\) is a convex function, i.e., \(\mu _{p}(\alpha A +(1-\alpha )B) \le \alpha \mu _{p}(A)+(1-\alpha )\mu _{p}(B)\), \(\forall \,\alpha \in [0,1]\), \(\forall \,A,B \in \mathbb {R}^{n \times n}\).
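Properties 2 to 5 can be spot-checked numerically on random matrices. The sketch below does this for \(p=1,2,\infty\), using the closed forms of Definition 3. A passing run only checks the properties on these samples and is not a proof. The helper names, seed and tolerance are hypothetical choices.

```python
import numpy as np


def mu(W, p):
    """Matrix measure for p in {1, 2, np.inf} via the closed forms of Definition 3."""
    if p == 2:
        return float(np.max(np.linalg.eigvalsh((W + W.T) / 2.0)))
    axis = 0 if p == 1 else 1                  # column sums for p = 1, row sums for p = inf
    d = np.diag(W)
    return float(np.max(d + np.abs(W).sum(axis=axis) - np.abs(d)))


def check_lemma3(trials=200, n=4, seed=0, tol=1e-9):
    """Return True if properties 2-5 hold on every sampled pair (A, B) and alpha."""
    rng = np.random.default_rng(seed)
    for _ in range(trials):
        A, B = rng.normal(size=(n, n)), rng.normal(size=(n, n))
        alpha = rng.uniform(0.0, 1.0)
        for p in (1, 2, np.inf):
            nA = np.linalg.norm(A, p)
            if not (-nA - tol <= mu(A, p) <= nA + tol):                    # property 2
                return False
            if abs(mu(alpha * A, p) - alpha * mu(A, p)) > tol:             # property 3
                return False
            lower = max(mu(A, p) - mu(-B, p), mu(B, p) - mu(-A, p))        # property 4
            if not (lower - tol <= mu(A + B, p) <= mu(A, p) + mu(B, p) + tol):
                return False
            mix = mu(alpha * A + (1 - alpha) * B, p)                       # property 5
            if mix > alpha * mu(A, p) + (1 - alpha) * mu(B, p) + tol:
                return False
    return True


print(check_lemma3())
```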

Lemma 4

(Halanay 1966) Let \(k_{1}\) and \(k_{2}\) be constants with \(k_{1}>k_{2}>0\), and let y(t) be a non-negative continuous function defined on \([t_{0}-\tau ,+\infty )\) that satisfies, for \(t\ge t_{0}\), the inequality

\[ D^{+}y(t)\le -k_{1}y(t)+k_{2}\overline{y}(t), \]

where \(\overline{y}(t) \triangleq \sup \nolimits _{t-\tau \le s \le t} y(s)\). Then \(y(t) \le \overline{y}(t_{0}) e^{-r(t-t_{0})}\). Here r, a bound on the exponential convergence rate, is the unique positive solution of \(r=k_{1}-k_{2}e^{r\tau }\), and the upper right Dini derivative \(D^{+} y(t)\) is defined as

\[ D^{+}y(t)=\limsup _{h\rightarrow 0^{+}}\frac{y(t+h)-y(t)}{h}, \]

where \(h\rightarrow 0^{+}\) means that h approaches zero from the right-hand side.
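The rate r in Lemma 4 has no closed form when \(\tau >0\), but it is easy to compute. The function \(g(r)=r-k_{1}+k_{2}e^{r\tau }\) is strictly increasing, with \(g(0)=k_{2}-k_{1}<0\) and \(g(k_{1})=k_{2}e^{k_{1}\tau }>0\). Bisection on \([0,k_{1}]\) therefore finds the unique positive root. The function name `halanay_rate` and the sample values are hypothetical.

```python
import math


def halanay_rate(k1: float, k2: float, tau: float, tol: float = 1e-12) -> float:
    """Unique positive root r of r = k1 - k2 * exp(r * tau), for k1 > k2 > 0, tau >= 0."""
    if not (k1 > k2 > 0) or tau < 0:
        raise ValueError("need k1 > k2 > 0 and tau >= 0")

    def g(r: float) -> float:
        return r - k1 + k2 * math.exp(r * tau)   # strictly increasing in r

    lo, hi = 0.0, k1                             # g(lo) < 0 < g(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


r = halanay_rate(0.85, 0.15, 0.5)
print(r)   # decay bound: y(t) <= y_bar(t0) * exp(-r (t - t0))
```

For \(\tau =0\) the root is exactly \(k_{1}-k_{2}\). A positive delay always gives a slower guaranteed rate.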

Lemma 5

(He and Cao 2009) Under Assumption 1, let \(\mu _{p}(\cdot )\) be the corresponding matrix measure associated with the induced matrix norm \(\Vert \cdot \Vert _{p}\) on \(\mathbb {R}^{n\times n}\). Then

where \(F(e(t))=diag\left\{ \frac{f_{1}(e_{1}(t))}{e_{1}(t)},\ldots ,\frac{f_{n}(e_{n}(t))}{e_{n}(t)}\right\}\), \(L=diag\{l_{1},\ldots ,l_{n}\}\), \(p=1,\infty ,\omega\) and \(A^{*}=(a^{*}_{ij})_{n\times n}=\left\{ \begin{array}{ll} max\{0,a_{ii}\}, &\quad i=j, \\ a_{ij}, &\quad i\not =j. \end{array}\right.\)

Stability analysis

In this section, we will investigate the exponential stability of inertial memristive neural networks using matrix measure strategy. The main result is given in the following theorem.

Theorem 1

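Suppose that Assumption 1 holds and, further,

\[ \mu _{p}(\mathbb {G})+l\Vert \tilde{A}\Vert _{p}+l\Vert \tilde{B}\Vert _{p}<0, \]

where \(\mathbb {G}=\left( \begin{array}{cc} -I_{n} &\quad I_{n} \\ -\mathcal {C} &\quad -\mathcal {D} \end{array} \right)\), \(\mathcal {C}=C+I_{n}-D\), \(\mathcal {D}=D-I_{n}\) with \(C=diag\{c_{1},\ldots ,c_{n}\}\) and \(D=diag\{d_{1},\ldots ,d_{n}\}\), \(\tilde{A}=(\tilde{a}_{ij}^{u})_{n\times n}\) and \(\tilde{B}=(\tilde{b}_{ij}^{u})_{n\times n}\) with \(\tilde{a}_{ij}^{u}=\max \{|\hat{a}_{ij}|,|\check{a}_{ij}|\}\) and \(\tilde{b}_{ij}^{u}=\max \{|\hat{b}_{ij}|,|\check{b}_{ij}|\}\), and \(l=\max \nolimits _{1\le i \le n} \{l_{i}\}\). Then the equilibrium \((p_{1}^{*}(t),p_{2}^{*}(t))^{T}\) of system (6) is globally exponentially stable for \(p=1,2,\infty ,\omega\).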

Proof

Let us define the error between the trajectory of system (6) and the corresponding equilibrium \((p_{1}^{*}(t),p_{2}^{*}(t))^{T}\) as

\[ e(t)=(e_{1}(t),e_{2}(t))^{T}=(p_{1}(t)-p_{1}^{*}(t),\;p_{2}(t)-p_{2}^{*}(t))^{T}. \]

According to the error system, the upper-right Dini derivative of \(\Vert e(t)\Vert _{p}\) with respect to ‘t’ is calculated as follows:

We have,

According to Lemma 1, the above equation can be written as follows:

where \(\tilde{a}_{ij}^{u}=max\{|\hat{a}_{ij}|,|\check{a}_{ij}|\}\quad \text{ and }\quad \tilde{b}_{ij}^{u}=max\{|\hat{b}_{ij}|,|\check{b}_{ij}|\}\).

The vector form of the above equation is,

where \(\mathcal {C}=C+I_{n}-D\), \(\mathcal {D}=D-I_{n}\), \(\tilde{A}=(\tilde{a}_{ij}^{u})_{n\times n}\) and \(\tilde{B}=(\tilde{b}_{ij}^{u})_{n\times n}\).

We have the upper right Dini-derivative as,

where \(\xi =-\mathcal {C}e_{1}(t)-\mathcal {D}e_{2}(t)+\tilde{A}(f(p_{1}(t))-f(p_{1}^{*}(t)))+\tilde{B}(f(p_{1}(t-\tau (t)))-f(p_{1}^{*}(t)))\). Apply Lemma 4 with \(k_{1}=-(\mu _{p}(\mathbb {G})+l\Vert \tilde{A}\Vert _{p})\) and \(k_{2}=l\Vert \tilde{B}\Vert _{p}\). By the assumption of Theorem 1, these satisfy \(k_{1}>k_{2}>0\), so we obtain

where r is a bound on the exponential convergence rate with

Thus e(t) converges exponentially to zero with convergence rate r. That is, every trajectory of system (6) converges exponentially towards the equilibrium \((p_{1}^{*}(t),p_{2}^{*}(t))^T\) with convergence rate r. This completes the proof. \(\square\)
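The condition of Theorem 1 involves only matrix measures and norms, so it can be checked numerically. The sketch below builds \(\mathbb {G}\) from C and D and evaluates \(\mu _{p}(\mathbb {G})+l\Vert \tilde{A}\Vert _{p}+l\Vert \tilde{B}\Vert _{p}\) for \(p=1,2,\infty\). The data (\(C=2I_{2}\), \(D=3I_{2}\), the bounds \(\tilde{A}\), \(\tilde{B}\) and \(l=1\)) are hypothetical, and `theorem1_margin` is an illustrative name.

```python
import numpy as np


def mu(W, p):
    """Matrix measure for p in {1, 2, np.inf} (closed forms from Definition 3)."""
    if p == 2:
        return float(np.max(np.linalg.eigvalsh((W + W.T) / 2.0)))
    axis = 0 if p == 1 else 1
    d = np.diag(W)
    return float(np.max(d + np.abs(W).sum(axis=axis) - np.abs(d)))


def theorem1_margin(C, D, A_u, B_u, l, p):
    """mu_p(G) + l*||A_u||_p + l*||B_u||_p; Theorem 1 requires a negative value."""
    I_n = np.eye(C.shape[0])
    calC, calD = C + I_n - D, D - I_n
    G = np.block([[-I_n, I_n], [-calC, -calD]])
    return mu(G, p) + l * np.linalg.norm(A_u, p) + l * np.linalg.norm(B_u, p)


C, D = 2.0 * np.eye(2), 3.0 * np.eye(2)            # hypothetical C and D
A_u = np.array([[0.10, 0.05], [0.05, 0.10]])       # entries max{|a_hat_ij|, |a_check_ij|}
B_u = np.array([[0.10, 0.05], [0.05, 0.10]])       # entries max{|b_hat_ij|, |b_check_ij|}
for p in (1, 2, np.inf):
    print(p, theorem1_margin(C, D, A_u, B_u, 1.0, p))
```

The printed values are about -0.7, -0.49 and 0.3. The condition therefore holds for p = 1 and p = 2, with \(k_{1}=0.85\) and \(k_{2}=0.15\) in Lemma 4 when p = 1, but fails for \(p=\infty\).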

Remark 2

The fundamental idea of the Lyapunov direct method is that if the total energy of a system is continuously dissipated, then the system eventually reaches an equilibrium point and remains there. The method therefore consists of two steps. First, a suitable scalar function, referred to as a Lyapunov function, is chosen (Hahn 1967; Bacciotti and Rosier 2005). Second, its first-order time derivative is evaluated along the trajectories of the system. If the Lyapunov function decreases along the system trajectories as time increases, the system energy is dissipating and the system will finally settle down. Much of the previous work on the stability analysis of neural networks is based on the Lyapunov direct method, where constructing a proper Lyapunov function is essential. However, constructing such a function for a given system is very difficult, and there are no general rules to follow. Compared with the Lyapunov direct method, the matrix measure strategy is an efficient tool for the stability problem of nonlinear systems. Results established using the matrix measure are generally less conservative than common algebraic criteria, because the matrix measure can take negative as well as positive values. Motivated by this, we use the matrix measure and the Halanay inequality to study the stability of system (1), and we derive some simple but generic criteria.

Remark 3

Unlike the matrix norm \(\Vert \cdot \Vert _{p}\), the matrix measure \(\mu _{p}(\cdot )\) is sign sensitive. Results obtained with it are therefore more precise, and involve less computational burden, than those obtained using the matrix norm.

Corollary 1

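Suppose that Assumption 1 holds and, further, there exists a diagonal matrix \(\Lambda =diag\{\xi _{1},\xi _{2},\ldots ,\xi _{n}\}\) with \(\xi _{i}>0\) such that

\[ \mu _{p}(\mathbb {G})+l\Vert \tilde{A}\Vert _{p}+l\Vert \tilde{B}\Vert _{p}<0 \]

holds, where \(\mathbb {G}=\left( \begin{array}{cc} -\Lambda &\quad I_{n} \\ -\mathcal {C} &\quad -\mathcal {D} \end{array} \right)\), \(\mathcal {C}=C+\Lambda ^{2}-D\Lambda\), \(\mathcal {D}=D-\Lambda\) (which reduce to \(C+I_{n}-D\) and \(D-I_{n}\) when \(\Lambda =I_{n}\)) and \(l=\max \nolimits _{1\le i \le n} \{l_{i}\}\). Then the equilibrium \((p_{1}^{*}(t),p_{2}^{*}(t))^{T}\) of system (6) is globally exponentially stable for \(p=1,2,\infty ,\omega\).

Proof

For system (5), consider the transformation \(p_{1i}(t)=s_{i}(t)\), \(p_{2i}(t)=s_{i}'(t)+\xi _{i}s_{i}(t)\), \(i=1,2,\ldots ,n\). Then \(p_{1}'(t)=-\Lambda p_{1}(t)+p_{2}(t)\), and the second equation has the coefficients \(\mathcal {C}\) and \(\mathcal {D}\) given above. The rest of the proof is similar to that of Theorem 1. \(\square\)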

Remark 4

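The condition of Theorem 1 can never hold for \(p=\infty\). Each of the first n rows of \(\mathbb {G}\) contributes \(-1+1=0\), so \(\mu _{\infty }(\mathbb {G})\ge 0\) and the left-hand side of the condition is non-negative. For \(p=1\), the condition may also fail. In that case, it is better to introduce \(\xi _{i}>0\), \(i=1,2,\ldots ,n\), in the variable transformation, as in Corollary 1. The first n rows of \(\mathbb {G}\) then contribute \(1-\xi _{i}\), which is negative for \(\xi _{i}>1\). The condition can therefore hold for \(p=1,\infty\), which leads to better performance.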
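The effect of the scaling \(\Lambda\) on the \(p=\infty\) condition can be sketched numerically. The sketch assumes the transformation \(p_{1}=s\), \(p_{2}=s'+\Lambda s\). With diagonal matrices, substituting into (5) gives \(\mathcal {C}=C+\Lambda ^{2}-D\Lambda\) and \(\mathcal {D}=D-\Lambda\). The data and the function name are hypothetical. With \(\Lambda =I_{2}\) the margin is positive, and with \(\Lambda =1.5I_{2}\) it becomes negative.

```python
import numpy as np


def mu_inf(W):
    """Row-sum matrix measure: max_i (w_ii + sum_{j != i} |w_ij|)."""
    d = np.diag(W)
    return float(np.max(d + np.abs(W).sum(axis=1) - np.abs(d)))


def corollary1_margin_inf(C, D, xi, A_u, B_u, l):
    """mu_inf(G) + l*||A_u||_inf + l*||B_u||_inf with G = [[-Lam, I], [-calC, -calD]].

    ASSUMPTION: p1 = s, p2 = s' + Lam s with Lam = diag(xi), which gives
    calC = C + Lam^2 - D Lam and calD = D - Lam (all matrices diagonal).
    """
    I_n, Lam = np.eye(C.shape[0]), np.diag(xi)
    calC, calD = C + Lam @ Lam - D @ Lam, D - Lam
    G = np.block([[-Lam, I_n], [-calC, -calD]])
    return mu_inf(G) + l * np.linalg.norm(A_u, np.inf) + l * np.linalg.norm(B_u, np.inf)


C, D = 2.0 * np.eye(2), 3.0 * np.eye(2)            # hypothetical data
A_u = B_u = np.array([[0.10, 0.05], [0.05, 0.10]])
print(corollary1_margin_inf(C, D, [1.0, 1.0], A_u, B_u, 1.0))   # Lam = I: about 0.3
print(corollary1_margin_inf(C, D, [1.5, 1.5], A_u, B_u, 1.0))   # about -0.2
```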

Theorem 2

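Suppose that Assumption 1 holds and, further,

\[ \mu _{p}(\mathbb {G})+\mu _{p}(\mathcal {A}L^{*})+l\Vert \tilde{B}\Vert _{p}<0 \]

holds, where \(\mathbb {G}\), \(\tilde{A}\), \(\tilde{B}\) and l are as in Theorem 1, \(L^{*}=diag\{l_{1},l_{2},\ldots ,l_{n},1,1,\ldots ,1\}\) and \(\mathcal {A}=\left( \begin{array}{cc} 0 &\quad 0 \\ \tilde{A} &\quad 0 \end{array} \right)\). Then the equilibrium \((p_{1}^{*}(t),p_{2}^{*}(t))^{T}\) of system (6) is globally exponentially stable for \(p=1,\infty ,\omega\).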

Proof

Similar to the proof of Theorem 1, first let us calculate the upper-right Dini-derivative as,

The error system is defined as,

Also note that \(e_{1}(t)=(e_{11}(t),e_{12}(t),\ldots ,e_{1n}(t))^{T}\), \(f_{i}(p_{1i}(t))-f_{i}(p_{1i}^{*}(t))=f_{i}(p_{1i}^{*}(t){+}e_{1i}(t))-f_{i}(p_{1i}^{*}(t))\) and \(f(p_{1}(t))-f(p_{1}^{*}(t))=(f_{1}(p_{11}(t))-f_{1}(p_{11}^{*}(t)),\ldots ,f_{n}(p_{1n}(t))-f_{n}(p_{1n}^{*}(t)))^{T}\).

Define the function as,

Then, \(f(p_{1}(t))-f(p_{1}^{*}(t))\) and \(f(p_{2}(t))-f(p_{2}^{*}(t))\) can be written as,

It follows from (8) that,

Now by letting,

We have the error system as,

Then, we have the upper-right Dini derivative as,

It follows from Lemmas 3 and 5 that,

where \(L^{*}=diag\{l_{1},l_{2},\ldots ,l_{n},1,1,\ldots ,1\}\). Hence, we have

Apply Lemma 4 with \(k_{1}=-(\mu _{p}(\mathbb {G})+\mu _{p}(\mathcal {A}L^{*}))\) and \(k_{2}=l\Vert \tilde{B}\Vert _{p}\). By the assumption of Theorem 2, these satisfy \(k_{1}>k_{2}>0\), so we obtain

where r is a bound on the exponential convergence rate with

Thus e(t) converges exponentially to zero with convergence rate r. That is, every trajectory of system (6) converges exponentially towards the equilibrium \((p_{1}^{*}(t),p_{2}^{*}(t))^{T}\) with convergence rate r. This completes the proof. \(\square\)

Remark 5

The stability condition of Theorem 1 uses only the maximum Lipschitz constant l, not the individual constants \(l_{i}\). The condition of Theorem 2, in contrast, uses each Lipschitz constant \(l_{i}\). In Theorem 2, the term \(l\Vert \tilde{A}\Vert _{p}\) of Theorem 1 is replaced by \(\mu _{p}(\mathcal {A}L^{*})\), so its result is more precise than that of Theorem 1. However, Theorem 2 applies only for \(p=1,\infty ,\omega\).
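The gain from using each \(l_{i}\) can be seen by computing the Theorem 1 term \(l\Vert \tilde{A}\Vert _{p}\) alongside its Theorem 2 counterpart \(\mu _{p}(\mathcal {A}L^{*})\) for unequal Lipschitz constants. The bound \(\tilde{A}\) and the constants \(l_{1}=0.2\), \(l_{2}=1\) below are hypothetical, and `compare_terms` is an illustrative name. The comparison covers only this one term, not the full conditions.

```python
import numpy as np


def mu(W, p):
    """Matrix measure for p in {1, np.inf}: column or row version from Definition 3."""
    axis = 0 if p == 1 else 1
    d = np.diag(W)
    return float(np.max(d + np.abs(W).sum(axis=axis) - np.abs(d)))


def compare_terms(A_u, lip, p):
    """Return (l * ||A_u||_p, mu_p(calA L*)): Theorem 1 term vs. Theorem 2 term."""
    n = A_u.shape[0]
    Z = np.zeros((n, n))
    calA = np.block([[Z, Z], [A_u, Z]])                     # calA = [[0, 0], [A_u, 0]]
    L_star = np.diag(np.concatenate([lip, np.ones(n)]))     # diag{l_1..l_n, 1..1}
    return float(np.max(lip)) * np.linalg.norm(A_u, p), mu(calA @ L_star, p)


A_u = np.array([[0.10, 0.05], [0.05, 0.10]])   # hypothetical bounds a^u_ij
lip = np.array([0.2, 1.0])                     # unequal Lipschitz constants
print(compare_terms(A_u, lip, np.inf))         # Theorem 2 term is smaller here
print(compare_terms(A_u, lip, 1))              # equal here
```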

Synchronization analysis

Consider the following memristive delayed neural networks,

where \(\overline{y}(t)=(\overline{y}_{1}(t),\ldots ,\overline{y}_{n}(t))^{T} \in \mathbb {R}^{n}\) is the state vector, and \(d_{r}>0\) and \(c_{r}>0\) are constants. \(c_{r}\) denotes the rate at which the rth neuron resets its potential to the resting state in isolation, that is, when disconnected from the network and external inputs. \(I_{r}=(I_{1},\ldots ,I_{n})^{T}\) and \(f(\overline{y}(t))=(f_{1}(\overline{y}_{1}(t)),\ldots ,f_{n}(\overline{y}_{n}(t)))^{T}\) denote the external input and the nonlinear activation function, respectively. The memristive connection weights \(A(\overline{y}(t))=(a_{rs}(\overline{y}(t)))_{n\times n}\) and \(B(\overline{y}(t))=(b_{rs}(\overline{y}(t)))_{n\times n}\) change according to the features of the memristor and its current–voltage characteristics. They are defined as,

for \(r,s=1,2,\ldots ,n\) in which switching jumps \(T_{r}>0\), \(\hat{a}_{rs}\), \(\check{a}_{rs}\), \(\hat{b}_{rs}\), \(\check{b}_{rs}\) are known constants with respect to memristance. When N inertial MNNs are coupled by a network, we can obtain the following array of linearly coupled inertial MNNs with the dynamics of the kth node as,

where \(k=1,\ldots ,N\) and \(x_{k}(t)=(x_{k1}(t),\ldots ,x_{kn}(t))^{T} \in \mathbb {R}^{n}\) is the state of the kth neural network. \(D=diag\{d_{1},d_{2},\ldots ,d_{n}\}\) and \(C=diag\{c_{1},c_{2},\ldots ,c_{n}\}\) are positive definite diagonal matrices, and \(A(x_{k}(t))\) and \(B(x_{k}(t))\) are the memristive connection weights defined in (12). The positive constant \(\alpha\) is the network coupling strength, and \(\Gamma\) is the inner coupling matrix. The constant coupling configuration matrix \(W=(W_{kl})_{N \times N}\) is assumed to be diffusive, i.e., \(W_{kl} \ge 0\) \((k\not = l)\) and \(W_{kk}=-\sum \nolimits _{l=1,l\not =k}^{N}W_{kl}\). W is not required to be symmetric or irreducible.

The initial condition of the system (13) is given as,

where \(\Phi _{k}(\chi )\), \(\Psi _{k}(\chi ) \in \mathrm {C}^{(1)}([-\tau ,0],\mathbb {R}^n)\). The isolated node of network (13) is given as,

The connection weight matrices A(s(t)) and B(s(t)) are defined as in (12). The initial condition of system (15) is given as,

where \(\Phi (\chi )\), \(\Psi (\chi ) \in \mathrm {C}^{(1)}([-\tau ,0],\mathbb {R}^n)\). By applying the theories of set-valued maps and differential inclusion to (15) as stated in Definition 1 we have,

or equivalently, there exist measurable functions \(\widetilde{A}(s(t)) \in co[\underline{A},\overline{A}], \widetilde{B}(s(t)) \in co[\underline{B},\overline{B}]\), such that

Consider the following transformation to the system (18),

Then we have the system (18) as,

where \(\mathcal {C}=C+I_{n}-D\) and \(\mathcal {D}=D-I_{n}\). The pinning-controlled network corresponding to system (13) is given by

where \(k=1,2,\ldots ,N\), \(\sigma _{k}=1\) if the kth node is pinned, and \(\sigma _{k}=0\) otherwise. \(A(x_{k}(t))\), \(B(x_{k}(t))\) and the initial condition of the system are given in (12) and (14), respectively.

Applying the theory of set-valued maps and differential inclusions to system (20), as in Definition 1, we have

or equivalently, there exist measurable functions \(\widetilde{A}(x_{k}(t)) \in co[\underline{A},\overline{A}], \widetilde{B}(x_{k}(t)) \in co[\underline{B},\overline{B}]\), such that

Consider the following transformation to the above system,

Then we have,

where \(\mathcal {C}=C+I_{n}-D\) and \(\mathcal {D}=D-I_{n}\). Now introduce the Laplacian matrix \(L=(l_{kl})_{N \times N}=-W\) of the coupling network. Then we have

Let us define the error dynamical network as,

Subtracting (19) from (21), we obtain the following,

According to Lemma 1, the above system can be written as follows:

where \(\tilde{\tilde{A}}=(\max \{|\overline{a}_{rs}|,|\underline{a}_{rs}|\})_{n\times n}\) and \(\tilde{\tilde{B}}=(\max \{|\overline{b}_{rs}|,|\underline{b}_{rs}|\})_{n\times n}\).

Using the Kronecker product, the above system can be written in the following compact form,

Lemma 6

(Cao et al. 2006) For matrices A, B, C and D with appropriate dimensions and a scalar \(\alpha\), one has

1. \((\alpha A)\otimes B=A \otimes (\alpha B)\);
2. \((A+B)\otimes C= A\otimes C+B\otimes C\);
3. \((A\otimes B)(C\otimes D)=(AC)\otimes (BD)\).
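The three Kronecker identities can be confirmed numerically with `numpy.kron` on random matrices of compatible shapes. The sketch also checks a consequence used with the terms \(I_{N}\otimes \tilde{\tilde{A}}\) in the coupled network. \(I_{N}\otimes M\) is block diagonal, so its induced 1-, 2- and \(\infty\)-norms equal those of M. The shapes and seed are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)
alpha = 2.5
A, B = rng.normal(size=(2, 3)), rng.normal(size=(2, 3))   # same shape, so A + B exists
C = rng.normal(size=(4, 2))
# Property 3 needs conformable products A1 @ C1 and B1 @ D1.
A1, B1 = rng.normal(size=(2, 3)), rng.normal(size=(4, 5))
C1, D1 = rng.normal(size=(3, 2)), rng.normal(size=(5, 3))

prop1 = np.allclose(np.kron(alpha * A, B), np.kron(A, alpha * B))
prop2 = np.allclose(np.kron(A + B, C), np.kron(A, C) + np.kron(B, C))
prop3 = np.allclose(np.kron(A1, B1) @ np.kron(C1, D1), np.kron(A1 @ C1, B1 @ D1))

# I_N (x) M is block diagonal, so its induced norms equal those of M.
M = rng.normal(size=(3, 3))
IM = np.kron(np.eye(4), M)
norms_match = all(np.isclose(np.linalg.norm(IM, p), np.linalg.norm(M, p)) for p in (1, 2, np.inf))
print(prop1, prop2, prop3, norms_match)
```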

Definition 5

The linearly coupled inertial MNNs (13) is said to be exponentially synchronized if the error system (22) is exponentially stable, i.e., there exist two constants \(\overline{\alpha }>1\) and \(\overline{\beta }>0\) such that

Now, we present the synchronization result in the following theorem based on the above transformations.

Theorem 3

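Suppose that Assumption 1 holds and, further,

\[ \mu _{p}(\mathcal {K})+l\Vert I_{N}\otimes \tilde{\tilde{A}}\Vert _{p}+l\Vert I_{N}\otimes \tilde{\tilde{B}}\Vert _{p}<0, \]

where \(\mathcal {K}=\left( \begin{array}{cc} -I_{Nn} &\quad I_{Nn} \\ -(I_{N}\otimes \mathcal {C}) &\quad -(I_{N}\otimes \mathcal {D})-\alpha (L+\Sigma )\otimes \Gamma \end{array}\right)\), \(\Sigma =diag\{\sigma _{1},\ldots ,\sigma _{N}\}\) and \(l=\max \nolimits _{1 \le i \le n}\{l_{i}\}\). Then the error system (22) is globally exponentially stable for \(p=1,2,\infty ,\omega\). That is, the pinning-controlled coupled inertial MNNs are globally exponentially synchronized.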

Proof

The upper-right Dini derivative of the synchronization error, \(\Vert e(t)\Vert _{p}\) with respect to t is calculated as follows,

where \(\lambda =-(I_{N}\otimes \mathcal {C})e_{1}(t)-(I_{N}\otimes \mathcal {D})e_{2}(t)+(I_{N}\otimes \tilde{\tilde{A}})(f(y_{k}(t))-f(p(t)))+(I_{N}\otimes \tilde{\tilde{B}})(f(y_{k}(t-\tau (t)))-f(p(t-\tau (t))))-\alpha ((L+\Sigma )\otimes \Gamma )e_{2}(t)\). Apply Lemma 4 with \(k_{1}=-(\mu _{p}(\mathcal {K})+l\Vert I_{N}\otimes \tilde{\tilde{A}}\Vert _{p})\) and \(k_{2}=l\Vert I_{N}\otimes \tilde{\tilde{B}}\Vert _{p}\). By the assumption of Theorem 3, these satisfy \(k_{1}>k_{2}>0\), so we obtain

where r is a bound on the exponential convergence rate with

Thus e(t) converges exponentially to zero with convergence rate r, which implies that the coupled inertial MNNs achieve global exponential synchronization. This completes the proof. \(\square\)
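The synchronization condition of Theorem 3 can be evaluated by assembling \(\mathcal {K}\) with Kronecker products. The sketch below uses a hypothetical network of N = 3 nodes on an undirected ring, with node 1 pinned, \(\alpha =1\), \(\Gamma =I_{2}\), \(C=2I_{2}\), \(D=3I_{2}\) and small bounds \(\tilde{\tilde{A}}=\tilde{\tilde{B}}\). All of these values and the function name are illustrative assumptions, not the paper's example.

```python
import numpy as np


def mu(W, p):
    """Matrix measure for p in {1, 2, np.inf} (closed forms from Definition 3)."""
    if p == 2:
        return float(np.max(np.linalg.eigvalsh((W + W.T) / 2.0)))
    axis = 0 if p == 1 else 1
    d = np.diag(W)
    return float(np.max(d + np.abs(W).sum(axis=axis) - np.abs(d)))


def theorem3_margin(C, D, A_u, B_u, l, W, sigma, Gamma, alpha, p):
    """mu_p(K) + l||I_N (x) A_u||_p + l||I_N (x) B_u||_p; Theorem 3 requires < 0."""
    N, n = W.shape[0], C.shape[0]
    I_n, I_N, I_Nn = np.eye(n), np.eye(N), np.eye(N * n)
    calC, calD = C + I_n - D, D - I_n
    Lap = -W                                   # Laplacian L = -W
    Sigma = np.diag(sigma)                     # pinning matrix diag{sigma_k}
    K = np.block([
        [-I_Nn, I_Nn],
        [-np.kron(I_N, calC), -np.kron(I_N, calD) - alpha * np.kron(Lap + Sigma, Gamma)],
    ])
    return (mu(K, p)
            + l * np.linalg.norm(np.kron(I_N, A_u), p)
            + l * np.linalg.norm(np.kron(I_N, B_u), p))


C, D = 2.0 * np.eye(2), 3.0 * np.eye(2)
A_u = B_u = np.array([[0.10, 0.05], [0.05, 0.10]])
W = np.array([[-2.0, 1.0, 1.0], [1.0, -2.0, 1.0], [1.0, 1.0, -2.0]])  # diffusive ring
sigma = np.array([1.0, 0.0, 0.0])                                     # pin node 1 only
Gamma = np.eye(2)
for p in (1, 2, np.inf):
    print(p, theorem3_margin(C, D, A_u, B_u, 1.0, W, sigma, Gamma, 1.0, p))
```

For this data the margin is negative for p = 1 and p = 2 but equals 0.3 for \(p=\infty\). As with Theorem 1, the first Nn rows of \(\mathcal {K}\) give \(\mu _{\infty }(\mathcal {K})\ge 0\).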

Theorem 4

Under Assumption 1, if there exists a matrix measure \(\mu _{p}(\cdot )(p=1,\infty ,\omega )\) such that

where \(\mathcal {A}=\left( \begin{array}{cc} 0 &\quad 0 \\ I_{N}\otimes \tilde{\tilde{A}} &\quad 0 \end{array}\right)\) and \(L^{*}=diag\{l_{1},l_{2},\ldots ,l_{n},1,1,\ldots ,1\}\). Then the pinning-controlled coupled inertial MNNs (22) are globally exponentially synchronized.

Proof

The proof is similar to that of Theorem 2 and is therefore omitted. \(\square\)

Remark 6

Compared with the pinning synchronization results for coupled inertial delayed neural networks in Hu et al. (2015), our pinning synchronization results apply to systems with a discontinuous right-hand side. Hence, the results in this paper extend those in Hu et al. (2015).

Remark 7

Recently, many researchers have derived synchronization results by constructing suitable Lyapunov–Krasovskii functionals and using linear matrix inequality techniques. Several effective methods, such as the delay decomposition approach, convex combination, the free-weighting matrix approach and inequality techniques, have been explored and developed in the literature; see, for example, Balasubramaniam et al. (2011) and Balasubramaniam and Vembarasan (2012). Moreover, Wang and Shen (2015) investigated the synchronization of memristor-based neural networks with time-varying delays by employing the Newton–Leibniz formula and inequality techniques, and Bao and Cao (2015) dealt with the projective synchronization of fractional-order memristor-based neural networks in the sense of Caputo’s fractional derivative. To the best of our knowledge, memristor-based neural networks have so far been investigated mainly by the Lyapunov–Krasovskii method, whereas the results in this paper are obtained without constructing a Lyapunov–Krasovskii functional, which we regard as an advantage over those results. Another important feature of the derived results is that they rely on the matrix measure and the Halanay inequality.

Remark 8

In practice, resistors are used to model connection weights that emulate synapses in analog implementations of neural networks. Since the memristor has memory and behaves more like a biological synapse, it can be used to develop memristor-based neural network models. Memristive neural networks share characteristics of complex brain networks, such as node degree, distribution and assortativity, at the whole-brain scale of human neuroimaging. With the development of applications and integrated technologies, memristive neural networks have proven to be a promising architecture for neuromorphic systems owing to their high density, non-volatility and unique memristive characteristics. Because of these promising applications, various memristive materials, such as ferroelectric materials, chalcogenide materials and metal oxides, have attracted great attention. Further, several physical mechanisms have been proposed to explain memristive behavior, such as electronic barrier modulation from migration of oxygen vacancies, voltage-controlled domain configuration, formation and annihilation of conducting filaments via diffusion of oxygen vacancies, and trapping of charge carriers and metal ions from electrodes. Motivated by these applications, in this paper we investigate the stability and synchronization of inertial memristive neural networks with time delays.

Numerical simulation

In this section, numerical examples are presented to illustrate the effectiveness and usefulness of the theoretical results.

Example 1

Consider the following inertial MNNs:

where the activation functions are \(f_{j}(x)=\sin (|x|-1)\) for \(j=1,2\), with \(d_{1}=c_{1}=6\), \(d_{2}=c_{2}=8\), \(I_{1}=I_{2}=6\) and \(\tau (t)=\frac{e^{t}}{1+e^{t}}\). The memristive weights of system (25) are given by

To show that the system is globally exponentially stable, we need to verify that \(-(\mu _{p}(\mathbb {G})+l\Vert \tilde{A}\Vert _{p})>l\Vert \tilde{B}\Vert _{p} > 0\), where \(\mathbb {G}=\left( \begin{array}{cc} -I_{n} &\quad I_{n} \\ -\mathcal {C} &\quad -\mathcal {D}\end{array}\right)\).

From the given example we calculate the following,

and \(l_{i}=1\) for \(i=1,2\).

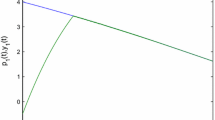

a, b The state trajectories of inertial memristive neural networks of Example 1 with different initial values

When \(p=2\), we have \(\mu _{2}(\mathbb {G})=-1\), \(\Vert \tilde{A}\Vert _{2}=0.0774\) and \(\Vert \tilde{B}\Vert _{2}=0.7799\); hence \(0.9226>0.7799>0\). By choosing \(\xi _{1}=\xi _{2}=2.1\) and \(p=\infty\), we have \(\mu _{\infty }(\mathbb {G})=-1.1\), \(\Vert \tilde{A}\Vert _{\infty }=0.0722\) and \(\Vert \tilde{B}\Vert _{\infty }=0.7863\); hence \(1.0278>0.7863>0\). Figure 1a, b depicts the trajectories of system (25) for different initial values; they converge to the equilibrium, which is consistent with its global exponential stability. Hence, Theorem 1 is verified.
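The values \(\mu _{2}(\mathbb {G})=-1\) and \(\mu _{\infty }(\mathbb {G})=-1.1\) can be reproduced under one assumption about the variable transformation, which is introduced earlier in the paper and not repeated here. This minimal sketch assumes the model \(x_{i}''=-d_{i}x_{i}'-c_{i}x_{i}+\cdots\) and the substitution \(y_{i}=x_{i}'+\xi _{i}x_{i}\), which gives \(\mathbb {G}=\left( \begin{array}{cc} -diag\{\xi _{i}\} & I_{n} \\ -diag\{c_{i}-\xi _{i}(d_{i}-\xi _{i})\} & -diag\{d_{i}-\xi _{i}\}\end{array}\right)\); with \(\xi _{i}=1\) this has the form of \(\mathbb {G}\) displayed above. The weight norms are taken as reported, and the helper names are illustrative.

```python
import numpy as np


def mu_2(M):
    """Measure induced by the 2-norm: largest eigenvalue of (M + M^T) / 2."""
    M = np.asarray(M, dtype=float)
    return float(np.max(np.linalg.eigvalsh((M + M.T) / 2.0)))


def mu_inf(M):
    """Measure induced by the infinity-norm: max_i (m_ii + sum_{j != i} |m_ij|)."""
    M = np.asarray(M, dtype=float)
    off = np.abs(M) - np.diag(np.abs(np.diag(M)))
    return float(np.max(np.diag(M) + off.sum(axis=1)))


def build_G(c, d, xi):
    """Assumed first-order form after y_i = dx_i/dt + xi_i x_i (see lead-in)."""
    c, d, xi = (np.asarray(v, dtype=float) for v in (c, d, xi))
    C_cal = np.diag(c - xi * (d - xi))
    D_cal = np.diag(d - xi)
    return np.block([[-np.diag(xi), np.eye(len(c))], [-C_cal, -D_cal]])


def stability_margin(mu_G, norm_A, norm_B, l=1.0):
    """(k1, k2) for the test k1 = -(mu_p(G) + l ||A||_p) > k2 = l ||B||_p > 0."""
    return -(mu_G + l * norm_A), l * norm_B


c, d = [6.0, 8.0], [6.0, 8.0]                  # c_i, d_i of Example 1
mu2 = mu_2(build_G(c, d, [1.0, 1.0]))          # xi_i = 1: G as displayed in the text
mu_infty = mu_inf(build_G(c, d, [2.1, 2.1]))   # xi_1 = xi_2 = 2.1 as in the text

# Weight norms are the reported values (l_i = 1).
k1_2, k2_2 = stability_margin(mu2, 0.0774, 0.7799)
k1_inf, k2_inf = stability_margin(mu_infty, 0.0722, 0.7863)
print(mu2, mu_infty, k1_2 > k2_2 > 0, k1_inf > k2_inf > 0)
```

Under this assumption, \(\mathcal {C}=I\) and \(\mathcal {D}=diag\{5,7\}\) for \(\xi _{i}=1\), so the symmetric part of \(\mathbb {G}\) is diagonal and \(\mu _{2}(\mathbb {G})=-1\) follows directly.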

Example 2

Consider the following pinned inertial MNNs with 10 nodes:

where \(k=1,2,\ldots ,10\) and \(x_{k}(t)=(x_{k1}(t),x_{k2}(t))^{T} \in \mathbb {R}^{2}\) is the state vector of the kth node. The isolated node of MNNs (26) is given by

where \(s(t)=(s_{1}(t),s_{2}(t))^{T} \in \mathbb {R}^{2}\). Let \(N=10\), \(f(x_{k}(t))=(\tanh (|x_{k1}(t)|-1),\tanh (|x_{k2}(t)|-1))^{T}\), \(I=(0.2, 0.6)^{T}\) and \(\tau (t)=\frac{0.15e^{t}}{1+e^{t}}\). It is then easy to obtain \(l_{k}=1\) and \(\rho =0.0375\). The coefficient matrices are \(C=\left( \begin{array}{cc} 0.2 &\quad 0 \\ 0 &\quad 0.1 \end{array}\right)\), \(D=\left( \begin{array}{cc} 0.9 &\quad 0 \\ 0 &\quad 0.8 \end{array}\right)\), and

From the given example we calculate the following,

The coupling matrix W is determined by the directed topology shown in Fig. 2, from which the pinned nodes are nodes 1 and 9. The inner coupling matrix is \(\Gamma =diag\{6,4\}\), and the coupling strength is chosen as \(\alpha =20\). To show that the coupled system can be synchronized, we verify that \(-(\mu _{p}(\mathcal {K})+l\Vert \tilde{\tilde{A}}\Vert _{p})>l\Vert \tilde{\tilde{B}}\Vert _{p}>0\). Choosing \(p=2\), we have \(\mu _{2}(\mathcal {K})=-0.9805\), \(\Vert \tilde{\tilde{A}}\Vert _{2}=0.7616\) and \(\Vert \tilde{\tilde{B}}\Vert _{2}=0.1023\); hence \(0.2189>0.1023>0\). By Theorem 3, the coupled inertial memristive neural networks are exponentially synchronized. The state trajectories \(x_{k}(t), k=1,2,\ldots ,10\), and the target trajectory s(t) are shown in Fig. 3a and b, respectively, and the synchronization errors \(e_{k}(t), k=1,2,\ldots ,10\), are shown in Fig. 4. Hence, Theorem 3 is verified.
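The constants quoted for Example 2 can be spot-checked numerically. This minimal sketch assumes that \(\rho\) denotes an upper bound on \(\tau '(t)\) and that \(l_{k}\) is a Lipschitz constant of the activation, as presumably defined in Assumption 1 and the model section, which are not restated here. The grid maxima only illustrate the analytic facts \(\tau '(t)=0.15e^{t}/(1+e^{t})^{2}\le 0.15/4\) and \(|\tanh (|a|-1)-\tanh (|b|-1)|\le |a-b|\); they are not proofs. The last lines repeat the arithmetic of the Theorem 3 test with the reported \(\mu _{2}(\mathcal {K})\) and norms. The function names are illustrative.

```python
import math


def tau(t):
    """Delay of Example 2: tau(t) = 0.15 e^t / (1 + e^t), increasing towards 0.15."""
    return 0.15 * math.exp(t) / (1.0 + math.exp(t))


def tau_dot(t):
    """tau'(t) = 0.15 e^t / (1 + e^t)^2, maximal at t = 0 where it equals 0.15 / 4."""
    e = math.exp(t)
    return 0.15 * e / (1.0 + e) ** 2


def f(x):
    """One component of the Example 2 activation, tanh(|x| - 1)."""
    return math.tanh(abs(x) - 1.0)


ts = [i * 1e-3 for i in range(-20000, 20001)]  # grid on [-20, 20], contains t = 0
tau_bar = max(tau(t) for t in ts)
rho = max(tau_dot(t) for t in ts)

# Largest difference quotient of f on a grid: a numerical lower estimate of its Lipschitz constant.
xs = [-3.0 + i * 1e-3 for i in range(6001)]
lip = max(abs(f(b) - f(a)) / (b - a) for a, b in zip(xs, xs[1:]))

# Theorem 3 test with the values reported for Example 2 (p = 2, l = 1).
mu_K, norm_A, norm_B, l = -0.9805, 0.7616, 0.1023, 1.0
k1, k2 = -(mu_K + l * norm_A), l * norm_B
print(tau_bar, rho, lip, round(k1, 4), k1 > k2 > 0)
```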

Remark 9

A coupled complex dynamical network consists of a large number of interconnected nodes, each of which is a dynamical system. Owing to their real-life applications, coupled complex dynamical networks have been studied by many researchers in recent years. In most previous studies, the authors sought a controller under which the coupled network achieves synchronization, i.e., the synchronization error system is asymptotically stable. However, controlling all nodes of a network may be costly and hard to implement. To tackle this issue, pinning control, in which only a selected subset of nodes is controlled, has been adopted to study the synchronization of coupled complex networks.
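Why pinning only a few nodes can suffice is visible in the spectrum of \(L+\Sigma\). In the minimal sketch below, L is assumed to be the Laplacian of an undirected ring on 10 nodes (nonnegative diagonal, zero row sums; the directed topology of Fig. 2 is not reproduced here), and \(\Sigma\) places a hypothetical unit pinning gain on nodes 1 and 9. For a connected undirected graph, L is positive semidefinite with the all-ones vector in its kernel, so coupling alone does not pull the nodes toward the target; pinning at least one node makes \(L+\Sigma\) positive definite. Then \((L+\Sigma )\otimes \Gamma\) is positive definite for \(\Gamma =diag\{6,4\}\), so the coupling block \(-\alpha (L+\Sigma )\otimes \Gamma\) of \(\mathcal {K}\) is negative definite, assuming L enters \(\mathcal {K}\) with this sign convention.

```python
import numpy as np


def ring_laplacian(N):
    """Laplacian (degree matrix minus adjacency) of an undirected ring on N nodes."""
    adj = np.zeros((N, N))
    for k in range(N):
        adj[k, (k + 1) % N] = adj[(k + 1) % N, k] = 1.0
    return np.diag(adj.sum(axis=1)) - adj


N = 10
L = ring_laplacian(N)
Sigma = np.zeros((N, N))
for node in (1, 9):                  # pinned nodes, 1-based as in Example 2
    Sigma[node - 1, node - 1] = 1.0  # hypothetical unit pinning gain

lam_free = np.linalg.eigvalsh(L).min()            # zero: L @ ones = 0
lam_pinned = np.linalg.eigvalsh(L + Sigma).min()  # strictly positive once a node is pinned
Gamma = np.diag([6.0, 4.0])
lam_coupling = np.linalg.eigvalsh(np.kron(L + Sigma, Gamma)).min()
print(lam_free, lam_pinned, lam_coupling)
```

The eigenvalues of a Kronecker product are the pairwise products of the factors' eigenvalues, so the smallest eigenvalue of \((L+\Sigma )\otimes \Gamma\) here is 4 times that of \(L+\Sigma\).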

Remark 10

For \(p=1,\infty ,\omega\), the condition of Theorem 3 is difficult to verify in our example. In Example 1, we verified the stability condition for \(p=\infty\) by introducing the coefficients \(\xi _{i}\) in the variable transformation, but this transformation is not applicable in the synchronization case.
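The role of \(\xi _{i}\) can be illustrated with the same assumed form of \(\mathbb {G}\) as in Example 1 (substitution \(y_{i}=x_{i}'+\xi _{i}x_{i}\); an assumption here, not restated in this section). With \(\xi _{i}=1\), every row of the block \([-I,\ I]\) sums to zero, so \(\mu _{\infty }(\mathbb {G})\ge 0\) and the test \(-(\mu _{\infty }(\mathbb {G})+l\Vert \tilde{A}\Vert _{\infty })>0\) cannot hold; the same block row \([-I_{Nn},\ I_{Nn}]\) appears in \(\mathcal {K}\), which likewise forces \(\mu _{\infty }(\mathcal {K})\ge 0\). The minimal sketch below sweeps a common value \(\xi _{1}=\xi _{2}\) and reports where \(\mu _{\infty }(\mathbb {G})<0\); only \(\mu _{\infty }(\mathbb {G})\) is examined, and the weight norms are left out.

```python
import numpy as np


def mu_inf(M):
    """Measure induced by the infinity-norm: max_i (m_ii + sum_{j != i} |m_ij|)."""
    M = np.asarray(M, dtype=float)
    off = np.abs(M) - np.diag(np.abs(np.diag(M)))
    return float(np.max(np.diag(M) + off.sum(axis=1)))


def build_G(c, d, xi):
    """Assumed transformed matrix for y_i = dx_i/dt + xi_i x_i (see lead-in)."""
    c, d, xi = (np.asarray(v, dtype=float) for v in (c, d, xi))
    return np.block([[-np.diag(xi), np.eye(len(c))],
                     [-np.diag(c - xi * (d - xi)), -np.diag(d - xi)]])


c, d = [6.0, 8.0], [6.0, 8.0]                  # Example 1
mu_at_one = mu_inf(build_G(c, d, [1.0, 1.0]))  # rows of [-I, I] sum to zero

grid = np.arange(0.5, 4.0, 0.01)               # common value xi_1 = xi_2 = x
values = np.array([mu_inf(build_G(c, d, [x, x])) for x in grid])
feasible = grid[values < 0]                    # values of xi with mu_inf(G) < 0
best_xi = float(grid[np.argmin(values)])
print(mu_at_one, feasible.min(), feasible.max(), best_xi, values.min())
```

Under this assumption the feasible common values form the interval \((1,(9-\sqrt{17})/2)\approx (1,2.44)\), which contains the choice \(\xi _{i}=2.1\) of Example 1; \(\mu _{\infty }(\mathbb {G})\) alone is smallest near \(5-2\sqrt{2}\approx 2.17\), and any \(\xi\) in the feasible range may be used as long as the full inequality holds.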

Fig. 4 Synchronization error for Example 2

Conclusion

In this paper, we have studied the global exponential stability of inertial memristive neural networks and the global exponential pinning synchronization of coupled inertial memristive neural networks by using the matrix measure strategy and the Halanay inequality. Matrix measure strategies are used to analyze the closed-loop error system, and two sufficient criteria guaranteeing exponential synchronization are established. Memristor-based models widen the application scope of neural network design. Finally, numerical simulations have been given to demonstrate the effectiveness of our theoretical results. Future work may involve the stability and synchronization analysis of inertial memristive neural networks under different control schemes.

References

Bacciotti A, Rosier L (2005) Lyapunov function and stability in control theory. Springer, Berlin

Balasubramaniam P, Vembarasan V (2012) Synchronization of recurrent neural networks with mixed time-delays via output coupling with delayed feedback. Nonlinear Dyn 70:677–691

Balasubramaniam P, Chandran R, Jeeva Sathya Theesar S (2011) Synchronization of chaotic nonlinear continuous neural networks with time-varying delay. Cogn Neurodyn 5:361–371

Bao HB, Cao J (2015) Projective synchronization of fractional-order memristor-based neural networks. Neural Netw 63:1–9

Benchohra M, Hamani S, Nieto JJ (2010) The method of upper and lower solutions for second order differential inclusions with integral boundary conditions. J Math 40:13–24

Cao J, Wan Y (2014) Matrix measure strategies for stability and synchronization on inertial BAM neural network with time delays. Neural Netw 53:165–172

Cao J, Li P, Wang W (2006) Global synchronization in arrays of delayed neural networks with constant and delayed coupling. Phys Lett A 353:318–325

Cao J, Rakkiyappan R, Maheswari K, Chandrasekar A (2016) Exponential \(H_{\infty }\) filtering analysis for discrete-time switched neural networks with random delays using sojourn probabilities. Sci China Technol Sci 59:387–402

Chandrasekar A, Rakkiyappan R, Cao J, Lakshmanan S (2014) Synchronization of memristor-based recurrent neural networks with two delay components based on second-order reciprocally convex approach. Neural Netw 57:79–93

Chen L, Wu R, Cao J, Liu J (2015) Stability and synchronization of memristor-based fractional-order delayed neural networks. Neural Netw 71:37–44

Chua LO (1971) Memristor—the missing circuit element. IEEE Trans Circuit Theory 18:507–519

Dai A, Zhou W, Xu Y, Xiao C (2016) Adaptive exponential synchronization in mean square for Markovian jumping neutral-type coupled neural networks with time-varying delays by pinning control. Neurocomputing 173:809–818

Hahn W (1967) Stability of motion. Springer, Berlin

Halanay A (1966) Differential equations: stability, oscillations, time lags. Academic Press, New York

He W, Cao J (2009) Exponential synchronization of chaotic neural networks: a matrix measure approach. Nonlinear Dyn 55:55–65

He X, Li C, Shu Y (2012) Bogdanov–Takens bifurcation in a single inertial neuron model with delay. Neurocomputing 89:193–201

Hu J, Cao J, Alofi A, Abdullah AM, Elaiw A (2015) Pinning synchronization of coupled inertial delayed neural networks. Cogn Neurodyn 9:341–350

Jagger DJ, Ashmore JF (1999) The fast activating potassium current, \(I_{k, f}\), in guinea-pig inner hair cells is regulated by protein kinase A. Neurosci Lett 263:145–148

Ke Y, Miao C (2013) Stability analysis of inertial Cohen–Grossberg-type neural networks with time delays. Neurocomputing 117:196–205

Li C, Chen G, Liao X, Yu J (2004) Hopf bifurcation and chaos in a single inertial neuron model with time delay. Eur Phys J B 41:337–343

Liu Q, Liao X, Guo S, Wu Y (2009) Stability of bifurcating periodic solutions for a single delayed inertial neuron model under periodic excitation. Nonlinear Anal Real World Appl 10:2384–2395

Lu J, Ding C, Lou J, Cao J (2015) Outer synchronization of partially coupled dynamical networks via pinning impulsive controllers. J Frankl Inst 352:5024–5041

Ospeck M, Eguiluz VM, Magnasco MO (2001) Evidence of a Hopf bifurcation in frog hair cells. Biophys J 80:2597–2607

Pan L, Cao J, Hu J (2015) Synchronization for complex networks with Markov switching via matrix measure approach. Appl Math Model 39:5636–5649

Qi J, Li C, Huang T (2014) Stability of delayed memristive neural networks with time-varying impulses. Cogn Neurodyn 8:429–436

Rakkiyappan R, Velmurugan G, Cao J (2015) Stability analysis of memristor-based fractional-order neural networks with different memductance functions. Cogn Neurodyn 9:145–177

Strukov DB, Snider GS, Stewart GR, Williams RS (2008) The missing memristor found. Nature 453:80–83

Vidyasagar M (1993) Nonlinear system analysis. Prentice Hall, Englewood Cliffs

Wang L, Shen Y (2015) Design of controller on synchronization of memristor-based neural networks with time-varying delays. Neurocomputing 147:372–379

Wang Y, Cao J, Hu J (2015a) Stochastic synchronization of coupled delayed neural networks with switching topologies via single pinning impulsive control. Neural Comput Appl 26:1739–1749

Wang F, Yang Y, Hu A, Xu X (2015b) Exponential synchronization of fractional-order complex networks via pinning impulsive control. Nonlinear Dyn 82:1979–1987

Wang J, Wu H, Huang T, Ren S (2016) Pinning control strategies for synchronization of linearly coupled neural networks with reaction–diffusion terms. IEEE Trans Neural Netw Learn Syst 27:749–761

Wheeler WD, Schieve WC (1997) Stability and Chaos in an inertial two-neuron system. Phys B 105:267–284

Yang X, Cao J (2012) Adaptive pinning synchronization of coupled neural networks with mixed delays and vector-form stochastic perturbations. Acta Math Sci 32:955–977

Yang X, Cao J (2014) Hybrid adaptive and impulsive synchronization of uncertain complex networks with delays and general uncertain perturbations. Appl Math Comput 227:480–493

Yang X, Cao J, Ho DWC (2015) Exponential synchronization of discontinuous neural networks with time-varying mixed delays via state feedback and impulsive control. Cogn Neurodyn 9:113–128

Yunkuan K, Chunfang M (2012) Stability and existence of periodic solutions in inertial BAM neural networks with time delay. Neural Comput Appl 23:1089–1099

Zhang W, Li C, Huang T, Tan J (2015) Exponential stability of inertial BAM neural networks with time-varying delay via periodically intermittent control. Neural Comput Appl 26:1781–1787


Cite this article

Rakkiyappan, R., Premalatha, S., Chandrasekar, A. et al. Stability and synchronization analysis of inertial memristive neural networks with time delays. Cogn Neurodyn 10, 437–451 (2016). https://doi.org/10.1007/s11571-016-9392-2
