Abstract

Recently, the concept of interval-valued intuitionistic fuzzy parameterized interval-valued intuitionistic fuzzy soft sets (d-sets) has successfully modelled decision-making problems, where the parameters and alternatives have interval-valued intuitionistic fuzzy values. In the present study, to be able to transfer a large number of data in such problems to a computer environment and to process them therein, we define the concept of interval-valued intuitionistic fuzzy parameterized interval-valued intuitionistic fuzzy soft matrices (d-matrices). Moreover, we introduce operations, such as union, intersection, and AND/OR/ANDNOT/ORNOT-products, on this concept and study some of their basic properties. We then configure the state-of-the-art soft decision-making (SDM) method constructed by d-sets to render it operable in d-matrices space. Furthermore, we apply it to a performance-based value assignment (PVA) to the seven noise removal filters to compare their ranking orders. Thereafter, we conduct a comparative analysis of the configured method with five state-of-the-art SDM methods. Finally, we discuss d-matrices for future research.

1 Introduction

Many mathematical tools have been proposed to overcome problems containing uncertainties in the real world. Fuzzy sets (Zadeh 1965) and soft sets (Molodtsov 1999) are among the known mathematical tools. In addition to these, intuitionistic fuzzy sets (Atanassov 1986) and interval-valued intuitionistic fuzzy sets (ivif-sets) (Atanassov 2020; Atanassov and Gargov 1989), being the generalisations of the concept of fuzzy sets, have been propounded. Afterwards, various hybrid versions of these concepts, such as fuzzy soft sets (Maji et al. 2001), fuzzy parameterized soft sets (Çağman et al. 2011a), fuzzy parameterized fuzzy soft sets (Çağman et al. 2010), intuitionistic fuzzy parameterized soft sets (Deli and Çağman 2015), interval-valued intuitionistic fuzzy parameterized soft sets (Deli and Karataş 2016), intuitionistic fuzzy parameterized intuitionistic fuzzy soft sets (Karaaslan 2016), and fuzzy parameterized intuitionistic fuzzy soft sets (Sulukan et al. 2019) have been introduced. So far, the researchers have conducted numerous theoretical and applied studies on these concepts in various fields, such as algebra (Çıtak and Çağman 2015; Senapati and Shum 2019; Sezgin 2016; Sezgin et al. 2019; Ullah et al. 2018), topology (Atmaca 2017; Aydın and Enginoğlu 2021b; Enginoğlu et al. 2015; Riaz and Hashmi 2017; Şenel 2016; Thomas and John 2016), analysis (Molodtsov 2004; Riaz et al. 2018; Şenel 2018), and decision making (Çağman and Enginoğlu 2010b; Çağman et al. 2011b; Garg and Arora 2020; Kumar and Garg 2018; Liu and Jiang 2020; Maji et al. 2002; Memiş and Enginoğlu 2019; Mishra and Rani 2018; Petchimuthu et al. 2020; Xue et al. 2021).

However, when a problem containing uncertainties incorporates a large number of data, the aforesaid set concepts display some time- and complexity-related disadvantages. To cope with these difficulties, Çağman and Enginoğlu (2010a) have defined the concept of soft matrices allowing data in such problems to be transferred to and processed in a computer environment and suggested the soft max-min method. Then, Çağman and Enginoğlu (2012) have presented the concept of fuzzy soft matrices and constructed a soft decision-making (SDM) method. Enginoğlu and Çağman (2020) have propounded the concept of fuzzy parameterized fuzzy soft matrices (fpfs-matrices). Moreover, they have proposed an SDM method called Prevalence Effect Method (PEM) and applied it to a performance-based value assignment (PVA) problem, so that they can order image-denoising filters in terms of noise-removal performance. Afterwards, Enginoğlu et al. (2019a) have offered a novel SDM method constructed with fpfs-matrices and PEM, and applied it to the problem of monolithic columns classification.

Lately, the concept of fpfs-matrices has stood out among others due to its modelling success in decision-making problems, where the alternatives and parameters have fuzzy membership degrees. Therefore, many SDM methods, constructed by its substructures, have been configured in (Aydın and Enginoğlu 2019, 2020; Enginoğlu and Memiş 2018b; Enginoğlu and Öngel 2020; Enginoğlu et al. 2021a, b) to operate them in fpfs-matrices space, faithfully to the original. Some of the configured methods have been applied to PVA problems, and successful results have been obtained (Aydın and Enginoğlu 2019, 2020; Enginoğlu and Öngel 2020). Besides, Enginoğlu and Memiş (2018a, 2018c) and Enginoğlu et al. (2018a, 2018b) have focussed on mathematical simplifications and improvements of some of the configured methods. Memiş et al. (2019) have developed a classification algorithm based on normalised Hamming pseudo-similarity of fpfs-matrices. Further, Memiş et al. (2021b) have proposed a classification algorithm based on the Euclidean pseudo-similarity of fpfs-matrices.

Afterwards, the concept of intuitionistic fuzzy parameterized intuitionistic fuzzy soft matrices (ifpifs-matrices) (Enginoğlu and Arslan 2020) has been introduced to model uncertainties in which the alternatives and parameters have intuitionistic fuzzy values. Furthermore, using this concept, a new SDM method has been proposed and applied to a hypothetical problem concerning the determination of eligible candidates in a recruitment scenario and a real-life problem of image processing. Arslan et al. (2021) have then generalised 24 SDM methods operating in fpfs-matrices space via this concept. Besides, they have suggested five test scenarios to compare the performances of the generalised SDM methods and applied the SDM methods successful in these test scenarios to a PVA problem. In addition, Memiş et al. (2021a) have offered a classifier based on the similarity of ifpifs-matrices and applied this classifier to machine learning.

Recently, to be able to model some problems mathematically in which parameters and alternatives contain serious uncertainties, Aydın and Enginoğlu (2021a) have defined the concept of interval-valued intuitionistic fuzzy parameterized interval-valued intuitionistic fuzzy soft sets (d-sets), which can be regarded as the general form of the concepts of interval-valued intuitionistic fuzzy parameterized soft sets (Deli and Karataş 2016) and interval-valued intuitionistic fuzzy soft sets (Jiang et al. 2010; Min 2008). They then have proposed an SDM method using d-sets and applied it to two decision-making problems concerning the eligibility of candidates for two vacant positions in an online job advertisement and PVA to the known filters used in image denoising. The applications have shown that d-sets can be successfully applied to problems containing further uncertainties. Thus, in decision-making problems where the parameters and alternatives contain multiple measurement results, the ambiguity as to which value to assign to a parameter or an alternative has been clarified. The primary motivation of the present study is to develop effective SDM methods by improving d-sets’ skills in modelling such problems. The second one is to propound a novel mathematical tool to enable data in similar problems, containing both a large number of data and multiple intuitionistic fuzzy measurement results, to be transferred to a computer environment. Thus, it will be possible to use the concept of d-sets effectively.

In the current study, we focus on the concept of ivif-sets, more meaningful and convenient than the others, to minimise data loss when modelling the problem of which value to assign to a parameter or an alternative with multiple fuzzy or intuitionistic fuzzy measurement results. For example, in Section 5, the results of Based on Pixel Density Filter (BPDF) (Erkan and Gökrem 2018) for 20 traditional test images at noise density \(10\%\) are as follows:

We can regard these results as the multiple membership degrees of BPDF herein. Thus, we can obtain the multiple non-membership degrees of BPDF corresponding to these multiple membership degrees using \(\nu _i=1-\mu _i\), for \(i \in \{1,2,\dots ,20\}\). Namely,

We can calculate the membership and non-membership degrees of BPDF in three different ways by availing of the aforesaid values as follows:

1. Using \(\mu \)(BPDF)\( = \frac{1}{20} \sum _{i=1}^{20} \mu _i\), we obtain the degree of BPDF’s membership to a fuzzy set as \(\mu \)(BPDF)\(=0.9792\).

2. By utilising \(\mu \)(BPDF)\(=\min \limits _{i \in I_{20}} {\mu _i}\) and \(\nu \)(BPDF)\(=1-\max \limits _{i \in I_{20}} {\mu _i}\), where \(I_{20}:=\{1,2,\dots ,20\}\), we obtain the degrees of BPDF’s membership and non-membership to an intuitionistic fuzzy set as \(\mu \)(BPDF)\(=0.9657\) and \(\nu \)(BPDF)\(=0.0062\), respectively.

3. By employing \(\mu \)(BPDF)\(=\left[ \frac{\min \limits _{i \in I_{20}}{\mu _{i}}}{\max \limits _{i \in I_{20}}{\mu _{i}} + \max \limits _{i \in I_{20}}{\nu _{i}}} , \frac{\max \limits _{i \in I_{20}}{\mu _{i}}}{\max \limits _{i \in I_{20}}{\mu _{i}} + \max \limits _{i \in I_{20}}{\nu _{i}}} \right] \) and \(\nu \)(BPDF)\(=\left[ \frac{\min \limits _{i \in I_{20}}{\nu _{i}}}{\max \limits _{i \in I_{20}}{\mu _{i}} + \max \limits _{i \in I_{20}}{\nu _{i}}} , \frac{\max \limits _{i \in I_{20}}{\nu _{i}}}{\max \limits _{i \in I_{20}}{\mu _{i}} + \max \limits _{i \in I_{20}}{\nu _{i}}} \right] \), we obtain the degrees of BPDF’s membership and non-membership to an ivif-set as \(\mu \)(BPDF)\(=[0.9392,0.9666]\) and \(\nu \)(BPDF)\(=[0.0060,0.0334]\), respectively.
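The three aggregation rules can be sketched in a few lines of Python. This is only an illustration: the function name `aggregate` and the four scores are hypothetical (they are not the BPDF results of Section 5), and the intuitionistic non-membership degree is taken as \(\min _i \nu _i\), that is \(1-\max _i \mu _i\), which is the reading consistent with the reported value 0.0062.

```python
from statistics import mean


def aggregate(mu):
    """Summarise scores mu_i in [0, 1] as fuzzy, intuitionistic fuzzy, and ivif values."""
    nu = [1 - m for m in mu]                 # nu_i = 1 - mu_i
    fuzzy = mean(mu)                         # way 1: arithmetic mean
    if_value = (min(mu), min(nu))            # way 2: (membership, non-membership)
    d = max(mu) + max(nu)                    # common denominator used in way 3
    ivif = ((min(mu) / d, max(mu) / d),      # membership interval
            (min(nu) / d, max(nu) / d))      # non-membership interval
    return fuzzy, if_value, ivif


# Hypothetical scores, NOT the BPDF results reported in the paper.
scores = [0.97, 0.99, 0.98, 0.96]
fuzzy, if_value, ivif = aggregate(scores)
print(fuzzy, if_value, ivif)
```

Because both upper bounds share the denominator \(\max \mu _i + \max \nu _i\), they always add up to 1, so the result satisfies the ivif condition that the upper bounds sum to at most 1.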

The first case shows that BPDF’s noise-removal performance at noise density \(10\%\) accounts for approximately \(98\%\). The second signifies that BPDF exhibits a success rate of around \(97\%\) and a failure rate of \(1\%\) in noise removal. The last one indicates that the noise-removal success of BPDF ranges from \(94\%\) to \(97\%\) and its failure from 1 to \(3\%\). These comments manifest that membership and non-membership degrees assigned to an alternative in ivif-sets offer more information than fuzzy sets and intuitionistic fuzzy sets do. Hence, we can summarise the significant advantages and contributions of the present study as follows:

- The concept of interval-valued intuitionistic fuzzy parameterized interval-valued intuitionistic fuzzy soft matrices (d-matrices) helps prevent errors arising from manual calculations in SDM methods constructed by d-sets and thus makes it possible to obtain fast and reliable results.

- The concept of d-matrices allows a large number of data and multiple measurement results to be processed by transferring them to a computer environment.

- The concept of d-matrices utilises ivif-values, which contain more information than fuzzy or intuitionistic fuzzy values, to determine the membership and non-membership degrees of parameters and alternatives.

- The pre-processing step of the configured method presents an approach for converting multiple intuitionistic fuzzy measurement results to ivif-values.

On the other hand, the running time of the configured method can be slightly longer than those of the others. This relatively minor drawback results from the computations required to convert multiple intuitionistic fuzzy measurement results to ivif-values. For instance, for d-matrix \([b_{ij}]\) and ifpifs-matrix \([c_{ij}]\) in Sects. 5 and 6, the average running times of the methods (in seconds) over 1000 runs, using MATLAB R2021a and a laptop with 2.5 GHz i5-2450M CPU and 8 GB RAM, are as follows:

The configured method: 0.0063, iMBR01: 0.0011, iMRB02\((I_9)\): 0.0009, iCCE10: 0.0002, iCCE11: 0.0004, and iPEM: 0.0028

Section 2 of the present study provides some of the basic definitions to be employed in the paper’s next sections. Section 3 defines the concept of d-matrices and investigates some of its basic properties. Section 4 configures a state-of-the-art SDM method constructed with d-sets to operate it in d-matrices space. Section 5 applies it to a real-life problem concerning PVA to the known image-denoising filters using the Structural Similarity (SSIM) results of these filters for the images provided in two different databases. Furthermore, the section comments on the ranking orders of the filters. Section 6 compares the ranking performance of the configured method with those of five state-of-the-art SDM methods constructed with ifpifs-matrices by applying them to the same problem. Finally, d-matrices are discussed for further research. This study is a part of the first author’s PhD dissertation (Aydın 2020).

2 Preliminaries

This section first presents several known definitions and propositions. Throughout this paper, let Int([0, 1]) be the set of all closed classical subintervals of [0, 1].

Definition 1

Let \(\gamma _1,\gamma _2 \in Int([0,1])\). For \(\gamma _1:=[\gamma ^-_1,\gamma ^+_1]\) and \(\gamma _2:=[\gamma ^-_2,\gamma ^+_2]\),

i. if \(\gamma ^-_2 \le \gamma ^-_1\) and \(\gamma ^+_1 \le \gamma ^+_2\), then \(\gamma _1\) is called a classical subinterval of \(\gamma _2\) and is denoted by \(\gamma _1 \subseteq \gamma _2\).

ii. if \(\gamma ^-_1 \le \gamma ^-_2\) and \(\gamma ^+_1 \le \gamma ^+_2\), then \(\gamma _1\) is called a subinterval of \(\gamma _2\) and is denoted by \(\gamma _1 {{\tilde{\subseteq }}} \gamma _2\).

iii. if \(\gamma ^-_1 = \gamma ^-_2\) and \(\gamma ^+_1 = \gamma ^+_2\), then \(\gamma _1\) and \(\gamma _2\) are called equal intervals, which is denoted by \(\gamma _1 = \gamma _2\).

Proposition 1

Let \(\gamma _1,\gamma _2 \in Int([0,1])\). Then, \(\gamma _1 {{\tilde{\le }}} \gamma _2\Leftrightarrow \gamma _1 {{\tilde{\subseteq }}} \gamma _2\). Here, “\({{\tilde{\le }}}\)” is a partial order relation over Int([0, 1]).

In the present paper, the least upper bound and greatest lower bound of the elements of the set Int([0, 1]) are obtained from the partial order relation “\({{\tilde{\le }}}\)”.

Definition 2

Let \(\gamma ,\gamma _1,\gamma _2 \in Int({\mathbb {R}})\) and \(c \in \mathbb {R}^{+}\) such that \(\gamma :=[\gamma ^-,\gamma ^+]\), \(\gamma _1:=[\gamma ^-_1,\gamma ^+_1]\), and \(\gamma _2:=[\gamma ^-_2,\gamma ^+_2]\). Then,

i. \(\gamma _1 + \gamma _2 := [\gamma ^-_1 + \gamma ^-_2,\gamma ^+_1 + \gamma ^+_2]\)

ii. \(\gamma _1 - \gamma _2 := [\gamma ^-_1 - \gamma ^+_2,\gamma ^+_1 - \gamma ^-_2]\)

iii. \(\gamma _1 \cdot \gamma _2 := [\min \{\gamma ^-_1\gamma ^-_2,\gamma ^-_1\gamma ^+_2,\gamma ^+_1\gamma ^-_2,\gamma ^+_1\gamma ^+_2\},\max \{\gamma ^-_1\gamma ^-_2,\gamma ^-_1\gamma ^+_2,\gamma ^+_1\gamma ^-_2,\gamma ^+_1\gamma ^+_2\}]\)

iv. \(c \cdot \gamma := [c \cdot \gamma ^-,c \cdot \gamma ^+]\)
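The four operations of Definition 2 translate directly into code. The sketch below assumes an interval is stored as a pair `(lower, upper)`; the function names `add`, `sub`, `mul`, and `scale` are illustrative choices, not notation from the paper.

```python
def add(g1, g2):
    """Interval sum: lower bounds and upper bounds are added separately."""
    return (g1[0] + g2[0], g1[1] + g2[1])


def sub(g1, g2):
    """Interval difference: the lower bound subtracts the other upper bound."""
    return (g1[0] - g2[1], g1[1] - g2[0])


def mul(g1, g2):
    """Interval product: min and max over the four endpoint products."""
    products = [x * y for x in g1 for y in g2]
    return (min(products), max(products))


def scale(c, g):
    """Multiplication by a positive real number c."""
    if c <= 0:
        raise ValueError("c must be a positive real number")
    return (c * g[0], c * g[1])


print(add((1, 2), (3, 5)), sub((1, 2), (3, 5)), mul((-1, 2), (3, 5)), scale(2, (1, 3)))
```

Integer endpoints are used in the usage line only to keep the arithmetic exact; the same functions work for floating-point endpoints.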

Proposition 2

Let \(\gamma _1,\gamma _2 \in Int([0,1])\) such that \(\gamma _1:=[\gamma ^-_1,\gamma ^+_1]\) and \(\gamma _2:=[\gamma ^-_2,\gamma ^+_2]\). Then,

i. \(\sup \{\gamma _1,\gamma _2\}=[\max \{\gamma ^-_1,\gamma ^-_2\},\max \{\gamma ^+_1,\gamma ^+_2\}]\)

ii. \(\inf \{\gamma _1,\gamma _2\}=[\min \{\gamma ^-_1,\gamma ^-_2\},\min \{\gamma ^+_1,\gamma ^+_2\}]\)
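The two subinterval relations of Definition 1 and the bounds of Proposition 2 can be sketched as follows, again assuming intervals are stored as pairs `(lower, upper)`. The function names are hypothetical; `precedes` stands for the relation \({{\tilde{\subseteq }}}\) (equivalently \({{\tilde{\le }}}\)).

```python
def is_classical_subinterval(g1, g2):
    """g1 lies inside g2 in the usual set-theoretic sense."""
    return g2[0] <= g1[0] and g1[1] <= g2[1]


def precedes(g1, g2):
    """Endpoint-wise order: both bounds of g1 are at most those of g2."""
    return g1[0] <= g2[0] and g1[1] <= g2[1]


def sup(g1, g2):
    """Least upper bound with respect to the endpoint-wise order."""
    return (max(g1[0], g2[0]), max(g1[1], g2[1]))


def inf(g1, g2):
    """Greatest lower bound with respect to the endpoint-wise order."""
    return (min(g1[0], g2[0]), min(g1[1], g2[1]))


g1, g2 = (0.2, 0.5), (0.1, 0.7)
print(is_classical_subinterval(g1, g2), precedes(g1, g2), sup(g1, g2), inf(g1, g2))
```

The example pair shows that the two relations differ: `g1` is a classical subinterval of `g2`, yet the two intervals are not comparable in the endpoint-wise order.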

Second, this section presents some of the basic definitions to be used in the paper’s next sections.

Definition 3

(Atanassov and Gargov 1989) Let E be a universal set and \(\kappa \) be a function from E to \(Int([0,1]) \times Int([0,1])\). Then, the set \(\left\{ (x,\kappa (x)) : x \in E \right\} \), being the graph of \(\kappa \), is called an interval-valued intuitionistic fuzzy set (ivif-set) over E.

Here, for all \(x \in E\), \(\kappa (x):=(\alpha (x),\beta (x))\), \(\alpha (x):=[\alpha ^-(x),\alpha ^+(x)]\), and \(\beta (x):=[\beta ^-(x),\beta ^+(x)]\) such that \(\alpha ^+(x)+\beta ^+(x) \le 1\). Moreover, \(\alpha \) and \(\beta \) are called the membership function and the non-membership function of an ivif-set, respectively.

From now on, the set of all the ivif-sets over E is denoted by IVIF(E). In IVIF(E), since the graph\((\kappa )\) and \(\kappa \) generate each other uniquely, the notations are interchangeable. Therefore, as long as it causes no confusion, we denote an ivif-set graph\((\kappa )\) by \(\kappa \). Moreover, we use the notation \({{^{\alpha (x)}_{\beta (x)}}}x\) instead of \((x,\alpha (x),\beta (x))\), for brevity. Thus, we represent an ivif-set over E with \(\kappa :=\left\{ {{^{\alpha (x)}_{\beta (x)}}}x : x \in E \right\} \) .

Note 1

Since \([k,k]:=k\), we use \({^{k}_{t}}x\) instead of \({{^{[k,k]}_{[t,t]}}}x\), for all \(k,t \in [0,1]\). Moreover, we do not display the elements \(^{0}_{1}x\) in an ivif-set.
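A small validity check makes the constraint of Definition 3 and the shorthand of Note 1 concrete. This is a sketch under assumed conventions: an ivif-value is passed as two pairs `(lower, upper)`, a bare number `k` is promoted to `[k, k]`, and the tolerance `tol` is an implementation detail added to absorb floating-point rounding.

```python
def as_interval(value):
    """Promote a number k to the degenerate interval [k, k], as in Note 1."""
    if isinstance(value, (int, float)):
        return (float(value), float(value))
    lo, hi = value
    return (float(lo), float(hi))


def is_ivif_value(alpha, beta, tol=1e-12):
    """Check that alpha and beta are subintervals of [0, 1] with alpha+ + beta+ <= 1."""
    alpha, beta = as_interval(alpha), as_interval(beta)
    inside = all(0.0 <= lo <= hi <= 1.0 for lo, hi in (alpha, beta))
    return inside and alpha[1] + beta[1] <= 1.0 + tol


print(is_ivif_value((0.2, 0.5), (0.1, 0.4)), is_ivif_value((0.2, 0.7), (0.1, 0.4)))
```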

Definition 4

(Aydın and Enginoğlu 2021a) Let U be a universal set, E be a parameter set, \(\kappa \in IVIF(E)\), and f be a function from \(\kappa \) to IVIF(U). Then, the set \(\left\{ \left( {{^{\alpha (x)}_{\beta (x)}}}x,f\left( {{^{\alpha (x)}_{\beta (x)}}}x\right) \right) : x\in E \right\} \), being the graph of f, is called an interval-valued intuitionistic fuzzy parameterized interval-valued intuitionistic fuzzy soft set (d-set) parameterized via E over U (or briefly over U).

Note 2

We do not display the elements \(\left( ^{0}_{1}x,0_{U}\right) \) in a d-set. Here, \(0_{U}\) is the empty ivif-set over U.

Hereinafter, the set of all the d-sets over U is denoted by \(D_E(U)\). In \(D_E(U)\), since the graph(f) and f generate each other uniquely, the notations are interchangeable. Therefore, as long as it causes no confusion, we denote a d-set graph(f) by f.

Example 1

Let \(E=\{x_1,x_2,x_3,x_4\}\) be a parameter set and \(U=\{u_1,u_2,u_3,u_4,u_5\}\) be a universal set. Then,

is a d-set over U. Here, \(1_{U}:=\left\{ {^{1}_{0}}u : u\in U\right\} \).

3 Interval-valued intuitionistic fuzzy parameterized interval-valued intuitionistic fuzzy soft matrices

This section first defines the concept of d-matrices and introduces some of its basic properties. The primary purpose of the present section is to enable a large number of data containing multiple measurement results to be transferred to a computer environment with the help of this concept. The second one is to develop effective SDM methods by improving d-sets’ skills in modelling such cases. To do so, this section focuses on making a theoretical contribution to the concept of soft matrices and defining product operations over d-matrices to use in SDM methods based on group decision making for the subsequent studies. From now on, let E be a parameter set and U be a universal set.

Definition 5

Let \(f \in D_E(U)\). Then, \([a_{ij}]\) is called the d-matrix of f and is defined by

such that for \(i \in \{0,1,2, \cdots \}\) and \(j \in \{1,2,\cdots \}\),

Moreover, if \(|U|=m-1\) and \(|E|=n\), then \([a_{ij}]\) is an \(m \times n\) d-matrix. We represent the entry of a d-matrix \([a_{ij}]\) with \(a_{ij}:={^{\alpha _{ij}}_{\beta _{ij}}}\). It must be noted that for all i and j, \(\alpha _{ij}:=[\alpha ^-_{ij},\alpha ^+_{ij}]\) and \(\beta _{ij}:=[\beta ^-_{ij},\beta ^+_{ij}]\) such that \(\alpha ^+_{ij}+\beta ^+_{ij} \le 1\). In this paper, to avoid any confusion, as needed, the membership and non-membership degrees of \(a_{ij}\), i.e. \(\alpha _{ij}\) and \(\beta _{ij}\), will also be represented by \(\alpha ^a_{ij}\) and \(\beta ^a_{ij}\), respectively. Besides, the set of all the d-matrices parameterized via E over U is denoted by \(D_E[U]\) and \([a_{ij}], [b_{ij}], [c_{ij}] \in D_E[U]\).

The entries of a d-matrix \([a_{ij}]_{m \times n}\) are ivif-values. The entries of its zero-indexed row contain the membership and non-membership degrees of the parameters. For example, the entry \(a_{01}\) indicates the membership and non-membership degrees of the first parameter. Moreover, the entries of its other rows contain the membership and non-membership degrees of an alternative corresponding to each parameter. For instance, the entry \(a_{32}\) signifies the membership and non-membership degrees of the third alternative corresponding to the second parameter.
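The row layout described above suggests a simple in-memory representation. The sketch below is an assumption-laden illustration rather than the paper's construction: a d-set is given as two dictionaries keyed by parameter name (`kappa` for the parameter degrees, `f` for the alternatives' degrees), each ivif-value is a pair of pairs `(alpha, beta)`, and entries that are omitted are filled with \(^{0}_{1}\) in line with Notes 1 and 2. All names and numbers are hypothetical.

```python
EMPTY = ((0.0, 0.0), (1.0, 1.0))  # the ivif-value written as ^0_1


def d_matrix(params, alternatives, kappa, f):
    """Return a (|U|+1) x |E| nested list whose row 0 holds the parameter degrees."""
    row0 = [kappa.get(x, EMPTY) for x in params]
    rows = [[f.get(x, {}).get(u, EMPTY) for x in params] for u in alternatives]
    return [row0] + rows


# Hypothetical d-set with two parameters and two alternatives.
E = ["x1", "x2"]
U = ["u1", "u2"]
kappa = {"x1": ((0.2, 0.5), (0.1, 0.4))}          # x2 is omitted, so it carries ^0_1
f = {"x1": {"u1": ((0.3, 0.6), (0.1, 0.2)),
            "u2": ((1.0, 1.0), (0.0, 0.0))}}
a = d_matrix(E, U, kappa, f)
print(len(a), len(a[0]))
```

With \(|U|=2\) and \(|E|=2\), the result has three rows and two columns, matching the \(m \times n\) convention with \(|U|=m-1\) and \(|E|=n\).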

Example 2

The d-matrix of f provided in Example 1 is as follows:

Definition 6

Let \([a_{ij}] \in D_E[U]\). For all i and j, and for \(\lambda ,\varepsilon \in Int([0,1])\), if \(\alpha _{ij}=\lambda \) and \(\beta _{ij}=\varepsilon \), then \([a_{ij}]\) is called a \((\lambda ,\varepsilon )\)-d-matrix and is denoted by \([^{\lambda }_{\varepsilon }]\). Here, \(\left[ ^{0}_{1}\right] \) is called the empty d-matrix and \(\left[ ^{1}_{0}\right] \) is called the universal d-matrix.

Definition 7

Let \([a_{ij}],[b_{ij}],[c_{ij}] \in D_E[U]\), \(I_{E}:=\{j : x_j \in E\}\), and \(R\subseteq I_{E}\). If

then \([c_{ij}]\) is called Rb-restriction of \([a_{ij}]\) and is denoted by \(\left[ (a_{Rb})_{ij}\right] \).

Briefly, if \([b_{ij}]=\left[ ^{0}_{1}\right] \), then \([{(a_{R})}_{ij}]\) can be used instead of \(\left[ {\left( a_{R^0_1}\right) }_{ij}\right] \) and called R-restriction of \([a_{ij}]\). It is clear that

Example 3

For \(R=\{1,3,4\}\) and \(S=\{1,3\}\), \({R^1_0}\)-restriction and S-restriction of \([a_{ij}]\) provided in Example 2 are as follows:

Definition 8

Let \([a_{ij}],[b_{ij}] \in D_E[U]\). For all i and j, if \(\alpha _{ij}^{a} {\tilde{\le }} \alpha _{ij}^{b}\) and \(\beta _{ij}^{b} {\tilde{\le }} \beta _{ij}^{a}\), then \([a_{ij}]\) is called a submatrix of \([b_{ij}]\) and is denoted by \([a_{ij}] {\tilde{\subseteq }} [b_{ij}]\).

Definition 9

Let \([a_{ij}],[b_{ij}]\in D_E[U]\). For all i and j, if \(\alpha _{ij}^{a}= \alpha _{ij}^{b}\) and \(\beta _{ij}^{a}= \beta _{ij}^{b}\), then \([a_{ij}]\) and \([b_{ij}]\) are called equal d-matrices, which is denoted by \([a_{ij}]=[b_{ij}]\).

Proposition 3

Let \([a_{ij}],[b_{ij}],[c_{ij}] \in D_E[U]\). Then,

i. \([a_{ij}] {\tilde{\subseteq }} \left[ ^{1}_{0}\right] \)

ii. \(\left[ ^{0}_{1}\right] {\tilde{\subseteq }} [a_{ij}]\)

iii. \([a_{ij}]{\tilde{\subseteq }} [a_{ij}]\)

iv. \(([a_{ij}]=[b_{ij}] \wedge [b_{ij}] = [c_{ij}]) \Rightarrow [a_{ij}] = [c_{ij}]\)

v. \(([a_{ij}] {\tilde{\subseteq }} [b_{ij}] \wedge [b_{ij}] {\tilde{\subseteq }} [a_{ij}]) \Leftrightarrow [a_{ij}] = [b_{ij}]\)

vi. \(([a_{ij}] {\tilde{\subseteq }} [b_{ij}] \wedge [b_{ij}] {\tilde{\subseteq }} [c_{ij}]) \Rightarrow [a_{ij}] {\tilde{\subseteq }} [c_{ij}]\)
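The submatrix relation of Definition 8 and several items of Proposition 3 can be checked on small examples. The sketch assumes a d-matrix is a nested list of ivif-values `(alpha, beta)` with each interval stored as a pair; the helper names and the sample entries are hypothetical, and `is_equal` is implemented through item v rather than entry-wise comparison.

```python
def precedes(g1, g2):
    """Endpoint-wise order on intervals."""
    return g1[0] <= g2[0] and g1[1] <= g2[1]


def is_submatrix(a, b):
    """Membership of a is below that of b, and non-membership of b is below that of a."""
    return all(precedes(x[0], y[0]) and precedes(y[1], x[1])
               for row_a, row_b in zip(a, b)
               for x, y in zip(row_a, row_b))


def is_equal(a, b):
    """Equality via mutual inclusion (Proposition 3, item v)."""
    return is_submatrix(a, b) and is_submatrix(b, a)


def constant(alpha, beta, m, n):
    """An m x n d-matrix whose entries all equal (alpha, beta)."""
    return [[(alpha, beta)] * n for _ in range(m)]


a = [[((0.2, 0.5), (0.1, 0.4)), ((0.0, 0.3), (0.5, 0.6))]]
empty = constant((0.0, 0.0), (1.0, 1.0), 1, 2)
universal = constant((1.0, 1.0), (0.0, 0.0), 1, 2)
print(is_submatrix(a, universal), is_submatrix(empty, a), is_submatrix(universal, a))
```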

Definition 10

Let \([a_{ij}],[b_{ij}] \in D_E[U]\). If \([a_{ij}] {\tilde{\subseteq }} [b_{ij}]\) and \([a_{ij}] \ne [b_{ij}]\), then \([a_{ij}]\) is called a proper submatrix of \([b_{ij}]\) and is denoted by \([a_{ij}] {\tilde{\subsetneq }} [b_{ij}]\).

Definition 11

Let \([a_{ij}],[b_{ij}],[c_{ij}] \in D_E[U]\). For all i and j, if \(\alpha _{ij}^{c}=\sup \{\alpha _{ij}^{a}, \alpha _{ij}^{b}\}\) and \(\beta _{ij}^{c}=\inf \{\beta _{ij}^{a}, \beta _{ij}^{b}\}\), then \([c_{ij}]\) is called the union of \([a_{ij}]\) and \([b_{ij}]\) and is denoted by \([a_{ij}] {\tilde{\cup }} [b_{ij}]\).

Definition 12

Let \([a_{ij}],[b_{ij}],[c_{ij}] \in D_E[U]\). For all i and j, if \(\alpha _{ij}^{c}=\inf \{\alpha _{ij}^{a}, \alpha _{ij}^{b}\}\) and \(\beta _{ij}^{c}=\sup \{\beta _{ij}^{a}, \beta _{ij}^{b}\}\), then \([c_{ij}]\) is called the intersection of \([a_{ij}]\) and \([b_{ij}]\) and is denoted by \([a_{ij}] {\tilde{\cap }} [b_{ij}]\).
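Union and intersection act entry by entry, so they are easy to sketch. As before, the representation (nested lists of `(alpha, beta)` pairs of intervals), the function names, and the sample values are assumptions made for illustration only.

```python
def sup(g1, g2):
    return (max(g1[0], g2[0]), max(g1[1], g2[1]))


def inf(g1, g2):
    return (min(g1[0], g2[0]), min(g1[1], g2[1]))


def union(a, b):
    """Entry-wise sup of memberships and inf of non-memberships."""
    return [[(sup(x[0], y[0]), inf(x[1], y[1])) for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def intersection(a, b):
    """Entry-wise inf of memberships and sup of non-memberships."""
    return [[(inf(x[0], y[0]), sup(x[1], y[1])) for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


a = [[((0.2, 0.5), (0.1, 0.4))]]
b = [[((0.3, 0.4), (0.2, 0.3))]]
print(union(a, b), intersection(a, b))
```

For these one-entry matrices the union is \(^{[0.3,0.5]}_{[0.1,0.3]}\) and the intersection is \(^{[0.2,0.4]}_{[0.2,0.4]}\); in both cases the upper bounds still sum to at most 1.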

Proposition 4

Let \([a_{ij}],[b_{ij}],[c_{ij}] \in D_E[U]\). Then,

i. \([a_{ij}] {\tilde{\cup }} [a_{ij}] = [a_{ij}] \) and \( [a_{ij}] {\tilde{\cap }} [a_{ij}] = [a_{ij}]\)

ii. \([a_{ij}] {\tilde{\cup }} \left[ ^{0}_{1}\right] = [a_{ij}]\) and \( [a_{ij}] {\tilde{\cap }} \left[ ^{1}_{0}\right] = [a_{ij}]\)

iii. \([a_{ij}] {\tilde{\cup }} \left[ ^{1}_{0}\right] = \left[ ^{1}_{0}\right] \) and \( [a_{ij}] {\tilde{\cap }} \left[ ^{0}_{1}\right] = \left[ ^{0}_{1}\right] \)

iv. \([a_{ij}] {\tilde{\cup }} [b_{ij}]= [b_{ij}] {\tilde{\cup }} [a_{ij}] \) and \([a_{ij}] {\tilde{\cap }} [b_{ij}]= [b_{ij}] {\tilde{\cap }} [a_{ij}]\)

v. \(([a_{ij}] {\tilde{\cup }} [b_{ij}]){\tilde{\cup }} [c_{ij}]= [a_{ij}] {\tilde{\cup }} ([b_{ij}] {\tilde{\cup }} [c_{ij}])\) and \(([a_{ij}] {\tilde{\cap }} [b_{ij}]) {\tilde{\cap }} [c_{ij}]= [a_{ij}] {\tilde{\cap }} ([b_{ij}] {\tilde{\cap }} [c_{ij}])\)

vi. \([a_{ij}] {\tilde{\cup }} ([b_{ij}] {\tilde{\cap }} [c_{ij}])=([a_{ij}] {\tilde{\cup }} [b_{ij}]) {\tilde{\cap }} ([a_{ij}] {\tilde{\cup }} [c_{ij}])\) and \([a_{ij}] {\tilde{\cap }} ([b_{ij}] {\tilde{\cup }} [c_{ij}])= ([a_{ij}] {\tilde{\cap }} [b_{ij}]) {\tilde{\cup }} ([a_{ij}] {\tilde{\cap }} [c_{ij}])\)

vii. \([a_{ij}] {\tilde{\subseteq }} [b_{ij}] \Rightarrow [a_{ij}] {\tilde{\cup }} [b_{ij}]=[b_{ij}]\) and \([a_{ij}] {\tilde{\subseteq }} [b_{ij}] \Rightarrow [a_{ij}] {\tilde{\cap }} [b_{ij}]=[a_{ij}]\)

Proof

vi. Let \([a_{ij}],[b_{ij}],[c_{ij}] \in D_E[U]\). Then,

$$\begin{aligned} \begin{array}{lll} {[a_{ij}] {\tilde{\cup }} ([b_{ij}]{\tilde{\cap }} [c_{ij}])}&{}=&{} [a_{ij}] {\tilde{\cup }} \left[ ^{\inf \left\{ \alpha _{ij}^{b},\alpha _{ij}^{c}\right\} }_{\sup \left\{ \beta _{ij}^{b},\beta _{ij}^{c}\right\} }\right] \\ &{}=&{} \left[ ^{\sup \left\{ \alpha _{ij}^{a},\inf \left\{ \alpha _{ij}^{b},\alpha _{ij}^{c}\right\} \right\} }_{\inf \left\{ \beta _{ij}^{a},\sup \left\{ \beta _{ij}^{b}, \beta _{ij}^{c}\right\} \right\} }\right] \\ &{}=&{} \left[ ^{\inf \left\{ \sup \left\{ \alpha _{ij}^{a},\alpha _{ij}^{b}\right\} ,\sup \left\{ \alpha _{ij}^{a}, \alpha _{ij}^{c}\right\} \right\} }_{\sup \left\{ \inf \left\{ \beta _{ij}^{a},\beta _{ij}^{b}\right\} ,\inf \left\{ \beta _{ij}^{a},\beta _{ij}^{c}\right\} \right\} }\right] \\ &{}=&{} \left[ ^{\sup \left\{ \alpha _{ij}^{a},\alpha _{ij}^{b}\right\} }_{\inf \left\{ \beta _{ij}^{a},\beta _{ij}^{b}\right\} }\right] {\tilde{\cap }} \left[ ^{\sup \left\{ \alpha _{ij}^{a},\alpha _{ij}^{c}\right\} }_{\inf \left\{ \beta _{ij}^{a},\beta _{ij}^{c}\right\} }\right] \\ &{}=&{} ([a_{ij}] {\tilde{\cup }} [b_{ij}]) {\tilde{\cap }} ([a_{ij}] {\tilde{\cup }} [c_{ij}]) \\ \end{array} \end{aligned}$$

\(\square \)
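The second distributive law in item vi follows by the same pattern. The derivation below is a sketch that mirrors the proof above; its middle step relies on the fact that sup and inf in Int([0, 1]) are computed endpoint-wise with max and min (Proposition 2), which distribute over each other.

$$\begin{aligned} \begin{array}{lll} {[a_{ij}] {\tilde{\cap }} ([b_{ij}]{\tilde{\cup }} [c_{ij}])}&{}=&{} [a_{ij}] {\tilde{\cap }} \left[ ^{\sup \left\{ \alpha _{ij}^{b},\alpha _{ij}^{c}\right\} }_{\inf \left\{ \beta _{ij}^{b},\beta _{ij}^{c}\right\} }\right] \\ &{}=&{} \left[ ^{\inf \left\{ \alpha _{ij}^{a},\sup \left\{ \alpha _{ij}^{b},\alpha _{ij}^{c}\right\} \right\} }_{\sup \left\{ \beta _{ij}^{a},\inf \left\{ \beta _{ij}^{b}, \beta _{ij}^{c}\right\} \right\} }\right] \\ &{}=&{} \left[ ^{\sup \left\{ \inf \left\{ \alpha _{ij}^{a},\alpha _{ij}^{b}\right\} ,\inf \left\{ \alpha _{ij}^{a}, \alpha _{ij}^{c}\right\} \right\} }_{\inf \left\{ \sup \left\{ \beta _{ij}^{a},\beta _{ij}^{b}\right\} ,\sup \left\{ \beta _{ij}^{a},\beta _{ij}^{c}\right\} \right\} }\right] \\ &{}=&{} \left[ ^{\inf \left\{ \alpha _{ij}^{a},\alpha _{ij}^{b}\right\} }_{\sup \left\{ \beta _{ij}^{a},\beta _{ij}^{b}\right\} }\right] {\tilde{\cup }} \left[ ^{\inf \left\{ \alpha _{ij}^{a},\alpha _{ij}^{c}\right\} }_{\sup \left\{ \beta _{ij}^{a},\beta _{ij}^{c}\right\} }\right] \\ &{}=&{} ([a_{ij}] {\tilde{\cap }} [b_{ij}]) {\tilde{\cup }} ([a_{ij}] {\tilde{\cap }} [c_{ij}]) \\ \end{array} \end{aligned}$$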

Example 4

Let \(E=\{x_1,x_2,x_3\}\) and \(U=\{u_1,u_2\}\). Assume that two d-matrices \([a_{ij}]\) and \([b_{ij}]\) are as follows:

Then,

Definition 13

Let \([a_{ij}],[b_{ij}],[c_{ij}] \in D_E[U]\). For all i and j, if \(\alpha _{ij}^{c}=\inf \{\alpha _{ij}^{a},\beta _{ij}^{b}\}\) and \(\beta _{ij}^{c}=\sup \{\beta _{ij}^{a},\alpha _{ij}^{b}\}\), then \([c_{ij}]\) is called the difference between \([a_{ij}]\) and \([b_{ij}]\) and is denoted by \([a_{ij}] {\tilde{\setminus }} [b_{ij}]\).

Proposition 5

Let \([a_{ij}] \in D_E[U]\). Then,

i. \([a_{ij}] {\tilde{\setminus }} \left[ ^{0}_{1}\right] = [a_{ij}]\)

ii. \([a_{ij}] {\tilde{\setminus }} \left[ ^{1}_{0}\right] = \left[ ^{0}_{1}\right] \)

iii. \(\left[ ^{0}_{1}\right] {\tilde{\setminus }} [a_{ij}]= \left[ ^{0}_{1}\right] \)

Note 3

The difference operation is neither associative nor commutative.
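A counterexample built only from the empty and universal d-matrices illustrates this note; it is offered as a sketch that uses nothing beyond Proposition 5. For commutativity, items i and ii give

$$\begin{aligned} \left[ ^{1}_{0}\right] {\tilde{\setminus }} \left[ ^{0}_{1}\right] = \left[ ^{1}_{0}\right] \quad \text {whereas} \quad \left[ ^{0}_{1}\right] {\tilde{\setminus }} \left[ ^{1}_{0}\right] = \left[ ^{0}_{1}\right] \end{aligned}$$

For associativity, items i and ii give

$$\begin{aligned} \left( \left[ ^{1}_{0}\right] {\tilde{\setminus }} \left[ ^{1}_{0}\right] \right) {\tilde{\setminus }} \left[ ^{1}_{0}\right] = \left[ ^{0}_{1}\right] {\tilde{\setminus }} \left[ ^{1}_{0}\right] = \left[ ^{0}_{1}\right] \quad \text {whereas} \quad \left[ ^{1}_{0}\right] {\tilde{\setminus }} \left( \left[ ^{1}_{0}\right] {\tilde{\setminus }} \left[ ^{1}_{0}\right] \right) = \left[ ^{1}_{0}\right] {\tilde{\setminus }} \left[ ^{0}_{1}\right] = \left[ ^{1}_{0}\right] \end{aligned}$$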

Definition 14

Let \([a_{ij}],[b_{ij}] \in D_E[U]\). For all i and j, if \(\alpha _{ij}^{b}=\beta _{ij}^{a}\) and \(\beta _{ij}^{b}=\alpha _{ij}^{a}\), then \([b_{ij}]\) is called the complement of \([a_{ij}]\) and is denoted by \([a_{ij}]^{{\tilde{c}}}\) or \([a^{{\tilde{c}}}_{ij}]\). It is clear that \([a_{ij}]^{{\tilde{c}}}=\left[ ^{1}_{0}\right] {\tilde{\setminus }} [a_{ij}]\).
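Difference and complement can be sketched in the same entry-wise style, which also lets the identity relating the complement to the universal d-matrix be checked numerically. The representation (nested lists of `(alpha, beta)` pairs of intervals), the function names, and the sample entry are hypothetical.

```python
def sup(g1, g2):
    return (max(g1[0], g2[0]), max(g1[1], g2[1]))


def inf(g1, g2):
    return (min(g1[0], g2[0]), min(g1[1], g2[1]))


def difference(a, b):
    """Membership: inf of alpha_a and beta_b; non-membership: sup of beta_a and alpha_b."""
    return [[(inf(x[0], y[1]), sup(x[1], y[0])) for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def complement(a):
    """Swap the membership and non-membership intervals of every entry."""
    return [[(x[1], x[0]) for x in row] for row in a]


a = [[((0.2, 0.5), (0.1, 0.4))]]
universal = [[((1.0, 1.0), (0.0, 0.0))]]
empty = [[((0.0, 0.0), (1.0, 1.0))]]
print(complement(a) == difference(universal, a))
```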

Proposition 6

Let \([a_{ij}],[b_{ij}] \in D_E[U]\). Then,

i. \(([a_{ij}]^{\tilde{c}})^{\tilde{c}}= [a_{ij}]\)

ii. \(\left[ ^{0}_{1}\right] ^{\tilde{c}} = \left[ ^{1}_{0}\right] \)

iii. \([a_{ij}] {\tilde{\setminus }} [b_{ij}]=[a_{ij}] {\tilde{\cap }} [b_{ij}]^{\tilde{c}}\)

iv. \([a_{ij}] {\tilde{\subseteq }} [b_{ij}] \Rightarrow [b_{ij}]^{{\tilde{c}}} {\tilde{\subseteq }} [a_{ij}]^{{\tilde{c}}}\)

Proposition 7

Let \([a_{ij}],[b_{ij}] \in D_E[U]\). Then, the following De Morgan’s laws are valid:

i. \(([a_{ij}] {\tilde{\cup }} [b_{ij}])^{\tilde{c}}= [a_{ij}]^{\tilde{c}} {\tilde{\cap }} [b_{ij}]^{\tilde{c}}\)

ii. \(([a_{ij}] {\tilde{\cap }} [b_{ij}])^{\tilde{c}} = [a_{ij}]^{\tilde{c}} {\tilde{\cup }} [b_{ij}]^{\tilde{c}}\)

Proof

i. Let \([a_{ij}],[b_{ij}] \in D_E[U]\). Then,

$$\begin{aligned} ([a_{ij}] {\tilde{\cup }} [b_{ij}])^{\tilde{c}} = \left[ ^{\sup \{\alpha _{ij}^{a},\alpha _{ij}^{b}\}}_{\inf \{\beta _{ij}^{a},\beta _{ij}^{b}\}}\right] ^{\tilde{c}} = \left[ ^{\inf \{\beta _{ij}^{a},\beta _{ij}^{b}\}}_{\sup \{\alpha _{ij}^{a},\alpha _{ij}^{b}\}}\right] = \left[ ^{\beta _{ij}^{a}}_{\alpha _{ij}^{a}}\right] {\tilde{\cap }}\left[ ^{\beta _{ij}^{b}}_{\alpha _{ij}^{b}}\right] = \left[ a_{ij}\right] ^{\tilde{c}}{\tilde{\cap }} \left[ b_{ij}\right] ^{\tilde{c}}\\ \end{aligned}$$

\(\square \)
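Item ii can be verified in the same way. The chain below is a sketch obtained by exchanging the roles of sup and inf in the proof of item i, using Definitions 11, 12, and 14.

$$\begin{aligned} ([a_{ij}] {\tilde{\cap }} [b_{ij}])^{\tilde{c}} = \left[ ^{\inf \{\alpha _{ij}^{a},\alpha _{ij}^{b}\}}_{\sup \{\beta _{ij}^{a},\beta _{ij}^{b}\}}\right] ^{\tilde{c}} = \left[ ^{\sup \{\beta _{ij}^{a},\beta _{ij}^{b}\}}_{\inf \{\alpha _{ij}^{a},\alpha _{ij}^{b}\}}\right] = \left[ ^{\beta _{ij}^{a}}_{\alpha _{ij}^{a}}\right] {\tilde{\cup }}\left[ ^{\beta _{ij}^{b}}_{\alpha _{ij}^{b}}\right] = \left[ a_{ij}\right] ^{\tilde{c}}{\tilde{\cup }} \left[ b_{ij}\right] ^{\tilde{c}}\\ \end{aligned}$$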

Definition 15

Let \([a_{ij}],[b_{ij}],[c_{ij}] \in D_E[U]\). For all i and j, if

then \([c_{ij}]\) is called symmetric difference between \([a_{ij}]\) and \([b_{ij}]\) and is denoted by \([a_{ij}] {\tilde{\triangle }} [b_{ij}]\).

Proposition 8

Let \([a_{ij}],[b_{ij}] \in D_E[U]\). Then,

i. \([a_{ij}] {\tilde{\triangle }} \left[ ^{0}_{1}\right] = [a_{ij}]\)

ii. \([a_{ij}] {\tilde{\triangle }} \left[ ^{1}_{0}\right] = [a_{ij}]^{\tilde{c}}\)

iii. \([a_{ij}] {\tilde{\triangle }} [b_{ij}]=[b_{ij}] {\tilde{\triangle }} [a_{ij}]\)

Note 4

The symmetric difference operation is not associative.

Example 5

For \([a_{ij}]\) and \([b_{ij}]\) in Example 4, \([a_{ij}] {\tilde{\setminus }} [b_{ij}]\) and \([a_{ij}] {\tilde{\triangle }} [b_{ij}]\) are as follows:

Definition 16

Let \([a_{ij}],[b_{ij}] \in D_E[U]\). If \([a_{ij}] {\tilde{\cap }} [b_{ij}]=\left[ ^{0}_{1}\right] \), then \([a_{ij}]\) and \([b_{ij}]\) are called disjoint.

Definition 17

Let \([a_{ij}]_{m \times {n_1}} \in D_{E_1}[U]\), \([b_{ik}]_{m \times {n_2}} \in D_{E_2}[U]\), and \([c_{ip}]_{m \times {n_1n_2}} \in D_{E_1 \times E_2}[U]\) such that \(p=n_2(j-1)+k\). For all i and p, if \(\alpha _{ip}^{c}=\inf \{\alpha _{ij}^{a},\alpha _{ik}^{b}\}\) and \(\beta _{ip}^{c}=\sup \{\beta _{ij}^{a},\beta _{ik}^{b}\}\), then \([c_{ip}]\) is called the AND-product of \([a_{ij}]\) and \([b_{ik}]\) and is denoted by \([a_{ij}]{\wedge }[b_{ik}]\).

Definition 18

Let \([a_{ij}]_{m \times {n_1}} \in D_{E_1}[U]\), \([b_{ik}]_{m \times {n_2}} \in D_{E_2}[U]\), and \([c_{ip}]_{m \times {n_1n_2}} \in D_{E_1 \times E_2}[U]\) such that \(p=n_2(j-1)+k\). For all i and p, if \(\alpha _{ip}^{c}=\sup \{\alpha _{ij}^{a},\alpha _{ik}^{b}\}\) and \(\beta _{ip}^{c}=\inf \{\beta _{ij}^{a},\beta _{ik}^{b}\}\), then \([c_{ip}]\) is called the OR-product of \([a_{ij}]\) and \([b_{ik}]\) and is denoted by \([a_{ij}]{\vee }[b_{ik}]\).

Definition 19

Let \([a_{ij}]_{m \times {n_1}} \in D_{E_1}[U]\), \([b_{ik}]_{m \times {n_2}} \in D_{E_2}[U]\), and \([c_{ip}]_{m \times {n_1n_2}} \in D_{E_1 \times E_2}[U]\) such that \(p=n_2(j-1)+k\). For all i and p, if \(\alpha _{ip}^{c}=\inf \{\alpha _{ij}^{a},\beta _{ik}^{b}\}\) and \(\beta _{ip}^{c}=\sup \{\beta _{ij}^{a},\alpha _{ik}^{b}\}\), then \([c_{ip}]\) is called the ANDNOT-product of \([a_{ij}]\) and \([b_{ik}]\) and is denoted by \([a_{ij}]{{\overline{\wedge }}}[b_{ik}]\).

Definition 20

Let \([a_{ij}]_{m \times {n_1}} \in D_{E_1}[U]\), \([b_{ik}]_{m \times {n_2}} \in D_{E_2}[U]\), and \([c_{ip}]_{m \times {n_1n_2}} \in D_{E_1 \times E_2}[U]\) such that \(p=n_2(j-1)+k\). For all i and p, if \(\alpha _{ip}^{c}=\sup \{\alpha _{ij}^{a},\beta _{ik}^{b}\}\) and \(\beta _{ip}^{c}=\inf \{\beta _{ij}^{a},\alpha _{ik}^{b}\}\), then \([c_{ip}]\) is called the ORNOT-product of \([a_{ij}]\) and \([b_{ik}]\) and is denoted by \([a_{ij}]{{\underline{\vee }}}[b_{ik}]\).
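The four products of Definitions 17 to 20 share one indexing scheme, so a single routine parameterised by the combination rule covers them all. In this sketch the names `RULES`, `product`, and `complement` and all numerical entries are hypothetical, and a d-matrix is a nested list of `(alpha, beta)` pairs of intervals. With zero-based column indices, the paper's \(p=n_2(j-1)+k\) becomes `n2 * j + k`, which is exactly the order produced by the nested comprehension.

```python
def sup(g1, g2):
    return (max(g1[0], g2[0]), max(g1[1], g2[1]))


def inf(g1, g2):
    return (min(g1[0], g2[0]), min(g1[1], g2[1]))


# Each rule maps two ivif-values x = a_ij and y = b_ik to the entry c_ip.
RULES = {
    "and":    lambda x, y: (inf(x[0], y[0]), sup(x[1], y[1])),
    "or":     lambda x, y: (sup(x[0], y[0]), inf(x[1], y[1])),
    "andnot": lambda x, y: (inf(x[0], y[1]), sup(x[1], y[0])),
    "ornot":  lambda x, y: (sup(x[0], y[1]), inf(x[1], y[0])),
}


def product(a, b, kind):
    """Row-wise product; columns are ordered by j first and then by k."""
    rule = RULES[kind]
    return [[rule(x, y) for x in row_a for y in row_b]
            for row_a, row_b in zip(a, b)]


def complement(a):
    return [[(x[1], x[0]) for x in row] for row in a]


# Hypothetical 2 x 2 and 2 x 3 d-matrices (row 0 holds the parameter degrees).
a = [[((0.2, 0.5), (0.1, 0.4)), ((0.6, 0.7), (0.1, 0.2))],
     [((0.0, 0.3), (0.5, 0.6)), ((0.4, 0.4), (0.3, 0.5))]]
b = [[((0.3, 0.4), (0.2, 0.3)), ((0.1, 0.2), (0.7, 0.8)), ((0.5, 0.9), (0.0, 0.1))],
     [((0.2, 0.2), (0.6, 0.7)), ((0.8, 0.9), (0.0, 0.1)), ((0.3, 0.5), (0.2, 0.4))]]
c = product(a, b, "and")
print(len(c), len(c[0]))
```

On this data the ANDNOT-product coincides with the AND-product of `a` and the complement of `b`, which can be read off from the definitions by substituting the complement's swapped intervals.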

Example 6

For \([a_{ij}]\) and \([b_{ik}]\) in Example 4, \([a_{ij}] {\overline{\wedge }} [b_{ik}]\) is as follows:

Proposition 9

Let \([a_{ij}]_{m \times {n_1}} \in D_{E_1}[U]\), \([b_{ik}]_{m \times {n_2}} \in D_{E_2}[U]\), and \([c_{il}]_{m \times {n_3}} \in D_{E_3}[U]\). Then,

i. \(([a_{ij}]\wedge [b_{ik}])\wedge [c_{il}] = [a_{ij}] \wedge ([b_{ik}] \wedge [c_{il}])\)

ii. \(([a_{ij}]\vee [b_{ik}])\vee [c_{il}] = [a_{ij}] \vee ([b_{ik}] \vee [c_{il}])\)

Proof

i. Let \([a_{ij}]_{m \times {n_1}} \in D_{E_1}[U]\), \([b_{ik}]_{m \times {n_2}} \in D_{E_2}[U]\), \([c_{il}]_{m \times {n_3}} \in D_{E_3}[U]\), \([a_{ij}] \wedge [b_{ik}]=[d_{ip}]\), \([b_{ik}] \wedge [c_{il}]=[e_{ir}]\), \(([a_{ij}] \wedge [b_{ik}]) \wedge [c_{il}]=[f_{is}]\), and \([a_{ij}] \wedge ([b_{ik}] \wedge [c_{il}])=[h_{it}]\). Therefore, \([d_{ip}]_{m \times {n_1n_2}} \in D_{E_1 \times E_2}[U]\), \([e_{ir}]_{m \times {n_2n_3}} \in D_{E_2 \times E_3}[U]\), and \([f_{is}]_{m \times {n_1n_2n_3}},[h_{it}]_{m \times {n_1n_2n_3}} \in D_{E_1 \times E_2 \times E_3}[U]\). By Definition 17, since \(p=n_2(j-1)+k\) and \(s=n_3(p-1)+l\),

$$\begin{aligned} s=n_3n_2(j-1)+n_3(k-1)+l \end{aligned}$$

Similarly, by Definition 17, since \(r=n_3(k-1)+l\) and \(t=n_2n_3(j-1)+r\),

$$\begin{aligned} t=n_2n_3(j-1)+n_3(k-1)+l \end{aligned}$$

Hence, \(s=t\). Moreover, for all i, s, and t, since

$$\begin{aligned} \alpha _{is}^{f}=\inf \{\inf \{\alpha _{ij}^{a},\alpha _{ik}^{b}\},\alpha _{il}^{c}\} \quad \text {and} \quad \beta _{is}^{f}=\sup \{\sup \{\beta _{ij}^{a},\beta _{ik}^{b}\},\beta _{il}^{c}\} \end{aligned}$$

and

$$\begin{aligned} \alpha _{it}^{h}=\inf \{\alpha _{ij}^{a},\inf \{\alpha _{ik}^{b},\alpha _{il}^{c}\}\} \quad \text {and} \quad \beta _{it}^{h}=\sup \{\beta _{ij}^{a},\sup \{\beta _{ik}^{b},\beta _{il}^{c}\}\} \end{aligned}$$

then \(\alpha _{is}^{f}=\alpha _{it}^{h}\) and \(\beta _{is}^{f}=\beta _{it}^{h}\). Thus, \(([a_{ij}] \wedge [b_{ik}]) \wedge [c_{il}]=[a_{ij}] \wedge ([b_{ik}] \wedge [c_{il}])\).

\(\square \)
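The index argument in the proof can be checked numerically. The following Python sketch, with hypothetical function names, enumerates all triples (j, k, l) and compares the column index obtained by bracketing to the left with the one obtained by bracketing to the right.

```python
from itertools import product as cartesian


def left_index(j: int, k: int, l: int, n2: int, n3: int) -> int:
    """Column index s of ([a] AND [b]) AND [c], with 1-based j, k, l."""
    p = n2 * (j - 1) + k
    return n3 * (p - 1) + l


def right_index(j: int, k: int, l: int, n2: int, n3: int) -> int:
    """Column index t of [a] AND ([b] AND [c]), with 1-based j, k, l."""
    r = n3 * (k - 1) + l
    return n2 * n3 * (j - 1) + r


def indices_agree(n1: int, n2: int, n3: int) -> bool:
    """Check s == t for every (j, k, l) and that the indices fill 1..n1*n2*n3."""
    triples = list(cartesian(range(1, n1 + 1), range(1, n2 + 1), range(1, n3 + 1)))
    same = all(left_index(j, k, l, n2, n3) == right_index(j, k, l, n2, n3)
               for j, k, l in triples)
    covered = sorted(left_index(j, k, l, n2, n3) for j, k, l in triples)
    return same and covered == list(range(1, n1 * n2 * n3 + 1))
```

For instance, `indices_agree(2, 3, 4)` inspects all 24 columns of a product over three parameter sets of sizes 2, 3, and 4.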

Proposition 10

Let \([a_{ij}]_{m \times {n_1}} \in D_{E_1}[U]\) and \([b_{ik}]_{m \times {n_2}} \in D_{E_2}[U]\). Then, the following De Morgan’s laws are valid:

i. \(([a_{ij}] \vee [b_{ik}])^{{\tilde{c}}}=[a_{ij}]^{{\tilde{c}}} \wedge [b_{ik}]^{{\tilde{c}}}\)

ii. \(([a_{ij}] \wedge [b_{ik}])^{{\tilde{c}}}=[a_{ij}]^{{\tilde{c}}} \vee [b_{ik}]^{{\tilde{c}}}\)

iii. \(([a_{ij}] \, {{\underline{\vee }}} \, [b_{ik}])^{{\tilde{c}}}=[a_{ij}]^{{\tilde{c}}} \, {{\overline{\wedge }}} \, [b_{ik}]^{{\tilde{c}}}\)

iv. \(([a_{ij}] \, {{\overline{\wedge }}} \, [b_{ik}])^{{\tilde{c}}}=[a_{ij}]^{{\tilde{c}}} \, {{\underline{\vee }}} \, [b_{ik}]^{{\tilde{c}}}\)

Proof

iv. Let \([a_{ij}]_{m \times {n_1}} \in D_{E_1}[U]\) and \([b_{ik}]_{m \times {n_2}} \in D_{E_2}[U]\). Then,

$$\begin{aligned} ([a_{ij}] {{\overline{\wedge }}} [b_{ik}])^{\tilde{c}} = \left[ ^{\inf \{\alpha _{ij}^{a},\beta _{ik}^{b}\}}_{\sup \{\beta _{ij}^{a},\alpha _{ik}^{b}\}}\right] ^{\tilde{c}} = \left[ ^{\sup \{\beta _{ij}^{a},\alpha _{ik}^{b}\}}_{\inf \{\alpha _{ij}^{a},\beta _{ik}^{b}\}}\right] = \left[ a_{ij}\right] ^{\tilde{c}} {{\underline{\vee }}} \left[ b_{ik}\right] ^{\tilde{c}} \end{aligned}$$

\(\square \)
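Laws iii and iv can also be checked entry by entry on randomly drawn values. The Python sketch below is an illustration rather than a proof. It assumes that the complement swaps the membership and non-membership intervals, as the display in the proof indicates, and that inf and sup act endpoint-wise. All names are hypothetical.

```python
import random
from typing import Tuple

Interval = Tuple[float, float]
Entry = Tuple[Interval, Interval]   # (alpha, beta)


def iv_inf(x: Interval, y: Interval) -> Interval:
    return (min(x[0], y[0]), min(x[1], y[1]))


def iv_sup(x: Interval, y: Interval) -> Interval:
    return (max(x[0], y[0]), max(x[1], y[1]))


def complement(x: Entry) -> Entry:
    """Swap membership and non-membership (assumed definition of the complement)."""
    return (x[1], x[0])


def andnot(x: Entry, y: Entry) -> Entry:
    return (iv_inf(x[0], y[1]), iv_sup(x[1], y[0]))


def ornot(x: Entry, y: Entry) -> Entry:
    return (iv_sup(x[0], y[1]), iv_inf(x[1], y[0]))


def random_entry(rng: random.Random) -> Entry:
    """Draw an ivif-value whose upper endpoints sum to at most 1."""
    a_hi = rng.uniform(0.0, 1.0)
    b_hi = rng.uniform(0.0, 1.0 - a_hi)
    return ((rng.uniform(0.0, a_hi), a_hi), (rng.uniform(0.0, b_hi), b_hi))


def de_morgan_holds(trials: int = 1000, seed: int = 0) -> bool:
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = random_entry(rng), random_entry(rng)
        law_iii = complement(ornot(x, y)) == andnot(complement(x), complement(y))
        law_iv = complement(andnot(x, y)) == ornot(complement(x), complement(y))
        if not (law_iii and law_iv):
            return False
    return True
```

Exact equality is safe here because `min` and `max` return one of their arguments unchanged, so no rounding is involved.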

Note 5

The aforesaid products of d-matrices are neither commutative nor distributive over one another. Moreover, the ANDNOT-product and the ORNOT-product are not associative.

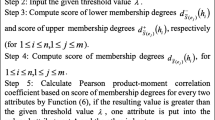

4 The configured soft decision-making method

This section configures the SDM method (Aydın and Enginoğlu 2021a) so that it operates in d-matrices space and can thus be employed in decision-making problems. The configured method models a problem whose parameters and alternatives have multiple intuitionistic fuzzy values. It consists of a pre-processing step and five main steps. In the pre-processing step, the multiple intuitionistic fuzzy values are entered for each parameter and for each alternative with respect to the parameters. In the first main step, a d-matrix is constructed using the membership function, the non-membership function, and the multiple intuitionistic fuzzy values. In the second, a column matrix with ivif-values is obtained by weighting the non-zero-indexed rows of the d-matrix with the zero-indexed one. In the third, a score matrix is obtained by taking the difference between the membership and non-membership values in each entry of this column matrix. In the fourth, an interval-valued fuzzy decision set over the set of alternatives is produced by normalising the score values and translating them to a closed subinterval of [0, 1]. In the final step, the optimal alternatives are selected through the linear ordering relation (Xu and Yager 2006). Henceforth, \(I_{n}=\{1,2,3,\dots ,n\}\) and \(I^{*}_{n}=\{0,1,2,\dots ,n\}\).

Algorithm Steps of the Configured Method

Input Step. Input the values \({\mu ^{ij}_{t}}\) and \({\nu ^{ij}_{t}}\) such that \(i \in I^{*}_{m-1}\), \(j \in I_{n}\), and \(t \in I_{s}\)

Main Steps

Step 1. Construct a d-matrix \([a_{ij}]_{m \times n}\) defined by \(a_{ij}:={^{\alpha ^{a}_{ij}}_{\beta ^{a}_{ij}}}\)

Here, \({\pi ^{ij}_{t}}=1-{\mu ^{ij}_{t}}-{\nu ^{ij}_{t}}\), \(I=\left\{ p : {\mu ^{ij}_{p}}=\max \limits _{t}{\mu ^{ij}_{t}}\right\} \), \(J=\left\{ r : {\nu ^{ij}_{r}}=\max \limits _{t}{\nu ^{ij}_{t}}\right\} \), \(i \in I^{*}_{m-1}\), \(j \in I_{n}\), and \(t \in I_{s}\) such that

$$\begin{aligned} \alpha ^a_{ij}:=\left[ \frac{\min \limits _{t}{\mu ^{ij}_{t}}}{\max \limits _{t}{\mu ^{ij}_{t}} + \max \limits _{t}{\nu ^{ij}_{t}} + \min \left\{ \min \limits _{p \in I} {\pi ^{ij}_{p}},\min \limits _{r \in J} {\pi ^{ij}_{r}}\right\} } , \right. \left. \frac{\max \limits _{t}{\mu ^{ij}_{t}}}{\max \limits _{t}{\mu ^{ij}_{t}} + \max \limits _{t}{\nu ^{ij}_{t}} + \min \left\{ \min \limits _{p \in I} {\pi ^{ij}_{p}},\min \limits _{r \in J} {\pi ^{ij}_{r}}\right\} }\right] \end{aligned}$$

and

$$\begin{aligned} \beta ^a_{ij}:=\left[ \frac{\min \limits _{t}{\nu ^{ij}_{t}}}{\max \limits _{t}{\mu ^{ij}_{t}} + \max \limits _{t}{\nu ^{ij}_{t}} + \min \left\{ \min \limits _{p \in I} {\pi ^{ij}_{p}},\min \limits _{r \in J} {\pi ^{ij}_{r}}\right\} } , \right. \left. \frac{\max \limits _{t}{\nu ^{ij}_{t}}}{\max \limits _{t}{\mu ^{ij}_{t}} + \max \limits _{t}{\nu ^{ij}_{t}} + \min \left\{ \min \limits _{p \in I} {\pi ^{ij}_{p}},\min \limits _{r \in J} {\pi ^{ij}_{r}}\right\} }\right] \end{aligned}$$

Step 2. Obtain the ivif-valued column matrix \(\left[ ^{\alpha _{i1}}_{\beta _{i1}}\right] _{(m-1) \times 1}\) defined by

$$\begin{aligned} \alpha _{i1}:=\frac{1}{\lambda } \sum _{j=1}^{n} \alpha ^{a}_{0j} \alpha ^{a}_{ij} \quad \text {and} \quad \beta _{i1}:=\frac{1}{\lambda } \sum _{j=1}^{n} \beta ^{a}_{0j} \beta ^{a}_{ij} \end{aligned}$$

such that \(i \in I_{m-1}\). Here,

$$\begin{aligned} \lambda :=\frac{1}{2} \sum _{j=1}^{n} \left( 1 + \frac{(\alpha ^{a}_{0j})^{-} + (\alpha ^{a}_{0j})^{+}}{2} - \frac{(\beta ^{a}_{0j})^{-} + (\beta ^{a}_{0j})^{+}}{2}\right) \end{aligned}$$

Step 3. Obtain the score matrix \([s_{i1}]_{(m-1) \times 1}\) defined by \(s_{i1}:=\alpha _{i1}-\beta _{i1}\) such that \(i \in I_{m-1}\)

Step 4. Obtain the decision set \(\{^{d(u_k)}u_k | u_k\in U\}\) such that

$$\begin{aligned} d(u_k)=\left\{ \begin{array}{rl}\left[ \frac{s_{k1}^- + |\min \limits _{i}s_{i1}^-|}{\max \limits _{i}s_{i1}^+ + |\min \limits _{i}s_{i1}^-|},\frac{s_{k1}^+ + |\min \limits _{i}s_{i1}^-|}{\max \limits _{i}s_{i1}^+ + |\min \limits _{i}s_{i1}^-|}\right] , &{} \max \limits _{i}s_{i1}^+ + |\min \limits _{i}s_{i1}^-| \ne 0 \\ {[}1,1],&{} \max \limits _{i}s_{i1}^+ + |\min \limits _{i}s_{i1}^-| = 0 \end{array}\right. \end{aligned}$$

Step 5. Select the optimal elements among the alternatives via the linear ordering relation (Xu and Yager 2006)

$$\begin{aligned}&\left[ \gamma ^{-}_1,\gamma ^{+}_1\right] \le _{_{XY}} \left[ \gamma ^{-}_2,\gamma ^{+}_2\right] \\&\Leftrightarrow \left[ \left( \gamma ^{-}_1 + \gamma ^{+}_1 < \gamma ^{-}_2 + \gamma ^{+}_2 \right) \vee \left( \gamma ^{-}_1 + \gamma ^{+}_1 = \gamma ^{-}_2 + \gamma ^{+}_2 \wedge \gamma ^{-}_1 - \gamma ^{+}_1 \le \gamma ^{-}_2 - \gamma ^{+}_2 \right) \right] \end{aligned}$$

Here, \(\alpha ^{a}_{0j}=[(\alpha ^{a}_{0j})^{-},(\alpha ^{a}_{0j})^{+}]\), \(\beta ^{a}_{0j}=[(\beta ^{a}_{0j})^{-},(\beta ^{a}_{0j})^{+}]\), and \(s_{i1}=[s_{i1}^-,s_{i1}^+]\).
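Step 1 can be illustrated with a short Python sketch that turns the multiple intuitionistic fuzzy values of one matrix entry into a single ivif-value. The function name `ivif_from_if_values` and the sample data are hypothetical, and the code is an illustration of the formulas above rather than the authors' implementation.

```python
from typing import Sequence, Tuple

Interval = Tuple[float, float]


def ivif_from_if_values(mu: Sequence[float],
                        nu: Sequence[float]) -> Tuple[Interval, Interval]:
    """Return (alpha, beta) for one entry from s membership/non-membership pairs."""
    if len(mu) != len(nu) or len(mu) == 0:
        raise ValueError("mu and nu must be non-empty and of equal length")
    pi = [1.0 - m - n for m, n in zip(mu, nu)]            # hesitation degrees
    mu_max, nu_max = max(mu), max(nu)
    idx_mu_max = [p for p, m in enumerate(mu) if m == mu_max]   # the set I
    idx_nu_max = [r for r, n in enumerate(nu) if n == nu_max]   # the set J
    pi_min = min(min(pi[p] for p in idx_mu_max),
                 min(pi[r] for r in idx_nu_max))
    denom = mu_max + nu_max + pi_min                      # common denominator
    alpha = (min(mu) / denom, mu_max / denom)
    beta = (min(nu) / denom, nu_max / denom)
    return alpha, beta


# Hypothetical measurements for a single (i, j) pair.
alpha, beta = ivif_from_if_values([0.6, 0.8], [0.3, 0.1])
# Here pi = [0.1, 0.1] up to rounding, the denominator is about 1.2,
# so alpha is about [0.5, 0.6667] and beta is about [0.0833, 0.25].
```

Dividing both intervals by the same denominator keeps the sum of the upper endpoints of `alpha` and `beta` at or below 1, which an ivif-value requires.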

5 An application of the configured method to performance-based value assignment problem

In this section, we apply the configured method to the PVA problem for seven known filters used in image denoising, namely Based on Pixel Density Filter (BPDF) (Erkan and Gökrem 2018), Modified Decision-Based Unsymmetric Trimmed Median Filter (MDBUTMF) (Esakkirajan et al. 2011), Decision-Based Algorithm (DBAIN) (Srinivasan and Ebenezer 2007), Noise Adaptive Fuzzy Switching Median Filter (NAFSMF) (Toh and Isa 2010), Different Applied Median Filter (DAMF) (Erkan et al. 2018), Adaptive Weighted Mean Filter (AWMF) (Tang et al. 2016), and Adaptive Riesz Mean Filter (ARmF) (Enginoğlu et al. 2019b). Hereinafter, let \(U=\{u_1,u_2,u_3,u_4,u_5,u_6,u_7\}\) be an alternative set such that \(u_1=\) “BPDF”, \(u_2=\) “MDBUTMF”, \(u_3=\) “DBAIN”, \(u_4=\) “NAFSMF”, \(u_5=\) “DAMF”, \(u_6=\) “AWMF”, and \(u_7=\) “ARmF”. Moreover, let \(E=\{x_1,x_2,x_3,x_4,x_5,x_6,x_7,x_8,x_9\}\) be a parameter set determined by a decision-maker such that \(x_1=\) “noise density \(10\%\)”, \(x_2=\) “noise density \(20\%\)”, \(x_3=\) “noise density \(30\%\)”, \(x_4=\) “noise density \(40\%\)”, \(x_5=\) “noise density \(50\%\)”, \(x_6=\) “noise density \(60\%\)”, \(x_7=\) “noise density \(70\%\)”, \(x_8=\) “noise density \(80\%\)”, and \(x_9=\) “noise density \(90\%\)”.

First, we consider 20 traditional test images, i.e. “Lena”, “Cameraman”, “Barbara”, “Baboon”, “Peppers”, “Living Room”, “Lake”, “Plane”, “Hill”, “Pirate”, “Boat”, “House”, “Bridge”, “Elaine”, “Flintstones”, “Flower”, “Parrot”, “Dark-Haired Woman”, “Blonde Woman”, and “Einstein”. Tables 1, 2, 3, and 4 present the noise-removal performance values of the aforesaid filters, measured by Structural Similarity (SSIM) (Wang et al. 2004), for these images at noise densities ranging from \(10\%\) to \(90\%\). We obtained these results using MATLAB R2021a. The SSIM values in the tables show that ARmF performs better than the other filters at all the noise densities and for all the images. However, it is not obvious which filters rank second, third, and so on. Our motivation is to resolve this ranking problem.

For the problem, let \((\mu ^{ij}_{t})\) be an ordered vigintuple such that \(\mu ^{ij}_{t}\) corresponds to the SSIM result in Tables 1, 2, 3, and 4 obtained for the \(t^{th}\) image by the \(i^{th}\) filter at the \(j^{th}\) noise density. Here, since \({\nu ^{ij}_{t}}=1-{\mu ^{ij}_{t}}\) and \({\pi ^{ij}_{t}}=0\) such that \(i \in I_{7}\), \(j \in I_{9}\), and \(t \in I_{20}\), then for d-matrix \([a_{ij}]\),

and

For example, the ordered-vigintuple

indicates SSIM results of DAMF for 20 traditional test images at noise density \(40\%\). Since

and

then \(a_{54}={^{[0.8207,0.9040]}_{[0.0127,0.0960]}}\). Here, [0.8207, 0.9040] signifies that the success of DAMF in image denoising at noise density \(40\%\) ranges from approximately \(82\%\) to \(90\%\). Moreover, [0.0127, 0.0960] means that the rate of DAMF’s failure in image denoising at the same noise density lies approximately between \(1\%\) and \(9\%\). Similarly, all rows of the d-matrix \([a_{ij}]\) except the zero-indexed row can be obtained. Besides, suppose that the noise-removal performances of the filters are more significant at high noise densities, at which noisy pixels outnumber uncorrupted pixels. Then, performance-based success would be more important at high noise densities than at the others. For example, let

Thus, the d-matrix \([a_{ij}]\), modelling the SSIM values provided in Tables 1, 2, 3, and 4, is as follows:

Second, we apply the configured method to \([a_{ij}]\). Moreover, we obtain the results herein by MATLAB R2021a.

Step 2. The column matrix \(\left[ ^{\alpha _{i1}}_{\beta _{i1}}\right] \) is as follows:

$$\begin{aligned} \left[ ^{\alpha _{i1}}_{\beta _{i1}}\right] = \left[ \begin{array}{llll}{^{[0.2061,0.5573]}_{[0.0143,0.1151]}} \,\,\, {^{[0.3256,0.6280]}_{[0.0454,0.1309]}} \,\,\, {^{[0.2769,0.6317]}_{[0.0088,0.0977]}} \,\,\, {^{[0.3142,0.6629]}_{[0.0131,0.1078]}} \,\,\, {^{[0.3708,0.7197]}_{[0.0044,0.0730]}} \,\,\, {^{[0.3747,0.7238]}_{[0.0058,0.0774]}} \,\,\, {^{[0.3805,0.7283]}_{[0.0029,0.0700]}}\end{array} \right] ^{T} \end{aligned}$$

To exemplify, \(\alpha _{11}\) and \(\beta _{11}\) are calculated as follows:

$$\begin{aligned} \begin{array}{rllll} \alpha _{11}&{}=\frac{1}{\lambda } \sum _{j=1}^{9} \alpha ^{a}_{0j} \alpha ^{a}_{1j} \\ &{} =\frac{1}{4.5}\left( \alpha ^{a}_{01} \alpha ^{a}_{11} + \alpha ^{a}_{02} \alpha ^{a}_{12} + \alpha ^{a}_{03} \alpha ^{a}_{13} + \alpha ^{a}_{04} \alpha ^{a}_{14} + \alpha ^{a}_{05} \alpha ^{a}_{15} + \alpha ^{a}_{06} \alpha ^{a}_{16} + \alpha ^{a}_{07} \alpha ^{a}_{17} + \alpha ^{a}_{08} \alpha ^{a}_{18} + \alpha ^{a}_{09} \alpha ^{a}_{19}\right) \\ &{} = \frac{1}{4.5}\left( [0,0.01] \cdot [0.9392,0.9666] + [0,0.05] \cdot [0.8872,0.9368]\right. \\ &{} \quad \left. + [0,0.1] \cdot [0.8145,0.8948] + [0.05,0.35] \cdot [0.7330,0.8465] + [0.2,0.45] \cdot [0.6392,0.7873]\right. \\ &{} \quad + [0.25,0.5] \cdot [0.5210,0.7135] + [0.8,0.85] \cdot [0.3982,0.6263] + [0.85,0.9] \cdot [0.2732,0.5243] \\ &{} \quad \left. + [0.9,0.95] \cdot [0.0909,0.3687]\right) \\ &{} = [0.2061,0.5573] \end{array} \end{aligned}$$

and

$$\begin{aligned} \begin{array}{rl} \beta _{11}=&{}\frac{1}{\lambda } \sum _{j=1}^{9} \beta ^{a}_{0j} \beta ^{a}_{1j} \\ =&{}\frac{1}{4.5}\left( \beta ^{a}_{01} \beta ^{a}_{11} + \beta ^{a}_{02} \beta ^{a}_{12} + \beta ^{a}_{03} \beta ^{a}_{13} + \beta ^{a}_{04} \beta ^{a}_{14} + \beta ^{a}_{05} \beta ^{a}_{15} + \beta ^{a}_{06} \beta ^{a}_{16} + \beta ^{a}_{07} \beta ^{a}_{17} + \beta ^{a}_{08} \beta ^{a}_{18} + \beta ^{a}_{09} \beta ^{a}_{19}\right) \\ = &{} \frac{1}{4.5}\left( [0.9,0.95] \cdot [0.0060,0.0334] + [0.85,0.9] \cdot [0.0135,0.0632]+ [0.8,0.85] \cdot [0.0248,0.1052]\right. \\ &{} \quad + [0.25,0.5] \cdot [0.0399,0.1535] + [0.2,0.45] \cdot [0.0646,0.2127] + [0.05,0.35] \cdot [0.0940,0.2865] \\ &{} \quad + [0,0.1] \cdot [0.1456,0.3737] + [0,0.05] \cdot [0.2245,0.4757] \left. + [0,0.01] \cdot [0.3535,0.6313]\right) \\ =&{} [0.0143,0.1151] \end{array} \end{aligned}$$

such that

$$\begin{aligned} \begin{array}{rl} \lambda =&{}\frac{1}{2} \sum _{j=1}^{9} \left( 1 + \frac{(\alpha ^{a}_{0j})^{-} + (\alpha ^{a}_{0j})^{+}}{2} - \frac{(\beta ^{a}_{0j})^{-} + (\beta ^{a}_{0j})^{+}}{2}\right) \\ =&{}\frac{1}{2} \left( \left( 1 + \frac{(\alpha ^{a}_{01})^{-} + (\alpha ^{a}_{01})^{+}}{2} - \frac{(\beta ^{a}_{01})^{-} + (\beta ^{a}_{01})^{+}}{2}\right) + \left( 1 + \frac{(\alpha ^{a}_{02})^{-} + (\alpha ^{a}_{02})^{+}}{2} - \frac{(\beta ^{a}_{02})^{-} + (\beta ^{a}_{02})^{+}}{2}\right) \right. \\ &{} \left. \quad + \left( 1 + \frac{(\alpha ^{a}_{03})^{-} + (\alpha ^{a}_{03})^{+}}{2} - \frac{(\beta ^{a}_{03})^{-} + (\beta ^{a}_{03})^{+}}{2}\right) +\left( 1 + \frac{(\alpha ^{a}_{04})^{-} + (\alpha ^{a}_{04})^{+}}{2} - \frac{(\beta ^{a}_{04})^{-} + (\beta ^{a}_{04})^{+}}{2}\right) \right. \\ &{} \left. \quad + \left( 1 + \frac{(\alpha ^{a}_{05})^{-} + (\alpha ^{a}_{05})^{+}}{2} - \frac{(\beta ^{a}_{05})^{-} + (\beta ^{a}_{05})^{+}}{2}\right) +\left( 1 + \frac{(\alpha ^{a}_{06})^{-} + (\alpha ^{a}_{06})^{+}}{2} - \frac{(\beta ^{a}_{06})^{-} + (\beta ^{a}_{06})^{+}}{2}\right) \right. \\ &{} \left. \quad + \left( 1 + \frac{(\alpha ^{a}_{07})^{-} + (\alpha ^{a}_{07})^{+}}{2} - \frac{(\beta ^{a}_{07})^{-} + (\beta ^{a}_{07})^{+}}{2}\right) + \left( 1 + \frac{(\alpha ^{a}_{08})^{-} + (\alpha ^{a}_{08})^{+}}{2} - \frac{(\beta ^{a}_{08})^{-} + (\beta ^{a}_{08})^{+}}{2}\right) \right. \\ &{} \left. \quad + \left( 1 + \frac{(\alpha ^{a}_{09})^{-} + (\alpha ^{a}_{09})^{+}}{2} - \frac{(\beta ^{a}_{09})^{-} + (\beta ^{a}_{09})^{+}}{2}\right) \right) \\ =&{}\frac{1}{2} \left[ \left( 1 + \frac{0 + 0.01}{2} - \frac{0.9 + 0.95}{2}\right) + \left( 1 + \frac{0 + 0.05}{2} - \frac{0.85 + 0.9}{2}\right) + \left( 1 + \frac{0 + 0.1}{2} - \frac{0.8 + 0.85}{2}\right) \right. \\ &{} \left. 
\quad + \left( 1 + \frac{0.05 + 0.35}{2} - \frac{0.25 + 0.5}{2}\right) + \left( 1 + \frac{0.2 + 0.45}{2} - \frac{0.2 + 0.45}{2}\right) + \left( 1 + \frac{0.25 + 0.5}{2} - \frac{0.05 + 0.35}{2}\right) \right. \\ &{} \left. \quad + \left( 1 + \frac{0.8 + 0.85}{2} - \frac{0 + 0.1}{2}\right) + \left( 1 + \frac{0.85 +0.9}{2} - \frac{0 + 0.05}{2}\right) + \left( 1 + \frac{0.9 + 0.95}{2} - \frac{0 + 0.01}{2}\right) \right] \\ =&{} 4.5 \end{array} \end{aligned}$$

Step 3. The score matrix is as follows:

$$\begin{aligned} \begin{array}{rl} [s_{i1}]=&{} \left[ {[0.0909,0.5430]} \,\,\,\, {[0.1946,0.5826]} \,\,\,\, {[0.1792,0.6229]} \,\,\,\, {[0.2064,0.6498]} \right. \\ {} &{} \left. \,\,\,\, {[0.2977,0.7152]} \,\,\,\, {[0.2974,0.7181]} \,\,\,\, {[0.3105,0.7254]} \right] ^{T} \end{array} \end{aligned}$$

Here,

$$\begin{aligned} s_{11} = \alpha _{11}-\beta _{11} = [0.2061,0.5573]- [0.0143,0.1151] = [0.0909,0.5430] \end{aligned}$$

Step 4. The decision set is as follows:

$$\begin{aligned} \begin{array}{llll} \left\{ ^{[0.2228,0.7765]}\text {BPDF}, ^{[0.3498,0.8251]}\text {MDBUTMF}, ^{[0.3309,0.8744]}\text {DBAIN}, \right. \\ \,\,\,^{[0.3642,0.9074]}\text {NAFSMF}, \left. ^{[0.4761,0.9875]}\text {DAMF}, ^{[0.4757,0.9910]}\text {AWMF}, ^{[0.4917,1]}\text {ARmF}\right\} \end{array} \end{aligned}$$

Here,

$$\begin{aligned} d(u_1)= & {} \left[ \frac{s_{11}^- + |\min \limits _{i}s_{i1}^-|}{\max \limits _{i}s_{i1}^+ + |\min \limits _{i}s_{i1}^-|},\frac{s_{11}^+ + |\min \limits _{i}s_{i1}^-|}{\max \limits _{i}s_{i1}^+ + |\min \limits _{i}s_{i1}^-|}\right] \\= & {} \left[ \frac{0.0909 + |0.0909|}{0.7254 + |0.0909|},\frac{0.5430 + |0.0909|}{0.7254 + |0.0909|}\right] \\= & {} [0.2228,0.7765] \end{aligned}$$

Step 5. The ranking order

$$\begin{aligned} \text {BPDF} \prec \text {MDBUTMF} \prec \text {DBAIN} \prec \text {NAFSMF} \prec \text {DAMF} \prec \text {AWMF} \prec \text {ARmF} \end{aligned}$$

is valid. Therefore, the performance ranking of the filters shows that ARmF outperforms the other filters.
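The arithmetic of Steps 2 to 5 for the 20 traditional test images can be retraced with the following Python sketch. It is an illustration, not the authors' MATLAB code. The interval values are the four-decimal figures printed in this section, so the results agree with the reported ones only up to rounding. The helper names are hypothetical, and the sketch assumes the usual interval product for non-negative endpoints and the usual interval difference \([x^-,x^+]-[y^-,y^+]=[x^--y^+,x^+-y^-]\), which is consistent with the value of \(s_{11}\) reported in Step 3.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float]


def mul(x: Interval, y: Interval) -> Interval:
    """Interval product, valid for non-negative endpoints."""
    return (x[0] * y[0], x[1] * y[1])


def sub(x: Interval, y: Interval) -> Interval:
    """Interval difference [x- - y+, x+ - y-]."""
    return (x[0] - y[1], x[1] - y[0])


# Zero-indexed (weight) row and BPDF row, copied from the worked example.
W_ALPHA = [(0, 0.01), (0, 0.05), (0, 0.1), (0.05, 0.35), (0.2, 0.45),
           (0.25, 0.5), (0.8, 0.85), (0.85, 0.9), (0.9, 0.95)]
W_BETA = W_ALPHA[::-1]   # in the example, the beta weights mirror the alpha weights
BPDF_ALPHA = [(0.9392, 0.9666), (0.8872, 0.9368), (0.8145, 0.8948),
              (0.7330, 0.8465), (0.6392, 0.7873), (0.5210, 0.7135),
              (0.3982, 0.6263), (0.2732, 0.5243), (0.0909, 0.3687)]
BPDF_BETA = [(0.0060, 0.0334), (0.0135, 0.0632), (0.0248, 0.1052),
             (0.0399, 0.1535), (0.0646, 0.2127), (0.0940, 0.2865),
             (0.1456, 0.3737), (0.2245, 0.4757), (0.3535, 0.6313)]


def weighted(weights: List[Interval], row: List[Interval], lam: float) -> Interval:
    """Step 2: (1 / lambda) * sum of interval products."""
    terms = [mul(w, v) for w, v in zip(weights, row)]
    return (sum(t[0] for t in terms) / lam, sum(t[1] for t in terms) / lam)


lam = 0.5 * sum(1 + (a[0] + a[1]) / 2 - (b[0] + b[1]) / 2
                for a, b in zip(W_ALPHA, W_BETA))          # about 4.5
alpha_11 = weighted(W_ALPHA, BPDF_ALPHA, lam)              # about [0.2061, 0.5573]
beta_11 = weighted(W_BETA, BPDF_BETA, lam)                 # about [0.0143, 0.1151]
s_11 = sub(alpha_11, beta_11)                              # about [0.0909, 0.5430]

# Step 3 output for all seven filters, copied from the score matrix.
SCORES: Dict[str, Interval] = {
    "BPDF": (0.0909, 0.5430), "MDBUTMF": (0.1946, 0.5826),
    "DBAIN": (0.1792, 0.6229), "NAFSMF": (0.2064, 0.6498),
    "DAMF": (0.2977, 0.7152), "AWMF": (0.2974, 0.7181),
    "ARmF": (0.3105, 0.7254),
}


def decision_set(scores: Dict[str, Interval]) -> Dict[str, Interval]:
    """Step 4: shift by |min lower score| and divide by max upper score plus shift."""
    shift = abs(min(s[0] for s in scores.values()))
    denom = max(s[1] for s in scores.values()) + shift
    if denom == 0:
        return {name: (1.0, 1.0) for name in scores}
    return {name: ((s[0] + shift) / denom, (s[1] + shift) / denom)
            for name, s in scores.items()}


decisions = decision_set(SCORES)
# Step 5: ascending order under the Xu-Yager relation (sum first, then lo - hi).
ranking = sorted(decisions, key=lambda n: (decisions[n][0] + decisions[n][1],
                                           decisions[n][0] - decisions[n][1]))
```

Sorting by the pair (sum of endpoints, lower minus upper endpoint) lists the alternatives from worst to best under the ordering relation of Step 5, so the last element of `ranking` is the optimal alternative.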

Third, we consider 40 test images in the TESTIMAGES database (Asuni and Giachetti 2014), i.e. “Almonds”, “Apples”, “Balloons”, “Bananas”, “Billiard Balls 1”, “Billiard Balls 2”, “Building”, “Cards 1”, “Cards 2”, “Carrots”, “Chairs”, “Clips”, “Coins”, “Cushions”, “Duck”, “Fence”, “Flowers”, “Garden Table”, “Guitar Bridge”, “Guitar Fret”, “Guitar Head”, “Keyboard 1”, “Keyboard 2”, “Lion”, “Multimeter”, “Pencils 1”, “Pencils 2”, “Pillar”, “Plastic”, “Roof”, “Scarf”, “Screws”, “Snails”, “Socks”, “Sweets”, “Tomatoes 1”, “Tomatoes 2”, “Tools 1”, “Tools 2”, and “Wood Game”. Tables 5, 6, 7, 8, 9, 10, and 11 present the SSIM results of the aforesaid filters for these images at noise densities ranging from \(10\%\) to \(90\%\). We obtained these results using MATLAB R2021a.

For the problem, let \((\mu ^{ij}_{t})\) be an ordered quadragintuple such that \(\mu ^{ij}_{t}\) corresponds to the SSIM result in Tables 5, 6, 7, 8, 9, 10, and 11 obtained for the \(t^{th}\) image by the \(i^{th}\) filter at the \(j^{th}\) noise density. Here, since \({\nu ^{ij}_{t}}=1-{\mu ^{ij}_{t}}\) and \({\pi ^{ij}_{t}}=0\) such that \(i \in I_{7}\), \(j \in I_{9}\), and \(t \in I_{40}\), then for d-matrix \([b_{ij}]\),

and

For example, the ordered-quadragintuple

indicates SSIM results of BPDF for 40 test images at noise density \(10\%\). Since

and

then \(b_{11}={^{[0.9422,0.9696]}_{[0.0029,0.0304]}}\). Here, [0.9422, 0.9696] denotes that the success of BPDF in image denoising (i.e. correcting corrupted pixels) at noise density \(10\%\) lies approximately between \(94\%\) and \(96\%\). Moreover, [0.0029, 0.0304] means that the rate of BPDF’s failure in image denoising at the same noise density ranges from approximately \(0\%\) to \(3\%\). Similarly, all rows of the d-matrix \([b_{ij}]\) except the zero-indexed row can be obtained. Besides, suppose that the noise-removal performances of the filters are more significant at high noise densities, at which noisy pixels outnumber uncorrupted pixels. Then, performance-based success would be more important at high noise densities than at the others. For example, let

Thus, the d-matrix \([b_{ij}]\), modelling the SSIM values provided in Tables 5, 6, 7, 8, 9, 10, and 11, is as follows:

Finally, we apply the configured method to \([b_{ij}]\). Moreover, we obtain the results herein by MATLAB R2021a.

Step 2. The column matrix \(\left[ ^{\alpha _{i1}}_{\beta _{i1}}\right] \) is as follows:

$$\begin{aligned} \left[ ^{\alpha _{i1}}_{\beta _{i1}}\right] = \left[ \begin{array}{llll}{^{[0.1837,0.5585]}_{[0.0087,0.1125]}} \,\,\, {^{[0.3244,0.6369]}_{[0.0323,0.1490]}} \,\,\, {^{[0.2678,0.6434]}_{[0.0050,0.0924]}}\\ {^{[0.3383,0.6921]}_{[0.0065,0.0948]}}\\ {^{[0.3673,0.7252]}_{[0.0026,0.0723]}} \,\,\, {^{[0.3733,0.7299]}_{[0.0038,0.0755]}} \,\,\, {^{[0.3813,0.7369]}_{[0.0023,0.0623]}} \end{array}\right] ^{T} \end{aligned}$$

Step 3. The score matrix is as follows:

$$\begin{aligned} \begin{array}{rl} [s_{i1}]=&{} \left[ {[0.0712,0.5497]} \,\,\,\, {[0.1754,0.6047]} \,\,\,\, {[0.1753,0.6384]} \,\,\,\, {[0.2436,0.6856]} \right. \\ {} &{} \left. \,\,\,\, {[0.2950,0.7225]} \,\,\,\, {[0.2979,0.7261]} \,\,\,\, {[0.3190,0.7347]} \right] ^{T} \end{array} \end{aligned}$$

Step 4. The decision set is as follows:

$$\begin{aligned} \begin{array}{l} \left\{ ^{[0.1768,0.7705]}\text {BPDF}, ^{[0.3060,0.8387]}\text {MDBUTMF}, ^{[0.3059,0.8805]}\text {DBAIN}, ^{[0.3906,0.9392]}\text {NAFSMF}, \right. \\ \,\,\,\left. ^{[0.4544,0.9849]}\text {DAMF}, ^{[0.4580,0.9894]}\text {AWMF}, ^{[0.4842,1]}\text {ARmF}\right\} \end{array} \end{aligned}$$

Step 5. The ranking order

$$\begin{aligned} \text {BPDF} \prec \text {MDBUTMF} \prec \text {DBAIN} \prec \text {NAFSMF} \prec \text {DAMF} \prec \text {AWMF} \prec \text {ARmF} \end{aligned}$$

is valid. Therefore, the performance ranking of the filters shows that ARmF outperforms the other filters.

6 Comparative analysis

In this section, we compare the configured method with five SDM methods, namely iMBR01, iMRB02\((I_9)\), iCCE10, iCCE11, and iPEM, provided in Arslan et al. (2021). To this end, Table 12 first presents the filters’ ranking orders reported in Arslan et al. (2021), obtained when these methods are applied to the ifpifs-matrix \([a_{ij}]\) (Arslan et al. 2021) constructed from the results in Tables 1, 2, 3, and 4. Second, we construct the ifpifs-matrix \([c_{ij}]\) using the membership and non-membership functions in Arslan et al. (2021) and the filters’ noise-removal performance results provided in Tables 5, 6, 7, 8, 9, 10, and 11. We then apply the five SDM methods to this ifpifs-matrix.

In Tables 13 and 14, we present the decision sets and the noise-removal filters’ ranking orders, respectively, when the five SDM methods are applied to \([c_{ij}]\). We showed in Section 5 that the configured method produces the same ranking order for the filters’ SSIM results obtained with the 20 traditional test images and with the 40 test images at nine noise densities. Thus, the configured method confirms the ranking order provided in Aydın and Enginoğlu (2021a) and those of iCCE10 and iCCE11 in Tables 12 and 14. On the other hand, although iPEM provides the same ranking order as iCCE10 and iCCE11 for the 40 test images, iMBR01, iMRB02\((I_9)\), and iPEM generate different ranking orders for the 20 traditional test images. Consequently, we observe that the configured method is more consistent than iMBR01, iMRB02\((I_9)\), and iPEM. These observations suggest that the SDM method constructed with d-matrices is more advantageous in dealing with problems involving multiple measurement results.

7 Conclusion

In this paper, we defined the concept of d-matrices. Furthermore, we introduced their basic operations and investigated some of their basic properties. We then configured the SDM method (Aydın and Enginoğlu 2021a) to operate in d-matrices space. Moreover, we applied it to two d-matrices constructed with the SSIM results of the known noise-removal filters for 40 test images, provided in the TESTIMAGES database (Asuni and Giachetti 2014), and 20 traditional test images. The results of this application confirmed those available in Aydın and Enginoğlu (2021a). Thus, the configured method enabled problems containing a large amount of data to be processed on a computer. In addition, we applied five state-of-the-art SDM methods constructed with ifpifs-matrices to the same problem and compared the ranking performance of the configured method with those of the five methods.

The results of the present study showed that the configured method can be successfully applied to a decision-making problem containing ivif uncertainties. Therefore, further research should focus on developing effective SDM methods based on group decision making using AND/OR/ANDNOT/ORNOT-products of d-matrices. Moreover, it is possible to render the SDM methods constructed with fpfs-matrices (Enginoğlu and Memiş 2018d, 2020; Enginoğlu et al. 2018a, b, 2019c, d, 2021a) and ifpifs-matrices (Enginoğlu and Arslan 2020) operable in d-matrices space. Furthermore, the membership and non-membership functions used to obtain an ivif-value from multiple intuitionistic fuzzy values can be defined in a different way and used to construct a d-matrix in the first step of the configured method. Thus, these new methods can be applied to the problem featured in the current study, and the results of this process can be compared with those herein. In addition, it is necessary and worthwhile to conduct theoretical and applied studies on varied topics, such as distance and similarity measures, by making use of d-matrices. Researchers can also conduct studies on the various hybrid versions of soft sets and the other generalisations of fuzzy sets, such as hesitant fuzzy sets (Torra 2010), linear Diophantine fuzzy sets (Riaz and Hashmi 2019), spherical linear Diophantine fuzzy sets (Riaz et al. 2021), and picture fuzzy sets (Cuong 2014; Memiş 2021), and their matrices.

References

Arslan B, Aydın T, Memiş S, Enginoğlu S (2021) Generalisations of SDM methods in fpfs-matrices space to render them operable in ifpifs-matrices space and their application to performance ranking of the noise-removal filters. J New Theory (36):88–116. https://doi.org/10.53570/jnt.989335

Asuni N, Giachetti A (2014) TESTIMAGES: a large-scale archive for testing visual devices and basic image processing algorithms. STAG - Smart Tools and Apps for Graphics Conference

Atanassov KT (1986) Intuitionistic fuzzy sets. Fuzzy Sets Syst 20(1):87–96. https://doi.org/10.1016/S0165-0114(86)80034-3

Atanassov KT (2020) Interval-valued intuitionistic fuzzy sets. Studies in Fuzziness and Soft Computing, Springer, New York. https://doi.org/10.1007/978-3-030-32090-4

Atanassov KT, Gargov G (1989) Interval valued intuitionistic fuzzy sets. Fuzzy Sets Syst 31(3):343–349. https://doi.org/10.1016/0165-0114(89)90205-4

Atmaca S (2017) Relationship between fuzzy soft topological spaces and \(({X},\tau _{e})\) parameter spaces. Cumhuriyet Sci J 38(4):77–85. https://doi.org/10.17776/csj.340541

Aydın T (2020) Interval-valued intuitionistic fuzzy parameterized interval-valued intuitionistic fuzzy soft matrices and their application to a performance-based value assignment problem. PhD Dissertation, Çanakkale Onsekiz Mart University, Çanakkale, Turkey, In Turkish

Aydın T, Enginoğlu S (2019) A configuration of five of the soft decision-making methods via fuzzy parameterized fuzzy soft matrices and their application to a performance-based value assignment problem. In: Kılıç M, Özkan K, Karaboyacı M, Taşdelen K, Kandemir H, Beram A (eds) International Conferences on Science and Technology; Natural Science and Technology, Prizren, Kosovo, pp 56–67

Aydın T, Enginoğlu S (2020) Configurations of SDM methods proposed between 1999 and 2012: A follow-up study. In: Yıldırım K (ed) 4th International Conference on Mathematics: ”An Istanbul Meeting for World Mathematicians”, Istanbul, Turkey, pp 192–211

Aydın T, Enginoğlu S (2021a) Interval-valued intuitionistic fuzzy parameterized interval-valued intuitionistic fuzzy soft sets and their application in decision-making. J Ambient Intell Hum Comput 12(1):1541–1558. https://doi.org/10.1007/s12652-020-02227-0

Aydın T, Enginoğlu S (2021b) Some results on soft topological notions. Journal of New Results in Science 10(1):65–75. https://dergipark.org.tr/tr/pub/jnrs/issue/62194/910337

Çağman N, Enginoğlu S (2010) Soft matrix theory and its decision making. Comput Math Appl 59(10):3308–3314. https://doi.org/10.1016/j.camwa.2010.03.015

Çağman N, Enginoğlu S (2010) Soft set theory and uni-int decision making. Eur J Oper Res 207(2):848–855. https://doi.org/10.1016/j.ejor.2010.05.004

Çağman N, Enginoğlu S (2012) Fuzzy soft matrix theory and its application in decision making. Iran J Fuzzy Syst 9(1):109–119. http://ijfs.usb.ac.ir/article_229.html

Çağman N, Çıtak F, Enginoğlu S (2010) Fuzzy parameterized fuzzy soft set theory and its applications. Turk J Fuzzy Syst 1(1):21–35

Çağman N, Çıtak F, Enginoğlu S (2011a) FP-soft set theory and its applications. Ann Fuzzy Math Inf 2(2):219–226. http://www.afmi.or.kr/papers/2011/Vol-02_No-02/AFMI-2-2(219-226)-J-110329R1.pdf

Çağman N, Enginoğlu S, Çıtak F (2011b) Fuzzy soft set theory and its applications. Iran J Fuzzy Syst 8(3):137–147. http://ijfs.usb.ac.ir/article_292.html

Çıtak F, Çağman N (2015) Soft int-rings and its algebraic applications. J Intell Fuzzy Syst 28(3):1225–1233. https://doi.org/10.3233/IFS-141406

Cuong BC (2014) Picture fuzzy sets. J Comput Sci Cybern 30(4):409–420. https://doi.org/10.15625/1813-9663/30/4/5032

Deli I, Çağman N (2015) Intuitionistic fuzzy parameterized soft set theory and its decision making. Appl Soft Comput 28:109–113. https://doi.org/10.1016/j.asoc.2014.11.053

Deli I, Karataş S (2016) Interval valued intuitionistic fuzzy parameterized soft set theory and its decision making. J Intell Fuzzy Syst 30(4):2073–2082. https://doi.org/10.3233/IFS-151920

Enginoğlu S, Arslan B (2020) Intuitionistic fuzzy parameterized intuitionistic fuzzy soft matrices and their application in decision-making. Comput Appl Math Article Number: 325 39(4):1–20. https://doi.org/10.1007/s40314-020-01325-1

Enginoğlu S, Çağman N (2020) Fuzzy parameterized fuzzy soft matrices and their application in decision-making. TWMS J Appl Eng Math 10(4):1105–1115. http://jaem.isikun.edu.tr/web/images/articles/vol.10.no.4/25.pdf

Enginoğlu S, Memiş S (2018a) Comment on fuzzy soft sets [The Journal of Fuzzy Mathematics 9(3), 2001, 589–602]. International Journal of Latest Engineering Research and Applications 3(9):1–9. https://www.ijlera.com/papers/v3-i9/1.201809134.pdf

Enginoğlu S, Memiş S (2018b) A configuration of some soft decision-making algorithms via fpfs-matrices. Cumhuriyet Sci J 39(4):871–881. https://doi.org/10.17776/csj.409915

Enginoğlu S, Memiş S (2018c) A review on an application of fuzzy soft set in multicriteria decision making problem [P. K. Das, R. Borgohain, International Journal of Computer Applications 38 (2012) 33–37]. In: Akgül M, Yılmaz I, İpek A (eds) International Conference on Mathematical Studies and Applications. Karaman, Turkey, pp 173–178

Enginoğlu S, Memiş S (2018d) A review on some soft decision-making methods. In: Akgül M, Yılmaz I, İpek A (eds) International Conference on Mathematical Studies and Applications. Karaman, Turkey, pp 437–442

Enginoğlu S, Memiş S (2020) A new approach to the criteria-weighted fuzzy soft max-min decision-making method and its application to a performance-based value assignment problem. J New Results Sci 9(1):19–36. http://dergipark.org.tr/tr/pub/jnrs/issue/53974/709375

Enginoğlu S, Öngel T (2020) Configurations of several soft decision-making methods to operate in fuzzy parameterized fuzzy soft matrices space. Eskişehir Technical University Journal of Science and Technology A-Applied Sciences and Engineering 21(1):58–71. https://doi.org/10.18038/estubtda.562578

Enginoğlu S, Çağman N, Karataş S, Aydın T (2015) On soft topology. El-Cezerî J Sci Eng 2(3):23–38. https://doi.org/10.31202/ecjse.67135

Enginoğlu S, Memiş S, Arslan B (2018a) Comment (2) on soft set theory and uni-int decision-making [European Journal of Operational Research, (2010) 207, 848–855]. J New Theory (25):84–102. https://dergipark.org.tr/download/article-file/594503

Enginoğlu S, Memiş S, Öngel T (2018b) Comment on soft set theory and uni-int decision-making [European Journal of Operational Research, (2010) 207, 848–855]. J New Results Sci 7(3):28–43. https://dergipark.org.tr/en/pub/jnrs/issue/40346/482909

Enginoğlu S, Ay M, Çağman N, Tolun V (2019a) Classification of the monolithic columns produced in Troad and Mysia Region ancient granite quarries in Northwestern Anatolia via soft decision-making. Bilge Int J Sci Technol Res 3(Special Issue):21–34. https://doi.org/10.30516/bilgesci.646126

Enginoğlu S, Erkan U, Memiş S (2019b) Pixel similarity-based adaptive Riesz mean filter for salt-and-pepper noise removal. Multimed Tools Appl 78:35401–35418. https://doi.org/10.1007/s11042-019-08110-1

Enginoğlu S, Memiş S, Çağman N (2019c) A generalisation of fuzzy soft max-min decision-making method and its application to a performance-based value assignment in image denoising. El-Cezerî J Sci Eng 6(3):466–481. https://doi.org/10.31202/ecjse.551487

Enginoğlu S, Memiş S, Karaaslan F (2019d) A new approach to group decision-making method based on TOPSIS under fuzzy soft environment. J New Results Sci 8(2):42–52. https://dergipark.org.tr/tr/download/article-file/904374

Enginoğlu S, Aydın T, Memiş S, Arslan B (2021a) Operability-oriented configurations of the soft decision-making methods proposed between 2013 and 2016 and their comparisons. J New Theory (34):82–114. https://dergipark.org.tr/en/pub/jnt/issue/61070/896315

Enginoğlu S, Aydın T, Memiş S, Arslan B (2021b) SDM methods’ configurations (2017–2019) and their application to a performance-based value assignment problem: A follow up study. Ann Optim Theory Pract 4(1):41–85. https://doi.org/10.22121/AOTP.2021.287404.1069

Erkan U, Gökrem L (2018) A new method based on pixel density in salt and pepper noise removal. Turk J Electr Eng Comput Sci 26(1):162–171. https://doi.org/10.3906/elk-1705-256

Erkan U, Gökrem L, Enginoğlu S (2018) Different applied median filter in salt and pepper noise. Comput Electr Eng 70:789–798. https://doi.org/10.1016/j.compeleceng.2018.01.019

Esakkirajan S, Veerakumar T, Subramanyam AN, PremChand CH (2011) Removal of high density salt and pepper noise through modified decision based unsymmetric trimmed median filter. IEEE Signal Process Lett 18(5):287–290. https://doi.org/10.1109/LSP.2011.2122333

Garg H, Arora R (2020) TOPSIS method based on correlation coefficient for solving decision-making problems with intuitionistic fuzzy soft set information. AIMS Math 5(4):2944–2966. https://doi.org/10.3934/math.2020190

Jiang Y, Tang Y, Chen Q, Liu H, Tang J (2010) Interval-valued intuitionistic fuzzy soft sets and their properties. Comput Math Appl 60(3):906–918. https://doi.org/10.1016/j.camwa.2010.05.036

Karaaslan F (2016) Intuitionistic fuzzy parameterized intuitionistic fuzzy soft sets with applications in decision making. Ann Fuzzy Math Inf 11(4):607–619. http://www.afmi.or.kr/papers/2016/Vol-11_No-04/PDF/AFMI-11-4(607-619)-H-150813-1R1.pdf

Kumar K, Garg H (2018) TOPSIS method based on the connection number of set pair analysis under interval-valued intuitionistic fuzzy set environment. Comput Appl Math 37(2):1319–1329. https://doi.org/10.1007/s40314-016-0402-0

Liu Y, Jiang W (2020) A new distance measure of interval-valued intuitionistic fuzzy sets and its application in decision making. Soft Comput 24(9):6987–7003. https://doi.org/10.1007/s00500-019-04332-5

Maji PK, Biswas R, Roy AR (2001) Fuzzy soft sets. J Fuzzy Math 9(3):589–602

Maji PK, Roy AR, Biswas R (2002) An application of soft sets in a decision making problem. Comput Math Appl 44(8–9):1077–1083. https://doi.org/10.1016/S0898-1221(02)00216-X

Memiş S (2021) A study on picture fuzzy sets. In: Çuvalcıoğlu G (ed) 7th IFS and Contemporary Mathematics Conference. Mersin, Turkey, pp 125–132

Memiş S, Enginoğlu S (2019) An application of fuzzy parameterized fuzzy soft matrices in data classification. In: Kılıç M, Özkan K, Karaboyacı M, Taşdelen K, Kandemir H, Beram A (eds) International Conferences on Science and Technology; Natural Science and Technology, Prizren, Kosovo, pp 68–77

Memiş S, Enginoğlu S, Erkan U (2019) A data classification method in machine learning based on normalised Hamming pseudo-similarity of fuzzy parameterized fuzzy soft matrices. Bilge Int J Sci Technol Res 3(Special Issue):1–8. https://doi.org/10.30516/bilgesci.643821

Memiş S, Arslan B, Aydın T, Enginoğlu S, Camcı Ç (2021a) A classification method based on Hamming pseudo-similarity of intuitionistic fuzzy parameterized intuitionistic fuzzy soft matrices. J New Results Sci 10(2):59–76. https://dergipark.org.tr/en/pub/jnrs/issue/64701/981326

Memiş S, Enginoğlu S, Erkan U (2021b) Numerical data classification via distance-based similarity measures of fuzzy parameterized fuzzy soft matrices. IEEE Access 9:88583–88601. https://doi.org/10.1109/ACCESS.2021.3089849

Min WK (2008) Interval-valued intuitionistic fuzzy soft sets. J Korean Inst Intell Syst 18(3):316–322. https://doi.org/10.5391/JKIIS.2008.18.3.316

Mishra AR, Rani P (2018) Interval-valued intuitionistic fuzzy WASPAS method: application in reservoir flood control management policy. Group Decis Negot 27(6):1047–1078. https://doi.org/10.1007/s10726-018-9593-7

Molodtsov D (1999) Soft set theory-first results. Comput Math Appl 37(4–5):19–31. https://doi.org/10.1016/S0898-1221(99)00056-5

Molodtsov D (2004) The theory of soft sets. URSS Publishers, Moscow, Russia (in Russian)

Petchimuthu S, Garg H, Kamacı H, Atagün AO (2020) The mean operators and generalized products of fuzzy soft matrices and their applications in MCGDM. Comput Appl Math 39(2):Article Number 68, 1–32. https://doi.org/10.1007/s40314-020-1083-2

Riaz M, Hashmi MR (2017) Fuzzy parameterized fuzzy soft topology with applications. Ann Fuzzy Math Inf 13(5):593–613. https://doi.org/10.30948/afmi.2017.13.5.593

Riaz M, Hashmi MR (2019) Linear Diophantine fuzzy set and its applications towards multi-attribute decision-making problems. J Intell Fuzzy Syst 37(4):5417–5439. https://doi.org/10.3233/JIFS-190550

Riaz M, Hashmi MR, Farooq A (2018) Fuzzy parameterized fuzzy soft metric spaces. J Math Anal 9(2):25–36. http://www.ilirias.com/jma/repository/docs/JMA9-2-3.pdf

Riaz M, Hashmi MR, Pamucar D, Chu Y (2021) Spherical linear Diophantine fuzzy sets with modeling uncertainties in MCDM. Comput Model Eng Sci 126(3):1125–1164. https://doi.org/10.32604/cmes.2021.013699

Senapati T, Shum KP (2019) Atanassov’s interval-valued intuitionistic fuzzy set theory applied in KU-subalgebras. Discr Math Algorithms Appl 11(2):16. https://doi.org/10.1142/S179383091950023X

Şenel G (2016) A new approach to Hausdorff space theory via the soft sets. Mathematical Problems in Engineering 2016:Article ID 2196743, 6 pages. https://doi.org/10.1155/2016/2196743

Şenel G (2018) Analyzing the locus of soft spheres: Illustrative cases and drawings. European Journal of Pure and Applied Mathematics 11(4):946–957. https://doi.org/10.29020/nybg.ejpam.v11i4.3321

Sezgin A (2016) A new approach to semigroup theory I: Soft union semigroups, ideals and bi-ideals. Algebra Letters 2016:Article ID 3, 1–46. http://scik.org/index.php/abl/article/view/2989

Sezgin A, Çağman N, Çıtak F (2019) \(\alpha \)-inclusions applied to group theory via soft set and logic. Communications Faculty of Sciences University of Ankara Series A1 Mathematics and Statistics 68(1):334–352. https://doi.org/10.31801/cfsuasmas.420457

Srinivasan KS, Ebenezer D (2007) A new fast and efficient decision-based algorithm for removal of high density impulse noises. IEEE Signal Process Lett 14:189–192. https://doi.org/10.1109/LSP.2006.884018

Sulukan E, Çağman N, Aydın T (2019) Fuzzy parameterized intuitionistic fuzzy soft sets and their application to a performance-based value assignment problem. J New Theory (29):79–88. https://dergipark.org.tr/tr/download/article-file/906764

Tang Z, Yang Z, Liu K, Pei Z (2016) A new adaptive weighted mean filter for removing high density impulse noise. In: Eighth International Conference on Digital Image Processing (ICDIP 2016), International Society for Optics and Photonics, vol 10033, pp 1003353/1–5. https://doi.org/10.1117/12.2243838

Thomas J, John SJ (2016) A note on soft topology. J New Results Sci 5(11):24–29. https://dergipark.org.tr/tr/pub/jnrs/issue/27287/287227

Toh KKV, Isa NAM (2010) Noise adaptive fuzzy switching median filter for salt-and-pepper noise reduction. IEEE Signal Process Lett 17(3):281–284. https://doi.org/10.1109/LSP.2009.2038769

Torra V (2010) Hesitant fuzzy sets. Int J Intell Syst 25(6):529–539. https://doi.org/10.1002/int.20418

Ullah A, Karaaslan F, Ahmad I (2018) Soft uni-Abel-Grassmann’s groups. European Journal of Pure and Applied Mathematics 11(2):517–536. https://doi.org/10.29020/nybg.ejpam.v11i2.3228

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: From error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612. https://doi.org/10.1109/TIP.2003.819861

Xu Z, Yager RR (2006) Some geometric aggregation operators based on intuitionistic fuzzy sets. Int J Gen Syst 35(4):417–433. https://doi.org/10.1080/03081070600574353

Xue Y, Deng Y, Garg H (2021) Uncertain database retrieval with measure-based belief function attribute values under intuitionistic fuzzy set. Inf Sci 546:436–447. https://doi.org/10.1016/j.ins.2020.08.096

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353. https://doi.org/10.1016/S0019-9958(65)90241-X

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Anibal Tavares de Azevedo.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Aydın, T., Enginoğlu, S. Interval-valued intuitionistic fuzzy parameterized interval-valued intuitionistic fuzzy soft matrices and their application to performance-based value assignment to noise-removal filters. Comp. Appl. Math. 41, 192 (2022). https://doi.org/10.1007/s40314-022-01893-4

DOI: https://doi.org/10.1007/s40314-022-01893-4