Abstract

We propose a class of composite Newton–Jarratt iterative methods of increasing convergence order for approximating solutions of systems of nonlinear equations. The novelty of the methods is that each additional step increases the order of convergence by two at the cost of only one additional function evaluation. Moreover, the use of a single inverse operator per iteration makes the algorithms computationally more efficient. Theoretical results on convergence and computational efficiency are verified on numerical problems, including some arising from boundary value problems. The comparison shows that the new methods are more efficient than their existing counterparts, especially when applied to large systems of equations.



1 Introduction

The construction of fixed-point iterative methods for solving nonlinear equations or systems of nonlinear equations is an interesting and challenging task in numerical analysis and in many applied scientific fields. The importance of this subject has led to the development of many numerical methods, most frequently of an iterative nature (see Ortega and Rheinboldt 1970; Argyros 2007). With the advancement of computer hardware and software, the numerical solution of nonlinear equations has gained additional importance. In this paper, we consider the problem of approximating a solution \(t^*\) of the equation \(\mathrm {F}(t)=0\), where \(\mathrm {F}:\Omega \subset {\mathbb {R}}^m\rightarrow {\mathbb {R}}^m\), by iterative methods of high order of convergence. The solution \(t^*\) can be obtained as a fixed point of some function \(\phi :\Omega \subset {\mathbb {R}}^m\rightarrow {\mathbb {R}}^m\) by means of the fixed-point iteration \(t_{k+1}=\phi (t_k)\), \(k=0,1,2,\ldots \).

There is a variety of iterative methods for solving nonlinear equations. A basic one is the well-known quadratically convergent Newton’s method (Argyros 2007)

\(t_{k+1}=t_k-\mathrm {F'}(t_k)^{-1}\mathrm {F}(t_k),\quad k=0,1,2,\ldots ,\)   (1)

where \( \mathrm {F'}({t})^{-1}\) is the inverse of the Fréchet derivative \(\mathrm {F'}(t)\) of the function \(\mathrm {F}(t).\) This method converges if the initial approximation \(t_0\) is sufficiently close to the solution \(t^*\) and \( \mathrm {F'}({t})^{-1}\) exists in a neighborhood \(\Omega \) of \(t^*.\) To achieve a higher order of convergence, a number of modified Newton or Newton-like methods have been proposed in the literature; see, for example, (Cordero and Torregrosa 2006; Babajee et al. 2010; Behl et al. 2017; Homeier 2004; Darvishi and Barati 2007; Cordero and Torregrosa 2007; Cordero et al. 2010, 2012; Esmaeili and Ahmadi 2015; Noor and Waseem 2009; Alzahrani et al. 2018; Xiao and Yin 2017, 2018; Sharma et al. 2016; Lotfi et al. 2015; Choubey et al. 2018) and the references therein. Throughout this paper, we use \(M_i^{(p)}\) to denote the i-th iterative method of convergence order p.
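As a point of reference for the schemes that follow, Newton’s method (1) for a system can be sketched in Python with NumPy. This is a minimal illustration, not the authors’ Mathematica code: the test system `F_example` (with root (2, 1)), its Jacobian `J_example`, the tolerance and the starting point are hypothetical choices. The correction is obtained by solving \(\mathrm {F'}(t_k)s=\mathrm {F}(t_k)\) rather than by forming the inverse explicitly.

```python
import numpy as np


def newton_system(F, J, t0, tol=1e-12, max_iter=50):
    """Newton's method t_{k+1} = t_k - F'(t_k)^{-1} F(t_k) for F: R^m -> R^m.

    F returns an m-vector and J returns the m x m Jacobian F'(t).
    Returns the approximate root and the number of iterations used.
    """
    t = np.asarray(t0, dtype=float)
    for k in range(max_iter):
        # Solve F'(t_k) s = F(t_k) instead of forming F'(t_k)^{-1} explicitly.
        step = np.linalg.solve(J(t), F(t))
        t = t - step
        if np.linalg.norm(step) < tol:
            return t, k + 1
    raise RuntimeError("Newton's method did not converge within max_iter steps")


# Hypothetical 2 x 2 test system with a root at (2, 1).
def F_example(t):
    return np.array([t[0] ** 2 + t[1] ** 2 - 5.0, t[0] * t[1] - 2.0])


def J_example(t):
    return np.array([[2.0 * t[0], 2.0 * t[1]], [t[1], t[0]]])


root, iterations = newton_system(F_example, J_example, [2.2, 0.8])
```

Each iteration costs one evaluation of F, one of F′ and one linear solve; the methods below keep one factorization per iteration but add cheaper inner steps.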

The principal goal in developing iterative methods is to achieve as high an order of convergence as possible while using as few evaluations as possible, so that the methods are highly efficient. The most obvious barrier to the development of efficient methods is the inversion of a matrix, since it requires lengthy computation. Therefore, it is judicious to use as few such inversions as possible (Argyros and Regmi 2019; Regmi 2021). With these considerations in mind, we propose multi-step iterative methods of increasing order of convergence. First, we present a cubically convergent two-step scheme whose first step is a Jarratt iteration and whose second step is a Newton-like iteration. On the basis of this scheme, a three-step scheme of fifth-order convergence is then proposed. Furthermore, in pursuit of faster convergence, the scheme is generalized to \(q+1\) Newton–Jarratt steps with convergence order \(2q+1\) \((q \in {\mathbb {N}})\). The novel feature is that each additional step increases the order of convergence by two at the cost of only one additional function evaluation. The inverse operator \(\mathrm {F'}({t})^{-1}\) is evaluated only once and remains the same throughout the iteration, which motivates the name ‘methods with frozen inverse operator’.

The rest of the paper is structured as follows. Some basic definitions relevant to the present work are provided in Sect. 2. Section 3 develops the third- and fifth-order methods and analyzes their convergence. The generalized \(q+1\)-step scheme with convergence order \(2q+1\) is presented in Sect. 4. The computational efficiency is discussed and compared with that of existing methods in Sect. 5. In Sect. 6, various numerical examples are considered to confirm the theoretical results. Concluding remarks are given in Sect. 7.

2 Basic definitions

2.1 Order of convergence

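Let \(\{t_k\}_{k\ge 0}\) be a sequence in \({\mathbb {R}}^m\) which converges to \(t^*\). Then the convergence is said to be of order p, \(p > 1,\) if there exist \(M>0\) and \(k_0\) such that

\(\Vert t_{k+1}-t^*\Vert \le M\Vert t_k-t^*\Vert ^p \quad \text {for all } k\ge k_0,\)

or, equivalently,

\(\Vert \epsilon _{k+1}\Vert \le M\Vert \epsilon _k\Vert ^p \quad \text {for all } k\ge k_0,\)

where \(\epsilon _k = t_k - t^*\). The convergence is called linear if \(p = 1\) and there exists M such that \(0< M < 1\).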

2.2 Error equation

Let \(\epsilon _k = t_k - t^*\) be the error in the k-th iteration. We call the relation

\(\epsilon _{k+1}=L\epsilon _k^{p}+O(\epsilon _k^{p+1})\)

the error equation. Here, p is the order of convergence and L is a p-linear function, i.e. \(L\in {\mathcal {L}}({\mathbb {R}}^m \times \cdots \times {\mathbb {R}}^m, {\mathbb {R}}^m )\) with p copies of \({\mathbb {R}}^m\), where \({\mathcal {L}}\) denotes the set of bounded linear functions.

2.3 Computational order of convergence

Let \(t^*\) be a zero of the function \(\mathrm {F}\) and suppose that \(t_{n-1}\), \(t_{n}\), \(t_{n+1}\) and \(t_{n+2}\) are four consecutive iterates close to \(t^*.\) Then the computational order of convergence (COC) can be approximated using the formula (see Weerakoon and Fernando 2000)
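As a sketch of how such an estimate is computed, the function below uses a common difference-based variant that needs only the four consecutive iterates and not \(t^*\): \(\rho \approx \ln (\Vert t_{n+2}-t_{n+1}\Vert /\Vert t_{n+1}-t_n\Vert )/\ln (\Vert t_{n+1}-t_n\Vert /\Vert t_n-t_{n-1}\Vert )\). Whether this is exactly the formula used in the paper is an assumption; the original COC of Weerakoon and Fernando is written in terms of the errors \(t_k-t^*\). The function name `acoc` is hypothetical.

```python
import numpy as np


def acoc(iterates):
    """Approximated computational order of convergence from the last four iterates.

    rho = ln(||t3 - t2|| / ||t2 - t1||) / ln(||t2 - t1|| / ||t1 - t0||),
    where t0, ..., t3 are four consecutive iterates; t* is not needed.
    Works for scalars and for vectors (Euclidean norm).
    """
    if len(iterates) < 4:
        raise ValueError("need at least four iterates")
    t = [np.atleast_1d(np.asarray(x, dtype=float)) for x in iterates[-4:]]
    d = [np.linalg.norm(t[i + 1] - t[i]) for i in range(3)]
    return float(np.log(d[2] / d[1]) / np.log(d[1] / d[0]))
```

For iterates whose errors behave like \(10^{-2^k}\) the estimate is close to 2, and for \(10^{-3^k}\) close to 3. With the tolerance \(10^{-100}\) of Sect. 6 the iterates must be stored in high precision for the estimate to remain meaningful; in double precision it is reliable only before the iterates reach rounding level.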

2.4 Computational efficiency

Computational efficiency of an iterative method is measured by the efficiency index \(E = p^{1/C}\) or \(E=\frac{\log p}{C}\) (see Ostrowski 1960), where p is the order of convergence and C is the computational cost per iteration.

3 Formulation of basic methods

In what follows, we first introduce the basic third- and fifth-order iterative methods. We call them basic methods since they pave the way for the generalized algorithm presented in the next section.

3.1 Third-order scheme

Our aim is to develop a method that accelerates the convergence of Newton’s method (1) using a minimum number of function evaluations and inverse operators. To this end, we consider a two-step iteration scheme of the type

where \(Q_{k}=\mathrm {F'}(t_k)^{-1}\mathrm {F'}(w_k)\), \(a_1\) and \(a_2\) are arbitrary constants and \(\text {I}\) is the \(m\times m\) identity matrix. The idea of the first step is taken from the well-known Jarratt method (Cordero et al. 2010), whereas the second step is a Newton-like iteration.

We introduce some known notations and results (Cordero et al. 2010) that are needed to obtain the convergence order of the new method. Let \(\mathrm {F}:\Omega \subseteq {\mathbb {R}}^{m}\rightarrow {\mathbb {R}}^{m}\) be sufficiently differentiable in \(\Omega \). The q-th derivative of \(\mathrm {F}\) at \(u\in {\mathbb {R}}^m\), \(q\ge 1\), is the q-linear function \(\mathrm {F}^{(q)}(u): {\mathbb {R}}^{m}\times \cdots \times {\mathbb {R}}^{m}\rightarrow {\mathbb {R}}^{m}\) such that \(\mathrm {F}^{(q)}(u)(v_1,\ldots ,v_q )\in {\mathbb {R}}^m\). It is easy to observe that

(i) \(\mathrm {F}^{(q)}(u)(v_1,\ldots ,v_{q-1},\cdot )\in {\mathcal {L}}({\mathbb {R}}^m)\),

(ii) \(\mathrm {F}^{(q)}(u)(v_{\sigma (1)},\ldots ,v_{\sigma (q)} )=\mathrm {F}^{(q)}(u)(v_1,\ldots ,v_{q})\) for every permutation \(\sigma \) of \(\{1,2,\ldots ,q\}\).

From the above properties, we can use the following notation:

(a) \(\mathrm {F}^{(q)}(u)(v_1,\ldots ,v_{q})=\mathrm {F}^{(q)}(u)v_1\cdots v_{q}\),

(b) \(\mathrm {F}^{(q)}(u)v^{q-1}\mathrm {F}^{(p)}(u)v^p =\mathrm {F}^{(q)}(u)\mathrm {F}^{(p)}(u)v^{q+p-1}\).

On the other hand, for \(t^*+h\in {\mathbb {R}}^{m}\) lying in a neighborhood of a solution \(t^*\) of \(\mathrm {F}(t)=0\), we can apply Taylor’s expansion and, assuming that the Jacobian matrix \(\mathrm {F'}(t^*)\) is nonsingular, we have

\(\mathrm {F}(t^*+h)=\mathrm {F'}(t^*)\Big [h+\sum _{q=2}^{p-1}K_qh^q\Big ]+O(h^p),\)

where \(K_q=\frac{1}{q!}\mathrm {F'}(t^*)^{-1}\mathrm {F}^{(q)}(t^*)\), \(q\ge 2\). We observe that \(K_qh^q\in {\mathbb {R}}^m\) since \(\mathrm {F}^{(q)}(t^*)\in {\mathcal {L}}({\mathbb {R}}^{m}\times \cdots \times {\mathbb {R}}^{m}, {\mathbb {R}}^{m})\) and \(\mathrm {F'}(t^*)^{-1}\in {\mathcal {L}}({\mathbb {R}}^{m})\). In addition, we can express \(\mathrm {F'}\) as

\(\mathrm {F'}(t^*+h)=\mathrm {F'}(t^*)\Big [\text {I}+\sum _{q=2}^{p-1}qK_qh^{q-1}\Big ]+O(h^{p-1}),\)

where \(\text {I}\) is the identity matrix. Therefore, \(qK_qh^{q-1}\in {\mathcal {L}}({\mathbb {R}}^{m})\). From (3), we obtain

where

To analyze the convergence properties of scheme (2), we prove the following theorem:

Theorem 1

Let the function \(\mathrm {F}:\Omega \subset {\mathbb {R}}^m\rightarrow {\mathbb {R}}^m\) be sufficiently differentiable in an open neighborhood \(\Omega \) of its zero \(t^*\). Suppose that \(\mathrm {F'}(t)\) is continuous and nonsingular at \(t^*\). If an initial approximation \(t_0\) is sufficiently close to \(t^*\), then the order of convergence of method (2) is at least 3, provided that \(a_1=\frac{13}{12}\) and \(a_2=-\frac{3}{4}\).

Proof

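Let \(\epsilon _k = t_k- t^*\) and assume that \(\Gamma =\mathrm {F'}(t^*)^{-1}\) exists. Developing \(\mathrm {F}(t_k)\) in a neighborhood of \(t^*\), we have

\(\mathrm {F}(t_k)=\Gamma ^{-1}\big [\epsilon _k+A_2\epsilon _k^2+A_3\epsilon _k^3+A_4\epsilon _k^4+A_5\epsilon _k^5+O(\epsilon _k^6)\big ],\)   (6)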

where \(A_q=\frac{1}{q!}\Gamma \mathrm {F}^{(q)}(t^*)\), \(q=2, 3, \ldots \), \(\mathrm {F}^{(q)}(t^*) \in {\mathcal {L}}({\mathbb {R}}^m \times \cdots \times {\mathbb {R}}^m, {\mathbb {R}}^m )\) with q copies of \({\mathbb {R}}^m\), \(\Gamma \in {\mathcal {L}}({\mathbb {R}}^m, {\mathbb {R}}^m)\) and \(A_q (\epsilon _k)^q= A_q(\epsilon _k,\epsilon _k,\ldots ,\epsilon _k)\in {\mathbb {R}}^m\) (q arguments) with \(\epsilon _k \in {\mathbb {R}}^m\). Also,

\(\mathrm {F'}(t_k)=\Gamma ^{-1}\big [\text {I}+2A_2\epsilon _k+3A_3\epsilon _k^2+4A_4\epsilon _k^3+5A_5\epsilon _k^4+O(\epsilon _k^5)\big ]\)   (7)

and

\(\mathrm {F'}(t_k)^{-1}=\big [\text {I}+B_1\epsilon _k+B_2\epsilon _k^2+B_3\epsilon _k^3+B_4\epsilon _k^4+O(\epsilon _k^5)\big ]\Gamma ,\)   (8)

where \(B_1 = -2A_2\), \(B_2 = 4A_2^2-3A_3\), \(B_3 = -(8A_2^3-6A_2A_3-6A_3A_2+4A_4)\) and \(B_4 = (16A_2^4+9A_3^2-12A_2^2A_3-12A_2A_3A_2-12A_3A_2^2+8A_2A_4+8A_4A_2-5A_5).\) Applying (6) and (8) in the first step of (2), we have

Taylor expansions of \(\mathrm {F}(w_k)\) and \(\mathrm {F'}(w_k)\) about \(t^*\) yield

and

Then simple calculations yield

Substituting (6), (8), (9) and (13) in second step of (2), we obtain

It is easy to verify that the parameters \(a_1=\frac{13}{12}\) and \(a_2=-\frac{3}{4}\) give the error equation (14) its maximum order of convergence. For these values of the parameters, the above equation reduces to

This proves the third order of convergence. \(\square \)
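For a scalar equation (m = 1), where the coefficients \(A_q\) commute, the role of \(a_1\) and \(a_2\) can be checked by hand. The sketch below assumes that scheme (2) reads \(w_k=t_k-\frac{2}{3}\mathrm {F'}(t_k)^{-1}\mathrm {F}(t_k)\), \(t_{k+1}=w_k-(a_1\text {I}+a_2Q_k)\mathrm {F'}(t_k)^{-1}\mathrm {F}(t_k)\); this is our reading, consistent with the stated parameter values, not a reproduction of the displayed formula.

```latex
\begin{aligned}
u_k &:= \mathrm{F'}(t_k)^{-1}\mathrm{F}(t_k) = \epsilon_k - A_2\epsilon_k^2 + O(\epsilon_k^3),\\
\epsilon_{w_k} &= \epsilon_k - \tfrac{2}{3}u_k = \tfrac{1}{3}\epsilon_k + \tfrac{2}{3}A_2\epsilon_k^2 + O(\epsilon_k^3),\\
Q_k &= \frac{1 + 2A_2\epsilon_{w_k} + O(\epsilon_k^2)}{1 + 2A_2\epsilon_k + O(\epsilon_k^2)}
     = 1 - \tfrac{4}{3}A_2\epsilon_k + O(\epsilon_k^2),\\
\epsilon_{k+1} &= \epsilon_{w_k} - (a_1 + a_2 Q_k)\,u_k\\
 &= \Big(\tfrac{1}{3} - a_1 - a_2\Big)\epsilon_k
  + \Big(\tfrac{2}{3} + a_1 + a_2 + \tfrac{4}{3}a_2\Big)A_2\epsilon_k^2 + O(\epsilon_k^3).
\end{aligned}
```

Both coefficients vanish if and only if \(a_1+a_2=\frac{1}{3}\) and \(1+\frac{4}{3}a_2=0\), that is, \(a_2=-\frac{3}{4}\) and \(a_1=\frac{13}{12}\), which leaves \(\epsilon _{k+1}=O(\epsilon _k^3)\).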

Thus, the proposed Newton–Jarratt third-order method (2) is finally presented as

In terms of computational cost, this formula uses one function evaluation, two derivative evaluations and one matrix inversion per iteration.

3.2 Fifth-order scheme

Based on the third-order scheme (16), we consider the following three-step Newton–Jarratt composition:

where \(d_1\) and \(d_2\) are parameters to be determined. This new scheme requires one extra function evaluation in addition to the evaluations of scheme (16). The following theorem establishes the convergence properties of (17).

Theorem 2

Let the function \(\mathrm {F}:\Omega \subset {\mathbb {R}}^m\rightarrow {\mathbb {R}}^m\) be sufficiently differentiable in an open neighborhood \(\Omega \) of its zero \(t^*\). Suppose that \(\mathrm {F'}(t)\) is continuous and nonsingular at \(t^*\). If an initial approximation \(t_0\) is sufficiently close to \(t^*\), then the order of convergence of method (17) is at least 5, provided that \(d_1=\frac{5}{2}\) and \(d_2=-\frac{3}{2}\).

Proof

From (15), the error equation of the second step of (16) can be written as

Let \(\epsilon _{y_k}= y_k- t^*.\) Then, the Taylor expansion of \(\mathrm {F}(y_k)\) about \(t^*\) yields

Using Eqs. (8), (12), (18) and (19) in the third step of (17), we obtain

It can be easily shown that for parameters \(d_1 =\frac{5}{2}\) and \(d_2 =-\frac{3}{2}\), the error equation (20) produces the maximum order of convergence. For this set of parameters, Eq. (20) reduces to

Hence the required result follows. \(\square \)
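The same scalar (m = 1) bookkeeping shows why \(d_1=\frac{5}{2}\) and \(d_2=-\frac{3}{2}\) are needed. It assumes that the third step of (17) reads \(t_{k+1}=y_k-(d_1\text {I}+d_2Q_k)\mathrm {F'}(t_k)^{-1}\mathrm {F}(y_k)\), with \(Q_k\) frozen from the earlier steps (our reading, consistent with \(\varphi (t_k,w_k)\) in Sect. 4), and uses \(Q_k=1-\frac{4}{3}A_2\epsilon _k+O(\epsilon _k^2)\) and \(\epsilon _{y_k}=O(\epsilon _k^3)\).

```latex
\begin{aligned}
\mathrm{F'}(t_k)^{-1}\mathrm{F}(y_k)
 &= \big(1 - 2A_2\epsilon_k + O(\epsilon_k^2)\big)\big(\epsilon_{y_k} + O(\epsilon_{y_k}^2)\big)
  = \epsilon_{y_k} - 2A_2\epsilon_k\epsilon_{y_k} + O(\epsilon_k^2\epsilon_{y_k}),\\
d_1 + d_2 Q_k &= (d_1 + d_2) - \tfrac{4}{3}d_2A_2\epsilon_k + O(\epsilon_k^2),\\
\epsilon_{k+1} &= (1 - d_1 - d_2)\,\epsilon_{y_k}
  + \Big(2(d_1 + d_2) + \tfrac{4}{3}d_2\Big)A_2\epsilon_k\epsilon_{y_k}
  + O(\epsilon_k^2\epsilon_{y_k}).
\end{aligned}
```

Both coefficients vanish if and only if \(d_1+d_2=1\) and \(2+\frac{4}{3}d_2=0\), that is, \(d_2=-\frac{3}{2}\) and \(d_1=\frac{5}{2}\), so that \(\epsilon _{k+1}=O(\epsilon _k^2\epsilon _{y_k})=O(\epsilon _k^5)\). In this scalar picture each further step that reuses \(\mathrm {F'}(t_k)^{-1}\) and \(Q_k\) again multiplies the error by \(O(\epsilon _k^2)\), which is consistent with the order \(2q+1\) in Theorem 3.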

Thus, the Newton–Jarratt fifth-order method is expressed as

In terms of computational cost, this formula requires two function evaluations, two derivative evaluations and one inverse operator per iteration.

4 Generalized method

The generalized \(q+1\)-step Newton–Jarratt composite scheme, with the base as three-step scheme (22), can be expressed as follows:

where \(q\ge 2\), \(w_k^{(0)}=w_k\), \(Q_{k}=\mathrm {F'}(t_k)^{-1}\mathrm {F'}(w_k)\) and \(\varphi (t_k,w_k)= \frac{1}{2}\big (5 \, \text {I} -3 \, Q_k\big ).\)

To analyze the convergence property, we prove the following theorem:

Theorem 3

Let the function \(\mathrm {F}:\Omega \subset {\mathbb {R}}^m\rightarrow {\mathbb {R}}^m\) be sufficiently differentiable in an open neighborhood \(\Omega \) of its zero \(t^*\). Suppose that \(\mathrm {F'}(t)\) is continuous and nonsingular at \(t^*\). If an initial approximation \(t_0\in \Omega \) is sufficiently close to \(t^*\), then the sequence \(\{t_k\}\) generated by method (23) converges to \(t^*\) with order \(2q+1\) for \(q\in {\mathbb {N}}\).

Proof

Let \(\epsilon _k= \, t_k-t^*,\) \(\epsilon _{w_k^{(q-1)}}= w_k^{(q-1)}- t^*.\) Taylor’s expansion of \(\mathrm {F}(w_k^{(q-1)})\) about \(t^*\) yields

Using (8) and (24), we have that

We write Eq. (13) as

Using Eqs. (25) and (26), we get

Then last step of (23) yields

For \(q=3,4,5 \), we obtain the corresponding error equations as

and

Proceeding by induction, we have

Hence, the result follows. \(\square \)

The generalized scheme requires q function evaluations, two derivative evaluations and only one matrix inversion per iteration. The single use of the inverse operator throughout the iteration also justifies the name ‘method with frozen inverse operator’ for the above scheme.
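One iteration of the generalized scheme can be sketched in Python as follows. The form used here is our reading of (16), (22) and (23), consistent with \(a_1=\frac{13}{12}\), \(a_2=-\frac{3}{4}\) and \(\varphi (t_k,w_k)=\frac{1}{2}(5\,\text {I}-3\,Q_k)\) stated above, but it is an assumption since the displayed formulas are not reproduced here. \(Q_k\) is never formed: \(Q_kv=\mathrm {F'}(t_k)^{-1}(\mathrm {F'}(w_k)v)\) is applied to vectors. The inverse is computed once per iteration with `np.linalg.inv` to mirror the frozen inverse operator; reusing one LU factorization for every solve would be the usual choice in practice. With q = 1, 2, 3 the function sketches \(M_1^{(3)}\), \(M_1^{(5)}\) and \(M_1^{(7)}\); the function name and the test system are hypothetical.

```python
import numpy as np


def newton_jarratt(F, J, t0, q=2, tol=1e-12, max_iter=50):
    """(q+1)-step Newton-Jarratt iteration with one inverse operator per iteration.

    Assumed form of one iteration (G = F'(t)^{-1}, Q = G F'(w)):
        w      = t - (2/3) G F(t)                 Jarratt-type first step
        y      = w - (13/12 I - 3/4 Q) G F(t)     order 3
        y      = y - 1/2 (5 I - 3 Q) G F(y)       repeated q - 1 times, +2 each
        t_next = y
    Returns the approximate root and the number of iterations used.
    """
    if q < 1:
        raise ValueError("q must be a positive integer")
    t = np.asarray(t0, dtype=float)
    for k in range(max_iter):
        G = np.linalg.inv(J(t))      # the single (frozen) inverse operator
        u = G @ F(t)                 # first function evaluation
        w = t - (2.0 / 3.0) * u
        Jw = J(w)                    # second and last derivative evaluation
        y = w - ((13.0 / 12.0) * u - 0.75 * (G @ (Jw @ u)))
        for _ in range(q - 1):
            z = G @ F(y)             # one new function evaluation per extra step
            y = y - 0.5 * (5.0 * z - 3.0 * (G @ (Jw @ z)))
        if np.linalg.norm(y - t) < tol:
            return y, k + 1
        t = y
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical 2 x 2 test system with a root at (2, 1).
def F_example(t):
    return np.array([t[0] ** 2 + t[1] ** 2 - 5.0, t[0] * t[1] - 2.0])


def J_example(t):
    return np.array([[2.0 * t[0], 2.0 * t[1]], [t[1], t[0]]])


root5, its5 = newton_jarratt(F_example, J_example, [2.2, 0.8], q=2)
```

Each extra pass of the inner loop costs one evaluation of F and a few matrix-vector products but no new factorization, which is the structural point of the frozen inverse operator.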

5 Computational efficiency

Ranking numerical methods by their computational efficiency is in many cases a difficult task, since the quality of an algorithm depends on many parameters. Considering root-solvers, Brent (1973) said that “...the method with the higher efficiency is not always the method with the higher order”. Sometimes great accuracy of the sought results is not the main target, whereas in many cases numerical stability of the implemented algorithms is the preferable feature.

The computational efficiency of a root-solver can be defined in various ways, but it is always proportional to the order of convergence p and inversely proportional to the computational cost C per iteration, i.e. the number of function evaluations taken with certain weights. Traub (1964) introduced the coefficient of efficiency as the ratio

whereas Ostrowski (1960) dealt with alternative definitions

An interesting question arises: Which of these definitions describes computational efficiency in the best way in practice when iterative methods are implemented on digital computers? This will be clarified in what follows.

Assume that the tested equation has a solution \(t^*\) contained in an interval (an n-cube or n-ball in general) of unit diameter. Starting with an initial approximation \(t_0\) to \(t^*\), a stopping criterion is given by

\(\Vert t_k-t^*\Vert \le \tau =10^{-d},\)

where k is the iteration index, \(\tau \) is the required accuracy, and d is the number of significant decimal digits of the approximation \(t_k\). Assume that \(||t_0 -t^* || \approx 10^{-1}\) and let p be the order of convergence of the applied iterative method. Then the (theoretical) number of iterations necessary to reach the accuracy \(\tau \) can be calculated approximately from the relation \(10^{-d} = 10^{-p^k}\) as \(k \approx \log d/\log p.\) Since the computational efficiency is proportional to the reciprocal of the total computational cost kC of the complete iterative process of k steps, one obtains the estimate

\(E\approx \frac{1}{kC}=\frac{\log p}{C\log d}.\)

For the function \(F(t)=(f_1(t), f_2(t),\ldots , f_m(t))^\mathrm{{T}}\), where \(t=(t_1, t_2, \ldots , t_m)^\mathrm{{T}}\), the computational cost C is computed as

\(C(\mu _0,\mu _1,m,l)=P_0(m)\mu _0+P_1(m)\mu _1+P(m,l),\)

where \(P_0(m)\) represents the number of evaluations of scalar functions used in the evaluation of F, \(P_1(m)\) is the number of evaluations of scalar functions of \(F'\), i.e. \(\frac{\partial f_i}{\partial t_j}\), \(1\leqslant i,j \leqslant m\) and P(m, l) represents the number of products or quotients needed per iteration. To express the value of \(C(\mu _0,\mu _1,m,l)\) in terms of products, the ratios \(\mu _0>0\) and \(\mu _1>0\) between products and evaluations and a ratio \(l\ge 1\) between products and quotients are required.

It is clear from the above discussion that, to estimate the computational efficiency of iterative methods for a fixed accuracy, it is sufficient to compare the values of \( \log p/C\). This means that Ostrowski’s second formula \(E_{O_2} = \log p/C \) is preferable in the sense of best fitting the real CPU time. This formula has been used in many papers and books; see, e.g., Brent (1973) and McNamee (2007).
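The estimate behind this argument can be sketched in a few lines of Python. Following the text, the initial error is taken to be about \(10^{-1}\) and the error after k steps to behave like \(10^{-p^k}\); the function names are hypothetical, and the cost arguments are placeholders, since the cost formulas \(C_i^{(p)}\) of the individual methods are not reproduced here.

```python
import math


def iterations_needed(d, p):
    """Approximate k from 10^{-d} = 10^{-p^k}, i.e. k = log d / log p."""
    return math.log(d) / math.log(p)


def efficiency_index(p, C):
    """Ostrowski's second index E = log p / C (the common factor 1/log d is dropped)."""
    return math.log(p) / C


def efficiency_ratio(p_i, C_i, p_j, C_j):
    """R > 1 means method i is expected to be more efficient than method j."""
    return efficiency_index(p_i, C_i) / efficiency_index(p_j, C_j)
```

For instance, to obtain d = 1000 correct digits a fifth-order method needs about \(\log 1000/\log 5\approx 4.3\) iterations. Because \(\log d\) cancels in the ratio, comparing two methods does not depend on the target accuracy.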

We shall use Definition (28) to assess the computational efficiency of the presented methods. To do this, we must consider all factors that contribute to the total cost of computation. For example, to compute F in any iterative method we need to evaluate m scalar functions. The number of scalar evaluations is \(m^2\) for any new derivative \(F'\). To compute an inverse linear operator, we solve a linear system, which requires \(m(m - 1)(2m - 1)/6\) products and \(m(m - 1)/2\) quotients in the LU decomposition and \(m(m - 1)\) products and m quotients in the resolution of the two triangular linear systems. We must add \(m^2\) products for the multiplication of a matrix by a vector or of a matrix by a scalar, and m products for the multiplication of a vector by a scalar.
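The operation counts listed above can be collected into small helpers that express them in product units, a sketch assuming the weighting of this section (\(\mu _0\), \(\mu _1\) for scalar evaluations and l products per quotient). The function names are hypothetical, and assembling the full cost C of a particular method would also require counting its matrix-vector products and solves, which is not attempted here.

```python
def lu_cost(m, l):
    """LU decomposition of an m x m matrix in product units:
    m(m-1)(2m-1)/6 products and m(m-1)/2 quotients, one quotient = l products."""
    products = m * (m - 1) * (2 * m - 1) // 6
    quotients = m * (m - 1) // 2
    return products + l * quotients


def triangular_solves_cost(m, l):
    """Two triangular solves for one right-hand side: m(m-1) products and m quotients."""
    return m * (m - 1) + l * m


def evaluation_cost(m, mu0, mu1, n_F, n_dF):
    """Weighted cost of n_F evaluations of F (m scalar functions each)
    and n_dF evaluations of F' (m^2 scalar functions each)."""
    return mu0 * m * n_F + mu1 * m * m * n_dF
```

For m = 200 and l = 2.4, the LU decomposition costs roughly m/3 (about 67) times as much as one pair of triangular solves, which is why reusing a single factorization in every step of an iteration pays off for large systems.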

To demonstrate the computational efficiency, we consider the third-, fifth- and seventh-order methods of the family (23) and compare them with existing methods of the same orders. The third-order method \(M_1^{(3)}\) is compared with the third-order methods of Homeier (2004), Cordero and Torregrosa (2007), Noor and Waseem (2009) and Xiao and Yin (2017); the fifth-order method \(M_1^{(5)}\) with the fifth-order methods of Cordero et al. (2010, 2012), Sharma and Gupta (2014) and Xiao and Yin (2018); and the seventh-order method \(M_1^{(7)}\) with the seventh-order method of Xiao and Yin (2015). In addition, the new methods are compared with each other. The existing methods considered are as follows.

Third-order Homeier method (Homeier 2004):

Third-order methods of Cordero and Torregrosa (2007):

and

Third-order Noor–Waseem method (Noor and Waseem 2009):

Third-order Xiao–Yin method (Xiao and Yin 2017):

Fifth-order methods of Cordero et al. (2010, 2012):

and

where \(\alpha = \beta =0.5\).

Fifth-order Sharma–Gupta method (Sharma and Gupta 2014):

Fifth-order Xiao–Yin method (Xiao and Yin 2018):

Seventh-order Xiao–Yin method (Xiao and Yin 2015):

Denote by \({\mathcal {E}}_i^{(p)}\) the efficiency indices and by \(C_i^{(p)}\) the computational costs of the methods \(M_i^{(p)}\) (\(p=3,5,7\) and \(i=1,2,\ldots ,6\)). Taking into account the above considerations, we obtain

wherein \(D = \log d\).

5.1 Comparison between efficiencies

To compare the efficiencies of iterative methods, say \(M_i^{(p)}\) against \(M_j^{(q)}\), we consider the ratio

If \(R_{i,j}^{p,q}>1\), the iterative method \(M_i^{(p)}\) is more efficient than \(M_j^{(q)}\); we denote this by \(M_i^{(p)}\gtrdot M_j^{(q)}\). The border between two computational efficiencies is given by \(R_{i,j}^{p,q}=1\); this boundary can be expressed as an equation giving \(\mu _0\) as a function of \(\mu _1\), m and l, where \((\mu _0,\mu _1)\in (0,+\infty )\times (0,+\infty ),\) m is a positive integer \(\ge 2\) and \(l\ge 1.\) In the sequel, we compare the computational efficiencies of the methods described above.

\(M_1^{(3)}\) versus \(M_2^{(3)}\) case:

In this case, the ratio

for \(m\ge 5\), which implies that \({\mathcal {E}}_1^3 > {\mathcal {E}}_2^3\) and hence \(M_1^3 \gtrdot M_2^3\) for all \(m\ge 5\) and \(l\ge 1.\)

\(M_1^{(3)}\) versus \(M_3^{(3)}\) case:

In this case, the ratio

for \(m\ge 2\), which implies that \({\mathcal {E}}_1^3 > {\mathcal {E}}_3^3\) and hence \(M_1^3 \gtrdot M_3^3\) for all \(m\ge 2\) and \(l\ge 1.\)

\(M_1^{(3)}\) versus \(M_4^{(3)}\) case:

For this case, the ratio

It is easy to prove that \(R_{1,4}^{3,3}> 1\) for \(m\ge 1.\) Thus, we conclude that \({\mathcal {E}}_1^3 > {\mathcal {E}}_4^3\) and consequently \(M_1^3 \gtrdot M_4^3\) for all \(m\ge 2\) and \(l\ge 1.\)

\(M_1^{(3)}\) versus \(M_5^{(3)}\) case:

For this case, the ratio

We have that \(R_{1,5}^{3,3}> 1\) for \(m\ge 1,\) which shows \({\mathcal {E}}_1^3 > {\mathcal {E}}_5^3\) and consequently \(M_1^3 \gtrdot M_5^3\) for all \(m\ge 2\) and \(l\ge 1.\)

\(M_1^{(3)}\) versus \(M_6^{(3)}\) case:

In this case, the ratio

for \(m\ge 3\), which implies that \({\mathcal {E}}_1^3 > {\mathcal {E}}_6^3\) for all \(m\ge 3\) and \(l\ge 1\), that is \(M_1^3 \gtrdot M_6^3\).

\(M_1^{(5)}\) versus \(M_2^{(5)}\) case:

In this case, the ratio

for \(m\ge 3\), which implies that \({\mathcal {E}}_1^5 > {\mathcal {E}}_2^5\) for all \(m\ge 3\) and \(l\ge 1.\) Therefore \(M_1^5 \gtrdot M_2^5\).

\(M_1^{(5)}\) versus \(M_3^{(5)}\) case:

In this case, the ratio

for \(m\ge 6\), which implies that \({\mathcal {E}}_1^5 > {\mathcal {E}}_3^5\) and \(M_1^5 \gtrdot M_3^5\) for all \(m\ge 6\) and \(l\ge 1.\)

\(M_1^{(5)}\) versus \(M_4^{(5)}\) case:

In this case, the ratio

for \(m\ge 8\), which implies that \({\mathcal {E}}_1^5 > {\mathcal {E}}_4^5\) and \(M_1^5 \gtrdot M_4^5\) for all \(m\ge 8\) and \(l\ge 1.\)

\(M_1^{(5)}\) versus \(M_5^{(5)}\) case:

In this case, the ratio

for \(m\ge 7\), which implies that \({\mathcal {E}}_1^5 > {\mathcal {E}}_5^5\) and \(M_1^5 \gtrdot M_5^5\) for all \(m\ge 7\) and \(l\ge 1.\)

\(M_1^{(7)}\) versus \(M_2^{(7)}\) case:

In this case, the ratio

for \(m\ge 11\), which implies that \({\mathcal {E}}_1^7 > {\mathcal {E}}_2^7\) and \(M_1^7 \gtrdot M_2^7\) for all \(m\ge 11\) and \(l\ge 1.\)

\(M_1^{(3)}\) versus \(M_1^{(5)}\) case:

For this case, it is judicious to consider the boundary \(R_{1,1}^{3,5} = 1\), which is given by

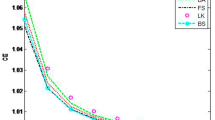

where \(r=\log 3\) and \(s=\log 5\). The comparison of the efficiencies \({\mathcal {E}}_1^3\) and \({\mathcal {E}}_1^5\) is shown in the \((\mu _1,\mu _0)\)-plane by drawing particular boundaries for \(m = 2, 5, 10 \ \text {and} \ 20\) with \(l=1\): \({\mathcal {E}}_1^3 > {\mathcal {E}}_1^5\) (that is, \(M_1^3 \gtrdot M_1^5)\) holds on the left of (above) each line and \({\mathcal {E}}_1^5 > {\mathcal {E}}_1^3\) (that is, \( M_1^5 \gtrdot M_1^3)\) on the right of (below) each line (see Fig. 1).

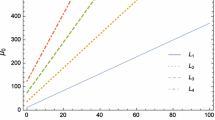

\(M_1^{(3)}\) versus \(M_1^{(7)}\) case:

As in the previous case, we consider the boundary \(R_{1,1}^{3,7} = 1\), which yields

where \(r=\log 3\) and \(t=\log 7\). To compare the efficiencies \({\mathcal {E}}_1^3\) and \({\mathcal {E}}_1^7\) in the \((\mu _1,\mu _0)\)-plane, we draw some particular boundaries corresponding to \(m = 2, 5, 10 \ \text {and} \ 20\) taking \(l=1\). These boundaries are shown in Fig. 2, wherein \({\mathcal {E}}_1^3 > {\mathcal {E}}_1^7\) (that is \(M_1^3 \gtrdot M_1^7)\) on the left (above) and \({\mathcal {E}}_1^7 > {\mathcal {E}}_1^3\) (that is \(M_1^7 \gtrdot M_1^3\)) on the right (below) of each line.

\(M_1^{(5)}\) versus \(M_1^{(7)}\) case:

The boundary \(R_{1,1}^{5,7} = 1\) is given by

where \(s=\log 5\) and \(t=\log 7\). As before we draw boundary lines in \((\mu _1,\mu _0)\)-plane corresponding to cases \(m = 2, 5, 10 \ \text {and} \ 20\) taking \(l=1\). Boundaries are shown in Fig. 3, wherein \({\mathcal {E}}_1^5 > {\mathcal {E}}_1^7\) (that is \(M_1^5 \gtrdot M_1^7\)) on the left (above) and \({\mathcal {E}}_1^7 > {\mathcal {E}}_1^5\) (that is \(M_1^7 \gtrdot M_1^5\)) on the right (below) of each line.

We summarize the preceding results in the following theorem:

Theorem 4

(a) For all \(\mu _0\), \(\mu _1>0\) and \(l\ge 1\) we have that:

Otherwise, the comparison depends on \(\mu _0\), \(\mu _1\) and l.

(b) For all \(m\ge 2\) we have:

where \(\beta _1 = \frac{-(14 + 18 m + m^2) r + (8 + 9 m + m^2) s + 6 m (s-r)\mu _1}{3(2r-s)}, \beta _2 = \frac{-(20+27 m +m^2) r + (8 + 9 m+ m^2) t +6 m (t-r) \mu _1}{3 (3 r - t)}\) and \(\beta _3 = \frac{-(20+27 m+ m^2) s + (14+ 18 m + m^2) t+6 m (t-s) \mu _1}{3 (3 s - 2 t)}.\)

6 Numerical results

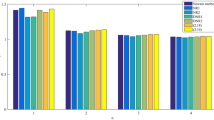

To illustrate the convergence behavior and computational efficiency of the proposed methods \(M_1^{(3)}\), \(M_1^{(5)}\) and \(M_1^{(7)}\), we consider some numerical examples and compare their performance with \(M_2^{(3)}\), \(M_3^{(3)}\), \(M_4^{(3)}\), \(M_5^{(3)}\), \(M_6^{(3)}\), \(M_2^{(5)}\), \(M_3^{(5)}\), \(M_4^{(5)}\), \(M_5^{(5)}\) and \(M_2^{(7)}\). All computations are performed in the programming package Mathematica (Wolfram 2003) on a PC with an Intel(R) Pentium(R) CPU B960 @ 2.20 GHz, 4 GB RAM and Microsoft Windows 7 Professional (32-bit operating system). For every method, we record the number of iterations (k) needed to converge to the solution such that \(\Vert t_{k+1}- t_k\Vert + \Vert \mathrm {F}(t_k)\Vert < 10^{-100}.\) To verify the theoretical order of convergence (p), we calculate the computational order of convergence (COC) using the formula given in Sect. 2.3. We also report the CPU time (e-time) used in the execution of the program, computed with the Mathematica command TimeUsed[ ]. To calculate \({\mathcal {E}}_i^p\) for all methods in the numerical examples we take \(D =10^{-5}\), and \(A(\pm m)\) denotes \(A\times 10^{\pm m}.\)

The results of Theorem 4 are also verified through numerical experiments. To do this, we need an estimate of the factors \(\mu _0\) and \(\mu _1\). To obtain this estimate, we express the cost of evaluating the elementary functions in terms of products, which depends on the computer, the software and the arithmetic used (Fousse et al. 2007). Table 1 shows an estimate of the cost of the elementary functions in product units, where the running time of one product is measured in milliseconds (ms). It is evident from Table 1 that the computational cost of a quotient relative to a product is \(l \approx 2.4.\)

For numerical tests we consider the following examples:

Example 1

Consider a system of 2 equations (Sharma and Arora 2016a):

With the initial approximation \( t_0 = \{\frac{4}{10}, -\frac{4}{10}\}^\mathrm{{T}}\), we obtain the solution \(t^* =\{1.0407\ldots , -0.0407\ldots \}^\mathrm{{T}}\). The concrete values of the parameters \((m, \mu _0,\mu _1)\), obtained with the help of the estimates for elementary functions displayed in Table 1, are (2, 46.8527, 36.6426). These values are used to calculate the computational costs and efficiency indices of the methods given in the previous section.

Example 2

Consider a system of 3 equations (Sharma and Arora 2016c):

The initial approximation \( t_0 = \{0, 1, 0\}^\mathrm{{T}}\) is chosen to find the solution

Corresponding calculated values of the parameters \((m, \mu _0,\mu _1)\) are (3, 49.2469, 22.3370).

Example 3

Let us consider the Van der Pol equation (see Burden and Faires 2001), which is defined as follows:

\(t''-\mu (t^2-1)t'+t=0,\)   (30)

which governs the flow of current in a vacuum tube, with the boundary conditions \(t(0)=0,\) \(t(2)=1.\) Further, we consider the partition of the given interval [0, 2], which is given by

Moreover, we assume that

If we discretize problem (30) using second-order divided differences for the first and second derivatives, given by

then we obtain a system of \((n-1)\) nonlinear equations

Let us consider \(\mu =\frac{1}{2}\) and the initial approximation \( t_0 = (\frac{1}{2},\frac{1}{2},\ldots ,\frac{1}{2})^\mathrm{{T}}\). In particular, we solve this problem for \(n = 6\), which gives a system of 5 nonlinear equations. The solution of this problem is

and parametric values are \((m, \mu _0,\mu _1) = (5,6.9784,1.3157).\)
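The discretized Van der Pol system can be sketched as follows, assuming that (30) is the standard form \(t''-\mu (t^2-1)t'+t=0\) on [0, 2] with \(t(0)=0\), \(t(2)=1\), and that the central differences \((t_{i+1}-2t_i+t_{i-1})/h^2\) and \((t_{i+1}-t_{i-1})/(2h)\) with \(h=2/n\) are used. The scaling of the equations in the paper may differ (for example by a factor \(h^2\)), which does not change the solution. The factory function `van_der_pol_system` is hypothetical; it returns the residual F and its tridiagonal Jacobian, so it can be passed to any of the iterations sketched earlier.

```python
import numpy as np


def van_der_pol_system(mu=0.5, n=6, left=0.0, right=1.0, length=2.0):
    """Residual F and Jacobian J of the discretized Van der Pol BVP.

    Unknowns are the interior values t_1, ..., t_{n-1}; t_0 = left, t_n = right.
    """
    h = length / n

    def with_boundary(t):
        # Prepend t(0) and append t(2) so that every interior node has two neighbours.
        return np.concatenate(([left], np.asarray(t, dtype=float), [right]))

    def F(t):
        T = with_boundary(t)
        tm, ti, tp = T[:-2], T[1:-1], T[2:]
        return (tp - 2 * ti + tm) / h**2 - mu * (ti**2 - 1) * (tp - tm) / (2 * h) + ti

    def J(t):
        T = with_boundary(t)
        tm, ti, tp = T[:-2], T[1:-1], T[2:]
        main = -2 / h**2 - mu * ti * (tp - tm) / h + 1         # dF_i/dt_i
        lower = 1 / h**2 + mu * (ti[1:] ** 2 - 1) / (2 * h)    # dF_i/dt_{i-1}
        upper = 1 / h**2 - mu * (ti[:-1] ** 2 - 1) / (2 * h)   # dF_i/dt_{i+1}
        return np.diag(main) + np.diag(lower, -1) + np.diag(upper, 1)

    return F, J


F_vdp, J_vdp = van_der_pol_system(mu=0.5, n=6)
t0 = np.full(5, 0.5)  # initial approximation (1/2, ..., 1/2)
```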

Example 4

Next, consider a system of 10 equations (Xiao and Yin 2016):

The solution \(t^* =\{0.2644\ldots , 0.2644\ldots , \,{\mathop {\cdots }\limits ^{10}},\, 0.2644\ldots \}^\mathrm{{T}}\) is obtained by assuming the initial approximations \( t_0 = \{\frac{7}{10}, \frac{7}{10} \,{\mathop {\cdots }\limits ^{10}},\, \frac{7}{10}\}^\mathrm{{T}}\). Computed values of the parameters \((m, \mu _0,\mu _1)\) are (10, 90.9400, 0.4446).

Example 5

The boundary value problem (see Ortega and Rheinboldt 1970):

is studied. Consider the following partition of the interval [0, 1]:

Let us define \(t_0 = t(y_0) = 0, \ t_1 = t(y_1),\ldots , \ t_{n-1} = t(y_{n-1}), \ t_n = t(y_n) = 1.\) If we discretize the problem by using the numerical formulae for the first and second derivatives

we obtain a system of \(n-1\) nonlinear equations in \(n-1\) variables:

In particular, we solve this problem for \(n = 51\), so that \(m = 50\), by selecting \( t_0 = (2,2,\,{\mathop {\cdots }\limits ^{50}},\,2)^\mathrm{{T}}\) as the initial value and \(a =2\). The solution of this problem is

and \((m, \mu _0,\mu _1)=(50,3,0.0392)\).

Example 6

Consider the following Burgers’ equation (see Sauer 2012):

where \(g (u,v) = -10e^{-2v}[e^v(2 -u + u^2) + 10u(1-3u + 2u^2)]\) and function \(f = f(u,v)\) satisfies the boundary conditions

Consider the following partition of the domain \([0,1]^2:\)

Let us define \(f_{k,l} = f(u_k, v_l)\) and \(g_{k,l} = g(u_k, v_l)\) for \(k, l = 0, 1, 2, \ldots , n.\) Then the boundary conditions become \(f_{0,l} = f(u_0, v_l ) = 0,\) \(f_{n,l} = f (u_n, v_l ) = 0\), \(f_{k,0} = f(u_k, v_0) = 10 u_k(u_k - 1)\) and \(f_{k,n} = f(u_k, v_n) = 10u_k(u_k - 1)/e.\) If we discretize Burgers’ equation by using the numerical formulae for the partial derivatives, we obtain the following system of \((n - 1)^2\) nonlinear equations in \((n - 1)^2\) variables:

where \(i,j = 1, 2,\ldots , n-1\). In particular, we solve the nonlinear system (31) for \(n = 11\) so that \(m = 100\) by selecting \(f_{i,j} = -\frac{5}{2}\) (for \(i,j = 1, 2,\ldots , 10\)) as the initial value towards the required solution \(t^*\) given by

The approximate solution found is also plotted in Fig. 4. The concrete values of parameters for this system are \((m, \mu _0,\mu _1) = (100,10.0503,0.0492).\)

Example 7

Next, the methods are applied to solve the Poisson equation (Cordero et al. 2015)

with boundary conditions

The solution can be found using finite difference discretization. Let \(u = u(x, y)\) be the exact solution of this Poisson equation and let \(w_{i,j} =u(x_i,y_j)\) be its approximate solution at the grid points of the mesh. Let M and N be the numbers of steps in the x and y directions, and h and k the respective step sizes (\(h=\frac{1}{M}\), \(k=\frac{1}{N}\)). If we discretize the problem by using the central divided differences, i.e., \(u_{xx}(x_i,y_j)=(w_{i+1,j} -2w_{i,j}+w_{i-1,j})/h^2\) and \(u_{yy}(x_i,y_j)=(w_{i,j+1}-2w_{i,j} +w_{i,j-1})/k^2\), we get the following system of nonlinear equations:

We consider \(M=15\) and \(N= 15\) and thereby solve the resulting nonlinear system of 196 equations in 196 unknowns. The approximate solution

is evaluated with the initial vector \(w_{i,j} =\{2,2, \, {\mathop {\cdot \cdots }\limits ^{196}}, \, 2\}^\mathrm{{T}}\) (\(i,j = 1, 2,\ldots , 14\)). The approximate solution found has also been displayed in Fig. 5. For this problem the concrete values of parameters are \((m, \mu _0,\mu _1) = (196,3,0.005102).\)
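The five-point discretization described above can be sketched as a residual function. Since the Poisson equation, its right-hand side and its boundary conditions are not reproduced here, `rhs(x, y, u)` and `boundary(X, Y)` are hypothetical placeholders, and the form \(u_{xx}+u_{yy}=\text {rhs}(x,y,u)\) is an assumption. With M = N = 15 the unknown vector has \((M-1)(N-1)=196\) entries, ordered with the x index varying slowest.

```python
import numpy as np


def poisson_residual(w, rhs, boundary, M=15, N=15):
    """Residual of u_xx + u_yy = rhs(x, y, u) on [0, 1]^2 with the 5-point stencil.

    w holds the interior unknowns w_{i,j}, i = 1..M-1, j = 1..N-1 (row-major in i).
    boundary(X, Y) supplies u on the boundary nodes (interior values are overwritten).
    """
    h, k = 1.0 / M, 1.0 / N
    X, Y = np.meshgrid(np.linspace(0.0, 1.0, M + 1), np.linspace(0.0, 1.0, N + 1), indexing="ij")
    U = np.array(boundary(X, Y), dtype=float)
    U[1:-1, 1:-1] = np.reshape(w, (M - 1, N - 1))
    uxx = (U[2:, 1:-1] - 2 * U[1:-1, 1:-1] + U[:-2, 1:-1]) / h**2
    uyy = (U[1:-1, 2:] - 2 * U[1:-1, 1:-1] + U[1:-1, :-2]) / k**2
    inner = (slice(1, -1), slice(1, -1))
    return (uxx + uyy - rhs(X[inner], Y[inner], U[inner])).ravel()


# Sanity check with a linear (harmonic) function, for which the stencil is exact.
Xg, Yg = np.meshgrid(np.linspace(0, 1, 16), np.linspace(0, 1, 16), indexing="ij")
w_exact = (Xg + Yg)[1:-1, 1:-1].ravel()
res = poisson_residual(w_exact, lambda x, y, u: np.zeros_like(u), lambda X, Y: X + Y)
```

With a nonlinear right-hand side, this residual defines the system \(\mathrm {F}(w)=0\) in 196 unknowns to which the iterative methods are applied.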

Example 8

Consider a system of 200 nonlinear equations (see Sharma and Arora 2016b)

with the initial value \(t_0=(\frac{3}{2},\frac{3}{2}, \,{\mathop {\cdots }\limits ^{m}},\, \frac{3}{2})^\mathrm{{T}}\) towards the required solution \(t^*=(0.0050\ldots ,\, {\mathop {\cdots }\limits ^{200}}, \, 0.0050\ldots )^\mathrm{{T}}\). The values of the parameters are \((m, \mu _0,\mu _1) = (200,44.2674,0.2213).\)

Example 9

Lastly, consider a system of 500 equations (see Sharma and Arora 2016b)

To obtain the required solution \(t^* =(1,1, \,{\mathop {\cdots }\limits ^{500}},\, 1)^\mathrm{{T}}\) of the system, the initial value chosen is \(t_0=(\frac{18}{10},\frac{18}{10}, \,{\mathop {\cdots }\limits ^{500}},\, \frac{18}{10})^\mathrm{{T}}\). The computed values of the parameters \((m, \mu _0,\mu _1)\) are \((500, 2, 0.006)\).

The numerical performance of the various methods on the above problems is displayed in Tables 2 and 3, where values of the form \(A(\pm m)\) denote \(A\times 10^{\pm m}\). The numerical results clearly indicate the stable convergence behavior of the methods. Observe also that, for each method, the computational order of convergence closely agrees with the theoretical order of convergence. The elapsed CPU time (e-time, in seconds) used in executing the program shows that the proposed methods are more efficient than the other methods; here, by efficiency we mean that a method with higher efficiency uses less computing time than a method with lower efficiency. Similar numerical experiments, carried out on a number of problems of different types, largely confirmed the above conclusions.
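The computational order of convergence reported in the tables can be estimated from the last few iterates without knowing \(t^*\). The exact formula used for the tables is not reproduced in this section, so the sketch below assumes the approximated computational order of convergence (ACOC) of Cordero and Torregrosa (2007), \(\rho \approx \ln(\Vert t_{k+1}-t_k\Vert /\Vert t_k-t_{k-1}\Vert )/\ln(\Vert t_k-t_{k-1}\Vert /\Vert t_{k-1}-t_{k-2}\Vert )\), with the Euclidean norm; the function name `acoc` is illustrative.

```python
import numpy as np


def acoc(iterates):
    """Approximated computational order of convergence (ACOC), a sketch.

    Uses the last four iterates t_{k-2}, t_{k-1}, t_k, t_{k+1}:
        rho ~ ln(||t_{k+1}-t_k|| / ||t_k-t_{k-1}||)
              / ln(||t_k-t_{k-1}|| / ||t_{k-1}-t_{k-2}||)
    No knowledge of the exact solution t* is required.
    """
    if len(iterates) < 4:
        raise ValueError("need at least four iterates")
    pts = [np.atleast_1d(np.asarray(v, dtype=float)) for v in iterates[-4:]]
    # Successive step lengths ||t_{j+1} - t_j|| (Euclidean norm assumed).
    d = [np.linalg.norm(pts[j + 1] - pts[j]) for j in range(3)]
    return float(np.log(d[2] / d[1]) / np.log(d[1] / d[0]))


# Usage: Newton iterates for the scalar equation x^2 - 2 = 0 from x0 = 1.
x, newton_iterates = 1.0, [1.0]
for _ in range(4):
    x = x - (x * x - 2.0) / (2.0 * x)
    newton_iterates.append(x)
print(acoc(newton_iterates))  # close to 2 (quadratic convergence)
```

In double precision the step lengths reach rounding level after only a few iterations of a high-order method, so the estimate should be taken from iterates before that happens; this is one reason such experiments are often run in multiple-precision arithmetic.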

7 Conclusions

In the foregoing study, we have considered the problem of solving systems of nonlinear equations and developed two- and three-step composite Newton–Jarratt iterative methods of convergence order three and five, respectively. Furthermore, in the quest for fast algorithms, a generalized \((q+1)\)-step scheme with increasing convergence order \(2q+1\) has been proposed and analyzed. The novelty of the \((q+1)\)-step algorithm is that each additional step increases the order of convergence by two at the cost of only one additional function evaluation. Moreover, a single inverse operator is used in all steps of an iteration, which makes the algorithm more attractive and computationally efficient.
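The cost structure of reusing one inverse operator across all steps of an iteration can be illustrated, under clear assumptions, with a simpler relative: a frozen-Jacobian (Shamanskii-type) multistep Newton iteration. This is not the authors' Newton–Jarratt scheme, whose steps are not reproduced in this section; it only shows one LU factorization of the Jacobian per iteration, with every further substep costing one new evaluation of \(\mathrm{F}\) plus two triangular solves. Unlike the \((q+1)\)-step method above, each extra frozen-Jacobian substep raises the order by one rather than two, so this sketch has order \(q+2\). The function name and the parameter `q` are illustrative.

```python
import numpy as np
from scipy.linalg import lu_factor, lu_solve


def frozen_jacobian_newton(F, J, t0, q=2, tol=1e-12, max_iter=50):
    """Multistep Newton iteration with a frozen Jacobian (illustrative sketch).

    Each outer iteration factors J(t_k) once and then takes q + 1 substeps
    y <- y - J(t_k)^{-1} F(y), all reusing that LU factorization.
    With q + 1 substeps the local order is q + 2 (one more per substep).
    """
    t = np.asarray(t0, dtype=float)
    for _ in range(max_iter):
        lu_piv = lu_factor(J(t))  # the only O(m^3) factorization per iteration
        y = t
        for _ in range(q + 1):
            # One new F evaluation plus two O(m^2) triangular solves.
            y = y - lu_solve(lu_piv, F(y))
        if np.linalg.norm(y - t) < tol:
            return y
        t = y
    return t


# Usage: intersect the circle t1^2 + t2^2 = 4 with the line t1 = t2.
def F(t):
    return np.array([t[0] ** 2 + t[1] ** 2 - 4.0, t[0] - t[1]])


def J(t):
    return np.array([[2.0 * t[0], 2.0 * t[1]], [1.0, -1.0]])


print(frozen_jacobian_newton(F, J, [1.0, 2.0]))  # converges to (sqrt(2), sqrt(2))
```

Setting `q=0` recovers the classical Newton method, which makes the trade-off visible: larger `q` spends more cheap \(O(m^2)\) solves per factorization, which pays off most for large systems where the \(O(m^3)\) factorization dominates.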

The computational efficiency of the methods is discussed in general form, and the efficiencies of the new schemes are compared with those of existing schemes. The presented methods are found to be, in general, more efficient than similar existing methods. Numerical examples are presented, and the performance is compared with that of existing methods. The theoretical order of convergence is verified in the examples by calculating the computational order of convergence. A comparison of the elapsed CPU times shows that, in general, a method with higher efficiency uses less computing time than a method with lower efficiency; that is, the efficiency results agree with the CPU time used in executing the program.

References

Alzahrani AKH, Behl R, Alshomrani AS (2018) Some higher-order iteration functions for solving nonlinear models. Appl Math Comput 334:80–93

Argyros IK (2007) Computational theory of iterative methods. In: Chui CK, Wuytack L (eds) Series: studies in computational mathematics, vol 15. Elsevier Publ. Co., New York

Argyros IK, Regmi S (2019) Undergraduate research at Cameron University on iterative procedures in Banach and other spaces. Nova Science Publishers, New York

Babajee DKR, Dauhoo MZ, Darvishi MT, Karami A, Barati A (2010) Analysis of two Chebyshev-like third order methods free from second derivatives for solving systems of nonlinear equations. J Comput Appl Math 233(8):2002–2012

Behl R, Cordero A, Motsa SS, Torregrosa JR (2017) Stable high-order iterative methods for solving nonlinear models. Appl Math Comput 303(15):70–80

Brent RP (1973) Some efficient algorithms for solving systems of nonlinear equations. SIAM J Numer Anal 10:327–344

Burden RL, Faires JD (2001) Numerical analysis. PWS Publishing Company, Boston

Choubey N, Panday B, Jaiswal JP (2018) Several two-point with memory iterative methods for solving nonlinear equations. Afr Mat 29(3–4):435–449

Cordero A, Torregrosa JR (2006) Variants of Newton’s method for functions of several variables. Appl Math Comput 183(1):199–208

Cordero A, Torregrosa JR (2007) Variants of Newton’s method using fifth-order quadrature formulas. Appl Math Comput 190(1):686–698

Cordero A, Hueso JL, Martínez E, Torregrosa JR (2010) A modified Newton–Jarratt’s composition. Numer Algorithms 55(1):87–99

Cordero A, Hueso JL, Martínez E, Torregrosa JR (2012) Increasing the convergence order of an iterative method for nonlinear systems. Appl Math Lett 25(12):2369–2374

Cordero A, Feng L, Magreñán ÁA, Torregrosa JR (2015) A new fourth-order family for solving nonlinear problems and its dynamics. J Math Chem 53:893–910

Darvishi MT, Barati A (2007) Super cubic iterative methods to solve systems of nonlinear equations. Appl Math Comput 188(2):1678–1685

Esmaeili H, Ahmadi M (2015) An efficient three-step method to solve system of nonlinear equations. Appl Math Comput 266(1):1093–1101

Fousse L, Hanrot G, Lefèvre V, Pélissier P, Zimmermann P (2007) MPFR: a multiple-precision binary floating-point library with correct rounding. ACM Trans Math Softw 33(2):15

Homeier HHH (2004) A modified Newton method with cubic convergence: the multivariate case. J Comput Appl Math 169(1):161–169

Lotfi T, Bakhtiari P, Cordero A, Mahdiani K, Torregrosa JR (2015) Some new efficient multipoint iterative methods for solving nonlinear systems of equations. Int J Comput Math 92:1921–1934

McNamee JM (2007) Numerical methods for roots of polynomials, Part I. Elsevier, Amsterdam

Noor MA, Waseem M (2009) Some iterative methods for solving a system of nonlinear equations. Comput Math Appl 57(1):101–106

Ortega JM, Rheinboldt WC (1970) Iterative solutions of nonlinear equations in several variables. Academic Press, New York

Ostrowski AM (1960) Solution of equations and systems of equations. Academic Press, New York

Regmi S (2021) Optimized iterative methods with applications in diverse disciplines. Nova Science Publishers, New York

Sauer T (2012) Numerical analysis, 2nd edn. Pearson, Hoboken

Sharma JR, Arora H (2016a) Improved Newton-like methods for solving systems of nonlinear equations. SeMA 74(2):147–163

Sharma JR, Arora H (2016b) Efficient derivative-free numerical methods for solving systems of nonlinear equations. Comput Appl Math 35(1):269–284

Sharma JR, Arora H (2016c) A simple yet efficient derivative-free family of seventh order methods for systems of nonlinear equations. SeMA 73:59–75

Sharma JR, Gupta P (2014) An efficient fifth order method for solving systems of nonlinear equations. Comput Math Appl 67:591–601

Sharma JR, Sharma R, Bahl A (2016) An improved Newton–Traub composition for solving systems of nonlinear equations. Appl Math Comput 290:98–110

Traub JF (1964) Iterative methods for the solution of equations. Prentice-Hall, Hoboken

Weerakoon S, Fernando TGI (2000) A variant of Newton’s method with accelerated third-order convergence. Appl Math Lett 13:87–93

Wolfram S (2003) The mathematica book, 5th edn. Wolfram Media, Champaign

Xiao X, Yin H (2015) A new class of methods with higher order of convergence for solving systems of nonlinear equations. Appl Math Comput 264:300–309

Xiao XY, Yin HW (2016) Increasing the order of convergence for iterative methods to solve nonlinear systems. Calcolo 53(3):285–300

Xiao X, Yin H (2017) Achieving higher order of convergence for solving systems of nonlinear equations. Appl Math Comput 311(C):251–261

Xiao X, Yin H (2018) Accelerating the convergence speed of iterative methods for solving nonlinear systems. Appl Math Comput 333:8–19


Additional information

Communicated by Justin Wan.


About this article

Cite this article

Sharma, J.R., Kumar, S. A class of accurate Newton–Jarratt-like methods with applications to nonlinear models. Comp. Appl. Math. 41, 46 (2022). https://doi.org/10.1007/s40314-021-01739-5
