Abstract

We present a three-step iterative method of convergence order five for solving systems of nonlinear equations. The methodology is based on Newton’s and Newton-like iterations, hence the name Newton-like method. The computational efficiency of the new method is analyzed and compared with that of well-known existing methods. Numerical tests on problems of different nature confirm the robust and efficient convergence behavior of the proposed technique and verify the theoretical results concerning the order of convergence and the computational efficiency. It is shown that, in general, the new method is more efficient than its existing counterparts.



1 Introduction

The construction of fixed point methods for solving nonlinear equations and systems of nonlinear equations is an interesting and challenging task in numerical analysis and many applied scientific branches. The importance of this topic has led to the development of many numerical methods, most frequently of iterative nature (see [1, 16, 20, 22, 26]). With the advancement of computer hardware and software, the problem of solving nonlinear equations by numerical methods has gained additional importance. In this paper, we consider the problem of finding a solution of the system of nonlinear equations \({\mathbf{F}}({\mathbf{x}})={\mathbf{0}}\) by iterative methods of a high order of convergence. This problem can be precisely stated as follows: find a vector \({\mathbf{r}}=(r_1,r_2,\ldots , r_n)^T\) such that \({\mathbf{F}}({\mathbf{r}})={\mathbf{0}}\), where \({\mathbf{F}}:\text{ D } \subseteq \mathbb {R}^n \rightarrow \mathbb {R}^n\) is the given nonlinear vector function \({\mathbf{F}}({\mathbf{x}})=(f_1({\mathbf{x}}), f_2({\mathbf{x}}),\ldots , f_n({\mathbf{x}}))^T\) and \({\mathbf{x}}=(x_1, x_2,\ldots , x_n)^T\). The solution vector \({\mathbf{r}}\) of \({\mathbf{F}}({\mathbf{x}})={\mathbf{0}}\) can be obtained as a fixed point of some function \(\phi :\mathbb {R}^n \rightarrow \mathbb {R}^n\) by means of the fixed point iteration

\[ {\mathbf{x}}^{(k+1)}=\phi ({\mathbf{x}}^{(k)}), \quad k=0,1,2,\ldots \]

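One of the basic procedures for solving systems of nonlinear equations is the quadratically convergent Newton’s method (see [16, 20, 26]), which is given by

\[ {\mathbf{x}}^{(k+1)}={\mathbf{x}}^{(k)}-{\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}{\mathbf{F}}({\mathbf{x}}^{(k)}), \qquad (1) \]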

where \( {\mathbf{F}}'({\mathbf{x}})^{-1}\) is the inverse of the first Fréchet derivative \({\mathbf{F}}'({\mathbf{x}})\) of the function \({\mathbf{F}}({\mathbf{x}})\). From the computational point of view, Newton’s method requires the evaluation of one \({\mathbf{F}}\), one \({\mathbf{F}}'\) and one matrix inversion (i.e. the inverse Fréchet derivative) per iteration. Throughout the paper, we use the abbreviation \(\phi _i^{(p)}\) to denote the ith iterative function of convergence order p.
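As an illustration of Newton’s iteration for systems, the following minimal Python sketch solves the linear system \({\mathbf{F}}'({\mathbf{x}}^{(k)}){\mathbf{d}}={\mathbf{F}}({\mathbf{x}}^{(k)})\) in each step instead of forming the inverse. The helper names (`solve_linear`, `newton_system`), the tolerance and the 2×2 test system are assumptions made for this example only and are not taken from the paper.

```python
import math

def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the caller's data is left untouched.
    M = [list(map(float, A[i])) + [float(b[i])] for i in range(n)]
    for col in range(n):
        # Choose the row with the largest pivot to limit round-off growth.
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[piv][col] == 0.0:
            raise ValueError("singular Jacobian")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def newton_system(F, J, x0, tol=1e-12, max_iter=50):
    """Newton's method: x <- x - J(x)^{-1} F(x), stopped when ||F(x)|| < tol."""
    x = list(x0)
    for k in range(max_iter):
        Fx = F(x)
        if math.sqrt(sum(v * v for v in Fx)) < tol:
            return x, k
        d = solve_linear(J(x), Fx)
        x = [xi - di for xi, di in zip(x, d)]
    return x, max_iter

# Hypothetical test system: the unit circle intersected with the line x1 = x2.
def F(x):
    return [x[0] ** 2 + x[1] ** 2 - 1.0, x[0] - x[1]]

def J(x):
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]

root, iters = newton_system(F, J, [0.8, 0.6])
```

Each pass through the loop costs one evaluation of \({\mathbf{F}}\), one of \({\mathbf{F}}'\) and one linear solve, which mirrors the operation count stated above.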

To improve the order of convergence of Newton’s method, a number of higher order methods have been proposed in the literature. For example, Cordero and Torregrosa [2], Frontini and Sormani [10], Grau et al. [11], Homeier [13], and Noor and Waseem [19] have developed third order methods, each requiring one \({\mathbf{F}}\), two \({\mathbf{F}}'\) and two matrix inversions per iteration. Cordero and Torregrosa have also derived two third-order methods in [3]. One of these methods requires one \({\mathbf{F}}\) and three \({\mathbf{F}}'\), whereas the other requires one \({\mathbf{F}}\) and four \({\mathbf{F}}'\) evaluations per iteration. Both methods also require two matrix inversions in each iteration. Darvishi and Barati [7] and Potra and Pták [23] have proposed third order methods which use two \({\mathbf{F}}\), one \({\mathbf{F}}'\) and one matrix inversion. Cordero et al. developed a fourth order method in [4], which uses two \({\mathbf{F}}\), two \({\mathbf{F}}'\) and one matrix inversion. Cordero et al. [5] have extended the fourth order Jarratt’s method [14] for scalar equations to systems of equations; the resulting method requires one \({\mathbf{F}}\), two \({\mathbf{F}}'\) and two matrix inversions. Darvishi and Barati [8] presented a fourth order method requiring two \({\mathbf{F}}\), three \({\mathbf{F}}'\) and two matrix inversions per iteration. Grau et al. presented a fourth order method in [11] utilizing three \({\mathbf{F}}\), one \({\mathbf{F}}'\) and one matrix inversion. Neta [18] proposed a fourth order method using three \({\mathbf{F}}\), one \({\mathbf{F}}'\) and one matrix inversion. Sharma et al. [24] developed a fourth order method requiring one \({\mathbf{F}}\), two \({\mathbf{F}}'\) and two matrix inversions.

In the quest for faster algorithms, researchers have also proposed fifth and sixth order methods in [4–6, 11, 25]. The fifth order methods by Cordero et al. [5, 6] and Grau et al. [11] require four evaluations per iteration, namely two \({\mathbf{F}}\) and two \({\mathbf{F}}'\). The fifth order method by Cordero et al. [4] requires three \({\mathbf{F}}\) and two \({\mathbf{F}}'\). In addition, the fifth order method in [4] requires one matrix inversion, those in [5, 6] three, and that in [11] two matrix inversions. One sixth order method by Cordero et al. [5] uses two \({\mathbf{F}}\) and two \({\mathbf{F}}'\), while the other sixth order method [6] uses three \({\mathbf{F}}\) and two \({\mathbf{F}}'\). Apart from the mentioned evaluations, the sixth order methods also require two matrix inversions per iteration. Sharma and Gupta [25] proposed a fifth order method requiring two \({\mathbf{F}}\), two \({\mathbf{F}}'\) and two matrix inversions per iteration.

The main goal of this paper is to develop an iterative method of high computational efficiency, that is, one which combines a high convergence order with a low computational cost. To this end, we propose a method with fifth order of convergence based on an iterative scheme that keeps the number of function evaluations and inverse operators as small as possible. In this way, we attain a low computational cost and hence an increased computational efficiency. Moreover, we show that the proposed method is, in general, more efficient than existing methods.

Contents of the paper are summarized as follows. Some preliminary results are presented in Sect. 2. In Sect. 3, we describe the basic method for solving scalar equations. The method developed in Sect. 3 is generalized for systems of equations in Sect. 4. Here, the convergence behavior showing fifth order of convergence is also analyzed. Computational efficiency of the new method is studied and then compared with some well-known existing methods in Sect. 5. In Sect. 6, we present various numerical examples to confirm the theoretical results and to compare convergence properties of the proposed method with existing methods. Concluding remarks are given in Sect. 7.

2 Preliminary results

2.1 Order of convergence

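Let \(\{{\mathbf{x}}^{(k)}\}_{k\geqslant 0}\) be a sequence in \(\mathbb {R}^n\) which converges to \({\mathbf{r}}\). Then the convergence is said to be of order p, \(p>1\), if there exist M, \(M>0\), and \(k_0\) such that

\[ \Vert {\mathbf{x}}^{(k+1)}-{\mathbf{r}}\Vert \leqslant M\Vert {\mathbf{x}}^{(k)}-{\mathbf{r}}\Vert ^p \quad \forall \, k\geqslant k_0 \]

or

\[ \Vert {\mathbf{e}}^{(k+1)}\Vert \leqslant M\Vert {\mathbf{e}}^{(k)}\Vert ^p \quad \forall \, k\geqslant k_0, \]

where \({\mathbf{e}}^{(k)} = {\mathbf{x}}^{(k)}-{\mathbf{r}}.\) The convergence is called linear if \(p = 1\) and there exists M such that \(0 < M < 1\).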

2.2 Error equation

Let \({\mathbf{e}}^{(k)} = {\mathbf{x}}^{(k)}-{\mathbf{r}}\) be the error in the kth iteration. We call the relation

\[ {\mathbf{e}}^{(k+1)}=L({\mathbf{e}}^{(k)})^p+O(({\mathbf{e}}^{(k)})^{p+1}) \]

the error equation. Here, p is the order of convergence and L is a p-linear function, i.e. \(L \in \mathcal {L}(\mathbb {R}^{n}\times \mathop {\cdots }\limits ^{p\text{-times}}\times \mathbb {R}^{n},\mathbb {R}^{n})\), where \(\mathcal {L}\) denotes the set of bounded linear functions.

2.3 Computational order of convergence

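Let \({\mathbf{x}}^{(k-1)}\), \({\mathbf{x}}^{(k)}\) and \({\mathbf{x}}^{(k+1)}\) be three consecutive iterates close to the zero \({\mathbf{r}}\) of \({\mathbf{F}}({\mathbf{x}})\). Then, the computational order of convergence can be approximated using the formula (see [15, 21])

\[ \rho _k=\frac{\log \big (\Vert {\mathbf{F}}({\mathbf{x}}^{(k+1)})\Vert /\Vert {\mathbf{F}}({\mathbf{x}}^{(k)})\Vert \big )}{\log \big (\Vert {\mathbf{F}}({\mathbf{x}}^{(k)})\Vert /\Vert {\mathbf{F}}({\mathbf{x}}^{(k-1)})\Vert \big )}. \]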
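A residual-based estimate of the computational order of convergence can be sketched in a few lines of Python. The form used here, a ratio of logarithms of consecutive residual norms \(\Vert {\mathbf{F}}({\mathbf{x}}^{(k)})\Vert \), is one commonly used variant and is an assumption of this sketch; the function name `computational_order` and the scalar test equation \(x^2-2=0\) are likewise illustrative only.

```python
import math

def computational_order(res_prev, res_curr, res_next):
    """Estimate the order from three consecutive residual norms ||F(x)||."""
    return math.log(res_next / res_curr) / math.log(res_curr / res_prev)

# Illustration with Newton's method on the hypothetical problem x^2 - 2 = 0.
def f(x):
    return x * x - 2.0

def df(x):
    return 2.0 * x

x = 1.5
residuals = []
for _ in range(4):
    residuals.append(abs(f(x)))   # residual at the current iterate
    x = x - f(x) / df(x)          # Newton update

# Use the last three residuals, which are closest to the zero.
rho = computational_order(*residuals[1:4])
```

For Newton’s method the estimate should be close to 2; in double precision only a few iterates are usable before round-off dominates the residuals, which is why multiple-precision arithmetic is convenient for higher order methods.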

2.4 Computational efficiency

The computational efficiency of an iterative method is measured by the efficiency index \(E=p^{1/C}\) (see [11]), where p is the order of convergence and C is the computational cost given by

\[ C(\mu _0,\mu _1,n)=P_0(n)\mu _0+P_1(n)\mu _1+P(n). \]

Here, \(P_0(n)\) represents the number of evaluations of scalar functions \((f_1, f_2, \ldots , f_n)\) used in the evaluations of \({\mathbf{F}}\), \(P_1(n)\) is the number of evaluations of scalar functions of \({\mathbf{F}}'\), i.e. \(\frac{\partial f_i}{\partial x_j}\), \(1\leqslant i,j \leqslant n\), P(n) represents the number of products or quotients needed per iteration, and \(\mu _0\) and \(\mu _1\) are ratios between products and evaluations required to express the value of \(C(\mu _0,\mu _1,n)\) in terms of products.

In case of iterative methods for scalar equations \(f(x)=0\), the cost C is measured as the number of new pieces of information required by the method per iterative step. A ‘piece of information’ typically is any evaluation of the function f or one of its derivatives.

3 Basic method

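In what follows, we first develop the scheme for solving the scalar equation \(f(x)=0\) and then, based on this, state the generalized form for systems of nonlinear equations. Let us consider the scheme

\[ \begin{aligned} y_k&=x_k-\frac{f(x_k)}{f'(x_k)},\\ z_k&=y_k-a\,\frac{f(y_k)}{f'(x_k)},\\ x_{k+1}&=y_k-b\,\frac{f(y_k)}{f'(x_k)}-c\,\frac{f(z_k)}{f'(x_k)}, \end{aligned} \qquad (2) \]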

where a, b and c are parameters to be determined from the following convergence theorem.

Theorem 1

Let the function \(f:I \rightarrow \mathbb {R}\) be a real valued function on I, where I is a neighborhood of a simple zero r of f(x). Assume that f(x) is sufficiently differentiable in I. If an initial approximation \(x_0\) is sufficiently close to r, then the local order of convergence of method (2) is at least 5, if \(a=5,\; b=9/5\) and \(c=1/5.\)

Proof

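Let \({e}_k=x_k-r\), \({e}_{y_k}=y_k-r\) and \({e}_{z_k}=z_k-r\) be the errors in the kth iteration. Using the fact that \(f(r)=0\), \(f'(r)\ne 0\), we write the Taylor series expansions of the functions \(f(x_k)\), \(f'(x_k)\), \(f(y_k)\) and \(f(z_k)\) about r as follows

\[ f(x_k)=f'(r)\big [e_k+A_2e_k^2+A_3e_k^3+A_4e_k^4+A_5e_k^5+O(e_k^6)\big ], \qquad (3) \]

\[ f'(x_k)=f'(r)\big [1+2A_2e_k+3A_3e_k^2+4A_4e_k^3+5A_5e_k^4+O(e_k^5)\big ], \qquad (4) \]

\[ f(y_k)=f'(r)\big [e_{y_k}+A_2e_{y_k}^2+O(e_{y_k}^3)\big ], \qquad (5) \]

\[ f(z_k)=f'(r)\big [e_{z_k}+A_2e_{z_k}^2+O(e_{z_k}^3)\big ], \qquad (6) \]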

where \(A_j=(1/j!)f^{(j)}(r)/f'(r), \; j=2, 3, 4,\ldots \)

Let \({e}_{k+1}=x_{k+1}-r\) be the error in the \((k+1)\)th iteration. Substituting equations (4), (5) and (6) in the last step of (2) and then simplifying, we obtain the error equation as

where \(e_{y_kz_k}=b{e}_{y_k}+c{e}_{z_k}\).

Substitution of (3) and (4) in the first step of (2) yields

Then using (4), (5) and (8) in the second step of (2), we get

where \(K_1=-a+1, \ K_2=2a-1, \ K_3=-13a+4, \ K_4=19a-4, \ K_5=32a-10.\)

Combining (7), (8) and (9), it follows that

where \(L_1=(a-1)c-b +1, \ L_2=(3a-2)c-2b+1, \ L_3=-(a^2-27a+13)c+13b-4, L_4=(5a^2-52a+19)c+19b-4 \ \text{ and } \ L_5= (a^2-33a+16)c+16b-5.\)

In order to find the parameters a, b and c it will be sufficient to equate the coefficients of \(e_k^2, e_k^3\) and \(e_k^4\) to zero. Thus, we have the following system of equations:

Solving the above system of equations, we get \(a=5, b=9/5\) and \(c=1/5\). Putting these values of a, b and c in (10), the final error equation of the proposed scheme is given as

This error equation shows that the order of convergence of method (2) is five, which completes the proof of Theorem 1. \(\square \)
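The fifth order behavior can be checked numerically. The sketch below implements the three-step scalar scheme with \(a=5\), \(b=9/5\), \(c=1/5\) in the form read off from the text (a Newton step followed by two steps that reuse \(f'(x_k)\)), using Python’s `decimal` module as a stand-in for multiple-precision arithmetic. The test equation \(x^3+4x^2-10=0\), the starting value 1.5, the working precision and the residual-based order estimate are all assumptions of this example.

```python
from decimal import Decimal, getcontext

getcontext().prec = 400  # working precision in decimal digits (assumed value)

A = Decimal(5)
B = Decimal(9) / Decimal(5)
C = Decimal(1) / Decimal(5)

def newton_like_step(f, df, x):
    """One step of the three-step scheme; f'(x) is evaluated once and reused."""
    d = df(x)
    y = x - f(x) / d                      # Newton step
    fy = f(y)
    z = y - A * fy / d                    # second step with frozen derivative
    return y - B * fy / d - C * f(z) / d  # third step with frozen derivative

# Hypothetical test equation with a simple zero near 1.3652.
def f(x):
    return x ** 3 + 4 * x ** 2 - 10

def df(x):
    return 3 * x ** 2 + 8 * x

x = Decimal("1.5")
residuals = []
for _ in range(3):
    x = newton_like_step(f, df, x)
    residuals.append(abs(f(x)))

# Residual-based estimate of the order from the last three iterates.
rho = (residuals[2] / residuals[1]).ln() / (residuals[1] / residuals[0]).ln()
```

Each step uses three evaluations of f and one of \(f'\); under these assumptions the estimate `rho` should come out close to 5.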

Remark 1

The proposed scheme is a multipoint method without memory, which is based on four function evaluations (namely three f and one \(f'\)) and possesses fifth order convergence. Applying the definition of computational efficiency for the scalar case (Sect. 2.4), we have \(E= 5^{1/4}\approx 1.495\). So, the efficiency is better than that of Newton’s method (\(E = 2^{1/2} \approx 1.414\)). However, according to the Kung-Traub hypothesis [17], multipoint methods without memory based on n function evaluations can achieve order of convergence \(2^{n-1}\). For example, with four function evaluations a method of optimal eighth order convergence can be developed. In this case the efficiency is \(E = 8^{1/4}\approx 1.682\). Therefore, the presented scheme is not an efficient one for finding a zero of a univariate function.
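The three efficiency indices quoted in this remark follow directly from \(E=p^{1/C}\) with C counted as the number of function evaluations per step. A small Python check (the function name `efficiency_index` is chosen for this example):

```python
def efficiency_index(order, cost):
    """Efficiency index E = p**(1/C) for convergence order p and cost C."""
    return order ** (1.0 / cost)

newton = efficiency_index(2, 2)    # one f and one f' per step
proposed = efficiency_index(5, 4)  # three f and one f' per step
optimal8 = efficiency_index(8, 4)  # optimal eighth order, four evaluations
```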

4 Generalized method

The novel feature of the method (2) is its simple design, which makes it easy to apply to systems of nonlinear equations. Moreover, the method may prove to be efficient for systems of equations. Solving a system of equations involves computations such as evaluations of the vector function, of the Fréchet derivative and of its inverse, matrix multiplications and so on. Each of these requires a different amount of computational work, the most expensive being the evaluation of the inverse Fréchet derivative. For systems, therefore, the computational efficiency cannot be measured by considering only the number of function and derivative evaluations. Keeping these facts in view, our aim here is to generalize the scheme (2) for solving systems of nonlinear equations.

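Let us consider the problem of solving the system of equations \({\mathbf{F}}({\mathbf{x}})={\mathbf{0}}\) by an iterative method based on the scheme (2). Writing the corresponding formula with \(a=5, b=9/5\) and \(c=1/5\) for systems of equations, we obtain

\[ \begin{aligned} {\mathbf{y}}^{(k)}&={\mathbf{x}}^{(k)}-{\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}{\mathbf{F}}({\mathbf{x}}^{(k)}),\\ {\mathbf{z}}^{(k)}&={\mathbf{y}}^{(k)}-5\,{\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}{\mathbf{F}}({\mathbf{y}}^{(k)}),\\ {\mathbf{x}}^{(k+1)}&={\mathbf{y}}^{(k)}-\frac{9}{5}\,{\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}{\mathbf{F}}({\mathbf{y}}^{(k)})-\frac{1}{5}\,{\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}{\mathbf{F}}({\mathbf{z}}^{(k)}). \end{aligned} \qquad (12) \]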

This scheme uses Newton’s iteration (1) in the first step and Newton-like iterations in the subsequent steps. For this reason the scheme is called a Newton-like method. From now on this method is denoted by \(\phi _1^{(5)}\). It is clear that \(\phi _1^{(5)}\) uses three \({\mathbf{F}}\), one \({\mathbf{F}}'\) and one matrix inversion per iteration. In order to analyze the convergence properties, we recall the following result on the Taylor expansion of vector functions (see [20]).

Lemma 1

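Let the function \({\mathbf{F}}:\text{ D }\subseteq \mathbb {R}^{n}\rightarrow \mathbb {R}^{n}\) be p-times Fréchet differentiable in a convex set \(\text{ D }\subseteq \mathbb {R}^{n}\), then for any \({\mathbf{x}}, {\mathbf{h}} \in \mathbb {R}^{n}\) the following expression holds:

\[ {\mathbf{F}}({\mathbf{x}}+{\mathbf{h}})={\mathbf{F}}({\mathbf{x}})+{\mathbf{F}}'({\mathbf{x}}){\mathbf{h}}+\frac{1}{2!}{\mathbf{F}}''({\mathbf{x}}){\mathbf{h}}^2+\cdots +\frac{1}{(p-1)!}{\mathbf{F}}^{(p-1)}({\mathbf{x}}){\mathbf{h}}^{p-1}+{\mathbf{R}}_p, \]

where

\[ \Vert {\mathbf{R}}_p\Vert \leqslant \frac{1}{p!}\sup _{0\leqslant t\leqslant 1}\Vert {\mathbf{F}}^{(p)}({\mathbf{x}}+t{\mathbf{h}})\Vert \, \Vert {\mathbf{h}}\Vert ^p \quad \text{and} \quad {\mathbf{h}}^p=({\mathbf{h}},{\mathbf{h}},\mathop {\ldots }\limits ^{p},{\mathbf{h}}). \]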

The following theorem gives the convergence order of the proposed method.

Theorem 2

Let the function \({\mathbf{F}}:\text{ D }\subseteq \mathbb {R}^n\rightarrow \mathbb {R}^n\) be sufficiently Fréchet differentiable in an open neighborhood \(\text{ D }\) of its zero \({\mathbf{r}}\). Suppose that \({\mathbf{F}}'({\mathbf{x}})\) is continuous and nonsingular at \({\mathbf{r}}\). If an initial approximation \({\mathbf{x}}^{(0)}\) is sufficiently close to \({\mathbf{r}}\), then the local order of convergence of the proposed Newton-like method \((\phi _1^{(5)})\) is 5.

Proof

Taylor expansion of \({\mathbf{F}}({\mathbf{x}})\) around \({\mathbf{x}}^{(k)}\) is

Let \({\mathbf{e}}^{(k)}={\mathbf{x}}^{(k)}-{\mathbf{r}}\) and assuming that \({\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}\) exists, then setting \({\mathbf{x}}={\mathbf{r}}\) and using \({\mathbf{F}}({\mathbf{r}})={\mathbf{0}}\) in (13),

Pre-multiplying (14) by \({\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}\),

where \({\mathbf{G}}({\mathbf{x}}^{(k)})={\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}{\mathbf{F}}''({\mathbf{x}}^{(k)}), \ {\mathbf{H}}({\mathbf{x}}^{(k)})={\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}{\mathbf{F}}'''({\mathbf{x}}^{(k)})\) and \({\mathbf{J}}({\mathbf{x}}^{(k)})={\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}{\mathbf{F}}^{(iv)}({\mathbf{x}}^{(k)})\).

Taylor expansion of \({\mathbf{F}}({\mathbf{y}}^{(k)})\) around \({\mathbf{x}}^{(k)}\) is

Using first step of (12) and then value of \({\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}{\mathbf{F}}\left( {\mathbf{x}}^{(k)}\right) \) from (15) in (16), we get

Taylor expansion of \({\mathbf{F}}({\mathbf{z}}^{(k)})\) around \({\mathbf{x}}^{(k)}\) is

Combining the first two steps of (12), we obtain \({\mathbf{z}}^{(k)}-{\mathbf{x}}^{(k)}=- {\mathbf{F}}'({\mathbf{x}}^{(k)})^{-1}\big ({\mathbf{F}}\left( {\mathbf{x}}^{(k)}\right) + 5{\mathbf{F}}({\mathbf{y}}^{(k)})\big )\). Then using this value in (18), it follows that

Combining all the three steps of (12), we can write

Using (14), (17) and (19) in (20), we obtain the error equation as

This equation shows that proposed method \((\phi _1^{(5)})\) for system of equations possesses fifth order of convergence. This completes the proof of Theorem 2. \(\square \)
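The Newton-like method \(\phi _1^{(5)}\) analyzed above can be sketched in Python as follows. The point of the sketch is that the Jacobian is factorized once per iteration and the factorization is reused for the three linear solves with right-hand sides \({\mathbf{F}}({\mathbf{x}}^{(k)})\), \({\mathbf{F}}({\mathbf{y}}^{(k)})\) and \({\mathbf{F}}({\mathbf{z}}^{(k)})\). The pure-Python LU routines, the function names and the 2×2 test system are assumptions of this example, not the authors’ implementation.

```python
import math

def lu_factor(A):
    """Doolittle LU factorization with partial pivoting; returns (LU, perm)."""
    n = len(A)
    LU = [list(map(float, row)) for row in A]
    perm = list(range(n))
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(LU[r][k]))
        if LU[p][k] == 0.0:
            raise ValueError("singular Jacobian")
        LU[k], LU[p] = LU[p], LU[k]
        perm[k], perm[p] = perm[p], perm[k]
        for i in range(k + 1, n):
            LU[i][k] /= LU[k][k]              # multiplier stored in place of L
            for j in range(k + 1, n):
                LU[i][j] -= LU[i][k] * LU[k][j]
    return LU, perm

def lu_solve(LU, perm, b):
    """Solve A x = b from a factorization returned by lu_factor."""
    n = len(b)
    y = [0.0] * n
    for i in range(n):                        # forward substitution, unit L
        y[i] = b[perm[i]] - sum(LU[i][j] * y[j] for j in range(i))
    x = [0.0] * n
    for i in range(n - 1, -1, -1):            # back substitution with U
        x[i] = (y[i] - sum(LU[i][j] * x[j] for j in range(i + 1, n))) / LU[i][i]
    return x

def newton_like_step(F, J, x):
    """One iteration: three F evaluations, one Jacobian, one factorization."""
    LU, perm = lu_factor(J(x))
    d1 = lu_solve(LU, perm, F(x))
    y = [xi - di for xi, di in zip(x, d1)]
    d2 = lu_solve(LU, perm, F(y))
    z = [yi - 5.0 * di for yi, di in zip(y, d2)]
    d3 = lu_solve(LU, perm, F(z))
    return [yi - 1.8 * u - 0.2 * v for yi, u, v in zip(y, d2, d3)]

# Hypothetical test system: the unit circle intersected with the line x1 = x2.
def F(x):
    return [x[0] ** 2 + x[1] ** 2 - 1.0, x[0] - x[1]]

def J(x):
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]

x = [0.8, 0.6]
for _ in range(4):
    x = newton_like_step(F, J, x)
```

In double precision the fifth order is not observable beyond two or three iterations; the sketch only illustrates the structure of one iteration.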

5 Computational efficiency

In order to assess the computational efficiency of the derived method, we consider all the evaluations and operations that contribute to the total cost of computation. For example, to compute \({\mathbf{F}}\) in any iterative method we evaluate n scalar functions, whereas the number of scalar evaluations is \(n^2\) for any new derivative \({\mathbf{F}}'\). In addition, we must include the amount of computational work required to evaluate the inverse of a matrix. Instead of computing the inverse operator we solve a linear system, where we have \(n(n - 1)(2n - 1)/6\) products and \(n(n - 1)/2\) quotients in the LU decomposition, and \(n(n - 1)\) products and n quotients in the resolution of two triangular linear systems. Moreover, we must add \(n^2\) products for the multiplication of a matrix by a vector or of a matrix by a scalar, and n products for the multiplication of a vector by a scalar. We suppose that a quotient is equivalent to l products.
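The counting rules of this paragraph translate into a small cost model. The Python sketch below encodes only the operation counts stated above; how many triangular solves and scalar-vector products a given method needs must be supplied by the user, and the counts used for \(\phi _1^{(5)}\) in the example (three solves, three scalar-vector products) are an assumption of this sketch rather than the paper’s cost expression.

```python
def lu_cost(n, l):
    """Cost of one LU decomposition in products (a quotient counts as l products)."""
    return n * (n - 1) * (2 * n - 1) / 6 + l * n * (n - 1) / 2

def triangular_solves_cost(n, l):
    """Cost of solving the two triangular systems after an LU decomposition."""
    return n * (n - 1) + l * n

def iteration_cost(n, l, mu0, mu1, n_F, n_dF, n_lu, n_solves,
                   n_scalar_vec=0, n_mat_vec=0):
    """Total cost per iteration, expressed in products."""
    return (n_F * n * mu0                 # n scalar functions per F
            + n_dF * n * n * mu1          # n^2 scalar functions per F'
            + n_lu * lu_cost(n, l)
            + n_solves * triangular_solves_cost(n, l)
            + n_mat_vec * n * n           # matrix-vector products
            + n_scalar_vec * n)           # scalar-vector products

def efficiency_index(p, cost):
    """Efficiency index E = p**(1/C)."""
    return p ** (1.0 / cost)

# Parameters (n, l, mu0, mu1) as reported for Problem 3.
n, l, mu0, mu1 = 8, 2.66, 2.0, 0.125
cost_newton = iteration_cost(n, l, mu0, mu1, n_F=1, n_dF=1, n_lu=1, n_solves=1)
cost_new = iteration_cost(n, l, mu0, mu1, n_F=3, n_dF=1, n_lu=1, n_solves=3,
                          n_scalar_vec=3)  # assumed operation counts
E_newton = efficiency_index(2, cost_newton)
E_new = efficiency_index(5, cost_new)
```

Because all costs are reduced to products, methods with different mixes of evaluations and linear algebra can be compared on a single scale.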

The computational efficiency of the presented Newton-like method \(\phi _1^{(5)}\) is compared with that of some well-known fourth and fifth order methods, namely the fourth order generalized Jarratt’s method [5], the fourth order method by Cordero et al. [4], and the fifth order methods by Cordero et al. [5, 6], Grau et al. [11] and Sharma and Gupta [25]. These existing methods are given as follows:

Fourth order Generalized Jarratt method \((\phi _1^{(4)})\):

where \(\alpha \in \mathbb {R}-\{ -1/2 \}\).

Fifth order method by Cordero et al. \((\phi _3^{(5)})\):

Fifth order method by Grau et al. \((\phi _4^{(5)})\):

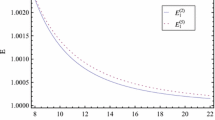

Let us denote the efficiency index of \(\phi _i^{(p)}\) by \(E_i^{(p)}\) and its computational cost by \(C_i^{(p)}\). Then, using the definition of computational efficiency in Sect. 2.4 and taking into account all the evaluations and operations discussed above, we have

Here \(\mu _0\) and \(\mu _1\) are the ratios between products and evaluations as stated in the definition of computational efficiency in Sect. 2.

5.1 Efficiency comparison

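To compare the computational efficiencies of the iterative methods, say \({{\phi }}_i^{(p)}\) against \({{\phi }}_j^{(q)}\), we consider the ratio

\[ R_{i,j}^{p,q}=\frac{\log E_i^{(p)}}{\log E_j^{(q)}}=\frac{C_j^{(q)}\log (p)}{C_i^{(p)}\log (q)}. \qquad (29) \]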

It is clear that if \(R_{i,j}^{p,q}>1,\) the iterative method \({{\phi }}_i^{(p)}\) is more efficient than \({{\phi }}_j^{(q)}\). The boundary between the two computational efficiencies is given by \(R_{i,j}^{p,q}=1\); it is expressed by an equation giving \(\mu _0\) as a function of \(\mu _1\), n and l, where (\(\mu _1, \mu _0) \in (0,+\infty ) \times (0,+\infty )\), n is a positive integer \(\geqslant 2\) and \(l \geqslant 1\).
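The comparison criterion can be sketched as follows, assuming the ratio is taken as the quotient of the logarithms of the two efficiency indices, which is consistent with the constants \(\log (5)\) and \(\log (4)\) that appear in the boundary equations. The function name `efficiency_ratio` and the scalar example (proposed scheme with four evaluations against Newton’s method with two) are illustrative assumptions.

```python
import math

def efficiency_ratio(p, cost_p, q, cost_q):
    """R = log(E_p) / log(E_q) = (C_q * log p) / (C_p * log q)."""
    return (cost_q * math.log(p)) / (cost_p * math.log(q))

# Scalar illustration: order 5 at cost 4 against order 2 at cost 2.
R = efficiency_ratio(5, 4, 2, 2)
more_efficient = R > 1.0
```

Since the logarithm is increasing, \(R>1\) holds exactly when the first efficiency index exceeds the second, so the sign of \(R-1\) decides the comparison without computing the indices themselves.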

\(\phi _1^{(5)}\) versus \(\phi _1^{(4)}\) case:

Using (22) and (24) in (29) the boundary \(R_{1,1}^{5,4}=1\), expressed in \(\mu _0\) as function of \(\mu _1\), n and l, is given by

where \(r=\log (5)\) and \(s=\log (4)\). A comparison between the efficiencies \(E_1^{(5)}\) and \(E_1^{(4)}\) can be made in the (\(\mu _1, \mu _0\))-plane. In Fig. 1, we present some boundary lines \(L_{i}\) (\(i=\)1, 2, 3, 4) in the (\(\mu _1, \mu _0\))-plane corresponding to n = 5, 10, 15 and 20, taking \(l=2.66\) in each case. The reason for selecting the value 2.66 for l will become clear in the next section. These boundaries are straight lines with positive slope, where \(E_1^{(5)} > E_1^{(4)}\) on the right of (below) and \(E_1^{(4)} > E_1^{(5)}\) on the left of (above) each line.

\(\phi _1^{(5)}\) versus \(\phi _2^{(4)}\) case:

The boundary \(R_{1,2}^{5,4}=1\), calculated by using (23) and (24) in (29), is expressed by

In order to compare the efficiencies \(E_1^{(5)}\) and \(E_2^{(4)}\), here we also draw some particular boundaries \(L_{i}\) (\(i=1\), 2, 3, 4) in the (\(\mu _1\), \(\mu _0\))-plane using the same set of values of n and l as in the previous case. These boundaries are the straight lines with positive slopes, where \(E_1^{(5)} > E_2^{(4)}\) on the right and \(E_2^{(4)} > E_1^{(5)}\) on the left of each line (see Fig. 2).

\(\phi _1^{(5)}\) versus \(\phi _2^{(5)}\) case:

For this case the boundary \(R_{1,2}^{5,5}=1\) is given as

The comparison between the efficiencies \(E_1^{(5)}\) and \(E_2^{(5)}\) can be made in the (\(\mu _1, \mu _0\))-plane. Thus, we draw some particular boundaries \(L_{i}\) (\(i=1\), 2, 3, 4) in the (\(\mu _1\), \(\mu _0\))-plane using the values of n and l considered in the previous cases. The boundaries are straight lines with positive slope, where \(E_1^{(5)} > E_2^{(5)}\) on the right of (below) and \(E_2^{(5)} > E_1^{(5)}\) on the left of (above) each line (see Fig. 3).

\(\phi _1^{(5)}\) versus \(\phi _3^{(5)}\) case:

The boundary \(R_{1,3}^{5,5}=1\) is given by

Here also we show some boundary lines \(L_{i}\) (\(i=1\), 2, 3, 4) in the (\(\mu _1\), \(\mu _0\))-plane using the values of n and l as in the previous cases for comparing the efficiencies \(E_1^{(5)}\) and \(E_3^{(5)}\). Such boundaries are straight lines with positive slopes, where \(E_1^{(5)} > E_3^{(5)}\) on the right and \(E_3^{(5)} > E_1^{(5)}\) on the left of each line (see Fig. 4).

\(\phi _1^{(5)}\) versus \(\phi _4^{(5)}\) case:

The boundary \(R_{1,4}^{5,5}=1\) is expressed by

We draw the boundaries \(L_{i}\) (\(i=1\), 2, 3, 4) in the (\(\mu _1\), \(\mu _0\))-plane using the same set of values of n and l as in the previous case. These boundaries are the straight lines with positive slope, where \(E_1^{(5)} > E_4^{(5)}\) on the right and \(E_4^{(5)} > E_1^{(5)}\) on the left of each line (see Fig. 5).

\(\phi _1^{(5)}\) versus \(\phi _5^{(5)}\) case:

For this case the boundary \(R_{1,5}^{5,5}=1\) is given by

We draw the boundaries \(L_{i}\) (\(i=1\), 2, 3, 4) in the (\(\mu _1\), \(\mu _0\))-plane using the previously considered values of n and l in order to compare the efficiencies \(E_1^{(5)}\) and \(E_5^{(5)}\). The boundaries are the straight lines with positive slope, where \(E_1^{(5)} > E_5^{(5)}\) on the right and \(E_5^{(5)} > E_1^{(5)}\) on the left of each line (see Fig. 6).

We summarize the results proved above in the following theorem:

Theorem 3

For \(\mu _0 > 0\), \(\mu _1 > 0\), \(l \geqslant 1\) and \( n\geqslant 2\) we have:

(i) \(E_1^{(5)}> E_1^{(4)}\) for \(\mu _0<m_1\),

(ii) \(E_1^{(5)}> E_2^{(4)}\) for \(\mu _0<m_2\),

(iii) \(E_1^{(5)}> E_2^{(5)}\) for \(\mu _0<m_3\),

(iv) \(E_1^{(5)}> E_3^{(5)}\) for \(\mu _0<m_4\),

(v) \(E_1^{(5)}> E_4^{(5)}\) for \(\mu _0<m_5\),

(vi) \(E_1^{(5)}> E_5^{(5)}\) for \(\mu _0<m_6\),

where

Remark 2

It is clear from Figs. 1, 2, 3, 4, 5 and 6 that the efficiency region of the proposed method (\(\phi _1^{(5)}\)) increases in size with increasing value of n as compared with the efficiency regions of existing methods which decrease in size. That means the efficiency of new method is greater than the efficiency of existing methods in a wide region of \((\mu _1,\mu _0)\)-plane with increasing n. This shows that the proposed method is more efficient, especially in case of the systems with large dimensions.

Remark 3

It has been seen that, in general, the presented method is more efficient than the second and third order methods. For this reason we have not included such lower order methods in the comparison of computational efficiencies.

6 Numerical results

In order to illustrate the convergence behavior and computational efficiency of the new scheme \({{\phi }}_1^{(5)}\), we consider some numerical examples and compare the performance with existing methods, namely fourth order (\({{\phi }}_i^{(4)}, \, i=1,2)\) and fifth order (\({{\phi }}_j^{(5)}, \, j=2,3,4,5)\) methods. The computations are performed in the programming package Mathematica [27] using multiple-precision arithmetic with 4096 digits. For every method, we analyze the number of iterations (k) needed to converge to the solution such that the stopping criterion \(||{\mathbf{x}}^{(k+1)}-{\mathbf{x}}^{(k)}|| + ||{\mathbf{F}}({\mathbf{x}}^{(k)})|| < 10^{-300}\) is satisfied. In numerical results, we also include CPU time used in the execution of program which is computed by the Mathematica command “TimeUsed[ ]”.
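The stopping rule \(||{\mathbf{x}}^{(k+1)}-{\mathbf{x}}^{(k)}|| + ||{\mathbf{F}}({\mathbf{x}}^{(k)})|| < \text{tol}\) can be wrapped around any one-step iteration function. The following Python sketch does so in double precision; the driver name `iterate`, the tolerance \(10^{-12}\) (in place of \(10^{-300}\), which requires multiple-precision arithmetic), the Euclidean norm and the one-dimensional Newton example are assumptions of this illustration.

```python
import math

def norm2(v):
    """Euclidean norm of a vector given as a list of floats."""
    return math.sqrt(sum(c * c for c in v))

def iterate(step, F, x0, tol=1e-12, max_iter=100):
    """Apply x_{k+1} = step(x_k) until ||x_{k+1} - x_k|| + ||F(x_k)|| < tol.

    Returns the last iterate and the number of iterations performed.
    """
    x = list(x0)
    for k in range(1, max_iter + 1):
        x_new = step(x)
        diff = norm2([a - b for a, b in zip(x_new, x)])
        if diff + norm2(F(x)) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")

# Hypothetical one-dimensional example: Newton's method for x^2 - 2 = 0.
def F(x):
    return [x[0] * x[0] - 2.0]

def newton_step(x):
    return [x[0] - (x[0] * x[0] - 2.0) / (2.0 * x[0])]

root, k = iterate(newton_step, F, [1.5])
```

Combining the step length with the residual guards against stopping at a point where the iterates stagnate but the residual is still large, and vice versa.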

The results of Theorem 3 are also verified through numerical examples. To do this, we need an estimate of the factors \(\mu _{0}\) and \(\mu _{1}\). To obtain this estimate, we express the cost of the evaluation of elementary functions in terms of products, which depends on the computer, the software and the arithmetic used (see, for example, [9]). In Table 1, the elapsed CPU time (measured in milliseconds) for the computation of elementary functions and an estimate of the cost of the elementary functions in product units are displayed. The programs are run on an Intel(R) Core(TM) i5-520M CPU @ 2.40 GHz (64-bit machine) under Microsoft Windows 7 Home Premium 2009 and are compiled by the computational software program Mathematica using multiple-precision arithmetic. It can be observed from Table 1 that, for this hardware and software, the computational cost of division relative to multiplication is \(l=2.66\).

For numerical tests we consider the following problems:

Problem 1

Consider the system of two equations (selected from [25]):

with initial value \({\mathbf{x}}^{(0)} = \{-1.5,1.5\}^T\) towards the solution: \({\mathbf{r}} =\{0,0\}^T\). For this problem the corresponding values of the parameters \(\mu _0\) and \(\mu _1\) (calculated by using Table 1) are 136.93 and 68.21, which we use in (22)–(28) for computing computational costs and efficiency indices, and also to verify the results of Theorem 3. The other parameters are \((n, l) = (2, 2.66)\).

Problem 2

Consider the Gauss-Legendre quadrature formula:

where \(x_j\) and \(\omega _j\) are called abscissas and weights, respectively. The abscissas and weights are symmetrical with respect to the middle point of the interval. There being 2m unknowns, 2m relations between them are necessary so that the formula is exact for all polynomials of degree not exceeding \(2m-1\). Thus we consider

Then,

Also,

But both the above last equations are identical for all values of \(c_i\), hence comparing coefficients of \(c_i\), we obtain the following system of 2m equations in 2m unknowns \(x_j\) and \(w_j\) (\(j=1,2,\ldots ,m\)):

In particular, we solve this problem for \(m=2\) so that \(n=4\) by choosing the initial value \({\mathbf{x}}^{(0)}=\{x_1^{(0)},x_2^{(0)},\omega _1^{(0)},\omega _2^{(0)}\}^T=\{0,1,1,1\}^T\) towards the solution:

For this problem the corresponding values of parameters \(\mu _0\) and \(\mu _1\), calculated by using the Table 1, are 1.5 and 0.75. Note that the other parameters are \((n, l) = (4, 2.66)\).

Problem 3

The boundary value problem (see [20]):

is studied. Consider the following partitioning of the interval [0, 1]:

Let us define \(u_0=u(t_0)=0, u_1=u(t_1),\ldots , u_{m-1}=u(t_{m-1}), u_m=u(t_m)=1.\) If we discretize the problem by using the numerical formulae for first and second derivatives

we obtain a system of \(m-1\) nonlinear equations in \(m-1\) variables:

In particular, we solve this problem for \(m=9\) so that \(n=8\) by selecting \({\mathbf{u}}^{(0)}=\{-1,-1,\mathop {\ldots }\limits ^{},-1\}^T\) as the initial value and \(a=2\). The solution of this problem is,

and concrete values of the parameters \((n,l,\mu _0,\mu _1)\) are (8, 2.66, 2, 0.125).

Problem 4

Consider the system of thirteen equations (selected from [12]):

with initial value \( {\mathbf{x}}^{(0)} = \{2.5,2.5,\mathop {\ldots }\limits ^{},2.5\}^T\) towards the solution:

For this problem values of the parameters \((n,l,\mu _0,\mu _1)\) are (13, 2.66, 78.74, 6.057).

Problem 5

Lastly, considering the system of twenty equations (selected from [25]):

In this problem the initial approximation must be chosen close to the required solution, since the problem has many solution vectors, each having components of the same value with magnitude less than one. That means each solution vector satisfies \(\Vert {\mathbf{r}}\Vert =\sqrt{\sum _{i=1}^{20}|r_i|^{2}}<\sqrt{20}\). Two solutions of this problem are given by,

and

We choose the initial approximation \({\mathbf{x}}^{(0)} = \{-1,-1,\mathop {\ldots }\limits ^{},-1\}^T\) to solve the problem. The concrete values of parameters used in (22)–(28) are \((n,l,\mu _0,\mu _1)=(20,2.66,96.88,4.862)\).

In Table 2, we exhibit the numerical results obtained for Problems 1–5 by implementing the methods \({{\phi }}_i^{(4)}, \, (i=1,2)\) and \({{\phi }}_j^{(5)}, \, (j=1,2,\ldots ,5)\). Displayed in the table are the necessary iterations (k), the computational order of convergence \((\rho _k)\), the computational cost \((C_i^{(p)})\) in terms of products, the computational efficiency \((E_i^{(p)})\) and the mean elapsed CPU time (CPU-Time). The computational cost and efficiency are calculated according to the corresponding expressions given by (22)–(28), using the values of the parameters n, \(\mu _0\) and \(\mu _1\) given at the end of each problem and taking \(l=2.66\) in each case.

The mean elapsed CPU time is calculated by taking the mean over 50 runs of the program, where \(||{\mathbf{x}}^{(k+1)}-{\mathbf{x}}^{(k)}|| + ||{\mathbf{F}}({\mathbf{x}}^{(k)})|| < 10^{-300}\) is used as the stopping criterion in each single run.

From the numerical results, we can observe that, like the existing methods, the present method shows consistent convergence behavior. The calculated values of the computational order of convergence displayed in the third column of Table 2 verify the theoretical fifth order of convergence proved in Sect. 4. The existing method \({{\phi }}_4^{(5)}\) by Grau et al. converges to the solution with sixth order convergence in the second and third problems, which consist of algebraic equations. In order to confirm that the results obtained in Table 2 are also in accordance with the results of Theorem 3, we find \(m_i\) \((i=1,2,\ldots ,6)\) using the values of l, n and \(\mu _1\) for each numerical problem. The results are presented in Table 3. Comparing the efficiency results of Table 2 with those of Table 3, we find that they are compatible with each other for each numerical problem, and hence Theorem 3 is verified. From the numerical values of the efficiency \((E_i^{(p)})\) and the elapsed CPU time (CPU-Time), we can observe that a method with larger efficiency uses less computing time than a method with smaller efficiency. This shows that the efficiency results are in agreement with the CPU time used in the execution of the program. Moreover, the results for efficiency and CPU time confirm the robust and efficient nature of the new method.

7 Concluding remarks

In the foregoing study, we have developed a fifth-order iterative method for solving systems of nonlinear equations. The scheme is very simple and consists of three steps: the first is Newton’s step and the last two are Newton-like steps, hence the name Newton-like method. Taylor expansion is used to prove the local convergence order of the method. The computational efficiency is discussed in detail and compared with that of some existing methods. It is shown that the proposed method is more efficient than the existing methods, especially when applied to systems of large dimension. To illustrate the new technique, five numerical examples are presented and solved. The computational results confirm the robust and efficient convergence behavior of the proposed method. Similar numerical experiments, carried out for a number of problems of different types, confirmed the above conclusions to a large extent.

References

Argyros, I.K.: Computational theory of iterative methods, series: studies in computational mathematics, vol. 15. Elsevier Publishing Company, New York (2007)

Cordero, A., Torregrosa, J.R.: Variants of Newton’s method for functions of several variables. Appl. Math. Comput. 183, 199–208 (2006)

Cordero, A., Torregrosa, J.R.: Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 190, 686–698 (2007)

Cordero, A., Martínez, E., Torregrosa, J.R.: Iterative methods of order four and five for systems of nonlinear equations. J. Comput. Appl. Math. 231, 541–551 (2009)

Cordero, A., Hueso, J.L., Martínez, E., Torregrosa, J.R.: A modified Newton-Jarratt’s composition. Numer. Algor. 55, 87–99 (2010)

Cordero, A., Hueso, J.L., Martínez, E., Torregrosa, J.R.: Increasing the convergence order of an iterative method for nonlinear systems. Appl. Math. Lett. 25, 2369–2374 (2012)

Darvishi, M.T., Barati, A.: A third-order Newton-type method to solve system of nonlinear equations. Appl. Math. Comput. 187, 630–635 (2007)

Darvishi, M.T., Barati, A.: A fourth-order method from quadrature formulae to solve systems of nonlinear equations. Appl. Math. Comput. 188, 257–261 (2007)

Fousse, L., Hanrot, G., Lefèvre, V., Pélissier, P., Zimmermann, P.: MPFR: a multiple-precision binary floating-point library with correct rounding. ACM Trans. Math. Software. 33 (2) 15, Art. 13 (2007)

Frontini, M., Sormani, E.: Third-order methods from quadrature formulae for solving systems of nonlinear equations. Appl. Math. Comput. 149, 771–782 (2004)

Grau-Sánchez, M., Grau, À., Noguera, M.: On the computational efficiency index and some iterative methods for solving systems of nonlinear equations. J. Comput. Appl. Math. 236, 1259–1266 (2011)

Grau-Sánchez, M., Noguera, M., Amat, S.: On the approximation of derivatives using divided difference operators preserving the local convergence order of iterative methods. J. Comput. Appl. Math. 237, 363–372 (2013)

Homeier, H.H.H.: A modified Newton method with cubic convergence: the multivariable case. J. Comput. Appl. Math. 169, 161–169 (2004)

Jarratt, P.: Some fourth order multipoint iterative methods for solving equation. Math. Comput. 20, 434–437 (1966)

Jay, L.O.: A note on Q-order of convergence. BIT 41, 422–429 (2001)

Kelley, C.T.: Solving Nonlinear Equations with Newton’s Method. SIAM, Philadelphia, PA (2003)

Kung, H.T., Traub, J.F.: Optimal order of one-point and multipoint iteration. J. ACM 21, 643–651 (1974)

Neta, B.: A new iterative method for the solution of systems of nonlinear equations. Z. Ziegler (ed.) Approximation Theory and Applications, pp. 249–263. Academic Press, New York (1981)

Noor, M.A., Waseem, M.: Some iterative methods for solving a system of nonlinear equations. Comput. Math. Appl. 57, 101–106 (2009)

Ortega, J.M., Rheinboldt, W.C.: Iterative Solution of Nonlinear Equations in Several Variables. Academic Press, New York (1970)

Petković, M.S.: On a general class of multipoint root-finding methods of high computational efficiency. SIAM J. Numer. Anal. 49, 1317–1319 (2011)

Petković, M.S., Neta, B., Petković, L.D., Džunić, J.: Multipoint Methods for Solving Nonlinear Equations. Elsevier, Boston (2013)

Potra, F.-A., Pták, V.: Nondiscrete Induction and Iterative Processes. Pitman Publishing, Boston (1984)

Sharma, J.R., Guha, R.K., Sharma, R.: An efficient fourth order weighted-Newton method for systems of nonlinear equations. Numer. Algor. 62, 307–323 (2013)

Sharma, J.R., Gupta, P.: An efficient fifth order method for solving systems of nonlinear equations. Comput. Math. Appl. 67, 591–601 (2014)

Traub, J.F.: Iterative Methods for the Solution of Equations. Prentice-Hall, Englewood Cliffs, New Jersey (1964)

Wolfram, S.: The Mathematica Book, 5th edn. Wolfram Media, (2003)


Sharma, J.R., Guha, R.K. Simple yet efficient Newton-like method for systems of nonlinear equations. Calcolo 53, 451–473 (2016). https://doi.org/10.1007/s10092-015-0157-9


DOI: https://doi.org/10.1007/s10092-015-0157-9