Abstract

We present iterative methods of fifth and eighth order of convergence for solving systems of nonlinear equations. The fifth order method is composed of two steps, namely a Newton step and a Newton-like step, and requires two function evaluations, two first-derivative evaluations and one matrix inversion per iteration. The eighth order method is composed of three steps, of which the first two are those of the proposed fifth order method and the third is a Newton-like step. This method requires one function evaluation in addition to the evaluations of the fifth order method. The computational efficiency of the proposed techniques is discussed and compared with that of existing methods. Numerical examples are considered to test the performance of the new methods. Moreover, the theoretical results concerning order of convergence and computational efficiency are confirmed by the numerical examples, which also indicate the robust and efficient character of the proposed techniques.

1 Introduction

The development of iterative methods for approximating the solution of systems of nonlinear equations is an important and interesting task in numerical analysis and the applied sciences. With the advancement of computers, the problem of solving systems of nonlinear equations by numerical methods has gained more importance than before. The problem can be stated precisely as follows: find a vector \(\alpha =(\alpha _1,\alpha _2,\ldots , \alpha _m)^T\) such that \(\text{ F }(\alpha )=0\), where \(\text{ F }(x): \text{ D } \subset \mathbb {R}^m \longrightarrow \mathbb {R}^m\) is the given nonlinear system, \(\text{ F }(x)=(f_1(x), f_2(x),\ldots , f_m(x))^T\) and \(x=(x_1, x_2,\ldots ,x_m)^T\). Newton’s method [20, 21] is the most widely used algorithm for solving systems of nonlinear equations. Under the conditions that the function \(\text{ F }\) is continuously differentiable and that the initial approximation \(x^{(0)}\) is sufficiently close to the solution \(\alpha \), this method converges quadratically and is defined by

\[x^{(k+1)}=\Phi _1^{(2)}(x^{(k)})=x^{(k)}-\text{ F }'(x^{(k)})^{-1}\text{ F }(x^{(k)}),\qquad k=0,1,2,\ldots , \qquad (1)\]

where \(\text{ F }'(x^{(k)})^{-1}\) is the inverse of Fréchet derivative \(\text{ F }'(x^{(k)})\) of function \(\text{ F }(x)\) at \(x^{(k)}\). Here, we use the notation \(\Phi _i^{(p)}\) to denote an ith iterative function of convergence order p.
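As an illustration of Newton's iteration, the following minimal Python sketch applies it to a small system. The helper names (`solve`, `newton`), the test system and the tolerance are assumptions made for this example only. The linear system \(\text{ F }'(x^{(k)})d=\text{ F }(x^{(k)})\) is solved by elimination instead of forming the inverse explicitly.

```python
def solve(A, b):
    """Solve A d = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        if M[p][c] == 0.0:
            raise ZeroDivisionError("singular Jacobian")
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    d = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(M[i][j] * d[j] for j in range(i + 1, n))
        d[i] = (M[i][n] - s) / M[i][i]
    return d


def newton(F, J, x, tol=1e-12, max_iter=50):
    """Iterate x <- x - F'(x)^{-1} F(x) until the step is below tol."""
    for k in range(1, max_iter + 1):
        d = solve(J(x), F(x))
        x = [xi - di for xi, di in zip(x, d)]
        if max(abs(di) for di in d) < tol:
            return x, k
    raise RuntimeError("no convergence within max_iter iterations")


# Hypothetical test system: x0^2 + x1^2 = 2 and x0 = x1, with a root at (1, 1).
def F(x):
    return [x[0] ** 2 + x[1] ** 2 - 2.0, x[0] - x[1]]


def J(x):
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]


root, iterations = newton(F, J, [1.5, 0.7])
print(root, iterations)
```

The starting point is chosen close to the root, in line with the local nature of the convergence result quoted above.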

In order to accelerate the convergence of Newton’s or Newton-like iterations, many higher order methods for computing a solution of the nonlinear system \(\text{ F }(x)=0\) have been proposed. For example, Cordero and Torregrosa [2], Frontini and Sormani [9], Grau et al. [11], Homeier [14] and Noor and Waseem [19] have developed third order methods each requiring three evaluations namely, one \(\text{ F }\) and two \(\text{ F }'\) per iteration. Cordero and Torregrosa have also derived two third-order methods in [3], one of which requires one \(\text{ F }\) and three \(\text{ F }'\) whereas the other requires one \(\text{ F }\) and four \(\text{ F }'\) evaluations. In addition, all the above mentioned third order methods require two matrix inversions in each iteration. Darvishi and Barati [7] have developed a third order method which utilizes two \(\text{ F }\), one \(\text{ F }'\) and one matrix inversion. Cordero et al. [4] have presented a fourth order method requiring two \(\text{ F }\), two \(\text{ F }'\) and one matrix inversion. Cordero et al. also implemented fourth order Jarratt’s method [15] for scalar equations to systems of equations in [5], which uses one \(\text{ F }\), two \(\text{ F }'\) and one matrix inversion. Darvishi and Barati developed a fourth order method in [8] which uses two \(\text{ F }\), three \(\text{ F }'\) and two matrix inversions. Grau et al. have proposed a fourth order method in [11] that requires three \(\text{ F }\), one \(\text{ F }'\) and one matrix inversion. Grau et al. [12] have also generalized fourth order Ostrowski-like [10] and Ostrowski’s [21] methods to systems of equations each utilizing two \(\text{ F }\), one \(\text{ F }'\), one divided difference and two matrix inversions per iterative step. The fourth order family of methods proposed by Narang et al. [18] utilizes one \(\text{ F }\), two \(\text{ F }'\) and two matrix inversions. Sharma and Arora have proposed fourth order methods in [22, 23]. 
The method proposed in [22] requires two \(\text{ F }\), one \(\text{ F }'\), one divided difference and one matrix inversion whereas the method in [23] requires one \(\text{ F }\), two \(\text{ F }'\) and one matrix inversion per full iteration.

In the quest for faster algorithms, researchers have also proposed fifth and sixth order methods in [5, 6, 11, 12, 22, 23]. The fifth order methods by Cordero et al. [5, 6] and Grau et al. [11] require four evaluations per iteration, namely two \(\text{ F }\) and two \(\text{ F }'\). In addition, the methods in [5, 6] require three matrix inversions and the method in [11] requires two. One sixth order method by Cordero et al. [5] uses two \(\text{ F }\) and two \(\text{ F }'\), while the other sixth order method [6] uses three \(\text{ F }\) and two \(\text{ F }'\). The sixth order methods by Grau et al. [12] require three \(\text{ F }\), one \(\text{ F }'\) and one divided difference evaluation. The sixth order family by Narang et al. [18] utilizes two evaluations each of \(\text{ F }\) and \(\text{ F }'\) per full iteration. One sixth order method proposed by Sharma and Arora in [22] utilizes three \(\text{ F }\), one \(\text{ F }'\) and one divided difference, whereas the other sixth order method in [23] uses two evaluations each of \(\text{ F }\) and \(\text{ F }'\). Apart from the mentioned evaluations, the sixth order methods of Cordero et al., Grau et al. and Narang et al. require two matrix inversions, whereas those of Sharma and Arora require one matrix inversion.

Motivated by the recent developments in this area, we propose here some computationally efficient methods with higher order of convergence. In numerical analysis, an iterative method is regarded as computationally efficient if it attains high convergence speed at minimal computational cost. The main elements that contribute to the total computational cost are the evaluations of functions, derivatives and inverse operators. Among these, the evaluation of an inverse operator is the most obvious barrier to the development of an efficient iterative scheme, since it is very expensive from a computational point of view. It is therefore judicious to use as few such inversions as possible. With these considerations, we construct methods of fifth and eighth order of convergence. The fifth order method is composed of two steps and requires per iteration the evaluation of two functions, two first derivatives and one matrix inversion. The eighth order method consists of three steps and uses one function evaluation in addition to those of the proposed fifth order scheme.

The paper is divided into seven sections and is organized as follows. Some basic definitions relevant to the present work are given in Sect. 2. Section 3 includes development of the fifth order method along with analysis of convergence. In Sect. 4, the eighth order method with its analysis of convergence is presented. The computational efficiencies of the new methods are discussed and compared with the existing methods in Sect. 5. In Sect. 6, various numerical examples are considered to confirm the theoretical results. A comparison with the existing methods is also presented in this section. Concluding remarks are given in Sect. 7.

2 Basic definitions

2.1 Order of convergence

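Let \(\{x^{(k)}\}_{k\geqslant 0}\) be a sequence in \(\mathbb {R}^m\) which converges to \(\alpha \). Then the convergence is said to be of order p, \(p>1\), if there exist M, \(M>0\), and \(k_0\) such that

\[\Vert x^{(k+1)}-\alpha \Vert \leqslant M\Vert x^{(k)}-\alpha \Vert ^p \quad \text{for all } k\geqslant k_0,\]

or

\[\Vert e^{(k+1)}\Vert \leqslant M\Vert e^{(k)}\Vert ^p \quad \text{for all } k\geqslant k_0,\]

where \(e^{(k)}=x^{(k)}-\alpha \). The convergence is called linear if \(p=1\) and the inequality holds for some M with \(0<M<1\).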

2.2 Error equation

Let \(e^{(k)}=x^{(k)}-\alpha \) be the error in the k-th iteration. We call the relation

\[e^{(k+1)}=L(e^{(k)})^p+O\big ((e^{(k)})^{p+1}\big )\]

the error equation. Here, p is the order of convergence and L is a p-linear function, i.e. \(L \in \mathcal {L}(\mathbb {R}^m\times \mathop {\cdots }\limits ^{p\text{-times}}\times \mathbb {R}^m, \mathbb {R}^m)\), where \(\mathcal {L}\) denotes the set of bounded linear functions.

2.3 Computational order of convergence

Let \(\alpha \) be a zero of the function \(\text{ F }\) and suppose that \(x^{(k-1)}\), \(x^{(k)}\) and \(x^{(k+1)}\) are the three consecutive iterations close to \(\alpha .\) Then, the computational order of convergence (say, \(\rho _k\)) can be approximated using the formula (see [16])
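The displayed formula is not reproduced in this text. One commonly used estimate built from the residual norms at three consecutive iterates is \(\rho _k\approx \log \big (\Vert \text{ F }(x^{(k+1)})\Vert /\Vert \text{ F }(x^{(k)})\Vert \big )/\log \big (\Vert \text{ F }(x^{(k)})\Vert /\Vert \text{ F }(x^{(k-1)})\Vert \big )\). Whether this is exactly the variant intended here is an assumption. The short Python sketch below evaluates that estimate; the function name and the synthetic residuals are illustrative only.

```python
import math


def computational_order(res_prev, res_curr, res_next):
    """Estimate rho_k from the residual norms ||F(x)|| at three consecutive iterates."""
    return math.log(res_next / res_curr) / math.log(res_curr / res_prev)


# Synthetic residual norms that square at every step (quadratic convergence).
residuals = [1e-2, 1e-4, 1e-8]
rho = computational_order(*residuals)
print(rho)  # close to 2 for this synthetic sequence
```

In practice the three residual norms would come from the last iterates of a run, computed in the same high-precision arithmetic as the iteration itself.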

2.4 Computational efficiency

Computational efficiency of an iterative method is measured by the efficiency index \(E=p^{1/(d+op)}\) (see [1, 17]), where p is the order of convergence, d is the number of function evaluations per iteration and op is the number of operations (i.e. products and quotients) required per iteration.

3 The fifth order method

Keeping in view the definition of computationally efficient method as stated above, we begin with the following iterative scheme,

where \(\text{ G }^{(k)}= \text{ F }'(x^{(k)})^{-1}\text{ F }'(y^{(k)})\), \(a_1\), \(a_2\) and \(a_3\) are arbitrary constants and I is the \(m\times m\) identity matrix. The scheme uses Newton’s iteration (1) in the first step and a Newton-like iteration in the second step; for this reason it is called a Newton-like method. To analyze the convergence properties of scheme (2), we prove the following theorem:

Theorem 1

Let the function \(\text{ F }:\text{ D }\subset \mathbb {R}^m\rightarrow \mathbb {R}^m\) be sufficiently differentiable in an open neighborhood \(\text{ D }\) of its zero \(\alpha \). Suppose that \(\text{ F }'(x)\) is continuous and nonsingular in \(\alpha \). If an initial approximation \(x^{(0)}\) is sufficiently close to \(\alpha \), then the local order of convergence of method (2) is at least 5, provided \(a_1=13/4\), \(a_2=-7/2\) and \(a_3=5/4\).

Proof

Let \(e^{(k)}=x^{(k)}-\alpha \). Developing \(\text{ F }(x^{(k)})\) in a neighborhood of \(\alpha \) and assuming that \(\Gamma =\text{ F }'(\alpha )^{-1}\) exists, we write

\[\text{ F }(x^{(k)})=\text{ F }'(\alpha )\big [e^{(k)}+A_2(e^{(k)})^2+A_3(e^{(k)})^3+A_4(e^{(k)})^4+A_5(e^{(k)})^5+O((e^{(k)})^6)\big ], \qquad (3)\]

where \(A_i=\frac{1}{i!}\Gamma \text{ F }^{(i)}(\alpha )\), \(\text{ F }^{(i)}(\alpha ) \in \mathcal {L} (\mathbb {R}^m\times \mathop {\cdots }\limits ^{i\text{-times}}\times \mathbb {R}^m, \mathbb {R}^m)\), \(\Gamma \in \mathcal {L} (\mathbb {R}^m, \mathbb {R}^m)\) and \(A_i(e^{(k)})^i= A_i(e^{(k)},e^{(k)}, \mathop {\ldots }\limits ^{i\text{-times}},e^{(k)})\in \mathbb {R}^m\) with \(e^{(k)}\in \mathbb {R}^m\). Also,

\[\text{ F }'(x^{(k)})=\text{ F }'(\alpha )\big [I+2A_2e^{(k)}+3A_3(e^{(k)})^2+4A_4(e^{(k)})^3+5A_5(e^{(k)})^4+O((e^{(k)})^5)\big ]. \qquad (4)\]

Inversion of \(\text{ F }'(x^{(k)})\) yields

\[\text{ F }'(x^{(k)})^{-1}=\big [I+X_1e^{(k)}+X_2(e^{(k)})^2+X_3(e^{(k)})^3+X_4(e^{(k)})^4+O((e^{(k)})^5)\big ]\Gamma , \qquad (5)\]

where \(X_1=-2A_2,\ X_2=4A_2^2-3A_3,\ X_3=-(8A_2^3-6A_2A_3-6A_3A_2+4A_4)\) and \(X_4=(16A_2^4+9A_3^2-12A_2^2A_3-12A_2A_3A_2-12A_3A_2^2+8A_2A_4+8A_4A_2-5A_5).\) Employing (3) and (5) in the first step of (2), we obtain

Taylor series of \(\text{ F }(y^{(k)})\) about \(\alpha \) yields

With the use of above equation, we can write

Then from Eqs. (5) and (8), it follows that

Simple algebraic calculations yield

Substituting (5), (7) and (10) in the second step of (2) we get

Combining (6) and (11), it is easy to prove that for the parameters \(a_1=13/4\), \(a_2=-7/2\) and \(a_3=5/4\), the error equation (11) produces the maximum order of convergence. For this set of values, the above equation reduces to

This proves the fifth order of convergence. \(\square \)

Thus, the proposed Newton-like method (2), now denoted by \(\text{ NLM }_{5}\), is finally presented as

In terms of computational cost this formula uses two functions, two derivatives and one matrix inversion per iteration.
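The single matrix inversion in this count reflects the fact that every linear solve within one iteration shares the coefficient matrix \(\text{ F }'(x^{(k)})\), so one LU factorization can be reused. The Python sketch below illustrates this structure. It assumes that the second step has the form \(x^{(k+1)}=y^{(k)}-\big (a_1I+a_2\text{ G }^{(k)}+a_3(\text{ G }^{(k)})^2\big )\text{ F }'(x^{(k)})^{-1}\text{ F }(y^{(k)})\) with the constants of Theorem 1. The displayed scheme is not reproduced in this text, so this placement of the weight, the helper names and the test system are all assumptions, and the sketch makes no claim about the order attained.

```python
def lu_factor(A):
    """LU factorization with partial pivoting; returns (packed LU rows, row order)."""
    n = len(A)
    lu = [row[:] for row in A]
    perm = list(range(n))
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(lu[r][c]))
        if lu[p][c] == 0.0:
            raise ZeroDivisionError("singular Jacobian")
        lu[c], lu[p] = lu[p], lu[c]
        perm[c], perm[p] = perm[p], perm[c]
        for r in range(c + 1, n):
            lu[r][c] /= lu[c][c]
            for j in range(c + 1, n):
                lu[r][j] -= lu[r][c] * lu[c][j]
    return lu, perm


def lu_solve(fact, b):
    """Solve A d = b using a factorization returned by lu_factor."""
    lu, perm = fact
    n = len(b)
    d = [b[i] for i in perm]
    for i in range(n):  # forward substitution with the unit lower factor
        for j in range(i):
            d[i] -= lu[i][j] * d[j]
    for i in reversed(range(n)):  # back substitution with the upper factor
        for j in range(i + 1, n):
            d[i] -= lu[i][j] * d[j]
        d[i] /= lu[i][i]
    return d


def matvec(A, v):
    return [sum(a * vj for a, vj in zip(row, v)) for row in A]


def two_step(F, J, x, a1=13 / 4, a2=-7 / 2, a3=5 / 4):
    """One iteration of the assumed two-step form: 2 F, 2 F', 1 factorization."""
    fact = lu_factor(J(x))                 # the only factorization
    d = lu_solve(fact, F(x))
    y = [xi - di for xi, di in zip(x, d)]  # Newton step
    Jy = J(y)
    w = lu_solve(fact, F(y))               # F'(x)^{-1} F(y)
    gw = lu_solve(fact, matvec(Jy, w))     # G w, with G = F'(x)^{-1} F'(y)
    ggw = lu_solve(fact, matvec(Jy, gw))   # G^2 w
    return [yi - (a1 * p + a2 * q + a3 * r)
            for yi, p, q, r in zip(y, w, gw, ggw)]


# Hypothetical test system with a root at (1, 1).
def F(x):
    return [x[0] ** 2 + x[1] ** 2 - 2.0, x[0] - x[1]]


def J(x):
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]


x = [1.5, 0.7]
for _ in range(10):
    x_new = two_step(F, J, x)
    step = max(abs(a - b) for a, b in zip(x_new, x))
    x = x_new
    if step < 1e-12:
        break
print(x)
```

Each iteration performs four triangular solve pairs and two matrix-vector products on top of the single factorization, which is the kind of work counted in the efficiency analysis of Sect. 5.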

4 The eighth order method

Based on the fifth order scheme \(\text{ NLM }_{5}\), now we consider the following three-step Newton-like scheme:

where \(b_1\), \(b_2\) and \(b_3\) are some parameters to be determined. Note that this scheme requires one extra function evaluation in addition to the evaluations of \(\text{ NLM }_{5}\). In the next theorem we prove convergence of the scheme (13).

Theorem 2

Let the function \(\text{ F }:\text{ D }\subset \mathbb {R}^m\rightarrow \mathbb {R}^m\) be sufficiently differentiable in an open neighborhood \(\text{ D }\) of its zero \(\alpha \). Suppose that \(\text{ F }'(x)\) is continuous and nonsingular in \(\alpha \). If an initial approximation \(x^{(0)}\) is sufficiently close to \(\alpha \), then the local order of convergence of method (13) is at least 8, provided \(b_1=7/2\), \(b_2=-4\) and \(b_3=3/2\).

Proof

In view of (12), the error equation for the second step of (13) can be written as

Using (10), we can write

Taylor series of \(\text{ F }(z^{(k)})\) about \(\alpha \) yields

Using Eqs. (5), (15) and (16) in the third step of (13), we find

Using Eqs. (6) and (14) in (17), it can be shown after some simple calculations that for the parameters \(b_1=7/2\), \(b_2=-4\) and \(b_3=3/2\), the error equation (17) produces the maximum order of convergence. For this set of values, the above equation reduces to

Hence the result follows. \(\square \)

Thus, the eighth order Newton-like method (13), that now we call \(\text{ NLM }_{8}\), is expressed as
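The displayed formula is not reproduced in this text. The Python sketch below illustrates the three-step structure described in this section under the assumption that the second and third steps both subtract a correction of the form \(\big (c_1I+c_2\text{ G }^{(k)}+c_3(\text{ G }^{(k)})^2\big )\text{ F }'(x^{(k)})^{-1}\text{ F }(\cdot )\), with the constants of Theorem 1 in the second step and those of Theorem 2 in the third. That form, the helper names and the test system are assumptions, and no claim about the attained order is made.

```python
def lu_factor(A):
    """LU factorization with partial pivoting; returns (packed LU rows, row order)."""
    n = len(A)
    lu = [row[:] for row in A]
    perm = list(range(n))
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(lu[r][c]))
        if lu[p][c] == 0.0:
            raise ZeroDivisionError("singular Jacobian")
        lu[c], lu[p] = lu[p], lu[c]
        perm[c], perm[p] = perm[p], perm[c]
        for r in range(c + 1, n):
            lu[r][c] /= lu[c][c]
            for j in range(c + 1, n):
                lu[r][j] -= lu[r][c] * lu[c][j]
    return lu, perm


def lu_solve(fact, b):
    """Solve A d = b using a factorization returned by lu_factor."""
    lu, perm = fact
    n = len(b)
    d = [b[i] for i in perm]
    for i in range(n):
        for j in range(i):
            d[i] -= lu[i][j] * d[j]
    for i in reversed(range(n)):
        for j in range(i + 1, n):
            d[i] -= lu[i][j] * d[j]
        d[i] /= lu[i][i]
    return d


def matvec(A, v):
    return [sum(a * vj for a, vj in zip(row, v)) for row in A]


def weighted_correction(fact, Jy, rhs, c1, c2, c3):
    """Return (c1*I + c2*G + c3*G^2) F'(x)^{-1} rhs, with G = F'(x)^{-1} F'(y)."""
    w = lu_solve(fact, rhs)
    gw = lu_solve(fact, matvec(Jy, w))
    ggw = lu_solve(fact, matvec(Jy, gw))
    return [c1 * p + c2 * q + c3 * r for p, q, r in zip(w, gw, ggw)]


def three_step(F, J, x):
    """One iteration of the assumed form: 3 F, 2 F', 1 factorization."""
    fact = lu_factor(J(x))
    y = [xi - di for xi, di in zip(x, lu_solve(fact, F(x)))]
    Jy = J(y)
    cy = weighted_correction(fact, Jy, F(y), 13 / 4, -7 / 2, 5 / 4)
    z = [yi - ci for yi, ci in zip(y, cy)]
    cz = weighted_correction(fact, Jy, F(z), 7 / 2, -4.0, 3 / 2)
    return [zi - ci for zi, ci in zip(z, cz)]


# Hypothetical test system with a root at (1, 1).
def F(x):
    return [x[0] ** 2 + x[1] ** 2 - 2.0, x[0] - x[1]]


def J(x):
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]


x = [1.5, 0.7]
for _ in range(10):
    x_new = three_step(F, J, x)
    step = max(abs(a - b) for a, b in zip(x_new, x))
    x = x_new
    if step < 1e-12:
        break
print(x)
```

Compared with the two-step iteration, the only new evaluation is \(\text{ F }(z^{(k)})\); the Jacobians and the factorization are reused unchanged.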

5 Computational efficiency

In order to find the computational efficiencies of presented methods we shall make use of the Definition 2.4. The various evaluations and operations that contribute towards the total cost of computation are as follows. To compute \(\text{ F }\) in any iterative method we need to calculate m scalar functions. The number of scalar evaluations is \(m^2\) for any new derivative \(\text{ F }'\). The computational cost of solving a linear system of equations is \(\frac{1}{3}m^3+m^2-\frac{1}{3}m\) and the number of products and quotients required to solve r systems of linear equations with same coefficient matrix are \(\frac{1}{3}m^3+rm^2-\frac{1}{3}m\) by means of LU factorization of the common coefficient matrix. That is, the computational cost increases by \(m^2\) for each system having common matrix of coefficients. In addition to the above computational work, we add \(m^2\) products for multiplication of a matrix with a vector.
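As a worked instance of these counts, consider Newton's method, which needs one evaluation of \(\text{ F }\) (m scalar functions), one evaluation of \(\text{ F }'\) (\(m^2\) scalar functions) and one linear solve. The Python sketch below adds these up and evaluates the efficiency index of Definition 2.4. The function names are illustrative, and the total is derived here from the counts stated above instead of being quoted from the paper's cost expressions.

```python
from fractions import Fraction


def linear_solve_cost(m, r=1):
    """Products and quotients for r solves sharing one LU factorization."""
    return Fraction(m ** 3, 3) + r * m ** 2 - Fraction(m, 3)


def newton_cost(m):
    """Total cost per Newton iteration for a system of size m."""
    evaluations = m + m ** 2  # one F and one F'
    return evaluations + linear_solve_cost(m, r=1)


def efficiency_index(order, cost):
    """E = p^(1/C) for order p and total cost C."""
    return order ** (1.0 / float(cost))


m = 10
cost = newton_cost(m)
print(cost, efficiency_index(2, cost))
```

For \(m=10\) the linear solve contributes 430 operations and the evaluations 110, so the total is 540. Exact rational arithmetic is used for the cost so that the thirds in the formula do not introduce rounding.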

The efficiency of proposed fifth order method \(\text{ NLM }_5\) is compared with second order Newton’s method (1) denoted by \(\text{ NM }_2\), fourth order Jarratt’s method [5] denoted by \(\text{ JM }_4\), fifth order methods by Cordero et al. [5, 6] and Grau et al. [11] denoted by \(\text{ CM- }\text{ I }_5\), \(\text{ CM- }\text{ II }_5\) and \(\text{ GM }_5\), respectively. Comparison of the efficiency of eighth order method \(\text{ NLM }_8\) is performed with the sixth order methods by Cordero et al. [5, 6] denoted by \(\text{ CM- }\text{ I }_6\) and \(\text{ CM- }\text{ II }_6\), respectively. In addition, the proposed methods \(\text{ NLM }_5\) and \(\text{ NLM }_8\) are also compared with each other. The \(\text{ JM }_4\) is expressed by

The \(\text{ CM- }\text{ I }_5\) is defined by

where \(\alpha \) and \(\beta \) are any parameters.

The \(\text{ CM- }\text{ II }_5\) is given by

The \(\text{ GM }_5\) is expressed by

The methods \(\text{ CM- }\text{ I }_6\) and \(\text{ CM- }\text{ II }_6\) are, respectively, given by

and

Denoting the efficiency indices of \(\Phi _{i}^{(p)}\) by \(E_{i}^{(p)}\) and computational costs by \(C_{i}^{(p)}\), then using the Definition 2.4, we can write

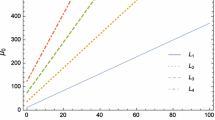

5.1 Comparison between efficiencies

To compare the considered iterative methods, say \(\Phi _{i}^{(p)}\) versus \(\Phi _{j}^{(q)},\) we consider the ratio

It is clear that if \(R^{p,q}_{i,j}>1\), the iterative method \(\Phi _{i}^{(p)}\) is more efficient than \(\Phi _{j}^{(q)}\). In the sequel, we consider the comparison of computational efficiencies of the methods as defined above.
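Since \(E=p^{1/C}\), comparing two methods amounts to comparing \(\log p/C\). The Python sketch below assumes that the ratio is taken as \(R=\log E_i/\log E_j=(C_j\log p)/(C_i\log q)\), which is consistent with the statement that \(R>1\) means \(\Phi _{i}^{(p)}\) is the more efficient method. The displayed definition is not reproduced in this text, and the costs used in the example are hypothetical.

```python
import math


def efficiency_ratio(p, cost_i, q, cost_j):
    """R = log(E_i) / log(E_j), where E = order ** (1 / cost)."""
    return (cost_j * math.log(p)) / (cost_i * math.log(q))


# Hypothetical costs, chosen only to exercise the function.
ratio = efficiency_ratio(5, 1000.0, 2, 540.0)
first_is_more_efficient = ratio > 1.0
print(ratio, first_is_more_efficient)
```

Working with the ratio of logarithms avoids comparing efficiency indices that are all very close to 1 for large m.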

\(\Phi _{1}^{(5)}\) versus \(\Phi _{1}^{(2)}\) case: In this case, the ratio (28) is given by

It can be easily checked that the ratio \(R_{1,1}^{5,2}>1\) for \(m\geqslant 8\). Thus, we conclude that \(E_{1}^{(5)}>E_{1}^{(2)}\) for \(m\geqslant 8\).

\(\Phi _{1}^{(5)}\) versus \(\Phi _{1}^{(4)}\) case: Here, the ratio (28) is

It can be easily seen that the ratio \(R_{1,1}^{5,4}>1\) for \(m\geqslant 8\). Thus, we conclude that \(E_{1}^{(5)}>E_{1}^{(4)}\) for \(m\geqslant 8\).

\(\Phi _{1}^{(5)}\) versus \(\Phi _{2}^{(5)}\) case: For this case it is sufficient to compare the corresponding values of \(C_{1}^{(5)}\) and \(C_{2}^{(5)}\) in (21) and (22). We find that \(C_{2}^{(5)} > C_{1}^{(5)}\), and hence \(E_{1}^{(5)}>E_{2}^{(5)}\), for \(m\geqslant 4\).

\(\Phi _{1}^{(5)}\) versus \(\Phi _{3}^{(5)}\) case: In this case also it is enough to compare the corresponding values of \(C_{1}^{(5)}\) and \(C_{3}^{(5)}\) in (21) and (23). We find that \(C_{3}^{(5)} > C_{1}^{(5)}\), and hence \(E_{1}^{(5)}>E_{3}^{(5)}\), for \(m\geqslant 5\).

\(\Phi _{1}^{(5)}\) versus \(\Phi _{4}^{(5)}\) case: Comparing the corresponding values of \(C_{1}^{(5)}\) and \(C_{4}^{(5)}\) in (21) and (24), we find that \(E_{1}^{(5)}>E_{4}^{(5)}\) for \(m\geqslant 10.\)

\(\Phi _{1}^{(8)}\) versus \(\Phi _{1}^{(6)}\) case: In this case, the ratio (28) is given by

It can be easily proved that the ratio \(R_{1,1}^{8,6}>1\) for \(m\geqslant 14\). Thus, we conclude that \(E_{1}^{(8)}>E_{1}^{(6)}\) for \(m\geqslant 14\).

\(\Phi _{1}^{(8)}\) versus \(\Phi _{2}^{(6)}\) case: In this case, the ratio (28) is given by

It can be easily shown that the ratio \(R_{1,2}^{8,6}>1\) for \(m\geqslant 12\). Thus, we conclude that \(E_{1}^{(8)}>E_{2}^{(6)}\) for \(m\geqslant 12\).

\(\Phi _{1}^{(8)}\) versus \(\Phi _{1}^{(5)}\) case: The ratio (28) is given by

We can easily prove that \(R_{1,1}^{8,5}>1\) for \(m\geqslant 28.\) Thus, we conclude that \(E_{1}^{(8)}> E_{1}^{(5)}\) for \(m\geqslant 28.\)

The above results are summarized in the following theorem:

Theorem 3

(a) \(E_{1}^{(5)}>\{E_{1}^{(2)},E_{1}^{(4)}\}\) for \(m\geqslant 8.\)

(b) \(E_{1}^{(5)}>E_{2}^{(5)}\) for \(m\geqslant 4.\)

(c) \(E_{1}^{(5)}> E_{3}^{(5)}\) for \(m\geqslant 5.\)

(d) \(E_{1}^{(5)}> E_{4}^{(5)}\) for \(m\geqslant 10.\)

(e) \(E_{1}^{(8)}> E_{1}^{(6)}\) for \(m\geqslant 14.\)

(f) \(E_{1}^{(8)}> E_{2}^{(6)}\) for \(m\geqslant 12.\)

(g) \(E_{1}^{(8)}> E_{1}^{(5)}\) for \(m\geqslant 28.\)

The results of Theorem 3 are shown geometrically in Figs. 1, 2, 3, 4, 5, 6, 7 and 8.

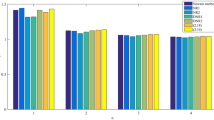

6 Numerical results

To check the validity of the theoretical results regarding the convergence behavior and computational efficiencies of the proposed methods \(\text{ NLM }_5\) and \(\text{ NLM }_8\), we consider some numerical examples and compare the performance of the methods with \(\text{ NM }_2\), \(\text{ JM }_4\), \(\text{ CM-I }_5\), \(\text{ CM-II }_5\), \(\text{ GM }_5\), \(\text{ CM-I }_6\) and \(\text{ CM-II }_6\). All computations are performed in the programming package Mathematica [24] using multiple-precision arithmetic with 4096 digits. For every method, the number of iterations (k) needed to converge to the solution is calculated using the stopping criterion \(||x^{(k+1)}-x^{(k)}||+||F(x^{(k)})||<10^{-300}\). To verify the theoretical order of convergence (p), the computational order of convergence (\(\rho _k\)) is calculated using the formula given in Definition 2.3. In the numerical results, we also include the CPU time used in the execution of the program, computed by the Mathematica command “TimeUsed[ ]”.
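The stopping rule can be sketched in Python as a generic driver around any one-iteration function. This is only an illustration under stated assumptions: it uses double precision and a much looser tolerance than the 4096-digit Mathematica computations described above, the Euclidean norm is assumed because the norm is not specified, and the names `iterate` and `newton_step` as well as the decoupled test system are hypothetical.

```python
import math


def norm(v):
    """Euclidean norm (an assumption; the text does not name the norm)."""
    return math.sqrt(sum(c * c for c in v))


def iterate(step, F, x, tol=1e-12, max_iter=100):
    """Apply `step` until ||x_new - x|| + ||F(x)|| < tol; return (x_new, k)."""
    for k in range(1, max_iter + 1):
        x_new = step(x)
        change = norm([a - b for a, b in zip(x_new, x)])
        if change + norm(F(x)) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("stopping criterion not met within max_iter iterations")


# Hypothetical decoupled system x0^2 = 2, x1^2 = 3; its Jacobian is diagonal,
# so a Newton step can be written componentwise.
def F(x):
    return [x[0] ** 2 - 2.0, x[1] ** 2 - 3.0]


def newton_step(x):
    return [xi - fi / (2.0 * xi) for xi, fi in zip(x, F(x))]


solution, k = iterate(newton_step, F, [1.0, 2.0])
print(solution, k)
```

Because the criterion adds the step length to the residual at the previous iterate, it is met only once both quantities are small, which typically costs one iteration more than a test on the step length alone.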

For numerical tests the following examples are considered and solved:

Example 1

Consider the system of two equations:

The initial approximation assumed is \(x^{(0)} = \{0.3,-0.3\}^T\) for obtaining the solution \(\alpha =\{1.0406786883477141\ldots ,-0.040678688347714140\ldots \}^T\).

Example 2

Now consider the system of three equations:

with initial approximation \(x^{(0)} = \{2,0.5,2\}^T\) for obtaining the solution: \(\alpha =\{0.76759187924399822\ldots ,0.76759187924399822\ldots ,1.6972243622680054\ldots \}^T \).

Example 3

The boundary value problem (see [20]):

is studied. Consider the following partitioning of the interval [0, 1]: \(t_0=0<t_1<t_2<\cdot \cdot \cdot<t_{m-1}<t_m=1,\;\;\;t_{j+1}=t_j+h,\;\;h=1/m.\)

Let us define \(u_0=u(t_0)=0, u_1=u(t_1),\ldots , u_{m-1}=u(t_{m-1}), u_m=u(t_m)=1.\) If we discretize the problem by using the numerical formulae for first and second derivatives

we obtain a system of \(m-1\) nonlinear equations in \(m-1\) variables:

In particular, we solve this problem for \(m=9\) so that \(k=8\) by selecting \(u^{(0)}=\{-1,-1,\ldots ,-1\}^T\) as the initial value and \(a=2\). The solution of this problem is,

Example 4

Consider the system of thirteen equations (selected from [13]):

The initial value chosen is: \(x^{(0)} = \{1.5,1.5,\cdots ,1.5\}^T\) and the solution of this problem is:

Example 5

Consider the system of twenty equations (selected from [11]):

In this problem an initial approximation close to the required solution is essential, since the problem has many solution vectors with the same value of each component of magnitude less than one in every solution vector. That means each solution vector satisfies \(\Vert \alpha \Vert =\sqrt{\sum _{i=1}^{20}|\alpha _i|^{2}}<\sqrt{20}\). One of the solutions of this problem is given by,

We choose the initial approximation \(x^{(0)} = \{0.1,0.1,\cdots ,0.1\}^T\) to solve the problem.

Example 6

Lastly, consider the system of ninety-nine equations:

The initial approximation chosen for this problem is \(x^{(0)} = \{2,2,\ldots ,2\}^T\) for obtaining the solution \(\alpha =\{1,1,\ldots ,1\}^T.\)

Numerical results obtained by the various methods for the considered problems are presented in Tables 1, 2, 3, 4, 5 and 6. The necessary iterations (k), the local order of convergence \((\rho _k)\), the computational cost \((C_i^{(\rho )})\), the computational efficiency \((E_i^{(\rho )})\) and the mean elapsed CPU time (e-time) are shown in each table. Computational cost and efficiency are calculated according to the corresponding expressions given by (19)–(27). The mean elapsed CPU time is computed as the mean over 50 runs of the program, where \(||x^{(k+1)}-x^{(k)}||+||F(x^{(k)})||<10^{-300}\) is used as the stopping criterion in each run.

From the numerical results, it can be observed that, like the existing methods, the present methods show consistent convergence behavior. The computational order of convergence also agrees with the theoretical order of convergence. The existing method \(\text{ GM }_5\) by Grau et al. converges to the solution with sixth order of convergence in the third problem; the efficiency in this exceptional case is therefore \(E=6^{1/672}\approx 1.0026699\). The numerical values of the efficiency indices displayed in the penultimate column of Tables 1, 2, 3, 4, 5 and 6 also verify the results of Theorem 3. Since the computational efficiency is proportional to the reciprocal of the total CPU time necessary to complete the iterative process, a method with high efficiency uses less CPU time than a method with low efficiency. This can be judged from the numerical values of computational efficiency and computing time displayed in the last two columns of the tables. Thus, the efficiency results are in agreement with the CPU time used in the execution of the program.

7 Conclusions

In the foregoing study, we have presented Newton-like iterative methods of fifth and eighth order of convergence for solving systems of nonlinear equations. The computational efficiency of the methods is discussed and compared with that of existing schemes. It is found that the presented methods have equal or better efficiency than the existing methods. Numerical examples are presented and the performance is compared with that of known methods. Moreover, the theoretical order of convergence and the analysis of computational efficiency are verified in the considered examples. The numerical results have confirmed the robust and efficient character of the proposed algorithms. We conclude the paper with the remark that many numerical applications require high precision in computations. The results of the numerical experiments show that efficient high order methods combined with multiprecision floating-point arithmetic are very useful, because they yield a clear reduction in the number of iterations.

References

[1] Artidiello, S., Cordero, A., Torregrosa, J.R., Vassileva, M.P.: Design of high-order iterative methods for nonlinear systems by using weight function procedure. Abs. Appl. Anal. 2015, Article ID 289029 (2015)

[2] Cordero, A., Torregrosa, J.R.: Variants of Newton’s method for functions of several variables. Appl. Math. Comput. 183, 199–208 (2006)

[3] Cordero, A., Torregrosa, J.R.: Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 190, 686–698 (2007)

[4] Cordero, A., Martínez, E., Torregrosa, J.R.: Iterative methods of order four and five for systems of nonlinear equations. J. Comput. Appl. Math. 231, 541–551 (2009)

[5] Cordero, A., Hueso, J.L., Martínez, E., Torregrosa, J.R.: A modified Newton-Jarratt’s composition. Numer. Algor. 55, 87–99 (2010)

[6] Cordero, A., Hueso, J.L., Martínez, E., Torregrosa, J.R.: Increasing the convergence order of an iterative method for nonlinear systems. Appl. Math. Lett. 25, 2369–2374 (2012)

[7] Darvishi, M.T., Barati, A.: A third-order Newton-type method to solve systems of nonlinear equations. Appl. Math. Comput. 187, 630–635 (2007)

[8] Darvishi, M.T., Barati, A.: A fourth-order method from quadrature formulae to solve systems of nonlinear equations. Appl. Math. Comput. 188, 257–261 (2007)

[9] Frontini, M., Sormani, E.: Third-order methods from quadrature formulae for solving systems of nonlinear equations. Appl. Math. Comput. 149, 771–782 (2004)

[10] Grau, M., Díaz-Barrero, J.L.: A technique to composite a modified Newton’s method for solving nonlinear equations. http://arxiv.org/abs/1106.0994 (2011)

[11] Grau-Sánchez, M., Grau, À., Noguera, M.: On the computational efficiency index and some iterative methods for solving systems of nonlinear equations. J. Comput. Appl. Math. 236, 1259–1266 (2011)

[12] Grau-Sánchez, M., Grau, À., Noguera, M.: Ostrowski type methods for solving systems of nonlinear equations. Appl. Math. Comput. 218, 2377–2385 (2011)

[13] Grau-Sánchez, M., Noguera, M., Amat, S.: On the approximation of derivatives using divided difference operators preserving the local convergence order of iterative methods. J. Comput. Appl. Math. 237, 363–372 (2013)

[14] Homeier, H.H.H.: A modified Newton method with cubic convergence: the multivariate case. J. Comput. Appl. Math. 169, 161–169 (2004)

[15] Jarratt, P.: Some fourth order multipoint iterative methods for solving equations. Math. Comput. 20, 434–437 (1966)

[16] Jay, L.O.: A note on Q-order of convergence. BIT 41, 422–429 (2001)

[17] Lotfi, T., Bakhtiari, P., Cordero, A., Mahdiani, K., Torregrosa, J.R.: Some new efficient multipoint iterative methods for solving nonlinear systems of equations. Int. J. Comput. Math. 92, 1921–1934 (2015)

[18] Narang, M., Bhatia, S., Kanwar, V.: New two-parameter Chebyshev-Halley-like family of fourth and sixth-order methods for systems of nonlinear equations. Appl. Math. Comput. 275, 394–403 (2016)

[19] Noor, M.A., Waseem, M.: Some iterative methods for solving a system of nonlinear equations. Comput. Math. Appl. 57, 101–106 (2009)

[20] Ortega, J.M., Rheinboldt, W.C.: Iterative Solutions of Nonlinear Equations in Several Variables. Academic Press, New York (1970)

[21] Ostrowski, A.M.: Solutions of Equations and System of Equations. Academic Press, New York (1966)

[22] Sharma, J.R., Arora, H.: On efficient weighted-Newton methods for solving systems of nonlinear equations. Appl. Math. Comput. 222, 497–506 (2013)

[23] Sharma, J.R., Arora, H.: Efficient Jarratt-like methods for solving systems of nonlinear equations. Calcolo 51, 193–210 (2014)

[24] Wolfram, S.: The Mathematica Book, 5th edn. Wolfram Media (2003)

Sharma, J.R., Arora, H. Improved Newton-like methods for solving systems of nonlinear equations. SeMA 74, 147–163 (2017). https://doi.org/10.1007/s40324-016-0085-x
