Abstract

This paper presents a computational algorithm for solving optimal control problems based on state-control parameterization. The optimal control problem is converted into an optimization problem, which can then be solved more easily. To this end, we introduce a state-control parameterization technique using Chebyshev wavelets with unknown coefficients. With this method, the optimal trajectory, the optimal control and the performance index are obtained approximately. Finally, some illustrative examples are presented to show the efficiency and reliability of the method.


1 Introduction

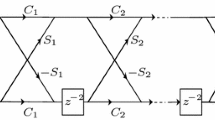

Optimization theory is divided into four major parts: mathematical programming (static, one player), optimal control (dynamic, one player), game theory (static, many players), and differential games (dynamic, many players) (Basar 1995). Optimal control theory is an applicable branch of optimization theory that deals with minimizing (or maximizing) a specified cost functional while satisfying some constraints. Two major classes of methods are used for solving optimal control problems: indirect methods (Pontryagin’s maximum principle, Bellman’s dynamic programming), which convert the original optimal control problem into a two-point boundary value problem, and direct methods (parameterization, discretization), which convert it into a nonlinear optimization problem. Although indirect methods have some advantages, such as results on the existence and uniqueness of solutions, they also have disadvantages; for instance, in many cases the resulting problems have no analytical solution. Therefore, direct methods have become an attractive field for researchers in the mathematical sciences. In recent years, various direct numerical methods and efficient algorithms based on orthogonal functions have been used to solve optimal control problems. Wavelets are one such family of orthogonal functions, and they are well suited to approximating functions with discontinuities or sharp changes. Chen and Hsiao (1997) used Haar wavelet orthogonal functions and their integration matrices to solve lumped and distributed parameter systems. Razzaghi and Yousefi (2001) used Legendre wavelets for solving optimal control problems. Babolian and Fattahzadeh (2007) obtained numerical solutions of differential equations using the operational matrix of integration of the Chebyshev wavelet basis. Ghasemi and Tavassoli Kajani (2011) solved time-varying delay systems by Chebyshev wavelets.

This paper proposes a new numerical method based on state-control parameterization via Chebyshev wavelets for solving general optimal control problems. We choose state-control parameterization because, unlike control parameterization, it does not require integrating the system state equation; moreover, the approximate optimal state and control variables are obtained simultaneously. Unlike other works that use Chebyshev wavelets to construct an operational matrix of integration (Abu Haya 2011; Babolian and Fattahzadeh 2007) in order to convert a differential equation into an algebraic one, our proposed method does not require an operational matrix. The paper is organized as follows. First, we present the basic formulation of optimal control problems. Then, we describe Chebyshev wavelets and use them to approximate the state and control variables. Next, a mathematical description of the proposed state-control parameterization method is given, followed by a convergence analysis. Finally, we solve several examples and compare our method with other methods that have been used to solve them.

2 Mathematical formulation of general optimal control problems

Consider the following system of differential equations on a fixed interval \([0, t_f]\):

\[ \dot{x}(t)=f(t,x(t),u(t)),\qquad t\in [0,t_f], \tag{1} \]

where \( x(t)\in \mathbb {R}^l \) is the state vector and \(u(t)\in \mathbb {R}^q\) is a piecewise-continuous control from the class of admissible controls \(\mathcal {U}\). The function \(f:\mathbb {R}^1\times \mathbb {R}^l\times \mathbb {R}^q\rightarrow \mathbb {R}^l\) is a continuous vector function with continuous first partial derivatives with respect to x. Equation (1) is called the equation of motion, and its initial condition is:

\[ x(0)=x_{0}, \tag{2} \]

where \( x_0 \) is a given vector in \( \mathbb {R}^l \).

Along with this process, we have a cost functional of the form:

\[ J(t,x,u)=\psi (t_{f},x(t_{f}))+\int _{0}^{t_{f}}L(t,x(t),u(t))\,\mathrm{d}t. \tag{3} \]

Here, L(t, x, u) is the running cost and \(\psi (t,x)\) is the terminal cost. The minimization of J(t, x, u) over all controls \(u(t)\in \mathcal {U}\) subject to the constraints (1) and (2) is called an optimal control problem, and a pair (x, u) that achieves this minimum is called an optimal control solution. An optimization problem with a performance index of the form (3) is called a Bolza problem; the two other, equivalent formulations are the Lagrange and Mayer problems (Fleming and Rishel 1975).
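As a small illustration of how a Bolza functional such as (3) can be evaluated numerically, the sketch below approximates it from sampled values of x and u using the composite trapezoidal rule. The helper name `bolza_cost` and the test data (running cost \(L=\tfrac12(x^2+u^2)\), \(\psi\equiv 0\), \(x(t)=e^{-t}\), \(u\equiv 0\) on [0, 1]) are hypothetical choices made only for this example; they are not part of the method described in this paper.

```python
import numpy as np


def bolza_cost(t, x, u, running_cost, terminal_cost):
    """Approximate J = psi(t_f, x(t_f)) + int_0^{t_f} L(t, x, u) dt
    from samples of x and u on the grid t (composite trapezoidal rule)."""
    integrand = running_cost(t, x, u)
    integral = np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(t))
    return terminal_cost(t[-1], x[-1]) + integral


# Hypothetical data: L = (x^2 + u^2)/2, psi = 0, x(t) = exp(-t), u = 0 on [0, 1]
t = np.linspace(0.0, 1.0, 2001)
J = bolza_cost(t, np.exp(-t), np.zeros_like(t),
               running_cost=lambda s, x, u: 0.5 * (x**2 + u**2),
               terminal_cost=lambda tf, xf: 0.0)
print(J)  # close to (1 - exp(-2)) / 4
```

For these data the exact integral is \(\tfrac14(1-e^{-2})\approx 0.2162\), which the trapezoidal sum reproduces up to its \(O(h^2)\) error.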

3 Chebyshev wavelets

In this section, we briefly describe the Chebyshev wavelets that are used in the next section to build a state-control parameterization for solving optimal control problems numerically. A family of wavelets is constructed by dilating and translating a single function called the mother wavelet (Babolian and Fattahzadeh 2007).

One useful family of wavelets consists of the Chebyshev wavelets \(\phi _{nm}(t)=\phi (k,m,n,t)\), which are defined on the interval [0, 1) by the following formula:

where \(k=1,2,\ldots \),\(n=1,2,3,\ldots ,2^{k}\), \(m=0,1,2,\ldots ,M-1\) is the order of Chebyshev polynomials and,

Here, \(T_m(t)\) are the well-known Chebyshev polynomials, which satisfy the following recurrence:

\[ T_{0}(t)=1,\qquad T_{1}(t)=t,\qquad T_{m+1}(t)=2t\,T_{m}(t)-T_{m-1}(t),\quad m=1,2,\ldots \]
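The three-term recurrence \(T_0=1\), \(T_1=t\), \(T_{m+1}=2tT_m-T_{m-1}\) is also the usual way to evaluate Chebyshev polynomials numerically. The following minimal sketch (the helper name `chebyshev_T` is ours) returns \(T_0,\dots ,T_m\) at given points and checks them against the identity \(T_m(\cos\theta )=\cos (m\theta )\).

```python
import numpy as np


def chebyshev_T(m, t):
    """Return an array whose row j holds T_j(t), j = 0..m, computed with
    T_0 = 1, T_1 = t, T_{j+1} = 2 t T_j - T_{j-1}."""
    t = np.asarray(t, dtype=float)
    T = np.empty((m + 1,) + t.shape)
    T[0] = 1.0
    if m >= 1:
        T[1] = t
    for j in range(1, m):
        T[j + 1] = 2.0 * t * T[j] - T[j - 1]
    return T


# Check against T_m(cos(theta)) = cos(m * theta)
theta = np.linspace(0.0, np.pi, 7)
T = chebyshev_T(5, np.cos(theta))
print(np.allclose(T, np.cos(np.outer(np.arange(6), theta))))  # True
```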

4 Proposed state-control parameterization method and main results

In this section, we describe the proposed state-control parameterization method for solving general optimal control problems. Let \(Q\subset PC^{1}([0,t_{f}])\) be the set of all piecewise-continuous functions that satisfy the initial condition (2). The performance index is a function of x(·) and u(·), so problem (1)–(3) may be interpreted as the minimization of J over the set Q. Let \(Q_{2^{k}M-1}\subset Q\) be the class of combinations of Chebyshev wavelet polynomials of degree up to \((M-1)\). The basic idea is to approximate the state and control variables by finite series of Chebyshev wavelets as follows:

We define,

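The interval \([0,t_{f}]\) is thus divided into the following \(2^{k}\) subintervals:

\[ \left[\frac{(n-1)\,t_{f}}{2^{k}},\ \frac{n\,t_{f}}{2^{k}}\right),\qquad n=1,2,\ldots ,2^{k}. \]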
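To make the partition concrete, here is a minimal sketch of a piecewise Chebyshev basis on these \(2^k\) subintervals. It assumes that on the n-th subinterval \(\phi_{nm}(t)=T_m(\tau)\), where \(\tau\in[-1,1)\) is obtained by mapping that subinterval linearly onto \([-1,1)\), and that \(\phi_{nm}\) vanishes elsewhere. The normalization constants of the actual Chebyshev wavelet definition are omitted (they only rescale the coefficients), indices are zero-based, and `wavelet_basis` is a hypothetical helper name.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def wavelet_basis(k, M, t, tf=1.0):
    """Matrix Phi with Phi[n*M + m, i] = phi_nm(t_i) (zero-based n, m).
    ASSUMPTION: phi_nm = T_m(tau) on the n-th subinterval [n*h, (n+1)*h),
    h = tf / 2^k, tau in [-1, 1); wavelet normalisation constants omitted."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    N = 2 ** k
    h = tf / N
    seg = np.minimum((t // h).astype(int), N - 1)   # t = tf goes to the last piece
    tau = 2.0 * (t - seg * h) / h - 1.0              # local variable on that piece
    Phi = np.zeros((N * M, t.size))
    cols = np.arange(t.size)
    for m in range(M):
        Phi[seg * M + m, cols] = C.chebval(tau, [0] * m + [1])  # T_m(tau)
    return Phi


# Usage: |t - 1/2| is linear on each half of [0, 1], so k = 1, M = 2 represents it exactly
t = np.linspace(0.0, 1.0, 101)
alpha = np.array([0.25, -0.25, 0.25, 0.25])   # coefficients ordered segment by segment
x_hat = alpha @ wavelet_basis(1, 2, t)
print(np.allclose(x_hat, np.abs(t - 0.5)))    # True
```

The example shows why a piecewise basis suits functions with kinks: \(|t-\tfrac12|\) is not a polynomial on [0, 1], yet it is reproduced exactly once the breakpoint coincides with a subinterval boundary.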

In fact, \(Q_{2^{k}M-1}\subset Q\) is the class of combinations of Chebyshev wavelet functions involving \(2^{k}\) polynomials, each of degree at most \((M-1)\). Thus, the state variable (4) and the control variable (5) can be written as follows:

By substituting (6) and (7) into performance index (3), we have

or

Also, substituting (6) and (7) into (1) converts it to:

or

and the initial condition (2) is replaced by the following equality constraint:

Furthermore, constraints must be added to ensure the continuity of the state variables across the subintervals. There are \((2^{k}-1)\) interior points at which continuity has to be enforced, namely

\[ t_{n}=\frac{n\,t_{f}}{2^{k}},\qquad n=1,2,\ldots ,2^{k}-1. \]

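Hence, there are \((2^{k}-1)\) equality constraints that must be satisfied. Writing \(\hat{x}_{n}\) for the approximation of the state on the n-th subinterval, these constraints can be expressed by the following system of equations:

\[ \hat{x}_{n}\Big (\frac{n\,t_{f}}{2^{k}}\Big )=\hat{x}_{n+1}\Big (\frac{n\,t_{f}}{2^{k}}\Big ),\qquad n=1,2,\ldots ,2^{k}-1. \]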
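Assuming, as a simplification, that on each subinterval the state is expanded as \(\hat{x}_n(t)=\sum_{m}\alpha_{nm}T_m(\tau)\) with a local variable \(\tau\in[-1,1]\) (wavelet normalization constants omitted), the initial condition and the continuity conditions become linear equations in the coefficients, because \(T_m(1)=1\) and \(T_m(-1)=(-1)^m\). The sketch below assembles them as \(A\alpha=b\), with coefficients ordered segment by segment; `equality_constraints` is a hypothetical helper name, and the scalar-state case is assumed.

```python
import numpy as np


def equality_constraints(k, M, x0):
    """Return (A, b) with A @ alpha = b encoding x_hat(0) = x0 and continuity of
    x_hat at the 2^k - 1 interior breakpoints (scalar state, zero-based indices).
    ASSUMED basis: T_m(tau) on each subinterval, tau in [-1, 1]."""
    N = 2 ** k
    signs = (-1.0) ** np.arange(M)               # T_m(-1) = (-1)^m
    A = np.zeros((N, N * M))
    b = np.zeros(N)
    A[0, :M] = signs                             # first piece evaluated at t = 0
    b[0] = x0
    for n in range(N - 1):                       # breakpoint between pieces n, n+1
        A[n + 1, n * M:(n + 1) * M] = 1.0        # left piece at tau = 1
        A[n + 1, (n + 1) * M:(n + 2) * M] = -signs   # minus right piece at tau = -1
    return A, b


# Usage (k = 1, M = 2): these coefficients describe a continuous piecewise-linear
# function with value 0.5 at t = 0, so both constraints hold.
A, b = equality_constraints(k=1, M=2, x0=0.5)
alpha = np.array([0.25, -0.25, 0.25, 0.25])
print(np.allclose(A @ alpha, b))  # True
```

There is one row for the initial condition plus \(2^k-1\) continuity rows, matching the count given above.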

Consequently, the minimization of (8) subject to constraints (9)–(11) can be written as the following optimization problem:

Subject to:

where,

In fact, the optimal control problem (1)–(3) is converted into the optimization problem (12)–(13), and the optimal values of the vector \([\alpha ^*,\gamma ^*]\) can now be obtained using a standard quadratic programming method. The above results are summarized in the following algorithm, whose main idea is to convert the optimal control problem into an optimization problem.
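When the approximated cost is quadratic in the coefficients and all constraints are linear, as for a linear system with a quadratic performance index, a problem like (12)–(13) is an equality-constrained quadratic program. One standard way to solve such a program, sketched below under that assumption, is to solve its linear KKT system; problems with nonlinear dynamics or costs would instead need a general nonlinear programming solver. The helper `solve_eq_qp` and the symbols H, c, A, b are hypothetical and do not correspond to the paper's notation.

```python
import numpy as np


def solve_eq_qp(H, c, A, b):
    """Minimise 0.5 z^T H z + c^T z subject to A z = b by solving the KKT system
        [H  A^T] [z  ]   [-c]
        [A   0 ] [lam] = [ b]
    (assumes the KKT matrix is nonsingular, e.g. H positive definite and
    A of full row rank)."""
    n, p = H.shape[0], A.shape[0]
    K = np.block([[H, A.T], [A, np.zeros((p, p))]])
    sol = np.linalg.solve(K, np.concatenate([-c, b]))
    return sol[:n], sol[n:]          # minimiser z and Lagrange multipliers


# Toy check: minimise (z1^2 + z2^2)/2 subject to z1 + z2 = 1
z, lam = solve_eq_qp(np.eye(2), np.zeros(2), np.array([[1.0, 1.0]]), np.array([1.0]))
print(z)  # approximately [0.5, 0.5]
```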

Algorithm:

Input: Optimal control problem (1)–(3).

Output: The approximated optimal trajectory, approximated optimal control and approximated performance index J.

Step 0: Choose k and M.

Step 1: Approximate the state and control variables by Chebyshev wavelet series of degree \((M-1)\), as in equations (6) and (7).

Step 2: Find an expression for \(\hat{J}\) from equation (8).

Step 3: Determine the set of equality constraints (9)–(11) and form the matrix P.

Step 4: Determine the optimal parameters \([\alpha ^{*},\gamma ^{*}]\) by solving the optimization problem (12)–(13), and substitute these parameters into equations (6), (7) and (8) to find the approximated optimal trajectory, approximated optimal control and approximated performance index J, respectively.

Step 5: Increase k or M to obtain better approximations of the trajectory, control and performance index.
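The steps above can be sketched end to end for a simple scalar linear-quadratic test problem chosen here only for illustration: minimize \(\tfrac12\int_0^{1}(x^2+u^2)\,\mathrm{d}t\) subject to \(\dot x=-x+u\), \(x(0)=1\). Several details are assumptions of this sketch rather than statements about the paper's implementation: both variables use the unnormalized piecewise basis \(T_m(\tau)\) on each of the \(2^k\) subintervals, the state equation is imposed at M Chebyshev–Gauss collocation points per subinterval (for this linear problem the residual is a polynomial of degree M−1, so it then vanishes identically), and the resulting QP is solved through its KKT system. The function names `T`, `dT` and `solve_lq` are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as C
from numpy.polynomial import legendre as Lg


def T(m, s):
    """Chebyshev polynomial T_m evaluated at s."""
    return C.chebval(s, [0] * m + [1])


def dT(m, s):
    """Derivative T_m'(s)."""
    return C.chebval(s, C.chebder([0] * m + [1]))


def solve_lq(k, M, a=-1.0, b=1.0, x0=1.0, tf=1.0):
    """Sketch of Steps 0-4 for the hypothetical problem
    min 0.5*int_0^tf (x^2 + u^2) dt  s.t.  x' = a x + b u,  x(0) = x0.
    Returns (J_hat, alpha, gamma); coefficients ordered segment by segment."""
    N, h = 2 ** k, tf / 2 ** k
    nv = N * M                                    # coefficients per variable

    # Step 2: J_hat = 0.5 z^T H z, H = blockdiag(G, G), G = Gram matrix of the basis
    q, w = Lg.leggauss(M + 1)                     # exact for these polynomial products
    Tq = np.array([T(m, q) for m in range(M)])
    G_seg = (h / 2) * (Tq * w) @ Tq.T             # int T_i T_j dt on one subinterval
    G = np.kron(np.eye(N), G_seg)
    Z = np.zeros((nv, nv))
    H = np.block([[G, Z], [Z, G]])

    # Step 3: equality constraints P z = r
    rows, rhs = [], []
    colloc = np.cos((2 * np.arange(M) + 1) * np.pi / (2 * M))
    for n in range(N):
        for s in colloc:                          # x' - a x - b u = 0 at tau = s
            row = np.zeros(2 * nv)
            for m in range(M):
                row[n * M + m] = (2 / h) * dT(m, s) - a * T(m, s)
                row[nv + n * M + m] = -b * T(m, s)
            rows.append(row)
            rhs.append(0.0)
    signs = (-1.0) ** np.arange(M)                # T_m(-1)
    row = np.zeros(2 * nv)
    row[:M] = signs                               # x_hat(0) = x0
    rows.append(row)
    rhs.append(x0)
    for n in range(N - 1):                        # continuity at interior breakpoints
        row = np.zeros(2 * nv)
        row[n * M:(n + 1) * M] = 1.0              # left piece at tau = 1
        row[(n + 1) * M:(n + 2) * M] = -signs     # right piece at tau = -1
        rows.append(row)
        rhs.append(0.0)
    P, r = np.array(rows), np.array(rhs)

    # Step 4: solve the equality-constrained QP via its KKT system
    p = P.shape[0]
    K = np.block([[H, P.T], [P, np.zeros((p, p))]])
    z = np.linalg.solve(K, np.concatenate([np.zeros(2 * nv), r]))[:2 * nv]
    return 0.5 * z @ H @ z, z[:nv], z[nv:]


# Step 5: refine k or M and watch J_hat decrease
for k, M in [(1, 3), (1, 4), (2, 4)]:
    print(k, M, solve_lq(k, M)[0])
```

Because every candidate in this sketch satisfies the state equation and the initial condition exactly, each computed \(\hat J\) is an upper bound on the optimal cost, and enlarging k or M can only lower it. For this particular test problem the optimal cost follows from the Riccati equation as \(J^*=\tfrac12\left(\sqrt2\tanh\left(\sqrt2+\operatorname{artanh}(1/\sqrt2)\right)-1\right)\approx 0.19291\).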

5 Convergence analysis

The following theorem and lemma establish the convergence of the proposed method.

Theorem 1

Let \(f \in C([a,b],\mathbb {R})\). Then there is a sequence of polynomials \(P_{n}(x)\) that converges uniformly to f(x) on [a, b].

Proof

See Rudin (1976). \(\square \)
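Theorem 1 is an existence result. For a concrete Lipschitz-continuous example, interpolation at Chebyshev points already produces such a sequence; the sketch below, our own illustration using NumPy's `Chebyshev.interpolate`, measures the maximum error for \(f(x)=|x|\), a function with a kink, as the degree grows. Interpolation does not converge for every continuous function, so this illustrates the theorem rather than proving it.

```python
import numpy as np
from numpy.polynomial import Chebyshev


def max_interp_error(f, deg, domain=(-1.0, 1.0), n_test=20001):
    """Max |f - p| on a fine grid, where p interpolates f at Chebyshev points."""
    p = Chebyshev.interpolate(f, deg, domain=list(domain))
    x = np.linspace(domain[0], domain[1], n_test)
    return np.max(np.abs(f(x) - p(x)))


errors = [max_interp_error(np.abs, d) for d in (4, 16, 64)]
print(errors)  # decreases as the degree grows
```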

Lemma 1

If \(\beta _{2^{k}M-1}=\inf _{Q_{2^{k}M-1}} J\) for \(k, M = 1,2,3,\ldots \), where \(Q_{2^{k}M-1}\) is the subset of Q consisting of all piecewise-continuous functions involving \(2^{k}\) polynomials, each of degree at most \((M-1)\), then \( \lim _{k,M \longrightarrow \infty } (\beta _{2^{k}M-1})=\beta \), where \(\beta =\inf _{Q} J\).

Proof

If we define,

then,

where,

Now, let \((x^{*}_{2^{k}M-1}(t),u^{*}_{2^{k}M-1}(t))\in \hbox {Argmin}\{J(x(t),u(t)):(x(t),u(t)) \in Q_{2^{k}M-1}\}\), then,

in which \(Q_{2^{k}M-1}\) is the class of combinations of piecewise-continuous Chebyshev wavelet functions involving \(2^{k}\) polynomials, each of degree at most \((M-1)\); so,

Furthermore, according to \(Q_{2^{k}M-1}\subset Q_{2^{k}M}\), we have

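Thus, \(\beta _{2^{k}M}\le \beta _{2^{k}M-1}\), which means that \(\beta _{2^{k}M-1}\) is a non-increasing sequence. Moreover, since \(Q_{2^{k}M-1}\subset Q\), every term satisfies \(\beta _{2^{k}M-1}\ge \beta \); hence the sequence is bounded below and therefore convergent. This completes the proof, that is,

\[ \lim _{k,M\longrightarrow \infty }\beta _{2^{k}M-1}=\beta . \]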
\(\square \)
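The key step of the proof is that minimizing the same functional over a larger set cannot give a larger infimum. As an analogy only (this is not the optimal control problem itself), the sketch below shows the same effect for a simpler quadratic functional: the discrete least-squares error of fitting \(|x-0.3|\) on [−1, 1] by Chebyshev series of increasing degree, whose approximation spaces are nested.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

# Analogy only: minimise the discrete least-squares error of fitting f(x) = |x - 0.3|
# on [-1, 1] over the nested spaces of Chebyshev series of degree <= d.
x = np.linspace(-1.0, 1.0, 2001)
y = np.abs(x - 0.3)
residuals = []
for d in range(1, 13):
    coef = C.chebfit(x, y, d)                     # least-squares fit of degree d
    residuals.append(np.linalg.norm(y - C.chebval(x, coef)))
print(np.round(residuals, 4))                     # non-increasing in d
```

As in the lemma, monotonicity together with a lower bound gives convergence of the sequence of minima; identifying the limit with the global infimum relies on a density result such as Theorem 1.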

6 Numerical examples

In this section, four examples are considered to illustrate the efficiency of the proposed method. The first example is a linear time-invariant system with one state variable, and the second is a linear time-invariant system with two state variables. The third example is a linear time-varying system, for which the optimal state and control are obtained, and the fourth is a linear time-invariant singular system, for which the optimal control is obtained.

Example 1

(Feldbaum problem) (El-Gindy et al. 1995; Fleming and Rishel 1975; Saberi Nik et al. 2012; Yousefi et al. 2010) Find the optimal control u(t) that minimizes:

Subject to:

The exact solutions of state and control variables in this problem are (Saberi Nik et al. 2012):

where,

We solved this problem for \(k=1\) and \(M=3\). The state and control variables can be approximated as follows:

where,

By substituting (17) and (18) into (14), the approximated performance index \(\hat{J}\) is obtained as:

and by substituting (17) and (18) into (15) the following equality constraints can be obtained:

There is only one point at which the continuity of the state variable must be enforced, namely \(t=\frac{1}{2}\).

So there is one equality constraint, \(\hat{x}_1(t)\vert _{t=\frac{1}{2}}=\hat{x}_2(t)\vert _{t=\frac{1}{2}}\), given by:

Also, from initial condition (16), another constraint \(\hat{x}_1(t)\Big \vert _{t=0}=1\) is produced that can be shown as:

Minimization of (19) subject to constraints (20)–(22) yields the value 0.1930101957 for \(\hat{J}\). The optimal approximated trajectory \(\hat{X}(t)\) is:

and the optimal approximated control \(\hat{U}(t)\) can be obtained as:

In Table 1, we present the obtained results for J with our proposed method for different M and k. In Table 2, a comparison between our proposed method and different methods that have been introduced for solving this problem is done. Also, Fig. 1 shows the obtained solutions of control variable for cases \(M=3,k=1\) and \(M=4,k=3\) compared with the exact solution and the error function \(|u^{*}(t)-u(t)|\) for \(M=4,k=3\).

As the results in Table 1 show, increasing k or M gives better values of the performance index J. In particular, for \(k=3,M=4\) the computed value agrees with the exact solution to the precision reported. Also, Table 2 shows that our results compare well with those of the methods presented by Abu Haya (2011), Fakharian et al. (2010), Kafash et al. (2013) and Saberi Nik et al. (2012).

Example 2

(Abu Haya 2011; Hsieh 1965; Jaddu 1998; Neuman and Sen 1973; Vlassenbroeck and Van Dooren 1988) Find an optimal control u(t) that minimizes the following performance index:

Subject to:

We used our proposed method to solve this example and report the obtained values of J for \(M=4,k=3\), \(M=5,k=3\) and \(M=6,k=3\) in Table 3. In Table 4, we compare our proposed method with different methods that have been used to solve this problem. Also, the approximated control obtained for different M and k is plotted in Fig. 2.

From Table 4, it can be seen that our proposed method yields a very precise solution, which is better than the results reported in Abu Haya (2011), Hsieh (1965), Jaddu (1998), Majdalawi (2010), Neuman and Sen (1973) and Vlassenbroeck and Van Dooren (1988).

Example 3

(Abu Haya 2011; Elnagar 1997) Find the optimal control u(t) which minimizes:

Subject to:

We solved this problem for \(M=4,k=1\), \(M=4,k=2\) and \(M=5,k=2\) and present the obtained values of J in Table 5. The approximated control in these cases is plotted in Fig. 3. In Table 6, our proposed method is compared with different methods that have been used to solve this problem.

Example 4

(Alirezaei et al. 2012; Dziurla and Newcomb 1979) Consider the following singular system:

with the performance index:

The system has the following exact solution (Dziurla and Newcomb 1979):

We solved this problem and present the values of x(t) and y(t) obtained with our proposed method, together with the exact solutions of these variables, in Tables 7 and 8, respectively. Also, Fig. 4 shows the control obtained for \(M=3,k=1\) and \(M=7,k=2\) compared with the exact solution, together with the error function \(|u^{*}(t)-u(t)|\) for \(M=7,k=2\).

7 Conclusion

In this paper, a new direct algorithm based on state-control parameterization has been presented. Chebyshev wavelets were used as orthogonal basis functions to parameterize the state and control variables. An example with one state variable and another with two state variables were solved. To further illustrate the efficiency of the proposed method, two examples consisting of a linear time-varying system and a linear time-invariant singular system were also considered and their solutions were computed. The obtained solutions show that our proposed method gives results comparable with other similar works. Thus, one advantage of the proposed method is that it produces an accurate approximation of the exact solution. Another is that it does not require computing an operational matrix of derivative or of integration to convert the control problem into an optimization problem.

References

Abu Haya AA (2011) Solving optimal control problem via Chebyshev wavelet. The Islamic University of Gaza, Gaza

Alirezaei E, Samavat M, Vali MA (2012) Optimal control of linear time invariant singular delay systems using the orthogonal functions. Appl Math Sci 6:1877–1891

Babolian E, Fattahzadeh F (2007) Numerical solution of differential equations by using Chebyshev wavelet operational matrix of integration. Appl Math Comput 188(1):417–426

Basar T (1995) Dynamic noncooperative game theory, vol 200. Academic Press, California

Chen CF, Hsiao CH (1997) Haar wavelet method for solving lumped and distributed-parameter systems. IEE Proc Control Theory Appl 144(2):87–94

Dziurla B, Newcomb RW (1979) The Drazin inverse and semistate equations. In: Proceedings of 4th international symposium on mathematical theory of networks and systems, pp 283–289

El-Gindy TM, El-Hawary HM, Salim MS, El-Kady M (1995) A Chebyshev approximation for solving optimal control problems. Comput Math Appl 29(6):35–45

Elnagar G (1997) State-control spectral Chebyshev parameterization for linearly constrained quadratic optimal control problems. J Comput Appl Math 79:19–40

Fakharian A, Hamidi Beheshti MT, Davari A (2010) Solving the Hamilton–Jacobi–Bellman equation using Adomian decomposition method. Int J Comput Math 87(12):2769–2785

Fleming WH, Rishel RW (1975) Deterministic and stochastic optimal control. Springer, New York

Ghasemi M, Tavassoli Kajani M (2011) Numerical solution of time-varying delay systems by Chebyshev wavelets. Appl Math Model 35(11):5235–5244

Hsieh HC (1965) Synthesis of adaptive control systems by function space methods. In: Leondes CT (ed) Advances in control systems, vol 2. Academic Press, New York, pp 117–208

Jaddu HM (1998) Numerical methods for solving optimal control problems using Chebyshev polynomials. Ph.D. thesis, School of Information Science, Japan Advanced Institute of Science and Technology

Kafash B, Delavarkhalafi A, Karbassi SM (2012) Application of Chebyshev polynomials to derive efficient algorithms for the solution of optimal control problems. Sci Iran D 19(3):795–805

Kafash B, Delavarkhalafi A, Karbassi SM (2013) Application of variational iteration method for Hamilton–Jacobi–Bellman equations. Appl Math Model 37:3917–3928

Majdalawi A (2010) An iterative technique for solving nonlinear quadratic optimal control problem using orthogonal functions. MSc thesis, Al-Quds University

Neuman CP, Sen A (1973) A suboptimal control algorithm for constrained problems using cubic splines. Automatica 9:601–613

Razzaghi M, Yousefi S (2001) The Legendre wavelets operational matrix of integration. Int J Syst Sci 32(4):495–502

Rudin W (1976) Principles of mathematical analysis, 3rd edn. McGraw-Hill, New York

Saberi Nik H, Effati S, Shirazian M (2012) An approximate-analytical solution for the Hamilton–Jacobi–Bellman equation via homotopy perturbation method. Appl Math Model 36:5614–5623

Vlassenbroeck J, Van Dooren R (1988) A Chebyshev technique for solving nonlinear optimal control problems. IEEE Trans Autom Control 33(4):333–340

Yousefi SA, Dehghan M, Lotfi A (2010) Finding the optimal control of linear systems via He's variational iteration method. Int J Comput Math 87(5):1042–1050


Cite this article

Rafiei, Z., Kafash, B. & Karbassi, S.M. A new approach based on using Chebyshev wavelets for solving various optimal control problems. Comp. Appl. Math. 37 (Suppl 1), 144–157 (2018). https://doi.org/10.1007/s40314-017-0419-z


DOI: https://doi.org/10.1007/s40314-017-0419-z