Abstract

Secondary brain injury after neurotrauma comprises a host of distinct, potentially concurrent and interacting mechanisms that may exacerbate the primary brain insult. Multimodality neuromonitoring measures multiple aspects of brain physiology in order to characterize the signatures of these different pathomechanisms and to detect, treat, or prevent potentially reversible secondary brain injuries. The most studied invasive parameters include intracranial pressure (ICP), cerebral perfusion pressure (CPP), autoregulatory indices, brain tissue partial oxygen tension, and measures of tissue energy metabolism such as the lactate/pyruvate ratio. Understanding the local metabolic state of brain tissue in order to infer pathology and develop appropriate management strategies is an area of active investigation. Several clinical trials are underway to define the role of brain tissue oxygenation monitoring and electrocorticography in conjunction with other multimodal neuromonitoring information, including ICP and CPP monitoring. Identifying an optimal CPP to guide individualized management of blood pressure and ICP has been shown to be feasible, but definitive clinical trial evidence is still needed. Further work is needed to define the patterns that emerge from integrated measurements of metabolism, pressure, flow, oxygenation, and electrophysiology, and to correlate them clinically. Identifying pathophysiologic targets and developing precise critical care strategies that address their underlying causes promise to mitigate secondary injuries and may improve patient outcome. Advances in clinical trial design are poised to establish new standards for the use of multimodality neuromonitoring to guide individualized clinical care.

Introduction

The worldwide incidence of neurotrauma is estimated at more than 27 million cases annually, and nearly 5.5 million people suffer severe traumatic brain injury (sTBI) requiring intensive care [1, 2]. Despite advances in management practices, sTBI continues to carry high mortality (20–40%) and substantial morbidity among survivors, of whom an estimated half have moderate-to-severe disability even 1 year after injury [3, 4]. The focus of critical care management after sTBI is to prevent, detect, and mitigate secondary brain injuries (SBI), which are known to cause irreversible brain tissue damage and contribute to poor patient outcomes [5,6,7,8]. SBI are characterized by a cascade of biochemical, cellular, and molecular events that underlie evolving structural tissue damage and are compounded by systemic insults such as hypotension and hypoxemia [9]. To conceptualize SBI in a clinically actionable way, the term “brain shock” has been proposed, by analogy with acute systemic circulatory failure, to describe a mismatch between oxygen and energy-substrate supply and demand [10,11,12]. The end result is cellular dysoxia, energy crisis, and, if not reversed, cell death.

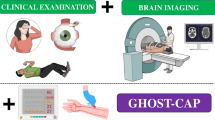

Multimodality neuromonitoring (MNM) integrates and interprets multiple sources of information to better recognize, understand, and respond to the development of SBI. Although there is no universal definition or minimum accepted set of modalities, frequently used measurements include compartmental (e.g., intracranial) and perfusion pressures, tissue oxygenation and metabolism, pressure autoregulation, and electrophysiology [13]. Existing evidence for brain monitoring has focused on individual measurements and their utility in managing patients with sTBI. However, there is growing recognition that integrated, complementary information may be better suited than a one-size-fits-all approach to guide individualized care.

MNM in adults with sTBI typically relies on invasive monitoring devices, including those that measure intracranial pressure (ICP) and derived pressure reactivity indices (e.g., PRx), cerebral perfusion pressure (CPP) and estimates of optimal CPP (CPPOPT), brain tissue partial oxygen tension (PbtO2), cerebral microdialysis (CMD), and electrocorticography (ECoG). Understanding these individual modalities and the evidence supporting each provides important context for why MNM-guided management in sTBI has become the next frontier of clinical trial design aimed at improving patient outcomes.

Individual Modalities

Intracranial Pressure and Derived Indices (CPP, PRx, CPPOPT)

ICP management has been a central tenet, if not a sine qua non, of the critical care of patients with sTBI. ICP measurements provide threshold-based targets for ICP and CPP. Using such targets is the strategy recommended in the Brain Trauma Foundation (BTF) guidelines [14] and is further supported by more recent expert consensus statements [15]. Observational and survey data across different geographical health care settings also suggest that ICP monitoring is the single most common invasive modality used in managing sTBI patients [16,17,18,19]. The current paradigm of managing ICP/CPP is based on escalating tiers of interventions triggered by threshold values. The BTF recommends treating ICP > 22 mm Hg, because cohort-level values above this threshold have been associated with increased mortality (level IIB recommendation), and incorporating clinical and radiographic data into management decisions (level III) [14, 20]. Intracranial hypertension (IHT) has been strongly associated with mortality in observational investigations [20,21,22,23]. Additionally, several studies suggest that care in specialized centers practicing protocol-driven therapy (typically including ICP monitoring) is associated with lower mortality and better outcomes in patients with sTBI [24,25,26,27,28,29]. However, benefit has not been reported universally: conflicting studies have found either no effect or harm from ICP-guided management [30,31,32,33,34]. The only available randomized controlled trial (RCT), conducted in Bolivia and Ecuador, compared management of sTBI patients using invasive ICP monitoring vs. imaging and clinical examination alone and found no significant between-group differences in morbidity or mortality at 6 months post-injury [35].

The dominant treatment paradigm, based on single population-derived ICP/CPP thresholds, has been criticized for several reasons. First, the BTF-recommended ICP threshold for clinical intervention was derived from a single cohort-level association between mean ICP, calculated over several days of monitoring, and dichotomized functional outcome; it follows that this value is an epidemiologic rather than a patient-specific threshold. Notably, a more recent investigation (including TBI and non-TBI patients) using principal component analysis and elastic net regression identified thresholds below 19 mm Hg as associated with better outcomes [36]. In other words, the analytic approach used to identify outcome-discriminating thresholds may change the recommended threshold. A further issue with the BTF approach is that the methodology used to derive this value cannot differentiate a potentially modifiable therapeutic target from a mere surrogate of injury severity. Second, triggers for intervention are based on unidimensional excursions above a given number, without accounting for the degree and duration of intracranial hypertension, i.e., the dose or burden of insult. Third, a fixed threshold (e.g., ICP above 22 mm Hg) ignores the phenotypic diversity of sTBI, individual patient characteristics, and the temporal progression of injury. Additionally, a focus on ICP/CPP alone ignores other crucial pathophysiologic variables that provide insight into the relationships between cerebral blood flow, oxygen delivery and utilization, and cerebral metabolism. In this sense, the focus falls on what can be conveniently measured rather than on what matters for detecting or predicting SBI. Finally, the risk/benefit ratio of initiating potentially harmful interventions based on these fixed goals requires context-specific and dynamic consideration [37,38,39].
These criticisms are partly acknowledged in the BTF guidelines, which suggest that, rather than accepting a generic, absolute ICP threshold, clinicians should attempt to individualize thresholds based on patient characteristics, on risk/benefit considerations for initiating treatment of elevated ICP, and on other important variables that can be incorporated into decision-making.

Continuous ICP monitoring offers the ability not only to trend mean values but also to derive metrics that describe intracranial compliance and pressure–volume compensatory reserve [40]. The pulsatile ICP waveform can be understood physiologically as a surrogate of cerebral blood volume (CBV) and, when correlated with spontaneous arterial blood pressure (ABP) fluctuations, offers a window into the quality of cerebrovascular pressure reactivity [40]. Cerebrovascular pressure reactivity is defined as the ability of vascular smooth muscle to respond to changes in transmural pressure and can be investigated by observing the relationship (or phase) between continuous ICP and ABP waveforms [41]. In pressure-reactive conditions, a rise in ABP leads within 5 to 15 s to vasoconstriction, which reduces CBV and consequently ICP; in pressure-passive conditions, ABP, CBV, and ICP all move in the same direction. A computer-aided method developed at Cambridge University calculates and displays a moving correlation index between spontaneous slow waves (20–200 s) of ABP and ICP. This index, the pressure reactivity index (PRx), ranges from −1 to +1. A negative or zero value reflects a normally reactive vascular bed, whereas positive values reflect passive, nonreactive vessels. PRx has been shown to correlate independently with clinical outcome after TBI, and discrimination thresholds for survival (PRx > 0.25) and for favorable outcome (PRx < 0.05) have been reported [20]. In addition, a change in PRx from zero or negative to positive has been used to identify the lower inflection point of the pressure–flow autoregulation curve under experimental conditions [42]. PRx plotted against CPP shows a U-shaped curve whose minimum theoretically corresponds to the plateau of the autoregulation curve; the CPP at this minimum has been termed the “optimal CPP” (CPPOPT).
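The moving-correlation principle behind PRx can be sketched in Python as follows. This is a minimal illustration, not the Cambridge software: it assumes ABP and ICP are already artifact-free and time-synchronized, and the 10-s averaging blocks, 30-block window (about 5 min), and 6-block step (about 60 s) are commonly reported settings used here as assumed defaults. The function names `block_means` and `prx` are hypothetical.

```python
import numpy as np

def block_means(x, fs, block_s=10.0):
    """Average a signal in non-overlapping blocks (default 10 s).

    Block averaging acts as a crude low-pass filter that suppresses pulse and
    respiratory components while keeping the slow vasomotor waves.
    """
    n = int(round(fs * block_s))
    m = len(x) // n  # number of complete blocks; a trailing partial block is dropped
    return np.asarray(x[: m * n], dtype=float).reshape(m, n).mean(axis=1)

def prx(abp, icp, fs, block_s=10.0, window=30, step=6):
    """Moving Pearson correlation between block-averaged ABP and ICP.

    window and step are counted in blocks (assumed defaults: 30 blocks, 6 blocks).
    Returns one PRx value per window position, each bounded in [-1, 1].
    """
    a = block_means(abp, fs, block_s)
    i = block_means(icp, fs, block_s)
    values = []
    for start in range(0, len(a) - window + 1, step):
        seg_a = a[start:start + window]
        seg_i = i[start:start + window]
        values.append(np.corrcoef(seg_a, seg_i)[0, 1])
    return np.array(values)
```

With synthetic data in which ICP follows ABP slow waves (pressure-passive), the mean PRx is strongly positive; if ICP moves opposite to ABP (pressure-reactive), it is strongly negative. Real recordings are far noisier, which is why derived indices are usually averaged over long periods.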
Perfusion pressures below CPPOPT (increased mortality) and above it (increased disability) have been associated with worse outcomes [43, 44]. PRx has also recently been used to derive patient-specific, PRx-weighted ICP thresholds [45, 46]. When these individualized thresholds were used to quantify ICP burden as ICP dose (pressure × time), they were stronger predictors of 6-month clinical outcome than ICP doses derived from the generic thresholds of 20 and 25 mm Hg, despite the larger absolute ICP doses obtained with the standard thresholds [47]. These studies suggest that the impact of ICP on clinical outcome is critically linked to the state of cerebrovascular pressure reactivity: pressure-passive conditions add vulnerability in the presence of intracranial hypertension.
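ICP dose, as used above, is the area of the ICP curve above a threshold (pressure × time). A minimal sketch follows; it assumes evenly sampled ICP with missing samples stored as NaN and integrates with a simple rectangle rule. The `individual_threshold` value in the usage lines is a hypothetical stand-in for a PRx-derived threshold; how such a threshold is estimated is not shown here.

```python
import numpy as np

def icp_dose(icp, sample_interval_s, threshold):
    """ICP dose above `threshold`, in mm Hg·h (area above the threshold).

    Samples at or below the threshold contribute zero; NaN samples are ignored.
    """
    icp = np.asarray(icp, dtype=float)
    excess = np.clip(icp - threshold, 0.0, None)  # mm Hg above threshold, per sample
    return float(np.nansum(excess) * sample_interval_s / 3600.0)

# Hypothetical 2-h trace sampled once per minute: 1 h at 18 mm Hg, then 1 h at 26 mm Hg.
icp = np.concatenate([np.full(60, 18.0), np.full(60, 26.0)])
individual_threshold = 23.0  # hypothetical PRx-derived threshold, illustration only
for label, thr in [("generic 20", 20.0), ("generic 25", 25.0), ("individual", individual_threshold)]:
    print(label, round(icp_dose(icp, sample_interval_s=60.0, threshold=thr), 2))
```

The same trace yields different doses depending on the threshold, which is why the choice between a generic and an individualized threshold changes how insult burden is quantified.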

To assess and monitor cerebral autoregulation (CA) in the absence of direct CBF measurements, surrogates of CBF are used. Recently, a group of 25 experts reviewed the available methodological and clinical literature, aiming to reach consensus on methods to assess CA clinically [48]. The methods they reviewed can be categorized by CBF surrogate: transcranial Doppler flow velocity (transfer function analysis, ARI, Mx), ICP (PRx, L-PRx, LAx), brain tissue oxygenation (ORx, TOx, THx), and microdialysis-derived glutamate. Only two methods have employed regional CBF measurements (Lx, CBFx). These metrics are time-synchronized, averaged moving correlation coefficients between changes in ABP (or CPP) and the corresponding CBF surrogate. Derived indices are noisy and require time-averaging over hours; primary signals must be filtered to remove high-frequency transients and oscillations in order to sample slow vasomotor waves [49]. PRx was found to be the most studied and therefore most accepted CA assessment method, despite a lack of clear validation, concerns that it cannot capture differential behavior of CA with increasing versus decreasing CPP, poorly understood interference from other sources of CBF regulation (e.g., metabolism and oxygen/ventilation), poor signal-to-noise ratio, and the need for additional software to derive these indices. The purpose of continuous autoregulation monitoring is to enable precise, individualized approaches that help alleviate cerebral dysoxia and energy metabolic crisis. At the same time, more data are needed on how to incorporate autoregulation monitoring into treatment protocols that shift the benefit–risk balance of interventions toward improved patient outcomes.

A first step toward establishing the feasibility of implementation was the completion of the CPPOPT Guided Therapy: Assessment of Target Effectiveness (COGiTATE) phase II clinical trial (NCT02982122) [50]. COGiTATE was a European, four-center, non-blinded phase II RCT. The study was powered to detect a 20% increase in the percentage of monitored time with CPP within ± 5 mm Hg of CPPOPT (primary outcome). Patients were randomized to a fixed CPP target of 60–70 mm Hg (control) or to a CPPOPT target (intervention); 28 patients were randomized to the control group and 32 to the intervention group. In the intervention group, CPP was within the CPPOPT target range for 46.5% (95% CI 41.2–58%) of the monitored time, and significantly less time was spent below CPPOPT than in the control group (19.1% vs. 34.6%; p < 0.001). There were no significant between-group differences in therapy intensity level (TIL) or other safety endpoints. COGiTATE suggests that it is feasible to monitor and target CPPOPT in selected neurocritical care units with prior experience with this type of monitoring. Larger prospective studies are needed to further define the safety and efficacy of a CPPOPT-guided treatment strategy.
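Targeting CPPOPT presupposes estimating it from the U-shaped PRx–CPP relationship. The sketch below is a simplified, assumption-laden illustration: it bins paired CPP/PRx samples into 5-mm Hg bins, fits a second-order polynomial to the bin means, and takes the vertex as CPPOPT, returning `None` when the fitted curve is not U-shaped. Published CPPOPT algorithms are more elaborate (e.g., combining multiple time windows) and are not reproduced here. The bin width, minimum bin count, and function names are hypothetical; `fraction_in_target` computes a ± 5 mm Hg time-in-range metric of the kind used as the COGiTATE primary outcome.

```python
import numpy as np

def estimate_cpp_opt(cpp, prx, bin_width=5.0, min_count=5):
    """Estimate CPPopt as the vertex of a quadratic fitted to binned mean PRx.

    Bins with fewer than `min_count` samples are skipped. Returns None if fewer
    than three bins qualify or the fitted parabola opens downward (no minimum).
    """
    cpp = np.asarray(cpp, dtype=float)
    prx = np.asarray(prx, dtype=float)
    valid = ~(np.isnan(cpp) | np.isnan(prx))
    cpp, prx = cpp[valid], prx[valid]
    if cpp.size == 0:
        return None
    # Edges extend past the maximum so every sample falls inside some bin.
    edges = np.arange(np.floor(cpp.min()), cpp.max() + 2 * bin_width, bin_width)
    centers, means = [], []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (cpp >= lo) & (cpp < hi)
        if in_bin.sum() >= min_count:
            centers.append((lo + hi) / 2.0)
            means.append(prx[in_bin].mean())
    if len(centers) < 3:
        return None
    a, b, _ = np.polyfit(centers, means, 2)  # PRx ~ a*CPP^2 + b*CPP + c
    if a <= 0:
        return None
    return float(-b / (2.0 * a))

def fraction_in_target(cpp, cpp_opt, tolerance=5.0):
    """Fraction of valid CPP samples within +/- tolerance mm Hg of cpp_opt."""
    cpp = np.asarray(cpp, dtype=float)
    cpp = cpp[~np.isnan(cpp)]
    if cpp.size == 0:
        return float("nan")
    return float(np.mean(np.abs(cpp - cpp_opt) <= tolerance))
```

Because bin averaging adds only a constant offset to an exact parabola, the vertex is unaffected by the binning itself; in practice, sparse or one-sided CPP coverage is what most often prevents a usable curve, which is why the function can return `None`.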

Continuous monitoring of ICP and derived parameters also opens the way to prediction modeling of impending physiologic crisis events [51]. The current therapeutic paradigm is reactive: fixed, population-based treatment thresholds are observed and acted upon to reduce SBI. However, by the time treatment is initiated, it may be too late. The ability to predict the onset of physiologic crises could give clinicians valuable time to abort these episodes or manage them more effectively, instead of merely reacting to ongoing SBI [52]. Prediction efforts have taken the form of ICP forecasting, using algorithms designed to predict future ICP values, and ICP dose prediction, using algorithms aimed at early warning of impending crisis events [53]. A few successful attempts using high-resolution, high-frequency data from CENTER-TBI and from the BOOST-II trial have recently been published [54,55,56]. These reports show acceptable accuracy and potential clinical utility of prediction models for intracranial hypertension and brain tissue hypoxia; nevertheless, they await prospective validation before being implemented for the prediction and prevention of SBI.

Partial Brain Tissue Oxygen Tension (PbtO2)

In 1956, Clark described the principles of an electrode that could measure oxygen tension polarographically in blood or tissue [57]. Oxygen molecules diffuse through an oxygen-permeable membrane into an electrolyte solution and are reduced at the nearby cathode, generating an electrical current related to the amount of oxygen. Today these measurements are performed with the Licox catheter (Integra Neurosciences; San Diego, CA). The probe has a diameter of 0.5 mm and a measurement area of 13–18 mm²; clinical experience has shown a run-in time of less than 2 h before measurements stabilize. The catheter requires temperature correction using core temperature or, preferably, measured brain temperature. Other technologies use fiberoptic sensors to detect changes in the absorption of light relative to the free diffusion of oxygen molecules. The Neurovent-PTO® (Raumedic, Inc; Mills River, NC) catheter has a diameter of 0.63 mm and a measurement area of 22 mm². In one study, a median run-in time of 8 h was required before measurements stabilized [58]. Both devices have been validated as accurate, although the absolute values they report may differ within clinical margins of error, particularly in the setting of brain tissue hypoxia [59].

A question that has not been fully elucidated concerns the physiologic significance and interpretation of PbtO2 values. Is PbtO2 simply a CBF surrogate, or can it be used as an indicator of the balance between oxygen delivery, demand, and consumption? The current working model suggests that PbtO2 should not be viewed simplistically as a marker of ischemic hypoxia but rather as a complex measure resulting from the various mechanisms involved in the oxygen delivery–utilization pathway [60, 61]. Gupta et al. demonstrated that PbtO2 does not represent end-capillary oxygen tension alone [62]. Subsequently, Menon et al. highlighted the importance of diffusion barriers in the oxygen pathway from blood to the mitochondrial respiratory chain; this barrier is localized in the microvasculature, with structural substrates of vascular collapse, endothelial swelling, and perivascular edema [63]. Diringer et al. found no improvement in the cerebral metabolic rate of oxygen (CMRO2) after normobaric hyperoxia, “disconnecting” PbtO2 from CMRO2 [64]. Rosenthal et al. reinforced the idea that PbtO2 is not closely related to total oxygen delivery or to cerebral oxygen metabolism, and instead identified a parabolic relationship between PbtO2 and the product of CBF and the arteriovenous oxygen tension difference [65]. More recently, Launey et al. performed positron emission tomography (PET) concurrently with PbtO2 and jugular venous bulb oxygen saturation (SJVO2) monitoring and found no association between PET-derived ischemic brain volume (IBV) and SJVO2 or PbtO2 (many individuals with PbtO2 < 15 mm Hg had IBV values within the control range) [66]. The authors inferred that focal PbtO2 monitoring is not reliably associated with the global or regional burden of ischemia.
Another possible inference is that a low PbtO2 with control-range IBV may reflect tissue hypoxia without a compensatory increase in oxygen extraction fraction (OEF), as may be encountered with diffusion-barrier hypoxia, mitochondrial dysfunction, and microvascular shunting [12].

Nonetheless, brain tissue oxygenation has prognostic value: persistently low values have been associated with tissue necrosis and poor clinical outcome [6, 67,68,69]. These observations motivated prospective evaluations of monitoring and targeting PbtO2. An important step forward was a phase II RCT of the safety and efficacy of brain tissue oxygen monitoring. In the Brain Tissue Oxygen Monitoring in Traumatic Brain Injury (BOOST-2) trial, 110 patients were randomized to treatment based on ICP monitoring alone (goal ICP < 20 mm Hg) or on ICP and PbtO2 (PbtO2 goal > 20 mm Hg) [70]. The primary outcome was met: the median fraction of time with brain tissue hypoxia (PbtO2 < 20 mm Hg) was 44% ± 31% in the ICP group vs. 15% ± 21% in the ICP + PbtO2 group (P < 0.00001). There was no significant between-group difference in adverse events, and protocol violations were infrequent. A pre-planned non-futility outcome measure was also met, with a non-significant trend toward lower mortality and better outcome at 6 months in the ICP + PbtO2 group. The investigators concluded that a treatment protocol guided by both ICP and PbtO2 reduces the duration of measured brain tissue hypoxia. Brain tissue oxygenation monitoring in conjunction with ICP monitoring is attracting increasing attention and is currently being studied in three RCTs: BOOST-III in North America (NCT03754114), the Brain Oxygen Neuromonitoring in Australia and New Zealand Assessment Trial (BONANZA) (ACTRN12619001328167), and the French OXY-TC trial (NCT02754063). These are among the largest trials to test MNM-guided management after sTBI and will provide pivotal knowledge on its use to improve patient outcomes.
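The BOOST-2 primary outcome is a time-burden metric: the fraction of monitored time with PbtO2 below 20 mm Hg. A minimal sketch of that calculation, plus a helper that extracts contiguous hypoxic episodes, is shown below. It assumes evenly spaced samples with gaps stored as NaN (excluded from the denominator and treated as breaking an episode); the 10-min minimum episode duration and the function names are illustrative assumptions, not trial definitions.

```python
import numpy as np

def fraction_time_below(values, threshold=20.0):
    """Fraction of valid (non-NaN) samples strictly below `threshold`."""
    v = np.asarray(values, dtype=float)
    v = v[~np.isnan(v)]
    if v.size == 0:
        return float("nan")
    return float(np.mean(v < threshold))

def hypoxic_episodes(values, sample_interval_s, threshold=20.0, min_duration_s=600.0):
    """Return (start, end_exclusive) sample indices of runs below `threshold`.

    Only runs lasting at least `min_duration_s` are kept; NaN samples are
    treated as not-below, so a data gap splits an episode.
    """
    v = np.asarray(values, dtype=float)
    valid = ~np.isnan(v)
    below = np.zeros(v.shape, dtype=bool)
    below[valid] = v[valid] < threshold
    # Pad with False so every run has a rising (+1) and a falling (-1) edge.
    padded = np.concatenate(([False], below, [False])).astype(int)
    edges = np.flatnonzero(np.diff(padded))
    starts, ends = edges[0::2], edges[1::2]
    min_len = int(np.ceil(min_duration_s / sample_interval_s))
    return [(int(s), int(e)) for s, e in zip(starts, ends) if e - s >= min_len]

# Hypothetical 1-h PbtO2 trace, one sample per minute (mm Hg).
pbto2 = [25.0] * 30 + [15.0] * 15 + [18.0] * 5 + [25.0] * 10
print(fraction_time_below(pbto2), hypoxic_episodes(pbto2, sample_interval_s=60.0))
```

Reporting both the fraction of time and the individual episodes separates a single prolonged insult from many brief dips that add up to the same burden.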

Cerebral Microdialysis

Cerebral microdialysis (CMD) is performed using an intracranial catheter with a 20 kDa cutoff microdialysis membrane placed within subcortical white matter. At a steady flow rate of 0.3 µL/min, the perfusate freely exchanges with interstitial fluid, and the resulting dialysate is collected over the course of an hour. Small molecules representing the substrates and products of glycolytic energy metabolism, including glucose, lactate, and pyruvate, are measured using enzymatic reagents and colorimetric sensors. Cell injury markers such as glycerol and glutamate are also measured. In an expert consensus summary statement, CMD was recommended as a monitor for several SBI mechanisms, including ischemia, hypoxia, energy failure, and neuroglycopenia [71, 72].

In a meta-analysis of observational studies of patients with TBI, the lactate/pyruvate ratio (LPR) was associated with poor functional outcome at 3–6 months when values exceeded 25 or 40, depending on the study [8]. LPR elevations > 40 define metabolic crisis and have been linked with non-ischemic reductions in CMRO2, suggesting reversible areas of mitochondrial dysfunction resulting from TBI [73, 74]. These elevations are associated with increased ICP or decreased CPP [8], seizures [75], and spreading depolarizations [76]. Furthermore, metabolic crisis is associated with long-term structural changes: one study documented frontal atrophy 6 months after trauma [77], and in a recent series of 14 patients, LPR correlated (r = −0.68) with total cortical volume loss of 1.9% (95% CI 1.7–4.4%) [78].

When LPR is elevated, studies suggest that pyruvate < 70 µmol/L may indicate ischemia rather than mitochondrial dysfunction, although the former is far less common than the latter [79]. In the setting of high LPR, pyruvate levels can therefore help with differential diagnosis. In a flow-dependent state, inadequate flow leads to anaerobic metabolism in which pyruvate is consumed, and because glucose delivery is also interrupted, pyruvate decreases further. In contrast, in primary mitochondrial dysfunction with hyperglycolysis, lactate production is large, but tissue pyruvate remains preserved or even slightly increased [12]. Differentiating between these two phenotypes of LPR elevation has therapeutic implications; for example, augmenting flow and oxygen delivery would be justified only when the biochemical pattern suggests flow dependency.
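The decision logic described above can be written as a small rule-based sketch. It assumes CMD lactate in mmol/L and pyruvate in µmol/L (hence the factor of 1000 in the ratio), uses LPR > 40 for metabolic crisis and pyruvate < 70 µmol/L for the ischemic pattern as discussed in the text, and returns hypothetical labels. It illustrates the reasoning only and is not a validated classifier; as the text stresses, interpretation requires the other modalities.

```python
def lactate_pyruvate_ratio(lactate_mmol_l, pyruvate_umol_l):
    """LPR from lactate (mmol/L) and pyruvate (umol/L).

    Multiplying lactate by 1000 converts it to umol/L so the ratio is unitless.
    """
    if pyruvate_umol_l <= 0:
        raise ValueError("pyruvate must be positive")
    return lactate_mmol_l * 1000.0 / pyruvate_umol_l

def classify_lpr_pattern(lpr, pyruvate_umol_l, lpr_threshold=40.0,
                         pyruvate_threshold_umol_l=70.0):
    """Hypothetical labels separating the two phenotypes of LPR elevation."""
    if lpr <= lpr_threshold:
        return "no metabolic crisis"
    if pyruvate_umol_l < pyruvate_threshold_umol_l:
        # Low pyruvate: consistent with flow-dependent (ischemic) crisis.
        return "ischemic pattern (low pyruvate)"
    # Preserved pyruvate: consistent with non-ischemic causes such as mitochondrial dysfunction.
    return "non-ischemic pattern (preserved pyruvate)"

# Hypothetical CMD samples: (lactate mmol/L, pyruvate umol/L).
for lac, pyr in [(3.0, 100.0), (4.0, 60.0), (6.0, 120.0)]:
    lpr = lactate_pyruvate_ratio(lac, pyr)
    print(round(lpr, 1), classify_lpr_pattern(lpr, pyr))
```

The `lpr_threshold` is a parameter because the text cites both 25 and 40 as outcome-relevant cut-offs; lowering it to 25 widens the set of samples flagged as abnormal.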

To avoid disturbances of energy metabolism, the brain requires an adequate glucose supply. Low CMD glucose concentrations have been associated with poor outcome [8], and consensus-based thresholds of 14.4 mg/dL or 18 mg/dL (0.8 or 1.0 mmol/L) have been proposed [71]. In the setting of hyperglycolysis, due to either increased conversion of glucose to lactate or shunting toward the pentose phosphate pathway, brain glucose may be low despite normal serum glucose [80]. In fact, a “normal” serum glucose target of 110–140 mg/dL may be associated with inadequate brain glucose [81, 82], and a brain/serum glucose ratio < 0.12 (expected normal ratio 0.4) has been associated with metabolic crisis [83]. Metabolic stressors that increase CMRO2 or provoke hypoxia/ischemia affect both brain glucose levels and the LPR (see example in Fig. 1). Most observational studies focus on CMD as the principal modality, but interpreting CMD information requires additional physiologic information to navigate the differential causes of changes in neurochemistry after TBI [84].
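CMD analyzers commonly report glucose in mmol/L, whereas the thresholds above are given in mg/dL, so unit handling matters when applying them. The sketch below converts units (using about 18 mg/dL per mmol/L for glucose) and computes the brain/serum ratio. The 18 mg/dL brain threshold and the 0.12 ratio threshold come from the text; the function names and the returned dictionary are hypothetical.

```python
MG_DL_PER_MMOL_L = 18.0  # approximate conversion factor for glucose (molar mass ~180 g/mol)

def mmol_l_to_mg_dl(mmol_l):
    """Convert a glucose concentration from mmol/L to mg/dL."""
    return mmol_l * MG_DL_PER_MMOL_L

def glucose_flags(brain_mg_dl, serum_mg_dl, brain_threshold_mg_dl=18.0,
                  ratio_threshold=0.12):
    """Flag low brain glucose and a low brain/serum glucose ratio (both in mg/dL)."""
    if serum_mg_dl <= 0:
        raise ValueError("serum glucose must be positive")
    ratio = brain_mg_dl / serum_mg_dl
    return {
        "ratio": ratio,
        "low_brain_glucose": brain_mg_dl < brain_threshold_mg_dl,
        "low_ratio": ratio < ratio_threshold,
    }

# Hypothetical case: serum glucose within a 110-140 mg/dL target, CMD glucose 0.7 mmol/L.
print(glucose_flags(mmol_l_to_mg_dl(0.7), 125.0))
```

The example reproduces the situation described in the text: a serum value inside the usual target range can coexist with both a low brain glucose and a low brain/serum ratio.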

Fig. 1 Ischemic vulnerability. A young adult was admitted after a rollover all-terrain vehicle accident with a post-resuscitation Glasgow Coma Scale score of 5T and a unilaterally nonreactive pupil. The preoperative axial CT (inset box, top) demonstrates a left subdural hematoma with midline shift. The postoperative CT demonstrates decompression after hemicraniectomy and evacuation of the subdural hematoma, and placement of a multimodality neuromonitoring bolt in the right frontal region. The MNM data shown on the left were obtained on post-trauma day 1; twelve hours of monitoring data are presented. Initially there is brain tissue hypoxia (PbtO2) and hyperemia (rCBF), with an increase in brain water content (K) suggesting the development of cerebral edema. An increase in systemic oxygen saturation (SpO2) initially resolves the brain tissue hypoxia. The lactate/pyruvate ratio (LPR) remains below 40, and pyruvate and brain glucose concentrations are within normal limits. Because of the decompressive hemicraniectomy, intracranial pressure (ICP) is relatively preserved. A neurological examination (red vertical line) results in an increase in cerebral blood flow, suggesting an increase in the cerebral metabolic rate of oxygen (CMRO2). PbtO2 remains preserved, but brain glucose decreases, pyruvate drops to < 70 µmol/L, and the LPR rises above 40, which meets the definition of ischemic metabolic crisis.

Management of patients with TBI based on CMD requires an understanding of the underlying causes of observed abnormal metabolic states, but existing interventional clinical trials have instead focused on providing alternative sources of fuel to the injured brain. A prospective, within-subjects clinical study of 13 patients with severe TBI compared tight (80–110 mg/dL) vs. liberal (120–150 mg/dL) glucose control and found higher cortical glucose metabolism in those with brain tissue hypoglycemia and increased LPR [85]. In another study, 24 patients received a sodium lactate infusion, rather than glucose, to alter CMD values. Lactate can be used by astrocytes or converted to pyruvate via lactate dehydrogenase; with this supplementation, interstitial brain glucose rose only in patients with mitochondrial dysfunction, who made up half of the studied cohort [86]. A prior study using this paradigm in 15 patients (two-thirds with mitochondrial dysfunction) found that sodium lactate was associated with increased brain glucose and decreased interstitial glutamate; the increased osmolarity of the infusion was also associated with decreased ICP [87]. Although multiple modalities were used in conjunction with CMD in this study, they served as surrogates of the safety and efficacy of sodium lactate rather than as targets for broader intervention. In a small study of 8 patients with TBI undergoing CMD, local disodium succinate infusion within the monitored tissue decreased LPR and increased pyruvate, suggesting that the alternative fuel bypassed portions of the oxidative phosphorylation machinery and allowed improved aerobic metabolism [88]. A subsequent study tested a tier-based clinical protocol to identify abnormal metabolic states using CMD in conjunction with other invasive monitoring, including ICP and PbtO2 [89].
In all, 33 patients were enrolled. Over the course of monitoring, mitochondrial dysfunction, defined as LPR > 25 in the absence of other multimodality abnormalities, occurred 15% more frequently than periods of elevated ICP and 19% more frequently than brain tissue hypoxia; in 5 patients, succinate administration reduced LPR (12%) and raised brain glucose (17%). Taken together, these prospective clinical studies suggest that mitochondrial dysfunction can be mitigated therapeutically, although most point out that multiple modalities should be used to rule out and treat other causes of abnormal metabolic states. Clinical trial evidence that CMD use improves clinical outcome is still lacking.

Electrocorticography

Scalp EEG monitoring is well established for patients with severe neurotrauma and is recommended in several guidelines, primarily for detecting nonconvulsive seizures, which occur in 2–33% of patients with moderate to severe TBI [90,91,92,93]. Translational science has long used electrodes placed directly on or within the brain, termed electrocorticography (ECoG), in animal models of diseases including TBI. In humans, the field of epilepsy pioneered the use of ECoG to refine the localization of seizure onset zones for surgery, based on its higher spatial resolution and higher signal-to-noise ratio. Translation of this approach into the ICU began with electrode strips placed in the operating room under direct visualization in patients undergoing craniotomy or craniectomy, which were used to record spreading depolarizations in human subjects for the first time; 11 of the 14 subjects had TBI [94]. ECoG strip recordings use a 6-contact flexible strip placed over ~5 cm of contiguous cortex, ideally with its most distal electrode near the area of injury, providing a spatial continuum across the injury penumbra [95]. Until recently, ECoG strip recordings remained a research tool in prospective observational studies, but some centers have begun to adopt this technique as standard care. By contrast, recordings from depth electrodes began as a practical clinical tool supported by retrospective observational research [96]. Depth electrodes consist of an 8-contact array spanning ~2 cm of the catheter and are placed through a burr hole, skewering the cortex, which is generally recorded directly by 2–3 of the available contacts. This technique is attractive because the electrode can be placed at the bedside and anchored using bolts that accommodate multiple catheters for MNM [58, 96].

After TBI, early seizures are those occurring within 7 days of injury. A recent systematic review and meta-analysis identified just 9 RCTs that tested whether antiseizure drugs (ASDs) prevent early seizures [97]. ASDs such as phenytoin or levetiracetam were effective at reducing the risk of early seizures after TBI (OR 0.42; 95% CI 0.21–0.82) but did not reduce the risk of post-traumatic epilepsy. However, these studies did not test the impact of ASD prophylaxis on clinical outcomes other than mortality. Existing observational studies are hampered by the lack of standardized use of continuous EEG (cEEG), which is required to diagnose nonconvulsive seizures. Nonconvulsive seizures are more common than clinical seizures after acute brain injury, and recognizing them is an important consideration in managing patients with TBI. In a post-hoc analysis of 251 patients with moderate to severe TBI enrolled in an RCT that required cEEG upon ICU admission, the incidence of electrographic seizures was only 2.6%. In this study, neither seizures nor seizure-associated ictal-interictal patterns were associated with 3-month functional outcome [92], although they may have affected cognitive recovery in survivors [98].

In contrast, ECoG recordings have demonstrated a much higher seizure burden. In a prospective study of 138 patients with surgical TBI using ECoG strip recordings, seizures were detected in 28% of patients, compared with only 13% of patients whose seizures were diagnosed by cEEG as part of standard care [99]. Depth ECoG studies have documented seizures in up to 61% of patients [75]. In both studies, 40–50% of ECoG seizures were not observed on scalp EEG, suggesting that seizures may be substantially underdiagnosed when relying on clinical examination or scalp EEG alone. No trial has tested whether managing acute seizures detected on scalp EEG or ECoG affects outcome.

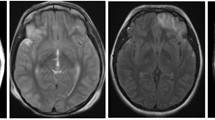

Spreading depolarizations (SDs) are large electrographic events within the cortex, consisting of a complete depolarization of neural tissue that spreads at approximately 3 mm/min across contiguous regions of cortex and manifests as a large-amplitude, negative slow potential change in the ECoG signal lasting ~120 s. SDs are both a marker and a mechanism of SBI, and their electrochemical and electrographic signatures are an order of magnitude larger than those observed during seizures [100, 101]. Tissue depolarization is the electrographic correlate of cytotoxic edema, which is observed as restricted diffusion on MRI. Traditionally, clinical EEG amplifiers have used AC-coupled hardware, which allows stable recording of EEG in the frequency range typically reviewed (0.5–70 Hz) at standard sampling frequencies. However, AC coupling also filters out the DC component of the signal, eliminating the slow potentials that occur during SD. Next-generation EEG amplifiers capable of recording DC signals have been developed, along with software filters that can display either centered DC or near-DC EEG data. ECoG recordings are the gold standard for detecting SDs, because slow potential changes dissipate in scalp EEG recordings. Depolarized tissue is unable to generate the post-synaptic potentials that produce higher-frequency EEG activity, so SDs are also associated with a depression of this activity. Because a large volume of cortex must be involved simultaneously for such a depression to be visible at the scalp, scalp EEG detects only about 40% of the spreading depressions observed using ECoG [102].
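To illustrate why AC coupling hides the slow potential of an SD while preserving ordinary EEG rhythms, the sketch below models an AC-coupled input as a single-pole (first-order RC) high-pass filter with a 0.5 Hz cutoff, matching the lower edge of the 0.5–70 Hz band above. It is a simplified sketch under stated assumptions: the half-sine waveform, the -10 mV amplitude, the 10 Hz test rhythm, and the helper names are all hypothetical, and real amplifier front ends are more complex than one RC stage.

```python
import math


def sd_slow_potential(t_s, onset_s=60.0, duration_s=120.0, amplitude_mv=-10.0):
    """Hypothetical SD-like negative slow potential: a half-sine dip lasting duration_s seconds."""
    if onset_s <= t_s <= onset_s + duration_s:
        return amplitude_mv * math.sin(math.pi * (t_s - onset_s) / duration_s)
    return 0.0


def ac_couple(samples, fs_hz, cutoff_hz=0.5):
    """First-order RC high-pass filter: a simplified model of an AC-coupled amplifier input."""
    if not samples:
        return []
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # filter time constant in seconds
    dt = 1.0 / fs_hz
    alpha = rc / (rc + dt)
    out = [samples[0]]
    for prev, cur in zip(samples, samples[1:]):
        # Discrete high-pass recurrence: y[n] = alpha * (y[n-1] + x[n] - x[n-1])
        out.append(alpha * (out[-1] + cur - prev))
    return out


def peak(values):
    """Largest absolute value in a sequence."""
    return max(abs(v) for v in values)


FS_HZ = 100.0
times = [n / FS_HZ for n in range(int(300 * FS_HZ))]  # 5 minutes of samples

# Slow potential as a DC-coupled amplifier would record it, and after AC coupling.
sd_raw = [sd_slow_potential(t) for t in times]
sd_ac = ac_couple(sd_raw, FS_HZ)

# A 10 Hz oscillation standing in for ordinary cortical activity (hypothetical 1 mV amplitude).
rhythm_raw = [math.sin(2 * math.pi * 10.0 * t) for t in times]
rhythm_ac = ac_couple(rhythm_raw, FS_HZ)

print(f"slow potential peak: DC {peak(sd_raw):.2f} mV, AC {peak(sd_ac):.3f} mV")
# Skip the first 5 s so the filter's start-up transient does not affect the comparison.
print(f"10 Hz rhythm peak:   DC {peak(rhythm_raw):.2f} mV, AC {peak(rhythm_ac[500:]):.2f} mV")
```

For a signal this slow, the high-pass stage behaves approximately like a differentiator scaled by its time constant, so the filtered slow potential shrinks to well under 1% of its true amplitude, whereas the 10 Hz rhythm passes with nearly full amplitude.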

Prospective, observational clinical studies using ECoG strip recordings have demonstrated a high burden of SDs in patients with sTBI requiring surgical decompression. In two multicenter studies including a total of 241 patients, SDs were detected in 56–60% of patients [103, 104]. SDs that occurred in clusters (defined as 3 SDs within a 2-h window [92]) or that involved cortex in which the EEG was already electrically suppressed (termed isoelectric SDs) were associated with a lack of motor recovery at hospital discharge and roughly double the odds of worse functional outcome at 6 months (OR 2.29, 95% CI 1.13–4.65; p = 0.02). Importantly, the underlying pathophysiology of SBI after sTBI links SDs and seizures. In a post-hoc analysis of patients enrolled in a prospective, observational study of SDs in surgical TBI, seizures were half as common as SDs but occurred in 43% of patients with clustered or isoelectric SDs, compared with 18% of patients with no SDs or only sporadic SDs. Seizures were often temporally associated with SDs, and the presence of seizures was independently associated with a worse EEG background and a lack of motor recovery at hospital discharge. However, seizures were not independently associated with worse 6-month functional outcome; rather, they modulated the association between SDs and outcome. Taken together, the co-occurrence of SDs and seizures suggests multiple avenues for therapeutic intervention; however, clinical decisions remain a matter of significant debate, and RCTs have been limited [105]. In a pilot randomized multiple-crossover study of 10 patients with subarachnoid hemorrhage or TBI, ketamine at doses > 1.15 mg/kg/h was associated with fewer SDs; below this dose, the odds of SD were 13.8-fold higher (95% CI 1.99–1000) [106]. Because ketamine can also be used to treat refractory seizures, this intervention is ripe for future study [107, 108]. Figure 2 illustrates the individual modalities discussed.
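The cluster criterion can be expressed as a sliding-window count over SD onset times. The sketch below is a minimal illustration, assuming that "3 SDs within a 2-h window" means at least 3 SD onsets inside any half-open 2-h window; the function names are hypothetical, and this is not the scoring procedure used in the cited studies. Isoelectric SDs are not covered, because classifying them requires the EEG background, not just onset times.

```python
from bisect import bisect_left


def clustered_sd_times(sd_times_h, min_count=3, window_h=2.0):
    """Return the SD onset times (hours) that belong to at least one cluster.

    A window [start, start + window_h) is anchored at each SD onset; any window
    containing min_count or more onsets flags every SD inside it. Anchoring at
    onsets is sufficient, because any qualifying window can be slid right to
    its first SD without losing the SDs it contains.
    """
    times = sorted(sd_times_h)
    flagged = set()
    for i, start in enumerate(times):
        # j is the index of the first onset at or after the end of the window.
        j = bisect_left(times, start + window_h, lo=i)
        if j - i >= min_count:
            flagged.update(range(i, j))
    return [times[k] for k in sorted(flagged)]


def has_sd_cluster(sd_times_h, min_count=3, window_h=2.0):
    """True if any 2-h window (by default) contains at least min_count SD onsets."""
    return bool(clustered_sd_times(sd_times_h, min_count, window_h))


# Hypothetical onset times in hours after monitoring began.
print(clustered_sd_times([1.0, 1.5, 2.5, 10.0, 20.0]))  # [1.0, 1.5, 2.5]
print(has_sd_cluster([0.0, 1.5, 3.0]))                  # False
```

Sorting first and using binary search keeps the check at O(n log n), which matters little for a single patient but keeps the logic simple when recordings span days.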

Neuromonitoring modalities. CMD, cerebral microdialysis; CPPOPT, optimal cerebral perfusion pressure; CSD, clustered spreading depolarization; ECoG, electrocorticography; ICP, intracranial pressure; ISD, isoelectric spreading depolarization; PbtO2, partial pressure of brain tissue oxygen; PRx, pressure reactivity index; SD, spreading depolarization

MNM Clinical Studies and Trials

Most of the clinical trial evidence for MNM management strategies to date has focused on single modalities. A narrative review of MNM studies [109] identified only 43 studies that used more than one modality specifically in patients with TBI. Of these, 28 (65%) were observational, and half came from the same research group. All but two used ICP monitoring, and four of the studies that used ICP monitoring combined it only with non-invasive optical or ultrasound-based modalities. Only three studies used MNM-guided management as the primary intervention. In one study of 113 patients admitted with sTBI, the intervention included both MNM and neurointensivist consultation; this programmatic change in clinical care resulted in improved 6-month functional outcome (OR 2.5, 95% CI 1.1–5.3; p = 0.02) [110]. An increasing number of studies with sophisticated trial designs are emerging to provide definitive evidence on whether individualized management based on MNM improves outcome in patients with sTBI.

ICP and PbtO2

BOOST-3

The Brain Oxygen Optimization in Severe TBI Phase 3 (BOOST-3) study is the follow-up RCT to the BOOST-2 trial. It is designed as a multicenter, randomized, blinded-endpoint comparative effectiveness study enrolling 1094 patients with sTBI across North America [111]. All enrolled patients receive both ICP and PbtO2 monitoring devices and are randomized to management guided by ICP alone (with clinicians blinded to PbtO2 values) or by combined ICP + PbtO2. Tier-based protocolized interventions target ICP < 22 mmHg and PbtO2 > 20 mmHg, and active monitoring and management are carried out for a minimum of 5 days. The primary outcome measure is the Glasgow Outcome Scale-Extended (GOSE), assessed at 180 (± 30) days by a blinded central examiner. Favorable outcome is defined according to a sliding dichotomy in which the definition of favorable varies with baseline severity (the predicted probability of poor outcome based on the IMPACT core model). As designed, BOOST-3 is powered to detect a 10% absolute difference in clinical outcome. In a supplemental study to BOOST-3, EEG will be added to ICP and PbtO2 monitoring to evaluate the relationships among these measures in the setting of seizures and periodic discharges. The aim of the prospective, observational ELECTRO-BOOST (ELECtroencephalography for Cerebral Trauma Recovery and Oxygenation) study is to understand the impact of seizures and periodic discharges on multimodality physiology and on outcome, in order to identify dynamic EEG biomarkers of SBI.
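A sliding dichotomy is easiest to see as a lookup: the baseline predicted risk selects a prognostic band, and each band has its own GOSE cut-point for "favorable." The sketch below assumes three bands with made-up boundaries and cut-points; these numbers are hypothetical placeholders, not the BOOST-3 protocol values, and the names `PrognosticBand` and `is_favorable` are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrognosticBand:
    max_risk: float          # inclusive upper bound on predicted P(poor outcome)
    min_favorable_gose: int  # lowest GOSE score counted as favorable in this band


# HYPOTHETICAL bands for illustration only; not the BOOST-3 protocol values.
EXAMPLE_BANDS = (
    PrognosticBand(max_risk=1 / 3, min_favorable_gose=5),  # best baseline prognosis
    PrognosticBand(max_risk=2 / 3, min_favorable_gose=4),
    PrognosticBand(max_risk=1.0, min_favorable_gose=3),    # worst baseline prognosis
)


def is_favorable(gose, predicted_poor_risk, bands=EXAMPLE_BANDS):
    """Sliding dichotomy: the GOSE cut-point depends on baseline predicted risk."""
    if not 1 <= gose <= 8:
        raise ValueError("GOSE ranges from 1 to 8")
    if not 0.0 <= predicted_poor_risk <= 1.0:
        raise ValueError("predicted risk must be a probability")
    for band in bands:  # bands are ordered from best to worst prognosis
        if predicted_poor_risk <= band.max_risk:
            return gose >= band.min_favorable_gose
    raise ValueError("predicted risk not covered by any band")


print(is_favorable(4, 0.20))  # False: good prognosis, so GOSE 4 falls short
print(is_favorable(4, 0.80))  # True: poor prognosis, so GOSE 4 exceeds expectations
```

Under these example bands, the same GOSE score can count as unfavorable for a patient expected to do well and favorable for a patient expected to do poorly, which is the intent of tying the dichotomy to baseline severity.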

BONANZA

The Brain Oxygen Neuromonitoring in Australia and New Zealand Assessment (BONANZA) trial aims to improve outcomes after sTBI and to reduce long-term healthcare costs. It will enroll 860 patients with sTBI into a pragmatic, patient-centered RCT of neuro-intensive care management based on early brain tissue oxygen optimization [112]. Like BOOST-3, BONANZA monitors PbtO2 in a blinded fashion in the control arm, allowing cumulative hypoxic burden to be compared between groups.

OXY-TC

The Impact of Early Optimization of Brain Oxygenation on Neurological Outcome after Severe Traumatic Brain Injury (OXY-TC) study is a multicenter, open-label, randomized controlled superiority trial with two parallel groups and a target enrollment of 300 patients with sTBI in France [113]. Invasive probes must be in place within 16 h of trauma onset. Patients are randomly assigned to management guided by ICP alone or by ICP + PbtO2. Unlike BOOST-3 and BONANZA, this trial does not place both probes in all patients. The ICP group is managed according to international guidelines to maintain ICP ≤ 20 mmHg; however, no specific ICP-lowering protocol is dictated, and the choice of treatment is left to clinical discretion. The ICP + PbtO2 group is managed to maintain both ICP ≤ 20 mmHg and PbtO2 ≥ 20 mmHg. The primary outcome is GOSE at 6 months, and the trial is powered to detect a 30% relative reduction in unfavorable outcome. Secondary outcomes include quality of life, mortality rate, therapeutic intensity, and the incidence of adverse events during the first 5 days.
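Relative and absolute effect sizes are easy to conflate when comparing trials such as OXY-TC (30% relative reduction) and BOOST-3 (10% absolute difference). The sketch below converts a relative reduction into an implied treated-arm rate and applies the textbook normal-approximation sample-size formula for two proportions. The 50% control-arm rate is a hypothetical assumption chosen only for illustration, and neither trial's actual sample-size calculation (which would account for design details such as the sliding dichotomy and dropout) is reproduced here.

```python
import math
from statistics import NormalDist


def treated_rate_from_relative_reduction(control_rate, relative_reduction):
    """Event rate in the treated arm implied by a relative risk reduction."""
    return control_rate * (1.0 - relative_reduction)


def n_per_arm(p_control, p_treated, alpha=0.05, power=0.80):
    """Normal-approximation sample size per arm for comparing two proportions (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_treated) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_treated * (1 - p_treated))
    ) ** 2
    return math.ceil(numerator / (p_control - p_treated) ** 2)


# HYPOTHETICAL control-arm rate of unfavorable outcome, for illustration only.
p_control = 0.50
p_relative = treated_rate_from_relative_reduction(p_control, 0.30)  # 30% relative reduction
p_absolute = p_control - 0.10                                       # 10-point absolute reduction

print(f"30% relative: {p_control:.2f} -> {p_relative:.2f}, ~{n_per_arm(p_control, p_relative)} per arm")
print(f"10% absolute: {p_control:.2f} -> {p_absolute:.2f}, ~{n_per_arm(p_control, p_absolute)} per arm")
```

With this assumed baseline, a 30% relative reduction corresponds to a 15-point absolute reduction, which requires fewer patients than a 10-point absolute reduction; with a different baseline rate, the comparison shifts accordingly.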

HOBIT

An alternative way of increasing PbtO2 is hyperbaric oxygen therapy (HBO2), which raises PaO2 and thus increases the O2 available for diffusion into brain tissue [114]. It is currently unclear whether a certain PbtO2 threshold must be reached to improve cerebral oxidative metabolism (preliminary evidence suggests this threshold may be ≥ 200 mmHg) [115]. Enhanced O2 diffusion may shift metabolism from anaerobic to aerobic and allow mitochondria to restore depleted cellular energy stores. Evidence of such improvement has been shown as increased CMRO2 following HBO2 treatment, in contrast to normobaric oxygen [116, 117]. These observations motivated the ongoing Hyperbaric Oxygen Brain Injury Treatment (HOBIT) trial [118], a multicenter North American phase II adaptive RCT with a target enrollment of 200 patients with sTBI. Its principal aims are to identify the optimal combination of HBO2 treatment parameters (pressure, frequency, and intervening normobaric hyperoxia) and to determine whether that combination has a > 50% probability of improving 6-month GOSE in a subsequent phase III RCT.

Electrophysiology and Multimodal ICU Management

INDICT

Clinical and translational research on both the causes and the treatment of SDs has resulted in a convergence of potential interventions that may be applied in the clinical setting. Studies have demonstrated that low glucose levels, hypoperfusion (low ABP or CPP), and brain tissue hypoxia lower the threshold for the development of SDs [119]. In addition, increases in metabolic demand, such as fever [120] or functional activation of tissue (e.g., stimulation of eloquent areas of cortex), increase the risk of SDs [121, 122]. Pharmacologic blockade of NMDA currents blocks or reduces the magnitude of SDs in multiple translational models, and in an observational study of patients with various disease processes, ketamine use was associated with reduced odds of SDs (OR 0.38, 95% CI 0.18–0.79) [123].

Given the association between SDs and clinical outcomes and the availability of practical intervention targets that may reduce their burden, an RCT aimed at Improving Neurotrauma by Depolarization Inhibition with Combination Therapy (INDICT; NCT05337618) [124] began enrollment in 2022. This study includes patients undergoing surgical decompression for sTBI with an ECoG strip electrode placed as part of standard care. INDICT aims to enroll 72 patients, of whom approximately 60% are expected to have SDs; only these patients will be randomized 1:1 to either a standard ICU care arm or a tier-based intervention arm. The study is particularly novel in targeting a specific pathomechanism (SD) in a population enriched for this pathology, by randomizing only patients who exhibit SDs after enrollment. Tier-based management first includes optimization of CPP, management of ICP and PbtO2, maintenance of adequate serum glucose levels, and avoidance of fever. If SDs continue to occur, these parameters are managed more aggressively, and ketamine is initiated at a dose of 1 mg/kg/h. For patients who are intubated and continue to exhibit SDs despite these interventions, ketamine is increased up to a maximum of 4 mg/kg/h until SDs resolve. Patients then step down through the management tiers as long as SDs do not recur. This phase IIb study aims to determine the feasibility of a real-time, ECoG-guided management strategy to reduce SDs, improve cerebral physiology, and ultimately reduce SBI. INDICT is powered to detect a 50% reduction in SD burden and will follow patients for 6 months to assess functional, cognitive, and quality-of-life outcomes.
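The escalation and step-down logic described for the intervention arm can be sketched as a small state machine. This is a simplified illustration, not a clinical protocol: the discrete "observation window," the one-tier-per-SD-free-window step-down rule, and the function names are hypothetical assumptions, and only the tier contents and the 1 and 4 mg/kg/h ketamine figures come from the description above.

```python
# Ketamine dose ceiling (mg/kg/h) per tier, from the tier descriptions above; tier 1 uses no ketamine.
KETAMINE_CEILING_MG_KG_H = {1: 0.0, 2: 1.0, 3: 4.0}


def next_tier(current_tier, sd_recurred, intubated):
    """Return the management tier for the next (hypothetical) observation window.

    Tier 1: physiologic optimization (CPP, ICP, PbtO2, glucose, fever).
    Tier 2: more aggressive optimization plus ketamine at 1 mg/kg/h.
    Tier 3: ketamine increased up to 4 mg/kg/h (intubated patients only).
    """
    if current_tier not in KETAMINE_CEILING_MG_KG_H:
        raise ValueError("tier must be 1, 2, or 3")
    if sd_recurred:
        ceiling = 3 if intubated else 2
        # Escalate one tier at a time; never move down while SDs persist.
        return current_tier + 1 if current_tier < ceiling else current_tier
    # Assumed step-down rule: drop one tier after each SD-free window.
    return max(current_tier - 1, 1)


def simulate(sd_by_window, intubated, start_tier=1):
    """Apply next_tier over a sequence of windows (True = SD observed in that window)."""
    tiers, tier = [], start_tier
    for sd_recurred in sd_by_window:
        tier = next_tier(tier, sd_recurred, intubated)
        tiers.append(tier)
    return tiers


pattern = [True, True, True, False, False]
print(simulate(pattern, intubated=True))   # [2, 3, 3, 2, 1]
print(simulate(pattern, intubated=False))  # [2, 2, 2, 1, 1]
```

Encoding the tiers explicitly makes the one protocol-specific constraint visible: a non-intubated patient with persistent SDs stays at tier 2 rather than escalating to the higher ketamine ceiling.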

Limitations and Considerations for MNM Trial Design

Open Questions in Interpretation of MNM Data

Indices such as PbtO2 and LPR are derived from regional probes that sample a small volume of brain tissue. Depending on the location of the probe (e.g., proximity to a contusion or a subdural hematoma) and the nature of the injury (e.g., diffuse vs. focal), interpretation of the measurements and the recommended actions may differ considerably. Caution is also needed when combining regional with global data, as when an external ventricular drain is used to derive ICP/CPP/PRx/CPPOPT. This is salient because most interventions have systemic rather than localized effects (a notable exception being the focal succinate infusion mentioned earlier [89]). A further problem is regional heterogeneity, which could result in conflicting intervention plans. For instance, different parts of the brain may demonstrate divergent and opposing physiology, such as coexisting hyperemia and ischemia [66]. Figure 3 illustrates the challenges these create for clinical decision-making. MNM also generates continuous data that evolve over time, requiring attention to trends and changes that may prompt a re-evaluation of management strategy or real-time treatment decisions. However, there is little evidence to guide an understanding of how physiologic patterns change over time, for example, possible transitions from macrovascular to microvascular ischemic mechanisms, or the duration and reversibility of post-ischemic mitochondrial dysfunction. The ability to detect these changes depends on the resolution and frequency of measurements: intraparenchymal monitors may provide high-frequency continuous data, whereas CMD is performed hourly and ultrasonography or imaging may be performed daily or less often. Such differences in time resolution may interfere with the interpretation of observed patterns and lead to misguided action plans. A third open question is that improving pathophysiology under MNM guidance does not guarantee improved patient outcomes.
The point is not to “throw the baby out with the bathwater.” MNM data should primarily help by providing insight into brain tissue physiology and into patterns that suggest SBI or other changes in patient state. However, global functional outcomes are heavily influenced by factors that may remain unrelated to, or unmodifiable by, MNM-guided care, regardless of its quality.
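One practical consequence of mismatched time resolution is that brief events in a high-frequency signal can be diluted when it is summarized to match an hourly CMD sample. The sketch below averages a minute-by-minute PbtO2 series over hypothetical hourly microdialysis collection intervals. All values, interval boundaries, and the helper name `average_over_intervals` are illustrative assumptions; the sketch ignores any lag between collection and analysis of a microdialysis sample.

```python
from statistics import mean


def average_over_intervals(samples, intervals):
    """Mean of (time_h, value) samples falling in each half-open [start_h, end_h) interval.

    Returns one value per interval, or None if an interval contains no samples.
    """
    means = []
    for start_h, end_h in intervals:
        inside = [value for t_h, value in samples if start_h <= t_h < end_h]
        means.append(mean(inside) if inside else None)
    return means


# HYPOTHETICAL minute-by-minute PbtO2 (mmHg) over 3 h: 25 mmHg except a 10-min dip to 8 mmHg.
pbto2 = [(minute / 60, 8.0 if 70 <= minute < 80 else 25.0) for minute in range(180)]

# HYPOTHETICAL hourly microdialysis collection intervals (hours since start).
cmd_intervals = [(0.0, 1.0), (1.0, 2.0), (2.0, 3.0)]

hourly_pbto2 = average_over_intervals(pbto2, cmd_intervals)
print("lowest minute value:", min(value for _, value in pbto2))
print("hourly means:", [round(v, 1) for v in hourly_pbto2])
```

In this made-up example, a 10-minute episode well below 20 mmHg shows up only as an hourly mean of about 22 mmHg, so pairing it with an hourly LPR would hide the very episode that might explain a metabolic change.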

Challenges in clinical decision-making. A young adult admitted after a motorcycle collision with pre-hospital hypoxia and a post-resuscitation Glasgow Coma Scale score of 6T. Pre-monitoring axial CT is shown in the inset box (top) and demonstrates multifocal superficial and deep contusions with global cerebral edema. A multimodality neuromonitoring bolt and an external ventricular drain were placed in the right frontal region (bottom). The MNM data shown on the left were obtained on post-trauma day 2. Twelve hours of monitoring data are presented. During the first 6 h, regional cerebral blood flow (rCBF) measurements are consistent with hyperemia (black dashed box), which contributes to elevated intracranial pressure (ICP), a relatively low cerebral perfusion pressure (CPP), and loss of autoregulatory function represented by an elevation in the pressure reactivity index (PRx; black). There is also brain tissue hypoxia (PbtO2). In this case, the brain tissue hypoxia and elevations in ICP could be related either to hyperemia and microvascular shunting with diffusion hypoxia or to a CPP below the lower limit of autoregulation causing vasodilatation and elevated cerebral blood volume. A decision was made to begin vasopressors (red vertical line). While increasing the CPP improved autoregulation and increased PbtO2, it also drove an increase in hyperemia. A ventilatory change subsequently produced a sudden drop in end-tidal CO2 (ETCO2; black arrow), resulting in a sharp decrease in ICP and resolution of hyperemia, suggesting the development of vasoconstriction; PbtO2 was preserved. In this case, adjusting two different parameters (increasing CPP and decreasing ETCO2) improved two different target parameters (PbtO2 and ICP).

Cautionary Tale of Pulmonary Artery Catheters: Clinical Trials of Diagnostic Tools

The pulmonary artery catheter (PAC) was developed in the 1970s to measure cardiac output and pulmonary artery pressures and to infer left heart diastolic filling pressures [125]. The multimodal assessment of cardiac function it afforded was used to optimize preload and systemic vascular resistance in an individualized way in selected ICU patients. Use of the PAC was associated with changes in management in 80% of patients [126]. However, the PAC required interpretation of waveforms, multiple measurements, and the ability to infer specific relationships between cardiopulmonary pressures and volumes. The complexity of using these data to make correct management decisions is underscored by a study that found only 65% agreement with expert interpretation [127]. The use of this diagnostic tool was subsequently found to have no clear impact on survival in ICU patients, in part because of the lack of a common strategy to guide its use and misinterpretation of the data it provided [128].

Likewise, brain-focused multimodality monitoring has evolved from its beginnings in the 1960s, when external ventricular drainage catheters were used to continuously measure ICP and to interpret intracranial waveforms [129]. Today, a plethora of devices are available to measure pressure, flow, oxygenation, metabolism, and function within the brain tissue itself, and these measures are tightly related to the systemic physiology measured routinely in the ICU, including cardiac telemetry, arterial blood pressure, and peripheral oxygen saturation. These data are similarly complex, and their interpretation often depends on local expertise and bedside evaluation. No standards exist for which combinations of measurements should be used in clinical care, how best to record or display these data, or how measurements should be interpreted over time [11].

Existing clinical trials targeting single modalities with simple thresholds, such as ICP or CPP alone, have been largely unsuccessful [7, 35]. Multimodality monitoring offers the potential to refine and individualize critical care management, much as the PAC promised to do. Yet clinical trials that specifically focus on using MNM data to improve outcome after TBI risk a similar fate if they are not carefully designed (a risk compounded by the difficulty of enrolling large numbers of patients in MNM studies). Pitfalls include (a) use in broad populations that are not enriched for the pathology targeted for intervention, (b) mistimed monitoring, whether too early or too late relative to injury, (c) incomplete measurement of potentially useful parameters, (d) misinterpretation of what the data represent for a given patient, and (e) use of interventions that target the manifestations of a pathology rather than its underlying cause, for instance, treating elevated ICP with hyperosmotic therapy when CSF diversion might be warranted.

Precision medicine relies on individual variability to tailor management and physiology-based interventions rather than broadly applying a single intervention to an at-risk population. The BOOST-3 study (NCT03754114) provides an excellent example of this form of trial design, targeting combinations of ICP and PbtO2 abnormalities according to their potential underlying cause. Clinical trial design has also focused on populations enriched for specific pathology, such as SDs, backed by decades of evidence for their role in evolving secondary brain injury. The INDICT study not only focuses on a specific physiology but also leverages the tier-based, multi-intervention approach of BOOST-3 to individualize management within the context of the heterogeneity of TBI. Finally, studies are leveraging the large volumes of data generated by MNM, using easy-to-understand visualizations of analytic outputs to enable real-time management decisions specific to a given patient at a given time. The COGiTATE trial (NCT02982122) has demonstrated the feasibility of such strategies.

A common theme that emerges from these and other novel study designs for patients with TBI is not the use of multimodality monitoring per se to improve outcome. Rather, multimodality monitoring is being used to identify specific endophenotypes within the TBI population, to provide unequivocal definitions rather than relying on clinician interpretation, and to prescribe flexible interventions. There is recognition across the TBI research community that no single intervention or target is likely to apply across any population large enough to be powered for efficacy on a specific outcome. Strategies to standardize approaches and to interpret multimodality monitoring information more rigorously will allow identification of new, clinically relevant endophenotypes based on an enhanced understanding of the pathophysiology underlying SBI. In doing so, MNM promises to inform the next generation of clinical trials and avoid the PAC trap.

References

GBD 2016 Traumatic Brain Injury and Spinal Cord Injury Collaborators. Global, regional, and national burden of traumatic brain injury and spinal cord injury, 1990–2016: a systematic analysis for the Global Burden of Disease Study 2016. Lancet Neurol. 2019;18(1):56–87.

Dewan MC, Rattani A, Gupta S, et al. Estimating the global incidence of traumatic brain injury. J Neurosurg. 2018;1:1–18.

Bragge P, Synnot A, Maas AI, et al. A state-of-the-science overview of randomized controlled trials evaluating acute management of moderate-to-severe traumatic brain injury. J Neurotrauma. 2016;33:1461–78.

Thornhill S, Teasdale GM, Murray GD, et al. Disability in young people and adults one year after head injury: prospective cohort study. BMJ. 2000;320(7250):1631–5.

Chesnut RM, Marshall LF, Klauber MR, et al. The role of secondary brain injury in determining outcome from severe head injury. J Trauma. 1993;34(2):216–22.

van Santbrink H, Maas AI, Avezaat CJ. Continuous monitoring of partial pressure of brain tissue oxygen in patients with severe head injury. Neurosurgery. 1996;38(1):21–31.

Robertson CS, Valadka AB, Hannay HJ, et al. Prevention of secondary ischemic insults after severe head injury. Crit Care Med. 1999;27(10):2086–95.

Zeiler FA, Thelin EP, Helmy A, et al. A systematic review of cerebral microdialysis and outcomes in TBI: relationships to patient functional outcome, neurophysiologic measures, and tissue outcome. Acta Neurochir (Wien). 2017;159:2245–73.

Kochanek PM, Clark RS, Ruppel RA, et al. Biochemical, cellular, and molecular mechanisms in the evolution of secondary damage after severe traumatic brain injury in infants and children: lessons learned from the bedside. Pediatr Crit Care Med. 2000;1(1):4–19.

Lazaridis C. Cerebral oxidative metabolism failure in traumatic brain injury: “brain shock.” J Crit Care. 2017;37:230–3.

Foreman B, Ngwenya LB. Sustainability of applied intracranial multimodality neuromonitoring after severe brain injury. World Neurosurg. 2019;124:378–80.

Lazaridis C. Brain shock-toward pathophysiologic phenotyping in traumatic brain injury. Crit Care Explor. 2022;4(7):e0724.

Lazaridis C, Robertson CS. The role of multimodal invasive monitoring in acute traumatic brain injury. Neurosurg Clin N Am. 2016;27(4):509–17.

Carney N, Totten AM, O’Reilly C, et al. Guidelines for the management of severe traumatic brain injury, fourth edition. Neurosurgery. 2017;80:6–15.

Hawryluk GWJ, Aguilera S, Buki A, et al. A management algorithm for patients with intracranial pressure monitoring: the Seattle International Severe Traumatic Brain Injury Consensus Conference (SIBICC). Intensive Care Med. 2019;45:1783–94.

Cnossen MC, Huijben JA, van der Jagt M, et al. CENTER-TBI Investigators: variation in monitoring and treatment policies for intracranial hypertension in traumatic brain injury: a survey in 66 neurotrauma centers participating in the CENTER-TBI study. Crit Care. 2017;21:233.

Sivakumar S, Taccone FS, Rehman M, et al. Hemodynamic and neuro-monitoring for neurocritically ill patients: an international survey of intensivists. J Crit Care. 2017;39:40–7.

Alvarado-Dyer R, Aguilera S, Chesnut RM, et al. Managing severe traumatic brain injury across resource settings: Latin American perspectives. Neurocrit Care. 2023;12:1–6.

Godoy DA, Carrizosa J, Aguilera S, et al. Latin America Brain Injury Consortium (LABIC) members. Current practices for intracranial pressure and cerebral oxygenation monitoring in severe traumatic brain injury: a Latin American survey. Neurocrit Care. 2023;38(1):171–7.

Sorrentino E, Diedler J, Kasprowicz M, et al. Critical thresholds for cerebrovascular reactivity after traumatic brain injury. Neurocrit Care. 2012;16(2):258–66.

Marmarou A, Anderson RL, Ward JD, et al. Impact of ICP instability and hypotension on outcome in patients with severe head trauma. J Neurosurg. 1991;75(Suppl):S59–66.

Stocchetti N, Zanaboni C, Colombo A, et al. Refractory intracranial hypertension and “second tier” therapies in traumatic brain injury. Intensive Care Med. 2008;34:461–7.

Stocchetti N, Maas AI. Traumatic intracranial hypertension. N Engl J Med. 2014;370(22):2121–30.

Patel HC, Menon DK, Tebbs S, et al. Specialist neurocritical care and outcome from head injury. Intensive Care Med. 2002;28(5):547–53.

Bulger EM, Nathens AB, Rivara FP, et al. Brain Trauma Foundation. Management of severe head injury: institutional variations in care and effect on outcome. Crit Care Med. 2002;30(8):1870–6.

Elf K, Nilsson P, Enblad P. Outcome after traumatic brain injury improved by an organized secondary insult program and standardized neurointensive care. Crit Care Med. 2002;30(9):2129–34.

Fakhry SM, Trask AL, Waller MA, et al. Management of brain-injured patients by an evidence-based medicine protocol improves outcomes and decreases hospital charges. J Trauma. 2004;56(3):492–9; discussion 499–500.

Alali AS, Fowler RA, Mainprize TG, et al. Intracranial pressure monitoring in severe traumatic brain injury: results from the American College of Surgeons Trauma Quality Improvement Program. J Neurotrauma. 2013;30:1737–46.

Rønning P, Helseth E, Skaga NO, et al. The effect of ICP monitoring in severe traumatic brain injury: a propensity score-weighted and adjusted regression approach. J Neurosurg. 2018;131(6):1896–904.

Cremer OL, van Dijk GW, van Wensen E, et al. Effect of intracranial pressure monitoring and targeted intensive care on functional outcome after severe head injury. Crit Care Med. 2005;33(10):2207–13.

Shafi S, Diaz-Arrastia R, Madden C, et al. Intracranial pressure monitoring in brain-injured patients is associated with worsening of survival. J Trauma. 2008;64(2):335–40.

Ahl R, Sarani B, Sjolin G, et al. The association of intracranial pressure monitoring and mortality: a propensity score-matched cohort of isolated severe blunt traumatic brain injury. J Emerg Trauma Shock. 2019;12(1):18–22.

Khormi YH, Senthilselvan A, O'Kelly C, et al. Adherence to brain trauma foundation guidelines for intracranial pressure monitoring in severe traumatic brain injury and the effect on outcome: a population-based study. Surg Neurol Int. 2020;11:118.

Delaplain PT, Grigorian A, Lekawa M, et al. Intracranial pressure monitoring associated with increased mortality in pediatric brain injuries. Pediatr Surg Int. 2020;36(3):391–8.

Chesnut RM, Temkin N, Carney N, et al. Global Neurotrauma Research Group. A trial of intracranial-pressure monitoring in traumatic brain injury. N Engl J Med. 2012;367(26):2471–81.

Hawryluk GWJ, Nielson JL, Huie JR, et al. Analysis of normal high-frequency intracranial pressure values and treatment threshold in neurocritical care patients: insights into normal values and a potential treatment threshold. JAMA Neurol. 2020;77(9):1150–8.

Lazaridis C, Desai M, Damoulakis G, et al. Intracranial pressure threshold heuristics in traumatic brain injury: one, none, many! Neurocrit Care. 2020;32(3):672–6.

Chesnut RM, Videtta W. Situational intracranial pressure management: an argument against a fixed treatment threshold. Crit Care Med. 2020;48(8):1214–6.

Lazaridis C, Goldenberg FD. Intracranial pressure in traumatic brain injury: from thresholds to heuristics. Crit Care Med. 2020;48(8):1210–3.

Czosnyka M, Pickard JD, Steiner LA. Principles of intracranial pressure monitoring and treatment. Handb Clin Neurol. 2017;140:67–89.

Czosnyka M, Smielewski P, Kirkpatrick P, et al. Continuous assessment of the cerebral vasomotor reactivity in head injury. Neurosurgery. 1997;41(1):11–7; discussion 17–9.

Brady KM, Lee JK, Kibler KK, et al. Continuous measurement of autoregulation by spontaneous fluctuations in cerebral perfusion pressure: comparison of 3 methods. Stroke. 2008;39(9):2531–7.

Steiner LA, Czosnyka M, Piechnik SK, et al. Continuous monitoring of cerebrovascular pressure reactivity allows determination of optimal cerebral perfusion pressure in patients with traumatic brain injury. Crit Care Med. 2002;30:733–8.

Aries MJ, Czosnyka M, Budohoski KP, et al. Continuous determination of optimal cerebral perfusion pressure in traumatic brain injury. Crit Care Med. 2012;40(8):2456–63.

Lazaridis C, DeSantis SM, Smielewski P, et al. Patient-specific thresholds of intracranial pressure in severe traumatic brain injury. J Neurosurg. 2014;120(4):893–900.

Zeiler FA, Ercole A, Cabeleira M, et al. High Resolution ICU Sub-Study Participants and Investigators. Patient-specific ICP epidemiologic thresholds in adult traumatic brain injury: a CENTER-TBI validation study. J Neurosurg Anesthesiol. 2021;33(1):28–38.

Lazaridis C, Smielewski P, Menon DK, et al. Patient-specific thresholds and doses of intracranial hypertension in severe traumatic brain injury. Acta Neurochir Suppl. 2016;122:117–20.

Depreitere B, Citerio G, Smith M, et al. Cerebrovascular autoregulation monitoring in the management of adult severe traumatic brain injury: a Delphi consensus of clinicians. Neurocrit Care. 2021;34(3):731–8.

Lazaridis C. Cerebral autoregulation: the concept the legend the promise. Neurocrit Care. 2021;34(3):717–9.

Tas J, Beqiri E, van Kaam RC, et al. Targeting autoregulation-guided cerebral perfusion pressure after traumatic brain injury (COGiTATE): a feasibility randomized controlled clinical trial. J Neurotrauma. 2021;38(20):2790–800.

Lazaridis C, Rusin CG, Robertson CS. Secondary brain injury: predicting and preventing insults. Neuropharmacology. 2019;145(Pt B):145–52.

Myers RB, Lazaridis C, Jermaine CM, et al. Predicting intracranial pressure and brain tissue oxygen crises in patients with severe traumatic brain injury. Crit Care Med. 2016;44(9):1754–61.

McNamara R, Meka S, Anstey J, et al. Development of traumatic brain injury associated intracranial hypertension prediction algorithms: a narrative review. J Neurotrauma. 2023;40(5–6):416–34.

Carra G, Güiza F, Depreitere B, et al. Prediction model for intracranial hypertension demonstrates robust performance during external validation on the CENTER-TBI dataset. Intensive Care Med. 2021;47(1):124–6.

Lazaridis C, Ajith A, Mansour A, et al. Prediction of intracranial hypertension and brain tissue hypoxia utilizing high-resolution data from the BOOST-II clinical trial. Neurotrauma Rep. 2022;3(1):473–8.

Carra G, Güiza F, Piper I, et al. Development and external validation of a machine learning model for the early prediction of doses of harmful intracranial pressure in patients with severe traumatic brain injury. J Neurotrauma. 2023;40(5–6):514–22.

Clark LC. Monitor and control of blood and tissue oxygen tensions. Trans Am Soc Artif Intern Organs. 1956;2:41–5.

Foreman B, Ngwenya LB, Stoddard E, et al. Safety and reliability of bedside, single burr hole technique for intracranial multimodality monitoring in severe traumatic brain injury. Neurocrit Care. 2018;29(3):469–80.

Ngwenya LB, Burke JF, Manley GT. Brain tissue oxygen monitoring and the intersection of brain and lung: a comprehensive review. Respir Care. 2016;61(9):1232–44.

Haitsma IK, Maas AI. Advanced monitoring in the intensive care unit: brain tissue oxygen tension. Curr Opin Crit Care. 2002;8(2):115–20.

Nortje J, Gupta AK. The role of tissue oxygen monitoring in patients with acute brain injury. Br J Anaesth. 2006;97(1):95–106.

Gupta AK, Hutchinson PJ, Fryer T, et al. Measurement of brain tissue oxygenation performed using positron emission tomography scanning to validate a novel monitoring method. J Neurosurg. 2002;96(2):263–8.

Menon DK, Coles JP, Gupta AK, et al. Diffusion limited oxygen delivery following head injury. Crit Care Med. 2004;32(6):1384–90.

Diringer MN, Aiyagari V, Zazulia AR, et al. Effect of hyperoxia on cerebral metabolic rate for oxygen measured using positron emission tomography in patients with acute severe head injury. J Neurosurg. 2007;106(4):526–9.

Rosenthal G, Hemphill JC 3rd, Sorani M, et al. Brain tissue oxygen tension is more indicative of oxygen diffusion than oxygen delivery and metabolism in patients with traumatic brain injury. Crit Care Med. 2008;36(6):1917–24.

Launey Y, Fryer TD, Hong YT, et al. Spatial and temporal pattern of ischemia and abnormal vascular function following traumatic brain injury. JAMA Neurol. 2020;77(3):339–49.

Valadka AB, Gopinath SP, Contant CF, et al. Relationship of brain tissue PO2 to outcome after severe head injury. Crit Care Med. 1998;26(9):1576–81.

Nangunoori R, Maloney-Wilensky E, Stiefel M, et al. Brain tissue oxygen-based therapy and outcome after severe traumatic brain injury: a systematic literature review. Neurocrit Care. 2012;17(1):131–8.

van den Brink WA, van Santbrink H, Steyerberg EW, et al. Brain oxygen tension in severe head injury. Neurosurgery. 2000;46(4):868–76; discussion 876–8.

Okonkwo DO, Shutter LA, Moore C, et al. Brain oxygen optimization in severe traumatic brain injury phase-II: a phase II randomized trial. Crit Care Med. 2017;45(11):1907–14.

Hutchinson PJ, Jalloh I, Helmy A, et al. Consensus statement from the 2014 International Microdialysis Forum. Intensive Care Med. 2015;41:1517–28.

Le Roux P, Menon DK, Citerio G, et al. Consensus summary statement of the International Multidisciplinary Consensus Conference on Multimodality Monitoring in Neurocritical Care: a statement for healthcare professionals from the Neurocritical Care Society and the European Society of Intensive Care Medicine. Neurocrit Care. 2014;21 Suppl 2:1.

Venturini S, Bhatti F, Timofeev I, et al. Microdialysis-based classifications of abnormal metabolic states after traumatic brain injury: a systematic review of the literature. J Neurotrauma. 2023;40:195–209.

Vespa P, Bergsneider M, Hattori N, et al. Metabolic crisis without brain ischemia is common after traumatic brain injury: a combined microdialysis and positron emission tomography study. J Cereb Blood Flow Metab. 2005;25:763–74.

Vespa P, Tubi M, Claassen J, et al. Metabolic crisis occurs with seizures and periodic discharges after brain trauma. Ann Neurol. 2016;79:579–90.

Hinzman JM, Wilson JA, Mazzeo AT, et al. Excitotoxicity and metabolic crisis are associated with spreading depolarizations in severe traumatic brain injury patients. J Neurotrauma. 2016;33:1775–83.

Marcoux J, McArthur DA, Miller C, et al. Persistent metabolic crisis as measured by elevated cerebral microdialysis lactate-pyruvate ratio predicts chronic frontal lobe brain atrophy after traumatic brain injury. Crit Care Med. 2008;36:2871–7.

Bernini A, Magnoni S, Miroz J, et al. Cerebral metabolic dysfunction at the acute phase of traumatic brain injury correlates with long-term tissue loss. J Neurotrauma. 2023;40:472–81.

Gupta D, Singla R, Mazzeo AT, et al. Detection of metabolic pattern following decompressive craniectomy in severe traumatic brain injury: a microdialysis study. Brain Inj. 2017;31:1660–6.

Rostami E. Glucose and the injured brain-monitored in the neurointensive care unit. Front Neurol. 2014;5:91.

Oddo M, Schmidt JM, Carrera E, et al. Impact of tight glycemic control on cerebral glucose metabolism after severe brain injury: a microdialysis study. Crit Care Med. 2008;36:3233–8.

Oddo M, Villa F, Citerio G. Brain multimodality monitoring: an update. Curr Opin Crit Care. 2012;18:111–8.

Kurtz P, Claassen J, Schmidt JM, et al. Reduced brain/serum glucose ratios predict cerebral metabolic distress and mortality after severe brain injury. Neurocrit Care. 2013;19:311–9.

Lazaridis C, Andrews CM. Brain tissue oxygenation, lactate-pyruvate ratio, and cerebrovascular pressure reactivity monitoring in severe traumatic brain injury: systematic review and viewpoint. Neurocrit Care. 2014;21:345–55.

Vespa P, McArthur DL, Stein N, et al. Tight glycemic control increases metabolic distress in traumatic brain injury: a randomized controlled within-subjects trial. Crit Care Med. 2012;40:1923–9.

Quintard H, Patet C, Zerlauth J, et al. Improvement of neuroenergetics by hypertonic lactate therapy in patients with traumatic brain injury is dependent on baseline cerebral lactate/pyruvate ratio. J Neurotrauma. 2016;33:681–7.

Bouzat P, Sala N, Suys T, et al. Cerebral metabolic effects of exogenous lactate supplementation on the injured human brain. Intensive Care Med. 2014;40:412–21.

Stovell MG, Mada MO, Helmy A, et al. The effect of succinate on brain NADH/NAD(+) redox state and high energy phosphate metabolism in acute traumatic brain injury. Sci Rep. 2018;8:11140–3.

Khellaf A, Garcia NM, Tajsic T, et al. Focally administered succinate improves cerebral metabolism in traumatic brain injury patients with mitochondrial dysfunction. J Cereb Blood Flow Metab. 2022;42:39–55.

Claassen J, Taccone FS, Horn P, et al. Recommendations on the use of EEG monitoring in critically ill patients: consensus statement from the neurointensive care section of the ESICM. Intensive Care Med. 2013;39:1337–51.

Herman ST, Abend NS, Bleck TP, et al. Consensus statement on continuous EEG in critically ill adults and children, part I: indications. J Clin Neurophysiol. 2015;32:87–95.

Brophy GM, Bell R, Claassen J, et al. Guidelines for the evaluation and management of status epilepticus. Neurocrit Care. 2012;17:3–23.

Lee H, Mizrahi MA, Hartings JA, et al. Continuous electroencephalography after moderate to severe traumatic brain injury. Crit Care Med. 2019;47:574–82.

Strong AJ, Fabricius M, Boutelle MG, et al. Spreading and synchronous depressions of cortical activity in acutely injured human brain. Stroke. 2002;33:2738–43.

Dreier JP, Fabricius M, Ayata C, et al. Recording, analysis, and interpretation of spreading depolarizations in neurointensive care: review and recommendations of the COSBID research group. J Cereb Blood Flow Metab. 2017;37:1595–625.

Waziri A, Claassen J, Stuart RM, et al. Intracortical electroencephalography in acute brain injury. Ann Neurol. 2009;66:366–77.

Wang B, Chiu H, Luh H, et al. Comparative efficacy of prophylactic anticonvulsant drugs following traumatic brain injury: a systematic review and network meta-analysis of randomized controlled trials. PLoS ONE. 2022;17: e0265932.

Foreman B, Lee H, Mizrahi MA, et al. Seizures and cognitive outcome after traumatic brain injury: a post hoc analysis. Neurocrit Care. 2022;36:130–8.

Foreman B, Lee H, Okonkwo DO, et al. The relationship between seizures and spreading depolarizations in patients with severe traumatic brain injury. Neurocrit Care. 2022;37:31–48.

Hartings JA, Shuttleworth CW, Kirov SA, et al. The continuum of spreading depolarizations in acute cortical lesion development: examining Leao’s legacy. J Cereb Blood Flow Metab. 2017;37:1571–94.

Hartings JA. Spreading depolarization monitoring in neurocritical care of acute brain injury. Curr Opin Crit Care. 2017;23:94–102.

Hartings JA, Wilson JA, Hinzman JM, et al. Spreading depression in continuous electroencephalography of brain trauma. Ann Neurol. 2014;76:681–94.

Hartings JA, Bullock MR, Okonkwo DO, et al. Spreading depolarizations and outcome after traumatic brain injury: a prospective observational study. Lancet Neurol. 2011;10:1058–64.

Hartings JA, Andaluz N, Bullock MR, et al. Prognostic value of spreading depolarizations in patients with severe traumatic brain injury. JAMA Neurol. 2020;77:489–99.

Helbok R, Hartings JA, Schiefecker A, et al. What should a clinician do when spreading depolarizations are observed in a patient? Neurocrit Care. 2020;32:306–10.

Carlson AP, Abbas M, Alunday RL, et al. Spreading depolarization in acute brain injury inhibited by ketamine: a prospective, randomized, multiple crossover trial. J Neurosurg. 2018;1–7.

Gaspard N, Foreman B, Judd LM, et al. Intravenous ketamine for the treatment of refractory status epilepticus: a retrospective multicenter study. Epilepsia. 2013;54:1498–503.

Alkhachroum A, Der-Nigoghossian CA, Mathews E, et al. Ketamine to treat super-refractory status epilepticus. Neurology. 2020;95:e2286–94.

Tas J, Czosnyka M, van der Horst ICC, et al. Cerebral multimodality monitoring in adult neurocritical care patients with acute brain injury: a narrative review. Front Physiol. 2022;13:1071161.

Sekhon MS, Gooderham P, Toyota B, et al. Implementation of neurocritical care is associated with improved outcomes in traumatic brain injury. Can J Neurol Sci. 2017;44(4):350–7.

Bernard F, Barsan W, Diaz-Arrastia R, et al. Brain oxygen optimization in severe traumatic brain injury (BOOST-3): a multicentre, randomised, blinded-endpoint, comparative effectiveness study of brain tissue oxygen and intracranial pressure monitoring versus intracranial pressure alone. BMJ Open. 2022;12(3): e060188.

BONANZA-ANZICS [Internet]. 2021. https://www.anzics.com.au/current-active-endorsed-research/bonanza/. Accessed 1 April 2023.

Payen JF, Richard M, Francony G, et al. Comparison of strategies for monitoring and treating patients at the early phase of severe traumatic brain injury: the multicenter randomized controlled OXY-TC trial study protocol. BMJ Open. 2020;10(8): e040550.

Daly S, Thorpe M, Rockswold S, et al. Hyperbaric oxygen therapy in the treatment of acute severe traumatic brain injury: a systematic review. J Neurotrauma. 2018;35(4):623–9.

Rockswold SB, Rockswold GL, Zaun DA, et al. A prospective, randomized clinical trial to compare the effect of hyperbaric to normobaric hyperoxia on cerebral metabolism, intracranial pressure, and oxygen toxicity in severe traumatic brain injury. J Neurosurg. 2010;112(5):1080–94.

Rockswold SB, Rockswold GL, Vargo JM, et al. Effects of hyperbaric oxygenation therapy on cerebral metabolism and intracranial pressure in severely brain injured patients. J Neurosurg. 2001;94(3):403–11.

Daugherty WP, Levasseur JE, Sun D, et al. Effects of hyperbaric oxygen therapy on cerebral oxygenation and mitochondrial function following moderate lateral fluid-percussion injury in rats. J Neurosurg. 2004;101(3):499–504.

Gajewski BJ, Berry SM, Barsan WG, et al. Hyperbaric oxygen brain injury treatment (HOBIT) trial: a multifactor design with response adaptive randomization and longitudinal modeling. Pharm Stat. 2016;15(5):396–404.

Hartings JA, Strong AJ, Fabricius M, et al. Spreading depolarizations and late secondary insults after traumatic brain injury. J Neurotrauma. 2009;26:1857–66.

Schiefecker AJ, Kofler M, Gaasch M, et al. Brain temperature but not core temperature increases during spreading depolarizations in patients with spontaneous intracerebral hemorrhage. J Cereb Blood Flow Metab. 2018;38:549–58.

von Bornstadt D, Houben T, Seidel JL, et al. Supply-demand mismatch transients in susceptible peri-infarct hot zones explain the origins of spreading injury depolarizations. Neuron. 2015;85:1117–31.

Carlson AP, Davis HT, Jones T, et al. Is the human touch always therapeutic? Patient stimulation and spreading depolarization after acute neurological injuries. Transl Stroke Res. 2023;14:160–73.

Hertle DN, Dreier JP, Woitzik J, et al. Effect of analgesics and sedatives on the occurrence of spreading depolarizations accompanying acute brain injury. Brain. 2012;135:2390–8.

Hartings JA, Dreier JP, Ngwenya LB, et al. Improving neurotrauma by depolarization inhibition with combination therapy: a phase 2 randomized feasibility trial. Neurosurgery. 2023 Apr 21. Epub ahead of print.

Thakkar AB, Desai SP. Swan, Ganz, and their catheter: its evolution over the past half century. Ann Intern Med. 2018;169:636–42.

Harvey S, Harrison DA, Singer M, et al. Assessment of the clinical effectiveness of pulmonary artery catheters in management of patients in intensive care (PAC-Man): a randomised controlled trial. Lancet. 2005;366:472–7.

Iberti TJ, Fischer EP, Leibowitz AB, et al. A multicenter study of physicians’ knowledge of the pulmonary artery catheter. Pulmonary Artery Catheter Study Group. JAMA. 1990;264:2928–32.

Rajaram SS, Desai NK, Kalra A, et al. Pulmonary artery catheters for adult patients in intensive care. Cochrane Database Syst Rev. 2013;2013(2):CD003408.

Wijdicks EFM. Lundberg and his Waves. Neurocrit Care. 2019;31:546–9.

Acknowledgements

We thank Dirk Traufelder for medical illustration.

Ethics declarations

Conflict of Interest

Dr. Lazaridis is the University of Chicago site PI for the BOOST-3 clinical trial. Dr. Lazaridis is supported by the Department of Defense CDMRP (Log Number TP210464; Grants.gov ID Number GRANT13518109) for Prediction and Prevention of Intracranial Hypertension. Dr. Foreman is a co-investigator for the INDICT clinical trial (DOD JW200215) and is supported by DOD grants JW200215 and W81XWH1920013. Dr. Foreman receives honoraria from and serves on the speakers’ bureau for UCB Pharma, and serves on the scientific advisory boards of SAGE Therapeutics and Marinus Pharmaceuticals. He is an unpaid member of the scientific advisory committee for the Neurocritical Care Society Curing Coma Campaign.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This is an invited review for The Next Generation of Clinical Trials for Traumatic Brain Injury.