Abstract

This paper aims to solve Redundancy allocation problem (RAP). It is a significant complex optimization and non-linear integer programming problem of reliability engineering. RAP includes the choices of components and the suitable amount of redundant subsystems for maximizing reliability of the system under given restrictions like cost, weight, volume etc. It is difficult to solve non-linear complex problems. In this paper, the RAP is solved by the combination of genetic and simulating algorithm that is called Hybrid Genetic Simulating Annealing Algorithm (HGSAA). It can be observed that superiority of both the algorithms are combined and form an adequate algorithm which ignores the individual weakness. Comparative analysis of HGSAA with existing methods such as Heuristic Algorith, Constraint Optimization Genetic Algorithm, Hybrid Particle Swarm Optimization and Constraint Optimization Genetic Algorithm are presented in this study. RAP is also solved by Branch and Bound method to validate the result of HGSAA. The developed algorithm is programmed by Matlab.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

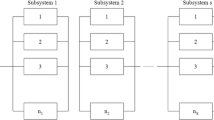

Most of the services to human beings are often provided by use of costly and complex systems. Some examples of industries using most complex systems are industries like aerospace, power generation, military, petrochemicals and automotive industries. The technological developments and increasing complication in technical systems made the job of analysts more complicated. As they study the system performance using qualitative and quantitative approaches to improve the output and productivity. The rising requirements of highly reliable systems open doors towards the study of reliability optimization. To design an exceedingly reliable system, improvement in system reliability can be done in two ways. Firstly, by way of addition of redundant components and secondly, by enhancing the component reliability. Usually, application of either of the ways increase the use of resources i.e. cost, volume, weight etc. Therefore, a substantial problem while structuring an exceedingly reliable system to keep up balance the reliability and use of other resources. To attain such an allocation, several researchers deal with different system configurations like series, parallel, series–parallel and k- out of –n systems etc. Many real world complex system design problems require the utilization of redundancy to meet the goal of maximizing the reliability. RAP with single goal has widely investigated. In some critical parts, engineers put redundancy to ensure launch success. Because of the various component combinations, RAP is classified as NP-hard.

A huge variety of techniques have been applied to solve RAP. In summary, various researchers have found various modifications of the RAP (Kuo and Prasad 2000; Chambari et al. 2012). These developed techniques can be categorized using dynamic programming, non-linear and mixed integer programming problem as single objective optimization (Bellman and Dreyfus 1962; Fyffe et al. 1968; Nakagawa and Miyazaki 1981, Garg and Kumar 2009, Garg et al. 2010). It has been also solved by using some techniques such that heuristic and meta-heuristic algorithm like genetic algorithms, PSO and Simulating Annealing (SA) etc.GA is the most popular amongst heuristic algorithms which is applied in vast range of reliability optimization problems (Painton and Campbell 1994, 1995; Davis 1987). GAs are considered as numerical search techniques which follow a procedure based on natural selection (Holland 1975). Garg and Kumar (2010) utilized GA to solve availability optimization problem of screw plant. GAs applications resembles in the works of many authors i.e., (Dengiz et al. 1997; Tavakkoli-Moghaddma et al. 2008). For improving the performance of GA, an effective oriented GA (EOGA) is proposed by adding a new criteria known as the components applicability (Essadqi et al. 2018). This parameter allows a better search in generation of initial population and operator’s specific usage and fitness function. A problem of multi-objective optimization is solved by GA (Busacca et al. 2001) in which they considered every goal as separate goal. In optimization of designing of engineered systems, there are several goals which have to be satisfied. COGA has been used for solving RAP (Devi and Garg 2017). SA algorithm, which was first independently presented as an iterative search tool to find the most favorable solution of complex optimization problems in (Kirkpatrik et al.1983; Černý 1985). It has been shown by many researchers that evolutionary algorithms GA, PSO and physical algorithm SA are attractive because they have better search capabilities to the optimization problem (He et al. 2004; He and Wang 2007; Sheikhalishahi et al. 2013; Garg 2015). For getting more efficient computations, GA has been combined with other meta heuristic algorithms like as GA with PSO and Hill climbing approach (Krink and Lvbjerg 2002). PSO is a nature inspired evolutionary algorithm. Position and velocity of PSO are revised as per its own experiences and neighbors experiences. New improvement seen in performance of PSO after defining a new parameter \(w\) and give a name inertia weight to that parameter (Shi and Eberhart 1998). As it is a stochastic search algorithm, it has some advantage and some weakness. The performance of PSO is problem dependent and that is the major weakness of PSO. There is a high degree of variation in the performance of PSO because of the different parameters are set for different problems. It can’t be possible to set a single parameter for all the problems solving by PSO. The problem has been dealt in a better way with defining self-adaptive parameters (Clerc 1999; Shi and Eberhart 2001; Hu and Eberhart 2002; Tsou and Macnish 2003; Ratnaveera et al. 2004). The second weakness of PSO is that it could be converge or trapped on local minima and even it could not improve the performance when number of large iterations (Angeline1998). To curb the convergence properties of a particle swarm system, a contraction coefficient is generated (Clerc and Kennedy 2002). A dynamic inertia weight is also introduced in PSO algorithm for solving optimization problem and it has given a name of Improved PSO (IPSO) algorithm (Jiao et al. 2008). Several modifications have been made for improving the performance of PSO, and Hybridization of techniques came in limelight. Hybridization is a recent spreading area of intelligence system. Genetical Swarm Optimization (GSO) is a combination of desired properties of PSO and GAs which is applied for solving complex optimization problems (Grimaldi et al. 2004). H-PSOCOGA is a combination of PSO and COGA, utilized for solving the RAP (Devi et al. 2017). For solving multi-objective optimization problems a hybrid algorithm known as GA/PSO was applied (Jieong et al. 2009). The validity of this algorithm is checked on test problems. This can be achieved by numerous researchers like PSO with GA and utilized to improve the work of PSO.

In recent phase, various techniques have been developed with the combination of evolutionary and physical algorithms which is more powerful for solving constraint optimization problems. For instance the combination of GA and SA has become an effective algorithm to solve RAP. (Kim et al. 2004) used SA algorithm for solving RAP with different element choices. GA and SA both are nature inspired search algorithms but the difference is that GA could be trapped in local minima while SA has the capability to jump out through local optimization. A Hybrid Simulating Annealing (HSA) has been applied to solve nonslicing floor-planning that is starting phase to design a chip (Chen et al. 2011). With the combination of GA and SA an efficient algorithm is made for solving broadband matching networks for antennas (Chen et al. 2012). In this paper HGSAA is presented for solving RAP.

2 Material and methods

2.1 Problem formulation

In this section, a problem of manufacturing industry is taken to improve the reliability of the system. There are mainly seven units are arranged in series way and the process of making a product run step by step by each and every machine. The problem is same which is explained in earlier paper (Devi and Garg 2017). The motive of solving this problem is to maximize product reliability with the reduction of low cost as defined in Table 1. Given cost constraint is C = 30,490,000.

Problem is to maximize

where \(R_{i} (a_{i} )\, = \,\mathop \Pi \limits_{i = 0}^{7} \left[ {1 - \left( {Q_{i} (a_{i} )} \right)^{{m_{i} }} } \right]\).

such that

2.2 Hybrid genetic simulating annealing algorithm (HGSAA)

This section develops a novel technique based on a combination of hybrid GA and SA based on local search and named as HGSAA. The proposed algorithm shows the advantages of both the algorithms. In this paper, the developed HGSAA technique is applied for solving RAP and avoid the drawbacks of both the above mentioned algorithms. The algorithm of proposed approach HGSAA is given as below:

-

(1)

Initialize the Parameters: population size N, crossover probability Pc, mutation probability Pm, iteration count i.

-

(2)

Initialize i = 0, Generate initial population randomly Ci.

-

(3)

Set up starting population arbitrarily Ci.

-

(4)

Figure out the fitness value of every character in population Ci.

-

(5)

Compute the fitness value of every individual in population Ci.

-

(6)

Do the selection, crossover, and mutation operations for individual in population Ci,i = i + 1,then get updated population Ci.

-

(7)

Evaluate new population Ci,i = i + 1

-

(a)

Use selection operator to select parent

-

(b)

Use crossover operator to get new sibling from selective parents

-

(c)

Further mutate

-

(a)

-

(8)

After the individual variation, perform the inner loop operation of simulated annealing algorithm until attain stable population Ci.

-

(a)

the starting solution S0 = Ci

-

(b)

k = 0

-

(c)

do

-

(d)

Increase temperature to Tmax,

-

(e)

Perturb the initial solution Sk, construct next solution Sk+1.

-

(f)

Compute the difference \(\Delta\) f = f (Sk+1) − f (Sk), f (S) is the assessment function.

-

(g)

If \(\Delta\) f > 0, then consider Sk+1=Sk+1 as the next solution, otherwise Sk+1=Sk as the next solution in accordance with the probability exp(\(\Delta\)f / T) .

-

(h)

Decrease T decreases, T \(\to\) 0, k = k + 1

-

(i)

While k < maxitr

-

(a)

-

(9)

Ci =Sk, go to step (3).

-

(10)

Check whether the outcome of the program is fulfilled with termination condition, then perform step (8), otherwise go to step 3.

-

(11)

Obtained results.

The achieved results from HGSAA are listed in below Table 2.

2.3 Exact Optimization

B&B method is classical exact method which gives optimal solution. The primary goal of B&B method is to optimize the objective function. It has been used in this paper to validate the results of HGSAA. It is too time consuming but results do not vary.

2.4 Algorithm of B&B Method

-

To search a feasible solution and fix the present optimum solution as value of starting feasible solution

-

Abort the procedure, if no unsolved problem is found

-

Split the problem into sub-problem where a decision variable is bounded.

-

Solve the problem by applying relaxation for each sub-problem. Subsequently revise the upper bound as the highest value of solution.

-

Figure out the tree node in case the upper bound is lesser than the present optimum solution.

-

Update the present optimum solution and move to the upcoming tree node if the upper bound is feasible else split into sub-problem again.

The results obtained by B&B method (exact algorithm) shown in Table 3.

3 Results

The problem given in Sect. 2 is solved by HA, COGA and H-PSOCOGA (Devi and Garg 2017). The results obtained by these three algorithms are outlined in Tables 4, 5, 6 respectively.

Table 7 shows the comparative results of four algorithms w.r.t. increment in reliability and CPU time except Exact Optimization Method. Obtained results demonstrate that percentage increment in reliability by HGSAA comparatively better than HA and COGA, HPSOCOGA. Graphical representation the results shown in (Figs. 1, 2, 3).

4 Conclusion

In the present work, a novel approach HGSAA is developed with the combination of two existing methods GA and SA. Due to all heuristic algorithms HA, COGA, HPSOCOGA, the results of HGSAA are validated by Branch and Bound method. The outcomes achieved by HA, COGA, H-PSOCOGA and HGSAA w.r.t increase in reliability are 52.30, 56.88, 56.10 and 56.85 respectively and with respect to CPU time are 50.66, 66.51, 5.559 and 2.122 respectively (in seconds), as shown in Table 7. However the increase in reliability by COGA is few more than proposed approach HGSAA but the time gap between these algorithms are high which shows the feasibility of proposed method.

Abbreviations

- \(a_{i}\) :

-

ith component

- \(R_{i} \left( {a_{i} } \right)\) :

-

Reliability of ai

- \(Q_{i} \left( {a_{i} } \right)\) :

-

Unreliability of component-ai

- \(R_{s} \left( a \right)\) :

-

Complete system reliability

- \(\rm m_i\) :

-

Redundancy in ith subunits

- \(h_{i} \left( {a_{i} } \right)\) :

-

jth resource exhausted by ith component

- \(m = 7\) :

-

Overall units

- C:

-

Total cost

- \(K(.)\) :

-

A function which estimate the reliability of overall system

- \(COGA_{num}\) :

-

No. of solutions at the time of execution of iteration in COGA

- \(COGA_{PS}\) :

-

Population size of the particles in COGA

- \(COGA_{\max lter}\) :

-

Upper iteration limit in PSO

- \(PSO_{i}\) :

-

PSO iteration in ongoing execution

References

Angeline PJ (1998) Evolutionary optimization versus particle swarm optimization: philosophy and performance differences. In: Proceedings of the 7th annual conference on evolutionary programming, San Diego, USA, pp. N601–N610

Bellman RE, Dreyfus E (1962) Applied dynamic programming. Princeton University Press, New Jersey

Busacca PG, Marseguerra M, Zio E (2001) Multi-objective optimization by genetic algorithms: application to safety systems. Reliab Eng Syst Safe 72(1):59–74

Černý V (1985) Thermodynamical approach to the traveling salesman problem: an efficient simulation algorithm. J Opt Theory Appl 45(1):41–51

Chambari A, Rahmati SHA, Najafi AA, Karimi A (2012) A bi-objective model to optimize reliability and cost of system with a choice of redundancy strategies. Comput Ind Eng 63:109–119

Chen J, Zhu W, Ali MM (2011) A hybrid simulated annealing algorithm for nonslicing VLSI floorplanning. IEEE Trans Syst Man Cybern Part C Appl Rev 41(4):544–553

Chen A, Jiang T, Chen Z, Zhang Y (2012) Genetic and simulated annealing combined algorithm for optimization of wideband antenna matching networks. Int J Antennas Propag. https://doi.org/10.1155/2012/251624

Clerc M (1999) The swarm and the queen: towards a deterministic and adaptive particle swarm optimization. In: Proceedings of the congress on evolutionary computation. Washington D.C., USA, pp. 1951–1957

Clerc M, Kennedy J (2002) The particle swarm-explosion, stability, and convergence in a multidimensional complex space. IEEE Trans Evolut Compt 6:58–73

D. Tsou D, C. Macnish C (2003) Adaptive particle swarm optimization for high-dimensional highly convex search spaces. In: Proceedings of IEEE congress on evolutionary computation 2003 (CEC 2003). Canbella, Australia, pp. 783–789

Davis L (1987) Genetic algorithms and simulated annealing. Morgan Kaufman Publishers Inc, Los Altos

Dengiz B, Altiparmak F, Smith AE (1997) Local search genetic algorithm for optimal design of reliable networks. IEEE Trans Evol Comput 1:179–188

Devi S, Garg D (2017) Redundancy-allocation in neel metal products limited. Ind J of Sc and Tech 10(30):1–5

Devi S, Sahu A, Garg D (2017) Redundancy optimization problem via comparative analysis of H-PSOCOGA. IEEE Xplore 18–23.

Essadqi M, Idrissi A, Amarir A (2018) An effective oriented genetic algorithm for solving redundancy allocation problem in multi-state power system. Proc Comput Sci 127:170–179

Fyffe DE, Hines WW, Lee NK (1968) System reliability allocation and a computational algorithm. IEEE Trans Reliab 17:64–69

Garg H (2015) A hybrid GA-GSA algorithm for optimizing the performance of an industrial system by utilizing uncertain data. Handb Res Artif Intell Tech Algorithm IGI Global. https://doi.org/10.4018/978-1-4666-7258-1.ch020

Garg D, Kumar K (2009) Reliability analysis of pharmaceutical plant using Matlab-tool. Int J Electronic Eng Res 1(2):127–133

Garg D, Kumar K (2010) Availability optimization for screw plant based on genetic algorithm. Int J Eng Sci Technol 2:658–668

Garg D, Kumar K, Pahuja GL (2010) Redundancy-allocation in pharmaceutical plant. Int J Eng Sci Technol 2(5):1088–1097

Grimaldi EA, Grimacia F, Mussetta M, Pirinoli P, Zich RE (2004) A new hybrid genetical swarm algorithm for electromagnetic optimization. Proc of Int Conf on Comput Electromagnetics and its Applications, Beijing, China 157–160

He Q, Wang L (2007) An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng Appl Artif Intell 20:89–99

He S, Prempain E, Wu QH (2004) An improved particle swarm optimizer for mechanical design optimization problems. Eng Optim 36(5):585–605

Holland JH (1975) Adaption in natural and artificial system. University of Michigan Press, Ann Arbor

Hu X, Eberhart RC (2002) Adaptive particle swarm optimization: detection and response to dynamicsystems. In: Proceedings of congress on evolutionary computation. Hawaii, USA, pp. 1666–1670

Jeong S, Hasegawa S, Shimoyama K, Obayashi S (2009) Development and investigation of efficient GA/PSO-hybrid algorithm applicable to real-world design optimization. IEEE Comput Intell Mag 4(3):36–44

Jiao B, Lian Z, Gu X (2008) A dynamic inertia weight particle swarm optimization algorithm. Chaos Solitons Fract 37:698–705

Kim H, Bae C and Park S (2004) Simulated annealing algorithm for redundancy optimization with multiple component choices. The 2004 Asian International workshop on Advanced Reliability Modelling, Hiroshima, Japan pp. 237–244

Kirkpatrick S, Gelatt CD, Vecchi MP (1983) Optimization by simulated annealing. Science 220:671–680

Krink T, Lvbjerg M (2002) The lifecycle model: combining particle swarm optimization, genetic algorithms and hill climbers. In: Proceedings of the parallel problem solving from nature, pp. 621–630

Kuo W, Prasad VR (2000) An annotated overview of system reliability optimization. IEEE Trans Reliab 49:176–187

NakagawaY MS (1981) Surrogate constraints algorithm for reliability optimization problems with two constraints. IEEE Trans Reliab 30:1981

Painton L, Campbell J (1994) Identification of components to optimize improvements in system reliability. Proc SRA PSAM-II Conf Syst Based Methods Des Oper Technol Syst Proces 10:10–20

Painton L, Campbell J (1995) Genetic algorithms in optimization of system reliability. IEEE Trans Reliab 44:172–178

Ratnaweera A, Halgamuge SK, Watson HC (2004) Self-organizing hierarchical particle swarm optimizer with time-varying acceleration coefficients. IEEE Trans Evolut Comput 8:240–255

Sheikhalishahi M, Ebrahimipour V, Shiri H, Zaman H, Jeihoonian M (2013) A hybrid GA-PSO approach for reliability optimization in redundancy allocation problem. Int J Adv Manuf Technol 68:1–22

Shi Y, Eberhart R (1998) A modified particle swarm optimizer evolutionary computation. In: Proceedings of the IEEE world congress on computational intelligence. AK, USA, pp. 69–73

Shi Y, Eberhart RC (2001) Fuzzy adaptive particle swarm optimization. In: Proceedings of the congress on evolutionary computation. Seoul, Korea,pp. 101–106

Tavakkoli-Moghaddama R, SafarJ SF (2008) Reliability optimization of series-parallel systems with a choice of redundancy strategies using a genetic algorithm. Reliab Eng Syst Saf 93:550–556

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Garg, D., Devi, S. RAP via hybrid genetic simulating annealing algorithm. Int J Syst Assur Eng Manag 12, 419–425 (2021). https://doi.org/10.1007/s13198-021-01081-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13198-021-01081-3