Abstract

An inverse modelling study on the interpretation of magnetic anomalies caused by 2D dyke-shaped bodies was carried out using the differential search algorithm (DSA), a novel metaheuristic inspired by the migration of super-organisms. We aimed at estimating dyke parameters that include amplitude coefficient, depth, half-width, origin and dip angle. First, the resolvability of these parameters and algorithm-dependent parameters of the DSA that affect the performance were determined. Two theoretical and two field anomalies were used in the evaluations. Theoretical anomalies comprise one and two isolated dykes. The effect of noise content was also investigated in these cases. The inversion approach was then applied to two known magnetic field anomalies measured over the Marcona iron mine in Peru and the Pima copper mine in the US state of Arizona. The results showed that the efficiency of the DSA increases significantly with the use of optimal parameter sets of the inverse magnetic problem. Furthermore, cost function maps and relative frequency histograms showed that the parameters half-width and amplitude can be estimated with some uncertainties, while the remaining significant model parameters of the source body can be solved with negligible uncertainties. Findings indicated that the DSA provided satisfactory solutions in accordance with actual data and previously obtained results. Thus, it can be concluded that DSA is an efficient tool for interpreting magnetic anomalies caused by magnetised 2D dykes.

Research highlights

- Inverse modelling using Differential Search Algorithm for magnetic anomaly inversion is presented.
- The algorithm takes advantage of global optimization to interpret dyke-shaped bodies.
- Tests were carried out on two theoretical and field anomalies caused by dykes.
- Error energy maps and frequency distribution histograms show the ambiguities of the model parameters.
- It is a powerful tool to estimate the model parameters of thick dykes with proper control parameters.

1 Introduction

Magnetic prospection is one of the most effective geophysical surveying methods for studying the properties of the subsurface by measuring variations in the geomagnetic field caused mainly by ferrous minerals such as ilmenite, magnetite and pyrrhotite in geological formations (Ekinci et al. 2020a). Variations in magnetic field intensity resulting from the contrast of magnetic susceptibility between the targets investigated and the host medium can be used to determine the depth, geometry, and magnetic susceptibility of the causative bodies. The most common applications of the magnetic method include exploration for minerals (Sharma 1987), diamonds (Power et al. 2004), oil and gas (Eventov 1997), caves (Balkaya et al. 2012), archaeological remains (Ekinci et al. 2014), dykes (Sowerbutts 1987), solid waste landfills (Prezzi et al. 2005), basement depth (Kumar et al. 2017), buried metallic objects (Barrows and Rocchio 1990) and buried igneous intrusions (Ekinci and Yiğitbaş 2012).

Geophysical inversion refers mainly to mathematical and statistical techniques for determining various physical properties of the subsurface such as density, magnetic susceptibility and electrical conductivity from geophysical measurements (Reid 2014). The main objective is to estimate the best model parameters that match well to the observations by combining forward models with appropriate optimization techniques (Carbone et al. 2006). Numerous techniques are used to interpret potential field data sets by considering simple geometric shapes such as dykes, spheres, thin sheets and faults. Among them, the dyke model has been widely used in magnetic explorations (e.g., Abdelrahman et al. 2003, 2012; Venkata Raju 2003; Beiki and Pedersen 2012; Al-Garni 2015; Ekinci 2016, 2018; Essa and Elhussein 2017). A variety of derivative-based approaches including the steepest descent, Gauss–Newton and Levenberg–Marquardt are generally used to interpret magnetic dyke anomalies (Radhakrishna Murthy et al. 1980; Khurana et al. 1981; Won 1981; Ram Babu et al. 1982; Atchuta Rao et al. 1985; Radhakrishna Murthy 1990; Beiki and Pedersen 2012). However, due to the inherent non-uniqueness characteristics of the potential data inversion, these approaches strongly require some constraints on variables and geophysical/geological prior information to provide a realistic and interpretable solution (Li and Oldenburg 1996). Therefore, conventional methods can only be successful if a reasonable initial guess for model parameters, and sometimes geological information are available; otherwise, the solution may fall into local minima rather than the global minimum.

Due to the importance of initial model parameter estimations for an effective solution, derivative-free metaheuristics inspired by nature are becoming increasingly popular for geophysical data inverse modelling. Metaheuristics do not require initial model parameters close to the actual value to reach the global minimum. Local minima can also be avoided by sampling in relatively large search spaces, in contrast to derivative-based minimization procedures. Thus, they can find optimal solutions, even if there is no a priori information for the model parameters to be investigated. Compared to conventional approaches, the main disadvantage of metaheuristics is the higher cost of performing numerous fitness evaluations before a satisfactory solution is found. In the last decade, several metaheuristics including genetic algorithms (GA), particle swarm optimization (PSO), differential evolution (DE) algorithm, simulated annealing (SA) and its variants such as very fast SA (VFSA), genetic-price algorithm (GPO), and whale optimization algorithm (WOA) have been applied to interpret seismic data (Göktürkler 2011; Soupios et al. 2011; Çaylak et al. 2012), self-potential (SP) data (Pekşen et al. 2011; Göktürkler and Balkaya 2012; Balkaya 2013; Di Maio et al. 2016, 2019; Biswas and Sharma 2017; Ekinci et al. 2020b; Gobashy et al. 2020a; Sungkono 2020), gravity and magnetic data (Biswas and Acharya 2016; Ekinci 2016; Ekinci et al. 2016, 2017, 2019, 2020a, b, 2021; Singh and Biswas 2016; Balkaya et al. 2017; Biswas 2017; Kaftan 2017; Essa and Elhussein 2018, 2020; Anderson et al. 2020; Di Maio et al. 2020; Gobashy et al. 2020b) and electrical resistivity data (Başokur et al. 2007; Fernández Martinez et al. 2010; Balkaya et al. 2012; Pekşen et al. 2014). In addition, Göktürkler et al. (2016) presented an application of four metaheuristics, including PSO, GA, DE and SA for the inversion of SP (1D), electrical resistivity (1D), magnetic (3D) and cross-hole radar (2D) datasets, respectively. To sum up, the most common metaheuristics in applied geophysics are GA, PSO, SA and more recently DE, especially for geoelectrical and potential field data.

Differential Search Algorithm (DSA) is a novel and effective swarm-based metaheuristic algorithm proposed by Civicioglu (2012) to solve real-valued numerical optimization problems. Superorganism migration is the main inspiration of the algorithm. Multi-strategy-based DSA displays an advanced evolutionary algorithm feature with its unique mutation and crossover operators used in the evolutionary cycle. In geophysics, the first applications of DSA include surface-wave data inversion (Song et al. 2014) and the horizontal-loop electromagnetic (HLEM) data evaluation (Alkan and Balkaya 2018). Most recently, Ekinci et al. (2020b) performed gravity data inversion through the algorithm. To the best of our knowledge, this is the first application of the algorithm for the inversion of magnetic anomalies caused by dyke-shaped bodies. Here, two theoretical anomalies consisting of one and two magnetized bodies, and two field anomalies measured over the Pima copper mine area (US state of Arizona) and the Marcona iron mining area (Peru) were used to test the efficiency of the metaheuristic. We used an open-source MATLAB-based code (hlem_global) developed by Alkan and Balkaya (2018), which was adapted to solve the presented optimization problem. Since the inverse potential field problems suffer mainly from ambiguities and instability, various combinations of model parameters can produce similar anomalies (Carbone et al. 2006). Therefore, a possible ambiguity for the current optimization problem was also investigated considering the formulation used to compute magnetic anomalies caused by long tabular bodies (i.e., Grant and West 1965; Venkata Raju 2003). In the first synthetic case of an isolated dyke, the effect of two user-defined control parameters of the algorithm on the solution was investigated, using the original ones (Civicioglu 2012) and those proposed by Alkan and Balkaya (2018). 
The ambiguities of the model parameters investigated were also demonstrated using error energy maps and frequency distribution histograms. Furthermore, the results obtained by the DSA for field cases were compared with those of previous studies.

2 Methodology

2.1 Differential search algorithm (DSA)

DSA is one of the latest population-based metaheuristics, introduced by Civicioglu (2012) to solve the problem of transforming geocentric Cartesian coordinates into geodetic coordinates. The algorithm is inspired by the migration of a superorganism, that is, a group of synergistically interacting organisms of the same species, such as fire ants, honeybees and monarch butterflies. Seasonal migration to more fertile sites can occur when the natural resources available to a superorganism, such as water and pastures, decrease in some periods of the year due to periodic climate change. If the capacity and diversity of these vital sources are satisfactory, the superorganism temporarily settles at this new stopover and then continues its migration to discover more productive habitats. In the algorithm, a food area and the migration of the superorganism are simulated by the search space and a Brownian-like random-walk movement, respectively (Civicioglu 2012). DSA consists mainly of the steps given below. A generalized flowchart of the algorithm is also shown in figure 1.

2.1.1 Step 1. Set control parameters of DSA

The control parameters of metaheuristics are usually problem-dependent and have a significant effect on the performance of the algorithm. As in other metaheuristics, the selection of control parameters in the DSA is an essential issue, and the algorithm may not perform well if these parameters are not tuned for the problem under consideration. The algorithm has only two control parameters, namely \({p}_{1}\) and \({p}_{2}\), which are used to determine the individual perturbation frequency of the superorganism participating in the search of the stopover site. To achieve this, it mainly uses a random process by changing its user-defined initial values at each epoch (i.e., the generation). In the original DSA, both parameters are drawn from the same range of 0–0.3 (\({p}_{1}=0.3\times {rand}_{1}\), \({p}_{2}=0.3\times {rand}_{2}\)). Here, \({rand}_{1}\) and \({rand}_{2}\) represent two uniformly distributed random numbers in [0, 1]. The population size (\(Np\)) and the maximum number of epochs (\(G\)) are other user-defined parameters that must be specified before the initialization stage.

2.1.2 Step 2. Initialize superorganism

The algorithm begins with a randomly initiated superorganism composed of artificial-organisms. Each artificial-organism of a superorganism is denoted as \({{\varvec{X}}}_{i}=\left[\varvec{x}_{i,j}\right]\), where \(i=\left\{1,2,3,\ldots ,Np\right\}\), \(j=\left\{1,2,3,\ldots ,D\right\}\). Here, \(D\) depicts the dimension of the optimization problem. The initial position of the \(j\)th component of the \(i\)th artificial-organism in the search space is randomly generated from a uniform random number \(rand\) in [0, 1] as follows:

\[\varvec{x}_{i,j}={low}_{j}+rand\times \left({up}_{j}-{low}_{j}\right)\qquad (1)\]

where \({low}\) and \({up}\) denote lower and upper limits of unknown model parameters. Each artificial-organism represents a parameter vector that will evolve into a global solution to the optimization problem.
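The initialization step can be illustrated with a minimal Python sketch. It assumes that every component is drawn independently and uniformly between its own lower and upper limit, which is the usual reading of a random initialization inside a bounded search space. The function name `init_superorganism` and the numerical bounds are hypothetical and are not the search spaces used in this study.

```python
import random

def init_superorganism(low, up, n_pop, rng):
    """Place n_pop artificial-organisms uniformly at random inside [low, up]."""
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(low, up)]
        for _ in range(n_pop)
    ]

# Hypothetical bounds for (P, h, b, d, delta); not the search spaces of table 2.
low = [100.0, 5.0, 5.0, -50.0, 0.0]
up = [1000.0, 60.0, 60.0, 50.0, 180.0]
population = init_superorganism(low, up, n_pop=50, rng=random.Random(0))
```

Passing the random generator explicitly keeps independent runs reproducible, which is convenient when statistics over many runs are compared.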

2.1.3 Step 3. Check termination condition

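The algorithm is terminated when the cost function falls below a pre-defined threshold or when the number of epochs reaches \(G\). In this study, the cost function (\(E\)), which represents the convergence behaviour of DSA, was computed using the following equation:

\[E=\frac{1}{N}\sum_{k=1}^{N}{\left({d}_{k}^{{obs}}-{d}_{k}^{{cal}}\right)}^{2}=\frac{1}{N}{\left(\varvec{d}^{{obs}}-\varvec{d}^{{cal}}\right)}^{T}\left(\varvec{d}^{{obs}}-\varvec{d}^{{cal}}\right)\qquad (2)\]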

where \(N\) stands for the number of observed data, \(\varvec{d}^{{obs}}\) and \(\varvec{d}^{{cal}}\) denote the observed and calculated data, respectively, \(T\) is a transposition and \(k\) represents a counter of the observations. The square root of equation (2) is \(\mathrm{rms}\) error used in the applications after estimating the model parameters.
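As a small illustration, the cost function and the rms error can be computed as below. The sketch assumes that \(E\) is the mean of the squared residuals, which is consistent with the statement that its square root is the rms error. The function names and the toy data are invented for the example.

```python
import math

def error_energy(d_obs, d_cal):
    """Mean squared misfit between observed and calculated data (nT^2)."""
    if len(d_obs) != len(d_cal):
        raise ValueError("observed and calculated data must have equal length")
    return sum((o - c) ** 2 for o, c in zip(d_obs, d_cal)) / len(d_obs)

def rms_error(d_obs, d_cal):
    """Square root of the error energy (nT)."""
    return math.sqrt(error_energy(d_obs, d_cal))

# Toy data in nT, invented for illustration.
d_obs = [10.0, 20.0, 30.0]
d_cal = [12.0, 20.0, 26.0]
E = error_energy(d_obs, d_cal)   # (4 + 0 + 16) / 3
rms = rms_error(d_obs, d_cal)
```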

2.1.4 Step 4. Compute the stopover site

DSA uses a Brownian-like random walk model, which moves randomly selected individuals towards the targets of a \({donor}\) artificial-organism. A stopover site position \(\varvec{s}\), which is one of the solutions among the artificial-organisms, can be computed by the following equation:

\[\varvec{s}_{i,G}=\varvec{X}_{i,G}+{scale}\times \left({donor}-\varvec{X}_{i,G}\right)\qquad (3)\]

A randomly selected member from the artificial-organism serves a target vector, \(donor=\varvec{X}_{r_1,G|{random}\_{shuffling}}\), where \(r_1 \in \left(\mathrm{1,2},3,\dots ,Np\right)\) and \(r_1\ne i\) are the integers arbitrarily determined. In DSA, the \({random}\_{shuffling}()\) function makes it possible to arbitrarily change the order of the individuals in the current population and plays a crucial role in the realization of an efficient migration movement. Furthermore, the \({scale}\) value provides the determination of the perturbation amount in the size of the member positions in the artificial-organism. In DSA, this value can be determined using a lognormal distribution for a Brownian walk simulation.
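The movement towards the donor can be sketched as follows. This is an illustration under stated assumptions rather than the reference implementation: the stopover site is taken as the current position plus `scale` times the difference between donor and current position, one `scale` value is drawn per epoch, and the lognormal parameters (`mu = 0`, `sigma = 0.5`) are hypothetical because the text names only the distribution family. The helper names are also invented.

```python
import random

def shuffled_donor_indices(n_pop, rng):
    """Random permutation of 0..n_pop-1 in which no index maps to itself."""
    if n_pop < 2:
        raise ValueError("at least two individuals are needed")
    idx = list(range(n_pop))
    while True:
        rng.shuffle(idx)
        if all(i != r for i, r in enumerate(idx)):
            return idx

def stopover_sites(population, rng, sigma=0.5):
    """Move every individual towards its donor: s = X + scale * (donor - X)."""
    donors = shuffled_donor_indices(len(population), rng)
    # Assumed: one lognormal scale per epoch; mu = 0 and sigma are hypothetical.
    scale = rng.lognormvariate(0.0, sigma)
    sites = [
        [x + scale * (population[r][j] - x) for j, x in enumerate(ind)]
        for ind, r in zip(population, donors)
    ]
    return sites, donors, scale

rng = random.Random(1)
population = [[0.0, 0.0], [10.0, 2.0], [4.0, 8.0]]
sites, donors, scale = stopover_sites(population, rng)
```

Re-shuffling until no individual is its own donor is one simple way to enforce the condition that the donor index differs from \(i\).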

2.1.5 Step 5. Check the bounds of the stopover site

When the elements of the stopover site exceed the limits of the predefined search spaces, a new position in the search space is randomly created using equation (1). Afterwards, the artificial-superorganism tries to move from its current position to a better stopover site to reach the global minimum value.
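A minimal sketch of this bound check is given below. It assumes that only the offending elements are redrawn, each uniformly inside its own limits, and that in-range elements are left untouched. The function name `repair_bounds` and the example values are hypothetical.

```python
import random

def repair_bounds(site, low, up, rng):
    """Redraw any out-of-range element uniformly inside its own bounds."""
    return [
        v if lo <= v <= hi else lo + rng.random() * (hi - lo)
        for v, lo, hi in zip(site, low, up)
    ]

low, up = [0.0, 0.0], [10.0, 5.0]
# The first element (12.5) lies outside [0, 10] and is redrawn.
repaired = repair_bounds([12.5, 3.0], low, up, random.Random(2))
```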

2.1.6 Step 6. Determine the most fruitful stopover site

A search process provides a stopover site via the individuals (artificial-organisms) of the superorganism. To achieve this task, a trial vector is obtained as follows:

\[\varvec{s}_{i,j,G}^{\prime}=\begin{cases}\varvec{s}_{i,j,G}, & {r}_{i,j}=0\\ \varvec{X}_{i,j,G}, & {r}_{i,j}=1\end{cases}\qquad (4)\]

where \(\varvec{s}_{i,j,G}^{\prime}\) indicates the \(j\)th component of the trial vector of the \(i\)th individual in the \(G\)th epoch and \({r}_{i,j}\) is an integer equal to either 0 or 1. Based on the logical condition, each component of the trial vector is inherited from the mutant \(\varvec{s}_{i,j,G}\) or cloned from the target vector \(\varvec{X}_{i,j,G}\). At the selection stage, DSA replaces the target vector with the trial vector in the next epoch if the trial vector provides a cost function value less than or equal to that of its target vector. Otherwise, the position of the target vector within the population is preserved. The algorithm simply applies the greedy rule to select the next population between the stopover and the artificial-organism population:

\[\varvec{X}_{i,G+1}=\begin{cases}\varvec{s}_{i,G}^{\prime}, & f\left(\varvec{s}_{i,G}^{\prime}\right)\le f\left(\varvec{X}_{i,G}\right)\\ \varvec{X}_{i,G}, & \text{otherwise}\end{cases}\qquad (5)\]

where \(f( \varvec{s}^{\prime}_{i,G})\) and \(f\left( \varvec{X}_{i,G} \right)\) represent the evaluations of the newly discovered and the currently best stopover site. The cycle between equations (3) and (5) continues until a stopping criterion is satisfied.
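The component-wise mixing and the greedy selection can be sketched in a few lines. The convention that a flag of 0 takes the mutant component and a flag of 1 keeps the target component is an assumption made for this example, and the way the 0/1 flags are generated from \({p}_{1}\) and \({p}_{2}\) is deliberately left out. The toy cost function only stands in for the error energy.

```python
def trial_vector(target, mutant, r):
    """Take the mutant component where r == 0, keep the target where r == 1."""
    return [s if flag == 0 else x for x, s, flag in zip(target, mutant, r)]

def greedy_select(target, trial, cost):
    """Keep the trial only if it is at least as good as the target."""
    return trial if cost(trial) <= cost(target) else target

def cost(v):
    """Toy cost with its minimum at (3, -1); stands in for the error energy E."""
    return (v[0] - 3.0) ** 2 + (v[1] + 1.0) ** 2

target = [0.0, 0.0]
mutant = [2.5, 4.0]
trial = trial_vector(target, mutant, r=[0, 1])   # first component from mutant
survivor = greedy_select(target, trial, cost)    # trial is better, so it survives
```

Because a trial vector replaces its target only when the cost does not increase, the best cost in the population can never get worse from one epoch to the next.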

In both theoretical and field cases, two stopping criteria were used in the optimization scheme: the algorithm was terminated when the cost function value obtained for each epoch reached a specified threshold, or when the cycle reached the predefined number of epochs (i.e., \({G}_{\mathrm{max}}\)). It should be noted that the threshold value is 1e–5 nT and the \({G}_{\mathrm{max}}\) values for theoretical anomalies with one and two isolated dykes are 500 and 1000, respectively. These anomalies were also contaminated by zero-mean pseudo-random numbers with a standard deviation (\({SD}\)) of 25 and 30 nT, respectively. Their noise content was taken into account as a stopping criterion in noisy cases. In field cases, the algorithm was terminated at the end of the \({G}_{{max}}\) of 250.

2.2 Forward modelling

The algorithm explained in detail above was implemented to interpret magnetic anomalies caused by infinitely long magnetized thick dyke-shaped bodies. The magnetic anomaly at any point \(\left({x}_{i}\right)\) on the principal profile of a thick 2D dyke model can be expressed as follows (Venkata Raju 2003):

where \(d\), \(b\), and \(h\) are the surface projection midpoint, half-width, and depth to the top of the dyke, respectively, \({x}_{i}\) is the position of the \(i\)th magnetic measurement point (\(i=1,2,3,\ldots ,N\)), and \(N\) denotes the number of observation points, while \(M\) and \(c\) represent the regional slope and the background level, respectively. Taking into account the components of the Earth's magnetic field, namely the total (\({{\varDelta}} T\)), vertical (\({{\varDelta}}V\)), and horizontal (\({{\varDelta}} H\)), the details of the amplitude coefficient (\(P\)) and the index parameter (\(Q\)) are also given in table 1 (Venkata Raju 2003). In these equations, \({I}_{0}\) denotes the inclination of the Earth's magnetic field intensity \((T)\), while \({J}_{0}\) and \(a\) are the inclination and declination of the resultant magnetization (\(J\)) and \(\alpha \) is the profile azimuth. Besides, \(K\) represents the magnetic susceptibility contrast between the body and the medium, and \({I}^{1}\) \(\left(\mathrm{tan}\,{I}^{1}=\mathrm{tan}\,{I}_{0}/\mathrm{cos}\,\alpha \right)\) and \({J}^{1}\) \(\left(\mathrm{tan}\,{J}^{1}=\mathrm{tan}\,{J}_{0}/\mathrm{cos}\,\alpha \right)\) are the effective inclinations of the induced and the resultant field, respectively (Hood 1964). Lastly, \(\delta \) denotes the dip of the thick dyke, which varies between 0° and 180°, and \(\beta \) is equal to \(\mathrm{sin}\left(\delta \right)\). It must also be noted that \({J}_{0}={I}_{0}\), \({J}^{1}={I}^{1}\), and \(a=\alpha \) for induced magnetization. A plan view of a 2D magnetized dyke in a Cartesian coordinate system is given in figure 2(a). The X and Y axes lie along the magnetic profile and the strike of the body (\({D}_{\mathrm{str}}=90^\circ -\alpha \)), respectively.

(a) A plan view of the magnetic polarization of dyke. (b) Bottom panel shows a vertical section view of inclined dykes with dip angles between 15° and 90°. Net polarization vector, \(J\) with inclination, \({J}_{0}\) is also shown in the inset, and the computed magnetic anomalies are presented in the top panel (\({I}_{0}=15^\circ \), \(\alpha =0^\circ \), \(M=c=0\)).

Further information on the formulations and notations used can be found in both Hood (1964) and Venkata Raju (2003). Here, equation (6) above represents the forward part of the current inverse modelling study. Figure 2(b) shows computed magnetic anomalies for dykes with dip angles of 15°–90° in 15° steps and a cross-sectional view of a thick dyke. It is also obvious that the calculation of \(P\) and \(Q\) in table 1 requires various additional information such as \(K\), \(T\) and \({I}_{0}\) from the survey to obtain the \(P\) value. Since the exact values of these parameters are generally not available in the researchers' works, the inverse modelling of the current study is mainly aimed at estimating the unknown dyke parameters including burial depth (\(h\)), half-width (\(b\)), surface projection midpoint (\(d)\), dip angle (\(\delta \)) and amplitude coefficient (\(P)\).
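To make the forward step concrete, the sketch below evaluates one commonly quoted closed form for the anomaly of a thick dyke with infinite depth extent. It is an assumption and not necessarily identical to equation (6): authors differ in how the sine and cosine of \(Q\) are assigned to the arctangent and logarithmic terms and in the sign conventions. \(Q\) is passed in directly because its relation to the dip and the field inclination is given in table 1, which is not reproduced here. The function name `dyke_anomaly` and the choice `Q_deg = 0` are hypothetical.

```python
import math

def dyke_anomaly(x, P, Q_deg, h, b, d, M=0.0, c=0.0):
    """Anomaly (nT) at profile position x (m) over a thick dyke.

    ASSUMED form: P * [cos Q * (angle subtended by the top of the dyke)
                       + sin Q / 2 * ln(r1^2 / r2^2)] + M * x + c.
    """
    q = math.radians(Q_deg)
    xp, xm = x - d + b, x - d - b          # offsets from the two top edges
    angle = math.atan2(xp, h) - math.atan2(xm, h)
    log_term = 0.5 * math.log((xp ** 2 + h ** 2) / (xm ** 2 + h ** 2))
    return P * (math.cos(q) * angle + math.sin(q) * log_term) + M * x + c

# Geometry and amplitude of theoretical model 1; Q_deg = 0 is a hypothetical choice.
xs = [float(v) for v in range(-300, 301, 10)]
profile = [dyke_anomaly(x, P=500.0, Q_deg=0.0, h=30.0, b=25.0, d=0.0) for x in xs]
```

With `Q_deg = 0` only the arctangent term remains, so the profile is symmetric about the midpoint `d`; other values of `Q_deg` mix in the antisymmetric logarithmic term and skew the anomaly.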

3 Results and discussion

To test the efficiency of the algorithm, two theoretical (figure 3) and two real magnetic anomalies (figure 4) caused by dyke-shaped bodies were used in the inverse modelling study.

3.1 Theoretical model 1

The first theoretical total-field magnetic anomaly, shown in the top panel of figure 3(a), is produced by an infinitely long dyke located at a depth of 30 m below the surface (the total length of the measurement profile is 600 m). As shown in the bottom panel of the figure, the thickness (2\(b\)) and the dip of the dyke (\(\delta \)) are 50 m and 70°, respectively, while the amplitude coefficient (\(P\)) was set to 500 nT to produce the theoretical anomaly. True model parameters to be estimated are \(h\) = 30 m, \(b\) = 25 m, \(d\) = 0 m, \(\delta \) = 70° and \(P\) = 500 nT. Other parameters for calculating the anomaly are \({I}_{0}=15^\circ \) and \(\alpha =0^\circ \). It was also assumed that there is no regional value (i.e., \(M=c=0\)). The nature of the magnetic inversion problem, the effects of the control parameters of the DSA and of the noise content on the solution, and the uncertainties in the model parameters were also investigated using this theoretical model.

3.1.1 The nature of the magnetic inverse problem

As is generally known, optimization problems in geophysics mainly exhibit ill-posed, non-linear, and non-unique characteristics, resulting in the finding of various models that fit very well with the observed anomalies. Furthermore, inverse potential field problems suffer from ambiguity and instability due to the inherent characteristics of potential fields. Therefore, regardless of the applied inversion approach, the nature of the optimization problem under consideration should be determined to clarify the resolvability characteristics of each model parameter before the inversion procedure. To achieve this aim, cost-function topography maps (Fernández-Martínez et al. 2012) are computed since the ambiguities of the parameters can be estimated from the outlines of the maps (Başokur 2001).

In the case of circular contours, the parameters are said to be uncorrelated and can be solved independently (figure 5a). In the case of elliptical contours aligned with the parameter axes, the parameters are still uncorrelated and can be solved separately. However, one of them can be estimated with lower sensitivity because it spans a relatively larger solution range (figure 5b). On the other hand, a correlation between the parameters is indicated if the contours are tilted at an angle to the parameter axes (figure 5c). In this case, the solution that can be achieved for one parameter depends on the numerical value of the other (Başokur 2001).

Error energy maps displaying (a) circular, (b) elliptical, and (c) tilted contours (adapted from Başokur 2001).

Figure 6 shows error energy maps obtained in the vicinity of each parameter couple of the first synthetic model, taking into account equation (2). The remaining three parameters were fixed at their actual values to illustrate the relationships between these parameters. Relatively narrow search spaces were used for each parameter, which can be seen from each axis of the maps. Actual values were also highlighted on each axis by bold and italic numbers. On the maps, the contours of error energy values higher than 1e−4 nT² were not displayed to avoid information overload. White contours surrounding the actual values (hollow red circles) demonstrate 1e−2 nT² and 1e−3 nT² error energy contours.

Based on these maps, the mainly circular contours seen in the first two rows of the figure indicate no correlation between the \(d-P\), \(b-d\), \(b-\delta \), \(h-d\), and \(h-\delta \) parameter couples. On the other hand, the valley-shaped elliptical contours presented in the last row of the figure for \(h-P\), \(b-h\), and \(d-\delta \) display a correlation between the parameters. Besides, their correlation is positive, as both parameters increase together. In our optimization case, the most notable error energy map was obtained for the \(b-P\) parameter couple presented in the first panel of the second row. It displays a curvilinear shape, often described as a banana or croissant, which is common in non-linear inverse problems (Fernández-Martínez et al. 2012). The map indicates that the value of \(P\) will decrease when \(b\) increases due to a negative correlation between them. Therefore, the map reveals a possible uncertainty through an equivalence region, suggesting that any \(b-P\) parameter pair lying in that region can generate cost values that are equal or fairly close to each other. Thus, it was determined from the analysis that these two parameters can be estimated only with some uncertainty for the current magnetic optimization problem.
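The trade-off between \(b\) and \(P\) can be reproduced qualitatively with a small grid search. The sketch below is a simplified illustration, not the computation behind figure 6: it assumes a reduced forward model that keeps only the arctangent term of a thick-dyke anomaly, fixes \(h\) and \(d\) at their true values, and uses hypothetical grid limits. All function and variable names are invented.

```python
import math

def unit_anomaly(x, h, b, d=0.0):
    """Arctangent term of a thick-dyke anomaly for unit amplitude (assumed form)."""
    return math.atan2(x - d + b, h) - math.atan2(x - d - b, h)

def error_energy(d_obs, d_cal):
    """Mean squared misfit (nT^2)."""
    return sum((o - c) ** 2 for o, c in zip(d_obs, d_cal)) / len(d_obs)

xs = [float(v) for v in range(-300, 301, 10)]
h_true, b_true, P_true = 30.0, 25.0, 500.0       # theoretical model 1
d_obs = [P_true * unit_anomaly(x, h_true, b_true) for x in xs]

b_axis = [20.0 + 2.5 * i for i in range(5)]      # 20 ... 30 m
P_axis = [400.0 + 5.0 * j for j in range(51)]    # 400 ... 650 nT

# Error energy on the b-P grid with h and d fixed at their true values.
emap = {
    (b, P): error_energy(d_obs, [P * unit_anomaly(x, h_true, b) for x in xs])
    for b in b_axis for P in P_axis
}

# For each half-width, the amplitude with the lowest error energy.
best_P = {b: min(P_axis, key=lambda P: emap[(b, P)]) for b in b_axis}
```

In this reduced setting the best-fitting amplitude falls as the half-width grows, which is the negative correlation that produces the curved valley in the \(b-P\) map.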

3.1.2 Investigating control parameters of DSA

Metaheuristics generally have control parameters that can affect the success of optimization results. Moreover, control parameters proposed by algorithm developers and widely used by researchers are not always optimal for any type of optimization problems. Although the process of parameter tuning is a time-consuming task due to the trial-and-error approach that requires various simulations and experiments, researchers should perform it to obtain better parameter estimates.

As already mentioned, DSA has two control parameters, \({p}_{1}\) and \({p}_{2}\), which are used to determine the perturbation of members in a position corresponding to an individual. Civicioglu (2012) presented the tested and most appropriate initial values for these parameters, which change randomly with each cycle, as \({p}_{{1}_{\mathrm{init}}}=0.3\times {{rand}}_{1}\) and \({p}_{{2}_{\mathrm{init}}}=0.3\times {{rand}}_{2}\). Moreover, the original work concluded that DSA’s problem-solving performance is not too sensitive to these initial values. Therefore, their effect on the solution has generally not been investigated in numerical optimization applications. Contrary to this widely accepted practice, after a detailed tuning study, Alkan and Balkaya (2018) proposed new initial values \(\left({p}_{{1}_{\mathrm{init}}}= 1\times {{rand}}_{1}, {p}_{{2}_{\mathrm{init}}}=5\times {{rand}}_{2}\right)\) to estimate the model parameters of HLEM anomalies. In the current magnetic optimization problem, both sets of initial values were used to determine whether there is any effect on the DSA convergence rate. Hereafter, the original and proposed values are referred to as Strategy-1 and Strategy-2, respectively.
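The two strategies differ only in the upper limits of the uniform draws, as the following sketch shows. The multipliers are those quoted above, while the dictionary layout and the function name `draw_control_parameters` are hypothetical.

```python
import random

# Upper limits of the uniform draws for (p1, p2), as quoted in the text.
STRATEGIES = {"Strategy-1": (0.3, 0.3), "Strategy-2": (1.0, 5.0)}

def draw_control_parameters(strategy, rng):
    """Return fresh (p1, p2) values for one epoch."""
    c1, c2 = STRATEGIES[strategy]
    return c1 * rng.random(), c2 * rng.random()

rng = random.Random(3)
p1, p2 = draw_control_parameters("Strategy-2", rng)
```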

A comparison was made using 30 independent runs of the algorithm, with each run terminating either after \({G}_{\mathrm{max}}\) epochs (i.e., 500) or on meeting a comparatively low predefined threshold (i.e., 1e−5). Furthermore, the artificial-organism consisted of 50 individuals, corresponding to ten times the number of model parameters (i.e., \(D\times 10)\). Results obtained with both strategies are summarized in table 2, together with the parameter spaces used during optimization. It is quite clear that the best performance of the DSA was achieved with the proposed initial control parameters of Strategy-2. The algorithm with Strategy-1, which uses the original and widely adopted values, yields worse statistics when compared against the true model parameters of the first theoretical noise-free case. Furthermore, none of its 30 independent runs terminated below the chosen threshold. As a result, the \(mean\) elapsed computation time per cycle almost doubled compared to the results obtained with Strategy-2. Table 2 also shows that, among the model parameters, \(P\) is estimated with relatively large \(SD\) values for both strategies. This is due to the nature of the current magnetic optimization problem, regardless of the inversion scheme used. However, Strategy-2 clearly provides more successful estimations.

Figures 7 and 8 show the convergence characteristics of both strategies on the error energy maps computed for \(h-d\) and \(b-P\) parameter couples, respectively. The maps in the first rows of figures 7(a) and 8(a) demonstrate the results of the first strategy, while the second-row maps of figures 7(b) and 8(b) show Strategy-2. Each map was generated using the results of the run that produced the best solution vector of 30 sequential independent DSA applications. As can be seen from table 2, the best solution was obtained by Strategy-2 at the end of the 389th epoch, before reaching the \({G}_{\mathrm{max}}\) value, while Strategy-1 needed 500 epochs. Consequently, Strategy-2 displays a faster convergence with good accuracy compared to Strategy-1. In the figures, the first column shows the distribution of the initial population (artificial-organism), which was randomly generated by equation (1) in step 2. On the maps, solid white contours indicate \(E\) values of 1e−2, 1e−3 and 1e−4 nT². Each individual of the artificial-organism represents a candidate solution vector. It is clear that there are only a few vectors within these contours, which are indicated by red hollow circles. Hollow green circles mark vectors with higher \(E\) values on the maps. The second and third columns of the illustrations show the solution vectors obtained at the end of the 100th and 300th epoch. Based on the candidate solution vectors seen in the error energy maps, it can be concluded that Strategy-2 finds more fruitful stopover sites than Strategy-1 during the algorithm’s evaluation cycle. This is already quite clear in the relatively early epochs (i.e., 100) of the cycle shown in the middle column of the figure. If the temporary stopovers determined by both strategies in the later epochs (i.e., 300) are considered, it can be concluded that Strategy-2 provides solutions closer to the assumed model parameters. Since the initial values proposed by Strategy-2 yield more effective results than the original ones, the control parameters of the DSA should be adjusted to the optimization problem under consideration, contrary to the commonly used assumption. Based on this conclusion, Strategy-2 was used in the following DSA applications to estimate magnetic parameters.

Evolution of randomly generated artificial-organisms in the evaluation cycle of the DSA. Error energy maps computed for h–d using (a) Strategy-1 and (b) Strategy-2. The second and third columns of the figure display the best stopover site positions that represent candidate solution vectors obtained at the end of 100th and 300th epochs.

Evolution of randomly generated artificial-organisms in the evaluation cycle of DSA. Error energy maps computed for b–P using (a) Strategy-1 and (b) Strategy-2. The second and third columns of the figure display the best stopover positions representing candidate solution vectors obtained at the end of the 100th and 300th epochs.

A comparison between the observed and the calculated noise-free magnetic anomalies based on the best results of Strategy-2 (presented in table 2) is shown in figure 9(a). Taking into account 30 consecutive independent runs of the algorithm, table 2 presents more detailed statistical results, including the \({worst}\), \({mean}\) and \({SD}\) values for estimated parameters, \(\mathrm{rms}\), and epoch values. In addition, the \(\mathrm{best}\)-estimated parameters and \(\mathrm{rms}\) values obtained from the DSA implementation are also shown in the corresponding figure. Although Strategy-1 displays sufficient mean results at the end of 500 epochs, the application of Strategy-2 provides more satisfactory estimates and results for the noise-free case. It should also be noted that the \(mean\) of 262 epochs implies fewer forward computations in the implementation. As a result, DSA exhibits a faster convergence to the global optimum when the initial control parameters of Strategy-2 are used.

3.1.3 Convergence of DSA

Figure 10(a) presents the change in error energy with the epochs for the runs that provided the best estimates from both strategies in the noise-free case. Compared to Strategy-1, Strategy-2 achieves a lower rms value before reaching the predefined \({G}_{\mathrm{max}}\) value. The remaining graphs in figure 10(b–f) show the convergence of both strategies to the correct parameter values over the epochs. Based on the results, it can be concluded that Strategy-2, which uses the proposed control parameters, converges more efficiently than Strategy-1. In addition, it nearly halves the number of epochs required for the optimal solution. This result is expected, as highlighted in the section examining the control parameters of the algorithm, and demonstrates the importance of the parameter tuning process for metaheuristics.

3.1.4 Investigating the effect of noise

As shown in figure 3(a), the anomaly was also contaminated by zero-mean pseudo-random numbers with an \(SD\) of ± 25 nT to investigate the efficiency of the proposed algorithm in the presence of noise. Results from Strategy-2 are presented in table 2, as for the noise-free case. In this analysis, only the control parameter values suggested by the results of the noise-free case were used. Furthermore, each of the 30 independent runs was terminated when the error energy value fell below the noise content added to the synthetic anomaly, in order to prevent overfitting. The DSA yielded effective parameter estimates in the presence of noise, producing \(\mathrm{rms}\) values close to the noise content. Figure 9(b) shows a comparison between the theoretical and calculated anomalies based on the best results of Strategy-2, which can be seen in the last line of table 2. Changes in the error energy with the epoch are shown in figure 11(a). The remaining graphs in figure 11(b–f) present the change of the model parameters at each epoch. The black dashed lines in the figure indicate the exact parameters, and the estimated parameters are also displayed on each graph.
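The noise contamination and the noise-based termination rule described above can be sketched as follows. This is an illustrative Python fragment under stated assumptions: the noise is taken to be Gaussian (the text only says zero-mean pseudo-random), the misfit is expressed as an rms value, the clean profile is an arbitrary placeholder curve rather than the dyke forward formula of this study, and the names `add_noise`, `rms` and `should_stop` are hypothetical.

```python
import numpy as np

def rms(observed, calculated):
    """Root-mean-square misfit between two anomaly profiles (same units as input)."""
    r = np.asarray(observed, dtype=float) - np.asarray(calculated, dtype=float)
    return float(np.sqrt(np.mean(r ** 2)))

def add_noise(anomaly, sd, seed=0):
    """Contaminate an anomaly with zero-mean Gaussian pseudo-random noise."""
    rng = np.random.default_rng(seed)
    anomaly = np.asarray(anomaly, dtype=float)
    return anomaly + rng.normal(0.0, sd, size=anomaly.size)

def should_stop(misfit, noise_level, epoch, g_max):
    """Stop when the misfit drops below the noise level or the epoch budget is spent."""
    return misfit < noise_level or epoch >= g_max

# Placeholder noise-free profile along a 600 m line (NOT the dyke forward formula)
x = np.linspace(-300.0, 300.0, 601)
clean = 500.0 * np.exp(-(x / 80.0) ** 2)
noisy = add_noise(clean, sd=25.0)
noise_rms = rms(noisy, clean)  # close to the 25 nT noise level for a long profile
```

Stopping at the noise level means that a run is not driven to reproduce the random component of the data, which is why the final rms values are expected to sit near the noise content rather than near zero.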

3.1.5 Analyzing the parameter uncertainties

Relative frequency distributions of the model parameters estimated during the optimization are presented in figure 12. To this end, all solutions from every epoch of the 30 independent runs of the DSA were logged to a file. Thus, the histogram of each model parameter contains at most 750,000 estimates (i.e., 50 × 500 × 30), obtained from the evaluation of an artificial-organism consisting of 50 individuals over 500 epochs in 30 independent runs of the algorithm. The first and second rows of the figure belong to the noise-free case of the theoretical model obtained with Strategy-1 and Strategy-2, respectively, while the last row shows the frequency distribution of the model parameters from Strategy-2 for the noisy case. All histograms are displayed between the parameter search bounds, and the actual values are marked on the histograms in the first row of the figure. Parameters with a relatively narrow estimation range in the histograms are better resolved than those with a wider range. Hence, the origin (\(d\)), dip (\(\delta \)), and depth (\(h\)) of the dyke are estimated with less uncertainty. On the other hand, the amplitude coefficient (\(P\)) and the half-width (\(b\)) of the dyke show a relatively larger range, which represents a greater uncertainty in the optimization. This finding is consistent with the analysis of the error energy maps. Likewise, the histograms of the noisy synthetic case obtained with the control parameters of Strategy-2 show similar characteristics, with a lower resolvability than in the noise-free case.

Relative frequency distributions of the parameters estimated by the DSA implementation. The histograms in the first two rows belong to the noise-free case obtained by Strategy-1 and Strategy-2, respectively. The last row shows the histograms obtained from the noise case using the proposed control parameters of Strategy-2.
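A relative frequency histogram of this kind can be computed from the logged solutions as sketched below. The Python fragment is only an illustration: the logged depth values are synthetic placeholders drawn around a hypothetical true value, the search bounds and the number of bins are assumptions, and `relative_frequency` is a hypothetical helper name.

```python
import numpy as np

def relative_frequency(samples, lower, upper, n_bins=50):
    """Histogram of logged estimates between the search bounds, normalized to sum to 1."""
    counts, edges = np.histogram(samples, bins=n_bins, range=(lower, upper))
    return counts / counts.sum(), edges

n_pop, n_epochs, n_runs = 50, 500, 30
n_logged = n_pop * n_epochs * n_runs  # at most 750,000 candidate solutions

# Placeholder log: synthetic depth estimates clustered near a hypothetical value,
# clipped to hypothetical search bounds of 5 and 50 m
rng = np.random.default_rng(1)
h_log = np.clip(rng.normal(20.0, 2.0, size=n_logged), 5.0, 50.0)
freq, edges = relative_frequency(h_log, lower=5.0, upper=50.0)
```

A parameter whose relative frequencies concentrate in a few adjacent bins corresponds to the narrow, well-resolved histograms described above, whereas a flat or multi-modal distribution indicates a poorly resolved parameter.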

3.2 The second synthetic case

To demonstrate the applicability of DSA to a problem with a larger number of model parameters, a total magnetic anomaly was produced using two isolated, infinitely long magnetized dykes at depths of 20 and 30 m below the surface (figure 3b). A noisy anomaly was also generated with zero-mean pseudo-random numbers with an \({SD}\) of ± 30 nT. As in the first synthetic case, the measurement profile is 600 m long, and the distance between the two dykes is 120 m. The dip of the first dyke is 40°, while that of the second is 30°. All model parameters of the anomaly are shown on the bottom panel of the figure. The values of \({I}_{0}=57^\circ \), \(\alpha =0^\circ \), and \(M=c=0\) were used in the computation.

This example was evaluated using Strategy-2, which provides more efficient parameter estimates than the traditional approach. Figure 13(a and c) shows a comparison between the theoretically produced magnetic anomaly (red filled circles) and the anomaly calculated (black line) from the estimates obtained by the Strategy-2 implementation of DSA for the noise-free and noisy cases of the second synthetic example. A cross-sectional view of the dykes can also be seen in figure 3(b), along with the true model parameters. In addition, table 3 presents the search space limits used during optimization and the best-estimated model parameters over 10 independent executions of DSA. In the application, the initial population consisted of 200 artificial-organisms (i.e., \(D\) × 20) and \({G}_{\mathrm{max}}\) was fixed at 1000 epochs. Furthermore, the values of 6 and 10 were used for the initial control parameters (\(p_1\) and \(p_2\)) of the DSA based on the reported results (Alkan and Balkaya 2018). Considering the estimated values given in the table and shown in the corresponding figure, one can conclude that the DSA offers one of the optimal solutions in the vicinity of the actual values in the presence of two dykes, which increases the complexity of the optimization problem. The \(\mathrm{rms}\) value of the noise-free case is 3.24e−3 nT at the end of 1000 epochs. In addition, the \({max}\), \(mean\) and \({SD}\) values of the \(\mathrm{rms}\) are 7.2e−1, 1.7e−1, and 2.24e−1 nT, respectively, over the 10 executions of the algorithm. On the other hand, the algorithm could not provide a misfit value below 1e−5 nT, which is the other stopping criterion defined in the algorithm, for the second theoretical example. However, the application of DSA with Strategy-2 yielded effective parameter estimates for the noisy case, which were obtained after an average of 238.6 epochs over 10 independent runs. Figure 13(b and d) presents the change of error energy at each epoch for the noise-free and noisy cases of the second synthetic example.
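The population size quoted above follows from the problem dimension: with five parameters per dyke (\(h\), \(b\), \(d\), \(\delta \), \(P\)) and two dykes, \(D\) = 10 and \(D\) × 20 = 200. A minimal Python sketch of drawing such an initial population uniformly inside the search bounds is given below. The bounds are hypothetical and are not the limits listed in table 3, and `init_population` is an illustrative helper rather than the implementation used in this study.

```python
import numpy as np

def init_population(lower, upper, size_factor=20, seed=0):
    """Draw size_factor * D candidate vectors uniformly inside the search bounds."""
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    n_dim = lower.size
    rng = np.random.default_rng(seed)
    return lower + rng.random((size_factor * n_dim, n_dim)) * (upper - lower)

# Hypothetical bounds for (h, b, d, delta, P) of one dyke, repeated for two dykes
one_dyke_lo = [5.0, 1.0, -300.0, 0.0, 10.0]
one_dyke_hi = [100.0, 50.0, 300.0, 90.0, 5000.0]
lower, upper = one_dyke_lo * 2, one_dyke_hi * 2

population = init_population(lower, upper)  # shape (200, 10)
```

Doubling the number of dykes doubles \(D\), so the same size factor leads to a larger population and, together with the higher \({G}_{\mathrm{max}}\), to more forward computations per run.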

Comparison of theoretical and calculated magnetic anomalies obtained from the Strategy-2 implementations of DSA for (a) noise-free and (b) noisy cases of the second synthetic example. Estimated parameters and obtained \(\mathrm{rms}\) values are also displayed in the graphs. (c, d) Change of the error energy with the epoch for both cases.

4 Field cases

Two well-known field data sets from the Marcona District (Peru) and Pima County (Arizona, US) were used to test the effectiveness of the DSA.

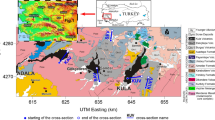

4.1 Marcona District magnetic anomaly (Peru)

Unlike the synthetic cases, the first case study involves a vertical magnetic anomaly observed near the magnetic equator, over the Marcona District of Peru (Gay 1963; Venkata Raju 2003). Although the iron mining area was discovered in 1914, it was developed after World War II as a foreign source of iron ore, and the first ore was shipped in 1953 (Gay 1966). Geophysical exploration in the area began 4 years later with a detailed low-level aeromagnetic survey and continued until 1963. This survey revealed 30 magnetic anomalies over known deposits and more than 70 newly discovered ones (Gay 1966). Figure 4(a) shows the anomaly considered here, comprising 61 data points with an interval of 20 m (Al-Garni 2015).

One of the vertical magnetic anomalies in Marcona District was inverted by the DSA using 250 epochs for each cycle of 30 independent runs. The values of \({I}_{0}=6.3^\circ \), \(\alpha =0^\circ \), and \(M=c=0\) were also used in the forward solution. Optimal values resulting in an \(\mathrm{rms}\) value of 26.6 nT are: \(h\) = 151.31 m, \(b\) = 200.61 m, \(d\) = −1.07 m, \(\delta \) = 56.91°, and \(P\) = 1782.95 nT. A comparison between the observed and calculated anomalies and the estimated parameters is presented in figure 14(a). The change in error energy with the epochs is given in figure 15(a), while the remaining graphs in the figure show changes in model parameters at each epoch.

The results obtained with the Strategy-2 implementation of the DSA were also compared with those of previous studies (table 4). Among them, Gay (1963) used the standard curve approach, while Koulomzine et al. (1970) analysed the symmetrical and asymmetrical components of the profile to interpret magnetic anomalies. Pal (1985) applied a simplified inversion scheme based on gradient analysis to the magnetic anomaly caused by an inclined and infinitely thick dyke, which does not require the approaches of Gay or Koulomzine et al. Radhakrishna Murthy (1985) obtained a depth of 135 m for the dyke using the horizontal and vertical derivative profiles of the Marcona anomaly, considering the vertical component of equation (6). More recently, Al-Garni (2015) used a neural network technique to interpret this anomaly. That approach can be regarded as more coherent than the ones summarized above, as it provides a comparison between observed and calculated anomalies. Furthermore, that study uses the same forward formula for interpreting magnetic anomalies caused by dipping dykes and reports estimates of dyke parameters such as \(h\), \(b\), and \(\delta \). As shown in table 4, the parameter search space used in that study (70–150) is narrower than that of the current investigation (50–500). The value estimated by the neural network application for the depth to the top of the dyke (i.e., \(h\)) is 130 m. This is 21.31 m less than our estimate (i.e., \(h\) = 151.31 m), which lies outside the search limits used by the neural network application. Also, the origin of the dyke (i.e., \(d\)) was estimated on the basis of Stanley's (1977) assumption, which relies on the main minimum and maximum values of the anomaly profile. Kara et al. (2017) obtained a depth of 154 m using an approach incorporating the even component of magnetic anomalies, while Essa and Elhussein (2019) retrieved a depth of 138 m from the first horizontal derivative anomalies. Lastly, the anomaly was interpreted with WOA (Gobashy et al. 2020b). Using the parameter search spaces indicated by Al-Garni, that application yields a depth estimate of 150 m, which is fairly close to our result. However, it also coincides with the upper search bound of \(h\) (i.e., 70–150). Consequently, the studies show relatively different parameter estimates and, unfortunately, no drilling result is available from the area (Gay 1963) to verify the estimates obtained. Relative frequency histograms of the estimates for each parameter provided by the DSA application are shown in figure 16. Each histogram was generated from 375,000 estimates (i.e., 50 × 250 × 30). Thus, we present the results for researchers interested in the subject.

4.2 Pima copper mine magnetic anomaly (Arizona)

Copper mining has been of crucial importance in the US state of Arizona since the 19th century. The Pima copper mines, one of the most important commercial copper ore deposits in the US, were discovered in the early 1950s by geophysical exploration using a combination of electromagnetic and vertical intensity magnetic surveys (Thurmond et al. 1954). The ore body is a metamorphic contact deposit with chalcopyrite as the main mineral. Moreover, the Laramide igneous activity is the main cause of the mineralization that occurred in the region (e.g., Shafiqullah and Langlois 1978). In this study, one of the two significant vertical magnetic anomalies observed by the geophysical survey mentioned above was selected as the second field example for the DSA application. Figure 4(b) shows a 728 m long anomaly consisting of 57 data points with a sampling interval of 13 m (Ekinci 2016). Since a drilling depth of 63.7 m is available for the area (e.g., Gay 1963), this anomaly has attracted more attention than the Marcona District magnetic anomaly (Peru) from researchers wishing to test their approaches. Some examples of interpretations can be found in Gay (1963), Abdelrahman and Sharafeldin (1996), Venkata Raju (2003), Asfahani and Tlas (2007), Tlas and Asfahani (2011, 2015), Abdelrahman and Essa (2015), Ekinci (2016), Biswas et al. (2017), Kaftan (2017) and Di Maio et al. (2020).

The Pima magnetic anomaly was inverted by the Strategy-2 approach of the DSA, with 250 epochs in each of the 30 independent runs. The values of \({I}_{0}=15^\circ \), \(\alpha =0^\circ \), and \(M=c=0\) were used to compute the anomaly. An overview of the results of the current implementation is given in table 5, which contains the estimated parameters for each run of the DSA and their statistics. Figure 14(b) shows a comparison between the observed Pima magnetic anomaly and the one calculated from the model parameters estimated in the 2nd independent run of the DSA with Strategy-2. This run also yields one of the \({min}\) \(\mathrm{rms}\) values (i.e., 7.2 nT).

Based on the table, it can be concluded that the model parameters \(b\) and \(P\) of the dyke are subject to a certain uncertainty. Among the estimated results presented, the \({min}\) and \({max}\) values of \(b\) are 2.02 and 24.892 m. Likewise, the value of \(P\) ranges between 767.122 and 9643.748 nT. Due to the negative correlation between them, as already described, inverse fluctuations are expected for the current magnetic optimization problem. Ultimately, these estimates produced \(\mathrm{rms}\) values in a narrow range between 7.2 and 8.36 nT despite the gap between the estimated values, which can also be seen in figure 17.

Representation of the estimated \(b\) values (hollow red circles) on the computed error energy map considering the Pima magnetic anomaly. Inner and outer contours with white colour refer to error energy values of 1e−3 nT\(^{2}\) and 1e−4 nT\(^{2}\), respectively. The \(\mathrm{rms}\) values as well as both \(\mathrm{min}\) and \(\mathrm{max}\) values (hollow green squares) for \(b\) estimation are also shown on the map.
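The trade-off between \(b\) and \(P\) can be made tangible with a short arithmetic check on the extreme values quoted above. The Python sketch below assumes that the smallest \(b\) pairs with the largest \(P\) and vice versa, as the negative correlation suggests; this pairing is an assumption, and table 5 should be consulted for the actual per-run pairs.

```python
# Extreme estimates of b (m) and P (nT) quoted in the text for the Pima anomaly.
# Pairing min(b) with max(P), and max(b) with min(P), is an assumption.
b_min, b_max = 2.02, 24.892
p_max, p_min = 9643.748, 767.122

product_thin = b_min * p_max    # about 19480 m nT
product_thick = b_max * p_min   # about 19095 m nT

# Relative difference between the two products, about 0.02
relative_gap = abs(product_thin - product_thick) / product_thick
```

Under this pairing, \(b\) varies by more than a factor of 12 while the product \(b \times P\) changes by only about 2%, which is in line with the narrow range of \(\mathrm{rms}\) values produced by very different \(b\) and \(P\) estimates.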

Changes in the error energy (figure 18a) and the model parameters (figure 18b–f) at each epoch are presented for the second independent run of the algorithm. Relative frequency histograms generated from the 375,000 estimates logged by the implementation are shown in figure 19. The histograms of \(b\) and \(P\) are more complex, reflecting the fluctuation between these two parameters. On the other hand, it is evident from the histograms of the remaining parameters that the DSA is robust for the parameters \(h\), \(d\), and \(\delta \) during the optimization process. Table 6 presents a short comparative list for the dyke depth (\(h\)), which is the parameter most commonly estimated in previous studies with different approaches. As is clear from table 6, the \({min}\), \({max}\) and \({mean}\) values of \(h\) estimated via DSA are 63.5, 67.7, and 66.5 m, respectively. Comparing the values in the table with the drilling result (i.e., 63.7 m) from the area (e.g., Gay 1963), we conclude that DSA provides one of the most effective estimates of \(h\). Moreover, in contrast to previous studies, the uncertainty of the current magnetic optimization problem was made explicit by considering the forward formula used in the inverse modelling study.
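The agreement with the drilling depth can be quantified as a relative error. The short Python sketch below uses only the depth values quoted in the text; the percentage figures are derived here for illustration and are not reported in the tables.

```python
drilling_depth = 63.7  # m, drilling result quoted in the text
dsa_depth = {"min": 63.5, "max": 67.7, "mean": 66.5}  # m, DSA estimates of h

# Relative deviation of each DSA depth statistic from the drilling depth, in percent
relative_error_pct = {
    name: 100.0 * abs(h - drilling_depth) / drilling_depth
    for name, h in dsa_depth.items()
}
# roughly 0.3 % (min), 6.3 % (max) and 4.4 % (mean)
```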

5 Conclusions

Because of the disadvantages of derivative-based traditional inversion schemes, metaheuristics, which provide efficient estimates without good initial parameters, have gained popularity in the scientific community, especially in the last decade. In this study, DSA, one of the recently introduced efficient population-based metaheuristics, was used to estimate model parameters of dyke-shaped bodies such as depth, half-width, origin, and dip from theoretical and field magnetic anomalies. Essentially, the algorithm imitates the migration of super-organisms through a Brownian-like random walk. Like many metaheuristics, it has the advantage of simplicity and flexibility, which provides a broad basis for solving a wide variety of optimization problems. Furthermore, it is not sensitive to initial estimates, as it randomly searches the entire parameter space during the optimization process.

Cost function maps were generated for each pair of unknown parameters. The findings showed that any pair of \(b-P\) values located in the curvilinear equivalence region can reproduce the observed data within a certain cost tolerance. Accordingly, the model parameters \(b\) and \(P\) were estimated with ambiguity. Therefore, the resolvability of the parameters should be investigated before parameter estimation studies to reveal the nature of the inverse problem.

In most cases, the selection of the control parameters of a metaheuristic is a key issue and is also problem-dependent. However, it is generally accepted that the DSA is not very sensitive to its initial control parameter values. Therefore, we investigated whether these initial values affect the DSA convergence rate for the current magnetic inverse problem. To this end, the original and commonly used initial values (Strategy-1) and those suggested by a DSA application for HLEM anomalies (Strategy-2) were taken into account. Synthetic tests were performed on a single infinitely long dyke and on two isolated dykes, and the results show that Strategy-2 is more effective than Strategy-1 in achieving good estimates. In the first synthetic case, DSA with Strategy-2 reached a lower \(\mathrm{rms}\) value (i.e., < 1e−5 nT) than Strategy-1 before the predefined \({G}_{\mathrm{max}}\) value of 500 epochs. Thus, it converged faster and with higher accuracy than the Strategy-1 approach and significantly reduced the number of epochs required for an effective solution. However, the algorithm needs more epochs (i.e., 1000) in the evaluation cycle to provide efficient parameter estimates as the problem grows larger. Consequently, the results of the two theoretical cases showed that DSA typically converges faster to the global solution with the initial parameters of Strategy-2 and solves relatively large-scale problems effectively. Therefore, this study revealed that DSA, like other effective metaheuristics such as GA, PSO and DE, needs parameter tuning to achieve successful parameter estimates.

The interpretation of two well-known case studies, involving an iron deposit (Marcona, Peru) and a copper mine (Pima, Arizona), also demonstrated the feasibility of the algorithm. Of these, the Pima County magnetic anomaly provided a more realistic assessment of the algorithm, as drilling information (i.e., 63.7 m) is available from the area. Based on the results of the model parameter estimation studies, the DSA with Strategy-2 provided a depth estimate of 63.5 m, which is consistent with the drilling data and published studies. From this, it can be concluded that DSA is a practical alternative metaheuristic approach that not only provides good randomization to escape from local minima, but also seeks a near-optimal solution.

References

Abdelrahman E M and Essa K S 2015 A new method for depth and shape determinations from magnetic data; Pure Appl. Geophys., https://doi.org/10.1007/s00024-014-0885-9.

Abdelrahman E M and Sharafeldin S M 1996 An iterative least-squares approach to depth determination from residual magnetic anomalies due to thin dikes; J. Appl. Geophys. 1 1–2, https://doi.org/10.1016/0926-9851(95)00017-8.

Abdelrahman E-SM, El-Araby T M and Essa K S 2003 A least-squares minimisation approach to depth, index parameter, and amplitude coefficient determination from magnetic anomalies due to thin dykes; Explor. Geophys., https://doi.org/10.1071/EG03241.

Abdelrahman E M, Abo-Ezz E R and Essa K S 2012 Parametric inversion of residual magnetic anomalies due to simple geometric bodies; Explor. Geophys., https://doi.org/10.1071/EG11026.

Al-Garni M A 2015 Interpretation of magnetic anomalies due to dipping dikes using neural network inversion; Arab. J. Geosci., https://doi.org/10.1007/s12517-014-1770-7.

Alkan H and Balkaya Ç 2018 Parameter estimation by differential search algorithm from horizontal loop electromagnetic (HLEM) data; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2017.12.016.

Anderson N L, Essa K S and Elhussein M 2020 A comparison study using particle swarm optimization inversion algorithm for gravity anomaly interpretation due to a 2D vertical fault structure; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2020.104120.

Asfahani J and Tlas M A 2007 A robust non-linear inversion for the interpretation of magnetic anomalies caused by faults, thin dikes and spheres like structure using stochastic algorithms; Pure Appl. Geophys., https://doi.org/10.1007/s00024-007-0254-z.

Atchuta Rao D, Ram Babu H V and Venkata Raju D Ch 1985 Inversion of gravity and magnetic anomalies over some bodies of simple geometric shape; Pure Appl. Geophys., https://doi.org/10.1007/BF00877020.

Balkaya Ç 2013 An implementation of differential evolution algorithm for inversion of geoelectrical data; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2013.08.019.

Balkaya Ç, Göktürkler G, Erhan Z and Ekinci Y L 2012 Exploration for a cave by magnetic and electrical resistivity surveys: Ayvacık Sinkhole example, Bozdağ, İzmir (western Turkey); Geophysics, https://doi.org/10.1190/geo2011-0290.1.

Balkaya Ç, Ekinci Y L, Göktürkler G and Turan S 2017 3D non-linear inversion of magnetic anomalies caused by prismatic bodies using differential evolution algorithm; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2016.10.040.

Barrows L and Rocchio J E 1990 Magnetic surveying for buried metallic objects; Ground Water Monit. R., https://doi.org/10.1111/j.1745-6592.1990.tb00016.x.

Başokur A T 2001 Inverse solution of linear and non-linear problems (in Turkish); UCTEA Chamber of Geophysical Engineers, Educational Publications No. 4, Ankara.

Başokur A T, Akça I and Siyam N W A 2007 Hybrid genetic algorithms in view of the evolution theories with application for the electrical sounding method; Geophys. Prospect., https://doi.org/10.1111/j.1365-2478.2007.00588.x.

Beiki M and Pedersen L B 2012 Estimating magnetic dike parameters using a non-linear constrained inversion technique: An example from the Sarna area, westcentral Sweden; Geophys. Prospect., https://doi.org/10.1111/j.1365-2478.2011.01010.x.

Biswas A 2017 Inversion of source parameters from magnetic anomalies for mineral/ore deposits exploration using global optimisation technique and analysis of uncertainty; Nat. Resour. Res., https://doi.org/10.1007/s11053-017-9339-2.

Biswas A and Acharya T 2016 A very fast simulated annealing method for inversion of magnetic anomaly over semi-infinite vertical rod-type structure; Model. Earth Syst. Environ., https://doi.org/10.1007/s40808-016-0256-x.

Biswas A and Sharma S 2017 Interpretation of self-potential anomaly over 2-D inclined thick sheet structures and analysis of uncertainty using very fast simulated annealing global optimisation; Acta Geodyn. Geophys., https://doi.org/10.1007/s40328-016-0176-2.

Biswas A, Parija M P and Kumar S 2017 Global non-linear optimisation for the interpretation of source parameters from total gradient of gravity and magnetic anomalies caused by thin dyke; Ann. Geophys., https://doi.org/10.4401/ag-7129.

Carbone D, Currenti G, Del Negro C, Ganci G and Napoli R 2006 Inverse modeling in geophysical applications; Commun. SIMAI Cong., https://doi.org/10.1142/9789812709394_0025.

Çaylak Ç, Göktürkler G and Sarı C 2012 Inversion of multi-channel surface wave data using a sequential hybrid approach; J. Geophys. Eng., https://doi.org/10.1088/1742-2132/9/1/003.

Civicioglu P 2012 Transforming geocentric cartesian coordinates to geodetic coordinates by using differential search algorithm; Comput. Geosci., https://doi.org/10.1016/j.cageo.2011.12.011.

Di Maio R, Rani P, Piegari E and Milano L 2016 Self-potential data inversion through a genetic-price algorithm; Comput. Geosci., https://doi.org/10.1016/j.cageo.2016.06.005.

Di Maio R, Piegari E, Rani P, Carbonari R, Vitagliano E and Milano L 2019 Quantitative interpretation of multiple self-potential anomaly sources by a global optimisation approach; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2019.02.004.

Di Maio R, Milano L and Piegari E 2020 Modeling of magnetic anomalies generated by simple geological structures through Genetic-Price inversion algorithm; Phys. Earth Planet. Inter., https://doi.org/10.1016/j.pepi.2020.106520.

Dondurur D and Ankaya Pamukçu O 2003 Interpretation of magnetic anomalies from dipping dike model using inverse solution, power spectrum and Hilbert transform methods; J. Balkan Geophys. Soc. 6 127–136.

Ekinci Y L 2016 MATLAB-based algorithm to estimate depths of isolated thin dike-like sources using higher-order horizontal derivatives of magnetic anomalies; Springerplus, https://doi.org/10.1186/s40064-016-3030-7.

Ekinci Y L 2018 Application of enhanced local wave number technique to the total field magnetic anomalies for computing model parameters of magnetised geological structures; Geol. Bull. Turkey, https://doi.org/10.25288/tjb.414015.

Ekinci Y L and Yiğitbaş E 2012 A geophysical approach to the igneous rocks in the Biga Peninsula (NW Turkey) based on airborne magnetic anomalies: Geological implications; Geodin. Acta, https://doi.org/10.1080/09853111.2013.858945.

Ekinci Y L, Balkaya Ç, Şeren A, Kaya M A and Lightfoot C 2014 Geomagnetic and geoelectrical prospection for buried archaeological remains on the upper city of Amorium, a Byzantine city in midwestern Turkey; J. Geophys. Eng., https://doi.org/10.1088/1742-2132/11/1/015012.

Ekinci Y L, Balkaya Ç, Göktürkler G and Turan S 2016 Model parameter estimations from residual gravity anomalies due to simple-shaped sources using differential evolution algorithm; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2016.03.040.

Ekinci Y L, Özyalın Ş, Sındırgı P, Balkaya Ç and Göktürkler G 2017 Amplitude inversion of the 2D analytic signal of magnetic anomalies through the differential evolution algorithm; J. Geophys. Eng., https://doi.org/10.1088/1742-2140/aa7ffc.

Ekinci Y L, Balkaya Ç and Göktürkler G 2019 Parameter estimations from gravity and magnetic anomalies due to deep-seated faults: Differential evolution versus particle swarm optimization; Turkish J. Earth Sci., https://doi.org/10.3906/yer-1905-3.

Ekinci Y L, Büyüksaraç A, Bektaş Ö and Ertekin C 2020a Geophysical investigation of Nemrut Stratovolcano (Bitlis, Eastern Turkey) through aeromagnetic anomaly analyses; Pure Appl. Geophys., https://doi.org/10.1007/s00024-020-02432-0.

Ekinci Y L, Balkaya Ç and Göktürkler G 2020b Global optimisation of near-surface potential field anomalies through metaheuristics; In: Advances in Modelling and Interpretation in Near Surface Geophysics (eds) Biswas A and Sharma S P, Series of Springer Geophysics, Springer International Publishing, pp. 155–188, ISBN: 978-3-030-28909-6.

Ekinci Y L, Balkaya Ç, Göktürkler G and Özyalın Ş 2021 Gravity data inversion for the basement relief delineation through global optimization: A case study from the Aegean Graben System, western Anatolia, Turkey; Geophys. J. Int., https://doi.org/10.1093/gji/ggaa492.

Essa K S and Elhussein M 2017 A new approach for the interpretation of magnetic data by a 2-D dipping dike; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2016.11.022.

Essa K S and Elhussein M 2018 PSO (Particle Swarm Optimization) for interpretation of magnetic anomalies caused by simple geometrical structures; Pure Appl. Geophys., https://doi.org/10.1007/s00024-018-1867-0.

Essa K S and Elhussein M 2019 Magnetic interpretation utilizing a new inverse algorithm for assessing the parameters of buried inclined dike-like geological structure; Acta Geophys., https://doi.org/10.1007/s11600-019-00255-9.

Essa K S and Elhussein M 2020 Interpretation of magnetic data through particle swarm optimization: Mineral exploration cases studies; Nat. Resour. Res., https://doi.org/10.1007/s11053-020-09617-3.

Eventov L 1997 Applications of magnetic methods in oil and gas exploration; Lead. Edge, https://doi.org/10.1190/1.1437667.

Fernández Martinez J L, Garcia Gonzalo E, Fernández Álvarez J P, Kuzma H A and Menéndez Pérez C O 2010 PSO: A powerful algorithm to solve geophysical inverse problems: Application to a 1D-DC resistivity case; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2010.02.001.

Fernández-Martínez J L, Fernández-Muñiz M Z and Tompkins M J 2012 On the topography of the cost functional in linear and nonlinear inverse problems; Geophysics, https://doi.org/10.1190/geo2011-0341.1.

Gay S P 1963 Standard curves for interpretation of magnetic anomalies over long tabular bodies; Geophysics, https://doi.org/10.1190/1.1439164.

Gay S P Jr 1966 Geophysical case history, Marcona Mining District, Peru; In: Mining Geophysics, v.1 Case Histories, Society of Exploration Geophysicists, pp. 429−447.

Gobashy M, Abdelazeem M, Abdrabou M and Khalil M H 2020a Estimating model parameters from self-potential anomaly of 2d inclined sheet using whale optimization algorithm: Applications to mineral exploration and tracing shear zones; Nat. Resour. Res., https://doi.org/10.1007/s11053-019-09526-0.

Gobashy M, Abdelazeem M and Abdrabou M 2020b Minerals and ore deposits exploration using meta-heuristic based optimization on magnetic data; Contrib. Geophys. Geodyn., https://doi.org/10.31577/congeo.2020.50.2.1.

Göktürkler G 2011 A hybrid approach for tomographic inversion of crosshole seismic first-arrival times; J. Geophys. Eng., https://doi.org/10.1088/1742-2132/8/1/012.

Göktürkler G and Balkaya Ç 2012 Inversion of self-potential anomalies caused by simple geometry bodies using global optimisation algorithms; J. Geophys. Eng., https://doi.org/10.1088/1742-2132/9/5/498.

Göktürkler G, Balkaya Ç, Ekinci Y L and Turan S 2016 Metaheuristics in applied geophysics (in Turkish); Pamukkale University J. Eng. Sci., https://doi.org/10.5505/pajes.2015.81904.

Grant F S and West G F 1965 Interpretation Theory in Applied Geophysics; McGraw-Hill Book Co., New York.

Hood P 1964 The Königsberger ratio and the dipping-dyke equation; Geophys. Prospect., https://doi.org/10.1111/j.1365-2478.1964.tb01916.x.

Kaftan İ 2017 Interpretation of magnetic anomalies using a genetic algorithm; Acta Geophys., https://doi.org/10.1007/s11600-017-0060-7.

Kara İ, Tarhan Bal O, Tekkeli A B and Karcioğlu G 2017 A different method for interpretation of magnetic anomalies due to 2-D dipping dikes; Acta Geophys., https://doi.org/10.1007/s11600-017-0019-8.

Khurana K K, Rao S V S and Pal P C 1981 Frequency domain least squares inversion of thick dike magnetic anomalies using Marquardt algorithm; Geophysics, https://doi.org/10.1190/1.1441181.

Koulomzine Th, Lamontagne Y and Nadeau A 1970 New methods for the direct interpretation of magnetic anomalies caused by inclined dikes of infinite length; Geophysics, https://doi.org/10.1190/1.1440131.

Kumar R, Bansal A R, Anand S P, Rao V K and Singh U K 2017 Mapping of magnetic basement in Central India from aeromagnetic data for scaling geology; Geophys. Prospect., https://doi.org/10.1111/1365-2478.12541.

Li Y and Oldenburg D W 1996 3-D inversion of magnetic data; Geophysics, https://doi.org/10.1190/1.1443968.

Pal P C 1985 A gradient analysis-based simplified inversion strategy for the magnetic anomaly of an inclined and infinite thick dike; Geophysics, https://doi.org/10.1190/1.1441992.

Pekşen E, Yas T, Kayman A Y and Özkan C 2011 Application of particle swarm optimisation on self-potential data; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2011.07.013.

Pekşen E, Yas T and Kıyak A 2014 1-D DC resistivity modeling and interpretation in anisotropic media using particle swarm optimisation; Pure Appl. Geophys., https://doi.org/10.1007/s00024-014-0802-2.

Power M, Belcourt G and Rockel E 2004 Geophysical methods for kimberlite exploration in northern Canada; Lead. Edge, https://doi.org/10.1190/1.1825939.

Prezzi C, Orgeira M J, Ostera H and Vásquez C A 2005 Ground magnetic survey of a municipal solid waste landfill: Pilot study in Argentina; Environ. Geol., https://doi.org/10.1007/s00254-004-1198-6.

Radhakrishna Murthy I V 1985 Magnetic interpretation of dyke anomalies using derivatives; Pure Appl. Geophys., https://doi.org/10.1007/BF00877019.

Radhakrishna Murthy I V 1990 Magnetic anomalies of two-dimensional bodies and algorithms for magnetic inversion of dykes and basement topographies; Proc. Indian Acad. Sci. (Earth Planet Sci.), https://doi.org/10.1007/BF02840316.

Radhakrishna Murthy I V, Visweswara Rao C and Gopala Krishna G 1980 A gradient method for interpreting magnetic anomalies due to horizontal circular cylinders, infinite dykes and vertical steps; Proc. Indian Acad. Sci. (Earth Planet Sci.), https://doi.org/10.1007/BF02841517.

Ram Babu H V, Subrahmanyam A S and Atchuta Rao D 1982 A comparative study of the relation figures of magnetic anomalies due to two-dimensional dike and vertical step models; Geophysics, https://doi.org/10.1190/1.1441359.

Rao B S R, Radhakrishna Murthy I V and Visweswara Rao C 1973 Two methods for computer interpretation of magnetic anomalies of dikes; Geophysics, https://doi.org/10.1190/1.1440370.

Reid J 2014 Introduction to geophysical modelling. Geophysical Inversion for Mineral Explorers, ASEG seminars, https://www.aseg.org.au/2014-geophysical-inversion.

Shafiqullah M and Langlois J D 1978 The Pima mining district Arizona – A geochronologic update; In: (eds) Callender J F, Wilt J, Clemons R E and James H L, Land of Cochise (Southeastern Arizona), New Mexico Geological Society 29th Annual Fall Field Conference Guidebook, pp. 321–327.

Sharma P V 1987 Magnetic method applied to mineral exploration; Ore Geol. Rev., https://doi.org/10.1016/0169-1368(87)90010-2.

Singh A and Biswas A 2016 Application of global particle swarm optimisation for inversion of residual gravity anomalies over geological bodies with idealised geometries; Nat. Resour. Res., https://doi.org/10.1007/s11053-015-9285-9.

Song X, Li L, Zhang X, Shi X, Huang J, Cai J, Jin S and Ding J 2014 An implementation of differential search algorithm (DSA) for inversion of surface wave data; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2014.10.017.

Soupios P, Akca I, Mpogiatzis P, Basokur A T and Papazachos C 2011 Applications of hybrid genetic algorithms in seismic tomography; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2011.08.005.

Sowerbutts W T C 1987 Magnetic mapping of the Butterton Dyke: An example of detailed geophysical surveying; J. Geol. Soc., https://doi.org/10.1144/gsjgs.144.1.0029.

Stanley J M 1977 Simplified magnetic interpretation of the geologic contact and thin dike; Geophysics, https://doi.org/10.1190/1.1440788.

Steltenpohl M G, Horton J W Jr, Hatcher R D Jr, Zietz I, Daniels D L and Higgins M W 2013 Upper crustal structure of Alabama from regional magnetic and gravity data: Using geology to interpret geophysics, and vice versa; Geosphere, https://doi.org/10.1130/GES00703.1.

Sungkono 2020 An efficient global optimization method for self-potential data inversion using micro-differential evolution; J. Earth Syst. Sci., https://doi.org/10.1007/s12040-020-01430-z.

Thurmond R E, Heinrichs W E Jr and Spaulding E D 1954 Geophysical discovery and development of the Pima mine, Pima County, Arizona: A successful exploration project; Min. Eng. 6 197−202.

Tlas M and Asfahani J 2011 Fair function minimisation for interpretation of magnetic anomalies due to thin dikes, spheres and faults; J. Appl. Geophys., https://doi.org/10.1016/j.jappgeo.2011.06.025.

Tlas M and Asfahani J 2015 The simplex algorithm for best-estimate of magnetic parameters related to simple geometric-shaped structures; Math. Geosci., https://doi.org/10.1007/s11004-014-9549-7.

Venkata Raju D Ch 2003 LIMAT: A computer program for least-squares inversion of magnetic anomalies over long tabular bodies; Comput. Geosci., https://doi.org/10.1016/S0098-3004(02)00108-5.

Won I J 1981 Application of Gauss’s method to magnetic anomalies of dipping dikes; Geophysics, https://doi.org/10.1190/1.1441192.

Acknowledgements

We thank the Associate Editor, Dr Munukutla Radhakrishna, and the anonymous reviewers for their valuable comments and constructive criticism, which helped to improve the manuscript. We also thank Dr Yunus Levent Ekinci for critically reading the manuscript.

Author information

Contributions

Çağlayan Balkaya developed the MATLAB-based software. Çağlayan Balkaya and Ilknur Kaftan designed the methodology, processed the data, discussed the inversion results, and wrote the paper.

Additional information

Communicated by Munukutla Radhakrishna

About this article

Cite this article

Balkaya, Ç., Kaftan, I. Inverse modelling via differential search algorithm for interpreting magnetic anomalies caused by 2D dyke-shaped bodies. J Earth Syst Sci 130, 135 (2021). https://doi.org/10.1007/s12040-021-01614-1

DOI: https://doi.org/10.1007/s12040-021-01614-1