Abstract

This work develops formulas for numerical integration based on spline interpolation. The new formulas are shown to be alternatives to the Newton–Cotes integration formulas. These methods have important applications in the integration of tabulated data and of discrete functions with constant steps. An error analysis of the technique was conducted. A new type of spline interpolation is proposed in which each polynomial passes through more than two tabulated points. The results show that the proposed numerical integration formulas have high precision and absolute stability. The obtained methods can be used for the integration of stiff equations. This paper opens a new field of research on numerical integration formulas using splines.

1 Introduction

Texts on numerical methods abound with formulas for numerical integration, sometimes called quadrature or mechanical quadrature [1, 2]. This is not surprising, since there are so many possibilities for selecting the base-point spacing, the degree of the interpolating polynomial, and the location of base points with respect to the interval of integration. The function f(x) to be integrated may be a known function or a set of discrete data. Many known functions do not have an integral in closed form, and in many other cases f(x) is known only as a set of discrete points; either way, an approximate numerical procedure is required to compute the integral [1, 2]. Numerical integration formulas can be developed by fitting approximating functions to discrete data and integrating the approximating function:

\[ I = \int_{a}^{b} f(x)\,dx \approx \int_{a}^{b} P(x)\,dx \]

When the function to be integrated has known values at equally spaced points (Δx = h = constant) and n is the number of points, with x taking the values x1, x2 = x1 + h, x3 = x1 + 2h, …, xn−1 = x1 + (n − 2)h, xn = x1 + (n − 1)h, a polynomial P(x) can be fit to the discrete data [2, 3]. The resulting formulas, which employ functional values at equally spaced base points, are called Newton–Cotes formulas.

The distance between the lower and upper limits of an integral is called the range of integration. The distance between any two data points is called an increment or step (Δx = h) [1, 3,4,5].

2 State of the Art on Numerical Quadrature

There is a large literature on numerical integration, also called quadrature. Of special importance are the midpoint rule and Simpson’s rule. They are simple to use and bring enormous improvements for smooth functions in low dimensions [6,7,8]. The advantage of classical quadrature methods decays rapidly with increasing dimension. This phenomenon is a manifestation of Bellman’s ‘curse of dimensionality’, with Monte Carlo versions in two classic theorems of Bakhvalov.

The trapezoid rule is based on a piecewise linear approximation. The trapezoid rule integrates correctly any function f that is piecewise linear on each segment [xi−1, xi], by using two evaluation points at the ends of the segments [9,10,11]. The midpoint rule also integrates such a function correctly using just one point in the middle of each segment. The midpoint rule has benefitted from an error cancellation. This kind of cancellation plays a big role in the development of classical quadrature methods.
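The two rules described above can be sketched in a few lines. This is a minimal illustration, not code from the paper; the function names `trapezoid` and `midpoint` and the test integrands are assumptions chosen for the example. Both rules reproduce the integral of a linear function exactly, and for f(x) = x² their errors have opposite signs, with the midpoint error the smaller of the two.

```python
def trapezoid(f, a, b, n):
    """Composite trapezoid rule with n equal segments on [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))          # end points get half weight
    for i in range(1, n):
        total += f(a + i * h)            # interior points get full weight
    return h * total


def midpoint(f, a, b, n):
    """Composite midpoint rule with n equal segments on [a, b]."""
    h = (b - a) / n
    # one evaluation in the middle of each segment; the end points are never used
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


if __name__ == "__main__":
    line = lambda x: 3.0 * x + 1.0       # exact integral on [0, 2] is 8
    print(trapezoid(line, 0.0, 2.0, 4), midpoint(line, 0.0, 2.0, 4))
    square = lambda x: x * x             # exact integral on [0, 1] is 1/3
    print(trapezoid(square, 0.0, 1.0, 4) - 1.0 / 3.0)
    print(midpoint(square, 0.0, 1.0, 4) - 1.0 / 3.0)
```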

The midpoint rule has a big practical advantage over the trapezoid rule. It does not evaluate f at either endpoint a or b. Many of the integrands to which we apply Monte Carlo methods diverge to infinity at one or both endpoints. In such cases, the midpoint rule avoids the singularity. There are numerous mathematical techniques for removing singularities [12,13,14]. When we have no such analysis of our integrand, perhaps because it has a complicated problem-dependent formulation, or because we have hundreds of integrands to consider simultaneously, then avoiding the singularity is attractive. By contrast, the trapezoid rule does not avoid the endpoints x = a and x = b. For such methods a second, less attractive principle is to ignore the singularity, perhaps by using f(xi) = 0 at any sample point xi where f is singular.

The midpoint and trapezoid rules are based on correctly integrating piecewise constant and linear approximations to the integrand. That idea extends naturally to methods that locally integrate higher order polynomials [15,16,17]. The result is much more accurate integration, at least when the integrand is smooth. The idea behind Simpson’s rule generalizes easily to higher orders. We split the interval [a, b] into panels, find a rule that integrates a polynomial correctly within a panel, and then apply it within every panel to get a compound rule.

There are two main varieties of compound quadrature rule. For open rules we do not evaluate f at the end-points of the panel. The midpoint rule is open. For closed rules we do evaluate f at the end-points of the panel [18, 19]. The trapezoid rule and Simpson’s rule are both closed. Closed rules have the advantage that some function evaluations get reused when we increase n. Open rules have a perhaps greater advantage that they avoid the ends of the interval where singularities often appear.

The trapezoid rule and Simpson’s rule use n = 2 and n = 3 points respectively within each panel. In general, one can use n points to integrate polynomials of degree n − 1, to yield the Newton–Cotes formulas, of which the trapezoid rule and Simpson’s rule are special cases [12, 20, 21]. The Newton–Cotes rule for n = 4 is another of Simpson’s rules, called Simpson’s 3/8 rule. Newton–Cotes rules with an odd number of points n have the advantage that, by symmetry, they also correctly integrate polynomials of degree n, as we saw already in the case of Simpson’s rule.
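As a small check of the symmetry argument, the sketch below applies a compound Simpson’s rule (three points per panel) to a cubic and to a quartic. The function name `simpson` and its `panels` parameter are assumptions made for this illustration. The cubic is integrated exactly even though only a second-degree polynomial is fitted in each panel, while the quartic is not.

```python
def simpson(f, a, b, panels):
    """Compound Simpson's 1/3 rule; each panel spans two steps of size h."""
    h = (b - a) / (2 * panels)
    total = f(a) + f(b)
    for i in range(1, 2 * panels):
        # odd interior points carry weight 4, even interior points weight 2
        total += (4 if i % 2 == 1 else 2) * f(a + i * h)
    return h * total / 3.0


if __name__ == "__main__":
    cubic = lambda x: x ** 3             # exact integral on [0, 2] is 4
    quartic = lambda x: x ** 4           # exact integral on [0, 2] is 6.4
    print(simpson(cubic, 0.0, 2.0, 1))
    print(simpson(quartic, 0.0, 2.0, 1))
```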

High order rules should be used with caution [22,23,24]. They exploit high order smoothness in the integrand, but can give poor outcomes when the integrand is not as smooth as they require. In particular if a genuinely smooth quantity has some mild nonsmoothness in its numerical implementation f, then high order integration rules can behave very badly, magnifying this numerical noise.

As a further caution, note that taking f fixed and letting the order n in a Newton–Cotes formula increase does not always converge to the right answer even for f with infinitely many derivatives. Lower order rules applied in panels are more robust [23,24,25]. The Newton–Cotes rules can be made into compound rules similarly to the way Simpson’s rule was compounded. When the basic method integrates polynomials of degree r exactly within panels, then the compound method has error \(O(m^{-r})\), assuming that \(f^{(r)}\) is continuous on [a, b].

The rules considered above evaluate f at equispaced points. Gauss rules instead choose the locations xi of the evaluation points together with their weights wi. The basic panel for a Gauss rule is conventionally [− 1, 1] or sometimes ℝ, and not [0, h] as we used for Newton–Cotes rules. Also the target integration problem is generally weighted. The widely used weight functions are multiples of standard probability density functions, such as the uniform, gamma, Gaussian and beta distributions [12, 24, 26]. The idea is that having f be nearly a polynomial can be much more appropriate than requiring the whole integrand f(x)w(x) to be nearly a polynomial. Choosing wi and xi together yields 2n parameters and it is then possible to integrate polynomials of degree up to 2n − 1 without error.

Unlike Newton–Cotes rules, Gauss rules of high order have non-negative weights. We could in principle use a very large n. For the uniform weighting w(x) = 1 though, we could also break the region into panels. Then for m function evaluations the error will be \(O(m^{-2n})\), assuming as usual that \(f^{(2n)}\) is continuous on [a, b]. Gauss rules for uniform weights on [− 1, 1] have the advantage that they can be used within panels.
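To make the 2n − 1 exactness claim concrete, the following sketch uses the two-point Gauss rule for the uniform weight on [− 1, 1], whose nodes are ± 1/√3 with unit weights, mapped to a general interval [a, b]. The function name `gauss2` is an assumption for this example. With n = 2 the rule is exact for cubics but not for quartics.

```python
import math


def gauss2(f, a, b):
    """Two-point Gauss rule (uniform weight) mapped from [-1, 1] to [a, b]."""
    centre = 0.5 * (a + b)
    half = 0.5 * (b - a)
    t = 1.0 / math.sqrt(3.0)             # nodes on [-1, 1] are -t and +t
    # both weights equal 1 on [-1, 1]; the factor `half` rescales to [a, b]
    return half * (f(centre - half * t) + f(centre + half * t))


if __name__ == "__main__":
    cubic = lambda x: x ** 3 + 2.0 * x ** 2 - x + 5.0   # integral on [-1, 1] is 34/3
    print(gauss2(cubic, -1.0, 1.0), 34.0 / 3.0)
    print(gauss2(lambda x: x ** 4, -1.0, 1.0), 0.4)     # not exact for degree 4
```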

Quadrature rules offer an elegant and efficient way to numerically evaluate integrals of functions from a linear space under consideration [24,25,26,27]. These rules typically require function evaluation at specific points, called nodes, and these values are multiplied by constants, called weights, to give the final value of the integral as a weighted sum.

There is an extensive number of quadrature rules, depending on the dimension (f is univariate, bivariate, multivariate), the domain shape (disc, hypercube, simplex), and the type of the linear space (polynomials, splines, rational functions, smooth non-polynomial) [12, 13, 28, 29]. For polynomial multivariate integration, the field is well studied. In the univariate case, a lot of research has been devoted to piecewise polynomials to address integration for spline spaces arising in isogeometric analysis. The so-called half-point rule for splines, which needs the minimum number of quadrature points, has been introduced. However, this rule is in general exact only over the whole real line (infinite domain).

For finite domains, one may introduce additional quadrature points which make the rule non-Gaussian (slightly suboptimal in terms of the number of quadrature points), but more importantly, it yields quadrature weights that can be negative, unlike in Gaussian quadratures. When computing Galerkin approximations, assembling mass and stiffness matrices is the bottleneck of the whole computation and efficient quadrature rules for specific spline spaces are needed to efficiently evaluate the matrix entries [12, 13, 26, 29,30,31].

In the multivariate case where spline spaces possess a tensor-product structure, univariate quadrature rules are typically used in each direction, resulting in tensor-product rules. Recently, the paradigm of mass and stiffness matrix computation has changed from the traditional element-wise assembly to a row-wise concept [32,33,34]. When building the mass matrix, one B-spline basis function of the scalar product involved is considered as a positive measure (i.e., a weight function), and a weighted quadrature with respect to that weight is computed for each matrix row. Such an approach brings significant computational savings because the number of quadrature points in each parameter dimension is independent of the polynomial degree and requires asymptotically (for a large number of elements) only two points per element. For the multivariate case that is unstructured, such as triangular meshes in 2D, however, constructing efficient quadrature rules from tensor-product counterparts is unnatural, resulting in rules that are often not symmetric even though they act on a symmetric domain [35,36,37].

Classical quadrature methods are very well tuned to one-dimensional problems with smooth integrands. A natural way to extend them to multi-dimensional problems is to write them as iterated one-dimensional integrals, via Fubini’s theorem [12, 13, 38, 39]. When we estimate each of those one-dimensional integrals by a quadrature rule, we end up with a set of sample points on a multi-dimensional grid. Unfortunately, there is a curse of dimensionality that severely limits the accuracy of this approach. This curse of dimensionality is not confined to sampling on grids formed as products of one-dimensional rules. Any quadrature rule in high dimensions will suffer from the same problem. Two important theorems of Bakhvalov make the point.

Bakhvalov’s theorem makes high-dimensional quadrature seem intractable. There is no way to beat the rate \(O(n^{-r/d})\), no matter where you put your sampling points xi or how cleverly you weight them. At first, this result looks surprising, because we have been using Monte Carlo methods, which get a root mean square error \(O(n^{-1/2})\) in any dimension. The explanation is that in Monte Carlo sampling we pick one single function f(·) with finite variance σ² and then, in sampling n uniform random points, get a root mean square error of \(\sigma n^{-1/2}\) for the estimate of that function’s integral. Bakhvalov’s theorem works in the opposite order [40]. We pick our points x1, …, xn and their weights wi. Then Bakhvalov finds a function f with r derivatives on which our rule makes a large error. When we take a Monte Carlo sample, there is always some smooth function for which we would have got a very bad answer. Such worst case analysis is very pessimistic because the worst case functions could behave very oddly right near our sampled x1, …, xn, and the worst case functions might look nothing like the ones we are trying to integrate. We can hybridize quadrature and Monte Carlo methods by using each of them on some of the variables. Hybrids of Monte Carlo and quasi-Monte Carlo methods are often used [5,6,7, 41,42,43].
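The n^(−1/2) behaviour of plain Monte Carlo for one fixed integrand can be observed empirically. The sketch below is only an illustration under stated assumptions: the integrand x² on [0, 1], the seed, and the helper names `mc_estimate` and `rmse` are all choices made here, not taken from the paper. Quadrupling n should roughly halve the root mean square error.

```python
import math
import random


def mc_estimate(f, n, rng):
    """Plain Monte Carlo estimate of the integral of f over [0, 1]."""
    return sum(f(rng.random()) for _ in range(n)) / n


def rmse(f, exact, n, reps, rng):
    """Root mean square error of mc_estimate over `reps` independent runs."""
    squared = [(mc_estimate(f, n, rng) - exact) ** 2 for _ in range(reps)]
    return math.sqrt(sum(squared) / reps)


if __name__ == "__main__":
    rng = random.Random(12345)           # fixed seed so the run is repeatable
    f = lambda x: x * x                  # exact integral on [0, 1] is 1/3
    for n in (100, 400, 1600):
        # each fourfold increase in n should roughly halve the error
        print(n, rmse(f, 1.0 / 3.0, n, 200, rng))
```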

The Laplace approximation is a classical device for approximate integration. The Laplace approximation is very accurate when log(f(x)) is smooth and the quadratic approximation is good where f is not negligible. Such a phenomenon often happens when x is a statistical parameter subject to the central limit theorem, f(x) is its posterior distribution, and the sample size is large enough for the Central Limit Theorem to apply [20, 21, 44,45,46].

The Laplace approximation is now overshadowed by Markov Chain Monte Carlo. One reason is that the Laplace approximation is designed for unimodal functions. When the distribution has two or more important modes, then the space can perhaps be cut into pieces containing one mode each, and Laplace approximations applied separately and combined, but such a process can be cumbersome. Markov Chain Monte Carlo by contrast is designed to find and sample from multiple modes, although on some problems it will have difficulty doing so. The Laplace approximation also requires finding the optimum of a d-dimensional function and working with the Hessian at the mode. In some settings that optimization may be difficult, and when d is extremely large, then finding the determinant of the Hessian can be a challenge. Finally, posterior distributions that are discrete or are mixtures of continuous and discrete parts can be handled by Markov Chain Monte Carlo but are not suitable for the Laplace approximation. The Laplace approximation is not completely superseded by Markov Chain Monte Carlo. In particular, the fully exponential version is very accurate for problems with modest dimension d and large n. When the optimization problem is tractable, it may provide a much more automatic and faster answer than Markov Chain Monte Carlo does [12, 17, 23, 31, 34, 47].

There is some mild controversy about the use of adaptive methods. There are theoretical results showing that adaptive methods cannot improve significantly over non-adaptive ones. There are also theoretical and empirical results showing that adaptive methods may do much better than non-adaptive ones. These results are not contradictory, because they make different assumptions about the problem. For a high-level survey of when adaptation helps, see [4, 47,48,49].

Sparse grids were originally developed for the quadrature of high-dimensional functions. The method is always based on a one-dimensional quadrature rule, but performs a more sophisticated combination of univariate results. However, whereas the tensor product rule guarantees that the weights of all of the cubature points will be positive if the weights of the quadrature points were positive, Smolyak’s rule does not guarantee that the weights will all be positive [39,40,41, 48].

Bayesian Quadrature is a statistical approach to the numerical problem of computing integrals and falls under the field of probabilistic numerics [42,43,44]. It can provide a full handling of the uncertainty over the solution of the integral expressed as a Gaussian Process posterior variance. It is also known to provide very fast convergence rates which can be up to exponential in the number of quadrature points n.

The problem of evaluating the integral can be reduced to an initial value problem for an ordinary differential equation by applying the fundamental theorem of calculus. For instance, the standard fourth-order Runge–Kutta method applied to the differential equation yields Simpson’s rule. Thus, in our view, numerical quadrature problems are often wrongly treated as the numerical solution of differential equations. Numerical integration is a much more general field of study, distinct from that of differential equations [50,51,52,53].
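The Runge–Kutta remark can be verified directly. For the initial value problem y′ = f(x), y(a) = 0, the right-hand side does not depend on y, so the two middle stages of the classical fourth-order method coincide and one step of size b − a reduces to Simpson’s rule with h = (b − a)/2. The sketch below is illustrative; the names `rk4_step` and `simpson_single` are assumptions.

```python
import math


def rk4_step(g, x, y, H):
    """One step of the classical fourth-order Runge-Kutta method for y' = g(x, y)."""
    k1 = g(x, y)
    k2 = g(x + H / 2.0, y + H * k1 / 2.0)
    k3 = g(x + H / 2.0, y + H * k2 / 2.0)
    k4 = g(x + H, y + H * k3)
    return y + H * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0


def simpson_single(f, a, b):
    """Simpson's 1/3 rule on one double interval [a, b] with h = (b - a) / 2."""
    h = (b - a) / 2.0
    return h * (f(a) + 4.0 * f(a + h) + f(b)) / 3.0


if __name__ == "__main__":
    f = math.exp
    # y' = f(x) ignores y, so k2 == k3 == f(midpoint) and the step is Simpson's rule
    via_rk4 = rk4_step(lambda x, y: f(x), 0.0, 0.0, 1.0)
    print(via_rk4, simpson_single(f, 0.0, 1.0))
```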

3 Newton–Cotes Numerical Integration Formulas

The Newton–Cotes formulas are shown for comparison with the new formulas obtained using splines. The closed integration formulas use information about f(x), that is, they have base points, at both limits of integration. The trapezoid rule for a single interval is obtained by fitting a first-degree polynomial to two discrete points [1, 2, 4, 26]. Simpson’s 1/3 rule is obtained by fitting a second-degree polynomial to three equally spaced discrete points. Simpson’s 3/8 rule is obtained by fitting a third-degree polynomial to four equally spaced discrete points. Boole’s rule is obtained by fitting a fourth-degree polynomial to five equally spaced discrete points. Table 1 shows Newton–Cotes closed integration formulas.

Generally, for closed formulas, where n is the number of points, ci are integer coefficients, num is the integer numerator that multiplies the step h = Δx, and den is the integer denominator, the closed integration formula is:

\[ I \approx \frac{\mathrm{num}}{\mathrm{den}}\,h \sum_{i=1}^{n} c_{i}\,y_{i} \]

In the open integration formulas, the first (y1) and last (yn) points do not appear in the formula. The open integration formulas do not require information about f(x) at limits of integration [1, 2, 4, 5, 27]. The midpoint rule for a double interval is obtained by fitting a zero-degree polynomial to three discrete points. The upper limit of integration is x3 = x1 + 2h. Table 2 shows Newton–Cotes open integration formulas.

Generally, for the open formulas, where n is the number of points, ci are the integer coefficients, num is the integer numerator that multiplies the step h = Δx, and den is the integer denominator, the open integration formula is:

\[ I \approx \frac{\mathrm{num}}{\mathrm{den}}\,h \sum_{i=2}^{n-1} c_{i}\,y_{i} \]
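The quantities num, den and ci used in the closed and open formulas can be computed rather than looked up. The sketch below, using exact rational arithmetic, integrates each Lagrange basis polynomial over the full range [x1, xn] with h = 1. It is a hedged illustration: the helper names (`poly_mul`, `poly_integral`, `newton_cotes_weights`, `as_num_den`) are assumptions, and the factorization into num, den and ci follows the description in the text rather than a layout confirmed by the paper’s tables.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def poly_mul(p, q):
    """Product of two polynomials given as coefficient lists (lowest power first)."""
    out = [Fraction(0)] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


def poly_integral(p, lo, hi):
    """Definite integral of sum(p[k] * t**k) from lo to hi."""
    return sum(c * (Fraction(hi) ** (k + 1) - Fraction(lo) ** (k + 1)) / (k + 1)
               for k, c in enumerate(p))


def newton_cotes_weights(n, closed=True):
    """Weights in units of h for n equally spaced points x1..xn (t = 0..n-1).
    Closed rules use every point; open rules skip the first and last point."""
    nodes = list(range(n)) if closed else list(range(1, n - 1))
    weights = []
    for i in nodes:
        basis = [Fraction(1)]
        for j in nodes:
            if j != i:
                # multiply by the Lagrange factor (t - j) / (i - j)
                basis = poly_mul(basis, [Fraction(-j, i - j), Fraction(1, i - j)])
        weights.append(poly_integral(basis, 0, n - 1))
    return weights


def as_num_den(weights):
    """Rewrite the weights as (num / den) * c_i with integer num, den and c_i."""
    den = reduce(lambda a, b: a * b // gcd(a, b), (w.denominator for w in weights), 1)
    ints = [int(w * den) for w in weights]
    num = reduce(gcd, ints)
    return num, den, [v // num for v in ints]


if __name__ == "__main__":
    for n in (2, 3, 4, 5):
        print("closed", n, as_num_den(newton_cotes_weights(n)))
    for n in (3, 4):
        print("open", n, as_num_den(newton_cotes_weights(n, closed=False)))
```

For n = 3 the closed case gives Simpson’s 1/3 rule and the open case gives the midpoint rule for a double interval, 2h·y2.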

In the semi-open integration formulas, the last point (yn) does not appear in the formula. In the semi-closed integration formulas, the first point (y1) does not appear in the formula [1, 2, 28,29,30]. The formula for a double interval is obtained by fitting a zero-degree polynomial to three discrete points. When n is odd, the semi-closed or semi-open integration formulas are the same as the open integration formulas. The upper limit of integration is x3 = x1 + 2h, and the integral (I) has the following formula:

\[ I = \int_{x_{1}}^{x_{3}} f(x)\,dx \approx 2h\,y_{2} \]

For three intervals, the semi-open formula is obtained by fitting a first-degree polynomial to four discrete points. The upper limit of the integral is x4 = x1 + 3h; then, the integral (I) has the following formula:

Table 3 shows Newton–Cotes semi-open integration formulas [1,2,3]. Generally, for semi-open formulas, where n is the number of points, the semi-open integration formula is:

The semi-closed integration formulas are the same as the semi-open formulas. For example, in case of n = 3:

For n = 4:

Table 4 shows Newton–Cotes semi-closed integration formulas. Generally, for semi-closed formulas, where n is the number of points, ci are integer coefficients, the semi-closed integration formula is:

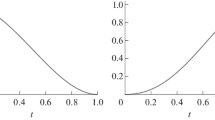

The semi-open or semi-closed formulas can be used on a type of improper integral, that is, one with a lower limit of − ∞ or an upper limit of + ∞. Such integrals can usually be evaluated by making a change of variable that transforms the infinite limit into a finite one [1,2,3, 29]. The following identity serves this purpose and works for any function that decreases toward zero at least as fast as 1/x² as x approaches infinity:

\[ \int_{a}^{b} f(x)\,dx = \int_{1/b}^{1/a} \frac{1}{t^{2}}\, f\!\left(\frac{1}{t}\right) dt \qquad (10) \]

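where ab > 0. Therefore, it can be used only when a is positive and b is ∞, or when a is − ∞ and b is negative. For cases where the limits are from − ∞ to ∞, the integral can be implemented in three steps [1,2,3,4]. For example:

\[ \int_{-\infty}^{\infty} f(x)\,dx = \int_{-\infty}^{-A} f(x)\,dx + \int_{-A}^{A} f(x)\,dx + \int_{A}^{\infty} f(x)\,dx \]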

where A is a positive number. One problem with using Eq. (10) to evaluate an integral is that the transformed function will be singular at one of the limits [1, 3, 30, 31]. The semi-open or semi-closed integration formula can be used to circumvent this dilemma as these formulas allow evaluation of the integral without employing the data at the end points of the integration range.
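A minimal sketch of this idea follows, assuming the substitution x = 1/t and a composite midpoint rule as the open formula; the names `midpoint` and `integrate_to_infinity`, the default number of segments, and the test integrand 1/(1 + x²) are choices made for this example only. Because the midpoint rule never evaluates the transformed integrand at t = 0, the division by zero at the limit is avoided.

```python
import math


def midpoint(f, a, b, n):
    """Composite midpoint rule; never evaluates f at a or b."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


def integrate_to_infinity(f, a, n=1000):
    """Integral of f from a > 0 to infinity via x = 1/t:
    it equals the integral of f(1/t) / t**2 for t from 0 to 1/a."""
    if a <= 0:
        raise ValueError("the substitution requires a > 0")
    transformed = lambda t: f(1.0 / t) / (t * t)
    return midpoint(transformed, 0.0, 1.0 / a, n)


if __name__ == "__main__":
    f = lambda x: 1.0 / (1.0 + x * x)    # integral from 1 to infinity is pi / 4
    print(integrate_to_infinity(f, 1.0), math.pi / 4.0)
```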

4 Spline Interpolation

In applying the Newton–Cotes method, (n − 1)th-order polynomials were used to interpolate between n data points. Such a curve captures all the meanderings suggested by the points. However, there are cases where these functions can lead to erroneous results because of round-off errors and overshooting. An alternative approach is to apply lower-order polynomials to subsets of the data points. These connecting polynomials are called spline functions [1, 2, 4, 31,32,33].

For example, third-order curves, which are employed to connect each pair of data points, are called cubic splines. These functions can be constructed so that the connections between adjacent cubic equations are visually smooth. On the surface, it would seem that the third-order approximation of the splines would be inferior to the higher-order expressions. You might wonder why a spline would ever be preferable. There are situations in which a spline performs better than a higher-order polynomial. This is the case where a function is generally smooth but undergoes an abrupt change somewhere in the region of interest. In this case a higher-order polynomial will tend to erratically oscillate in the vicinity of the abrupt change. In contrast, the spline also connects the points, but because it is limited to lower-order changes, the oscillations are kept to a minimum. Thus the spline usually provides a superior approximation of the behavior of functions that have local, abrupt changes [1, 2, 4, 34,35,36,37].

The concept of the spline originated from the drafting technique of using a thin, flexible strip (called a spline) to draw smooth curves through a set of points. The process is depicted in Fig. 1 for a series of five pins (data points). In this technique, the draftsman places paper over a wooden board and hammers nails or pins into the paper (and board) at the location of the data points [38,39,40,41]. A smooth cubic curve results from interweaving the strip between the pins. Hence, the name “cubic spline” has been adopted for polynomials of this type [1, 3, 4, 12].

Using this practical historical interpolation device, one could also calculate the area under the curve, by using the weight of the sand beneath the spline, as shown in Fig. 2.

In interpolation by splines, between every two points, we have a polynomial of a certain degree [1, 2, 4, 41,42,43,44]. Therefore, the interpolation is not made by a single polynomial but by many polynomials. Below, an example with 5 points and 4 third-degree polynomials forming a cubic spline is given. We can see from the equations that there are 16 unknown coefficients (a0,…,a3, b0,…,b3, c0,…,c3, d0,…,d3).

As shown in Fig. 3, the objective in using cubic splines is to derive a third-order polynomial for each interval between the knots (between two data points). Thus, for n data points (i = 1, 2,…, n), there are (n − 2) internal points, without the first and last point. There are (n − 1) intervals and (n − 1) third-order polynomials. Consequently, there are 4(n − 1) unknown constants to evaluate and thus 4n − 4 conditions are required to evaluate the unknown constants. These are as follows:

1. The function values must be equal at the interior knots (2 conditions for each internal point = 2n − 4 conditions).
2. The first and last functions must pass through the end points (2 conditions).
3. The first derivatives at the interior knots must be equal (n − 2 conditions).
4. The second derivatives at the interior knots must be equal (n − 2 conditions).
5. Two derivatives at the first or end knots are zero (2 conditions), chosen from the first to third derivatives of the first and last polynomials: \(P_{1}'(x_{1}) = 0\), \(P_{1}''(x_{1}) = 0\), \(P_{1}'''(x_{1}) = 0\), \(P_{n-1}'(x_{n}) = 0\), \(P_{n-1}''(x_{n}) = 0\) and \(P_{n-1}'''(x_{n}) = 0\).

Therefore, (2n − 4) + 2 + (n − 2) + (n − 2) + 2 = 4n − 4, is the number of conditions that is equal to the number of unknown polynomial coefficients.

The visual interpretation of condition 5 is that the function becomes a straight line at the end knots [1, 4, 43,44,45,46,47]. Specification of such an end condition leads to what is termed a “natural” spline. It is given this name because the drafting spline naturally behaves in this fashion. If the value of the second derivative at the end knots is nonzero (that is, there is some curvature), this information can be used alternatively to supply the two final conditions [1, 2, 4, 12].

Generalizing to polynomials of order g, the objective in gth-order splines is to derive a gth-order polynomial for each interval between knots (between two data points), as in

Thus, for n data points (i = 1, 2,…, n), there are (n − 2) internal points, without the first and last point. There are (n − 1) intervals and (n − 1) gth-order polynomials; consequently, (g + 1)(n − 1) unknown constants need to be evaluated. Therefore, gn + n − g − 1 conditions are required to evaluate the unknown constants. These are as follows:

1. The function values must be equal at the interior knots (2 conditions for each internal point = 2n − 4 conditions).
2. The first and last functions must pass through the end points (2 conditions).
3. The first to (g − 1) order derivatives at the interior knots must be equal ([g − 1][n − 2] conditions).
4. The (g − 1) derivatives at the first or end knots are zero (g − 1 conditions), chosen from the first to g order derivatives of the first and last polynomials: \(P_{1}'(x_{1}) = 0\), \(P_{1}''(x_{1}) = 0\), \(P_{1}'''(x_{1}) = 0\), \(P_{1}^{(4)}(x_{1}) = 0\), …, \(P_{1}^{(g)}(x_{1}) = 0\) and \(P_{n-1}'(x_{n}) = 0\), \(P_{n-1}''(x_{n}) = 0\), \(P_{n-1}'''(x_{n}) = 0\), \(P_{n-1}^{(4)}(x_{n}) = 0\), …, \(P_{n-1}^{(g)}(x_{n}) = 0\).

Therefore, (2n − 4) + 2 + (g − 1)(n − 2) + (g − 1) = gn + n − g − 1 is the number of conditions, which equals the number of unknown polynomial coefficients.
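The bookkeeping for gth-order splines can be checked mechanically. The short sketch below adds up the four groups of conditions listed above and compares the total with the (g + 1)(n − 1) unknown coefficients; the function names are assumptions made for this illustration.

```python
def spline_condition_count(n, g):
    """Total number of conditions for a gth-order spline through n points."""
    interior_values = 2 * (n - 2)            # condition 1: values at interior knots
    end_values = 2                           # condition 2: first and last points
    derivative_matches = (g - 1) * (n - 2)   # condition 3: derivatives 1..g-1 matched
    end_derivatives = g - 1                  # condition 4: derivatives set to zero at the ends
    return interior_values + end_values + derivative_matches + end_derivatives


def spline_unknown_count(n, g):
    """Number of polynomial coefficients: (g + 1) for each of the n - 1 intervals."""
    return (g + 1) * (n - 1)


if __name__ == "__main__":
    # cubic case with five points: 4n - 4 = 16 conditions and 16 coefficients
    print(spline_condition_count(5, 3), spline_unknown_count(5, 3))
```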

5 New Type of Spline Interpolation

In this new type of interpolation by splines, instead of each polynomial passing through only two points, each polynomial passes through m points, as shown in Fig. 4. At the last of its m points, one interpolating polynomial gives way to the next, and the derivatives of these two polynomials are equated at that point to give a degree of continuity to the overall curve.

Thus, at the points of change of the polynomial, the value and the successive derivatives of these interpolating polynomials are matched. The number of points (n), the number of polynomials (np), the degree of the polynomial (g), the number of points through which the polynomial passes (m), and the order of the derivatives (d) that will be equalized at the polynomial exchange points are then varied so that the number of equations always equals the number of unknown polynomial coefficients. To complete the equations, the natural conditions are used, where the successive derivatives of the first and last polynomials at the first and last points, respectively, are set equal to zero. In this way, different interpolations and different polynomials are obtained for the same data points.

The coefficients of the interpolating polynomials are obtained by solving a linear system. The equations of the linear system come from the interpolation conditions, where the polynomials pass through given points, \(P_{j}(x_{i}) = y_{i}\); from the derivative conditions, where derivatives of successive orders are equalized, \(P_{j}^{(g)}(x_{i}) = P_{j+1}^{(g)}(x_{i})\); and from the natural conditions, where the derivatives at the first and last points are set to zero, \(P_{1}^{(g)}(x_{1}) = 0\) or \(P_{np}^{(g)}(x_{n}) = 0\). The number of equations in the linear system must equal the number of polynomials (np) multiplied by the degree of the polynomials plus one (g + 1).

Final adjustments can still be made before the system of linear equations is solved: equations that contain one or more chosen points can be excluded. For example, we can remove equations that contain the first (x1,y1) or last (xn, yn) point or the middle point (xn/2,yn/2). This generates integration formulas that do not contain these points.

6 Integration of Polynomials Obtained by Spline Interpolation

Once the interpolating polynomials are obtained by splines, they must be integrated. Each polynomial is integrated over its x-range [1,2,3, 12]. Because the spacing of the abscissa x is constant (Δx = h) and the starting value x1 has no influence, the integration formulas obtained are all functions of the values of y (y1, y2, y3, y4,…, yn). The formula below shows this procedure:

Once the integration formula is obtained, it is tested on many examples, and the order of the truncation error is estimated as a function of the step Δx = h. The stability and convergence of the formula are also tested on several examples where the exact values of the integrals are known. This allows for verification and validation of the new numerical integration formulas.
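One common way to estimate the order of the truncation error from examples with known integrals is to halve the step and compare the errors: if the error behaves like C·hᵖ, the ratio of the two errors is about 2ᵖ. The sketch below applies this to compound Simpson’s rule as a stand-in for any of the formulas; it is an assumption about how such a test could be carried out, not the paper’s own procedure, and the names `simpson` and `observed_order` are hypothetical.

```python
import math


def simpson(f, a, b, panels):
    """Compound Simpson's 1/3 rule, used here only as an example formula."""
    h = (b - a) / (2 * panels)
    total = f(a) + f(b)
    for i in range(1, 2 * panels):
        total += (4 if i % 2 == 1 else 2) * f(a + i * h)
    return h * total / 3.0


def observed_order(rule, f, a, b, exact, panels):
    """Estimate p in error ~ C * h**p by halving the step once."""
    coarse = abs(rule(f, a, b, panels) - exact)
    fine = abs(rule(f, a, b, 2 * panels) - exact)
    return math.log2(coarse / fine)


if __name__ == "__main__":
    # the integral of sin(x) on [0, pi] is exactly 2
    print(observed_order(simpson, math.sin, 0.0, math.pi, 2.0, 8))
```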

7 Algorithm for Obtaining Different Integration Formulas by Spline Interpolation

An algorithm is proposed to obtain thousands of integration formulas for different interpolations by splines. A maximum of 25 points (mn = 25) was used, considering a large number of data points, to obtain integration formulas by following the steps below:

1. Set the maximum number of points to 25 (mn = 25)
2. Vary the number of points (n) from 2 to mn (for n = 2 to mn)
3. Vary the number of polynomials (np) from 1 to mn (for np = 1 to mn)
4. Vary the degree of the polynomials (g) from 0 to mn (for g = 1 to mn)
5. Vary the greater order of the derivative that will be matched in the polynomials (d) from 0 to mn (i.e., from d = 0 to mn)
6. Define the data points (x1,y1), (x2,y2), (x3,y3), …, (xn−1,yn−1), (xn,yn)
7. Set the equally spaced abscissa (Δx = h) value so that x2 = x1 + h, x3 = x1 + 2h,…, xn = x1 + (n − 1)h
8. Define the interpolating polynomials P1, P2, P3, …, Pnp
9. Calculate the number of polynomial coefficients to be obtained np(g + 1)
10. Obtain the np(g + 1) linear equations
11. Determine the number of data points (m) through which each polynomial will pass (for m = 2 to mn)
12. Obtain the linear equations using the interpolation conditions \(P_{j}(x_{i}) = y_{i}\)
13. Obtain the linear equations using the derivation conditions \(P_{j}^{(g)}(x_{i}) = P_{j+1}^{(g)}(x_{i})\)
14. Obtain the linear equations using the natural conditions \(P_{1}^{(g)}(x_{1}) = 0\) or \(P_{np}^{(g)}(x_{n}) = 0\)
15. Optionally, eliminate the equations that contain a certain point, the first (x1,y1) or last point (xn,yn) or the middle point (xn/2,yn/2)
16. Perform the test to continue if the number of equations is equal to the number of unknown polynomial coefficients
17. Solve the linear system to calculate the coefficients of the interpolating polynomials
18. Integrate the obtained polynomials
19. Obtain the formulas for numerical integration
20. Test the integration formulas obtained against known integrals
21. Estimate the truncation error of the formulas
22. Test the convergence, applicability and accuracy of the formulas
23. Select the best verified and validated integration formulas
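Steps 9 to 19 can be sketched for one parameter combination at a time. The code below is a hedged illustration under several assumptions that are not in the paper: h = 1 and x1 = 0, consecutive polynomials share their end point so that n = np(m − 1) + 1, derivatives of orders 1 to d are matched at the shared points, the natural conditions are passed explicitly as a list, and the optional point elimination of step 15 is omitted. All function names are hypothetical. Because every equation is linear in y1,…,yn, the system is solved once with the unit vectors as right-hand sides, which gives the weights of the integration formula directly.

```python
from fractions import Fraction
from math import factorial


def deriv_row(j, t, order, n_polys, g):
    """Row of the linear system: order-th derivative of polynomial j at abscissa t."""
    row = [Fraction(0)] * (n_polys * (g + 1))
    for k in range(order, g + 1):
        row[j * (g + 1) + k] = Fraction(factorial(k), factorial(k - order)) * Fraction(t) ** (k - order)
    return row


def solve(A, B):
    """Exact Gauss-Jordan elimination; returns X with A X = B (A must be nonsingular)."""
    size = len(A)
    M = [list(a) + list(b) for a, b in zip(A, B)]
    for col in range(size):
        pivot = next(r for r in range(col, size) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        M[col] = [v / M[col][col] for v in M[col]]
        for r in range(size):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[col])]
    return [row[size:] for row in M]


def spline_rule(n_polys, g, m, d, natural):
    """Weights (in units of h) of the formula obtained by integrating the spline.
    natural: list of (end, order) pairs with end equal to "first" or "last"."""
    n = n_polys * (m - 1) + 1                    # number of data points
    zero = [Fraction(0)] * n
    A, B = [], []
    for j in range(n_polys):                     # interpolation: P_j(x_i) = y_i
        for i in range(j * (m - 1), j * (m - 1) + m):
            A.append(deriv_row(j, i, 0, n_polys, g))
            B.append([Fraction(int(c == i)) for c in range(n)])
    for j in range(n_polys - 1):                 # derivative matching at shared points
        t = (j + 1) * (m - 1)
        for order in range(1, d + 1):
            left = deriv_row(j, t, order, n_polys, g)
            right = deriv_row(j + 1, t, order, n_polys, g)
            A.append([a - b for a, b in zip(left, right)])
            B.append(zero)
    for end, order in natural:                   # natural conditions at the ends
        j, t = (0, 0) if end == "first" else (n_polys - 1, n - 1)
        A.append(deriv_row(j, t, order, n_polys, g))
        B.append(zero)
    if len(A) != n_polys * (g + 1):              # step 16: equations must match unknowns
        raise ValueError("number of equations differs from number of unknowns")
    X = solve(A, B)
    weights = [Fraction(0)] * n
    for j in range(n_polys):                     # integrate each polynomial over its range
        lo, hi = j * (m - 1), (j + 1) * (m - 1)
        for k in range(g + 1):
            factor = (Fraction(hi) ** (k + 1) - Fraction(lo) ** (k + 1)) / (k + 1)
            weights = [w + factor * c for w, c in zip(weights, X[j * (g + 1) + k])]
    return weights


if __name__ == "__main__":
    # classical natural cubic spline through three points
    print(spline_rule(2, 3, 2, 2, [("first", 2), ("last", 2)]))
    # one parabola through three points
    print(spline_rule(1, 2, 3, 0, []))
```

With these assumptions, the classical natural cubic spline through three points integrates to (h/8)(3y1 + 10y2 + 3y3), and a single second-degree polynomial through three points recovers Simpson’s 1/3 rule. Looping this function over n, np, g, m and d, and discarding combinations that raise `ValueError`, would mirror the outer loops of the algorithm.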

8 Results Obtained and the Best Integration Formulas

The following tables show some of the integration formulas obtained. Tables 5, 6, 7, 8, 9 and 10 show integration formulas similar to Newton–Cotes closed formulas in increasing order of truncation error. Tables 11, 12, 13, 14, 15 and 16 show integration formulas similar to Newton–Cotes open formulas in increasing order of truncation error. Tables 17, 18, 19, 20, 21, 22, 23, 24 and 25 show integration formulas similar to Newton–Cotes semi-closed formulas in increasing order of truncation error. Tables 26 and 27 show integration formulas similar to Newton–Cotes semi-closed formulas in increasing order of truncation error; note the similarity with the previous tables of semi-closed formulas, which have the same coefficients and differ only in the index values of the y-ordinates. Tables 28, 29, 30, 31 and 32 show integration formulas similar to Newton–Cotes closed formulas with no middle point in increasing order of truncation error; note that there are no y-values for indexes n/2 or (n + 1)/2 or both. Tables 33, 34, 35, 36 and 37 show integration formulas similar to Newton–Cotes open formulas with no middle point in increasing order of truncation error; note again that there are no y-values for indexes n/2 or (n + 1)/2 or both. Tables 38, 39, 40, 41 and 42 show integration formulas similar to Newton–Cotes open formulas without two points in increasing order of truncation error; note that there are no y-values for indexes 1, 2, n − 1 and n.

9 Conclusion

Integrating the polynomials obtained by spline interpolation yielded new, previously unknown integration formulas with high-order truncation errors. Many different integration formulas, similar to the Newton–Cotes formulas, were obtained in this study. These new formulas can be used in many engineering, mathematics, and physics research applications, and they may also be compared with the Newton–Cotes formulas in different fields of science. There appears to be a strong relationship between the degree of the polynomials and the order of the truncation errors. The present article opens a new field of research to obtain numerical methods for differentiation, integration and the solution of differential equations. Another interesting observation is that spline interpolation produces a smooth fit between intervals and minimal variation in curve fitting, which can help the stability of the integration. It is believed that more new and important research on this subject can be done, given the tremendous evolution of numerical methods in engineering.
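The relationship between polynomial degree and truncation-error order can be checked empirically by halving the step and comparing errors. The sketch below does this for two classical rules, the composite trapezoidal rule (degree 1) and composite Simpson rule (degree 2), as stand-ins for the formulas of this paper. The test integrand, the interval and the function names are assumptions made only for this illustration.

```python
import math


def composite_trapezoid(f, a, b, n):
    """Composite trapezoidal rule with n equal subintervals."""
    h = (b - a) / n
    inner = sum(f(a + i * h) for i in range(1, n))
    return h * (0.5 * f(a) + inner + 0.5 * f(b))


def composite_simpson(f, a, b, n):
    """Composite Simpson rule with n equal subintervals (n must be even)."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    odd = sum(f(a + i * h) for i in range(1, n, 2))
    even = sum(f(a + i * h) for i in range(2, n, 2))
    return h / 3 * (f(a) + 4 * odd + 2 * even + f(b))


def observed_order(rule, f, a, b, exact, n):
    """Estimate p in error ~ C * h**p from the errors at steps h and h/2."""
    e1 = abs(rule(f, a, b, n) - exact)
    e2 = abs(rule(f, a, b, 2 * n) - exact)
    return math.log2(e1 / e2)


# Known integral: the integral of exp(x) over [0, 1] equals e - 1.
exact = math.e - 1.0
order_trap = observed_order(composite_trapezoid, math.exp, 0.0, 1.0, exact, 16)
order_simp = observed_order(composite_simpson, math.exp, 0.0, 1.0, exact, 16)
```

For a smooth integrand the observed orders should be close to 2 and 4 respectively. The same halving test could be applied to any formula from the tables, provided a composite version and a known exact integral are available.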

References

Chapra SC (2017) Applied numerical methods with MATLAB® for engineers and scientists, 4th edn. McGraw-Hill Education, ISBN-13: 978-0073397962

Yang WY, Cao W, Chung TS, Morris J (2005) Applied numerical methods using MATLAB®. Wiley, Hoboken

Greenspan D, Carnahan B, Luther HA, Wilkes JO (2006) Applied numerical methods. Math Comput. https://doi.org/10.2307/2004855

Canova F (2019) Methods for applied macroeconomic research. Princeton University Press, Princeton

van der Meer FP (2012) Mesolevel modeling of failure in composite laminates: constitutive, kinematic and algorithmic aspects. Arch Comput Methods Eng 19:381. https://doi.org/10.1007/s11831-012-9076-y

Davis PJ, Rabinowitz P (2007) Methods of numerical integration (Dover Books on Mathematics), 2nd edn. ISBN-13: 978-0486453392

Arthur DW, Davis PJ, Rabinowitz P (1986) Methods of numerical integration. Math Gaz. https://doi.org/10.2307/3615859

Dimov IT (2005) Monte Carlo methods for applied scientists. World Scientific Publishing Company, ISBN-13: 978-9810223298

Evans M, Swartz T (2000) Approximating integrals via Monte Carlo and deterministic methods. Oxford University Press, ISBN-13: 978-0198502784

Press WH, Teukolsky SA, Vetterling WT, Flannery BP (2007) Numerical recipes: the art of scientific computing, 3rd edn. Cambridge University Press, ISBN-13: 978-0521880688

Tierney L, Kadane JB (1986) Accurate approximations for posterior moments and marginal densities. J Am Stat Assoc. https://doi.org/10.1080/01621459.1986.10478240

Bartoň M, Kosinka J (2019) On numerical quadrature for C1 quadratic Powell–Sabin 6-split macro-triangles. J Comput Appl Math. https://doi.org/10.1016/j.cam.2018.07.051

Kosinka J, Bartoň M (2019) Gaussian quadrature for C1 cubic Clough–Tocher macro-triangles. J Comput Appl Math. https://doi.org/10.1016/j.cam.2018.10.036

Busenberg SN, Fisher D (1984) Spline quadrature formulas. J Approx Theory. https://doi.org/10.1016/0021-9045(84)90040-6

Patriarca M, Farrell P, Fuhrmann J, Koprucki T (2019) Highly accurate quadrature-based Scharfetter–Gummel schemes for charge transport in degenerate semiconductors. Comput Phys Commun. https://doi.org/10.1016/j.cpc.2018.10.004

Bailey DH, Borwein JM (2011) High-precision numerical integration: progress and challenges. J Symb Comput. https://doi.org/10.1016/j.jsc.2010.08.010

Skrainka BS, Judd KL (2012) High performance quadrature rules: how numerical integration affects a popular model of product differentiation. SSRN Electron J. https://doi.org/10.2139/ssrn.1870703

Reeger JA, Fornberg B (2018) Numerical quadrature over smooth surfaces with boundaries. J Comput Phys. https://doi.org/10.1016/j.jcp.2017.11.010

Aslanyan V, Aslanyan AG, Tallents GJ (2017) Efficient calculation of degenerate atomic rates by numerical quadrature on GPUs. Comput Phys Commun. https://doi.org/10.1016/j.cpc.2017.06.003

Mohammed OH, Saeed MA (2019) Numerical solution of thin plates problem via differential quadrature method using G-spline. J King Saud Univ Sci. https://doi.org/10.1016/j.jksus.2018.04.001

Burg COE (2012) Derivative-based closed Newton–Cotes numerical quadrature. Appl Math Comput. https://doi.org/10.1016/j.amc.2011.12.060

Chakrabarti A (1996) Modified quadrature rules based on a generalised mixed interpolation formula. J Comput Appl Math. https://doi.org/10.1016/S0377-0427(96)00107-0

Schoenberg IJ (1964) Spline interpolation and best quadrature formulae. Bull Am Math Soc. https://doi.org/10.1090/S0002-9904-1964-11054-5

Taghvafard H (2011) A new quadrature rule derived from spline interpolation with error analysis. World Acad Sci Eng Technol. https://doi.org/10.5281/zenodo.1070225

Karlin S (1971) Best quadrature formulas and splines. J Approx Theory. https://doi.org/10.1016/0021-9045(71)90040-2

Nouy A (2009) Recent developments in spectral stochastic methods for the numerical solution of stochastic partial differential equations. Arch Comput Methods Eng 16:251. https://doi.org/10.1007/s11831-009-9034-5

Caicedo M, Mroginski JL, Toro S et al (2018) High performance reduced order modeling techniques based on optimal energy quadrature: application to geometrically non-linear multiscale inelastic material modeling. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-018-9258-3

Badia S, Martín AF, Principe J (2018) FEMPAR: an object-oriented parallel finite element framework. Arch Comput Methods Eng 25:195. https://doi.org/10.1007/s11831-017-9244-1

Chatterjee T, Chakraborty S, Chowdhury R (2019) A critical review of surrogate assisted robust design optimization. Arch Comput Methods Eng 26:245. https://doi.org/10.1007/s11831-017-9240-5

Gleim T, Kuhl D (2019) Electromagnetic analysis using high-order numerical schemes in space and time. Arch Comput Methods Eng 26:405. https://doi.org/10.1007/s11831-017-9249-9

Huan Z, Zhenghong G, Fang X et al (2018) Review of robust aerodynamic design optimization for air vehicles. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-018-9259-2

Mengaldo G, Wyszogrodzki A, Diamantakis M et al (2018) Current and emerging time-integration strategies in global numerical weather and climate prediction. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-018-9261-8

Rozema W, Verstappen RWCP, Veldman AEP et al (2018) Low-dissipation simulation methods and models for turbulent subsonic flow. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-018-09307-7

Scalet G, Auricchio F (2018) Computational methods for elastoplasticity: an overview of conventional and less-conventional approaches. Arch Comput Methods Eng 25:545. https://doi.org/10.1007/s11831-016-9208-x

Rodríguez JM, Carbonell JM, Jonsén P (2018) Numerical methods for the modelling of chip formation. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-018-09313-9

Marussig B, Hughes TJR (2018) A review of trimming in isogeometric analysis: challenges, data exchange and simulation aspects. Arch Comput Methods Eng 25:1059. https://doi.org/10.1007/s11831-017-9220-9

Meier C, Popp A, Wall WA (2019) Geometrically exact finite element formulations for slender beams: Kirchhoff–Love theory versus Simo–Reissner theory. Arch Comput Methods Eng 26:163. https://doi.org/10.1007/s11831-017-9232-5

Nodargi NA (2018) An overview of mixed finite elements for the analysis of inelastic bidimensional structures. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-018-9293-0

Meyghani B, Awang M (2019) A comparison between the flat and the curved friction stir welding (FSW) thermomechanical behaviour. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-019-09319-x

Laurent L, Le Riche R, Soulier B et al (2019) An overview of gradient-enhanced metamodels with applications. Arch Comput Methods Eng 26:61. https://doi.org/10.1007/s11831-017-9226-3

Fraz MM, Badar M, Malik AW et al (2018) Computational methods for exudates detection and macular edema estimation in retinal images: a survey. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-018-9281-4

Rappel H, Beex LAA, Hale JS et al (2019) A tutorial on Bayesian inference to identify material parameters in solid mechanics. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-018-09311-x

Moreno-García P, dos Santos JVA, Lopes H (2018) A review and study on Ritz method admissible functions with emphasis on buckling and free vibration of isotropic and anisotropic beams and plates. Arch Comput Methods Eng 25:785. https://doi.org/10.1007/s11831-017-9214-7

Borzacchiello D, Aguado JV, Chinesta F (2019) Non-intrusive sparse subspace learning for parametrized problems. Arch Comput Methods Eng 26:303. https://doi.org/10.1007/s11831-017-9241-4

Fambri F (2019) Discontinuous Galerkin methods for compressible and incompressible flows on space–time adaptive meshes: toward a novel family of efficient numerical methods for fluid dynamics. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-018-09308-6

Ramírez L, Nogueira X, Ouro P et al (2018) A higher-order chimera method for finite volume schemes. Arch Comput Methods Eng 25:691. https://doi.org/10.1007/s11831-017-9213-8

Zhang LW, Ademiloye AS, Liew KM (2018) Meshfree and particle methods in biomechanics: prospects and challenges. Arch Comput Methods Eng. https://doi.org/10.1007/s11831-018-9283-2

Sarmavuori J, Särkkä S (2019) Numerical integration as a finite matrix approximation to multiplication operator. J Comput Appl Math. https://doi.org/10.1016/j.cam.2018.12.031

Magalhães Cristina, Junior Pedro (2010) Higher-order Newton–Cotes formulas. J Math Stat. https://doi.org/10.3844/jmssp.2010.193.204

Miclăuş D, Pişcoran LI (2019) A new method for the approximation of integrals using the generalized Bernstein quadrature formula. Appl Math Comput. https://doi.org/10.1016/j.amc.2018.08.008

Liu G, Xiang S (2019) Clenshaw–Curtis-type quadrature rule for hypersingular integrals with highly oscillatory kernels. Appl Math Comput. https://doi.org/10.1016/j.amc.2018.08.004

Grylonakis ENG, Filelis-Papadopoulos CK, Gravvanis GA, Fokas AS (2019) An iterative spatial-stepping numerical method for linear elliptic PDEs using the unified transform. J Comput Appl Math. https://doi.org/10.1016/j.cam.2018.11.025

Sam CN, Hon YC (2019) Generalized finite integration method for solving multi-dimensional partial differential equations. Eng Anal Bound Elem. https://doi.org/10.1016/j.enganabound.2018.11.012

Acknowledgements

The authors thank the Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq, “National Council for Scientific and Technological Development”) and the Fundação de Amparo à Pesquisa de Minas Gerais (FAPEMIG, “Foundation for Research Support of Minas Gerais”). This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Cite this article

Magalhaes, P.A.A., Junior, P.A.A.M., Magalhaes, C.A. et al. New Formulas of Numerical Quadrature Using Spline Interpolation. Arch Computat Methods Eng 28, 553–576 (2021). https://doi.org/10.1007/s11831-019-09391-3

DOI: https://doi.org/10.1007/s11831-019-09391-3