Abstract

In this paper, recent developments in mesolevel modeling of failure in composite laminates are reviewed. The complexity of failure processes in composite laminates creates a need for reliable computational tools that can predict strength and damage tolerance. In mesolevel modeling, where individual layers are modeled separately but individual fibers are not, different failure processes are distinguished, such as delamination, fiber failure and matrix failure. This paper addresses the question of how these different processes should be treated for efficient and realistic computational modeling of failure. The development that is central in this review is the use of the extended finite element method (XFEM) for matrix cracks. Much attention is also paid to algorithmic aspects of implicit analysis of complex failure mechanisms, particularly but not exclusively in relation to XFEM. Furthermore, the remaining limitations and challenges for mesolevel analysis of composite failure are discussed.

1 Introduction

Composite laminates are attractive engineering materials because of their high strength and stiffness combined with low weight. They are increasingly applied in the aircraft, automotive and wind turbine industries, and they have potential for use in slender structures in civil and building engineering. Different composite designs exist, such as woven, braided and non-crimp fabric composites, but the relatively traditional laminate made of unidirectional plies remains a dominant form. Yet, limitations in the understanding and modeling of the behavior of these composite laminates slow down their adoption in engineering practice.

The advantageous properties of composite laminates stem partially from the same feature that makes their behavior challenging to understand and predict, namely the multiscale nature of the material (see Fig. 1). The composite is made of stiff and strong fibers (e.g. glass, carbon or aramid) and a thermoset or thermoplastic resin material, the matrix. In laminates, the fibers are straight and long and have a fixed orientation within each layer or ply. The laminate is then formed by stacking a number of plies with different fiber orientations, such that a material is obtained with directional properties that suit the application. Because it is the fibers that are stiff and strong, the design is optimal when the load is carried by the fibers. The function of the matrix material is merely to keep the fibers in place and thereby to compensate for the poor bending resistance of individual fibers and fiber bundles.

Optimal use of composites is held back because reliable prediction of their damage tolerance and strength is still challenging. As a consequence, the safety of a composite structure under given load conditions can only be ensured with many expensive and laborious tests or with high safety factors. For example, the number of tests on components and structural parts required to achieve safety certification of a typical large composite airframe is of the order of 10,000 [1]. If more reliable computational analysis were possible, physical tests could partially be replaced with simulations, or virtual tests. Moreover, efficient computational tools could help the material researcher improve the material and give the engineer more freedom to optimize the design. Reliability and efficiency are two goals for the developer of numerical methods that are typically at odds with one another, and it is a major challenge to formulate computational models with which reliable results can be obtained quickly.

The main complicating aspect for laminate failure analysis is that different processes may occur during failure (see e.g. Fig. 2). Plasticity and cracking of the matrix material may occur, as well as fracture of fibers in tension or kinking of fibers in compression, possibly accompanied by debonding in the fiber-matrix interface. Matrix failure is classified as delamination when it occurs between the plies or as matrix cracking or ply splitting when the crack is oriented through the thickness of the ply. Here, the term ‘transverse matrix cracking’ is generally used for cracks oriented perpendicular to the load direction and ‘splitting’ for cracks in load direction. Reliable failure analysis of a composite structure must include a representation of each of the possible processes as well as their interaction. For most purposes, prediction of the onset of failure processes is not sufficient, because initial local failure does not necessarily lead to loss of integrity of the structure. Especially after matrix failure, the load bearing capacity of the composite is not immediately exhausted, since stress can be redistributed over the fibers. Therefore, failure analysis has to be ‘progressive’, i.e. the progression of failure through the material should be simulated.

Laminate analysis can be performed on three different scales (see Fig. 1). On the microlevel, the composite material is considered in most detail, with distinction between individual fibers and the matrix material. On the mesolevel, each ply is homogenized to an orthotropic material in which the fiber direction is implicitly present in the ply properties. On the macrolevel, a single equivalent material is used, for which the laminate properties are obtained with through-thickness homogenization, e.g. with classical lamination theory [2]. Macrolevel computational analysis of failure has been done by Williams et al. [3]. However, through-thickness homogenization only remains valid in failure analysis when cracks cut through the entire thickness of the laminate; delamination is naturally excluded from macrolevel analysis, which limits the applicability of this approach. Microlevel computational analysis of failure, on the other hand, has been done by González and LLorca for both fiber failure [4] and transverse failure [5, 6]. Microlevel simulations are important for understanding the mechanical behavior of composites during failure, but their computational cost soon becomes limiting. Nevertheless, the need to incorporate the microlevel failure mechanisms in a multiscale framework is often stressed [1, 7–10]; the idea is to couple models from different scales, such that detailed analysis is performed locally to provide information for a global coarse-scale analysis. To meet this need, sequential multiscale models for composite materials have been proposed in recent years by different authors [11–14], involving a priori homogenization of lower-scale results to generate input for higher-scale simulations. The ideal, however, would be fully coupled multiscale failure analysis with concurrent simulations on different scales.
For this, great care is needed in formulating the microscale model and the coupling in order to avoid the pathological behavior that is particular to failure analysis [15]. A promising framework for multiscale failure analysis has recently been developed by Nguyen et al. [16], but it has not yet been applied to composites. Moreover, because the computational costs associated with multiscale analysis remain tremendous, tools for monoscale analysis will remain useful.

In this paper, the focus is on mesolevel analysis. The benefit of the mesolevel is that all relevant failure processes can be described, but the challenge is to represent the micromechanical behavior realistically in the mesolevel idealization. This challenge will receive attention both in the review of recent methods and in the discussion of their limitations. Before going into detail about the computational modeling of failure in laminates, Sect. 2 gives an overview of basic concepts and notation from the two fields on which the discussed research builds: numerical methods on the one hand and composite materials on the other. Subsequently, computational modeling of delamination is discussed in Sect. 3. In Sect. 4, the possibility of modeling ply failure with a continuum approach is explored and discarded. An alternative approach, in which a discrete representation of matrix cracks is central, is presented in the longer Sect. 5. There, the formulation of a discrete model for matrix cracks is discussed, as well as the way it interacts with formulations for the other failure processes. Besides the assessment of kinematic and constitutive models for the different failure processes, this paper deals with the algorithmic treatment of those models in implicit analysis. The competition and interaction between the different failure processes and the brittleness of composite laminates endanger the stability of the iterative procedure with which the solution for each time step is found. The meticulous algorithmic treatment that is necessary for implicit analysis of cases with complex failure mechanisms is described in detail in Sect. 6. Numerical results obtained with the finite element framework for failure analysis described in Sects. 5 and 6 are presented and discussed in Sect. 7.

2 Preliminaries

In this section the basic concepts and notations used in the paper are briefly introduced: the nonlinear finite element method, computational failure analysis, mechanics of composite laminates and failure theories for composites.

2.1 The Nonlinear Finite Element Method

Models for progressive failure in composites are generally embedded in the framework of the finite element method [17, 18]. The main emphasis in this paper lies on methods for implicitly solving the quasi-static equilibrium equation, which means that the momentum balance is solved with inertia terms neglected. Many of the material models can also be used in explicit finite element analysis, but the primary concern here is their suitability for implicit analysis.

The fundamental unknown in the considered finite element techniques is the displacement field. In each time step, the displacement field that satisfies equilibrium as well as the essential boundary conditions is approximated by solving the discretized weak form of the momentum balance or equilibrium equation, which is written as a set of equations

$$ \mathbf{f}^{\mathrm{int}}(\mathbf{a}) = \mathbf{f}^{\mathrm{ext}} $$(1)

where the external force vector \(\mathbf{f}^{\mathrm{ext}}\) represents external loading, and the internal force vector \(\mathbf{f}^{\mathrm{int}}\) is a function of the displacement field.

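In Eq. (1), the order of the problem has been reduced by discretizing the displacement field with a finite set of degrees of freedom and an equally sized set of shape functions. The shape functions are defined such that the degrees of freedom can be interpreted as nodal displacements. The nodes are defined in a mesh that divides the problem domain into elements. With the shape function matrix N and nodal displacement vector a, the displacement field \(\mathbf{u}^{\mathrm{T}}=\{u_x,u_y,u_z\}\) is expressed element-wise as:

$$ \mathbf{u}(\mathbf{x}) = \mathbf{N}(\mathbf{x})\,\mathbf{a} $$(2)

with

$$ \mathbf{N} = \begin{bmatrix} N_1 & 0 & 0 & \cdots & N_n & 0 & 0 \\ 0 & N_1 & 0 & \cdots & 0 & N_n & 0 \\ 0 & 0 & N_1 & \cdots & 0 & 0 & N_n \end{bmatrix} $$(3)

$$ \mathbf{a} = \{a_{1x}, a_{1y}, a_{1z}, \ldots, a_{nx}, a_{ny}, a_{nz}\}^{\mathrm{T}} $$(4)

where n is the number of nodes of the element, \(N_1\ldots N_n\) are the shape functions defined over the element domain and \(a_{ij}\) is the displacement of node i in direction j.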

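The strain field is defined with the strain-nodal displacement matrix B as

$$ \boldsymbol{\varepsilon}(\mathbf{x}) = \mathbf{B}(\mathbf{x})\,\mathbf{a} $$(5)

with \(\mathbf{B}(\mathbf{x})=\mathbf{L}\mathbf{N}(\mathbf{x})\) and

$$ \mathbf{L} = \begin{bmatrix} \partial/\partial x & 0 & 0 \\ 0 & \partial/\partial y & 0 \\ 0 & 0 & \partial/\partial z \\ 0 & \partial/\partial z & \partial/\partial y \\ \partial/\partial z & 0 & \partial/\partial x \\ \partial/\partial y & \partial/\partial x & 0 \end{bmatrix} $$(6)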
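Stress σ is a function of strain ε,

$$ \boldsymbol{\sigma} = \boldsymbol{\sigma}(\boldsymbol{\varepsilon}) $$(7)

which can be nonlinear and history dependent. This relation, the constitutive law, describes the material behavior. The simplest constitutive law is Hooke’s law:

$$ \boldsymbol{\sigma} = \mathbf{D}_{\mathrm{e}}\,\boldsymbol{\varepsilon} $$(8)

where \(\mathbf{D}_{\mathrm{e}}\) is the (constant) elasticity matrix, which for an isotropic material is determined by Young’s modulus and Poisson’s ratio.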
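As a concrete illustration of Hooke’s law, the sketch below builds the isotropic elasticity matrix from Young’s modulus E and Poisson’s ratio ν. It assumes the Voigt ordering {xx, yy, zz, yz, zx, xy} used for the stress vector elsewhere in this paper, with engineering shear strains; the function names are illustrative.

```python
def isotropic_elasticity_matrix(E, nu):
    """6x6 isotropic elasticity matrix D_e, Voigt order (xx, yy, zz, yz, zx, xy).

    Shear strains are assumed to be engineering strains (gamma = 2 * epsilon).
    """
    lam = E * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))  # Lame's first parameter
    G = E / (2.0 * (1.0 + nu))                      # shear modulus
    D = [[0.0] * 6 for _ in range(6)]
    for i in range(3):
        for j in range(3):
            D[i][j] = lam
        D[i][i] = lam + 2.0 * G
    for i in range(3, 6):
        D[i][i] = G
    return D


def hooke(D, eps):
    """Linear elastic stress sigma = D_e * eps for a 6-component strain vector."""
    return [sum(D[i][j] * eps[j] for j in range(6)) for i in range(6)]
```

For example, `hooke(isotropic_elasticity_matrix(70e3, 0.3), [0, 0, 0, 0, 0, 1e-3])[5]` returns the shear stress G·γ_xy.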

With Eqs. (5) and (7), the stress field can be computed from (the history of) the nodal displacements. The left-hand side of the equilibrium equation (1) is evaluated from the stress field in a loop over the elements:

$$ \mathbf{f}^{\mathrm{int}} = \sum_{\mathrm{e}} \mathbf{M}_{\mathrm{e}} \int_{\varOmega_{\mathrm{e}}} \mathbf{B}^{\mathrm{T}} \boldsymbol{\sigma} \, \mathrm{d}\varOmega $$(9)

where \(\varOmega_{\mathrm{e}}\) is the element domain and \(\mathbf{M}_{\mathrm{e}}\) maps the element vector to the corresponding entries in the global vector. The fact that the B matrix from Eq. (5) reappears in Eq. (9) is related to the Galerkin approximation method [17]. To keep the notation compact, the assembly of element integrals \([\sum_{\mathrm{e}}\mathbf{M}_{\mathrm{e}}\int_{\varOmega_{\mathrm{e}}}\ldots ]\) is from here on written as an integral over the global domain \([\int_{\varOmega}\ldots]\).

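When \(\mathbf{f}^{\mathrm{int}}(\mathbf{a})\) is nonlinear, the set of equations in (1) cannot be solved for a directly. The solution is then found iteratively with the Newton-Raphson procedure, in which a linearized system is solved in each iteration to approach the solution of the true system. The solution vector is updated in iteration j by solving

$$ \mathbf{K}_{j-1}\left(\mathbf{a}_j-\mathbf{a}_{j-1}\right) = \mathbf{f}^{\mathrm{ext}} - \mathbf{f}^{\mathrm{int}}(\mathbf{a}_{j-1}) $$(10)

where \(\mathbf{K}_{j-1}\) is the global tangent matrix evaluated at \(\mathbf{a}_{j-1}\):

$$ \mathbf{K}_{j-1} = \left.\frac{\partial \mathbf{f}^{\mathrm{int}}}{\partial \mathbf{a}}\right|_{\mathbf{a}_{j-1}} $$(11)

The update is repeated until the desired level of accuracy is obtained, after which the next time step is entered.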

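The global tangent matrix K is evaluated in a loop over the elements. For the relations above, it takes the form

$$ \mathbf{K} = \int_{\varOmega} \mathbf{B}^{\mathrm{T}} \mathbf{D}\, \mathbf{B} \, \mathrm{d}\varOmega $$(12)

where D, the material tangent, is a linearization of the constitutive law:

$$ \mathbf{D} = \frac{\partial \boldsymbol{\sigma}}{\partial \boldsymbol{\varepsilon}} $$(13)

The integrals in Eqs. (9) and (12), which are both defined over the element domain, are evaluated numerically in a loop over integration points.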
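The pieces above can be strung together for the simplest possible case: a one-dimensional bar of two-node elements, clamped at one end and loaded at the other, with a hypothetical nonlinear elastic law σ = Eε + cε³. The sketch below assembles the internal force of Eq. (9) and the tangent of Eq. (12) in an element loop and iterates Newton-Raphson on Eq. (1). All names and parameter values are illustrative assumptions; one integration point per element suffices because B is constant over a linear element.

```python
def material(eps, E=1000.0, c=5.0e5):
    """Hypothetical nonlinear elastic law: returns sigma(eps) and the tangent d sigma / d eps."""
    return E * eps + c * eps**3, E + 3.0 * c * eps**2


def solve(A, b):
    """Dense Gaussian elimination without pivoting (adequate for the SPD tangent used here)."""
    n = len(b)
    A = [row[:] for row in A]
    b = b[:]
    for k in range(n):
        for i in range(k + 1, n):
            m = A[i][k] / A[k][k]
            for j in range(k, n):
                A[i][j] -= m * A[k][j]
            b[i] -= m * b[k]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(A[i][j] * x[j] for j in range(i + 1, n))) / A[i][i]
    return x


def assemble(a, n_el, h, area):
    """Element loop for f_int (Eq. 9) and K (Eq. 12) of 2-node bar elements."""
    ndof = n_el + 1
    f_int = [0.0] * ndof
    K = [[0.0] * ndof for _ in range(ndof)]
    B = (-1.0 / h, 1.0 / h)   # constant strain-displacement row of a linear element
    w = h * area              # single integration point: weight = element volume
    for e in range(n_el):
        dofs = (e, e + 1)     # plays the role of M_e (local-to-global map)
        eps = B[0] * a[e] + B[1] * a[e + 1]
        sig, D = material(eps)
        for i in range(2):
            f_int[dofs[i]] += B[i] * sig * w
            for j in range(2):
                K[dofs[i]][dofs[j]] += B[i] * D * B[j] * w
    return f_int, K


def newton_raphson(F, n_el=4, length=1.0, area=1.0, tol=1e-10, max_iter=25):
    """Solve f_int(a) = f_ext (Eq. 1) for a bar clamped at x = 0 and loaded by F at the tip."""
    h = length / n_el
    a = [0.0] * (n_el + 1)
    f_ext = [0.0] * n_el + [F]
    for it in range(max_iter):
        f_int, K = assemble(a, n_el, h, area)
        r = [fe - fi for fe, fi in zip(f_ext, f_int)][1:]   # residual on the free dofs
        if max(abs(x) for x in r) < tol:
            return a, it
        K_free = [row[1:] for row in K[1:]]                 # remove the clamped dof 0
        for i, da in enumerate(solve(K_free, r), start=1):
            a[i] += da
    raise RuntimeError("Newton-Raphson did not converge")
```

Because the tangent is recomputed in every iteration, convergence is quadratic close to the solution; a modified scheme that keeps the initial stiffness would converge linearly instead.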

2.2 Computational Failure Analysis

In this section, some of the key concepts in computational modeling of failure of materials are reviewed. Different methods for the modeling of cracks can be divided into two categories: the continuum approach and the discontinuous approach. In the continuum approach, the crack is smeared over a band with finite width. This is conceptually appealing, because the intact material is already modeled as a continuum, and it is convenient if the failure of the material can be represented in the same model. However, the discontinuous approach, in which the crack is modeled as a jump in the continuum, does justice to the elementary notion that a crack is not just a weaker kind of material but rather a new interior boundary. Both will be briefly discussed here, as well as the necessary material parameters for failure analysis.

2.2.1 Continuum Approach

Continuum models fit directly into the finite element framework presented in Sect. 2.1, as they are implemented in the relation between stress and strain, Eq. (7).

Plasticity

The continuum nonlinear material law with the longest history in finite element modeling is based on the theory of plasticity [19]. This theory has its roots in the analysis of metals and is built on the idea that deformation can be decomposed into an elastic part and a permanent or plastic part. The basic form of the constitutive law with plasticity is

$$ \boldsymbol{\sigma} = \mathbf{D}_{\mathrm{e}} \left( \boldsymbol{\varepsilon} - \boldsymbol{\varepsilon}_{\mathrm{p}} \right) $$(14)

Typically, the plastic strain \(\boldsymbol{\varepsilon}_{\mathrm{p}}\) is unknown and computed such that the stress satisfies a certain criterion. This makes Eq. (14) an implicit set of equations, which in most cases has to be solved iteratively with so-called return mapping algorithms [20]. For modeling of failure, the stress criterion can be formulated such that the stress must vanish upon increasing plastic strain.
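In one dimension, the return mapping for Eq. (14) has a closed form: an elastic predictor followed by a plastic corrector. The sketch below assumes a linear softening modulus H < 0 and clips the yield stress at zero, so that the stress vanishes upon increasing plastic strain. The parameter values and the function name are illustrative, and the softening branch requires E + H > 0.

```python
def plastic_softening_1d(eps, state, E=1.0e4, f_t=10.0, H=-2.0e3):
    """One return-mapping step for 1D plasticity with linear softening.

    state = (eps_p, kappa): plastic strain and accumulated plastic strain.
    The yield stress f_t + H * kappa decreases with kappa (H < 0) and is
    clipped at zero. Returns (sigma, new_state).
    """
    eps_p, kappa = state
    sigma_trial = E * (eps - eps_p)                  # elastic predictor
    sigma_y = max(f_t + H * kappa, 0.0)
    f = abs(sigma_trial) - sigma_y                   # yield function at the trial state
    if f <= 0.0:
        return sigma_trial, state                    # elastic step, history unchanged
    sign = 1.0 if sigma_trial > 0.0 else -1.0
    dlam = f / (E + H)                               # enforces |sigma| = f_t + H * (kappa + dlam)
    if f_t + H * (kappa + dlam) <= 0.0:              # softening exhausted: stress-free state
        dlam = abs(sigma_trial) / E
    eps_p_new = eps_p + sign * dlam                  # plastic corrector
    return E * (eps - eps_p_new), (eps_p_new, kappa + dlam)
```

Loading to ε = 2·10⁻³ and then unloading to 1.5·10⁻³ shows the stress dropping with the initial stiffness E, the behavior of plasticity discussed below.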

Damage

A more straightforward option is offered by continuum damage theory [21]. Here, the basic idea is that the stiffness of the material decreases as a consequence of the reduction of the effective cross section when microcracks appear. The simplest formulation, assuming isotropic stiffness degradation, is written as

$$ \boldsymbol{\sigma} = (1-\omega)\,\mathbf{D}_{\mathrm{e}}\,\boldsymbol{\varepsilon} $$(15)

where ω is the damage variable, which grows from 0 to 1 during failure. Generally, the stiffness degradation is computed explicitly from the strain, which gives continuum damage an advantage over plasticity in terms of implementational simplicity and algorithmic robustness.

Practically, the main difference between plasticity and damage is in the unloading of the material. In the case of plasticity, constant \(\boldsymbol{\varepsilon}_{\mathrm{p}}\) gives unloading with the initial stiffness, while in the case of damage, constant ω gives secant unloading towards the origin (see Fig. 3).
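The damage counterpart can be sketched in the same one-dimensional setting. The sketch assumes a hypothetical linear softening branch between the strain at peak ε₀ and the ultimate strain ε_u, and a history variable κ equal to the largest strain reached so far. Because ω follows explicitly from κ, no local iterations are needed, and unloading at constant ω follows the secant to the origin, whereas plasticity unloads with the initial stiffness.

```python
def damage_linear_softening(eps, kappa_old, E=1.0e4, eps0=1.0e-3, epsu=5.0e-3):
    """Scalar isotropic damage, sigma = (1 - omega) * E * eps, with linear softening.

    kappa is the largest strain reached so far (tension-only history, an assumption).
    omega follows explicitly from kappa. Returns (sigma, omega, kappa).
    """
    kappa = max(kappa_old, eps)
    if kappa <= eps0:
        omega = 0.0
    elif kappa >= epsu:
        omega = 1.0
    else:
        # on the envelope: sigma = E * eps0 * (epsu - kappa) / (epsu - eps0)
        omega = 1.0 - (eps0 / kappa) * (epsu - kappa) / (epsu - eps0)
    return (1.0 - omega) * E * eps, omega, kappa
```

Loading to ε = 3·10⁻³ gives σ = 5 with these values; unloading to half that strain halves the stress, i.e. the response returns along the secant.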

Regularization

Continuum models for failure suffer from severe mesh dependency. In softening, the nonlinear behavior tends to localize in a single row of elements. The amount of energy dissipated in the crack that is smeared over this band depends on the size of the elements and tends to a physically meaningless zero in the limit of very fine discretization.

This can be mitigated with the crack band method, first proposed by Bažant and Oh [22], in which the local stress-strain behavior depends on the element size. However, this does not solve the mesh sensitivity problem completely; element shape and orientation still influence the solution. More advanced localization limiters that introduce an internal length scale, such as nonlocal [23] and gradient models [24–26], are to be preferred for a reliable and accurate representation of softening material behavior. These methods, however, require a very fine mesh in the failure zone and considerable implementation effort. Another option is to introduce a rate-dependent term [27], which has physical meaning for high-rate problems but can also be used artificially in quasi-static problems to resolve the mesh dependency problem.
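For a linear softening stress-strain law, the crack band idea reduces to a single scaling: the ultimate strain ε_u is chosen such that the area under the curve, ½ f_t ε_u, multiplied by the element size h equals the fracture energy G_f. This sketch assumes that the band width equals h and includes the well-known upper bound on h beyond which the scaled law would snap back (ε_u ≤ f_t/E). Names and values are illustrative.

```python
def crack_band_ultimate_strain(G_f, f_t, E, h):
    """Ultimate strain of a linear softening law scaled to the element size h.

    The area under the stress-strain curve (0.5 * f_t * eps_u) times the band
    width h is made equal to the fracture energy G_f.
    """
    eps_u = 2.0 * G_f / (f_t * h)
    if eps_u <= f_t / E:
        raise ValueError("element too large: the scaled softening branch would snap back")
    return eps_u


def dissipated_energy_per_area(f_t, eps_u, h):
    """Energy per unit crack area dissipated when a band of width h fails completely."""
    return 0.5 * f_t * eps_u * h
```

With G_f = 0.2, f_t = 50 and E = 10⁴ (consistent N/mm units assumed), the dissipated energy equals G_f for every admissible h, while h = 2 is rejected.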

2.2.2 Discontinuous Approach

The alternative to smearing a crack over the continuum is to insert a discontinuity in the displacement field. Although this is a more intuitive approach to failure, since displacements really are discontinuous across a crack, it requires more fundamental changes to the finite element formulation. In one way or another, the kinematic formulation has to be adapted to accommodate the discontinuity. To control the amount of energy that is dissipated in the crack as it propagates and to remove the singularity from the stress field at the crack tip, cohesive forces are applied on the crack surface, following early work by Barenblatt [28]. This means that a second constitutive law is introduced besides the constitutive law for the continuum. This ‘cohesive law’ relates the cohesive traction t to the displacement jump across the crack 〚u〛:

$$ \mathbf{t} = \mathbf{t}\left([\![\mathbf{u}]\!]\right) $$(16)

The cohesive law can, just like the continuum models, be based on plasticity and/or damage. However, it does not require special regularization, because it acts on a surface instead of in a volume.
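A minimal sketch of a damage-based cohesive law for pure opening (mode I) is given below. It assumes a bilinear shape: a steep initial branch with a large dummy stiffness K up to the strength f_t, followed by linear softening to zero traction at δ_u = 2G_c/f_t, so that the area under the curve equals the fracture energy G_c. The history variable κ is the largest opening reached so far; closing is treated with the penalty stiffness as simple contact. The function name, the dummy stiffness and all values are assumptions for illustration.

```python
def bilinear_cohesive(jump, kappa_old, f_t=50.0, G_c=0.2, K=1.0e6):
    """Mode-I cohesive traction t([[u]]) in damage form (hypothetical parameters).

    Returns (traction, kappa), with kappa the largest opening reached so far.
    """
    delta_0 = f_t / K                  # opening at peak traction
    delta_u = 2.0 * G_c / f_t          # opening at complete decohesion
    kappa = max(kappa_old, jump)
    if kappa <= delta_0:
        d = 0.0
    elif kappa >= delta_u:
        d = 1.0
    else:
        # chosen such that (1 - d) * K * kappa = f_t * (delta_u - kappa) / (delta_u - delta_0)
        d = delta_u * (kappa - delta_0) / (kappa * (delta_u - delta_0))
    if jump < 0.0:
        return K * jump, kappa         # closing: penalty contact, damage not applied
    return (1.0 - d) * K * jump, kappa
```

Integrating the traction over a monotonically increasing opening from 0 to δ_u recovers G_c, which is how fracture energy enters a traction-separation law.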

Interface Elements

The most straightforward discontinuous approach is to have the discontinuity between the elements. Duplicate nodes are used along the crack path to describe a jump in the displacement field (see Fig. 4). The cohesive forces can be defined on a node to node (lumped) basis [29, 30], or in a continuous interface element [31, 32]. These two are connected through the fact that the often used nodal (Newton-Cotes) integration scheme in the continuous interface element renders it essentially similar to the lumped elements. In fact, it has been shown by Schellekens and De Borst [33] that a nodal integration scheme leads to better performance for interface elements, and the same thing has been shown for lumped versus continuous interface elements by Rots [34].

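The interface element consists of two surfaces that are connected to adjacent solid elements. Initially the two surfaces coincide, but they may be driven apart mechanically. The displacement jump is defined as the difference between the displacement fields of the two surfaces, which are in turn defined with the standard finite element interpolation functions from Eq. (2):

$$ [\![\mathbf{u}]\!] = \mathbf{u}^{+} - \mathbf{u}^{-} = \mathbf{N}_{\mathrm{i}}\,\mathbf{a}_{\mathrm{i}} $$(17)

with

$$ \mathbf{N}_{\mathrm{i}} = \begin{bmatrix} -\mathbf{N} & \mathbf{N} \end{bmatrix}, \qquad \mathbf{a}_{\mathrm{i}} = \begin{Bmatrix} \mathbf{a}^{-} \\ \mathbf{a}^{+} \end{Bmatrix} $$(18)

The contribution of the interface element to the internal force vector is then defined as an integral over the interface surface \(\varGamma_{\mathrm{i}}\):

$$ \mathbf{f}^{\mathrm{int}}_{\mathrm{i}} = \int_{\varGamma_{\mathrm{i}}} \mathbf{N}_{\mathrm{i}}^{\mathrm{T}}\,\mathbf{t} \, \mathrm{d}\varGamma $$(19)

and the contribution to the global tangent matrix likewise as

$$ \mathbf{K}_{\mathrm{i}} = \int_{\varGamma_{\mathrm{i}}} \mathbf{N}_{\mathrm{i}}^{\mathrm{T}}\,\mathbf{T}\,\mathbf{N}_{\mathrm{i}} \, \mathrm{d}\varGamma $$(20)

where

$$ \mathbf{T} = \frac{\partial \mathbf{t}}{\partial [\![\mathbf{u}]\!]} $$(21)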
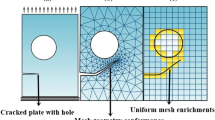
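The effect of the integration scheme mentioned above can be made tangible with a line interface element that has two node pairs. For brevity, the sketch considers only the normal component of the jump. It evaluates the internal force integral once with nodal (Newton-Cotes) integration and once with two-point Gauss integration. With nodal integration, each node pair only sees its own jump, which is why this scheme makes the continuous interface element behave like the lumped one. Function names and the linear traction law in the usage line are illustrative assumptions.

```python
import math


def interface_forces_nodal(a_minus, a_plus, length, traction):
    """Internal forces of a 2-node-pair line interface element, nodal (Newton-Cotes) integration.

    Integration points coincide with the node pairs (weight length/2 each), so every
    node pair only sees its own jump. Returns (f_minus, f_plus).
    """
    w = 0.5 * length
    f_minus, f_plus = [], []
    for am, ap in zip(a_minus, a_plus):
        t = traction(ap - am)              # jump = u_plus - u_minus at this node pair
        f_plus.append(w * t)
        f_minus.append(-w * t)
    return f_minus, f_plus


def interface_forces_gauss(a_minus, a_plus, length, traction):
    """Same integral with 2-point Gauss quadrature, which couples the two node pairs."""
    g = 1.0 / math.sqrt(3.0)
    f_minus, f_plus = [0.0, 0.0], [0.0, 0.0]
    for xi in (-g, g):
        N = (0.5 * (1.0 - xi), 0.5 * (1.0 + xi))       # linear shape functions on [-1, 1]
        jump = sum(N[k] * (a_plus[k] - a_minus[k]) for k in range(2))
        t = traction(jump)
        for k in range(2):
            f_plus[k] += N[k] * t * 0.5 * length        # Gauss weight 1 times Jacobian length/2
            f_minus[k] -= N[k] * t * 0.5 * length
    return f_minus, f_plus
```

Opening only the first node pair with a linear traction law gives a nonzero force on the second node pair with Gauss integration but not with nodal integration, while the total force is the same in both cases.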

Partition of Unity Finite Element Method

An increasingly popular class of methods for modeling cracks is based on enrichment of the solution basis with discontinuous functions, referred to as the Partition of Unity Finite Element Method (PUFEM) [35], the eXtended Finite Element Method (XFEM) [36] or the Generalized Finite Element Method (GFEM) [37]. Melenk and Babuška [35] introduced PUFEM as an easy way to include information about the problem being solved in the finite element basis. Exploiting the partition of unity property of the finite element shape functions, any function can be added to the basis in order to improve its approximation properties. This includes the possibility of adding a discontinuous function for the modeling of cracks, which was first done by Belytschko and Black [38] and Moës et al. [39]. In this way, a discontinuity runs through the elements (see Fig. 5), which obviously offers more flexibility for the crack path than interface elements. In the original publications, asymptotic functions are used for enrichment around the crack tip to approximate the singular stress field. Alternatively, cohesive tractions can be applied on the crack surface, as proposed by Wells and Sluys [40] and Moës and Belytschko [36]. In this case, the crack tip singularity is removed from the stress field.

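In this method, the displacement field is defined as the sum of two independent fields, one of which is multiplied by the Heaviside step function \(\mathcal{H}\):

$$ \mathbf{u}(\mathbf{x}) = \mathbf{N}(\mathbf{x})\,\mathbf{a} + \mathcal{H}(\mathbf{x})\,\mathbf{N}(\mathbf{x})\,\tilde{\mathbf{a}} $$(22)

where \(\tilde{\mathbf{a}}\) are additional degrees of freedom defined only on the nodes of those elements that contain the crack and \(\mathcal{H}\) is equal to 1 on one side of the crack and equal to 0 on the other side. The displacement jump is defined on the cracked surface \(\varGamma_{\mathrm{c}}\) as

$$ [\![\mathbf{u}]\!] = \mathbf{N}(\mathbf{x})\,\tilde{\mathbf{a}}, \qquad \mathbf{x} \in \varGamma_{\mathrm{c}} $$(23)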
Hansbo’s Version of XFEM

An alternative method has been proposed by Hansbo and Hansbo [41], in which two overlapping elements are introduced with independent displacement fields which are partially active. Cohesive tractions were applied in this method by Mergheim et al. [42], after which Song et al. [43] proved it to be equivalent to PUFEM with Heaviside function and coined the term ‘phantom node method’. Because of this equivalence, the term XFEM has grown to be used for crack modeling with both PUFEM and Hansbo’s method.

An advantage of Hansbo’s method over PUFEM is that it is simpler to implement, because it does not require any changes to the elements adjacent to the cracked elements. Moreover, in dynamic analysis, Hansbo’s method allows for straightforward lumping of the mass matrix, in contrast with PUFEM. However, there is no similar extension to enrichment with asymptotic functions to approximate the singular stress field around the crack tip. Therefore, Hansbo’s method is to be preferred for modeling of cohesive cracking, while PUFEM is the better alternative for modeling crack propagation in a fracture mechanics approach.
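The equivalence between the two formats noted above can be checked in one dimension. The sketch below considers a single two-node element on [0, 1] with a crack at x_c. It evaluates the Heaviside-enriched field of PUFEM and the phantom-node field of Hansbo’s method, in which element A is active left of the crack and element B to the right of it. With the correspondence a_A = a and a_B = a + ã, the two fields coincide. The side conventions (H = 1 on the side of element B, jump taken as u_B − u_A) and all names are assumptions for illustration.

```python
def shape(x):
    """Linear shape functions of a two-node element on [0, 1]."""
    return (1.0 - x, x)


def interp(x, nodal):
    """Finite element interpolation N(x) * nodal values."""
    return sum(Ni * ai for Ni, ai in zip(shape(x), nodal))


def u_pufem(x, a, a_tilde, x_c):
    """Heaviside-enriched field u = N a + H N a_tilde, with H = 1 for x > x_c (assumed side)."""
    H = 1.0 if x > x_c else 0.0
    return interp(x, a) + H * interp(x, a_tilde)


def u_phantom(x, a_A, a_B, x_c):
    """Phantom-node field: nodal values of element A for x <= x_c, of element B otherwise."""
    return interp(x, a_A) if x <= x_c else interp(x, a_B)


def jump_phantom(x_c, a_A, a_B):
    """Displacement jump u_B - u_A at the crack (sign convention assumed)."""
    return interp(x_c, a_B) - interp(x_c, a_A)
```

The practical difference lies in bookkeeping rather than in the field: in Hansbo’s version both overlapping elements are ordinary elements with their own nodes, so the element routine itself needs no modification.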

Hansbo’s version of XFEM is illustrated in Fig. 6. An element with original nodes \(n_1\ldots n_4\) is crossed by a crack at \(\varGamma_{\mathrm{c}}\), dividing the element domain into two complementary subdomains, \(\varOmega_A\) and \(\varOmega_B\). Phantom nodes (labeled \(\tilde{n}_{1}\ldots\tilde{n}_{4}\)) are added on top of the existing nodes. The existing element is replaced by two new elements, referred to as element A and element B. The connectivity of the overlapping elements in the illustration is

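The elements do not share nodes and therefore have independent displacement fields. Both elements are only partially active: the active part of element A is \(\varOmega_A\) and the active part of element B is \(\varOmega_B\). This is represented numerically in the definition of the displacement field: the displacement of a point with coordinates x is computed with the standard finite element shape functions N(x) and the nodal displacement values from either of the overlapping elements, depending on the location of the point:

$$ \mathbf{u}(\mathbf{x}) = \begin{cases} \mathbf{N}(\mathbf{x})\,\mathbf{u}_A, & \mathbf{x} \in \varOmega_A \\ \mathbf{N}(\mathbf{x})\,\mathbf{u}_B, & \mathbf{x} \in \varOmega_B \end{cases} $$(25)

where \(\mathbf{u}_A\) and \(\mathbf{u}_B\) contain the nodal displacements of elements A and B, respectively.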
The displacement jump over the crack is defined as the difference between the displacement fields of the two elements.

When this definition of the displacement field is combined with constitutive laws for the bulk stress and the cohesive traction, it follows from standard variational principles that the contributions to the internal force vector on the degrees of freedom corresponding to elements A and B are defined as

and

Because \(\mathbf{f}^{\mathrm{int}}_{A}\) is coupled to \(\mathbf{u}_B\) (and \(\mathbf{f}^{\mathrm{int}}_{B}\) to \(\mathbf{u}_A\)) via t(〚u〛) and Eq. (26), the linearization also involves cross terms, and the total contribution to the global tangent matrix is

with

Because the law for the normal component is typically different from that for the shear component(s), transformations from the global coordinate frame to an orthonormal frame that is aligned with the crack (see Fig. 6) and back are wrapped around the evaluation of the constitutive law. The displacement jump in the local {n,s,t}-frame is related to the displacement jump in the global {x,y,z}-frame by

where, with the t-axis parallel to the z-axis, the transformation matrix R is given as

Similarly, it holds

and (with \(\mathbf{R}^{-1}=\mathbf{R}^{\mathrm{T}}\))

In the remainder of this document, cohesive laws are presented in the local frame, omitting the overbars for notational simplicity and omitting the transformations for brevity.
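The wrapping of the transformations around the constitutive law can be sketched as follows. As in the text, the t-axis is parallel to z. The sketch further assumes that the crack normal n = (n_x, n_y, 0) lies in the x-y plane and takes s = t × n, so that R has rows n, s and t and {n, s, t} is right-handed. The traction computed in the local frame is rotated back with Rᵀ. The local law passed in the usage line (separate normal and shear stiffnesses) is hypothetical.

```python
import math


def rotation_matrix(n_x, n_y):
    """R with rows n, s, t for a crack normal n = (n_x, n_y, 0) and t parallel to z."""
    return [[n_x, n_y, 0.0],
            [-n_y, n_x, 0.0],
            [0.0, 0.0, 1.0]]


def matvec(R, v):
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(R):
    return [list(row) for row in zip(*R)]


def traction_global(jump_global, n_x, n_y, local_law):
    """Rotate the jump to {n, s, t}, evaluate the local cohesive law, rotate back with R^T."""
    R = rotation_matrix(n_x, n_y)
    jump_local = matvec(R, jump_global)
    t_local = local_law(jump_local)
    return matvec(transpose(R), t_local)
```

For a crack at 45° and a jump purely along n, the local shear components vanish and the global traction points along n, as expected.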

2.2.3 Material Parameters

Whether the description of choice is a continuum model with a stress-strain relation or a discontinuous model with a traction-separation relation, in either case a constitutive law is needed to characterize the fracture behavior of the material. In formulating the constitutive law, a choice has to be made for the fundamental parameters. Ideally, the model contains only parameters that can be obtained from simple experiments and that are objective material constants. A common choice is to use strength and fracture energy (or ‘fracture toughness’). The strength of the material is the maximum stress the material can sustain, which is the peak stress in Fig. 3, while the fracture energy is the amount of energy required to form a unit area of new crack surface, which is related to the area under the curves in Fig. 3. In a traction-separation law, the fracture energy is equal to the area under the curve, but in a stress-strain relation, the area under the curve has the dimension of energy per unit volume and has to be multiplied by the width of the failure zone to obtain the fracture energy.

In fracture mechanics, a distinction is made between mode I (opening), mode II (sliding) and mode III (tearing), each of which is associated with a distinct value of the fracture energy [44]. In computational practice, it can be hard to distinguish between mode II and mode III, and therefore often only two modes are considered for the fracture energy: opening (mode I) and shearing (mode II/III). For strength, there can also be different values related to different loading directions. Once uniaxial strengths and pure-mode fracture energies have been determined, additional assumptions and/or parameters are needed to interpolate to a general stress state. In general stress space, strength becomes an envelope around the admissible stress states, and for general mixed-mode fracture, the fracture energy becomes a function of the mode ratio.
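As one concrete example of such an interpolation (not necessarily the one adopted in this paper), the sketch below implements the Benzeggagh-Kenane (B-K) relation, a widely used mixed-mode criterion for composites. In this relation, the fracture energy is interpolated between the pure-mode values as a power of the shear fraction of the energy release rate. The exponent η is an empirical fitting parameter; the function name and the values in the usage line are illustrative.

```python
def bk_fracture_energy(G_I, G_II, G_Ic, G_IIc, eta):
    """Mixed-mode fracture energy according to the Benzeggagh-Kenane relation.

    G_I, G_II: mode I and shear-mode (II/III) energy release rates; only their ratio matters.
    G_Ic, G_IIc: pure-mode fracture energies. eta: empirical exponent.
    """
    G_T = G_I + G_II
    if G_T <= 0.0:
        return G_Ic                      # no loading: fall back to the mode I value
    beta = G_II / G_T                    # mode ratio: 0 = pure opening, 1 = pure shear
    return G_Ic + (G_IIc - G_Ic) * beta ** eta
```

With G_Ic = 0.3, G_IIc = 1.0 and η = 2, an equal mix of opening and shear gives 0.3 + 0.7 · 0.25 = 0.475.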

In an idealized homogeneous material, strength and fracture energy can be related to fundamental bond forces on the nanolevel. In a heterogeneous material, however, the strength is dominated by irregularities in the microstructure. The failure load measured in a simple experiment is governed by stress concentrations due to stiffness inhomogeneity and/or triggered by spatial variation of the strength due to the presence of weak spots. Therefore size effects may play a role [45, 46]. Similarly, the fracture energy in a heterogeneous material is supposed to lump everything that is happening in the fracture process zone. The validity of the assumption that strength and fracture energy are fundamental material parameters for composites will be discussed at several points in this paper.

2.3 Mechanics of Composite Materials

2.3.1 Elasticity

The starting point for the constitutive modeling of composite laminates is a law for the elastic behavior of the elementary ply. Considering the fact that the ply is stiff in fiber direction and compliant in other directions, the transversely isotropic version of Hooke’s law is used, which is defined as

with

where \(E_1\) and \(E_2\) are the Young’s moduli of the ply in fiber direction and transverse direction, respectively, \(\nu_{21}\) and \(\nu_{23}\) are the longitudinal and transverse Poisson’s ratios, and \(G_{12}\) is the longitudinal shear modulus. Under the assumption of transverse isotropy, the transverse shear modulus \(G_{23}\) is a dependent quantity, defined as:

$$ G_{23} = \frac{E_2}{2\left(1+\nu_{23}\right)} $$
The overbars in Eq. (36) are used to indicate that the measures are expressed in the local material frame. In this frame, the 1-axis is aligned with the fiber direction in the ply, as illustrated in Fig. 7. In computational analysis, transformations are required to relate stress and strain quantities in the global coordinate frame (e.g. \(\boldsymbol{\sigma}=\{\sigma_x,\sigma_y,\sigma_z,\tau_{yz},\tau_{zx},\tau_{xy}\}\)) to those in the local coordinate frame (e.g. \(\bar{\boldsymbol{\sigma}}= \{\sigma_{1},\sigma_{2},\sigma_{3},\tau_{23},\tau_{31},\tau_{12} \}\)) [47]. Here too, overbars and transformations are omitted in the remainder for brevity.
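A minimal sketch of transversely isotropic ply elasticity in compliance form is given below, in the material frame with Voigt order (11, 22, 33, 23, 31, 12) and engineering shear strains; the stiffness matrix follows by inverting it. Index conventions for the longitudinal Poisson’s ratio differ between authors. Here it is taken as the ratio −ε₂/ε₁ under uniaxial σ₁, which is an assumption. G₂₃ follows from E₂ and ν₂₃ as stated above. The function name and values are illustrative.

```python
def transversely_isotropic_compliance(E1, E2, nu_l, nu_t, G12):
    """6x6 compliance S (eps = S sigma) of a UD ply in the material frame.

    Voigt order (11, 22, 33, 23, 31, 12), engineering shear strains.
    nu_l: longitudinal Poisson's ratio, taken as -eps_2/eps_1 under uniaxial sigma_1 (assumed convention).
    nu_t: transverse Poisson's ratio in the isotropic 2-3 plane.
    """
    G23 = E2 / (2.0 * (1.0 + nu_t))          # dependent under transverse isotropy
    S = [[0.0] * 6 for _ in range(6)]
    S[0][0] = 1.0 / E1
    S[1][1] = S[2][2] = 1.0 / E2
    S[0][1] = S[1][0] = S[0][2] = S[2][0] = -nu_l / E1
    S[1][2] = S[2][1] = -nu_t / E2
    S[3][3] = 1.0 / G23
    S[4][4] = S[5][5] = 1.0 / G12
    return S
```

The symmetry of S guarantees a symmetric stiffness; only five independent constants (E₁, E₂, ν_l, ν_t, G₁₂) enter, consistent with transverse isotropy.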

2.3.2 Residual Stress

An important aspect of laminate analysis is the residual stress due to fabrication. The difference in thermal properties of fiber and matrix causes the thermal expansion behavior of the ply to be orthotropic. When the laminate is cooled during fabrication, the plies tend to shrink in transverse direction, but, since the plies are connected, this contraction is constrained. The transverse tensile stress caused by this mismatch in thermal properties can be significant compared with the transverse tensile strength of the ply. Therefore, it is important to take these residual stresses into account. The linear elastic constitutive law after a temperature change is

$$ \boldsymbol{\sigma} = \mathbf{D}\,\boldsymbol{\varepsilon}_{\mathrm{m}} $$

where the total strain is decomposed into a mechanical part and a thermal part:

$$ \boldsymbol{\varepsilon} = \boldsymbol{\varepsilon}_{\mathrm{m}} + \boldsymbol{\varepsilon}_{\mathrm{th}} $$

with

$$ \boldsymbol{\varepsilon}_{\mathrm{th}} = \Delta T\,\{\alpha_1, \alpha_2, \alpha_2, 0, 0, 0\}^{\mathrm{T}} $$

where ΔT is the change in temperature (negative upon cooling) and \(\alpha_1\) and \(\alpha_2\) are the coefficients of thermal expansion in fiber direction and transverse direction, respectively.
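To give a feel for the mechanism, the sketch below considers a strongly simplified symmetric [0/90] cross-ply. It uses a one-dimensional model along x, ignores Poisson coupling and the y-direction, assumes equal ply thicknesses, and takes the laminate to be free of external load. Both plies share the same total strain (compatibility), and the resultant force vanishes. The stress follows from the mechanical strain, i.e. total minus thermal strain, as in the law above. These simplifications, the function name and the values in the usage line are assumptions, not the approach of this paper.

```python
def cross_ply_residual_stress(E1, E2, alpha1, alpha2, dT):
    """x-direction residual stresses in a symmetric [0/90] laminate (simplified 1D model).

    The 0-ply has stiffness E1 and expansion alpha1 along x, the 90-ply E2 and alpha2.
    Equal thicknesses, no external load, Poisson effects ignored (assumptions).
    Returns (sigma_0ply, sigma_90ply).
    """
    # force balance E1*(eps - alpha1*dT) + E2*(eps - alpha2*dT) = 0 gives the common strain
    eps = (E1 * alpha1 + E2 * alpha2) * dT / (E1 + E2)
    sigma_0 = E1 * (eps - alpha1 * dT)       # stress from mechanical strain = total - thermal
    sigma_90 = E2 * (eps - alpha2 * dT)
    return sigma_0, sigma_90
```

For cooling (dT < 0) with α₂ > α₁, the 90° ply ends up in transverse tension, balanced by compression in the 0° ply; e.g. `cross_ply_residual_stress(140e3, 9e3, 0.0, 30e-6, -150.0)` gives roughly ±38 in MPa units.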

2.4 Failure Theories for Composite Materials

The ply is the elementary building block for the mesolevel approach to laminates. Therefore, mesolevel failure analysis requires criteria for predicting failure of the ply. The concept of strength is not as clearly defined for a homogenized composite as it is for a homogeneous material. Therefore, the composite nature of the ply complicates the formulation of a stress based criterion for onset of failure. Unidirectional strength properties are measured for the characterization of the material in its principal directions, but in failure analysis, these have to be interpolated in order to get a general failure criterion (or set of criteria) to be able to evaluate any three dimensional stress state for failure. Early work in the development of an orthotropic failure envelope was done by Hill [19], Tsai [48] and Hoffman [49], who formulated criteria that consist of a single relation for the interaction of the different stress components in the material frame. For composite materials, the most popular version of these interactive criteria is the one formulated by Tsai and Wu [50]. The transversely isotropic version of the Tsai-Wu criterion can be written as:

with

where \(F_{1\mathrm{t}}\) and \(F_{1\mathrm{c}}\) are the tensile and compressive strength in longitudinal direction, \(F_{2\mathrm{t}}\) and \(F_{2\mathrm{c}}\) the tensile and compressive strength in transverse direction, and \(F_{23}\) and \(F_{12}\) the transverse and longitudinal shear strength.
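The sketch below evaluates the left-hand side of a transversely isotropic Tsai-Wu criterion with the strengths defined above; failure is predicted when the index reaches 1. The linear and quadratic coefficients take the standard Tsai-Wu forms. The interaction coefficient c₁₂ = −½√(c₁₁c₂₂) is a commonly used assumption rather than a measured value, and c₂₃ is fixed by requiring invariance under rotation in the transverse plane. Since the exact coefficient expressions used in this paper are not reproduced here, this should be read as an illustration only.

```python
import math


def tsai_wu_index(stress, F1t, F1c, F2t, F2c, F23, F12):
    """Left-hand side of a transversely isotropic Tsai-Wu criterion (failure at >= 1).

    stress = (s1, s2, s3, t23, t31, t12) in the material frame; all strengths positive.
    """
    s1, s2, s3, t23, t31, t12 = stress
    c1, c2 = 1.0 / F1t - 1.0 / F1c, 1.0 / F2t - 1.0 / F2c
    c11, c22 = 1.0 / (F1t * F1c), 1.0 / (F2t * F2c)
    c44, c66 = 1.0 / F23**2, 1.0 / F12**2
    c12 = -0.5 * math.sqrt(c11 * c22)        # assumed interaction coefficient
    c23 = c22 - 0.5 * c44                    # from transverse isotropy
    return (c1 * s1 + c2 * (s2 + s3)
            + c11 * s1**2 + c22 * (s2**2 + s3**2)
            + c44 * t23**2 + c66 * (t31**2 + t12**2)
            + 2.0 * c12 * s1 * (s2 + s3) + 2.0 * c23 * s2 * s3)
```

By construction, each uniaxial strength (e.g. σ₂ = −F₂c or τ₁₂ = F₁₂) lies exactly on the envelope; the smooth interpolation in between is what the failure-mode-based theories below replace.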

However, a single interactive criterion does not sufficiently reflect the level of complexity that is inherent to composite materials. A smooth failure envelope does not match the fact that, due to the inhomogeneity of the material, discrete switches from one type of failure to another are involved when the load direction is gradually changed. Therefore, failure-mode-based theories have been proposed, with a number of independent criteria corresponding to an equal number of failure modes. The first failure theories that distinguished between fiber failure and matrix failure were developed by Hashin [7, 51]:

- Fiber tension: $$ \frac{\sigma_1}{F_{1\mathrm{t}}}=1 $$ (44)
- Fiber compression: $$ -\frac{\sigma_1}{F_{1\mathrm{c}}}=1 $$ (45)
- Matrix tension: $$ \sqrt{ \frac{ (\sigma_2+\sigma_3 )^2}{F_{2\mathrm {t}}^2} + \frac{\tau_{23}^2-\sigma_2\sigma_3}{F_{23}^2} + \frac{\tau_{31}^2+\tau_{12}^2}{F_{12}^2} }=1 $$ (46)
- Matrix compression:
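The following is a minimal sketch of a mode-based evaluation. The active fiber and matrix modes are selected by the sign of $\sigma_1$ and of $\sigma_2+\sigma_3$. The fiber and matrix tension indices follow Eqs. (44) to (46). For matrix compression, the sketch assumes Hashin's 1980 three-dimensional form, which is not shown above. The function name is hypothetical.

```python
import math

def hashin_indices(s, F1t, F1c, F2t, F2c, F23, F12):
    """Failure indices of the active Hashin modes; a mode fails when its index reaches 1.

    s = (s1, s2, s3, t23, t31, t12) in the material frame.
    """
    s1, s2, s3, t23, t31, t12 = s
    shear_l = (t31**2 + t12**2) / F12**2      # longitudinal shear contribution
    shear_t = (t23**2 - s2 * s3) / F23**2     # transverse shear contribution
    out = {}
    if s1 >= 0.0:
        out["fiber tension"] = s1 / F1t                          # Eq. (44)
    else:
        out["fiber compression"] = -s1 / F1c                     # Eq. (45)
    sn = s2 + s3
    if sn >= 0.0:
        arg = sn**2 / F2t**2 + shear_t + shear_l
        out["matrix tension"] = math.sqrt(max(arg, 0.0))         # Eq. (46), guarded
    else:
        # Assumed: Hashin's (1980) matrix compression expression
        out["matrix compression"] = (((F2c / (2.0 * F23))**2 - 1.0) * sn / F2c
                                     + sn**2 / (4.0 * F23**2) + shear_t + shear_l)
    return out

props = dict(F1t=2000.0, F1c=1200.0, F2t=60.0, F2c=200.0, F23=50.0, F12=90.0)  # hypothetical, MPa
print(hashin_indices((500.0, 20.0, 0.0, 0.0, 0.0, 40.0), **props))
```

In contrast to the single Tsai-Wu index, the result identifies which mechanism is critical.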

The World Wide Failure Exercise organized by Hinton et al. (see [52, 53] and references therein) was an attempt to decide which failure theory is most appropriate. Although clear differences in the predictions of different models were reported, there was no uniform conclusion in favor of a single approach. A trade-off between the accuracy of a criterion and the number of material parameters or assumptions involved will remain, considering that “a criterion is only as good as the data available” [54]. Another problem with the failure criterion approach is that it is based on homogeneous stress, whereas, as soon as failure has started somewhere in the specimen, the stress is no longer homogeneous. Size effects also play a role, which blurs the meaning of the concept of strength.

Furthermore, there is a statistical size effect that is important for fiber failure. The unidirectional ply strength in the fiber direction is best described with a weakest-link theory and a statistical strength distribution [46]. Numerous models have been developed to predict the ply strength in the fiber direction as a function of specimen size (see e.g. [55–57]). However, such models are not readily applicable to progressive failure analysis because they generally do not predict the location of failure. That information is needed to continue the analysis beyond the first failure event. A Weibull criterion can be used to predict brittle fiber failure (Hallett et al. [58]). When it is applied to cases with progressive fiber failure, as done by Li et al. [59], it is necessary to assume that failure occurs where the stress is highest. This contradicts the Weibull assumption that failure may occur anywhere.
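The weakest-link size effect can be made concrete with the two-parameter Weibull distribution under uniform stress, $P_\mathrm{f} = 1 - \exp[-(V/V_0)(\sigma/\sigma_0)^m]$. Equating $P_\mathrm{f}$ for two volumes gives $\sigma_2/\sigma_1 = (V_1/V_2)^{1/m}$. This is a minimal sketch with a hypothetical Weibull modulus $m$. It illustrates the point made above: the expression gives a probability for the whole volume, not a location of failure.

```python
import math

def weibull_failure_probability(stress, volume, sigma0, v0, m):
    """Two-parameter Weibull (weakest-link) failure probability under uniform stress."""
    return 1.0 - math.exp(-(volume / v0) * (stress / sigma0) ** m)

def strength_scaling(v_ref, v, m):
    """Strength of volume v relative to volume v_ref at equal failure probability."""
    return (v_ref / v) ** (1.0 / m)

m = 20.0  # hypothetical Weibull modulus for the fiber-direction strength
for factor in (1.0, 10.0, 100.0):
    print(factor, round(strength_scaling(1.0, factor, m), 3))
```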

Another complicating factor is that ply failure can be influenced by neighboring plies when the ply is embedded in a laminate. The neighboring plies have a constraining effect on failure. This makes it uncertain to what extent failure can be characterized accurately with properties measured on an isolated ply. A well-known example is the increase in transverse strength with decreasing ply thickness, a phenomenon first reported by Parvizi et al. [60] and comprehensively reviewed by Nairn [61]. Theories exist to predict this in situ strength; see e.g. Camanho et al. [62]. An open issue is that the use of in situ strength parameters is more obvious for ply discount methods [63] than for progressive failure analysis, where onset of failure is followed by softening or decohesion. In the latter case, the fracture energy is already present in the post-peak response, and onset of failure is not the same as the appearance of (visible) cracks. However, when crack growth is brittle, i.e. cracks grow in a snapback, the post-peak response becomes more of a numerical artifact needed for well-posedness and robustness. In that case, in situ strengths are also appropriate in combination with softening or decohesion.
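The thickness dependence can be illustrated with the thin-embedded-ply expression commonly attributed to Camanho et al. [62]: $Y^{is} = \sqrt{8G_\mathrm{Ic}/(\pi t \Lambda_{22}^0)}$ with $\Lambda_{22}^0 = 2(1/E_2 - \nu_{21}^2/E_1)$. Here $G_\mathrm{Ic}$ is the intralaminar mode I toughness and $t$ the ply thickness. This is a hedged sketch: the expression is assumed to hold only below a material-dependent transition thickness, and all material values are hypothetical.

```python
import math

def in_situ_transverse_strength_thin(G_Ic, t, E1, E2, nu21):
    """Assumed thin-embedded-ply in situ transverse tensile strength (N, mm, MPa)."""
    lambda22 = 2.0 * (1.0 / E2 - nu21**2 / E1)   # Lambda_22^0 in the assumed expression
    return math.sqrt(8.0 * G_Ic / (math.pi * t * lambda22))

# Hypothetical carbon/epoxy ply data
E1, E2, nu12 = 140000.0, 9000.0, 0.3
nu21 = nu12 * E2 / E1
for t in (0.25, 0.125):   # ply thickness in mm
    print(t, round(in_situ_transverse_strength_thin(0.28, t, E1, E2, nu21), 1))
```

Halving the ply thickness raises the predicted in situ strength by a factor of $\sqrt{2}$, which is the trend reported in [60, 61].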

In reaction to the World Wide Failure Exercise, Dávila et al. [64] developed another set of failure criteria. These criteria, referred to as LaRC03, were designed for plane stress. In a later publication by Pinho et al. [65], the set of criteria was extended to full three-dimensional stress states, referred to as LaRC04. They are considerably more elaborate than Hashin's criteria, but they do not require additional material parameters. They differ from Hashin's on the following points. First, in situ strength values are used for all matrix failure mechanisms. Second, for matrix compression, they are based on Mohr-Coulomb friction following Puck and Schürmann [66]. Third, for fiber compression, an initial misalignment of the fibers is assumed. Three failure scenarios, related to different stress states around the misaligned fibers, result in three criteria: one for kink-band formation, one for matrix failure under biaxial compression and one for matrix tensile failure.

As an alternative to explicit failure criteria, a micromechanical approach can be adopted to obtain a failure envelope. González et al. [5, 6] performed micromechanical simulations on a representative volume element to obtain the ply strength for different loading conditions. The idea is that this requires fewer assumptions and material parameters: only the simpler constituents need to be characterized, while the behavior of the composite is determined virtually.

However, even when an accurate general failure criterion for the elementary ply can be formulated or virtually obtained, a ply failure criterion is not sufficient for the prediction of laminate failure. One could use it to assess laminate failure in a First Ply Failure approach [67], equating failure of a single ply with failure of the laminate. In general, however, local failure of a ply does not necessarily lead to global laminate failure. Redistribution of stress may allow the structure to be loaded beyond the load level at which the first local failure occurs. In that case, progressive failure analysis is required to predict the load-bearing capacity of a structure or structural part under specific load conditions. That is, the failure criteria must be extended with a theory of what happens after failure. Furthermore, delamination must be included for a complete laminate analysis.

3 Delamination Modeling

The two most popular computational methods for the analysis of delamination are the Virtual Crack Closure Technique (VCCT) [68, 69] and interface elements with a cohesive law [32, 70]. Disadvantages of the former are that it cannot deal with initiation and that the crack front has to coincide with element boundaries in a regular mesh. For these reasons, cohesive elements are gaining popularity, particularly for progressive failure analysis with non-self-similar crack growth and interaction with other failure processes.

In mesolevel modeling of laminates, each ply is modeled independently, which means that the boundaries between the plies always coincide with an element boundary. Interface elements can therefore be inserted easily by doubling the nodes on the existing element boundary. Moreover, they offer an efficient method to get realistic values for the interfacial tractions. Alternatively, a fine discretization through the thickness would be needed to model delamination in a continuum sense [71] or with XFEM [72, 73], because the through thickness variations in the stress field must then be computed accurately for correct initiation and propagation of cracks. Therefore, interface elements are the method of choice for mesolevel delamination modeling.

3.1 Cohesive Law

Modeling of delamination with interface elements was first done by Schellekens and De Borst [32] and Allix and Ladevèze [70]. Schellekens and De Borst developed a plasticity formulation, which was further pursued by Hashagen and De Borst [74]. However, robustness and ease of implementation make damage formulations favorable.

Delamination fracture tends to be a mixed-mode phenomenon, because the direction of crack propagation is given by the topology of the interface, while the orientation of the loading is variable. A simple bilinear softening law for mixed-mode failure with constant fracture toughness was proposed by Mi et al. [75]. However, it is important to take into account that the fracture toughness, which is a key parameter in the cohesive law, is not a material constant [76–78]. Camanho et al. [79] developed a cohesive law in which the fracture toughness is a phenomenological function of mode mixity as formulated by Benzeggagh and Kenane [80]. This cohesive law was improved for thermodynamical consistency by Turon et al. [81]. Alternative formulations have been proposed among others by Allix and Corigliano [82], Yang and Cox [83], Högberg [84] and Jiang et al. [85]. Below, the cohesive law as formulated by Turon [81] is outlined.

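The starting point is the phenomenological relation between fracture energy and mode ratio by Benzeggagh and Kenane [80]:

$$ G_\mathrm{c} = G_\mathrm{Ic} + \left(G_\mathrm{IIc} - G_\mathrm{Ic}\right)\left(\frac{G_\mathrm{II}}{G}\right)^{\eta} $$ (48)
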
where $G_\mathrm{c}$ is the fracture energy as a function of the mode ratio $G_\mathrm{II}/G$, with material parameters $G_\mathrm{Ic}$, $G_\mathrm{IIc}$ and $\eta$. In three-dimensional analysis, mode II and mode III are taken together. In interface elements it is hard to distinguish between the two, since there is no well-defined crack front and consequently no well-defined tangent vector to the crack front. The displacement jump and traction vectors are then decomposed into a normal part (mode I) and a shear part (mode II/III). When the normal to the interface plane is aligned with the global z-axis, the decomposition into normal and shear displacement jumps is straightforward:

Before the evaluation of the cohesive law, the values of the displacement jump for onset and propagation of pure mode opening are calculated from the material parameters:

where $K$ is the initial dummy stiffness, $F_\mathrm{n}$ and $F_\mathrm{sh}$ are the normal and shear strengths of the interface, and $G_\mathrm{Ic}$ and $G_\mathrm{IIc}$ are the mode I and mode II fracture toughness.

Then the current displacement jump is used to compute an equivalent opening displacement:

and the mode ratio B:

Normal relative displacements only contribute when positive, hence the use of the Macaulay operator, defined as $\langle x\rangle=\max(x,0)$. It can be shown that $B$ is related to the mode ratio in Eq. (48) as $B=G_\mathrm{II}/G$ when $B$ is assumed to be constant inside the cohesive zone.

Subsequently, the onset criterion and propagation criterion related to the current mode ratio (see Fig. 8) are computed with:

The damage variable $\omega_\mathrm{d}$ is defined such that the traction-separation law is bilinear for any fixed mode ratio:

Finally, the traction t is computed with isotropic damage as

where $\delta_{ij}$ is the Kronecker delta and the part between square brackets is included to cancel the damage for the normal traction component when the normal displacement jump is negative. In this way, interpenetration of opposite crack faces is prevented through a penalty approach with $K$ as penalty parameter.
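The steps above can be sketched for a single integration point, assuming the standard form of the law of Turon et al. [81]:

- pure-mode onset openings $F/K$ and final openings $2G_\mathrm{c}/F$;
- equivalent opening $\lambda = \sqrt{\langle\Delta_\mathrm{n}\rangle^2 + \Delta_\mathrm{sh}^2}$;
- mode ratio $B = \Delta_\mathrm{sh}^2/(\Delta_\mathrm{sh}^2 + \langle\Delta_\mathrm{n}\rangle^2)$;
- Benzeggagh-Kenane interpolation of the onset and final openings;
- bilinear damage, with damage cancelled on the normal component under closure.

Function and variable names are hypothetical. Irreversibility is enforced here by keeping the largest damage reached so far, which simplifies the history treatment in [81].

```python
import math

def bilinear_mixed_mode_traction(jump, omega_old, K, Fn, Fsh, GIc, GIIc, eta):
    """Traction and damage for a bilinear mixed-mode cohesive law (sketch).

    jump      : (dx, dy, dz) displacement jump, z normal to the interface
    omega_old : damage reached in previous steps (history variable)
    Returns ((tx, ty, tz), omega).
    """
    dx, dy, dz = jump
    dn = max(dz, 0.0)                  # Macaulay bracket <dz>
    dsh = math.hypot(dx, dy)           # mode II and III taken together
    lam = math.hypot(dn, dsh)          # equivalent opening

    # Pure-mode onset and final openings from strength and toughness
    d0n, d0sh = Fn / K, Fsh / K
    dfn, dfsh = 2.0 * GIc / Fn, 2.0 * GIIc / Fsh

    # Mode ratio B (zero without opening) and Benzeggagh-Kenane type interpolation
    B = dsh**2 / (dsh**2 + dn**2) if lam > 0.0 else 0.0
    Bq = B**eta
    d0 = math.sqrt(d0n**2 + (d0sh**2 - d0n**2) * Bq)
    df = (d0n * dfn + (d0sh * dfsh - d0n * dfn) * Bq) / d0

    # Bilinear damage for the current mode ratio; damage never decreases
    trial = 0.0 if lam <= d0 else min(1.0, df * (lam - d0) / (lam * (df - d0)))
    omega = max(omega_old, trial)

    # Under closure the normal component keeps the full penalty stiffness
    tz = (1.0 - omega) * K * dz if dz > 0.0 else K * dz
    return ((1.0 - omega) * K * dx, (1.0 - omega) * K * dy, tz), omega

props = dict(K=1.0e5, Fn=30.0, Fsh=60.0, GIc=0.3, GIIc=1.2, eta=2.0)  # hypothetical, N/mm/MPa
t, w = bilinear_mixed_mode_traction((0.0, 0.0, 0.01), 0.0, **props)
print(t, w)
```

For a fixed mode ratio, the area under the traction-opening curve equals $\tfrac12 K\Delta^0\Delta^\mathrm{f}$. With the interpolation above, this reproduces the Benzeggagh-Kenane value with $B$ in place of $G_\mathrm{II}/G$.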

The consistent tangent is defined as [86]:

with

3.2 Open Issues

In later work, Turon et al. [87] showed that the energy dissipation does not follow the assumed Benzeggagh-Kenane relation under all circumstances. This is because, in mixed-mode cracking, the mode ratio varies over the length of the cohesive zone (see Fig. 9). This contradicts the model assumption of a constant mode ratio visualized in Fig. 8. Turon et al. [87] showed that proper behavior is obtained when the strength parameters satisfy the following relation:

Alternatively, it is possible to adopt an orthotropic relation for the penalty stiffness, such that

The latter choice is somewhat more laborious because the orthotropic stiffness relation must be accommodated in the implementation. It is, however, theoretically more appealing: the penalty stiffness is a numerical artifact anyway, whereas the strength parameters have physical meaning. Nevertheless, applying Eq. (62) can also be defended. The shear strength is not unambiguously defined, and in many delamination cases the exact magnitude of the strength parameters has only limited influence on the results (provided that their ratio satisfies Eq. (62)).
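The idea behind both options can be checked numerically. The condition usually quoted from Turon et al. [87] is that the ratio of final to onset opening, $(2G_\mathrm{c}/F)/(F/K)$, is the same in both pure modes. With equal penalty stiffness this gives $F_\mathrm{sh} = F_\mathrm{n}\sqrt{G_\mathrm{IIc}/G_\mathrm{Ic}}$. With the orthotropic alternative it gives $K_\mathrm{sh} = K_\mathrm{n}(G_\mathrm{Ic}/G_\mathrm{IIc})(F_\mathrm{sh}/F_\mathrm{n})^2$. This is a minimal sketch under that assumption; the function names and values are hypothetical.

```python
import math

def consistent_shear_strength(Fn, GIc, GIIc):
    """Shear strength giving equal bilinear shapes in modes I and II (equal stiffness)."""
    return Fn * math.sqrt(GIIc / GIc)

def consistent_shear_stiffness(Kn, Fn, Fsh, GIc, GIIc):
    """Orthotropic alternative: keep both strengths, adapt the shear penalty stiffness."""
    return Kn * (GIc / GIIc) * (Fsh / Fn) ** 2

def opening_ratio(K, F, Gc):
    """Ratio of final to onset opening of a bilinear law: (2 Gc / F) / (F / K)."""
    return 2.0 * Gc * K / F**2

Kn, Fn, GIc, GIIc = 1.0e5, 30.0, 0.3, 1.2   # hypothetical, N/mm/MPa
Fsh = consistent_shear_strength(Fn, GIc, GIIc)
Ksh = consistent_shear_stiffness(Kn, Fn, 45.0, GIc, GIIc)
print(Fsh, Ksh)
```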

Evolution of traction and displacement jump components in a single integration point for a mixed-mode bending test with $G_\mathrm{II}/G=0.5$ (see van der Meer and Sluys [88] for details)

Notwithstanding this improvement by Turon et al., Goutianos and Sørensen [89] have shown that a theoretical path dependency exists for all truss-like cohesive laws that have a mode-dependent fracture toughness. By truss-like they mean that the ratio of the traction components is fixed to the ratio of the opening displacements, as it is in Eq. (58). Goutianos and Sørensen have shown that the dissipation for such cohesive laws depends on the complete opening history rather than on the mode ratio only. This path dependency may have some physical basis. It should nevertheless be regarded as a flaw as long as the basic assumption that the fracture toughness depends only on the mode ratio (see Eq. (48)) has not been explicitly revised. Notably, the cohesive law by Yang and Cox [83] does not suffer from this path dependency. It works with fixed pure-mode behavior and a mixed-mode cut-off criterion rather than with isotropic damage.

Apart from this discrepancy between the theory and the results of cohesive laws, there are some physical phenomena that are not included in the theory behind the laws. Like most cohesive laws, the one outlined in Sect. 3.1 uses a penalty approach to prevent interpenetration and to allow compressive forces to be transmitted through the interface. What is not taken into account, however, is the possibility of a significant increase in strength and mode II fracture energy in the presence of compressive stress. This issue has been addressed by Li et al. [90] but is still ignored in most formulations.

Even in the absence of compressive stress, the fracture toughness is not always constant for a given mode ratio. Wisnom has observed a size effect in the fracture toughness [91], and several authors have reported a dependence on the relative fiber orientations of the neighboring plies [92–94]. Davidson et al. [95] have given further evidence that different cases with the same mode ratio do not necessarily display the same fracture toughness. Part of this can be attributed to the fact that delamination is not necessarily the only dissipative process in the characterization test with which the fracture toughness is measured. In reality, there may be interaction between delamination and transverse damage. A formulation with constitutive coupling between matrix cracking and delamination is the mesomodel by Ladevèze et al. [96]. How much constitutive coupling is realistic has not been properly characterized and is indeed very hard to quantify. This should be distinguished from mechanical coupling, for instance when delamination is triggered by the presence of matrix cracks. Such mechanical interaction between different failure processes can be captured well and will be discussed in Sect. 5.3.

3.3 Element Size Requirement

One drawback of cohesive methods is that the cohesive zone has a given length, and robust and accurate simulations require the elements to be several times smaller than this cohesive zone. In (quasi-)infinite continua, the length of the cohesive zone is related to the fracture energy, stiffness and strength. For delamination cracks in thin laminates, the thickness is an additional influence [83, 97, 98]. The length of the cohesive zone may vary with the loading conditions (generally, it is longer for mode II than for mode I), but in typical laminates it is of the order of 1 mm. Since elements must be several times smaller than the cohesive zone length, element sizes of around 0.2–0.3 mm are commonly required for robustness and accuracy. This element size requirement seriously limits the specimen dimensions that can be simulated within reasonable computation time.
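The order of magnitude quoted above can be reproduced with a common infinite-body estimate, $l_\mathrm{cz} = M E G_\mathrm{c}/F^2$, where $M$ is a model-dependent constant of order one. This is a rough sketch: it ignores the thickness influence mentioned above. The value $M = 0.88$, the three elements per cohesive zone and the interlaminar properties are assumptions.

```python
def cohesive_zone_length(E, Gc, strength, M=0.88):
    """Estimate l_cz = M * E * Gc / strength**2 (N, mm, MPa -> mm)."""
    return M * E * Gc / strength**2

E3 = 10000.0                                   # hypothetical through-thickness modulus, MPa
l_I = cohesive_zone_length(E3, 0.3, 60.0)      # mode I: Gc = 0.3 N/mm, strength 60 MPa
l_II = cohesive_zone_length(E3, 1.0, 90.0)     # mode II: Gc = 1.0 N/mm, strength 90 MPa
n_el = 3                                       # assumed minimum number of elements in the zone
print(l_I, l_II, l_I / n_el)                   # roughly 0.73 mm, 1.09 mm and 0.24 mm
```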

An engineering solution to this limitation has been proposed by Turon et al. [99], viz. to artificially increase the length of the cohesive zone in the simulation by reducing the interface strength. This method can considerably extend the model dimensions that can be analyzed within acceptable computation times. It should be handled with care, however, because the solution may be affected [97, 100]. Limited alleviation of the mesh requirements can furthermore be achieved by adapting the integration scheme, as proposed by Yang et al. [101].
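Inverting the same cohesive-zone estimate gives the strength reduction in the form usually cited for [99]. For a chosen element size $l_\mathrm{e}$ and a required number $N_\mathrm{e}$ of elements in the cohesive zone, take $\bar F = \sqrt{M E G_\mathrm{c}/(N_\mathrm{e} l_\mathrm{e})}$ and use the smaller of $\bar F$ and the physical strength. In this sketch, $M = 0.88$ and $N_\mathrm{e} = 3$ are assumed defaults and the material values are hypothetical.

```python
import math

def reduced_strength(E, Gc, strength, element_size, n_elements=3, M=0.88):
    """Interface strength lowered just enough to spread the cohesive zone over n_elements."""
    required = math.sqrt(M * E * Gc / (n_elements * element_size))
    return min(strength, required)

# 1 mm elements instead of about 0.25 mm: strength drops from 60 MPa to about 30 MPa
print(reduced_strength(10000.0, 0.3, 60.0, element_size=1.0))
```

The fracture energy is left unchanged, so the dissipated energy is preserved. The peak traction, and with it the stress state ahead of the crack, is altered, which is why the method must be handled with care.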

Another way to improve the performance of large interface elements is to enrich the displacement field locally. Improvement was already reported by Crisfield and Alfano [102] with a relatively simple hierarchical enrichment. Guiamatsia et al. [103] enriched the displacement field with the analytical solution of a beam on an elastic foundation. This was based on the assumption that the problem to be addressed is the underrepresentation of the stress variation ahead of the crack tip. However, the real challenge is to enrich the kinematics such that the deformation of an element containing the crack tip is represented accurately. This results in a smooth response for a smooth progression of the crack tip through the element. Such an enrichment scheme has been proposed by Samimi et al. [104], who added a hat enrichment in which the location of the peak is an additional degree of freedom. However, this strategy has only been shown to work in 2D with line interfaces. Generalization to plane interfaces is not obvious, although Samimi et al. [105] have made a step in that direction.

The most significant gain in element size has been reported by van der Meer et al. [106] in an approach where the cohesive zone is eliminated altogether. The front is described mesh-independently with the level set method, and crack growth is handled with fracture mechanics. However, this method has not yet reached a level of maturity at which it can be combined with descriptions of other failure processes in laminates. Currently, the element size requirement related to cohesive methods with interface elements remains problematic for progressive failure analysis of laminates.

4 Continuum Methods for Ply Failure and Their Limitation

In addition to a model for interply delamination, a model for intraply failure is needed for progressive failure analysis of laminates. In Sect. 2.4, failure criteria for the ply have been introduced. A complicating aspect is that different failure processes may occur in the ply. For each of them, there must be a representation of what happens after the strength associated with that particular failure mechanism has been reached locally. The simplest approach to progressive failure analysis is the ply discount method, where the stiffness of a ply is suddenly reduced once the failure criterion is violated. This has been applied to matrix failure by Laš and Zemčik [107] and Liu et al. [108]. These models, however, give mesh-dependent results: the amount of energy that is dissipated when a crack is formed vanishes upon mesh refinement.
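A back-of-the-envelope sketch shows why a sudden stiffness reduction is mesh dependent. It assumes that the failed band is one element wide and that its stored elastic energy is fully released. The energy per unit crack area is then $F^2 h/(2E)$, which vanishes as the element size $h \to 0$, whereas a fracture energy should be a material constant. Values are hypothetical.

```python
def dissipated_energy_per_area(strength, E, element_size):
    """Energy per unit crack area released when a one-element-wide band loses its
    stiffness at the failure stress: stored energy density times band width."""
    return strength**2 / (2.0 * E) * element_size

for h in (1.0, 0.5, 0.25, 0.125):   # element sizes in mm
    print(h, dissipated_energy_per_area(60.0, 9000.0, h))   # N/mm, halves with h
```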

In order to obtain a unique response, models with a continuous constitutive relation must be used. For orthotropic materials, several examples are available for extension of a failure criterion with a plasticity law [109–112]. But in the context of composite materials, continuum damage formulations are more popular, because these are more easily coupled to the failure-mode-based criteria, with different stiffness degradation laws for the different failure processes. After pioneering work by Ladevèze and Le Dantec [113] and Matzenmiller et al. [114], several different formulations have been proposed in which distinction is made between fiber failure and matrix failure [115–122].

The basic relation for continuum damage models for the unidirectional ply is as follows:

with

where $\omega_\mathrm{f}$ and $\omega_{\mathrm{m}2},\ldots,\omega_{\mathrm{m}6}$ are the damage variables related to fiber failure and matrix failure, respectively. The evolution of the damage variables is strain driven and related to failure criteria, with coupling between the different matrix damage variables.
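As a hedged illustration of this structure (not a reproduction of Eq. (65)), the sketch below uses a plane-stress damaged compliance in the style of Matzenmiller et al. [114]. One fiber damage variable scales the fiber-direction term. Separate matrix variables, named here after $\omega_{\mathrm{m}2}$ and $\omega_{\mathrm{m}6}$, scale the transverse and in-plane shear terms. The function name, the plane-stress reduction and the material values are assumptions.

```python
import numpy as np

def damaged_plane_stress_stiffness(E1, E2, nu12, G12, wf, wm2, wm6):
    """Stiffness for [s11, s22, t12] of a damaged ply; damage variables in [0, 1)."""
    H = np.array([
        [1.0 / ((1.0 - wf) * E1), -nu12 / E1, 0.0],
        [-nu12 / E1, 1.0 / ((1.0 - wm2) * E2), 0.0],
        [0.0, 0.0, 1.0 / ((1.0 - wm6) * G12)],
    ])   # damaged compliance; off-diagonal terms use nu21/E2 = nu12/E1
    return np.linalg.inv(H)

D0 = damaged_plane_stress_stiffness(140000.0, 9000.0, 0.3, 5000.0, 0.0, 0.0, 0.0)
Dm = damaged_plane_stress_stiffness(140000.0, 9000.0, 0.3, 5000.0, 0.0, 0.9, 0.9)
print(np.round(D0), np.round(Dm))
```

Matrix damage degrades the transverse and shear stiffness while leaving the fiber-direction stiffness essentially intact. This is the local distinction between fiber and matrix failure discussed below.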

Although this works well in some cases, there is a pathology in the continuum approach to the modeling of composites, which can be understood from simple micromechanical considerations. When looking at the micromechanical failure process, the orientation of a band with matrix failure influences the softening behavior of the composite material. A band with shear failure that is oriented in fiber direction can develop into a macrocrack running between the fibers, which is a relatively brittle mechanism, while a band with matrix shear failure in any other direction is crossed by fibers, and the corresponding failure mechanism is therefore more ductile (see Fig. 10). In continuum models, however, this distinction cannot be made. In the homogenized continuum, both mechanisms are represented with a softening shear band with the same local stress-strain relation.

This pathology of continuum models with respect to matrix crack simulation is illustrated with the example of a uniaxial tensile test on a 10° off-axis unidirectional laminate. This is a standard test for the determination of the in-plane shear strength [123, 124]. The test is performed on a specimen with the shape of a parallelogram, where the oblique ends are used to remove stress concentrations from the boundaries. Experiments show brittle matrix failure: in a sudden event, the specimen breaks, with the crack running in the fiber direction. The case has been simulated by van der Meer and Sluys [125] with a continuum damage model of the type of Eq. (65) and with a softening plasticity model for orthotropic materials, both regularized with a rate-dependent term. Both models gave the same erroneous response.
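A short calculation shows why a 10° off-axis test probes the in-plane shear strength. Under uniaxial stress $\sigma_x$, the ply-frame stresses are $\sigma_1 = \sigma_x\cos^2\theta$, $\sigma_2 = \sigma_x\sin^2\theta$ and $\tau_{12} = -\sigma_x\sin\theta\cos\theta$. This is a minimal sketch that evaluates the plane-stress reduction of Eq. (46) with hypothetical strengths. For these values, more than 90 % of the matrix failure index comes from shear.

```python
import math

def off_axis_material_stresses(sigma_x, theta_deg):
    """Ply-frame stresses for uniaxial stress sigma_x at angle theta to the fibers."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    return sigma_x * c * c, sigma_x * s * s, -sigma_x * s * c   # sigma_1, sigma_2, tau_12

def matrix_tension_index(s2, t12, F2t, F12):
    """Plane-stress reduction of Eq. (46) (sigma_3 = tau_23 = tau_31 = 0), squared form."""
    return (s2 / F2t) ** 2 + (t12 / F12) ** 2

F2t, F12 = 60.0, 90.0                                    # hypothetical strengths, MPa
s1, s2, t12 = off_axis_material_stresses(1.0, 10.0)      # per unit applied stress
index_per_unit = matrix_tension_index(s2, t12, F2t, F12)
failure_stress = 1.0 / math.sqrt(index_per_unit)          # index scales with sigma_x**2
shear_share = (t12 / F12) ** 2 / index_per_unit
print(round(failure_stress, 1), round(shear_share, 2))
```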

The results obtained with the continuum damage model are shown in Fig. 11: the load-displacement diagram for two different meshes and the final deformation. The influence of the element size on the load-displacement behavior is negligible, which is related to the fact that the band with localized strain is wider than the elements, due to the viscosity term. The deformed mesh, which is taken from the coarse mesh analyses, clearly shows a failure pattern that is different from that observed in experiments; the failure band is not aligned with the fibers.

Off-axis tensile test: geometry and experimentally observed crack path (top) and load-displacement relation and final deformed mesh with regularized continuum damage model (bottom) [125]

Notably, there is a significant displacement perpendicular to the load direction. The deformation in the localization area is such that the strain in the fiber direction $\varepsilon_1$ remains relatively small. In the damage model, this is a consequence of the distinction made between fiber failure and matrix failure. Because of this, the model gives locally correct behavior: a stress state for which the transverse strength is exceeded never gives rise to large strains in the fiber direction. However, although the local behavior is correct, the global behavior is not. That $\varepsilon_1$ remains small is not sufficient to ensure that matrix failure develops in the fiber direction. The reason is that the direction of failure propagation in the model is governed by the stress concentration rather than by the fiber direction. This is a consequence of the homogenization that is fundamental to continuum models. In a homogenized model, the smeared crack will always propagate to where the stress is highest. In the real material, the very inhomogeneity of the material causes the crack to grow differently, as shown in Fig. 12.

This example clearly shows the consequences of the limitation of the continuum approach. The micromechanical cause for cracks to grow in the fiber direction is not present in continuum models, at least not as long as the model is local. This can be considered a special case of violation of the principle of separation of scales. In Sect. 1, the microscale has been introduced as the level where individual fibers and the matrix material are distinctly present, while on the mesoscale the material is homogenized. As such, an individual matrix crack is a typical microscale phenomenon. When it is brought to the mesoscale through homogenization, it is no longer individually represented. In reality, however, an individual matrix crack may grow very large and play a role on a higher scale. After homogenization in the micro-meso transition, this information is lost.

It is unlikely that failure mechanisms, in which large cracks in fiber direction play a role in different plies with different fiber orientations, can be predicted using state-of-the-art continuum models for ply failure, irrespective of the failure criteria and damage evolution laws that are applied. However, for other failure mechanisms, the continuum description serves well, e.g. when failure in all plies is localized in a single plane [121, 126, 127]. In some cases the matrix crack will emerge correctly, such as the split near a circular hole as reported by Cox and Yang [1]. In other cases, a good match in peak load values may even be found, such as reported by Abisset et al. [128] with very good predicted failure load levels in a series of complex test cases. But, as far as localized matrix failure in a single ply is concerned, the predictive quality of continuum models should be doubted. Unphysical failure mechanisms are introduced in the system and these may lead to erroneous results.

5 A Strategy Around Discrete Modeling of Matrix Cracks

On the mesolevel, where matrix and fibers are not modeled separately, it is necessary to enforce the orientation of the matrix cracks in order to describe the mechanisms realistically, as argued in the previous section. This calls for a discrete representation of individual cracks with a discontinuous approach. This can be achieved by inserting interface elements through the thickness of the ply at a priori selected locations. This strategy, first employed by Wisnom and Chang [129], gives good interaction with interface elements for delamination. Similar work has been done by De Moura and Gonçalves [130] and Yang and Cox [83]. Wisnom, Hallett and coworkers have further applied this on different notched and unnotched geometries with considerable success [58, 59, 85, 131, 132]. However, this strategy requires additional meshing effort and is less predictive because the possible crack locations have to be predefined. Therefore a mesh-independent representation of discontinuities with XFEM (see Sect. 2.2) is to be preferred for the simulation of matrix cracking. Techniques for mesh-independent representation of discontinuities have been applied in the context of matrix cracking by Iarve et al. [133–136], Yang et al. [137–139] and van der Meer et al. [86, 88, 140, 141].

Iarve et al. use PUFEM with a smooth enrichment function instead of the standard Heaviside enrichment. The model was first applied to matrix cracking in unidirectional composites by Iarve [133] and then to laminates by Mollenhauer et al. [134]. In these references, the matrix cracks were still inserted a priori without progressive damage modeling. Even so, comparison with moiré interferometry images showed that this representation of matrix cracks gives accurate stress fields in damaged composites. Progressive cracking and the interaction with delamination were added in a later publication [135], where cohesive cracks were inserted over the width of the specimen after the strength was violated in one point. Unnotched specimens with different layups were analyzed, and results were compared with experimental observations from Crossman and Wang [142] and Johnson and Chang [143]. A statistical strength distribution was used to obtain a random crack pattern, and the number of cracks was limited to a maximum number per ply. A continuum damage model for fiber failure has been added by Mollenhauer et al. [136], based on the formulation of Maimí et al. [117, 118]. Results are compared with overheight compact tension test results from Li et al. [144].

The formulation by Yang et al. is based on Hansbo’s version of XFEM, which they refer to as A-FEM. It was first introduced by Ling et al. [137] and applied to matrix cracking in laminates by Zhou et al. [138] and Fang et al. [139]. Zhou et al. [138] used the model to investigate the interaction and competition between matrix cracking and delamination and their sensitivity to the ratios between different material parameters. Fiber failure has been added by Fang et al. [139] as a sudden stiffness reduction after violation of a maximum strain criterion. Results obtained with the model are compared with experiments on double edge notched tension specimens by Hallett et al. [145] and again a good correlation in terms of damage progression and global response has been demonstrated [139].

The formulation by van der Meer et al. is also based on Hansbo’s method and introduced in Ref. [88]. Numerical aspects of the interaction between XFEM for matrix cracks and interface elements for delamination were investigated by van der Meer and Sluys [86] and a continuum damage model was added for fiber failure in a later publication [140]. There, results were validated against experiments by Spearing and Beaumont [146]. Further validation on experiments by Green et al. [147] and Li et al. [144] was presented in Ref. [141]. In the remainder of this paper, the main choices and findings by van der Meer et al. are discussed in more detail, providing an overview of the main issues for building a model around an XFEM representation of matrix cracks (in this section), as well as a detailed look under the hood of the algorithmic framework used (in Sect. 6) and a demonstration of the possibilities of the approach (in Sect. 7).

5.1 Fundamental Choices

With XFEM, initiation and growth of cracks can be simulated at arbitrary locations in the mesh. For this, generally two criteria are needed, the first is to judge whether the crack will grow and the second to determine in which direction the crack will grow. From this point of view, application of these methods to the simulation of matrix cracking in laminates is a simplification, because the second criterion becomes trivial: the direction of crack growth is always equal to the fiber direction. A matrix crack grows by definition between the fibers, and this can be numerically enforced by fixing the direction of crack growth (ϕ in Fig. 6 is set equal to θ in Fig. 7). As long as one layer of elements is used through the thickness of the ply, it is naturally assumed that matrix cracks always extend through the ply thickness. Because of this, complications in describing three dimensional crack paths (see e.g. [148]) are avoided. It is furthermore assumed that the matrix crack orientation is always perpendicular to the plane of the laminate. Therefore the crack topology can be described completely in the 2D midplane of the ply, even when 3D solid elements are used. The downside of this assumption is that the wedge effect that may occur in compressive laminate failure due to inclined matrix cracks [66] is not included. To date, XFEM for matrix cracking has only been applied to tensile load cases, where the assumption that cracks are perpendicular to the midplane is realistic.
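Because the crack direction is fixed to the fiber angle, a new crack segment in a ply element is fully defined by one point and θ. This is a minimal 2D sketch of the geometric part of splitting a linear triangle. It assumes a signed-distance (level set) description of the crack line. The function name and the use of triangles are hypothetical, and the degenerate case of a line passing exactly through a node is not handled.

```python
import math

def split_triangle(nodes, x0, theta_deg):
    """Signed distances of the nodes to a crack line through x0 along the fibers,
    and the points where that line cuts the element edges."""
    t = math.radians(theta_deg)
    n = (-math.sin(t), math.cos(t))        # crack normal = local 2-axis
    phi = [(x - x0[0]) * n[0] + (y - x0[1]) * n[1] for x, y in nodes]
    cuts = []
    for i in range(3):
        j = (i + 1) % 3
        if phi[i] * phi[j] < 0.0:          # edge i-j crosses the crack line
            a = phi[i] / (phi[i] - phi[j])
            (xi, yi), (xj, yj) = nodes[i], nodes[j]
            cuts.append((xi + a * (xj - xi), yi + a * (yj - yi)))
    return phi, cuts

phi, cuts = split_triangle([(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)], (0.25, 0.25), 0.0)
print(phi, cuts)   # nodes 0 and 1 lie on one side, node 2 on the other
```

The signs of `phi` tell which nodes belong to which part of the split element. In a Hansbo-type formulation, this is what determines the active nodes of each overlapping element.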

For the simulation of propagating matrix cracks, the cohesive approach is chosen over the brittle version with crack tip enhancement, for two reasons. First, cohesive tractions, and hence a fine mesh, are needed anyway for delamination. Second, it is not clear what the singular functions should look like for a crack tip in an orthotropic medium that is constrained by neighboring plies. With this choice, Hansbo's version of XFEM is the most favorable one from an implementation point of view.

For crack initiation and the insertion of new crack segments a stress-based criterion is used. The particular choice for the criterion in the work presented here is not related to a particular failure theory, but rather to the Benzeggagh-Kenane-criterion (Eq. (48)) used in the cohesive law (see Sect. 5.2). The stress is rotated to the material frame and then evaluated with the following expression, taking into account the fact that the local 2-axis is normal to the crack plane:

with

This criterion is evaluated in all elements in which cracking is allowed, taking into account the minimum crack spacing as described in Sect. 5.4. When the criterion is violated, the element is split in two and a cohesive segment is inserted between the two parts (see Fig. 6).
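The selection step described above can be sketched as a loop over candidate elements. The criterion itself is passed in as a placeholder callable, because its expression is not reproduced here. The spacing rule is also an assumption made for this sketch: a new crack is accepted only if its distance to every existing crack in the same ply, measured perpendicular to the fiber direction, is at least a minimum spacing. Elements are visited in order of decreasing criterion value so that the most critical element cracks first. All names are hypothetical.

```python
import math


def select_new_cracks(elements, criterion, existing_cracks, theta, min_spacing):
    """Return ids of elements in which a new fiber-aligned crack is inserted.

    elements        : dict id -> (centroid (x, y), stress data)
    criterion       : placeholder callable, value >= 0 means the criterion is violated
    existing_cracks : points (x, y) lying on cracks already present in the ply
    theta           : fiber angle of the ply (radians)
    min_spacing     : assumed minimum distance between parallel cracks
    """
    normal = (-math.sin(theta), math.cos(theta))  # normal to fiber-aligned cracks
    # visit the most critical elements first
    ranked = sorted(elements.items(), key=lambda item: criterion(item[1][1]), reverse=True)
    cracks = list(existing_cracks)
    selected = []
    for eid, (centroid, stress) in ranked:
        if criterion(stress) < 0.0:
            break  # list is sorted, so no remaining element violates the criterion
        too_close = any(
            abs((centroid[0] - p[0]) * normal[0] + (centroid[1] - p[1]) * normal[1]) < min_spacing
            for p in cracks
        )
        if not too_close:
            selected.append(eid)
            cracks.append(centroid)  # assumption: the new crack passes through the centroid
    return selected
```

Inserting cracks one at a time in order of criterion value prevents two neighboring elements from cracking in the same step when the spacing rule forbids it.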

5.2 Cohesive Law

In contrast with interface elements, XFEM requires an initially rigid cohesive law, because the cohesive segments are introduced at a nonzero stress level. In most studies on propagating cohesive cracks in XFEM or related formulations, decohesion is mode I driven, either by leaving out shear tractions altogether [36, 43, 149], by assuming a constant shear stiffness [42], or by assuming a decreasing shear stiffness in which the decrease is driven by the normal crack opening only [40, 150]. This simplification is compatible with a crack propagation procedure based on the direction of maximum principal stress, because mode I is then the dominant cracking mode. In the present case of matrix cracking, however, the crack propagation direction is independent of the stress field, and a complete mixed-mode formulation is needed. Such formulations were not developed in the initial explorations of XFEM.

It is possible to define an initially rigid mixed-mode damage law that computes the traction vector from the displacement jump (see e.g. Oliver [151] and Mergheim and Steinmann [152]). However, the traction is then not uniquely defined for zero crack opening (see Fig. 13; all iso-lines for the traction pass through 〚u〛=0). In a uniaxial case it is obvious that the traction should be equal to the strength. In a mixed-mode formulation, however, the strength is a surface in traction space, and the initial traction can be any point on that surface, each with zero opening. The traction evaluation itself remains feasible, because the crack opening after a finite load increment will not be exactly zero. However, the highly nonlinear nature of the traction-separation law around the origin endangers the stability of the analysis. Very small variations in nodal displacements give rise to large changes in nodal forces and, more critically, to large changes in the tangent matrix, which leads to poor convergence.
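The non-uniqueness at zero opening can be made concrete with a deliberately simple, hypothetical initially rigid law. It is not the formulation of [151] or [152]. In this law, the traction direction follows the jump direction, the initial traction lies on an elliptical strength surface, and the traction softens linearly with the jump norm. Approaching 〚u〛 = 0 from different mode angles gives different limit tractions, all on the strength surface. A finite-difference tangent entry grows roughly as 1/‖〚u〛‖. Strengths and the critical opening are made-up values.

```python
import math

# Hypothetical parameters of an illustrative initially rigid mixed-mode law
F_N = 30.0      # normal strength
F_S = 60.0      # shear strength
DELTA_C = 0.01  # jump norm at which the traction vanishes


def rigid_traction(u_n, u_s):
    """Traction (t_n, t_s) for the jump (u_n, u_s): starts on the elliptical
    strength surface and softens linearly with the jump norm."""
    norm = math.hypot(u_n, u_s)
    if norm == 0.0:
        raise ValueError("traction is undefined at zero crack opening")
    m_n, m_s = u_n / norm, u_s / norm        # direction of the jump
    scale = max(0.0, 1.0 - norm / DELTA_C)   # linear softening
    return F_N * scale * m_n, F_S * scale * m_s


def limit_traction(angle, eps=1e-12):
    """Traction for a vanishing jump approached along the given mode angle."""
    return rigid_traction(eps * math.cos(angle), eps * math.sin(angle))


def tangent_entry(u_n, u_s, h_rel=1e-6):
    """Central finite-difference estimate of d t_n / d u_s."""
    h = h_rel * math.hypot(u_n, u_s)
    return (rigid_traction(u_n, u_s + h)[0] - rigid_traction(u_n, u_s - h)[0]) / (2.0 * h)


for deg in (0, 30, 60, 90):
    t_n, t_s = limit_traction(math.radians(deg))
    surface = (t_n / F_N) ** 2 + (t_s / F_S) ** 2  # equals 1 on the strength surface
    print(f"mode angle {deg:2d} deg: t = ({t_n:6.2f}, {t_s:6.2f}), surface value {surface:.6f}")
```

Every limit traction is admissible, so the law alone cannot tell which one is correct. The blow-up of the tangent near the origin is the convergence problem described above.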

However, more information on the initial traction is available: the cohesive traction acting on the crack surface must be in equilibrium with the stress in the bulk material next to the crack:

\[\mathbf{t} = \boldsymbol{\sigma}\,\mathbf{n}\]

where σ is the stress tensor and n the normal vector of the crack surface. Notably, since the crack is parallel to the fiber, the vector σn contains the material stress components \(\sigma_2\), \(\tau_{12}\) and \(\tau_{23}\). The value of σn upon crack initiation is known and can be used to evaluate the initial traction with two different concepts. The first has been introduced by Moonen et al. [153] and includes the term σn from the neighboring bulk material directly in the cohesive law. The second concept, by Hille et al. [154], is to use a law with a finite initial stiffness and then shift the origin of the law such that the traction at zero opening matches the stress at the moment the crack segment is introduced.
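The statement that σn contains \(\sigma_2\), \(\tau_{12}\) and \(\tau_{23}\) can be checked with a short computation. The sketch assumes that the ply fiber angle θ is measured from the laminate x-axis about the out-of-plane z-axis. It forms t = σn with n equal to the material 2-axis and projects t onto the material base vectors. This is equivalent to rotating the full tensor to the material frame and taking the column that belongs to the 2-axis. The function name is hypothetical.

```python
import math


def crack_plane_traction(sigma, theta):
    """Traction components (tau_12, sigma_2, tau_23) on a fiber-parallel crack.

    sigma : 3x3 stress tensor (nested lists) in laminate axes (x, y, z)
    theta : fiber angle of the ply about the z-axis, from the x-axis (radians)
    """
    c, s = math.cos(theta), math.sin(theta)
    e1 = (c, s, 0.0)      # fiber direction, material 1-axis
    e2 = (-s, c, 0.0)     # transverse direction, material 2-axis = crack normal n
    e3 = (0.0, 0.0, 1.0)  # thickness direction, material 3-axis
    # t = sigma n, still expressed in laminate axes
    t = [sum(sigma[i][j] * e2[j] for j in range(3)) for i in range(3)]

    def dot(a, b):
        return sum(ai * bi for ai, bi in zip(a, b))

    # projecting on the material base vectors gives the material components
    return dot(e1, t), dot(e2, t), dot(e3, t)


# usage: uniaxial stress of 100 in x on a 45 degree ply
print(crack_plane_traction([[100.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]], math.pi / 4))
```

For the 45° ply under uniaxial tension, half of the load appears as transverse normal stress and half as in-plane shear on the crack plane, which is why a mixed-mode cohesive law is required.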

Van der Meer et al. have developed two cohesive formulations for composites. Each makes use of one of these concepts, and both start from the phenomenological mixed-mode law by Benzeggagh and Kenane [80]. The version based on Moonen’s idea can be found in [88], and the version based on Hille’s idea in [141]. Both implementations have been validated in simple mixed-mode cases, but the second was found to be more robust in complex cases. The formulation of this second, shifted cohesive law is obtained as follows. Let Turon’s damage law from Sect. 3.1 be written as an operator \(\mathcal{T}\) that relates the evolution of the traction t to the evolution of the displacement jump 〚u〛:

where t is used to indicate the history-dependence. The shifted version uses exactly the same operator, but works on a translated argument:

with

where the translation 〚u〛0 is computed from the bulk stress at the location of the cohesive integration point at the instant before the crack segment is introduced:

Here, K is the initial elastic stiffness in the cohesive law and  is the traction on the crack surface computed from the bulk stress at the moment of introduction of the crack segment. This leads to the desired initially rigid behavior, as illustrated in Fig. 14. Moreover, the traction-separation relation is not singular as long as K is finite, and, initially, for the undamaged cohesive integration point with zero crack opening, the traction is in equilibrium with the stress in the adjacent bulk material.
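The shifted-origin idea can be sketched as a small class. For brevity, the underlying law is a scalar damage model with linear softening in terms of the jump norm, used as a stand-in for Turon’s mixed-mode law of Sect. 3.1, which is not reproduced here. The origin shift is 〚u〛0 = t_bulk/K, where `t_bulk` is this sketch’s name for the traction computed from the bulk stress when the segment is inserted. All names and parameter values are hypothetical.

```python
import math


class ShiftedCohesiveLaw:
    """Cohesive law evaluated at a translated argument [[u]] + [[u]]_0.

    Stand-in damage law: scalar damage with linear softening in the jump
    norm (not Turon's mixed-mode law).
    """

    def __init__(self, stiffness, strength, delta_f, t_bulk):
        self.K = stiffness                   # finite initial stiffness K
        self.delta_0 = strength / stiffness  # jump norm at damage onset
        self.delta_f = delta_f               # jump norm at complete decohesion
        # origin shift [[u]]_0 = t_bulk / K, so that the traction at zero opening is t_bulk
        self.u0 = [ti / stiffness for ti in t_bulk]
        self.kappa = self.delta_0            # history variable: largest jump norm reached

    def traction(self, jump):
        u = [ji + u0i for ji, u0i in zip(jump, self.u0)]  # translated argument
        delta = math.sqrt(sum(ui * ui for ui in u))
        # history is updated immediately here; an implicit code would
        # commit it only after the load step has converged
        self.kappa = max(self.kappa, delta)
        if self.kappa <= self.delta_0:
            d = 0.0
        else:
            k = min(self.kappa, self.delta_f)
            d = self.delta_f * (k - self.delta_0) / (k * (self.delta_f - self.delta_0))
        return [(1.0 - d) * self.K * ui for ui in u]


law = ShiftedCohesiveLaw(stiffness=1.0e5, strength=30.0, delta_f=0.01, t_bulk=[20.0, 5.0])
print(law.traction([0.0, 0.0]))  # matches the bulk traction at zero opening
```

Because K stays finite, the traction is a smooth function of the jump at the moment of insertion, while a large K makes the added compliance negligible.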


5.3 Interaction with Delamination

This section deals with the numerical representation of the interaction between matrix cracks and delamination when the former is modeled with Hansbo’s method and the latter with interface elements. The investigations are performed in a two-dimensional framework in which each ply is modeled with a single layer of plane stress elements, but the same reasoning holds for a three-dimensional framework with one layer of solid elements per ply.

When a discontinuity appears in the displacement field of one of the planes connected by interface elements, this obviously affects the relative displacement field between the planes. In theory, using XFEM for the ply requires that the interface elements connecting the plies be adapted accordingly, as shown in Fig. 15. Each of the plane displacement fields \(\mathbf{N}\mathbf{a}_{\text{bottom}}\) and \(\mathbf{N}\mathbf{a}_{\text{top}}\) in the definition of the interface displacement jump in Eq. (17) may become discontinuous as in Eq. (25). This should be taken into account in the evaluation of the interface displacement jump. In practice, this would entail that, upon introduction of phantom nodes, the connectivity and integration scheme of the interface elements are adapted accordingly, including transfer of history variables. Moreover, the possibility that both planes connected by a single interface element are cracked has to be accounted for.

However, with Hansbo’s method, more than with traditional PUFEM, the nodal displacements related to the original nodes of a cracked element remain meaningful, because these nodes always lie in the active part of the overlapping elements (see Fig. 6). When the interface elements are not adapted upon cracking of the plies, the inconsistency in the displacement field is limited to the interior of the element. Since high accuracy in the displacement field at sub-element level is generally not pursued in finite element analysis, the consequences of using such a nonconforming displacement field may very well be acceptable. Moreover, the significance of an error at sub-element level vanishes upon mesh refinement. At the nodes, the unadapted displacement field is equal to the discontinuous field: the relative displacement between each pair of original nodes remains the real relative displacement of the corresponding pair of material points. Therefore, if a nodal integration scheme is used for the interface element, the displacement jump of the unadapted interface element evaluated at the integration points is exact. In that case, not updating the interface element amounts to little more than under-integration of the displacement jump field.
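The claim that nodal integration makes the unadapted jump exact can be checked in a 1D analogue. In this analogue, an interface element of length L connects an uncracked top ply to a bottom ply that is cracked at x = c with the phantom node method. Left of the crack, the real node 0 and the phantom copy of node L are active; right of the crack, the phantom copy of node 0 and the real node L are active. The unadapted interface element interpolates only the real nodal values. The nodal values below are made up, and the 1D reduction is a simplification of the 2D situation in the text.

```python
import math


def shape(x, length):
    """Linear 1D shape functions on [0, length]."""
    return 1.0 - x / length, x / length


def jump_error(x, length, crack_at, real, phantom):
    """Exact minus unadapted interface jump at position x.

    real    = (a0, aL): displacements of the original nodes of the cracked bottom ply
    phantom = (a0_ph, aL_ph): displacements of the phantom copies of those nodes
    The top-ply contribution cancels, so only bottom-ply displacements matter.
    """
    n0, nl = shape(x, length)
    if x < crack_at:  # left part: real node 0 and phantom copy of node L are active
        exact_bottom = n0 * real[0] + nl * phantom[1]
    else:             # right part: phantom copy of node 0 and real node L are active
        exact_bottom = n0 * phantom[0] + nl * real[1]
    unadapted_bottom = n0 * real[0] + nl * real[1]  # interpolates original nodes only
    return unadapted_bottom - exact_bottom


LENGTH, CRACK_AT = 1.0, 0.4
REAL, PHANTOM = (0.0, 1.0), (0.8, 0.1)  # hypothetical nodal values
gauss_points = [0.5 * LENGTH * (1.0 - 1.0 / math.sqrt(3.0)),
                0.5 * LENGTH * (1.0 + 1.0 / math.sqrt(3.0))]
nodal_points = [0.0, LENGTH]            # Newton-Cotes (trapezoidal) points

for name, points in (("Gauss", gauss_points), ("Newton-Cotes", nodal_points)):
    errors = [jump_error(x, LENGTH, CRACK_AT, REAL, PHANTOM) for x in points]
    print(name, [round(e, 4) for e in errors])
```

The jump error vanishes at the nodal points and is nonzero at the interior Gauss points. The same mechanism produces the artificial bridging observed with Gauss integration in the open hole example.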

A schematic representation of the mechanical process in which matrix cracking and delamination interact is given in the top row of Fig. 16. The material in two plies with in-plane dimensions corresponding to a single quadrilateral finite element is considered. First, a matrix crack appears in the transverse ply. Next, significant crack opening requires that minor delamination takes place. Finally, the delamination front propagates beyond the boundaries of the element domain. The numerical representation of the interaction with an unadapted interface element and nodal integration is shown in the bottom row of Fig. 16. Integration points in the interface element are indicated as springs. With this simplified description, limited crack opening may occur without any delamination, but major delamination still results in interface damage.

With unadapted interface elements, minor delamination is not captured. However, even if they were adapted, the complex micromechanical stress and displacement fields that correspond to this state would not be represented accurately. Furthermore, with unadapted elements, the final amount of dissipated energy is correct when major delamination occurs on both sides of the splitting crack, and it approaches the correct value upon mesh refinement when major delamination occurs on only one side of the crack. Therefore, van der Meer and Sluys [86] have proposed to use unadapted interface elements for delamination in combination with XFEM for matrix cracking. This choice is validated in the following example.

Open Hole Laminate

Above, it has been argued that an error is introduced by not updating interface elements when neighboring solid elements are cracked, but that this error can be expected to vanish upon mesh refinement. Here, results are shown from an investigation into the magnitude of this error with a mesh-refinement study for a case in which interaction between matrix cracking and delamination is essential [86]. A \([\pm 45]_s\) laminate with a circular hole under tension is considered (see Fig. 17). The location of two cracks per ply is predefined in order to keep the response relatively simple.

Matrix cracks grow from the hole to the long edge. These cracks alone, however, are not sufficient to form a mechanism, because of the mutual constraint between the two plies. The load is transferred via the interface, which causes delamination to grow away from the cracks until the area between them is completely delaminated. The failure mechanism is illustrated in Fig. 18, where the deformation shortly before final failure is shown. Failure is complete on one side of the hole, while delamination between the matrix cracks on the other side of the hole is still developing. The asymmetry in the response is due to the unstable nature of the delamination process and is triggered in the simulations by asymmetry in the mesh.

Six different meshes and two different integration schemes for the interface elements are used. All meshes are generated with the same mesh generator [155]. From one mesh to the next, the typical element length is scaled throughout the domain by a factor \(1/\sqrt{2}\), which increases the number of nodes by a factor of approximately two. The triangular interface elements are integrated with either a three-point Gauss scheme or a three-point Newton-Cotes scheme. Load-displacement diagrams for three different meshes are presented in Fig. 19. The dissipation-based arclength method (see Sect. 6.1) allows the equilibrium path to be traced without difficulty, including two sharp snapbacks, each corresponding to delamination on one side of the hole. Differences between the results for the different meshes are limited, and with the two finer meshes the agreement is especially good. Fig. 20 shows the trend in peak load upon mesh refinement for both integration schemes. With Newton-Cotes integration, the results are practically equal for all meshes. With Gauss integration, the peak load approaches the mesh-objective value obtained with Newton-Cotes integration.

Load-displacement relation for open hole laminate with limited number of cracks obtained with three different meshes and two different integration schemes [86]

Peak load for different meshes for open hole laminate with limited number of cracks [86]
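The two integration schemes compared in this study can be written down on the reference triangle. The sketch assumes the standard three-point rules, since the point coordinates are not given in the text. The Gauss rule uses interior points and is exact up to quadratic functions. The Newton-Cotes rule uses the vertices, which coincide with the nodes, and is exact for linear functions only. Both rules integrate a linear jump field exactly. The practical difference for unadapted interface elements is that only the Newton-Cotes points sample the jump where it is exact. The last line checks the mesh-refinement arithmetic: scaling the element length by \(1/\sqrt{2}\) in 2D multiplies the number of nodes by about 2.

```python
import math

# Standard three-point rules on the reference triangle (0,0)-(1,0)-(0,1), area 1/2
GAUSS_3 = [((1 / 6, 1 / 6), 1 / 6), ((2 / 3, 1 / 6), 1 / 6), ((1 / 6, 2 / 3), 1 / 6)]  # interior points
NEWTON_COTES_3 = [((0.0, 0.0), 1 / 6), ((1.0, 0.0), 1 / 6), ((0.0, 1.0), 1 / 6)]     # vertices = nodes


def integrate(rule, f):
    """Approximate the integral of f(xi, eta) over the reference triangle."""
    return sum(w * f(xi, eta) for (xi, eta), w in rule)


def linear(xi, eta):
    return 1.0 + 2.0 * xi + 3.0 * eta  # exact integral 4/3


def quadratic(xi, eta):
    return xi * xi                      # exact integral 1/12


for name, rule in (("Gauss", GAUSS_3), ("Newton-Cotes", NEWTON_COTES_3)):
    print(name, integrate(rule, linear), integrate(rule, quadratic))

# element length scaled by 1/sqrt(2): node count in 2D grows by (sqrt(2))**2, about 2
growth = (1.0 / (1.0 / math.sqrt(2.0))) ** 2
```

The lower polynomial accuracy of the Newton-Cotes rule does not matter here, because the interpolated jump in an interface element with linear shape functions is itself linear.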

Furthermore, Fig. 21 visualizes the dissipation at the end of all twelve analyses. Again, the results with Newton-Cotes integration converge to a unique solution very quickly. The energy dissipated by delamination in the analyses with Gauss integration decreases upon mesh refinement. That more energy is dissipated before a mechanism forms with Gauss integration can be understood by considering that the unadapted interface elements bridge the matrix crack. Eventually, the interface is damaged on both sides of the crack, because in the unadapted interface element relative displacements become large over the whole element domain, while in the real discontinuous displacement field significant relative displacements occur on one side of the crack only. This is illustrated in Fig. 22, which shows the area with delamination damage and the location of the matrix cracks. With Gauss integration, the final delamination front lies outside the area bounded by the splitting cracks, while with nodal integration the delamination front lies, on average, on the cracks, as it should.

Energy dissipation for different meshes for open hole laminate with limited number of cracks [86]

Final matrix cracks and delamination on one side of the hole with Gauss integration (left) and Newton-Cotes integration (right) and \(n_n \approx 2000\) [86]

It is concluded from this example that unadapted interface elements can be used without reservation between elements that are cracked with the phantom node method. When unadapted interface elements are used, a nodal (Newton-Cotes) integration scheme is preferred, because with such a scheme the displacement jump is exact in all integration points and no artificial bridging is introduced.

5.4 Crack Spacing

In a mesolevel laminate model, the strains in different plies are necessarily conforming until delamination takes place. Matrix cracks that are introduced as a discontinuity in the displacement field do not change this, except at sub-element level. In other words, introducing transverse cracks after violation of the failure criterion does not necessarily lead to localization of deformation and hence to unloading of the surrounding material. This gives stress-based laminate analysis with matrix cracking an ill-posed character. In the absence of delamination, the stress keeps increasing in every uncracked element. Eventually, the matrix strength may be exceeded throughout the domain. With a rigid interface, the stress field may then give rise to an infinite number of cohesive cracks with infinitesimal crack spacing.