Abstract

Eminently, the countries of developing state have their economy based on agricultural crop yieldings. To retain the economic growth of these countries, the agricultural plants’ disease detection and proper treatment are a leading factor. The work available in the literature basically features pull out to classify the leaf images due to which the classification performance suffers. In the proposed work, we tried to resolve this rough image dataset problem. The proposed technique initially localizes the leaf region by utilizing the color features of the leaf image followed by mixture model-based county expansion for leaf localization. The classification of the leaf images depends on the features of discriminatory properties. The characteristics features of the diseased images show various types of patterns into the leaf region. Here, we utilized the features discriminable property using the Fisher vector in terms of different orders of differentiation of Gaussian distributions. The performance of the proposed system is analyzed using the PlantVillage databases of common pepper, root vegetable as potato, and tomato leaf images using a multi-layer perceptron, and support vector machine. The implementation results confirm the better performance measure of the proposed classification technique than the state of arts and provide an accuracy of 94.35\(\%\) with an area under the curve 94.7\(\%\).

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The farming landmass is sufficient as required for feeding crop sourcing in today’s world. The economizing phase of developing countries is extremely dependent on agricultural productiveness. The plant leaves-based disease detection in the field of agriculture, at the initial stage, [1] performs a paramount impact to sustain their economy.

Soares et al. [2] presented a study on leaf images segmentation in semi-controlled conditions (LSSC) [3]. Singh et al. worked on localization-based classification using soft-computing technique (LCSCT) [4]. Biswas et al. minimized the redundancy for reclining to enhance the image color differences and performed segmentation through the fuzzy logic-based C-mean congregation (SFCC) [5]. Aparajita et al. [6] worked on an automatic system for late blight disease detection in potato leaf images. It used a segmentation using statistical features-based adaptive thresholding (SFAT) in leaf image. Yanikoglu et al. [7] worked on a self-operating plant identification system to identify the plant variety in a considered imaginarium. An imaginarium pattern recognizing system for plant disease prediction (IRPD) has designed in [8]. Sabrol et al. [9] conducted classification using the color, shape as well as texture features to separate the healthy and diseased tomato leaf images. Islam et al. presented a plane disease diagnosis approach using machine learning (PDML)-based [10] image processing. It classifies the healthy and unhealthy types of potato plants. The disease classification using a support vector machine was performed through these segmented images. Patil et al. [11] introduced an automated disease management techniques in potato (ADMT) [12].

The leaf color-based image analysis was performed in [13], for the identification and disease classification. Lowe et al. [14] analyzed the hyperspectral images for plant health monitoring and prediction. Devi et al. [15] analyzed the leaf disease of rice plants using a wavelet transform, scale-invariant feature transform (SIFT), and grayscale co-occurrence matrix technique. It employed the multiclass SVM and the Naive Bayes classifiers to classify the leaves images. Khamparia et al. [16] worked on a hybrid technique for the crop disease detection through combined features of an autoencoder and the convolutional neural network (CNN) model. Rangarajan et al. [17] used the transfer learning using the Pre-trained Visual Geometry Group 16 (VGG16) network for eggplants disease classification. The evaluation of the dataset was performed through augmentation using grayscale image along with other color spaces like hue saturation value (HSV), and YCbCr [18].

Wang et al. [19] have been accomplished a deep CNN-based flora disease intensity evaluation system. Kaur et al. [20] presented a composition of the k-means congregation for semi-automatic technique (KCM) to classify the plant diseases. Khan et al. [21] improvised a genetic algorithm-based feature selection [22] for apple disease identification and recognition (GFSD). Sladojevic et al.[23] presented a deep learning technique to classify and identify the plant diseases using the leaf images. Brahimi et al. [24] worked on a CNN-based algorithm for symptom detection and classification in tomato (SDCT) leaves. A great advantage of CNN is the automatic feature extraction directly from raw images. Ferentinos et al. [25] also worked on deep CNN models to carry out plant disease detection and diagnosis (PDDD) through the leaves images of plants. Bharali et al. [26] worked on deep learning-based leaf image analysis (DLLA) to identify and classify the plant diseases. Hang et al. [27] presented deep learning for disease identification and classification (DLDIC) in plant leaves.

The existing work faces the shortcoming of different varieties to classify the multi-class problem of the contaminated data along with high time complexity. The system accomplishment quiet shortfalls the accurateness of segmentation results. In this work, a novel leaf properties-based localization technique is presented for the region of interest segmentation and classification. The proposed algorithm gives a direction over the conventional techniques of object localization in terms of precision and computational time. In order to segment the disease affected areas, we have fused the pit-based region growing technique [28]. The region growing has performed using the mixture model for the leaf area refinement. The SIFT feature-based Gaussian distribution of localized images is utilized for the discriminant feature extraction. The compact representation of these features as Fisher vectors provides optimal solutions for the healthy and diseased leaves classification [29].

Further, the structure of the paper goes as follows. In Section 2, we have explicated the dataset and proposed an object segmentation technique and the essential conceptual background. Section 3 bestows the frame of the proposed image sectionalization technique. The distinguished image object properties-based feature extraction and classification models are discussed in Sect. 4. In Section 5, we have explored the result acquired from assorted systems and juxtaposed to the projected approach. Finally, Section 6 concludes the work and provides future research guidelines.

2 Dataset

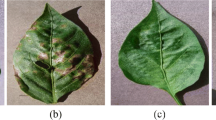

2.1 PlantVillage bell pepper dataset

The plant image dataset repository for the image-based disease analysis is available as a PlantVillage dataset. It contains 14 different crops dataset that has 54,309 labeled images. The bell pepper database has two distinct categories for healthy and disease. Sample images from the database are shown in Fig. 1. The number of images of each class is mentioned in Table 1. It contains 997 images of bacterial spot and 1478 images of healthy class.

2.2 PlantVillage potato dataset

The big database named as PlantVillage for containing dataset of plant provides multiple crop dataset. There are three different classes for potato images including healthy ones. The image sets of early and blight containing 1000 images each while a healthy image set contains 152 images.

2.3 PlantVillage tomato dataset

The PlantVillage database has different kinds of disease affected tomato leaf images. There are ten different classes for tomato plant leaf images encompassing a healthy class. The target spot contaminated imaginarium (or images) is 1404, mosaic virus affected category has 373. The yellow leaf curl virus infected images are 3209, tomato plant leaves imaginarium with bacterial spot are 2127. The early blight and healthy categories have 1000 and 1591 images, respectively. The late blight and leaf mold categories have 1909 and 952 images. The Septoria leaf spot and spider mites imaginarium varieties have 1771 and 1676 images as shown in Table 3.

3 Proposed image segmentation method

The complete model for plant image-based disease classification is shown in Fig. 2. It consists of two steps: 1) imaginarium localization through sectionalization and 2) attribute extraction for categorization.

The preferred preprocessing techniqueology explicates a three-stage system. The foremost stage environs the techniques for forefront separation, i.e., extraction of the leaflet from background. The penultimate step circumscribes initial pit detection passed through region growing process. Fig. 2 delineates the workflow of the proposed algorithm. Let us explore each one of them in-depth in the following section.

3.1 Preprocessing

The images contaminated with some types of noise into the leaves region. The primary cause of the noise is the speckle noise that basically introduced because of the environmental effects on the sensors at the time of image acquisition. To overcome it we apply the pre-processing on these images. This has performed by investigating every pixel and frame a histogram chart for the voxels that lie within the leaf region. The histogram of the pixel’s intensity undertakes the auto-thresholding process [30]. The voxel of intensity value lower than the localized threshold has been taken as the background voxel.

3.2 Primary Pit Selection

The pit point initialization primarily requires prior information of the considered leaf object. The plant leaf imaginarium for the leaf county segregation simply provides a color-deployed pit point indication. The color imaginarium analysis shows that the green color component is with higher intensity values in the leaf area of the image as shown in Fig. 3. The red color component is moderate values while the blue color component is less intense. The ratio of green to blue (G/B) color shows pit point initialization very efficiently as compared to the green to red (G/R) color ratio.

The color component (Comp.) analysis of leaf images (from top to bottom) Original image, red, green, blue color components, fifth row shows the green to red (G/R) ratio with binary G/R map in sixth row, the green to red ratio in seventh row with binary G/B map in last row (the maps are created using Otsu’s threshold)

Global thresholding techniques can be applied for the separation of the intensity level of the foreground i.e. the imaginarium pixels encompassing the leaf area from background [31]. Nevertheless, the leaf image inspection shows that the mean intensity values are disparately laid out across the complete background owing to that the leaf borders may be misleading. Therefore, it is not possible to attain acceptable outcomes through global thresholding techniques. As a remedial measure to the aforesaid problem, our approach circumscribes both the local and global thresholding techniques. In the local regions, the regional thresholding technique employs an customizable valley prong value. Thereby, it can possibly mitigate the variations of intensity levels throughout the background [32]. Further, the regional thresholding approach encompasses the foreground imaginarium by employing the global threshold. The window expanses as well as the sliding window dimensions have a remarkable impact on the reliability of the approach chosen to opt threshold as shown in Fig. 4.

The precise leaf county marking in imaginarium has been performed with a square window of size 9x9 or greater otherwise there is an issue of under segmentation as depicted in Fig. 4. This theory is true for the counter case too. Therefore, the choice of apt window expanse is of utmost pertinence to this process. The results obtained using various window sizes are illustrated in Fig. 4.

3.3 County expansion

The equivalent neighboring pixels in terms of properties are chosen from given initial pits and are appended for region growing. The operational activity is nonlinear in nature prevail to the morphology features or shape of an image is labeled as the morphological operations. A repetitive morphologic erosion [33] has employed to contract the leaf borderline and secure the solid initialization marking of the leaf. Primarily, the region growing framework is employed to acquire the circumference of the homogeneous county. Therefore, the procured outcome was not the exact imitation of the leaf area. To mitigate this, the mixture model-based county expansion technique [28] has been applied. It has developed from the county expansion method and is employed to intensify the outcome. Global Gaussian disseminations have been amalgamated with the prior knowledge acquired from the county expansion technique.

Let an image Y containing pattern X of K-classes in which a point l having intensity \(y_l\) is categorized as a member of class \(x_l \in \{1,\ldots K\}\). The mathematical model description of \(k^{th}\) class is the conditional probability \(P(y_l|x_l = k)\) [34]. The \(k^{th}\) class of the model is given in terms of linear MM:

The parameters y, \(K_0\), and \(\alpha _k\) representing the intensity of pixel offered system offset, and MM lifeline parameters, respectively. The parameter \(\xi _B\) denotes the background pixels distribution that obeys the normal distribution having mean and variance \(K_0\) and \(v_B\), respectively, with intensity statistics of \(k^{th}\) category pixels being \(\xi _{Sk} \) given in terms of the negative-binomial distribution (NBD) having parameters \(\mu _{Sk}\) and \(v_{Sk}\) as mean and variance, respectively [34].

The region growing is basically determined through a homogeneity property to establish a regional threshold using the intensity values of the commonly focused datasets. In general, it utilizes the background statistics and the signal distributions of the confocal data collection along with a linear mixture model for probability determination where the considered pixel values are discriminated as the background or foreground. These probabilities give the direction to define the rules of region growing approach.

The initial pit is taken as the center of the considered spherical region (or spheres). Then, the locality-based homogeneity property is developed focusing on the pit in the image. The dimension of considered volume be the trade-off between the local region of the segmentation and Gaussian mixture model (GMM) model fittingness and by default is set to \(N/8 \times M/8 \times 3\), where M and N represent the image stack dimensions. And the patch sizes are taken of not smaller than \(32\times 32\times 3\) to fulfill the requirement of data points to fit the GMM.

The Otsu [35] thresholding-based segmentation is an optimum option to multi-modal distributions [36], while the background considered as having the normal distribution with the negative binomial of signal, is fitted through an EM approach [28] on the distribution of crop pixel intensity. The iterative region growing process for different iterations (Ite = 10, 20, ..., 100) is shown in Fig. 5.

4 Attributes extortion and classification

The feature extortion for the simplification of the complex image features has been employed for the classification system.

4.1 Fisher vectors (FV)

The FV [37, 38] is computed using the Fisher Kernel (FK) that describes a feature by probability density function-based gradient vector using scale-invariant feature transform (SIFT) [29]. The murky depiction is given in terms of the GMM feature fitting and encoding the log-likelihood model’s derivatives with respect to specified parameters as shown in Fig. 6. A set of attributes computed using a specified probability distribution. The evaluated attributes are associated with their gradient that directs the data fitting to the Gaussian models appropriately.

The FK having well-defined statistical features as a local descriptor and Gaussian fitting centers are defined as FV. A set of complete features such as mean and covariance describing every individual Gaussian model has utilized to represent every object of interest.

In our experiments, we have taken the patch size of 24x24 for scale-invariant feature transform (SIFT) feature extraction followed by PCA to compact the size of the descriptors of SIFT from 128 to 64. The FV size for K Gaussian distributions is defined as 2Kd, where d is the feature vector dimension. The FV dimensionality is for K=256 and d=64 is 16384. The performance measure of the FV is optimized using the signed square-root followed by the \(L_2\)-normalization [29].

4.2 Classifiers

The simple neural network-based classifier as a multilayer perceptron model (MLP) [39] has been used for the two-class classification model having some nonlinearity. The nonlinearity is introduced by a rectified linear unit (ReLU) activation obligation in the entire constituent of the vectors, albeit for the last classification layer a softmax obligation has been used as an incitement for the conceptualized MLP system having four layers conception.

A two-category classification using a support vector machine (SVM) [40] offers greater flexibility of class separation. It is the most protruding model among all available classifiers the classification [41]. The fundamental mathematical formulation of SVM is explained as

subject to constraint \( y_j (\bar{w}.\bar{x}+b) \ge 1-\zeta _j \) for \(j= 1,2,3,\ldots ,N\).

A soft-max tunning measure C is adjusted for providing an adjustable edge to SVM. Additionally, the nonlinearity has been instigated by the radial basis function kernel which can be explained in terms of the Hilbert space transformation of the image. The experiments using tenfold cross-validation are performed with softmax parameter \(\gamma \) = 1 and \(C=1\) for training as well as testing of the classification model.

4.3 Performance measures

The performance specifications used for the segmentation work evaluations are \( F_1 \)-score, Dice coefficient (DC) [41] and modified Hausdorff distance (MHD) [42]. The accuracy metric (Ac) [43, 44] and receiver operating characteristic curve (ROC) [45], along with the area under the curve (AUC) [45], are measured to evaluate the performance of classification technique. For the high AUC measure, classification outcomes perform better. Generally, the evaluation metrics are utilized to measure the classifier performance and are given as;

where TP represents the number of appropriately categorized positive samples, TN denotes the number of appropriately categorized negative samples, while FP denotes the number of incorrectly (or falsely) categorized negative samples and FN is the number of falsely categorized positive samples. TNR is the true negative (that is the majority) rate, and FPR defines the false positive (that is the minority) rate.

where m is the number of classes. The G-mean measure is the accuracy ratio of minority grade to majority grade. This measure tries to balance by enhancing each class’s accuracy in unbalancing databases too because the overall accuracy does not provide enough information for class imbalance problems [46]. G-mean computes the effectiveness of the technique by considering the skewed class distributions [46]. The ROC curve [47] computes the accuracy of the system by varying the cut-off value to analyze the model score and obtain the various values of TPR on Y-axis and FPR on X-axis. The AUC [45, 48] can be used to measure the classifier with an idealistic point at (0, 1) that specifies the correctly classified all minority samples and non of the majority sample is wrongly classified to minority class sample. For higher values, the AUC performance of the classifier is better. The accuracy is defined as follows:

5 Results and discussion

The result analysis of the preferred localization deployed classification method is performed through the PlantVillage datasets of three crops, which cover the leaves of pepper plant, potato plants, and tomato leafage. The proposed model was implemented using the packages of Python, Keras [49], Scikit-learn [50], and TensorFlow [51] on the personal system (Core-i5 CPU, Clock 2.30 GHz, and Random Access Memory: 4 GB).

5.1 Evaluation of segmentation work

The segmentation performance of the LSSC [2] technique shows 0.877 \(F_1\)-score with 0.721 DC and 10.72 MHD. The SFAT [6] technique offered 0.875 \(F_1\)-score and 0.763 DC along with 10.25 MHD. The proposed segmentation technique provides average \( F_1 \)-score, DC and MHD of 0.916, 0.824, and 7.29, respectively, which is 5\(\%\) and 7\(\%\) better than SFAT [6] technique.

The computational complexity of the SFCC [5] and SFAT [6] techniques is of the order of \(O(N^3)\) while for the LSSC [2] and the proposed technique has in the order of \(O(N^2)\).

5.2 Classification work evaluation

The classification performance using MLP and SVM for bell pepper, potato, and tomato datasets is given in Table 5.

The accuracy and AUC for the tomato dataset are 0.892 and 0.865 using the MLP model while for SVM classifier is 0.918 and 0.933, respectively. For the potato dataset, the Ac and AUC values are showing moderate performance using both the classifiers. Accuracy and AUC for the bell pepper dataset are 0.928 and 0.912 using the MLP model; on the other hand, the SVM classifier shows 0.955 and 0.951, respectively. The average value of accuracy and AUC for the MLP model are 0.916 and 0.901, respectively. THE SVM Classifier shows the average accuracy of 0.944 with 0.947 AUC.

The detailed comparison of the preferred classification technique with the state-of-the-art techniques is given in Table 6.

The various state-of-the-art techniques show the average classification accuracy performances as the LCSCT [4] technique shows 0.894 Ac., and 0.922 AUC for the tomato dataset while for the bell pepper images classification it offers the accuracy of 0.921 with 0.891 AUC. The GFSD [21] technique gave Ac of 0.918 and 0.859, for the pepper leaves and potato leaf images, respectively, while it is 0.856 for tomato plants imaginarium. The proposed technique without segmentation offers 0.916 Ac. and 0.897 AUC for the bell pepper dataset and for potato and tomato accuracies are 0.893 and 0.866 with AUV of 0.917 and 0.881, respectively. The proposed technique using the SVM classifier for tomato dataset classification provides 0.928 and 0.933 accuracy and AUC values, respectively. The bell pepper dataset classification accuracy is 0.955 with 0.951 AUC. The average performance measure of the proposed technique is 0.943 accuracy with 0.947 AUC.

The average AUC comparison of the proposed technique directly to the state-of-the-art techniques is depicted in Fig. 7.

The mean value of AUC is depicted in figure with their respective techniques. The KCM [20] gives the least measure with 0.767 AUC on the other hand the DLDIC [27] provides 0.944. The preferred classification technique offers 0.945 AUC that performs better than all the mentioned state-of-the-art techniques.

The G-mean comparison of different approaches is shown in Fig. 8.

The value of G-mean given by PDML [10] technique is 0.843. The DLLA [26] technique gives the G-mean measure of 0.943 while the DLDIC [27] technique offers the G-mean value of 0.948. The proposed technique without segmentation provides the G-mean value of 0.927, on the other hand, the proposed technique offers a G-mean measure of 0.952.

The training time analysis (in seconds) for the different techniques is shown by the bar graph in Fig. 9. The DLLA [26] technique shows the average time of 9574 sec. for the training of the datasets. The LCSCT [4] technique takes 7200 seconds to train the datasets while the DLDIC [27] technique is trained in 8596 seconds.

The time taken by the proposed technique without segmentation is 1837 seconds, while the training time of the proposed localization-based classification technique takes 2100 seconds. The DLDIC [27] classification accuracy performance is at par with the proposed technique, but the time complexity is 4 times higher than the proposed technique.

6 Conclusion

Digital image analysis techniques have been utilized for numerous applications in agriculture like vigor diagnosis, vegetation measurement, phenotyping, etc. The Proposed localization-based classification technique classifies the three crops leaf images for a different kind of disease detection. The leaf localization was performed using natural color properties of the leaf images and the mixture model-based region growing. The diseased image properties like spots and damage of leaf region in the localized image provide the keys of characteristics discrimination from the healthy images that can be easily grabbed using Fisher vector extraction. The classification performance is measured in terms of accuracy and AUC. To measure the performance for the data imbalance cases the G-mean parameter is analyzed for all the three crops datasets. The average accuracy and AUC using SVM are 0.943 and 0.947, respectively, with 0.953 G-men scores and training time of 2100 seconds. Overall the proposed classification technique offers better performance as compared to the state-of-the-art techniques. One can extend this work with other crops disease detection and classification with improved accuracy.

References

Beucher, S., Meyer, F.: The morphological approach to segmentation: the watershed. Transformation 34, 433–481 (1993)

Soares, J.A.V., Jacobs, D.W.: Efficient segmentation of leaves in semi-controlled conditions. Mach. Vision Appl. 24(8), 1623–1643 (2013)

Silva, L., Koga, M., Cugnasca, C., et al.: Comparative assessment of feature selection and classification techniques for visual inspection of pot plant seedlings. Comput. Electro. Agri. 97, 47–55 (2013)

Singh, V., Misra, A.: Detection of plant leaf diseases using image segmentation and soft computing techniques. Inf. Process. Agri. 4(1), 41–49 (2017)

Biswas, S., Jagyasi, B., Singh, B.P., et al.: “Severity identification of potato late blight disease from crop images captured under uncontrolled environment,” In: Canada Intern. Humanit. Techn. Conf.—(IHTC), pp. 1–5 (June 2014)

Aparajita, Sharma, R., Singh, A., et al.: “Image processing based automated identification of late blight disease from leaf images of potato crops,” In: 2017 40th Intern. Conf. on Telecomm. and Signal Processing (TSP), pp. 758–762 (July 2017)

Yanikoglu, B., Aptoula, E., Tirkaz, C.: Automatic plant identification from photographs. Mach. Visi Appl. 25(6), 1369–83 (2014)

Qin, F., Liu, D., Sun, B., et al.: Identification of alfalfa leaf diseases using image recognition technology. Plos One 11(12), 1–26 (2016)

Sabrol, H., Satish, K.: “Tomato plant disease classification in digital images using classification tree,” In: 2016 Intern. Conf. on Communication and Signal Processing (ICCSP), pp. 1242–1246 (April 2016)

Islam, M., Dinh, Anh, Wahid, K., et al.: “Detection of potato diseases using image segmentation and multiclass support vector machine,” In: Canadian Conf. Elect and Comput Eng. (CCECE), pp. 1–4 (April 2017)

P. Patil, N. Yaligar, and M. S. M, “Comparision of performance of classifiers - svm, rf and ann in potato blight disease detection using leaf images,” In: IEEE Intern. Conf. Compu. Intell. and Comput. Research (ICCIC), Dec 2017, pp. 1–5

Schor, N., Bechar, A., Ignat, T., et al.: Robotic disease detection in greenhouses: combined detection of powdery mildew and tomato spotted wilt virus. IEEE Robot. Autom. Lett. 1(1), 354–360 (2016)

Al-Hiary, H., Bani-Ahmad, S., Ryalat, M., Braik, M., Alrahamneh, Z.: Fast and accurate detection and classification of plant diseases. Int. J. Comp. Appl. 17, 03 (2011)

Lowe, A., Harrison, N., French, A.: Hyperspectral image analysis techniques for the detection and classification of the early onset of plant disease and stress. Plant Meth. 13, 1–12 (2017)

Devi, T., Neelamegam, P.: Image processing based rice plant leaves diseases in thanjavur, tamilnadu. Clust Comput. 22, 1–14 (2019)

Khamparia, A., Saini, G., Gupta, D., et al.: Seasonal crops disease prediction and classification using deep convolutional encoder network. Circ. Syst. Sig. Process. 39, 818–836 (2020). https://doi.org/10.1007/s00034-019-01041-0

Krishnaswamy Rangarajan, A., Raja, P.: Disease classification in eggplant using pre-trained vgg16 and msvm. Sci. Rep. 10, 2322 (2020)

M. G.-Brochier, A. Vacavant, G. Cerutti, et al.: Tree leaves extraction in natural images: comparative study of preprocessing tools and segmentation methods. IEEE Trans. Image Process. 24(5), 1549–1560 (2015)

Wang, G., Sun, Y., Wang, J.: Automatic image-based plant disease severity estimation using deep learning. Comput. Intell. Neurosci. 2017, 1–8 (2017)

Kaur, S., Pandey, S., Goel, S.: Semi-automatic leaf disease detection and classification system for soybean culture. IET Image Process. 12(6), 1038–1048 (2018)

Khan, M.A., Lali, M.I.U., Sharif, M., et al.: An optimized method for segmentation and classification of apple diseases based on strong correlation and genetic algorithm based feature selection. IEEE Access. 7, 46261–46277 (2019)

Mu, H., Ni, H., Zhang, M., Yang, Y., Qi, D.: Tree leaf feature extraction and recognition based on geometric features and haar wavelet theory. Eng. Agri. Environ. Food 12, 477–483 (2019)

Sladojevic, S., Arsenovic, M., Anderla, A., et al.: Deep neural networks based recognition of plant diseases by leaf image classification. In: Computational Intelligence and Neuroscience, vol. 2016, pp. 1–11 (2016)

Brahimi, M., Boukhalfa, K., Moussaoui, A.: Deep learning for tomato diseases: Classification and symptoms visualization. Appl. Artif. Intell. 31(4), 299–315 (2017)

Ferentinos, K.P.: Deep learning models for plant disease detection and diagnosis. Comput. Electron Agri. 145, 311–318 (2018)

P. Bharali, C. Bhuyan, and A. Boruah, “Plant disease detection by leaf image classification using convolutional neural network,” In: Infor., Comm. and Comput. Tech. Singapore: Springer, 2019, pp. 194–205

Hang, J., Zhang, D., Chen, P., Zhang, J., Wang, B.: Classification of plant leaf diseases based on improved convolutional neural network. Sensors 19, 4161 (2019)

Callara, A.L., Magliaro, C., Ahluwalia, A., et al.: A smart region-growing algorithm for single-neuron segmentation from confocal and 2-photon datasets. Front Neuroinf 14, 9 (2020)

Liu, L., Wang, P., C. S., et al.: Compositional model based fisher vector coding for image classification. IEEE Trans. Pattern Anal. Mach. Intell. 39(12), 2335–2348 (2017)

Torr, P.H.S., Murray, D.W.: The development and comparison of robust methodsfor estimating the fundamental matrix. Int. J. Comput. Vision 24(3), 271–300 (1997)

Al-Kofahi, Y., Lassoued, W., Lee, W., Roysam, B.: Improved automatic detection and segmentation of cell nuclei in histopathology images. IEEE Trans. Biomed. Eng. 57(4), 841–852 (2010)

Ridler, T.W., Calvard, S.: Picture thresholding using an iterative selection method. IEEE Trans. Syst. Man Cybern. 8(8), 630–632 (1978)

Haralick, R.M., Zhuang, X., Lin, C., Lee, J.S.J.: The digital morphological sampling theorem. IEEE Trans. Acoust Speech Sig. Process. 37(12), 2067–2090 (1989)

Calapez, A., Rosa, A.: A statistical pixel intensity model for segmentation of confocal laser scanning microscopy images. IEEE Trans. Image Process. 19(9), 2408–2418 (2010)

M. A. B. Siddique, R. B. Arif, and M. M. R. Khan, “Digital image segmentation in matlab: A brief study on otsu’s image thresholding,” In: 2018 International Conference on Innovation in Engineering and Technology (ICIET), Dec 2018, pp. 1–5

Ng, H.F.: Automatic thresholding for defect detection. Pattern Recognit. Lett. 27, 1644–1649 (2006)

F. Perronnin and C. Dance, “Fisher kernels on visual vocabularies for image categorization,” In: IEEE Conf. Comput Vision and Patt. Recog., June 2007, pp. 1–8

Wang, H., Hu, J., Deng, W.: Compressing fisher vector for robust face recognition. IEEE Access 5(23), 23157–23165 (2017)

Raji, C.G., Chandra, S.S.V.: Long-term forecasting the survival in liver transplantation using multilayer perceptron networks. IEEE Trans. Syst, Man Cybern: Syst. 47(8), 2318–2329 (2017)

Cortes, C., Vapnik, V.: Support-vector networks. Mach. Learn. 20(3), 273–297 (1995)

Kurmi, Y., Chaurasia, V., Ganesh, N.: Tumor malignancy detection using histopathology imaging. J. Med. Imag. Rad. Sci 50, 514–528 (2019)

M. Dubuisson and A. K. Jain, “A modified Hausdorff distance for object matching,” In:Proceedings of 12th International Conference on Pattern Recognition, vol. 1, Oct 1994, pp. 566–568 vol.1

Kurmi, Y., Chaurasia, V.: Multifeature-based medical image segmentation. IET Image Process 12(8), 1491–1498 (2018)

Chaurasia, V., Chaurasia, V.: Statistical feature extraction based technique for fast fractal image compression. J. Vis. Commun. Image Represent. 41, 87–95 (2016)

Fawcett, T.: An introduction to roc analysis. Pattern Recognit. Lett. 27(8), 861–874 (2006)

He, H., Garcia, E.A.: Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 21(9), 1263–84 (2009)

Bradley, A.P.: The use of the area under the roc curve in the evaluation of machine learning algorithms. Pattern Recognit. 30(7), 1145–1159 (1997)

Huang, J., Ling, C.X.: Using auc and accuracy in evaluating learning algorithms. IEEE Trans. Knowl. Data Eng. 17(3), 299–310 (2005)

Keras, “Keras Documentation,” https://keras.io, 2018, [Online; accessed 2-Feb-2018]

Pedregosa, F., Varoquaux, G., e. a. Gramfort, A., : Scikit-learn: machine learning in python. J. Mach. Learn. Res. 384(12), 2825–2830 (2011)

G. B. team:, “TensorFlow,” https://www.tensorflow.org/, 2018, [Online; accessed 2-Feb-2018]

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing Interests

The authors declare that there is not any type of conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Kurmi, Y., Gangwar, S., Agrawal, D. et al. Leaf image analysis-based crop diseases classification. SIViP 15, 589–597 (2021). https://doi.org/10.1007/s11760-020-01780-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-020-01780-7