Abstract

We present a plant identification system for automatically identifying the plant in a given image. In addition to common difficulties faced in object recognition, such as light, pose and orientation variations, there are further difficulties particular to this problem, such as changing leaf shapes according to plant age and changes in the overall shape due to leaf composition. Our system uses a rich variety of shape, texture and color features, some being specific to the plant domain. The system has achieved the best overall score in the ImageCLEF’12 plant identification campaign in both the automatic and human-assisted categories. We report the results of this system on the publicly available ImageCLEF’12 plant dataset, as well as the effectiveness of individual features. The results show 61 and 81 % accuracies in classifying the 126 different plant species in the top-1 and top-5 choices.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Plant taxonomy is a highly laborious task, consisting of the scientific classification of our planet’s flora. In particular, its crucial stage of plant identification is a veritable scientific and technical challenge, due not only to the huge plant variety that is involved, but also to their highly specialized and diverse taxonomic properties as well. For this reason, manual plant identification is a demanding and slow process, albeit imperative too, as botanists race against time to catalog plant species before they become extinct. Consequently, there is a significant need for an automated plant identification system that, when provided with raw visual plant data, will extract a number of descriptive features and use them to determine and output the corresponding plant species. In fact, besides its obvious contribution to taxonomy, such a system possesses a considerable commercial potential as well, as a regular content-based image retrieval (CBIR) product; e.g. in the form of a smartphone application it could be employed in the wild for identifying hazardous or edible plants.

Motivated by this application potential and recent advances in CBIR, the interest of the computer vision community in plant identification has been increasing steadily, along with the number of proposed features and plant identification systems [1, 2]. For instance, ImageCLEF, one of the foremost visual image retrieval campaigns, has been organizing a plant identification track since 2011 [3].

Although botanists exploit all available plant characteristics such as flowers, seeds and leaves while identifying a plant, the vast majority of the approaches proposed so far has been focusing exclusively on leaf-based plant identification [4–8], both for limiting the problem’s complexity and because leaves are easily accessible and highly discriminatory through their color, shape and texture features. Nevertheless, even with this limitation the problem is challenging, since leaves of the same plant may vary significantly both in terms of color and shape, depending on the season, plant condition and image acquisition settings. On the other hand, some general-purpose content-based description solutions have also been developed for this problem [9, 10]. While they achieved good results, customized approaches to plant identification, exploiting domain-specific features and approaches, have been shown to surpass general-purpose methods [11].

In this paper, we address the problem of automatic plant identification from photographs and present a new system developed for this task. Our system that was a slightly earlier version of the system presented in this paper has won the ImageCLEF’2012 Plant Identification Competition, in both the automatic and semi-automatic categories.

After presenting the state-of-the-art in plant identification (Sect. 2), we elaborate on our system’s design and operation details (Sect. 3). Next, we present the results of a series of experiments (Sect. 4) studying the performance of several descriptors in the context of leaf-based plant identification as well as the overall performance results. Finally, Sect. 5 is devoted to concluding remarks and future perspectives.

2 Related work

Until a few years ago, there was a limited number of publications concerning automatic plant identification, comprising botanical applications targeting leaf-based plant identification or agricultural applications, such as for detecting diseased plants.

The plant identification campaign organized by ImageCLEF in 2011, and repeated in 2012 and 2013, has marked the turning point in this area [3]. The goal of the campaign was to benchmark the progress in automatic plant identification, addressing both isolated leaf and plant recognition problems. With the participation of systems from around the world, the campaign has successfully highlighted this problem. Below, we provide a short summary of the past technical achievements in botanical and agricultural identification/retrieval applications, followed by the summaries of the systems that participated in the ImageCLEF’2012 plant identification campaign.

In systems geared towards botanical applications segmented leaf images are used to identify unknown plant varieties, often using features obtained from the leaf contour. Among these, Yahiaoui et al. proposed an image retrieval system for identifying plants using contour-based shape features in [5]. The extracted shape descriptors in this study include the length histogram of contour segments in different directions. Similarly, Wang and his group [12, 13] focused on the leaf image retrieval problem using features such as centroid-contour distance curve, eccentricity and angle code histograms, while later on investigating shape context and SIFT [14]. Concentrating on shape, Neto et al. [15] extract elliptic Fourier harmonic functions from the leaf boundary and Nam et al. [16] use minimum perimeter polygons for shape description in combination with a matrix of interest points for similarity computation.

More recently, combination of shape features with color and texture features is explored. In [17], plant leaves are classified based on their texture features, using both LBP and Gabor features, while in [6], leaf venation characteristics are extracted through the curvature scale space (CSS) corner detection algorithm. Beghin et al. [18] focused on the combination of shape and texture, by employing contour signatures along with edge gradient orientations, while Hussein et al. [19] exploit texture by means of the discrete wavelet transform. Similarly, Man et al. [20] combines color and texture features after rotating each leaf so as to align its central axis with the horizontal. Moreover, both [21] and [22] rely on simple geometric and morphological features, while [23] additionally combines them with a powerful moving median center hypersphere classifier.

Backes [24] and Bruno et al. [2] proposed a shape classification method relying on multi-scale fractal dimensions, which they applied to the leaf identification problem. The shape features are extracted using either segmented leaf outlines or leaf venations, to retrieve both internal and external leaf characteristics. This approach is later used by the authors in the IFSC/USP system that participated in the ImageCLEF’2012 campaign [25]. Recently, Zhang et al. [26] have developed a new leaf classification method using a supervised locality projection analysis method based on label propagation.

Although the stage of feature extraction is highly important for plant identification, it is also often dependent on a successfully implemented segmentation. Hence, some authors have focused specifically on this problem. For instance, Manh et al. [27] propose a method of weed leaf segmentation based on the use of deformable templates, while a marker-controlled watershed transform is employed in [7, 28]. Yet, a hybrid method based on the morphological h-max transform is elaborated in [29].

Furthermore, there are few systems that are fully developed plant identification solutions, often developed towards a particular platform or user group. For instance, Belhumeur et al. [1] have designed a leaf-based system for the National Museum of Natural History, equipped with an expectation-maximization-based segmentation and mostly with shape context-based content description. Another plant identification system has been developed by Kebapci et al. [30], employing max-flow min-cut for semi-automatic segmentation and focusing on house plant identification from whole plant images. Lastly, a tablet PC-oriented system named LeafView has been developed by White et al. [31] employing interactive segmentation and shape context for description.

In contrast to previous approaches concentrating on leaves or plant foliage, some authors have chosen to work solely with flowers. Assuming that a plant possesses them, their color-rich appearance can be highly useful for plant identification. In particular, Nilsback and Zisserman [32] have developed a method specifically for flower segmentation, using different color models for the foreground and background along with a Markov random field cost function optimized using graph cuts. Saitoh et al. [33] also explored the potential of flowers and developed a solution where a semi-automatic segmentation is employed based on intelligent scissors, followed by the computation of simple geometric shape and color features.

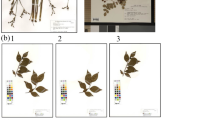

Although multiple plant datasets have been available for over a decade, such as the Swedish dataset [34], the ICL dataset [35] and the Smithsonian dataset [36], it was in 2011 that a plant identification contest was organized for the first time, as a track within the CLEF conference. The organization of this task, which was funded by the French Pl@ntNet project, led to the formation of a constantly growing plant dataset; there were 71 plant species in 2011, that increased to 126 species in 2012. The systems participating to the campaign were evaluated based on their performances in three image categories: scanned images of a leaf (scan), scan-like photographs of a leaf (scan-like) and unconstrained photographs of plant leaf or foliage (photo), as shown in Figs. 1 and 2, respectively. The evaluation is done in terms of the average inverse-rank metric.

Among the fully automatic systems that participated in the ImageCLEF’2012 plant identification campaign, the following groups and systems stood out by obtaining the best results in at least one of the categories. The Inria/IMEDIA group submitted three runs using distinct approaches [42] and obtained an average inverse-rank score of 0.42 with their best system (INRIA-Imedia-PlantNet-run-1). Their second best overall system obtained the highest score on scan-like images with a score of 0.59 (INRIA-Imedia-PlantNet-run-2), using an advanced shape context descriptor combining boundary shape information and local features within a matching approach. For photographs, local features around constrained Harris points are employed to reduce the impact of the background, but an automatic segmentation with a rejection criterion was also attempted to extract shape features when possible.

The LSIS/DYNI group obtained the best scores in the automatic identification of plants in the photo category with an inverse-rank score of 0.32 (LSIS-DYNI-run3) [10]. While not using any segmentation at all, their system involved feature extraction coupled with Spatial Pyramid Matching for local analysis and large-scale supervised classification based on linear SVM with the 1-vs-all multi-class strategy. They used a Multiscale and Color extension of the Local Phase Quantization (MSCLPQ), dense Multiscale Color Improved Local Binary Patterns (MCILBP) with Sparse Coding of patches and dense SIFT features with sparse coding. In this system, authors report to having obtained better results with increased number of descriptors. This system obtained an average overall score of 0.38.

The IFSC/USP group also submitted three systems [25]. In their work, the leaf contour was described with complex network, volumetric fractal dimension and geometric parameters. Gabor filters and local binary patterns were also used as texture descriptors. The scores obtained by their automatic system (IFSC/USP-run3) for scan and scan-like images were 0.20 and 0.14, respectively.

The Zhao/HFUT group also developed a segmentation-free system (ZhaoHFUT-run3), employing the flip SIFT descriptor, along with a sparse representation [43]. Specifically, they use the ScSPM model that uses a spatial pyramid matching. Although their performance is moderate (0.23 overall score), their approach validates the potential of sparse representation in this context.

The DBIS [44] team presents an uncommon approach, which employs logical combinations of low-level features expressed in a query language. In particular, they explored several of the MPEG-7 color and texture descriptors [45] with the color structure descriptor performing the best among them. They are one of the few participants that have additionally exploited the availability of meta-data, specifically the GPS coordinates of the acquired images. Their system has obtained an average score of 0.21.

As the Sabancı-Okan team, we submitted only two runs to ImageCLEF’2012 plant identification campaign: one for the automatic category and another one for the semi-automatic category where human assistance was allowed [46]. Our two systems obtained the highest average scores in each category. Specifically, our automatic system (Sabanci-Okan-run-1) obtained the highest average score of 0.43 and the highest score of 0.58 in the scan category, among automatic systems. For scan-like and photo categories, our system obtained inverse-rank scores of 0.55 and 0.16, respectively, while the best accuracies obtained in these categories were 0.59 and 0.32 (by different groups), as mentioned before.

This section has presented an overview of the existing approaches for plant identification, while a summary of the related approaches is provided in Table 1.

3 Proposed system

We present a plant identification system to recognize the plant species in a given image, addressing two sub-problems of plant identification: identifying the plant from a single leaf image or from an unconstrained photograph of the plant (Figs. 1, 2, respectively). As these two sub-problems are quite different from one another, we handle them in two separate sub-systems. The two sub-systems employ parallel stages for segmentation, preprocessing, and recognition steps, but in the case of unconstrained photographs of plant foliage, the preprocessing and segmentation stages are more aggressive, so as to isolate a single leaf in the image. Currently, the diversion of the input into one of the sub-systems is realized by analyzing the meta-data of the image files; however, we foresee that this can also be realized automatically if such information had been unavailable.

The proposed system employs a rich variety of shape, texture and color features, some being specific to the plant domain. It relies on mathematical morphology for quasi-flat zone-based color image simplification and reliable leaf extraction from complicated backgrounds. Recognition is accomplished through powerful classifiers that are trained with generalization performance in mind, given the large variability and relatively small amount of data.

Variants of the presented system have participated in the ImageCLEF Plant Identification Competitions in 2011, 2012 and 2013 and achieved good results (best overall result in both automatic and semi-automatic categories in 2012; best results in isolated leaf images in 2013). The evaluation results reported in this paper are obtained with the ImageCLEF’2012 plant dataset that is publicly available.

3.1 Segmentation

Considering the two sub-problems addressed by the system, one can easily remark that the segmentation of an isolated leaf on a simple background should be easy, while that of foliage photographs should be rather difficult. Indeed, for the majority of leaf images, segmentation is very successful even by means of basic techniques such as Otsu’s adaptive threshold [47], except for a small subset of leaf images containing shadows.

As for foliage images, although there are approaches avoiding segmentation altogether by describing the image content through local invariants [9], we have taken an alternate approach in this work: given a foliage image, the system aims to obtain a single leaf using an aggressive segmentation; thereafter, the foliage recognition problem is reduced to leaf recognition. We believe that this approach is complementary to recognizing plants using local invariants or global descriptors obtained from the whole image, and is especially suitable for photographs containing a single leaf on natural background, as in Fig. 4a–f.

Unfortunately, segmentation of a leaf in a plant photograph with natural background is a complex problem due to an ill-posed nature; if no a priori knowledge is available on the image acquisition settings or content, leaf segmentation becomes equivalent to unconstrained color image segmentation, where the various leaves can be located within an equally immense variety of backgrounds, such as those shown in Fig. 2. In our approach, we make a simple assumption that the object of interest dominates the center of the photograph, as explained in the next section.

Furthermore, one might argue at this stage that the plant background can provide information facilitating the recognition of the leaf/plant in the foreground. For instance, if the image has been acquired in a wild forest, then there is already some chance that it contains a non-domesticated plant. However, although it is a theoretically sound idea, exploiting the background information additionally involves the description and recognition of the background, which given its diversity increases the complexity of an already challenging problem. Moreover, even if one insisted on exploiting the background, separating it from the foreground may still be necessary.

3.1.1 Automatic segmentation

Plant image segmentation largely depends on the image acquisition method: namely, scanning an isolated leaf, photographing an isolated leaf, photographing a plant leaf or foliage on a complex background. In particular, the ImageCLEF’2012 dataset has three categories, namely scan, pseudo-scan and photos, where the scan and pseudo-scan categories correspond respectively to leaf images obtained through scanning and photography over a simple background (Fig. 1); and photo category (Fig. 2) corresponds to plant leaf or foliage photographed on natural background.

From a segmentation viewpoint, isolated leafs that are scanned or photographed on a simple background are similar; in other words, they both possess a mostly noise-free, spectrally homogeneous background, occasionally containing some amount of shadow. Consequently, in our system, automatic segmentation has been trivially resolved through Otsu’s adaptive threshold [47] as it is both efficient and effective.

Photos of plant foliage or leaf taken on an arbitrary background offer a far greater challenge for the overall system and the segmentation module. To attack this problem, we adopted a combination of spectral and spatial techniques. Specifically, we start with a weak assumption that the object of interest, i.e. the leaf, is located roughly at the center of the image and possesses a single dominant color. The image is first simplified by means of marginal color quasi-flat zones [48], a morphology-based image-partitioning method based on constrained connectivity, that creates flat zones based on both local and global spectral variational criteria (Fig. 3a). Next, we compute its morphological color gradient in the LSH color space [49], taking into account both chromatic and achromatic variations (Fig. 3b), followed by the application of the watershed transform. Hence, we obtain a first partition with spectrally homogeneous regions and spatially consistent borders, albeit with a serious over-segmentation ratio which is compensated for by merging basins below a certain area threshold (Fig. 3c).

At this point, our initial assumption about central location of the object of interest is used, as we employ the central 2/3 area of the image to determine its dominant color, obtained by means of histogram clustering in the LSH color space. Assuming that the mean color of the most significant cluster (i.e. reference color) belongs to the leaf/plant, we then switch to spectral techniques, so as to determine its watershed basins. Since camera reflections can be problematic due to their low saturation, we compute both the achromatic, i.e. grayscale distance image from the reference gray (Fig. 3d) and the angular hue distance image (Fig. 3f) from the reference hue \(h_\mathrm{ref}\) [50]:

We then apply Otsu’s method on both distance images, providing us with two masks (Fig. 3e, g), representing spectrally interesting areas with respect to the reference color. The intersection of the two masks is used as the final object mask after minor post-processing (e.g. hole filling, etc.) (Fig. 3h). As seen in Fig. 3i, spectral and spatial techniques indeed complement each other well, while the use of both chromatic and achromatic distances increases the method’s robustness. However, the main weakness of this approach is the accurate determination of the reference or dominant color, which can easily corrupt the entire process if computed incorrectly or if the leaf under consideration has more than one dominant color.

We have measured the segmentation accuracy visually, counting the number of cleanly segmented images among the 483 test photos in the ImageCLEF’2012 dataset. According to these results verified by two people, 104 out of 483 images (21.5 %) are cleanly segmented (having a segmentation mistake) and another 10 % are nearly cleanly segmented (image contains a single leaf but with a substantial missing section or attached background). Since these categories are difficult to define and identify, we give sample output in Fig. 4 showing clean segmentations (a,b,d,e) and near-clean ones (c). For the images that are not well-segmented (f, g), which amount to about 69 % of all photographs, the leaf was significantly over- or under-segmented.

3.1.2 Human-assisted segmentation

We have also developed a semi-automatic system to explore the effects of some simple human assistance, also based on a segmentation technique using mathematical morphology. This semi-automatic system has also achieved the highest overall accuracy in ImageCLEF’2012 plant identification campaign, in the human-assisted category.

In terms of human assistance, ideally, we would like the user/expert to provide some amount of high-level knowledge, spending no more than a few tens of seconds per image. Assuming that the provided knowledge is valid, we chose to use the marker-based watershed transform [51] to best exploit it. Specifically, the marker-based watershed transform is a powerful, robust and fast segmentation tool that, given a number of seed areas or markers, results in watershed lines representing the skeleton of their influence zones.

To accomplish this we need a suitable topographic relief as the input, where object borders are denoted as peaks and flat zones as valleys; this is why we employ the morphological color gradient of the input image [49] (Fig. 5b). We then require at least two markers provided by the user/expert: one denoting the background and another representing the foreground. Both of these markers can easily be provided for instance through the touchscreen of a smartphone, by indicating the leaf and the background successively (Fig. 5c). Next, having superposed the markers on the gradient, the marker-based watershed transform provides the binary image partition (Fig. 5d). Although both efficient and effective, this method depends utterly on the quality of the provided markers, since if they are too small they can lead to partial leaf detection and conversely if they are excessively large, the leaf will be confounded with its background.

3.2 Preprocessing

Having located the object of interest at the segmentation stage, we apply a minimal amount of preprocessing. Specifically, we bring the leaf’s major axis in line with the vertical and normalize the height to 600 pixels, while preserving the aspect ratio. Apart from these, the relatively high acquisition quality of the data did not warrant any image enhancement operations.

For rotation normalization, we adopt a simple approach. The image is rotated to bring the longest two-segment line, passing through the mean and connecting two contour points. For this, we first compute the distance from each contour point to the geometric mean of the contour points. Then, we find the largest two-segment line, rather than the largest line connecting two contour points, to allow some flexibility in the major axis. The computation is illustrated in Fig. 6.

Formally, we first compute the distance \(d_i\) and angle \(q_i\) of all the points on the contour with respect to mean. Then, we find the corresponding quantized bin for all points using \(b_i=q_i/F\) where \(F\) is the binning factor, set to \(F=\pi /18\) in our system. Then, the maximum distance observed for that angle (bin) is calculated using

Then, we find the two bins that are half of the length of \(B\) away from each other and have the maximal sum. Finally, we rotate the image so that the bin that contains one of the points is the first bin.

For the ImageCLEF’2012 plant database, the images of isolated leaves are mostly in upright position already; however, this is obviously not true for leaves segmented from plant foliage photos. Doing a visual inspection of cleanly segmented photos, we observed that about 54 % of leaves are correctly oriented such that its major axis is aligned with the vertical (\(\pm 15^{\circ }\)) and its tip is at the top. Note that the suggested method only tries to align the major axis of the leaf with the vertical; the upside-down leaves are counted as correct, the accuracy increases to 81 %.

A more advanced rotation normalization would require more advanced features, such as vein orientations and the stem location of the leaf. Alternatively, one can use only rotation-invariant features for classification. We believe that the former may be more promising in our case, given the current success of the algorithm and the fact that many powerful features used in this work are not rotation invariant.

3.3 Feature extraction

Our plant identification system employs a wide range of features for describing effectively the huge variety of plant images. Most of the considered features are commonly used for general-purpose content-based image description, while others have been specifically designed for the task under consideration. Furthermore, special care has been taken to ensure translation, rotation and if possible scale-invariant descriptions. In this section, we review each feature family (shape, texture, color) in detail.

3.3.1 Shape features

Shape is evidently of prime importance when it comes to distinguishing leaves, and has been treated accordingly by employing a rich array of both region- and contour-based shape descriptors. Specifically, we use well-known statistical and convexity-based global geometric descriptors, as well as Fourier coefficients that aim to capture fine leaf border nuances. In addition, lesser known yet effective approaches are also included that focus on localized image parts.

Given the mask image produced at the segmentation step, whenever required the leaf contours are computed by means of the morphological internal gradient operator using a \(3~\times ~3\) square-shaped structuring element.

The shape features evaluated in this work and used in our system are described briefly.

Perimeter convexity (PC) or simply convexity is defined using the binary segmentation mask, as the ratio of perimeter of the convex hull over that of the leaf contour:

Area convexity (AC) is also computed on the binary segmentation mask and describes the normalized difference of the image areas (in pixels) between the convex hull and the original mask:

Compactness (Comp) is a basic global shape descriptor computed on the binary mask as the ratio of the square of the perimeter over the object area:

Elongation (Elong) is a morphological shape measure computed once again on the binary segmentation mask and defined as

where \(d\) represents the maximum number of binary morphological erosions before the shape disappears completely. The structuring element in this case has been chosen as a \(3 \times 3\) square.

Basic shape statistics (BSS) provides contour-related information by computing basic statistical measures from the distance to centroid curve. In particular, once the image centroid is located, we compute the contour pixels’ Euclidean distance to the centroid, obtaining a sequence of distance values. After sorting the said sequence, we extract the following basic measures from it:

Area width factor (AWF) is computed on grayscale and constitutes a slight variation of the leaf width factor introduced in [22]. Specifically, given an isolated leaf image, it is first divided into \(n\) strips perpendicular to its major axis, as shown in Fig. 7. For the final \(n\)-dimensional feature, we compute the volume (Vol, i.e. sum of pixel values) of each strip (Vol\(_i\)) normalized by the global volume (Vol):

Regional moments of inertia (RMI) is relatively similar to AWF. It requires an identical image subdivision system, differing only in the characterization of each strip. To explain, instead of using the sum of pixel values, each strip is described by means of the mean Euclidean distance between its centroid and contour pixels [52].

Example of leaf decomposition into 14 strips [22]

Angle code histogram (ACH) has been used in [12] for tree leaf classification. Given the binary segmentation mask, it consists in first subsampling the contour points, followed by computing the angles of successive point triplets. The final feature is formed by the normalized histogram of the computed angles.

Contour point distribution histogram (CPDH) is a variation of the generic shape descriptor recently presented in [53]. It consists in first drawing \(n\) concentric circles at regular intervals around the image centroid. Then for each concentric circle, we compute the number of contour points it contains, thus leading to a numerical sequence of length \(n\). The normalization of the said sequence with the object’s perimeter provides the final feature vector.

Fourier descriptors (FD): we used the Fourier descriptors that are widely used to describe shape boundaries, as the main shape feature in our system. We first extract the eight-directional chain-code from the boundary of a leaf. Then, the discrete Fourier transform is applied on the chain-code, to obtain the Fourier coefficients. The Fourier transform coefficients of a discrete signal \(f(t)\) of length \(N\) is defined as

In our case, \(f(t)\) is the eight-directional chain-code of the plant, \(N\) is the number of points in the chain-code and \(C_k\) is the \(k\)th Fourier coefficient. The coefficients computed on the chain-code are invariant to translation since the chain-code is invariant to translation. Rotation invariance is achieved using only the magnitude information in the coefficients, ignoring the phase information. Scale invariance is achieved by dividing all the coefficients by the magnitude of the first component.

We used the first 50 coefficients to obtain a fixed-length feature and to eliminate the noise in the leaf contour.

3.3.2 Texture features

Although texture is often overshadowed by shape as the dominant feature family for leaf recognition, it is nevertheless of high significance as it provides complementary information. In particular, texture features capture venation-related information as well as any eventual directional characteristics, and more generally specialize in describing the fine nuances at the leaf surface. The most frequently encountered texture descriptors in this context appear to be Gabor features [17], fractal dimensions [2] as well as local binary patterns [17].

Although one might be tempted to use state-of-the-art texture description tools such as the recently introduced morphological texture descriptors [54, 55], they will probably not be optimal in this context, since the primary concern there is to obtain translation, rotation and scale-invariant features. Whereas in this context, the leaves have already been centered and rotation normalized. Hence, a simpler and an anisotropic descriptor can be of interest, since it does not compromise its description capacity for the sake of invariance. As far as our system is concerned, after experimenting extensively with morphological texture descriptors that had previously performed relatively well [56], we decided not to employ them in the current system for the aforementioned reason; we employed the following instead.

Orientation histogram is computed on grayscale data. After computing the orientation map using a \(11 \times 11\) edge detection operator for determining the dominant orientation at each pixel, the feature vector is computed as the normalized histogram of \(n\) bins of dominant orientations.

Gabor features: Gabor wavelets, which are commonly used for texture analysis [57, 58], are obtained through the multiplication of a Gaussian function and a harmonic function. The basic 2D Gabor function can be stated as follows:

Gabor filters in different orientations and scales are used to detect textures in different orientations and scales, respectively. They can be derived from the basic Gabor function. The response of a Gabor filter on an image \(I(x,y)\) is the convolution of the image and the Gabor filter:

where \(g_{su}(m,n)\) denotes the Gabor function at scale \(s\) and orientation \(u\), and \(m\) and \(n\) are variables that are use to sum the response over the Gabor filter window’s size, \(C\).

In our case, we applied the Gabor filter to the grayscale version of the segmented plant images using eight orientations and five scales. The mean response obtained at each orientation and scale is then used as the 40-dimensional Gabor texture features.

3.3.3 Color features

Color is not expected to be as discriminative as shape or texture for leaf recognition, since most plants are already in some shade of green, while illumination variations vary greatly. In addition to the low inter-class variability of color, another issue is its high intra-class variance: even the colors of leaves belonging to the same plant can present a very wide value range (Fig. 8) depending on the season and the plant’s overall condition.

Regardless of the aforementioned complications, color can still contribute to plant identification, considering leaves that exhibit an extraordinary hue or especially in case of flower presence. We tested the effectiveness of some well-known color descriptors, specifically the RGB histogram, the LSH histogram, the saturation-weighted hue histogram [59], and color moments [60].

Color moments [60] are used for characterizing planar color patterns, irrespective of viewpoint or illumination conditions and without the need for object contour detection. They consist of 27 moments of powers of the intensities in the individual color bands and combinations thereof. In detail, they describe the shape, the intensity and the color distribution of a given pattern. Moreover, they are invariant to both affine deformations as well as linear intensity changes.

The saturation-weighted hue histogram that was deemed as the most promising is described here. It aims to address the issue of indeterminate hue with weakly saturated pixels. In this histogram, the total value of each bin \(W_{\theta }, \theta \in [0,360]\) is calculated as

where \(H_x\) and \(S_x\) are the hue and saturation values at position \(x\) and \(\delta _{ij}\) the Kronecker delta function. As far as the color space is concerned, we have employed LSH [50] since it provides a saturation representation independent of luminance.

3.4 Classification

Plant identification is a challenging machine learning problem. The main difficulty is that many plant species are very similar to one another while one can observe a large variation within one species, due to seasonal or plant maturity changes. Other sources of variation common to many object recognition problems, such as pose, scale and lighting variations, also apply to plant identification problem.

The most important component of a classification system is using efficient and robust features that can be used to differentiate between the classes. In this work, we use a large set of powerful features, most of which are well-known and widely used in various object recognition problems (e.g. Fourier descriptors, moment invariants, Gabor features), as described in Sect. 3.3. Some other features (e.g. area width factor) are added as a result of our previous work in this area.

Of course there are a multitude of different features used in object recognition literature, so the problem may be more of a feature selection to reduce dimensionality and prevent over-fitting, especially since there are a relatively few number of samples per species. Findings are mixed in regard to feature selection: while in our previous work we found that some form of dimensionality reduction is needed [3], others have found that the more features they added the more the performance increased [10]. This issue is especially relevant since plant image databases are rather limited in size; for instance quite a few species in the ImageCLEF’2012 plant database contain only a few (less than 10) samples.

To evaluate feature effectiveness and deal with the potential over-fitting problem, we evaluated the effectiveness of each feature individually and in groups, during system development. For this, we used support vector machine (SVM) classifiers trained using a portion of the available training data (development set) and only the considered feature(s). For evaluation, we used the remaining samples from the training set (validation set), as discussed in Sect. 4. Some of the mentioned features in Sect. 3.3 are added as a result of this evaluation, after noticing frequent errors between similar classes. In this process, we also excluded the morphological texture features that we had used in our previous work [56].

After the feature selection phase, we trained our classifiers using the selected features and all of the available training data. Then, we tested these classifiers using the separate test set of the ImageCLEF’2012 plant database. The full set of our final features, along with their effectiveness on this test set, is given in Table 2.

The SVM classifiers used in this work employ the radial basis function kernel whose parameters were optimized using cross-validation and grid search on the training set. The training samples for this classifier only consist of isolated leaf images (scan and scan-like categories of the training set), so as to obtain cleanly extracted features.

4 Experimental results

We used the test portion of the publicly available ImageCLEF’2012 plant identification campaign database to evaluate different features for their effectiveness in this problem and to measure the overall system performance.

Since the ImageCLEF plant database contains separate subsets for isolated leafs on simple backgrounds and plant foliage on complex background, we report our results on isolated leaf recognition and unrestricted plant photograph recognition sub-problems separately.

We report both individual feature effectiveness and overall performance results using top-k classification rates and the average inverse-rank score of the queries. In the ImageCLEF’2012 campaign, the official tests were run by ImageCLEF organizers and the results obtained by each group for each sub-task are reported in [11], as discussed in Sect. 2. There, the average inverse-rank metric is used slightly differently, as explained in Sect. 4.3.

4.1 Dataset

The ImageCLEF’2012 plant database contains images of 126 tree species, contributed by about 20 different people. Each species contains photographs from one or more individual plants. The database consists of a training set that was made available for the participants and a test set that was kept sequestered until the official testing of participating systems by the organizers, and released afterwards. The training data contains 8,422 images (4,870 scans, 1,819 scan-like photos, 1,733 natural photos) and the test set contains a separate set of 3,150 images (1,760 scans, 907 scan-like photos, 483 natural photos).

During system development, feature effectiveness was in fact measured using a carefully obtained validation set that was split from the training set. For this, we split the training database into two, as development and validation subsets, paying attention to representing all classes in the development set and not splitting images of the same individual plant across the two subsets. We preferred this method to using a simple cross-validation, since in the ImageCLEF’2011 campaign [3], there was a large performance degradation between the results we obtained using cross-validation and in the official test. We believe that the large performance drop was partly due to the fact that the development and validation sets contained very similar images in the case of cross-validation. Indeed, in the ImageCLEF database, there are many plants that are photographed more than once, often with very little difference between two pictures. Putting these pictures in separate subsets (development and validation), as may happen during a randomly partitioned cross-validation split, may lead to overfitting. Furthermore, some classes may be missing from the development or validation subsets, simplifying the problem.

4.2 Feature evaluation

We report the effectiveness of a feature using a classifier trained with all of the training set and considering only that feature. For training, we only used isolated leaves on simple background (scan and scan-like data) so as to measure feature effectiveness on the core plant identification problem, without the added complexity of background variations.

The individual feature performances are presented in Table 2. The results show the top-1, top-2 and top-10 classification accuracies, and the average inverse-rank of the correct class for each query. A test instance is correctly classified using Top-N (or Rank-N) accuracy if the correct class label is in the most probable N classes. The inverse-rank score \(S\) is calculated as

where \(P\) is the number of different plant classes, \(N_p\) is the number of photographs for that plant, and \(r_{p,n}\) is the rank of the correct class for the \(n\)th image of the \(p\)th class.

As can be seen in Table 2, the best individual features are shape descriptors, with around 35 % accuracy in the test set (e.g. regional moments and Fourier descriptors); they are followed by color moments (\(\sim \)25 %) and orientation histograms (\(\sim \)18 %). Shape features also appear to be less prone to over-fitting as indicated by a smaller degradation between cross-validation and validation accuracies.

Since we found that the shape features are more robust for this classification task, to better analyze the shape features, we further divided them into two according to how they are extracted as contour (Fourier descriptors, basic shape statistics, angular code histogram, perimeter convexity, and contour point distribution histogram) or region based (all others). The accuracies for this division are also presented in Table 2. According to these results, region-based shape features are the best features for plant leaf retrieval.

For texture, the Gabor features show the highest discriminatory performance (\(\sim \)32 %), followed by the gradient orientation histogram.

As far as color is concerned, given its high inter-class similarity and high intra-class variance for many species, we initially did not expect a high discriminatory performance. All the same, considering its potential, to eliminate certain species or help identify those with a distinct color, we did some experiments to test the pertinence of the few standard color descriptors. Specifically, we tested the RGB histogram (4 bins per channel), color moments [60] and the saturation-weighted hue histogram [59] that was explained previously.

The best color descriptor was color moments with \(\sim \)25 % accuracy. Unfortunately, color information do not contribute to performance, when used in addition to shape and texture descriptors, as shown in Table 3.

4.3 Overall results

Results on recognizing isolated leaf images, obtained with different combinations of feature groups, are given in Table 3. As shown in this table, the best classification performance is obtained using the 142-dimensional feature vector consisting of shape and texture features, as 60.97 %; the classification accuracy reaches 80.69 % when considering top-5 classification rates.

For the photo category consisting of leaf or foliage images on natural background, we again obtained the best results with shape and texture features, achieving a top-1 classification rate of 8.49 % and top-5 classification rate of 22.15 %. Notice that in this case, the segmentation aims to isolate a single leaf in the image, which often results in noisy contours. Furthermore, the preprocessing step occasionally fails in normalizing the orientation of the leaf, which then affects the extracted features.

When measuring performance with the average inverse-rank metric, the proposed system obtains a score of 0.69 for isolated leaf images and 0.13 in recognizing photographs from automatically detected leaves in the photograph.

Part of the difference between the official scores given in [11] is due to the fact that the official metric averages the inverse-rank scores across all photographers, with the aim to better reflect the image retrieval performance in a potential end-user service. When evaluated with this metric, within the ImageCLEF’2012 plant identification campaign, a slightly earlier version of the system described here achieved a score of 0.58 on scanned leaves; 0.55 on scan-like leaf images, and 0.16 on foliage photos. The best result obtained in the campaign were 0.58, 0.59 and 0.32 for scan, scan-like and foliage photo categories, respectively, each obtained by a different research group.

4.4 Efficiency

Once a leaf is segmented from an image, our shape features are extracted efficiently. While some of our shape features, such as perimeter convexity, area convexity and elongation, require only the binary segmentation mask, the contour-based shape features, such as FFT, ACH and CPDH, require the contour of the segmented leaf.

In contrast, texture features are more demanding. For example, the extraction of Gabor features requires a convolution of the image with 40 different Gabor filters and the computation of the orientation histogram requires the convolution of the image with an edge detection operator.

To train/test the SVM using the concatenation of the features, we use the well-known LibSVM package. The total training time of the SVM along with the grid search for parameters takes less than 5 min on a 2.00-GHz computer.

5 Discussion and future work

This paper has focused on the problem of plant identification, an emerging application field enjoying increasing interest from the computer vision and machine learning communities. This interest is due to the significant practical need by botanists for such a solution, as well as to the considerable commercial potential of the solution. The plant identification problem is shown to be far from easy, since besides the usual challenges surrounding object recognition, it possesses additional difficulties, such as very high intra-class content variation. Consequently, many of the prominent solutions employed for general-purpose content-based image recognition and retrieval fail to deliver desired accuracy levels.

The proposed system is developed in the context of the ImageCLEF plant identification campaigns. It employs an automatic leaf-based plant characterization approach, equipped with state-of-the-art solutions for segmentation, content description and classification. Our segmentation method relies on color quasi-flat zone-based simplification. Given the large variability in the problem and the small sample size for many of the plant species, we focused on finding the optimal set of features to reduce over-fitting and improve generalization performance.

The results obtained on the publicly available ImageCLEF’2012 plant database show a 61 % classification accuracy on recognizing the plant from an isolated leaf image, among 126 plant classes. This result indicates the state-of-the-art in identifying plants from isolated leaves, as variants of the presented system obtained the best overall result in the ImageCLEF’2012 campaign and the best result in this category in the ImageCLEF’2013 campaign.

As for the classification of foliage photos with natural backgrounds, the results are far from satisfactory. Nonetheless, we believe that the presented approach of isolating a single leaf from the image is an important approach to the problem, as it can complement one using local descriptors (e.g. SIFT), especially in images where the background covers a large area. Furthermore, segmenting a single leaf can be better accomplished using statistical shape models (e.g. active appearance models).

While the challenge of plant identification is far from resolved, performance results observed in ImageCLEF campaigns have shown a slight increase, while the considered plant species almost doubled every year since 2011. Furthermore, the top-5 classification accuracies indicate the applicability of the plant identification and retrieval technologies for user-assisted tasks.

Our future work will focus primarily on complementary approaches based on local descriptors and feature description capabilities. In particular, we intend to adapt tree-based image representations from mathematical morphology to this context, design content descriptors specifically for botanical objects and investigate the potential of human-assisted feature extraction. In addition, we will work on improving the segmentation and recognition of leaves from foliage photos.

References

Belhumeur, P.N., Chen, D., Feiner, S., Jacobs, D.W., Kress, W.J., Ling, H., Lopez, I., Ramamoorthi, R., Sheorey, S., White, S., Zhang, L.: Searching the world’s herbaria: a system for visual identification of plant species. In: European Conference on Computer Vision, pp. 116–129, Marseille (2008)

Bruno, O.M., Plotze, R.de O., Falvo, M., de Castro, M.: Fractal dimension applied to plant identification. Inf. Sci. 178(12), 2722–2733 (2008)

Goëau, H., Bonnet, P., Joly, A., Boujemaa, N., Barthelemy, D., Molino, J., Birnbaum, P., Mouysset, E., Picard, M.: The CLEF 2011 plant image classification task. In: CLEF 2011 Working Notes, Amsterdam (2011)

Neto, J.C., Meyer, G.E., Jones, D.D.: Individual leaf extractions from young canopy images using Gustafson–Kessel clustering and a genetic algorithm. Comput. Electron. Agric. 51(1–2), 66–85 (2006)

Yahiaoui, I., Hervé, N., Boujemaa, N.: Shape-based image retrieval in botanical collections. In: Pacific-Rim Conference on Multimedia, pp. 357–364, Hangzhou (2006)

Park, J., Hwang, E., Nam, Y.: Utilizing venation features for efficient leaf image retrieval. J. Syst. Softw. 81(1), 71–82 (2008)

Wang, X.-F., Huang, D.-S., Du, J.-X., Xu, H., Heutte, L.: Classification of plant leaf images with complicated background. Appl. Math. Comput. 205(2), 916–926 (2008)

Teng, C.H., Kuo, Y.T., Chen, Y.S.: Leaf segmentation, its 3d position estimation and leaf classification from a few images with very close viewpoints. In: International Conference on Image Analysis and Recognition, pp. 937–946, Halifax (2009)

Villena-Román, J., Lana-Serrano, S., González-Cristóbal, J.C.: In: Proceeding of CLEF 2011 Labs and Workshop, Notebook Papers. Amsterdam, The Netherlands (2011)

Paris, S., Halkias, X., Glotin, H.: Participation of LSIS/DYNI to ImageCLEF 2012 plant images classification task. In: Proceeding of CLEF 2012 Labs and Workshop, Notebook Papers. Rome, Italy (2012)

Goëau, H., Bonnet, P., Joly, A., Yahiaoui, I., Barthelemy, D., Boujemaa, N., Molino, J.: The ImageCLEF 2012 plant image identification task. In: ImageCLEF 2012 Working Notes, Rome (2012)

Wang, Z., Chi, Z., Feng, D.: Shape based leaf image retrieval. IEE Proc. Vis. Image Signal Process. 150(1), 34–43 (2003)

Wang, Z., Chi, Z., Feng, D., Wang, Q.: Leaf image retrieval with shape features. In: Proceedings of the International Conference on Advances in Visual Information Systems, pp. 477–487, London (2000)

Wang, Z., Lu, B., Chi, Z., Feng, D.: Leaf image classification with shape context and sift descriptors. In: International Conference on Digital Image Computing Techniques and Applications, pp. 650–654, Queensland (2011)

Neto, J.C., Meyer, G.E., Jones, D.D., Samal, A.K.: Plant species identification using elliptic Fourier leaf shape analysis. Comput. Electron. Agric. 50(2), 121–134 (2006)

Nam, Y., Hwang, E., Kim, D.: A similarity-based leaf image retrieval scheme: joining shape and venation features. Comput. Vis. Image Underst. 110(2), 245–259 (2008)

Lin, F., Zheng, C., Wang, X., Man, Q.: Multiple classification of plant leaves based on Gabor transform and LBP operator. In: Communications in Computer and Information Science, pp. 432–439, Shanghai (2008)

Beghin, T., Cope, J.S., Remagnino, P., Barman, S.: Shape and texture based plant leaf classification. In: Advanced Concepts for Intelligent Visual Systems, pp. 345–353, Sydney (2010)

Hussein, A.N., Mashohor, S., Saripan, M.I.: A texture-based approach for content based image retrieval system for plant leaves images. In: International Colloquium on Signal Processing and its Applications, pp. 11–14, Penang (2011)

Man, Q., Zheng, C., Wang, X., Lin, F.: Recognition of plant leaves using support vector machine. In: Communications in Computer and Information Science, vol. 15, pp. 192–199, Shanghai (2008)

Chen, S.Y., Lee, C.L.: Classification of leaf images. Int. J. Imaging Syst. Technol. 16(1), 15–23 (2006)

Hossain, J., Amin, M.A.: Leaf shape identification based plant biometrics. In: International Conference on Computer and Information Technology, pp. 458–463, Dhaka (2010)

Du, J.X., Wang, X.-F., Zhang, G.-J.: Leaf shape based plant species recognition. Appl. Math. Comput. 185(2), 883–893 (2007)

Backes, A.R., Bruno, O.M.: Shape classification using complex network and multi-scale fractal dimension. Pattern Recogn. Lett. 31(1), 44–51 (2010)

Casanova, D., Florindo, J.B., Gonçalves, W.N., Bruno, O.M.: IFSC/USP at ImageCLEF 2012: plant identification task. In: Proceeding of CLEF 2012 Labs and Workshop, Notebook Papers. Rome, Italy (2012)

Zhang, S., Lei, Y., Dong, T., Zhang, X.-P.: Label propagation based supervised locality projection analysis for plant leaf classification. Pattern Recogn. 46(7), 1891–1897 (2013)

Manh, A.G., Rabatel, G., Assemat, L., Aldon, M.J.: Weed leaf image segmentation by deformable templates. J. Agric. Eng. Res. 80(2), 139–146 (2001)

Tang, X., Liu, M., Zhao, H., Tao, W.: Leaf extraction from complicated background. In: 2nd International Congress on Image and Signal Processing, pp. 1–5, Tianjin (2009)

Valliammal, N., Geethalakshmi, S.N.: Hybrid image segmentation algorithm for leaf recognition and characterization. In: International Conference on Process Automation, Control and Computing, pp. 1–6, Tamilnadu (2011)

Kebapci, H., Yanikoglu, B., Unal, G.: Plant image retrieval using color, shape and texture features. Comput. J. 53(1), 1–16 (2010)

White, S.M., Marino, D., Feiner, S.: Designing a mobile user interface for automated species identification. In: Conference on Human Factors in Computing Systems, pp. 291–294, San Jose (2007)

Nilsback, M.E., Zisserman, A.: Delving deeper into the whorl of flower segmentation. Image Vis. Comput. 28(6), 1049–1062 (2009)

Saitoh, T., Aoki, K., Kaneko, T.: Automatic recognition of blooming flowers. In: International Conference on Pattern Recognition, vol. 1, pp. 27–30, Cambridge (2004)

Söderkvist, O.J.O.: Computer vision classification of leaves from Swedish trees. Master’s thesis, Linköping University, Linköping (2001)

The ICL leaf dataset. http://www.intelengine.cn/English/dataset/ (2010). Accessed Mar 2014

Agarwal, G., Belhumeur, P., Feiner, S., Jacobs, D., Kress, J.W.R., Ramamoorthi, N.B., Dixit, N., Ling, H., Russell, D., Mahajan, R., Shirdhonkar, S., Sunkavalli, K., White, S.: First steps toward an electronic field guide for plants. Taxon 55(3), 597–610 (2006)

Abbasi, S., Mokhtarian, F., Kittler, J.: Reliable classification of chrysanthemum leaves through curvature scale space. In: International Conference on Scale-Space Theory in Computer Vision, pp. 284–295, Utrecht (1997)

Mokhtarian, F., Abbasi, S.: Matching shapes with self-intersections: application to leaf classification. IEEE Trans. Image Process. 13(5), 653–661 (2004)

Im, C., Nishida, H., Kunii, T.L.: Recognizing plant species by leaf shapes—a case study of the acer family. In: International Conference on Pattern Recognition, vol. 2, pp. 1171–1173, Brisbane (1998)

Pérez, A.J., López, F., Benlloch, J.V., Christensen, S.: Colour and shape analysis techniques for weed detection in cereal fields. Comput. Electron. Agric. 25(3), 197–212 (2000)

Arora, A., Gupta, A., Bagmar, N., Mishra, S., Bhattacharya, A.: A plant identification system using shape and morphological features on segmented leaflets: team IITK, CLEF 2012. In: Proceeding of CLEF 2012 Labs and Workshop, Notebook Papers. Rome, Italy (2012)

Bakic, V., Yahiaoui, I., Mouine, S., Litayem, S., Ouertani, W., Verroust-Blondet, A., Goëau, H., Joly, A.: Inria IMEDIA2’s participation at ImageCLEF 2012 plant identification task. In: Proceeding of CLEF 2012 Labs and Workshop, Notebook Papers. Rome, Italy (2012)

Zheng, P., Zhao, Z.-Q., Glotin, H.: ZhaoHFUT at ImageCLEF 2012 plant identification task. In: Proceeding of CLEF 2012 Labs and Workshop, Notebook Papers. Rome, Italy (2012)

Böttcher, T., Schmidt, C., Zellhöfer, D., Schmitt, I.: BTU DBIS’ plant identification runs at ImageCLEF 2012. In: Proceeding of CLEF 2012 Labs and Workshop, Notebook Papers. Rome, Italy (2012)

Manjunath, B.S., Ohm, J.R., Vasudevan, V.V., Yamada, A.: Color and texture descriptors. IEEE Trans. Circuits Syst. Video Technol. 11(6), 703–715 (2001)

Yanikoglu, B., Aptoula, E., Tirkaz, C.: Sabanci-Okan system at ImageCLEF 2012: combining features and classifiers for plant identification. In: CLEF Working Notes, Rome (2012)

Otsu, N.: A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9(1), 62–66 (1979)

Soille, P.: Constrained connectivity for hierarchical image partitioning and simplification. IEEE Trans. Pattern Anal. Mach. Intell. 30(7), 1132–1145 (2008)

Aptoula, E., Lefèvre, S.: A basin morphology approach to colour image segmentation by region merging. In: Proceedings of the Asian Conference in Computer Vision, vol. 4843, pp. 935–944, Tokyo (2007)

Aptoula, E., Lefèvre, S.: On the morphological processing of hue. Image Vis. Comput. 27(9), 1394–1401 (2009)

Beucher, S., Meyer, F.: The morphological approach to segmentation: the watershed transformation. In: Dougherty, E.R. (ed.) Mathematical Morphology in Image Processing, pp. 433–482. Dekker, New York (1993)

Knight, D., Painter, J., Potter, M.: Automatic plant leaf classification for a mobile field guide (2010)

Shu, X., Wu, X.-J.: A novel contour descriptor for 2d shape matching and its application to image retrieval. Image Vis. Comput. 29(4), 286–294 (2011)

Aptoula, E.: Comparative study of moment based parameterization for morphological texture description. J. Vis. Commun. Image Represent. 23(8), 1213–1224 (2012)

Aptoula, E.: Extending morphological covariance. Pattern Recogn. 45(12), 4524–4535 (2012)

Yanikoglu, B., Aptoula, E., Tirkaz, C.: Sabanci-Okan system at ImageCLEF 2011: plant identification task. In: CLEF Working Notes, Amsterdam (2011)

Ma, W.Y., Manjunath, B.S.: Texture features and learning similarity. In: Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), pp. 425–430 (1996)

Manjunath, B.S., Ma, W.Y.: Texture features for browsing and retrieval of image data. IEEE Trans. Pattern Anal. Mach. Intell. 18(8), 837–842 (1996)

Hanbury, A.: Circular statistics applied to colour images. In: Computer Vision Winter Workshop, pp. 55–60, Valtice (2003)

Mindru, F., Tuytelaars, T., Van Gool, L., Moons, T.: Moment invariants for recognition under changing viewpoint and illumination. Comput. Vis. Image Underst. 94(1–3), 3–27 (2004)

Acknowledgments

The authors would like to thank the anonymous reviewers, whose remarks helped improve this article substantially.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Yanikoglu, B., Aptoula, E. & Tirkaz, C. Automatic plant identification from photographs. Machine Vision and Applications 25, 1369–1383 (2014). https://doi.org/10.1007/s00138-014-0612-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00138-014-0612-7