Abstract

The global asymptotic stability of impulsive stochastic Cohen–Grossberg neural networks with mixed delays and reaction–diffusion terms is investigated. Under suitable assumptions, the Lyapunov–Krasovskii functional method and the linear matrix inequality (LMI) technique are used to derive new sufficient conditions for the global asymptotic stability of the addressed model in the mean square. The mixed time delays comprise both time-varying and continuously distributed delays. The effectiveness of the theoretical results is illustrated by a numerical example.


Introduction

The Cohen–Grossberg neural network (CGNN) was first introduced by Cohen and Grossberg (1983). In recent years, CGNNs, which include the well-known Hopfield neural networks, cellular neural networks and Lotka–Volterra competition models as special cases, have received extensive attention because of their wide range of applications in areas such as optimization, pattern recognition, associative memory, robotics and computer vision. In such applications, it is of prime importance to ensure that the designed neural network is stable (Zhang and Wang 2008; Yang and Cao 2014; Qi et al. 2014; Yang et al. 2014; Li and Xu 2012; Zhou et al. 2007; Li and Song 2008, 2013; Li and Shen 2010; Li et al. 2010).

In the implementation of neural networks, time delays are unavoidable because of the finite switching speed of neurons and amplifiers. It has been found that time delays may lead to instability and oscillation in a neural network (Wang et al. 2006; Li 2010; Pan and Zhong 2010; Zhang et al. 2011; Zhang and Luo 2012; Qiu 2007; Liu et al. 2011; Yang et al. 2010; Li and Li 2009). For example, Wang et al. (2006) considered the asymptotic stability of stochastic CGNNs with mixed time delays by using a Lyapunov–Krasovskii functional and the LMI technique.

In practice, a real system is usually affected by external perturbations, which in many cases are highly uncertain. Hence, it is necessary to consider the effect of stochastic perturbations on the stability of neural networks. On the other hand, as is well known, artificial neural networks are often subject to impulsive perturbations, which can affect the dynamical behavior of the system; such perturbations may make stable systems unstable, or unstable systems stable. Therefore, impulsive effects should also be taken into account (Li et al. 2011; Fu and Li 2011; Li and Xu 2012; Wang and Xu 2009; Zhang et al. 2012; Hespanha et al. 2008; Wan and Zhou 2008; Li and Li 2009). Fu and Li (2011) investigated the asymptotic stability of impulsive stochastic CGNNs with mixed time delays by using a Lyapunov–Krasovskii functional and the LMI technique.

However, diffusion effects cannot be avoided in neural networks when electrons move in asymmetric electromagnetic fields. Hence, it is essential to consider state variables that vary in both time and space. Several criteria for global exponential stability have been obtained in recent years (Wan and Zhou 2008; Li and Li 2009; Wang and Zhang 2010; Li et al. 2012; Pan et al. 2010; Zhu et al. 2011; Zhou et al. 2012). Wan and Zhou (2008) investigated the exponential stability of stochastic reaction–diffusion CGNNs with delays. Li and Li (2009) and Wang and Zhang (2010) investigated the asymptotic stability of impulsive CGNNs with distributed delays and reaction–diffusion terms by using M-matrix theory and the LMI technique. Li et al. (2012) investigated the mean square exponential stability of impulsive stochastic reaction–diffusion CGNNs with delays. However, in these derivations and results the diffusion terms have no effect on the stability criteria.

In the theory of partial differential equations, the Poincaré integral inequality is often used to handle diffusion terms. Pan et al. (2010), Zhu et al. (2011) and Zhou et al. (2012) studied reaction–diffusion neural networks with Neumann boundary conditions by using the Poincaré integral inequality.

Motivated by the above discussion, our objective in this paper is to investigate the asymptotic stability in the mean square of impulsive stochastic CGNNs with mixed delays and reaction–diffusion terms. By using the Lyapunov–Krasovskii functional method, the LMI technique (Boyd et al. 1994) and the Poincaré inequality, we obtain sufficient conditions in terms of LMIs, which can be easily checked with the MATLAB LMI toolbox.

The rest of the paper is organized as follows. In the second section, we introduce the model and some preliminaries. In the third section, we give two main results and their proofs. In the fourth section, we give a numerical example to show the effectiveness of the obtained results. Finally, we draw conclusions.

Problem statement and preliminaries

In this paper, the notation \(\fancyscript{A}>0\) (respectively \(\fancyscript{A}<0\)) means that the matrix \(\fancyscript{A}\) is symmetric and positive definite (respectively negative definite). \(\fancyscript{A}^T\) denotes the transpose of \(\fancyscript{A}\), and \(\fancyscript{A}^{-1}\) denotes the inverse of a square matrix \(\fancyscript{A}\). If \(\fancyscript{A}\) and \(\fancyscript{B}\) are symmetric matrices, \(\fancyscript{A}>\fancyscript{B}\) means that \(\fancyscript{A}-\fancyscript{B}\) is positive definite. \(I\) denotes the identity matrix, \(\lambda _{max}(\cdot )\) denotes the largest eigenvalue of a symmetric matrix, and \(*\) denotes the symmetric block in a symmetric matrix.

Consider the following impulsive stochastic CGNNs with mixed delays and reaction–diffusion terms

where \(i\in N=\{1,2,\ldots, n\}\) and \(n\) is the number of units in the neural network; \(x=(x_1,\ldots, x_m)^T\in X\), where \(X\subset R^m\) is a compact set with smooth boundary \(\partial X\) and \({\textit{mes}}X>0\), \({\textit{mes}}X\) being the measure of \(X\); \(y_i(t,x)\) represents the state of the \(i\)th neuron at time \(t\) and position \(x\); \(a_i(y_i(t,x))\) represents an amplification function; \(f_j,g_j,\bar{g}_j,\tilde{g}_j\) denote the activation functions of the \(j\)th neuron; \(c_{ij},d_{ij},\bar{d}_{ij},\tilde{d}_{ij}\) denote the connection strengths of the \(j\)th unit on the \(i\)th unit; \(\tau (t)\) is the transmission delay and satisfies \(0\le \tau (t)\le \tau \) and \(\dot{\tau }(t)\le \rho <1\); \(0\le \mu (t)\le \mu \); and \(\tau, \rho, \mu \) are real constants. \(\omega (t)=(\omega _1(t),\ldots, \omega _n(t))^T\) is an \(n\)-dimensional Brownian motion defined on a complete probability space \((\Omega,\fancyscript{F},P)\) with the natural filtration \(\{\fancyscript{F}_t\}_{t\ge 0}\) generated by \(\{\omega (s):0\le s\le t\}\), where \(\Omega \) is the canonical space generated by \(\omega (t)\) and \(\fancyscript{F}\) is the associated \(\sigma \)-algebra generated by \(\omega (t)\) with probability measure \(P\). \(w_{ik}\ge 0\) is the transmission diffusion coefficient along the \(i\)th neuron.

The Neumann boundary condition and initial conditions of system (1) are given by

Throughout this paper, we make the following assumptions:

- (H1) Each function \(a_i(u)\) is bounded, positive and continuous; i.e., there exist constants \(\overline{a}_i, \underline{a}_i\) such that \(0<\underline{a}_i\le a_i(u)\le \overline{a}_i\) for \(u\in R, i\in N\).

- (H2) \(\dfrac{b_i(s_1)-b_i(s_2)}{s_1-s_2}\ge b_i>0\) for all \(i\in N\) and \(s_1,s_2\in R\), \(s_1\ne s_2\).

- (H3) \(f_j,g_j,\bar{g}_j,\tilde{g}_j\) are Lipschitz continuous with Lipschitz constants \(F_j,L_j,\bar{L}_j,\tilde{L}_j\), respectively, for \(j\in N\).

- (H4) The delay kernels \(k_{j}(\cdot ):[0,+\infty )\rightarrow [0,+\infty )\), \(j\in N\), are real-valued nonnegative continuous functions satisfying \(\int _0^{+\infty }k_{j}(s)ds=1\).

- (H5) The diffusion coefficient (noise intensity) \(\sigma (\cdot )=(\sigma _{ij})\) is locally Lipschitz continuous and satisfies the linear growth condition. Moreover, there exist \(n\times n\) matrices \(\Gamma _j>0\), \(j=1,2\), such that

$$\begin{aligned} \mathrm{trace}[\sigma ^T\sigma ]\le y^T(t,x)\Gamma _1 y(t,x)+ y^T(t-\tau (t),x)\Gamma _2 y(t-\tau (t),x). \end{aligned}$$

- (H6) The impulsive times \(t_k\) satisfy \(0<t_0<t_1<\cdots <t_k<t_{k+1}<\cdots \) and \(\lim _{k\rightarrow \infty }t_k=\infty \).

- (H7) \(b_i(0)=f_j(0)=g_j(0)=\bar{g}_j(0)=\tilde{g}_j(0)=0\) and \(\sigma (0,0,0)=0\).

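Let \(L^2(X)\) be the space of real-valued, square-integrable Lebesgue measurable functions on \(X\); it is a Banach space under the \(L_2\)-norm

$$\begin{aligned} \Vert u\Vert _2=\left( \int _X |u(x)|^2dx\right) ^{1/2}. \end{aligned}$$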

Then for any \(u=(u_1,u_2,\ldots,u_n)^T\), the norm \(\Vert u\Vert \) is defined as

Definition 2.1

The trivial solution of model (1) is said to be globally stochastically asymptotically stable in the mean square if the following condition holds for any initial condition \(\varphi \in C_{\fancyscript{F}}^2\):

Lemma 2.2

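(Poincaré Integral Inequality, Temam 1998) Let \(\Omega \subset R^m\) \((m>2)\) be a bounded open set containing the origin, and let \(v(x)\in H_0^1(\Omega )=\{\omega \mid \omega \vert _{\partial \Omega }=0,\ \omega \in L^{2}(\Omega ),\ D_{i}\omega =\frac{\partial \omega }{\partial x_{i}}\in L^{2}(\Omega ),\ 1\le i\le m\}\) with \(\frac{\partial v(x)}{\partial m}\mid _{\partial \Omega }=0\). Then

$$\begin{aligned} \lambda _1\int _\Omega |v(x)|^2dx\le \int _\Omega |\nabla v(x)|^2dx, \end{aligned}$$

where \(\lambda _1\) is the smallest positive eigenvalue of the Neumann boundary problem

$$\begin{aligned} -\Delta \psi (x)=\lambda \psi (x),\quad x\in \Omega ,\qquad \frac{\partial \psi (x)}{\partial m}\Big |_{\partial \Omega }=0. \end{aligned}$$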

Lemma 2.3

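(Schur complement, Boyd et al. 1994) For a given symmetric matrix

$$\begin{aligned} S=\left( \begin{array}{cc} S_{11} & S_{12}\\ S_{12}^T & S_{22} \end{array}\right) , \end{aligned}$$

where \(S^T_{11}=S_{11}\) and \(S^T_{22}=S_{22}\), the condition \(S>0\) is equivalent to either of the following conditions:

1. \(S_{22}>0,\ S_{11}-S_{12}S^{-1}_{22}S^T_{12}>0\);
2. \(S_{11}>0,\ S_{22}-S_{12}^TS^{-1}_{11}S_{12}>0\).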
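Lemma 2.3 is the step that converts \(\Xi <0\) into the LMI \(\Xi ^\star <0\) in the proof of Theorem 3.1. As a quick numerical sanity check, the NumPy sketch below tests \(S>0\) directly through eigenvalues and through both Schur-complement conditions on random symmetric blocks. The helper names `is_pos_def` and `schur_checks` are our own, not from the paper; for the negative-definite form used for \(\Xi ^\star \), the same test can be applied to \(-S\).

```python
import numpy as np


def is_pos_def(M, tol=1e-12):
    """True if the (symmetrized) matrix M is positive definite: smallest eigenvalue > tol."""
    return bool(np.linalg.eigvalsh((M + M.T) / 2).min() > tol)


def schur_checks(S11, S12, S22):
    """Return (S > 0, condition 1, condition 2) for S = [[S11, S12], [S12^T, S22]]."""
    S = np.block([[S11, S12], [S12.T, S22]])
    direct = is_pos_def(S)
    cond1 = is_pos_def(S22) and is_pos_def(S11 - S12 @ np.linalg.solve(S22, S12.T))
    cond2 = is_pos_def(S11) and is_pos_def(S22 - S12.T @ np.linalg.solve(S11, S12))
    return direct, cond1, cond2


rng = np.random.default_rng(0)
agreements = 0
for _ in range(200):
    A = rng.standard_normal((3, 3))
    B = rng.standard_normal((2, 2))
    S11 = A @ A.T - 0.5 * np.eye(3)          # symmetric, possibly indefinite
    S22 = B @ B.T - 0.5 * np.eye(2)
    S12 = 0.5 * rng.standard_normal((3, 2))
    direct, c1, c2 = schur_checks(S11, S12, S22)
    agreements += int(direct == c1 == c2)    # all three tests should give the same verdict
print(agreements, "of 200 random cases agree")
```

Checking through eigenvalues rather than a Cholesky factorization keeps the sketch short; in an actual LMI solver the matrix variables would be decision variables rather than fixed arrays.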

Lemma 2.4

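For any constant matrix \(\Delta \in R^{n\times n}\) with \(\Delta =\Delta ^T>0\), scalars \(a<b\), and vector function \(\delta (t):[a,b]\rightarrow R^n\) such that the integrations concerned are well defined, the following inequality holds:

$$\begin{aligned} \left( \int _a^b\delta (s)ds\right) ^T\Delta \left( \int _a^b\delta (s)ds\right) \le (b-a)\int _a^b\delta ^T(s)\Delta \delta (s)ds. \end{aligned}$$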
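Lemma 2.4 is a Jensen-type integral inequality; in the proof it is combined with \(0\le \mu (t)\le \mu \), presumably on the window \([t-\mu (t),t]\). The NumPy sketch below compares midpoint-rule approximations of both sides for a hypothetical \(\Delta \) and a hypothetical vector function \(\delta \) (neither comes from the paper). For this discretization the inequality holds exactly, by the Cauchy–Schwarz inequality in the \(\Delta \)-inner product, with equality for constant \(\delta \).

```python
import numpy as np


def jensen_sides(delta, Delta, a, b, N=2000):
    """Midpoint-rule values of both sides of Lemma 2.4 for a vector function delta on [a, b]."""
    h = (b - a) / N
    s = a + h * (np.arange(N) + 0.5)                 # midpoints of the N subintervals
    vals = np.array([delta(si) for si in s])         # shape (N, n): delta sampled at midpoints
    integral = h * vals.sum(axis=0)                  # approximates int_a^b delta(s) ds
    lhs = integral @ Delta @ integral
    rhs = (b - a) * h * np.einsum("ki,ij,kj->", vals, Delta, vals)
    return float(lhs), float(rhs)


def delta(s):
    """Hypothetical 2-dimensional vector function."""
    return np.array([np.sin(3.0 * s), np.exp(-s)])


Delta = np.array([[2.0, 0.5], [0.5, 1.0]])           # symmetric positive definite
lhs, rhs = jensen_sides(delta, Delta, 0.0, 0.1)
print(lhs, "<=", rhs)
```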

Lemma 2.5

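For any \(n\)-dimensional real vectors \(x,y\), any scalar \(\varepsilon >0\) and any positive definite matrix \(P\in R^{n\times n}\), the following inequality holds:

$$\begin{aligned} 2x^Ty\le \varepsilon x^TPx+\varepsilon ^{-1}y^TP^{-1}y. \end{aligned}$$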
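The inequality of Lemma 2.5 follows from expanding \((\sqrt{\varepsilon }P^{1/2}x-\varepsilon ^{-1/2}P^{-1/2}y)^T(\sqrt{\varepsilon }P^{1/2}x-\varepsilon ^{-1/2}P^{-1/2}y)\ge 0\), with equality when \(y=\varepsilon Px\). With \(P=I\) and \(\varepsilon =1\) it reduces to \(2x^Ty\le x^Tx+y^Ty\), which is presumably how cross terms such as \(2y^TP\overline{A}Cf(y)\) are split into the \(P\overline{A}CC^T\overline{A}^TP+F^TF\) part of \(\Xi \) in the proof of Theorem 3.1. The NumPy sketch below (the helper `young_gap` is hypothetical) checks the inequality on random data and the equality case.

```python
import numpy as np


def young_gap(x, y, P, eps):
    """Right-hand side minus left-hand side of Lemma 2.5 (nonnegative whenever P > 0, eps > 0)."""
    rhs = eps * (x @ P @ x) + (y @ np.linalg.solve(P, y)) / eps
    return float(rhs - 2.0 * (x @ y))


rng = np.random.default_rng(1)
gaps = []
for _ in range(500):
    M = rng.standard_normal((4, 4))
    P = M @ M.T + 0.1 * np.eye(4)                     # random positive definite matrix
    x, y = rng.standard_normal(4), rng.standard_normal(4)
    eps = rng.uniform(0.1, 10.0)
    gaps.append(young_gap(x, y, P, eps))
print(min(gaps) >= -1e-9)

x = np.array([1.0, -2.0, 0.5, 3.0])
P = np.diag([1.0, 2.0, 3.0, 4.0])
print(young_gap(x, 0.7 * P @ x, P, 0.7))             # equality case y = eps * P x
```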

Main results

Theorem 3.1

If assumptions (H1)–(H7) hold and there exist diagonal matrices \(P>0\), \(H>0\) and symmetric matrices \(Q>0\), \(R>0\) such that the following conditions hold:

- (a) The matrix inequality

$$\begin{aligned} \Xi ^\star =\left( \begin{array}{ccccc} \Sigma & P\overline{A}C & P\overline{A}D &P\overline{A}\bar{D} &P\overline{A}\tilde{D}\\ *& -I & 0& 0 & 0\\ *& *& -Q& 0 & 0\\ *& *& *& -R & 0\\ *&*&*& *& -H\\ \end{array}\right) <0 \end{aligned}\tag{4}$$

holds, where \(\lambda _1\) is the smallest positive eigenvalue of the Neumann boundary problem (2),

$$\begin{aligned} \Sigma&= -2\lambda _1 PW-2P\underline{A}B+F^TF+\lambda _2\Gamma _1+\frac{\lambda _2}{1-\rho } \Gamma _2+\frac{1}{1-\rho }L^TQL+\mu ^2\bar{L}^TR\bar{L}+\tilde{L}^TH\tilde{L},\\ \lambda _2&= \lambda _{max}(P),\quad \underline{A}={\textit{diag}}\{\underline{a}_1, \underline{a}_2,\ldots,\underline{a}_n\},\quad \overline{A}={\textit{diag}}\{\overline{a}_1,\overline{a}_2,\ldots,\overline{a}_n\},\\ B&= {\textit{diag}}\{b_1,\ldots,b_n\},\quad F={\textit{diag}}\{F_1,\ldots,F_n\},\quad L={\textit{diag}}\{L_1,\ldots,L_n\},\\ \bar{L}&= {\textit{diag}}\{\bar{L}_1,\ldots,\bar{L}_n\},\quad \tilde{L}={\textit{diag}}\{\tilde{L}_1,\ldots,\tilde{L}_n\},\\ W&= {\textit{diag}}\{w_1,w_2,\ldots,w_n\},\quad w_i={\textit{min}}_{1\le k\le m}\{w_{ik}\},\\ C&= (c_{ij})_{n\times n},\quad D= (d_{ij})_{n\times n},\quad \bar{D}=(\bar{d}_{ij})_{n\times n},\quad \tilde{D}=(\tilde{d}_{ij})_{n\times n}; \end{aligned}$$

- (b) \(J_{ik}(y_i(t_k^-,x))=-r_{ik}y_i(t_k^-,x)\) with \(r_{ik}\in [0,2]\),

then the equilibrium point of system (1) is globally stochastically asymptotically stable in the mean square.

Proof

Construct the following Lyapunov–Krasovskii functional:

where

Then we compute \(\fancyscript{L}V_1,\fancyscript{L}V_2,\fancyscript{L}V_3,\fancyscript{L}V_4,\fancyscript{L}V_5\) along the trajectories of model (1).
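For orientation, here is the kind of estimate produced by the step that uses the Poincaré inequality, the Green formula and the boundary condition, written out under an assumption: since the display of model (1) is not reproduced here, we take the reaction–diffusion term of the \(i\)th equation to have the usual form \(\sum _{k=1}^m\frac{\partial }{\partial x_k}\big (w_{ik}\frac{\partial y_i}{\partial x_k}\big )\) with constant \(w_{ik}\ge 0\). Green's formula, the Neumann boundary condition, \(w_i=\min _k w_{ik}\) and Lemma 2.2 then give, with \(P={\textit{diag}}\{p_1,\ldots ,p_n\}\),

```latex
\begin{aligned}
\int_X y_i \sum_{k=1}^m \frac{\partial}{\partial x_k}\Big(w_{ik}\frac{\partial y_i}{\partial x_k}\Big)dx
&= -\sum_{k=1}^m w_{ik}\int_X \Big(\frac{\partial y_i}{\partial x_k}\Big)^2 dx
 \le -w_i \int_X |\nabla y_i|^2 dx
 \le -\lambda_1 w_i \int_X y_i^2\, dx,\\
2\sum_{i=1}^n p_i \int_X y_i \sum_{k=1}^m \frac{\partial}{\partial x_k}\Big(w_{ik}\frac{\partial y_i}{\partial x_k}\Big)dx
&\le -2\lambda_1 \int_X y^T P W y\, dx .
\end{aligned}
```

This is one way the term \(-2\lambda _1PW\) in \(\Sigma \) can arise; it also explains why a larger diffusion coefficient or a larger \(\lambda _1\) makes the stability condition easier to satisfy.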

From Lemma 2.4 and the fact that \(0\le \mu (t)\le \mu \), we get

By the well-known Cauchy–Schwarz inequality, we have

By using the Poincaré inequality, Green's formula and the Neumann boundary condition, it is easy to verify that

In the same way, we obtain

where \(\Xi ={-2\lambda _1}PW-2P\underline{A}B+ P\bar{A}CC^T\bar{A}^TP+F^TF+P\bar{A}DQ^{-1}D^T\bar{A}^TP+ P\bar{A}\bar{D}R^{-1}\bar{D}^T\bar{A}^TP+P\bar{A}\tilde{D}H^{-1}\tilde{D}^T\bar{A}^TP+ \lambda _2\Gamma _1+\frac{\lambda _2}{1-\rho }\Gamma _2+\frac{1}{1-\rho }L^TQL +\mu ^2\bar{L}^TR\bar{L}+\tilde{L}^TH\tilde{L}\).
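To make the role of each term in \(\Xi \) concrete, the NumPy sketch below assembles \(\Xi \) exactly as written above and returns its largest eigenvalue. The values \(\underline{A}=I\), \(\overline{A}=2I\), \(B=2.16I\), \(W=0.05I\), \(\lambda _1=1\), \(\rho =0.5\), \(\mu =0.1\) and the Lipschitz matrices \({\textit{diag}}\{0.1,1\}\) are read off Example 4.1 (the bounds on \(a_i\) and the Lipschitz constants are our own computation from the functions given there). Every other matrix (\(P,Q,R,H,C,D,\bar{D},\tilde{D},\Gamma _1,\Gamma _2\)) is a hypothetical placeholder, not a value from the paper, so the output says nothing about the paper's example.

```python
import numpy as np


def xi_matrix(m):
    """Assemble Xi from the proof of Theorem 3.1; m maps symbol names to n x n arrays or scalars."""
    P, PA = m["P"], m["P"] @ m["A_hi"]                     # PA = P * overline{A}
    lam2 = np.linalg.eigvalsh(P).max()                     # lambda_2 = lambda_max(P)
    inv = np.linalg.solve                                  # inv(Q, X) = Q^{-1} X
    Xi = (-2 * m["lam1"] * P @ m["W"] - 2 * P @ m["A_lo"] @ m["B"]
          + PA @ m["C"] @ m["C"].T @ PA.T + m["F"].T @ m["F"]
          + PA @ m["D"] @ inv(m["Q"], m["D"].T) @ PA.T
          + PA @ m["Dbar"] @ inv(m["R"], m["Dbar"].T) @ PA.T
          + PA @ m["Dtil"] @ inv(m["H"], m["Dtil"].T) @ PA.T
          + lam2 * m["G1"] + lam2 / (1 - m["rho"]) * m["G2"]
          + m["L"].T @ m["Q"] @ m["L"] / (1 - m["rho"])
          + m["mu"] ** 2 * m["Lbar"].T @ m["R"] @ m["Lbar"]
          + m["Ltil"].T @ m["H"] @ m["Ltil"])
    return (Xi + Xi.T) / 2                                 # symmetrize against round-off


I2 = np.eye(2)
Lip = np.diag([0.1, 1.0])                    # Lipschitz constants of (|s+1|-|s-1|)/20 and tanh
M = 0.05 * np.array([[1.0, -1.0], [0.5, 1.0]])   # hypothetical connection matrix
params = dict(
    P=I2, Q=I2, R=I2, H=I2,                  # hypothetical LMI variables
    A_lo=I2, A_hi=2 * I2, B=2.16 * I2, W=0.05 * I2,
    C=M, D=M, Dbar=M, Dtil=M,
    F=Lip, L=Lip, Lbar=Lip, Ltil=Lip,
    G1=0.1 * I2, G2=0.1 * I2,                # hypothetical noise bounds Gamma_1, Gamma_2
    lam1=1.0, rho=0.5, mu=0.1,
)
lam_max = np.linalg.eigvalsh(xi_matrix(params)).max()
print(lam_max < 0, lam_max)
```

In practice \(P,Q,R,H\) would be decision variables found by an LMI solver; this sketch only evaluates \(\Xi \) for fixed candidates.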

By Lemma 2.3 (applied to \(-\Xi ^\star \)), \(\Xi <0\) if and only if \(\Xi ^\star <0\), which holds by condition (a). Then, by Dynkin's formula (Ito and McKean 1965), for \(t\in (t_k,t_{k+1}]\), we have

Hence, for \(t\in (t_k,t_{k+1}],\) \(EV(t,y(t,x))\le EV(t_k^-,y(t_k^-,x))\).

On the other hand, when \(t=t_k^+,\) we have

Moreover, it is obvious that \(V_2(t_k)=V_2(t_k^-)\), \(V_3(t_k)=V_3(t_k^-)\) and \(V_4(t_k)=V_4(t_k^-)\). Hence \(V(t_k)\le V(t_k^-)\) and \(EV(t_k)\le EV(t_k^-)\).
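A worked version of the impulsive step, under two assumptions that are not spelled out in the text here: the jump is \(y_i(t_k,x)=y_i(t_k^-,x)+J_{ik}(y_i(t_k^-,x))\), and the first part of the functional is \(V_1=\int _X y^TPy\,dx\) with \(P={\textit{diag}}\{p_1,\ldots ,p_n\}\). Condition (b) of Theorem 3.1 then gives

```latex
\begin{aligned}
y_i(t_k,x) &= (1-r_{ik})\,y_i(t_k^-,x), \qquad r_{ik}\in[0,2]\ \Longleftrightarrow\ |1-r_{ik}|\le 1,\\
V_1(t_k) &= \sum_{i=1}^n p_i (1-r_{ik})^2 \int_X y_i^2(t_k^-,x)\,dx
 \le \sum_{i=1}^n p_i \int_X y_i^2(t_k^-,x)\,dx = V_1(t_k^-).
\end{aligned}
```

So the range \(r_{ik}\in [0,2]\) is exactly the range in which an impulse cannot increase \(V_1\).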

By the Lyapunov–Krasovskii stability theorem, we have \(\lim \limits _{t\rightarrow +\infty }E\Vert y\Vert ^2=0\). Hence the equilibrium point of (1) is globally stochastically asymptotically stable in the mean square. The proof is complete. \(\square \)

Remark 3.2

The conditions of Theorem 3.1 show that the diffusion coefficients, the Neumann boundary conditions, the delays, the stochastic perturbations and the system parameters all play key roles in the stability of system (1).

Remark 3.3

If we set \(w_{ik}=0\), system (1) reduces to the following impulsive stochastic CGNNs with mixed delays:

Constructing for system (19) the same Lyapunov–Krasovskii functional as in the proof of Theorem 3.1, with minor modifications, we obtain the following result.

Corollary 3.4

If assumptions (H1)–(H6) hold and there exist diagonal matrices \(P>0\), \(H>0\) and symmetric matrices \(Q>0\), \(R>0\) such that the following conditions hold:

1. The matrix inequality

$$\begin{aligned} \Xi ^\star =\left( \begin{array}{ccccc} \Sigma & P\overline{A}C & P\overline{A}D &P\overline{A}\bar{D} &P\overline{A}\tilde{D}\\ *& -I & 0& 0 & 0\\ *& *& -Q& 0 & 0\\ *& *& *& -R & 0\\ *&*&*& *& -H\\ \end{array}\right) <0 \end{aligned}\tag{20}$$

holds, where

$$\begin{aligned} \Sigma =-2P\underline{A}B+F^TF+\lambda _2\Gamma _1+\frac{\lambda _2}{1-\rho }\Gamma _2+\frac{1}{1-\rho }L^TQL+\mu ^2\bar{L} ^TR\bar{L}+\tilde{L}^TH\tilde{L}, \end{aligned}$$

\(\lambda _2=\lambda _{max}(P)\), \(\underline{A}={\textit{diag}}\{\underline{a}_1,\ldots,\underline{a}_n\}\), \(\overline{A}={\textit{diag}}\{\overline{a}_1,\ldots,\overline{a}_n\}\), \(B={\textit{diag}}\{b_1,\ldots,b_n\}\), \(F={\textit{diag}}\{F_1,\ldots,F_n\}\), \(L={\textit{diag}}\{L_1,\ldots,L_n\}\), \(\bar{L}={\textit{diag}}\{\bar{L}_1,\ldots,\bar{L}_n\}\), \(\tilde{L}={\textit{diag}}\{\tilde{L}_1,\ldots,\tilde{L}_n\}\), \(C=(c_{ij})_{n\times n}\), \(D=(d_{ij})_{n\times n}\), \(\bar{D}=(\bar{d}_{ij})_{n\times n}\) and \(\tilde{D}=(\tilde{d}_{ij})_{n\times n}\);
2. \(J_{ik}(y_i(t_k^-,x))=-r_{ik}y_i(t_k^-,x)\) with \(r_{ik}\in [0,2]\),

then the equilibrium point of system (19) is globally stochastically asymptotically stable in the mean square.

Numerical example

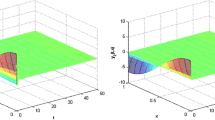

In order to illustrate the feasibility of the present criteria, we provide a concrete example.

Example 4.1

Consider the following impulsive stochastic CGNNs with mixed delays and reaction–diffusion terms

where the spatial domain is \(\Omega =\{(x_1,x_2)\mid |x_j|<\sqrt{2},j=1,2\}\), \(w_1=w_2=0.05\), \(a_1(s)=a_2(s)=1.5+0.5\sin s\), \(b_1(s)=b_2(s)=2.16s\), \(f_1(s)=g_1(s)=\bar{g}_1(s)=\tilde{g}_1(s)=\frac{|s+1|-|s-1|}{20}\), \(f_2(s)=g_2(s)=\bar{g}_2(s)=\tilde{g}_2(s)=\tanh (s)\), \(t_k-t_{k-1}=0.3\), \(\tau (t)=0.6-0.5\sin t\), \(\mu (t)=0.06+0.04\cos t\), \(k_{j}(s)=se^{-s}\) and \(\gamma _{ik}=1.5\).

By simple calculation, we have \(\lambda _1=1,\rho =0.5,\mu =0.1\),

Solving LMI (4) with the MATLAB LMI Control Toolbox, we obtain

Then, using MATLAB, we obtain \(\lambda _{max}(\Xi ^\star )=-2.1396<0\). By Theorem 3.1, the equilibrium point of model (21) is globally stochastically asymptotically stable in the mean square, as illustrated in Fig. 1.

If \(W=0\), we get

Then, using MATLAB, we obtain \(\lambda _{max}(\Xi ^\star )=-2.0020<0\). By Corollary 3.4, the equilibrium point of model (21) is globally stochastically asymptotically stable in the mean square, as illustrated in Fig. 2.
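The constants quoted in Example 4.1 (\(\rho =0.5\), \(\mu =0.1\)), together with bounds the example uses implicitly, can be reproduced with the standard-library sketch below (helper names are our own). The Lipschitz constants, \(\underline{a}_i=1\), \(\overline{a}_i=2\) and the exact Neumann eigenvalue are our own computations, not values stated in the paper. For the square \((-\sqrt{2},\sqrt{2})^2\) the smallest positive Neumann eigenvalue of \(-\Delta \) is \((\pi /(2\sqrt{2}))^2=\pi ^2/8\approx 1.234\), so \(\lambda _1=1\) is a lower bound; because \(P\) and \(W\) are positive diagonal matrices, a smaller \(\lambda _1\) only makes the term \(-2\lambda _1PW\) in \(\Sigma \) less negative, so using \(\lambda _1=1\) can only make the test more conservative.

```python
import math


def lipschitz_estimate(f, lo=-5.0, hi=5.0, n=100_000):
    """Largest finite-difference slope of f on a uniform grid over [lo, hi]."""
    h = (hi - lo) / n
    return max(abs(f(lo + (k + 1) * h) - f(lo + k * h)) / h for k in range(n))


def f1(s):
    """Activation f_1 = g_1 = bar g_1 = tilde g_1 of Example 4.1."""
    return (abs(s + 1) - abs(s - 1)) / 20


def example_bounds(n=100_000):
    ts = [2 * math.pi * k / n for k in range(n)]        # one period of tau(t), mu(t), sin s
    a_vals = [1.5 + 0.5 * math.sin(t) for t in ts]      # amplification a_i sampled over a period
    h = 1e-3                                            # midpoint rule for int_0^50 s*exp(-s) ds
    kernel_mass = h * sum((k + 0.5) * h * math.exp(-(k + 0.5) * h) for k in range(50_000))
    return {
        "tau": max(0.6 - 0.5 * math.sin(t) for t in ts),    # upper bound of tau(t)
        "rho": max(-0.5 * math.cos(t) for t in ts),         # sup of tau'(t) = -0.5 cos t
        "mu": max(0.06 + 0.04 * math.cos(t) for t in ts),   # upper bound of mu(t)
        "kernel_mass": kernel_mass,                         # (H4): should be close to 1
        "a_lo": min(a_vals), "a_hi": max(a_vals),
        "F1": lipschitz_estimate(f1), "F2": lipschitz_estimate(math.tanh),
        "lambda1_exact": math.pi ** 2 / 8,                  # Neumann eigenvalue on (-sqrt2, sqrt2)^2
    }


bounds = example_bounds()
for name, value in bounds.items():
    print(f"{name}: {value:.4f}")
```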

References

Boyd S, El Ghaoui L, Feron E, Balakrishnan V (1994) Linear matrix inequalities in system and control theory. SIAM studies in applied mathematics. SIAM, Philadelphia

Cohen M, Grossberg S (1983) Absolute stability of global pattern formation and parallel memory storage by competitive neural networks. IEEE Trans Syst Man Cybern 13:815–826

Fu X, Li X (2011) LMI conditions for stability of impulsive stochastic Cohen–Grossberg neural networks with mixed delays. Commun Nonlinear Sci Numer Simul 16:435–454

Hespanha J, Liberzon D, Teel A (2008) Lyapunov conditions for input-to-state stability of impulsive systems. Automatica 44(11):2735–2744

Ito K, McKean HP (1965) Diffusion processes and their sample paths. Springer, Berlin

Li K, Song Q (2008) Exponential stability of impulsive Cohen–Grossberg neural networks with time-varying delays and reaction–diffusion terms. Neurocomputing 72:231–240

Li Z, Li K (2009) Stability analysis of impulsive fuzzy cellular neural networks with distributed delays and reaction–diffusion terms. Chaos Solitons Fractals 42:492–499

Li Z, Li K (2009) Stability analysis of impulsive Cohen–Grossberg neural networks with distributed delays and reaction–diffusion terms. Appl Math Model 33:1337–1348

Li X, Shen J (2010) LMI approach for stationary oscillation of interval neural networks with discrete and distributed time varying delays under impulsive perturbations. IEEE Trans Neural Netw 21:1555–1563

Li X, Fu X, Balasubramaniam P, Rakkiyappan R (2010) Existence, uniqueness and stability analysis of recurrent neural networks with time delay in the leakage term under impulsive perturbations. Nonlinear Anal Real World Appl 11:4092–4108

Li X (2010) New results on global exponential stabilization of impulsive functional differential equations with infinite delays or finite delays. Nonlinear Anal Real World Appl 11:4194–4201

Li C, Shi J, Sun J (2011) Stability of impulsive stochastic differential delay systems and its application to impulsive stochastic neural networks. Nonlinear Anal 74:3099–3111

Li Z, Xu R (2012) Global asymptotic stability of stochastic reaction–diffusion neural networks with time delays in the leakage terms. Commun Nonlinear Sci Numer Simul 17:1681–1689

Li D, He D, Xu D (2012) Mean square exponential stability of impulsive stochastic reaction–diffusion Cohen–Grossberg neural networks with delays. Math Comput Simul 82:1531–1543

Li B, Xu D (2012) Existence and exponential stability of periodic solution for impulsive Cohen–Grossberg neural networks with time-varying delays. Appl Math Comput 219:2506–2520

Li X, Song S (2013) Impulsive control for stationary oscillation of recurrent neural networks with discrete and continuously distributed delays. IEEE Trans Neural Netw 24:868–877

Liu Z, Zhong S, Yin C, Chen W (2011) On the dynamics of an impulsive reaction–diffusion Predator-Prey system with ratio-dependent functional response. Acta Appl Math 115:329–349

Pan J, Zhong S (2010) Dynamical behaviors of impulsive reaction–diffusion Cohen–Grossberg neural network with delays. Neurocomputing 73:1344–1351

Pan J, Liu X, Zhong S (2010) Stability criteria for impulsive reaction–diffusion Cohen–Grossberg neural networks with time-varying delays. Math Comput Model 51:1037–1050

Qi J, Li C, Huang T (2014) Stability of delayed memristive neural networks with time-varying impulses. Cogn Neurodyn 8:429–436

Qiu J (2007) Exponential stability of impulsive neural networks with time-varying delays and reaction–diffusion terms. Neurocomputing 70:1102–1108

Temam R (1998) Infinite dimensional dynamical systems in mechanics and physics. Springer, New York

Wan L, Zhou Q (2008) Exponential stability of stochastic reaction–diffusion Cohen–Grossberg neural networks with delays. Appl Math Comput 206:818–824

Wang Z, Liu Y, Li M, Liu X (2006) Stability analysis for stochastic Cohen–Grossberg neural networks with mixed time delays. IEEE Trans Neural Netw 17(3):814–820

Wang X, Xu D (2009) Global exponential stability of impulsive fuzzy cellular neural networks with mixed delays and reaction–diffusion terms. Chaos Solitons Fractals 42:2713–2721

Wang Z, Zhang H (2010) Global asymptotic stability of reaction–diffusion Cohen–Grossberg neural networks with continuously distributed delays. IEEE Trans Neural Netw 21:39–49

Yang R, Zhang Z, Shi P (2010) Exponential stability on stochastic neural networks with discrete interval and distributed delays. IEEE Trans Neural Netw 21:169–175

Yang X, Cao J (2014) Exponential synchronization of memristive Cohen–Grossberg neural networks with mixed delays. Cogn Neurodyn 8:239–249

Yang Z, Zhou W, Huang T (2014) Exponential input-to-state stability of recurrent neural networks with multiple time-varying delays. Cogn Neurodyn 8:47–54

Zhang H, Wang Y (2008) Stability analysis of Markovian jumping stochastic Cohen–Grossberg neural networks with mixed time delays. IEEE Trans Neural Netw 19:366–370

Zhang X, Wu S, Li K (2011) Delay-dependent exponential stability for impulsive Cohen–Grossberg neural networks with time-varying delays and reaction–diffusion terms. Commun Nonlinear Sci Numer Simul 16:1524–1532

Zhang Y, Luo Q (2012) Global exponential stability of impulsive delayed reaction–diffusion neural networks via Hardy–Poincaré inequality. Neurocomputing 83:198–204

Zhang W, Li J, Chen M (2012) Dynamical behaviors of impulsive stochastic reaction–diffusion neural networks with mixed time delays. Abstr Appl Anal 2012:236562

Zhou Q, Wan L, Sun J (2007) Exponential stability of reaction–diffusion generalized Cohen–Grossberg neural networks with time-varying delays. Chaos Solitons Fractals 32:1713–1719

Zhou C, Zhang H, Zhang H, Dang C (2012) Global exponential stability of impulsive fuzzy Cohen–Grossberg neural networks with mixed delays and reaction–diffusion terms. Neurocomputing 91:67–76

Zhu Q, Li X, Yang X (2011) Exponential stability for stochastic reaction–diffusion BAM neural networks with time-varying and distributed delays. Appl Math Comput 217:6078–6091

Acknowledgments

This publication was made possible by NPRP (Grant No. 4-1162-1-181) from the Qatar National Research Fund (a member of Qatar Foundation). This work was also supported by Natural Science Foundation of China (Grant No. 61374078, 61403313).


Cite this article

Tan, J., Li, C. & Huang, T. The stability of impulsive stochastic Cohen–Grossberg neural networks with mixed delays and reaction–diffusion terms. Cogn Neurodyn 9, 213–220 (2015). https://doi.org/10.1007/s11571-014-9316-y


DOI: https://doi.org/10.1007/s11571-014-9316-y