Abstract

This paper addresses the stability problem of memristive neural networks with time-varying impulses. Based on memristor theory and neural network theory, a model of the memristor-based neural network is established. Unlike most publications on memristive networks with fixed-time impulse effects, we consider the case of time-varying impulses, in which destabilizing and stabilizing impulses exist in the model simultaneously. By controlling the time intervals of the stabilizing and destabilizing impulses, we ensure that the overall effect of the impulses is stabilizing. Several sufficient conditions for the global exponential stability of memristive neural networks with time-varying impulses are proposed. Simulation results demonstrate the effectiveness of the theoretical results.


Introduction

The memristor was originally postulated by Chua in 1971 (Chua 1971; Chua and Kang 1976) and later fabricated by a research team at Hewlett-Packard (HP) (Strukov et al. 2008; Tour and He 2008). It has been proposed for synapse emulation because its transmission characteristics resemble those of a synapse and because of device advantages such as nanoscale size and low energy dissipation, which are significant for designing and optimizing neuromorphic circuits (Strukov et al. 2008; Tour and He 2008; Cantley et al. 2011; Kim et al. 2011). Therefore, memristors can be used to build memristor-based neural networks that emulate the human brain. In recent years, the dynamics of memristor-based recurrent neural networks have attracted increasing attention (Hu and Wang 2010; Wen et al. 2012a, b; Wu et al. 2012; Wen and Zeng 2012; Wang and Shen 2013; Zhang et al. 2012; Wu and Zeng 2012a, b; Guo et al. 2013). In Hu and Wang (2010), the dynamics of memristor-based recurrent neural networks were studied, and global uniform asymptotic stability was investigated by constructing proper Lyapunov functions and using differential inclusion theory. Subsequently, the stability and synchronization control of memristor-based recurrent neural networks were further investigated (Wen et al. 2012a, b; Wu et al. 2012; Wen and Zeng 2012; Wang and Shen 2013; Zhang et al. 2012; Wu and Zeng 2012a, b; Guo et al. 2013). As is well known, memristor-based neural networks exhibit state-dependent nonlinear switching behavior because of abrupt changes at certain instants during the dynamical process. It is therefore more complicated to study the stabilization of memristor-based neural networks. Recently, many researchers have turned their attention to constructing general memristor-based neural networks and analyzing their dynamic behavior (Wen et al. 2012a, b; Wu et al. 2012). In this paper, we focus on the general memristor-based neural networks constructed in Wen et al. (2012a, b) and Wu et al. (2012).

In the implementation of artificial memristive neural networks, time delays are unavoidable because of the finite switching speeds of amplifiers, and they may cause undesirable dynamic behavior such as oscillation, instability and chaos (He et al. 2013a, b; Wang et al. 2012). On the other hand, the state of electronic networks is often subject to instantaneous perturbations and experiences abrupt changes at certain instants, that is, impulsive effects. Therefore, a memristive neural network model with delays and impulsive effects should describe the evolutionary process of the system more accurately. During the last few years, there has been increasing interest in the stability problem of delayed impulsive neural networks (Lu et al. 2010; Hu et al. 2012; Chen and Zheng 2009; Yang and Xu 2007; Hu et al. 2010; Liu and Liu 2007; Liu et al. 2011; Lu et al. 2011, 2012; Guan et al. 2006; Yang and Xu 2005; Zhang et al. 2006). In Lu et al. (2012), synchronization of nonlinear stochastic dynamical networks was investigated using a pinning impulsive strategy. In Guan et al. (2006), a new class of hybrid impulsive models was introduced, and results on asymptotic stability were obtained by using the “average dwell time” concept. In general, there are two kinds of impulsive effects in dynamical systems. An impulsive sequence is said to be destabilizing if the impulsive effects can suppress the stability of the dynamical system. Conversely, an impulsive sequence is said to be stabilizing if it can enhance the stability of the dynamical system. The stability of neural networks with either stabilizing or destabilizing impulses has been studied in many papers (Lu et al. 2010; Hu et al. 2012; Chen and Zheng 2009; Yang and Xu 2007; Hu et al. 2010; Liu and Liu 2007; Liu et al. 2011; Lu et al. 2011, 2012; Guan et al. 2006; Yang and Xu 2005; Zhang et al. 2006). When the impulsive effects are stabilizing, the frequency of the impulses should not be too low. Most of the literature (Lu et al. 2010; Hu et al. 2012; Chen and Zheng 2009; Yang and Xu 2007; Hu et al. 2010; Liu and Liu 2007; Liu et al. 2011; Lu et al. 2011, 2012; Guan et al. 2006; Yang and Xu 2005) investigates the stability problem only when the impulses are stabilizing, and an upper bound on the impulsive intervals is used to guarantee the frequency of the impulses. When the impulsive effects are destabilizing, a lower bound on the impulsive intervals can be used to ensure that the impulses do not occur too frequently. For instance, the authors of Yang and Xu (2005) and Zhang et al. (2006) consider this kind of impulsive effect. In all of this literature, it is implicitly assumed that destabilizing and stabilizing impulses occur separately. However, in practice, many electronic and biological systems are subject to instantaneous disturbances with time-varying impulsive strength, and both destabilizing and stabilizing impulses might exist in practical systems.

Motivated by the aforementioned discussion, and in contrast to previous works, in this paper we formulate memristive neural networks with time-varying impulses, in which destabilizing and stabilizing impulses are considered simultaneously, and we study their global exponential stability. Lower bounds on the destabilizing impulsive intervals and upper bounds on the stabilizing impulsive intervals are defined to describe the impulsive sequences, so that the destabilizing impulses do not occur too frequently and the frequency of the stabilizing impulses is not too low. By using differential inclusion theory and the Lyapunov method, sufficient criteria are obtained under which the stability of delayed memristor-based neural networks with time-varying impulses is guaranteed.

The organization of this paper is as follows. The model description and preliminaries are introduced in the “Model description and preliminaries” section. Algebraic conditions for global exponential stability are derived in the “Main results” section. Numerical simulations are given in the “Numerical example” section. Finally, conclusions are drawn in the “Conclusions” section.

Model description and preliminaries

Model description

Several memristor-based recurrent neural networks have been constructed, such as those in Hu and Wang (2010), Wen et al. (2012a, b), Wu et al. (2012), Wen and Zeng (2012), Wang and Shen (2013), Zhang et al. (2012), Wu and Zeng (2012a, b), Guo et al. (2013). Based on these works, in this paper, we consider a more general class of memristive neural networks with time-varying impulses described by the following equations:
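The display for (1) is not reproduced in this copy. A form consistent with the symbol definitions given next, with the jump condition stated below, and with the state-dependent weights \(d_{i}(x_{i})\) used in the numerical example would be the following. It is a reconstruction under those assumptions, not the authors' exact typesetting:

```latex
\begin{cases}
\dot{x}_{i}(t) = -d_{i}(x_{i})\,x_{i}(t)
  + \sum\limits_{j=1}^{n} a_{ij}(x_{i})\,f_{j}\big(x_{j}(t)\big)
  + \sum\limits_{j=1}^{n} b_{ij}(x_{i})\,g_{j}\big(x_{j}(t-\tau_{ij}(t))\big)
  + I_{i}, & t \ne t_{k}, \\[4pt]
x_{i}(t_{k}^{+}) = \alpha_{k}\,x_{i}(t_{k}^{-}), & t = t_{k},\ k \in N_{+},
\end{cases}
\qquad i = 1,2,\ldots,n. \tag{1}
```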

where \(x_{i} \left( t \right)\) is the state variable of the ith neuron, \(d_{i}\) is the ith self-feedback connection weight, and \(a_{ij} \left( {x_{i} } \right)\) and \(b_{ij} \left( {x_{i} } \right)\) are, respectively, the connection weights and the connection weights associated with time delays. \(I_{i}\) is the ith external constant input. \(f_{i} \left( \cdot \right)\) and \(g_{i} \left( \cdot \right)\) are, respectively, the ith activation function and the ith activation function associated with time delays, both satisfying Assumption 2 below. The time delay \(\tau_{ij} \left( t \right)\) is a bounded function, i.e., \(0 \le \tau_{ij} \left( t \right) \le \tau\), where \(\tau \ge 0\) is a constant. \(\left\{ {t_{1} ,t_{2} ,t_{3} , \ldots } \right\}\) is a strictly increasing sequence of impulsive moments, and \(\alpha_{k} \in R\) represents the strength of the impulse at \(t_{k}\). We assume that \(x_{i} \left( t \right)\) is right continuous at \(t = t_{k}\), i.e., \(x_{i} \left( {t_{k} } \right) = x_{i} \left( {t_{k}^{ + } } \right) = \alpha_{k} x_{i} \left( {t_{k}^{ - } } \right)\). Therefore, the solutions of (1) are piecewise right-continuous functions with discontinuities at \(t = t_{k}\) for \(k \in N_{ + }\).

Remark 1

The parameter \(\alpha_{k}\) in the equality \(x_{i} (t_{k}^{ + } ) = \alpha_{k} x_{i} (t_{k}^{ - } )\) describes the influence of the impulses on the absolute value of the state. When \(\left| {\alpha_{k} } \right| > 1\), the absolute value of the state is enlarged, so the impulses may be viewed as destabilizing. When \(\left| {\alpha_{k} } \right| < 1\), the absolute value of the state is reduced, so the impulses may be viewed as stabilizing.
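The classification in Remark 1 can be sketched in a few lines of Python. The function name and the label "neutral" for the boundary case \(|\alpha_k| = 1\) are illustrative assumptions; the remark itself only discusses the two strict cases.

```python
def classify_impulse(alpha):
    """Label an impulse strength alpha_k by its effect on |x_i| (Remark 1)."""
    magnitude = abs(alpha)
    if magnitude > 1.0:
        return "destabilizing"   # |x_i(t_k^+)| > |x_i(t_k^-)|
    if magnitude < 1.0:
        return "stabilizing"     # |x_i(t_k^+)| < |x_i(t_k^-)|
    return "neutral"             # assumption: |alpha_k| = 1 leaves |x_i| unchanged

# Hypothetical impulse strengths; the sign does not matter, only the magnitude.
strengths = [1.2, 0.9, -1.5, -0.3]
labels = [classify_impulse(a) for a in strengths]
print(labels)
```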

Remark 2

In this paper, both stabilizing and destabilizing impulses are included in the model simultaneously. We assume that the strengths of the destabilizing impulses take values from a finite set \(\left\{ {\mu_{1} ,\mu_{2} , \ldots ,\mu_{N} } \right\}\) and that the strengths of the stabilizing impulses take values from \(\left\{ {\gamma_{1} ,\gamma_{2} , \ldots ,\gamma_{M} } \right\}\), where \(\left| {\mu_{i} } \right| > 1\), \(0 < \left| {\gamma_{j} } \right| < 1\), for \(i = 1,2, \ldots ,N\), \(j = 1,2, \ldots ,M\). We let \(t_{ik \uparrow }\) and \(t_{jk \downarrow }\) denote, respectively, the activation times of the destabilizing impulses with impulsive strength \(\mu_{i}\) and the activation times of the stabilizing impulses with impulsive strength \(\gamma_{j}\). The following assumption enforces a lower bound on the intervals between destabilizing impulses and an upper bound on the intervals between stabilizing impulses.

Assumption 1

where \(t_{ik \uparrow } ,t_{jk \downarrow } \in \left\{ {t_{1} ,t_{2} ,t_{3} , \ldots } \right\}\).
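As an illustration of the quantities that Assumption 1 constrains, the sketch below computes the smallest gap between consecutive destabilizing impulses and the largest gap between consecutive stabilizing impulses. It assumes that \(\xi_i\) is the infimum of the destabilizing intervals and \(\zeta_j\) the supremum of the stabilizing intervals, which is what the counting bounds used later in the proof of Theorem 1 suggest. The impulse instants are hypothetical.

```python
def interval_bounds(times):
    """Return (smallest gap, largest gap) between consecutive impulse instants."""
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    return min(gaps), max(gaps)

# Hypothetical activation times for one destabilizing and one stabilizing strength.
destabilizing_times = [0.0, 0.5, 1.1, 1.6, 2.2]
stabilizing_times = [0.1, 0.3, 0.45, 0.65, 0.8]

xi, _ = interval_bounds(destabilizing_times)   # lower bound: impulses not too frequent
_, zeta = interval_bounds(stabilizing_times)   # upper bound: impulses not too rare
print(xi, zeta)
```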

Assumption 2

For \(i = 1,2, \ldots ,n\) and all \(\alpha ,\beta \in R\) with \(\alpha \ne \beta\), the neuron activation functions \(f_{i} \left( {x_{i} } \right),g_{i} \left( {x_{i} } \right)\) in (1) are bounded and satisfy

where k i , l i are nonnegative constants.

Preliminaries

For convenience, we first introduce some notation. \(R_{+}\) and \(R^{n}\) denote, respectively, the set of nonnegative real numbers and the n-dimensional Euclidean space. For \(x \in R^{n}\) and \(X \in R^{{n \times n}}\), let \(\left| x \right|\) denote the Euclidean vector norm and \(\left\| X \right\| = \sqrt {\lambda_{\max } \left( {X^{T} X} \right)}\) the induced matrix norm. \(\lambda_{\min } \left( \cdot \right)\) and \(\lambda_{\max } \left( \cdot \right)\) denote the minimum and maximum eigenvalues of the corresponding matrix, respectively. For a continuous function \(f\left( t \right):R \to R\), \(D^{ + } f\left( t \right)\) denotes the upper right Dini derivative, defined as \(D^{ + } f\left( t \right) = \limsup\limits_{{h \to 0^{ + } }} \left( {1/h} \right)\left( {f\left( {t + h} \right) - f\left( t \right)} \right)\). \(N_{+}\) denotes the set of positive integers.

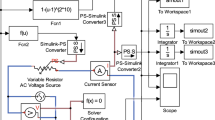

As is well known, the memristor is a switchable device. It follows from its construction and from fundamental circuit laws that the memristance is nonlinear and time-varying. The current–voltage characteristic of a memristor is shown in Fig. 1. According to the piecewise linear model (Hu and Wang 2010; Wen et al. 2012a, b) and previous work (Wu et al. 2012; Wen and Zeng 2012; Wang and Shen 2013; Zhang et al. 2012; Wu and Zeng 2012a, b; Guo et al. 2013), we let

Here, T i > 0, are memristive switching rules and \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{d}_{i} > 0, \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{d}_{i} > 0, \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{a}_{ij} ,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{a}_{ij} , \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{b}_{ij} , \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{b}_{ij} ,\) \(i, j = 1, 2,\ldots,n.\) are constants relating to memristance.

Let, for \(i,j = 1,2, \ldots ,n\), \(\overline{d}_{i} = \max \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{d}_{i} ,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{d}_{i} } \right),\) \(\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{d}_{i} = \min \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{d}_{i} ,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{d}_{i} } \right),\) \(\bar{a}_{ij} = \max \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{a}_{ij} ,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{a}_{ij} } \right),\) \(\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{a}_{ij} = \min \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{a}_{ij} ,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{a}_{ij} } \right),\) \(\bar{b}_{ij} = \max \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{b}_{ij} ,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{b}_{ij} } \right),\) \(\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{b}_{ij} = \min \left( {\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{b}_{ij} ,\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{b}_{ij} } \right),\) and let \(Co[\underline{\xi}_{i} ,\overline{\xi}_{i} ]\) denote the convex hull of \([\underline{\xi}_{i} ,\overline{\xi}_{i} ]\). Clearly, in this paper, we have \([\underline{\xi}_{i} ,\overline{\xi}_{i} ] = Co[\underline{\xi}_{i} ,\overline{\xi}_{i} ]\).
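The bounds defined above are simple to compute for a two-valued memristive weight. The sketch below uses a hypothetical helper `weight_bounds` and also returns the largest magnitude \(\max\{|\bar{a}_{ij}|, |\underline{a}_{ij}|\}\), which appears later in the paper as \(\mathop a\limits^{**}_{ij}\). The sample values are taken from the numerical example.

```python
def weight_bounds(inner, outer):
    """Lower bound, upper bound and largest magnitude of a two-valued weight.

    `inner` and `outer` are the two values the memristive weight can take
    (the names are illustrative, not the paper's notation).
    """
    lower, upper = min(inner, outer), max(inner, outer)
    return lower, upper, max(abs(lower), abs(upper))

d_lower, d_upper, _ = weight_bounds(1.2, 1.0)          # d_1 in the example
a_lower, a_upper, a_star = weight_bounds(1/6, -1/6)    # a_11 in the example
print(d_lower, d_upper, a_lower, a_upper, a_star)
```

Any value in the interval `[lower, upper]` is then an admissible selection in the differential inclusion, since the interval coincides with its convex hull.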

Now, according to the literature (Hu and Wang 2010; Wen et al. 2012a, b), by applying the theories of set-valued maps and differential inclusion, we have from (1)

Or, equivalently, for \(i,j = 1,2, \ldots ,n,\) there exist

such that

Definition 1

A constant vector \(x^{*} = (x_{1}^{*} ,x_{2}^{*} , \ldots ,x_{n}^{*} )^{T}\) is said to be an equilibrium point of network (1), if for \(i,j = 1,2, \ldots ,n,\)

Or, equivalently, for \(i,j = 1,2, \ldots ,n,\) there exist

such that

If \(x^{*} = (x_{1}^{*} ,x_{2}^{*} , \ldots ,x_{n}^{*} )^{T}\) is an equilibrium point of network (1), then by letting \(y_{i} \left( t \right) = x_{i} \left( t \right) - x_{i}^{*}\), \(i = 1,2, \ldots ,n,\) we have

Or, equivalently, there exist \(\mathop d\limits^{ * }_{i} \in Co[\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{d}_{i} ,\bar{d}_{i} ],\mathop a\limits^{ * }_{ij} \in Co[\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{a}_{ij} ,\bar{a}_{ij} ],\mathop b\limits^{ * }_{ij} \in Co[\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{b}_{ij} ,\bar{b}_{ij} ],\) such that

Obviously, \(f_{i} \left( {x_{i} } \right),g_{i} \left( {x_{i} } \right)\) satisfy Assumption 2, and we can easily see that \(\bar{f}_{i} \left( {x_{i} } \right),\bar{g}_{i} \left( {x_{i} } \right)\) also satisfy the following assumption:

Assumption 3

For \(i = 1,2, \ldots ,n\) and all \(\alpha ,\beta \in R\) with \(\alpha \ne \beta\), the neuron activation functions \(\bar{f}_{i} (x_{i} ),\bar{g}_{i} (x_{i} )\) in (5) and (6) are bounded and satisfy

where \(i = 1,2, \ldots ,n,k_{i} ,l_{i}\) are nonnegative constants.

Definition 2

If there exist constants \(\gamma > 0\), \(M\left( \gamma \right) > 0\) and \(T_{0} > 0\) such that for any initial values

then system (10) is said to be exponentially stable with exponential convergence rate γ.

For further deriving the global exponential stability conditions, the following lemmas are needed.

Lemma 1

(Filippov 1960) Under Assumption 2, there is at least one local solution x(t) of system (1) with the initial condition \(\phi \left( s \right) = \left( {\phi_{1} \left( s \right),\phi_{2} \left( s \right), \ldots ,\phi_{n} \left( s \right)} \right)^{T}\), \(s \in [ - \tau ,0]\), which is essentially bounded. Moreover, this local solution x(t) can be extended to the interval \([0, + \infty )\) in the sense of Filippov.

Under Assumption 2, and since \(\overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{d}_{i} > 0, \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{d}_{i} > 0, \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{a}_{ij} , \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{a}_{ij} , \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\frown}$}}{b}_{ij} , \overset{\lower0.5em\hbox{$\smash{\scriptscriptstyle\smile}$}}{b}_{ij} , I_{i}\) are all constants, it follows from Wu et al. (2012) and Hu et al. (2012) that, to study the memristive neural network (1), we can turn to the qualitative analysis of the relevant differential inclusion (3).

Lemma 2

(Bainov and Simeonov 1989) Let \(0 \le \tau_{i} \left( t \right) \le \tau\), let \(F\left( {t,u,u_{1} ,u_{2} , \ldots ,u_{m} } \right):R^{ + } \times R \times \cdots \times R \to R\) be nondecreasing in \(u_{i}\) for each fixed \(\left( {t,u,u_{1} , \ldots ,u_{i - 1} ,u_{i + 1} , \ldots ,u_{m} } \right)\), \(i = 1,2, \ldots ,m,\) and let \(I_{K} \left( u \right):R \to R\) be nondecreasing in u. Suppose that

and

Then \(u\left( t \right) \le v\left( t \right)\) , for \(- \tau \le t \le 0\) implies that \(u\left( t \right) \le v\left( t \right)\) , for \(t \ge 0\).

In the following section, we analyze the global exponential stability of system (1).

Main results

The main results of the paper are given in the following theorem.

Theorem 1

Consider the memristive neural network (1) and suppose that Assumptions 1 and 2 hold. Then the memristive neural network (1) with time-varying impulses is globally exponentially stable if the following inequality holds

where

here,

Proof

We choose a Lyapunov functional for system (5) or (6) as

The upper right Dini derivative of V(t) along the trajectories of system (5) or (6) is

From Assumption 3, we have

where \(i = 1,2, \ldots ,n\), and \(k_{i} ,l_{i}\) are nonnegative constants. By (11) and (12), we get

By mean-value inequality, we have

By Cauchy–Schwarz inequality, we obtain

and

It follows from (15) and (16) that

where \(p = - \min\limits_{1 \le i \le n} \left\{ {\underline{d}_{i} } \right\} + 2\sum\limits_{j = 1}^{n} {\sum\limits_{k = 1}^{n} {\frac{1}{{\underline{d}_{j} }}\big( {\mathop a\limits^{ * * }_{jk} } \big)^{2} k_{k}^{2} } }\), \(q = 2\sum\limits_{j = 1}^{n} {\sum\limits_{k = 1}^{n} {\frac{1}{{\underline{d}_{j} }}\big( {\mathop b\limits^{ * * }_{jk} } \big)^{2} l_{k}^{2} } }\), \(t \in (t_{k - 1} ,t_{k} ],k \in N_{ + }\). For \(t = t_{k}\), from the second equation of (1), we get
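The constants p and q can be evaluated directly from these formulas. The sketch below does so for the data of the numerical example (\(\underline{d}_1 = \underline{d}_2 = 1\), \(k_i = l_i = 1\)); the function name and the placement of the off-diagonal magnitudes in the matrices are assumptions, and the latter does not affect the sums here because both \(\underline{d}_j\) are equal.

```python
def p_and_q(d_lower, a_star, b_star, k, l):
    """Evaluate p and q from the lower bounds d_lower[j], the magnitudes
    a_star[j][m], b_star[j][m] and the Lipschitz constants k[m], l[m]."""
    n = len(d_lower)
    p = -min(d_lower) + 2.0 * sum(
        a_star[j][m] ** 2 * k[m] ** 2 / d_lower[j]
        for j in range(n) for m in range(n))
    q = 2.0 * sum(
        b_star[j][m] ** 2 * l[m] ** 2 / d_lower[j]
        for j in range(n) for m in range(n))
    return p, q

d_lower = [1.0, 1.0]
a_star = [[1/6, 1/5], [1/5, 1/8]]   # max(|upper|, |lower|) of each a_jk
b_star = [[1/4, 1/6], [1/7, 1/3]]   # max(|upper|, |lower|) of each b_jk
p, q = p_and_q(d_lower, a_star, b_star, k=[1.0, 1.0], l=[1.0, 1.0])
print(round(p, 4), round(q, 4))     # -0.7532 0.4436
```

These values agree with the p and q reported in the numerical example.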

For any σ > 0, let \(\upsilon \left( t \right)\) be the unique solution of the following impulsive delay system

Note that \(V\left( s \right) \le \left| {\phi \left( s \right)} \right|^{2} = \upsilon \left( s \right)\), for \(- \tau \le s \le 0\). Then it follows from (17), (18) and Lemma 2 that

By the formula for the variation of parameters, it follows from (19) that

where \(W\left( {t,s} \right),t,s \ge 0\) is the Cauchy matrix of linear system

According to the representation of the Cauchy matrix, one can obtain the following estimation

For any t > 0, if there exists an s such that there are \(N_{i}\) destabilizing impulses with strength \(\mu_{i}\) and \(M_{j}\) stabilizing impulses with strength \(\gamma_{j}\) in the interval (s, t), then from Assumption 1 we get \(N_{i} \le \frac{t - s}{{\xi_{i} }} + 1\) and \(M_{j} \ge \frac{t - s}{{\zeta_{j} }} - 1\), and it follows from Assumption 1 and (22) that

where \(R = \prod\nolimits_{i = 1}^{N} {\prod\nolimits_{j = 1}^{M} {\left| {\frac{{\mu_{i} }}{{\gamma_{j} }}} \right|^{2} } }\), \(\alpha = - \left( {p + \sum\nolimits_{i = 1}^{N} {\frac{{2\ln \left| {\mu_{i} } \right|}}{{\xi_{i} }}} + \sum\nolimits_{j = 1}^{M} {\frac{{2\ln \left| {\gamma_{j} } \right|}}{{\zeta_{j} }}} } \right)\). Let \(\eta = R\sup_{ - \tau \le s \le 0} \left| {\phi \left( s \right)} \right|^{2}\); then we get

Let \(h\left( \upsilon \right) = \upsilon - \alpha + Rqe^{\upsilon \tau }\). It follows from \(\alpha - Rq > 0\) that \(h\left( 0 \right) < 0\), \(\mathop {\lim }\limits_{\upsilon \to + \infty } h\left( \upsilon \right) = + \infty\), and \(\mathop h\limits^{ \cdot } \left( \upsilon \right) > 0\). Therefore, there is a unique \(\lambda > 0\) such that \(h\left( \lambda \right) = 0\). On the other hand, since \(R > 0\), the inequality \(\alpha - Rq > 0\) gives \(R^{ - 1} \alpha - q > 0\). Hence,
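Because h is continuous and strictly increasing with \(h(0) < 0\), the rate \(\lambda\) can be found numerically by bisection. The following is a minimal sketch; the function name and the sample values of \(\alpha\), R, q and \(\tau\) are hypothetical. The upper bracket \(\upsilon = \alpha\) works because \(h(\alpha) = Rqe^{\alpha\tau} \ge 0\).

```python
import math

def decay_rate(alpha, R, q, tau, tol=1e-12):
    """Solve h(v) = v - alpha + R*q*exp(v*tau) = 0 for its unique root v > 0."""
    if alpha - R * q <= 0:
        raise ValueError("requires alpha - R*q > 0, so that h(0) < 0")

    def h(v):
        return v - alpha + R * q * math.exp(v * tau)

    lo, hi = 0.0, alpha              # h(lo) < 0 and h(hi) >= 0 bracket the root
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if h(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical values satisfying alpha - R*q = 0.4 > 0.
lam = decay_rate(alpha=1.0, R=1.5, q=0.4, tau=1.0)
print(lam)
```

A larger delay bound \(\tau\) or a larger product Rq pushes the root toward zero, which corresponds to a slower guaranteed convergence rate.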

So it suffices to prove

Suppose, on the contrary, that there exists t > 0 such that

We set

Then \(t^{*} > 0\) and \(\upsilon \left( {t^{*} } \right) \ge \eta e^{ - \lambda t^{*} } + \frac{\sigma }{{R^{ - 1} \alpha - q}}\). Thus, for \(t \in \left( {0,t^{*} } \right)\),

From (24) and (25), one observes that

This is a contradiction. Thus, \(\upsilon (t) \le \eta e^{ - \lambda t} + \frac{\sigma }{{R^{ - 1} \alpha - q}}\) holds for t > 0. Letting \(\sigma \to 0\), we get from (15) that \(V\left( t \right) \le \upsilon \left( t \right) \le \eta e^{ - \lambda t}\). By Definition 2, the solution y(t) of the memristive neural network (1) is exponentially stable. This completes the proof.

To show the influence of the stabilizing and destabilizing impulses clearly, we assume that both the destabilizing and stabilizing impulses are time-invariant, i.e., for \(i = 1,2, \ldots ,N,\) \(j = 1,2, \ldots ,M\), \(\mu_{i} = \mu ,\;\gamma_{j} = \gamma ,\;\;\xi_{i} = \xi ,\;\;\zeta_{j} = \zeta ,\;\) \(\;t_{ik \uparrow } = t_{k \uparrow } ,\;\;t_{jk \downarrow } = t_{k \downarrow }\). Then we get the following corollary.

Corollary 1

Consider the memristive neural networks (1). Suppose that Assumptions 1 and 2 hold. Then, the memristive neural network (1) with time-invariant impulses will be globally exponentially stable if the following inequality holds

where

Here, \(\mathop a\limits^{ * * }_{jk} = { \hbox{max} }\{ \left| {\bar{a}_{jk} } \right|,\left| {\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{a}_{jk} } \right|\} ,\) \(\mathop b\limits^{ * * }_{jk} = { \hbox{max} }\{ \left| {\bar{b}_{jk} } \right|,\left| {\underset{\raise0.3em\hbox{$\smash{\scriptscriptstyle-}$}}{b}_{jk} } \right|\} ,\) \(i,j,k = 1,2, \ldots ,n\).

Proof

Corollary 1 can be proved in the same way as Theorem 1, so the proof is omitted here.

Numerical example

In this section, we will present an example to illustrate the effectiveness of our results. Let us consider a two-dimensional memristive neural network

where
\(\begin{aligned}
d_{1}(x_{1}) &= \begin{cases} 1.2, & |x_{1}(t)| < 1 \\ 1, & |x_{1}(t)| > 1 \end{cases} &\qquad d_{2}(x_{2}) &= \begin{cases} 1, & |x_{2}(t)| < 1 \\ 1.2, & |x_{2}(t)| > 1 \end{cases} \\
a_{11}(x_{1}) &= \begin{cases} \frac{1}{6}, & |x_{1}(t)| < 1 \\ -\frac{1}{6}, & |x_{1}(t)| > 1 \end{cases} &\qquad a_{12}(x_{1}) &= \begin{cases} \frac{1}{5}, & |x_{1}(t)| < 1 \\ -\frac{1}{5}, & |x_{1}(t)| > 1 \end{cases} \\
a_{21}(x_{2}) &= \begin{cases} \frac{1}{5}, & |x_{2}(t)| < 1 \\ -\frac{1}{5}, & |x_{2}(t)| > 1 \end{cases} &\qquad a_{22}(x_{2}) &= \begin{cases} \frac{1}{8}, & |x_{2}(t)| < 1 \\ -\frac{1}{8}, & |x_{2}(t)| > 1 \end{cases} \\
b_{11}(x_{1}) &= \begin{cases} \frac{1}{4}, & |x_{1}(t)| < 1 \\ -\frac{1}{4}, & |x_{1}(t)| > 1 \end{cases} &\qquad b_{12}(x_{1}) &= \begin{cases} \frac{1}{6}, & |x_{1}(t)| < 1 \\ -\frac{1}{6}, & |x_{1}(t)| > 1 \end{cases} \\
b_{21}(x_{2}) &= \begin{cases} \frac{1}{7}, & |x_{2}(t)| < 1 \\ -\frac{1}{7}, & |x_{2}(t)| > 1 \end{cases} &\qquad b_{22}(x_{2}) &= \begin{cases} \frac{1}{3}, & |x_{2}(t)| < 1 \\ -\frac{1}{3}, & |x_{2}(t)| > 1 \end{cases}
\end{aligned}\)
Therefore,

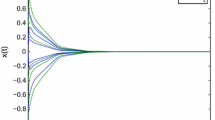

Let \(\tau \left( t \right) = 1.8 + 0.5\sin \left( t \right)\), \(I_{1} = I_{2} = 0\), and \(f_{i} \left( a \right) = g_{i} \left( a \right) = \frac{1}{2}\left( {\left| {a + 1} \right| - \left| {a - 1} \right|} \right).\) By simple calculation, we get \(k_{i} = l_{i} = 1,\;i = 1,2,\) \(p = - 0.7532\), and \(q = 0.4436\). For the time-varying impulses, we choose the strengths of the destabilizing impulses as \(\mu_{1} = \mu_{2} = 1.2\), the strengths of the stabilizing impulses as \(\gamma_{1} = \gamma_{2} = \cdots = 0.9\), and the lower bounds of the destabilizing impulsive intervals as \(\xi_{1} = \xi_{2} = 0.5\). According to Corollary 1, the neural network (27) can be stabilized if the maximum impulsive interval ζ of the stabilizing impulsive sequence is not more than 0.2755. If we let \(t_{k \uparrow } - t_{{\left( {k - 1} \right) \uparrow }} = 0.5\) and \(t_{k \downarrow } - t_{{\left( {k - 1} \right) \downarrow }} = 0.2\), the whole impulsive sequence can be described by \(t_{0 \uparrow } = 0\), \(t_{k \uparrow } = t_{{\left( {k - 1} \right) \uparrow }} + 0.5\) for the destabilizing impulses and \(t_{0 \downarrow } = 0.09\), \(t_{k \downarrow } = t_{{\left( {k - 1} \right) \downarrow }} + 0.2\) for the stabilizing impulses. The corresponding trajectories of the impulsive neural network (1) are plotted in Fig. 2, where one observes that, when \(\zeta < 0.2755\), the neural network (1) can be stabilized.
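The threshold on ζ can be checked with a short calculation. The sketch below assumes that the condition of Corollary 1 is \(\alpha - Rq > 0\) with \(R = |\mu/\gamma|^{2}\) and \(\alpha = -\left(p + 2\ln|\mu|/\xi + 2\ln|\gamma|/\zeta\right)\), i.e., the single-strength case of the quantities defined in the proof of Theorem 1. The function names are illustrative.

```python
import math

def stability_margin(p, q, mu, gamma, xi, zeta):
    """Return alpha - R*q for one destabilizing strength mu and one stabilizing strength gamma."""
    R = (mu / gamma) ** 2
    alpha = -(p + 2.0 * math.log(abs(mu)) / xi + 2.0 * math.log(abs(gamma)) / zeta)
    return alpha - R * q

def max_stabilizing_interval(p, q, mu, gamma, xi):
    """Supremum of zeta for which alpha - R*q > 0 (math.inf if every zeta works)."""
    R = (mu / gamma) ** 2
    c = R * q + p + 2.0 * math.log(abs(mu)) / xi
    if c <= 0:
        return math.inf
    return -2.0 * math.log(abs(gamma)) / c   # ln|gamma| < 0, so this is positive

zeta_max = max_stabilizing_interval(p=-0.7532, q=0.4436, mu=1.2, gamma=0.9, xi=0.5)
print(zeta_max)
```

Under these assumptions the computed bound is close to 0.2756, which agrees with the reported value 0.2755 up to rounding. The choice ζ = 0.2 used in the simulation gives a positive margin.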

Fig. 2 State trajectories of the memristive neural networks (10) under different conditions: a without impulses (blue); b the maximum impulsive interval of the stabilizing impulses is 0.2 (green). (Color figure online)
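Trajectories of this kind can be approximated with a simple forward-Euler scheme. The sketch below is not the authors' simulation code. It assumes the model form \(\dot{x}_i = -d_i(x_i)x_i + \sum_j a_{ij}(x_i)f(x_j(t)) + \sum_j b_{ij}(x_i)f(x_j(t-\tau(t)))\) with multiplicative jumps at the impulse instants, a constant initial history, a hypothetical initial state and a step size of 0.01.

```python
import math

def f(a):
    """Activation of the example: f(a) = (|a + 1| - |a - 1|) / 2."""
    return 0.5 * (abs(a + 1.0) - abs(a - 1.0))

def weight(inner, outer):
    """Two-valued memristive weight: `inner` if |x| < 1, otherwise `outer`."""
    return lambda x: inner if abs(x) < 1.0 else outer

D = [weight(1.2, 1.0), weight(1.0, 1.2)]
A = [[weight(1/6, -1/6), weight(1/5, -1/5)],
     [weight(1/5, -1/5), weight(1/8, -1/8)]]
B = [[weight(1/4, -1/4), weight(1/6, -1/6)],
     [weight(1/7, -1/7), weight(1/3, -1/3)]]

def simulate(x0, t_end=20.0, dt=0.01, impulses=True):
    """Forward-Euler integration with constant history x0 on [-tau, 0]."""
    history = int(round(2.3 / dt))          # sup of tau(t) = 1.8 + 0.5 sin(t)
    xs = [list(x0) for _ in range(history + 1)]
    up_every = int(round(0.5 / dt))         # destabilizing impulses, strength 1.2
    down_every = int(round(0.2 / dt))       # stabilizing impulses, strength 0.9
    down_first = int(round(0.09 / dt))      # first stabilizing impulse at t = 0.09
    for n in range(1, int(round(t_end / dt)) + 1):
        t = (n - 1) * dt
        x = xs[-1]
        lag = int(round((1.8 + 0.5 * math.sin(t)) / dt))
        x_delayed = xs[-1 - lag]
        new = []
        for i in range(2):
            rhs = -D[i](x[i]) * x[i]
            for j in range(2):
                rhs += A[i][j](x[i]) * f(x[j]) + B[i][j](x[i]) * f(x_delayed[j])
            new.append(x[i] + dt * rhs)
        if impulses:
            # The destabilizing impulse at t = 0 only rescales the initial state
            # and is omitted here.
            if n % up_every == 0:
                new = [1.2 * v for v in new]
            if n >= down_first and (n - down_first) % down_every == 0:
                new = [0.9 * v for v in new]
        xs.append(new)
    return xs[history:]                     # states at t = 0, dt, ..., t_end

def norm(v):
    return math.hypot(v[0], v[1])

with_impulses = simulate([2.0, -1.5], impulses=True)
without_impulses = simulate([2.0, -1.5], impulses=False)
print(norm(with_impulses[-1]), norm(without_impulses[-1]))
```

The integer step counts keep the impulse instants exactly on the time grid. A smaller step or a dedicated delay-differential solver would be needed for quantitative comparisons with the figure.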

Conclusions

In this paper, we investigated the exponential stability of a class of general memristor-based neural networks with time-varying delays and time-varying impulses. To investigate the dynamic properties of the system within the framework of Filippov solutions, we turned to the qualitative analysis of a relevant differential inclusion. Stability conditions were obtained by using the Lyapunov method. A numerical example was given to illustrate the effectiveness of the theoretical results.

References

Bainov D, Simeonov PS (1989) Systems with impulse effect: stability, theory, and applications. Ellis Horwood, Chichester

Cantley KD, Subramaniam A, Stiegler HJ (2011) Hebbian learning in spiking neural networks with nanocrystalline silicon TFTs and memristive synapses. IEEE Trans Nanotechnol 10:1066–1073

Chen WH, Zheng WX (2009) Global exponential stability of impulsive neural networks with variable delay: an LMI approach. IEEE Trans Circuits Syst I Regul Pap 56:1248–1259

Chua L (1971) Memristor—the missing circuit element. IEEE Trans Circuit Theory 18:507–519

Chua L, Kang SM (1976) Memristive devices and systems. Proc IEEE 64:209–223

Filippov A (1960) Differential equations with discontinuous right-hand side. Matematicheskii Sbornik 93:99–128

Guan ZH, Hill DJ, Yao J (2006) A hybrid impulsive and switching control strategy for synchronization of nonlinear systems and application to Chua’s chaotic circuit. Int J Bifurcation Chaos 16:229–238

Guo Z, Wang J, Yan Z (2013) Global exponential dissipativity and stabilization of memristor-based recurrent neural networks with time-varying delays. Neural Netw 48:158–172

He X, Li C, Huang T, Peng M (2013a) Codimension two bifurcation in a simple delayed neuron model. Neural Comput Appl 23:2295–2300

He X, Li C, Huang T (2013b) Bogdanov–Takens singularity in tri-neuron network with time delay. IEEE Trans Neural Netw Learn Syst 24:1001–1007

Hu J, Wang J (2010) Global uniform asymptotic stability of memristor-based recurrent neural networks with time delays. In: The 2010 international joint conference on neural networks (IJCNN), pp 1–8

Hu C, Jiang H, Teng Z (2010) Impulsive control and synchronization for delayed neural networks with reaction–diffusion terms. IEEE Trans Neural Netw 21:67–81

Hu W, Li C, Wu S (2012) Stochastic robust stability for neutral-type impulsive interval neural networks with distributed time-varying delays. Neural Comput Appl 21:1947–1960

Kim KH, Gaba S, Wheeler D (2011) A functional hybrid memristor crossbar-array/CMOS system for data storage and neuromorphic applications. Nano Lett 12:389–395

Liu B, Liu X (2007) Robust stability of uncertain discrete impulsive systems. IEEE Trans Circuits Syst II Express Briefs 54:455–459

Liu X, Shen X, Zhang H (2011) Intermittent impulsive synchronization of chaotic delayed neural networks. Differ Equ Dyn Syst 19:149–169

Lu J, Ho D, Cao J (2010) A unified synchronization criterion for impulsive dynamical networks. Automatica 46:1215–1221

Lu J, Ho DWC, Cao J (2011) Exponential synchronization of linearly coupled neural networks with impulsive disturbances. IEEE Trans Neural Netw 22:329–336

Lu J, Kurths J, Cao J (2012) Synchronization control for nonlinear stochastic dynamical networks: pinning impulsive strategy. IEEE Trans Neural Netw Learn Syst 23:285–292

Strukov DB, Snider GS, Stewart DR (2008) The missing memristor found. Nature 453:80–83

Tour JM, He T (2008) The fourth element. Nature 453:42–43

Wang G, Shen Y (2013) Exponential synchronization of coupled memristive neural networks with time delays. Neural Comput Appl. doi:10.1007/s00521-013-1349-3

Wang X, Li C, Huang T, Duan S (2012) Predicting chaos in memristive oscillator via harmonic balance method. Chaos 22:4

Wen S, Zeng Z (2012) Dynamics analysis of a class of memristor-based recurrent networks with time-varying delays in the presence of strong external stimuli. Neural Process Lett 35:47–59

Wen S, Zeng Z, Huang T (2012a) Adaptive synchronization of memristor-based Chua’s circuits. Phys Lett A 376:2775–2780

Wen S, Zeng Z, Huang T (2012b) Exponential stability analysis of memristor-based recurrent neural networks with time-varying delays. Neurocomputing 97:233–240

Wu A, Zeng Z (2012a) Dynamic behaviors of memristor-based recurrent neural networks with time-varying delays. Neural Networks 36:1–10

Wu A, Zeng Z (2012b) Exponential stabilization of memristive neural networks with time delays. IEEE Trans Neural Networks Learn Sys 23(12):1919–1929

Wu AL, Wen S, Zeng Z (2012) Synchronization control of a class of memristor-based recurrent neural networks. Inf Sci 183:106–116

Yang Z, Xu D (2005) Stability analysis of delay neural networks with impulsive effects. IEEE Trans Circuits Syst II Express Briefs 52:517–521

Yang Z, Xu D (2007) Stability analysis and design of impulsive control systems with time delay. IEEE Trans Autom Control 52:1448–1454

Zhang H, Guan Z, Ho D (2006) On synchronization of hybrid switching and impulsive networks. In: 2006 45th IEEE conference on decision and control, IEEE pp 2765–2770

Zhang G, Shen Y, Sun J (2012) Global exponential stability of a class of memristor-based recurrent neural networks with time-varying delays. Neurocomputing 97:149–154

Acknowledgments

This publication was made possible by NPRP Grant # NPRP 4-1162-1-181 from the Qatar National Research Fund (a member of Qatar Foundation). The statements made herein are solely the responsibility of the authors. This work was also supported by Natural Science Foundation of China (Grant No: 61374078).


Cite this article

Qi, J., Li, C. & Huang, T. Stability of delayed memristive neural networks with time-varying impulses. Cogn Neurodyn 8, 429–436 (2014). https://doi.org/10.1007/s11571-014-9286-0


DOI: https://doi.org/10.1007/s11571-014-9286-0