Abstract

This paper proposes an adaptive control scheme based on a hybrid neural network (HNN) for uncertain nonlinear discrete-time systems with bounded disturbances. The proposed scheme combines a neural network (NN) with the differential evolution (DE) technique, which is used to initialize the weights of the NN, and the controller is designed so that stability is ensured and the desired trajectory is tracked. The HNN is employed to approximate the unknown functions present in the system. Using a system transformation, the adaptive law and controller are designed, and the whole system is proved to be semi-globally uniformly ultimately bounded (SGUUB) by means of Lyapunov theory. Finally, the validity and effectiveness of the results are demonstrated through two simulation examples.

1 Introduction

In the past two decades, researchers have devoted a great deal of attention to uncertain nonlinear discrete-time systems such as robot manipulators, servo motors, and underwater vehicle systems [1,2,3]. As technology develops, control devices and plants are becoming more complex and can no longer be controlled via a linear control model [4, 5]. The control of nonlinear systems is therefore becoming much more challenging, since not all states are measurable, and it becomes even more difficult in the presence of disturbances; it has hence drawn a lot of attention from researchers [6,7,8,9]. Because of uncertainty and disturbances, it is acknowledged that controlling uncertain nonlinear systems is comparatively more difficult than linear control [10]. Bounded disturbances exist in many practical problems and can cause instability of control systems. Designing an efficient control scheme and establishing its stability has therefore attracted the attention of researchers. Moreover, owing to limitations in modeling, measurement and observation, many physical systems are affected by parameter uncertainties.

Uncertain systems appear very often in practical plants. In this connection, adaptive neural network (ANN) control and control based on fuzzy logic systems (FLS) have achieved extensive success in approximating the nonlinear functions present in such systems [11,12,13,14]. Many methods, such as back-stepping, sliding mode control, observer-based control, and robust control, have been developed to tackle such nonlinear systems [15,16,17,18]. Adaptive tracking control has been discussed for uncertain nonlinear single-input single-output (SISO) systems in [19] and for multi-input multi-output (MIMO) systems in [17, 20, 21]. Tracking control of uncertain systems is more difficult in the presence of diverse effects that may interfere with the system [22]. Among these, the dead zone is one effect that can be symmetric or asymmetric in nature [23]. Adaptive tracking control has been developed for switched systems with a non-symmetric dead zone [24]. In addition, observer-based adaptive control and some fuzzy-based adaptive control techniques addressing nonlinear discrete-time systems with bounded disturbances and dead zone are presented in [25,26,27,28], while the nonlinear time-delay problem with an unknown nonlinear dead zone is treated in [27, 29, 30].

Compared with continuous-time systems, discrete-time systems have greater practical relevance. Although a large number of real-time processes are continuous in nature, they are mostly modeled in discrete time because such models are easier to implement [31]. In view of this advantage, discrete-time systems are considered here. Many adaptive control techniques have been developed to approximate the unknown functions of nonlinear discrete-time systems [23, 30, 32, 33]. Time-varying parameters in the system may cause major challenges for tracking performance. Another important issue is input time delay, which usually complicates a nonlinear system. Back-stepping-based adaptive control design employing intelligent systems such as neural networks or fuzzy logic systems (FLS) gives more fruitful results for nonlinear systems [17, 34,35,36].

Motivated by the above discussion, we note that adaptive control strategies based on NN or FLS ensure basic system stability, but how to attain the desired trajectory remains to be studied further. To extend the theory of adaptive control, especially for nonlinear discrete-time systems, we propose an adaptive controller for uncertain nonlinear discrete-time systems based on a hybrid neural network. In the proposed hybrid neural network controller, the differential evolution method is used to initialize the weights and biases of the neural network, with the mean square error (MSE) as the fitness function. In this work, the HNN adaptive controller is designed to track the reference signal for uncertain nonlinear discrete-time systems with bounded disturbances and completely unknown functions.

The primary contributions of this work are summarized as follows:

(1) An evolutionary method, differential evolution, is used to initialize the weight vector of the neural network. Employing the HNN and a feedback approach, the proposed control scheme is capable of successfully tracking the reference signal. The adaptive HNN is used to approximate unknown functions involving a dead zone.

(2) The proposed adaptive control scheme removes the requirements of an affine form and linear parameterization, i.e. the nonlinear uncertainties need not be assumed to be linear in the unknown parameters.

(3) Using the Lyapunov method, the semi-global uniform ultimate boundedness (SGUUB) of the system is verified. The unknown functions are approximated by the proposed HNN controller and the error signal converges to a compact set containing zero, which is illustrated by simulation examples.

The remainder of this paper is structured as follows. The problem formulation for uncertain nonlinear discrete-time systems with dead zone and the preliminaries are presented in Sect. 2. In Sect. 3, the HNN controller is discussed, and Sect. 4 deals with the stability analysis of the system using the Lyapunov method. Section 5 provides the simulation results and their description, and Sect. 6 concludes the paper.

Notation: The following notation is used throughout this paper.

\({\mathfrak{R}}^{m}\) denotes the m-dimensional Euclidean space; \(\zeta_{i} (k)\) stands for the ith state of the system and \(\overline{\zeta }_{i} (k) = \left[ {\zeta_{1} (k),\,\zeta_{2} (k),\, \cdots \,,\,\zeta_{i} (k)} \right]^{T} \in \Re^{i}\) represents the state vector, where T denotes the transpose of a vector or matrix; \(\zeta_{s} (k)\) is the supremum of \(\zeta (\cdot)\); \(\varepsilon \left(k\right)\) represents the tracking error; \(\varepsilon (\cdot)\) represents the NN approximation error and \(\varepsilon\) is a constant. \(y\left(k\right)\) and \({y}_{d}(k)\) represent the obtained and the desired trajectory, respectively.

2 Problem Formulation and Preliminaries

Consider the following nonlinear stochastic pure-feedback system:

where \(\overline{\zeta }_{i} (k) = \left[ {\zeta_{1} (k),\,\zeta_{2} (k),\, \cdots \,,\,\zeta_{i} (k)} \right]^{T} \in \Re^{i}\), \(i = 1,2,\, \cdots \,,\,n\), \(\tau (k) \in \Re\) and \(y(k) \in \Re\) are the state vector, input and output of the system, respectively; \(d(k) \in \Re^{m}\) stands for the external bounded disturbance such that \(\left\| {d(k)} \right\| \le d_{M}\); and \(\psi_{i} (\zeta (k))\) and \(\phi_{i} (\zeta (k))\), \(i = 1,2, \cdots ,n\), are unknown smooth and bounded functions whose partial derivatives are all continuous. The dead-zone function \(\tau (k)\) in Eq. (1) is defined as:

where \(\upsilon (k) \in \Re\) is the input to the dead zone, \(b_{l} < 0\) and \(b_{r} > 0\) are the unknown dead-zone parameters, and \(\varphi_{r} (\upsilon (k))\) and \(\varphi_{l} (\upsilon (k))\) are unknown smooth nonlinear functions.
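For orientation, the description above is consistent with the piecewise dead-zone model commonly used in the adaptive control literature. The display below is a sketch reconstructed from the stated roles of \(b_{l}\), \(b_{r}\), \(\varphi_{l}\) and \(\varphi_{r}\); the placement of the non-strict inequalities is an assumption and the display is not a quotation of Eq. (2):

\[
\tau (k) =
\begin{cases}
\varphi_{r} (\upsilon (k)), & \upsilon (k) \ge b_{r} \\
0, & b_{l} < \upsilon (k) < b_{r} \\
\varphi_{l} (\upsilon (k)), & \upsilon (k) \le b_{l}
\end{cases}
\]

Under this reading, the actuator output is zero whenever the input lies strictly between the two break points, so small control commands have no effect on the plant.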

where

Let \(y_{d} (k)\) be the reference signal for system (1) and \(y(k)\) the output of system (1). Our objective is to minimize the usual quadratic cost function given in (4):

Definition [37]

The Nussbaum gain \(N\left( {\zeta (k)} \right)\) of a discrete sequence \(\left\{ {\zeta (k)} \right\}\) in discrete time satisfies the following properties:

where \(\varepsilon\) is a constant.

Then \(N\left( {\zeta (k)} \right)\) is defined as follows:

where \(\zeta_{s} (k) = \mathop {\sup }\limits_{k^{\prime} \le k} \left\{ {\zeta (k^{\prime})} \right\}\) and \(H\left( {\zeta (k)} \right)\) is defined as:

In this case, \(H\left( {\zeta (k_{1} + 1)} \right) = - 1\); otherwise \(H\left( {\zeta (k_{1} + 1)} \right) = 1\).

If \(H\left( {\zeta (k_{1} )} \right) = - 1\) and \(\sum\limits_{k^{\prime} = 0}^{{k_{1} }} {N\left( {\zeta (k^{\prime})} \right)} \,\Delta \zeta (k^{\prime}) < - \zeta_{s}^{3/2} (k_{1} )\), then \(H\left( {\zeta (k_{1} + 1)} \right) = 1\); otherwise \(H\left( {\zeta (k_{1} + 1)} \right) = - 1\).

Result [37] If \(\zeta_{s} (k)\) is uniformly bounded, then so is \(\left| {H\left( {\zeta (k)} \right)} \right|\); if \(\zeta_{s} (k)\) is unbounded, then \(\left| {H\left( {\zeta (k)} \right)} \right|\) oscillates between \(-\infty\) and \(+\infty\). Using this result, we establish Lemma 1.

Lemma 1 [37]

If \(V(\zeta )\) is a positive definite function, \(N(\zeta )\) is the Nussbaum gain defined in Eq. (5), and inequality (6) holds:

where \(c_{1}\), \(c_{2}\) and \(c_{3}\) are constants, then \(V(k)\), \(\zeta (k)\) and the right-hand side of Eq. (6) must be bounded.
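The switching rule in the Definition can be sketched in a few lines of Python. Because Eq. (5) is not reproduced in this text, the sketch assumes the common form \(N(\zeta (k)) = \zeta_{s} (k)\,H(\zeta (k))\) from [37], an initial sign \(H = +1\), and that the rule for \(H = 1\) mirrors the stated rule for \(H = -1\); the class and method names are hypothetical.

```python
class DiscreteNussbaumGain:
    """Sketch of a discrete Nussbaum gain N(zeta(k)) = zeta_s(k) * H(zeta(k)).

    Assumptions (not taken verbatim from the paper): the product form of N,
    the initial sign H = +1, and the mirrored switching rule for H = +1.
    """

    def __init__(self):
        self.zeta_sup = 0.0      # zeta_s(k): running supremum of zeta
        self.sign = 1            # H(zeta(k)), either +1 or -1
        self.partial_sum = 0.0   # sum over k' of N(zeta(k')) * delta_zeta(k')

    def step(self, zeta, delta_zeta):
        """Return N(zeta(k)) and update the sign H used at step k + 1."""
        self.zeta_sup = max(self.zeta_sup, zeta)
        gain = self.zeta_sup * self.sign
        self.partial_sum += gain * delta_zeta
        threshold = self.zeta_sup ** 1.5
        if self.sign == 1 and self.partial_sum > threshold:
            self.sign = -1       # sum exceeded +zeta_s^(3/2): flip to -1
        elif self.sign == -1 and self.partial_sum < -threshold:
            self.sign = 1        # sum fell below -zeta_s^(3/2): flip to +1
        return gain


def nussbaum_sequence(zetas):
    """Gains for a non-negative sequence zeta(0), zeta(1), ..."""
    nussbaum = DiscreteNussbaumGain()
    gains = []
    for k in range(len(zetas) - 1):
        gains.append(nussbaum.step(zetas[k], zetas[k + 1] - zetas[k]))
    return gains
```

For a steadily growing sequence such as `nussbaum_sequence([float(k) for k in range(9)])`, the sign of the gain changes repeatedly while its magnitude follows \(\zeta_{s} (k)\), which is the behaviour a Nussbaum-type gain relies on when the control direction is unknown.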

NN Approximation

In control theory, there are several well-established methods for approximating unknown functions, such as polynomial methods, fuzzy logic, and neural networks. In this work we use a neural network as the approximator. The universal approximation result states that any smooth function \(\psi (\zeta )\) can be approximated arbitrarily closely on a compact set by an NN with appropriately adjusted weights. An unknown smooth function \(\psi (\zeta )\) can therefore be written as:

where \(\varepsilon (\xi )\) is the NN function approximation error, bounded by \(\overline{\varepsilon }\), i.e. \(\left\| {\varepsilon (\xi )} \right\| \le \overline{\varepsilon }\). The error function defined in Eq. (3) can be minimized by choosing an optimal weight vector \(W\). To this end, consider the Lyapunov function:

where \(e(k) = y(k) - y_{d} (k)\); the Lyapunov function defined above coincides with the usual quadratic cost function. The derivative of Eq. (8) is given by:

where \(J = \frac{\partial e}{{\partial W}}\). If an arbitrary initial weight \(W(0)\) is updated by the rule

then the error \(e\) converges to zero, provided that \(\dot{W}\) exists along the convergence trajectory. Using Eq. (10), Eq. (6) yields \(\dot{V} = - \left\| e \right\|^{2} \le 0\), with \(\dot{V} < 0\) if \(e \ne 0\). The difference-equation form of the weight update algorithm based on Eq. (10) is given by:

where \(\alpha\) is the learning rate.
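As an illustration, one update rule that is consistent with \(\dot{V} = - \left\| e \right\|^{2}\) is \(W(k + 1) = W(k) - \alpha \left\| e \right\|^{2} J^{T} e / \left\| {J^{T} e} \right\|^{2}\). Equations (10) and (11) are not reproduced in this text, so this form is an assumption, as are the feature map `features` and the function names in the sketch below, which applies the rule to a scalar-output, linear-in-weights model.

```python
import math


def features(x):
    """Hypothetical bounded hidden-layer output sigma(x)."""
    return [math.tanh(x), math.tanh(2.0 * x), 1.0]


def model_output(weights, x):
    """Scalar model output y = W^T sigma(x)."""
    return sum(w * s for w, s in zip(weights, features(x)))


def lyapunov_update(weights, x, y_desired, alpha=0.5, tiny=1e-12):
    """One step of W <- W - alpha * ||e||^2 * J^T e / ||J^T e||^2.

    For this model J = de/dW = sigma(x)^T, so J^T e = sigma(x) * e.
    The update is skipped when ||J^T e||^2 is numerically zero.
    """
    sigma = features(x)
    e = model_output(weights, x) - y_desired
    jt_e = [s * e for s in sigma]
    norm_sq = sum(v * v for v in jt_e)
    if norm_sq < tiny:
        return list(weights)
    scale = alpha * e * e / norm_sq
    return [w - scale * v for w, v in zip(weights, jt_e)]
```

For this linear-in-weights model, one step shrinks the error on the presented sample by the factor \(1 - \alpha\), so \(\alpha = 0.5\) halves it; the guard on `norm_sq` reflects the condition in the text that the update must exist along the trajectory.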

The structure of the hybrid neural network proposed in this work is shown in Fig. 1, where \(\left[ {x_{1} , \, x_{2} , \, ..., \, x_{n} } \right]\) is the input state vector, the initial weight matrix is defined using differential evolution, \(\sigma\) is the activation function, \(V_{jk}\) is the weight matrix from the hidden to the output layer and \(\left[ {y_{1} , \, y_{2} , \, ..., \, y_{m} } \right]\) is the output vector.
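The initialization idea can be sketched as follows: a population of candidate weight vectors is evolved with a basic DE/rand/1/bin scheme, using the MSE over a training set as the fitness function, and the best candidate becomes the starting weight vector of the network. The network size, the DE settings (`pop_size`, `generations`, `f`, `cr`) and all function names are hypothetical choices for illustration, not values reported in the paper.

```python
import math
import random


def forward(params, x, hidden):
    """Single-input, single-output network with one tanh hidden layer."""
    w_in = params[0:hidden]
    b_in = params[hidden:2 * hidden]
    w_out = params[2 * hidden:3 * hidden]
    b_out = params[3 * hidden]
    return b_out + sum(w_out[j] * math.tanh(w_in[j] * x + b_in[j])
                       for j in range(hidden))


def mse(params, samples, hidden):
    """Fitness function: mean square error over (x, y) samples."""
    return sum((forward(params, x, hidden) - y) ** 2
               for x, y in samples) / len(samples)


def de_initialize(samples, hidden=4, pop_size=20, generations=40,
                  f=0.5, cr=0.9, seed=0):
    """Return (best_params, best_fitness, best_fitness_of_initial_population)."""
    rng = random.Random(seed)
    dim = 3 * hidden + 1
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(pop_size)]
    fit = [mse(p, samples, hidden) for p in pop]
    initial_best = min(fit)
    for _ in range(generations):
        for i in range(pop_size):
            # Mutation: combine three distinct individuals other than i.
            a, b, c = rng.sample([j for j in range(pop_size) if j != i], 3)
            j_rand = rng.randrange(dim)
            # Binomial crossover between the mutant and the current individual.
            trial = [pop[a][d] + f * (pop[b][d] - pop[c][d])
                     if (rng.random() < cr or d == j_rand) else pop[i][d]
                     for d in range(dim)]
            trial_fit = mse(trial, samples, hidden)
            # Greedy selection: keep the trial only if it is not worse.
            if trial_fit <= fit[i]:
                pop[i], fit[i] = trial, trial_fit
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best], initial_best
```

Because selection is greedy, the best fitness in the population never increases from one generation to the next, so the returned weights are at least as good as the best random initial guess.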

3 Adaptive Controller Design

In this section, we use the pure-feedback technique to design an adaptive controller \(\tau (k)\) in Eq. (1), or equivalently \(\upsilon (k)\) as defined in Eq. (3). The system transformation, design procedure and stability analysis are detailed below.

Step 1: We first design the virtual controller \(\upsilon (k)\) as defined in Eq. (3). The virtual control design that stabilizes the first part of Eq. (1) is as follows:

Similarly, the virtual control design that stabilizes the second part of Eq. (1) is as follows:

The above procedure is repeated to obtain the final control \(\tau (k)\) by examining the first (n-1) equations of system (1). For this, let us denote:

where \(\rho_{i} \left( {\overline{\zeta }_{i + 1} (k)} \right) = \psi_{i} \left( {\zeta_{1} (k + 1),\, \cdots ,\zeta_{i} (k + 1)} \right) + \phi_{i} \left( {\zeta_{1} (k + 1),\, \cdots ,\zeta_{i} (k + 1)} \right)\zeta_{i + 1} (k + 1)\)

Therefore:

After moving one step ahead, system (1) can be expressed as:

Equations (15) and (16) can be rewritten as:

where

Using Eq. (15) and moving the first (n-2) equations in (17) one step ahead to the (k + 2)th step, we have:

From Eq. (15) it is clear that

Proceeding in the same way, we have

where \(\rho_{1} \left( {\overline{\zeta }_{n} (k)} \right) = \psi_{1} \left( {\Psi_{1} \left( {\overline{\zeta }_{n - 1} (k)} \right)} \right) + \varphi_{1} \left( {\Psi_{1} \left( {\overline{\zeta }_{n - 1} (k)} \right)} \right) \times \psi_{2} \left( {\overline{\zeta }_{n} (k)} \right)\) and

Now at the nth step, we have

where \(\Psi_{1} \left( {\overline{\zeta }_{n} (k)} \right) = \psi_{1} \left( {\rho_{1} \left( {\overline{\zeta }_{n} (k)} \right)} \right)\;\;{\text{and}}\;\;\Phi_{1} \left( {\overline{\zeta }_{n} (k)} \right) = \varphi_{1} \left( {\rho_{1} \left( {\overline{\zeta }_{n} (k)} \right)} \right)\).

From Eqs. (16) to (20), Eq. (1) can be recast as:

From Eqs. (19) to (20), we have

Repeating the above process until \(\tau (k)\) appears, we obtain

where \(\Psi \left( {\overline{\zeta }_{m} (k)} \right) = \Psi_{1} (k) + \Psi_{2} (k) \times \Phi_{1} (k) + \cdots + \Psi_{n - 1} (k) \times \prod\limits_{j = 1}^{n - 2} {\Phi_{j} (k)} + \psi_{n} (k) \times \prod\limits_{j = 1}^{n - 2} {\Phi_{j} (k)}\)

Then the output of the recast system is given by:

Define the tracking error \(\varepsilon (k) = y(k) - y_{d} (k)\). From Eq. (24), the error is:

Since the dead zone \(\tau (k)\) defined in Eq. (2) contains unknown variables, \(\tau (k)\) can be expressed for the controller in terms of Eq. (3):

where \(C^{T} (\upsilon (k)) = \left[ {C_{r} (\upsilon (k)),\,C_{l} (\upsilon (k))} \right]\).

For simplicity, let us denote:

Then Eq. (26) becomes

By the implicit function theorem, there exists an ideal control input \(\upsilon^{*} (k)\) such that the following holds:

With the neural network approximation as in Eq. (7), we get

where \(\sigma \left( {\upsilon (k)} \right)\) is the bounded input to the hidden layer and \(\varepsilon^{*} (\upsilon (k))\) is the approximation error, which is to be minimized. For this, let \(\hat{W}\) be the estimate of \(W^{*}\) and \(\overline{W} = \hat{W} - W^{*}\). Using this, the HNN controller for \(\upsilon (k)\) is constructed as

where \(\hat{\varepsilon }\) is the estimate of \(\varepsilon^{*}\) and \(\overline{\varepsilon } = \hat{\varepsilon } - \varepsilon^{*}\). Using the mean value theorem and Eq. (28), Eq. (27) can be further written as:

where \(\upsilon^{\# } (k) \in \left[ {\min \left\{ {\upsilon^{*} (k),\upsilon (k)} \right\},\,\max \left\{ {\upsilon^{*} (k),\upsilon (k)} \right\}} \right]\)

Using Eqs. (29) and (30), Eq. (31) becomes

where \(\pi (k) = \overline{W}^{T} \sigma \left( {\upsilon (k)} \right)\;{\text{and}}\;d_{0} (k) = g\left( {\upsilon^{\# } (k),\overline{\zeta }_{m} (k)} \right) \times \overline{\varepsilon }(\upsilon (k)) + d_{1} (k) + \Phi \left( {\overline{\zeta }_{m} (k)} \right)p(\upsilon (k))\).

The parameter adaptation laws are now designed as

where \(\kappa\) is a positive adaptation gain constant, \(\alpha (k)\) is the augmented error and \(k_{1} = k - n + 1\).

Here \(\Delta \zeta (k) = \zeta (k + 1) - \zeta (k) = \frac{{\alpha (k) \times \left( {1 + \left| {N\left( {\zeta (k)} \right)} \right|} \right) \times \chi^{2} (k + 1)}}{B(k)}\). For the design of the controller, the sequence \(\left\{ {\zeta (k)} \right\}\) must satisfy \(\zeta (0) = 0\), \(\zeta (k) \ge 0\) and \(\left| {\Delta \zeta (k)} \right| = \left| {\zeta (k + 1) - \zeta (k)} \right| < \varepsilon\) for all k.

The overall working of the control process can be well understood through Figs. 2 and 3.

Algorithm

A step-by-step procedure for implementing the controller is as follows:

1. Select the initial state and parameters.

2. Construct the HNN and evaluate the error \(y(k) - y_{d} (k)\).

3. Update the weights. Calculate the controller and construct the corresponding equation.

4. Apply the controller \(\upsilon (k)\) to the given system.

5. Go to step 3 for the next update.
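The loop structure of these steps can be sketched on a toy problem. The sketch below is not the controller of this paper: the plant \(y(k + 1) = 0.5\,y(k) + u(k)\), the two-element regressor, the reference signal and the normalized-gradient weight update are all hypothetical stand-ins, swapped in because the adaptation laws (32) and (33) are not reproduced in this text, and the dead zone and Nussbaum gain are omitted.

```python
import math

IDEAL_WEIGHTS = [-0.5, 1.0]  # weights that give perfect tracking on the toy plant


def run_adaptive_loop(steps=300, gain=1.0):
    """Run the toy closed loop and return (final_weights, tracking_errors)."""
    # Step 1: select the initial state and parameters (hypothetical values).
    y = 1.0
    weights = [0.0, 0.0]
    errors = []
    for k in range(steps):
        y_d_next = 0.7 + 0.5 * math.sin(0.01 * math.pi * k)
        # Steps 2-3: build the regressor and compute the control input.
        sigma = [y, y_d_next]
        u = weights[0] * sigma[0] + weights[1] * sigma[1]
        # Step 4: apply the control to the toy plant.
        y_next = 0.5 * y + u
        # Step 2: evaluate the tracking error y(k+1) - y_d(k+1).
        err = y_next - y_d_next
        errors.append(err)
        # Step 3: normalized-gradient weight update (stand-in adaptation law).
        norm = 1.0 + sigma[0] ** 2 + sigma[1] ** 2
        weights = [w - gain * err * s / norm for w, s in zip(weights, sigma)]
        # Step 5: move to the next sample.
        y = y_next
    return weights, errors


def weight_distance(weights):
    """Euclidean distance between the estimate and IDEAL_WEIGHTS."""
    return math.sqrt(sum((w - w_star) ** 2
                         for w, w_star in zip(weights, IDEAL_WEIGHTS)))
```

For this toy setup the tracking error equals the weight error projected on the regressor, so with `0 < gain < 2` the distance returned by `weight_distance` cannot grow from one step to the next.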

4 Analysis of Stability

Theorem: Consider a nonlinear system of the type (1) with dead zone. If the HNN controller is constructed with the parameter adaptation laws defined in Eqs. (32) and (33) and appropriate design parameters, then the closed-loop system is semi-globally uniformly ultimately bounded.

Proof: Consider the Lyapunov function:

where \(V_{1} \left( k \right) = \sum\nolimits_{j = 0}^{n - 1} {\overline{W}^{T} \left( {k_{1} + j} \right)} \,\overline{W}\left( {k_{1} + j} \right)\;\;{\text{and}}\;\;V_{2} \left( k \right) = \sum\nolimits_{j = 0}^{n - 1} {\overline{\varepsilon }^{2} \,\left( {k_{1} + j} \right)} \,\)

From Eq. (34), we have

From Eq. (37) we get \(\varepsilon (k + 1) = \,g\left( {\overline{\zeta }_{m} (k_{1} ),\upsilon^{\# } (k_{1} )} \right)\left( {\pi (k_{1} ) + \overline{\varepsilon }(k_{1} )} \right) + d_{0} (k_{1} )\), where \(k_{1} = k - n + 1\). Since \(g\left( {\overline{\zeta }_{m} (k_{1} ),\upsilon^{\# } (k_{1} )} \right)\) is nonzero, multiplying both sides by \(g^{ - 1} \left( {\overline{\zeta }_{m} (k_{1} ),\upsilon^{\# } (k_{1} )} \right)\) gives

Since \(\chi (k + 1)\left( {1 + \left| {N\left( {\zeta (k)} \right)} \right|} \right) = \kappa \varepsilon (k + 1)\), we have

By Young’s inequality \(xy \le \frac{1}{2}(x^{2} + y^{2} )\), we get

and \(\kappa^{2} N^{2} \left( {\zeta (k)} \right)\left( {\left\| {\sigma \left( {\upsilon (k_{1} )} \right)} \right\|^{2} + 1} \right)\frac{{\alpha^{2} (k)\chi^{2} (k + 1)}}{{B^{2} (k)}} \le \frac{{\kappa^{2} \alpha (k)\left( {1 + \left| {N\left( {\zeta (k)} \right)} \right|} \right)\chi^{2} (k + 1)}}{B(k)}\)

and hence, from Eq. (39), we have

Summing both sides of Eq. (40), we get

Since \(\hat{W}(k),\,\hat{\varepsilon }(k),\,\zeta (k)\) and \(N\left( {\zeta (k)} \right)\) are bounded, \(1 + \left| {N\left( {\zeta (k)} \right)} \right|\) is also bounded. Moreover, \(\Delta \zeta (k) = \frac{{\alpha (k)\left( {1 + \left| {N\left( {\zeta (k)} \right)} \right|} \right)\chi^{2} (k + 1)}}{B(k)} \ge 0\) is bounded, which implies that \(\alpha (k) = \frac{\kappa \varepsilon (k)}{{\left( {1 + \left| {N\left( {\zeta (k)} \right)} \right|} \right)}}\) is bounded, and hence \(\varepsilon (k) = \frac{1}{\kappa }\alpha (k)\left( {1 + \left| {N\left( {\zeta (k)} \right)} \right|} \right)\) is also bounded. Finally, since \(\varepsilon (k) = y(k) - y_{d} (k)\) and \(y_{d} (k)\) is bounded, the output \(y(k)\) of the HNN is also bounded.
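The last step of the proof can be spelled out with the triangle inequality. In the short worked bound below, \(\varepsilon_{M}\) and \(y_{M}\) are hypothetical symbols, not used elsewhere in the paper, for the bounds on \(\left| {\varepsilon (k)} \right|\) and \(\left| {y_{d} (k)} \right|\):

\[
\left| {y(k)} \right| = \left| {\varepsilon (k) + y_{d} (k)} \right| \le \left| {\varepsilon (k)} \right| + \left| {y_{d} (k)} \right| \le \varepsilon_{M} + y_{M} .
\]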

5 Simulation Examples

In this section, two simulation examples are provided to demonstrate the validity of the designed controller.

Example 1: Consider the following stochastic nonlinear system

where \(\tau (k) = \begin{cases} 0.3\left( {\upsilon^{2} (k) - 0.5} \right), & \upsilon (k) \ge 0.5 \\ 0, & - 0.6 < \upsilon (k) < 0.5 \\ 0.2\left( {\upsilon^{2} (k) + 0.6} \right), & \upsilon (k) \le - 0.6 \end{cases}\) and the reference trajectory is given by \(y_{d} (k) = 0.7 + 0.5\sin \left( {\frac{kT\pi }{5}} \right) + 0.5\cos \left( {\frac{kT\pi }{10}} \right)\), where \(T = 0.05\).
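For readers who wish to reproduce the setup, the reference trajectory and dead zone of Example 1 can be coded directly. In the sketch below the branch expressions are copied as printed in the example, the boundary point \(\upsilon (k) = -0.6\) is assigned to the left branch as an assumption, and the function names are hypothetical; the plant equation itself is not reproduced in this text and is therefore not included.

```python
import math

T = 0.05  # sampling period given in Example 1


def reference_example1(k):
    """Reference trajectory y_d(k) of Example 1."""
    return (0.7
            + 0.5 * math.sin(k * T * math.pi / 5)
            + 0.5 * math.cos(k * T * math.pi / 10))


def dead_zone_example1(v):
    """Dead-zone output tau(k) for the input v = upsilon(k).

    Branch expressions follow the example as printed; treating v == -0.6
    as part of the left branch is an assumption.
    """
    if v >= 0.5:
        return 0.3 * (v ** 2 - 0.5)
    if v <= -0.6:
        return 0.2 * (v ** 2 + 0.6)
    return 0.0
```

Any input strictly between -0.6 and 0.5 produces zero actuator output, which is the effect the controller has to compensate for.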

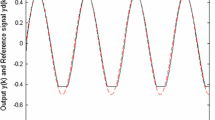

By employing the proposed HNN controller, the system successfully tracks the reference trajectory, with the error quickly converging to a small neighborhood of zero. Fig. 4 shows the tracking performance with respect to the reference trajectory and Fig. 5 shows the tracking error. The performance of the proposed controller is compared with existing methods in Figs. 4 and 5. From Fig. 5, it can be observed that the tracking error of the proposed controller is smaller than that of the other methods.

Example 2: Consider the following stochastic nonlinear system

and the reference trajectory is given by \(y_{d} (k) = 1.5\sin \left( {\frac{kT\pi }{5}} \right) + 1.5\cos \left( {\frac{kT\pi }{10}} \right)\), where \(T = 0.05\).

With the initial states chosen as [1, 1], the tracking performance of the proposed controller is shown in Figs. 6 and 7. From Fig. 6, it can be observed that the controller successfully tracks the reference trajectory, with a very small error that rapidly converges toward zero. Fig. 7 shows the tracking error of the proposed controller in comparison with existing controllers; the proposed controller achieves better performance than the existing ones.
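A comparison of this kind can be quantified with a simple error metric. The sketch below computes the tracking error \(\varepsilon (k) = y(k) - y_{d} (k)\) against the reference trajectory of Example 2 and summarizes it by its mean square value; the choice of MSE as the summary, the function names and the idea of passing in a recorded output sequence are assumptions for illustration, and no simulation data from the paper are reproduced.

```python
import math

T = 0.05  # sampling period given in Example 2


def reference_example2(k):
    """Reference trajectory y_d(k) of Example 2."""
    return (1.5 * math.sin(k * T * math.pi / 5)
            + 1.5 * math.cos(k * T * math.pi / 10))


def tracking_errors(outputs, start=0):
    """eps(k) = y(k) - y_d(k) for outputs recorded at k = start, start + 1, ..."""
    return [y - reference_example2(start + i) for i, y in enumerate(outputs)]


def mean_square_error(errors):
    """Mean of the squared tracking errors."""
    return sum(e * e for e in errors) / len(errors)
```

Given output sequences recorded from two controllers over the same horizon, the smaller `mean_square_error(tracking_errors(outputs))` value indicates the closer tracking under this metric.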

6 Conclusion

In this work, an HNN is used as a controller for nonlinear discrete-time systems in the presence of a nonlinear dead zone. The HNN is designed on the basis of one-step-ahead state prediction of higher-order nonlinear discrete-time systems. The discrete Nussbaum function is used to handle the lack of knowledge of the control direction. It is also verified that the closed-loop system is semi-globally uniformly ultimately bounded and that the output tracking error converges to a closed neighborhood of zero. The performance of the HNN model is illustrated by two simulation examples. The results show that the HNN control model using the Nussbaum gain works as predicted by the analysis.

Availability of data

No.

References

Lian, Y., Zhou, Y., Zhang, J., Ma, S., & Wu, S. (2022). An Intelligent Nonlinear Control Method for the Multistage Electromechanical Servo System. Applied Sciences, 12(10), 5053.

Cruz-Zavala, E., Nuño, E., & Moreno, J. A. (2021). Robust trajectory-tracking in finite-time for robot manipulators using nonlinear proportional-derivative control plus feed-forward compensation. International Journal of Robust and Nonlinear Control, 31(9), 3878–3907.

Gong, P., Yan, Z., Zhang, W., & Tang, J. (2021). Lyapunov-based model predictive control trajectory tracking for an autonomous underwater vehicle with external disturbances. Ocean Engineering, 232, 109010.

Bacciotti, A., & Rosier, L. (2005). Liapunov functions and stability in control theory. Heidelberg: Springer Science & Business Media.

Esfandiari, F., & Khalil, H. K. (1992). Output feedback stabilization of fully linearizable systems. International Journal of Control, 56(5), 1007–1037.

Jiang, B., Karimi, H. R., Kao, Y., & Gao, C. (2019). Reduced-order adaptive sliding mode control for nonlinear switching semi-Markovian jump delayed systems. Information Sciences, 477, 334–348.

Lei, H., & Lin, W. (2005). Universal output feedback control of nonlinear systems with unknown growth rate. IFAC Proceedings Volumes, 38(1), 1073–1078.

Li, F., & Liu, Y. (2017). Global finite-time stabilization via time-varying output-feedback for uncertain nonlinear systems with unknown growth rate. International Journal of Robust and Nonlinear Control, 27(17), 4050–4070.

Li, Y., Li, K., & Tong, S. (2018). Finite-time adaptive fuzzy output feedback dynamic surface control for MIMO nonstrict feedback systems. IEEE Transactions on Fuzzy Systems, 27(1), 96–110.

Miao, P., Shen, Y., Li, Y., & Bao, L. (2016). Finite-time recurrent neural networks for solving nonlinear optimization problems and their application. Neurocomputing, 177, 120–129.

Singh, U. P., & Jain, S. (2016). Modified chaotic bat algorithm based counter propagation neural network for uncertain nonlinear discrete time system. International Journal of Computational Intelligence and Applications, 15(03), 1650016.

Chen, B., Liu, X., Liu, K., & Lin, C. (2009). Direct adaptive fuzzy control of nonlinear strict-feedback systems. Automatica, 45(6), 1530–1535.

Chen, B., Liu, X., Liu, K., & Lin, C. (2010). Fuzzy-approximation-based adaptive control of strict-feedback nonlinear systems with time delays. IEEE Transactions on Fuzzy Systems, 18(5), 883–892.

Singh, U. P., Jain, S., Gupta, R. K., & Tiwari, A. (2019). AFMBC for a class of nonlinear discrete-time systems with dead zone. International Journal of Fuzzy Systems, 21(4), 1073–1084.

Tong, S., Min, X., & Li, Y. (2020). Observer-based adaptive fuzzy tracking control for strict-feedback nonlinear systems with unknown control gain functions. IEEE Transactions on Cybernetics, 50(9), 3903–3913.

Das, M., & Mahanta, C. (2014). Optimal second order sliding mode control for linear uncertain systems. ISA Transactions, 53(6), 1807–1815.

Shaocheng, T., Changying, L., & Yongming, L. (2009). Fuzzy adaptive observer backstepping control for MIMO nonlinear systems. Fuzzy Sets and Systems, 160(19), 2755–2775.

Mobayen, S., & Majd, V. J. (2012). Robust tracking control method based on composite nonlinear feedback technique for linear systems with time-varying uncertain parameters and disturbances. Nonlinear Dynamics, 70(1), 171–180.

Ho, H. F., Wong, Y. K., & Rad, A. B. (2009). Adaptive fuzzy sliding mode control with chattering elimination for nonlinear SISO systems. Simulation Modelling Practice and Theory, 17(7), 1199–1210.

Lee, H. (2010). Robust adaptive fuzzy control by backstepping for a class of MIMO nonlinear systems. IEEE Transactions on Fuzzy Systems, 19(2), 265–275.

Chen, B., Liu, X., Liu, K., & Lin, C. (2009). Novel adaptive neural control design for nonlinear MIMO time-delay systems. Automatica, 45(6), 1554–1560.

Zhou, Q., Zhao, S., Li, H., Lu, R., & Wu, C. (2018). Adaptive neural network tracking control for robotic manipulators with dead zone. IEEE Transactions on Neural Networks and Learning Systems, 30(12), 3611–3620.

Chen, M., & Tao, G. (2015). Adaptive fault-tolerant control of uncertain nonlinear large-scale systems with unknown dead zone. IEEE Transactions on Cybernetics, 46(8), 1851–1862.

Zhao, X., Shi, P., Zheng, X., & Zhang, L. (2015). Adaptive tracking control for switched stochastic nonlinear systems with unknown actuator dead-zone. Automatica, 60, 193–200.

Cao, L., Zhou, Q., Dong, G., & Li, H. (2019). Observer-based adaptive event-triggered control for nonstrict-feedback nonlinear systems with output constraint and actuator failures. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 51(3), 1380–1391.

Xu, B., Sun, F., Pan, Y., & Chen, B. (2016). Disturbance observer based composite learning fuzzy control of nonlinear systems with unknown dead zone. IEEE Transactions on Systems, Man, and Cybernetics: Systems, 47(8), 1854–1862.

Chen, B., Liu, X., Liu, K., & Lin, C. (2013). Fuzzy approximation-based adaptive control of nonlinear delayed systems with unknown dead zone. IEEE Transactions on Fuzzy Systems, 22(2), 237–248.

Liu, Y. J., Tong, S., Li, D. J., & Gao, Y. (2015). Fuzzy adaptive control with state observer for a class of nonlinear discrete-time systems with input constraint. IEEE Transactions on Fuzzy Systems, 24(5), 1147–1158.

Liu, Y. J., Li, S., Tong, S., & Chen, C. P. (2018). Adaptive reinforcement learning control based on neural approximation for nonlinear discrete-time systems with unknown nonaffine dead-zone input. IEEE Transactions on Neural Networks and Learning Systems, 30(1), 295–305.

Liu, Y. J., Gao, Y., Tong, S., & Chen, C. P. (2015). A unified approach to adaptive neural control for nonlinear discrete-time systems with nonlinear dead-zone input. IEEE Transactions on Neural Networks and Learning Systems, 27(1), 139–150.

Singh, U. P., & Jain, S. (2018). Optimization of neural network for nonlinear discrete time system using modified quaternion firefly algorithm: case study of Indian currency exchange rate prediction. Soft Computing, 22(8), 2667–2681.

Wei, Q., & Liu, D. (2015). Neural-network-based adaptive optimal tracking control scheme for discrete-time nonlinear systems with approximation errors. Neurocomputing, 149, 106–115.

Na, J., Lv, Y., Wu, X., Guo, Y., & Chen, Q. (2014). Approximate optimal tracking control for continuous-time unknown nonlinear systems. In Proceedings of the 33rd Chinese Control Conference (pp. 8990–8995). IEEE.

Zhou, Q., Shi, P., Lu, J., & Xu, S. (2011). Adaptive output-feedback fuzzy tracking control for a class of nonlinear systems. IEEE Transactions on Fuzzy Systems, 19(5), 972–982.

Mehraeen, S., Jagannathan, S., & Crow, M. L. (2011). Decentralized dynamic surface control of large-scale interconnected systems in strict-feedback form using neural networks with asymptotic stabilization. IEEE Transactions on Neural Networks, 22(11), 1709–1722.

Chen, W., & Li, J. (2008). Decentralized output-feedback neural control for systems with unknown interconnections. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 38(1), 258–266.

Lee, T. H., & Narendra, K. (1986). Stable discrete adaptive control with unknown high-frequency gain. IEEE Transactions on Automatic Control, 31(5), 477–479.

Funding

No funding.

Author information

Contributions

RK: Conceptualization, Writing—original draft. UPS: Methodology, Validation, Writing. AB: Review, editing and software. KR: Supervision.

Ethics declarations

Conflict of interest

On behalf of all authors, we declare that we have no conflict of interest of any kind, including financial support from any organization or institution, or data or code used in this paper.

Cite this article

Kumar, R., Singh, U.P., Bali, A. et al. Hybrid Neural Network Control for Uncertain Nonlinear Discrete-Time Systems with Bounded Disturbance. Wireless Pers Commun 126, 3475–3494 (2022). https://doi.org/10.1007/s11277-022-09875-9

DOI: https://doi.org/10.1007/s11277-022-09875-9