Abstract

Internet of Things (IoT) is helping to create a smart world by connecting sensors in a seamless fashion. With the forthcoming fifth generation (5G) wireless communication systems, IoT is becoming increasingly important since 5G will be an important enabler for the IoT. Sensor networks for IoT are increasingly used in diverse areas, e.g., in situational and location awareness, leading to proliferation of sensors at the edge of physical world. There exist several variable step-size strategies in literature to improve the performance of diffusion-based Least Mean Square (LMS) algorithm for estimation in wireless sensor networks. However, a major drawback is the complexity in the theoretical analysis of the resultant algorithms. Researchers use several assumptions to find closed-form analytical solutions. This work presents a unified analytical framework for distributed variable step-size LMS algorithms. This analysis is then extended to the case of diffusion based wireless sensor networks for estimating a compressible system and steady state analysis is carried out. The approach is applied to several variable step-size strategies for compressible systems. Theoretical and simulation results are presented and compared with the existing algorithms to show the superiority of proposed work.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The Internet of Things (IoT) has grown immensely during the last decade with many emerging applications built around different types of sensors. With issues at device and protocol levels, there is a growing trend in integration of sensors and sensor based systems with cyber physical systems as well as device-to-device (D2D) communications [1,2,3]. Recently, research directions has been shifted towards 5G networks and one current active area of research in 5G is IoT [4]. One of the most important component of the IoT paradigm is wireless sensor networks (WSNs). WSNs can sense the environment, process the sensed data, and communicate for monitoring and diagnostics [6]. The sensor data originating from the future IoT is expected to be diverse and also grow manifold with each passing year. During the last few years, multi sensor data fusion attracted a lot of attention in many applications (such as radar and target detection, target tracking, smart grid, intelligent transportation systems, robot networks, smart cities, etc.) as they provide a cost-effective solution. Unknown parameters or phenomenon of interest can be estimated using centralized and distributed estimation systems. For centralized estimation systems, the sensor nodes collect data and transmit it to a central processor for signal processing. The central node is also responsible for communication with the individual nodes. The resulting estimate is as accurate as if each sensor had access to the complete information available across the network. However, this centralized system has several disadvantages including cost of communication overhead, power consumption, and single point of failure. In order to overcome these issues, the distributed estimation systems have been proposed in the literature. Each sensor node senses data and collaborates with its neighboring nodes in order to arrive at an estimate of the unknown parameter instead of transmitting the entire data to a central node. This approach offers less communication overhead and low processing load at the cost of added complexity at the nodes [7,8,9,10,11].

Various algorithms have been proposed in the literature for distributed estimation over WSNs [9,10,11,12,13,14,15,16,17,18,19,20,21]. Further, a detailed survey of distributed algorithms is available in [22]. The focus is primarily on least mean-square (LMS)-based algorithms due to simplicity and effectiveness [23]. However, an important limiting factor of LMS is the trade-off between convergence speed and steady-state miss-adjustment. One of the most popular and fully distributed algorithm is diffusion LMS [12]. To overcome the problem with LMS-based algorithms, several variable step-size (VSS) strategies have been proposed, which provide fast convergence speed initially while reducing the step-size with time in order to achieve a low error performance [24,25,26,27,28,29,30].

1.1 VSS strategies in literature

The work in [24] introduces a normalization factor to overcome the problem of slow convergence in the case high auto-correlation for the input data, thus making the step-size variable. A novel VSS algorithm is introduced in [25], which has become the most popular VSS algorithm in literature due to its low complexity and excellent performance. The algorithm uses the energy of the instantaneous error to update the step-size. However, the algorithm may suffer in performance if the noise power is high. To overcome this problem, another VSS strategy is proposed in [26], which uses the cross-correlation between the current and previous error values to update the step-size. The work in [27] proposes an improved version of [26] by incorporating the cross-correlation of the input signal in the update equation as well. However, despite these improvements, the VSS strategy of [25] generally outperforms the two algorithms as well as most other VSS algorithms. A noise-constrained VSS algorithm is proposed in [28]. The algorithm uses Lagrange multipliers with the constraint that the noise variance is known. The work in [29] proposes a VSS normalized LMS algorithm that provided a VSS strategy to the algorithm of [24]. The authors in [30] propose a sparsity-aware VSS strategy, which updates the step-size using the absolute value of the instantaneous error instead of the energy. The algorithm is shown to perform better for sparse systems compared with other VSS strategies.

1.2 VSS strategies for WSNs

The idea of varying the step-size has been extended to estimation in WSNs. In some cases, the authors have proposed to simply incorporate existing VSS strategies within the WSN framework [13,14,15,16]. The work in [13, 14] incorporates the VSS strategy proposed in [25] directly into the distributed estimation framework. The same VSS strategy has been used to transform domain distributed estimation by the authors in [15]. The authors in [16] use a recently proposed sparse VSS technique of [30] for distributed estimation of compressible systems. While others have used the setup of the network to specifically derive new VSS strategies [17,18,19,20,21]. The work in [17] improves the strategy introduced in [13] by diffusing the error values into the network as well. The work in [18] proposes a noise constrained distributed algorithm, derived using Lagrangian multipliers. The authors in [19,20,21] derive VSS strategies from mean square deviation calculations.

1.3 Analysis for VSS strategies

In general, each VSS algorithm aims to improve performance at the cost of computational complexity. Generally, this is an acceptable trade-off as the improvement in performance is considerable. The drawback is that the additional complexity results in the analysis of the algorithm becoming tedious. This complexity is further increased for the case of WSNs as different sensor nodes collaborate with each other to improve performance. Authors have used various assumptions in order to perform the analysis of these algorithms. However, each algorithm has been dealt with independently in order to find a closed-form solution. An exact method of analysis has been recently proposed in [31, 32]. Although the results are accurate, this method is mathematically complex as well as algorithm specific and cannot be generalized for all algorithms. A generic treatment of VSS algorithms has been presented in [33] but without taking into account a WSN. In a WSN, the data is shared between nodes and this fact needs to be taken into account while performing the analysis. Due to this very important factor, the analysis of [33] cannot be extended to the WSN scenario.

Table 1 lists which analysis has been performed for the VSS algorithms of [13,14,15,16,17,18,19,20,21] and compares them with the proposed work. As can be seen, most of the work includes either no analysis or only a part of the analysis. None of the authors have, however, performed the analysis in a generic way.

1.4 Contributions

This work presents a generalized analysis approach for LMS-based VSS techniques that have been applied to WSNs. The proposed generalized analysis can be applied to most existing as well as any forthcoming VSS approaches. Following are the main contributions of this work:

-

Derivation of the step-size limit for stability of distributed VSSLMS algorithms in WSNs.

-

Generalized transient analysis for distributed VSSLMS algorithms in WSNs, including derivation of iterative equations for mean square deviation (MSD) and excess mean square error (EMSE).

-

Derivation of steady-state equations for MSD and EMSE for distributed VSSLMS algorithms in WSNs.

-

Derivation of steady-state step-size terms for the VSSLMS algorithms being used as case study in this work.

-

Validation of theoretical analysis through experimentation.

A list of the acronyms being used within the paper are given below:

The rest of the paper is organized as follows. Section 2 presents a working system model and problem statement. Section 3 details the complete theoretical analysis for diffusion-based distributed VSS LMS algorithms. Simulation results are presented in Sect. 4. Section 5 concludes the paper (Table 2).

2 System model

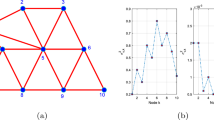

We consider an adaptive network consisting of N sensor nodes, as shown in Fig. reffigspsans, deployed over a geographical area to estimate an M dimensional unknown parameter vector, whose optimum value is denoted by \({\mathbf{w}}^o \in {\mathbb {R}}^{M}\). We denote the neighborhood of any node k by \({\mathcal {N}}_k\) and number of neighbors of node k by \(n_k\). The neighborhood of a node k is a set of nodes in close vicinity such that they have a single-hop communication link with node k, i.e., for \(l =1, 2, \ldots , N\) and \(l \ne k\), nodes l and k have single-hop communication between them if \(l \in {\mathcal {N}}_k\).

Each node k has access to a time realization of a known regressor row vector \({\mathbf{u}}_{k} (i)\) of length M and a scalar measurement \(d_k(i)\), which are related by

where \(k=1,\ldots , N,v_k(i)\) is zero-mean additive white Gaussian noise with variance \(\sigma ^2_{v_k}\), which is spatially uncorrelated, and i is the time index. At each node, these measurements are used to generate an estimate of the unknown vector \(\mathbf{w}^o\), denoted by \({\mathbf{w}}_{k}(i)\).

The fully distributed Adapt-Then-Combine (ATC) DLMS algorithm proposed in [12] is summarized below.

where \({\varvec{\Psi }}_k (i)\) is the intermediate update, \(e_k (i)\) is the instantaneous error and \(c_{lk}\) are the combination weights [12].

The step-size \(\mu _k\) can be varied using any one of several available strategies [13,14,15,16,17,18,19,20,21]. Thus, the generic VSSDLMS algorithm is described by (2), (4) and the following set of equations

where \(f\{ \mu _k (i) \}\) is a function that defines the update equation for the step-size and varies for each VSS strategy.

While performing the analysis of the LMS algorithm, the input regressor vector is assumed to be independent of the estimated vector. For the VSS algorithms, it is generally assumed that control parameters are chosen such that the step-size and the input regressor vector are asymptotically independent of each other, resulting in a closed-form steady-state solution that closely matches with the simulation results. For some VSS algorithms, the analytical and simulation results are closely matched during the transition stage as well but this is not always the case. The results are still acceptable for all algorithms as a closed-form solution is obtained.

The main objective of this work is to provide a generalized analysis for diffusion-based VSSLMS algorithms, in lieu with the assumptions mentioned above. A list of symbols used in this paper is presented in Table 3.

3 Proposed unified analysis framework

In a distributed network, the nodes exchange data, as can be seen from (4). As a result, there is going to exist a correlation between the data of the entire network. To account for this inter-node dependence, the performance of the whole network needs to be studied. Some new variables are introduced, transforming the local ones into global variables as follows:

Using the new variables, the set of equations representing the entire network is given by

where \({\mathbf{w}}^{\left( o \right) } = {\mathbf{Q}} {\mathbf{w}}^o,{\mathbf{Q}} = col\left\{ \mathrm {{\mathbf{I}}}_M,\mathrm {{\mathbf{I}}}_M,\ldots ,\mathrm {{\mathbf{I}}}_M\right\} \) is a \(MN \times M\) matrix, \({\mathbf{G}} = {\mathbf{C}} \otimes \mathrm{{\mathbf{I}}}_M,{\mathbf{C}}\) is an \(N \times N\) weighting matrix, \(\left\{ \mathbf{C}\right\} _{lk} = c_{lk}\) and \(\otimes \) is the Kronecker product.

3.1 Mean analysis

The analysis is now carried out using the new set of equations. The global weight-error vector is given by

Since \({\mathbf{G}}{\mathbf{w}^{\left( o\right) }} \buildrel \Delta \over = {\mathbf{w}^{\left( o\right) }}\), by incorporating the global weight-error vector into (7), we get

Using the assumption that the step-size matrix \({{\mathbf{D}}(i) }\) is independent of the regressor matrix \({\mathbf{U}}(i)\) [14, 16, 18, 25,26,27,28,29], the following relation holds true asymptotically

where \({\mathbb {E}}\left[ {{\mathbf{U}}^T(i) {\mathbf{U}}(i) } \right] = {\mathbf{R}_{\mathbf{U}}}\) is the auto-correlation matrix of \({{\mathbf{U}}(i)}\). Now, taking the expectation on both sides of (9) and simplifying gives

The expectation of the second term of the right-hand side of (9) is zero due to the measurement noise being zero-mean as well as spatially uncorrelated with the input regressor.

It can be seen from (11) that the term defining the stability of the algorithm is \(\left( {\mathrm{{\mathbf{I}}}_{MN} - {\mathbb {E}}\left[ {{\mathbf{D}}(i) } \right] {\mathbf{R}}_{\mathbf{U}} } \right) \). As the matrix \({{\mathbf{D}}(i) }\) is diagonal, this term can be further simplified to the node-level as \({\left( {\mathrm{\mathbf{I}} - {\mathbb {E}}\left[ {\mu _{k}(i) } \right] {\mathbf{R}}_{{\mathbf{u}},k} } \right) }\). Thus, the stability condition is given by

which holds true if the mean of the step-size is governed by

where \({{\lambda _{\max } \left( {{\mathbf{R}}_{{\mathbf{u}},k} } \right) }}\) is the maximum eigenvalue of the auto-correlation matrix \({{\mathbf{R}}_{{\mathbf{u}},k} }\).

3.2 Mean-square analysis

Following the analysis procedure of [23], we take the weighted norm of (9) and then apply the expectation operator. After simplifying we get

where

The analysis becomes quite tedious for non-Gaussian data. Therefore, the data is assumed to be Gaussian, without loss of generality [23]. The auto-correlation matrix is decomposed as \({\mathbf{R}}_{{\mathbf{U}}} = {\mathbf{T}} {\varvec{\Lambda }} {\mathbf{T}}^T\), where \(\varvec{\Lambda }\) is an eigenvalues diagonal matrix and \(\mathbf{T}\) is a matrix of eigenvectors corresponding to the eigenvalues, such that \({\mathbf{T}}^T {\mathbf{T}} = {\mathbf{I}}\). Using \(\mathbf{T}\), the variables are redefined as

where the input regressors are considered independent for each node and the step-size matrix \({\mathbf{D}} (i)\) is block-diagonal so it remains the same.

Using the data independence assumption [23] and simplifying, we arrive at the final recursive update equation

where

Finally, taking the weighting matrix \(\varvec{\Sigma } = \mathbf{I}_{M^2 N^2}\) gives the mean-square-deviation (MSD) while \(\varvec{\Sigma } = \varvec{\Lambda }\) gives the EMSE. The detailed analysis and description of the variables are given in Appendix A.

3.3 Steady-state analysis

At steady-state, (31) and (18) become

where \({\mathbf{D}}_{ss} = \text{ diag } \left\{ \mu _{ss,k} {\mathbf{I}}_M \right\} ,{\mathbf{b}}_{ss} = {\mathbf{R}}_{\mathbf{v}} {\mathbf{D}}_{ss}^2 {\varvec{\Lambda }}\) and \({\mathbf{D}}_{ss}^2 = \text{ diag } \left\{ \mu _{ss,k}^2 {\mathbf{I}}_M \right\} \). Simplifying (21), we get

This equation gives the steady-state performance measure for the entire network. In order to solve for the steady-state values of MSD and EMSE, we take \(\bar{\varvec{\sigma }} = \text{ bvec }\{\mathbf{I}_{M^2 N^2}\}\) and \(\bar{\varvec{\sigma }} = \text{ bvec }\{\varvec{\Lambda }\}\), respectively.

3.4 Steady-state step-size analysis

The analysis presented in the above section has been generic for any VSS algorithm. In this section, 3 different VSS algorithms are chosen to present the steady-state analysis for the step-size. These steady-state step-size values are then directly inserted into (23) and (22). The 3 different VSS algorithms and their step-size update equations are given in Table 4. The first algorithm, denoted KJ is the work of Kwong and Johnston [25], as used in [14]. The NC algorithm refers to the noise-constrained LMS algorithm [28], as used in [18]. Finally, Sp refers to the Sparse VSSLMS algorithm of [30], as used in [16]. It should be noted that the step-size matrix for the network, \({\mathbf{D}}\), is diagonal. Therefore, the step-size for each node can be studied independently.

Applying the expectation operator and simplifying results in the equations presented in Table 5, where \(\zeta _k(i)\) is the value of the EMSE for node k.

At steady-state, the step-size is given by \(\mu _{k,ss}\) and the approximate steady-state equations are given in Table 6. It should be noted that the steady-state EMSE values are assumed small enough to be ignored.

4 Results and discussion

In this section, the analysis presented above will be tested upon three VSS algorithms listed in Table 4 when they are incorporated within the DLMS framework. The analysis is verified through three different experiments. The first experiment plots the theoretical transient MSD using (17) and compares with simulation results. Further, steady-state results are tabulated to see the effects of the assumptions. Simulation steady-state results are compared to theoretical results attained using (17) as well as (23). In the second experiment, steady-state MSD results are compared for different network sizes while the signal-to-noise ratio (SNR) is varied. Finally, a tabular comparison has been shown to ascertain the difference between the results obtained from (23) with those from [14, 18] and [16].

4.1 Experiment 1

For the first experiment, the network size, \(N = 10\), the size of the unknown parameter vector, \(M = 4\) and the SNR is varied between 0, 10 and 20 dB. The step-size control parameters chosen for this experiment are given in Table 7. The values are slightly different in some cases in order to maintain similar convergence speed. The results are shown in Figs. 2, 3, 4.

The comparison for the KJ algorithm are shown in Fig. 2. There is a slight discrepancy due to the assumptions that have been made. However, this discrepancy is acceptable as the steady-state results match very closely. The results for the NC algorithm are shown in Fig. 3. There is a mismatch in the transient stage in this case as well but the results match very closely at steady-state again. Finally, the comparison for the Sp algorithm is shown in Fig. 4. The mismatch during the transient stage is greater for this algorithm compared with the previous two cases. However, since the step-size is assumed to be asymptotically independent of the regressor data and there is an excellent match at steady-state, these results are completely acceptable. The steady-state results are tabulated in Table 8. Due to the assumption that the EMSE is small enough to be ignored at steady-state, there exists a slight mismatch between the results of (17) and (23). However, the results are overall closely matched and the results are acceptable.

4.2 Experiment 2

For the second experiment, the SNR is varied from 0 dB to 40 dB and the steady-state results are plotted. These are compared with the theoretical results obtained from (23). The network size is varied between \(N = 10\) and \(N = 20\). The step-size controlling parameters used for this experiment are given in Table 9. The initial step-size is kept small for the NC algorithm as the initial step-size effects the steady-state value as shown in Table 6. However, there is no relation between the steady-state step-size value and the initial step-size value for the other algorithms. This has also been shown for the case of the KJ algorithm in [14]. The results are shown in Fig. 5 for the KJ algorithm. There is a close match and a steady downward trend with increase in SNR. The results for the NC algorithm are shown in Fig. 6. There is a slight mismatch but this is not significant and can be ignored. Finally, the results for the Sp algorithm are shown in Fig. 7. There is an excellent match and a steady improvement in performance with increase in SNR.

4.3 Experiment 3

In this final experiment, the steady-state MSD theoretical results from [14, 18] and [16] are compared with the results obtained from (23). The analysis performed in [14, 18] and [16] gives exact expressions for \(\mu _{k,ss}\) as well as \(\mu ^2_{k,ss}\) for evaluating steady-state MSD. In the present work, a generic expression has been proposed. This experiment shows that the results from the exact analysis and those obtained from (23) match closely. The unknown vector length is varied between \(M=2,M=4\) and \(M=6\). The SNR is varied between 0, 10 and 20 dB. The step-size control parameters are given in Table 10. The results are shown in Table 10. As can be seen, there are slight mismatches among certain values. However, the values are very closely matched for all cases. Thus, the proposed analysis procedure has been verified as generic and applicable to different VSS strategies in WSNs (Table 11).

5 Conclusion

Parameter estimation is an important aspect for WSNs that form an integral part of the IoT paradigm. This work presents a generalized approach for the theoretical analysis of LMS-based VSS algorithms being employed for estimation in diffusion-based WSNs. The analysis provided here can prove to be an excellent tool for the analysis of any VSS approach applied to the WSN framework in future. Various existing algorithms have been tested thoroughly to verify the results of the presented work under different conditions. Simulation results confirm the generic behavior for the proposed analysis for both the transient state as well as steady-state. Furthermore, a comparison has been performed between the proposed analysis results and results obtained from the analysis that already exists in the literature. Results were found to be closely matched. further strengthening our claim that the proposed analysis is generic for VSS strategies applied to WSNs.

References

Atzori, L., Iera, A., & Morabito, G. (2010). The Internet of Things: A survey. Computer Networks, 54(15), 2787–2805.

Stankovic, J. (2014). Research directions for the Internet of Things. IEEE Internet Things Journal, 1(1), 3–9.

Ejaz, W., & Ibnkahla, M. (2015). Machine-to-machine communications in cognitive cellular systems. In Proceedings of 15th IEEE international conference on ubiquitous wireless broadband (ICUWB), Montreal, Canada (pp. 1–5).

Palattella, M. R., Dohler, M., Grieco, A., Rizzo, G., Torsner, J., Engel, T., et al. (2016). Internet of Things in the 5G era: Enablers, architecture, and business models. IEEE Journal on Selected Areas in Communicatios, 34(3), 510–527.

ul Hasan, N., Ejaz, W., Baig, I., Zghaibeh, M., Anpalagan, A. (2016). QoS-aware channel assignment for IoT-enabled smart building in 5G systems. In Proceedings of 8th IEEE international conference on ubiquitous and future networks (ICUFN), Vienna, Austria (pp. 924–928).

Ejaz, W., Naeem, M., Basharat, M., Anpalagan, A., & Kandeepan, S. (2016). Efficient wireless power transfer in software-defined wireless sensor networks. IEEE Sensors Journal, 16(20), 7409–7420.

Culler, D., Estrin, D., & Srivastava, M. (2004). Overview of sensor networks. IEEE Computer, 37(8), 41–49.

Olfati-Saber, R., Fax, J. A., & Murray, R. M. (2007). Consensus and cooperation in networked multi-agent systems. Proceedings of the IEEE, 95(1), 215–233.

Lopes, C. G., & Sayed, A. H. (2007). Incremental adaptive strategies over distributed networks. IEEE Transactions on Signal Processing, 55(8), 4064–4077.

Lopes, C. G., & Sayed, A. H. (2008). Diffusion least-mean squares over adaptive networks: Formulation and performance analysis. IEEE Transactions on Signal Processing, 56(7), 3122–3136.

Schizas, I. D., Mateos, G., & Giannakis, G. B. (2009). Distributed LMS for consensus-based in-network adaptive processing. IEEE Transactions on Signal Processing, 57(6), 2365–2382.

Cattivelli, F., & Sayed, A. H. (2010). Diffusion LMS strategies for distributed estimation. IEEE Transactions on Signal Processing, 58(3), 1035–1048.

Bin Saeed, M. O., Zerguine, A., Zummo, S. A. (2010). Variable step-size least mean square algorithms over adaptive networks. In Proceedings of 10th international conference on information sciences signal processing and their applications (ISSPA), Kuala Lumpur, Malaysia (pp. 381–384).

Bin Saeed, M. O., Zerguine, A., & Zummo, S. A. (2013). A variable step-size strategy for distributed estimation over adaptive networks. EURASIP Journal on Advances in Signal Processing, 2013(2013), 135.

Almohammedi, A. & Deriche, M. (2015). Variable step-size transform domain ILMS and DLMS algorithms with system identification over adaptive networks. In Proceedings of IEEE Jordan conference on applied electrical engineering and computing technologies (AEECT), Amman, Jordan (pp. 1–6).

Bin Saeed, M. O., Zerguine, A., Sohail, M. S., Rehman, S., Ejaz, W., & Anpalagan, A. (2016). A variable step-size strategy for distributed estimation of compressible systems in wireless sensor networks. In Proceedings of IEEE CAMAD, Toronto, Canada (pp. 1–5).

Bin Saeed, M. O., & Zerguine, A. (2011). A new variable step-size strategy for adaptive networks. In Proceedings of the forty fifth asilomar conference on signals, systems and computers (ASILOMAR), Pacific Grove, CA (pp. 312–315).

Bin Saeed, M. O., Zerguine, A., & Zummo, S. A. (2013). A noise-constrained algorithm for estimation over distributed networks. International Journal of Adaptive Control and Signal Processing, 27(10), 827–845.

Ghazanfari-Rad, S., & Labeau, F. (2014). Optimal variable step-size diffusion LMS algorithms. In Proceedings of IEEE workshop on statistical signal processing (SSP), Gold Coast, VIC (pp. 464–467).

Jung, S. M., Sea, J.-H., & Park, P. G. (2015). A variable step-size diffusion normalized least-mean-square algorithm with a combination method based on mean-square deviation. Circuits, Systems, and Signal Process., 34(10), 3291–3304.

Lee, H. S., Kim, S. E., Lee, J. W., & Song, W. J. (2015). A variable step-size diffusion LMS algorithm for distributed estimation. IEEE Transactions on Signal Processing, 63(7), 1808–1820.

Sayed, A. H. (2014). Adaptive networks. Proceedings of the IEEE, 102(4), 460–497.

Sayed, A. H. (2003). Fundamentals of adaptive filtering. New York: Wiley.

Nagumo, J., & Noda, A. (1967). A learning method for system identification. IEEE Transactions on Automatic Control, 12(3), 282–287.

Kwong, R. H., & Johnston, E. W. (1992). A variable step-size LMS algorithm. IEEE Transactions on Signal Processing, 40(7), 1633–1642.

Aboulnasr, T., & Mayyas, K. (1997). A robust variable step size LMS-type algorithm: Analysis and simulations. IEEE Transactions on Signal Processing, 45(3), 631–639.

Costa, M. H., & Bermudez, J. C. M. (2008). A noise resilient variable step-size LMS algorithm. Signal Processing, 88(3), 733–748.

Wei, Y., Gelfand, S. B., & Krogmeier, J. V. (2001). Noise-constrained least mean squares algorithm. IEEE Transactions on Signal Processing, 49(9), 1961–1970.

Sulyman, A. I., & Zerguine, A. (2003). Convergence and steady-state analysis of a variable step-size NLMS algorithm. Signal Processing, 83(6), 1255–1273.

Bin Saeed, M. O., & Zerguine, A. (2013). A variable step-size strategy for sparse system identification. In Proceedings of 10th international multi-conference on systems, signals & devices (SSD), Hammamet (pp. 1–4).

Al-Naffouri, T. Y., & Moinuddin, M. (2010). Exact performance analysis of the \(\epsilon \)-NLMS algorithm for colored circular Gaussian inputs. IEEE Transactions on Signal Processing, 58(10), 5080–5090.

Al-Naffouri, T. Y., Moinuddin, M., & Sohail, M. S. (2011). Mean weight behavior of the NLMS algorithm for correlated Gaussian inputs. IEEE Signal Processing Letters, 18(1), 7–10.

Bin Saeed, M. O. (2017). LMS-based variable step-size algorithms: A unified analysis approach. Arabian Journal of Science & Engineering, 42(7), 2809–2816.

Koning, R. H., Neudecker, H., & Wansbeek, T. (1990). Block Kronecker products and the vecb operator. Economics Deptartment, Institute of Economics Research, Univ. of Groningen, Groningen, The Netherlands, Research Memo. No. 351.

Author information

Authors and Affiliations

Corresponding author

Appendix A

Appendix A

Here, we present the detailed mean-square analysis. Applying the expectation operator to the weighting matrix of (14) gives

For ease of notation, we denote \({\mathbb {E}}\left[ {{\hat{\varvec{\Sigma }}}} \right] = {\varvec{\Sigma }} '\) for the remaining analysis.

Next, using the Gaussian transformed variables as gives in Sect. 3.2, (14) and (24) are rewritten, respectively, as

and

where \({{\bar{\mathbf{Y}}}}(i) = {{\bar{\mathbf{G}} D}}(i) {{{\bar{\mathbf{U}}}}^T(i) }\) and \({\mathbb {E}}\left[ {{{\bar{\mathbf{U}}}}^T(i) {{\bar{\mathbf{U}}}}(i) } \right] = {\varvec{\Lambda }}\).

The two terms that need to be solved are \({\mathbb {E}}\Big [ {\mathbf{v}}^T(i) {{\bar{\mathbf{Y}}}}^T(i) \bar{\varvec{\Sigma }}{{\bar{\mathbf{Y}}}}(i) {\mathbf{v}}(i) \Big ]\) and \({\mathbb {E}}\left[ {{{\bar{\mathbf{U}}}}^T(i) {{\bar{\mathbf{Y}}}}(i) \bar{\varvec{\Sigma }} {{\bar{\mathbf{Y}}}}(i) {{\bar{\mathbf{U}}}}(i) } \right] \). Using the \(\text{ bvec }\{.\}\) operator and the block Kronecker product, denoted by \(\odot \) [34], the two terms are simplified as

and

where \(\bar{\varvec{\sigma }} = \text{ bvec } \left\{ {\bar{\varvec{\Sigma }} } \right\} ,{\mathbf{b}}(i) = \text{ bvec } \left\{ {{\mathbf{R}}_{\mathbf{v}} {\mathbb {E}}\left[ {{\mathbf{D}}^2(i) } \right] {\varvec{\Lambda }} } \right\} ,{\mathbf{R}}_{\mathbf{v}} = {\varvec{\Lambda }} _{\mathbf{v}} \odot \mathrm{{\mathbf{I}}}_M,\varvec{\Lambda }_{\mathbf{v}}\) is a diagonal noise variance matrix for the network and \({\mathbf{A}} = \text{ diag } \left\{ {{\mathbf{A}}_1 ,{\mathbf{A}}_2 ,\ldots ,{\mathbf{A}}_N } \right\} \) [10], with each matrix \({\mathbf{A}}_k\) defined as

where \({\varvec{\Lambda }}_k\) is the diagonal eigenvalue matrix and \(\lambda _k\) is the corresponding eigenvalue vector for node k. Applying the \(\text{ bvec }\{.\}\) operator to (26) and simplifying gives

where \({\mathbf{F}}(i)\) is given by (18). Thus, (14) is rewritten as

which characterizes the transient behavior of the network. Although not explicitly visible from (31), (18) clearly shows the effect of the VSS algorithm on the performance of the algorithm through the presence of the diagonal step-size matrix \({\mathbf{D}} (i)\).

Now, using (31) and (18), the analysis iterates as

where \({{\mathbb {E}}\left[ {\mathbf{D}} (0) \right] = \text{ diag } \left\{ {\mu _{1}(0) {{\mathbf{I}}}_M ,\ldots ,\mu _{N}(0) \mathrm{{\mathbf{I}}}_M } \right\} }\) as these are the initial step-size values. The first iterative update is given by

where \({\mathbf{b}}(0) = \text{ bvec } \left\{ {{\mathbf{R}}_{\mathbf{v}} {\mathbb {E}}\left[ {{\mathbf{D}}^2(0) } \right] {\varvec{\Lambda }} } \right\} ,{\mathbb {E}}\left[ {\mathbf{D}} (1) \right] \) is the first step-size update. The matrix \({\mathbf{F}}(i)\) is updated with (18) using the ith update for the step-size matrix \({\mathbb {E}}\left[ {\mathbf{D}} (i) \right] \), which is updated using the VSS approach that is being applied to the algorithm. The second iterative update is given by

Continuing, the third iterative update is given by

where the weighting matrix \({{\mathcal {A}}}(2) = \mathbf{F}(0)\mathbf{F}(1)\). Similarly, the fourth iterative update is given by

where the weighting matrix \({{\mathcal {A}}}(3) = {{\mathcal {A}}}(2) \mathbf{F}(2)\). Now, from the third and fourth iterative updates, we generalize the recursion for the ith update as

where \({{\mathcal {A}}}(i-1) = {{\mathcal {A}}}(i-2) \mathbf{F}(i-2)\). The recursion for the \((i+1)\)th update is given by

where \({{\mathcal {A}}}(i) = {{\mathcal {A}}}(i-1) \mathbf{F}(i-1)\). Subtracting (32) from (33) and simplifying gives the overall recursive update equation

Simplifying (34) and rearranging the terms gives the final recursive update equation (17), where

The final set of iterative equations for the mean-square learning curve are given by (17), (18), (19) and (20).

Rights and permissions

About this article

Cite this article

Bin Saeed, M.O., Ejaz, W., Rehman, S. et al. A unified analytical framework for distributed variable step size LMS algorithms in sensor networks. Telecommun Syst 69, 447–459 (2018). https://doi.org/10.1007/s11235-018-0447-z

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11235-018-0447-z