Abstract

The purpose of this paper is to study the convergence of the quasi-maximum likelihood (QML) estimator for long memory linear processes. We first establish a correspondence between the long-memory linear process representation and the long-memory AR\((\infty )\) process representation. We then establish the almost sure consistency and asymptotic normality of the QML estimator. Numerical simulations illustrate the theoretical results and confirm the good performance of the estimator.


1 Introduction

Since Hurst’s (1953) introduction of long-range dependent processes, much research has focused on estimating the long-range parameter, whether defined on the basis of the asymptotic power-law behavior of the correlogram at infinity or that of the spectral density at zero [see the monographs Beran (1994) and Doukhan et al. (2003) for more details].

Two estimation frameworks have been studied extensively. The first focused on the estimation of the long-memory parameter alone, but could be carried out in a semi-parametric framework, i.e. when only the asymptotic behavior of the correlation or spectral density was specified. This led to the first methods proposed historically, such as those based on the R/S statistic, on quadratic variations, on the log-periodogram, or more recent methods such as wavelet or local Whittle estimation [again, see Doukhan et al. (2003) for more details].

Here we are interested in a more parametric framework, and in estimating all the parameters of the process, not just the long memory parameter. The first notable results on the asymptotic behavior of such a parametric estimator were obtained in Fox and Taqqu (1986) in the special case of Gaussian long-memory processes, using the Whittle estimator. These results were extended to linear long-memory processes with a moment of order 4 by Giraitis and Surgailis (1990). In both settings, the asymptotic normality of the estimator was proved, while non-central limit theorems were obtained for functions of Gaussian processes in Giraitis and Taqqu (1999) or for increments of the Rosenblatt process in Bardet and Tudor (2014). The asymptotic normality of the maximum likelihood estimator for Gaussian time series was also obtained by Dahlhaus (1989) using that of the Whittle estimator obtained in Fox and Taqqu (1986).

For weakly dependent time series, especially for conditionally heteroscedastic processes such as GARCH processes, the quasi-maximum likelihood (QML) estimator has become the benchmark for parametric estimation, providing very interesting convergence results where Whittle’s estimator would not. This is true for GARCH or ARMA-GARCH processes [see Berkes and Horváth (2004) and Francq and Zakoian (2004)], but also for many others such as ARCH(\(\infty \)), AR\((\infty )\), APARCH processes, etc. (see Bardet and Wintenberger 2009). We also note convergence results for this modified estimator for long-memory squares processes, typically LARCH(\(\infty \)) processes, see Beran and Schützner (2009), or quadratic autoregressive conditional heteroscedastic processes, see Doukhan et al. (2016). But for long-memory processes, such as those defined by a non-finite sum of their autocorrelations, to our knowledge only the paper by Boubacar Maïnassara et al. (2021) has shown the asymptotic normality of this QML estimator, in the special case of a FARIMA(p, d, q) process with weak white noise.

We therefore propose here to study the convergence of the Gaussian QML estimator in the general framework of long-memory one-sided linear processes. In such a framework, we begin by noting that the QML estimator is in fact a non-linear least-squares estimator. The key point of our approach is to prove that long-memory one-sided linear processes can be written in autoregressive form with respect to their past values, which we can call long-memory linear AR\((\infty )\). This is perfectly suited to the use of QMLE, since this estimator is obtained from the conditional expectation and variance of the process. We then show the almost sure convergence of QMLE for these long-memory AR\((\infty )\) processes, which generalizes a result obtained in Bardet and Wintenberger (2009) for weakly dependent AR\((\infty )\) processes. We also prove the asymptotic normality of this estimator, which provides an alternative to the asymptotic normality of Whittle’s estimator obtained in Giraitis and Surgailis (1990). An advantage of QML estimation lies in the fact that, because it is applied to processes with an AR\((\infty )\) representation, the fact that the \((X_t)\) series is centered or not has no effect at all on the parameters of this AR\((\infty )\) representation, particularly on the estimation of the long memory parameter.

Finally, we performed simulations of two long-memory time series and examined the performance of the QMLE as a function of the size of the observed trajectories. This showed that the behavior of the QMLE is consistent with theory as the size of the trajectories increases, and provides a very accurate alternative to Whittle’s estimator. An application on real data (average monthly temperatures in the northern hemisphere) is also presented.

This article is organized as follows: Sect. 2 presents the AR\((\infty )\) representation of an arbitrary long-memory one-sided linear process, Sect. 3 is devoted to the presentation of the QML estimator and its asymptotic behavior, numerical applications are treated in Sect. 4, and all proofs of the various results can be found in Sect. 6.

2 Long-memory linear causal time series

Assume that \(\varepsilon =(\varepsilon _t)_{t \in {\mathbb {Z}}}\) is a sequence of centered independent random variables such that \({\mathbb {E}}[\varepsilon _0^2]=1\) and \((a_i)_{i \in {\mathbb {N}}}\) is a sequence of real numbers such that:

$$\begin{aligned} a_i=L_a(i)\, i^{d-1}\quad \hbox {for}\ i\in {\mathbb {N}}^*, \end{aligned}$$(2.1)

where \(d\in (0,1/2)\) and with \(L_{a}(\cdot )\) a positive slowly varying function satisfying

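Now, define the causal linear process \((X_t)_{t \in {\mathbb {Z}}}\) by

$$\begin{aligned} X_t=\sum _{i=0}^\infty a_i\, \varepsilon _{t-i}\quad \hbox {for any}\ t\in {\mathbb {Z}}. \end{aligned}$$(2.2)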

Since \(0<d<1/2\), it is well known that \((X_t)_{t\in {\mathbb {Z}}}\) is a second-order stationary long-memory process. Indeed, its autocovariance is

where \(C_d=\int _0^\infty (u+u^2)^{d-1}\,du\) (see for instance Wu et al. 2010).

Then, it is always possible to provide a causal affine representation for \((X_t)_{t\in {\mathbb {Z}}}\), i.e. it is always possible to write \((X_t)_{t\in {\mathbb {Z}}}\) as an AR\((\infty )\) process:

Proposition 2.1

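Let \((X_t)_{t\in {\mathbb {Z}}}\) be a causal linear process defined in (2.2) where \((a_i)\) satisfies (2.1). Then, there exists a sequence of real numbers \((u_i)_{i\in {\mathbb {N}}^*}\) such that:

$$\begin{aligned} X_t=a_0\, \varepsilon _t+\sum _{i=1}^\infty u_i\, X_{t-i}\quad \hbox {for any}\ t\in {\mathbb {Z}}, \end{aligned}$$(2.4)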

where \((u_i)_{i\in {\mathbb {N}}^*}\) satisfies

where \(L_u\) is a slowly varying function.

Remark 2.1

Using (6.1), the reciprocal implication of Proposition 2.1 is also true: if \((X_t)\) satisfies the linear affine causal representation (2.4) where \((u_i)_{i\in {\mathbb {N}}}\) satisfies (2.5), then \((X_t)\) is a one-sided long-memory linear process satisfying (2.2) where \((a_i)\) satisfies (2.1).

Remark 2.2

It is also known that \(\displaystyle \Gamma (d)\, \Gamma (1-d)=\frac{\pi }{\sin (\pi \, d)}\) for any \(d\in (0,1)\), and this implies \(\displaystyle u_n \begin{array}{c}{\mathop {\sim }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\frac{a_0\, d \, \sin (\pi \, d)}{\pi \, L_a(n)} \, n^{-1-d}\).

As a consequence, every long-memory one-sided linear process is a long-memory AR\((\infty )\) process with the special property that the sum of the autoregressive coefficients equals 1. This is the key point for the use of quasi-maximum likelihood estimation in the following section.

Example of the FARIMA process. Let \((X_t)_{t \in {\mathbb {Z}}}\) be a standard FARIMA(0, d, 0) with \(d\in (0,1/2)\), which means \(X=(I-B)^{-d} \varepsilon \), where B is the usual backward linear operator on \({\mathbb {R}}^{\mathbb {Z}}\) and I the identity operator. Then, using the power series of \((1-x)^{-d}\), it is known that

Using the Stirling expansion of the Gamma function, i.e. \(\Gamma (x) \begin{array}{c}{\mathop {\sim }\limits ^{}} \\ {\scriptstyle x\rightarrow \infty }\end{array}\sqrt{2\pi }\, e^{-x}x^{x-1/2}\), we obtain \(a_{n} \begin{array}{c}{\mathop {\sim }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\frac{1}{\Gamma (d)}\, n^{d-1}\), which is (2.1) with \(L_a(n) \begin{array}{c}{\mathop {\sim }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\frac{1}{\Gamma (d)}\).

Moreover, the decomposition \(X=\varepsilon +(I- (I-B)^d)\, X\) implies:

The expansion \(\displaystyle \frac{ \Gamma (n-d)}{\Gamma (n+1)} \begin{array}{c}{\mathop {\sim }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}n^{-1-d}\) provides \(X_t=\varepsilon _t + \sum _{n=1}^\infty u_n\, X_{t-n}\) with \(u_n \begin{array}{c}{\mathop {\sim }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\frac{d}{\Gamma (1-d)}\, n^{-1-d}\), which is equivalent to (2.5) when \(a_0=1\) and \(L_a(n) \begin{array}{c}{\mathop {\sim }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\frac{1}{\Gamma (d)}\).
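The correspondence between the two representations in this FARIMA(0, d, 0) example can be checked numerically. The following is a minimal sketch, not taken from the paper: the function names are hypothetical, the identity \(a_k=\sum _{i=1}^k u_i\, a_{k-i}\) for \(k\ge 1\) is obtained here by substituting the moving-average form into the autoregressive form, and the value \(d=0.3\) and the truncation at 1000 coefficients are arbitrary choices.

```python
import math
import numpy as np

def farima_ma_coeffs(d, n):
    """a_0..a_n of (1 - x)^(-d): a_0 = 1, a_k = a_{k-1} (k - 1 + d) / k."""
    a = np.empty(n + 1)
    a[0] = 1.0
    for k in range(1, n + 1):
        a[k] = a[k - 1] * (k - 1 + d) / k
    return a

def ar_coeffs_from_ma(a):
    """Solve a_k = sum_{i=1}^k u_i a_{k-i} (k >= 1) for u_1..u_n."""
    n = len(a) - 1
    u = np.zeros(n + 1)  # u[0] is a placeholder and is not returned
    for k in range(1, n + 1):
        # a[k-1:0:-1] is (a_{k-1}, ..., a_1), paired with (u_1, ..., u_{k-1})
        u[k] = (a[k] - np.dot(u[1:k], a[k - 1:0:-1])) / a[0]
    return u[1:]

def farima_ar_coeffs(d, n):
    """Closed form from (1 - x)^d: u_k = -p_k, p_0 = 1, p_k = p_{k-1} (k - 1 - d) / k."""
    p = np.empty(n + 1)
    p[0] = 1.0
    for k in range(1, n + 1):
        p[k] = p[k - 1] * (k - 1 - d) / k
    return -p[1:]

d, n = 0.3, 1000  # illustrative values only
a = farima_ma_coeffs(d, n)
u = ar_coeffs_from_ma(a)
u_closed = farima_ar_coeffs(d, n)

# Ratios to the asymptotic equivalents; both should be close to 1 for large n.
ma_ratio = a[n] * n ** (1 - d) * math.gamma(d)
ar_ratio = u[-1] * n ** (1 + d) * math.gamma(1 - d) / d
partial_sum = u.sum()  # increases slowly towards 1 as n grows

print(np.allclose(u, u_closed), ma_ratio, ar_ratio, partial_sum)
```

The partial sum of the \(u_n\) approaches 1 only at the slow rate \(n^{-d}\), which is why it remains visibly below 1 even with a thousand coefficients.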

3 Asymptotic behavior of the Gaussian Quasi-maximum likelihood estimator

3.1 Definition of the estimator

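We will assume that \((X_t)_{t\in {\mathbb {Z}}}\) is a long-memory one-sided linear process written as an AR\((\infty )\) process, i.e.

$$\begin{aligned} X_t=\sigma ^*\, \varepsilon _t+\sum _{k=1}^\infty u_k(\theta ^*)\, X_{t-k}\quad \hbox {for any}\ t\in {\mathbb {Z}}, \end{aligned}$$(3.1)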

where

- \((\varepsilon _t)_{t\in {\mathbb {Z}}}\) is a white noise, such that \(\varepsilon _0\) has an absolutely continuous probability measure with respect to the Lebesgue measure and such that \({\mathbb {E}}[\varepsilon _0^2]=1\);

- for \(\theta ={}^t(\gamma ,\sigma ^2) \in \Theta \) a compact subset of \({\mathbb {R}}^{p-1}\times (0,\infty )\), \((u_n(\theta ))_{n\in {\mathbb {N}}}\) is a sequence of real numbers satisfying for any \(\theta \in \Theta \),

$$\begin{aligned} u_n(\theta )= L_\theta (n)\,n^{-d(\theta )-1}\quad \hbox {for}\, n \in {\mathbb {N}}^*\, \hbox { and}\quad \sum _{n=1}^\infty u_n(\theta )=1, \end{aligned}$$(3.2)

with \(d(\theta ) \in (0,1/2)\). We also assume that the sequence \((u_n(\theta ))\) does not depend on \(\sigma ^2\);

- \(\theta ^*={}^t(\gamma ^*,\sigma ^{*2})\), \(\theta ^*\) is in the interior of \(\Theta \), with \(\sigma ^*>0\) an unknown real parameter and \(\gamma ^* \in {\mathbb {R}}^{p-1}\) an unknown vector of parameters.

A simple example of such a sequence \((u_n(\theta ))\) is \(u_n(\theta )=(\zeta (1+d))^{-1}\, n^{-1-d}\) for \(n \in {\mathbb {N}}^*\), with \(\theta =(d,\sigma ^2)\in (0,1/2)\times (0,\infty )\), where \(\zeta (\cdot )\) is the Riemann zeta function. In this case, one can take \(\Theta = [d_m,d_M] \times [\sigma _m^2,\sigma _M^2]\), with \(0<d_m<d_M<1/2\) and \(0<\sigma _m^2<\sigma _M^2\).
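As a quick illustration of this example, the coefficients can be computed with the standard library alone. The sketch below is an assumption-laden illustration rather than the authors' code: `zeta` and `lm_coeffs` are hypothetical helper names, the zeta function is approximated by a partial sum plus the first Euler–Maclaurin correction terms, and \(d=0.3\) is an arbitrary value.

```python
import math

def zeta(s, n_terms=1000):
    """Riemann zeta for s > 1: partial sum plus Euler-Maclaurin tail correction."""
    head = sum(k ** -s for k in range(1, n_terms + 1))
    big_n = float(n_terms)
    tail = big_n ** (1 - s) / (s - 1) - 0.5 * big_n ** -s + s * big_n ** (-s - 1) / 12
    return head + tail

def lm_coeffs(d, n):
    """u_1..u_n with u_k = k^(-1-d) / zeta(1 + d)."""
    z = zeta(1.0 + d)
    return [k ** (-1.0 - d) / z for k in range(1, n + 1)]

d, n = 0.3, 100_000  # illustrative values only
u = lm_coeffs(d, n)
partial = math.fsum(u)
# Integral approximation of the neglected tail sum_{k > n} u_k.
tail = n ** -d / (d * zeta(1.0 + d))

print(partial, tail, partial + tail)
```

The partial sums converge to 1 at the slow rate \(n^{-d}\), so the normalisation \(\sum _{n\ge 1} u_n(\theta )=1\) is only visible numerically once the tail is accounted for.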

For ease of reading, denote \(d^*=d(\theta ^*)\) the long-memory parameter of \((X_t)\). Denote also \(d^*_+=d^*+\varepsilon \) where \(\varepsilon \in (0,1/2-d^*)\) is chosen as small as possible. Since \((u_n(\theta ))_{n\in {\mathbb {N}}}\) satisfies (3.2), we know from Remark 2.1 that there exists \(C_a\) such that for any \(t\in {\mathbb {Z}}\),

We also deduce from (2.3) that there exists \(C_c>0\) satisfying

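In the sequel we will also denote for any \(\theta \in \Theta \) and \(t\in {\mathbb {Z}}\),

$$\begin{aligned} m_t(\theta )=\sum _{k=1}^\infty u_k(\theta )\, X_{t-k}. \end{aligned}$$(3.5)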

We want to estimate \(\theta ^*\) from an observed trajectory \((X_1,\ldots ,X_n)\), where \((X_t)\) is defined by (3.1). For such an autoregressive causal process, a Gaussian quasi-maximum likelihood estimator is really appropriate, since it is built on the assumption that \((\varepsilon _t)\) is Gaussian white noise, and it is well known that an affine function of \(\varepsilon _t\) is still a Gaussian random variable (see for example Bardet and Wintenberger 2009). It consists in considering the log-conditional density \(I_n(\theta )\) of \((X_1,\ldots ,X_n)\) when \((\varepsilon _t)\) is a standard Gaussian white noise and with \(X_t=\sigma \, \varepsilon _t + m_t(\theta )\), i.e.

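However, such a conditional log-likelihood is not a feasible statistic since \(m_t(\theta )\) depends on \((X_k)_{k\le 0}\), which is unobserved. Hence it is usual to replace \(m_t(\theta )\) by the following approximation:

$$\begin{aligned} {\widehat{m}}_t(\theta )=\sum _{k=1}^{t-1} u_k(\theta )\, X_{t-k}\quad \hbox {for any}\ t\in {\mathbb {N}}^*, \end{aligned}$$(3.7)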

with the convention \(\sum _{t=1}^0=0\). Then, a quasi conditional log-likelihood \({\widehat{I}}_n(\theta )\) can be defined:

If \(\Theta \) is a subset of \({\mathbb {R}}^p\) such that for all \(\theta \in \Theta \) there exists an almost surely stationary solution of the equation \(X_t=\sigma \, \varepsilon _t + m_t(\theta )\) for any \(t\in {\mathbb {Z}}\), we define the Gaussian quasi-maximum likelihood estimator (QMLE) of \(\theta \) by

$$\begin{aligned} {{\widehat{\theta }}}_n=\hbox {Arg}\!\max _{\theta \in \Theta }\, {\widehat{I}}_n(\theta ). \end{aligned}$$(3.9)

Note that a direct implication of the assumption that \((u_n(\theta ))\) does not depend on \(\sigma ^2\) is that if we denote \({{\widehat{\theta }}}_n={}^t({{\widehat{\gamma }}}_n,{{\widehat{\sigma }}}_n^2)\) the QMLE, then:

where, by convention, we write \(u_n( \theta )=u_n(\gamma )\). Hence, in this case of long-memory AR\((\infty )\), \({{\widehat{\gamma }}}_n\) is also a non-linear least-squares estimator of the parameter \(\gamma \).
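The least-squares reading of the QMLE can be sketched on a simulated FARIMA(0, d, 0) path. This is a minimal illustration under stated assumptions, not the authors' implementation: the truncated predictor is taken to be the sum of \(u_k(\theta )X_{t-k}\) over the observed past \(k=1,\ldots ,t-1\) (empty for \(t=1\)), the variance estimate is taken to be the mean squared residual at the minimiser, the path is simulated by truncating the moving-average expansion at 5000 terms, and the minimisation is a plain grid search. All function names are hypothetical.

```python
import numpy as np

def farima_ma_coeffs(d, n):
    """a_0..a_n of (1 - x)^(-d)."""
    a = np.empty(n + 1)
    a[0] = 1.0
    for k in range(1, n + 1):
        a[k] = a[k - 1] * (k - 1 + d) / k
    return a

def farima_ar_coeffs(d, n):
    """u_1..u_n such that X_t = sigma * eps_t + sum_k u_k X_{t-k}."""
    p = np.empty(n + 1)
    p[0] = 1.0
    for k in range(1, n + 1):
        p[k] = p[k - 1] * (k - 1 - d) / k
    return -p[1:]

def simulate_farima(n, d, sigma, rng, trunc=5000):
    """Approximate FARIMA(0, d, 0) path from a truncated MA(infinity) expansion."""
    a = farima_ma_coeffs(d, trunc)
    eps = rng.standard_normal(n + trunc)
    return sigma * np.convolve(eps, a, mode="valid")  # length n

def mean_squared_residual(x, d):
    """Mean of (X_t - m_hat_t(d))^2, with m_hat_t built from observed values only."""
    n = len(x)
    u = farima_ar_coeffs(d, n - 1)
    m_hat = np.zeros(n)                        # m_hat_1 = 0 (empty sum)
    m_hat[1:] = np.convolve(u, x)[: n - 1]     # m_hat_t = sum_{k<t} u_k x_{t-k}
    return np.mean((x - m_hat) ** 2)

def qmle_farima(x, grid=None):
    """Grid-search least squares in d; sigma^2 estimated by the minimal criterion."""
    if grid is None:
        grid = np.linspace(0.01, 0.49, 49)
    values = [mean_squared_residual(x, d) for d in grid]
    best = int(np.argmin(values))
    return float(grid[best]), float(values[best])

rng = np.random.default_rng(0)
x = simulate_farima(n=2000, d=0.3, sigma=2.0, rng=rng)
d_hat, sigma2_hat = qmle_farima(x)
print(d_hat, sigma2_hat)
```

A grid search is used only to keep the sketch dependency-free; any one-dimensional optimiser could replace it.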

3.2 Consistency and asymptotic normality of the estimator

The consistency of the QMLE is established under additional assumptions.

Theorem 3.1

Let \((X_t)_{t\in {\mathbb {Z}}}\) be a process defined by (3.1) and its assumptions. Assume also:

- for any \(n\in {\mathbb {N}}^*\), \(\theta \in \Theta \mapsto u_n(\theta )\) is a continuous function on \(\Theta \);

- if \(u_n(\theta )=u_n(\theta ')\) for all \(n\in {\mathbb {N}}^*\) with \(\theta =(\gamma ,\sigma ^2)\) and \(\theta '=(\gamma ',\sigma ^{2})\), then \(\theta =\theta '\).

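Let \({{\widehat{\theta }}}_n\) be the QMLE defined in (3.9). Then

$$\begin{aligned} {{\widehat{\theta }}}_n \begin{array}{c}{\mathop {\longrightarrow }\limits ^{a.s.}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\theta ^*. \end{aligned}$$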

This result extends to long-memory AR(\(\infty \)) processes the consistency of \({{\widehat{\theta }}}_n\) obtained in Bardet and Wintenberger (2009) for short-memory time series models, including ARMA, GARCH, APARCH and weakly dependent AR(\(\infty \)) processes, among others.

Remark 3.1

Regarding the long-memory linear process example, \(\theta ^*\) could also be estimated using Whittle’s estimator, which is constructed from the spectral density and second-order moments of the process. The consistency and asymptotic normality of this estimator were shown by Giraitis and Surgailis (1990).

Having shown the consistency, we would like to show the asymptotic normality of the QML estimator in the case of the long-memory one-sided linear processes considered above. This amounts to proving it for linear processes whose linear filter depends on a vector of parameters. This will be the case, for example, for FARIMA(p, d, q) processes, for which Boubacar Maïnassara et al. (2021) have already shown asymptotic normality in the more general case where \((\varepsilon _t)\) is weak white noise, i.e. in the case of weak FARIMA(p, d, q) processes.

As is typical when establishing the asymptotic normality of an M-estimator, we make assumptions about the differentiability of the sequence of functions \((u_n(\theta ))_{n\in {\mathbb {N}}^*}\) with respect to \(\theta \):

- \({\textbf {(A)}}\): Differentiability of \((u_n(\theta ))_{n\in {\mathbb {N}}^*}\): for any \(n \in {\mathbb {N}}^*\), the function \(u_n(\theta )\) is a \(\mathcal{C}^2(\Theta )\) function and for any \(\delta >0\), there exists \(C_\delta >0\) such that:

$$\begin{aligned} \sup _{n \in {\mathbb {N}}} \sup _{\theta \in \Theta }\Big \{ n^{1+d(\theta )-\delta } \, \Big ( \big | u_n(\theta ) \big |+ \big \Vert \partial _{\theta } u_n(\theta ) \big \Vert + \big \Vert \partial ^2_{\theta ^2} u_n(\theta ) \big \Vert \Big ) \Big \} \le C_\delta . \end{aligned}$$(3.10)

Moreover we assume that:

$$\begin{aligned} \hbox {for}\, v \in {\mathbb {R}}^{p-1 },\, \hbox {if for all}\ k\in {\mathbb {N}}^*,~~{}^t v \, \partial _{\gamma } u_{k}(\theta ^*)= 0\quad \Longrightarrow \quad v=0. \end{aligned}$$(3.11)

Example (called LM in the numerical applications): For the simple example where \((u_n(\theta ))\) is such that \(u_n(\theta )=\zeta (1+d)^{-1} n^{-1-d}\) for \(n \in {\mathbb {N}}^*\) with \(\theta =(d,\sigma ^2)\in (0,1/2)\times (0,\infty )\), we have:

Therefore (3.10) of (A) is satisfied with \(d(\theta )=d\) (note also that \(\delta =0\) is not possible). Moreover (3.11) is also clearly satisfied.
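As a brief sketch of why \(\delta =0\) must be excluded in this example (writing \(\zeta '\) for the derivative of \(\zeta \), a notation introduced here only for illustration), differentiating \(\log u_n(\theta )=-\log \zeta (1+d)-(1+d)\log n\) with respect to d gives

$$\begin{aligned} \partial _d u_n(\theta )=-\Big ( \log n+\frac{\zeta '(1+d)}{\zeta (1+d)}\Big )\, u_n(\theta ), \end{aligned}$$

so that \(n^{1+d}\, \big | \partial _d u_n(\theta ) \big |\) grows like \(\log n\) and is unbounded in n, whereas \(n^{1+d-\delta }\, \big | \partial _d u_n(\theta ) \big |\) remains bounded in n for every \(\delta >0\).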

Theorem 3.2

Consider the assumptions of Theorem 3.1 and also that \({\mathbb {E}}[\varepsilon _0^3]=0\) and \(\mu _4=\Vert \varepsilon _0\Vert _4^4<\infty \). Then with \({{\widehat{\theta }}}_n\) defined in (3.9), and if (A) holds,

where \(M^*=\frac{1}{\sigma ^{*2}} \,\sum _{k=1}^\infty \sum _{\ell =1}^\infty \partial _\gamma u_k((\gamma ^*,0))\, {}^t \big (\partial _\gamma u_\ell ((\gamma ^*,0)) \big )\, r_X(\ell -k)\).

It is clear that \({{\widehat{\theta }}}_n\) satisfies (3.12) in the case of the FARIMA processes [but this asymptotic normality has been already established under more general assumptions in Boubacar Maïnassara et al. (2021)] or in the case of the LM processes example. It is also worth noting that the central limit theorem is written in exactly the same way as the one obtained in Bardet and Wintenberger (2009), although the latter dealt only with weakly dependent AR\((\infty )\) processes.

Remark 3.2

As already mentioned, Boubacar Maïnassara et al. (2021) have also established the almost sure convergence and asymptotic normality of the QML estimator in the specific case of FARIMA processes, while allowing the white noise \((\varepsilon _t)\) to be a weak white noise (uncorrelated) rather than a strong white noise as in our work. This comes at the price of slightly stronger moment conditions: in Boubacar Maïnassara et al. (2021), a moment of order \(2+\nu \) is required for almost sure convergence and a moment of order \(4+\nu \) for asymptotic normality (with \(0<\nu <1\)). These conditions stem from their Assumption A4, which relies on strong mixing properties of \((\varepsilon _t)\).

Remark 3.3

Of course, in this specific context of linear long-memory processes, we would like to compare the asymptotic results for the convergence of the QML estimator with those obtained for Whittle’s estimator in Giraitis and Surgailis (1990). In that paper, more precisely in Theorem 4, the asymptotic covariance matrix of \({{\widehat{\gamma }}}_n \) is given by the spectral density \(f_\gamma \) and is written as \((4 \pi )^{-1}\, \int _{-\pi }^\pi \big (\partial _\gamma \log (f_\gamma (\lambda )) \big ) \, {}^t \big (\partial _\gamma \log (f_\gamma (\lambda )) \big )\, d\lambda \). However, Dahlhaus (1989) has shown that this asymptotic covariance matrix is also that of the maximum likelihood estimator in the case of a Gaussian process, the latter also being \((M^*)^{-1}\) if \((\varepsilon _t)\) is Gaussian white noise. This means that asymptotically, the QML and Whittle estimators behave identically. However, we will see a slight numerical advantage of the QMLE for observed trajectories whose size is not too large.

3.3 Case of a non-centered long-memory linear process

Finally, we can consider the special case where the process \((X_t)\) is not centered and estimate the location parameter \(\mu ^*={\mathbb {E}}[X_0]\). This means that \((X_t)\) can now be written as:

with the same assumptions on \(\theta \), on \((a_i(\theta ))\) and on \((\varepsilon _t)\).

First of all, the AR\((\infty )\) representation we used to define \((X_t)\) does not involve \(\mu ^*\), so the QML estimator cannot estimate this parameter. Indeed, if \((X_t)\) satisfies (3.13), then \((X_t)\) still satisfies (3.1), because \(\sum _{k=1}^\infty u_k(\theta )=1\) for any \(\theta \). Consequently, the QML estimate of the parameter \(\theta \) is not affected at all by the fact that \((X_t)\) is a non-centered process satisfying (3.13), and Theorems 3.1 and 3.2 remain valid. Note that the same applies to Whittle’s estimator, as already remarked in Dahlhaus (1989).

Concerning the estimation of the location parameter \({\mathbb {E}}[X_0]=\mu ^*\) for long-memory processes, this question has been the subject of numerous publications. Among the most important are Adenstedt (1974) and Samarov and Taqqu (1988) and the review article Beran (1993). We are dealing here with long-memory linear processes, and Adenstedt (1974) had already shown the most important point: we cannot expect a convergence rate in \(\sqrt{n}\), contrary to the other process parameters. In the case of the QML estimator, this can be explained by the fact that \(\mu ^*\) cannot appear in Eq. (3.1), contrary to what would happen for an ARMA process, for example.

More precisely, from these references, under the assumptions of Theorem 3.2 except that \((X_t)\) is defined by (3.13), we obtain:

and \(C_d=\int _0^\infty (u+u^2)^{d-1}\,du\). However, as we are considering linear processes here, Adenstedt (1974) proved by a Gauss–Markov type theorem that there exists a Best Linear Unbiased Estimator (BLUE) and provided its asymptotic efficiency. By adapting its writing, it will be enough to consider the matrix \(\Sigma (\theta )= \big ( r_X(|j-i|) \big )_{1\le i,j\le n}\) with \(\displaystyle r_X(k)= \hbox {Cov}\,(X_0,X_k)=\sum _{i=0}^{\infty }{a_i(\theta )\, a_{i+k}(\theta )} \) and define:

Then it is established in Adenstedt (1974) that \(\displaystyle \frac{\hbox {Var}\,\big ({{\widehat{\mu }}}_{BLUE}(\theta ^*) \big )}{\hbox {Var}\,\big ({\overline{X}}_n\big )} \begin{array}{c}{\mathop {\longrightarrow }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\frac{\pi d(\theta ^*) (2d(\theta ^*)+1)}{B\big (1-d(\theta ^*),1-d(\theta ^*)\big ) \sin \big (\pi d(\theta ^*)\big )}\), where B(a, b) is the usual Beta function, and this limit belongs to [0.98, 1] when \(0<d(\theta ^*)<1/2\). Therefore, since \({{\widehat{\mu }}}_{BLUE}(\theta ^*)\) is a linear process, we obtain:

Finally, estimating \(\theta ^*\) by \({{\widehat{\theta }}}_n\) makes the BLUE of \(\mu ^*\) usable in practice. Indeed, since \({{\widehat{\theta }}}_n\) is a consistent estimator of \(\theta ^*\) and since \(\theta \in (0,0.5) \mapsto \big ( {}^t \hbox {I\,1} \Sigma ^{-1}(\theta ) \hbox {I\,1} \big ) ^{-1} {}^t \hbox {I\,1} \Sigma ^{-1}(\theta )\) is a continuous function, we deduce by Slutsky’s lemma that:
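The relative efficiency of the sample mean with respect to the BLUE can be explored numerically in the FARIMA(0, d, 0) case. The sketch below is an illustration under assumptions and not the authors' code: it uses the classical closed-form autocovariance of a FARIMA(0, d, 0) process, builds the dense covariance matrix (reasonable only for moderate n), and takes the BLUE weights proportional to \(\Sigma ^{-1}\) applied to the vector of ones. The function names, \(d=0.25\) and \(n=400\) are arbitrary choices.

```python
import math
import numpy as np

def farima_autocov(d, sigma2, n):
    """r_X(0..n-1) for FARIMA(0, d, 0), via r(k) = r(k-1) (k - 1 + d) / (k - d)."""
    r = np.empty(n)
    r[0] = sigma2 * math.gamma(1 - 2 * d) / math.gamma(1 - d) ** 2
    for k in range(1, n):
        r[k] = r[k - 1] * (k - 1 + d) / (k - d)
    return r

def blue_weights(r):
    """Weights w with mu_hat = w @ x, and the Toeplitz covariance matrix Sigma."""
    n = len(r)
    lags = np.abs(np.subtract.outer(np.arange(n), np.arange(n)))
    sigma_mat = r[lags]
    w = np.linalg.solve(sigma_mat, np.ones(n))
    return w / w.sum(), sigma_mat

def efficiency(d, n, sigma2=1.0):
    """Var(BLUE) / Var(sample mean) for a path of length n."""
    w, sigma_mat = blue_weights(farima_autocov(d, sigma2, n))
    var_blue = w @ sigma_mat @ w
    var_mean = sigma_mat.sum() / n ** 2
    return var_blue / var_mean

def adenstedt_limit(d):
    """Limit pi d (2d + 1) / (B(1 - d, 1 - d) sin(pi d))."""
    beta = math.gamma(1 - d) ** 2 / math.gamma(2 - 2 * d)
    return math.pi * d * (2 * d + 1) / (beta * math.sin(math.pi * d))

eff = efficiency(d=0.25, n=400)
lim = adenstedt_limit(0.25)
print(eff, lim)
```

In practice the unknown \(\theta ^*\) would be replaced by \({{\widehat{\theta }}}_n\) when forming the weights, which is the plug-in step described above.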

4 Numerical applications

4.1 Numerical simulations

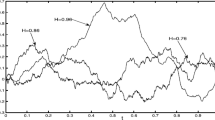

In this section, we report the results of Monte Carlo experiments conducted with different long-memory causal linear processes. More specifically, we considered:

- Three different processes generated from standard Gaussian white noise:

  1. A FARIMA(0, d, 0) process, denoted FARIMA, with parameters \(\sigma ^2=4\) and \(d=0.1\), 0.2, 0.3 and 0.4;

  2. A FARIMA(1, d, 0) process, denoted FARIMA(1,d,0), with parameters \(\sigma ^2=4\) and \(d=0.1\), 0.2, 0.3 and 0.4, and AR-parameter \(\alpha =0.5\) and 0.9;

  3. A long-memory causal affine process, denoted LM, defined by:

  $$\begin{aligned} X_t=a_0 \, \varepsilon _t + \zeta (1+d)^{-1} \, \sum _{k=1}^\infty k^{-1-d} \, X_{t-k}\quad \hbox { for any}\ t\in {\mathbb {Z}}, \end{aligned}$$

  with parameters \(\sigma ^2=4\) and \(d=0.1\), 0.2, 0.3 and 0.4.

- Several trajectory lengths: \(n=300,~1000,~3000\) and 10000.

- In the case of the FARIMA process, we compared the accuracy of the QMLE with that of the Whittle estimator, which also satisfies a central limit theorem [see Giraitis and Surgailis (1990)]. We denote \({{\widehat{\theta }}}_{W}=( {\widehat{d}}_W,{{\widehat{\sigma }}}_W^2)\) this estimator.

The results are presented in Tables 1, 2 and 3.
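For readers who wish to reproduce a comparison of this kind, a Whittle-type estimator for the FARIMA(0, d, 0) model can be sketched as follows. This is only one common discrete form of the Whittle contrast (profiled in \(\sigma ^2\), evaluated at the Fourier frequencies); the text does not specify which variant was used for the tables, so the code should be read as an assumption. The simulation by a truncated moving average, the grid search and all names are likewise illustrative choices.

```python
import numpy as np

def simulate_farima(n, d, sigma, rng, trunc=5000):
    """Approximate FARIMA(0, d, 0) path from a truncated MA(infinity) expansion."""
    a = np.empty(trunc + 1)
    a[0] = 1.0
    for k in range(1, trunc + 1):
        a[k] = a[k - 1] * (k - 1 + d) / k
    eps = rng.standard_normal(n + trunc)
    return sigma * np.convolve(eps, a, mode="valid")  # length n

def whittle_farima(x, grid=None):
    """Grid-search Whittle-type estimate of (d, sigma^2) for FARIMA(0, d, 0)."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    m = (n - 1) // 2
    lam = 2 * np.pi * np.arange(1, m + 1) / n          # Fourier frequencies in (0, pi)
    dft = np.fft.fft(x - x.mean())[1 : m + 1]
    per = np.abs(dft) ** 2 / (2 * np.pi * n)           # periodogram
    if grid is None:
        grid = np.linspace(0.01, 0.49, 49)
    best = None
    for d in grid:
        g = (2 * np.sin(lam / 2)) ** (-2 * d)          # spectral shape: f = sigma^2 g / (2 pi)
        ratio = np.mean(per / g)
        objective = np.log(ratio) + np.mean(np.log(g))  # profiled Whittle contrast
        if best is None or objective < best[0]:
            best = (objective, float(d), float(2 * np.pi * ratio))
    return best[1], best[2]

rng = np.random.default_rng(1)
x = simulate_farima(n=2000, d=0.3, sigma=2.0, rng=rng)
d_w, sigma2_w = whittle_farima(x)
print(d_w, sigma2_w)
```

Centering the series before the Fourier transform has no effect at the nonzero Fourier frequencies; it is kept only to make explicit that the estimate does not depend on the mean.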

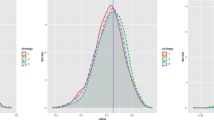

The results of Tables 1 and 3 show a weak effect of the value of d on the speed of convergence of the \({\widehat{d}}_n\) estimator and, more generally, of \({{\widehat{\theta }}}_n\), which may seem counter-intuitive: the stronger the long memory, the stronger the effect of the initial values should be. To investigate this further, we carried out new numerical studies using simulations of the FARIMA process for values of d approaching 0.5, i.e. \(d=0.43\), \(d=0.46\) and \(d=0.49\), and the results are shown in Table 4.

Conclusions of the simulations:

1. The results of the simulations show that the QML estimator \({{\widehat{\theta }}}_n\) is consistent and that the \(1/\sqrt{n}\) convergence rate is approximately attained for all processes considered.

2. The value of the parameter d seems to have little influence on the speed of convergence of the estimators as long as d does not get too close to 0.5. However, when d increases towards 0.5, although the rate of convergence still appears to be \(\sqrt{n}\), the asymptotic variance increases considerably.

3. When a short-memory component is added to the long-memory component, as in the case of a FARIMA(1, d, 0) process, the convergence of the estimator of d deteriorates, especially for short trajectories, but the rate of convergence still seems to be \(\sqrt{n}\). We can also see that the convergence deteriorates much more sharply than for the FARIMA(0, d, 0) process as d increases towards 0.5.

4. In the case of the FARIMA process, the comparison between the QML and Whittle estimators leads to very similar results for large n, but for \(n=300\) the QML estimator provides slightly more accurate estimates, in particular with a more centered distribution around the estimated value.

4.2 Application on real data

Here, we apply the QML estimator to an observed time series known to have long memory: the monthly temperatures (in degrees Celsius) for the northern hemisphere for the years 1854–1989, from the database held at the Climate Research Unit of the University of East Anglia, Norwich, England. The values are the temperature differences from the monthly average over the period 1950–1979. For our purposes, and given the general rise in temperatures due to climate change, it is preferable to work on detrended data, for example using simple linear regression, as had already been done in Beran (1994). Figure 2 shows the two time series:

These data have been studied in Beran (1994) (see for example p.179), and Whittle’s estimator of the long memory parameter for a FARIMA process applied to detrended data yielded \({\widehat{d}}_W \simeq 0.37\), while the observed path size is \(n=1632\).

We applied the QML estimator for the FARIMA(0, d, 0) process to this same series and, as we might have expected, the result was almost identical: \({\widehat{d}}_n \simeq 0.37\), with \({{\widehat{\sigma }}}_n \simeq 0.056\). We also applied the QML estimator for the LM process, and the result obtained is \({\widehat{d}}_n \simeq 0.44\), which is not very far from the previous value. This confirms the long-memory nature of this series, and the implementation of a goodness-of-fit test could enable us to go a little further in choosing between these two models or others (note that such a test has been implemented for FARIMA processes in Boubacar Maïnassara et al. (2023)).

5 Conclusion

In this paper, we have shown that the QML estimator, which offers excellent convergence results for parameters of classical short-memory time series such as GARCH, ARMA, ARMA-GARCH or APARCH processes, also gives excellent results for long-memory time series. This had already been established for FARIMA processes, even with weak white noise, in Boubacar Maïnassara et al. (2021). We generalize this to all long-memory linear processes, offering a very interesting alternative to Whittle estimation, both from a theoretical and a numerical point of view.

6 Proofs

6.1 Proofs of the main results

Proof of Proposition 2.1

Using B the lag or backshift linear operator on \({\mathbb {R}}^{{\mathbb {Z}}}\), we can denote \(X=S(B)\, \varepsilon \), where \(X=(X_t)_{t\in {\mathbb {Z}}}\) and \(\varepsilon =(\varepsilon _t)_{t\in {\mathbb {Z}}}\) and \(S(B)=\sum _{i=0}^\infty a_i \, B^{i}\). We know that there exists a linear operator denoted \(S^{-1}\) such that \(\varepsilon =S^{-1}(B)\, X\). As a consequence, \(X=a_0\, \varepsilon +(S(B)-a_0\, I)\,\varepsilon =a_0\, \varepsilon +(S(B)-a_0\, I)S^{-1}(B)\, X=a_0\, \varepsilon +(I-a_0\, S^{-1}(B))\, X\), which is the affine causal representation of X.

Let \(X_t=a_0\, \varepsilon _t+\sum _{i=1}^\infty u_i \, X_{t-i}\). Then, for any \(t\in {\mathbb {Z}}\),

As a consequence, denoting \(u_0=-1\), for any \(k \in {\mathbb {N}}^*\),

Finally, since the convergence radius of the power series \(\sum _{\ell =0}^\infty a_\ell \, z^\ell \) is 1 from asymptotic expansion (2.1), we deduce that for any \(z\in {\mathbb {C}}\), \(|z|<1\),

Now, we are going to use a Karamata Tauberian theorem as it is stated in Corollary 1.7.3 of Bingham et al. (1987):

Fix \(\rho >0\) and let L be a slowly varying function. Then if \((\alpha _n)_{n \in {\mathbb {N}}}\) is a sequence of nonnegative real numbers and the power series \(A(s)=\sum _{n=0}^\infty \alpha _n\, s^n\) converges for any \(s\in [0,1)\), then

Note that this result also holds if there exists \(N_0\in {\mathbb {N}}\) such that \((\alpha _n)_{n \ge N_0}\) is a sequence of nonnegative real numbers. We first apply (6.3) to \((\alpha _n)=(a_n)\). Indeed, from (2.1) and with \(\rho =d\), there exists \(N_0\in {\mathbb {N}}\) such that \((a_n)_{n \ge N_0}\) is a sequence of nonnegative real numbers and \(\sum _{k=0}^n a_k \begin{array}{c}{\mathop {\sim }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}L(n)\, n^\rho \) with \(L(\cdot )=\frac{L_a(\cdot )}{d}\). Therefore, we deduce that

Therefore, from (6.2), the following expansion can be deduced:

On the other hand, if we consider (6.2) when \(s\rightarrow 1^{-}\), \(\sum _{\ell =0}^\infty a_\ell \, s^\ell \rightarrow \infty \) since \((a_n)\) satisfies (2.1). As a consequence,

We deduce that \(u_n \begin{array}{c}{\mathop {\longrightarrow }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}0\) and the sequence \((U_n)_{n\in {\mathbb {N}}}\) can be defined where we denote \(U_n=\sum _{k=n+1}^\infty u_k\). But since \(\sum _{n=0}^\infty u_n=0\), for any \(s\in [0,1]\),

Using (6.5), we deduce

From (6.1), we also have for any \(n \in {\mathbb {N}}\)

Since \((a_n)\) satisfies (2.1), we know that there exists \(N_0\) such that \(a_n>0\) and \(\sum _{\ell =0}^n a_\ell >0\) for any \(n \ge N_0\). Therefore we know from (6.6) that for any \(n \ge N_0\), \(\sum _{k=0}^n u_k<0\) and thus \(U_n>0\) since \(\sum _{k=0}^\infty u_k=0\). Thus we can apply (6.3) to \((\alpha _n)=(U_n)\) with \(\rho =1-d\) and this yields

Since for \(n\ge N_0\), \(u_n>0\), we deduce that \((U_n)\) is a positive decreasing sequence for \(n\ge N_0\). Using again Bingham et al. (1987), we deduce that

Finally, since \((U_n)\) is a positive decreasing sequence for \(n \ge N_0\), we deduce:

and this completes the proof. \(\square \)

Proof of Theorem 3.1

In the sequel, we will denote for any \(t\in {\mathbb {N}}^*\) and \(\theta \in \Theta \),

For a random variable Z and \(r\ge 1\), denote \(\Vert Z\Vert _r=\big ({\mathbb {E}}\big [ |Z|^r \big ]\big )^{1/r}\).

1. Firstly we prove some useful inequalities.

From the Cauchy-Schwarz Inequality, for any \(\theta \in \Theta \) and \(t\in {\mathbb {Z}}\),

since \((u_k)\) follows (3.2), \(\Theta \) is a compact subset, \(\theta \in \Theta \mapsto u_k(\theta )\) is a continuous function for any \(k\ge 1\) and \(d(\theta )\in (0,1/2)\).

Using the same inequalities we also obtain that there exists \(C_2>0\) such that for any \(t\ge 1\),

with \(0< {\underline{d}} < \inf _{\theta \in \Theta } d(\theta )\) from the condition (3.2) on \((u_n(\theta ))\).

Finally with

we obtain from the previous bounds and \(\sigma ^2 \in [\sigma ^2_m,\sigma ^2_M]\) where \(0<\sigma ^2_m< \sigma ^2_M\),

To conclude these preliminary bounds, using the Cauchy-Schwarz and triangle inequalities, there exists \(C>0\) such that for \(t\ge 1\),

2. From its AR\((\infty )\) representation (2.1), and since \(\Vert X_0\Vert _2<\infty \), \((X_t)_{t\in {\mathbb {Z}}}\) is a second-order ergodic stationary sequence (see Theorem 36.4 in Billingsley 1995). Moreover, for any \(\theta \in \Theta \), there exists \(H^q_\theta :{\mathbb {R}}^{\mathbb {N}}\rightarrow {\mathbb {R}}\) such that

with also \({\mathbb {E}}\big [\big | q_t(\theta ) \big |\big ]<\infty \) from (6.10). Then, using Theorem 36.4 in Billingsley (1995), \(\big (q_t(\theta ) \big )_{t\in {\mathbb {Z}}}\) is an ergodic stationary sequence for any \(\theta \in \Theta \) and therefore

with \(I_n(\theta )\) defined in (3.6). Moreover, since \(\Theta \) is a compact set and since we have \({\mathbb {E}}\big [\sup _{\theta \in \Theta } \big | q_t(\theta ) \big |\big ]<\infty \) from (6.10), using Theorem 2.2.1 in Straumann (2005), we deduce that \(\big (q_t(\theta ) \big )_{t\in {\mathbb {Z}}}\) also follows a uniform ergodic theorem and we obtain

Now, using \({\widehat{I}}_n(\theta )\) defined in (3.8), we can write

In Corollary 1 of Kounias and Weng (1969), it is established that for an \({\mathbb {L}}^1\) sequence of r.v. \((Z_t)_t\) and a sequence of positive real numbers \((b_n)_{n\in {\mathbb {N}}^*}\) such that \(b_n \begin{array}{c}{\mathop {\longrightarrow }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\infty \), the condition \(\sum _{t=1}^\infty \frac{{\mathbb {E}}\big [ |Z_t| \big ]}{b_t} <\infty \) implies \(\frac{1}{b_n} \, \sum _{t=1}^n Z_t \begin{array}{c}{\mathop {\longrightarrow }\limits ^{a.s.}} \\ {\scriptstyle n\rightarrow \infty }\end{array}0\).
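As a purely numerical illustration of this corollary with \(b_t=t\), the sketch below uses a hypothetical sequence \(Z_t=|N_t|/t^{\delta }\) with i.i.d. standard Gaussian \(N_t\) and an arbitrary \(\delta >0\), so that \({\mathbb {E}}[|Z_t|]/t\) is summable. The exponent \(\delta \) and the function names are assumptions made for the example; they are not the bound (6.11) of the paper.

```python
import random


def running_mean_of_decaying_noise(n, delta, seed=0):
    """Return (1/n) * sum_{t=1}^n Z_t with Z_t = |N(0,1)| / t**delta (hypothetical Z_t)."""
    rng = random.Random(seed)
    total = 0.0
    for t in range(1, n + 1):
        total += abs(rng.gauss(0.0, 1.0)) / t ** delta
    return total / n


def weighted_moment_sum(n, delta):
    """Partial sum of t**(-1-delta), i.e. E|Z_t| / b_t with b_t = t up to the constant E|N(0,1)|."""
    return sum(t ** (-1.0 - delta) for t in range(1, n + 1))


if __name__ == "__main__":
    delta = 0.3  # arbitrary positive exponent chosen for the illustration
    for n in (100, 1_000, 10_000):
        # The first column shrinks with n while the second stays bounded.
        print(n, running_mean_of_decaying_noise(n, delta), weighted_moment_sum(n, delta))
```

The partial sums of \({\mathbb {E}}[|Z_t|]/t\) stay bounded, which is the summability condition of the corollary, and the normalized sums decrease towards 0 as \(n\) grows.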

Therefore, with \(b_t=t\) and \(Z_t=\sup _{\theta \in \Theta } \big | q_t(\theta )-{\widehat{q}}_t(\theta ) \big |\) for \(t\in {\mathbb {N}}^*\), using the inequality (6.11),

Then, using (6.13) and (6.12), we deduce:

3. Finally, the same argument already detailed in the proof of Theorem 1 of Bardet and Wintenberger (2009) is used: \(\theta \in \Theta \mapsto {\mathbb {E}}\big [ q_0(\theta )\big ]\) has a unique maximum attained at \(\theta =\theta ^*\in \Theta \) because it is assumed that if \(u_n(\theta )=u_n(\theta ')\) for all \(n\in {\mathbb {N}}^*\) with \(\theta =(\gamma ,\sigma ^2)\) and \(\theta '=(\gamma ',\sigma ^{2})\), then \(\theta =\theta '\). This property and the uniform almost sure consistency (6.14) lead to \({{\widehat{\theta }}}_n \begin{array}{c}{\mathop {\longrightarrow }\limits ^{a.s.}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\theta ^*\). \(\square \)
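To make the objects of this proof concrete, here is a minimal sketch of a QML computation on a simulated path. It rests on several assumptions that are not taken from the displayed formulas of the paper: the process is a FARIMA\((0,d,0)\) chosen as a hypothetical example, \({\widehat{m}}_t(\theta )\) is taken to be the AR\((\infty )\) predictor truncated to the observed past, the contrast is the Gaussian quasi-log-likelihood with \(\sigma ^2\) profiled out, and the maximization is a crude grid search. All function names are illustrative.

```python
import math
import random


def ma_coeffs(d, n):
    """a_0..a_n of (1-B)^(-d), so that X_t = sum_l a_l * eps_{t-l}."""
    a = [1.0] * (n + 1)
    for j in range(1, n + 1):
        a[j] = a[j - 1] * (j - 1 + d) / j
    return a


def ar_coeffs(d, n):
    """u_1..u_n with X_t = eps_t + sum_{k>=1} u_k X_{t-k}, i.e. u(z) = 1 - (1-z)^d."""
    u = [0.0] * (n + 1)
    pi = 1.0
    for k in range(1, n + 1):
        pi *= (k - 1 - d) / k
        u[k] = -pi
    return u


def simulate(d, n, lags=500, seed=1):
    """Approximate FARIMA(0,d,0) path from an MA expansion truncated at `lags`."""
    rng = random.Random(seed)
    a = ma_coeffs(d, lags)
    eps = [rng.gauss(0.0, 1.0) for _ in range(n + lags)]
    return [sum(a[l] * eps[t - l] for l in range(lags + 1)) for t in range(lags, n + lags)]


def profiled_contrast(x, d):
    """Gaussian quasi-log-likelihood per observation, with sigma^2 profiled out."""
    n = len(x)
    u = ar_coeffs(d, n)
    rss = 0.0
    for t in range(n):
        # Truncated predictor: only the observed past x[0..t-1] is used.
        m_hat = sum(u[k] * x[t - k] for k in range(1, t + 1))
        rss += (x[t] - m_hat) ** 2
    sigma2 = rss / n
    return -0.5 * (math.log(sigma2) + 1.0), sigma2


def qmle_grid(x, grid):
    """Grid-search maximizer of the profiled contrast; returns (d_hat, sigma2_hat)."""
    best = max(grid, key=lambda d: profiled_contrast(x, d)[0])
    return best, profiled_contrast(x, best)[1]


if __name__ == "__main__":
    path = simulate(d=0.3, n=400)
    grid = [0.02 * i for i in range(1, 25)]
    print(qmle_grid(path, grid))
```

A grid search is used only to keep the sketch dependency-free; any numerical optimizer over a compact parameter set would play the same role.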

Proof of Theorem 3.2

As a preamble to this proof, since \({{\widehat{\theta }}}_n \begin{array}{c}{\mathop {\longrightarrow }\limits ^{a.s.}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\theta ^*\) by Theorem 3.1, we will be able to reduce the \(\Theta \) domain. Let \({{\widetilde{\Theta }}} \subset \Theta \) be a compact set of \({\mathbb {R}}^p\) such that:

Note that \(2d(\theta ^*)-1/2<d(\theta ^*)\), so such a set \({{\widetilde{\Theta }}}\) always exists.

In the spirit of (3.9), let us define

Using Theorem 3.1, it is clear that \({{\widetilde{\theta }}}_n \begin{array}{c}{\mathop {\longrightarrow }\limits ^{a.s.}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\theta ^*\). Moreover, for all \(x=(x_1,\ldots ,x_p) \in {\mathbb {R}}^p\),

Since \({{\widehat{\theta }}}_n \begin{array}{c}{\mathop {\longrightarrow }\limits ^{a.s.}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\theta ^*\) by Theorem 3.1 and therefore \({\mathbb {P}}\big ( {{\widehat{\theta }}}_n \notin {{\widetilde{\Theta }}}\big ) \begin{array}{c}{\mathop {\longrightarrow }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}0\) because \(\theta ^* \in {{\widetilde{\Theta }}}\), it is clear that the asymptotic distribution of \(\sqrt{n} \, \big ({{\widehat{\theta }}}_n -\theta ^* \big )\) is the same as the one of \(\sqrt{n} \, \big ({{\widetilde{\theta }}}_n -\theta ^* \big )\). Consequently, throughout the rest of the proof, \(\Theta \) will be replaced by \({{\widetilde{\Theta }}}\) and \({{\widehat{\theta }}}_n\) by \({{\widetilde{\theta }}}_n\).

In the sequel, for \(\theta \in {{\widetilde{\Theta }}}\), we will denote \(d=d(\theta )-\varepsilon \) and \(d^*_+=d^*+\varepsilon \) where \(d^*=d(\theta ^*)\) is the unknown long-memory parameter, and we choose \(\varepsilon >0\) such that \(\varepsilon \le \frac{1}{6} \, \big ( 1-4d(\theta )+2d(\theta ^*) \big )\). Hence, from the definition of \({{\widetilde{\Theta }}}\), \(1-4d(\theta )+2d(\theta ^*)>0\) and

From Assumption (A), for any \(\theta \in {{\widetilde{\Theta }}}\) and \(t\in {\mathbb {Z}}\), \(\partial _\theta m_t(\theta )\) and \(\partial ^2_\theta m_t(\theta )\) a.s. exist with

The same holds for \(\partial _\theta {\widehat{m}}_t(\theta )\), \(\partial _\theta {{\widetilde{m}}}_t(\theta )\), \(\partial ^2_\theta {\widehat{m}}_t(\theta )\) and \(\partial ^2_\theta {{\widetilde{m}}}_t(\theta )\). However, note that for any \(\theta \in {{\widetilde{\Theta }}}\), \((m_t(\theta ))_t\), \((\partial _\theta m_t(\theta ))_t\) and \((\partial ^2_{\theta ^2} m_t(\theta ))_t\) are stationary processes while \(({\widehat{m}}_t(\theta ))_t\), \(({{\widetilde{m}}}_t(\theta ))_t\) and their derivatives are not.

Due to these results, for any \(\theta \in {{\widetilde{\Theta }}}\):

and the same for \(\partial _\theta {\widehat{q}}_t(\theta )\) by replacing \(m_t(\theta )\) by \({\widehat{m}}_t(\theta )\). Once again for any \(\theta \in {{\widetilde{\Theta }}}\), \((\partial _\theta q_t(\theta ))_t\) is a stationary process, while \((\partial _\theta {\widehat{q}}_t(\theta ))_t\) is not. Finally, for all \(\theta \in {{\widetilde{\Theta }}}\), define

Following the same reasoning it can be shown that for any \(t \in {\mathbb {Z}}\), \(\theta \in {{\widetilde{\Theta }}} \mapsto q_t(\theta )\) and \(\theta \in {{\widetilde{\Theta }}} \mapsto {\widehat{q}}_t(\theta )\) are a.s. \(\mathcal{C}^2({{\widetilde{\Theta }}})\) functions and therefore the random matrices \( \partial ^2 _{\theta ^2} L_n(\theta )\) and \( \partial ^2 _{\theta ^2} {\widehat{L}}_n(\theta )\) a.s. exist.

The proof of Theorem 3.2 is divided into three parts:

1. First, as was already established in Bardet and Wintenberger (2009), \((\partial _\theta q_t(\theta ^*))_t\) is a stationary ergodic martingale difference since, with the \(\sigma \)-algebra \(\mathcal{F}_t =\sigma \big \{ (X_{t-k})_{k\ge 1} \big \}\),

$$\begin{aligned} {\mathbb {E}}\Big [ \partial _\theta q_t(\theta ^*) ~\big | \, \mathcal{F}_t \Big ]=0, \end{aligned}$$

because \((X_t)\) is a causal process, \(\varepsilon _t\) is independent of \(\mathcal{F}_t\) and \({\mathbb {E}}\big [\varepsilon _0 ^2\big ]=1\). Moreover, \({\mathbb {E}}\big [ \big \Vert \partial _{\theta } q_0(\theta ^*) \big \Vert ^2 \big ]<\infty \) from the same arguments as in the proof of the consistency of the estimator. Hence the central limit theorem for stationary ergodic martingale differences (Theorem 18.3 of Billingsley 1968) can be applied and

$$\begin{aligned} \sqrt{n} \, \partial _\theta L_n(\theta ^*) \begin{array}{c}{\mathop {\longrightarrow }\limits ^{\mathcal{L}}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\mathcal{N} \big (0 \,, \, G^*\big ), \end{aligned}$$(6.17)

since \({\mathbb {E}}\big [ \partial _\theta q_0(\theta ^*) \big ] =0\) and where \(G^*:={\mathbb {E}}\Big [ \partial _\theta q_0(\theta ^*) \times {}^t \big ( \partial _\theta q_0(\theta ^*)\big )\Big ]\).

2. We are going to prove that:

$$\begin{aligned} n \, {\mathbb {E}}\Big [\sup _{\theta \in {{\widetilde{\Theta }}}}\big \Vert \partial _\theta \widehat{L}_n(\theta )-\partial _\theta {L}_n(\theta )\big \Vert ^2 \Big ] \begin{array}{c}{\mathop {\longrightarrow }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}0. \end{aligned}$$(6.18)

Using a line of reasoning already used in Beran and Schützner (2009, Lemmas 1 and 2) and Bardet (2023, Lemma 5.1 3), and derived from Parzen (1999, Theorem 3.B), there exists \(C>0\) such that:

$$\begin{aligned} {\mathbb {E}}\Big [\sup _{\theta \in {{\widetilde{\Theta }}}}\big \Vert \partial _\theta \widehat{L}_n(\theta )-\partial _\theta {L}_n(\theta )\big \Vert ^2 \Big ] \le C \, \sup _{\theta \in {{\widetilde{\Theta }}}} {\mathbb {E}}\Big [ \big \Vert \partial _\theta \widehat{L}_n(\theta )-\partial _\theta {L}_n(\theta )\big \Vert ^2 \Big ], \end{aligned}$$

because we assumed that \(\theta \mapsto u_n(\theta )\) is a \(\mathcal{C}^{p+1}({{\widetilde{\Theta }}})\) function and therefore \(\partial _\theta \widehat{L}_n(\theta )-\partial _\theta {L}_n(\theta )\) is a \(\mathcal{C}^{p}({{\widetilde{\Theta }}})\) function. Then, for \(\theta \in {{\widetilde{\Theta }}}\),

$$\begin{aligned} \partial _{\gamma } q_t(\theta )- \partial _{\gamma } {\widehat{q}}_t(\theta )=\frac{1}{\sigma ^2} \Big ( \partial _{\gamma } {{\widetilde{m}}}_t(\theta ) \, \big (X_t -m_t(\theta )\big ) +\partial _{\gamma } {\widehat{m}}_t(\theta ) \, {{\widetilde{m}}}_t(\theta ) \Big ). \end{aligned}$$

As a consequence, for \(\theta \in {{\widetilde{\Theta }}}\),

$$\begin{aligned}{} & {} \!\! \!\! \! n \,{\mathbb {E}}\big [ \big \Vert \partial _\theta \widehat{L}_n(\theta )-\partial _\theta {L}_n(\theta )\big \Vert ^2 \big ]\nonumber \\= & {} \frac{1}{n \, \sigma ^4} \Big ( 2 \!\!\!\! \sum _{1 \le s<t \le n} \!\!\!\! {\mathbb {E}}\Big [ {}^t \Big ( \partial _{\gamma } {{\widetilde{m}}}_t(\theta ) \, \big (X_t -m_t(\theta )\big ) +\partial _{\gamma } {\widehat{m}}_t(\theta ) \, {{\widetilde{m}}}_t(\theta ) \Big )\, \nonumber \\{} & {} \quad \times \Big ( \partial _{\gamma } {{\widetilde{m}}}_s(\theta ) \, \big (X_s -m_s(\theta )\big ) +\partial _{\gamma } {\widehat{m}}_s(\theta ) \, {{\widetilde{m}}}_s(\theta ) \Big )\Big ] \nonumber \\{} & {} \quad + \sum _{t=1}^n {\mathbb {E}}\Big [{}^t \Big ( \partial _{\gamma } {{\widetilde{m}}}_t(\theta ) \, \big (X_t -m_t(\theta )\big ) +\partial _{\gamma } {\widehat{m}}_t(\theta ) \, {{\widetilde{m}}}_t(\theta ) \Big )\, \nonumber \\{} & {} \quad \times \Big ( \partial _{\gamma } {{\widetilde{m}}}_t(\theta ) \, \big (X_t -m_t(\theta )\big ) +\partial _{\gamma } {\widehat{m}}_t(\theta ) \, {{\widetilde{m}}}_t(\theta ) \Big ) \Big ] \Big )\nonumber \\= & {} \frac{1}{n \, \sigma ^4} \big (I_1+I_2 \big ). \end{aligned}$$(6.19)

Concerning \(I_1\), since \(X_t=\sigma ^*\, \varepsilon _t+m_t(\theta ^*)\) and since \(\varepsilon _t\) is independent of all the other terms because \(s<t\), we deduce that \( \big (X_t -m_t(\theta )\big )\) can be replaced by \(n_t(\theta ,\theta ^*)= \big (m_t(\theta ^*)-m_t(\theta ) \big )\). As a consequence, after its expansion, \(I_1\) can be written as a sum of 6 expectations of products of 4 linear combinations of \((\varepsilon _t)\).
Moreover, if for \(j=1,\ldots ,4\), \(Y^{(j)}_{t_j}=\sum _{k=0}^\infty \beta ^{(j)}_k \, \xi _{t_j-k}\), where \(t_1\le t_2\le t_3\le t_4\), \((\beta ^{(j)}_n)_{n\in {\mathbb {N}}}\) are 4 real sequences and \((\xi _t)_{t\in {\mathbb {Z}}}\) is a white noise such that \({\mathbb {E}}[\xi _0^2]=1\) and \({\mathbb {E}}[\xi _0^4]=\mu _4< \infty \), then:

$$\begin{aligned} {\mathbb {E}}\big [ \prod _{j=1}^4 Y^{(j)}_{t_j} \big ]=(\mu _4-3)\, \sum _{k=0}^\infty \beta ^{(1)}_k \beta ^{(2)}_{t_2-t_1+k}\beta ^{(3)}_{t_3-t_1+k}\beta ^{(4)}_{t_4-t_1+k} \\ +{\mathbb {E}}\big [Y^{(1)}_{t_1} Y^{(2)}_{t_2}\big ] \, {\mathbb {E}}\big [Y^{(3)}_{t_3} Y^{(4)}_{t_4}\big ] + {\mathbb {E}}\big [Y^{(1)}_{t_1} Y^{(3)}_{t_3}\big ] \, {\mathbb {E}}\big [Y^{(2)}_{t_2} Y^{(4)}_{t_4}\big ] \\ + {\mathbb {E}}\big [Y^{(1)}_{t_1} Y^{(4)}_{t_4}\big ] \, {\mathbb {E}}\big [ Y^{(2)}_{t_2} Y^{(3)}_{t_3} \big ]. \end{aligned}$$

Now, consider for example \(Y^{(1)}_{t_1}=\partial _{\gamma } {{\widetilde{m}}}_s(\theta )\), \( Y^{(2)}_{t_2}= \big (X_s -m_s(\theta )\big )\), \(Y^{(3)}_{t_3}=\partial _{\gamma } {\widehat{m}}_t(\theta ) \) and \(Y^{(4)}_{t_4}= {{\widetilde{m}}}_t(\theta )\). From Lemma 6.1, for each of the sequences \((\beta ^{(j)}_k)_{k\in {\mathbb {N}}}\) involved, there exists \(C>0\) such that for any \(k\in {\mathbb {N}}\):

$$\begin{aligned} \big | \beta ^{(1)}_k \big | \le \frac{C}{s^d \, (k+1)^{1-d^*_+}}, ~\big | \beta ^{(4)}_k \big | \le \frac{C}{t^d \, (k+1)^{1-d^*_+}} \\ \hbox {and}~\max \big (\big | \beta ^{(2)}_k \big |\,, \, \big | \beta ^{(3)}_k \big | \big )\le \frac{C}{ (k+1)^{1-d^*_+}}. \end{aligned}$$

As a consequence, with \(s<t\),

$$\begin{aligned} \Big | (\mu _4-3)\, \sum _{k=0}^\infty \beta ^{(1)}_k \beta ^{(2)}_{k}\beta ^{(3)}_{t-s+k}\beta ^{(4)}_{t-s+k} \Big | \le \frac{C}{s^d t^d} \, \sum _{k=1}^\infty \frac{1}{k^{2-2d^*_+}} \, \frac{1}{(k+t-s)^{2-2d^*_+}}\nonumber \\ \le \frac{C}{s^d t^d(t-s)^{2-2d^*_+}}. \end{aligned}$$(6.20)

We obtain the same bound for any quadruple product appearing in \(I_1\). Consider now the other terms of \(I_1\). Using Lemmas 6.3 and 6.4, we obtain for any \(\theta \in {{\widetilde{\Theta }}}\) and \(s<t\):

$$\begin{aligned} \bullet \quad \Big |{\mathbb {E}}\big [Y^{(1)}_{t_1} Y^{(2)}_{t_2}\big ] \, {\mathbb {E}}\big [Y^{(3)}_{t_3} Y^{(4)}_{t_4}\big ]\Big |= & {} \Big |{\mathbb {E}}\big [\partial _{\gamma } {{\widetilde{m}}}_s(\theta )\, \big (X_s -m_s(\theta )\big ) \big ] \, {\mathbb {E}}\big [ \partial _{\gamma } {\widehat{m}}_t(\theta ) \, {{\widetilde{m}}}_t(\theta )\big ]\Big | \\= & {} \Big | {\mathbb {E}}\big [\partial _{\gamma } {{\widetilde{m}}}_s(\theta )\, n_s(\theta ,\theta ^*)\big ) \big ]\Big | \, \Big |{\mathbb {E}}\big [\partial _{\gamma } {\widehat{m}}_t(\theta ) \, {{\widetilde{m}}}_t(\theta )\big ]\Big | \\\le & {} C\, \frac{1}{s^{1+d-2d^*_+}}\, \frac{1}{t^{1+d-2d^*_+}}; \\ \bullet \quad \Big |{\mathbb {E}}\big [Y^{(1)}_{t_1} Y^{(3)}_{t_3}\big ] \, {\mathbb {E}}\big [Y^{(2)}_{t_2} Y^{(4)}_{t_4}\big ]\Big |= & {} \Big |{\mathbb {E}}\big [\partial _{\gamma } {{\widetilde{m}}}_s(\theta )\, \partial _{\gamma } {\widehat{m}}_t(\theta ) \big ] \, {\mathbb {E}}\big [\big (X_s -m_s(\theta )\big ) \, {{\widetilde{m}}}_t(\theta )\big ]\Big | \\= & {} \Big | {\mathbb {E}}\big [\partial _{\gamma } {{\widetilde{m}}}_s(\theta )\,\partial _{\gamma } {\widehat{m}}_t(\theta ) \big ) \big ]\Big | \, \Big |{\mathbb {E}}\big [ n_s(\theta ,\theta ^*)\, {{\widetilde{m}}}_t(\theta )\big ]\Big | \\&{\le }&C\, \Big ( \frac{1}{s^dt^{1-2d^*_+}}{+} \frac{1}{s^{1+2d-2d^*_+}}\Big )\,\Big ( \frac{1}{t^{1+d} s^{-2d^*_+}} {+}\frac{1}{t^{1+2d-2d^*_+}} \Big )\\ \bullet \quad \Big |{\mathbb {E}}\big [Y^{(1)}_{t_1} Y^{(4)}_{t_4}\big ] \, {\mathbb {E}}\big [Y^{(2)}_{t_2} Y^{(3)}_{t_3}\big ]\Big |= & {} \Big |{\mathbb {E}}\big [\partial _{\gamma } {{\widetilde{m}}}_s(\theta )\, {{\widetilde{m}}}_t(\theta ) \big ] \, {\mathbb {E}}\big [\big (X_s -m_s(\theta )\big ) \, \partial _{\gamma } {\widehat{m}}_t(\theta ) \big ]\Big | \\= & {} \Big | {\mathbb {E}}\big [\partial _{\gamma } {{\widetilde{m}}}_s(\theta )\, {{\widetilde{m}}}_t(\theta ) \big ] \Big | \, \Big |{\mathbb {E}}\big [ 
n_s(\theta ,\theta ^*)\, \partial _{\gamma } {\widehat{m}}_t(\theta )\big ]\Big | \\\le & {} C\, \frac{1}{s^dt^{1-2d^*_++d}}\,\frac{1}{(t-s)^{1-2d^*_+} } \end{aligned}$$

Using these inequalities as well as (6.20), we deduce from classical comparisons between sums and integrals:

$$\begin{aligned}{} & {} \sum _{1 \le s<t \le n} \!\! {\mathbb {E}}\Big [ {}^t \Big ( \partial _{\gamma } {{\widetilde{m}}}_t(\theta ) \, \big (X_t -m_t(\theta )\big ) \partial _{\gamma } {\widehat{m}}_s(\theta ) \, {{\widetilde{m}}}_s(\theta ) \Big )\Big ] \\{} & {} \quad \le C \!\! \sum _{1 \le s<t \le n} \!\! \frac{ \mu _4-3}{s^dt^d(t-s)^{2-2d^*_+}}{+}\Big ( \frac{1}{s^dt^{1-2d^*_+}}{+} \frac{1}{s^{1+2d-2d^*_+}}\Big )\,\Big ( \frac{1}{t^{1+d} s^{-2d^*_+}} +\frac{1}{t^{1+2d-2d^*_+}} \Big ) \\{} & {} \qquad +\frac{1}{s^{1+d-2d^*_+}}\, \frac{1}{t^{1+d-2d^*_+}} +\frac{1}{s^dt^{1-2d^*_++d}}\,\frac{1}{(t-s)^{1-2d^*_+} } \\{} & {} \quad {\le } C \Big ( \int _1^n x^{2d^*_+-1-2d} dx+\int _1^n \frac{dx}{x^{2+d-2d^*_+}} \int _1 ^x \frac{dy}{y^{d-2d^*_+}} \\{} & {} \qquad +\int _1^n \frac{dx}{x^{2+2d-4d^*_+}} \int _1 ^x \frac{dy}{y^{d}}+\int _1^n \frac{dx}{x^{1+d}} \int _1 ^x \frac{dy}{y^{1+2d-4d^*_+}} \\{} & {} \qquad +\int _1^n \frac{dx}{x^{1+2d-2d^*_+}} \int _1 ^x \frac{dy}{y^{1+2d-2d^*_+}} +\int _1^n \frac{dx}{x^{1+d-2d^*_+}} \int _1 ^x \frac{dy}{y^{1+d-2d^*_+}} \\{} & {} \qquad + \int _1^n \frac{dx}{x^{1+d-2d^*_+}} \int _1 ^x \frac{dy}{y^d(x-y)^{1-2d^*_+} } \Big ) \\{} & {} \quad \le C \big (n^{2d^*_+-2d} + n^{4d^*_+-2d}+n^{4d^*_+-3d}+n^{4d^*_+-3d}+n^{4d^*_+-4d}+n^{4d^*_+-2d}+n^{4d^*_+-2d} \big ) \\{} & {} \quad \le C \, n^{4d^*_+-2d}. \end{aligned}$$

We obtain exactly the same bounds if we consider the three other expectations, i.e. \({\mathbb {E}}\Big [ {}^t \Big ( \partial _{\gamma } {{\widetilde{m}}}_t(\theta ) \, \big (X_t -m_t(\theta )\big )\Big ) \partial _{\gamma } {{\widetilde{m}}}_s(\theta ) \, \big (X_s -m_s(\theta )\big )\Big ]\), \({\mathbb {E}}\Big [ {}^t \Big ( \partial _{\gamma } {\widehat{m}}_t(\theta ) \, {{\widetilde{m}}}_t(\theta ) \Big ) \partial _{\gamma } {{\widetilde{m}}}_s(\theta ) \, \big (X_s -m_s(\theta )\big )\Big ]\) or \({\mathbb {E}}\Big [ {}^t \Big (\partial _{\gamma } {\widehat{m}}_t(\theta ) \, {{\widetilde{m}}}_t(\theta ) \Big )\partial _{\gamma } {\widehat{m}}_s(\theta ) \, {{\widetilde{m}}}_s(\theta ) \Big ]\). As a consequence, we finally obtain:

$$\begin{aligned} \frac{1}{\sigma ^4 \, n} \, I_1 \le C\, n^{4d^*_+-2d-1}\quad \hbox { for any}\ n\in {\mathbb {N}}^*. \end{aligned}$$(6.21)

Now consider the term \(I_2\) in (6.19) and therefore the case \(s=t\). For \(Y^{(1)}_{t_1}=\partial _{\gamma } {{\widetilde{m}}}_t(\theta )\), \( Y^{(2)}_{t_2}= \big (X_t -m_t(\theta )\big )\), \(Y^{(3)}_{t_3}=\partial _{\gamma } {\widehat{m}}_t(\theta ) \) and \(Y^{(4)}_{t_4}= {{\widetilde{m}}}_t(\theta )\), and the coefficients \((\beta ^{(j)}_k)\) defined previously, we obtain:

$$\begin{aligned} \Big | (\mu _4-3)\, \sum _{k=0}^\infty \beta ^{(1)}_k \beta ^{(2)}_{k}\beta ^{(3)}_{k}\beta ^{(4)}_{k} \Big | \le C \, \sum _{k=1}^\infty \frac{1}{t^{2d}} \, \frac{1}{k^{4-4d^*_+}} \le C \,\frac{1}{t^{2d}}. \end{aligned}$$(6.22)

Moreover, using the same inequalities as in the case \(s<t\), we obtain:

$$\begin{aligned} \bullet \quad \Big |{\mathbb {E}}\big [Y^{(1)}_{t_1} Y^{(2)}_{t_2}\big ] \, {\mathbb {E}}\big [Y^{(3)}_{t_3} Y^{(4)}_{t_4}\big ]\Big |\le & {} C\, \frac{1}{t^{2+2d-4d^*_+}}; \\ \bullet \quad \Big |{\mathbb {E}}\big [Y^{(1)}_{t_1} Y^{(3)}_{t_3}\big ] \, {\mathbb {E}}\big [Y^{(2)}_{t_2} Y^{(4)}_{t_4}\big ]\Big |\le & {} C\, \frac{1}{t^{2+2d-4d^*_+}}; \\ \bullet \quad \Big |{\mathbb {E}}\big [Y^{(1)}_{t_1} Y^{(4)}_{t_4}\big ] \, {\mathbb {E}}\big [Y^{(2)}_{t_2} Y^{(3)}_{t_3}\big ]\Big |\le & {} C\, \frac{1}{t^{1-2d^*_++2d}}. \end{aligned}$$

Therefore,

$$\begin{aligned} \sum _{t=1}^ n {\mathbb {E}}\Big [ {}^t \Big ( \partial _{\gamma } {{\widetilde{m}}}_t(\theta ) \, \big (X_t -m_t(\theta )\big ) \partial _{\gamma } {\widehat{m}}_t(\theta ) \, {{\widetilde{m}}}_t(\theta ) \Big )\Big ] \\ \le C \sum _{t=1}^ n \frac{ \mu _4-3}{t^{2d}}+ \frac{1}{t^{1-2d^*_++2d}} \le C \, n^{1-2d}. \end{aligned}$$

As a consequence, we finally obtain that there exists \(C>0\) such that:

$$\begin{aligned} \frac{1}{\sigma ^4 \, n} \, I_2 \le C\, n^{-2d}\quad \hbox {for any} \,n\in {\mathbb {N}}^*. \end{aligned}$$(6.23)

Therefore, from (6.21) and (6.23), we deduce that there exists \(C>0\) such that for any \(n\in {\mathbb {N}}^*\):

$$\begin{aligned} n \, {\mathbb {E}}\big [ \big \Vert \partial _\theta \widehat{L}_n(\theta )-\partial _\theta {L}_n(\theta )\big \Vert ^2 \big ]\le C\, \big (n^{-2d}+n^{4d^*_+-2d-1}\big ) \begin{array}{c}{\mathop {\longrightarrow }\limits ^{}} \\ {\scriptstyle n\rightarrow \infty }\end{array}0, \end{aligned}$$(6.24)

where the convergence to 0 follows from (6.15).
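The fourth-moment identity for products of four linear combinations of the noise, used above to bound \(I_1\) and \(I_2\), can be checked numerically on finite filters. The sketch below assumes that \((\xi _t)\) is i.i.d., centred, with unit variance and fourth moment \(\mu _4\), and compares the closed formula with a brute-force expansion over all index quadruples. The filters, time indices and function names are hypothetical choices made for the example.

```python
from itertools import product


def coef(beta, k):
    """k-th filter coefficient, 0 outside the finite support."""
    return beta[k] if 0 <= k < len(beta) else 0.0


def cross_moment(b1, t1, b2, t2):
    """E[Y1_{t1} * Y2_{t2}] for unit-variance i.i.d. centred noise."""
    return sum(b1[k] * coef(b2, k + t2 - t1) for k in range(len(b1)))


def fourth_moment_formula(betas, times, mu4):
    """Cumulant term plus the three products of pairwise covariances."""
    b1, b2, b3, b4 = betas
    t1, t2, t3, t4 = times
    cumulant = (mu4 - 3.0) * sum(
        b1[k] * coef(b2, t2 - t1 + k) * coef(b3, t3 - t1 + k) * coef(b4, t4 - t1 + k)
        for k in range(len(b1))
    )
    pairs = (
        cross_moment(b1, t1, b2, t2) * cross_moment(b3, t3, b4, t4)
        + cross_moment(b1, t1, b3, t3) * cross_moment(b2, t2, b4, t4)
        + cross_moment(b1, t1, b4, t4) * cross_moment(b2, t2, b3, t3)
    )
    return cumulant + pairs


def fourth_moment_bruteforce(betas, times, mu4):
    """Expand the product and use E[xi_a xi_b xi_c xi_d] for i.i.d. noise."""
    total = 0.0
    for ks in product(*(range(len(b)) for b in betas)):
        idx = [t - k for t, k in zip(times, ks)]  # noise time indices
        pattern = sorted(idx.count(i) for i in set(idx))
        # mu4 if all four indices coincide, 1 for two distinct pairs, 0 otherwise
        moment = mu4 if pattern == [4] else 1.0 if pattern == [2, 2] else 0.0
        if moment:
            weight = 1.0
            for b, k in zip(betas, ks):
                weight *= b[k]
            total += moment * weight
    return total


if __name__ == "__main__":
    betas = [[(k + 1) ** (-0.6 - 0.1 * j) for k in range(6)] for j in range(4)]
    times = (0, 1, 1, 3)
    print(fourth_moment_formula(betas, times, 5.0), fourth_moment_bruteforce(betas, times, 5.0))
```

When \(\mu _4=3\) (Gaussian case) the cumulant term vanishes and only the three products of covariances remain.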

3. For \(\theta \in {{\widetilde{\Theta }}}\) and \(n \in {\mathbb {N}}^*\), since \( \partial ^2 _{\theta ^2} {\widehat{L}}_n(\theta )\) is a.s. a \(\mathcal{C}^2({{\widetilde{\Theta }}})\) function, the Taylor-Lagrange expansion implies:

$$\begin{aligned} \sqrt{n}\,\partial _\theta \widehat{L}_n(\theta ^{*})=\sqrt{n} \, \partial _\theta \widehat{L}_n({{\widetilde{\theta }}}_n)+\partial ^{2}_{\theta ^2} \widehat{L}_n(\bar{\theta }_{n}) \times \sqrt{n} \, (\theta ^{*} - {\widetilde{\theta }}_{n}) \end{aligned}$$

where \(\bar{\theta }_{n}=c\,{\widetilde{\theta }}_{n}+(1-c)\,\theta ^{*}\) and \(0<c<1.\) But \(\partial _\theta \widehat{L}_n({{\widetilde{\theta }}}_n)=0\) because \({{\widetilde{\theta }}}_n\) is the unique local extremum of \(\theta \mapsto \widehat{L}_n(\theta )\). Therefore,

$$\begin{aligned} \sqrt{n}\,\partial _\theta \widehat{L}_n(\theta ^{*})= \partial ^{2}_{\theta ^2} \widehat{L}_n(\bar{\theta }_{n}) \times \sqrt{n} \, (\theta ^{*} - {\widetilde{\theta }}_{n}). \end{aligned}$$(6.25)

Now, \({\mathbb {E}}\big [ \big \Vert \partial ^{2}_{\theta ^2} q_0(\theta ) \big \Vert \big ]<\infty \) from the same arguments as in the proof of the consistency of the estimator, and using Theorem 36.4 in Billingsley (1995), \(\big (\partial ^{2}_{\theta ^2} q_t(\theta ) \big )_{t\in {\mathbb {Z}}}\) is an ergodic stationary sequence for any \(\theta \in {{\widetilde{\Theta }}}\). Moreover \({\bar{\theta }}_n \begin{array}{c}{\mathop {\longrightarrow }\limits ^{a.s.}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\theta ^*\) since \({{\widetilde{\theta }}}_n \begin{array}{c}{\mathop {\longrightarrow }\limits ^{a.s.}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\theta ^*\). Hence:

$$\begin{aligned} \partial ^{2}_{\theta ^2} {L}_n(\bar{\theta }_{n}) \begin{array}{c}{\mathop {\longrightarrow }\limits ^{a.s.}} \\ {\scriptstyle n\rightarrow \infty }\end{array}{\mathbb {E}}\big [ \partial ^{2}_{\theta ^2} q_0(\theta ^*) \big ]=F(\theta ^*). \end{aligned}$$

Moreover, using the same arguments as in Lemma 4 of Bardet and Wintenberger (2009), we have:

$$\begin{aligned} \sup _{\theta \in {{\widetilde{\Theta }}}} \Big \Vert \partial ^{2}_{\theta ^2} {L}_n(\theta )- \partial ^{2}_{\theta ^2} \widehat{L}_n(\theta )\Big \Vert \begin{array}{c}{\mathop {\longrightarrow }\limits ^{{{\mathbb {P}}}}} \\ {\scriptstyle n\rightarrow \infty }\end{array}0 \quad \Longrightarrow \quad \partial ^{2}_{\theta ^2} \widehat{L}_n(\bar{\theta }_{n}) \begin{array}{c}{\mathop {\longrightarrow }\limits ^{{{\mathbb {P}}}}} \\ {\scriptstyle n\rightarrow \infty }\end{array}F(\theta ^*). \end{aligned}$$(6.26)

Standard calculations show that:

$$\begin{aligned} F(\theta ^*)=- \left( \begin{array}{cc}M^* &{} 0 \\ 0 &{} \frac{1}{2 \, \sigma ^{*4}} \end{array}\right) \quad \hbox {and}\quad G(\theta ^{*})=\left( \begin{array}{cc}M^* &{} 0 \\ 0 &{} \frac{\mu _4^*-1}{4 \, \sigma ^{*4}} \end{array}\right) ,\\ \hbox {with}\quad M^*=\frac{1}{\sigma ^{*2}} \ \sum _{k=1}^\infty \sum _{\ell =1}^\infty \partial _\gamma u_k((\gamma ^*,0))\, {}^t \big (\partial _\gamma u_\ell ((\gamma ^*,0)) \big )\, r_X(\ell -k) \end{aligned}$$

where \(G(\theta ^{*})={\mathbb {E}}\left[ \partial _\theta q_{0}(\theta ^{*}) \, {}^t \partial _\theta q_{0}(\theta ^{*}) \right] \) has already been defined in (6.17). The formula for \(M^*\) implies that \(F(\theta ^*)\) is invertible. Indeed, \(M^*\) is invertible if and only if \({\mathbb {E}}\left[ \partial _\gamma q_{0}(\theta ^{*}) \, {}^t \partial _\gamma q_{0}(\theta ^{*}) \right] \) is invertible, that is, if and only if for all \(v\in {\mathbb {R}}^{p-1}\), \({}^t v \, {\mathbb {E}}\left[ \partial _\gamma q_{0}(\theta ^{*}) \, {}^t \partial _\gamma q_{0}(\theta ^{*}) \right] \, v={\mathbb {E}}\left[ \big ({}^t v \,\partial _\gamma q_{0}(\theta ^{*}) \big )^2 \right] =0\), or equivalently \({}^t v \,\partial _\gamma q_{0}(\theta ^{*}) =0~a.s.\), implies \(v=0\). Now, for \(v\in {\mathbb {R}}^{p-1}\),

$$\begin{aligned} {}^t v \,\partial _\gamma q_{0}(\theta ^{*})=0~~a.s.&\Longrightarrow \frac{1}{\sigma ^{*2}} \, \varepsilon _0 \, \sum _{k=1}^\infty {}^t v \,\partial _\gamma u_k(\theta ^*) \, X_{-k}=0~ ~a.s. \\&\Longrightarrow \sum _{k=1}^\infty {}^t v \,\partial _\gamma u_k(\theta ^*) \, X_{-k}=0~ ~a.s.~~ (\varepsilon _0\, \hbox {is independent of}\, \mathcal{F}_0) \\&\Longrightarrow {}^t v \,\partial _\gamma u_k(\theta ^*)=0\quad \hbox { for all}\ k\in {\mathbb {N}}^* \\&\Longrightarrow v=0\quad \hbox {from (3.11)}. \end{aligned}$$

Now, from (6.17) and (6.24), we deduce that:

$$\begin{aligned} \sqrt{n}\,\partial _\theta \widehat{L}_n(\theta ^{*}) \begin{array}{c}{\mathop {\longrightarrow }\limits ^{\mathcal{L}}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\mathcal{N} \big ( 0\,, \, G(\theta ^{*}) \big ), \end{aligned}$$

and since \(F(\theta ^*)\) is a negative definite matrix, from (6.25) we deduce that

$$\begin{aligned} \sqrt{n} \, \big ({{\widetilde{\theta }}}_n -\theta ^* \big ) \begin{array}{c}{\mathop {\longrightarrow }\limits ^{\mathcal{L}}} \\ {\scriptstyle n\rightarrow \infty }\end{array}\mathcal{N} \big ( 0 \,, \,F(\theta ^{*})^{-1}\,G(\theta ^{*})\, F(\theta ^{*})^{-1} \big ). \end{aligned}$$(6.27)

Finally, from the previous computations of \(G(\theta ^{*})\) and \(F(\theta ^{*})\), we deduce (3.12).
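For the reader's convenience, the product in (6.27) can be evaluated directly from the block-diagonal expressions of \(F(\theta ^*)\) and \(G(\theta ^*)\) displayed above. Since (3.12) is not restated in this section, the following is only that product written out; the two minus signs coming from \(F(\theta ^*)^{-1}\) cancel:

$$\begin{aligned} F(\theta ^{*})^{-1}\,G(\theta ^{*})\, F(\theta ^{*})^{-1}=\left( \begin{array}{cc}(M^*)^{-1} M^* (M^*)^{-1} &{} 0 \\ 0 &{} \big (2 \, \sigma ^{*4}\big )^2 \, \frac{\mu _4^*-1}{4 \, \sigma ^{*4}} \end{array}\right) =\left( \begin{array}{cc}(M^*)^{-1} &{} 0 \\ 0 &{} (\mu _4^*-1) \, \sigma ^{*4} \end{array}\right) . \end{aligned}$$

In particular, the asymptotic covariance of the \(\gamma \) component does not involve \(\mu _4^*\), while the asymptotic variance of the \(\sigma ^2\) component is \((\mu _4^*-1) \, \sigma ^{*4}\).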

\(\square \)

6.2 Proofs of additional lemmas

Lemma 6.1

Under the assumptions of Theorem 3.1, for any \(\theta \in \Theta \) and \(t\in {\mathbb {Z}}\) or \(t\in {\mathbb {N}}^*\), with \(m_t(\theta )\), \({\widehat{m}}_t(\theta )\) and \({{\widetilde{m}}}_t(\theta )\) respectively defined in (3.5), (3.7) and (6.7), we have:

where there exists \(C>0\) such that for any \(k \ge 1\) and \(t \in {\mathbb {N}}^*\),

Moreover, under the assumptions of Theorem 3.2, the same properties also hold for \(\partial _\theta m_t(\theta )\), \(\partial _\theta {\widehat{m}}_t(\theta )\) and \(\partial _\theta {{\widetilde{m}}}_t(\theta )\).

Proof

We know that \(X_t=\sum _{\ell =0}^\infty a_\ell (\theta ^*)\, \varepsilon _{t-\ell }\) for any \(t\in {\mathbb {Z}}\). Then,

As a consequence, using \(\big |a_\ell (\theta ^*)\big |\le C \, \ell ^{d^*_+-1}\) and \(\big |u_\ell (\theta )\big |\le C \, \ell ^{-d-1}\) for any \(\ell \in {\mathbb {N}}^*\), we obtain:

Using the same kind of decomposition, we obtain the other bounds. \(\square \)
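The kind of decomposition used in this proof can be sketched numerically. The displayed formulas of the lemma are not reproduced here, so the sketch only assumes that \(m_t(\theta )=\sum _{k\ge 1} u_k(\theta )\, X_{t-k}\), which gives \(m_t(\theta )=\sum _{j\ge 1} \beta _j \, \varepsilon _{t-j}\) with \(\beta _j=\sum _{k=1}^j u_k(\theta )\, a_{j-k}(\theta ^*)\). The FARIMA\((0,d,0)\) coefficients and the function names are hypothetical choices made for the example.

```python
def ma_coeffs(d, n):
    """a_0..a_n of (1-B)^(-d): X_t = sum_l a_l * eps_{t-l}."""
    a = [1.0] * (n + 1)
    for j in range(1, n + 1):
        a[j] = a[j - 1] * (j - 1 + d) / j
    return a


def ar_coeffs(d, n):
    """u_1..u_n with X_t = eps_t + sum_{k>=1} u_k X_{t-k}, i.e. u(z) = 1 - (1-z)^d."""
    u = [0.0] * (n + 1)
    pi = 1.0
    for k in range(1, n + 1):
        pi *= (k - 1 - d) / k
        u[k] = -pi
    return u


def predictor_noise_coeffs(u, a, n):
    """beta_j = sum_{k=1}^j u_k * a_{j-k}: coefficients of m_t in terms of the noise."""
    return [0.0] + [sum(u[k] * a[j - k] for k in range(1, j + 1)) for j in range(1, n + 1)]


if __name__ == "__main__":
    n, d_star, d = 200, 0.3, 0.2
    a_star = ma_coeffs(d_star, n)
    # At theta = theta*, X_t - m_t(theta*) is the innovation, so beta_j = a_j for j >= 1.
    beta_star = predictor_noise_coeffs(ar_coeffs(d_star, n), a_star, n)
    print(max(abs(beta_star[j] - a_star[j]) for j in range(1, n + 1)))
    # For another parameter value, j**(1 - d_star) * |beta_j| stays bounded in j.
    beta = predictor_noise_coeffs(ar_coeffs(d, n), a_star, n)
    print(max(j ** (1 - d_star) * abs(beta[j]) for j in range(1, n + 1)))
```

The first printed value is a floating-point check that the convolution recovers the MA coefficients at the true parameter; the second illustrates, on this example only, a power-law bound of the type stated in the lemma.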

Lemma 6.2

For any \(\alpha >1\), \(\beta \in (0,1)\), there exists \(C>0\) such that for any \(1\le a\),

Lemma 6.3

Under the assumptions of Theorem 3.1, there exists \(C>0\) such that for any \(\theta \in \Theta \) and \(1 \le s \le t\le n\),

Proof

Using the bounds of functions \(I_{1+d}\) and \(J_{1+d,1-2d}\) defined in Lemma 6.2, we obtain

\(\square \)

Lemma 6.4

Under the assumptions of Theorem 3.1, there exists \(C>0\) such that for any \(\theta \in \Theta \) and any \(1 \le s\) and \(1\le t\),

Proof

Then, if \(s \le t\),

And if \(s > t\),

\(\square \)

References

Adenstedt R (1974) On large-sample estimation for the mean of a stationary random sequence. Ann Stat 2:1095–1107

Baillie R, Bollerslev T, Mikkelsen HO (1996) Fractionally integrated generalized autoregressive conditional heteroskedasticity. J Econom 74:3–30

Bardet J-M (2023) A new estimator for LARCH processes. J Time Ser Anal 45:103–132

Bardet J-M, Tudor C (2014) Asymptotic behavior of the Whittle estimator for the increments of a Rosenblatt process. J Multivar Anal 131:1–16

Bardet J-M, Wintenberger O (2009) Asymptotic normality of the quasi-maximum likelihood estimator for multidimensional causal processes. Ann Stat 37:2730–2759

Beran J (1993) Recent developments in location estimation and regression for long-memory processes. In: Brillinger D et al (eds) New directions in time series analysis, vol 46. Springer, New York

Beran J (1994) Statistics for long-memory processes. Chapman and Hall, London

Beran J, Schützner M (2009) On approximate pseudo-maximum likelihood estimation for LARCH-processes. Bernoulli 15:1057–1081

Berkes I, Horváth L (2004) The efficiency of the estimators of the parameters in GARCH processes. Ann Stat 32:633–655

Billingsley P (1968) Convergence of probability measures. Wiley, New York

Billingsley P (1995) Probability and measure. Wiley, New York

Bingham NH, Goldie CM, Teugels JL (1987) Regular variation. Encyclopedia of mathematics and its applications. Cambridge University Press, Cambridge

Boubacar Maïnassara Y, Esstafa Y, Saussereau B (2021) Estimating FARIMA models with uncorrelated but non-independent error terms. Stat Infer Stoch Process 24:549–608

Boubacar Maïnassara Y, Esstafa Y, Saussereau B (2023) Diagnostic checking in FARIMA models with uncorrelated but non-independent error terms. Electron J Stat 17:1160–1239

Dahlhaus R (1989) Efficient parameter estimation for self-similar processes. Ann Stat 17:1749–1766

Doukhan P, Grublyté I, Surgailis D (2016) A nonlinear model for long-memory conditional heteroscedasticity. Lith Math J 56:164–188

Doukhan P, Oppenheim G, Taqqu MS (2003) Theory and applications of long-range dependence. Birkhäuser, Basel

Francq C, Zakoian J-M (2004) Maximum likelihood estimation of pure GARCH and ARMA-GARCH processes. Bernoulli 10:605–637

Fox R, Taqqu MS (1986) Large-sample properties of parameter estimates for strongly dependent Gaussian time series. Ann Stat 14:517–532

Giraitis L, Surgailis D (1990) A central limit theorem for quadratic forms in strongly dependent linear variables and its applications to the asymptotic normality of Whittle estimate. Probab Theory Rel Fields 86:87–104

Giraitis L, Taqqu MS (1999) Whittle estimator for finite-variance non-Gaussian time series with long memory. Ann Stat 27:178–203

Kounias E, Weng T-S (1969) An inequality and almost sure convergence. Ann Math Stat 40:1091–1093

Parzen E (1999) Stochastic processes. Classics in applied mathematics. SIAM, Philadelphia

Samarov A, Taqqu MS (1988) On the efficiency of the sample mean in long-memory noise. J Time Ser Anal 9:191–200

Straumann D (2005) Estimation in conditionally heteroscedastic time series models. Lecture notes in statistics, vol 181. Springer, Berlin

Wu WB, Yinxiao H, Wei Z (2010) Covariance estimation for long-memory processes. Adv Appl Probab 42:137–157

Acknowledgements

The authors are grateful to the referees for many relevant suggestions and comments that helped to notably improve and enrich the contents of the paper.

Cite this article

Bardet, JM., Tchabo MBienkeu, Y.G. Quasi-maximum likelihood estimation of long-memory linear processes. Stat Inference Stoch Process (2024). https://doi.org/10.1007/s11203-024-09313-6
