Abstract

From a continuous-time long memory stochastic process, a discrete-time randomly sampled process is obtained through a renewal sampling process. We establish the existence of the spectral density of the sampled process and give its expression in terms of the spectral density of the initial process. We also investigate different aspects of statistical inference on the sampled process. In particular, we obtain asymptotic results for the periodogram, the local Whittle estimator of the memory parameter and the long-run variance of partial sums. We mainly focus on Gaussian continuous-time processes; the challenge is that the randomly sampled process is no longer jointly Gaussian.

1 Introduction

Irregularly observed time series occur in many fields such as astronomy, finance, environmental and biomedical sciences. Discretization of a continuous-time process can produce unevenly spaced time series. For example, physiological signals such as electromyography (EMG), electrocardiogram (ECG) and heartbeats [see e.g. Bardet and Bertrand (2010)], as well as market prices [see e.g. Dacorogna (2001)], are measured at irregularly spaced times. In these and many other instances, we do not control the way data are observed, as they are recorded at irregular time points. A common approach consists in fitting a continuous-time process to discrete-time data [see for instance Jones (1985)].

Statistical tools available to handle unevenly spaced time series have essentially been developed for short range dependence [see e.g. Li (2014) and references therein]. We can also refer to numerous papers in astronomy that focus on spectrum estimation [see e.g. Thiebaut and Roques (2005)].

To the best of our knowledge, few results are available when the continuous-time embedding process has long memory. In fact, statistical inference for long memory continuous-time models is generally based on a deterministically sampled process [see Tsai and Chan (2005a, 2005b); Chambers (1996); Comte (1996)]. However, as in the examples cited above, in several applied contexts one has to deal with random sampling from a continuous-time process. In the time domain, Philippe et al. (2021) studied randomly spaced observations, using a renewal process as a sampling tool. They showed that the intensity of the long memory is preserved when the distribution of the sampling intervals has a finite moment, but that there are also situations where the long memory is reduced. Consequently, the continuous-time memory parameter cannot be estimated without prior information on the sampling process. Bardet and Bertrand (2010) studied spectral density estimation of continuous-time Gaussian processes with stationary increments observed at random times.

Unlike the paper mentioned above, which focuses on the continuous-time process itself, we are interested in the resulting randomly sampled discrete-time process. More precisely, we study its spectral-domain properties and provide explicit expressions for its spectral density. Philippe and Viano (2010) addressed random sampling from a discrete-time process and obtained the existence of the spectral density. However, their expression of the spectral density is less explicit, since it is given as a limit of an integral. Moreover, they do not address sampling from a continuous-time process, and their techniques do not extend to our case.

Most existing long memory inference techniques assume that the process is a subordinated Gaussian or a linear process. Philippe et al. (2021) established a rather surprising property: the sampled process loses joint Gaussianity even when the original process is Gaussian. Therefore we cannot apply such results to our sampled processes, which are neither Gaussian nor linear. We study some aspects of inference via spectral approaches. In particular, we establish the consistency of the local Whittle estimator of the long memory parameter under the assumptions of Dalla et al. (2006) for nonlinear long memory processes.

We now describe our sampling model. We start with a continuous-time process \(X=(X_t)_{t\in \mathbb {R}^+}\) and a renewal process \((T_n)_{n\ge 0}\). We study the discrete-time process \(Y=(Y_n)_{n\ge 1}\) defined by

$$\begin{aligned} Y_n = X_{T_n}, \quad n\ge 1. \end{aligned}$$(1)

We emphasise that the sampling process \((T_n)\) is not observed. Throughout this paper, we assume the following two conditions:

- \(H_X\): X is a second-order stationary continuous-time process with autocovariance function \(\sigma _X\) and spectral density \(f_X\), that is, for all \(t\in \mathbb {R}\),

$$\begin{aligned} \sigma _X(t) = \int _{-\infty }^\infty e^{i\lambda t}f_X(\lambda )d\lambda .\end{aligned}$$(2)

- \(H_T\): \((T_n)_{n\ge 0}\) is independent of X and has i.i.d. non-degenerate increments \(\Delta _j=T_{j+1}-T_j\ge 0\) with cumulative distribution function S; moreover, \(T_0=0\).

We impose the specific initialization \(T_0=0\) only to simplify our notation, since it implies that \(\Delta _j = T_{j+1}-T_j\) for all \(j\in \mathbb {N}\). However, all the results remain true if we take \(T_0 = \Delta _0 \) and \(\Delta _j = T_{j}-T_{j-1}\) for \(j\ge 1\).

The rest of the paper is organized as follows. Section 2 presents results on the existence of a spectral density for the process Y when the spectrum of X is absolutely continuous, together with an integral representation of this density. In Sect. 3, we establish the asymptotic distribution of the normalized periodogram of the sampled process. In Sect. 4, we show the consistency of the local Whittle estimator of the memory parameter based on Y, and we study the estimation of the so-called long-run variance.

2 Spectral density function of sampled process

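Under assumptions \( H_X\) and \(H_T\), Philippe et al. (2021) show that, X being stationary, so is Y. Moreover, its covariance function has the following form:

$$\begin{aligned} \sigma _Y(j) = \textrm{Cov}(Y_k,Y_{k+j}) = \mathbb {E}\left( \sigma _X(T_j)\right) , \quad j\ge 0. \end{aligned}$$(3)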

Note that the independence of X and the renewal process, imposed in \(H_T\), is required to obtain (3). In the next proposition, we prove that the existence of a spectral density is preserved by random sampling, and we establish the link between the spectral densities of X and Y.

Proposition 1

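Assume that the continuous-time process X satisfies \(H_X\) and that \(H_T\) holds. Then the discrete-time process Y admits a spectral density, given by

$$\begin{aligned} f_Y(x) = \frac{1}{2\pi }\int _{-\infty }^{\infty } f_X(\lambda )\,P\big (\Psi _S(\lambda ),x\big )d\lambda , \quad x\in [-\pi ,\pi ], \end{aligned}$$(4)

where \(\Psi _S\) is the characteristic function of the cumulative distribution function S defined in \(H_T\) and

$$\begin{aligned} P(z,x) = \frac{1-\vert z\vert ^2}{\vert e^{ix}-z\vert ^2}, \quad \vert z\vert <1,\ x\in [-\pi ,\pi ], \end{aligned}$$

is the well-known Poisson kernel.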

Proof

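By the stationarity of Y, representation (3) proved in Philippe et al. (2021), and the existence of the spectral density \(f_X\) in (2), the covariance function of Y can be computed via Fubini’s theorem. Since \(T_j\) is the sum of j i.i.d. increments with characteristic function \(\Psi _S\), we have, for \(j\ge 0\),

$$\begin{aligned} \sigma _Y(j) = \mathbb {E}\left( \int _{-\infty }^\infty e^{i\lambda T_j}f_X(\lambda )d\lambda \right) = \int _{-\infty }^\infty \Psi _S(\lambda )^j f_X(\lambda )d\lambda . \end{aligned}$$(5)

To prove (4), it suffices to show that, for every \(j\ge 0\),

$$\begin{aligned} \int _{-\pi }^{\pi } e^{ijx}f_Y(x)dx = \sigma _Y(j), \end{aligned}$$(6)

since \(f_Y\) defined by (4) is clearly an even function (so that (6) extends to \(j<0\)). For this, we use the following Poisson integral formula for the disk: if u is an analytic function on the disk \(\vert z\vert <1\) and continuous on the closed disk \(\vert z\vert \le 1\), then its real and imaginary parts are harmonic, and therefore, for \(\vert z\vert <1\),

$$\begin{aligned} u(z) = \frac{1}{2\pi }\int _{-\pi }^{\pi } P(z,x)\,u(e^{ix})dx. \end{aligned}$$

Applying the above with \(u(z)=z^j\), where j is a fixed nonnegative integer, we get

$$\begin{aligned} z^j = \frac{1}{2\pi }\int _{-\pi }^{\pi } e^{ijx}P(z,x)dx, \quad \vert z\vert <1, \end{aligned}$$(7)

and since \(\vert \Psi _S(\lambda )\vert <1\) for Lebesgue a.e. \(\lambda \) (S being non-degenerate), we have, for a.e. \(\lambda \),

$$\begin{aligned} \Psi _S(\lambda )^j = \frac{1}{2\pi }\int _{-\pi }^{\pi } e^{ijx}P\big (\Psi _S(\lambda ),x\big )dx. \end{aligned}$$(8)

Also, taking \(j=0\) in (7), we get

$$\begin{aligned} \frac{1}{2\pi }\int _{-\pi }^{\pi } P(z,x)dx = 1, \quad \vert z\vert <1. \end{aligned}$$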

Hence, by Fubini’s theorem, we see that \(f_Y\), as given in (4), is integrable on \([-\pi , \pi ]\). Applying Fubini’s theorem once again and substituting (8) in (5), we immediately get (6). \(\square \)
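As a numerical sanity check of formula (4), the following Python sketch (assuming NumPy and SciPy) evaluates \(f_Y\) by quadrature in an illustrative case where the answer is known in closed form. The ingredients are hypothetical choices, not taken from the paper. We take \(\sigma _X(t)=e^{-\vert t\vert }\), so that \(f_X(\lambda )=1/(\pi (1+\lambda ^2))\); this X has short memory, but Proposition 1 only requires \(H_X\). We also take exponential sampling intervals with rate \(\mu =2\). Then \(\sigma _Y(j)=\mathbb {E}(e^{-T_j})=(\mu /(\mu +1))^j\), so Y has the covariance of a unit-variance AR(1) process with coefficient \(\varphi =\mu /(\mu +1)\).

```python
import numpy as np
from scipy.integrate import quad


def poisson_kernel(z, x):
    """Poisson kernel P(z, x) = (1 - |z|^2) / |e^{ix} - z|^2 for |z| < 1."""
    return (1.0 - abs(z) ** 2) / abs(np.exp(1j * x) - z) ** 2


def f_Y_prop1(f_X, psi_S, x):
    """Spectral density of Y_n = X_{T_n} via (4): (1/2pi) int f_X(l) P(psi_S(l), x) dl."""
    integrand = lambda lam: f_X(lam) * poisson_kernel(psi_S(lam), x)
    value, _ = quad(integrand, -np.inf, np.inf, limit=200)
    return value / (2.0 * np.pi)


# Hypothetical ingredients (illustration only):
f_X = lambda lam: 1.0 / (np.pi * (1.0 + lam ** 2))  # sigma_X(t) = exp(-|t|)
mu = 2.0                                           # rate of the Exp(mu) sampling intervals
psi_S = lambda lam: mu / (mu - 1j * lam)           # characteristic function of Exp(mu)

# Closed-form benchmark: sigma_Y(j) = (mu/(mu+1))^j, a unit-variance AR(1).
phi = mu / (mu + 1.0)


def f_ar1(x):
    return (1.0 - phi ** 2) / (2.0 * np.pi * (1.0 - 2.0 * phi * np.cos(x) + phi ** 2))


for x in (0.5, 1.0, 2.0, 3.0):
    print(f"x={x:.1f}  formula (4): {f_Y_prop1(f_X, psi_S, x):.8f}  AR(1): {f_ar1(x):.8f}")
```

Frequencies very close to 0 are avoided here because the kernel becomes sharply peaked there, which would call for a more careful quadrature.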

The following corollary gives a precise expression of the spectral density of Y in the most common case, namely sampling by a Poisson process.

Corollary 1

Assume that the continuous-time process X satisfies \(H_X\) and that \((T_n)\) is a Poisson renewal process with rate 1, independent of X. If \(\lambda ^2f_X(\lambda )\) is bounded and continuous on the real line then

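$$\begin{aligned} f_Y(x) = \frac{u(\sin x, 1-\cos x)}{4\sin ^2(x/2)}, \quad x\in [-\pi ,\pi ]\setminus \{0\}, \end{aligned}$$(9)

where u(x, y) is the harmonic function on the upper half-plane with boundary condition \(u(x,0)=x^2f_X(x)\). In particular, both spectral densities are equivalent near zero, i.e., \(f_Y(x)\sim f_X(x)\) as \(x\rightarrow 0\).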

Proof

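The exponential distribution with rate 1 has characteristic function \(\Psi _S(\lambda )=(1-i\lambda )^{-1}\). Hence \(1-\vert \Psi _S(\lambda )\vert ^2=\lambda ^2/(1+\lambda ^2)\) and \(\vert e^{ix}-\Psi _S(\lambda )\vert ^2=\big ((\lambda -\sin x)^2+(1-\cos x)^2\big )/(1+\lambda ^2)\), so that, from Proposition 1, we easily derive that

$$\begin{aligned} f_Y(x) = \frac{1}{2\pi }\int _{-\infty }^{\infty }\frac{\lambda ^2f_X(\lambda )}{(\lambda -\sin x)^2+(1-\cos x)^2}d\lambda , \quad x\in [-\pi ,\pi ]\setminus \{0\}, \end{aligned}$$(10)

that is, since \(2(1-\cos x)=4\sin ^2(x/2)\),

$$\begin{aligned} f_Y(x) = \frac{1}{4\sin ^2(x/2)}\cdot \frac{1}{\pi }\int _{-\infty }^{\infty }\frac{(1-\cos x)\,\lambda ^2f_X(\lambda )}{(\lambda -\sin x)^2+(1-\cos x)^2}d\lambda . \end{aligned}$$(11)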

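In the above we recognise the well-known Poisson integral formula for the upper half-plane, applied to the function \(g: x\mapsto x^2f_X(x)\). If g is continuous and bounded on the real line, then the function defined by

$$\begin{aligned} u(x,y) = \frac{1}{\pi }\int _{-\infty }^{\infty }\frac{y\,g(t)}{(t-x)^2+y^2}dt, \quad x\in \mathbb {R},\ y>0, \end{aligned}$$(12)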

is harmonic on the upper half plane and satisfies \(u(x,0)=g(x)\) [see for example the result 7.3 on p. 147 of Axler et al. (2000)] and \(\frac{u(x,y)}{g(x)} \rightarrow 1\) uniformly in x as \(y\rightarrow 0\). Combining (11) and (12) we get the stated result. \(\square \)
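To illustrate the half-plane form (9), the following Python sketch (assuming NumPy and SciPy) computes \(u(\sin x, 1-\cos x)\) from the Poisson integral (12) and divides by \(4\sin ^2(x/2)\). It uses a hypothetical X with \(\sigma _X(t)=e^{-\vert t\vert }\), chosen only because the answer is then explicit. With rate-1 Poisson sampling, \(\sigma _Y(j)=\mathbb {E}(e^{-T_j})=2^{-j}\), so Y has the spectral density of a unit-variance AR(1) process with coefficient 1/2. Here \(g(t)=t^2/(\pi (1+t^2))\) is bounded and continuous, as required in Corollary 1.

```python
import numpy as np
from scipy.integrate import quad


def half_plane_poisson(g, x, y):
    """u(x, y) = (1/pi) int y g(t) / ((t - x)^2 + y^2) dt, the Poisson integral (12), y > 0."""
    kernel = lambda t: y * g(t) / ((t - x) ** 2 + y ** 2)
    # Split at the peak t = x of the kernel to help the adaptive quadrature.
    left, _ = quad(kernel, -np.inf, x, limit=200)
    right, _ = quad(kernel, x, np.inf, limit=200)
    return (left + right) / np.pi


def f_Y_poisson_rate1(f_X, x):
    """Spectral density (9) of Y_n = X_{T_n} for a rate-1 Poisson process, x != 0."""
    g = lambda t: t ** 2 * f_X(t)  # boundary values u(t, 0) = t^2 f_X(t)
    return half_plane_poisson(g, np.sin(x), 1.0 - np.cos(x)) / (4.0 * np.sin(x / 2.0) ** 2)


# Hypothetical X with sigma_X(t) = exp(-|t|), i.e. f_X(lam) = 1 / (pi (1 + lam^2)).
f_X = lambda lam: 1.0 / (np.pi * (1.0 + lam ** 2))
# Benchmark: sigma_Y(j) = 2^{-j}, a unit-variance AR(1) with coefficient 1/2.
f_ar1 = lambda x: 0.75 / (2.0 * np.pi * (1.25 - np.cos(x)))

print(f_Y_poisson_rate1(f_X, np.pi), 1.0 / (6.0 * np.pi))
```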

The next proposition specifies the behaviour near zero of the spectral density of the sampled process Y, given in the previous corollary, under mild semiparametric conditions on the spectral density of the original process X.

Proposition 2

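Assume that \(T_n\) is a Poisson process with rate 1, independent of X, and that X satisfies \(H_X\) with spectral density of the form

$$\begin{aligned} f_X(\lambda ) = \vert \lambda \vert ^{-2d}\phi (\lambda ), \quad \lambda \in \mathbb {R}, \end{aligned}$$(13)

with \(0<d<1/2\), \(\phi (0)\ne 0\), and \(\phi \) continuous on \([-1,1]\) and differentiable on \((-1, 1 )\). Then

$$\begin{aligned} f_Y(x) = \vert x\vert ^{-2d}f_Y^*(x), \quad x\in [-\pi ,\pi ], \end{aligned}$$(14)

where \(f_Y^*\) is positive and continuous on \([-\pi ,\pi ]\), and

$$\begin{aligned} \lim _{x\rightarrow 0} f_Y^*(x) = \phi (0). \end{aligned}$$(15)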

Proof

Since \(f_Y\) is even, we will consider \(x\in (0,\pi ]\). From (10), we have

We study both integrals in (16) near \(x=0\).

since for fixed \(\lambda \), as \(x\rightarrow 0\), the integrand (in the left-hand side) clearly increases towards \(f_X(\lambda )\). Let us deal with the first integral in (16).

Using the fact that \(f_X(\lambda )=\lambda ^{-2d}\phi (\lambda )\) and \(\sin ^2(x/2)=(1-\cos x)/2\) and putting \(\lambda =t\sin x\), we obtain for the first integral in the right hand side above, with some \(u(t)\in (0,1)\) and \(v(t)\in (0,1)\),

Putting \( t=u\tan (x/2)\), the right-hand side of the last equation is equal to

Then

Similarly, we have

Then, we have as \(x\rightarrow 0\)

since the integrand is bounded uniformly in x by \(4f_X(\lambda )\) and converges (as \(x\rightarrow 0\)) to \(f_X(\lambda )\), so that Lebesgue’s dominated convergence theorem applies. Combining (17) and (21) as well as (19) and (20), we obtain that

Moreover, \(f_Y^*\) is continuous and positive on \([-\pi ,\pi ]\). Indeed, the continuity of \(f_Y^*\) follows from the fact that the integrand of the second integral in the right-hand side of (16) is continuous and uniformly bounded in x by \(4f_X(\lambda )\), which is integrable. As for the first integral in the right-hand side of (16), after splitting it into three terms as in (18) and multiplying it by \(x^{2d}\), we see that Lebesgue’s dominated convergence theorem still applies. This completes the proof of Proposition 2. \(\square \)

We now present a lemma that gives a precise description of the covariance function of X in terms of its spectral density. We impose the following condition on \(f_X\).

Condition \(H_f\): \(f_X(\lambda )=c\vert \lambda \vert ^{-2d}(1-h(\lambda ))\), \(0<d<1/2\), where h is a nondecreasing function with \(h(0)=0\), \(h(x)\rightarrow 1\) as \(x\rightarrow \infty \), and h differentiable at 0. Note that condition \(H_f\) is not one of the usual slowly varying type conditions for Tauberian and Abelian theorems in the context of long range dependence [see Leonenko and Olenko (2013)]. However, it guarantees a uniform control of the remainder g(x) in (22), rather than a control at infinity only.

Remark 1

If the spectral density \(f_X\) satisfies \(H_f\) instead of (13), then Proposition 2 still holds with \(c:=c(d)\) instead of \(\phi (0)\). The proof is essentially the same and is omitted.

Lemma 1

Assume that condition \(H_f\) is satisfied. Then there exist positive constants C(d) and c(d) such that, for all \(x>0\),

$$\begin{aligned} \sigma _X(x) = c(d)\,x^{2d-1} + g(x), \end{aligned}$$(22)

with \(\vert g(x)\vert \le \frac{C(d)}{\vert x\vert }.\)

Proof

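Let \(x>0\) be fixed. Since \(f_X\) is even, we have

$$\begin{aligned} \sigma _X(x) = 2\int _0^\infty \cos (\lambda x)f_X(\lambda )d\lambda = 2c\int _0^\infty \cos (\lambda x)\lambda ^{-2d}d\lambda - 2c\int _0^\infty \cos (\lambda x)\lambda ^{-2d}h(\lambda )d\lambda . \end{aligned}$$

Without loss of generality, we take \(2c=1\) in \(H_f\). By formula 3.761.9 of Gradshteyn and Ryzhik (2015),

$$\begin{aligned} \int _0^\infty \cos (\lambda x)\lambda ^{-2d}d\lambda = \Gamma (1-2d)\sin (\pi d)\,x^{2d-1}. \end{aligned}$$

Therefore, it remains to show that, for some \(C(d)>0\),

$$\begin{aligned} \left| \int _0^\infty \cos (\lambda x)\lambda ^{-2d}h(\lambda )d\lambda \right| \le \frac{C(d)}{x}. \end{aligned}$$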

The rest of the proof relies on applying integration by parts for Stieltjes integrals.

Let U be such that \(dU(\lambda )=\cos (\lambda x)\lambda ^{-2d}d\lambda \). We have (by one integration by parts)

clearly U is bounded and

Using the fact that h is nondecreasing, \(h(\lambda )\rightarrow 1\) as \(\lambda \rightarrow \infty \), and \(h(0)=0\), we obtain (via integration by parts at some steps of the calculation below)

with

also

Since

\( \int _a^b U(\lambda )dh(\lambda )\) has the same limit as

as \(a\rightarrow 0 \) and \(b\rightarrow \infty \).

We note that the integral above is indeed finite since h is a bounded function, \(h(0)=0\), and is differentiable at zero.

The proof of Lemma 1 is now complete. \(\square \)
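A worked instance of Lemma 1 may help. The choice \(h(\lambda )=1-e^{-\lambda }\) is a hypothetical example, not taken from the paper. It is nondecreasing, satisfies \(h(0)=0\) and \(h(\lambda )\rightarrow 1\), and is differentiable at 0, so \(H_f\) holds. In this case \(\sigma _X\) has the closed form \(2c\,\Gamma (1-2d)(1+x^2)^{-(1-2d)/2}\cos ((1-2d)\arctan x)\), obtained from the Gamma-type integral \(\int _0^\infty t^{\mu -1}e^{-t}\cos (xt)dt\). The Python sketch below (NumPy only) computes the remainder g in (22) with \(c(d)=2c\,\Gamma (1-2d)\sin (\pi d)\) and checks numerically that \(x\vert g(x)\vert \) stays bounded, as the lemma asserts.

```python
import math
import numpy as np

# Hypothetical instance of condition H_f (illustration only):
#   f_X(lam) = c |lam|^{-2d} (1 - h(lam)) with h(lam) = 1 - exp(-|lam|), and 2c = 1.
d, c = 0.25, 0.5
mu = 1.0 - 2.0 * d


def sigma_X(x):
    """Closed form of 2c * int_0^inf cos(lam x) lam^{-2d} exp(-lam) dlam."""
    return 2.0 * c * math.gamma(mu) * (1.0 + x ** 2) ** (-mu / 2.0) * np.cos(mu * np.arctan(x))


# Constant of the leading term, from Gradshteyn-Ryzhik 3.761.9: c(d) = 2c Gamma(1-2d) sin(pi d).
c_d = 2.0 * c * math.gamma(mu) * math.sin(math.pi * d)


def g(x):
    """Remainder in (22): g(x) = sigma_X(x) - c(d) x^{2d-1}."""
    return sigma_X(x) - c_d * x ** (2.0 * d - 1.0)


xs = np.logspace(-4, 4, 400)
print("max of x*|g(x)| on the grid:", np.max(xs * np.abs(g(xs))))
```

For this particular h, the remainder actually decays like \(x^{2d-2}\) at infinity, which is faster than the \(1/x\) bound of Lemma 1.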

Corollary 2

If \(T_n\) is a Poisson process and \(f_X\) satisfies condition \(H_f\) then

where \(\alpha =\min (2,3-4d)\).

Proof

We have from the previous lemma,

For \(r\ge 3\), since \(T_r\) has a Gamma distribution with parameters (r, 1), we have

Also,

We know that as \(r\rightarrow \infty \),

and therefore we obtain that

which completes the proof of the corollary. \(\square \)

3 Asymptotic theory of the periodogram

In this section, we consider a stationary long memory zero-mean Gaussian process \(X=(X_t)_{ t\in \mathbb {R}^+}\) with spectral density of the form (13). Let \(Y = (X_{T_n})_{n\in \mathbb {N}}\), where \((T_n)_{n\in \mathbb {N}}\) is a Poisson process with rate 1 (any positive rate would do). As shown in Philippe et al. (2021), and in contrast with the original process X, Y remains marginally normally distributed but is no longer jointly Gaussian; as a result, Y is not a linear process.

In this section, we extend some well-known properties of the periodogram to the randomly sampled process Y. In particular, our main result establishes that the normalized periodogram of Y converges in distribution to a weighted \(\chi ^2\) distribution.

Theorem 1

Assume that X is a stationary Gaussian process satisfying \(H_f\) and let \(Y = (X_{T_n})_{n\in \mathbb {N}}\), where \((T_n)_{n\in \mathbb {N}}\) is a Poisson process with rate 1. Let

be the periodogram of \(Y_1,\ldots ,Y_n\) at the Fourier frequency \(\lambda _j=2\pi j/n\), \(j\in \lbrace 1,\dots ,\lfloor n/2\rfloor \rbrace \). Then, for any fixed number \(\nu \ge 1\) of Fourier frequencies and any distinct integers \(j_1,\dots ,j_\nu \in \lbrace 1, \dots ,\lfloor n/2\rfloor \rbrace \), we have

where \((Z_1(1),Z_2(1),\ldots ,Z_1(\lfloor n/2\rfloor ),Z_2(\lfloor n/2\rfloor ))\) is a zero-mean Gaussian vector, with \(Z_1(j)\) and \(Z_2(k)\) independent for all \(j,k=1,\ldots ,\lfloor n/2\rfloor \), and

and

and for \(j\ne k\),

with

and

Proof

We will prove the broader result

Conditionally on \(T_1,\ldots ,T_n\), the vector \((X_{T_1},\ldots ,X_{T_n})\) is Gaussian, and hence so is \(Z_n\). Its covariance matrix \(\Sigma _T= \textrm{Var}(Z_n| T_1,\ldots ,T_n)\) has (i, k) entry of the form

where

We prove (33) using the characteristic function: since X and T are independent, for \(u\in \mathbb {R}^{2\nu }\), and with \(u'\) being the transpose of u,

As the characteristic function is bounded, it will suffice to show that

where \(\Sigma \) is the variance-covariance matrix of \((\sqrt{L_1(j_1)}(Z_1(j_1),Z_2(j_1)),\cdots ,\sqrt{L_\nu (j_\nu )}(Z_1(j_\nu ),Z_2(j_\nu )))\).

When i and k are fixed, the form of \(h_{i,k}(r,s)\) is the same for all r and s and hence \(\mathbb {E}\left( \Sigma _T\right) \) will have entries of the form

by (3), and therefore \(\mathbb {E}(\Sigma _T)\rightarrow \Sigma \) by virtue of Theorem 5 of Hurvich and Beltrão (1993) (the only condition required is second order stationarity of the process \(Y_i\) and the behaviour (14) of its spectral density). To complete the proof of (34), it will then suffice to show that

i.e. the variances of the entries of \(\Sigma _T\) converge to zero. By the Cauchy–Schwarz inequality, it is enough to focus on the diagonal entries. We treat the diagonal entries involving cosines; those involving sines are treated in the same way. For some constant C (that may change from one expression to another), we obtain

using Corollary 2. \(\square \)
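For readers who want to compute the periodogram ordinates used in Theorem 1, here is a minimal Python (NumPy) sketch. The display defining the periodogram did not survive in this text. The sketch therefore assumes the standard normalisation \(I(\lambda _j)=\vert \sum _{t=1}^n Y_te^{it\lambda _j}\vert ^2/(2\pi n)\) at \(\lambda _j=2\pi j/n\), \(j=1,\ldots ,\lfloor n/2\rfloor \), and cross-checks the FFT implementation against a direct sum.

```python
import numpy as np


def periodogram(y):
    """Assumed form I(lambda_j) = |sum_t Y_t e^{i t lambda_j}|^2 / (2 pi n), j = 1..floor(n/2)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    j = np.arange(1, n // 2 + 1)
    lam = 2.0 * np.pi * j / n
    # np.fft.fft computes sum_t y_t e^{-2 pi i j t / n}; for real y its modulus equals that of
    # sum_t y_t e^{+i t lambda_j}, and shifting t by one only adds a unit-modulus phase.
    I = np.abs(np.fft.fft(y)[j]) ** 2 / (2.0 * np.pi * n)
    return lam, I


def periodogram_direct(y, lam):
    """Direct O(n * len(lam)) evaluation, used here only as a cross-check."""
    y = np.asarray(y, dtype=float)
    t = np.arange(1, y.size + 1)
    return np.abs(np.exp(1j * np.outer(lam, t)) @ y) ** 2 / (2.0 * np.pi * y.size)


rng = np.random.default_rng(1)
y = rng.standard_normal(101)
lam, I = periodogram(y)
print(np.max(np.abs(I - periodogram_direct(y, lam))))
```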

4 Inference for the long-memory parameter

We still assume in this section that \(X= (X_t)_{t\in \mathbb {R}^+}\) is a stationary long memory zero-mean Gaussian process whose spectral density satisfies condition \(H_f\). Periodogram-based approaches to estimating the long memory parameter d are very popular. They often require the underlying process to be linear, or at least built on martingale difference innovations. The reader is referred to Beran et al. (2013) and Giraitis et al. (2012) for reviews of some recent works on this issue, as well as to the book edited by Doukhan et al. (2003). The next lemma and its proof show that, although it is not a linear process with i.i.d. innovations, the sampled process still satisfies important 4th cumulant conditions for long memory. These conditions will allow us to establish both the consistency of an estimator of the memory parameter d and that of an estimator of the asymptotic variance. The latter is needed, for example, for inference about the mean of the original continuous-time process X.

Lemma 2

Assume that X is a zero mean stationary Gaussian process satisfying \(H_f\) and let \(Y= (X_{T_n})_{n\in \mathbb {N}}\), where \((T_n)_{n\in \mathbb {N}}\) is a Poisson process. Then for all \(d \in (0,1/2)\), we have

and

Proof

The proof is postponed to the Appendix. Note that the \(\log (n)\) term in the right-hand side of (38) is needed only in the particular case \(d=1/4\), known to be the borderline between weak and strong long memory, as will be seen in the proof. \(\square \)

4.1 Consistency of local Whittle estimator

We consider the local Whittle estimator of the memory parameter d, defined by

where the contrast function \(U_n\) is defined by

and the bandwidth parameter \(m=m_n\) satisfies \(m_n\rightarrow \infty \) and \(m_n=o(n)\).
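The displays defining \(\hat{d}\) and \(U_n\) are missing here. The following Python sketch (NumPy and SciPy) therefore assumes the standard local Whittle contrast of Robinson (1995), \(U_n(d)=\log \big (m^{-1}\sum _{j=1}^m\lambda _j^{2d}I(\lambda _j)\big )-2d\,m^{-1}\sum _{j=1}^m\log \lambda _j\), and minimises it numerically. The search interval `bounds` is a hypothetical choice, not taken from the paper. As a sanity check, a synthetic periodogram \(I(\lambda _j)=\lambda _j^{-2d_0}\) must return \(d_0\), because \(U_n\) is convex in d with its minimum at \(d_0\).

```python
import numpy as np
from scipy.optimize import minimize_scalar


def local_whittle_from_periodogram(lam, I, m, bounds=(-0.49, 0.49)):
    """Minimise U_n(d) = log(mean_j lam_j^{2d} I_j) - 2d mean_j log(lam_j) over j = 1..m."""
    lam_m = np.asarray(lam[:m], dtype=float)
    I_m = np.asarray(I[:m], dtype=float)
    mean_log_lam = np.mean(np.log(lam_m))

    def U(d):
        return np.log(np.mean(lam_m ** (2.0 * d) * I_m)) - 2.0 * d * mean_log_lam

    return minimize_scalar(U, bounds=bounds, method="bounded").x


def local_whittle(y, m, bounds=(-0.49, 0.49)):
    """Local Whittle estimate from data y, using the first m Fourier frequencies (m < n)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    j = np.arange(1, m + 1)
    lam = 2.0 * np.pi * j / n
    I = np.abs(np.fft.fft(y)[j]) ** 2 / (2.0 * np.pi * n)
    return local_whittle_from_periodogram(lam, I, m, bounds)


lam = 2.0 * np.pi * np.arange(1, 501) / 1000
print(local_whittle_from_periodogram(lam, lam ** (-0.6), m=50))  # synthetic case with d0 = 0.3
```

In the setting of Theorem 2, one would call `local_whittle(y, m)` on the sampled series with, for example, \(m=\lfloor n^a\rfloor \), \(0<a<1\).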

Theorem 2

Suppose X is a stationary Gaussian process satisfying condition \(H_f\) and that \(Y_n=X_{T_n}\), where \(T_n\) is a Poisson process with rate 1. Then,

In addition, for \(m_n=n^a\), \(0<a<1\), we have

Remark 2

Equation (40) provides a lower bound on the convergence rate of \(\hat{d}\), uniformly in d. As can be seen from the proof, the non-uniform rate (for each fixed d) is much better. However, this slow rate is sufficient to plug \(\hat{d}\) into the long-run variance estimator below (see Proposition 3).

Proof

By (15) and Corollary 1 of Dalla et al. (2006), we have

for some remainder \(r_n\), which we control below depending on the rate at which m/n tends to zero. To prove (39), it suffices to show that \(r_n\rightarrow 0\).

Case 1: \(\sqrt{n}(\log n)^{4/(1-2d)}=O(m)\). From part (iv) of Corollary 1 of Dalla et al. (2006), the remainder \(r_n\) can be written as

where

We have

so that by (37) we get \(D_n^{**}=O(n^{2d})\) and hence \(r_n=O(1/\log n)\).

Case 2: \(m=O(\sqrt{n}(\log n)^{4/(1-2d)})\). We use part (iii) of Corollary 1 of Dalla et al. (2006),

where

According to (38), \(D_n^*=O(n^{4d}\log n)\), and therefore

This concludes the proof of (39).

To prove (40), we show that \(r_n=o(1/\log n)\). This is immediate in Case 2 above. Since \(m=n^a\), \(0<a<1\), we are in Case 1 if \(a>1/2\), and then

\(\square \)

4.2 Long run variance

The 4th cumulant condition (37) is needed to estimate the long-run variance of the sampled process. Such estimation plays a crucial role in many aspects of statistical inference, for example in estimating the mean \(\mu \) of the original process X, since, by Philippe et al. (2021),

and hence it is important to obtain a consistent estimator of the variance above. Such an estimator is also important for testing short memory versus long memory, or stationarity versus unit root, since such tests involve V/S-type statistics and require estimating the long-run variance [see Giraitis et al. (2006) and references therein for details]. Let us write the spectral density of \(Y_i\) in the form \(f(\lambda )\sim c\vert \lambda \vert ^{-2d}\) as \(\lambda \rightarrow 0\). Let

be the sample covariance function of \(Y_i\). Let the asymptotic variance of the normalized sum be

Let

Proposition 3

Let \(\hat{d}\) be a consistent estimator of the memory parameter d such that \(\log (n)(\hat{d}-d)=o_P(1)\), and let \(q\rightarrow \infty \) as \(n\rightarrow \infty \) with \(q=O(\sqrt{n})\). Then we have

Proof

By Theorem 2.2 of Abadir et al. (2009), we only need to verify the cumulant condition

for some positive constant \(\tilde{c}\). This is the case according to Lemma 2. \(\square \)

Remark 3

A readily available candidate for \(\hat{d}\) above is the local Whittle estimator with bandwidth \(m=n^a\), whose \(\log (n)\)-consistency was established in Theorem 2.
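The displays defining the long-run variance estimator did not survive in this text. The Python (NumPy) sketch below therefore assumes a Bartlett-weighted, HAC-type form with the memory normalisation \(q^{-2\hat{d}}\), in the spirit of Abadir et al. (2009): \(\hat{s}^2 = q^{-2\hat{d}}\big (\hat{\gamma }(0)+2\sum _{h=1}^q(1-h/q)\hat{\gamma }(h)\big )\), where \(\hat{\gamma }\) is the usual sample autocovariance. The exact weights and normalisation of the estimator in Proposition 3 should be checked against that reference; the function names are hypothetical.

```python
import numpy as np


def sample_autocov(y, h):
    """gamma_hat(h) = (1/n) sum_{t=1}^{n-h} (Y_t - Ybar)(Y_{t+h} - Ybar)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    yc = y - y.mean()
    return np.dot(yc[: n - h], yc[h:]) / n


def long_run_variance(y, q, d_hat):
    """Assumed form q^{-2 d_hat} (gamma(0) + 2 sum_{h=1}^q (1 - h/q) gamma(h))."""
    gam = [sample_autocov(y, h) for h in range(q + 1)]
    s = gam[0] + 2.0 * sum((1.0 - h / q) * gam[h] for h in range(1, q + 1))
    return q ** (-2.0 * d_hat) * s


# Toy input, small enough to check by hand: gamma(0) = 1, gamma(1) = -0.75.
y = [1.0, -1.0, 1.0, -1.0]
print(long_run_variance(y, q=2, d_hat=0.0))
```

With \(\hat{d}=0\), the factor \(q^{-2\hat{d}}\) equals 1 and the formula reduces to the classical Bartlett (Newey–West type) estimator. A positive \(\hat{d}\) rescales the sum, which grows like \(q^{2d}\) under long memory.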

5 Simulation and discussion

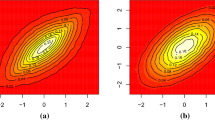

Recall that, in the context of this work, a long memory continuous-time process is observed at random times \(T_1,\ldots ,T_n\) generated by a renewal process. Therefore the number of observations n is fixed in advance, whereas the length \(T_n\) of the observation period is random. In our simulations, we compare various strategies for sampling a continuous-time process, to assess their impact on inference about the mean and the long memory parameter. Note that, in applications, the sampling scheme is usually imposed rather than chosen.

The simulations are conducted in the following context. We consider a zero-mean Gaussian process \((X_t)\) with a covariance function

where d is the memory parameter. This is indeed a covariance function, since it satisfies the conditions of Theorem 1 in Pólya (1949). The sampling process is either a Poisson process or deterministic. We consider two ways of constructing the Poisson process with rate \(\lambda \): either by fixing the number n of arrivals, or by fixing in advance the maximum duration T of the observation period. The parameters of the three strategies, given in Table 1, are chosen so that the simulation results are comparable: on average, the length of the observation period and the number of observations are the same for all three strategies.

5.1 Description of the compared sampling strategies

The first strategy corresponds to the sampling approach studied in this paper.

1. We fix n and generate \(T_1, T_2, \ldots , T_n\) as the first n arrivals of a Poisson process with rate \(\lambda \). The interarrival times \(\Delta _i = T_{i+1} - T_{i}\) are independent Exponential(\(\lambda \)) random variables. Then we generate a Gaussian vector \((X_{T_1},\ldots ,X_{T_n})\) with zero mean and covariance matrix \(\Sigma _1\) with entries \(\sigma _X(T_i-T_j)\).

2. We fix n and consider deterministic times \(t_1,\ldots ,t_n\) with constant increment \(\lambda ^{-1}\), the mean interarrival time of the previous Poisson process. Then we generate a Gaussian vector \((X_{t_1},\ldots ,X_{t_n})\) with zero mean and covariance matrix \(\Sigma _2\) with entries \(\sigma _X(t_i-t_j)\).

3. We fix the maximum duration T of the observation period, equal to the mean of the last arrival time \(T_n\) in strategy 1, i.e. \(T=\lambda ^{-1} n\). To simulate a realisation of a Poisson process with rate \(\lambda \) on [0, T], we generate the number N of arrivals on [0, T] from the Poisson distribution with parameter \(\lambda T\), and we simulate \(\tau _1,\ldots ,\tau _N\) as an ordered sample from the uniform distribution on [0, T]. Then we generate a Gaussian vector \((X_{\tau _1},\ldots ,X_{\tau _N})\) with zero mean and covariance matrix \(\Sigma _3\) with entries \(\sigma _X(\tau _i-\tau _j)\).
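The three sampling strategies can be sketched in Python (NumPy) as follows. The covariance display used in the simulations is missing from this text, so the sketch assumes a hypothetical Pólya-type covariance \(\sigma _X(t)=(1+\vert t\vert )^{2d-1}\). This function equals 1 at 0, is even, convex and decreasing on \((0,\infty )\), and tends to 0, so it is a valid covariance by Pólya's criterion. It is not necessarily the one used by the authors. The function names are illustrative.

```python
import numpy as np


def sigma_X(t, d):
    """Hypothetical Polya-type covariance (1 + |t|)^(2d - 1); sigma_X(0) = 1."""
    return (1.0 + np.abs(t)) ** (2.0 * d - 1.0)


def times_strategy1(n, lam, rng):
    """First n arrivals T_1 < ... < T_n of a rate-lam Poisson process (Exp(lam) gaps)."""
    return np.cumsum(rng.exponential(scale=1.0 / lam, size=n))


def times_strategy2(n, lam):
    """Deterministic grid t_i = i / lam, i = 1..n (spacing = mean interarrival time)."""
    return np.arange(1, n + 1) / lam


def times_strategy3(n, lam, rng):
    """Poisson process on [0, T], T = n / lam: N ~ Poisson(lam T), then sorted uniforms."""
    T = n / lam
    N = rng.poisson(lam * T)
    return np.sort(rng.uniform(0.0, T, size=N))


def sample_X_at(times, d, rng):
    """Zero-mean Gaussian vector (X_{t_1}, ..., X_{t_k}) with entries sigma_X(t_i - t_j).

    Assumes at least one time point (N > 0 is overwhelmingly likely for N ~ Poisson(200)).
    """
    cov = sigma_X(times[:, None] - times[None, :], d)
    return rng.multivariate_normal(np.zeros(times.size), cov, method="eigh")


rng = np.random.default_rng(2024)
n, lam, d = 200, 1.0, 0.25
samples = {
    "strategy 1": sample_X_at(times_strategy1(n, lam, rng), d, rng),
    "strategy 2": sample_X_at(times_strategy2(n, lam), d, rng),
    "strategy 3": sample_X_at(times_strategy3(n, lam, rng), d, rng),
}
for name, x in samples.items():
    print(name, x.size, round(float(x.mean()), 3))
```

The eigendecomposition option of `multivariate_normal` is used because very short exponential gaps can make the covariance matrix nearly singular.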

5.2 Summary of the simulation study and future directions

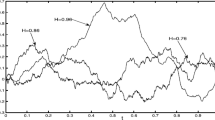

Numerical results are given in Table 2 and Figs. 1 and 2. We simulate from a zero-mean stationary Gaussian process with memory parameter \(d=0.25\), and compare the estimates of the mean and of the memory parameter for different rates of the renewal process (\(\lambda =\) 1/2, 1 and 2).

Estimation of the distribution of the local Whittle estimator for \(n=500\) and \(\lambda = 1/2 \text { (left), } 1 \text { (middle) and } 2 \text { (right). }\) Estimation is based on 1000 independent replications of the Gaussian process with zero mean \(\mu = 0\), long memory parameter \(d=0.25\) and \(n=1000\)

As far as the mean is concerned, there is no noticeable difference between the three strategies, and little change as the rate \(\lambda \) varies. For the memory parameter d, the bias and the standard deviation also remain of the same order across all three strategies. We encounter the classical difficulty of choosing the bandwidth in local Whittle estimation. This difficulty is more acute in our context, where the sampled process is neither Gaussian nor linear even though the original process is Gaussian. It would be worth investigating further the third strategy, namely random sampling from a continuous-time process over an observation period of fixed length T. One difficulty is that the interarrival times are then dependent.

References

Abadir KM, Distaso W, Giraitis L (2009) Two estimators of the long-run variance: beyond short memory. J Econom 150(1):56–70

Axler S, Bourdon P, Ramey W (2000) Harmonic function theory. Graduate texts in mathematics, vol 137. Springer, New York

Bardet J-M, Bertrand P (2010) A non-parametric estimator of the spectral density of a continuous-time Gaussian process observed at random times. Scand J Stat 37(3):458–476

Beran J, Feng Y, Ghosh S, Kulik R (2013) Long-memory processes. Probabilistic properties and statistical methods. Springer, Heidelberg

Brillinger DR (1969) The calculation of cumulants via conditioning. Ann Inst Stat Math 21(1):215–218

Chambers MJ (1996) The estimation of continuous parameter long-memory time series models. Econom Theory 12(2):374–390

Comte F (1996) Simulation and estimation of long memory continuous time models. J Time Ser Anal 17(1):19–36

Dacorogna MM (2001) An introduction to high-frequency finance. Academic Press, San Diego

Dalla V, Giraitis L, Hidalgo J (2006) Consistent estimation of the memory parameter for nonlinear time series. J Time Ser Anal 27(2):211–251

Doukhan P, Oppenheim G, Taqqu M (2003) Theory and applications of long-range dependence. Birkhäuser, Boston

Giraitis L, Leipus R, Philippe A (2006) A test for stationarity versus trends and unit roots for a wide class of dependent errors. Econom Theory 22:989–1029

Giraitis L, Koul HL, Surgailis D (2012) Large sample inference for long memory processes. Imperial College Press, London

Gradshteyn IS, Ryzhik IM (2015) Table of integrals, series, and products, 8th edn. Elsevier/Academic Press, Amsterdam

Hurvich CM, Beltrão KI (1993) Asymptotics for the low-frequency ordinates of the periodogram of a long-memory time series. J Time Ser Anal 14(5):455–472

Jones RH (1985) Time series analysis with unequally spaced data. Handb Stat 5:157–177

Leonenko N, Olenko A (2013) Tauberian and Abelian theorems for long-range dependent random fields. Methodol Comput Appl Probab 15(4):715–742

Li Z (2014) Methods for irregularly sampled continuous time processes. PhD Thesis, University College London

Philippe A, Viano M-C (2010) Random sampling of long-memory stationary processes. J Stat Plan Inference 140(5):1110–1124

Philippe A, Robet C, Viano M-C (2021) Random discretization of stationary continuous time processes. Metrika 84(3):375–400

Pólya G (1949) Remarks on characteristic functions. In: Proceedings of the first Berkeley conference on mathematical statistics and probability. pp 115–123

Thiebaut C, Roques S (2005) Time-scale and time-frequency analyses of irregularly sampled astronomical time series. EURASIP J Adv Signal Process 2005(15):852587

Tsai H, Chan KS (2005a) Maximum likelihood estimation of linear continuous time long memory processes with discrete time data. J R Stat Soc Ser B Stat Methodol 67(5):703–716

Tsai H, Chan KS (2005b) Quasi-maximum likelihood estimation for a class of continuous-time long-memory processes. J Time Ser Anal 26(5):691–713


Appendix: Proof of Lemma 2

Proof

The proof is essentially based on Corollary 2 and a well known cumulant formula.

Without loss of generality, we can assume that the Poisson rate is 1. The process Y is 4th order stationary, since the conditional joint distribution of \((Y_k,Y_{k+h},Y_{k+r},Y_{k+s})\) given \((T_1,\dots ,T_{k+\max (h,r,s)})\) is multivariate normal with variance-covariance matrix \(M(T_k,T_{k+h},T_{k+r},T_{k+s})\) given by

which does not depend on k. Hence it is enough to establish the lemma for \(k=0\). We apply the law of total cumulance (Brillinger 1969), which we recall here for clarity: for all random vectors \(Z=(Z_1,\ldots ,Z_n)'\) and W, we have

$$\begin{aligned} \textrm{cum}(Z_1,\ldots ,Z_n) = \sum _{\pi } \textrm{cum}\big (\textrm{cum}(Z_{\pi _1}\vert W),\ldots ,\textrm{cum}(Z_{\pi _b}\vert W)\big ), \end{aligned}$$(42)

where \(Z_{\pi _j}=(Z_i,i\in \pi _j)\), \(\pi _1,\ldots ,\pi _b\) (\(b=1,\ldots ,n\)) are the blocks of the partition \(\pi \), and the sum is over all partitions \(\pi \) of the set \(\{1,2,\ldots ,n\}\).

Conditionally on T, the process \((Y_t)\) is jointly zero-mean Gaussian; therefore \(\mathbb {E}(Y_t\vert T)=0\) and \(\textrm{cum}(Y_i,Y_j,Y_k,Y_\ell \vert T)=\textrm{cum}(Y_i,Y_j,Y_k\vert T)=0\) for all \(i,j,k,\ell \). Hence, applying (42) to \(Y_t\) with \(W=T\), only the partitions of \(\{0,h,r,s\}\) into two pairs survive, and since \(\textrm{cum}(U,V)=\text {Cov}(U,V)\), we get from (41)

Note that for \( h< \min (r,s)\), \(\textrm{Cov}(\sigma _X(T_h),\sigma _X(T_r-T_s))=0\). Moreover

The last configuration is

Therefore uniformly in h we have

For the remaining two terms in the right hand side of (43) we have, for fixed h,

This concludes the proof of (37).

Let us now prove (38). Note that

Moreover, we have

In the particular case \(d=1/4\) (where we still have \(\alpha =2\)), a supplementary \(\log (n)\) term is needed in the bound. Indeed, we split the sum in the right-hand side of (44) into 3 configurations. When \(1 \le h \le r< s \le n\), the covariance \( \textrm{Cov}(\sigma _X(T_h),\sigma _X(T_r-T_s))\) is zero. When the sum is over \(1 \le r< h \le s \le n\), we get

For the last sum over \(1 \le r < s \le h \le n\) (where we will need the \(\log \) term) we have

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ould Haye, M., Philippe, A. & Robet, C. Inference for continuous-time long memory randomly sampled processes. Stat Papers 65, 3111–3134 (2024). https://doi.org/10.1007/s00362-023-01515-z
