Abstract

Recently, there has been growing interest among statistical researchers in developing new probability distributions by adding one or more parameters to existing ones. In this article, we describe a bibliometric analysis carried out to show the evolution of various models based on the seminal model of Marshall and Olkin (Biometrika, 1997). This method allows us to explore and analyze large volumes of scientific data through performance indicators that identify the main contributions of authors, universities, and journals in terms of productivity, citations, and bibliographic coupling. The analysis was performed using the Bibliometrix R package. The sample of analyzed data consisted of articles indexed in the Web of Science Core Collection and Scopus between 1997 and 2021. This work also includes an overview of the methodology used, the corresponding quantitative analyses, a visualization of collaboration and co-citation networks, and a description of topic trends. In total, 131 articles published in 67 journals were analyzed. Two journals published 17% of the manuscripts analyzed in this work. We identified 238 distinct authors who have participated in the development of this research topic. In 2020, 49% of the authors presented new distributions, with proposed models that included up to six parameters. The publications were grouped into 20 collaborative groups, of which Groups 1 and 2 are dominant in the development of new models; 24% of the publications analyzed belong to the two researchers who lead these groups. To show the flexibility and potential of the new distributions, the authors apply their models to at least two real-world data sets. This article gives a broad overview of different generalizations of the Marshall-Olkin model and will be of great help to those interested in this line of research.

Introduction

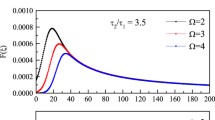

Marshall and Olkin (1997) introduced a method for adding a parameter to a family of distributions and applied it to the exponential and Weibull distributions to obtain new distributions. The aim of this procedure is to develop distributions with greater flexibility for modeling various types of data. According to the authors, if \(\overline{F}(x)\) denotes the survival function of a continuous random variable \(X\), then adding the parameter \(\alpha>0\) yields another survival function \(\overline{G}(x)\) defined by

\[
\overline{G}(x)=\frac{\alpha\,\overline{F}(x)}{1-(1-\alpha)\,\overline{F}(x)},\qquad x\in\mathbb{R},\ \alpha>0. \tag{1}
\]

The probability density function (PDF) and the cumulative distribution function (CDF) corresponding to Eq. (1) are

\[
g(x)=\frac{\alpha\, f(x)}{\left[1-(1-\alpha)\,\overline{F}(x)\right]^{2}} \tag{2}
\]

and

\[
G(x)=\frac{F(x)}{1-(1-\alpha)\,\overline{F}(x)}, \tag{3}
\]

where \(f(x)\) is the PDF corresponding to \(\overline{F}(x)\) and \(F(x)=1-\overline{F}(x)\).
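To make the construction concrete, the sketch below evaluates the Marshall–Olkin survival function \(\overline{G}(x)=\alpha\overline{F}(x)/[1-(1-\alpha)\overline{F}(x)]\), its density and its CDF for an exponential baseline. The exponential baseline and the values \(\lambda=1.5\) and \(\alpha=2\) are illustrative assumptions, not taken from any of the reviewed articles. The script checks numerically that \(G(x)+\overline{G}(x)=1\), that the derivative of the CDF matches the density, and that \(\alpha=1\) recovers the baseline.

```python
import math


def mo_survival(x, alpha, base_sf):
    """Gbar(x) = alpha * Fbar(x) / (1 - (1 - alpha) * Fbar(x))."""
    s = base_sf(x)
    return alpha * s / (1.0 - (1.0 - alpha) * s)


def mo_pdf(x, alpha, base_pdf, base_sf):
    """g(x) = alpha * f(x) / (1 - (1 - alpha) * Fbar(x))**2."""
    return alpha * base_pdf(x) / (1.0 - (1.0 - alpha) * base_sf(x)) ** 2


def mo_cdf(x, alpha, base_cdf, base_sf):
    """G(x) = F(x) / (1 - (1 - alpha) * Fbar(x))."""
    return base_cdf(x) / (1.0 - (1.0 - alpha) * base_sf(x))


# Illustrative baseline (an assumption): exponential distribution with rate LAM
LAM = 1.5


def exp_sf(x):
    return math.exp(-LAM * x)


def exp_cdf(x):
    return 1.0 - math.exp(-LAM * x)


def exp_pdf(x):
    return LAM * math.exp(-LAM * x)


ALPHA = 2.0
STEP = 1e-6  # step for a central-difference derivative of the CDF
for x in (0.1, 0.5, 1.0, 2.0):
    total = mo_cdf(x, ALPHA, exp_cdf, exp_sf) + mo_survival(x, ALPHA, exp_sf)
    slope = (mo_cdf(x + STEP, ALPHA, exp_cdf, exp_sf)
             - mo_cdf(x - STEP, ALPHA, exp_cdf, exp_sf)) / (2 * STEP)
    density = mo_pdf(x, ALPHA, exp_pdf, exp_sf)
    print(f"x={x}: G+Gbar={total:.12f}  dG/dx={slope:.6f}  g(x)={density:.6f}")

# alpha = 1 leaves the baseline survival function unchanged
print(mo_survival(0.7, 1.0, exp_sf), exp_sf(0.7))
```

Passing the baseline as plain functions keeps the sketch generic: any baseline survival, CDF and density can be plugged in without changing the Marshall–Olkin formulas.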

The hazard rate function of the Marshall–Olkin extended distribution is given by

\[
h(x)=\frac{r(x)}{1-(1-\alpha)\,\overline{F}(x)}, \tag{4}
\]

where \(r(x)=\frac{f(x)}{\overline{F}(x)}\) is the hazard rate function of the baseline distribution.

Marshall and Olkin called the additional parameter the “tilt parameter”, since the hazard rate of the new family lies below \((\alpha>1)\) or above \((0<\alpha\le 1)\) the hazard rate of the underlying distribution. In other words, for all \(x\ge 0\), \(h(x)\le r(x)\) when \(\alpha>1\), and \(h(x)\ge r(x)\) when \(0<\alpha\le 1\).
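The tilt property follows from \(h(x)/r(x)=1/[1-(1-\alpha)\overline{F}(x)]\): for \(\alpha>1\) the denominator exceeds 1, and for \(0<\alpha<1\) it is below 1. The sketch below checks this on a grid for a Weibull baseline with shape 1.5 and unit scale; the baseline, its parameters and the grid are illustrative assumptions.

```python
import math


def mo_hazard(x, alpha, base_hazard, base_sf):
    """h(x) = r(x) / (1 - (1 - alpha) * Fbar(x)), with r the baseline hazard."""
    return base_hazard(x) / (1.0 - (1.0 - alpha) * base_sf(x))


# Illustrative baseline (an assumption): Weibull with shape K and unit scale
K = 1.5


def weib_sf(x):
    return math.exp(-x ** K)


def weib_hazard(x):
    return K * x ** (K - 1)


def hazard_ratio(x, alpha):
    """h(x) / r(x); values below 1 mean the MO hazard lies under the baseline hazard."""
    return mo_hazard(x, alpha, weib_hazard, weib_sf) / weib_hazard(x)


grid = [0.2 * i for i in range(1, 21)]  # x = 0.2, 0.4, ..., 4.0
for alpha in (0.5, 1.0, 2.0):
    ratios = [hazard_ratio(x, alpha) for x in grid]
    print(f"alpha={alpha}: h/r between {min(ratios):.3f} and {max(ratios):.3f}")
```

Because the ratio depends on \(x\) only through \(\overline{F}(x)\), it approaches 1 in the right tail for any \(\alpha\), so the tilt is strongest at small \(x\).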

Since the seminal work of Marshall and Olkin (1997), many researchers have introduced new distributions or generalized existing ones to model the behavior of real data sets. The main objective of proposing, extending, or generalizing models is to explain how a data set behaves in lifetime analysis, survival times, failure times, and reliability analysis. Furthermore, the proposed models have been applied in areas such as medicine, public health, biology, physics, computer science, finance and insurance, engineering, industry, and communications, among others (Jayakumar & Sankaran, 2019a, 2019b; Nassar et al., 2019; Rondero-Guerrero et al., 2020).

For instance, better modeling in the reliability analysis of system components is increasingly important in virtually all sectors of manufacturing, engineering, and administration. Indeed, reliability engineering studies the ability of a device to function without failure in order to predict or estimate the risk of failure; that is, it studies the capacity of a component or system to function during a specific time or over an interval of time (Ricardo P. Oliveira et al., 2021). The use of different lifetime distributions has therefore become more critical due to the global dynamics of trade. Because there is a growing variety of products worldwide and an increasing focus on quality control, more companies are under pressure to perform reliability analyses of their products to understand failure and survival rates. In addition, the use of statistical lifetime distributions has grown in fields such as the biological sciences, life testing, and medicine, since they can help predict disease behavior and, in particular, inform control and mitigation measures in response to the social impact of epidemics and pandemics.

Although Ricardo P. Oliveira et al. (2021), Algarni (2021), and Eghwerido et al. (2021) note that lifetime distributions such as the Weibull, exponential, Lindley, and Weibull exponential distributions, among others, can be used to model such data, in many cases these distributions do not fit phenomena with non-monotone failure rates well, such as bathtub-shaped or upside-down bathtub-shaped failure rates. For this reason, many researchers have developed new, more flexible models in the last decade.

This article therefore aims to analyze, through a bibliometric approach, publications related to new distribution models or generalizations of existing distributions that derive from the seminal work of Marshall and Olkin (1997). For this bibliometric study, we focus on publications found in the Web of Science (Core Collection) and Scopus databases. In addition, this study considers several bibliometric indicators related to authors, journals, and articles. The paper applies various bibliometric techniques, using the open-source R software through RStudio, to map collaboration and co-citation networks. The contribution of this work to the literature lies in:

-

Describing how research that builds on Marshall and Olkin (1997) to develop new distributions is organized and has advanced in terms of publications, authors, and journals, and identifying bibliometric trends.

-

Presenting the main characteristics of the new distributions or distribution families.

Materials and method

Bibliometric analysis is a type of quantitative analysis used to classify and report bibliographic data on a particular research topic. In other words, it is used to measure the impact of journals, identify authors, and detect new research lines. This type of analysis involves the mathematical and statistical treatment of scientific publications and their respective citations. Bibliometric analysis results provide relevant information about the level of activity (research) that exists among authors, organizations or countries, as well as the evolution of research topics (Cancino et al., 2019; Ferreira, 2018; Lei & Xu, 2020).

We chose a bibliometric study because it develops a systematic, transparent, and reproducible process of identifying relevant manuscripts. In addition, a series of techniques are applied to evaluate scientific production through objective and quantitative indicators of bibliographic data (Krainer et al., 2020). The protocol used for this bibliometric analysis is shown in Fig. 1. This process begins by establishing the research topic and then continues with four sequential stages that provide sufficient evidence on the contribution and development of scientific knowledge (development or generalization of new distributions).

Also, in this protocol, two main categories of analysis are considered: performance analysis and scientific mapping. The first one aims to present a descriptive analysis of the following parameters: authors, journals, institutions, and articles. The second focuses on mapping bibliometric networks to explore the interrelationship between authors and references (Ruggeri et al., 2019).

The “RStudio” software was used with the Bibliometrix 3.0.1 package. This package is appropriate for bibliometric and scientometric studies because it provides users with a greater degree of control over modifying and adjusting the input and output data (Aria & Cuccurullo, 2020).

Data collection

Oorschot et al. (2018) and Ruggeri et al. (2019) state that, to guarantee the highest quality of the documents analyzed, it is vital to use the Web of Science database (Core Collection), since the validity of any bibliometric analysis depends mostly on the selection of publications. These authors indicate that Web of Science meets the highest standards in terms of impact factor and number of citations. Lei and Xu (2020), in turn, note that Scopus is another database with high quality standards; according to them, Scopus is the world's largest abstract and citation database of peer-reviewed journal literature.

Therefore, to achieve the objective of this study, both the Web of Science (Core Collection) and Scopus databases were selected. Data collection began on April 30, 2021. For the Web of Science database, the following search string was used: Topic (Marshall-Olkin), Publication Years (2021–1997), and Document Types (Article). This search produced 339 articles. Each manuscript was then reviewed to verify that its subject matter was consistent with the topic of this study. Through this process, 239 documents were removed, resulting in a dataset of 100 documents.

The Scopus records were obtained with the following search string: Article title, Abstract, Keywords (Marshall-Olkin), Year (2021–1997), and Document Type (Article). The search returned 352 articles. The same screening process used for Web of Science was applied to verify the subject matter of each manuscript, resulting in 106 documents.

Finally, to combine the bibliographic data from Web of Science and Scopus and eliminate duplicate articles, the “mergeDbSources” function of the Bibliometrix package was used (Aria & Cuccurullo, 2020), yielding a total of 131 articles for bibliometric analysis.
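The deduplication step can be illustrated with a small sketch. This Python code is not the implementation of `mergeDbSources` (an R function in Bibliometrix); it is a hypothetical illustration that treats two records as duplicates when they share a DOI or a normalized title. The record fields `title` and `doi`, and the example records, are invented for the illustration. With the counts reported above, the 100 + 106 = 206 screened records were reduced to 131, which implies that 75 records were removed as duplicates.

```python
import re


def normalize_title(title):
    """Lower-case a title and collapse punctuation/whitespace so near-identical strings match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def merge_sources(*sources):
    """Concatenate record lists, skipping any record whose DOI or normalized title was already kept."""
    seen_dois, seen_titles = set(), set()
    merged = []
    for source in sources:
        for record in source:
            doi = (record.get("doi") or "").strip().lower()
            title = normalize_title(record["title"])
            if (doi and doi in seen_dois) or title in seen_titles:
                continue  # duplicate of a record from an earlier source
            merged.append(record)
            if doi:
                seen_dois.add(doi)
            seen_titles.add(title)
    return merged


# Hypothetical records (not real bibliographic data)
wos = [
    {"title": "Example Title One", "doi": "10.0000/example.1"},
    {"title": "Example title two", "doi": ""},
]
scopus = [
    {"title": "Example title one.", "doi": "10.0000/EXAMPLE.1"},  # same DOI, different case
    {"title": "EXAMPLE TITLE TWO", "doi": "10.0000/example.2"},   # same title, DOI only here
    {"title": "Example title three", "doi": "10.0000/example.3"},
]
merged = merge_sources(wos, scopus)
print(len(merged))  # 3
```

Checking both keys matters because the same article can carry a DOI in one database and none in the other; keying on the DOI alone would keep both copies.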

Bibliometric analysis

In this section, a quantitative analysis of the articles in the database is presented and discussed. First, we carried out a descriptive analysis considering the following parameters: authors, journals, institutions, and articles. Second, we conducted a bibliometric network analysis considering collaboration and co-citation networks.

A total of 238 authors participated in the 131 articles analyzed, and collaboration between authors plays a key role in the development of new models. Table 1 shows that eight papers were each written by a single author and that the rate of collaboration between authors is 1.8. The main information about the bibliometric data is shown in Table 1.
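The text does not define the "rate of collaboration"; the value of 1.8 is consistent with the number of distinct authors per document (238/131 ≈ 1.82). Assuming that reading, the sketch below computes this ratio together with two related indicators from per-document author lists: the share of multi-authored documents and a collaboration index (total authors of multi-authored documents divided by the number of multi-authored documents), which is one common definition. The input format and the toy data are hypothetical.

```python
def collaboration_summary(author_lists):
    """Simple collaboration indicators from one list of author names per document."""
    n_docs = len(author_lists)
    distinct_authors = {a for authors in author_lists for a in authors}
    multi = [authors for authors in author_lists if len(authors) > 1]
    return {
        "authors_per_document": len(distinct_authors) / n_docs,
        "share_multi_authored": len(multi) / n_docs,
        # total authors of multi-authored documents / number of multi-authored documents
        "collaboration_index": sum(len(a) for a in multi) / len(multi) if multi else 0.0,
    }


# Totals reported in the text: 238 distinct authors across 131 articles
print(round(238 / 131, 2))  # 1.82

# Hypothetical toy input: one author list per document
docs = [["A"], ["A", "B"], ["B", "C", "D"], ["C", "E"]]
print(collaboration_summary(docs))
```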

Annuals and trends

Table 2 presents an overview of the annual scientific production from 1997 to April 21, 2021. As shown in the table, from 1997 to 2011 there were only six publications related to new classes or generalizations of distributions based on the seminal work of Marshall and Olkin (1997). The table also shows that interest in developing new probability distributions began to emerge between 2013 and 2015, and that the publication of new distribution models has increased significantly since 2016. In terms of total citations (TC) and average citations per article (ATC), the most cited manuscripts correspond to the year 2007, even though only two articles were published that year. The number of authors has also followed an upward trend from 2013 to date, as shown in Table 2.

The main authors, most influential articles and journals

Table 1 showed that 238 authors in the analyzed database have written about new distributions or have extended a distribution class. This section presents the most productive authors, i.e., those with five or more publications. Under this criterion, 12 researchers were identified, as Table 3 shows, ordered from highest to lowest number of publications. Cordeiro G. has contributed the most to the development of new distributions, with a total of 17 publications, followed by Afify A. with 14 articles. Regarding total citations, the most cited authors are Cordeiro G. (123), Al-Awadhi F. (105), Alkhalfan L. (105), Ghitany M. (105), Yousof H. (101), and Afify A. (97).

One of the objectives of researchers is to achieve a significant and recognized impact through their publications. One way of assessing an author's impact is to consider where and how often their work is cited; author-level metrics are citation metrics that measure the bibliometric impact of individual authors. Table 3 presents three such metrics for the authors analyzed here. As seen in Table 3, Cordeiro G. ranks first in two of the three indices (h-index and g-index), and Yousof H. has the highest m-index.
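The three author-level metrics in Table 3 are not defined in the text. Under their usual definitions, the h-index is the largest h such that h papers have at least h citations each; the g-index is the largest g such that the g most cited papers together have at least g² citations; and the m-index divides the h-index by the number of years since the author's first publication. The sketch below implements these definitions. The citation counts in the example are invented, and counting the first publication year as year 1 is an assumption that may differ from the convention used by Bibliometrix.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    # c_i - i is strictly decreasing in i, so counting the papers with c_i >= i gives h
    return sum(1 for i, c in enumerate(ranked, start=1) if c >= i)


def g_index(citations):
    """Largest g (at most the number of papers) whose top-g papers total >= g**2 citations."""
    ranked = sorted(citations, reverse=True)
    total, g = 0, 0
    for i, c in enumerate(ranked, start=1):
        total += c
        if total >= i * i:
            g = i
    return g


def m_index(citations, first_year, current_year):
    """h-index divided by the number of years since the first publication (first year counts as 1)."""
    return h_index(citations) / (current_year - first_year + 1)


# Hypothetical citation counts for one author's papers
cites = [10, 8, 5, 4, 3, 0]
print(h_index(cites), g_index(cites), round(m_index(cites, 2015, 2021), 3))  # 4 5 0.571
```

The g-index gives extra weight to a few highly cited papers, which is why an author can rank higher on g than on h.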

Another aspect of a bibliometric analysis is to identify the most influential documents in the development of new distributions. As shown in Table 4, the document by Ghitany et al. (2007) is the most cited in the database, with a total of 105 citations. In this article, the authors present a new variant of “the extended family of Marshall and Olkin distributions,” in which they use the Lomax distribution as the baseline to generate a new model. A notable aspect of the publications shown in Table 4 is that two articles were each written by a single author, whereas the other eight were collaborations.

Of the different document types available on scientific platforms or databases, journals are considered the most important means of communication and are among the most widely used sources in the scientific community. A journal serves as an official means of publicly recording scientific and academic findings; that is, it is a scientific and social institution that reflects aspects such as the contribution and prestige of researchers (authors), the disciplines of the most influential scientists, and the most productive institutions, countries, and publishers.

Table 5 shows the journals that have two or more publications in this database. As shown in the table, the journal “Communications in Statistics-Theory and Methods” ranks first in the number of articles and citations, with 13 and 156, respectively. However, the journal “Statistical Papers” has the highest proportion of citations per paper (3 papers and 85 citations). On the other hand, journal quality can be determined by the impact factor (in this case, the relationship between the number of articles and the sum of the citations of these articles). In this bibliometric analysis, the journal with the highest impact factor is “Journal of King Saud University – Science”, followed by “Journal of Computational and Applied Mathematics”, and “Statistical Papers”.
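The citations-per-article comparison in this paragraph is simple arithmetic on the totals given for two journals, reproduced below. This ratio is not the same quantity as the Journal Impact Factor published by Clarivate, which divides the citations received in one year by the citable items published in the two preceding years.

```python
# (articles, total citations) as reported in the text for two journals in Table 5
journals = {
    "Communications in Statistics-Theory and Methods": (13, 156),
    "Statistical Papers": (3, 85),
}

# Citations per article: total citations divided by the number of articles
citations_per_article = {
    name: citations / articles for name, (articles, citations) in journals.items()
}

for name, ratio in sorted(citations_per_article.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {ratio:.2f}")
```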

Bibliometric networks

Network analysis is a technique widely used in bibliometric and scientometric studies. Bibliometric networks generally consist of nodes, which may be authors (researchers), universities, countries, journals, keywords, or references, and links representing the relationships between them. In each case, the corresponding bibliometric network represents a set of documents under study. The software used in this work allows the following bibliometric networks to be created and represented visually: collaboration networks, co-citation networks, coupling networks, and co-occurrence networks.

The mapping method in the Bibliometrix 3.0.1 package consists of three stages: (1) the standardization method, (2) the type of network, and (3) the clustering algorithm for the nodes in the network. To visualize bibliometric networks, the “net2VOSviewer” function of the Bibliometrix 3.0.1 package was used to export the networks obtained to the VOSviewer software (Aria & Cuccurullo, 2020). The collaboration and co-citation networks for this work are presented below.

Collaboration network

This analysis identifies the level of collaboration in published research from three perspectives: authors, institutions, and countries. Its aim is to investigate the strength of research collaboration in a specific field.

Concerning co-authorship relationships, Table 1 shows that 12 of the 238 authors are listed as sole authors; that is, 91% of the articles in this database were co-authored. Figure 2 shows the collaboration network between authors, in which the most collaborative authors, in terms of publications, are represented by larger nodes. In Fig. 2, the largest nodes correspond to Cordeiro G. and Afify A., who have the most articles and the greatest total link strength, which is consistent with Table 3. In addition, 20 research clusters, represented by different colors, were identified; the clusters of Afify A., Ozel G., Jamal F., Yousof H., Alizadeh M., and Nasir M. collaborate closely with Cordeiro G. The remaining researchers have fewer publications and weaker collaborative links. As a result, the clusters are distributed separately: some are connected to the rest of the network through a single researcher, and others have no connection or collaboration with other research clusters.
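In a co-authorship network, the weight of the link between two authors is typically the number of documents they co-authored, and an author's total link strength is the sum of the weights of that author's links. The sketch below computes both quantities from per-document author lists under full counting. The input format and author names are hypothetical, and the clustering step performed in VOSviewer, which produced the 20 clusters, is not reproduced.

```python
from collections import Counter
from itertools import combinations


def coauthorship_links(author_lists):
    """Link weight between two authors = number of documents they co-authored (full counting)."""
    links = Counter()
    for authors in author_lists:
        for pair in combinations(sorted(set(authors)), 2):
            links[pair] += 1
    return links


def total_link_strength(links):
    """Sum of the weights of all links attached to each author."""
    strength = Counter()
    for (a, b), weight in links.items():
        strength[a] += weight
        strength[b] += weight
    return strength


# Hypothetical author lists, one per document; "E" wrote a single-author paper
docs = [["A", "B"], ["A", "B", "C"], ["A", "D"], ["E"]]
links = coauthorship_links(docs)
strength = total_link_strength(links)
print(dict(links))  # {('A', 'B'): 2, ('A', 'C'): 1, ('B', 'C'): 1, ('A', 'D'): 1}
print(strength.most_common())
```

A single-author paper produces no links, so an author like "E" appears as an isolated node, one way in which clusters end up disconnected from the rest of the network.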

Co-citation network

According to Ruggeri et al. (2019), co-citation analysis is a bibliometric technique proposed by Small (1973) that represents, through a network, the structure of a set of documents that are frequently cited together. In other words, the more often two papers are cited together, the stronger their association, which suggests that the authors’ research is closely related (i.e., it belongs to the same research field).
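Co-citation strength can be computed directly from the reference lists of the citing articles: two references are co-cited once for every citing article that lists both. The minimal sketch below counts raw co-citations for hypothetical reference identifiers and, as one possible normalization, Salton's cosine \(c_{ab}/\sqrt{c_a c_b}\), where \(c_a\) is the number of citing articles that cite \(a\). It is an illustration only and does not reproduce the exact normalization or clustering settings used by Bibliometrix or VOSviewer in this study.

```python
import math
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Number of citing documents in which each pair of references appears together."""
    counts = Counter()
    for refs in reference_lists:
        for pair in combinations(sorted(set(refs)), 2):
            counts[pair] += 1
    return counts


def salton_cosine(reference_lists):
    """Co-citation count normalized by the citation counts of the two references."""
    cited = Counter(ref for refs in reference_lists for ref in set(refs))
    return {
        (a, b): n / math.sqrt(cited[a] * cited[b])
        for (a, b), n in cocitation_counts(reference_lists).items()
    }


# Hypothetical reference lists of three citing articles
citing = [["R1", "R2", "R3"], ["R1", "R2"], ["R2", "R4"]]
print(cocitation_counts(citing).most_common())
print(salton_cosine(citing))
```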

Figure 3 presents the co-citation network of references. The database recorded a total of 3,505 cited references, of which the 200 most cited are shown as nodes in Fig. 3; these are the articles most frequently cited in publications related to the development of new distributions. The most cited reference is the seminal work of Marshall and Olkin (1997), which suggests that this article is the central knowledge base for developing new distributions, consistent with the objective of this study. In addition, among the 131 articles analyzed, the manuscript of Jayakumar and Mathew (2008) is the most cited within the local citations.

Main findings of the bibliometric analysis

According to the database, in the last six years researchers, mainly in statistics, mathematics, and engineering, have developed new distributions or generalized and extended existing models to increase their versatility. Popular and widely used distributions such as the exponential, gamma, normal, and Weibull, among others, have limited shapes and therefore limited flexibility. For this reason, many authors have used different techniques to build new models. According to El-Morshedy et al. (2020), the main reasons for these new models are:

-

To build heavy-tailed distributions to be able to model real data.

-

To make the kurtosis more flexible compared to the baseline model(s).

-

To generate distributions with symmetric, left-skewed, right-skewed, or reversed-J shape.

-

To provide more flexibility in the cumulative distribution function and the hazard rate function.

-

To provide a better fit than models generated under the same baseline distribution.

Another relevant point considered by the articles was the method for estimating the parameters of the model. Ninety-five percent of the papers presented a method for estimating the parameters of their model. The rest of the manuscripts did not present this point. The predominant method was that of maximum likelihood; 124 articles used this method. Also, 13 manuscripts applied the Bayesian estimation method. Other methods used to estimate parameters were the maximum product spacing, the least-squares method, and the interval estimation method.
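Since maximum likelihood is the dominant estimation method, a sketch of what it involves for the simplest case may help. The code below fits the Marshall–Olkin extended exponential distribution, with density \(g(x)=\alpha\lambda e^{-\lambda x}/[1-(1-\alpha)e^{-\lambda x}]^2\), by numerically minimizing the negative log-likelihood with SciPy's Nelder–Mead optimizer. The baseline, the simulated data and the true values \(\alpha=3\), \(\lambda=0.5\) are illustrative assumptions; the reviewed articles use a variety of baselines, software and optimizers.

```python
import numpy as np
from scipy.optimize import minimize


def mo_exp_logpdf(x, alpha, lam):
    """log g(x) for g(x) = alpha*lam*exp(-lam x) / [1 - (1 - alpha) exp(-lam x)]^2."""
    s = np.exp(-lam * x)  # baseline (exponential) survival function
    return np.log(alpha) + np.log(lam) - lam * x - 2.0 * np.log1p((alpha - 1.0) * s)


def neg_loglik(theta, x):
    """Negative log-likelihood; theta = (log alpha, log lam) keeps both parameters positive."""
    alpha, lam = np.exp(theta)
    return -np.sum(mo_exp_logpdf(x, alpha, lam))


def fit_mo_exponential(x):
    """Maximum likelihood estimates (alpha, lam) and the maximized log-likelihood."""
    x = np.asarray(x, dtype=float)
    start = np.array([0.0, np.log(1.0 / x.mean())])  # alpha = 1 (baseline), lam = 1 / mean
    res = minimize(neg_loglik, start, args=(x,), method="Nelder-Mead",
                   options={"xatol": 1e-8, "fatol": 1e-8, "maxiter": 2000})
    alpha_hat, lam_hat = np.exp(res.x)
    return alpha_hat, lam_hat, -res.fun


def sample_mo_exponential(n, alpha, lam, rng):
    """Inverse-CDF sampling: G(x) = u gives exp(-lam x) = (1 - u) / (1 - (1 - alpha) u)."""
    u = rng.uniform(size=n)
    s = (1.0 - u) / (1.0 - (1.0 - alpha) * u)
    return -np.log(s) / lam


rng = np.random.default_rng(2021)
data = sample_mo_exponential(5000, alpha=3.0, lam=0.5, rng=rng)
alpha_hat, lam_hat, loglik = fit_mo_exponential(data)
print(f"alpha_hat={alpha_hat:.3f}, lam_hat={lam_hat:.3f}, log-likelihood={loglik:.2f}")
```

Optimizing on the log scale avoids explicit bound constraints, and starting from \(\alpha=1\) means the search begins at the baseline model that the extension generalizes.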

The main topics addressed in the manuscripts are survival function, hazard function, mean residual life, Renyi entropy, moments, moment generating function, quantile function, order statistics, stochastic orderings, estimation method, simulation, and applications. Of the 131 works, 91 of the articles mention that they used software to find the values of the parameters in the different simulations and applications of the proposed models. Seventy-one manuscripts used the open-source software "R." They also used other software such as Maple, Matlab, Mathematical, SAS, Python, Mathcad, Mathematica, and Ox matrix programming language. The rest of the papers did not mention what software they used.

Ninety-one percent of the publications illustrate the practical importance of the proposed model by applying it to a real data set to show the new distribution's potential and flexibility. The proposed model is fitted to the data and compared with other existing models. For comparison purposes, the authors calculate goodness-of-fit statistics such as the Akaike information criterion (AIC), consistent Akaike information criterion (CAIC), Bayesian information criterion (BIC), Hannan-Quinn information criterion (HQIC), Anderson-Darling (AD) statistic, Cramér-von Mises criterion (CVMC), and Kolmogorov-Smirnov (KS) statistic, together with the corresponding p-values. An important aspect of the data used to illustrate the new distributions is that 109 articles take their data sets from other publications, with reference years from 1965 to 2011.
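Most of the criteria listed above are simple functions of the maximized log-likelihood \(\hat\ell\), the number of parameters \(k\) and the sample size \(n\). The sketch below computes AIC, BIC, HQIC and CAIC, plus the KS distance between the empirical CDF and a fitted CDF. It uses Bozdogan's consistent AIC, \(-2\hat\ell + k(\ln n + 1)\); some authors use the same acronym for the small-sample corrected AIC, \(\mathrm{AIC} + 2k(k+1)/(n-k-1)\), so the definition used in each article should be checked. The AD and CvM statistics and all p-values are omitted, and the example inputs are hypothetical.

```python
import math


def information_criteria(loglik, k, n):
    """Criteria from a maximized log-likelihood with k parameters and n observations (lower is better)."""
    return {
        "AIC": -2.0 * loglik + 2.0 * k,
        "CAIC": -2.0 * loglik + k * (math.log(n) + 1.0),  # Bozdogan's consistent AIC
        "BIC": -2.0 * loglik + k * math.log(n),
        "HQIC": -2.0 * loglik + 2.0 * k * math.log(math.log(n)),
    }


def ks_statistic(data, cdf):
    """Kolmogorov-Smirnov distance between the empirical CDF of data and a fitted CDF."""
    xs = sorted(data)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = cdf(x)
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


# Hypothetical inputs: log-likelihood -100 for a two-parameter model fitted to 50 observations
print(information_criteria(-100.0, k=2, n=50))
print(ks_statistic([0.25, 0.5, 0.75], lambda x: min(max(x, 0.0), 1.0)))  # uniform(0, 1) CDF
```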

Some of the data used correspond to failure times of mechanical or electrical components, waiting times in banks, survival times due to tuberculosis infection, survival times of cancer patients, strength tests for glass fibers, number of deaths from vehicle accidents, fatigue times of 6061-T6 aluminum coupons, wind speed measured at 20 m height, remission times of cancer patients, tensile stress at break of carbon fibers, GDP growth (% per year), nicotine measurements, equipment or device failure rates, fatigue fracture, monthly tax income, sports, the risk associated with earthquakes near a nuclear power plant (distances, in miles, between the nuclear power plant and the earthquake epicenter), call times, average annual growth rate of carbon dioxide, maximum annual flood discharges, average maximum daily rainfall over 30 years, vehicular traffic, lifespan (in km) of front disk brake pads on randomly selected cars, marital status and divorce rates, and length of relief times of patients who received an analgesic. Each of the models proposed in these articles can therefore serve as a candidate, alongside others available in the literature, for modeling other real-life data sets.

One of the main characteristics of the new distributions or generalizations is the number of parameters added to the model to provide greater flexibility in modeling specific applications or data. Table 6 shows the name and number of parameters of each of the models proposed in the 131 articles analyzed. As can be seen in the table, 9.2% of the distributions have only two parameters, 45% have three, 35.1% have four, 8.4% have five, and 2.3% (3 articles) have six parameters; the latter were proposed by Handique and Chakraborty (2017a, 2017b), Yousof et al. (2016), and Jose et al. (2011).
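As a consistency check, the percentages in this paragraph can be reproduced from article counts. Only the six-parameter count (3 articles) is stated in the text; the other counts below are back-calculated from the reported percentages of 131 articles and are assumptions, not figures read from Table 6.

```python
# Article counts per number of model parameters. Only the six-parameter count (3 articles)
# is stated in the text; the other counts are back-calculated from the reported percentages.
counts = {2: 12, 3: 59, 4: 46, 5: 11, 6: 3}

total = sum(counts.values())
shares = {k: round(100 * v / total, 1) for k, v in counts.items()}

print(total)   # 131
print(shares)  # {2: 9.2, 3: 45.0, 4: 35.1, 5: 8.4, 6: 2.3}
```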

In the empirical applications of the models analyzed in this bibliometric study, the results reported by the authors indicate that the newly proposed distributions, which are generalizations of Marshall and Olkin (1997), fit the data better than other well-known and widely applied models.

Conclusion

The main objective of this manuscript was to present a bibliometric analysis of distribution functions developed from the seminal work of Marshall and Olkin (1997) over the twenty-four years from 1997 to 2021. The Bibliometrix package for the R software was used for data mining and analysis and for bibliometric network mapping. This process made it possible to identify the main trends and contributions in this line of research.

Two research repositories, Web of Science and Scopus, were used to compile the database of 131 articles. Among the most relevant findings that contribute to the current literature is that the new distributions typically add one or two parameters to the baseline models or to previous generalizations, and some distributions involve up to six parameters to achieve greater flexibility. The maximum likelihood method predominates for parameter estimation and is used in 124 articles; however, other methods such as maximum product spacing, least squares, and interval estimation have been used in recent years. The main topics addressed in the publications are the survival function, hazard function, mean residual life, Renyi entropy, moments, moment generating function, quantile function, order statistics, stochastic orderings, and estimation methods. In addition, to show the advantages of the proposed models, 91% of the analyzed publications carried out simulations and applications to real data to demonstrate the competitiveness of the new distributions.

Finally, we note that more than 90% of the distributions analyzed in this work are generalizations or extensions of models already existing in the literature. It is therefore expected that the structures used to build distributions will continue to be combined or expanded, and that new models will be developed to analyze the behavior of data sets arising from real-world problems.

References

Afify, A. Z., & Alizadeh, M. (2020). The odd Dagum family of distributions: properties and applications. Journal of Applied Probability and Statistics, 15(1), 45–72.

Afify, A. Z., Cordeiro, G. M., Ibrahim, N. A., Jamal, F., Elgarhy, M., & Nasir, M. A. (2021). The Marshall-Olkin odd Burr III-G family: theory, estimation, and engineering applications. IEEE Access, 9(3), 4376–4387.

Afify, A. Z., Cordeiro, G. M., Yousof, H. M., Saboor, A., & Ortega, E. M. M. (2018). The Marshall-Olkin additive Weibull distribution with variable shapes for the hazard rate. Hacettepe Journal of Mathematics and Statistics, 47(2), 365–381.

Afify, A. Z., Kumar, D., & Elbatal, I. (2020a). Marshall-Olkin power generalized Weibull distribution with applications in engineering and medicine. Journal of Statistical Theory and Applications, 19(2), 223–237.

Afify, A. Z., Yousof, H. M., Alizadeh, M., Ghosh, I., Ray, S., & Ozel, G. (2020b). The Marshall-Olkin transmuted-G Family of distributions. Stochastics and Quality Control, 35(2), 79–96.

Afify, W. M. (2016). Adding a new parameter in flexible Weibull distribution using Marshall-Olkin model and its application. Advances and Applications in Statistics, 48(2), 157–167.

Ahmad, H. A. H., & Almetwally, E. M. (2020). Marshall-Olkin generalized Pareto distribution: Bayesian and non-Bayesian estimation. Pakistan Journal of Statistics and Operation Research, 16(1), 21–33.

Ahmad, H. H., Bdair, O. M., & Ahsanullah, M. (2017). On Marshall-Olkin extended Weibull distribution. Journal of Statistical Theory and Applications, 16(1), 1–17.

Ahmed, M. T., Khaleel, M. A., & Khalaf, E. K. (2020). The new distribution (Topp Leone Marshall Olkin-Weibull) properties with an application. Periodicals of Engineering and Natural Sciences, 8(2), 684–692.

Alawadhi, F. A., Sarhan, A. M., & Hamilton, D. C. (2016). Marshall-Olkin extended two-parameter bathtub-shaped lifetime distribution. Journal of Statistical Computation and Simulation, 86(18), 3653–3666.

Al-babtain, A. A., Elbatal, I., & Yousof, H. M. (2020). A new flexible three-parameter model: properties, Clayton copula, and modeling real data. Symmetry, 12(3), 440.

Al-Babtain, A. A., Sherwani, R. A. K., Afify, A. Z., Aidi, K., Nasir, M. A., Jamal, F., & Saboor, A. (2021). The extended Burr-R class: properties, applications and modified test for censored data. AIMS Mathematics, 6(3), 2912–2931.

Algarni, A. (2021). On a new generalized Lindley distribution: properties, estimation and applications. PLoS ONE, 16(2), 1–19.

Alghamedi, A., Dey, S., Kumar, D., & Dobbah, S. A. (2020). A new extension of extended exponential distribution with applications. Annals of Data Science, 7(1), 139–162.

Alizadeh, M., Cordeiro, G. M., de Brito, E., & Clarice, C. G. (2015). The beta Marshall-Olkin family of distributions. Journal of Statistical Distributions and Applications, 2(4), 1–18.

Alizadeh, M., MirMostafaee, S. M. T. K., Altun, E., Ozel, G., & Khan Ahmadi, M. (2017a). The odd log-logistic Marshall-Olkin power Lindley distribution: properties and applications. Journal of Statistics and Management Systems, 20(6), 1065–1093.

Alizadeh, M., Ozel, G., Altun, E., Abdi, M., & Hamedani, G. G. (2017b). The odd log-logistic Marshall-Olkin Lindley model for lifetime data. Journal of Statistical Theory and Applications, 16(3), 382–400.

Almetwally, E. M., & Haj Ahmad, H. A. (2020). A new generalization of the Pareto distribution and its applications. Statistics in Transition, 21(5), 61–84.

Almetwally, E. M., Sabry, M. A. H., Alharbi, R., Alnagar, D., Mubarak, S. A. M., & Hafez, E. H. (2021). Marshall-Olkin alpha power Weibull distribution: different methods of estimation based on Type-I and Type-II censoring. Complexity, 2021, 1–18.

Al-Mofleh, H., Afify, A. Z., & Ibrahim, N. A. (2020). A new extended two-parameter distribution: properties, estimation methods, and applications in medicine and geology. Mathematics, 8(9), 1–21.

Almongy, H. M., Almetwally, E. M., & Mubarak, A. E. (2021). Marshall-Olkin alpha power Lomax distribution: estimation methods, applications on physics and economics. Pakistan Journal of Statistics and Operation Research, 17(1), 137–153.

Alqallaf, F. A., & Kundu, D. (2020). A bivariate inverse generalized exponential distribution and its applications in dependent competing risks model. Communications in Statistics - Simulation and Computation. https://doi.org/10.1080/03610918.2020.1821888

Alshangiti, A. M., Kayid, M., & Alarfaj, B. (2014). A new family of Marshall-Olkin extended distributions. Journal of Computational and Applied Mathematics, 271, 369–379.

Alshangiti, A. M., Kayid, M., & Almulhim, M. (2016). Reliability analysis of extended generalized inverted exponential distribution with applications. Journal of Systems Engineering and Electronics, 27(2), 484–492.

Aria, M., & Cuccurullo, C. (2020). Package ‘bibliometrix’: An R-Tool for Comprehensive Science Mapping Analysis. https://cran.r-project.org/web/packages/bibliometrix/bibliometrix.pdf

Balakrishnan, N., Barmalzan, G., & Haidari, A. (2018). Ordering results for order statistics from two heterogeneous Marshall-Olkin generalized exponential distributions. Sankhya B: The Indian Journal of Statistics, 80(2), 292–304.

Bantan, R., Hassan, A. S., & Elsehetry, M. (2020b). Generalized Marshall Olkin inverse Lindley distribution with applications. Computers, Materials and Continua, 64(3), 1505–1526.

Bantan, R. A. R., Jamal, F., Chesneau, C., & Elgarhy, M. (2020a). On a new result on the ratio exponentiated general family of distributions with applications. Mathematics, 8(4), 598.

Barriga, G. D. C., Cordeiro, G. M., Dey, D. K., Cancho, V. G., Louzada, F., & Suzuki, A. K. (2018). The Marshall-Olkin generalized gamma distribution. Communications for Statistical Applications and Methods, 25(3), 245–261.

Basheer, A. M. (2019). Marshall-Olkin alpha power inverse exponential distribution: properties and applications. Annals of Data Science. https://doi.org/10.1007/s40745-019-00229-0

Benkhelifa, L. (2017). The Marshall-Olkin extended generalized Lindley distribution: properties and applications. Communications in Statistics - Simulation and Computation, 46(10), 8306–8330.

Bidram, H., Alamatsaz, M. H., & Nekoukhou, V. (2015). On an extension of the exponentiated Weibull distribution. Communications in Statistics - Simulation and Computation, 44(6), 1389–1404.

Bidram, H., Roozegar, R., & Nekoukhou, V. (2016). Exponentiated generalized geometric distribution: A new discrete distribution. Hacettepe Journal of Mathematics and Statistics, 45, 1767–1779.

Cakmakyapan, S., Ozel, G., El Gebaly, Y. M. H., & Hamedani, G. G. (2018). The Kumaraswamy Marshall-Olkin log-logistic distribution with application. Journal of Statistical Theory and Applications, 17(1), 59–76.

Cancino, C. A., Amirbagheri, K., Merigó, J. M., & Dessouky, Y. (2019). A bibliometric analysis of supply chain analytical techniques published in Computers & Industrial Engineering. Computers & Industrial Engineering, 137, 106015.

Castellares, F., & Lemonte, A. J. (2016). On the Marshall-Olkin extended distributions. Communications in Statistics - Theory and Methods, 45(15), 4537–4555.

Cordeiro, G. M., Mansoor, M., & Provost, S. B. (2019a). The Harris extended Lindley distribution for modeling hydrological data. Chilean Journal of Statistics, 10(1), 77–94.

Cordeiro, G. M., Mead, M. E., Afify, A. Z., Suzuki, A. K., & Abd El-Gaied, A. A. K. (2017). An extended Burr XII distribution: properties, inference and applications. Pakistan Journal of Statistics and Operation Research, 13(4), 809–828.

Cordeiro, G. M., Prataviera, F., Lima, M. do C. S., & Ortega, E. M. M. (2019b). The Marshall-Olkin extended flexible Weibull regression model for censored lifetime data. Model Assisted Statistics and Applications, 14(1), 1–17.

Cordeiro, G. M., Saboor, A., Khan, M. N., Ozel, G., & Pascoa, M. A. R. (2016). The Kumaraswamy exponential-Weibull distribution: theory and applications. Hacettepe Journal of Mathematics and Statistics, 45(4), 1203–1229.

Cui, W., Yan, Z., & Peng, X. (2020). A new Marshall Olkin Weibull distribution. Engineering Letters, 28, 63–68.

Da Silva, R. P., Cysneiros, A. H. M. A., Cordeiro, G. M., & Tablada, C. J. (2020). The transmuted Marshall-Olkin extended Lomax distribution. Anais da Academia Brasileira de Ciências, 92(3), e20180777.

Dey, S., Sharma, V. K., & Mesfioui, M. (2017). A new extension of Weibull distribution with application to lifetime data. Annals of Data Science, 4(1), 31–61.

Eghwerido, J. T., Oguntunde, P. E., & Agu, F. I. (2021). The alpha power Marshall-Olkin-G distribution: properties, and applications. Sankhya A: The Indian Journal of Statistics. https://doi.org/10.1007/s13171-020-00235-y

El-Bassiouny, A. H., El-Damcese, M. A., Mustafa, A., & Eliwa, M. S. (2016). Bivariate exponentiated generalized Weibull-Gompertz distribution. Journal of Applied Probability and Statistics, 11(1), 25–46.

Elbatal, I., & Elgarhy, M. (2020). Extended Marshall-Olkin length-biased exponential distribution: properties and application. Advances and Applications in Statistics, 64(1), 113–125.

Eliwa, M. S., & El-Morshedy, M. (2020). Bayesian and non-Bayesian estimation of four-parameter of bivariate discrete inverse Weibull distribution with applications to model failure times, football, and biological data. Filomat, 34(8), 2511–2531.

El-Morshedy, M., Alhussain, Z. A., Atta, D., Almetwally, E. M., & Eliwa, M. S. (2020). Bivariate Burr X generator of distributions: properties and estimation methods with applications to complete and Type-II censored samples. Mathematics, 8, 264.

Eltehiwy, M. (2020). Logarithmic inverse Lindley distribution: model, properties and applications. Journal of King Saud University - Science, 32(1), 136–144.

Fawzy, M. A., Athar, H., & Alharbi, Y. F. (2021). Inference based on Marshall-Olkin extended Rayleigh Lomax distribution. Applied Mathematics E-Notes, 21, 1–11.

Ferreira, F. A. F. (2018). Mapping the field of arts-based management: bibliographic coupling and co-citation analyses. Journal of Business Research, 85, 348–357.

García, V. J., Gómez-Déniz, E., & Vázquez-Polo, F. J. (2016). A Marshall-Olkin family of heavy-tailed distributions which includes the lognormal one. Communications in Statistics - Theory and Methods, 45(7), 2023–2044.

García, V., Martel-Escobar, M., & Vázquez-Polo, F. J. (2020). Generalising exponential distributions using an extended Marshall-Olkin procedure. Symmetry, 12, 464.

George, R., & Thobias, S. (2019). Kumaraswamy Marshall-Olkin exponential distribution. Communications in Statistics - Theory and Methods, 48(8), 1920–1937.

Ghitany, M. E., Al-Awadhi, F. A., & Alkhalfan, L. A. (2007). Marshall-Olkin extended Lomax distribution and its application to censored data. Communications in Statistics - Theory and Methods, 36, 1855–1866.

Ghosh, I., Dey, S., & Kumar, D. (2019). Bounded M-O extended exponential distribution with applications. Stochastics and Quality Control, 34(1), 35–51.

Gillariose, J., & Tomy, L. (2020). The Marshall-Olkin extended power Lomax distribution with applications. Pakistan Journal of Statistics and Operation Research, 16(2), 331–341.

Gui, W. (2013). Marshall-Olkin extended log-logistic distribution and its application in minification processes. Applied Mathematical Sciences, 7(80), 3947–3961.

Hamdeni, T., & Gasmi, S. (2020). The Marshall-Olkin generalized defective Gompertz distribution for surviving fraction modeling. Communications in Statistics - Simulation and Computation. https://doi.org/10.1080/03610918.2020.1804937

Handique, L., & Chakraborty, S. (2017a). A new beta generated Kumaraswamy Marshall-Olkin-G family of distributions with applications. Malaysian Journal of Science, 36(3), 157–174.

Handique, L., & Chakraborty, S. (2017b). The beta generalized Marshall-Olkin Kumaraswamy-G family of distributions with applications. International Journal of Agricultural and Statistical Sciences, 13(2), 721–733.

Handique, L., Chakraborty, S., & de Andrade, T. A. N. (2019). The exponentiated generalized Marshall-Olkin family of distribution: its properties and applications. Annals of Data Science, 6(3), 391–411.

Handique, L., Chakraborty, S., & Hamedani, G. G. (2017). The Marshall-Olkin-Kumaraswamy-G family of distributions. Journal of Statistical Theory and Applications, 16(4), 427–447.

Haq, M. A. ul, Afify, A. Z., Al-Mofleh, H., Usman, R. M., Alqawba, M., & Sarg, A. M. (2021). The extended Marshall-Olkin Burr III distribution: properties and applications. Pakistan Journal of Statistics and Operation Research, 17(1), 1–14.

Haq, M. A. ul, Usman, R. M., Hashmi, S., & Al-Omeri, A. I. (2019). The Marshall-Olkin length-biased exponential distribution and its applications. Journal of King Saud University - Science, 31(2), 246–251.

Hassan, A. S., & Nassr, S. G. (2021). Parameter estimation of an extended inverse power Lomax distribution with Type I right censored data. Communications for Statistical Applications and Methods, 28(2), 99–118.

Hussain, Z., Aslam, M., & Asghar, Z. (2019). On Exponential negative-binomial-X family of distributions. Annals of Data Science, 6(4), 651–672.

Jamal, F., Reyad, H., Chesneau, C., Nasir, M. A., & Othman, S. (2019). The Marshall-Olkin odd lindley G family of distributions: theory and applications. Journal of Mathematics, 51(7), 111–125.

Jamal, F., Tahir, M. H., Alizadeh, M., & Nasir, M. A. (2017). On Marshall-Olkin Burr X family of distribution. Tbilisi Mathematical Journal, 10(4), 175–199.

Jamalizadeh, A., & Kundu, D. (2013). Weighted Marshall-Olkin bivariate exponential distribution. Statistics, 47(5), 917–928.

Javed, M., Nawaz, T., & Irfan, M. (2019). The Marshall-Olkin kappa distribution: properties and applications. Journal of King Saud University - Science, 31(4), 684–691.

Jayakumar, K., & Girish Babu, M. (2015). Some generalizations of Weibull distribution and related processes. Journal of Statistical Theory and Applications, 14(4), 425.

Jayakumar, K., & Mathew, T. (2008). On a generalization to Marshall-Olkin scheme and its application to Burr type XII distribution. Statistical Papers, 49(3), 421–439.

Jayakumar, K., & Sankaran, K. K. (2016). On a generalisation of uniform distribution and its properties. Statistica, 76(1), 83–91.

Jayakumar, K., & Sankaran, K. K. (2017). Generalized exponential truncated negative binomial distribution. American Journal of Mathematical and Management Sciences, 36(2), 98–111.

Jayakumar, K., & Sankaran, K. K. (2018). A generalization of discrete Weibull distribution. Communications in Statistics - Theory and Methods, 47(24), 6064–6078.

Jayakumar, K., & Sankaran, K. K. (2019a). Discrete Linnik Weibull distribution. Communications in Statistics - Simulation and Computation, 48(10), 3092–3117.

Jayakumar, K., & Sankaran, K. K. (2019b). Exponential intervened Poisson distribution. Communications in Statistics - Theory and Methods. https://doi.org/10.1080/03610926.2019.1682161

Jose, K. K., Krishna, E., & Ristic, M. M. (2014). On record values and reliability properties of Marshall-Olkin extended exponential distribution. Journal of Applied Statistical Science, 13(3), 247–262.

Jose, K. K., Naik, S. R., & Ristić, M. M. (2010). Marshall-Olkin q-Weibull distribution and max-min processes. Statistical Papers, 51(4), 837–851.

Jose, K. K., Ristić, M. M., & Joseph, A. (2011). Marshall-Olkin bivariate Weibull distributions and processes. Statistical Papers, 52(4), 789–798.

Kamel, B. I., Youssef, S. E. A., & Sief, M. G. (2016). The uniform truncated negative binomial distribution and its properties. Journal of Mathematics and Statistics, 12(4), 290–301.

Khaleel, M. A., Oguntunde, P. E., Abbasi, J. N., Ibrahim Al, N. A., & AbuJarad, M. H. A. (2020). The Marshall-Olkin Topp Leone-G family of distributions: A family for generalizing probability models. Scientific African, 8, e00470.

Khalil, M. G., Hamedani, G. G., & Yousof, H. M. (2019). The Burr X exponentiated Weibull model: characterizations, mathematical properties and applications to failure and survival times data. Pakistan Journal of Statistics and Operation Research, 15(1), 141–160.

Khalil, M. G., & Kamel, W. M. (2020). The three-parameters Marshall-Olkin generalized Weibull model with properties and different applications to real data sets. Pakistan Journal of Statistics and Operation Research, 16(4), 675–688.

Khosa, S. K., Afify, A. Z., Ahmad, Z., Zichuan, M., Hussain, S., & Iftikhar, A. (2020). A new extended-F family: properties and applications to lifetime data. Journal of Mathematics, 2020, 1–10.

Korkmaz, M., Cordeiro, G. M., Yousof, H. M., Pescim, R. R., Afify, A. Z., & Nadarajah, S. (2019a). The Weibull Marshall-Olkin family: regression model and application to censored data. Communications in Statistics - Theory and Methods, 48(16), 4171–4194.

Korkmaz, M., Cordeiro, G. M., Yousof, H. M., Pescim, R. R., Afify, A. Z., & Nadarajah, S. (2019b). The Weibull Marshall-Olkin family: regression model and application to censored data. Communications in Statistics - Theory and Methods, 48(16), 4171–4194.

Korkmaz, M. Ç., Yousof, H. M., Hamedani, G. G., & Ali, M. M. (2018). The Marshall-Olkin generalized G Poisson family of distributions. Pakistan Journal of Statistics, 34(3), 251–267.

Krainer, J. A., Krainer, C. W. M., Vidolin, A. C., & Romano, C. A. (2020). Supplier in the Supply Chain: A Bibliometric Analysis. In A. M. T. Thomé, R. G. Barbastefano, L. F. Scavarda, J. C. G. dos Reis, & M. P. C. Amorim (Eds.), Industrial Engineering and Operations Management. IJCIEOM 2020. Springer Proceedings in Mathematics & Statistics (Vol. 337, pp. 53–65). Springer, Cham.

Krishna, E., Jose, K. K., Alice, T., & Ristić, M. M. (2013). The Marshall-Olkin Fréchet distribution. Communications in Statistics - Theory and Methods, 42(22), 4091–4107.

Krishnan, B., & George, D. (2019). The Marshall-Olkin Weibull truncated negative binomial distribution and its applications. Statistica, 79(3), 247–265.

Kundu, D., & Gupta, A. K. (2017). On bivariate inverse Weibull distribution. Brazilian Journal of Probability and Statistics, 31(2), 275–302.

Kundu, D., & Nekoukhou, V. (2019). On bivariate discrete Weibull distribution. Communications in Statistics - Theory and Methods, 48(14), 3464–3481.

Lei, X., & Xu, Q. (2020). Evolution and thematic changes of Journal of King Saud University - Science between 2009 and 2019: A bibliometric and visualized review. Journal of King Saud University - Science, 32, 2074–2080.

Lemonte, A. J. (2013). A new extension of the Birnbaum-Saunders distribution. Brazilian Journal of Probability and Statistics, 27(2), 133–149.

Lemonte, A. J., Cordeiro, G. M., & Moreno-Arenas, G. (2016). A new useful three-parameter extension of the exponential distribution. Statistics, 50(2), 312–337.

Mansoor, M., Tahir, M. H., Cordeiro, G. M., Provost, S. B., & Alzaatreh, A. (2019). The Marshall-Olkin logistic-exponential distribution. Communications in Statistics - Theory and Methods, 48(2), 220–234.

Mansour, M. M., Elrazik, E. M. A., & Butt, N. S. (2018). The exponentiated Marshall-Olkin Fréchet distribution. Pakistan Journal of Statistics and Operation Research, 14(1), 57–74.

Marinho, P. R. D., Bourguignon, M., Silva, R. B., & Cordeiro, G. M. (2019). A new class of lifetime models and the evaluation of the confidence intervals by double percentile bootstrap. Anais da Academia Brasileira de Ciências, 91(1), 1–27.

Marshall, A. W., & Olkin, I. (1997). A new method for adding a parameter to a family of distributions with application to the exponential and Weibull families. Biometrika, 84, 641–652.

Mathew, J. (2020). Reliability test plan for the Marshall Olkin length biased Lomax distribution. Reliability: Theory and Applications, 15(2), 36–49.

Mathew, J., & Chesneau, C. (2020). Marshall-Olkin length-biased Maxwell distribution and its applications. Mathematical and Computational Applications, 25(4), 65.

MirMostafaee, S. M. T. K., Mahdizadeh, M., & Lemonte, A. J. (2017). The Marshall-Olkin extended generalized Rayleigh distribution: properties and applications. Communications in Statistics - Theory and Methods, 46(2), 653–671.

Mondal, S., & Kundu, D. (2020). A bivariate inverse Weibull distribution and its application in complementary risks model. Journal of Applied Statistics, 47(6), 1084–1108.

Nadarajah, S., Jayakumar, K., & Ristić, M. M. (2013). A new family of lifetime models. Journal of Statistical Computation and Simulation, 83(8), 1389–1404.

Nassar, M., Kumar, D., Dey, S., Cordeiro, G. M., & Afify, A. Z. (2019). The Marshall-Olkin alpha power family of distributions with applications. Journal of Computational and Applied Mathematics, 351, 41–53.

Nwezza, E. E., & Ugwuowo, F. I. (2020). The Marshall-Olkin Gumbel-Lomax distribution: properties and applications. Heliyon, 6(3), e03569.

Okasha, H. M., & Kayid, M. (2016). A new family of Marshall-Olkin extended generalized linear exponential distribution. Journal of Computational and Applied Mathematics, 296, 576–592.

Okorie, I. E., Akpanta, A. C., & Ohakwe, J. (2017b). Marshall-Olkin generalized Erlang-truncated exponential distribution: properties and applications. Cogent Mathematics, 4(1), 1–19.

Okorie, I. E., Akpanta, A. C., Ohakwe, J., & Chikezie, D. C. (2017a). The modified power function distribution. Cogent Mathematics, 4(1), 1–20.

Oliveira, R. P., & Achcar, J. A. (2020). A new flexible bivariate discrete Rayleigh distribution generated by the Marshall-Olkin family. Model Assisted Statistics and Applications, 15(1), 19–34.

Oliveira, R. P., Puziol, M. V. de O., Achcar, J. A., & Davarzani, N. (2020). Inference for the trivariate Marshall-Olkin-Weibull distribution in presence of right-censored data. Chilean Journal of Statistics, 11(2), 95–116.

Oliveira, R. P., Achcar, J. A., Mazucheli, J., & Bertoli, W. (2021). A new class of bivariate Lindley distributions based on stress and shock models and some of their reliability properties. Reliability Engineering & System Safety, 211.

Oluyede, B., Jimoh, H. A., Wanduku, D., & Makubate, B. (2020). A new generalized log-logistic Erlang truncated exponential distribution with applications. Electronic Journal of Applied Statistical Analysis, 13(2), 293–349.

Pal, M., Ali, M. M., & Woo, J. (2006). Exponentiated Weibull distribution. Statistica, 66(2), 139–147.

Pogány, T. K., Saboor, A., & Provost, S. (2015). The Marshall-Olkin exponential Weibull distribution. Hacettepe Journal of Mathematics and Statistics, 44(6), 1579–1594.

Raffiq, G., Dar, I. S., Haq, M. A. U., & Ramos, E. (2020). The Marshall-Olkin inverted Nadarajah-Haghighi distribution: estimation and applications. Annals of Data Science. https://doi.org/10.1007/s40745-020-00297-7

Ristić, M. M., & Kundu, D. (2015). Marshall-Olkin generalized exponential distribution. Metron, 73(3), 317–333.

Rocha, R., Nadarajah, S., Tomazella, V., & Louzada, F. (2016). Two new defective distributions based on the Marshall-Olkin extension. Lifetime Data Analysis, 22(2), 216–240.

Rocha, R., Nadarajah, S., Tomazella, V., & Louzada, F. (2017). A new class of defective models based on the Marshall-Olkin family of distributions for cure rate modeling. Computational Statistics and Data Analysis, 107, 48–63.

Rondero-Guerrero, C., González-Hernández, I., & Soto-Campos, C. (2020). On a generalized uniform distribution. Advances and Applications in Statistics, 60, 93–103.

Ruggeri, G., Orsi, L., & Corsi, S. (2019). A bibliometric analysis of the scientific literature on Fairtrade labelling. International Journal of Consumer Studies, 43, 134–152.

Saboor, A., Bakouch, H. S., Moala, F. A., & Hussain, S. (2020). Properties and methods of estimation for a bivariate exponentiated Fréchet distribution. Mathematica Slovaca, 70(5), 1211–1230.

Saboor, A., & Pogány, T. K. (2016). Marshall-Olkin gamma-Weibull distribution with applications. Communications in Statistics - Theory and Methods, 45(5), 1550–1563.

Santos-Neto, M., Bourguignon, M., Zea, L. M., Nascimento, A. D., & Cordeiro, G. M. (2014). The Marshall-Olkin extended Weibull family of distributions. Journal of Statistical Distributions and Applications, 1, 9. https://doi.org/10.1186/2195-5832-1-9

Sarhan, A. M., & Balakrishnan, N. (2007). A new class of bivariate distributions and its mixture. Journal of Multivariate Analysis, 98(7), 1508–1527.

Shahen, H. S., El-Bassiouny, A. H., & Abouhawwash, M. (2019). Bivariate exponentiated modified Weibull distribution. Journal of Statistics Applications and Probability, 8(1), 27–39.

Shakhatreh, M. K. (2018). A new three-parameter extension of the log-logistic distribution with applications to survival data. Communications in Statistics - Theory and Methods, 47(21), 5205–5226.

Shalabi, R. M. (2019). Non Bayesian and Bayesian estimation for the Bivariate generalized Lindley distribution. Advances and Applications in Statistics, 54(2), 327–344.

Shoaib, M., Dar, I. S., Ahsan-ul-Haq, M., & Usman, R. M. (2021). A sustainable generalization of inverse Lindley distribution for wind speed analysis in certain regions of Pakistan. Modeling Earth Systems and Environment. https://doi.org/10.1007/s40808-021-01114-7

Small, H. (1973). Co-citation in the scientific literature: A new measure of the relationship between two documents. Journal of the American Society for Information Science, 24, 265–269.

Tablada, C. J., & Cordeiro, G. M. (2019). The Beta Marshall-Olkin Lomax distribution. REVSTAT - Statistical Journal, 17(3), 321–344.

Tahir, M. H., Hussain, M. A., Cordeiro, G. M., El-Morshedy, M., & Eliwa, M. S. (2020). A new Kumaraswamy generalized family of distributions with properties, applications, and bivariate extension. Mathematics, 8(11), 1–28.

Usman, R. M., & Haq, M. A. ul (2020). The Marshall-Olkin extended inverted Kumaraswamy distribution: Theory and applications. Journal of King Saud University - Science, 32(1), 356–365.

Van Oorschot, J. A. W. H., Hofman, E., & Halman, J. I. M. (2018). A bibliometric review of the innovation adoption literature. Technological Forecasting and Social Change, 134, 1–21.

Wang, L., Li, M., & Tripathi, Y. M. (2020). Inference for dependent competing risks from bivariate Kumaraswamy distribution under generalized progressive hybrid censoring. Communications in Statistics - Simulation and Computation. https://doi.org/10.1080/03610918.2019.1708929

Wang, Y., Feng, Z., & Zahra, A. (2021). A new logarithmic family of distributions: Properties and applications. Computers, Materials and Continua, 66(1), 919–929.

Yaghoobzadeh, S. (2017). A new generalization of the Marshall-Olkin Gompertz distribution. International Journal of Systems Assurance Engineering and Management, 8, 1580–1587.

Yari, G., Rezaei, R., & Ezmareh, Z. K. (2020). A new generalization of the Gompertz Makeham distribution: theory and application in reliability. International Journal of Industrial Engineering and Production Research, 31(3), 455–467.

Yeh, H.-C. (2004). The generalized Marshall-Olkin type multivariate Pareto distributions. Communications in Statistics - Theory and Methods, 33(5), 1053–1068.

Yousof, H. M., Afify, A. Z., Ebraheim, A. E. H. N., Hamedani, G. G., & Butt, N. S. (2016). On six-parameter Fréchet distribution: properties and applications. Pakistan Journal of Statistics and Operation Research, 12(2), 281–299.

Yousof, H. M., Afify, A. Z., Nadarajah, S., Hamedani, G., & Aryal, G. R. (2018). The Marshall-Olkin generalized-G family of distributions with applications. Statistica, 78(3), 273–295.

Acknowledgements

The authors acknowledge partial support from CONACyT and PRODEP, MEXICO.

Cite this article

González-Hernández, I.J., Granillo-Macías, R., Rondero-Guerrero, C. et al. Marshall-Olkin distributions: a bibliometric study. Scientometrics 126, 9005–9029 (2021). https://doi.org/10.1007/s11192-021-04156-x

DOI: https://doi.org/10.1007/s11192-021-04156-x