Abstract

In this paper, a subgradient projection method for quasiconvex minimization problems is provided. By employing strong subdifferentials, it is proved that the generated sequence of the proposed algorithm converges to the solution of the minimization problem of a proper, lower semicontinuous, and strongly quasiconvex function (in the sense of Polyak in Soviet Math 7:72–75, 1966), under the same assumptions as those required for convex functions with the convex subdifferentials. Furthermore, a quasi-linear convergence rate of the iterates, extending similar results for the general quasiconvex case, is also provided.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let K be a closed and convex set in \(\mathbb {R}^{n}\) and \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}:= \mathbb {R} \cup \{\pm \infty \}\) be a proper function such that \(K \subseteq \textrm{dom}\,h\). We consider the constraint minimization problem given by:

If h is differentiable, then one of the most important iterative methods for finding a solution of problem (COP) is the gradient method, which has been well studied due to its important properties and applications in several fields of the mathematical sciences, engineering and economics among others.

If h is not differentiable, but lower semicontinuous (lsc henceforth) and convex, then we may apply the subgradient algorithm: Given \(z^{k} \in \mathrm{int\,dom}\,h \subseteq K\), we take

where \(\xi ^{k} \in \partial h (z^{k})\) is the convex subdifferential (see (2.7) below) and \(\{\alpha _{k}\}_{k}\) is a positive sequence of parameters. If \(\xi ^{k} = 0\), then \(z^{k}\) is a solution of problem (COP), if not, then we take \(k=k+1\) and we repeat (1.1).

In contrast to the gradient method, the subgradient method (with the convex subdifferential) does not decrease at each step even for convex functions (see [2, Example 8.3] and also [20]). However, the (convex) subgradient method converges to the solution of the problem for convex functions (see [2, Theorem 8.17] for instance), but also for classes of quasiconvex functions with generalized subdifferentials (see [6, 7, 9, 13, 14, 22] and references therein).

In this paper, and by using new existence results and generalized subdifferentials, we provide a subgradient projection method for finding the unique solution of the minimization problem of a proper, lsc and strongly quasiconvex function. The subdifferential that we use is the strong subdifferential (see [12]) which has been especially introduced for studying strongly quasiconvex functions. We prove the convergence of the generated sequence to the solution of problem (COP) for a proper, lsc and strongly quasiconvex function under the same assumptions than the convex case with the convex subdifferential. Recall that the class of strongly quasiconvex functions were introduced in the sixties, it is the natural extension of the strongly convex functions and its definition goes back to the famous paper on existence theorems in extremum problems of Polyak [18].

The structure of the paper is as follows: In Sect. 2 we present notation, preliminaries and the basic results on generalized convexity and generalized subdifferentials. In Sect. 3, we present new theoretical results for the strong subdifferential which will be useful for the convergence analysis of the algorithm, then we present our subgradient method and the basic assumptions for its implementation. Furthermore, we provide the convergence analysis for the proposed algorithm in which we prove the convergence of the generated sequence to the unique solution of problem (COP) under a proper, lsc, and strongly quasiconvexity assumption with a quasi-linear convergence rate. Finally, theoretical examples showing the advantages of our approach are discussed in the conclusions.

2 Preliminaries and basic definitions

The inner product in \(\mathbb {R}^{n}\) and the Euclidean norm are denoted by \(\langle \cdot ,\cdot \rangle \) and \(\Vert \cdot \Vert \), respectively. Given a convex and closed set K, the projection of x on K is denoted by \(P_{K} (x)\), and the indicator function on K by \(\iota _{K}\). The set \(]0, + \infty [\) is denoted by \(\mathbb {R}_{++}\). The unit sphere centered at the origin is denoted by \(\mathbb {B}\).

For a closed and convex set \(K \subseteq \mathbb {R}^{n}\), the metric projection satisfies that

Given any extended-valued function \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\), the effective domain of h is defined by \(\textrm{dom}\,h:= \{x \in \mathbb {R}^{n}: h(x) < + \infty \}\). It is said that h is proper if \(\textrm{dom}\,h\) is nonempty and \(h(x) > - \infty \) for all \(x \in \mathbb {R}^{n}\). The notion of properness is important when dealing with minimization problems.

It is indicated by \(\textrm{epi}\,h:= \{(x,t) \in \mathbb {R}^{n} \times \mathbb {R}: h(x) \le t\}\) the epigraph of h, by \(S_{\lambda } (h):= \{x \in \mathbb {R}^{n}: h(x) \le \lambda \}\) the sublevel set of h at the height \(\lambda \in \mathbb {R}\) and by \(\textrm{argmin}_{\mathbb {R}^{n}} h\) the set of all minimal points of h. A function h is lower semicontinuous at \(\overline{x} \in \mathbb {R}^{n}\) if for any sequence \(\{x_k\}_{k} \in \mathbb {R}^{n}\) with \(x_k \rightarrow \overline{x}\), \(h(\overline{x}) \le \liminf _{k \rightarrow + \infty } h(x_k)\). Furthermore, the current convention \(\sup _{\emptyset } h:= - \infty \) and \(\inf _{\emptyset } h:= + \infty \) is adopted.

A function h with a convex domain is said to be

- (a):

-

convex if, given any \(x, y \in \textrm{dom}\,h\), then

$$\begin{aligned} h(\lambda x + (1-\lambda )y) \le \lambda h(x) + (1 - \lambda ) h(y), ~ \forall ~ \lambda \in [0, 1]; \end{aligned}$$(2.2) - (b):

-

semistrictly quasiconvex if, given any \(x, y \in \textrm{dom}\,h\), with \(h(x) \ne h(y)\), then

$$\begin{aligned} h(\lambda x + (1-\lambda )y) < \max \{h(x), h(y)\}, ~ \forall ~ \lambda \in \, ]0, 1[; \end{aligned}$$(2.3) - (c):

-

quasiconvex if, given any \(x, y \in \textrm{dom}\,h\), then

$$\begin{aligned} h(\lambda x + (1-\lambda ) y) \le \max \{h(x), h(y)\}, ~ \forall ~ \lambda \in [0, 1]. \end{aligned}$$(2.4)It is said that h is strictly convex (resp. strictly quasiconvex) if the inequality in (2.2) (resp. (2.4)) is strict whenever \(x \ne y\).

Every (stricly) convex function is (stricly) quasiconvex and semistrictly quasiconvex, and every semistrictly quasiconvex and lsc function is quasiconvex. The continuous function \(h: \mathbb {R} \rightarrow \mathbb {R}\) given by \(h(x):= \min \{ |x |, 1\}\) is quasiconvex without being semistrictly quasiconvex. Furthermore, recall that a function h is convex iff \(\textrm{epi}\,h\) is a convex set and that is quasiconvex iff the sublevel sets \(S_{\lambda } (h)\) are convex sets for all \(\lambda \in \mathbb {R}\).

A function h with a convex domain is said to be (see [1, 2, 11, 18]):

- (a):

-

strongly convex on \(\textrm{dom}\,h\) if there exists \(\gamma \in \, ]0, + \infty [\) such that for all \(x, y \in \textrm{dom}\,h\) and all \(\lambda \in [0, 1]\), we have

$$\begin{aligned} h(\lambda y + (1-\lambda )x) \le \lambda h(y) + (1-\lambda ) h(x) - \lambda (1 - \lambda ) \frac{\gamma }{2} \Vert x - y \Vert ^{2}, \end{aligned}$$(2.5) - (b):

-

strongly quasiconvex on \(\textrm{dom}\,h\) if there exists \(\gamma \in \, ]0, + \infty [\) such that for all \(x, y \in \textrm{dom}\,h\) and all \(\lambda \in [0, 1]\), we have

$$\begin{aligned} h(\lambda y + (1-\lambda )x) \le \max \{h(y), h(x)\} - \lambda (1 - \lambda ) \frac{\gamma }{2} \Vert x - y \Vert ^{2}. \end{aligned}$$(2.6)

In these cases, it is said that h is strongly convex (resp. quasiconvex) with modulus \(\gamma > 0\). Note that every strongly convex function is strongly quasiconvex, and every strongly quasiconvex function is strictly quasiconvex. The Euclidean norm \(h_{1}(x) = \Vert x \Vert \) is strongly quasiconvex without being strongly convex on any bounded convex set \(K \subseteq \mathbb {R}^{n}\) (see [11, Theorem 2]) and the function \(h_{2}(x) = x^{3}\) is strictly quasiconvex without being strongly quasiconvex on \(\mathbb {R}\). Summarizing (quasiconvex is denoted by qcx):

If in addition the function is lsc, then all the previous notions are quasiconvex.

Remark 2.1

There is no relationship between convex and strongly quasiconvex functions. Indeed, the function \(h: \mathbb {R}^{n} \rightarrow \mathbb {R}\) given by \(h(x) = \sqrt{\Vert x \Vert }\) is strongly quasiconvex on any bounded and convex set K in \(\mathbb {R}^{n}\) without being convex ([15, Theorem 17]), while the function \(h(x) \equiv 1\) is convex without being strongly quasiconvex. However, strongly convex functions are both convex and strongly quasiconvex.

The following existence result is the starting point of our research.

Lemma 2.1

([15, Corollary 3]) Let \(K \subseteq \mathbb {R}^{n}\) be a closed and convex set and \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a proper, lsc, and strongly quasiconvex function on \(K \subseteq \textrm{dom}\,h\) with modulus \(\gamma > 0\). Then, \(\textrm{argmin}_{K} h\) is a singleton.

Given a proper function \(h:\mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\), the convex subdifferential of h at \(\overline{x} \in \textrm{dom}\,h\) is defined by

and by \(\partial h(x) = \emptyset \) if \(x \not \in \textrm{dom}\,h\).

The convex subdifferential is an outstanding tool in continuous optimization. It is especially useful when the function is proper, lsc and convex, but when the function is nonconvex, the convex subdifferential is no longer useful. For this reason, several authors have introduced different generalized subdifferentials for dealing with different classes of nonconvex functions (see [16, 17, 19]).

For the case of quasiconvex and strongly quasiconvex functions, we recall the following notion of generalized subdifferential from [12].

The strong subdifferential of h at \(\overline{x} \in {{\,\textrm{dom}\,}}h \cap K\) is defined by

Clearly, \(\partial ^{K}_{\beta , \gamma }\) is a closed and convex set. Furthermore, we also have.

Lemma 2.2

([12, Proposition 7(d)]) Let \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a proper function, \(\beta > 0\), \(\gamma \ge 0\), \(K \subseteq \mathbb {R}^{n}\), and \(\overline{x}\in \textrm{dom}\,h\cap K\). Then \(\partial ^{K}_{\beta , \gamma } h(\overline{x})\) is compact for every \(\overline{x} \in \textrm{int}\,(\textrm{dom}\,h\cap K)\).

We recall some interesting and useful properties of the strong subdifferentials. Before that, we first recall that \(\overline{x}\) is an \((\alpha , K)\)-strong minimum point of h if there exists \(\alpha >0\) such that

Clearly, every \((\alpha , K)\)-strong minimum point is a strict minimum point and every strict minimum point is a global minimum point, but both reverse statements do not hold.

Lemma 2.3

([12, Theorem 24(a)]) Let \(K \subseteq \mathbb {R}^n\) be a closed and convex set, \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a proper function such that \(K \subseteq \textrm{dom}\,h\), \(\beta > 0\), \(\gamma > 0\) and \(\overline{x} \in K\). Then, \(0 \in \partial ^{K}_{\beta , \gamma } h(\overline{x})\) iff \(\overline{x}\) is a \(\left( \frac{\gamma ^{2} \beta }{8(1 + \gamma \beta )}, K\right) \)-strong minimum point of h.

The strong subdifferential is nonempty for bigger classes of quasiconvex functions as we can see below.

Lemma 2.4

([12, Corollary 38(a)]) Let \(K\subseteq \mathbb {R}^n\) be a closed and convex set, \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a proper and lsc function such that \(K \subseteq \textrm{dom}\,h\), and \(\beta > 0\). If h is strongly quasiconvex on K with modulus \(\gamma > 0\), then \(\partial ^{K}_{\beta , \gamma } h(\overline{x}) \ne \emptyset \) for every \(\overline{x} \in K\).

Another useful property is the following.

Lemma 2.5

([12, Proposition 40]) Let \(K \subseteq \mathbb {R}^n\) be a closed and convex set, \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a proper and lsc function such that \(K \subseteq \textrm{dom}\,h\), \(\beta >0\) and \(\overline{x}\in K\). If h is strongly quasiconvex on K with modulus \(\gamma >0\), then

We also recall the notion of quasi-Fejér convergence and its properties. A detailed survey on this subject can be found in [5].

Definition 2.1

Let \(C\subseteq \mathbb {R}^n\) and \(\{x^k\}_k\subseteq \mathbb {R}^n\) be any sequence in \(\mathbb {R}^n\) Then,

-

(i)

The sequence \(\{x^k\}_k\subseteq \mathbb {R}^n\) is called quasi-Fejér related to set \(C\subseteq \mathbb {R}^n\) if for every \(z\in C\) there exists a sequence \(\{\epsilon _ k\}_k\subseteq \mathbb {R}_+\) such that

$$\begin{aligned} \Vert x^{k+1}-z\Vert ^2\le \Vert x^{k}-z\Vert ^2+\epsilon _k, \end{aligned}$$with \(\sum _{k=1}^{+\infty }\epsilon _k<+\infty \).

-

(ii)

The sequence \(\{x^k\}_k\subseteq \mathbb {R}^n\) converges to \(\overline{x}\in \mathbb {R}^n\) quasi R-linearly, if there exists a constant \(0< \theta < 1\), \(k_0 \in \mathbb {N}\), and \(\{\epsilon _ k\}_k\subseteq \mathbb {R}_+\) (which does not depend on \(x_k\)) such that

$$\begin{aligned} \Vert x^{k+1}-\overline{x}\Vert ^2\le \theta \, \Vert x^{k}-\overline{x}\Vert ^2+\epsilon _k, \forall \, k \ge k_0 \end{aligned}$$with \(\sum _{k=1}^{+\infty }\epsilon _k<+\infty \).

To end this section, we state the main properties of the quasi-Fejér sequences that will be needed in our analysis.

Lemma 2.6

([4, Theorem 1]) Let \(\{x^{k}\}_{k} \subseteq \mathbb {R}^{n}\) be quasi-Fejér convergent to a nonempty set \(C\subseteq \mathbb {R}^n\). Then, the following assertions hold:

- (a):

-

\(\{x^k\}_k\) is bounded;

- (b):

-

If a cluster point \(x^{*}\) of \(\{x^k\}_{k}\) belongs to C, then \(\lim _{k \rightarrow +\infty }x^k=x^{*}\).

For a further study on nonsmooth analysis and generalized convexity we refer to [11, 12, 15,16,17,18,19].

3 Subgradient methods for quasiconvex minimization

Throughout the paper, we assume the following assumption on h:

- (A):

-

h is a proper, lsc and strongly quasiconvex function on \(K \subseteq \textrm{dom}\,h\) with modulus \(\gamma > 0\).

3.1 Properties for the strong subdifferential

Before introducing our algorithm, we show some useful theoretical results.

Proposition 3.1

Let \(K\subseteq \mathbb {R}^{n}\) be a closed and convex set, \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a proper function such that \(K \subseteq \textrm{dom}\,h\), \(\beta >0\), \(\gamma > 0\) and \(z \in K\). Given \(\alpha > 0\) and \(\xi \in \partial _{\beta , \gamma }^K h(z)\), set

Then the following assertions hold:

- (a):

-

\(\Vert x^{+} - z \Vert \le \alpha \Vert \xi \Vert \).

- (b):

-

For every \(x \in K\), we have

$$\begin{aligned} \Vert x^{+} - x \Vert ^2 \le \Vert z - x \Vert ^2 + \alpha ^{2} \Vert \xi \Vert ^2 + 2 \alpha \langle \xi , x - z \rangle . \end{aligned}$$(3.2)

Proof

(a): It follows from (3.1) and (2.1) that,

taking \(y=z\), then using the Cauchy–Schwarz inequality, we deduce

(b): Let \(x \in K\). Using the nonexpansivity of the projection operator, we have

which proves (3.2). \(\square \)

By using the structure of the strong subdifferential, we obtain the following.

Proposition 3.2

Let \(K\subseteq \mathbb {R}^{n}\) be a closed and convex set, \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a proper function such that \(K \subseteq \textrm{dom}\,h\), \(\beta >0\), \(\gamma > 0\) and \(z \in K\). Given \(\xi \in \partial _{\beta , \gamma }^K h(z)\), \(\alpha >0\) and \(x^{+}: = P_{K} (z - \alpha \xi )\). Then, for every \(x \in K\), we have

- (a):

-

If \(h(x) > h(z)\), then

$$\begin{aligned}&\Vert x^{+} - x \Vert ^2 \le \Vert z - x \Vert ^2 + \alpha ^{2} \Vert \xi \Vert ^2 + \frac{2 \alpha (1 + \gamma \beta )}{\gamma } \left( h(x) - h(z) \right) . \end{aligned}$$(3.3) - (b):

-

If \(h(x) \le h(z)\), then

$$\begin{aligned} \Vert x^{+} - x \Vert ^2 \le \left( 1 - \alpha \gamma \beta \right) \Vert z - x \Vert ^2 + \alpha ^{2} \Vert \xi \Vert ^2. \end{aligned}$$(3.4)

Proof

Since \(\xi \in \partial _{\beta ,\gamma }^K h(z)\), we have

Taking \(y = x\) into (3.5), we separate in two cases:

- (a):

-

If \(h(x) > h(z)\), then \(\max \{h(x), h(z)\} - h(z) = h(x) - h(z)\). Hence (3.5) becomes

$$\begin{aligned} \langle \xi , x - z \rangle \le -\frac{\beta }{2} \left( \gamma - \frac{ \lambda }{\beta } - \lambda \gamma \right) \Vert x - z \Vert ^{2} + \frac{ \beta }{\lambda } \left( h(x) - h(z)\right) . \end{aligned}$$Replacing this in (3.2), we obtain

$$\begin{aligned} \Vert x^{+} - x \Vert ^2 \le&\left( 1 - \alpha \beta (\gamma - \frac{ \lambda }{\beta } - \lambda \gamma ) \right) \Vert z - x \Vert ^2 + \alpha ^{2} \Vert \xi \Vert ^2 + 2 \frac{\alpha \beta }{\lambda } \left( h(x) - h(z) \right) . \end{aligned}$$Choosing \(0< \lambda = \frac{\gamma \beta }{1 + \gamma \beta } < 1\) and taking into account \(h(x) > h(z)\), we have

$$\begin{aligned} \Vert x^{+} - x \Vert ^2 \le \Vert z - x \Vert ^2 + \alpha ^{2} \Vert \xi \Vert ^2 + \frac{2 \alpha (1 + \gamma \beta )}{\gamma } \left( h(x) - h(z) \right) . \end{aligned}$$ - (b):

-

If \(h(x) \le h(z)\), then \(\max \{h(x), h(z)\} - h(z) = 0\). By a similar argument to the used at item (a), we obtain

$$\begin{aligned} \Vert x^{+} - x \Vert ^2 \le \left( 1 - \alpha \beta (\gamma - \frac{ \lambda }{\beta } - \lambda \gamma ) \right) \Vert z - x \Vert ^2 + \alpha ^{2} \Vert \xi \Vert ^2. \end{aligned}$$Taking \(\lambda \rightarrow 0^{+}\), we conclude

$$\begin{aligned} \Vert x^{+} - x \Vert ^2 \le \left( 1 - \alpha \gamma \beta \right) \Vert z - x \Vert ^2 + \alpha ^{2} \Vert \xi \Vert ^2, \end{aligned}$$

which completes the proof. \(\square \)

An interesting theoretical result for continuous strongly quasiconvex functions is given below.

Proposition 3.3

Let \(K \subseteq \mathbb {R}^{n}\) be a closed and convex set and \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a proper, continuous and strongly quasiconvex function on \(K \subseteq \textrm{dom}\,h\) with modulus \(\gamma > 0\). Let \(\{x^k\}_{k} \subseteq K\) and \(\{\xi ^k\}_{k} \subseteq \mathbb {R}^{n}\) be such that \(\xi ^{k} \in \partial _{\beta , \gamma }^{K}h(x^{k})\) for all \(k \in \mathbb {N}\). If \(\xi ^k \rightarrow 0\) and \(x^k \rightarrow \overline{x}\), then \(0 \in \partial _{\beta , \gamma }^{K} h(\overline{x})\). Hence, the point \(\overline{x}\) is a global minimizer.

Proof

Suppose the contrary that \(0 \notin \partial _{\beta ,\gamma }^{K} h(\overline{x})\), then by [12, Corollary 37] there exists \({\widehat{x}} \in K\) such that

Furthermore, since \(\xi ^k \in \partial _{\beta ,\gamma }^{K}h(x^{k})\) for every \(k\in \mathbb {N}\), we have

By taking \(y=\widehat{x}\),

Taking \(\lim _{k\rightarrow +\infty }\) in (3.7), and since \(\xi ^k \rightarrow 0\) and \(x^k \rightarrow \overline{x}\), we have

where the second inequality yields from the fact that the real function \([0,1] \ni \lambda \mapsto \frac{\lambda }{2} \left( \gamma - \frac{\lambda }{\beta } - \lambda \gamma \right) \) reaches its minimum at \(\lambda = \frac{ \gamma \beta }{2(1+\gamma \beta )}\) and its value is \(\frac{\gamma ^2 \beta }{8 (1+\gamma \beta )}\). Then, (3.8) contradicts (3.6). Therefore, \(0 \in \partial _{\beta ,\gamma }^{K} h(\overline{x})\) and the proof is complete. \(\square \)

Remark 3.1

A similar result to Proposition 3.3 may be found in [6, Proposition 7] for Plastria’s subdifferential (see [17]) and quasiconvex Lipschitz continuous functions. The previous result is expected to be useful for implementing inexact proximal point methods for strongly quasiconvex functions using strong subdifferentials.

We finish this subsection with the following result in the continuous case, which shows that the subgradients of points in a given compact set contained in the interior of the domain are always bounded as in the convex case for the convex subdifferential.

Proposition 3.4

Let \(K \subseteq \mathbb {R}^{n}\) be a closed and convex set and \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a proper, continuous and strongly quasiconvex function on \(K \subseteq \mathrm{int\,dom}\,h\) with modulus \(\gamma > 0\). If K is compact, then \(Y:= \bigcup _{x \in K} \partial ^{K}_{\beta , \gamma } h(x)\) is nonempty and bounded.

Proof

We follow the reasoning line of [2, Theorem 3.16]. Since h is proper, continuous and strongly quasiconvex, \(Y \ne \emptyset \) by Lemma 2.4. Now, suppose for the contrary that Y is unbounded. Then there exist sequences \(\{x^{k}\}_{k} \subseteq K\) and \(\xi ^{k} \in \partial ^{K}_{\beta , \gamma } h(x^{k})\) such that \(\Vert \xi ^{k} \Vert \rightarrow + \infty \) as \(k \rightarrow + \infty \).

Since K is compact, \((\mathrm{int\,dom}\,h)^{c}\) is closed and \(K \cap (\mathrm{int\,dom}\,h)^{c} = \emptyset \). Then there exists \(\varepsilon > 0\) such that

On the other hand, since \(\xi ^{k} \in \partial ^{K}_{\beta , \gamma } h(x^{k})\), we have

Take \(y = x^{k} + \frac{\varepsilon }{2} \frac{\xi ^{k}}{\Vert \xi ^{k} \Vert } \, (\in \mathrm{int\,dom}\,h)\). Then,

Taking \(0< \lambda = \frac{\gamma \beta }{1 + \gamma \beta } < 1\), we have

We claim that the left-hand side in (3.10) is bounded. Indeed, suppose for the contrary that there exist subsequences \(\{x^{k_{l}}\}_{l}\) and \(\left\{ \frac{\xi ^{k_{l}}}{\Vert \xi ^{k_{l}} \Vert }\right\} _{l}\) such that

Now, without loss of generality, we assume that

Since \(\{x^{k_{l}}\}_{l}\) and \(\left\{ \frac{\xi ^{k_{l}}}{\Vert \xi ^{k_{l}} \Vert }\right\} _{l}\) are bounded sequences, there exist sequences \(\{x^{k_{l_{j}}}\}_{j}\) and \(\left\{ \frac{\xi ^{k_{l_{j}}}}{\Vert \xi ^{k_{l_{j}}} \Vert }\right\} _{j}\) such that \(x^{k_{l_{j}}} \rightarrow \widehat{x}\) and \(\frac{\xi ^{k_{l_{j}}} }{\Vert \xi ^{k_{l_{j}}}\Vert } \rightarrow \widehat{\xi }\) as \(j \rightarrow + \infty \). Finally, since \(\{x^{k_{l_{j}}}\}\), \(\{x^{k_{l_{j}}} + \frac{\varepsilon }{2} \frac{\xi ^{k_{l_{j}}}}{\Vert \xi ^{k_{l_{j}}} \Vert }\}\) and \(\widehat{x} + \frac{\varepsilon }{2} \widehat{\xi }\) belongs to \(\mathrm{int\,dom}\,h\), it follows from the continuity of h that

which contradicts (3.11). Therefore, the left-hand side in (3.10) is bounded, i.e., the right-hand side in (3.10) is bounded too, which contradicts the fact that \(\Vert \xi ^{k} \Vert \rightarrow + \infty \).

Therefore, Y is bounded, and the proof is complete \(\square \)

3.2 The algorithm

In order to present our algorithm, we first consider the following compatibility conditions:

- (C1):

-

\(K \subseteq \mathrm{int\,dom}\,h\).

- (C2):

-

There exists \(M > 0\) such that \(\Vert \xi \Vert \le M\) for all \(\xi \in \partial ^{K}_{\beta , \gamma } h(x)\) and all \(x \in K\).

- (C3):

-

The sequence \(\{\alpha _k\}_k \subseteq \,]0,+\infty [\) is such that \(0< \alpha _{k} < \frac{1}{\gamma \beta }\) for every \(k \in \mathbb {N}\), and

$$\begin{aligned} \sum _{k=0}^{\infty } \alpha _k = + \infty , ~~~ \sum _{k=1}^{\infty } \alpha _k^2 < + \infty . \end{aligned}$$(3.12)

We analyze the previous assumptions below.

Remark 3.2

- (i):

-

Assumptions (Ci) with \(i=1,2,3\) are usual assumptions for subgradient algorithms. Indeed, (C1) and (C2) are actually used for convex functions with the convex subdifferential (see Assumption 8.7(C) and Assumption 8.12 in [2], respectively).

- (ii):

-

As in the convex case, we know by Proposition 3.4 that if K is compact, then assumption (C3) holds for proper, continuous and strongly quasiconvex functions.

The conceptual algorithm to find a solution of problem (COP) is given below.

Remark 3.3

- (i):

-

Note that under assumption (A), Algorithm 1 is well-defined. Indeed, for every \(k \in \mathbb {N}\), the subgradient \(\xi ^{k} \in \partial ^{K}_{\beta , \gamma } h(x^{k})\) exists by Lemma 2.4. Furthermore, under assumption (C1) and [12, Proposition 7(d)], we have for every iterate \(x^k\) that \(\partial _{\beta , \gamma }^{K} h(x^k)\) is bounded.

- (ii):

-

If Algorithm 1 stops, then \(0 \in \partial _{\beta , \gamma }^{K}h(x^{k})\), thus \(x^{k} \in \textrm{argmin}_{K}\,h\) by Lemma 2.3, i.e., \(x^{k}\) is solution to problem (COP).

- (iii):

-

The stopping criteria “\( 0 \in \partial _{\beta , \gamma }^{K}h(x^{k})\)” can be replaced by “\(x^{k+1}=x^{k}\)”. Indeed, if \(x^{k+1}=x^{k}\), then \(x^{k} = P_{K}(x^k - \alpha _k \xi ^{k})\), i.e., relation (2.1) yields

$$\begin{aligned} \langle - \alpha _k \xi ^k, y - x^k \rangle \le 0, ~ \forall ~ y \in K \, \Longleftrightarrow \, \langle \xi ^k, y- x^k\rangle \ge 0, ~ \forall ~ y \in K. \end{aligned}$$Since \(\xi ^k\in \partial _{\beta ,\gamma }^{K}h(x^{k})\) and \(\langle \xi ^k, y- x^k\rangle \ge 0\) for all \(y \in K\), we have

$$\begin{aligned} \max \{h(y), h(x^k)\} \ge h(x^k)+ \frac{\lambda }{2} \left( \gamma - \frac{\lambda }{\beta } - \lambda \gamma \right) \Vert y - x^k \Vert ^{2}, ~ \forall ~ y \in K, ~ \forall ~ \lambda \in [0, 1]. \end{aligned}$$Taking \(0<\lambda<\frac{\gamma \beta }{1+\gamma \beta }<1\), we have

$$\begin{aligned} \max \{h(y), h(x^k)\} > h(x^k), ~ \forall ~ y \in K \backslash \{x^{k}\}, \end{aligned}$$i.e., \(x^{k} \in \textrm{argmin}_{K}\,h\). Therefore, if \(x^{k+1}=x^{k}\), then \(x^{k} \in \textrm{argmin}_{K}\,h\).

- (iv):

-

The projection step (3.13) is equivalent to the following regularized minimization problem

$$\begin{aligned} x^{k+1} = \textrm{argmin} \left\{ \langle \alpha _k \xi ^k, y \rangle + \frac{1}{2} \Vert y - x^{k} \Vert ^2: \, y \in K \right\} . \end{aligned}$$(3.14)Indeed, (3.14) is equivalent to

$$\begin{aligned} x^{k+1} = \textrm{argmin}\left\{ \langle \alpha _k \xi ^k, y \rangle + \frac{1}{2} \Vert y - x^{k} \Vert ^2 + \iota _K(y): \, y \in \mathbb {R}^n \right\} . \end{aligned}$$Hence, using the optimality condition, we have

$$\begin{aligned} 0&\in \alpha _k \xi ^k + x^{k+1} - x^{k} + N_K(x^{k + 1}) \Longleftrightarrow (I + N_K) x^{k+1} = x^{k} - \alpha _k \xi ^{k} \\&\Longleftrightarrow x^{k+1} = (I+N_K)^{-1} (x^{k} - \alpha _k \xi ^{k}) \Longleftrightarrow x^{k+1}= P_K(x^{k}-\alpha _k \xi ^{k}), \end{aligned}$$where the last equivalence holds due to [1, Example 23.4].

3.3 Convergence analysis

In order to prove the convergence of the sequence generated for Algorithm 1 under assumption (A), we consider the following set:

Note that under assumption (A), \(\mathop {\textrm{argmin}}\limits _{K} h\) is a singleton by [15, Corollary 3], thus \(\Omega \ne \emptyset \).

The following corollary is an easy consequence of Proposition 3.2 which is a key for establishing the convergence analysis of subgradient methods.

Corollary 3.1

Let \(K\subseteq \mathbb {R}^{n}\) be a closed and convex set, \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a function such that assumption (A) holds, \(\{\alpha _{k}\}_{k}\) be a sequence of positive numbers, \(\{x^{k}\}_{k}\) and \(\{\xi ^{k}\}_{k}\) be the sequences generated by Algorithm 1 and \(\widetilde{x} \in \Omega \). Then, for every \(k \in \mathbb {N}\), we have

Proof

It follows immediately from relation (3.2) and Proposition 3.2(b) with \(x^{+}=x^{k+1}, z=x^k\), \(\xi =\xi ^k, x = \widetilde{x}\) and \(\alpha = \alpha _k\). \(\square \)

We also have the following.

Corollary 3.2

Let \(K\subseteq \mathbb {R}^{n}\) be a closed and convex set, \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a function such that assumption (A) holds, \(\{\alpha _{k}\}_{k}\) be a sequence of positive numbers, and \(\{x^{k}\}_{k}\) and \(\{\xi ^{k}\}_{k}\) be the sequences generated by Algorithm 1. Then, for every \(k \in \mathbb {N}\), we have

Proof

It follows immediately from Proposition 3.1(a) with \(x^{+} = x^{k+1}\), \(z=x^k\), \(\xi = \xi ^k\) and \(\alpha = \alpha _k\). \(\square \)

Another result that will be useful for the convergence of the algorithm is the following:

Proposition 3.5

Let \(K \subseteq \mathbb {R}^{n}\) be a closed and convex set, \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a function such that assumption (A) holds, \(\{\alpha _{k}\}_{k}\) be a sequence of positive numbers and \(\{x^{k}\}_{k}\) and \(\{\xi ^{k}\}_{k}\) be the sequences generated by Algorithm 1. If assumptions (Ci) with \(i=1,2,3\) holds, then

- (a):

-

for every \(\widetilde{x} \in \Omega \) we have

$$\begin{aligned}&\lim _{k \rightarrow \infty } \langle \xi ^{k}, x^{k} - \widetilde{x} \rangle = 0; \end{aligned}$$(3.19) - (b):

-

\(\sum _{k=0}^{\infty } \Vert x^{k+1} - x^{k}\Vert ^{2}<+ \infty \). In particular, \(\lim _{k\rightarrow + \infty }\Vert x^{k+1}-x^{k}\Vert =0\).

Proof

(a): Since \(\xi ^{k} \in \partial ^{K}_{\beta , \gamma } h(x^{k})\) for every \(k \in \mathbb {N}\) and \(\widetilde{x} \in \Omega \), we have by Lemma 2.5 that

Furthermore, it follows from relation (3.16) that

Then, \(\sum _{k=0}^{\infty } \alpha _k \langle \xi ^k, x^k - \widetilde{x} \rangle <+ \infty \). Finally, by (C3), \(\sum _{k=0}^{\infty } \alpha _k = + \infty \), thus \(\lim _{k \rightarrow \infty } \langle \xi ^k, x^k - \widetilde{x} \rangle = 0\).

(b): It follows directly by summing up in (3.18) (after squaring both sides of the equality) taking into account (Ci) with \(i=1,3\). \(\square \)

Our main result, which shows that the sequences \(\{x^{k}\}_{k}\), generated by Algorithm 1, converge to the optimal solution of problem (COP) under assumption (A), is given below.

Theorem 3.1

Let \(K \subseteq \mathbb {R}^{n}\) be a closed and convex set, \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a function such that assumption (A) holds, \(\{\alpha _{k}\}_{k}\) be a sequence of positive numbers and \(\{x^{k}\}_{k}\) and \(\{\xi ^{k}\}_{k}\) be the sequences generated by Algorithm 1. Suppose that Assumption (Ci) with \(i=1,2,3\) holds. Then \(\{x^{k}\}_{k}\) converges to \(\{\overline{x}\} = \textrm{argmin}_{K}\,h\). If moreover, h is continuous, \(\lim _{k \rightarrow + \infty } h(x^{k}) = h(\overline{x}) = \min _{K} h\).

Proof

By assumption (C2), we have \(\Vert \xi ^k\Vert \le M\) for every \(k \in \mathbb {N}\). Hence, we have from (3.17) that for every \(\widetilde{x} \in \Omega \),

Furthermore, since \(\sum _{k=0}^{\infty } \alpha _k^2<+\infty \) by (C3), it follows from Lemma 2.6(a) that \(\{x^{k}\}_k\) is quasi-Fejér and, as a consequence, \(\{x^k\}_k\) is bounded, i.e., it has cluster points. Let \(\widehat{x} \in K\) a cluster point of \(\{x^k\}_k\). Then there exists a subsequence \(\{x^{k_j}\}_{j} \subseteq \{x^k\}_k\) such that \(x^{k_j} \rightarrow {\widehat{x}}\) as \(j \rightarrow + \infty \).

Since for every k, \(\xi ^k \in \partial ^{K}_{\beta ,\gamma } h(x^{k})\), then for every \(j \in \mathbb {N}\),

Take \(y = \overline{x}\) (where \(\{\overline{x}\} = \textrm{argmin}_{K}\,h \subseteq \Omega )\). Then

which is equivalent to (taking \(0<\lambda < \frac{\gamma \beta }{1 + \gamma \beta }\))

Since \(x^{k_j} \rightarrow {\widehat{x}}\) as \(j \rightarrow + \infty \) and \(\lim _{j \rightarrow \infty } \langle \xi ^{k_{j}}, x^{k_{j}} - \overline{x} \rangle = 0\) by Proposition 3.5(i), taking the limit as \(j\rightarrow +\infty \) in (3.21) we conclude that \( \Vert \overline{x} - \widehat{x} \Vert ^2=0\), i.e., \( \overline{x} = \widehat{x}\). Hence, every cluster point of the sequence \(\{x^{k}\}_{k}\) is a minimum of problem (COP), and since h is strongly quasiconvex by (A), \(\textrm{argmin}_{K}\,h\) is a singleton, hence the whole sequence \(\{x^{k}\}_{k}\) converges to \(\{\overline{x}\} = \textrm{argmin}_{K}\,h\) by Lemma 2.6(b), and the proof is complete. The last statement of this theorem follows immediately from the fact that \(\lim _{k\rightarrow +\infty }x^{k}=\overline{x}\), continuity of h and Lemma 2.1. \(\square \)

Next, we show a quasi-linear convergence rate of the iterates.

Corollary 3.3

Let \(K \subseteq \mathbb {R}^{n}\) be a closed and convex set, \(h: \mathbb {R}^{n} \rightarrow \overline{\mathbb {R}}\) be a function such that assumption (A) holds, \(\{\alpha _{k}\}_{k}\) be a sequence of positive numbers and \(\{x^{k}\}_{k}\) and \(\{\xi ^{k}\}_{k}\) be the sequences generated by Algorithm 1. Suppose that Assumption (Ci) with \(i=1,2,3\) holds. Then, for \(\{\overline{x}\} = \textrm{argmin}_{K}\,h\), the sequence \(\{x^k\}_k\) converges quasi linearly to \(\overline{x}\), and

with \(\lim _{k\rightarrow +\infty }\epsilon _k =0\).

Proof

From (3.17) and assumption (C2) it follows that for any \(k\in \mathbb {N}\),

Since \(1-\gamma \beta \alpha _k<1\) and \(\sum _{k}^{\infty } \alpha ^2_k <+\infty \) by assumption (C3), we show the first statement. Moreover, we obtain recursively

which implies (3.22) with \(\epsilon _k=\sum _{j=0}^{k-1}\alpha _{j-1}^{2}\). \(\square \)

We finish the paper with the following remark.

Remark 3.4

In [6], the authors uses Plastria’s subdifferential [17] for quasiconvex Lipschitz continuous functions, which is completely different from our case with strongly quasiconvex functions with strong subdifferential because there is no relationship between strongly quasiconvex functions and quasiconvex Lipschitz continuous functions. Indeed, the function \(h(x) = \sqrt{|x |}\) is strongly quasiconvex on any ball centered at the origin without being Lipschitz continuous while the function \(h(x) = 0\) is quasiconvex and Lipschitz continuous without being strongly quasiconvex.

4 Conclusions

The main difference between Algorithm 1 with other subgradient methods based on generalized subdifferentials for quasiconvex functions (see [6, 8, 13, 14]) is that we use the strong subdifferential (see relation (2.8)). This theoretical difference which has many algorithmic consequences as, for instance, the strong subdifferential is compact at every point of the interior of the effective domain of the function (Lemma 2.2) while other generalized subdifferentials are unbounded (see [6, 8, 13, 14]). Furthermore, in [6, 8, 13, 14], the subgradient is taken from those unbounded subdifferentials and it needs to be normalized, thus the subgradient that they used belongs to the intersection with the unit ball in \(\mathbb {R}^n\). In our case, Algorithm 1 can take any vector from the strong subdifferential and since the strong subdifferential was motivated by strongly quasiconvex functions, its geometrical structure will provide much more valuable information.

We added two examples to show the difference between the strong subdifferential and the intersection of the GP subdifferential and the unit ball. In these examples, we can see the advantages of using the strong subdifferential instead of the GP subdifferential.

Let \(h: \mathbb {R}^n \rightarrow \mathbb {R}\) be a quasiconvex function and \(x \in \mathbb {R}^n\). Then the Greenberg–Pierskalla (GP henceforth) of h at x is defined by

while all the other subdifferentials used in [13] are variants of the previous one. Clearly, \( \partial ^{GP}\) is unbounded (as well as its variants of [13] and the ones used in [6, 8, 14]).

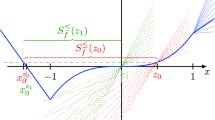

Now, let us consider the following examples:

- (i):

-

Let \(K = [-1, 1]\) and \(h: \mathbb {R} \rightarrow \mathbb {R}\) be given by \(h(x) = \sqrt{|x|}\). h is strongly quasiconvex on K with modulus \(\gamma = \frac{1}{2} > 0\). Take \(x = 0\) and \(\beta = 1\). Then, by [12, Remark 20] we have

$$\begin{aligned} \partial ^{[-1,1]}_{1,\frac{1}{2}} h(0) = [-\frac{3}{2}, \frac{3}{2}], \end{aligned}$$while \(\partial ^{GP} h(0) = \mathbb {R}\) and \(\partial ^{GP} h(0) \cap B(0, 1) = \{-1,1\}\)

- (ii):

-

Let \(h: \mathbb {R} \rightarrow \overline{\mathbb {R}}\) be given by

$$\begin{aligned} h(x)=\left\{ \begin{array}{ll} 0,~~ & \textrm{if} ~ x = 0,\\ -\frac{1}{x}, & \textrm{if} ~ 0 < x \le 1,\\ +\infty , & \textrm{otherwise}. \end{array} \right. \end{aligned}$$Note that h is strongly quasiconvex with modulus \(\gamma _{h} = 1\) (see [10]). Take \(\overline{x}=0\) and \(\beta = 1\). Then by [12, Remark 6(ii)] we have

$$\begin{aligned} \partial ^{[-1, 1]}_{1, 1} h(0) = \Bigg ] - \infty , - \frac{1}{2} \Bigg ], \end{aligned}$$while \(\partial ^{GP} h(0) = \, ]- \infty , 0[\) and \(\partial ^{GP} h(0) \cap B(0,1)= \{-1\}\).

We observe that the strong subdifferential is smaller than the GP subdifferential and its variants for this class of functions. For instance, in case (i), where the function is continuous, the GP subdifferential is the entire space, which is not particularly informative, while the strong subdifferential is compact. In the more challenging case (ii), where the function is not even lower semicontinuous, both subdifferentials are unbounded. However, the strong subdifferential is smaller and has a positive distance from zero, which could prevent the algorithm from terminating prematurely due to approximation errors.

We hope this research offers new insights into subgradient-type methods for a broader range of quasiconvex functions. This includes nonlinear projected subgradient methods [3], conditional subgradient methods [7], incremental subgradient methods [8], and other approaches involving strongly quasiconvex functions and strong subdifferential.

Availability of supporting data

No data sets were generated during the current study.

References

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operators Theory in Hilbert Spaces. CMS Books in Mathematics, 2nd edn. Springer, Berlin (2017)

Beck, A.: First Order Methods in Optimization. MOS-SIAM, Series on Optimization. SIAM, Philadelphia (2017)

Beck, A., Teboulle, M.: Mirror descent and nonlinear projected subgradient methods for convex optimization. Oper. Res. Lett. 31, 167–175 (2003)

Burachik, R.S., Graña Drummond, L.M., Iusem, A., Svaiter, B.F.: Full convergence of the steepest descent method with inexact line search. Optimization 32, 137–146 (1995)

Combettes, P.L.: Quasi-Fejerian analysis of some optimization algorithms. In: Inherently parallel algorithms in feasibility and optimization and their applications (Haifa, 2000), volume 8. Stud. Comput. Math. 2, 115–152 (2001)

Cruz Neto, J.X., Lopes, J.O., Tragliai, M.V.: Algorithms for quasiconvex minimization. Optimization 60, 1105–1117 (2011)

Hu, Y., Li, J., Yu, C.: Convergence rates of subgradient methods for quasi-convex optimization problems. Comp. Optim. Appl. 77, 183–212 (2020)

Hu, Y., Wai Yu, C.K., Yang, X.: Incremental quasi-subgradient methods for minimizing the sum of quasi-convex functions. J. Global Optim. 75, 1003–1028 (2019)

Hu, Y., Yang, X., Sim, C.K.: Inexact subgradient methods for quasi-convex optimization problems. Eur. J. Oper. Res. 240, 315–327 (2015)

Jovanović, M.: On strong quasiconvex functions and boundedness of level sets. Optimization 20, 163–165 (1989)

Jovanović, M.: A note on strongly convex and quasiconvex functions. Math. Notes 60, 584–585 (1996)

Kabgani, A., Lara, F.: Strong subdifferentials: theory and applications in nonconvex optimization. J. Global Optim. 84, 349–368 (2022)

Kiwiel, K.C.: Convergence and efficiency of subgradient methods for quasiconvex minimization. Math. Prog. Ser. A 99, 1–25 (2001)

Konnov, I.V.: On convergence properties of a subgradient method. Optim. Methods Softw. 18(1), 53–62 (2003)

Lara, F.: On strongly quasiconvex functions: existence results and proximal point algorithms. J. Optim. Theory Appl. 192, 891–911 (2022)

Penot, J.P.: Are generalized derivatives useful for generalized convex functions? In: Crouzeix, J.P., et al. (eds.) Generalized Convexity, Generalized Monotonicity, pp. 3–60. Kluwer, Philadelphia (1998)

Plastria, F.: Lower subdifferentiable functions and their minimization by cutting planes. J. Optim. Theory Appl. 46, 37–53 (1985)

Polyak, B.T.: Existence theorems and convergence of minimizing sequences in extremum problems with restrictions. Soviet Math. 7, 72–75 (1966)

Rockafellar, R.T.: Generalized directional derivatives and subgradients of nonconvex functions. Can. J. Math. 32, 257–280 (1980)

Vandenberghe, L., Optimization Methods for Large-Scale Systems. EE236C lecture notes, UCLA (2016)

Yen, L.H., Mu, L.D.: A parallel subgradient projection algorithm for quasiconvex equilibrium problems under the intersection of convex sets. Optimization 71, 4447–4462 (2022)

Yu, C., Hu, Y., Yang, X., Choy, S.K.: Abstract convergence theorem for quasi-convex optimization 507 problems with applications. Optimization 68, 1289–1304 (2018)

Acknowledgements

We dedicated our work to the first author of this manuscript, our formed student, colleague and friend MSc. Juan Choque, who passed away on August 13, 2024, after the submission of the file to the journal.

Funding

This research was partially supported by postgraduate scholarship Apoyo Institucional para Magíster Académicos de la Universidad de Tarapacá, Arica, Chile (Choque), by ANID–Chile under project Fondecyt Regular 1241040 (Lara) and by BASAL fund FB210005 for center of excellence from ANID-Chile (Marcavillaca).

Author information

Authors and Affiliations

Contributions

All authors contributed equally to the study conception, design and implementation of the paper.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

In loving memory of Juan Choque.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Choque, J., Lara, F. & Marcavillaca, R.T. A subgradient projection method for quasiconvex minimization. Positivity 28, 64 (2024). https://doi.org/10.1007/s11117-024-01082-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11117-024-01082-z