Abstract

In the online identification of continuous-time non-homogeneous fractional-order Hammerstein systems, traditional identification algorithms converge slowly and with low precision, and they have difficulty identifying multiple non-homogeneous fractional orders and the system parameters simultaneously. This paper proposes an identification method based on the multi-innovation identification principle. First, the Hammerstein non-homogeneous fractional-order continuous-time system is given, and the parameters to be identified are specified. Second, based on the Riemann–Liouville differential operator, the partial derivative of the objective function with respect to the non-homogeneous fractional orders is derived, which ensures that the system coefficients and the non-homogeneous fractional orders can be identified at the same time. Then, within the given value range of the fractional-order vector, the partial derivative equation is progressively simplified to make it convenient for online calculation. The multi-innovation principle is also introduced into the traditional Levenberg–Marquardt algorithm, which improves the convergence speed and precision of the algorithm. Finally, the validity of the theory is illustrated through two experiments: a numerical simulation example of a fractional-order system and a numerical example of a flexible manipulator system. The experiments show that the proposed algorithm performs well both in the simulation example and on the actual system.

1 Introduction

As research on system control, fault diagnosis, life prediction, etc. [1,2,3,4,5] demands increasingly accurate system models, fractional operators have attracted extensive attention from scholars. Researchers have integrated fractional operators with various disciplines to derive fractional systems in many fields, such as fractional financial risk systems, fractional power systems, fractional sticky systems, and fractional novel coronavirus transmission systems [6,7,8,9,10]. Fractional-order phenomena with heredity and memory have been shown to be ubiquitous in practical systems.

Accurate system models and parameters are prerequisites for accurate controller design, so research on modeling and identification methods for fractional-order systems is particularly important. In recent years, a series of identification methods for fractional-order systems has been proposed, mainly based on the least squares method and the gradient method. For least squares algorithms, Ahmed et al. assumed a known fractional order, used the integral equation method to study fractional-order model identification from the step response, and used least squares to estimate the time delay and the system coefficients simultaneously [11]. Djouambi et al. studied the difference between least squares and recursive least squares when the fractional order is known, and used the recursive method to weaken the influence of the singular matrix; their experiments showed that recursive least squares gives better parameter estimates for fractional-order systems [12]. Zhao used the least squares method to identify the coefficients of the KiBaM nonlinear fractional-order system, and successfully identified the fractional order of the system using the P-type order learning rate [13]. For gradient algorithms, Karima Hammar et al. proposed using the Levenberg–Marquardt (LM) algorithm to identify fractional system parameters and fractional orders simultaneously, and applied it to two actual systems, a flexible manipulator system [14] and a halogen lamp heating system [15]; the established models fit the actual system outputs well. Wang et al. studied nonlinear fractional-order systems with colored noise and proposed using the multi-innovation gradient descent algorithm to identify the system coefficients and the fractional order, completing the identification task in combination with the key-term separation method [16]. Zhang et al. designed an LM identification algorithm based on the multi-innovation principle to improve convergence speed and identification accuracy. First, it solved the difficult identification problem of the fractional-order Hammerstein system with colored noise [17]. Second, to separate the static nonlinear term and the dynamic linear term of the fractional-order Hammerstein system, a neuro-fuzzy method was proposed to fit the polynomial of the nonlinear term, thereby linearizing the system as a whole and reducing the identification difficulty [18]. Recently, Zhang et al. proposed a hybrid identification algorithm that uses the multi-innovation stochastic gradient method and the multi-innovation LM algorithm to separate the identification of the system parameters from that of the fractional order, with the two algorithms working alternately [19].

The above methods are all proposed for homogeneous fractional-order systems. In practical systems, however, non-homogeneous orders are ubiquitous, and the homogeneous order is a special case of the non-homogeneous one. Identification methods for non-homogeneous fractional-order systems therefore deserve attention. Some scholars have already studied such methods. For example, Abir, Stéphane and co-workers used irrational number fitting combined with the LM algorithm to identify non-homogeneous fractional orders, and proposed a three-stage identification method to reduce the computational load of non-homogeneous system identification [20, 21]. However, no in-depth research has been carried out on how to solve the irrational partial derivatives with respect to the non-homogeneous fractional orders. For the problem of solving these partial derivatives in minimum-error fractional-order identification, Wang J. et al. gave detailed and clear derivations, but the resulting computational load is too large [22]. Other studies entrust the identification of the fractional order entirely to swarm intelligence algorithms [23,24,25]. Although these methods can identify the fractional orders of the system, the results are not stable enough, and in non-homogeneous systems with many fractional orders to identify, the computation time is often long.

At present, system identification methods are generally divided into two types: online identification and offline identification. Some scholars have proposed a series of offline identification methods for fractional-order systems. Tang et al. proposed a numerical calculation method for fractional linear systems using block pulse functions, and proposed transforming the system and using the interior point method to identify the parameters of systems with time delay [26]. Similarly, Kothari et al. used the Haar wavelet to transform fractional-order systems numerically, and also studied parameter identification for fractional-order systems with time delays [27, 28]. In follow-up work, Wang et al. studied the advantages and disadvantages of various wavelets for modulating fractional system signals. Based on an experimental comparison, the Legendre wavelet was used both to fit the system and to filter it. Finally, the parameters of the fractional-order system with nonlinear terms were successfully identified.

The above methods are generally based on signal modulation; they have high identification accuracy and a certain ability to suppress signal noise. However, because these methods place few restrictions on computation time, they are suited only to offline identification, and they require a large amount of data storage and memory [29]. In addition, in actual production some reaction processes cannot be interrupted, which makes offline identification methods inapplicable. There is therefore an urgent need for online identification methods for systems that cannot be interrupted. The purpose of this paper is to propose an online identification method for the parameters of non-homogeneous Hammerstein fractional-order systems. The main contributions are as follows: (1) Combined with the Riemann–Liouville (RL) fractional-order operator, a new gradient calculation method is proposed, which solves the problem of simultaneously identifying multiple different fractional orders of non-homogeneous fractional-order system models. (2) The multi-innovation principle is introduced into the iterative process of the algorithm, and historical information is used to speed up convergence and improve identification accuracy. (3) The online identification equations based on the multi-innovation LM algorithm are derived, which provides a real-time identification strategy for non-stop systems.

The rest of this paper is arranged as follows: Sect. 2 introduces the basic theory of fractional calculus and explains the representation of fractional-order operators and the Laplace transform of fractional calculus. Section 3 introduces the structure of the Hammerstein non-homogeneous fractional-order system, derives a system representation convenient for identification, and extracts all the parameters to be identified. Section 4 introduces the LM algorithm based on the multi-innovation principle and uses it to identify the parameters of the system model from Sect. 3; it describes the calculation of the fractional-order gradients in detail and summarizes the algorithm flow. Section 5 uses a numerical example and a flexible manipulator system to verify the algorithm. The experimental results show that the proposed method performs well in identifying parameters and fitting the actual system.

2 Fundamentals of fractional calculus

2.1 Fractional calculus

Unlike an integer-order system, whose order is an integer, a fractional-order system has an order \(\alpha \in {\mathbb{C}}\). When the real part of \(\alpha\) satisfies \({\text{Re}} (\alpha ) > 0\), the fractional-order integral of any integrable function \(f(t)\) can be defined by the fractional-order RL integral operator as follows [30]:
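In its standard form, which Eq. (1) is assumed to follow here, the RL fractional integral reads:

\[
{}_{t_{0}}I_{t}^{\alpha } f(t) = {}_{t_{0}}D_{t}^{ - \alpha } f(t) = \frac{1}{\Gamma (\alpha )}\int_{t_{0}}^{t} \frac{f(\tau )}{(t - \tau )^{1 - \alpha }}\,{\text{d}}\tau .
\]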

In Eq. (1), \({}_{t_{0}} I_{t}^{\alpha }\) represents the fractional integral operator of order \(\alpha\), \({}_{t_{0}} D_{t}^{ - \alpha }\) represents the fractional differential operator of order \(- \alpha\), and \(t_{0}\) represents the initial time of the system. When \(t_{0} = 0\), \({}_{t_{0}} I_{t}^{\alpha }\) is abbreviated as \(I^{\alpha }\) and \({}_{t_{0}} D_{t}^{ - \alpha }\) as \(D^{ - \alpha }\).

\(\Gamma (\alpha )\) represents the Gamma function [31], which is defined as:
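The standard integral definition, assumed to be the content of Eq. (2), is:

\[
\Gamma (\alpha ) = \int_{0}^{\infty } x^{\alpha - 1} e^{ - x}\,{\text{d}}x, \quad {\text{Re}} (\alpha ) > 0.
\]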

When the real part of \(\alpha\) satisfies \({\text{Re}} (\alpha ) < 0\), the integral that defines \(\Gamma (\alpha )\) in Eq. (2) diverges, so the fractional derivative of the function \(f(t)\) cannot be calculated directly from Eq. (1). Therefore, \(f(t)\) is first integrated by the fractional RL integral operator, and the result is then differentiated by the integer-order differentiation rule. The RL derivative is defined as follows [32]:
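The standard RL derivative of order \(\beta > 0\), which Eq. (3) is assumed to match, uses the integer \(n\) with \(n - 1 \le \beta < n\):

\[
{}_{t_{0}}D_{t}^{\beta } f(t) = \frac{{\text{d}}^{n} }{{\text{d}}t^{n} }\left[ {}_{t_{0}}I_{t}^{n - \beta } f(t) \right] = \frac{1}{\Gamma (n - \beta )}\frac{{\text{d}}^{n} }{{\text{d}}t^{n} }\int_{t_{0}}^{t} \frac{f(\tau )}{(t - \tau )^{\beta - n + 1} }\,{\text{d}}\tau .
\]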

where \(\beta = - \alpha\). Under the zero initial condition (\(t_{0} = 0\)), \({}_{t_{0}} I_{t}^{ - \beta }\) is abbreviated as \(I^{ - \beta }\) and \({}_{t_{0}} D_{t}^{\beta }\) as \(D^{\beta }\).
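For readers who want to evaluate such operators numerically, the sketch below uses the Grünwald–Letnikov (GL) approximation, which is a substitute technique and not the discretization used in this paper. Under zero initial conditions it approximates the RL derivative of a sufficiently smooth sampled signal; a negative order gives a fractional integral. The function names `gl_weights` and `gl_derivative` are hypothetical.

```python
import math
import numpy as np


def gl_weights(alpha, n):
    """Return the first n Grünwald-Letnikov weights w_k = (-1)^k * binom(alpha, k)."""
    w = np.empty(n)
    w[0] = 1.0
    for k in range(1, n):
        # Recurrence that avoids evaluating binomial coefficients directly.
        w[k] = w[k - 1] * (1.0 - (alpha + 1.0) / k)
    return w


def gl_derivative(f, alpha, h):
    """Approximate D^alpha f on a uniform grid with step h (zero initial conditions)."""
    f = np.asarray(f, dtype=float)
    n = len(f)
    w = gl_weights(alpha, n)
    out = np.empty(n)
    for i in range(n):
        # sum_{k=0}^{i} w_k * f[i - k], scaled by h^(-alpha)
        out[i] = np.dot(w[: i + 1], f[i::-1]) / h**alpha
    return out


# Example: the half-derivative of f(t) = t is t^0.5 / Gamma(1.5).
t = np.linspace(0.0, 1.0, 1001)
h = t[1] - t[0]
approx = gl_derivative(t, 0.5, h)
exact = t**0.5 / math.gamma(1.5)
print("max abs error:", np.max(np.abs(approx - exact)))
```

The GL sum is only first-order accurate in the step size, so the error shrinks roughly in proportion to `h`.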

2.2 Fractional Laplace transform

Like the calculus of integer order systems, the Laplace transform can also be used to describe fractional order systems. The Laplace transform of RL fractional calculus is defined as [29]:

Under zero initial conditions, the Laplace transform of the fractional derivative simplifies to:

The Laplace transform of the fractional integral under zero initial conditions is given by:
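In standard form, and assuming Eqs. (5) and (6) follow it, the two zero-initial-condition transforms above are:

\[
{\mathcal{L}}\left\{ D^{\alpha } f(t) \right\} = s^{\alpha } F(s), \qquad {\mathcal{L}}\left\{ I^{\alpha } f(t) \right\} = s^{ - \alpha } F(s),
\]

where \(F(s) = {\mathcal{L}}\{ f(t)\}\).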

3 Hammerstein non-homogeneous fractional order model

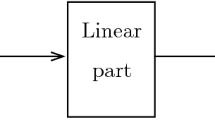

The Hammerstein non-homogeneous fractional-order system adds a nonlinear input block to the non-homogeneous linear fractional-order system. The non-homogeneous assumption is made to match actual systems better: although homogeneous systems have been studied extensively, non-homogeneous systems describe real-world systems more accurately. However, the non-homogeneous orders also make system identification more difficult. The identification of systems with non-homogeneous orders is described in detail below.

Figure 1 shows a Hammerstein non-homogeneous fractional-order system. A static nonlinear block acts on the input, so the forward path of the system consists of a nonlinear block and a transfer function in series.

In Fig. 1, the system input/output equations are expressed as follows:

Therefore, the complete system by Eqs. (7)-(9) can be expressed as follows:

Multiplying both sides of Eq. (10) by \(A(s,{{\varvec{\upalpha}}})\) gives:

where \(A(s,{{\varvec{\upalpha}}})\) and \(B(s,{{\varvec{\upbeta}}})\) in Eq. (11) are expressed as follows:

The nonlinear module \(f(u(t))\) in Eq. (11) is expressed as follows:

Therefore, by Eq. (7) and Eqs. (12)-(14), Eq. (11) can be expressed as:

Under zero initial conditions, applying Eq. (5) and the inverse Laplace transform and then rearranging terms, Eq. (15) can be expressed as:

In practice, the system usually contains noise. For readability, the symbol \({\Delta }^{\alpha }\) is used instead of \(D^{\alpha }\). According to Eq. (14), Eq. (16) can then be expressed as:

It is worth noting that in order to ensure the uniqueness of the system parameters in the case of the same system input and output, the model parameters must be standardized. Here, we set \(p_{1}\) to 1, then Eq. (17) can be rewritten as:

From Eq. (18), all the parameters of the system to be identified, including the system coefficients and the non-homogeneous fractional orders, can be summarized in the following form:

Next, we present the contribution of this work. We have developed a new method to identify all the parameters listed in Eq. (19), especially the fractional order in the system. This method can be adapted to the Hammerstein non-homogeneous fractional order model in Eq. (10).
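For orientation, one form of the model that is consistent with the sub-items given in Sect. 4 for \(n_{a} = 2\), \(n_{b} = 2\), \(n_{p} = 3\) is sketched here. The exact layout of Eqs. (12)-(14) and (18), and the way the noise \(v(t)\) enters, are assumptions:

\[
A(s,{{\varvec{\upalpha}}}) = 1 + \sum\limits_{i = 1}^{n_{a} } a_{i} s^{\alpha_{i} } ,\quad B(s,{{\varvec{\upbeta}}}) = \sum\limits_{j = 1}^{n_{b} } b_{j} s^{\beta_{j} } ,\quad f(u(t)) = \sum\limits_{k = 1}^{n_{p} } p_{k} u^{k} (t),
\]

\[
y(t) = - \sum\limits_{i = 1}^{n_{a} } a_{i} \Delta^{\alpha_{i} } y(t) + \sum\limits_{j = 1}^{n_{b} } b_{j} \Delta^{\beta_{j} } \left[ u(t) + \sum\limits_{k = 2}^{n_{p} } p_{k} u^{k} (t) \right] + v(t),\quad p_{1} = 1.
\]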

4 Multi-innovation LM identification method

The identification method in this paper is based on the output error method using the LM algorithm. This method combines the advantages of the steepest descent algorithm and Newton's method. To further adapt the algorithm, first the system of Eq. (18) is rewritten as:

where information vector \(\varphi (t,{\tilde{\mathbf{\alpha }}})\) is defined as:

In addition, the coefficients of the information vector \(\varphi (t,{\tilde{\mathbf{\alpha }}})\) are expressed as follows:

All fractional orders of the system are integrated as follows:

It can be seen from Eqs. (20)-(25) that the parameters to be identified are the coefficient \({\tilde{\mathbf{\theta }}}\) and the fractional order \({\tilde{\mathbf{\alpha }}}\) of the information vector \({\mathbf{\varphi }}(t,{\tilde{\mathbf{\alpha }}})\) respectively.

Then all the parameters of the system to be identified, namely the information vector coefficients and fractional order, can be collectively referred to as:

In order to identify the above parameters of \({{\varvec{\uptheta}}}\), the following objective function is defined:

where \(\varepsilon (t)\) represents the estimation error, and the equation is expressed as follows:

In order to minimize the objective function and achieve the purpose of identification, iterative optimization of the parameters to be identified is required. Obviously, according to the LM algorithm, the system coefficient vector \({\tilde{\mathbf{\theta }}}\) can be iterated by the following method:

where \({\tilde{\mathbf{\theta }}}(t)\) represents the identified parameter value at time \(t\), \(h\) represents the sampling time, \(\lambda\) is the harmonic coefficient, and \({\mathbf{J}}_{{{\tilde{\mathbf{\theta }}}}}^{\prime}\) and \({\mathbf{J}}_{{{\tilde{\mathbf{\theta }}}}}^{\prime\prime}\) are the first-order derivative and the Hessian matrix of the objective function \(J(t)\) with respect to the system coefficient vector \({\tilde{\mathbf{\theta }}}(t)\), respectively, which can be expressed as follows:

It can be seen from Eq. (30) that the gradient information about \({\tilde{\mathbf{\theta }}}(t)\) is simple to calculate using Eq. (20), which makes Eq. (29) easy to implement. In non-homogeneous systems, however, identifying the fractional orders is very difficult, because the sensitivity function method usually used for homogeneous fractional-order identification [14] does not work well for non-homogeneous systems. Therefore, we designed a new LM-based identification method to address the difficulty of identifying the non-homogeneous orders of systems such as Eq. (20). The iterative method is as follows:

where \({\tilde{\mathbf{\alpha }}}(t)\) represents the order to be identified at time \(t\), and \({\mathbf{J}}_{{{\tilde{\mathbf{\alpha }}}}}^{\prime}\) and \({\mathbf{J}}_{{{\tilde{\mathbf{\alpha }}}}}^{\prime\prime}\) are the first-order derivative and the Hessian matrix of the objective function with respect to the fractional-order parameter, respectively. They are calculated as follows:

where \({{\varvec{\upxi}}}_{{\hat{y}/\tilde{\alpha }}}\) represents the second-order partial derivative matrix of \({\mathbf{\varphi }}^{{\text{T}}} (t,{\tilde{\mathbf{\alpha }}}){\tilde{\mathbf{\theta }}}\) with respect to the fractional-order vector \({\tilde{\mathbf{\alpha }}}\), which can be derived as follows:

where the algebraic representation of \({{\varvec{\upsigma}}}_{{\hat{y}/\tilde{\alpha }}}\) is the key to iterative identification of parameter \({\tilde{\mathbf{\alpha }}}(t)\), which represents the first-order partial derivative of \({\mathbf{\varphi }}^{{\text{T}}} (t,{\tilde{\mathbf{\alpha }}}){\tilde{\mathbf{\theta }}}\) in Eq. (20) with respect to non-homogeneous fractional differential order vector. From Eq. (25), \({{\varvec{\upsigma}}}_{{\hat{y}/\tilde{\alpha }}}\) can be expressed as follows:

It can be seen from Eq. (34) that before giving the result of \({{\varvec{\upsigma}}}_{{\hat{y}/\tilde{\alpha }}}\), we first need to derive the partial derivative \(\sigma_{{\hat{y}/\tilde{\alpha }_{i} }}\) of \({\mathbf{\varphi }}^{{\text{T}}} (t,{\tilde{\mathbf{\alpha }}}){\tilde{\mathbf{\theta }}}\) with respect to the fractional order \(\tilde{\alpha }_{i}\). The derivation is based on the RL differential operator and proceeds as follows:

First, for the convenience of derivation, we define:

Note that, as can be seen from Eqs. (21), (24) and (35), \(g(t)\) is a sum formed from the sub-items of the information vector \({\mathbf{\varphi }}^{{\text{T}}} (t,{\tilde{\mathbf{\alpha }}})\) and the sub-items of the coefficient vector \({\tilde{\mathbf{\theta }}}\), and \(D^{{\tilde{\alpha }_{i} }} g_{i} (t,{\tilde{\mathbf{\theta }}})\) collects the sub-items of \(g(t)\) related to the fractional order \(\tilde{\alpha }_{i}\). For example:

When \(n_{a} = 2\), \(n_{b} = 2\), \(n_{p} = 3\), using Eq. (18), the sub-items of \(g(t)\) are:

\( \begin{gathered} D_{{}}^{{\tilde{\alpha }_{1} }} g_{1} (t,\widetilde{{\mathbf{\theta }}}) = - a_{1} \Delta ^{{\alpha _{1} }} y(t) \hfill \\ D_{{}}^{{\tilde{\alpha }_{2} }} g_{2} (t,\widetilde{{\mathbf{\theta }}}) = - a_{2} \Delta ^{{\alpha _{2} }} y(t) \hfill \\ D_{{}}^{{\tilde{\alpha }_{3} }} g_{3} (t,\widetilde{{\mathbf{\theta }}}) = b_{1} \Delta ^{{\beta _{1} }} u(t) + p_{2} b_{1} \Delta ^{{\beta _{1} }} u^{2} (t) + p_{3} b_{1} \Delta ^{{\beta _{1} }} u^{3} (t) \hfill \\ D_{{}}^{{\tilde{\alpha }_{4} }} g_{4} (t,\widetilde{{\mathbf{\theta }}}) = b_{2} \Delta ^{{\beta _{2} }} u(t) + p_{2} b_{2} \Delta ^{{\beta _{2} }} u^{2} (t) + p_{3} b_{2} \Delta ^{{\beta _{2} }} u^{3} (t) \hfill \\ \end{gathered} \).
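The grouping above can be made concrete with a short sketch that builds the regressor columns and the coefficient vector for this structure. It is an illustration under assumptions: the column ordering, the helper names `build_regressors` and `coefficient_vector`, the Grünwald–Letnikov discretization of \(\Delta^{\alpha}\), and all numerical values are hypothetical and are not taken from the paper.

```python
import numpy as np


def gl_derivative(f, alpha, h):
    """Grünwald-Letnikov approximation of D^alpha f on a uniform grid (assumed discretization)."""
    f = np.asarray(f, dtype=float)
    n = len(f)
    w = np.ones(n)
    for k in range(1, n):
        w[k] = w[k - 1] * (1.0 - (alpha + 1.0) / k)
    return np.array([np.dot(w[: i + 1], f[i::-1]) for i in range(n)]) / h**alpha


def build_regressors(u, y, alphas, betas, n_p, h):
    """Columns: -D^{alpha_i} y for each i, then D^{beta_j} u^k for k = 1..n_p, j = 1..n_b."""
    u = np.asarray(u, dtype=float)
    cols = [-gl_derivative(y, a, h) for a in alphas]
    for k in range(1, n_p + 1):
        for b in betas:
            cols.append(gl_derivative(u**k, b, h))
    return np.column_stack(cols)


def coefficient_vector(a, b, p):
    """theta = [a_i, then p_k * b_j for k = 1..n_p, j = 1..n_b], with p[0] = p_1 = 1."""
    return np.concatenate([a, np.outer(p, b).ravel()])


# Hypothetical data and parameter values, for illustration only.
rng = np.random.default_rng(0)
h = 0.01
u = rng.standard_normal(40)
y = rng.standard_normal(40)
alphas, betas = [0.4, 0.8], [0.3, 0.6]
a = np.array([0.5, 0.2])
b = np.array([1.0, 0.7])
p = np.array([1.0, 0.3, 0.1])

Phi = build_regressors(u, y, alphas, betas, n_p=3, h=h)
theta = coefficient_vector(a, b, p)
y_hat = Phi @ theta  # equals g_1 + g_2 + g_3 + g_4 from the sub-items above
```

With this layout there are \(n_{a} + n_{b} n_{p} = 8\) coefficients and \(n_{a} + n_{b} = 4\) fractional orders.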

Therefore,

Under zero initial conditions, according to Eq. (3), we can get:
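A sketch of this step, written in generic notation that may differ from the authors' Eq. (37): differentiating the RL derivative of Eq. (3) with respect to the order \(\tilde{\alpha }_{i}\), with \(n\) held fixed and \(g_{i} (t)\) short for \(g_{i} (t,{\tilde{\mathbf{\theta }}})\), gives

\[
\frac{\partial }{{\partial \tilde{\alpha }_{i} }}D^{{\tilde{\alpha }_{i} }} g_{i} (t) = \psi (n - \tilde{\alpha }_{i} )\,D^{{\tilde{\alpha }_{i} }} g_{i} (t) - \frac{1}{{\Gamma (n - \tilde{\alpha }_{i} )}}\frac{{{\text{d}}^{n} }}{{{\text{d}}t^{n} }}\int_{0}^{t} g_{i} (\tau )\ln (t - \tau )\,(t - \tau )^{{n - \tilde{\alpha }_{i} - 1}} \,{\text{d}}\tau ,
\]

because \(\frac{{\text{d}}}{{{\text{d}}\alpha }}\frac{1}{\Gamma (n - \alpha )} = \frac{\psi (n - \alpha )}{\Gamma (n - \alpha )}\) and \(\frac{{\text{d}}}{{{\text{d}}\alpha }}(t - \tau )^{n - \alpha - 1} = - \ln (t - \tau )\,(t - \tau )^{n - \alpha - 1}\).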

where \(\psi ( \cdot )\) denotes the Psi (digamma) function, which is defined as [33]:

Integrating and simplifying Eq. (37) gives:
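The standard definition, assumed to be the one intended here, is the logarithmic derivative of the Gamma function:

\[
\psi (x) = \frac{{\text{d}}}{{{\text{d}}x}}\ln \Gamma (x) = \frac{{\Gamma^{\prime}(x)}}{\Gamma (x)}.
\]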

where

It can be seen that Eq. (39) can be simplified as:

Combining Eqs. (36), (38) and (41), we can get:

Clearly, the key to calculating \(\sigma_{{\hat{y}/\tilde{\alpha }_{i} }}\) is to calculate \(w(t,\tilde{\alpha }_{i} )\). Since the integral term in Eq. (40) is difficult to calculate directly, it is replaced by a discrete approximation. Moreover, because \(\left\| {{\tilde{\mathbf{\alpha }}}} \right\| < 1\) in this study, \(n = 1\), so Eq. (40) can be simplified as follows:

So far, the calculation method for \(\sigma_{{\hat{y}/\tilde{\alpha }_{i} }}\) has been derived. The vector \({{\varvec{\upsigma}}}_{{\hat{y}/\tilde{\alpha }}}\) can be calculated according to Eq. (34), and the parameters to be identified can then be iterated by the LM algorithm using Eq. (29) and Eq. (31). However, the ordinary LM algorithm converges neither fast enough nor accurately enough. To overcome these difficulties, the multi-innovation principle is introduced into the traditional LM algorithm to improve its convergence speed and accuracy. First, the objective function of Eq. (27) is extended to a multi-innovation objective function and redefined as follows:

where \(P\) represents the length of multi-innovation,

In order to simplify Eq. (45) and facilitate the subsequent derivation, the system multi-innovation output vector \({\mathbf{Y}}(t,P)\) and its estimated vector \({\hat{\mathbf{Y}}}(t,P,{{\varvec{\uptheta}}})\) are defined as follows:

From Eq. (46) and Eq. (47), Eq. (45) can be rewritten as:

According to Eq. (48), Eq. (44) can be rewritten as:

where \(\left\| \cdot \right\|^{2}\) represents the sum of the square values of all elements in a vector.
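As a minimal sketch of the multi-innovation objective, the code below stacks the last \(P\) output errors into one vector and evaluates its squared norm. The factor \(1/2\), the truncation of the window at the start of the record, and the names `multi_innovation_error` and `multi_innovation_objective` are assumptions made for illustration.

```python
import numpy as np


def multi_innovation_error(y, Phi, theta, t_idx, P):
    """Stack the errors e(t), e(t-1), ..., e(t-P+1); fewer rows are used near t = 0."""
    lo = max(0, t_idx - P + 1)
    rows = slice(lo, t_idx + 1)
    E = y[rows] - Phi[rows] @ theta
    return E[::-1]  # most recent innovation first


def multi_innovation_objective(E):
    """Half the sum of squares of all elements of the stacked error vector (1/2 is assumed)."""
    return 0.5 * float(E @ E)


# Tiny example with one regressor column and hypothetical data.
y = np.array([1.0, 2.0, 3.0, 4.0])
Phi = np.ones((4, 1))
theta = np.array([1.0])
E = multi_innovation_error(y, Phi, theta, t_idx=3, P=2)
J = multi_innovation_objective(E)
```

With \(P = 1\) the stacked vector contains only the current error, so the objective reduces to the single-innovation case.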

According to the Eq. (26), the parameter \({{\varvec{\uptheta}}}\) includes the information vector coefficient \({\tilde{\mathbf{\theta }}}\) and the fractional order \({\tilde{\mathbf{\alpha }}}\), where the parameter adjustment method of \({\tilde{\mathbf{\theta }}}\) is described by the following equation:

where \({\mathbf{J}}_{{{\tilde{\mathbf{\theta }}}}}^{^{\prime}P}\) and \({\mathbf{J}}_{{{\tilde{\mathbf{\theta }}}}}^{^{\prime\prime}P}\) contain the multi-innovation data about \({\tilde{\mathbf{\theta }}}\), which is expressed as follows:

where \({\tilde{\mathbf{\Phi }}}(t,P,{\tilde{\mathbf{\alpha }}})\) is composed of \(P\) information vector matrices, expressed as follows:

So far, it can be seen that the multi-innovation principle LM algorithm not only uses the information of the current moment, but also uses historical data as the basis for adjusting parameters. Similarly, the identification method of the non-homogeneous fractional vector \({\tilde{\mathbf{\alpha }}}\) of the system using the multi-innovation principle is as follows:

where

Similarly, \({{\varvec{\Xi}}}(t,P,{{\varvec{\uptheta}}})\) and \({\mathbf{\rm H}}(t,P,{{\varvec{\uptheta}}})\) contain multiple time-point innovations about the fractional order vector \({\tilde{\mathbf{\alpha }}}\), which are calculated by Eqs. (33) and (34), expressed as follows:

where

means to stack multiple two-dimensional matrices with the same dimension into a three-dimensional matrix.

This completes the introduction of the multi-innovation LM algorithm for the identification of non-homogeneous fractional-order systems. To help readers use the algorithm, Fig. 2 presents the identification process visually, and the entire algorithm flow is summarized as follows:

Step 1: Set the initial value of the estimated parameter \({{\varvec{\uptheta}}}_{0}\), the sampling time \(h\), let \(t = h\), initialize the harmonic coefficient \(\lambda\), set the innovation length \(P\), and start identification;

Step 2: Collect input/output data \(\left\{ {u(t),y(t)} \right\}\), construct information vector \({\mathbf{\varphi }}(t,{\tilde{\mathbf{\alpha }}})\) according to Eq. (21) and \(\left\{ {u(t),y(t)} \right\}\);

Step 3: Construct \({\mathbf{E}}(t,P,{{\varvec{\uptheta}}})\) according to Eqs. (45)-(48), and calculate the objective function value \(J\) according to Eq. (44);

Step 4: According to Eq. (21) calculate the multi-innovation subitem of \({\tilde{\mathbf{\Phi }}}(t,P,{\tilde{\mathbf{\alpha }}})\) in Eq. (53), calculate \({\mathbf{J}}_{{{\tilde{\mathbf{\theta }}}}}^{^{\prime}P}\) and \({\mathbf{J}}_{{{\tilde{\mathbf{\theta }}}}}^{^{\prime\prime}P}\) according to Eqs. (51) and (52), and update \({\tilde{\mathbf{\theta }}}(t)\) according to Eq. (50);

Step 5: First calculate the multi-innovation sub-items of \({{\varvec{\Xi}}}(t,P,{{\varvec{\uptheta}}})\) in Eq. (57) according to Eq. (34) and the multi-innovation sub-items of \({\mathbf{\rm H}}(t,P,{{\varvec{\uptheta}}})\) in Eq. (58) according to Eq. (33), then calculate \({\mathbf{J}}_{{\tilde{\alpha }}}^{^{\prime}P}\) and \({\mathbf{J}}_{{\tilde{\alpha }}}^{^{\prime\prime}P}\) according to Eqs. (55)-(56), and finally update \({\tilde{\mathbf{\alpha }}}(t)\) according to Eq. (54);

Step 6: If \(J < J_{0}\), increase \(\lambda\); otherwise, decrease \(\lambda\);

Step 7: Let \(J_{0} = J\) and \(t = t + h\). If \(t < t_{\max }\), go to Step 2; otherwise, go to Step 8;

Step 8: After the identification is completed, the estimated value \({\hat{\mathbf{\theta }}}\) of the identification is output.
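The coefficient-update part of this flow (Steps 2 to 4 and Step 7) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the fractional orders are held fixed so that the rows of `Phi` are precomputed, \(\lambda\) is kept constant, the Hessian is approximated by \({\mathbf{\Phi }}^{{\text{T}}} {\mathbf{\Phi }}\) in the Gauss-Newton manner, and the function name `multi_innovation_lm` is hypothetical.

```python
import numpy as np


def multi_innovation_lm(Phi, y, P=3, lam=1e-3, theta0=None):
    """Online LM-type update of the coefficient vector using the last P innovations."""
    N, n = Phi.shape
    theta = np.zeros(n) if theta0 is None else np.array(theta0, dtype=float)
    history = np.empty((N, n))
    for t in range(N):
        lo = max(0, t - P + 1)
        Phi_P = Phi[lo : t + 1]            # stacked information vectors (at most P rows)
        E = y[lo : t + 1] - Phi_P @ theta  # stacked innovations
        grad = -Phi_P.T @ E                # first derivative of 0.5 * ||E||^2
        hess = Phi_P.T @ Phi_P             # Gauss-Newton approximation of the Hessian
        theta = theta - np.linalg.solve(hess + lam * np.eye(n), grad)
        history[t] = theta
    return theta, history


# Hypothetical noise-free regression problem, for illustration only.
rng = np.random.default_rng(0)
Phi = rng.standard_normal((200, 3))
theta_true = np.array([0.5, -1.2, 2.0])
y = Phi @ theta_true
theta_hat, history = multi_innovation_lm(Phi, y, P=3)
print("final estimate:", theta_hat)
```

In the full algorithm, the order vector would be updated in the same loop (Step 5), the information vectors would be rebuilt whenever the orders change, and \(\lambda\) would be adapted as in Step 6.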

5 Simulation examples

To show that the algorithm is effective, this section gives an academic simulation example and a practical example of a mechanical system, where the practical data come from the flexible manipulator data set in Daisy (system identification database) [34]. The two examples are described in detail below.

5.1 An academic example

In order to illustrate the reliability of the method, an academic example is used to simulate and verify the theory. Consider a Hammerstein non-homogeneous fractional order continuous system with structural orders \(n_{a} = 2\), \(n_{b} = 2\), \(n_{p} = 3\) as follows:

The parameter assignment is:

where the initial state of the system is 0, the input \(u(t)\) is a Gaussian random sequence with a mean value of 0 and a variance of 1, and \(v(t)\) is a Gaussian white noise with a mean value of 0.

Simulation experiments are carried out on the above system, and the algorithm proposed in this paper is used to identify its parameters. The experiment compares the decline curves of the identification objective function for different innovation lengths \(P = \{ 1,3,5\}\) and different signal-to-noise ratios \({\text{SNRs}} = \{ 25\,{\text{dB}},\,34\,{\text{dB}}\}\). The results are shown in Fig. 3 and Fig. 4, where \(K\) denotes the number of samples taken at equal time intervals. Figure 3 shows that, at both signal-to-noise ratios (SNRs), a longer innovation length makes the objective function decline faster and converge to a smaller value. Figure 4 shows more clearly how the convergence of the objective function differs between SNRs at each innovation length. Although a high SNR leads to better results, the deterioration caused by a low SNR weakens as the innovation length increases. When the innovation length increases to 3, the converged objective function value hardly deteriorates as the SNR decreases. When the innovation length increases to 5, the objective function value for the low SNR drops at almost the same rate as for the high SNR. This shows that the multi-innovation principle improves the performance of the LM algorithm considerably.
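A noise sequence with a prescribed SNR can be generated as sketched below. The sketch assumes the common definition \({\text{SNR}} = 10\log_{10} (P_{\text{signal}} /P_{\text{noise}} )\) in dB with average powers; the paper does not state its definition, and the names `add_noise_at_snr` and `measured_snr_db` and the test signal are hypothetical.

```python
import numpy as np


def add_noise_at_snr(signal, snr_db, rng):
    """Add zero-mean Gaussian white noise scaled so the expected SNR equals snr_db."""
    signal = np.asarray(signal, dtype=float)
    p_signal = np.mean(signal**2)
    p_noise = p_signal / 10 ** (snr_db / 10)
    noise = rng.normal(0.0, np.sqrt(p_noise), size=signal.shape)
    return signal + noise, noise


def measured_snr_db(signal, noise):
    """Empirical SNR in dB from the sample powers of signal and noise."""
    return 10 * np.log10(np.mean(np.square(signal)) / np.mean(np.square(noise)))


# Hypothetical noise-free output, used only to demonstrate the scaling.
rng = np.random.default_rng(1)
clean = np.sin(np.linspace(0.0, 20.0 * np.pi, 20000))
noisy_25, noise_25 = add_noise_at_snr(clean, 25.0, rng)
noisy_34, noise_34 = add_noise_at_snr(clean, 34.0, rng)
```

The realized SNR of a finite record deviates slightly from the target because the sample noise power fluctuates around its expected value.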

To reduce the influence of the random initial values on the results, system identification is performed with innovation lengths {1, 3, 5}, and the objective function values of 1000 Monte Carlo experiments are collected. As shown in Fig. 5, the figure consists of distribution histograms with kernel-smoothed distribution curves and whiskers below the horizontal axis. The distribution curves and histograms show that the longer the innovation length, the more densely the values concentrate near an objective function value of 0. The whiskers also show that the longer the innovation length, the smaller the final optimized objective function value and the higher the accuracy of the algorithm. Figure 6 compares the statistical boxplots of the estimated parameter values with their true values. For the estimated coefficient values (the first 8 boxes), the boxes are all narrow, and the median lines and means almost coincide with the true values of the corresponding coefficients. For the estimated fractional-order values (the last 4 boxes), each box has collapsed into a straight line that almost coincides with the corresponding true value, so the estimation result is even better. Table 1 and Table 2 respectively compare one group of estimated coefficient values with the true coefficient values, and the estimated fractional-order values of the same group with the true fractional-order values. The estimated parameter values are essentially the same as the true values, which shows that the algorithm is effective.

Figure 7 compares the output of the estimated system with the output of the real system. The red dotted line and the black dotted line almost coincide, which demonstrates the accuracy of the estimated system, and the partially enlarged view further illustrates this point. The blue line in Fig. 7 represents the difference between the estimated system output and the actual system output. The error is distributed closely around 0, which further shows that the proposed algorithm is effective.

5.2 Flexible manipulator system identification

In order to further explore the effectiveness of the algorithm in real systems, taking a flexible manipulator system as an example, the fractional-order system in Fig. 1 is used as a model to identify system parameters and explore its system structure. The data is provided by KU Leuven, and the data length is 1024. The input of the system is the reaction force of the ground structure, and the output is the acceleration of the end of the manipulator. When the output signal is similar to a sinusoidal signal, its input and output signals are shown in Fig. 8. The purpose of the experiment is to use the algorithm proposed in this paper to explore the relationship between the ground reaction force and the terminal acceleration of the flexible manipulator system.

To ensure the reliability of the experiment, the data are split evenly into two groups, which are used as identification training data and test data, respectively. In the experiment, the system structure was determined first to ensure the accuracy of the identification model. For this part of the work, we refer to existing work, including [14] and [17]. The structural orders include the linear part \([n_{a} ,n_{b} ]\) and the nonlinear part \([n_{p} ]\) of the system. For convenience, the structure orders are denoted as \([n_{a} ,n_{b} ,n_{p} ]\) in the experiments. We selected well-tested structures from [14] and [17], used the method proposed in this paper to identify the system from the known inputs and outputs, and recorded the decline curve of the objective function. A total of 5 system structures were tested, and the experimental results are shown in Fig. 9. With the proposed algorithm, the objective function value is optimized well for all of the tested structures. In addition, the partially enlarged view in Fig. 9 shows that the objective function value is smallest when the system structure is \([2,2,2]\). Therefore, the Hammerstein non-homogeneous fractional-order continuous system model with system structure \([2,2,2]\) is chosen as the fitting model of the flexible manipulator system.

To further illustrate the effectiveness of combining multi-innovation theory with the LM algorithm, Fig. 10 shows boxplots of the converged objective function value for innovation lengths \(P = \{ 1,3,5\}\). As the innovation length increases, the box keeps compressing, the mean value keeps decreasing, and the statistical outliers move closer to the box. This shows that when the LM algorithm is combined with the principle of multi-innovation for parameter identification, a longer innovation length not only gives faster identification and higher accuracy but also makes the algorithm more likely to find good parameter combinations.
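As a rough illustration of the idea behind combining multi-innovation with an LM-type update, the following Python sketch stacks the \(P\) most recent residuals (innovations) and the corresponding Jacobian rows before each damped Gauss-Newton step. It is not the algorithm derived in this paper: the toy static model, the fixed damping factor `mu`, the noise-free synthetic data, and the names `mi_lm_step`, `identify_online`, `model`, and `jac` are all assumptions introduced for this example.

```python
import numpy as np


def mi_lm_step(theta, U, Y, model, jac, mu):
    """One damped Gauss-Newton (LM-type) update from the P most recent samples."""
    e = Y - model(theta, U)                  # stacked innovation vector, shape (P,)
    J = jac(theta, U)                        # Jacobian of the model outputs, shape (P, n)
    H = J.T @ J + mu * np.eye(theta.size)    # damped approximate Hessian
    return theta + np.linalg.solve(H, J.T @ e)


# Toy model (an assumption, not the fractional-order system of the paper):
# y = theta[0]*u + theta[1]*u**2, a polynomial nonlinearity that is linear in theta.
def model(theta, U):
    return theta[0] * U + theta[1] * U**2


def jac(theta, U):
    return np.column_stack([U, U**2])


def identify_online(u, y, P, mu=1e-2):
    """Sweep through the record once, updating from a sliding window of length P."""
    theta = np.zeros(2)
    for k in range(P - 1, len(u)):
        window = slice(k - P + 1, k + 1)
        theta = mi_lm_step(theta, u[window], y[window], model, jac, mu)
    return theta


rng = np.random.default_rng(0)
theta_true = np.array([1.5, -0.7])
u = rng.standard_normal(300)
y = model(theta_true, u)                     # noise-free synthetic data

# Parameter error after one pass for innovation lengths P = 1, 3, 5.
errors = {P: np.linalg.norm(identify_online(u, y, P) - theta_true) for P in (1, 3, 5)}
```

With \(P = 1\) each update uses a single residual, so the correction acts along one direction in parameter space. A longer window gives the damped normal equations more information per step, which is the intuition behind the faster convergence reported for larger innovation lengths.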

Figures 11 and 12 show the parameter trajectories during identification when the innovation length is 5. With random initial parameter values, the parameters of the non-homogeneous fractional order system change rapidly and then converge as more online data are sampled under the multi-innovation LM algorithm. Between 200 and 300 samples, the algorithm makes only minor adjustments to the parameters. After 300 samples, the system coefficients hardly change, the fractional orders almost stop changing, and the value of the objective function almost stops decreasing, which indicates that the algorithm has converged. At this point, the system parameters are close to the optimal values.

Figure 13 compares the output of the estimated system identified by our method with the actual output. With the same system input, the output of the estimated system is almost the same as that of the actual flexible manipulator system, as shown in detail in the partial enlargement of Fig. 13. To further illustrate the accuracy of the estimated system, Fig. 14 plots the output error between the estimated system and the flexible manipulator system. The output error is small throughout, apart from an error in the early stage caused by online identification. This shows that as the number of samples increases, the identification algorithm converges and the estimated system fits the flexible manipulator system, which demonstrates the effectiveness of the proposed theory in practical engineering.

Finally, to show the performance of the proposed method, we compare it with the methods in [14, 17,18,19]. The comparison is based on the range of the estimation error on the test set, and the results are shown in Table 3. For the same flexible manipulator system data, the proposed method has a smaller estimation error range.
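One way to compute such an error range is sketched below in Python. The sketch assumes that the range is the interval between the smallest and largest output error on the test set. The exact definition used for Table 3 is not restated here, and the function name `error_range` and the sample values are hypothetical.

```python
import numpy as np


def error_range(y_true, y_est):
    """Return (min, max) of the output error e = y_true - y_est."""
    e = np.asarray(y_true, dtype=float) - np.asarray(y_est, dtype=float)
    return float(e.min()), float(e.max())


# Made-up outputs for illustration only (not data from the manipulator system).
y_true = np.array([0.0, 1.0, 0.5, -1.0])
y_est = np.array([0.1, 0.8, 0.5, -0.9])
lo, hi = error_range(y_true, y_est)
```

A narrower interval `(lo, hi)` on the test half indicates that the estimated model tracks the measured output more closely.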

6 Conclusion

This paper proposes an LM algorithm based on the multi-innovation principle for the online identification of Hammerstein non-homogeneous fractional order continuous systems. The identification process for the system parameters is derived in detail. For the identification of the non-homogeneous fractional orders, this paper dispenses with the traditional approach of numerical differentiation and derives the partial derivative equation of the objective function with respect to the non-homogeneous fractional orders for the case in which the fractional orders to be identified lie in \((0,1)\). Combining the multi-innovation principle with the LM algorithm, the parameters of an academic example and a flexible manipulator system are identified online. The conclusions of the experiments are as follows:

- Monte Carlo experiments prove that the principle of multiple innovations has a significant effect on improving the accuracy and speed of the LM algorithm. Under different signal-to-noise ratios, the performance of the multi-innovation LM algorithm is better than that of the traditional LM algorithm.

- The identification method for non-homogeneous fractional orders proposed in this paper can effectively reduce the value of the objective function, and can effectively identify all fractional orders of the system.

Although the algorithm in this paper can be used effectively to identify non-homogeneous fractional orders and achieves better identification results in the mechanical system example, it can only be used for single-input single-output systems. Our follow-up work will therefore focus on extending the algorithm to multiple-input multiple-output systems.

Data availability

This paper describes theoretical research, and no data sets were analyzed during the study. Data collection may vary from reader to reader, but some code can be provided by contacting the corresponding author.

References

Sabatier, J., Lanusse, P., Melchior, P., et al.: Fractional order differentiation and robust control design. Intell. Syst. Control Autom. Sci. Eng. 77, 13–18 (2015)

Dastjerdi, A.A., Vinagre, B.M., Chen, Y.Q., et al.: Linear fractional order controllers: a survey in the frequency domain. Annu. Rev. Control. 47, 51–70 (2019)

Yang, R., Xiong, R., He, H., et al.: A fractional-order model-based battery external short circuit fault diagnosis approach for all-climate electric vehicles application. J. Clean. Prod. 187, 950–959 (2018)

Wang, Y., Gao, G., Li, X., et al.: A fractional-order model-based state estimation approach for lithium-ion battery and ultra-capacitor hybrid power source system considering load trajectory. J. Power Sources 449, 227543 (2020)

Guha, A., Patra, A.: Online estimation of the electrochemical impedance spectrum and remaining useful life of lithium-ion batteries. IEEE Trans. Instrum. Meas. 67(8), 1836–1849 (2018)

Tien, D.N.: Fractional stochastic differential equations with applications to finance. J. Math. Anal. Appl. 397(1), 334–348 (2013)

Debbarma, S., Dutta, A.: Utilizing electric vehicles for LFC in restructured power systems using fractional order controller. IEEE Trans. Smart Grid 8(6), 2554–2564 (2016)

Hu, X., Yuan, H., Zou, C., et al.: Co-estimation of state of charge and state of health for lithium-ion batteries based on fractional-order calculus. IEEE Trans. Veh. Technol. 67(11), 10319–10329 (2018)

Matlob, M.A., Jamali, Y.: The concepts and applications of fractional order differential calculus in modeling of viscoelastic systems: a primer. Crit. Rev.™ Biomed. Eng. 47(4) (2019)

Zhang, Z., Zeb, A., Egbelowo, O.F., et al.: Dynamics of a fractional order mathematical model for COVID-19 epidemic. Adv. Differ. Equ. 2020(1), 1–16 (2020)

Ahmed, S.: Parameter and delay estimation of fractional order models from step response. IFAC-PapersOnLine. 48(8), 942–947 (2015)

Djouambi, A., Voda, A., Charef, A.: Recursive prediction error identification of fractional order models. Commun. Nonlinear Sci. Numer. Simul. 17(6), 2517–2524 (2012)

Zhao, Y., Li, Y., Zhou, F., et al.: An iterative learning approach to identify fractional order KiBaM model. IEEE/CAA J. Autom. Sin. 4(2), 322–331 (2017)

Hammar, K., Djamah, T., Bettayeb, M.: Nonlinear system identification using fractional Hammerstein–Wiener models. Nonlinear Dyn. 98, 2327–2338 (2019)

Hammar, K., Djamah, T., Bettayeb, M.: Identification of fractional Hammerstein system with application to a heating process. Nonlinear Dyn. 96, 2613–2626 (2019)

Wang, J., Ji, Y., Zhang, C.: Iterative parameter and order identification for fractional-order nonlinear finite impulse response systems using the key term separation. Int. J. Adapt. Control Signal Process. 35(8), 1562–1577 (2021)

Zhang, Q., Wang, H., Liu, C.: Identification of fractional-order Hammerstein nonlinear ARMAX system with colored noise. Nonlinear Dyn. 106, 3215–3230 (2021)

Zhang, Q., Wang, H., Liu, C.: MILM hybrid identification method of fractional order neural-fuzzy Hammerstein model. Nonlinear Dyn. 108(3), 2337–2351 (2022)

Qian, Z., Hongwei, W., Chunlei, L.: Hybrid identification method for fractional-order nonlinear systems based on the multi-innovation principle. Appl. Intell. 53, 15711 (2022)

Mayoufi, A., Victor, S., Chetoui, M., et al.: Output error MISO system identification using fractional models. Fract. Calculus Appl. Anal. 24(5), 1601–1618 (2021)

Victor, S., Mayoufi, A., Malti, R., et al.: System identification of MISO fractional systems: parameter and differentiation order estimation. Automatica 141, 110268 (2022)

Wang, J., Wei, Y., Liu, T., et al.: Fully parametric identification for continuous time fractional order Hammerstein systems. J. Franklin Inst. 357(1), 651–666 (2020)

Zhou, S., Cao, J., Chen, Y.: Genetic algorithm-based identification of fractional-order systems. Entropy 15(5), 1624–1642 (2013)

Moghaddam, M.J., Mojallali, H., Teshnehlab, M.: Recursive identification of multiple-input single-output fractional-order Hammerstein model with time delay. Appl. Soft Comput. 70, 486–500 (2018)

Li, J., Zong, T., Lu, G.: Parameter identification of Hammerstein-Wiener nonlinear systems with unknown time delay based on the linear variable weight particle swarm optimization. ISA Trans. 120, 89–98 (2022)

Tang, Y., Li, N., Liu, M., et al.: Identification of fractional-order systems with time delays using block pulse functions. Mech. Syst. Signal Process. 91, 382–394 (2017)

Kothari, K., Mehta, U., Vanualailai, J.: A novel approach of fractional-order time delay system modeling based on Haar wavelet. ISA Trans. 80, 371–380 (2018)

Kothari, K., Mehta, U., Prasad, V., et al.: Identification scheme for fractional Hammerstein models with the delayed Haar wavelet. IEEE/CAA J. Autom. Sin. 7(3), 882–891 (2020)

Wang, Z., Wang, C., Ding, L., et al.: Parameter identification of fractional-order time delay system based on Legendre wavelet. Mech. Syst. Signal Process. 163, 108141 (2022)

Podlubny, I.: Fractional differential equations. Academic Press (1999)

Vigneron, J.P., Lambin, P.: Gaussian quadrature of integrands involving the error function. Math. Comput. 35(152), 1299–1307 (1980)

Wang, C.H.: On the generalization of block pulse operational matrices for fractional and operational calculus. J. Franklin Inst. 315(2), 91–102 (1983)

Qiu, S.L., Vuorinen, M.: Some properties of the gamma and psi functions, with applications. Math. Comput. 74(250), 723–742 (2005)

De Moor, B., Daisy: Database for the Identification of Systems, Department of Electrical Engineering, ESAT/SISTA, K. U. Leuven, Belgium (2004)

Funding

This work is supported by the National Natural Science Foundation of China (Grant numbers 61863034 and 12002296), the Open Project Program of Science and Technology on Reactor System Design Technology Laboratory (No. HTKFKT-02-2019013), the Natural Science Foundation of Xinjiang Uygur Autonomous Region (Grant number 2021D01C045), and the Scientific Research Innovation Project of Excellent Doctoral Candidates (Grant numbers XJU2022BS095 and XJU2022BS101).

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest with respect to the research, authorship and/or publication of this article.

About this article

Cite this article

Liu, C., Wang, H., Zhang, Q. et al. Online identification of non-homogeneous fractional order Hammerstein continuous systems based on the principle of multi-innovation. Nonlinear Dyn 111, 20111–20125 (2023). https://doi.org/10.1007/s11071-023-08876-y
