Abstract

This paper deals with the identification of fractional order nonlinear systems based on Hammerstein–Wiener models. An output error approach is developed using the robust Levenberg–Marquardt algorithm. Its main difficulty is the calculation of the parametric sensitivity functions, which requires a heavy computational load at each iteration. To overcome this drawback, the fractional nonlinear system is reformulated in a regression form, so that the gradient and the Hessian can be obtained in closed form without using the parametric sensitivity functions. The method’s efficiency is confirmed by numerical simulations, and its feasibility is illustrated by its application to the modeling of an experimental robot arm.


1 Introduction

Most real processes show nonlinear dynamic behavior, and their modeling and identification remain an active research topic given the diversity and complexity of nonlinear systems. Describing them adequately over the entire range of operation has required many structures, such as Volterra series, NARMAX models, neural networks, and black-box or block-oriented models [1,2,3,4]. The block-structured class separates the linear dynamic part and the nonlinear static part into different subsystems, which can be interconnected in different orders (Hammerstein, Wiener, Hammerstein–Wiener, etc.).

The most general model of this class is the Hammerstein–Wiener (H-W) model, which consists of three subsystems: a linear block embedded between two nonlinear subsystems. This more elaborate topology can improve the description of a real nonlinear system that has both an actuator nonlinearity and a sensor nonlinearity [5]. Some work has also shown that the H-W system can approximate almost any high-order nonlinear system relatively well [6, 7]. H-W models have been successfully applied to numerous technological processes, such as a fermentation bioreactor [8], the skeletal muscle system [9], a chemical process [10], electrical discharge machining [11], and temperature variations in a silage bale [12].

On the other hand, many physical nonlinear processes and materials exhibit a fractional behavior, characterized by a hereditary property and an infinite dimensional structure; hence, fractional order models have gained increasing interest among researchers [13,14,15,16]. Their main advantage is parsimony: they can mimic the dynamic behavior of real processes with better accuracy than their classical counterparts, in addition to having a “memory” included in the model. Among the various applications, we can cite quantum mechanics [17], chaotic motions [18], diffusive phenomena [19], and biochemical reactors [20].

This paper addresses the identification of the fractional H-W model, in which the linear part of the H-W structure is of fractional order. The goal is to take advantage of both the modeling capability of the H-W system and the parsimony of fractional order models.

Nonlinear system identification is a major research topic; the most difficult task is to select the model structure and to establish a suitable identification approach. The drawback reported in the literature is that to achieve an accurate identification, we have to deal with the curse of dimensionality.

Many studies have considered the identification of classical integer order systems of this class; most have focused on the simpler Hammerstein or Wiener structures, while relatively little work has addressed H-W system identification [5, 21,22,23,24].

In the area of fractional block-oriented system identification, Hammerstein models have been identified using heuristic approaches such as particle swarm optimization (PSO) [25], or genetic algorithm (GA) combined with the recursive least squares (RLS) [26]. An iterative linear optimization algorithm and a Lyapunov method have been developed in [27]. As for the fractional Wiener model, an output error method is used in [28], while a modified PSO is extended in [29].

In fact, the identification of fractional Hammerstein–Wiener models is more difficult than that of the simpler Hammerstein and Wiener systems, because they involve two unknown internal signals that are not accessible to measurement. Consequently, to the best of the authors' knowledge, only one study has considered the identification of continuous-time fractional H-W models, using an instrumental variable method [30].

In this context, a novel approach for identifying fractional H-W systems in the discrete case is presented.

It is based on an output error approach using a nonlinear optimization method: the robust Levenberg–Marquardt (L-M) algorithm is developed for fractional H-W models. Its drawback is that the update rule requires the parametric sensitivity functions to compute the gradient and the Hessian. Their complexity depends on the chosen model, and in the H-W case they impose a heavy computational load at each iteration. To overcome this difficulty, the method reformulates the fractional H-W model in a regression form, which allows a better model parameterization. As a result, the gradient and the Hessian can be obtained in closed form, avoiding the computation of the sensitivity functions, and the update burden is drastically reduced. To test its performance, the method is applied to the identification of a real robot arm system.

The outline of this paper is organized as follows:

Section 2 introduces the required theoretical concepts of fractional calculus. In Sect. 3, the fractional H-W system is presented along with the problem formulation, while the identification method is developed in Sect. 4. Section 5 illustrates the method's efficiency with simulation results and its application to the modeling of a robot arm. Finally, conclusions and some perspectives are provided in Sect. 6.

2 Mathematical background

2.1 Fractional derivative

Fractional calculus has attracted increasing interest among researchers in recent decades, with applications in system modeling and control [19, 31, 32].

Different definitions of the differintegral operator have been proposed in the literature, and the most used for the discrete case is the Grünwald–Letnikov one (G-L), expressed as follows [33, 34]:

where \(\Delta ^{\alpha }\) denotes the fractional order difference operator of order \(\alpha \), with zero initial time, x(kh) is a function of \(t=kh\), k is the number of samples, and h is the sampling interval which is assumed to be equal to 1.

\(\left( {\begin{array}{c}\alpha \\ j\end{array}}\right) \) is the binomial term defined by:

Let us define the following recurrence relation:

where

Eq. (1) can be rewritten under the form:

The numerical simulation of the fractional system studied in this paper is performed using Eq. (5).
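As an illustration, the recurrence-based evaluation of the G-L difference can be sketched as follows. The sketch assumes the standard coefficients \(\beta (j)=(-1)^{j}\left( {\begin{array}{c}\alpha \\ j\end{array}}\right) \), generated by \(\beta (0)=1\) and \(\beta (j)=\beta (j-1)\,(j-1-\alpha )/j\), together with zero initial conditions. The function names `gl_coefficients` and `gl_difference` are illustrative and are not taken from the paper.

```python
import numpy as np

def gl_coefficients(alpha, n):
    """Return beta(0..n-1), where beta(j) = (-1)**j * binom(alpha, j)."""
    beta = np.empty(n)
    beta[0] = 1.0
    for j in range(1, n):
        # Standard recurrence; avoids evaluating factorials or gamma functions.
        beta[j] = beta[j - 1] * (j - 1 - alpha) / j
    return beta

def gl_difference(x, alpha, h=1.0):
    """Grunwald-Letnikov fractional difference of the sequence x (zero initial time)."""
    x = np.asarray(x, dtype=float)
    beta = gl_coefficients(alpha, len(x))
    # Sample k uses the full history x(0..k): sum_j beta(j) * x(k - j).
    out = np.array([beta[: k + 1] @ x[k::-1] for k in range(len(x))])
    return out / h**alpha

# Illustrative data (not from the paper).
x_demo = np.array([1.0, 3.0, 6.0, 10.0])
first_difference = gl_difference(x_demo, 1.0)   # alpha = 1: ordinary backward difference
identity = gl_difference(x_demo, 0.0)           # alpha = 0: the signal itself
half_order = gl_difference(x_demo, 0.5)
```

With \(\alpha =1\) the coefficients reduce to (1, -1, 0, ...), so the operator becomes the usual backward difference, which is a convenient sanity check. The cost grows quadratically with the data length because every sample uses its whole history.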

2.2 Fractional order models

Different models can be defined in the fractional system description. In this study, the transfer function and the recurrence equation are considered.

The discrete transfer function representation is defined by the following equation:

where U(z), Y(z) are, respectively, the system input and the system output, \(\alpha _i\) and \(\gamma _j\in {\mathbb {R}}^{*+}\) are the fractional orders (\(i=1,\ldots ,n_{a}\), and \(j=1,\ldots ,n_{b}\)), and \(z^{-1}\) is the backward shift operator with \(z^{-1}y(k)= y(k-1)\).

The recurrence equation of the model Eq. (6) can be deduced; it is expressed as follows:

The fractional models of Eq. (6) and Eq. (7) are called non-commensurate order systems when the fractional orders are not multiples of a common base order; when they are all multiples of the same base order \({\tilde{\alpha }}\in {\mathbb {R}}^{*+}\), with \(\alpha _i=i{\tilde{\alpha }}\) and \(\gamma _j=j{\tilde{\alpha }}\), the models are of commensurate order. In this paper, the case of fractional commensurate order systems is considered, and the recurrence Eq. (7) can be rewritten under the form:

Using the discrete fractional order operator \(\Delta \) in the time domain, Eq. (8) yields the following equation:

This model will be used to describe the linear part of the fractional H-W model.

3 Problem description

The general structure of a H-W system is defined by the cascade connection of two nonlinear subsystems with a linear fractional dynamic block embedded between them according to Fig. 1.

In this work, the Hammerstein–Wiener model defined in [35] and represented by the block structure of Fig. 2 is adopted. Its input/output equation is expressed as follows:

where u(k) and y(k) are, respectively, the input and the output of the overall system, and v(k) is the noise. The nonlinear parts are described by the functions f and g, while the linear part of fractional order is described by the polynomials A(z) and B(z) of the shift operator \(z^{-1}\) given by the following equations:

The nonlinear functions f and g are expressed as a linear combination of a known basis, respectively:

\({{\varvec{f}}}=(f_1,\ldots ,f_{n_{p}})\) with the coefficients (\(p_1,\ldots ,p_{n_{p}}\))

\({{\varvec{g}}}=(g_1,\ldots ,g_{n_{q}})\) with the coefficients (\(q_1,\ldots ,q_{n_{q}}\))

where

Replacing A(z) and B(z) in Eq. (10) gives:

Substituting Eq.(12) and Eq.(13) in Eq. (14) results in the Hammerstein–Wiener system overall equation:

The commensurate order case being considered, (\(\alpha _i=i{\tilde{\alpha }}\)) and (\(\gamma _j=j{\tilde{\alpha }}\)), Eq. (15) can be written in the time domain, using the difference operator \(\Delta \):

The fractional H-W system is defined by the parameter vectors of the linear and the nonlinear subsystems as follows:

Notice that to obtain a unique parameterization, the model parameters must be normalized [36]; thus, the first coefficient of each nonlinear block is fixed. Here, the first elements of \({\varvec{p}}\) and \({\varvec{q}}\) are set equal to one, i.e., \(p_1=1\) and \(q_1=1\), and Eq. (16) can be rewritten as:

The main contribution of this work is to develop a novel identification approach to estimate the unknown parameters of the different subsystems and the fractional order of the Hammerstein–Wiener model.

4 Identification method

The identification objective consists in estimating the parameter vectors \({\varvec{a}}\), \({\varvec{b}}\), \({\varvec{p}}\), \({\varvec{q}}\) and the order \({\tilde{\alpha }}\) in Eq. (18). The approach is based on an output error method using the L-M algorithm, a robust nonlinear optimization method which combines the Gauss–Newton method and steepest descent. However, it suffers from the complex computation of the parametric sensitivity functions required for the gradient and Hessian calculation. In this paper, we extend the L-M algorithm to the identification of the fractional H-W model, and a better model parameterization is achieved by reformulating the system output Eq. (18) in a regression form. This represents the nonlinear input/output relationship with a linear-in-the-parameters structure.

where \(\varvec{\varphi }(k,{\tilde{\alpha }})\) denotes the information vector defined as follows:

where

The unknown parameter vector \({\tilde{\varvec{\theta }}}\) is defined from Eq. (18) and Eq. (19) as follows:

The Hammerstein–Wiener system identification requires the estimation of the parameter vector \(\varvec{\theta }\) which contains the parameters of the linear block and the nonlinear blocks as well as the fractional order:

The quality of the estimation procedure is measured in terms of the following quadratic criterion:

where K is the data length, \(\varepsilon (k)\) is the prediction error to be minimized with:

\({\hat{y}}(k)\), \({\hat{\tilde{\varvec{\theta }}}}\) and \(\hat{{\tilde{\alpha }}} \) are, respectively, the estimates of y(k), \({\tilde{\varvec{\theta }}}\) and \({\tilde{\alpha }}\). L-M algorithm uses the following recurrence equation:

The update rule is based on the calculation of the gradient and the Hessian \(J^{\prime }\) and \(J^{\prime \prime }\) with respect to each parameter of \(\varvec{\theta }\), and \(\lambda \) is a tuning parameter for the convergence. The reformulation of the H-W model output equation under a regression form allows a better model parameterization, and the gradient and the Hessian can be obtained in a closed form without using the parametric sensitivity functions. Based on the regression form of the prediction error Eq. (28), they are easily computed by deriving the quadratic functional of Eq. (27) with respect to \({\tilde{\varvec{\theta }}}\):
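For a prediction error that is linear in \({\tilde{\varvec{\theta }}}\), these derivatives reduce to matrix products. The following minimal sketch assumes \(\varepsilon (k)=y(k)-\varvec{\varphi }^{T}(k,{\tilde{\alpha }})\,{\tilde{\varvec{\theta }}}\) for a fixed \({\tilde{\alpha }}\) and a criterion equal to the plain sum of squared errors; the exact scaling of J in Eq. (27) may differ by a constant factor. The matrix `Phi` (one information vector per row) and the function names are illustrative.

```python
import numpy as np

def criterion(theta, Phi, y):
    """Quadratic criterion: sum of squared prediction errors (scaling is an assumption)."""
    eps = y - Phi @ theta
    return float(eps @ eps)

def gradient_and_hessian(theta, Phi, y):
    """Closed-form derivatives of the criterion when eps(k) = y(k) - phi(k)^T theta."""
    eps = y - Phi @ theta
    grad = -2.0 * Phi.T @ eps     # dJ/dtheta
    hess = 2.0 * Phi.T @ Phi      # d2J/dtheta2, exact because eps is linear in theta
    return grad, hess

# Illustrative random data (not from the paper).
rng = np.random.default_rng(0)
Phi_demo = rng.standard_normal((50, 3))
y_demo = rng.standard_normal(50)
theta_demo = np.array([0.5, -1.0, 2.0])
grad_demo, hess_demo = gradient_and_hessian(theta_demo, Phi_demo, y_demo)

# Central finite differences, used only to cross-check the closed form.
d = 1e-6
grad_numeric = np.array([
    (criterion(theta_demo + d * e, Phi_demo, y_demo)
     - criterion(theta_demo - d * e, Phi_demo, y_demo)) / (2 * d)
    for e in np.eye(3)
])
```

No sensitivity model has to be simulated here: once the information vectors are formed, both quantities follow from two matrix products, which is the computational saving the regression form is meant to provide.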

The calculation of the gradient and the Hessian with respect to the fractional order \({\tilde{\alpha }}\) (\(J^{\prime }_{{\tilde{\alpha }}}\) and \(J^{\prime \prime }_{{\tilde{\alpha }}}\)) can be performed as follows:

where \(\sigma _{{\hat{y}}(k)/{\tilde{\alpha }}}=\dfrac{\partial {\hat{y}}(k)}{\partial {\tilde{\alpha }}}\) is the output sensitivity function with respect to \( {\tilde{\alpha }}\); it is calculated numerically:

with \(\delta {\tilde{\alpha }}\) a small variation of \( {\tilde{\alpha }}\).
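A numerical sensitivity of this kind can be sketched as a forward difference. The sketch assumes that the approximation has the form \(\left[ {\hat{y}}(k,{\tilde{\alpha }}+\delta {\tilde{\alpha }})-{\hat{y}}(k,{\tilde{\alpha }})\right] /\delta {\tilde{\alpha }}\); `predict` is a hypothetical callable that returns the whole predicted output sequence for a given order.

```python
import numpy as np

def order_sensitivity(predict, alpha, delta=1e-4):
    """Forward-difference approximation of d y_hat(k) / d alpha for all samples k."""
    return (predict(alpha + delta) - predict(alpha)) / delta

# Toy predictor with a known derivative, used only to check the approximation:
# y_hat(k) = k**alpha, so d y_hat / d alpha = log(k) * k**alpha.
k_demo = np.array([1.0, 2.0, 3.0])
sigma_demo = order_sensitivity(lambda a: k_demo**a, 0.6, delta=1e-6)
sigma_exact = np.log(k_demo) * k_demo**0.6
```

Each evaluation costs one extra simulation of the model, which is much cheaper than simulating one sensitivity model per parameter. The step `delta` trades truncation error against round-off error and usually needs some tuning.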

The Hessian \(J^{\prime \prime }_{{\tilde{\alpha }}}\) can be derived using:

Hence, the gradient \(J_{\varvec{\theta }}^{\prime }\) and the Hessian \(J_{\theta }^{\prime \prime }\) are expressed by these equations:

The main steps of the developed approach can be summarized as follows:

1. Collect the input–output data set [u(k), y(k)].

2. Let \(i=1\), and set the initial values \({{\tilde{\varvec{\theta }}}}^{0}\), \({\tilde{\alpha }}^{\small {0}}\) and \(\delta {\tilde{\alpha }}\).

3. Form \(\varvec{\varphi }(k,{\tilde{\alpha }})\) using Eq. (20).

4. Compute the output fractional order sensitivity function \(\sigma _{{\hat{y}}(k)/ {\tilde{\alpha }}}\) using Eq. (33).

5. Compute \(J^{'}\) using Eq. (35) and \(J^{''}\) using Eq. (36).

6. Update the parameter estimate \(\varvec{\theta }^{(i)}\) using Eq. (29).

7. Compute the quadratic function using Eq. (27).

8. If \(J(\varvec{\theta }^{(i+1)})<J(\varvec{\theta }^{(i)})\), increase \(\lambda \), otherwise, decrease \(\lambda \) and set \(\hat{\varvec{\theta }}=\varvec{\theta }^{(i)}\) and \(J(\hat{\varvec{\theta }})=J({\varvec{\theta }^{i}})\) and go to step 3.
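The overall iteration can be sketched as a generic damped loop. This is only an illustration under stated assumptions: the update is taken to be \(\varvec{\theta }^{(i+1)}=\varvec{\theta }^{(i)}-[J^{\prime \prime }+\lambda I]^{-1}J^{\prime }\), and the sketch follows the common convention for that form, in which \(\lambda \) is reduced after a successful step and enlarged after a rejected one. The direction of the \(\lambda \) adjustment depends on how \(\lambda \) enters Eq. (29), so this convention, the factor of 10, and the callables `cost` and `grad_hess` are all assumptions.

```python
import numpy as np

def levenberg_marquardt(cost, grad_hess, theta0, lam=1e-2, max_iter=100, tol=1e-10):
    """Generic damped Newton-type loop; cost and grad_hess are user-supplied callables."""
    theta = np.asarray(theta0, dtype=float)
    J = cost(theta)
    for _ in range(max_iter):
        g, H = grad_hess(theta)
        if np.linalg.norm(g) < tol:
            break                                  # gradient is negligible: stop
        # Large lam behaves like steepest descent, small lam like Gauss-Newton.
        candidate = theta - np.linalg.solve(H + lam * np.eye(theta.size), g)
        J_new = cost(candidate)
        if J_new < J:
            theta, J = candidate, J_new            # accept the step
            lam /= 10.0
        else:
            lam *= 10.0                            # reject the step and damp more
    return theta, J

# Noise-free linear regression used as a toy problem (not the H-W model itself).
rng = np.random.default_rng(0)
Phi_demo = rng.standard_normal((200, 3))
theta_true = np.array([0.8, -0.3, 1.5])
y_demo = Phi_demo @ theta_true

def cost(th):
    return float(np.sum((y_demo - Phi_demo @ th) ** 2))

def grad_hess(th):
    eps = y_demo - Phi_demo @ th
    return -2.0 * Phi_demo.T @ eps, 2.0 * Phi_demo.T @ Phi_demo

theta_hat, J_hat = levenberg_marquardt(cost, grad_hess, np.zeros(3))
```

In the fractional H-W setting, `grad_hess` would have to rebuild the information vectors and the order sensitivity at every iteration, because they depend on the current \({\tilde{\alpha }}\); the toy problem above keeps them fixed only to stay self-contained.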

The H-W obtained parameter estimates \( \hat{{\varvec{a}}}\), \( \hat{{\varvec{b}}}\), \( \hat{{\varvec{p}}}\), \( \hat{{\varvec{q}}}\) and \( \hat{{\tilde{\alpha }}}\) can be read from the vector \(\hat{\varvec{\theta }}\) as follows:

The estimates of the vector \({\varvec{a}}\) elements are the first \(n_{{\varvec{a}}}\) values of \(\hat{\varvec{\theta }}\), \( \hat{{\varvec{b}}}\) can be read from the (\(n_{{\varvec{a}}}n_{{\varvec{q}}}+1\)) to (\(n_{{\varvec{a}}}n_{{\varvec{q}}}+n_{{\varvec{b}}}n_{{\varvec{p}}}\)) elements of \(\hat{\varvec{\theta }}\) and \({{\tilde{\alpha }}}\) is the final element. From Eq. (25), we notice that for each \(q_j\), we have \(n_{{\varvec{a}}}\) estimates \(\hat{q_j}\); hence, the mean value may be computed as its estimate:

The estimate of \({\varvec{p}}\) is deduced similarly.
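The read-out described above can be sketched as follows. The layout of \(\hat{\varvec{\theta }}\) used here is an assumption inferred from the text: the first block stores the products \(a_i q_j\) grouped by \(j\) (so its first \(n_{{\varvec{a}}}\) entries are the \(a_i\), since \(q_1=1\)), the second block stores the products \(b_i p_j\) in the same way, and the last entry is \({\tilde{\alpha }}\). Any sign convention attached to the \(a_i\) terms in the regression form is ignored, and all numerical values are hypothetical.

```python
import numpy as np

def split_estimates(theta_hat, na, nb, n_p, nq):
    """Recover a, b, p, q and alpha from the product vector (assumed layout, see text)."""
    theta_hat = np.asarray(theta_hat, dtype=float)
    aq = theta_hat[: na * nq].reshape(nq, na)                        # row j: a_i * q_(j+1)
    bp = theta_hat[na * nq : na * nq + nb * n_p].reshape(n_p, nb)    # row j: b_i * p_(j+1)
    a, b = aq[0], bp[0]            # valid because q_1 = p_1 = 1
    q = (aq / a).mean(axis=1)      # average the na estimates of each q_j
    p = (bp / b).mean(axis=1)      # average the nb estimates of each p_j
    return a, b, p, q, theta_hat[-1]

# Hypothetical values with the orders of Example 1 (na = nb = 2, n_p = 2, nq = 3).
a_true = np.array([0.5, -0.2])
b_true = np.array([1.0, 0.3])
p_true = np.array([1.0, -0.6])
q_true = np.array([1.0, 0.4, 0.1])
theta_demo = np.concatenate([
    np.outer(q_true, a_true).ravel(),
    np.outer(p_true, b_true).ravel(),
    [0.6],
])
a_hat, b_hat, p_hat, q_hat, alpha_hat = split_estimates(theta_demo, na=2, nb=2, n_p=2, nq=3)
```

The division requires every \(\hat{a}_i\) and \(\hat{b}_i\) to be nonzero; a coefficient close to zero would make the corresponding ratio unreliable and could reasonably be left out of the average.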

The effectiveness of the developed method is tested in the next section using numerical simulations.

5 Simulation examples

Two examples are presented in this section: the first one is an academic example that illustrates the statistical performance of the proposed algorithm for different signal-to-noise ratios (SNR); the second example is a real experiment of a flexible robot arm which is intended to be modeled with a fractional H-W structure.

Without loss of generality, the nonlinear functions f and g are assumed to be polynomials of orders \(n_{{\varvec{p}}}\) and \(n_{{\varvec{q}}}\), respectively.
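A polynomial static nonlinearity of this kind can be evaluated as in the sketch below. It assumes the monomial basis \(f_i(u)=u^{i}\), \(i=1,\ldots ,n_{{\varvec{p}}}\), with no constant term and with the first coefficient normalized to one; the basis choice, the coefficient values and the function name are illustrative.

```python
import numpy as np

def static_nonlinearity(x, coeffs):
    """Evaluate sum_i coeffs[i-1] * x**i for i = 1..len(coeffs) (no constant term)."""
    x = np.asarray(x, dtype=float)
    powers = np.arange(1, len(coeffs) + 1)
    # Broadcasting builds the basis [x, x**2, ...] for each sample, then combines it.
    return (x[..., None] ** powers) @ np.asarray(coeffs, dtype=float)

# Hypothetical second-order input nonlinearity with p_1 = 1 and p_2 = 0.5.
f_demo = static_nonlinearity([0.0, 1.0, 2.0], [1.0, 0.5])
```

The same helper serves for \(g\) with the coefficients \(q_j\), since both blocks are linear combinations of a known basis.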

The first step required to perform good system identification is the choice of the model structure; in the present work, it consists in determining the values of \(n_{{\varvec{a}}}\), \(n_{{\varvec{b}}}\), \(n_{{\varvec{p}}}\) and \(n_{{\varvec{q}}}\) of the linear and nonlinear blocks. In this aim, different values are tried out, along with the estimation procedure, and the best structure with the smallest criterion is selected.

5.1 Example 1: academic example

Let us consider the fractional Hammerstein–Wiener system of commensurate order \({\tilde{\alpha }}=0.6\), with \(n_{{\varvec{a}}}= 2\), \(n_{{\varvec{b}}}=2\). The nonlinear parts \({\varvec{f}}\) and \({\varvec{g}}\) adopt the polynomials form of orders \(n_{{\varvec{p}}}=2\), and \( n_{{\varvec{q}}}=3\), respectively.

The overall output system equation is as follows:

with

where the parameter vectors to be estimated are:

The input u(k) is taken as a persistent excitation sequence with zero mean and unit variance, and v(k) is a white noise sequence with zero mean and constant variance. The data set is of length \(K=1000\).
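Noisy data for a prescribed SNR can be generated as in the following sketch. It assumes the usual definition \(SNR=10\log _{10}(P_{signal}/P_{noise})\) with the signal power measured about its mean, and it draws Gaussian noise, which is one possible choice of white noise. The sinusoid merely stands in for a simulated noise-free output.

```python
import numpy as np

def add_noise(y_clean, snr_db, rng):
    """Add zero-mean white Gaussian noise scaled to reach the requested SNR in dB."""
    y_clean = np.asarray(y_clean, dtype=float)
    noise = rng.standard_normal(y_clean.shape)
    noise -= noise.mean()                                   # enforce zero sample mean
    p_signal = np.mean((y_clean - y_clean.mean()) ** 2)
    p_noise = np.mean(noise ** 2)
    scale = np.sqrt(p_signal / (p_noise * 10 ** (snr_db / 10)))
    return y_clean + scale * noise, scale * noise

rng = np.random.default_rng(1)
K = 1000
u_demo = rng.standard_normal(K)                 # zero-mean, unit-variance excitation
y_clean = np.sin(0.05 * np.arange(K))           # placeholder for the noise-free output
y_noisy, v_demo = add_noise(y_clean, snr_db=34.0, rng=rng)
achieved_snr = 10 * np.log10(np.var(y_clean) / np.mean(v_demo ** 2))
```

Repeating the call with fresh random draws gives the independent noise realizations needed for a Monte Carlo study.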

In the first phase, the right structure has to be determined and various combinations \(n_a\), \(n_b\), \(n_p\), \(n_q\) are tested. The criteria evolution for the best structures versus the iteration number is represented in Fig. 3, and the obtained values for J, for each structure are tabulated in Table 1; the exact structure is recovered with a cost function \(J\simeq 0\).

In the second phase, and through the use of the best structure, the algorithm is evaluated in the absence of noise and with noisy data, for different signal-to-noise ratios (SNR): 34 dB and 26 dB.

The characteristics of the fractional H-W system for the noise-free case are shown graphically in Fig. 4. In the first plot, the estimated output is compared to the simulated one, while the second plot represents the prediction error. The results obtained are satisfactory: they show that the error is zero and the estimated output overlaps with the data.

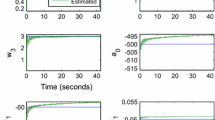

For noisy measurements, with \(SNR=34\) dB and \(SNR=26\) dB, the comparison of the real output and the estimated one along with their respective prediction errors is depicted in Figs. 5 and 6 for each SNR.

From the obtained results, we can conclude that the estimated outputs closely match the real data and that the errors are relatively small.

To test the robustness of the algorithm, a Monte Carlo simulation is performed for 50 sets of noise realization, with \(SNR=34\) dB and \(SNR=26\) dB.

The mean values and the variance of the estimated parameters, and the obtained criteria J are listed in Table 2.

It can be noticed that the parameters of the linear part, the nonlinear parts and the fractional order converge to their exact values. The resulting criterion J is equal to \(3.2\times 10^{-4}\) for \(SNR=34\) dB, while for \(SNR=26\) dB, it is equal to \(2.4\times 10^{-3}\). These results verify the effectiveness of the proposed method and confirm its statistical performance.

5.2 Application to a robot arm benchmark

To illustrate further the method, a benchmark data set taken from the identification database DAISY (Database for the Identification of Systems) [37] is used.

The aim is to identify the experimental flexible robot arm shown in Fig. 7. The system consists of an arm installed on an electrical motor; the input is the reaction torque of the structure on the ground, and the output is the acceleration of the flexible arm. The measured data set contains 1024 samples and is divided into two parts: the first part is used for the identification task, while the second part is used for the validation procedure.

The input/output signals of the experimental robot arm are represented in Fig. 8.

This nonlinear benchmark has been modeled in the literature using neural networks, and classical NARX and NLARX structures [38, 39], and in this study, the fractional Hammerstein–Wiener model is tested.

In the first step, the best structure of the model is investigated, different choices of the orders \(n_{{\varvec{a}}}\), \(n_{{\varvec{b}}}\), \(n_{{\varvec{p}}}\) and \(n_{{\varvec{q}}}\) are tested, and the best structure is determined from the lowest value of the quadratic criterion J. The analysis of the criteria evolution of different structures is illustrated in Fig. 9, and Table 3 shows the obtained values of the criterion J.

We can conclude that the best model structure is obtained for the orders \(n_{{\varvec{a}}}=3\), \(n_{{\varvec{b}}}=5\), \(n_{{\varvec{p}}}=2\), and \(n_{{\varvec{q}}}=1\) with the mean square error \(J=9\times 10^{-3}\).

Using this structure, the robot arm’s measured output is compared with the estimated one in Fig. 10 along with the prediction error.

The estimated output corresponds to the real data with a good adequacy, and the error is small. The validation results are depicted in Fig. 11.

The estimated model of the robot arm benchmark is a fractional H-W model of order \({\tilde{\alpha }}=0.701\), with the parameters given by the vectors \({\varvec{a}}\), \({\varvec{b}}\), \({\varvec{p}}\) and \({\varvec{q}}\) as follows:

The simulation results of the fractional H-W model show a good agreement with the experimental robot arm data, and the errors are smaller than those reported in the literature. Moreover, the model complexity is reduced, since the model has 11 parameters versus 16 or more in the literature, and the identification procedure converges in 40 iterations. This confirms the efficiency of the proposed identification method.

6 Conclusion

In this paper, a novel approach for fractional order H-W system identification is developed. An output error framework is adopted, based on the robust Levenberg–Marquardt algorithm. The difficulty of implementing the parametric sensitivity functions is circumvented by reformulating the H-W model output in a regression form. The main advantage is that the gradient and the Hessian equations can be derived easily and the identification burden is drastically reduced.

The method’s efficiency has been confirmed on an academic example where consistent estimates of the subsystem parameters and the fractional order are obtained. The statistical performance of the estimator in the presence of noise is verified using Monte Carlo simulations.

The quality of a nonlinear model requires the balance between the accuracy, the number of parameters and the computational load of the identification. The application of the fractional H-W model to the study of a flexible robot arm validates the performance of the developed system identification methodology, where a satisfactory model fit is achieved with a reduced number of parameters.

Further work will consider the extension of the identification approach to other fractional cascaded block-oriented models.

References

Chen, F., Ding, F.: Recursive least squares identification algorithms for multiple-input nonlinear Box-Jenkins systems using the maximum likelihood principle. J. Comput. Nonlinear Dyn. 11(2), 021005 (2016)

Zhao, W., Chen, H.F.: Identification of Wiener, Hammerstein, and NARX systems as Markov chains with improved estimates for their nonlinearities. Syst. Control Lett. 61(12), 1175–1186 (2012)

Jia, L., Li, X., Chiu, M.S.: The identification of neuro-fuzzy based MIMO Hammerstein model with separable input signals. Neurocomputing 174, 530–541 (2016)

Mathews, V.J., Sicuranza, G.: Polynomial Signal Processing, p. 452. Wiley, New York (2000)

Vörös, J.: Recursive identification of time-varying non-linear cascade systems with static input and dynamic output non-linearities. Trans. Inst. Meas. Control 40(3), 896–902 (2018)

Taringou, F., Srinivasan, B., Malhame, R., Ghannouchi, F.: Hammerstein-Wiener model for wideband RF transmitters using base-band data. In: Microwave Conference, Asia-Pacific (2007)

Crama, P., Schoukens, J.: Hammerstein-Wiener system estimator initialization. Automatica 40(9), 1543–1550 (2004)

Luo, X.S., Song, Y.D.: Data-driven predictive control of Hammerstein-Wiener systems based on subspace identification. Inf. Sci. 422, 446–447 (2018)

Kumar, P., Devanand, R., Schoen, M.P.: sEMG and skeletal muscle force modeling: a nonlinear Hammerstein-Wiener model, multiple regression model and entropy based threshold approach. In: 2nd International Electronic Conference on Entropy and Its Applications. Multidisciplinary Digital Publishing Institute (2015)

Zambrano, D., Tayamon, S., Carlsson, B., Wigren, T.: Identification of a discrete-time nonlinear Hammerstein-Wiener model for a selective catalytic reduction system. Am. Control Conf. 2011, 78–83 (2011)

Roodgar Amoli, E., Salehinia, S., Ghoreishi, M.: Comparative study of expert predictive models based on adaptive neuro fuzzy inference system, nonlinear autoregressive exogenous and Hammerstein-Wiener approaches for electrical discharge machining performance: Material removal rate and surface roughness. J. Eng. Manuf. 230(9), 1690–1701 (2016)

Nadimi, E.S., Green, O., Blanes-Vidal, V., Larsen, J.J., Christensen, L.P.: Hammerstein-Wiener model for the prediction of temperature variations inside silage stack-bales using wireless sensor networks. Biosyst. Eng. 112(3), 236–247 (2012)

Abu Arqub, O.: Solutions of time-fractional Tricomi and Keldysh equations of Dirichlet functions types in Hilbert space. Numer. Methods Partial Differ. Equ. 34(5), 1759–1780 (2018)

Arqub, O.A., Maayah, B.: Numerical solutions of integrodifferential equations of Fredholm operator type in the sense of the Atangana-Baleanu fractional operator. Chaos, Solitons Fractals 117, 117–124 (2018)

Abu Arqub, O.: Numerical algorithm for the solutions of fractional order systems of Dirichlet function types with comparative analysis. Fundam. Inf. 166(2), 111–137 (2019)

Abu Arqub, O.: Application of residual power series method for the solution of time-fractional Schrödinger equations in one-dimensional space. Fundam. Inf. 166(2), 87–110 (2019)

Burov, S., Barkai, E.: Fractional Langevin equation: overdamped, underdamped, and critical behaviors. Phys. Rev. E 78(3), 031112 (2008)

Tang, Y., Zhang, X., Hua, C., Li, L., Yang, Y.: Parameter identification of commensurate fractional-order chaotic system via differential evolution. Phys. Lett. A. 376(4), 457–464 (2012)

Djamah, T., Mansouri, R., Djennoune, S., Bettayeb, M.: Heat transfer modeling and identification using fractional order state space models. Journal européen des systèmes automatisés 42(6–8), 939–951 (2008)

Isfer, L.A.D., Lenzi, M.K., Lenzi, E.K.: Identification of biochemical reactors using fractional differential equations. Lat. Am. Appl. Res. 40(2), 193–198 (2010)

Wang, Y., Ding, F.: Recursive least squares algorithm and gradient algorithm for Hammerstein-Wiener systems using the data filtering. Nonlinear Dyn. 84(2), 1045–1053 (2016)

Wang, D.Q., Ding, F.: Hierarchical least squares estimation algorithm for Hammerstein-Wiener systems. IEEE Signal Process. Lett. 19(12), 825–828 (2012)

Brouri, A., Kadi, L., Slassi, S.: Frequency identification of Hammerstein-Wiener systems with backlash input nonlinearity. Int. J. Control Autom. Syst. 15(5), 2222–2232 (2017)

Ni, B., Gilson, M., Garnier, H.: Refined instrumental variable method for Hammerstein-Wiener continuous-time model identification. IET Control Theory. A. 7(9), 1276–1286 (2013)

Hammar, K., Djamah, T., Bettayeb, M.: Fractional Hammerstein system identification using particle swarm optimization. In: The 7th International Conference on Modelling, Identification and Control (ICMIC), Sousse, Tunisia (2015)

Moghaddam, M.J., Mojallali, H., Teshnehlab, M.: Recursive identification of multiple-input single-output fractional-order Hammerstein model with time delay. Appl. Soft Comput. 70, 486–500 (2018)

Rahmani, M.R., Farrokhi, M.: Identification of neuro-fractional Hammerstein systems: a hybrid frequency-/time-domain approach. Soft Comput. 22(24), 8097–8106 (2017)

Sersour, L., Djamah, T., Bettayeb, M.: Nonlinear system identification of fractional Wiener models. Nonlinear Dyn. 92(4), 1493–1505 (2018)

Sersour, L., Djamah, T., Bettayeb, M.: Identification of Wiener fractional model using self-adaptive velocity particle swarm optimization. In: The 7th International Conference on Modelling, Identification and Control (ICMIC 2015), Sousse, Tunisia (2015)

Allafi, W., Zajic, I., Uddin, K., Burnham, K.J.: Parameter estimation of the fractional-order Hammerstein-Wiener model using simplified refined instrumental variable fractional-order continuous time. IET. Control Theory A. 11(15), 2591–2598 (2017)

Ray, S.S., Atangana, A., Noutchie, S.C., Kurulay, M., Bildik, N., Kilicman, A.: Fractional calculus and its applications in applied mathematics and other sciences. Math. Probl. Eng. 2014, 2 (2014)

Ionescu, C., Kelly, J.F.: Fractional calculus for respiratory mechanics: power law impedance, viscoelasticity, and tissue heterogeneity. Chaos Solitons Fractals 102, 433–440 (2017)

Podlubny, I.: Fractional-order systems and \(PI^{\lambda }D^{\mu }\) controllers. IEEE Trans. Autom. Control 44(1), 208–214 (1999)

Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, San Francisco (1993)

Bai, E.W.: An optimal two-stage identification algorithm for Hammerstein-Wiener nonlinear systems. Automatica 34(3), 333–338 (1998)

Wang, D., Ding, F.: Extended stochastic gradient identification algorithms for Hammerstein-Wiener ARMAX systems. Comput. Math. Appl. 56(12), 3157–3164 (2008)

De Moor, B., De Gersem, P., De Schutter, B., Favoreel, W.: DAISY: A database for identification of systems. J A 38(3), 4–5 (1997)

Schoukens, J., Suykens, J., Ljung, L.: Wiener-Hammerstein benchmark. In: 15th IFAC Symposium on System Identification, St. Malo, France (2009)

Ugalde, H.M.R., Carmona, J.C., Reyes-Reyes, J., Alvarado, V.M., Mantilla, J.: Computational cost improvement of neural network models in black box nonlinear system identification. Neurocomputing 166, 96–108 (2015)


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest


Cite this article

Hammar, K., Djamah, T. & Bettayeb, M. Nonlinear system identification using fractional Hammerstein–Wiener models. Nonlinear Dyn 98, 2327–2338 (2019). https://doi.org/10.1007/s11071-019-05331-9
