Abstract

In this paper, an extended state observer-based adaptive prescribed performance control technique is proposed for a class of nonlinear systems with full-state constraints and uncertainties. An extraordinary feature is that not only the control problem of prescribed performance tracking and full-state constraints are solved simultaneously, but also the parametric uncertainties and disturbances are considered, which will make it difficult to design a stable controller. For this purpose, the extended state observer and adaptive technique are integrated to obtain estimations of disturbances and parameters. Then, based on the combination of prescribed performance and barrier Lyapunov function, a novel backstepping control scheme is developed with feedforward compensation of parameters and disturbances to ensure that the tracking error is kept within a specified prescribed performance bound without violation of full states at all times. Moreover, the boundedness of all signals in the closed-loop system is proved and asymptotic tracking can be realized if the disturbances are time-invariant. Finally, two simulation examples are performed to highlight the efficiency of the proposed approach.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

For practical control systems, high-performance control of nonlinear systems has always attracted much attention due to uncertainties (including parametric uncertainties and disturbances), which exist in most physical systems and may reduce the tracking accuracy and even lead to system instability. Many nonlinear control approaches are designed for nonlinear systems to improve their performance, such as adaptive control [1], adaptive robust control [2], robust adaptive control [3], sliding mode control (SMC) [4] and H∞ control [5]. However, when the disturbance becomes the main obstacle for high-performance control of the system, the above approaches always employ high-gain feedback to suppress the influence of disturbance on the system. As we know, high-gain feedback should be avoided in practical control systems due to high-frequency dynamics and measurement noise, which can deteriorate the control performance of the system and even destabilize system. If disturbances are known, they can be simply compensated by feedforward to eliminate their influence on the control performance. But in fact, the disturbances are unknown and generally immeasurable. Thus, based on disturbance observer, which is employed to get the estimated value of the disturbance, disturbance compensation control approaches are developed to eliminate its adverse effect on the control performance and enhance the anti-disturbance capability of the system. In recent years, nonlinear control strategies based on disturbance observers for total uncertainties were developed [6,7,8,9,10,11,12]. However, due to the bandwidth limitation of the observer caused by noise, the performance of the disturbance observer is limited, so it is difficult to achieve the perfect compensation of the total uncertainties. Putting system parametric uncertainties into system total interference will increase observer burden and reduce observation accuracy. When the uncertainties of the system mainly come from the strong parametric uncertainties, the control performance of disturbance compensation approach is often inferior to the nonlinear adaptive control with strong learning ability for parametric uncertainties [13]. Thus, adaptive control for parametric uncertainties was integrated into the disturbance compensation control, and better control effect was obtained [14,15,16].

However, transient control performance of tracking error is not considered emphatically in the above control strategies, and the performance indexes such as overshoot, convergence speed and steady-state tracking error should be guaranteed by the proposed control scheme in practical engineering. Recently, because of the ability to constrain the tracking performance of the system, prescribed performance control (PPC) attracts a lot of attention [17,18,19]. In order to suppress uncertainties, an adaptive dynamic surface controller with prescribed tracking performance was proposed for MIMO nonlinear systems in [17]. Based on a new formulation of performance, an improved prescribed performance controller was designed for nonaffine pure-feedback systems in [18]. When the system suffers from strong disturbance, these disturbance suppression control strategies still rely on high gain feedback to achieve good control accuracy, which are conservative. Furthermore, some compensation strategies for uncertainties are designed [20,21,22,23]. An adaptive prescribed performance motion controller and a RISE-based asymptotic prescribed performance tracking controller were proposed for nonlinear servo mechanisms [20, 21], where neural network was applied to approximate the system unknown dynamics. A composite controller with sliding mode disturbance observer is designed for space manipulators with prescribed performance [23].

On the other hand, as many practical systems are subject to the effect of the constraints, state constraint control also attracts many researchers. However, the existing PPC studies rarely take into account the system state constraints except [24,25,26,27,28]. In [26], an improved prescribed performance constraint control method was proposed for a strict-feedback nonlinear dynamic system. However, this control strategy only estimates the upper bound of the disturbance to suppress the influence of the disturbance on the control performance, which will cause the control strategy to be conservative. In [27], based on barrier Lyapunov function (BLF), a PPC method with neural network was proposed for Euler–Lagrange systems to constrain full states and achieve prescribed performance tracking, where the adaptive neural network was designed to approximate system uncertainties. In [28], a prescribed performance output feedback dynamic surface control is proposed for a class of strict-feedback uncertain nonlinear systems, full-state constraints is guaranteed by BLF, and neural network is also applied to approximate the system unknown dynamics. As we all know, neural network needs a lot of data for training, which may lead to the accurate estimation convergence time is too long.

Inspired by the above studies, drawn on the experience of the controller design idea in [29, 30], an extended state observer-based adaptive prescribed performance control is studied for a class of nonlinear systems with full-state constraints and uncertainties. The main contributions of the proposed controller are as follows:

-

(1)

This paper studies a more general class of nonlinear systems with parametric uncertainties and disturbances; the disturbance observer and adaptive control are first integrated into area of prescribed performance-full-state constraints control of nonlinear systems. Compensation strategy for uncertainties is designed. Hence, the control performance is expected to be improved without high gain feedback and the conservatism of controller can be reduced in this study. More importantly, the elimination of uncertainties can improve the feasibility of prescribed performance and state constraints.

-

(2)

In order to solve synthetically the control problem of prescribed performance tracking and full-state constraints, a backstepping design with uncertainties compensation is proposed by integrating prescribed performance function (PPF) and full-state constraint function, which can guarantee that the constraints of all the state are not violated and the tracking error is kept within a specified bound at all times, simultaneously.

2 Problem formulation and preliminaries

Consider a class of full-state constrained single-input single-output (SISO) nonlinear systems with uncertainties:

where \(\overline{x}_{i}\) = [x1, x2, …, xi]T ∈ Ri with i = 1, 2, …, n.\(\overline{x}_{n}\) = x = [x1, x2, …, xn]T ∈ Rn is the state vector, u ∈ R is control input, y ∈ R is system output, \(\varphi_{i} \in R^{\rho } ,{\kern 1pt} {\kern 1pt} i = 1, \ldots ,n,\) are known shape functions, which are also assumed to satisfy the Lipchitz condition, \(\theta { = [}\theta_{1} {,} \ldots {,}\theta_{\rho } {]}^{T} \in R^{\rho }\) is unknown constant parameters vector, \(d_{i} \left( t \right)\) ∈ R, i = 1, …, n, are disturbances.

In order to ensure that: (1) All signals in the closed-loop system are bounded; (2) all system states xi, i = 1, …, n, are constrained in \(\Omega_{{x_{i} }} = \left\{ {x_{i} :\left| {x_{i} } \right| \le c_{i} ,i = 1,...,n} \right\}\) for all t ≥ 0 when \(x\left( 0 \right) \in \Omega_{{x_{i} }}\),\(c_{i} > 0\) are constants; (3) the high control performance with prescribed tracking precision is also achieved. Then, the following assumptions are given and the proposed controller is designed in next section.

Assumption 1

The time derivative \(\dot{d}_{i}\) is as follows [31], i.e.,

where \(\overline{d}_{id} > 0\) are constants.

Assumption 2

[32] The desired trajectory x1d(t) and its ith-order derivatives \(x_{1d}^{\left( i \right)} \left( t \right)\), i = 1,..., n satisfy \(x_{1d} \left( t \right) \le \upsilon_{0} \le c_{1} - \rho_{0}\) and \(\left| {x_{1d}^{\left( i \right)} \left( t \right)} \right| \le \upsilon_{i}\), \(\upsilon_{i} > 0\) are constants.

Remark 1

Assumption 1 is a basic premise for extended state observer (ESO)-based control and has been given in [33,34,35], and these studies show that this assumption is applicable to physical applications.

The following lemmas will be used in our design.

Lemma 1

[36] There exist positive definite continuous functions Vi:(−ci, ci) → R+ , i = 1, 2,…, n, which are also differentiable on \(\Omega_{{x_{i} }}\). Vi(xi) → ∞ when xi → ± ci, i = 1,2,…,n. If dVi(xi)/dt ≤ 0 in set \(\Omega_{{x_{i} }}\), then for all t ∈ [0, + ∞], x(t) ∈ \(\Omega_{{x_{i} }}\).

Lemma 2

[37] Consider error e(t) and transformed errors z1(t). If z1(t) is bounded, prescribed performance of e(t) is satisfied for all t ≥ 0.

3 The controller design and stability analysis

3.1 Extended state observer

In order to estimate all uncertainties, we extend the uncertainties as additional states xe1, xe2,…, xen, respectively, and let hi(t), i = 1, 2, …, n represent their time derivatives. Different from Cheng et al. [38], this structure cannot be used to estimate the state of the system. Throughout this paper, \(\hat{ \bullet }\) represents the estimation of \(\bullet\) and \(\tilde{ \bullet } = \bullet - \hat{ \bullet }\) denotes the estimation error. ESOs are constructed for each equation of the system model (1) as:

where ωi > 0, i = 1, …, n are design parameters of observers, l1 and l2 are factors of the Hurwitz polynomial s2 + l1s + l2. Since the uncertainties of each equation in (1) consist of both disturbances \(d_{i} \left( {\overline{x}_{i} ,t} \right)\) and parametric uncertainties \(\tilde{\theta }\), two definitions of the extended states are given.

Case 1

We extend xei = di, i = 1,…,n., let hi(t) be the time derivatives of xei. Then, we have

Define \(\varepsilon_{i} = \left[ {\varepsilon_{i1} ,\varepsilon_{i2} } \right]^{{\text{T}}} = \left[ {\tilde{x}_{i} ,\tilde{x}_{ei} /\omega_{i} } \right]^{{\text{T}}} ,\quad i = 1,...,n\), the estimation error dynamics are obtained as follows:

where \(A = \left[ {\begin{array}{*{20}c} { - l_{1} } & 1 \\ { - l_{2} } & 0 \\ \end{array} } \right]\), \(B_{1} = \left[ {1,0} \right]^{{\text{T}}}\), \(B_{2} = \left[ {0,1} \right]^{{\text{T}}}\).

Case 2

We extend \(x_{ei} = d_{i} + \tilde{\theta }^{T} \varphi_{i}\), i = 1, …, n. Then, the dynamic of estimation errors can be obtained by

As the matrix A is Hurwitz, \(A^{T} P + PA = - 2I\) is established with a positive definite matrix P, the matrix I is an identity matrix.

Remark 2

In the above two cases, the structures of ESOs are the same, according to the different definitions of extended states; we have different dynamic state estimation errors. Based on the BLF with this property, two different results can be obtained by two stability analyses discussed later.

3.2 Controller design

Let the tracking error e(t) = x1 − x1d satisfy strictly the following inequality to realize the prescribed performance.

where δl > 0 and δu > 0 are design parameters. The performance function ρ(t) is given in (8), which is strictly positive decreasing smooth and bounded:

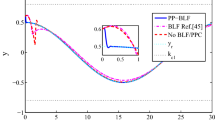

where ρ0, ρ∞ and k are positive constants. The approximate curve of prescribed performance index inequality (7) is shown in Fig. 1.

Obviously, in (7), \(- \rho_{0}\) and \(\rho_{0}\) constrain the lower bound of the undershoot and the upper bound of the overshoot of the output control error e(t), respectively. k is the convergence rate, and ρ∞ constrains the steady-state bound of e(t). By selecting appropriate parameters such as ρ0, ρ∞ and k, the transient and stability performance of output control error can be planned in advance, and the improvement of transient performance can be completed according to the actual demand of the system.

Define zi = xi − αi − 1, i = 2, …, n, αi − 1 are virtual controllers. The controller design process is given as follows:

Step 1: Define the positive definite BLF as follows:

where log(χ) is the natural logarithm of χ,\(z_{1} = e\left( t \right)/\rho \left( t \right)\).

Differentiating V1, substituting (1) into it yields

The virtual controller α1 is designed to be

where k1 > 0 is a design parameter.

Then, the dynamic \(\dot{z}_{1}\) becomes

Step 2: Define the following positive definite BLF

where L2 > 0 is a design parameter.

Differentiating (13) and noting (1), we have

The virtual controller α2 is designed to be

where k2 > 0 is design parameter,\(\dot{\alpha }_{1} = \dot{\alpha }_{1c} + \dot{\alpha }_{1u}\), \(\dot{\alpha }_{1c}\) and \(\dot{\alpha }_{1u}\) are the calculable part and incalculable part, respectively.

Then, we have

Step i: (3 ≤ i ≤ n − 1): Define the following positive definite functions

where Li > 0 are design parameters.

Differentiating (18) and noting (1), we have

Similar to (15), the virtual controllers αi are developed to be

where ki > 0 are design parameters,\(\dot{\alpha }_{i - 1} = \dot{\alpha }_{{\left( {i - 1} \right)c}} + \dot{\alpha }_{{\left( {i - 1} \right)u}}\), \(\dot{\alpha }_{{\left( {i - 1} \right)c}}\) and \(\dot{\alpha }_{{\left( {i - 1} \right)u}}\) are the calculable part and incalculable part, respectively.

Then, we have

From (22), we have

Step n: Choose nth positive definite function as follows:

where Ln > 0 is a design parameter.

Differentiating Vn, substituting (1) into it yields

The input u is designed as

where kn > 0 is a design parameter, \(\dot{\alpha }_{n - 1} = \dot{\alpha }_{{\left( {n - 1} \right)c}} + \dot{\alpha }_{{\left( {n - 1} \right)u}}\), \(\dot{\alpha }_{{\left( {n - 1} \right)c}}\) denotes the calculable part, \(\dot{\alpha }_{{\left( {n - 1} \right)u}}\) denotes the incalculable part.

When the following conditions hold:

-

(1)

Suitable parameters ki, ωi and Li are selected to satisfy

$$ c_{i + 1} \ge \left| {\alpha_{i} } \right|_{\max } + L_{i + 1} $$ -

(2)

The initial conditions zi(0) satisfy

$$ \left| {z_{1} \left( 0 \right)} \right| \le \rho_{0} ,{\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} \left| {z_{i} \left( 0 \right)} \right| \le L_{i} ,i = 2,...,n $$

The following two theorems are carried out to ensure the stability of the closed-loop system.

Theorem 1

If the disturbances di, i = 1,…, n, are time-invariant in system (1), i.e., hi (t) = 0, with the proposed controller (26) with the adaptation law as follows:

where \(\Gamma > 0\) is a diagonal adaptation rate matrix. Then, all signals of closed-loop system can be guaranteed to be bounded with prescribed performance tracking, the constraints of full states are not violated, and asymptotic track performance is also achieved, i.e., z1 → 0 as t → ∞.

Proof

See Appendix 1.

Theorem 2

If the disturbances di, i = 1,..., n, are time-variant, i.e., \(h_{i} (t) \ne 0\), all signals are bounded with the proposed control law (26), prescribed performance tracking is obtained and the constraints of full states are not violated. The following positive definite Lyapunov function

is bounded by

where \(\lambda = \left\{ {2\rho_{0}^{ - 1} k_{1} ,{\kern 1pt} 2k_{2} ,{\kern 1pt} {\kern 1pt} ...,2k_{n} ,{\kern 1pt} {\kern 1pt} {\kern 1pt} {\kern 1pt} \frac{{2\omega_{1} - n - 1}}{{2\lambda_{\max } \left( P \right)}},{\kern 1pt} {\kern 1pt} ...,{\kern 1pt} {\kern 1pt} \frac{{2\omega_{n} - n - 1}}{{2\lambda_{\max } \left( P \right)}}} \right\}_{\min }\), λmax(P) is the maximum eigenvalue of matrix P, \(\sigma = \sum_{i = 1}^{n} {\frac{{\left\| {PB_{2} } \right\|^{2} \left| {h_{i} \left( t \right)} \right|_{\max }^{2} }}{{2\omega_{i}^{2} }}}\).

Proof.

See Appendix 2.

4 Simulation

Two simulation examples are carried out to testify the validity of the proposed algorithm as follows.

Example 1.

A spring, mass and damper system given in [3, 39] is considered. The dynamic model is modeled as follows:

where x1 is the position and x2 is the velocity, θ = [θ1, θ2]T = [k/m, c/m]T, \(\varphi = \left[ {x_{1} ,x_{2} } \right]^{{\text{T}}}\). The system parameters are found in Table 1.

The ESO is constructed for (31):

The controller is designed as

The virtue controller is designed as

The adaptation law is designed as

where \(\Gamma_{1} > 0\) is a diagonal adaptation rate matrix, \(\varepsilon_{1} = \left[ {\varepsilon_{11} ,\varepsilon_{12} ,\varepsilon_{13} } \right]^{{\text{T}}} = \left[ {\tilde{x}_{1} ,\tilde{x}_{2} /\omega_{1} ,\tilde{x}_{e} /\omega_{1}^{2} } \right]^{{\text{T}}}\), \(B_{1} = \left[ {0,1,0} \right]^{T}\).

The parameters of the proposed controller (i.e., APC) are selected as k1 = 200, k2 = 500, L2 = 2, ω1 = 200, θ0 = 100, \(\hat{\theta }\left( 0 \right)\) = [5, 3]T, \(\Gamma_{1} = \left[ {5.7,{\kern 1pt} {\kern 1pt} 2.2} \right]^{{\text{T}}}\), ρ0 = 0.3, ρ∞ = 0.012, k = 0.0009, c1 = 0.8, c2 = 2. The desired trajectory yd(t) = 0.5sin(0.5πt)[1 − exp(−t3)], the initial value of x1 is set as x1(0) = 0.2, d(t) = sin(2πt).

Remark 3

As the accurate disturbance estimation can be guaranteed by increasing the observer parameters, in order to test the performance of ESO, large disturbance is added into the system; In addition, the initial value of x1(0) is assigned to be 0.2 to test the effectiveness of the prescribed performance control and state constraint control.

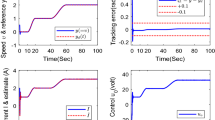

The simulation results are exhibited in Figs. 2, 4, 5, 6, 7 and 8. Figure 2 shows the desired trajectory x1d and output state x1. After a short transient response process, the output trajectory can track the desired trajectory quite well. Figure 3 presents the control input u. The tracking error e(t) and prescribed performance bounds are given in Fig. 4. Obviously, the output tracking error of the proposed controller converges to the neighborhood of zero and the prescribed performance bounds are not violated. From Figs. 5 and 6, it can be seen that the proposed controller can meet the requirements of state constraints. The parameters estimation is presented in Fig. 7. The real parameters of the system are estimated accurately. Figure 8 illustrates d and disturbance estimations. Obviously, the actual disturbances are obtained by ESO.

Example 2.

A single inverted pendulum (SIP) system [40, 41] is given as follows:

where \(\theta^{T} = \left[ {\theta_{1} ,\theta_{2} } \right] = \left[ {1,1} \right]\), \(\varphi = \left[ {f_{1} \left( x \right), - f_{2} \left( x \right)} \right]^{{\text{T}}}\), \(f_{1} \left( x \right) = \frac{{g\sin x_{1} }}{{l\left( {4/3 - {{m\cos^{2} x_{1} } \mathord{\left/ {\vphantom {{m\cos^{2} x_{1} } {\left( {m_{c} + m} \right)}}} \right. \kern-\nulldelimiterspace} {\left( {m_{c} + m} \right)}}} \right)}}\), \(f_{2} \left( x \right) = \frac{{{{mx_{2}^{2} \cos x_{1} \sin x_{1} } \mathord{\left/ {\vphantom {{mx_{2}^{2} \cos x_{1} \sin x_{1} } {\left( {m_{c} + m} \right)}}} \right. \kern-\nulldelimiterspace} {\left( {m_{c} + m} \right)}}}}{{4/3 - {{m\cos^{2} x_{1} } \mathord{\left/ {\vphantom {{m\cos^{2} x_{1} } {\left( {m_{c} + m} \right)}}} \right. \kern-\nulldelimiterspace} {\left( {m_{c} + m} \right)}}}}\), \(\beta_{{1}} \left( x \right) = \frac{{{{\cos x_{1} } \mathord{\left/ {\vphantom {{\cos x_{1} } {\left( {m_{c} + m} \right)}}} \right. \kern-\nulldelimiterspace} {\left( {m_{c} + m} \right)}}}}{{l\left( {4/3 - {{m\cos^{2} x_{1} } \mathord{\left/ {\vphantom {{m\cos^{2} x_{1} } {\left( {m_{c} + m} \right)}}} \right. \kern-\nulldelimiterspace} {\left( {m_{c} + m} \right)}}} \right)}}\).

The SIP is described by the states found in Table 2.

The ESO is constructed for (36):

The controller is designed as

The virtue controller is designed as

The adaptation law is designed as

where \(\Gamma_{1} > 0\) is a diagonal adaptation rate matrix, \(\varepsilon_{1} = \left[ {\varepsilon_{11} ,\varepsilon_{12} ,\varepsilon_{13} } \right]^{{\text{T}}} = \left[ {\tilde{x}_{1} ,\tilde{x}_{2} /\omega_{1} ,\tilde{x}_{e} /\omega_{1}^{2} } \right]^{{\text{T}}}\), \(B_{1} = \left[ {0,1,0} \right]^{T}\).

In this simulation, the desired trajectory yd(t) = 2sin(πt)[1 − exp(−0.01t3)], d = 30sin(2πt). In order to prove the validity of disturbance compensation term, adaptive law, prescribed control performance of the proposed controller, the initial values of the states are x1(0) = 0.2. The parameters of the proposed controller (i.e., APC) are given as k1 = 5, k2 = 50, L2 = 2, ω1 = 300, θ0 = 20, \(\hat{\theta }\left( 0 \right)\) = [1.6, 1.6]T, \(\Gamma_{1} = \left[ {10,{\kern 1pt} {\kern 1pt} 4.2} \right]^{{\text{T}}}\), ρ0 = 0.3, ρ∞ = 0.0005, k = 0.003, c1 = 0.4, c2 = 2.

The simulation results are shown in Figs. 9, 10, 11, 12, 13, 14 and 15. Figure 9 shows the desired trajectory x1d and output state x1. The output trajectory can track the desired trajectory quite quickly and well. Figure 10 presents the control input u. The tracking error e(t) and prescribed performance bounds are given in Fig. 11. Obviously, the output tracking error of the proposed controller converges to the neighborhood of zero within the bounds of the prescribed performance function limitation. From Fig. 12 and Fig. 13, it can be seen that the requirements of state constraints can be satisfied by the proposed controller. As presented in Fig. 14, the real parameters of the system are estimated accurately. Figure 15 illustrates d and disturbance estimations. Obviously, the actual disturbances are obtained by ESO.

5 Conclusion

In this study, an ESO-based adaptive prescribed performance controller is developed for a class of nonlinear systems with full-state constraints and uncertainties. Adaptive control for the system parametric uncertainties and multiple ESOs for disturbances are integrated into the prescribed performance and full-state constraints design via backstepping technique to achieve prescribed performance tracking of output error without violation of full states. The global stability of the proposed control approach is proved. Finally, two simulation examples are employed to demonstrate the performance of the proposed method.

References

Krstic, M.: On using least-squares updates without regressor filtering in identification and adaptive control of nonlinear systems. Automatica 45(3), 731–735 (2009)

Yao, B., Tomizukai, M.: Adaptive robust control of SISO nonlinear systems in a semi-strict feedback form. Automatica 33(5), 893–903 (1997)

Wen, C.Y., Zhou, J., Liu, Z.T.: Robust adaptive control of uncertain nonlinear systems in the presence of input saturation and external disturbance. IEEE Trans. Autom. Control 56(7), 1672–1678 (2011)

Shtessel, Y., Taleb, M., Plestan, F.: A novel adaptive-gain supertwisting sliding mode controller: methodology and application. Automatica 48(5), 759–769 (2012)

Chen, B.S., Lee, C.H., Chang, Y.C.: H-infinity tracking design of uncertain nonlinear SISO systems: adaptive fuzzy approach. IEEE Trans. Fuzzy Syst. 4(1), 32–43 (1996)

Guo, B.Z., Wu, Z.H., Zhou, H.C.: Active disturbance rejection control approach to output-feedback stabilization of a class of uncertain nonlinear systems subject to stochastic disturbance. IEEE Trans. Autom. Control 61(6), 1613–1618 (2016)

Deshpande, V.S., Phadke, S.B.: Control of uncertain nonlinear systems using an uncertainty and disturbance estimator. Trans. ASME J. Dyn. Syst., Meas., Control 134(2), 024501 (2012)

Chen, M., Wu, Q.X., Cui, R.X.: Terminal sliding mode tracking control for a class of SISO uncertain nonlinear systems. ISA Trans. 52(2), 198–206 (2013)

Chen, M., Wu, Q.X., Jiang, C.S.: Disturbance-observer-based robust synchronization control of uncertain chaotic systems. Nonlinear Dyn. 70(4), 2421–2432 (2012)

Liu, Y.L., Wang, H., Guo, L.: Composite robust H∞ control for uncertain stochastic nonlinear systems with state delay via a disturbance observer. IEEE Trans. Autom. Control 63(12), 4345–4352 (2018)

Ji, D.H., Jeong, S.C., Park, J.H., Won, S.C.: Robust adaptive backstepping synchronization for a class of uncertain chaotic systems using fuzzy disturbance observer. Nonlinear Dyn. 69(3), 1125–1136 (2012)

Rabiee, H., Ataei, M., Ekramian, M.: Continuous nonsingular terminal sliding mode control based on adaptive sliding mode disturbance observer for uncertain nonlinear systems. Automatica 109, 108515 (2019)

Yao, J.Y., Deng, W.X.: Active disturbance rejection adaptive control of uncertain nonlinear systems: theory and application. Nonlinear Dyn. 89(3), 1611–1624 (2017)

Chen, M., Shao, S.Y., Jiang, B.: Adaptive neural control of uncertain nonlinear systems using disturbance observer. IEEE Trans. Cybern. 47(10), 3110–3123 (2017)

Chen, M., Ge, S.S.: Adaptive neural output feedback control of uncertain nonlinear systems with unknown hysteresis using disturbance observer. IEEE Trans. Ind. Electron. 62(12), 7706–7716 (2015)

Pan, H.H., Sun, W.C., Gao, H.J., Jing, X.J.: Disturbance observer-based adaptive tracking control with actuator saturation and its application. IEEE Trans. Autom. Sci. Eng. 13(2), 868–875 (2016)

Wang, C.L., Lin, Y.: Adaptive dynamic surface control for MIMO nonlinear time-varying systems with prescribed tracking performance. Int. J. Control 88(4), 832–843 (2015)

Wang, Y.Y., Hu, J.B., Li, J., Liu, B.Q.: Improved prescribed performance control for nonaffine pure-feedback systems with input saturation. Int. J. Robust Nonlinear Control 29, 1769–1788 (2019)

Bechlioulis, C.P., Rovithakis, G.A.: Robust adaptive control of feedback linearizable MIMO nonlinear systems with prescribed performance. IEEE Trans. Autom. Control 53(9), 2090–2099 (2008)

Wang, S.B., Na, J., Ren, X.M.: RISE-based asymptotic prescribed performance tracking control of nonlinear servo mechanisms. IEEE Trans. Syst., Man, Cybern., Syst. 48(12), 2359–2370 (2018)

Na, J., Chen, Q., Ren, X.M., Guo, Y.: Adaptive prescribed performance motion control of servo mechanisms with friction compensation. IEEE Trans. Ind. Electron. 61(1), 486–494 (2014)

Wang, S.B., Ren, X.M., Na, J., Zeng, T.Y.: Extended-state-observer-based funnel control for nonlinear servomechanisms with prescribed tracking performance. IEEE Trans. Autom. Sci. Eng. 14(1), 98–108 (2017)

Zhu, Y.K., Qiao, J.Z., Guo, L.: Adaptive sliding mode disturbance observer-based composite control with prescribed performance of space manipulators for target capturing. IEEE Trans. Ind. Electron. 66(3), 1973–1983 (2019)

Chen, L.S., Wang, Q.: Prescribed performance-barrier Lyapunov function for the adaptive control of unknown pure-feedback systems with full-state constraints. Nonlinear Dyn. 95, 2443–2459 (2019)

Sun, T.R., Pan, Y.P.: Robust adaptive control for prescribed performance tracking of constrained uncertain nonlinear systems. J. Frank. Inst. 356, 18–30 (2019)

Han, S.I., Lee, J.M.: Improved prescribed performance constraint control for a strict feedback non-linear dynamic system. IET Control Theory Appl. 7(14), 1818–1827 (2013)

Zhao, K., Song, Y.D., Ma, T.D., He, L.: Prescribed performance control of uncertain Euler–Lagrange systems subject to full-state constraints. IEEE Trans. Neural Netw. Learn. Syst. 29(8), 3478–3489 (2018)

Zhang, J.J., Sun, Q.M.: Prescribed performance adaptive neural output feedback dynamic surface control for a class of strict-feedback uncertain nonlinear systems with full state constraints and unmodeled dynamics. Int. J. Robust Nonlinear Control 30(2), 459–483 (2020)

Cheng, J., Park, J.H., Zhao, X.D., Karimi, H.R., Cao, J.D.: Quantized nonstationary filtering of network-based Markov switching RSNSs: a multiple hierarchical structure strategy. IEEE Trans. Autom 65(11), 4816–4823 (2020)

Cheng, J., Huang W. T., Park, J. H., Cao J. D.: A hierarchical structure approach to finite-time filter design for fuzzy Markov switching systems with deception attacks. IEEE Trans. Cybern. https://doi.org/10.1109/TCYB.2021.3049476

Han, J.Q.: From PID to active disturbance rejection control. IEEE Trans. Ind. Electron 56(3), 900–906 (2009)

Tee, K.P., Ren, B., Ge, S.S.: Control of nonlinear systems with time-varying output constraints. Automatica 47(11), 2511–2516 (2011)

Zhao, Z.L., Guo, B.Z.: A novel extended state observer for output tracking of MIMO systems with mismatched uncertainty. IEEE Trans. Autom. Control 63(1), 211–218 (2018)

Guo, B.Z., Wu, Z.H.: Output tracking for a class of nonlinear systems with mismatched uncertainties by active disturbance rejection control. Syst. Control Lett. 100, 21–31 (2017)

Chen, S., Chen, Z.X.: On active disturbance rejection control for a class of uncertain systems with measurement uncertainty. IEEE Trans. Ind. Electron 68(2), 1475–1485 (2021)

Tee, K.P., Ge, S.S., Tay, E.H.: Barrier Lyapunov functions for the control of output-constrained nonlinear systems. Automatica 45(4), 918–927 (2009)

Kostarigka, A.K., Doulgeri, Z., Rovithakis, G.A.: Prescribed performance tracking for flexible joint robots with unknown dynamics and variable elasticity. Automatica 49, 1137–1147 (2013)

Cheng, J., Park, J.H., Cao, J.D., Qi, W.H.: A hidden mode observation approach to finite-time SOFC of Markovian switching systems with quantization. Nonlinear Dyn. 100(1), 509–521 (2020)

Wang, C.C., Yang, G.H.: Observer-based adaptive prescribed performance tracking control for nonlinear systems with unknown control direction and input saturation. Neurocomputing 284, 17–26 (2018)

Zong, Q., Zhao, Z.S., Zhang, J.: Higher order sliding mode control with self-tuning law based on integral sliding mode. IET Control Theory Appl. 4(7), 1282–1289 (2010)

Zuo, Z.Y.: Non-singular fixed-time terminal sliding mode control of non-linear systems. IET Control Theory Appl. 9(4), 545–552 (2015)

Ye, D., Cai, Y., Yang, H., Zhao, X.: Adaptive neural-based control for non-strict feedback systems with full-state constraints and unmodeled dynamics. Nonlinear Dyn. 97, 715–732 (2019)

Acknowledgements

This work was supported in part by the University Synergy Innovation Program of Anhui Province under Grant GXXT-2019-048.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1

Proof of Theorem 1. If the disturbances di, i = 1,..., n, are time-invariant, the following positive definite Lyapunov function is defined with xei = di in this case

Differentiating Vn, substituting (5) into it yields

As the matrix A is Hurwitz,\(A^{T} P + PA = - 2I\) is established, noting (23)–(26), we have

Utilizing the Young’s inequality, we obtain

Substituting (28) and (44) into (43), then we have

According to Lyapunov’s theorem, Va is uniformly ultimately bounded; thus, errors zi, \(\tilde{\theta }\), and \(\tilde{\varepsilon }\) are bounded. This further guarantees the boundedness of e1. Moreover, the adaptive parameters \(\hat{\theta }\) and \(\hat{x}_{ei}\) are all bounded. As x1 = e(t) + x1d(t), \(z_{1} = e\left( t \right)/\rho \left( t \right)\), \(\left| {z_{1} } \right| \le 1\) with Assumption 2 and (8), we have \(\left| {x_{1} } \right| \le c_{1}\), and x1 is bounded. α1 in (12) is a function of x1, z1,\(\hat{\theta }\),\(\dot{x}_{1d}\) and \(\hat{x}_{e1}\). Since the boundedness of x1, z1, \(\hat{\theta }\), \(\dot{x}_{1d}\) and \(\hat{x}_{e1}\), α1 is bounded. As \(\left| {x_{2} } \right| \le \left| {\alpha_{1} } \right|_{\max } + \left| {z_{2} } \right|\) and \(\left| {z_{2} } \right| \le L_{2}\), we obtain \(x_{2} \le c_{2}\) and α2 is bounded. Similarly,\(\left| {x_{i + 1} } \right|\), αi, i = 3, …, n-1 and the control input u are bounded. Consequently, all signals in the closed-loop system are bounded, prescribed performance tracking is obtained and full states are ensured to remain in the constrained field.

Appendix 2

Proof of Theorem 2. If the disturbances di, i = 1,..., n, are time-variant, xei = \(d_{i} \left( {\overline{x}_{i} ,t} \right) + \tilde{\theta }^{T} \varphi_{i} \left( {\overline{x}_{i} } \right)\). With (6), differentiating Vb defined in (29), we have

As \(A^{T} P + PA = - 2I\), noting (23), (25) and (28), we have

As \(\log \frac{{L_{j}^{2} }}{{L_{j}^{2} - z_{j}^{2} }} \le \frac{{z_{j}^{2} }}{{L_{j}^{2} - z_{j}^{2} }}\) in the interval zj < Lj [42], then

which leads to (30). Similar to the proof of Theorem 1, all signals in the closed-loop system are also bounded and prescribed performance tracking is obtained without violation of constraints of the full states.

Rights and permissions

About this article

Cite this article

Xu, Z., Xie, N., Shen, H. et al. Extended state observer-based adaptive prescribed performance control for a class of nonlinear systems with full-state constraints and uncertainties. Nonlinear Dyn 105, 345–358 (2021). https://doi.org/10.1007/s11071-021-06564-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11071-021-06564-3