Abstract

The finite-time stochastic synchronization of time-delay neural networks with noise disturbance is investigated on the basis of the finite-time stability theory of stochastic differential equations. By constructing a suitable Lyapunov function and controllers, finite-time stochastic synchronization is realized and sufficient conditions are derived. By analyzing the synchronization process, the factors affecting the convergence speed are identified and feasible suggestions are proposed to improve the convergence rate. Finally, numerical simulations are given to verify the theoretical results.


1 Introduction

Since being proposed by Pecora and Carroll [1], chaotic synchronization has been studied and applied in many areas, such as chemical reactions, biological systems, secure communication, information processing and so on. A variety of approaches have been proposed to investigate chaos synchronization, including adaptive control [2, 3], optimal control [4], and sliding mode control [5,6,7]. Accordingly, many kinds of synchronization have been explored, including lag synchronization [8], projective synchronization [9], anti-synchronization [10], burst synchronization [11], phase synchronization [12], hybrid synchronization [13], etc.

In the past decades, the dynamical behavior of neural networks has been a hot topic and has attracted much attention, because such networks explain some neurophysiological phenomena well and have potential applications in many fields [14,15,16]. In fact, the intrinsic time in realistic neuronal systems could be associated with the response delay and the propagation time delay in the cell loop [17], which focuses on the synchronization stability under appropriate schemes. Further guidance on predicting the collapse of synchronization and pattern stability is given in [18, 19]. In practical engineering, it is desirable to realize synchronization in finite time. For this purpose, finite-time stability theory has been brought forward [20,21,22,23], in which finite-time control techniques are of concern. Research shows that finite-time control techniques demonstrate better robustness and disturbance rejection properties [24]. In fact, finite-time synchronization means optimality in convergence time. Recently, combining the advantages of finite-time control techniques and the finite-time stability theorem, finite-time synchronization of complex networks was proposed [25].

In real applications, almost all network systems are subject to random uncertainties, e.g., stochastic forces and noisy measurements. Therefore, networks with noise perturbations have aroused the interest of researchers [26, 27], especially neural networks in which only a single node is subject to noise disturbance. However, in an actual neural network, more than one node is subject to noise disturbance, and possibly all nodes are. That is why stochastic synchronization has become a focus of research [28,29,30]. In most of these works, time-delay is hardly taken into account, although it always exists between neurons when communication takes place within one neural network or between neural networks. Existing results show that, in some systems, time-delay often causes oscillation or divergence [31, 32]. Therefore, the dynamical behavior of neural networks with time-delay has been widely studied in recent years, especially the control of synchronization and stability. Accordingly, many schemes have been proposed for realizing chaos synchronization of time-delay neural networks [33,34,35,36], in which noise disturbance is rarely considered. In real applications, time-delay and noise disturbance often coexist in neural networks, which causes more complex dynamic behavior and makes the networks more difficult to control.

Motivated by the above discussion, we consider a neural network with time-delay and noise disturbance, which includes a vector-form Wiener process. This kind of network is more practical in the real world. Using properties of the Wiener process and inequality techniques, suitable controllers are designed to ensure the finite-time stochastic synchronization of time-delay neural networks with noise disturbance, and the factors affecting the convergence speed are identified. Several cases are given via numerical simulations to demonstrate the impact of these factors on the synchronization time.

The rest of this paper is arranged as follows. Section 2 describes the system and some relevant preliminaries. In Sect. 3, the finite-time stochastic synchronization of time-delay neural networks is realized, sufficient conditions are given and some factors affecting the convergence speed are obtained via theoretical analysis. In Sect. 4, numerical simulations are presented to verify the theoretical results. Section 5 draws some conclusions and gives directions for future investigation.

2 System description and some preliminaries

In this section, some relevant preliminaries for the finite-time stochastic synchronization of time-delay neural networks with noise disturbance are described. The time-delay neural network consisting of N nodes is considered as follows

where \(x=(x_1 (t),x_2 (t),\ldots ,x_N (t))^{T}\) is the state vector of the neural network, \(x_i (t)\) is the state variable of the ith node, \(\tau \) is the time-delay, and \(A,B,C\in R^{N\times N}\) are constant matrices.

The functions \(f\) and \(g\) are continuously differentiable nonlinear vector functions. In this work, the time-delay \(\tau \) is assumed to be constant.

To obtain the main result of this paper, system (1) is taken as the drive system, and the slave system is considered as

where the noise term \(\delta (y(t)-x(t))\dot{W}(t)\) is used to describe the coupling process influenced by environmental fluctuation, \(\delta \) is the noise intensity matrix, \(\dot{W}(t)\) is an N-dimensional white noise, and \(U=(U_1,U_2,\ldots ,U_N )^{T}\) is the controller vector to be designed.
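A form of the drive and response systems that is consistent with the symbols defined above, and with the matrices appearing in conditions (i) and (ii) of Theorem 1, is sketched here. It is an assumption inferred from the surrounding definitions, not a quotation of Eqs. (1) and (2):

\[ \dot{x}(t)=Ax(t)+Bf(x(t))+Cg(x(t-\tau )), \]

\[ \dot{y}(t)=Ay(t)+Bf(y(t))+Cg(y(t-\tau ))+\delta (y(t)-x(t))\dot{W}(t)+U. \]

Under this assumed form, the delay enters only through the term multiplied by \(C\), which is why \(C\) is paired with \(L_g\) and \(A\), \(B\) with \(L_f\) in the conditions of Theorem 1.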

Definition 1

[28] It is said that the finite-time stochastic synchronization between systems (2) and (1) can be achieved if, for any initial states x(0), y(0), there is a finite time function

holding that, for all \(t\ge T_0 \), we have

where \(T_0 \) is called the stochastic time.

For the n-dimensional stochastic differential equation

where \(x\in R^{n}\) is the state vector, \(f:R^{n}\rightarrow R^{n}\) and \(g:R^{n}\rightarrow R^{n\times m}\) are continuous functions that satisfy \(f(0)=0\), \(g(0)=0\), it is assumed that Eq. (3) has a unique global solution denoted by \(x(t,x(0))\) \((0\le t<\infty )\), where x(0) is the initial state.

For each \(V\in C^{2,1}(R^{n}\times R_+,R_+ )\), the operator \(\mathcal{L}V\) [28] associated with Eq. (3) is defined as

where \(\frac{\partial V}{\partial x}=\left( {\frac{\partial V}{\partial x_1 },\frac{\partial V}{\partial x_2 },\ldots ,\frac{\partial V}{\partial x_n }} \right) \) and \(\frac{\partial ^{2}V}{\partial x^{2}}=\left( {\frac{\partial ^{2}V}{\partial x_i \partial x_j }} \right) _{n\times n} \quad (i,j=1,2,\ldots n)\).
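For reference, the standard forms of an Itô stochastic differential equation and of its associated operator, which match the symbols \(f\), \(g\), \(\frac{\partial V}{\partial x}\) and \(\frac{\partial ^{2}V}{\partial x^{2}}\) used above, are written below. They are given as an assumption about what Eqs. (3) and (4) denote, with \(W(t)\) taken to be an m-dimensional Wiener process:

\[ dx(t)=f(x(t))\,dt+g(x(t))\,dW(t), \]

\[ \mathcal{L}V(x)=\frac{\partial V}{\partial x}f(x)+\frac{1}{2}\,\mathrm{trace}\left[ g^{T}(x)\frac{\partial ^{2}V}{\partial x^{2}}g(x)\right] . \]

The second-order trace term is what distinguishes \(\mathcal{L}V\) from the ordinary time derivative of \(V\); it is the place where the noise intensity enters the stability conditions.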

Assumption 1

For system (1), it is assumed that \(f_i \), \(g_i \) \((i=1,2,\ldots ,N)\) all satisfy the Lipschitz condition, i.e., there are positive constants \(L_f\), \(L_g\) such that

for all \(x_i,y_i \in R^{n}(i=1,2,\ldots ,N)\).

Because the concrete system changes much faster than the environmental fluctuations, the following Assumption 2 is made for the noise intensity function.

Assumption 2

The noise intensity function \(\delta (y(t)-x(t))\) satisfies the Lipschitz condition; namely, there exists a constant q such that

Moreover, \(\delta (0)\equiv 0\).

Lemma 1

[37] For Eq. (3), define \(T_0 (x_0 )=\inf \{T\ge 0:x(t,x_0 )=0,\forall t\ge T\}\), and assume that Eq. (3) has a unique global solution. If there is a positive definite, twice continuously differentiable, radially unbounded Lyapunov function \(V:R^{n}\rightarrow R^{+}\) and real numbers \(k>0,0<\rho <1\), such that

then the origin of system (3) is globally stochastically finite-time stable, and

Lemma 2

[38] Suppose that \(0<r\le 1\) and that a, b are positive numbers. Then the inequality

holds.
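Lemma 2 is commonly stated as \((a+b)^{r}\le a^{r}+b^{r}\). Assuming that this is the intended inequality, the short Python sketch below checks it numerically on a small grid. The function name `lemma2_gap` and the grid values are illustrative choices; the exponent 0.505 corresponds to \((1+\theta )/2\) with \(\theta =0.01\), the value used later in the simulations.

```python
import itertools


def lemma2_gap(a, b, r):
    """Return a**r + b**r - (a + b)**r, which the assumed form of Lemma 2
    says is non-negative for a, b > 0 and 0 < r <= 1."""
    return a ** r + b ** r - (a + b) ** r


# Illustrative grid (hypothetical values, not taken from the paper).
values = [0.01, 0.5, 1.0, 3.0, 40.0]
exponents = [0.1, 0.505, 0.9, 1.0]

# Smallest gap over the whole grid; it should not be negative.
worst_gap = min(
    lemma2_gap(a, b, r)
    for a, b, r in itertools.product(values, values, exponents)
)
print("smallest gap on the grid:", worst_gap)
```

A grid check of this kind is not a proof; it only guards against misreading the direction of the inequality.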

Lemma 3

[39] Suppose that \(\Sigma _1 \), \(\Sigma _2 \), \(\Sigma _3 \) are real matrices with appropriate dimensions and \(s>0\) is a scalar, satisfying \(\Sigma _3 =(\Sigma _3 )^{T}>0\), then the following inequality

holds.

Corollary

In Lemma 3, if \(\Sigma _3 \) is chosen as the identity matrix with appropriate dimension, inequality (8) can be simplified as
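With \(\Sigma _3 =I\), this type of bound is commonly written as \(\Sigma _1^{T}\Sigma _2 +\Sigma _2^{T}\Sigma _1 \le s\Sigma _1^{T}\Sigma _1 +s^{-1}\Sigma _2^{T}\Sigma _2 \). Taking that form as an assumption, the following Python sketch verifies it for random matrices by checking that the difference of the two sides is positive semidefinite. The function name, matrix sizes and the value of \(s\) are illustrative.

```python
import numpy as np


def corollary_gap_min_eig(sigma1, sigma2, s):
    """Smallest eigenvalue of
        s*S1^T S1 + (1/s)*S2^T S2 - (S1^T S2 + S2^T S1),
    which equals (sqrt(s)*S1 - S2/sqrt(s))^T (sqrt(s)*S1 - S2/sqrt(s))
    and is therefore positive semidefinite for any s > 0."""
    gap = (s * sigma1.T @ sigma1
           + (1.0 / s) * sigma2.T @ sigma2
           - (sigma1.T @ sigma2 + sigma2.T @ sigma1))
    # gap is symmetric, so eigvalsh is the appropriate solver.
    return np.linalg.eigvalsh(gap).min()


rng = np.random.default_rng(0)          # fixed seed for reproducibility
S1 = rng.standard_normal((4, 3))        # hypothetical test matrices
S2 = rng.standard_normal((4, 3))
min_eig = corollary_gap_min_eig(S1, S2, s=0.7)
print("smallest eigenvalue of the gap:", min_eig)
```

The scalar \(s\) is a free parameter: a small \(s\) shifts weight onto the \(\Sigma _2\) term and a large \(s\) onto the \(\Sigma _1\) term, which is the trade-off visible between conditions (i) and (ii) of Theorem 1.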

3 Main result

In this section, the finite-time stochastic synchronization of time-delay neural networks with noise disturbance is investigated based on the above preliminaries. For this, let \(e(t)=y(t)-x(t)\), and then the error system can be obtained as

The main result is given as the following Theorem 1.

Theorem 1

Suppose that I is the identity matrix of appropriate order. If there exist constants q, s, \(k_1 \), \(k_2 \) satisfying the following two conditions:

(i) \(A+A^{T}+L_f (B+B^{T})+(k_2 +q-2k_1 +sL_g )I\le 0\),

(ii) \(s^{-1}L_g C^{T}C-k_2 I\le 0\),

then the finite-time stochastic synchronization between systems (1) and (2) can be obtained under the feedback control

where \(k_1 \),\(k_2 \) are the control strengths, \(\eta >0\),\(0<\theta <1\) and

The finite time is estimated by \(E\left[ {T_0 (x_0 )} \right] \le T=t_0 +\frac{(V(x_0 ))^{\frac{1-\theta }{2}}}{\eta (1-\theta )}\).

Note: Here \(\left\| \cdot \right\| \) refers to Euclidean norm.

Proof

Firstly, substituting (11) into (10), the error system can be obtained as

Secondly, the Lyapunov function is chosen as

Applying the operator \(\mathcal{L}\) defined in Eq. (4) to the function V along the error system (12), we get

According to the Corollary of Lemma 3, there is a scalar \(s>0\) such that

Meanwhile, it is noticed that

Therefore, in line with Lemma 2 and the conditions in Theorem 1, we have

On the basis of Lemma 1, the trivial solution of (12) is globally stochastically finite-time stable. It means that the finite-time synchronization of systems (1) and (2) can be achieved for almost all initial data, and the finite time is estimated by

where \(V(0)=(1+\tau )\sum _{i=1}^N {e_i^2 (0)} \). Theorem 1 is proved.
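The link between Lemma 1 and the time estimate in Theorem 1 can be made explicit. The sketch below assumes that Lemma 1 has its usual form, namely that \(\mathcal{L}V(x)\le -k(V(x))^{\rho }\) implies \(E[T_0 (x_0 )]\le \frac{(V(x_0 ))^{1-\rho }}{k(1-\rho )}\). The identification \(k=2\eta \), \(\rho =\frac{1+\theta }{2}\) is inferred from the stated estimate rather than quoted from the proof:

\[ \mathcal{L}V\le -2\eta V^{\frac{1+\theta }{2}}\quad \Longrightarrow \quad E\left[ T_0 \right] \le \frac{V(0)^{1-\frac{1+\theta }{2}}}{2\eta \left( 1-\frac{1+\theta }{2}\right) }=\frac{V(0)^{\frac{1-\theta }{2}}}{\eta (1-\theta )}. \]

Since \(0<\theta <1\) gives \(\frac{1}{2}<\rho <1\), the requirement \(0<\rho <1\) of Lemma 1 is satisfied, and adding the initial time \(t_0 \) recovers the bound \(T\) of Theorem 1.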

Remark 1

From the conditions (i) and (ii) of Theorem 1, it is known that, for any high-level noise, there are sufficiently large positive constants \(k_1 \) and \(k_2 \) such that the finite-time synchronization of neural networks is obtained in probability. That is to say, this kind of synchronization is robust to the perturbation.

Remark 2

In the light of the Itô formula, the decay rate of the function V(t) depends on the magnitude of \(\mathcal{L}V\). It means that the magnitude of \(\mathcal{L}V\) dominates the convergence speed of the error system (10). So the synchronization time of systems (1) and (2) is controlled by the magnitude of \(\mathcal{L}V\).

Remark 3

From Eq. (16), it is easy to see that, for fixed initial values, the convergence time of the proposed algorithm is closely related to the protocol parameters \(\eta \) and \(\theta \). The following conclusions can be further obtained: (a) For a fixed value of \(\theta \), the synchronization time decreases as \(\eta \) increases. (b) If \(\eta \) is fixed, the synchronization time becomes longer as \(\theta \) increases when \(1-2/\ln V(0)<\theta <1\), while it becomes shorter as \(\theta \) increases when \(0<\theta <1-2/\ln V(0)\), which can be derived from the derivative of T with respect to \(\theta \). This result depends on the initial values of the systems. Therefore, in the following numerical simulations, we only consider the influence of \(\eta \) on the synchronization time.
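The dependence on \(\eta \) and \(\theta \) can be explored numerically with the bound stated in Theorem 1, \(T=t_0 +\frac{V^{(1-\theta )/2}}{\eta (1-\theta )}\). The Python sketch below is illustrative only; the function names and the sample values of \(V(0)\) are assumptions, not values from the paper.

```python
import math


def settling_time_bound(v0, eta, theta, t0=0.0):
    """Upper bound T = t0 + v0**((1 - theta)/2) / (eta*(1 - theta))
    from Theorem 1, for eta > 0, 0 < theta < 1 and v0 >= 0."""
    if eta <= 0 or not (0 < theta < 1) or v0 < 0:
        raise ValueError("need eta > 0, 0 < theta < 1, v0 >= 0")
    return t0 + v0 ** ((1 - theta) / 2) / (eta * (1 - theta))


def theta_turning_point(v0):
    """Value theta* = 1 - 2/ln(v0) at which dT/dtheta changes sign.
    It lies in (0, 1) only when ln(v0) > 2; otherwise return None,
    and T increases with theta on the whole interval (0, 1)."""
    if v0 <= math.e ** 2:
        return None
    return 1 - 2 / math.log(v0)


# (a) For fixed theta, a larger eta gives a smaller bound.
print(settling_time_bound(4.0, 6.0, 0.01), settling_time_bound(4.0, 12.0, 0.01))

# (b) For a large initial value, the bound is smallest near theta*.
print(theta_turning_point(100.0))
```

Note that \(1-2/\ln V(0)\) is positive only when \(V(0)>e^{2}\). For smaller \(V(0)\), the first regime in conclusion (b) is empty and the bound grows with \(\theta \) throughout \(0<\theta <1\).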

4 Numerical simulations

In this section, some numerical simulations are carried out in MATLAB to verify the feasibility and effectiveness of the proposed scheme. For this, we consider the following neural network with a single time-delay [40]:

where \(a_i >0\), \(b_{ij}\) and \(c_{ij}\) are real numbers. \(\tau >0\) is time-delay. \(I_i (i=1,2,\ldots ,N)\) are external inputs. The input–output transfer function f(x) is selected as \(\tanh (x)\), which means \(L_f =1\). Let

and then system (17) can be rewritten as

which is taken as the master system and the corresponding slave system is

where controller vector U is taken as (11).

In the simulations, the value of the time-delay is \(\tau =1\). The control strengths are taken as \(k_1 =20,k_2 =2\). The initial values are taken randomly from the interval [−1.0, 1.0]. In addition, we choose \(\delta (e(t))=\sqrt{2\delta _0 }e(t)\), which satisfies \(trace(\delta ^{T}\delta )\le 2\delta _0 e^{T}(t)e(t)\). To investigate the effect of the number of nodes on the synchronization time, we select neural networks with two nodes, five nodes and ten nodes, respectively. Furthermore, for the purpose of comparing the convergence rate of the system, we consider the total error function

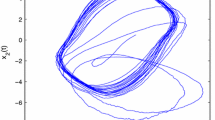

Case 1 When \(N=2\), we take \(A=\left( {{\begin{array}{cc} 1&{} 0 \\ 0&{} 1 \\ \end{array} }} \right) \), \(B=\left( {{\begin{array}{cc} {3.0}&{} {5.0} \\ {0.1}&{} {2.0} \\ \end{array} }} \right) \), \(C=\left( {{\begin{array}{cc} {-2.5}&{} {0.2} \\ {0.1}&{} {-1.5} \\ \end{array} }} \right) \), \(\theta =0.01\) and \(\eta =6.0\). Figure 1 shows the time evolution curves of the drive system (18) and the response system (19) without controller, from which it can be seen that the two systems gradually separate from each other over time. To illustrate the main result, the error dynamics of systems (18) and (19) with controller (11) are simulated under several conditions. Figure 2 depicts the error dynamics of systems (18) and (19) when \(N=2,\theta =0.01\) and \(\eta =6.0\), which suggests that stochastic synchronization can be realized in finite time for the 2-node neural network. Figure 3 gives the evolution of the total error function E(t) in (20) with the same protocol parameter values as in Fig. 2.
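Conditions (i) and (ii) of Theorem 1 can be checked numerically for the Case 1 matrices by computing the largest eigenvalue of each left-hand side. The Python sketch below does this under stated assumptions: \(L_g =1\) (the delayed activation is also taken to be \(\tanh \)), and the values \(q=0.2\) and \(s=4\) are hypothetical, since they are not specified in the simulation settings.

```python
import numpy as np


def theorem1_conditions(A, B, C, L_f, L_g, k1, k2, q, s):
    """Return the largest eigenvalues of the matrices in conditions
    (i) and (ii) of Theorem 1; both must be <= 0 for the theorem to apply."""
    I = np.eye(A.shape[0])
    M1 = A + A.T + L_f * (B + B.T) + (k2 + q - 2 * k1 + s * L_g) * I
    M2 = (L_g / s) * (C.T @ C) - k2 * I
    # Both matrices are symmetric, so eigvalsh is appropriate.
    return np.linalg.eigvalsh(M1).max(), np.linalg.eigvalsh(M2).max()


# Case 1 matrices and control strengths as given in the text.
A1 = np.eye(2)
B1 = np.array([[3.0, 5.0], [0.1, 2.0]])
C1 = np.array([[-2.5, 0.2], [0.1, -1.5]])

# L_g, q and s are assumptions made for this illustration.
lam_i, lam_ii = theorem1_conditions(A1, B1, C1, L_f=1.0, L_g=1.0,
                                    k1=20.0, k2=2.0, q=0.2, s=4.0)
print("condition (i) holds:", lam_i <= 0, " condition (ii) holds:", lam_ii <= 0)
```

With these assumed values both conditions hold. The scalar \(s\) cannot be chosen arbitrarily small: for example, \(s=3\) makes condition (ii) fail for this \(C\) when \(k_2 =2\).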

Case 2 When \(N=5\), the matrices are chosen as

Figure 4 traces the error evolution of systems (18) and (19) when \(\theta =0.01\) and \(\eta =6.0\), which means that the finite-time stochastic synchronization can be obtained if the neural network possesses 5 nodes. Figure 5 depicts dynamical behavior of the total error function E(t) in (20) with the same protocol parameter values of Fig. 4.

Case 3 When \(N=10\), A is taken as the 10th order unit matrix and the matrices B, C are chosen as

Figure 6 shows the time evolution of the error dynamics of systems (18) and (19) when \(\theta =0.01\) and \(\eta =6.0\), from which we know that the finite-time stochastic synchronization can be obtained for the 10-node neural network. Figure 7 shows the dynamics of the total error function E(t) in (20) with \(N=10\), \(\theta =0.01\), \(\eta =6.0\). Furthermore, comparing Figs. 3, 5 and 7, it can be seen that the total error function of a neural network with more nodes converges more slowly than that of a network with fewer nodes.

To examine how the stochastic synchronization time depends on the parameter \(\eta \), Fig. 8 shows the evolution of E(t) over time t when \(N=2,\theta =0.01\) and \(\eta \) takes different values, which indicates that the time required to realize the finite-time stochastic synchronization decreases as \(\eta \) increases. This phenomenon is consistent with the comment in Remark 3.
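The kind of experiment described in this section can be sketched with an Euler–Maruyama scheme. The Python code below is not the authors' MATLAB program and rests on several assumptions that are labeled in the comments: the node dynamics are taken as \(\dot{x}=-x+B\tanh (x(t))+C\tanh (x(t-\tau ))\) with zero external input, the controller is a simplified stand-in \(U=-k_1 e-\eta \,\mathrm{sign}(e)|e|^{\theta }\) rather than controller (11), and the noise level \(\delta _0 =0.1\), the step size and the initial values are hypothetical.

```python
import numpy as np


def simulate_case1(eta=6.0, theta=0.01, k1=20.0, delta0=0.1,
                   tau=1.0, dt=1e-3, t_end=3.0, seed=0):
    """Euler-Maruyama sketch of a 2-node drive/response pair.
    Returns the Euclidean norm of e(t) = y(t) - x(t) on [0, t_end]."""
    rng = np.random.default_rng(seed)
    B = np.array([[3.0, 5.0], [0.1, 2.0]])      # Case 1 matrices
    C = np.array([[-2.5, 0.2], [0.1, -1.5]])
    n = 2
    d = int(round(tau / dt))                    # delay measured in steps
    steps = int(round(t_end / dt))
    x = np.empty((d + steps + 1, n))
    y = np.empty_like(x)
    x[:d + 1] = [0.5, -0.3]                     # constant history (hypothetical)
    y[:d + 1] = [-0.8, 0.9]
    for k in range(d, d + steps):
        e = y[k] - x[k]
        # ASSUMED dynamics: -x + B tanh(x(t)) + C tanh(x(t - tau)).
        fx = -x[k] + B @ np.tanh(x[k]) + C @ np.tanh(x[k - d])
        # ASSUMED simplified controller, not controller (11) of the paper.
        u = -k1 * e - eta * np.sign(e) * np.abs(e) ** theta
        fy = -y[k] + B @ np.tanh(y[k]) + C @ np.tanh(y[k - d]) + u
        dW = rng.normal(0.0, np.sqrt(dt), size=n)   # Wiener increments
        x[k + 1] = x[k] + fx * dt
        # Noise term sqrt(2*delta0) * e * dW, matching delta(e) in the text.
        y[k + 1] = y[k] + fy * dt + np.sqrt(2.0 * delta0) * e * dW
    return np.linalg.norm(y[d:] - x[d:], axis=1)


err = simulate_case1()
print("initial error norm:", err[0], " final error norm:", err[-1])
```

Because the sign-type term is discretized with a fixed step, the computed error does not reach exactly zero but chatters within a small band whose width scales with \(\eta \,dt\). Repeating the run with different values of `eta` gives a rough picture of the trend described above, although a single noisy trajectory is not a substitute for the expectation in the theoretical bound.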

Fig. 3 The time evolution curve of E(t) in (20) with controller, \(N=2\), \(\eta =6.0\), \(\theta =0.01\)

Fig. 5 The time evolution curve of E(t) in (20) with controller, \(N=5\), \(\eta =6.0\), \(\theta =0.01\)

Fig. 7 The time evolution curve of E(t) in (20) with controller, \(N=10\), \(\eta =6.0\), \(\theta =0.01\)

Fig. 8 The evolution of E(t) in (20) along time t for \(N=2\), \(\theta =0.01\) and different values of \(\eta \)

5 Conclusions and future works

In this paper, based on the finite-time stability theory of stochastic differential equations, stochastic synchronization of time-delay neural networks with noise disturbance is achieved in finite time via suitable controllers. This result is not only obtained by theoretical analysis, but also verified by numerical simulations. Furthermore, factors affecting the convergence rate are described. When the number of nodes in the neural network is fixed, a larger \(\eta \) helps to improve the convergence rate. Under the same conditions, the convergence time is positively correlated with the number of nodes in the neural network. The fewer the nodes, the less time is required to achieve stochastic synchronization.

Because the neural networks discussed here take into account both time-delay and noise disturbance, they are attractive and practical for understanding the dynamic behavior of neural networks. This will be helpful for the application of neural networks.

In this work, the time-delay is assumed to be constant. For some systems with time-varying delay, adaptive control [41, 42] can be used to realize the finite-time stochastic synchronization of neural networks. Our next work is to investigate this problem to further understand the complexity mechanism of the neural system and to avoid unfavorable phenomena as much as possible.

References

Pecora, L.M., Carroll, T.L.: Synchronization in chaotic systems. Phys. Rev. Lett. 64(8), 821–824 (1990)

Chen, X., Lu, J.: Adaptive synchronization of different chaotic systems with fully unknown parameters. Phys. Lett. A 364(2), 123–128 (2007)

Lin, W.: Adaptive chaos control and synchronization in only locally Lipschitz systems. Phys. Lett. A 372(18), 3195–3200 (2008)

Ma, J., Zhang, A.H., Xia, Y.F., Zhang, L.P.: Optimize design of adaptive synchronization controllers and parameter observers in different hyperchaotic systems. Appl. Math. Comput. 215(9), 3318–3326 (2010)

Aghababa, M.P., Khanmohammadi, S., Alizadeh, G.: Finite-time synchronization of two different chaotic systems with unknown parameters via sliding mode technique. Appl. Math. Model. 35(6), 3080–3091 (2011)

Chen, D., Zhang, R., Ma, X., Liu, S.: Chaotic synchronization and anti-synchronization for a novel class of multiple chaotic systems via a sliding mode control scheme. Nonlinear Dyn. 69(1–2), 35–55 (2012)

Tian, Y.W., Zhuang, J.L., Xu, R.Y.: Synchronization of two different chaotic systems using novel adaptive fuzzy sliding mode control. Chaos Interdiscip. J. Nonlinear Sci. 18(3), 033133 (2008)

Ji, D.H., Jeong, S.C., Park, J.H., Lee, S.M., Won, S.C.: Adaptive lag synchronization for uncertain complex dynamical network with delayed coupling. Appl. Math. Comput. 218(9), 4872–4880 (2012)

Bao, H.B., Cao, J.D.: Projective synchronization of fractional-order memristor-based neural networks. Neural Netw. 63, 1–9 (2015)

Li, H.L., Jiang, Y.L., Wang, Z.L.: Anti-synchronization and intermittent anti-synchronization of two identical hyperchaotic Chua systems via impulsive control. Nonlinear Dyn. 79(2), 919–925 (2015)

Shi, X., Lu, Q., Wang, H.: In-phase burst synchronization and rhythm dynamics of complex neuronal networks. Int. J. Bifurc. Chaos 22(5), 1250101 (2012)

Vinck, M., Oostenveld, R., van Wingerden, M., Battaglia, F., Pennartz, C.M.: An improved index of phase-synchronization for electrophysiological data in the presence of volume-conduction, noise and sample-size bias. Neuroimage 55(4), 1548–1565 (2011)

Wang, Z.L., Wang, C., Shi, X.R., Ma, J., Tang, K.M., Cheng, H.S.: Realizing hybrid synchronization of time-delay hyperchaotic 4D systems via partial variables. Appl. Math. Comput. 245, 427–437 (2014)

Li, B., Xu, D.: Exponential \(p\)-stability of stochastic recurrent neural networks with mixed delays and Markovian switching. Neurocomputing 103, 239–246 (2013)

Yuan, Y., Sun, F.: Delay-dependent stability criteria for time-varying delay neural networks in the delta domain. Neurocomputing 125, 17–21 (2014)

Cheng, J., Zhu, H., Zhong, S., Li, G.: Novel delay-dependent robust stability criteria for neutral systems with mixed time-varying delays and nonlinear perturbations. Appl. Math. Comput. 219(14), 7741–7753 (2013)

Song, X.L., Wang, C.N., Ma, J., Tang, J.: Transition of electric activity of neurons induced by chemical and electric autapses. Sci. China Technol. Sci. 58, 1007–1014 (2015)

Song, X.L., Wang, C.N., Ma, J., Ren, G.D.: Collapse of ordered spatial pattern in neuronal network. Phys. A 451, 95–112 (2016)

Ma, J., Xu, Y., Ren, G.D.: Prediction for breakup of spiral wave in a regular neuronal network. Nonlinear Dyn. 84, 497–509 (2016)

Vincent, U.E., Guo, R.W.: Finite-time synchronization for a class of chaotic and hyperchaotic systems via adaptive feedback controller. Phys. Lett. A 375(24), 2322–2326 (2011)

Wang, X., Fang, J.A., Mao, H., Dai, A.: Finite-time global synchronization for a class of Markovian jump complex networks with partially unknown transition rates under feedback control. Nonlinear Dyn. 79(1), 47–61 (2014)

Shi, T.: Finite-time control of linear systems under time-varying sampling. Neurocomputing 151, 1327–1331 (2015)

Velmurugan, G., Rakkiyappan, R., Cao, J.: Finite-time synchronization of fractional-order memristor-based neural networks with time delays. Neural Netw. 73, 36–46 (2016)

Bhat, S.P., Bernstein, D.S.: Finite-time stability of continuous autonomous systems. SIAM J. Control Optim. 38(3), 751–766 (2000)

Li, B.: Finite-time synchronization for complex dynamical networks with hybrid coupling and time-varying delay. Nonlinear Dyn. 76(2), 1603–1610 (2014)

Lin, X., Du, H., Li, S.: Finite-time boundedness and \(L_2\)-gain analysis for switched delay systems with norm-bounded disturbance. Appl. Math. Comput. 217(12), 5982–5993 (2011)

Cao, L., Ma, Y.: Linear generalized outer synchronization between two different complex dynamical networks with noise perturbation. Int. J. Nonlinear Sci. 3, 373–379 (2012)

Sun, Y., Li, W., Zhao, D.: Finite-time stochastic outer synchronization between two complex dynamical networks with different topologies. Chaos Interdiscip. J. Nonlinear Sci. 22(2), 023152 (2012)

Li, L., Jian, J.: Finite-time synchronization of chaotic complex networks with stochastic disturbance. Entropy 17(1), 39–51 (2014)

Jiang, N., Liu, X., Yu, W., Shen, J.: Finite-time stochastic synchronization of genetic regulatory networks. Neurocomputing 167, 314–321 (2015)

Li, H.: Synchronization stability for discrete-time stochastic complex networks with probabilistic interval time-varying delays. J. Phys. A Math. Theor. 44(10), 697–708 (2011)

Wang, Z., Liu, Y., Yu, L., Liu, X.: Exponential stability of delayed recurrent neural networks with Markovian jumping parameters. Phys. Lett. A 356(4), 346–352 (2006)

Gan, Q., Liang, Y.: Synchronization of chaotic neural networks with time delay in the leakage term and parametric uncertainties based on sampled-data control. J. Franklin Inst. 349(6), 1955–1971 (2012)

Wu, Z.G., Ju, H.P., Su, H., Chu, J.: Discontinuous Lyapunov functional approach to synchronization of time-delay neural networks using sampled-data. Nonlinear Dyn. 69(4), 2021–2030 (2012)

Mu, X., Chen, Y.: Synchronization of delayed discrete-time neural networks subject to saturated time-delay feedback. Neurocomputing 175(2), 293–299 (2015)

Yu, H., Wang, J., Du, J., Deng, B., Wei, X.: Local and global synchronization transitions induced by time delays in small-world neuronal networks with chemical synapses. Cogn. Neurodyn. 9(1), 93–101 (2015)

Chen, W., Jiao, L.C.: Finite-time stability theorem of stochastic nonlinear systems. Automatica 46(12), 2105–2108 (2010)

Wang, H., Han, Z., Xie, Q., Zhang, W.: Finite-time chaos synchronization of unified chaotic system with uncertain parameters. Commun. Nonlinear Sci. 14, 2239–2247 (2009)

Huang, J.J., Li, C.D., Huang, T.W., He, X.: Finite-time lag synchronization of delayed neural networks. Neurocomputing 139, 145–149 (2014)

Lu, H.: Chaotic attractors in delayed neural networks. Phys. Lett. A 298(2), 109–116 (2002)

Lu, J.Q., Wang, Z.D., Cao, J.D., Ho, D.W.C., Kurths, J.: Pinning impulsive stabilization of nonlinear dynamical networks with time-varying delay. Int. J. Bifurc. Chaos 22(7), 137–139 (2012)

Lu, J., Cao, J.: Adaptive synchronization of uncertain dynamical networks with delayed coupling. Nonlinear Dyn. 53(1–2), 107–115 (2008)

Acknowledgements

The authors give many thanks to the anonymous reviewers for their valuable comments. This work is supported by National Natural Science Foundation of China (Grant No. 11472238), the Natural Science Foundation of Xinjiang Uygur Autonomous Region (Grant No. 2015211C267) and the Qing Lan Project of the Jiangsu Higher Education Institutions of China.


Cite this article

Shi, X., Wang, Z. & Han, L. Finite-time stochastic synchronization of time-delay neural networks with noise disturbance. Nonlinear Dyn 88, 2747–2755 (2017). https://doi.org/10.1007/s11071-017-3408-2


DOI: https://doi.org/10.1007/s11071-017-3408-2