Abstract

This paper addresses the finite-time stochastic synchronization problem for chaotic neural networks with mixed time-varying delays and stochastic disturbance. A state feedback controller and an adaptive controller are designed so that the response system synchronizes with the corresponding drive system in finite time. Several novel finite-time synchronization criteria are derived from finite-time stability theory. A numerical example illustrates the effectiveness of the proposed methods.

1 Introduction

In recent years, a great deal of effort has been devoted to neural networks (see [1–4]), which have been successfully applied in a variety of fields such as image and signal processing, parallel computing, optimization, pattern recognition, associative memory, and automatic control. Hopfield neural networks and cellular neural networks have both become active research areas over the past two decades because of their potential for modeling complex dynamics, and both have been successfully applied to various linear and nonlinear programming problems as well as to image processing. Stability analysis of such neural networks is therefore an important issue, and several stability criteria have been developed in [3, 4] and the references cited therein.

Since the pioneering work of Pecora and Carroll [5], synchronization and chaos control have been extensively studied because of their potential engineering applications in secure communication, biological systems, and information processing [6–14]. It is well known that chaotic systems exhibit complex dynamical behavior with special features, such as extreme sensitivity to tiny variations of initial conditions and bounded trajectories in phase space. Synchronization is a typical collective behavior of chaotic neural networks and has attracted increasing attention because it arises in many neural network models. To the best of our knowledge, most existing papers are concerned with asymptotic or exponential synchronization. In practice, however, networks are often required to synchronize as quickly as possible, particularly in engineering applications. Finite-time synchronization control techniques are therefore needed to obtain faster convergence in neural networks [15–18].

On the other hand, because of network traffic congestion and the finite speed of signal transmission, time delays commonly occur in neural networks and may lead to oscillation or instability. Hence, the dynamical behavior of delayed neural networks is an active research topic and has received considerable attention in the past few years. However, most recent papers on delayed neural networks have been restricted to the simple case of discrete delays. Because a neural network usually contains many parallel pathways with a variety of axon sizes and lengths, it has a spatial extent, which motivates modeling it with distributed delays. Therefore, both discrete and distributed time-varying delays should be taken into account when modeling realistic neural networks [19–21].

In the real world, random uncertainties such as stochastic forces acting on physical systems and noisy measurements caused by environmental uncertainties make a model with stochastic perturbations more appropriate than a deterministic one. Several works have addressed stochastic chaos synchronization of master-slave type [22–26]. In [22], exponential synchronization of stochastically perturbed chaotic delayed neural networks is considered. In [23, 24], adaptive synchronization of delayed neural networks with stochastic perturbation is investigated. In [25], the authors investigate the finite-time stochastic synchronization problem for complex networks with stochastic noise perturbations and introduce a new kind of complex network model that includes not only diffusive linear couplings but also unknown diffusive couplings and Wiener processes. In [26], networks with non-delayed and delayed coupling are studied using impulsive control and periodically intermittent control. The models in [25, 26] are therefore more practical. However, the model in [25] contains no time delays, and the model in [26] contains only discrete time delays.

Motivated by the above discussion, this paper addresses the finite-time stochastic synchronization of neural networks with mixed time-varying delays and stochastic disturbance by designing two different controllers: a feedback controller and an adaptive controller.

Notations: The superscript “T” stands for matrix transposition; \({\mathbb {R}}\) denotes the set of real numbers; \({\mathbb {R}}^{n}\) denotes the n-dimensional Euclidean space; the notation \(P> 0\) means that \(P\) is real symmetric and positive definite; \(I\) and \(0\) represent the identity matrix and the zero matrix, respectively; \({\mathcal {E}}\{\cdot \}\) represents the mathematical expectation; \( {\mathcal {L}} \) represents the diffusion operator; matrices whose dimensions are not explicitly stated are assumed to be compatible for algebraic operations; for \(r>0\), \(C([-r,0];{\mathbb {R}}^{n})\) denotes the family of continuous functions \(\varphi \) from \([-r,0]\) to \({\mathbb {R}}^{n}\) with the norm \(\Vert \varphi \Vert =\sup _{-r\le s\le 0}|\varphi (s)|\); \(\dot{x}(t)\) denotes the derivative of \(x(t)\); the Euclidean norm in \({\mathbb {R}}^{n}\) is denoted by \(\Vert \cdot \Vert _{2}\), so that \(\Vert x\Vert _{2}=\sqrt{x^{T}x}\) for \( x\in {\mathbb {R}}^{n}\); for an \(n\times n\) matrix \(A\), \(|A|=(|a_{ij}|)_{n\times n}\) and \(\Vert A\Vert =\sqrt{\lambda _{max}(A^{T}A)}\), where \(\lambda _{max}(\cdot )\) denotes the maximum eigenvalue of a symmetric matrix.
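The matrix norm \(\Vert A\Vert =\sqrt{\lambda _{max}(A^{T}A)}\) is the spectral norm, i.e. the largest singular value of \(A\). The minimal NumPy sketch below, using an arbitrary illustrative matrix that is not taken from the paper, checks that the eigenvalue formula agrees with NumPy's built-in 2-norm and shows the entrywise absolute value \(|A|\).

```python
import numpy as np

def spectral_norm(A: np.ndarray) -> float:
    """||A|| = sqrt(lambda_max(A^T A)).

    A^T A is symmetric positive semidefinite, so eigvalsh (for symmetric
    matrices) returns real eigenvalues in ascending order; take the last one.
    """
    return float(np.sqrt(np.linalg.eigvalsh(A.T @ A)[-1]))

# Illustrative matrix only (hypothetical, not taken from the paper)
A = np.array([[2.0, -0.1],
              [-5.0, 3.0]])

print("||A|| via eigenvalues:", spectral_norm(A))
print("||A|| via numpy:      ", np.linalg.norm(A, 2))  # largest singular value
print("|A| =\n", np.abs(A))                             # entrywise absolute value
```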

The rest of this paper is organized as follows. In Sect. 2, the model formulation and some preliminaries are given. In Sect. 3, two different controllers are designed to achieve finite-time synchronization of neural networks with mixed time-varying delays and stochastic disturbance. In Sect. 4, a numerical example is presented to demonstrate the validity of the proposed results, and Sect. 5 demonstrates an application to secure communication. Some conclusions are drawn in Sect. 6.

2 Model description and preliminaries

In this paper, the manifold (drive) network is given by

where \(z(t)\in {\mathbb {R}}^{n}\) is the state vector; \(C=\text {diag}(c_{1},c_{2},\ldots ,c_{n})\), where \(c_{i}\) denotes the self-inhibition of the \(i\)th neuron; \(A=(a_{ij})_{n\times n}\), \( B=(b_{ij})_{n\times n}\) and \( E=(e_{ij})_{n\times n}\) are real matrices representing, respectively, the connection weight matrix, the discretely delayed connection weight matrix, and the distributively delayed connection weight matrix; \(g(x(t))=(g_{1}(x_{1}(t)), g_{2}(x_{2}(t)), \ldots ,g_{n}(x_{n}(t)))^{T}\) is a diagonal mapping, with \(g_{i}(\cdot ),i=1,\ldots ,n\), modeling the nonlinear input-output activation of the \(i\)th neuron; \(J(t)=(J_{1}(t),J_{2}(t),\ldots ,J_{n}(t))^{T}\) is a constant external input; \(\alpha (t)\) and \(\theta (t)\) represent the discrete and distributed time delays in the network, respectively; and \(z(t)=\phi _{0}(t)\in C([-\tau ,0];{\mathbb {R}}^{n})\) is the initial condition.
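The display of (1) is not reproduced in this version of the text. Assuming the standard mixed-delay form consistent with the definitions above, \(\dot{z}(t)=-Cz(t)+Ag(z(t))+Bg(z(t-\alpha (t)))+E\int _{t-\theta (t)}^{t}g(z(s))\,ds+J(t)\), the forward-Euler sketch below shows one way to handle both delays numerically: the discrete delay is read from the stored trajectory, and the distributed delay is approximated by a left Riemann sum over the last \(\theta (t)/h\) steps. The delay \(0.6e^{t}/(1+e^{t})\) and \(g=\tanh \) follow Example 1; the matrices, step size and horizon are hypothetical placeholders, since the values of Example 1 are not available here.

```python
import numpy as np

def delay(t: float) -> float:
    """alpha(t) = theta(t) = 0.6 e^t / (1 + e^t) (Example 1), overflow-safe form."""
    return 0.6 / (1.0 + np.exp(-t))

def simulate_drive(C, A, B, E, J, phi0, t_end=5.0, h=1e-3, t_hist=1.0, g=np.tanh):
    """Forward-Euler integration of the ASSUMED mixed-delay drive system (1).

    phi0: constant initial state on [-t_hist, 0]; t_hist must be >= sup of the delays.
    Returns the time grid on [0, t_end] and the states z(t) on that grid.
    """
    n_hist = int(round(t_hist / h))            # grid points stored before t = 0
    n_steps = int(round(t_end / h))
    z = np.empty((n_hist + n_steps + 1, len(phi0)))
    z[: n_hist + 1] = phi0                     # history on [-t_hist, 0]
    for k in range(n_hist, n_hist + n_steps):
        t = (k - n_hist) * h                   # index k corresponds to time t
        d = int(round(delay(t) / h))           # delay measured in steps
        z_now = z[k]
        z_del = z[k - d]                       # z(t - alpha(t))
        # Left Riemann sum approximating int_{t - theta(t)}^{t} g(z(s)) ds
        dist = h * g(z[k - d : k]).sum(axis=0) if d > 0 else np.zeros_like(z_now)
        rhs = -C @ z_now + A @ g(z_now) + B @ g(z_del) + E @ dist + J
        z[k + 1] = z_now + h * rhs
    times = h * np.arange(n_steps + 1)
    return times, z[n_hist:]

# Hypothetical 2-neuron parameters (NOT the values of Example 1)
C = np.eye(2)
A = np.array([[2.0, -0.1], [-5.0, 3.0]])
B = np.array([[-1.5, -0.1], [-0.2, -2.5]])
E = 0.1 * np.eye(2)
J = np.zeros(2)
times, z = simulate_drive(C, A, B, E, J, phi0=np.array([0.3, 0.5]))
print("z(t_end) =", z[-1])
```

Storing the whole trajectory keeps the delay lookup a simple index shift; for long horizons a ring buffer of length \(\tau /h\) would suffice.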

Consider the following stochastic neural network

where \(x(t)=(x_{1}(t),x_{2}(t),\ldots ,x_{n}(t))^{T}\) is the vector of neuron states at time \(t\), and the system parameters \( C,A,B\) and \(g,\alpha (t),\theta (t),J(t)\) have the same definitions as in (1); \(e(t)=(e_{1}(t),e_{2}(t),\ldots ,e_{n}(t))^{T}=x(t)-z(t)\) denotes the error between the state vector \(x(t)\) and the desired state vector \(z(t)\); \(w(t)\in {\mathbb {R}} \) is a scalar Brownian motion defined on a complete probability space \((\Omega ,{\mathcal {F}},{\mathcal {P}})\) satisfying \({\mathcal {E}}\{dw(t)\}=0\) and \({\mathcal {E}}\{dw^2(t)\}=dt\); \(\sigma :{\mathbb {R}}^{+}\times {\mathbb {R}}^{n}\times {\mathbb {R}}^{n}\to {\mathbb {R}}^{n}\) is the noise intensity function; and \(x(t)=\phi (t)\in \mathcal {L}^{2}_{{\mathcal {F}}_0}([-\tau ,0],{\mathbb {R}}^{n})\) is the initial condition, where \({\mathcal {L}}^{2}_{{\mathcal {F}}_0}([-\tau ,0],{\mathbb {R}}^{n})\) denotes the set of \({\mathcal {F}}_0\)-measurable \( C([-\tau ,0];{\mathbb {R}}^{n})\)-valued stochastic processes \(\xi =\{\xi (s)|-\tau \le s\le 0\}\) such that \( \sup \nolimits _{-\tau \le s\le 0}{\mathcal {E}}\{\Vert \xi (s)\Vert ^2\}<\infty \), and \(\tau =\sup \nolimits _{t\in {\mathbb {R}} }\{\alpha (t),\theta (t)\}\). This type of stochastic perturbation can be regarded as the result of random uncertainties that affect the dynamic behavior of the controlled networks.
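The conditions \({\mathcal {E}}\{dw(t)\}=0\) and \({\mathcal {E}}\{dw^2(t)\}=dt\) are exactly what an Euler–Maruyama discretization uses: over a step of length \(h\), the increment \(\Delta w\) is drawn from \(N(0,h)\). The minimal sketch below (step size, sample count and seed are arbitrary choices) checks these two moments empirically and shows how a noise term \(\sigma \,dw\) driven by a single scalar Brownian motion enters one step; the helper `em_step` is a hypothetical illustration, not the paper's simulation code.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
h = 1e-3                      # step size (arbitrary)
n = 200_000                   # number of sampled increments (arbitrary)

# Brownian increments: Delta w ~ N(0, h), i.e. standard deviation sqrt(h)
dw = rng.normal(loc=0.0, scale=np.sqrt(h), size=n)

print("mean / sqrt(h):", dw.mean() / np.sqrt(h))   # should be close to 0
print("var / h:       ", dw.var() / h)             # should be close to 1

def em_step(x, drift, sigma, h, rng):
    """One Euler-Maruyama step for dx = drift(x) dt + sigma(x) dw with scalar w.

    drift(x) and sigma(x) return arrays shaped like x, so one scalar Brownian
    increment drives every component, as with the scalar w(t) in (2).
    """
    dw_k = rng.normal(0.0, np.sqrt(h))
    return x + drift(x) * h + sigma(x) * dw_k

# Usage: one step of dx = -x dt + 0.1 x dw from x = (1, -1)
x_next = em_step(np.array([1.0, -1.0]), lambda x: -x, lambda x: 0.1 * x, h, rng)
print("x after one step:", x_next)
```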

In this paper, a feedback controller and an adaptive controller are used to synchronize the drive and response systems.

From (1) and (2), the error system is obtained as

where \(\tilde{g}(t)=g(x(t))-g(z(t))\). The initial condition of the error system (3) on \([-\tau ,0]\) can be given by

where \(\varphi (t)= \phi (t) - \phi _{0}(t)\). It is obvious that \(\varphi (t) \in {\mathcal {L}}^{2}_{{\mathcal {F}}_0}([-\tau ,0],{\mathbb {R}}^{n})\).

Remark 1

The model in this paper contains not only discrete time-varying delays but also distributed time-varying delays, so the results apply to a more general and more practical class of networks.

Throughout this paper, we make the following assumptions:

\((A_{1})\) Assume that there exists a positive definite diagonal matrix \(L=diag(L_{1},L_{2},...,L_{n})>0\) such that the neuron activation function \(g(\cdot )\) satisfies the following condition

\((A_{2})\) In this paper, we assume \(\sigma (\cdot )\) is locally Lipschitz continuous and satisfies the linear growth condition. Moreover, there exist positive definite matrices \(U\) and \(V\) such that

\((A_{3})\) The time-varying delays \(\alpha (t)\) and \(\theta (t)\) satisfy \(0<\alpha (t)<\alpha \), \(0<\theta (t)<\theta \) and \( \dot{\alpha }(t)<\gamma \) for all \(t>0\), where \(\alpha \) and \(\theta \) are positive constants and \(\gamma <1\), so that the factor \(\frac{1}{1-\gamma }\) appearing in Theorems 1 and 2 is positive.
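As a concrete check of \((A_{1})\) and \((A_{3})\), take the data of Example 1 in Sect. 4: \(g_{i}=\tanh \) and \(\alpha (t)=\theta (t)=0.6e^{t}/(1+e^{t})\). Since \(\dot{\alpha }(t)=0.6e^{t}/(1+e^{t})^{2}\) is maximized at \(t=0\), one gets \(\dot{\alpha }(t)\le 0.15\) and \(0<\alpha (t)<0.6\), matching \(\gamma =0.15\) and \(\alpha =\theta =0.6\) used there. The sketch below verifies these bounds on a grid (horizon and grid density are arbitrary) together with the bound \(|\tanh a-\tanh b|\le |a-b|\), which gives \(L_{i}=1\), assuming \((A_{1})\) is the usual Lipschitz condition \(|g_{i}(a)-g_{i}(b)|\le L_{i}|a-b|\) (its display is not reproduced here).

```python
import numpy as np

def alpha(t):
    """Delay of Example 1: 0.6 e^t / (1 + e^t), written in an overflow-safe form."""
    return 0.6 / (1.0 + np.exp(-t))

def alpha_dot(t):
    """Exact derivative 0.6 e^t / (1 + e^t)^2 = 0.6 s (1 - s), s = e^t / (1 + e^t)."""
    s = 1.0 / (1.0 + np.exp(-t))
    return 0.6 * s * (1.0 - s)

t = np.linspace(0.0, 20.0, 200_001)     # grid over t >= 0 (arbitrary horizon)
print("sup alpha(t)     ~", alpha(t).max())       # approaches 0.6 from below
print("sup alpha_dot(t) ~", alpha_dot(t).max())   # attained at t = 0: 0.6 / 4

# Empirical Lipschitz ratio of tanh on random pairs; it never exceeds 1, so L_i = 1
rng = np.random.default_rng(1)
a, b = rng.normal(scale=3.0, size=(2, 100_000))
ratio = np.abs(np.tanh(a) - np.tanh(b)) / np.abs(a - b)
print("max |tanh a - tanh b| / |a - b| ~", ratio.max())
```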

Definition 1

Networks (1) and (2) are said to be stochastically synchronized in finite time if, for a suitably designed controller, there exists a constant \(t^{'} > 0\) such that

and \({\mathcal {E}}\Vert x(t)-z(t)\Vert _{2}\equiv 0,\) for \(t>t^{'}\).

To obtain the main results of this paper, the following lemmas will be needed.

Lemma 1

([27]) Let \(x,y\in {\mathbb {R}}^{n}\) and let \(\varepsilon >0\) be a scalar. Then

Lemma 2

([28]) If \(m_{1},m_{2},\ldots ,m_{n}\) are positive numbers and \(r>1\), then

Lemma 3

([29]) For any positive definite matrix \(M>0\), scalars \(\gamma _{2}>\gamma _{1}>0\) and vector function \(\omega :[\gamma _{1},\gamma _{2}]\to {\mathbb {R}}^{n}\) such that the integrals concerned are well defined, the following inequality holds:

Lemma 4

([30]) Assume that a continuous positive-definite function \(V(t)\) satisfies the following differential inequality:

where \(\alpha >0\) and \(0<\eta <1\) are constants (this \(\alpha \) is unrelated to the delay bound in \((A_{3})\)). Then, for any given \(t_{0}\), \(V(t)\) satisfies the following inequality:

and

with \(t_{1}\) given by

3 Main results

Theorem 1

Suppose that assumptions \((A_{1})\)–\((A_{3})\) hold. Then the neural networks (1) and (2) achieve finite-time synchronization under the following feedback controller:

where \(\Lambda =diag(\Lambda _{1},\Lambda _{2},\ldots ,\Lambda _{n})>0 \) is a constant diagonal matrix to be determined, \(P>0\), \(Q>0\), \(\Lambda > -C+\Vert \bar{A}\Vert I+\frac{1}{2}|E||E|^{T} +\frac{P}{2}+\frac{\theta }{2}L^{T}QL+\frac{U}{2}+\frac{1}{2}I\), \(\bar{A}=|A|L\), \( P=\frac{1}{1-\gamma }(L^{T}|B|^{T}|B|L+V) \), and \( \eta >0\) is a tunable constant.

Proof

Define the following Lyapunov function candidate:

where \(e_{t}=\{e(t+s)|-\tau \le s\le 0\}\), \(t\ge 0\), is a stochastic process. By the Itô formula, the stochastic differential \(dV(e_{t},t)\) can be obtained as

where

On the other hand, by means of Lemma 3,

then

By virtue of \(\Lambda > -C+\Vert \bar{A}\Vert I+\frac{1}{2}|E||E|^{T}+\frac{P}{2}+\frac{\theta }{2} L^{T}QL+\frac{U}{2}+\frac{1}{2}I\) and \( P=\frac{1}{1-\gamma }(L^{T}|B|^{T}|B|L+V) \), one can get

Based on Lemma 2, one has

Hence,

therefore,

By Lemma 4, \({\mathcal {E}}[V(e_{t},t)]\) converges to zero in finite time, and the settling time is estimated by

Hence, the error vector \(e(t)\) will stochastically converge to zero within \(t_{1}\). This completes the proof.\(\square \)
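The settling-time estimate rests on Lemma 4. In its commonly stated form (following [30]), if \(\dot{V}(t)\le -aV^{\eta }(t)\) with \(a>0\) and \(0<\eta <1\), then \(V^{1-\eta }(t)\le V^{1-\eta }(t_{0})-a(1-\eta )(t-t_{0})\) for \(t_{0}\le t\le t_{1}\) and \(V(t)\equiv 0\) for \(t\ge t_{1}\), with \(t_{1}=t_{0}+V^{1-\eta }(t_{0})/(a(1-\eta ))\); here \(a\) denotes the lemma's constant to avoid a clash with the delay bound \(\alpha \). The sketch below integrates the equality case \(\dot{V}=-aV^{\eta }\) with forward Euler and compares the time at which \(V\) reaches zero with \(t_{1}\). The values of \(a\), \(\eta \) and \(V(t_{0})\) are arbitrary illustrations, not the constants of Theorem 1.

```python
def settling_time_bound(v0: float, a: float, eta: float, t0: float = 0.0) -> float:
    """t1 = t0 + V(t0)^(1 - eta) / (a (1 - eta)), valid for a > 0 and 0 < eta < 1."""
    if not (a > 0 and 0 < eta < 1):
        raise ValueError("need a > 0 and 0 < eta < 1")
    return t0 + v0 ** (1.0 - eta) / (a * (1.0 - eta))

def hitting_time(v0: float, a: float, eta: float, h: float = 1e-4) -> float:
    """Forward-Euler integration of dV/dt = -a V^eta until V reaches 0."""
    v, t = v0, 0.0
    while v > 0.0:
        v = max(v - h * a * v ** eta, 0.0)   # clip at 0: V^eta is undefined for V < 0
        t += h
    return t

# The bound depends on eta (and on V(t0)), in line with Remark 3
for eta in (0.25, 0.5, 0.75):
    bound = settling_time_bound(2.0, 1.0, eta)
    euler = hitting_time(2.0, 1.0, eta)
    print(f"eta={eta}: t1 bound = {bound:.4f}, Euler hitting time = {euler:.4f}")
```

Because \((1-x)^{1-\eta }\le 1-(1-\eta )x\) for \(0\le x\le 1\), each Euler step lowers \(V^{1-\eta }\) by at least \(a(1-\eta )h\), so the numerical hitting time never exceeds \(t_{1}\) by more than one step.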

Theorem 2

Suppose that assumptions \((A_{1})\)–\((A_{3})\) hold. Then the neural network (2) synchronizes with (1) in finite time under the following adaptive controller:

where \(\Lambda =diag(\Lambda _{1},\Lambda _{2},\ldots ,\Lambda _{n})>0 \) is a constant diagonal matrix to be determined, \(P>0\), \(Q>0\), \(\Lambda > -C+\Vert \bar{A}\Vert I+\frac{1}{2}|E||E|^{T}+\frac{P}{2} +\frac{\theta }{2}L^{T}QL+\frac{U}{2}+\frac{1}{2}I\), \(\bar{A}=|A|L\), \( P=\frac{1}{1-\gamma }(L^{T}|B|^{T}|B|L+V) \), and \( \eta >0\) is a tunable constant.

Proof

Define the following Lyapunov function candidate:

where \(e_{t}=\{e(t+s)|-\tau \le s\le 0\}\), \(t\ge 0\), is a stochastic process. By the Itô formula, the stochastic differential \(dV(e_{t},t)\) can be obtained as

where

By virtue of \(\Lambda > -C+\Vert \bar{A}\Vert I+\frac{1}{2}|E||E|^{T}+\frac{P}{2} +\frac{\theta }{2}L^{T}QL+\frac{U}{2}+\frac{1}{2}I\), \( P=\frac{1}{1-\gamma }(L^{T}|B|^{T}|B|L+V) \) and Lemma 2, one gets

Therefore,

By Lemma 4, \({\mathcal {E}}[V(e_{t},t)]\) converges to zero in finite time, and the settling time is estimated by

Hence, the error vector \(e(t)\) will stochastically converge to zero within \(t_{2}\). This completes the proof.\(\square \)
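Theorems 1 and 2 share the same gain condition. Once \(C,A,B,E,L,U,V,Q\) and the bounds \(\theta ,\gamma \) are fixed, it can be checked numerically by forming \(R=-C+\Vert \bar{A}\Vert I+\frac{1}{2}|E||E|^{T}+\frac{P}{2}+\frac{\theta }{2}L^{T}QL+\frac{U}{2}+\frac{1}{2}I\), with \(\bar{A}=|A|L\) and \(P=\frac{1}{1-\gamma }(L^{T}|B|^{T}|B|L+V)\), and testing whether \(\Lambda -R\) is positive definite. All matrices below are hypothetical placeholders (the values of Example 1 appear only in an image); \(\theta =0.6\), \(\gamma =0.15\) and \(L=I\) follow Example 1. For a scalar gain \(\Lambda =\lambda I\), the condition reduces to \(\lambda >\lambda _{max}(R)\).

```python
import numpy as np

def gain_rhs(C, A, B, E, L, U, V, Q, theta, gamma):
    """Right-hand side R of the condition Lambda > R in Theorems 1 and 2."""
    n = C.shape[0]
    I = np.eye(n)
    A_bar = np.abs(A) @ L
    P = (L.T @ np.abs(B).T @ np.abs(B) @ L + V) / (1.0 - gamma)
    R = (-C + np.linalg.norm(A_bar, 2) * I      # ||A_bar|| = spectral norm
         + 0.5 * np.abs(E) @ np.abs(E).T
         + 0.5 * P
         + 0.5 * theta * L.T @ Q @ L
         + 0.5 * U
         + 0.5 * I)
    return R, P

def satisfies_condition(Lam, R) -> bool:
    """Lambda > R in the matrix sense, i.e. Lambda - R is positive definite."""
    M = Lam - R
    M = 0.5 * (M + M.T)                  # symmetrize against round-off
    return bool(np.linalg.eigvalsh(M).min() > 0.0)

# Hypothetical data for a 2-neuron network (NOT the values of Example 1)
C = np.eye(2)
A = np.array([[2.0, -0.1], [-5.0, 3.0]])
B = np.array([[-1.5, -0.1], [-0.2, -2.5]])
E = 0.1 * np.eye(2)
L = np.eye(2)                             # L_i = 1 for tanh
U = V = 0.1 * np.eye(2)
Q = np.eye(2)
R, P = gain_rhs(C, A, B, E, L, U, V, Q, theta=0.6, gamma=0.15)

lam_bound = np.linalg.eigvalsh(0.5 * (R + R.T)).max()   # need lambda > lam_bound
print("scalar gain must satisfy lambda >", lam_bound)
print(satisfies_condition((lam_bound + 1.0) * np.eye(2), R))   # True
print(satisfies_condition((lam_bound - 1.0) * np.eye(2), R))   # False
```

A non-scalar diagonal \(\Lambda \) can be tested the same way by passing `np.diag([...])` to `satisfies_condition`.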

Remark 2

In this paper, feedback control and adaptive control techniques are adopted to guarantee the stochastic finite-time synchronization of chaotic neural networks with mixed time-varying delays. To the best of our knowledge, although many papers focus on finite-time synchronization or stability [25, 26, 31–34], few works on finite-time synchronization with both mixed time-varying delays and stochastic perturbation have been published. Since finite-time synchronization offers better robustness and disturbance rejection, and the model considered here accounts for both mixed time-varying delays and stochastic perturbation, the results are more practical than those in [25, 26, 31–34].

Remark 3

From the proofs of Theorems 1 and 2, one can see the important roles played by the parameters \(\Lambda =diag(\Lambda _{1},\Lambda _{2},\ldots ,\Lambda _{n}) \) and the tunable constant \(\eta \) in the feedback controller (4) and the adaptive controller (7). Inequalities (5) and (8) indicate that the synchronization rate increases as \(\Lambda \) and \(\eta \) increase. Whether networks (1) and (2) can be synchronized depends on the values of \(\Lambda \) and \(\eta \), whereas the synchronization time depends on \(\eta \) and is independent of \(\Lambda \). If \(\Lambda \) is too small to satisfy the condition in Theorems 1 and 2, synchronization of the stochastic chaotic network (2) with the manifold system (1) is no longer guaranteed.

4 Illustrative examples

In this section, a numerical example is given to illustrate the effectiveness of the theoretical results obtained above.

Example 1

Consider the two-neuron delayed stochastic neural network model (2) with the following parameters: \(x(t)=(x_{1}(t),x_{2}(t))^{T}, z(t)=(z_{1}(t),z_{2}(t))^{T},J(t)=(0,0)^{T}, \alpha (t)=\theta (t)=0.6e^{t}/(1+e^{t})\), and

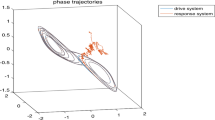

\( g_{1}(t)=g_{2}(t)=\tanh (t)\). Figure 1 shows the chaotic-like trajectory of system (2) with the initial condition \(x(t)=(-0.2,-0.3)^{T}, t\in [-1,0].\) The noise intensity function is taken as

It is straightforward to check that all the conditions in Theorem 1 hold. Network (2) satisfies \( (A_{1})\)–\((A_{3}) \) with \( L_{i}=1\) (\(i=1,2\)), \(\alpha =\theta =0.6\) and \(\gamma =0.15\), and we let \(\eta =0.15\).

Consider the following manifold (1) with the initial condition \(z(t)=(0.3,0.5)^{T},\ t\in [-1,0]\).

According to the conditions in Theorem 1, we get that

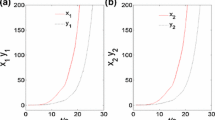

It follows from Theorems 1 and 2 that system (2) synchronizes with the desired system (1) in finite time under the feedback controller (4) and the adaptive controller (7), respectively. The simulation results are shown in Figs. 2, 3, 4, 5 and 6. Figures 2 and 3 show that \(x_{1}(t)\) and \(z_{1}(t)\), and \(x_{2}(t)\) and \(z_{2}(t)\), do not synchronize without a controller. Figures 4 and 5 show that system (2) and the manifold system (1) achieve finite-time synchronization under the feedback controller and the adaptive controller, respectively. Figure 6 shows the trajectories of the control parameters \(k_{i}(t), i=1,2\), of the adaptive controller (7).

Fig. 1 Chaotic-like trajectory of system (2)

Fig. 6 Trajectories of control parameters \(k_{i}(t), i=1,2\) of adaptive controllers (7)

5 Application in secure communication

In this section, the adaptive synchronization scheme proposed in Theorem 2 is applied to chaotic secure communication. An information signal \( p(t)\) carrying the message to be transmitted can be masked by the chaotic signal \(x(t)\), and the finite-time chaotic synchronization discussed above can be used to extract the message at the receiver. Different strategies can be used to make the actual transmitted signal \(v(t)\) as broadband as possible, which makes its detection by spectral techniques difficult. In general, three strategies are used in secure communication with chaos [35–37]: signal masking, where \(v(t)= x(t)+ \delta p(t)\); modulation, where \(v(t)= x(t)p(t)\); and a combination of masking and modulation, such as \(v(t)= x(t)[1+ \delta p(t)]\). This paper focuses only on chaotic masking. Figure 7 shows the proposed communication system, which consists of a transmitter and a receiver. The transmitted signal \(v(t)= x(t)+ \delta p(t)\) is injected into the transmitter and, simultaneously, transmitted to the receiver. Using the proposed adaptive synchronization scheme, a chaotic receiver is derived to recover the message signal at the receiving end. The transmitter is designed as

where \(p_{1}(t)=\delta p(t)\) is the information message. Note that the message signal must have low power, i.e., it must in general be small compared with the chaotic carrier [37]. To ensure this, we take \(\delta =0.1\). The receiver is designed as

where \(l_{1}(t)= v(t)= x_{1}(t)+ \delta p(t)\) is the transmitted signal and \(l_{2}(t)= x_{2}(t)\). The information message is recovered by \(r(t)= \delta ^{-1} [v(t)- y_{1}(t)]\). In the simulations, we use the same parameters and functions as in Example 1 in Sect. 4 and choose the information message as \( p(t)=0.2\). Since the eigenfrequency of the message signal \(p(t)\) is in practice much lower than the oscillation frequency of the chaotic system, we can take \( \dot{p}(t)\approx 0\). Figure 8 depicts the error between the original message \(p(t)\) and the recovered signal \(r(t)\).
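The masking and recovery steps can be illustrated in isolation. In the sketch below, the carrier \(x_{1}(t)\) is a hypothetical bounded oscillation standing in for the chaotic state, and the receiver state \(y_{1}(t)\) is modeled as \(x_{1}(t)\) plus a synchronization error that vanishes after an assumed settling time \(t_{s}\); neither the carrier nor the error profile comes from the paper. With \(\delta =0.1\) and \(p(t)=0.2\) as in the text, \(r(t)=\delta ^{-1}[v(t)-y_{1}(t)]=p(t)-\delta ^{-1}[y_{1}(t)-x_{1}(t)]\), so the message is recovered (up to floating-point round-off) once \(y_{1}=x_{1}\), while before that the recovery error is the synchronization error amplified by \(\delta ^{-1}\).

```python
import numpy as np

delta = 0.1                                  # masking gain, as in Sect. 5
t = np.linspace(0.0, 10.0, 10_001)           # time grid (arbitrary)
p = np.full_like(t, 0.2)                     # message p(t) = 0.2, as in Sect. 5

# Hypothetical stand-in for the chaotic carrier x1(t) (not the paper's system)
x1 = np.sin(3.0 * t) + 0.5 * np.sin(7.3 * t)

# Hypothetical synchronization error: shrinks linearly and is zero after t_s
t_s = 2.0
sync_err = 0.5 * np.clip(1.0 - t / t_s, 0.0, None)
y1 = x1 + sync_err                           # receiver state y1(t)

v = x1 + delta * p                           # transmitted signal (chaotic masking)
r = (v - y1) / delta                         # recovered message

after = t >= t_s
print("max |r - p| after t_s: ", np.abs(r - p)[after].max())
print("max |r - p| before t_s:", np.abs(r - p)[~after].max())
```

The factor \(\delta ^{-1}\) is why a small masking gain, needed to keep the message power low, makes accurate synchronization essential before the message can be read.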

The simulations show that the message is accurately recovered under the adaptive controller, in agreement with the theoretical results.

6 Conclusion

In this paper, finite-time synchronization of drive-response systems in the mean square sense has been investigated for neural networks with mixed time-varying delays and stochastic disturbance. Unlike the models in [25, 26], the model in this paper includes mixed time-varying delays, which makes it more applicable in practice. Since finite-time synchronization offers better robustness and disturbance rejection, these results are of practical significance. A numerical example has been given to illustrate the effectiveness of the results. Furthermore, a secure communication scheme based on the proposed synchronization has been presented, and numerical simulation illustrates its effectiveness.

References

Balasubramaniam B, Rakkiyappan R (2009) Delay-dependent robust stability analysis of uncertain stochastic neural networks with discrete interval and distributed time-varying delays. Neurocomputing 72:3231–3237

Chen L, Zhao H (2009) New LMI conditions for global exponential stability of cellular neural networks with delays. Nonlinear Anal 10:287–297

Cao J, Song Q (2006) Stability in Cohen–Grossberg-type bidirectional associative memory neural networks with time-varying delays. Nonlinearity 19:1601–1617

Liu B, Huang L (2006) Existence and global exponential stability of periodic solutions for cellular neural networks with time-varying delays. Phys Lett A 349:474–483

Pecora LM, Carroll TL (1990) Synchronization in chaotic systems. Phys Rev Lett 64:821–824

Zhang Q, Lu J, Lv J (2008) Adaptive feedback synchronization of a general complex dynamical network with delayed nodes. IEEE Trans Circuits Syst 55:183–187

Lu J, Ho DWC, Cao J (2010) A unified synchronization criterion for impulsive dynamical networks. Automatica 46:1215–1221

Yang X, Cao J, Lu J (2012) Stochastic synchronization of complex networks with nonidentical nodes via hybrid adaptive and impulsive control. IEEE Trans Circuits Syst I Regul Pap 59:371–384

Lu J, Cao J (2008) Adaptive stabilization and synchronization for chaotic Lur’e systems with time-varying delay. IEEE Trans Circuits Syst I Regul Pap 55:1347–1356

Yang X, Cao J, Lu J (2013) Synchronization of coupled neural networks with random coupling strengths and mixed probabilistic time-varying delays. Int J Robust Nonlinear Control 23:2060–2081

Gan Q (2012) Adaptive synchronization of Cohen–Grossberg neural networks with unknown parameters and mixed time-varying delays. Commun Nonlinear Sci Numer Simul 17:3040–3049

Lu J, Cao J (2008) Adaptive synchronization of uncertain dynamical networks with delayed coupling. Nonlinear Dyn 53:107–115

Yang X, Cao J, Lu J (2011) Synchronization of delayed complex dynamical networks with impulsive and stochastic effects. Nonlinear Anal Real World Appl 12:2252–2266

Lu J, Cao J (2009) Synchronization of a chaotic electronic circuit system with cubic term via adaptive feedback control. Commun Nonlinear Sci Numer Simul 14:3379–3388

Mei J, Jiang M, Wang J (2013) Finite-time structure identification and synchronization of drive-response systems with uncertain parameter. Commun Nonlinear Sci Numer Simul 18:999–1015

Wang T, Zhao S, Zhou W, Yu W (2014) Finite-time master-slave synchronization and parameter identification for uncertain Lurie systems, ISA Transactions

Hu C, Yu J, Jiang H (2014) Finite-time synchronization of delayed neural networks with Cohen–Grossberg type based on delayed feedback control 142:90–96

Mei J, Jiang M, Wang B, Long B (2013) Finite-time parameter identification and adaptive synchronization between two chaotic neural networks 350:1617–1633

Wu Z, Shi P, Su H, Chu J (2012) Exponential synchronization of neural networks with discrete and distributed delays under time-varying sampling. IEEE Trans Neural Netw 23:1368–1376

Zhu Q, Zhou W, Tong D, Fang J (2013) Adaptive synchronization for stochastic neural networks of neutral-type with mixed time-delays. Neurocomputing 99:477–485

Yang X, Huang C, Zhu Q (2011) Synchronization of switched neural networks with mixed delays via impulsive control. Chaos Solitons Fract 44:817–826

Sun Y, Cao J, Wang Z (2007) Exponential synchronization of stochastic perturbed chaotic delayed neural networks. Neurocomputing 70:2465–2477

Li X, Cao J (2008) Adaptive synchronization for delayed neural networks with stochastic perturbation. J Franklin Inst 345:779–791

Hassan S, Aria A (2009) Adaptive synchronization of two chaotic systems with stochastic unknown parameters. Commun Nonlinear Sci Numer Simul 14

Yang X, Cao J (2010) Finite-time stochastic synchronization of complex networks. Appl Math Model 34:3631–3641

Mei J, Jiang M, Xu W, Wang B (2013) Finite-time synchronization control of complex dynamical networks with time delays. Commun Nonlinear Sci Numer Simul 18:2462–2478

Wang W, Zhong S (2012) Stochastic stability analysis of uncertain genetic regulatory networks with mixed time-varying delays. Neurocomputing 82:143–156

Hardy GH, Littlewood JE, Polya G (1988) Inequalities. Cambridge University Press, Cambridge

Gu K (2000) An integral inequality in the stability problem of time-delay systems. In: Proceedings of the 39th IEEE conference on decision and control, pp 2805–2810

Tang Y (1998) Terminal sliding mode control for rigid robots. Automatica 34:51–56

Cui W, Sun S, Fang J, Xu Y, Zhao L (2014) Finite-time synchronization of Markovian jump complex networks with partially unknown transition rates. J Franklin Inst 351:2543–2561

Wang X, Fang J, Mao H, Dai A (2014) Finite-time global synchronization for a class of Markovian jump complex networks with partially unknown transition rates under feedback control. Nonlinear Dyn. doi:10.1007/s11071-014-1644-2

Mei J, Jiang M, Wang X, Han J, Wang S (2014) Finite-time synchronization of drive-response systems via periodically intermittent adaptive control. J Franklin Inst 351:2691–2710

Mei J, Jiang M, Wu Z, Wang X (2014) Periodically intermittent controlling for finite-time synchronization of complex dynamical networks. Nonlinear Dyn. doi:10.1007/s11071-014-1664-y

Li C, Liao X, Wong K (1999) Chaotic lag synchronization of coupled time-delayed systems and its applications in secure communication. IEEE Trans Neural Netw 10:978–981

Mensour B, Longtin A (1998) Synchronization of delay differential equations with application to private communication. Phys Lett A 244:59–70

Xia Y, Yang Z, Han M (2009) Lag synchronization of unknown chaotic delayed Yang–Yang-type fuzzy neural networks with noise perturbation based on adaptive control and parameter identification. IEEE Trans Neural Netw 20:1165–1180

Cite this article

Wu, H., Zhang, X., Li, R. et al. Finite-time synchronization of chaotic neural networks with mixed time-varying delays and stochastic disturbance. Memetic Comp. 7, 231–240 (2015). https://doi.org/10.1007/s12293-014-0150-x
