Abstract

In this paper, finite-time anti-synchronization control of memristive neural networks with stochastic perturbations is studied. We investigate a class of memristive neural networks with two different types of memductance functions. The aim is to design a nonlinear controller that achieves anti-synchronization of the drive system and the response system in finite time. For each of the two kinds of memductance functions, finite-time stability criteria are obtained for memristive neural networks with stochastic perturbations. The analysis employs differential inclusion theory, the finite-time stability theorem, linear matrix inequalities and the Lyapunov functional method. These theoretical results characterize fundamental electrical properties of memristive systems and facilitate applications in pattern recognition, associative memories, associative learning, etc. Finally, two numerical examples are given to show the effectiveness of our results.


1 Introduction

In the past decades, artificial neural networks have been built to simulate the functions of the human brain and so process information intelligently. Traditional artificial neural networks are implemented with circuits in which the connections between neural processing units are realized with resistors, and the resistance represents the strength of the synapse between neurons. However, synaptic strength is variable whereas the resistance is fixed. Because the memristor combines a memory characteristic with nanometer dimensions, the resistor can be replaced by a memristor in order to better emulate the neural networks of the human brain, and memristors may therefore eventually be used in artificial neural networks. With the development of applications, memristive nanodevices as synapses and conventional CMOS technology as neurons are widely adopted to design brain-like processing systems. Recently, the authors of Refs. [1–6] have concentrated on the dynamical nature of memristor-based neural networks with a view to applications such as pattern recognition, associative memories and learning, in a way that mimics the human brain.

The stability of neural networks with stochastic perturbations has attracted a lot of attention. In real nervous systems, because of random fluctuations in the release of neurotransmitters and other probabilistic causes, synaptic transmission is indeed a noisy process. Therefore, the authors of Refs. [7–11] have studied stochastic perturbations in neural networks. In addition, anti-synchronization control of neural networks plays an important role in many potential applications, e.g., non-volatile memories and neuromorphic devices that simulate learning, adaptive and spontaneous behavior. Moreover, the authors of Ref. [12] have studied the anti-synchronization control of memristive neural networks. However, the memristor-based neural network models proposed and studied in the literature are deterministic. Therefore, it is of practical importance to study stochastic memristor-based neural networks. To the best of the authors' knowledge, there are few results on the anti-synchronization control of memristive neural networks with stochastic perturbations.

Moreover, most of the literature on the stability of neural networks with stochastic perturbations assumes that the convergence time is sufficiently large, even though in practical applications the states of neural networks are required to become stable as quickly as possible. In order to achieve faster convergence and complete stabilization in finite time rather than merely asymptotically, an effective method is to use finite-time techniques, which have demonstrated better robustness and disturbance rejection properties. Finite-time synchronization control of complex networks has been investigated in Refs. [13, 14]. Unfortunately, few papers in the open literature have considered the finite-time synchronization control of memristive neural networks, let alone finite-time anti-synchronization of memristive neural networks with stochastic perturbations.

In this paper, our aim is to narrow this gap by addressing the finite-time anti-synchronization problem for memristive neural networks with stochastic perturbations. The contribution of this paper lies in three aspects. First, we study the anti-synchronization control problem for memristive neural networks with stochastic perturbations. Second, based on differential inclusion theory and the finite-time stability theorem, we propose a nonlinear controller that ensures the stability of memristive neural networks with stochastic perturbations in finite time. Third, for each of the two kinds of memductance functions, finite-time stability criteria are obtained for memristive neural networks. Two numerical examples are provided to show the effectiveness of the proposed theorems.

2 Preliminaries

We describe a general class of recurrent neural networks, whose circuit is shown in Fig. 1. Kirchhoff's current law for its \(i\hbox {th}\) subsystem, proposed in Ref. [12], is given by the following equation:

where \(x_{i}(t)\) is the voltage of the capacitor \(\mathbb {C}_{i}\); \(\mathbb {R}_{ij}\) denotes the resistor through the feedback function \(g_{i}(x_{i}(t))\) and \(x_{i}(t)\). \(\mathcal {R}_{i}\) represents the parallel resistor corresponding to the capacitor \(\mathbb {C}_{i}\). And

From (1), we have

where

By replacing the resistors \(\mathbb {R}_{ij}\) and \(\mathcal {R}_{i}\) in the primitive neural networks (1) with memristors whose memductances are \(W_{ij}\) and \(P_{i}\), we can construct the memristive neural networks of the following form

where

Combining the physical structure of a memristor device, one can see that

where \(q_{ij}\) and \(\sigma _{ij}\) denote charge and magnetic fluxes corresponding to the memristor \(\mathbb {R}_{ij}\), and \(p_{i}\) and \(\chi _{i}\) denote charge and magnetic fluxes corresponding to the memristor \(\mathcal {R}_{i}\).

Fig. 1 (Color online) The circuit of recurrent neural networks in model (1)

It is well known that the pinched hysteresis loop is due to the nonlinearity of the memductance function. Based on two typical memductance functions, we discuss the following two cases, which were proposed in Ref. [4].

Case 1: The memductance functions \(W_{ij}\), \(P_{i}\) are given by

where \(\xi _{ij}, \eta _{ij},\phi _{i},\varphi _{i}\) and \(l_{ij}, T_{i}\) are constants.

Case 2: The memductance function \(W_{ij}\) is given by

where \(c_{ij} , \, d_{ij}, \, \alpha _{i}, \, \beta _{i}\) are constants, \(i,j=1,2,\ldots , n.\)

We give some definitions which are needed in the following.

Definition 1

(See [15]) Suppose \(E\subseteq R^{n}\), then \(x\rightarrow F(x)\) is called a set-valued map from \(E\) to \(R^{n}\), if for each point \(x\in E\), there exists a nonempty set \(F(x)\subseteq R^{n}\). A set-valued map \(F\) with nonempty values is said to be upper semi-continuous at \(x_{0}\in E\), if for any open set \(N\) containing \(F(x_{0})\), there exists a neighborhood \(M\) of \(x_{0}\) such that \(F(M)\subseteq N\). The map \(F(x)\) is said to have a closed (convex, compact) image if for each \(x\in E, F(x)\) is closed (convex, compact).

Definition 2

(See [16]) For the system \(\dot{x}(t)=f(x), \, x\in R^{n}\), with discontinuous right-hand sides, a set-valued map is defined as

\[F(x)=\bigcap _{\delta >0}\,\bigcap _{\mu (N)=0}co[f(B(x,\delta )\backslash N)],\]

where \(co[E]\) is the closure of the convex hull of the set \(E, \, B(x,\delta )=\{y:\Vert y-x\Vert \le \delta \}\) and \(\mu (N)\) is the Lebesgue measure of the set \(N\). A solution in Filippov's sense of the Cauchy problem for this system with initial condition \(x(0)=x_{0}\) is an absolutely continuous function \(x(t)\), which satisfies \(x(0)=x_{0}\) and the differential inclusion

\[\dot{x}(t)\in F(x(t))\quad \text{for almost all } t.\]

In order to establish our main results, we need the following assumptions and lemmas.

Assumption 1

The function \(g_{i}\) is odd and bounded, and satisfies the Lipschitz condition with Lipschitz constant \(Q_{i}\), i.e.,

\[|g_{i}(x)-g_{i}(y)|\le Q_{i}|x-y|\quad \text{for all } x,y\in R,\]

where \(Q_{i}\) is a positive constant for \(i=1,2,\ldots ,n\). We let \(Q=diag\{Q_{1},Q_{2},\ldots ,Q_{n}\}\).
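Assumption 1 can be checked numerically for a candidate activation function. The sketch below assumes the Lipschitz condition has the usual form \(|g_{i}(x)-g_{i}(y)|\le Q_{i}|x-y|\) and tests \(\tanh \), the activation used in the numerical examples, with \(Q_{i}=1\) and bound 1. The helper name `satisfies_assumption_1` and the sample grid are hypothetical, and a check on finitely many points is only a sanity test, not a proof.

```python
import math


def satisfies_assumption_1(g, lipschitz_q, bound, samples):
    """Check oddness, boundedness and the Lipschitz condition of g on sample points.

    Hypothetical helper: it only inspects the given samples, so a True result
    is evidence for Assumption 1, not a proof of it.
    """
    tol = 1e-12  # tolerance for floating-point rounding
    for x in samples:
        # Oddness: g(-x) = -g(x)
        if abs(g(-x) + g(x)) > tol:
            return False
        # Boundedness: |g(x)| <= bound
        if abs(g(x)) > bound + tol:
            return False
        # Lipschitz condition: |g(x) - g(y)| <= Q |x - y|
        for y in samples:
            if abs(g(x) - g(y)) > lipschitz_q * abs(x - y) + tol:
                return False
    return True


# Sample grid on [-10, 10] with step 0.25 (an arbitrary choice).
samples = [i * 0.25 for i in range(-40, 41)]

tanh_ok = satisfies_assumption_1(math.tanh, 1.0, 1.0, samples)
tanh_too_tight = satisfies_assumption_1(math.tanh, 0.5, 1.0, samples)
print(tanh_ok, tanh_too_tight)
```

The second call uses \(Q_{i}=0.5\), which is too small for \(\tanh \) near the origin, so it shows how a violated Lipschitz constant is detected.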

Assumption 2

For \(i,j=1,2,\ldots ,n\),

Lemma 1

([17]) If \(a_{1}, a_{2}, \ldots , a_{n}\) are positive numbers and \(0<r<p\), then

\[\Big (\sum _{i=1}^{n}a_{i}^{p}\Big )^{1/p}\le \Big (\sum _{i=1}^{n}a_{i}^{r}\Big )^{1/r}.\]

Lemma 2

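([18]) If \(\mu , \nu (t),\) and \(\omega \) are real matrices of appropriate dimensions and \(\vartheta \) satisfies \(\vartheta =\vartheta ^{T}\), then

\[\vartheta +\mu \nu (t)\omega +\omega ^{T}\nu ^{T}(t)\mu ^{T}<0\]

for all \(\nu (t)\nu ^{T}(t)\le I\), if and only if there exists a positive constant \(\lambda \) such that

\[\vartheta +\lambda ^{-1}\mu \mu ^{T}+\lambda \omega ^{T}\omega <0.\]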

According to the features of memristor given in Case 1 and Case 2, the following two cases can happen.

Case \(1'\): In case 1, according to the work in Ref. [19], we get

for \(i,j=1,2,\ldots ,n\), where \(\hat{a}_{i}, \, \check{a}_{i}, \, \hat{b}_{ij}\) and \(\check{b}_{ij}\) are constants, and \(\bar{a}_{i}=\max \{\hat{a}_{i}, \check{a}_{i}\}, \, \underline{a}_{i}=\min \{\hat{a}_{i}, \check{a}_{i}\}\), \(\bar{b}_{ij}=\max \{\hat{b}_{ij}, \check{b}_{ij}\}, \, \underline{b}_{ij}=\min \{\hat{b}_{ij}, \check{b}_{ij}\}\). Solutions of all the systems considered in this paper are understood in Filippov's sense. \([\cdot ,\cdot ]\) represents an interval. co\(\{\tilde{\varDelta },\hat{\varDelta }\}\) denotes the closure of the convex hull generated by the real numbers \(\tilde{\varDelta }\) and \(\hat{\varDelta }\).

The system (3) is a differential equation with discontinuous right-hand sides. Based on the theory of differential inclusions, if \(x_{i}(t)\) is a solution of (3) in the sense of Filippov, then system (3) can be rewritten as the following differential inclusion

or equivalently, there exist \(a_{i}(t)\in co(a_{i}(x_{i}(t)))\) and \(b_{ij}(t)\in co(b_{ij}(x_{i}(t)))\), such that

where \(a_{i}(t)\) and \(b_{ij}(t)\) depend on the state \(x_{i}(t)\) and time \(t\).

In this paper, we consider system (8) or (9) as the drive system and the corresponding response system is:

or equivalently, there exist \(a_{i}(t)\in co(a_{i}(y_{i}(t)))\) and \(b_{ij}(t) \in co(b_{ij}(y_{i}(t)))\), such that

Let \(e(t)=(e_{1}(t),e_{2}(t),\ldots , e_{n}(t))^{T}\) be the anti-synchronization error, where \(e_{i}(t)=x_{i}(t)+y_{i}(t)\). Using the theories of set-valued maps and differential inclusions, we can obtain the anti-synchronization error system as follows. Since environmental noise exists in real neural networks, stochastic perturbations should be considered in neural network models. According to Assumption 2, we get

or equivalently, there exist \(a_{i}(t)\in co(a_{i}(e_{i}(t)))\) and \(b_{ij}(t) \in co(b_{ij}(e_{i}(t)))\), such that

where \(f_{j}(e_{j}(t))=g_{j}(x_{j}(t))+g_{j}(y_{j}(t))\), and the white noise \(dw_{i}(t)\) is independent of \(dw_{j}(t)\) for \(i\ne j\). \(w(t)=(w_{1}(t), w_{2}(t), \ldots , w_{n}(t))^{T}\in R^{n}\) is an \(n\)-dimensional Brownian motion. The function \(h\) is the noise intensity function matrix, which satisfies the following condition:

where \(M\) is a known constant matrix with compatible dimensions, and \(e(t)=(e_{1}(t), e_{2}(t), \ldots , e_{n}(t))^{T}\).

Let \(A(t)=(a_{i}(t))_{n\times n}, \, \hat{A}=(\hat{a}_{i})_{n\times n},\check{A}=(\check{a}_{i})_{n\times n}, \, co\{\hat{A}, \check{A}\}=[\underline{A},\bar{A}]\), where \(\underline{A}=(\underline{a}_{i})_{n\times n}, \bar{A}=(\bar{a}_{i})_{n\times n}\) and \(B(t)=(b_{ij}(t))_{n\times n}, \, \hat{B}=(\hat{b}_{ij})_{n\times n},\check{B}=(\check{b}_{ij})_{n\times n}, \, co\{\hat{B}, \check{B}\}=[\underline{B},\bar{B}]\), where \(\underline{B}=(\underline{b}_{ij})_{n\times n}, \bar{B}=(\bar{b}_{ij})_{n\times n}\), then Eq. (13) can be written in the compact form:

Case \(2'\): \(a_{i}(x_{i}(t))\) and \(b_{ij}(x_{i}(t))\) are continuous functions, and \(\underline{\varPsi }_{i}\le a_{i}(x_{i}(t))\le \overline{\varPsi }_{i}\) where \(\underline{\varPsi }_{i}\) and \(\overline{\varPsi }_{i}\) are constants. \(\underline{\varLambda }_{ij}\le b_{ij}(x_{i}(t))\le \overline{\varLambda }_{ij}\) where \(\underline{\varLambda }_{ij}\) and \(\overline{\varLambda }_{ij}\) are constants.

Similar to the case \(1'\), let \(\varPsi (t)=(\varPsi _{i}(t))_{n\times n}\), where \(\underline{\varPsi }=(\underline{\varPsi }_{i})_{n\times n}, \bar{\varPsi }=(\bar{\varPsi }_{i})_{n\times n}, \, \varLambda (t)=(\varLambda _{i}(t))_{n\times n}\), and \(\underline{\varLambda }=(\underline{\varLambda }_{i})_{n\times n}, \bar{\varLambda }=(\bar{\varLambda }_{i})_{n\times n}\). By adding the drive system and the response system, the error system can be written in the compact form as follows:

3 Main Results

In this section, according to two cases of memductance functions, discrete and continuous finite-time synchronization criteria are given for memristive neural networks, respectively. Two corollaries are derived for the memristive neural networks without stochastic perturbations.

3.1 Finite-Time Synchronization Criteria in Case \(1'\)

Theorem 1

If there exist a constant \(\varepsilon \) and a positive-definite matrix \(Z\in R^{n\times n}\) such that

then the system (15) under the following controller \(u_{i}(t)\) can achieve finite-time synchronization,

where \(0<\alpha <1, \, k_{i1}\) and \(k_{i2}\) are positive constants.

And

The upper bound of its convergence time is

where

Proof

The system (15) under the controller \(u(t)\) can be written as

where

Transform (18) into the compact form as follows:

Considering the controlled system (20) with controller (21), we have

We construct the Lyapunov function as follows:

Then we calculate the time derivative of \(V(e(t))\) along the trajectories of the system (22). By Itô's formula in Ref. [20], we obtain the stochastic differential as

where

According to Lemma 2 and Assumption 1, we have

where \(\varepsilon >0\) is an arbitrary positive constant.

Combining (25)–(26) results in

According to \(0<\alpha <1\) and Lemma 1, we get

then,

Thus, based on (17), taking the expectations on both sides of (27), we have

And

By the finite-time stabilization theory in [21], \(V(e(t))\) stochastically converges to zero in finite time, whose upper bound is

Thus we complete the proof.
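The step that invokes Lemma 1 can be made explicit. Lemma 1 is the power-sum inequality \((\sum _{i}a_{i}^{p})^{1/p}\le (\sum _{i}a_{i}^{r})^{1/r}\) for positive \(a_{i}\) and \(0<r<p\). Assuming it is applied with \(a_{i}=|e_{i}(t)|\), \(r=1+\alpha \) and \(p=2\) (an assumption about the intended use, which is admissible because \(0<\alpha <1\) gives \(1+\alpha <2\)), one obtains

\[\Big (\sum _{i=1}^{n}|e_{i}(t)|^{2}\Big )^{1/2}\le \Big (\sum _{i=1}^{n}|e_{i}(t)|^{1+\alpha }\Big )^{1/(1+\alpha )}\quad \Longrightarrow \quad \sum _{i=1}^{n}|e_{i}(t)|^{1+\alpha }\ge \Big (\sum _{i=1}^{n}|e_{i}(t)|^{2}\Big )^{(1+\alpha )/2}=\Vert e(t)\Vert ^{1+\alpha }.\]

If some \(e_{i}(t)\) vanish, the same bound follows by applying the inequality to the nonzero components only. A bound of this kind is what allows a term of the form \(\sum _{i}|e_{i}(t)|^{1+\alpha }\) to be replaced by a fractional power of a quadratic quantity in \(e(t)\), which is the shape required by a finite-time stability argument.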

Corollary 1

In Case \(1'\), if there exist a constant \(\varepsilon \) and a positive-definite matrix \(Z\in R^{n\times n}\) which satisfy

then the system (15) without stochastic perturbations under the controller (18) will achieve synchronization in finite time, and the upper bound of its synchronization time is the same as (31).

3.2 Finite-Time Synchronization Criteria in Case \(2'\)

Theorem 2

Under Case \(2'\), the system (16) under the controller (18) will achieve finite-time synchronization, if there exist a constant \(\varepsilon \) and a positive-definite matrix \(Z\in R^{n\times n}\) such that

The upper bound of its convergence time is the same as that in Theorem 1.

Proof

By Eq. (16), it is easy to see that

Transform (34) into the compact form as follows:

We construct the Lyapunov function as follows:

Then we calculate the time derivative of \(V(e(t))\) along the trajectories of system (35). By Itô's formula, we obtain the following stochastic differential

where


According to Lemma 2 and Assumption 1, we have

where \(\varepsilon >0\) is an arbitrary positive constant.

Combining (38)–(39) results in

According to \(0<\alpha <1\) and Lemma 1, we get

then,

Thus, based on Eq. (33), taking the expectations on both sides of Eq. (40), we have

And

By the finite-time stabilization theory in [21], \(V(e(t))\) stochastically converges to zero in finite time. The upper bound of its convergence time is

Thus we complete the proof.

Remark 1

This paper addresses a gap in the finite-time control of memristive neural networks. For the two kinds of memductance functions, discrete and continuous finite-time stability criteria are obtained for memristive neural networks, respectively.

Remark 2

In this paper, the two proposed synchronization criteria not only ensure that neural networks with stochastic perturbations can be stabilized, but also give an estimate of the upper bound of the convergence time. The proposed theoretical results are convenient for related applications of memristive neural networks.
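How a fractional-power feedback term produces an explicit convergence-time bound can be illustrated on a scalar toy problem. The sketch below is not the error system of this paper: it assumes the deterministic scalar equation \(\dot{e}=-k\,\mathrm {sign}(e)|e|^{\alpha }\) with \(0<\alpha <1\), whose solution reaches zero exactly at \(T=|e(0)|^{1-\alpha }/(k(1-\alpha ))\). The function names, the step size and the parameter values are hypothetical.

```python
import math


def settling_time_bound(e0, k, alpha):
    """Closed-form settling time of de/dt = -k*sign(e)*|e|**alpha, 0 < alpha < 1."""
    return abs(e0) ** (1.0 - alpha) / (k * (1.0 - alpha))


def simulate_hitting_time(e0, k, alpha, dt=1e-4, t_max=50.0):
    """Integrate the scalar toy equation with explicit Euler steps.

    Returns the first time at which the state is exactly zero. A step that
    would cross zero is clamped to zero to avoid numerical chattering.
    """
    e = e0
    steps = 0
    max_steps = int(t_max / dt)
    while e != 0.0 and steps < max_steps:
        decrement = k * abs(e) ** alpha * dt
        if decrement >= abs(e):
            e = 0.0  # the step would overshoot the origin
        else:
            e -= math.copysign(decrement, e)
        steps += 1
    return steps * dt


# Toy values (assumptions): e(0) = 1, k = 1, alpha = 0.5.
t_bound = settling_time_bound(1.0, 1.0, 0.5)
t_hit = simulate_hitting_time(1.0, 1.0, 0.5)
print(t_bound, t_hit)
```

In contrast, a purely linear feedback \(\dot{e}=-ke\) only decays exponentially and never reaches zero in finite time, which is why the controller includes a term with exponent \(\alpha <1\).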

Corollary 2

Under Case \(2'\), the system (16) without stochastic perturbations under controller (18) will achieve finite-time synchronization, if there exist a constant \(\varepsilon \) and a positive-definite matrix \(Z\in R^{n\times n}\) such that

The upper bound of its convergence time is the same as Eq. (44).

4 Illustrative Example

In this section, two numerical examples are given to illustrate the effectiveness of the results obtained above.

Example 1

In Case \(1'\), we consider the two-dimensional memristive neural network with stochastic perturbations as follows:

where \(f(x(t))=\tanh (x(t))\), and

So we get

And \(h(t,x(t))=diag(\tanh (x_{1}(t)), \tanh (x_{2}(t)))\). Then \(Q=M\) is the \(2\times 2\) identity matrix. The initial value of the error system is \(e(0)=[1,-1]^{T}\), so \(\parallel e(0)\parallel =1.414\). We choose \(\alpha =0.5\). If \(Z\) is the identity matrix, and \(\varepsilon =1, \, \underline{K}_{1}=2\), then we get

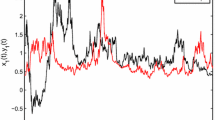

By choosing an arbitrary gain with the bound \(\underline{K}_{2}=0.6\), the system in Example 1 is stabilized in finite time. The upper bound of its convergence time is \(T=\frac{\parallel e(0)\parallel ^{0.5}}{0.6\times 0.5}=3.9637\). The simulation results are depicted in Fig. 2, which shows the evolution of the errors \(e_{1}(t),e_{2}(t)\) for the controlled system in Example 1. The simulation results confirm the effectiveness of Theorem 1.
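The arithmetic behind this bound can be reproduced in a few lines. The sketch assumes that the expression has the form \(T=\parallel e(0)\parallel ^{1-\alpha }/(\underline{K}_{2}(1-\alpha ))\) with \(\alpha =0.5\) and \(\underline{K}_{2}=0.6\); since \(\alpha =1-\alpha \) here, the source alone does not settle which of the two appears. The function name `convergence_time_bound` is hypothetical.

```python
import math


def convergence_time_bound(e0, gain, alpha):
    """Evaluate ||e(0)||**(1 - alpha) / (gain * (1 - alpha)).

    Assumed form of the bound; e0 is the initial error vector and gain
    plays the role of the lower bound on the second controller gain.
    """
    norm = math.sqrt(sum(v * v for v in e0))  # Euclidean norm of e(0)
    return norm ** (1.0 - alpha) / (gain * (1.0 - alpha))


# Example 1 values: e(0) = [1, -1]^T, gain 0.6, alpha = 0.5.
T = convergence_time_bound([1.0, -1.0], 0.6, 0.5)
print(T)
```

With the unrounded norm \(\sqrt{2}\) the expression evaluates to about 3.9640; using the rounded value 1.414 quoted in the text gives 3.9637.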

Example 2

In Case \(2'\), we consider the following two-dimensional memristive neural network with stochastic perturbations

where \(f(x(t))=\tanh (x(t))\), and \(a_{1}(x_{1}(t))=1+0.3\sin (x_{1}(t)), \, a_{2}(x_{2}(t))=1+0.6\cos (x_{2}(t)), \, b_{11}(x_{1}(t))=0.6\sin (x_{1}(t)), \, b_{12}(x_{1}(t))=0.8\sin (x_{1}(t)), \, b_{21}(x_{2}(t))=0.8\cos (x_{2}(t)), \, b_{22}(x_{2}(t))=0.6\cos (x_{2}(t)), \, \underline{K}_{1}=0.5\), and the values of the other parameters are the same as those in Example 1. Then we have

and we verified that

From Fig. 3, we can see that the error curves converge. The upper bound of the convergence time is \(T=\frac{\parallel e(0)\parallel ^{0.5}}{0.5\times 0.5}=4.7565\), which verifies the effectiveness of Theorem 2.

5 Conclusion

This paper studied finite-time anti-synchronization control of memristive neural networks with stochastic perturbations. For two kinds of memductance functions, finite-time stability criteria were obtained for memristive neural networks. Such networks can mimic the human brain in many applications, such as pattern recognition and associative memories. Finally, two numerical examples were given to illustrate the effectiveness of the proposed results.

References

1. Zhang G, Shen Y, Wang L (2013) Global anti-synchronization of a class of chaotic memristive neural networks with time-varying delays. Neural Netw 46:1–8

2. Wu A, Wen S, Zeng Z (2012) Synchronization control of a class of memristor-based recurrent neural networks. Inf Sci 183:106–116

3. Wu A, Zeng Z (2012) Dynamical behaviors of memristor-based recurrent networks with time-varying delays. Neural Netw 36:1–10

4. Wu A, Zeng Z (2014) Passivity analysis of memristive neural networks with different memductance functions. Commun Nonlinear Sci Numer Simul 19:274–285

5. Zhang G, Shen Y (2014) Exponential synchronization of delayed memristor-based chaotic neural networks via periodically intermittent control. Neural Netw 55:1–10

6. Zhang G, Shen Y (2013) New algebraic criteria for synchronization stability of chaotic memristive neural networks with time-varying delays. IEEE Trans Neural Netw Learn Syst 24:1701–1707

7. Li X, Cao J (2008) Adaptive synchronization for delayed neural networks with stochastic perturbation. J Frankl Inst 345:779–791

8. Li X, Ding C, Zhu Q (2010) Synchronization of stochastic perturbed chaotic neural networks with mixed delays. J Frankl Inst 347:1266–1280

9. Zhu Q, Yang X, Wang H (2010) Stochastic asymptotic stability of delayed recurrent neural networks with both Markovian jump parameters and nonlinear disturbances. J Frankl Inst 347:1489–1510

10. Zhou W, Wang T, Mou J (2012) Synchronization control for the competitive complex networks with time delay and stochastic effects. Commun Nonlinear Sci Numer Simul 17:3417–3426

11. Gu H (2009) Adaptive synchronization for competitive neural networks with different time scales and stochastic perturbation. Neurocomputing 73:350–356

12. Wu A, Zeng Z (2013) Anti-synchronization control of a class of memristive recurrent neural networks. Commun Nonlinear Sci Numer Simul 18:373–385

13. Yang X, Cao J (2010) Finite-time synchronization of complex networks. Appl Math Model 34:3631–3641

14. Wang W, Peng H, Li L, Xiao J, Yang Y (2013) Finite-time function projective synchronization in complex multi-links networks with time-varying delay. Neural Process Lett. doi:10.1007/s11063-013-9335-4

15. Aubin J, Frankowska H (1990) Set-valued analysis. Birkhäuser, Boston

16. Filippov A (1984) Differential equations with discontinuous right-hand side. Mathematics and its applications (Soviet series). Kluwer Academic, Boston

17. Hardy G, Littlewood J, Pólya G (1988) Inequalities. Cambridge Mathematical Library, Cambridge University Press, Cambridge

18. Boyd S, Ghaoui L, Feron E, Balakrishnan V (1994) Linear matrix inequalities in system and control theory. SIAM studies in applied mathematics, vol 15. SIAM, Philadelphia

19. Wu A, Zeng Z, Xiao J (2013) Dynamic evolution evoked by external inputs in memristor-based wavelet neural networks with different memductance functions. Adv Differ Equ 258:1–14

20. Mao X, Yuan C (2006) Stochastic differential equations with Markovian switching. Imperial College Press, London

21. Bhat S, Bernstein D (1997) Finite-time stability of homogeneous systems. In: Proceedings of the American control conference, vol 4. pp 2513–2514

Acknowledgments

This paper is supported by the National Natural Science Foundation of China (Grant Nos. 61100204, 61170269, 61121061), the China Postdoctoral Science Foundation Funded Project (Grant No. 2013M540070), the Beijing Higher Education Young Elite Teacher Project (Grant No. YETP0449), and the Beijing Natural Science Foundation (Grant No. 4142016).


Wang, W., Li, L., Peng, H. et al. Finite-Time Anti-synchronization Control of Memristive Neural Networks With Stochastic Perturbations. Neural Process Lett 43, 49–63 (2016). https://doi.org/10.1007/s11063-014-9401-6


DOI: https://doi.org/10.1007/s11063-014-9401-6