Abstract

Image inpainting in the wavelet domain refers to the recovery of an image from incomplete wavelet coefficients. In this paper, we propose a wavelet inpainting model by using fractional order total variation regularization approach. Moreover, we use a simple but very efficient primal–dual algorithm to calculate the optimal solution. In the light of saddle-point theory, the convergence of new algorithm is guaranteed. Experimental results are presented to show performance of the proposed algorithm.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Image inpainting, also known as image completion or disocclusion, is an active research area in image processing. It refers to the problem of filling in missing or damaged regions in image, either in the pixel domain or in a transformed domain.

Inpainting in the image domain, which uses the values of the available pixels to fill the missing pixels, has been widely investigated in the last two decades. Bertalmo et al. (2000) introduced the partial differential equations (PDEs) to smoothly propagate the information from the neighboring pixels into the areas contain the unknown pixels. Chan and Shen (2001a) considered a total variational (TV) inpainting model. The TV inpainting model fills in the missing regions such that the TV is minimized, and its use is motivated by the wide applications of TV in image restoration. Further, higher order methods such as the curvature-driven diffusion (CDD) (Chan and Shen 2001b) and Euler’s elastic based variational model (Chan and Shen 2002) were also applied to the inpainting problem in order to overcome the block effects caused by the TV model. These edge-preserved methods are unable to recover the texture regions efficiently. Therefore, the exemplar-based texture synthesis techniques (Criminisi et al. 2004; Aujol et al. 2011) were developed for recovering the textured images. Other techniques such as morphological component analysis is also applied on simultaneous cartoon and texture image inpainting by Elad et al. (2005). In Gilboa and Osher (2008), Chen and Cheng (2010), Peyre et al. (2011), non-local total variation was further investigated and applied in various image restoration problem including the inpainting task. These non-local techniques are more suitable for recovering the texture and fine details of images than the previous local methods. In Jin and Ye (2015), a patch-based image inpainting method using a low-rank Hankel structured matrix completion approach was further investigated. All these works concentrate on image inpainting in the pixel domain.

Inpainting in transformed domains is totally different because each single corruption of data can, in general, affect the whole image, and thus an inpainting region in the pixel domain is not well defined. Transformed domain inpainting arises in practical applications because images are usually formatted, transmitted and stored in a transformed domain. In such situations, the transform is either a discrete cosine transform (DCT) or a discrete wavelet transform (DWT). In Fig. 1, we show the layout of 3-scale wavelet decomposition. The higher frequency subbands, such as”HH_1, HH_2, LH_1, HL_1, LH_2,HL_2” in Fig. 1, do not greatly affect visual quality, while the loss of other high frequencies in the coarsest subband (”HL_3,LH_3” in Fig. 1) create Gibbs artifacts or other blur effects. During storage and transmission, certain coefficients may be lost or corrupted, which naturally leads to the transformed domain inpainting problem. In Cai et al. (2008) used tight-frame approach for inpainting and showed that it was equivalent to using an \(l_1\) regularization on the tight-frame coefficients. In He and Wang (2014), a weighted sparse restoration model based on the wavelet frame are proposed. This new algorithm can be considered as the incorporation of prior structural information of the wavelet frame coefficients into the traditional \(l_1\) model.

An alternative class of approaches is to use a hybrid method to control both image and wavelet domains. We start with a standard image model

In the following, images are transformed from the pixel domain to a wavelet domain through a wavelet transform. The wavelet coefficients of the noisy image f under an orthogonal wavelet transform W are given by \(\hat{{f}}=Wf\). The observed wavelet coefficients \(\hat{{f}}\) are given by \(\hat{{f}}\) but with the missing ones set to zero, i.e.

Here, \(\Omega \) is the complete index set in the wavelet domain, and \(I\subset \Omega \) is the set of available wavelet coefficients. Then, an observed image f is obtained by the inverse wavelet transform \(f=W^{T}\hat{{f}}\).

Durand and Froment (2003) considered to reconstruct the wavelet coefficients using the total variation norm. To fill in the corrupted coefficients in the wavelet domain in such a way that sharp edges in the image domain are preserved, Chan et al. (2006) proposed the following to minimize the objective function:

where

is the TV norm. \(D_z \) is the forward difference operator in the z-direction for \(z\in \{x,y\}, \lambda \) is a regularization parameter \(I\subset \Omega \) be the uncorrupted known index set. By using the dual formulation of the TV norm, the minimization of (1) becomes the minimax problem

where the discrete divergence of pis a vector defined as

with \(p_{0,s}^x =p_{r,0}^y =0\) for \(r,s=1,\ldots ,n\). It is a method that combines coefficients in the wavelet domain and regularization in the image domain.

Although the classical total variation is surprisingly efficient for recovering some lost wavelet coefficients as presented in Chan et al. (2006), it is well known that the total variation regularization is not suitable for images with fine structures, details and textures. In Zhang and Chan (2010), Zhang et al. (2010), extended the TV wavelet inpainting model to the corresponding non-local (NL) form, and proposed an efficient iterative algorithm called Bregmanized operator splitting (BOS) to solve the NLTV wavelet inpainting models (NLTV-BOS). In fact, these models discussed above are all established as special cases of variational image restoration problem. However, these time-consuming non-local methods cannot eliminate the staircase effect and even cause extra blocky artifacts. For the staircase effect elimination, high-order derivative based models perform well, but they often cause blurring of the edges. As a compromise between the first-order TV regularized models and the high-order derivative based models, some fractional-order derivative based models have been introduced in Liu and Chang (1997), Chen et al. (1990, 2011), Pu et al. (2010), Idczak and Kamocki (2015), Bai and Feng (2007) for additive noise removal and image restoration. They can ease the conflict between staircase elimination and edge preservation by choosing the order of derivative properly. Moreover, the fractional-order derivative operator has a “non-local” behavior because the fractional-order derivative at a point depends upon the characteristics of the entire function and not just the values in the vicinity of the point, which is beneficial to improve the performance of texture preservation. The numerical results in literatures (Chen et al. 2013; Zhang et al. 2012; Zhang and Chen 2015) have demonstrated that the fractional-order derivative performs well for eliminating staircase effect and preserving textures.

In this paper, motivated by these works, in order to recover textures and geometry structures simultaneously regularization for different loss cases, we extend the total variation based wavelet inpainting to the fractional order total variation based wavelet inpainting. Moreover, for new model, we also propose a fast iterative algorithm based on the primal–dual method. The primal–dual algorithm was first presented by Arrow et al. (1958) and named as the primal–dual hybrid gradient (PDHG) method in Zhu and Chan (2008). In this method, each iteration updates both a primal and a dual variable. It is thus able to avoid some of the difficulties that arise when working only on the primal or dual side (Chambolle 2004; Esser et al. 2010). The convergence of the primal–dual algorithm has been studied in He and Yuan (2012), Bonettini and Ruggiero (2012). In our paper, the primal–dual algorithm is used to solve the fractional-order total variation inpainting model. The efficiency of this simple model for natural images is validated by a large amount of numerical simulations. Our experiments show that even with substantial loss of wavelet coefficients (up to 50%, including low frequencies), our model may also reconstruct the original image remarkably well, especially in retaining geometric structures. The challenges for fractional regularization in practice include choosing an appropriate order, and finding a stable and efficient algorithm.

The remainder of this paper is organized as follows: Sect. 2 describes the notations for discrete fractional-order derivative. In Sect. 3, we propose a fractional order primal–dual model for image inpainting and detail the proposed algorithm with a convergence analysis. Section 4 reports our simulation results with comparisons, and Sect. 5 concludes this work.

2 Fractional-order derivative

To simplify, our images will be 2-dimensional matrices of size \(N\times N\). We denote by X the Euclidean space \(\mathbb {R}^{N\times N}\). For any \(u=(u_{i,j} )_{i,j=1}^N \in X\), we denote \(u_{i,j} =0\) for \(i,j<1\) or \(i,j>N\).

From Grünwald–Letnikov fractional derivative definition (Zhang et al. 2012), the discrete fractional- order gradient \(\nabla ^{\alpha }u\) is a vector in \(Y=X\times X\) given by

with

where \(C_k^\alpha =\frac{\Gamma (\alpha +1)}{\Gamma (k+1)\Gamma (\alpha -k+1)}\) and \(\Gamma (x)\) is the Gamma function. In additional, the coefficients \(C^{(\alpha )}\) can also be obtained recursively from

When \(\alpha =1,\;C_k^1 =0\) for \(k>1\).

For any \(p=(p^{1},p^{2})\in Y\) the discrete fractional-order divergence is defined as

One can check easily that (3) and (4) satisfy the property as follows:

where \(\left\langle {u,v} \right\rangle _X =\sum _{i,j=1}^N {u_{i,j} v_{i,j} ,\forall u,v\in X} \) and \(\left\langle {p,q} \right\rangle _Y =\sum _{i,j=1}^N {(p_{i,j}^1 q_{i,j}^1 +p_{i,j}^2 q_{i,j}^2 ),\forall p} =(p^{1},p^{2}),q=(q^{1},q^{2})\in Y\), are the inner products of X and Y, respectively.

Let \(J(u)=\left\| {\nabla ^{\alpha }u} \right\| _1 \). Then, its conjugate function \(J_P^{*} (p)\) satisfies the following property:

where \(P=\left\{ {p\in Y:\left\| p \right\| _\infty \le 1} \right\} \) and \(\left\| p \right\| _\infty \) denotes the discrete maximum norm defined as

3 Proposed algorithm

Based on the model (2), we propose a wavelet inpainting model by fractional-order total variation as follows:

There are many numerical algorithms for solving the variation problem related to image inpainting. However, they suffer from slow running or high computational complexity or lack of knowledge on the selection of the regularization parameter. In this paper, by referring the primal–dual algorithm for solving saddle-point problems, we propose a primal–dual formulation to solve the fractional-order wavelet inpainting model (5).

3.1 Primal–dual method for solving saddle-point problems

For clear description, we give some preliminaries on the first-order primal–dual method summarized in Chambolle and Pock (2010). We consider the saddle-point problem

where \(G:X\rightarrow \left[ {0,+\infty } \right) \) and \(F^{{*}}:Y\rightarrow \left[ {0,+\infty } \right) \) are proper convex, lower-semicontinuous (l.s.c.) functions, \(F^{{*}}\) being itself the convex conjugate of a convex l.s.c. function F.

This saddle-point problem is a primal–dual formulation of the following nonlinear primal problem

Or of the corresponding dual problem

To solve the saddle-point problem (6), Chambolle and Pock (2010) proposed a primal–dual algorithm. Let the primal variable x be fixed. Taking the derivative of the dual variable y, we have

Similarly, by taking the derivative of the primal variable x with the fixed dual variable y, we have

where \(\partial F^{{*}}\) and \(\partial G\) are the subgradients of functions \(F^{{*}}\) and G.

In the sense that their resolvent operator through

has a closed-form representation.

As analyzed in He and Yuan (2012), Bonettini and Ruggiero (2012), Chambolle and Pock (2010), the saddle-point problem (6) can be regarded as the primal–dual formulation of a nonlinear programming problem, and this fact has inspired some primal–dual algorithms for TV image restoration problems. We refer to, e.g., Zhang et al. (2011) for their numerical efficiency.

3.2 New algorithm

Let \(\chi \) be a diagonal matrix with \(\chi _{i,j} =1\) if \((i,j)\in I\) and \(\chi _{i,j} =0\) if \((i,j)\in \Omega \backslash I\). The minimization problem in (5) can be rewritten as

For convenience, we assume that two variables u and \({\hat{u}}\) can be transformed into each other by \({\hat{u}}=Wu\) and \(u=W^{T}\hat{{u}}\). Therefore, the minimization problem (7) becomes

In the form of the general saddle-point problem (6), we see that \(A=\nabla ^{\alpha }, G(u)=\frac{\lambda }{2}\left\| {\chi W(u-f)} \right\| _2^2 , F^{{*}}(p)=J_P^{*} (p)\). The minimization problem of (8) can be solved exactly as

Substituting (9) into (8) yields the following dual problem:

where \(-A^{T}\) is the corresponding discretization of the fractional-order divergence.

Since \(F^{{*}}\) is the indicator function of a convex set, the resolvent operator reduces to pointwise Euclidean projectors onto \(L^{2}\) balls

The resolvent operator with respect to G poses simple pointwise quadratic problems. The solution is trivially given by

where \({\tilde{u}}=u-\tau \nabla ^{\alpha {*}}p\). When wavelet transform matrix Wis orthogonal, \(W^{T}W=I\). This method can also be generalized to non-orthogonal wavelet transforms, for example, bi-orthogonal wavelet transforms (Cohen et al. 1992), redundant transforms (Kingsbury 2001) and tight frames (Ron and Shen 1997). In these cases, Wis not orthogonal, but still has full rank, and hence \(W^{T}W\) is invertible.

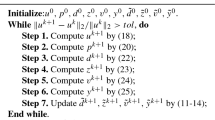

Unifying all the schemes together, we obtain the following algorithm:

The Barbara image with additive noise for 10% of wavelet coefficients lost (\(\sigma =10)\): a observed image, b restored image with \(\alpha =0.8\) by FOTV-PD (PSNR \(=23.74\) dB), c restored image with \(\alpha =1.2\) by FOTV- PD (PSNR \(=24.28\) dB), d restored image \(\alpha =1.4\) by FOTV-PD (PSNR \(=24.65\) dB), e restored image by TV-PD (PSNR \(=22.61\) dB), f restored image by NLTV-BOS(PSNR \(=23.59\) dB), g Zoom of (d), h Zoom of (e), i Zoom of (f)

The Lena image with additive noise for 10% of wavelet coefficients lost (\(\sigma =10)\): a observed image, b restored image with \(\alpha =0.8\) by FOTV-PD (PSNR \(=24.92\) dB), c restored image with \(\alpha =1.2\) by FOTV-PD (PSNR \(=25.03\) dB), d restored image with \(\alpha =1.4\) by FOTV-PD (PSNR \(=25.46\) dB), e restored image by TV-PD(PSNR \(=22.97\) dB), f restored image by NLTV-BOS (PSNR \(=23.52\) dB), g Zoom of (d), h Zoom of (e), i Zoom of (f)

Peppers image with additive noise for 50% wavelet coefficients randomly lost (\(\sigma =10)\). a Received image, b restored image with \(\alpha =0.8\) by FOTV-PD (PSNR \(=17.99\) dB), c restored image with \(\alpha =1.2\) by FOTV-PD (PSNR \(=20.18\) dB), d restored image with \(\alpha =1.4\) by FOTV-PD (PSNR \(=20.68\) dB), e restored image by TV-PD (PSNR \(=17.18\) dB), f restored image by NLTV-BOS (PSNR \(=19.65\) dB), g Zoom of (d), h Zoom of (e), i Zoom of (f)

Baboon image with additive noise for 50% wavelet coefficients randomly lost. a Received image, b restored image with \(\alpha =0.8\) by FOTV-PD (PSNR \(=20.56\) dB), c restored image with \(\alpha =1.2\) by FOTV-PD (PSNR \(=20.31\) dB), d restored image with \(\alpha =1.4\) by FOTV-PD (PSNR \(=21.06\) dB), e restored image by TV-PD (PSNR \(=18.23\) dB), f restored image by NLTV-BOS (PSNR \(=19.37\) dB), g Zoom of (d), h Zoom of (e), i Zoom of (f)

The Boat image with additive noise for whole LH subband loss(\(\sigma =15)\). a Received image, b the result by FOTV-PD (\(\alpha =1.2\), PSNR \(=26.75\) dB, CPU time \(=12.19\) s), c restored image by TV-PD(PSNR \(=24.32\) dB, CPU time \(=22.36\) s), d the result by NLTV-BOS (PSNR \(=25.48\) dB, CPU time \(=535.23\) s)

The Goldhill image with additive noise for whole HL subband loss (\(\sigma =15)\). a Received image, b the result by FOTV-PD (\(\alpha =1.2\), PSNR \(=25.12\) dB, CPU time \(=20.92\) s), c restored image by TV-PD (PSNR \(=22.19\) dB, CPU time \(=35.47\). d the result by NLTV-BOS (PSNR \(=24.48\) dB, CPU time \(=524.59\) s)

The parameters \(\tau ^{n}, \sigma ^{n}\) play a crucial role for the efficiency of the algorithm. Thus, it is important to prove the convergence of the algorithm under conditions as flexible as possible (Bonettini and Ruggiero 2012). Next we discuss the convergence of the proposed algorithm. In order to guarantee the convergence, suppose a parameter \(L=\left\| {\nabla ^{\alpha }} \right\| \). It has been proven in Bonettini and Ruggiero (2012) that the primal–dual algorithm converges to the saddle-point subject to a constraint of the parameter L.

Let \(w_i =(-1)^{i}C_i^\alpha \). Then, we have

Therefore

which guarantees the convergence of new algorithm.

For primal–dual pair (u, p), the partial primal–dual gap G(u, p) is defined by

The primal–dual gap G(u, p) is a measure of closeness of the primal–dual (u, p) to the primal–dual solution, and we use it to design the stopping criterion for our numerical algorithm in this paper.

4 Experimental results

In this section, we evaluate the performance of the proposed algorithm-FOTV-PD and compare it with TV-PD (Chan et al. 2006) and NLTV-BOS (Zhang and Chan 2010) in wavelet inpainting by PSNR value and subjective image quality. Six images (size of \(256\times 256\)): Barbara, Lena, Peppers, Baboon, Boat and Goldhill are used for our tests in Fig. 2. The reconstruction results are shown in this section and also quantitatively evaluated by the peak signal to noise ratio (PSNR) defined by

where \(u_0\) is the original image without noise and u is the inpainted image. In all the following experiments, we apply the Daubechies 7–9 biorthogonal wavelets with symmetric extension for three algorithms. We use the WaveLab to implement the forward and the backward biorthogonal wavelet transforms. 3-scale wavelet decomposition is used for the images in the test.

For the FOTV-PD algorithm, the proper selection of the fraction depends on the image to inpaint. We illustrate the inpainted images with \(\alpha =0.8,\;1.2,\;1.4\) respectively. As represented, the smaller \(\alpha \) leads to the blocky (staircase) effects and the larger \(\alpha \) will make restored image too smooth. \(\alpha =1.4\) is appropriate for nonsmooth problems because the diffusion coefficients are close to zero in regions representing large gradients of the fields, allowing discontinuities at those regions.

The steplength \(\sigma ^{n}\) and \(\tau ^{n}\) are chosen for the convergence (He and Yuan 2012). Indeed, the proposed steplength choices are

For the weight function w(x, y) in the NLTV-BOS regularization, we use the same setting as that adopted in Zhang and Chan (2010), i.e., the patch size and the searching window for the semi-local weight are fixed at 5 and 15. The 10 best neighbors and 4 nearest neighbors are chosen for the weight computation of each pixel.

Experiment 1

Barbara and Lena noiseless with 10% wavelet coefficients lost:

Figures 3 and 4 show the Barbara and Lena images with 10% wavelet coefficients lost randomly and its restored image. We illustrate the inpainted images with \(\alpha =0.8, \;{1.2}, \;{1.4}\) respectively. In Fig. 3b, PSNR of the inpainted images with \(\alpha =0.8\) is 29.37 dB. Fig. 3c is obtained by \(\alpha =1.2\) with PSNR \(=34.55\) dB and Fig. 3d with PSNR \(=34.50\) dB is obtained by \(\alpha =1.4\). In Fig. 4b, PSNR of the inpainted images with \(\alpha =0.8\) is 32.28 dB. Figure 4c is obtained by \(\alpha =1.2\) with PSNR \(=33.45\) dB and Fig. 4d with PSNR \(=34.86\) dB is obtained by \(\alpha =1.4\).

Experiment 2

Peppers and Baboon noiseless with 50% wavelet coefficients lost:

In Fig. 5 shows the Peppers image with 50% wavelet coefficients lost randomly and its restored image. We illustrate the inpainted images with \(\alpha =0.8, \;{1.2}, \;{1.4}\) respectively. In Fig. 5b, PSNR of the inpainted images with \(\alpha =0.8\) is 20.19 dB. Figure. 5c is obtained by \(\alpha =1.2\) with PSNR \(=20.25\) dB and Fig. 5d with PSNR \(=21.60\) dB is obtained by \(\alpha =1.4\). In Fig. 6b, PSNR of the inpainted images with \(\alpha =0.8\) is 21.15dB. Figure 6c is obtained by \(\alpha =1.2\) with PSNR \(=21.72\) dB and Fig. 6d with PSNR \(=22.01\) dB is obtained by \(\alpha =1.4\).

Experiment 3

Barbara and Lena with additive noise for 10% wavelet coefficients lost:

In order to show that the proposed algorithm is capable of denoising as a by-product of the inpainting, we added a zero mean white Gaussian noise (\(\sigma ={10})\) to the images Barbara and Lena with 10% of wavelet coefficients lost randomly and then applied the FOTV-PD algorithm. Figures 7 and 8 show the inpainting result. We illustrate the inpainted images with \(\alpha {=0.8}, \;{1.2}, \;{1.4}\) by FOTV-PD respectively. It is observed that FOTV-PD outperforms TV-PD and NLTV-BOS regularization in terms of PSNR and visual image quality, especially for the repeating cloth pattern.

Experiment 4

Peppers and Baboon with additive noise for 50% wavelet coefficients lost:

In Figs. 9 and 10, We add the white Gaussian noise with (\(\sigma ={10})\) to Peppers and Baboon images with 50% of wavelet coefficients lost randomly. The images and their zoomed show that FOTV-PD can remove almost all the staircase effects and recover cleaner edges than TV-PD and NLTV-BOS.

Experiment 5

Lena and peppers with the two cases of wavelet coefficients missing:

In Figs. 11 and 12, the Lena and Peppers images are used to test three algorithms. The two cases of wavelet coefficients missing: the whole LH and HL loss are considered. The corresponding PSNR values and CPU time are also shown. The images and their zoomed in views show that these algorithms can remove small oscillations created by lost coefficients, while the PSNR of NLTV-BOS and FOTV-PD give about 2 db higher than TV-PD. The CPU time of FOTV-PD is less than NLTV-BOS.

Experiment 6

Boat and Goldhill with additive noise for the two cases of wavelet coefficients missing:

In Figs. 13 and 14, we add the white Gaussian noise with (\(\sigma ={15})\) to Boat and Goldhill images for testing three algorithms. The two cases of wavelet coefficients missing: the whole LH and HL loss are considered. The FOTV-PD regularization gives a higher PSNR than TV-PD and NLTV-BOS. The CPU time of FOTV-PD is less than NLTV-BOS.

Tables 1, 2 and 3 give the results of our experiments. In summary, in Figs. 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13 and 14, the results show that the larger the parameter \(\alpha \) is, the better the textures are preserved. The resultant images show that our method can remove the noise and keep more details of the image. It is observed that our method (FOTV-PD) is more efficient than the NLTV-BOS algorithm in the CPU time though the PSNR values are more or less the same. The FOTV-PD regularization gives a higher PSNR and less staircase effect than TV-PD.

5 Conclusion

In this paper we have presented a FOTV model for wavelet inpainting problem. We applied the primal–dual algorithm to optimize the model. We discussed different loss cases in the wavelet domain, with and without noise. The numerical examples have shown that the new algorithm is very effective not only for restoring geometric features but also for filling in some image patterns. In future, on one hand, further theoretical work should attempt to document the performance of the method in filling in missing samples when the object truly has a sparse representation. On the other hand, in new algorithms, the selection of parameters is crucial for restoration quality. In our future work, we will discuss the adaptive parameter selection methods for the fractional-order total variation models.

References

Arrow, K., Hurwicz, L., & Uzawa, H. (1958). Studies in linear and nonlinear programming. In K. J. Arrow (Ed.), Mathematical studies in the social sciences. Palo Alto, CA: Stanford University Press.

Aujol, J.-F., Ladjal, S., & Masnou, S. (2011). Exemplar-based inpainting from a variational point of view. International Journal of Computer Vision, 93(3), 319–347.

Bai, J., & Feng, X. (2007). Fractional-order anisotropic diffusion for image denoising. IEEE Transactions on Image Processing, 16(10), 2492–2502.

Bertalmo, M., Sapiro, G., Caselles, V., & Ballester, C. (2000). Image inpainting. In Proceedings of SIGGRAPH (pp. 417–424).

Bonettini, S., & Ruggiero, V. (2012). On the convergence of primal–dual hybrid gradient algorithms for total variation image restoration. Journal of mathematical Imaging and Vision, 44(3), 236–253.

Cai, J. F., Chan, R. H., & Shen, Z. (2008). A framelet-based image inpainting algorithm. Applied and Computational Harmonic Analysis, 24(2), 131–149.

Chambolle, A. (2004). An algorithm for total variation minimization and applications. Journal of Mathematical Imaging and Vision, 20(1–2), 89–97.

Chambolle, A., & Pock, T. (2010). A first-order primal–dual algorithm for convex problems with applications to imaging. Journal of Mathematical Imaging and Vision, 40(1), 120–145.

Chan, T., & Shen, J. (2001a). Mathematical models for local nontexture inpaintings. SIAM Journal on Applied Mathematics, 62(3), 1019–1043.

Chan, T., & Shen, J. (2001b). Non-texture inpainting by curvature-driven diffusions. Journal of Visual Communication and Image Representation, 4(12), 436–449.

Chan, T., & Shen, J. (2002). Euler’s elastica and curvature-based inpainting. SIAM Journal on Applied Mathematics, 63(2), 564–592.

Chan, T., Shen, J., & Zhou, H. (2006). Total variation wavelet inpainting. Journal of Mathematical Imaging and Vision, 25(1), 107–125.

Chen, D., Chen, Y., & Xue, D. (2011). Digital fractional order Savitzky–Golay differentiator. IEEE Transactions on Circuits and Systems II, 58(11), 758–762.

Chen, D., Sheng, H., Chen, Y., & Xue, D. (1990). Fractional-order variational optical flow model for motion estimation. Philosophical Transactions of the Royal Society A, 2013, 371.

Chen, D., Sun, S., Zhang, C., Chen, Y., & Xue, D. (2013). Fractional order TV-L2 model for image denoising. Berlin: Central European Journal of Physics.

Chen, D. Q., & Cheng, L. Z. (2010). Alternative minimisation algorithm for non-local total variational image deblurring. IET Image Processing, 4(5), 353–364.

Cohen, A., Daubeches, I., & Feauveau, J. C. (1992). Biorthogonal bases of compactly supported wavelets. Communications on Pure and Applied Mathematics, 45(5), 485–560.

Criminisi, A., Perez, P., & Toyama, K. (2004). Region filling and object removal by exemplar-based image inpainting. IEEE Transactions on Image Processing, 13(9), 200–1212.

Durand, S., & Froment, J. (2003). Reconstruction of wavelet coefficients using total variation minimization. SIAM Journal on Scientific Computing, 24, 1754–1767.

Elad, M., Starck, J., Querre, P., & Donoho, D. (2005). Simultaneous cartoon and texture image inpainting using morphological component analysis (MCA). Applied and Computational Harmonic Analysis, 19, 340–358.

Esser, E., Zhang, X., & Chan, T. F. (2010). A general framework for a class of first order primal–dual algorithms for convex optimization in imaging science. SIAM Journal on Imaging Sciences, 3(4), 1015–1046.

Gilboa, G., & Osher, S. (2008). Nonlocal operators with applications to image processing. SIAM Multiscale Modeling and Simulation, 7(3), 1005–1028.

He, B., & Yuan, X. (2012). Convergence analysis of primal–dual algorithms for a saddle-point problem: From contraction perspective. SIAM Journal on Imaging Sciences, 5(1), 119–149.

He, L., & Wang, Y. (2014). Iterative support detection-based split Bregman method for wavelet frame-based image inpainting. IEEE Transactions on Image Processing, 23(12), 5470–5485.

Idczak, D., & Kamocki, R. (2015). Fractional differential repetitive processes with Riemann–Liouville and Caputo derivatives. Multidimensional Systems and Signal Processing, 26, 193–206.

Jin, K. H., & Ye, J. C. (2015). Annihilating filter-based low-rank Hankel matrix approach for image inpainting. IEEE Transactions on Image Processing, 24(11), 3498–3511.

Kingsbury, N. (2001). Complex wavelets for shift invariant analysis and filtering of signals. Applied and Computational Harmonic Analysis, 10(3), 234–253.

Liu, S.-C., & Chang, S. (1997). Dimension estimation of discrete-time fractional Brownian motion with applications to image texture classification. IEEE Transactions on Image Processing, 6(8), 1176–1184.

Peyre, G., Bougleux, S., & Cohen, L. (2011). Non-local regularization of inverse problems. Inverse Problems and Imaging, 5(2), 511–530.

Pu, Y. F., Zhou, J. L., & Yuan, X. (2010). Fractional differential mask: A fractional differential-based approach for multiscale texture enhancement. IEEE Transactions on Image Processing, 19(2), 491–511.

Ron, A., & Shen, Z. (1997). Affine systems in \(l^{2}(r^{d})\): The analysis of the analysis operator. Journal of Functional Analysis, 148, 408–447.

Zhang, J., & Chen, K. (2015). A total fractional-order variation model for image restoration with nonhomogeneous boundary conditions and its numerical solution. SIAM Journal on Imaging Sciences, 8(4), 2487–2518.

Zhang, J., Wei, Z., & Xiao, L. (2012). Adaptive fractional-order multiscale method for image denoising. Journal of Mathematical Imaging and Vision, 43(1), 39–49.

Zhang, X., Burger, M., & Osher, S. (2011). A unified primal–dual algorithm framework based on Bregman iteration. Journal of Scientific Computing, 46(1), 20–46.

Zhang, X. Q., Burger, M., Bresson, X., & Osher, S. (2010). Bregmanized nonlocal regularization for deconvolution and sparse reconstruction. SIAM Journal on Imaging Sciences, 3(3), 253–276.

Zhang, X. Q., & Chan, T. F. (2010). Wavelet inpainting by nonlocal total variation. Inverse Problems and Imaging, 4(1), 191–210.

Zhu, M., & Chan, T. (2008). An efficient primal–dual hybrid gradient algorithm for total variation image restoration. CAM Report 08-34, UCLA, Los Angeles, CA

Acknowledgements

The authors would like to thank the associate editor and reviewers for helpful comments that greatly improved the paper. This work was supported by the National Natural Science Foundation of China (Nos. 61301243, 61201455), Natural Science Foundation of Shandong Province of China (Nos. ZR2013FQ007, ZR2014AQ014), and the Fundamental Research Funds for the Central Universities (No. 15CX02060A) the China Scholarship Council.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Jiang, L., Yin, H. Wavelet inpainting by fractional order total variation. Multidim Syst Sign Process 29, 299–320 (2018). https://doi.org/10.1007/s11045-016-0465-5

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11045-016-0465-5