Abstract

Choices of regularization parameters are central to variational methods for image restoration . In this paper, a spatially adaptive (or distributed) regularization scheme is developed based on localized residuals, which properly balances the regularization weight between regions containing image details and homogeneous regions. Surrogate iterative methods are employed to handle given subsampled data in transformed domains, such as Fourier or wavelet data. In this respect, this work extends the spatially variant regularization technique previously established in Dong et al. (J Math Imaging Vis 40:82–104, 2011), which depends on the fact that the given data are degraded images only. Numerical experiments for the reconstruction from partial Fourier data and for wavelet inpainting prove the efficiency of the newly proposed approach.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Introduction

Image restoration is one of the fundamental tasks in image processing . The quality of the obtained reconstructions depends on several input factors: the quality of the given data, the choice of the regularization term or prior, and the proper balance of data fidelity versus filtering, among others. The goal of the present paper is to reconstruct an image, defined over the two-dimensional Lipschitz (image) domain Ω, from contaminated data f, defined over the data domain Λ. Given the original image \(\widehat u:\varOmega \to \mathbb {R}\), the data formation model is assumed to be

where \(K\widehat u\) represents possibly subsampled data which results from a linear sampling strategy and η is related to white Gaussian noise (with zero mean). As we later describe, η is given by white Gaussian noise in the numerical tests, and in the function space setting we assume it to be a zero mean L 2 function. A more precise description of the data formation model is postponed until section “Problem Settings and Notations”.

A popular approach to image restoration rests on variational methods, i.e., the characterization of the reconstructed image u as the solution of a minimization problem of the type

where Φ(⋅;f) represents a data fidelity term, R(⋅) an appropriate filter or prior, and α > 0 a regularization parameter which balances data fidelity and filtering. The choice of Φ is typically dictated by the type of noise contamination. As long as Gaussian noise is concerned, following the maximum likelihood we choose

On the other hand, R encodes prior information on the underlying image. For the sake of edge preservation, we choose

i.e., the total variation of a function u (see Eq. (1.5) below for its definition). Then the resulting model (1.2) becomes the well-known Rudin-Osher-Fatemi (ROF) model [31] which has been studied intensively in the literature; see, e.g., [5, 6, 8, 14, 21, 24, 29, 32, 33] as well as the monograph [38] and many references therein.

It is well known that the proper choice of α is delicate. A general guideline is the following one: Large α favorably removes noise in homogeneous image regions, but it also compromises image details in other regions; Small α, on the other hand, might be advantageous in regions with image details, but it adversely retains noise in homogeneous image regions. For an automated choice of α in (1.2) several methods have been devised; see for example [10, 18, 20, 34, 40] and the references therein, and see [22, 25] for the spatially distributed α methods. We note that instead of considering (1.2) one may equivalently study λΦ(u;f) + R(u) with λ = 1∕α. Based on this view, in [2], a piecewise constant function λ over the image domain is considered: The partitioning of the imagine domain is done via pre-segmentation and λ is computed by an augmented-Lagrangian-type algorithm. While still operating in a deterministic regime, [2] interestingly uses a spatially variant (more precisely a piecewise constant) parameter function λ.

Later it was noticed that stable choices of λ (or respectively α) have to incorporate statistical properties of the noise. In this vein, in [1, 15] automated update rules for λ based on statistics of local constraints were proposed. For statistical multiscale methods we refer to [16, 17, 26]. A different approach has been proposed in [35] for image denoising only, where non-local means [4] has been used to create a non-local data fidelity term. While the methods in [1, 15, 23] are highly competitive in practice, the adjustment of λ requests the output of K to be a deteriorated image which is again defined over Ω. This, however, limits the applicability of these approaches in situations where K involves transformation of an image into a different type of data output space. Particular examples of such transformations include wavelet or Fourier transforms. It is therefore the goal of this paper to study the approach of [15] in the context of reconstructing from such non-image data, possibly coupled with subsampling for the sake of fast data acquisition.

Here we also mention other spatially weighted total variation methods from the existing literatures. Very often these methods, different from [15, 23] (and also the present paper), weight the total variation locally by certain edge indicators. In [9, 42, 43] the difference of the image curvature was used as an edge indicator, while alternatively the (modified) difference of eigenvalues of the image Hessian was considered by Yan et al. [41] and Ruan et al. [30]. Recently, the authors in [27, 28] used similar edge indicators to weight the total variation anisotropically under the framework of quasi-variational inequalities.

The rest of the paper is organized as follows. Section “Problem Settings and Notations” describes in detail the problem settings and the notations. Our adaptive regularization approach is presented in section “Adaptive Regularization Approach”. Section “Numerical Experiments” concludes the paper with numerical experiments on reconstruction of partial Fourier data and wavelet inpainting .

Problem Settings and Notations

In the data formation model (1.1), we shall consider the continuous linear operator K as a composition of two linear operators, i.e., K = S ∘ T. More precisely, T : L 2(Ω) → L 2(Λ) is a linear orthogonal transformation which preserves the inner product, i.e., \(\langle u,v\rangle _{L^2(\varOmega )}=\langle Tu,Tv\rangle _{L^2(\varLambda )}\) for any u, v ∈ L 2(Ω). Typical examples of T include Fourier and orthogonal wavelet transforms. Further, we denote the subsampling domain by \(\tilde \varLambda \), which is assumed to be a (measurable) subset of Λ of finite positive measure, i.e., \(0<|\tilde \varLambda |<\infty \). Such a \(\tilde \varLambda \) may arise in application cases where there is no access to the complete measured data over Λ, but only to a reduced version of it. Define \({\mathbf {1}}_{\tilde \varLambda }\) as the characteristic function on \(\tilde \varLambda \), i.e., \({\mathbf {1}}_{\tilde \varLambda }\) equals 1 on \(\tilde \varLambda \) and 0 elsewhere. Then the so-called subsampling operator S : L 2(Λ) → L 2(Λ) is defined by \((Sf)(y)={\mathbf {1}}_{\tilde \varLambda }(y)f(y)\) almost everywhere (a.e.) on Λ. It is worth mentioning that S is an orthogonal projection which satisfies idempotency, i.e., S 2 = S, and self-adjointness, i.e., S ∗ = S, and that the range of S, denoted by \( \operatorname {\mathrm {Ran}} S\), is a closed subspace of L 2(Λ). In this setting, we consider the noise η as an arbitrary oscillatory function in \( \operatorname {\mathrm {Ran}} S\) with

for some σ > 0. As a direct consequence, the data f according to (1.1) also lies in \( \operatorname {\mathrm {Ran}} S\).

For u ∈ L 1(Ω), the total variation term |Du|(Ω) in (1.3) is defined as follows:

Here, \(C_0^1(\varOmega ;\mathbb {R}^2)\) denotes the set of all \(\mathbb {R}^2\)-valued continuously differentiable functions on Ω with compact support.

Adaptive Regularization Approach

The focus of this paper is to reconstruct a high-quality image from subsampled data in a non-image data domain using an adaptive regularization approach. The present section is structured as follows. In section “ROF-Model and Surrogate Iteration ”, we introduce the surrogate iteration method for solving the ROF-model [31]. Then in section “Hierarchical Spatially Adaptive Algorithm” we incorporate spatially adaptive regularization into the surrogate iteration. We further accelerate the spatial adaptive algorithm by hierarchical decomposition.

ROF-Model and Surrogate Iteration

Our variational paradigm is chosen to follow Rudin et al. [31], which allows to preserve edges in images. Further, due to the properties of the noise term η in (1.4), the ROF-model restores the image by solving the following constrained optimization problem:

Usually (1.6) is addressed via the following unconstrained optimization problem:

for a given constant λ > 0. Note that, since \(Ku-f\in\operatorname {\mathrm {Ran}} S\), the objective in (1.7) remains unchanged if the integration in the second term of the objective is performed over Λ rather than \(\tilde \varLambda \). Assuming that K does not annihilate constant functions, one can show that there exists a constant λ ≥ 0 such that the constrained problem (1.6) is equivalent to the unconstrained problem (1.7); see [6].

Our purpose is to modify the objective in (1.7) in order to handle a spatially variant parameter λ over the image domain Ω and the operator K: L 2(Ω) → L 2(Λ). Note that this can not be done directly by inserting λ on the integral over \(\tilde {\varLambda }\) in (1.7) since we require λ to be defined over Ω. Hence, instead of tackling (1.7) directly we introduce a so-called surrogate functional \(\mathbb S\) [12]. In this vein, for given a ∈ L 2(Ω), \(\mathbb S\) is defined as

with

where we assume δ > 1. Since ∥S ∗∥ = ∥S∥≤ 1 and ∥T ∗∥ = ∥T∥ = 1, we have ∥K∥≤ 1 < δ. We note that here and below ∥⋅∥ denotes the operator norm \(\|\cdot \|{ }_{\mathcal L(L^2(\varOmega ))}\). We also emphasize that ϕ is a function independent of u. It is readily observed that minimization of \(\mathbb S(u,a)\) over u is no longer affected by the action of K. Rather, minimizing \(\mathbb S(u,a)\) for fixed a resembles a typical image denoising problem. In order to approach a solution of (1.7), we consider the following iteration.

Surrogate Iteration: Choose u (0) ∈ L 2(Ω). Then compute for k = 0, 1, 2, …

with \(f_K^{(k)}:=f_K(u^{(k)})\).

It can be shown that the iteration (1.9) generates a sequence \((u^{(k)})_{k\in \mathbb {N}}\) which converges to a minimizer of (1.7); see [12, 13]. Moreover, the minimization problem in (1.9) is strictly convex and can be efficiently solved by standard algorithms such as the primal-dual first-order algorithm [5], the split Bregman method [19], or the primal-dual semismooth Newton algorithm [24].

For a constant λ > 0, the above iteration can be formulated as a forward-backward splitting algorithm : Let F 1(u) := |Du|(Ω) and \(F_2(u) :=\frac{\lambda}{2}\int_{\varOmega } \|Ku-f\|{}^2 dx\), and define the proximal operator

Then, (1.9) is equivalent to

A different scenario is present if instead we consider a spatially adapted λ as we do next.

Hierarchical Spatially Adaptive Algorithm

The problem in (1.9) is related, via Lagrange multiplier theory, to the globally constrained minimization problem

where A > 0 is a constant depending on σ and K; see [6]. In order to enhance image details while preserving homogeneous regions, we localize the constraint in (1.10), which leads to the modified variational model :

Here the local variance term \(\mathcal S(u)(\cdot ):=\int _{\varOmega } w(\cdot ,x) |u-f_K(u)|{ }^2(x)dx\) is defined for some given localization filter w. A popular choice for w, utilized in what follows, is a window type filter. Thus the constraint in (1.11) with u = u (k+1) reads

Given the convergence result, as k →∞, for scalar λ alluded to in connection with (1.9), one expects the term u (k+1) − u (k) to vanish. This indicates that \(\int _\varOmega w(\cdot ,x) |\frac {1}{\delta } K^*(K u^{(k)}-f)|{ }^2(x)dx \leq A \) is expected in the limit. This consideration leads to the following pointwisely constrained optimization problem:

Next we discuss the choice of A. In view of the (global) estimate for the backprojected residual \(K^*(K\widehat u-f)\), i.e.,

we thus choose

In deriving the above inequalities, we have used the facts that ∥K ∗∥ = ∥K∥≤ 1 and \(\|K\widehat u-f\|{ }_{L^2(\varLambda )}^2=\sigma ^2|\tilde \varLambda |\).

In a discrete setting, we now describe a strategy, based on a statistical local variance estimator , to adapt the spatially variant regularization parameter λ. The idea behind considering a spatially varying λ (instead of a constant one) is motivated by the fact that the constraint in (1.13) is spatially dependent, in contrast to the one in (1.10); see [15] for further discussion. For this purpose, consider a discrete image u defined over the discrete 2D index set Ω h (of cardinality |Ω h|), whose nodes lie on a regular grid of uniform mesh size \(h:=\sqrt {1/|\varOmega _h|} {\in \mathbb {N}}\). The total variation of a discrete image u is denoted by |Du|(Ω h); see (1.15) below for a precise definition. We also define the residual image associated with f K(⋅) by

Concerning the filter w associated with \(\mathcal S\) in (1.11), we exemplarily choose the mean filter pertinent to a square window centered at x. For this reason and in our discrete setting, we define the averaging window

where ω > 1 is an odd integer representing the window size, and then compute the estimated local variance at (i, j) ∈ Ω h by

Given the reconstruction u n associated with λ n, we use \(\mathcal S^\omega (u_n)\) to check whether λ n should be updated or it already yields a successful reconstruction u n. In particular, motivated by Dong et al. [15], we intend to increase λ n at the pixels where the corresponding local variance violates the upper estimate A. More specifically, we utilize the following update rule:

Here

\(\bar {\lambda }>0\) is a prescribed upper bound, and \(\|\lambda _n\|{ }_{\ell ^\infty }\) is a scaling factor suggested in [15]. Two step-size parameters, ζ n > 1 and ρ n > 0, will allow a backtracking procedure should λ n+1 be overshot by (1.14), on which we refer to the HSA algorithm below for a more detailed account.

We are now ready to present our (basic) spatially adaptive (SA) image reconstruction algorithm.

SA Algorithm: Initialize \(u_{0}\in \mathbb {R}^{\varOmega _h}\), \(\lambda _{1} \in \mathbb {R}^{\varOmega _h}_+\), n := 1. Iterate as follows until a stopping criterion is satisfied:

-

1)

Set \(u^{(0)}_n:=u_{n-1}\). For each k = 0, 1, 2, …, compute \(u^{(k+1)}_n\) according to

$$\displaystyle \begin{aligned}\notag \begin{aligned} u^{(k+1)}_n :=& \arg\min_u |Du|(\varOmega_h) + \frac{\delta h^2}{2}\sum_{(i,j)\in\varOmega_h} (\lambda_n)_{i,j} \left|(u-f_n^{(k)})_{i,j}\right|{}^2, \end{aligned}\end{aligned} $$with \(f_n^{(k)}:=u^{(k)}_n - \frac {1}{\delta } K^* (K u^{(k)}_n - f)\). Let u n be the outcome of this iteration.

-

2)

Update λ n+1 according to (1.14). Set n := n + 1.

Following [15] we further accelerate the SA algorithm by employing a hierarchical decomposition of the image into scales. This idea, introduced by Tadmor, Nezzar and Vese in [36, 37], utilizes concepts from interpolation theory to represent a noisy image as the sum of “atoms” u (l), where every u (l) extracts features at a scale finer than the one of the previous u (l−1). This method acts like an iterative regularization scheme, i.e., up to some iteration number \(\bar {l}\) the method yields improvement on reconstruction results with a deterioration (due to noise influence and ill-conditioning) beyond \(\bar {l}\).

Here we illustrate the basic workflow of hierarchical decomposition in a denoising problem (i.e., where K equals the identity). Given the exponential scales {ζ l λ 0 : l = 0, 1, 2, …} with \(\lambda _0\in \mathbb {R}^{\varOmega _h}_+\) and ζ > 1, the hierarchical decomposition operates as follows:

-

1.

Initialize \(u_0\in \mathbb {R}^{\varOmega _h}\) by

$$\displaystyle \begin{aligned}\notag \begin{aligned} u_0:=& \arg\min_u |Du|(\varOmega_h) + \frac {h^2}2\sum_{(i,j)\in\varOmega_h}(\lambda_0)_{i,j}\left|(u-f)_{i,j}\right|{}^2. \end{aligned}\end{aligned} $$ -

2.

For l = 0, 1, …, set λ l+1 := ζλ l and v l := f − u l. Then compute

$$\displaystyle \begin{aligned}\notag \begin{aligned} d_l :=& \arg\min_u |Du|(\varOmega_h) + \frac{h^2}2 \sum_{(i,j)\in\varOmega_h} (\lambda_{l+1})_{i,j}\left|(u-v_l)_{i,j} \right|{}^2, \end{aligned}\end{aligned} $$and update u l+1 := u l + d l.

Now we incorporate such a hierarchical decomposition into the SA algorithm, which we shall refer to as the hierarchical spatially adaptive (HSA) algorithm. We note that all minimization (sub)problems in the HSA algorithm are solved by the primal-dual Newton method in [24]. There, the original ROF-model is approximated by a variational problem posed in \(H^1_0(\varOmega )\) via adding an additional regularization term \(\frac \mu 2\|\nabla u\|{ }_{L^2(\varOmega )}^2\), with \(0<\mu \ll 1/( \operatorname {\mathrm {ess}}\sup \lambda )\), to the objective and assuming, without loss of generality, homogeneous Dirichlet boundary conditions. In this case, the (discrete) total variation is given by

with u i,j = 0 whenever (i, j)∉Ω h. We refer to [24] for a detailed account of this algorithm.

HSA Algorithm: Input parameters δ > 1, \(\omega \in 2\mathbb {N}+1\). Initialize \(u_0\in \mathbb {R}^{\varOmega _h}\), \(\lambda _1\in \mathbb {R}^{\varOmega _h}_+\) (sufficiently small), ζ 0 > 1, ρ 0 > 0.

-

1)

Set \(u^{(0)}_0:=u_0\). For each k = 0, 1, 2, …, κ 0, compute \(u^{(k+1)}_0\) by

$$\displaystyle \begin{aligned}\notag \begin{aligned} u^{(k+1)}_0 :=& \arg\min_u |Du|(\varOmega_h) +\frac{\delta h^2}{2}\sum_{(i,j)\in\varOmega_h} (\lambda_1)_{i,j}\left|(u-f_0^{(k)})_{i,j}\right|{}^2, \end{aligned}\end{aligned} $$with \(f_0^{(k)}:=u^{(k)}_0-\frac {1}{\delta }K^*(Ku^{(k)}_0-f)\). Let u 1 be the outcome of this iteration, and set n := 1.

-

2)

Set v n := f − Ku n−1 and \(d^{(0)}_n:=0\). For each k = 0, 1, 2, …, κ n, compute \(d^{(k+1)}_n\) by

$$\displaystyle \begin{aligned}\notag \begin{aligned} d^{(k+1)}_n := &\arg\min_u |Du|(\varOmega_h) + \frac{\delta h^2}{2}\sum_{(i,j)\in\varOmega} (\lambda_n)_{i,j}\left|(u-f_n^{(k)})_{i,j}\right|{}^2, \end{aligned}\end{aligned} $$with \(f_n^{(k)}:=d^{(k)}_n-\frac {1}{\delta }K^*(Kd^{(k)}_n-v_n)\). Let d n be the outcome of this iteration, and update u n := u n−1 + d n.

-

3)

Evaluate the (normalized) data-fitting error

$$\displaystyle \begin{aligned} \theta_n:=\frac{\|Ku_n-f\|{}_{\ell^2}^2}{\sigma^2|\tilde\varLambda_h|}. \end{aligned}$$If θ n > 1, then set \(\tilde n:=n\), ζ n := ζ n−1, ρ n := ρ n−1, and continue with step 4; If 0.8 ≤ θ n ≤ 1, then return u n, λ n and stop; If θ n < 0.8, then set \(u_{n}:=u_{\tilde n}\), \(\lambda _n:=\lambda _{\tilde n}\), \(\zeta _n:=\sqrt {\zeta _{n-1}}\), ρ n := ρ n−1∕2, and continue with step 4.

-

4)

Update λ n+1 according to formula (1.14). Set n := n + 1 and return to step 2.

We also remark that the initial \(\lambda _1\in \mathbb {R}^{\varOmega _h}_+\) should be sufficiently small such that the resulting normalized data-fitting error θ 1 is much larger than 1. Then the HSA iterations are responsible for (monotonically) lifting up λ n in a spatially adaptive fashion as described earlier in this paper. Such a lifting is performed until the data-fitting error \(\|Ku_n-f\|{ }_{\ell ^2}^2/|\tilde \varLambda _h|\) approaches the underlying noise level σ 2. If the data-fitting error drops too far below σ 2, then the algorithm may suffer from overfitting the noisy data. In this scenario, we backtrack on λ n through potential reduction of ζ n and ρ n; see step 3 of the HSA algorithm.

Numerical Experiments

In this section, we present numerical results of the newly proposed HSA algorithm for two applications, namely reconstruction from partial Fourier data and wavelet inpainting . All experiments reported here were performed under Matlab. The image intensity is scaled to the interval [0, 1] in advance of our computation. For the HSA algorithm, we always choose the following parameters: δ = 1.2, ω = 11, ζ 0 = 2, ρ 0 = 1, \(\bar \lambda =10^6\), u 0 = K ∗ f. In the primal-dual Newton algorithm [24], we choose the H 1-regularization parameter μ = 10−4, the Huber smoothing parameter γ = 10−3, and terminate the overall Newton iterations as soon as the initial residual norm is reduced by a factor of 10−4. Besides, the maximum iteration numbers {κ n} for the surrogate iterations are adaptively chosen such that \(\| d_n^{(\kappa _n)} - d_n^{(\kappa _{n-1})}\|{ }_{\ell ^2} \leq 10^{-6}\sqrt {|\varOmega _h|}\).

The images restored by HSA are compared, both visually and quantitatively, with the ones restored by the variational model in (1.7) with scalar-valued λ. For quantitative comparisons among restorations, we evaluate their peak signal-to-noise ratios (PSNR ) [3] and also the structural similarity measures (SSIM) [39]; see Table 1.1. To optimize our choice for each scalar-valued λ, we adopt a bisection procedure, up to a relative error of 0.02, i.e., |λ k+1 − λ k| < 0.02λ k, to maximize the following weighted sum of the PSNR - and SSIM-values of the resulting scalar-λ restoration

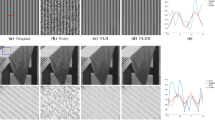

over the interval I = [102, 105]. The maximal PSNR and SSIM in the above formula are pre-computed up to a relative error of 0.001. The original images used for our numerical tests are given in Fig. 1.1.

Reconstruction of Partial Fourier Data

In magnetic resonance imaging, one aims to reconstruct an image which is only sampled by partial Fourier data and additionally distorted by additive white Gaussian noise of zero mean and standard deviation σ. Here the data-formation operator is given by K = S ∘ T, where T is a 2D (discrete) Fourier transform and S represents a downsampling of Fourier data. In particular, we consider S which picks Fourier data along radial lines centered at zero frequency.

Our experiments are performed for the test images “Cameraman” and “Knee” with σ ∈{0.05, 0.1} and #radials ∈{75, 90, 105} respectively. In these experiments, we have always initialized HSA with λ 1 = 100. The resulting restorations via the total-variation method with scalar-valued λ and via our HSA method are both displayed in Figs. 1.2, 1.3 and 1.4. We also show the ultimate spatially adapted λ from HSA in each test run, where the light regions in the λ-plot correspond to high values of λ and vice versa. It is observed that the values of λ in regions containing detailed features (e.g. the camera and the tripod in “Cameraman”) typically outweigh its values in more homogeneous regions (e.g. the background sky in “Cameraman”). As a consequence, this favorably yields a sharper background-versus-detail contrast in the restored images via HSA. In Fig. 1.3, we observe face and camera of “Cameraman” reconstructions with a better performance of our HSA method. According to the quantitative comparisons reported in Table 1.1, HSA almost always outperforms scale-valued λ in terms of PSNR and SSIM. As a side remark, it is also observed that the spatially adapted λ via HSA is able to capture more features of the underlying image at a lower noise level (Fig. 1.4).

To test the robustness of HSA, we perturb our choices of the window size ω and the initial choice of λ in our experiments. In Fig. 1.5, we report the resulting PSNRs and SSIMs of such sensitivity tests on the particular Fourier-Cameraman example with σ = 0.05 and #radials = 90. It is observed that HSA behaves relatively stable with different choices of ω. On the other hand, one should be cautioned that the results of HSA deteriorate as the initial λ is chosen too large. Nevertheless, among all initial λ’s smaller than a certain threshold (in this case 200), smaller choices do not always claim advantages over larger ones.

Wavelet Inpainting

Wavelet inpainting is about restoring missing wavelet coefficients due to lossy compression or error-prone data transmission; see, e.g., [7, 44]. Here we consider the scenario where a test image is compressed by storing the largest Daubechies-4 wavelet coefficients [11] in magnitude only up to a small sampling rate (s.r.), namely s.r. ∈ {2.5%, 5%, 10%}. The compressed wavelet coefficients are further contaminated by additive white Gaussian noise of mean zero and standard deviation σ ∈{0.05, 0.1}. For wavelet inpainting , we have initialized HSA with λ 1 = 10. The experiments are performed for the test images “Cameraman” and “Barbara”, and the corresponding results, both restored images and the adapted λ’s, are shown in Figs. 1.6 and 1.7. A detailed view of the face region of “Barbara” is given in Fig. 1.8, where the results are in favor of our HSA algorithm.

In the wavelet-Cameraman example, the results via scalar-valued λ’s and HSA are almost identical to human eyes. Even though, HSA always outperforms the scale-valued λ in terms of PSNR , while the SSIM-comparison is somewhat even; see Table 1.1. Interestingly, the adapted λ’s in this example exhibit patterns analogous to the ones in the Fourier-Cameraman example.

Our HSA method gains more advantages when it is applied to the “Barbara” image with a stronger cartoon-texture contrast than “Cameraman”. In Fig. 1.7, it is witnessed that the restored images via scalar-valued λ’s suffer from undesirable staircase effects. In comparison, spatially adapted λ’s yield significant improvements on the restorations, even in the cases where the pattern of λ is less transparent due to lack of data or strong noise. In Table 1.1, the PSNR - and SSIM-comparisons also dominantly favor the HSA method.

Qualitative Relation to Other Spatially Distributed Parameter Methods

A particular feature of the HSA algorithm is that it allows to assign a spatially variant parameter λ, on the image domain Ω, associated to a data fidelity term that is determined by an integral over \(\tilde {\varLambda }\subset \varLambda \), a non-image domain. Such configuration renders certain variational methods with spatially variant λ’s not applicable: For example, the SATV algorithm developed in [15] requires K to map into functions over the image domain Ω. This obstacle has been overcome in [22] and [25] where the spatially variant parameter is not longer related to the data fidelity term, but rather to the regularization functional. Specifically, a parameter \(\alpha :\varOmega \to \mathbb {R}\) in the model

is automatically selected based on a bilevel formulation involving also localized variance estimators.

For non-negative constants λ and α in (1.7) and (1.16), respectively, it holds true that if λ = α −1 solutions of the optimization problems are identical. In the spatially variant case for λ and α, the relationship between the parameters does no longer hold exactly when automatically chosen via local variance estimates of reconstructions, i.e., we only expect λ(x) ≃ (α(x))−1 for x ∈ Ω. One specific difference between the two approaches is related to the fact that λ is only required to be essentially bounded while (in the function space setting) α requires to have higher regularity for the objective in (1.16) to be well-defined. The latter translates into the need of having an additional regularization term for the smoothing of α in the upper level objective. This has a clear consequence in differences for λ and α for the HSA algorithm and the bilevel method in [22] and [25], respectively: λ seems to be able to have more variability than α on Ω. On the other hand, although λ and α have, in general, high and low values on details, respectively, α seems to decrease on edges more drastically, while λ has slower transitions there. In particular, the previous explains how the selection of α is a preferable choice over the one of λ in images with large homogeneous regions, sharp edges, and corners, and vice versa for images with significant number of details on small regions and certain textures. A quantitative analysis for such differences is beyond the scope of the paper, and an active research direction.

In order to compare with the HSA method, we consider the spatially distributed method described in [22] and [25], where α is chosen in (1.16) via a bilevel formulation. We define K = S ∘ T to collect Fourier coefficients along 120 radial lines centered at zero frequency, and take data distorted by additive white Gaussian noise of zero mean and standard deviation σ = 0.05. For the bilevel scheme, we utilized the same configuration and parameters as in [25], where the local variance bounds are chosen as in (#2); see [25] for all details. For the HSA algorithm, we use the same setup as above in the reconstruction of partial Fourier data . When K maps into functions over the image domain, it was observed that the bilevel formulation provides, in general, reconstructions with better SSIM than the ones from the SATV algorithm in [15]. However, the SATV performs better in terms of PSNR than the bilevel scheme. This same behavior is observed between the HSA algorithm and the bilevel one. Reconstructions for both methods are given in Fig. 1.9, and we take zoom views of the two framed regions in the “Chest” image for further detail comparison in Fig. 1.10. Finally, in Fig. 1.11, we observe the α parameter of the bilevel scheme, and λ −1 where λ is HSA parameter. As fine details are hard to observe in the black and white images, we have included red colored plots of the surfaces associated with both parameters with a specific light effect to show such details.

Conclusion

In this work, it has been shown that spatially adapted data fidelity weights help to improve the quality of restored images. The automated adjustment of the local weights is developed based on the localized image residuals. Such a parameter adjustment scheme can be further accelerated by employing hierarchical decompositions, which aim at decomposing an image into so-called atoms at different scales. The framework of the paper is suitable for subsampled data in non-image domain, in particular incomplete coefficients from orthogonal Fourier- and wavelet transforms as illustrated in the numerical experiments.

References

A. Almansa, C. Ballester, V. Caselles, G. Haro, A TV based restoration model with local constraints. J. Sci. Comput. 34, 209–236 (2008)

M. Bertalmio, V. Caselles, B. Rougé, A. Solé, TV based image restoration with local constraints. J. Sci. Comput. 19, 95–122 (2003)

A. Bovik, Handbook of Image and Video Processing (Academic, San Diego, 2000)

A. Buades, B. Coll, J.-M. Morel, A review of image denoising algorithms, with a new one. Multiscale Model. Simul. 4, 490–530 (2005)

A. Chambolle, An algorithm for total variation minimization and applications. J. Math. Imaging Vision 20, 89–97 (2004)

A. Chambolle, P.-L. Lions, Image recovery via total variation minimization and related problems. Numer. Math. 76, 167–188 (1997)

T.F. Chan, J. Shen, H.-M. Zhou, Total variation wavelet inpainting. J. Math. Imaging Vision 25, 107–125 (2006)

Q. Chang, I.-L. Chern, Acceleration methods for total variation based image denoising. SIAM J. Appl. Math. 25, 982–994 (2003)

Q. Chen, P. Montesinos, Q.S. Sun, P.A. Heng, D.S. Xia, Adaptive total variation denoising based on difference curvature. Image Vis. Comput. 28, 298–306 (2010)

K. Chen, E.L. Piccolomini, F. Zama, An automatic regularization parameter selection algorithm in the total variation model for image deblurring. Numer. Algorithms 67, 73–92 (2014)

I. Daubechies, Ten Lectures on Wavelets (SIAM, Philadelphia, 1992)

I. Daubechies, M. Defrise, C. De Mol, An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 57, 1413–1457 (2004)

I. Daubechies, G. Teschke, L. Vese, Iteratively solving linear inverse problems under general convex constraints. Inverse Prob. Imaging 1, 29–46 (2007)

D.C. Dobson, C.R. Vogel, Convergence of an iterative method for total variation denoising. SIAM J. Numer. Anal. 34, 1779–1791 (1997)

Y. Dong, M. Hintermüller, M. Rincon-Camacho, Automated regularization parameter selection in multi-scale total variation models for image restoration. J. Math. Imaging Vis. 40, 82–104 (2011)

K. Frick, P. Marnitz, Statistical multiresolution Dantzig estimation in imaging: fundamental concepts and algorithmic framework. Electron. J. Stat. 6, 231–268 (2012)

K. Frick, P. Marnitz, A. Munk, Statistical multiresolution estimation for variational imaging: with an application in Poisson-biophotonics. J. Math. Imaging Vision 46, 370–387 (2013)

R. Giryes, M. Elad, Y.C. Eldar, The projected GSURE for automatic parameter tuning in iterative shrinkage methods. Appl. Comput. Harmon. Anal. 30, 407–422 (2011)

T. Goldstein, S. Osher, The split Bregman method for ℓ 1 regularized problems. SIAM J. Imaging Sci. 2, 1311–1333 (2009)

C. He, C. Hu, W. Zhang, B. Shi, A fast adaptive parameter estimation for total variation image restoration. IEEE Trans. Image Process. 23, 4954–4967 (2014)

M. Hintermüller, K. Kunisch, Total bounded variation regularization as bilaterally constrained optimization problem. SIAM J. Appl. Math. 64, 1311–1333 (2004)

M. Hintermüller, C.N. Rautenberg, Optimal selection of the regularization function in a weighted total variation model. Part I: modelling and theory. J. Math. Imaging Vision 59(3), 498–514 (2017)

M. Hintermüller, M.M. Rincon-Camacho, Expected absolute value estimators for a spatially adapted regularization parameter choice rule in L 1-TV-based image restoration. Inverse Prob. 26, 085005 (2010)

M. Hintermüller, G. Stadler, An infeasible primal-dual algorithm for total bounded variation-based Inf-convolution-type image restoration. SIAM J. Sci. Comput. 28, 1–23 (2006)

M. Hintermüller, C.N. Rautenberg, T. Wu, A. Langer, Optimal selection of the regularization function in a weighted total variation model. Part II: algorithm, its analysis and numerical tests. J. Math. Imaging Vision 59(3), 515–533 (2017)

T. Hotz, P. Marnitz, R. Stichtenoth, L. Davies, Z. Kabluchko, A. Munk, Locally adaptive image denoising by a statistical multiresolution criterion. Comput. Stat. Data Anal. 56(33), 543–558 (2012)

F. Lenzen, F. Becker, J. Lellmann, S. Petra, C. Schnörr, A class of quasi-variational inequalities for adaptive image denoising and decomposition. Comput. Optim. Appl. 54, 371–398 (2013)

F. Lenzen, J. Lellmann, F. Becker, C. Schnörr, Solving quasi-variational inequalities for image restoration with adaptive constraint sets. SIAM J. Imaging Sci. 7, 2139–2174 (2014)

S. Osher, M. Burger, D. Goldfarb, J. Xu, W. Yin, An iterative regularization method for total variation-based image restoration. Multiscale Model. Simul. 4, 460–489 (2005)

Y. Ruan, H. Fang, Q. Chen, Semiblind image deconvolution with spatially adaptive total variation regularization. Math. Probl. Eng. 2014(606170), 8 (2014)

L.I. Rudin, S. Osher, E. Fatemi, Nonlinear total variation based noise removal algorithms. Physica D 60, 259–268 (1992)

D. Strong, T. Chan, Spatially and scale adaptive total variation based regularization and anisotropic diffusion in image processing. Technical report, UCLA (1996)

D. Strong, T. Chan, Edge-preserving and scale-dependent properties of total variation regularization. Inverse Prob. 19, 165–187 (2003)

D. Strong, J.-F. Aujol, T. Chan, Scale recognition, regularization parameter selection, and Meyer’s G norm in total variation regularization. Technical report, UCLA (2005)

C. Sutour, C.-A. Deledalle, J.-F. Aujol, Adaptive regularization of the NL-means: application to image and video denoising. IEEE Trans. Image Process. 23, 3506–3521 (2014)

E. Tadmor, S. Nezzar, L. Vese, A multiscale image representation using hierarchical (BV, L 2) decompositions. Multiscale Moel. Simul. 2, 554–579 (2004)

E. Tadmor, S. Nezzar, L. Vese, Multiscale hierarchical decomposition of images with applications to deblurring, denoising and segmentation. Commun. Math. Sci. 6, 1–26 (2008)

C.R. Vogel, Computational Methods for Inverse Problems. Frontiers in Applied Mathematics, vol. 23 (SIAM, Philadelphia, 2002)

Z. Wang, A.C. Bovik, H.R. Sheikh, E.P. Simoncelli, Image quality assessment: from error to structural similarity. IEEE Trans. Image Process. 13, 600–612 (2004)

Y.-W. Wen, R.H. Chan, Parameter selection for total -variation-based image restoration using discrepancy principle. IEEE Trans. Image Process. 21, 1770–1781 (2012)

L. Yan, H. Fang, S. Zhong, Blind image deconvolution with spatially adaptive total variation regularization. Opt. Lett. 37, 2778–2780 (2012)

Q. Yuan, L. Zhang, H. Shen, Multiframe super-resolution employing a spatially weighted total variation model. IEEE Trans. Circuits Syst. Video Technol. 22, 379–392 (2012)

Q. Yuan, L. Zhang, H. Shen, Regional spatially adaptive total variation super-resolution with spatial information filtering and clustering. IEEE Trans. Image Process. 22, 2327–2342 (2013)

X. Zhang, T.F. Chan, Wavelet inpainting by nonlocal total variation. Inverse Prob. Imaging 4, 191–210 (2010)

Acknowledgements

This research was supported by the Austrian Science Fund (FWF) through START-Project Y305 “Interfaces and Free Boundaries” and SFB-Project F3204 “Mathematical Optimization and Applications in Biomedical Sciences”, the German Research Foundation DFG through Project HI1466/7-1 “Free Boundary Problems and Level Set Methods”, as well as the Research Center MATHEON through Project C-SE15 “Optimal Network Sensor Placement for Energy Efficiency” supported by the Einstein Center for Mathematics Berlin.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Hintermüller, M., Langer, A., Rautenberg, C.N., Wu, T. (2018). Adaptive Regularization for Image Reconstruction from Subsampled Data. In: Tai, XC., Bae, E., Lysaker, M. (eds) Imaging, Vision and Learning Based on Optimization and PDEs. IVLOPDE 2016. Mathematics and Visualization. Springer, Cham. https://doi.org/10.1007/978-3-319-91274-5_1

Download citation

DOI: https://doi.org/10.1007/978-3-319-91274-5_1

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91273-8

Online ISBN: 978-3-319-91274-5

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)