Abstract

Recently, tensor decompositions have attracted increasing attention and shown promising performance in processing multi-dimensional data. However, existing tensor decompositions assume that the correlation along one mode is homogeneous and thus cannot characterize the multiple types of correlations (i.e., heterogeneous correlation) along a mode in real data. To address this issue, we propose a heterogeneous tensor product that allows us to explore this heterogeneous correlation and that can degenerate into the classic tensor products (e.g., the mode product and the tensor–tensor product). Equipped with this heterogeneous tensor product, we develop a generalized tensor decomposition (GTD) framework for third-order tensors, which not only induces many novel tensor decompositions but also helps us better understand the interrelationships between the new and the existing tensor decompositions. In particular, under the GTD framework, we find that the new tensor decompositions can faithfully characterize the multiple types of correlations along a mode. To examine the effectiveness of the new tensor decomposition, we evaluate its performance on a representative data compression task. Extensive experimental results on the compression of multispectral images, light field images, and videos demonstrate the superior performance of the developed tensor decomposition compared to existing tensor decompositions.


1 Introduction

Tensors [6, 10, 41], as multi-dimensional extensions of matrices, can naturally represent the complex intrinsic structures of high-order data. This characteristic has drawn significant attention in recent years, making tensor analysis increasingly important in various fields, such as numerical linear algebra [4, 24, 28, 30], graph analysis [11, 15], image processing [14, 16, 25, 42, 45], and computer vision [7, 12, 34]. In this literature, the processing and analysis of multi-dimensional data primarily rely on one powerful tool, namely tensor decomposition.

Recently, various tensor decompositions, generalized from the matrix singular value decomposition (SVD), have been widely studied and applied. As is well known, the SVD can represent a matrix as the sum of a series of rank-1 matrices. Analogously, the CANDECOMP/PARAFAC (CP) decomposition [5, 13, 21] aims to represent a tensor as the sum of a finite number of rank-1 tensors. Another convincing multi-linear generalization of the SVD is the higher-order singular value decomposition (HOSVD), which expresses a tensor as a core tensor multiplied by a factor matrix along each mode. The key point of departure is that the mode product treats the vector (i.e., one column of the factor matrix) in a similar way as the SVD does for the left and the right singular vectors. Furthermore, HOSVD is also commonly referred to as Tucker decomposition [36, 37]. Subsequently, the tensor singular value decomposition (T-SVD) [18, 19] was proposed, which regards a third-order tensor as a matrix with each element being a tube. This tube-based strategy enables T-SVD to take a similar form to the matrix SVD by decomposing a third-order tensor into the tensor-tensor product (T-product) of three third-order tensors, with two of them being orthogonal tensors and one being a diagonal tensor [23, 31, 32]. Later, the \(\text {T}_k\)-product and the generalized T-SVD (see Definitions 2 and 4) were proposed, which allow us to flexibly handle interactions among slices of different modes [40, 46].

More recently, tensor network (TN)-based decompositions, inspired by the graph representation, have garnered significant interest. The most well-known one is the tensor train (TT) decomposition [3, 27, 43], which breaks down a tensor into sequential multi-linear products over a sequence of factors with matrices as the first and last factors and third-order tensors as the intermediate factors. TT decomposition can serve as the building block for other complex tensor networks (e.g., tensor ring (TR) decomposition [29, 44] and fully-connected tensor network (FCTN) decomposition [47]) due to its simple form. In the quantum physics community, the TT format is frequently referred to as the matrix product state representation [33, 38].

Existing tensor decompositions only consider a single type of correlation (i.e., homogeneous correlation) along one mode. However, real data typically exhibit multiple types of correlations (i.e., heterogeneous correlation) along one mode. Taking the light field image dishes as an example, we illustrate its heterogeneous correlation along mode-3 in Fig. 1. Figure 1a displays the singular value curve of the mode-3 unfolding matrix of the data \(\mathcal {X} \in \mathbb {R}^{128 \times 128 \times 243}\), which reveals the correlation along mode-3. This type of correlation can be characterized by the mode-3 product, i.e., \(\mathcal {X} = \mathcal {Y} \times _3 \varvec{H}\). Moreover, the factor \(\mathcal {Y} \in \mathbb {R}^{128 \times 128 \times 40}\) exhibits another type of correlation along mode-3, as shown in Fig. 1b. A more faithful characterization of the heterogeneous correlation can lead to better performance in multi-dimensional data processing. Therefore, a natural question arises: can we construct a new tensor product and tensor decomposition that more accurately capture this heterogeneous correlation along a mode of multi-dimensional data?
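As a rough illustration of the quantity plotted in Fig. 1a, the following sketch computes the singular values of a mode-3 unfolding with NumPy. The tensor here is synthetic (an assumption, not the light field data), built as \(\mathcal {X} = \mathcal {Y} \times _3 \varvec{H}\) with a small mode-3 size for \(\mathcal {Y}\); the helper name `unfold` is hypothetical.

```python
import numpy as np

def unfold(tensor, mode):
    """Mode-`mode` unfolding (0-based): chosen axis first, remaining axes flattened."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)

rng = np.random.default_rng(0)
# Synthetic stand-in (not the paper's data): X = Y x_3 H with Y of mode-3 size 5.
Y = rng.standard_normal((16, 16, 5))
H = rng.standard_normal((30, 5))
X = np.einsum("abr,kr->abk", Y, H)

# Singular value curve of the mode-3 unfolding, as in a plot like Fig. 1a.
s = np.linalg.svd(unfold(X, 2), compute_uv=False)
numerical_rank = int(np.sum(s > 1e-10 * s[0]))
print(numerical_rank)  # 5 for this synthetic tensor
```

Only five singular values are numerically nonzero here, which is the kind of mode-3 correlation that a mode-3 product can capture.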

To address this challenge, we define a heterogeneous tensor product that allows us to better characterize the heterogeneous correlation. Leveraging this product, we develop a generalized tensor decomposition (GTD) framework. More specifically, the main contributions of this work are twofold.

- To faithfully characterize the heterogeneous correlation along one mode, we establish a heterogeneous tensor product, which can degenerate into the mode product and the \(\text {T}_k\)-product. Based on this product, we develop a GTD framework that not only delivers many novel tensor decompositions but also helps us to better understand the interconnections between these new tensor decompositions and the classic tensor decompositions.

- To verify the effectiveness of the new tensor decomposition within the GTD framework, we examine its performance on the representative data compression task. We propose a corresponding algorithm for the decomposition and conduct extensive compression experiments on multispectral images, light field images, and videos. The results demonstrate the superior compression performance of our developed new tensor decomposition as compared to the existing tensor decompositions.

The outline of this paper is as follows. We begin by summarizing the notations and preliminaries in Sect. 2. Then, we define the heterogeneous tensor product and analyze the relationship between this product and two classic tensor products in Sect. 3. Based on the proposed heterogeneous tensor product, we develop a GTD framework in Sect. 4. We consider a data compression task to examine the new tensor decomposition within the GTD framework in Sect. 5. We conduct extensive numerical experiments to evaluate the performance of the new tensor decomposition in Sect. 6. Finally, we conclude this paper in Sect. 7.

2 Tensor Preliminaries

Before presenting the main body of this paper, we summarize the notations in Table 1 and provide preliminaries about tensor products and tensor decompositions.

2.1 Two Classic Tensor Products

In this subsection, we review two classic tensor products, namely the mode product and the \(\text {T}_k\)-product.

Definition 1

(Mode product [8]) The mode product of a tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times \cdots \times I_M}\) by a matrix \(\varvec{Y} \in \mathbb {R}^{J_m \times I_m}\) \((m \in \{1,2,\ldots ,M\})\), denoted by \(\mathcal {X} \times _m \varvec{Y}\), is a tensor of size \(I_1 \times I_2 \times \cdots \times I_{m-1} \times J_m \times I_{m+1} \times \cdots \times I_M\), whose elements are defined as

$$\begin{aligned} (\mathcal {X} \times _m \varvec{Y})_{i_1 \cdots i_{m-1} j_m i_{m+1} \cdots i_M} = \sum _{i_m=1}^{I_m} \mathcal {X}_{i_1 i_2 \cdots i_M} \varvec{Y}_{j_m i_m}. \end{aligned}$$(1)
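A minimal NumPy sketch of the mode product may help fix the index convention of Definition 1: the matrix has size \(J_m \times I_m\) and its second index is contracted with mode m of the tensor. The function name `mode_product` is an illustrative choice.

```python
import numpy as np

def mode_product(X, Y, m):
    """Mode-m product X x_m Y (m is 1-based), with Y of shape (J_m, I_m)."""
    # Contract axis m-1 of X with the second axis of Y, then move the new axis back.
    out = np.tensordot(X, Y, axes=(m - 1, 1))
    return np.moveaxis(out, -1, m - 1)

rng = np.random.default_rng(0)
X = rng.standard_normal((4, 5, 6))
Y = rng.standard_normal((3, 5))
Z = mode_product(X, Y, 2)
print(Z.shape)  # (4, 3, 6)
```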

Definition 2

(\(\text {T}_k\)-product [40]) The \(\text {T}_1\)-product of a tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times R_1} \) and a tensor \(\mathcal {Y} \in \mathbb {R}^{I_1 \times R_1 \times I_3}\), denoted by \(\mathcal {X} *_1 \mathcal {Y}\), is a tensor of size \(I_1 \times I_2 \times I_3\), whose mode-1 fibers are defined as

$$\begin{aligned} (\mathcal {X} *_1 \mathcal {Y})(:,i_2,i_3) = \sum _{r_1=1}^{R_1} \mathcal {X}(:,i_2,r_1) \star _L \mathcal {Y}(:,r_1,i_3). \end{aligned}$$(2)

The \(\text {T}_2\)-product of a tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times R_2} \) and a tensor \(\mathcal {Y} \in \mathbb {R}^{R_2 \times I_2 \times I_3}\), denoted by \(\mathcal {X} *_2 \mathcal {Y}\), is a tensor of size \(I_1 \times I_2 \times I_3\), whose mode-2 fibers are defined as

$$\begin{aligned} (\mathcal {X} *_2 \mathcal {Y})(i_1,:,i_3) = \sum _{r_2=1}^{R_2} \mathcal {X}(i_1,:,r_2) \star _L \mathcal {Y}(r_2,:,i_3). \end{aligned}$$(3)

The \(\text {T}_3\)-product of a tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times R_3 \times I_3} \) and a tensor \(\mathcal {Y} \in \mathbb {R}^{R_3 \times I_2 \times I_3} \), denoted by \(\mathcal {X} *_3 \mathcal {Y}\), is a tensor of size \(I_1 \times I_2 \times I_3\), whose mode-3 fibers are defined as

$$\begin{aligned} (\mathcal {X} *_3 \mathcal {Y})(i_1,i_2,:) = \sum _{r_3=1}^{R_3} \mathcal {X}(i_1,r_3,:) \star _L \mathcal {Y}(r_3,i_2,:), \end{aligned}$$(4)

where the T-product of two fibers is \(\varvec{x} \star _L \varvec{y}=\varvec{L}^{-1}((\varvec{L}\varvec{x})\odot (\varvec{L}\varvec{y}))\), \(\varvec{L}\) is an invertible matrix, and \(\odot \) denotes Hadamard product.

Recently, several structured invertible matrices \(\varvec{L}\) have been studied, such as the discrete Fourier transform (DFT) matrix [19], the discrete cosine transform (DCT) matrix [17], and the wavelet transform matrix [35].
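To make the \(\text {T}_3\)-product concrete, here is a small sketch under the assumption that \(\varvec{L}\) is the (unnormalized) DFT, one of the choices listed above. In that case the fiber product \(\varvec{x} \star _L \varvec{y}\) is a circular convolution, so the product can be computed by slice-wise matrix multiplication in the transform domain. The names `t3_product` and `circ_conv` are illustrative.

```python
import numpy as np

def t3_product(X, Y):
    """T_3-product of X (I1 x R3 x I3) and Y (R3 x I2 x I3), assuming L is the DFT."""
    Xf = np.fft.fft(X, axis=2)
    Yf = np.fft.fft(Y, axis=2)
    # Matrix product of matching frontal slices in the transform domain.
    Zf = np.einsum("irk,rjk->ijk", Xf, Yf)
    return np.fft.ifft(Zf, axis=2).real  # real inputs give a real result

def circ_conv(x, y):
    """Direct circular convolution, i.e. x *_L y when L is the DFT."""
    n = len(x)
    return np.array([sum(x[j] * y[(i - j) % n] for j in range(n)) for i in range(n)])

rng = np.random.default_rng(0)
X = rng.standard_normal((3, 4, 5))
Y = rng.standard_normal((4, 2, 5))
Z = t3_product(X, Y)
# Fiber-level definition: sum over r of X(i1, r, :) *_L Y(r, i2, :).
fiber = sum(circ_conv(X[0, r, :], Y[r, 1, :]) for r in range(4))
print(np.allclose(Z[0, 1, :], fiber))
```

Other transforms (DCT, wavelets) would replace the two `fft` calls and the `ifft` call with the corresponding forward and inverse transforms.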

2.2 Three Classic Tensor Decompositions

In this subsection, we review three classic tensor decompositions, namely HOSVD, generalized T-SVD, and TT decomposition. To facilitate understanding, this paper mainly focuses on third-order tensors.

Definition 3

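(HOSVD [9]) A tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times I_3} \) can be factored as the mode product

$$\begin{aligned} \mathcal {X} = \mathcal {C} \times _1 \varvec{F} \times _2 \varvec{G} \times _3 \varvec{H}, \end{aligned}$$(5)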

where \(\varvec{F} \in \mathbb {R}^{I_1 \times R_1}\), \(\varvec{G} \in \mathbb {R}^{I_2 \times R_2}\), and \(\varvec{H} \in \mathbb {R}^{I_3 \times R_3}\) are column-orthogonal matrices, and \(\mathcal {C} \in \mathbb {R}^{R_1 \times R_2 \times R_3}\) is the core factor.
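The factorization \(\mathcal {X} = \mathcal {C} \times _1 \varvec{F} \times _2 \varvec{G} \times _3 \varvec{H}\) can be sketched numerically as follows. This is a plain truncated HOSVD with prescribed ranks, written with hypothetical helper names; it is not taken from the reference implementation in [9].

```python
import numpy as np

def unfold(T, mode):
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

def mode_product(T, A, mode):
    """T x_mode A for a 0-based mode, with A of shape (J, T.shape[mode])."""
    return np.moveaxis(np.tensordot(T, A, axes=(mode, 1)), -1, mode)

def hosvd(X, ranks):
    # Factor of each mode: leading left singular vectors of that mode's unfolding.
    factors = [np.linalg.svd(unfold(X, n), full_matrices=False)[0][:, :r]
               for n, r in enumerate(ranks)]
    core = X
    for n, U in enumerate(factors):
        core = mode_product(core, U.T, n)  # project onto the factor subspace
    return core, factors

def reconstruct(core, factors):
    X = core
    for n, U in enumerate(factors):
        X = mode_product(X, U, n)
    return X

rng = np.random.default_rng(0)
# Synthetic tensor with multilinear rank (2, 3, 4).
X = reconstruct(rng.standard_normal((2, 3, 4)),
                [rng.standard_normal((6, 2)), rng.standard_normal((7, 3)),
                 rng.standard_normal((8, 4))])
C, (F, G, H) = hosvd(X, (2, 3, 4))
rel_err = np.linalg.norm(reconstruct(C, [F, G, H]) - X) / np.linalg.norm(X)
print(C.shape, rel_err < 1e-10)
```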

Definition 4

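(Generalized T-SVD [40]) A tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times I_3} \) can be factored as the generalized \(\text {T}_k\)-product

$$\begin{aligned} \mathcal {X} = \mathcal {U}_k *_k \mathcal {S}_k *_k \mathcal {V}_k^{T_k}, \end{aligned}$$(6)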

in which

1. \(\mathcal {U}_k\) and \(\mathcal {V}_k\) are mode-k orthogonal tensors,

2. \(\mathcal {S}_k\) is a mode-k diagonal tensor, i.e., each of its mode-k slices is a diagonal matrix.

(i) Mode-k conjugate transpose: The mode-k conjugate transpose of \(\mathcal {X}\), denoted by \(\mathcal {X}^{T_k}\), is obtained by conjugate transposing each of the mode-k slices and then reversing the order of the transposed mode-k slices 2 through \(N_k\).

(ii) Orthogonality: A tensor \(\mathcal {X}\) is mode-k orthogonal if

$$\begin{aligned} \mathcal {X} *_k \mathcal {X}^{T_k} = \mathcal {X}^{T_k} *_k \mathcal {X} = \mathcal {I}_k, \end{aligned}$$(7)

where \(\mathcal {I}_k\) is the identity tensor whose first mode-k slice is an identity matrix and whose other mode-k slices are all zeros.
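The mode-3 conjugate transpose and the orthogonality condition in Eq. (7) can be checked numerically. The sketch below assumes the DFT-based \(\text {T}_3\)-product and builds a real orthogonal tensor by choosing unitary frontal slices in the DFT domain; this construction and all function names are illustrative assumptions.

```python
import numpy as np

def t3_product(X, Y):
    Zf = np.einsum("irk,rjk->ijk", np.fft.fft(X, axis=2), np.fft.fft(Y, axis=2))
    return np.fft.ifft(Zf, axis=2).real

def t3_transpose(X):
    """Conjugate-transpose every frontal slice, then reverse slices 2 through I_3."""
    Xt = np.conj(np.transpose(X, (1, 0, 2)))
    return np.concatenate([Xt[:, :, :1], Xt[:, :, :0:-1]], axis=2)

def identity_tensor(n, n3):
    I = np.zeros((n, n, n3))
    I[:, :, 0] = np.eye(n)  # first frontal slice is the identity, the rest are zero
    return I

def random_orthogonal_tensor(n, n3, rng):
    """Real tensor whose DFT-domain frontal slices are all unitary."""
    Qf = np.zeros((n, n, n3), dtype=complex)
    for k in range(n3 // 2 + 1):
        A = rng.standard_normal((n, n))
        if k != 0 and 2 * k != n3:  # self-conjugate frequencies must stay real
            A = A + 1j * rng.standard_normal((n, n))
        Qf[:, :, k] = np.linalg.qr(A)[0]
        Qf[:, :, (-k) % n3] = np.conj(Qf[:, :, k])  # conjugate symmetry keeps Q real
    return np.fft.ifft(Qf, axis=2).real

rng = np.random.default_rng(0)
Q = random_orthogonal_tensor(3, 5, rng)
left = t3_product(Q, t3_transpose(Q))
right = t3_product(t3_transpose(Q), Q)
print(np.allclose(left, identity_tensor(3, 5)), np.allclose(right, identity_tensor(3, 5)))
```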

Definition 5

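(TT decomposition [27]) A tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times I_3} \) can be factored as

$$\begin{aligned} \mathcal {X}_{i_1 i_2 i_3} = \sum _{r_1=1}^{R_1} \sum _{r_2=1}^{R_2} \varvec{F}_{i_1 r_1} \mathcal {C}_{r_1 i_2 r_2} \varvec{H}_{i_3 r_2}, \end{aligned}$$(8)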

where \(\varvec{F} \in \mathbb {R}^{I_1 \times R_1}\), \(\mathcal {C} \in \mathbb {R}^{R_1 \times I_2 \times R_2}\), and \(\varvec{H} \in \mathbb {R}^{I_3 \times R_2}\) are called canonical factors.
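For a third-order tensor, TT factors with the shapes given above can be obtained by two successive SVDs. The sketch below follows the usual TT-SVD idea with fixed ranks; it is a simplified illustration with hypothetical names, not Algorithm 2 of [27] verbatim.

```python
import numpy as np

def tt_svd_3(X, r1, r2):
    """Third-order TT factors F (I1 x R1), C (R1 x I2 x R2), H (I3 x R2)."""
    I1, I2, I3 = X.shape
    # First SVD separates mode 1 from modes (2, 3).
    U, s, Vt = np.linalg.svd(X.reshape(I1, I2 * I3), full_matrices=False)
    F = U[:, :r1]
    M = (s[:r1, None] * Vt[:r1]).reshape(r1 * I2, I3)
    # Second SVD separates (r1, mode 2) from mode 3.
    U2, s2, Vt2 = np.linalg.svd(M, full_matrices=False)
    C = U2[:, :r2].reshape(r1, I2, r2)
    H = (s2[:r2, None] * Vt2[:r2]).T
    return F, C, H

rng = np.random.default_rng(0)
# Synthetic tensor with TT ranks (2, 3).
X = np.einsum("ia,ajb,kb->ijk", rng.standard_normal((4, 2)),
              rng.standard_normal((2, 5, 3)), rng.standard_normal((6, 3)))
F, C, H = tt_svd_3(X, 2, 3)
X_rec = np.einsum("ia,ajb,kb->ijk", F, C, H)
rel_err = np.linalg.norm(X_rec - X) / np.linalg.norm(X)
print(F.shape, C.shape, H.shape, rel_err < 1e-10)
```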

The forms of tensor products and tensor decompositions introduced above are quite distinct. This gives rise to two intriguing questions: (i) Can we devise a tensor product and decomposition that encompasses the aforementioned tensor products and decompositions? (ii) In the framework of this newly constructed tensor product and decomposition, is it possible to analyze the relationships between the classic tensor products as well as the relationships among the classic tensor decompositions?

3 The Heterogeneous Tensor Product

To address the above questions, we define a heterogeneous tensor product that can degenerate into the classic tensor products. Additionally, we analyze in detail the relationships between this heterogeneous tensor product and the classic tensor products.

We first introduce the heterogeneous tensor product that extends the classic mode product and \(\text {T}_k\)-product.

Definition 6

(Mode permutation) The m-mode permutation \((m \in \{1,2,\ldots ,M\})\) of an Mth-order tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times \cdots \times I_{m-1} \times I_{m+1} \times \cdots \times I_M \times I_m}\) is defined as the tensor \(\overrightarrow{\mathcal {X}}^m\) with size \(I_1 \times \cdots \times I_{m-1} \times I_m \times I_{m+1} \times \cdots \times I_M\), whose elements obey

$$\begin{aligned} \overrightarrow{\mathcal {X}}^m_{i_1 \cdots i_{m-1} i_m i_{m+1} \cdots i_M} = \mathcal {X}_{i_1 \cdots i_{m-1} i_{m+1} \cdots i_M i_m}. \end{aligned}$$(9)
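In array terms, the m-mode permutation moves the last axis of the tensor to position m. A short NumPy sketch (the function name is an illustrative choice):

```python
import numpy as np

def mode_permutation(X, m):
    """m-mode permutation (m is 1-based): move the last axis to position m."""
    return np.moveaxis(X, -1, m - 1)

# X has size I1 x I3 x I2 = 2 x 4 x 3, so its 2-mode permutation has size 2 x 3 x 4.
X = np.arange(24).reshape(2, 4, 3)
P = mode_permutation(X, 2)
print(P.shape)  # (2, 3, 4)
```

Each entry satisfies `P[i1, i2, i3] == X[i1, i3, i2]`, matching the index rule in Definition 6.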

Definition 7

(Heterogeneous tensor product) Consider an Mth-order tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times \cdots \times I_M}\) and an Nth-order tensor \(\mathcal {Y} \in \mathbb {R}^{J_1 \times J_2 \times \cdots \times J_N}\) with d common modes \((1 \le d \le \min (M,N)\), \(d \in \mathbb {Z})\). Assume that two vectors \(\varvec{m} = (\varvec{m}_1,\varvec{m}_2,\ldots ,\varvec{m}_M)\) and \(\varvec{n} = (\varvec{n}_1,\varvec{n}_2,\ldots ,\varvec{n}_N)\) respectively denote reorderings of the vectors \((1,2,\ldots ,M)\) and \((1,2,\ldots ,N)\), satisfying \(I_{\varvec{m}_l}=J_{\varvec{n}_l}\) for \(l=1,2,\ldots ,d\), \(\varvec{m}_{d+1}< \varvec{m}_{d+2}< \cdots < \varvec{m}_{M}\), and \(\varvec{n}_{d+1}< \varvec{n}_{d+2}< \cdots < \varvec{n}_{N}\). Then the heterogeneous tensor product between \(\mathcal {X}\) and \(\mathcal {Y}\), denoted as \(\mathcal {X} \boxtimes \mathcal {Y}\), is defined as

where \(\hat{\mathcal {X}}(i_{\varvec{m}_d},i_{\varvec{m}_{d+1}},\ldots ,i_{\varvec{m}_M},:)\) is a fiber of tensor \(\hat{\mathcal {X}}\) with size \(I_{\varvec{m}_1} I_{\varvec{m}_2} \cdots I_{\varvec{m}_{d-1}}\), \(\hat{\mathcal {X}}\) denotes the permutation of \(\mathcal {X}\) satisfying \(\hat{\mathcal {X}}_{i_{\varvec{m}_d} i_{\varvec{m}_{d+1}} \cdots i_{\varvec{m}_M} i_{\varvec{m}_1} \cdots i_{\varvec{m}_{d-1}}}=\mathcal {X}_{i_d i_{d+1} \cdots i_M i_1 \cdots i_{d-1}}\), and \(\Phi (\varvec{x},\varvec{y})\) denotes the product of fibers \(\varvec{x}\) and \(\varvec{y}\).

Considering different vector products (i.e., \(\Phi (\varvec{x},\varvec{y})\)) in Definition 7 yields various tensor products. In the following, we show in detail how the heterogeneous tensor product degenerates into the classic tensor products. The following lemmas present our conclusions.

Lemma 1

The heterogeneous tensor product can degenerate into the mode product.

Proof

Consider an Mth-order tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times \cdots \times I_M}\) and a second-order tensor \(\mathcal {Y} \in \mathbb {R}^{J_m \times I_m}\) with one common mode (i.e., \(d=1\)). Let \(\varvec{m} = (m,1,2,\ldots ,m-1,m+1,\ldots ,M)\), \(\varvec{n}=(2,1)\), and let \(\Phi (\varvec{x},\varvec{y})\) denote the inner product of vectors \(\varvec{x}\) and \(\varvec{y}\). Then the heterogeneous tensor product between \(\mathcal {X}\) and \(\mathcal {Y}\) is

Equation (11) verifies that the heterogeneous tensor product and the mode product are consistent when \(\Phi (\varvec{x},\varvec{y})\) is the inner product of vectors \(\varvec{x}\) and \(\varvec{y}\). \(\square \)

Lemma 2

The heterogeneous tensor product can degenerate into the generalized \(\text {T}_k\)-product.

Proof

The proof is divided into three parts, in which we demonstrate that the heterogeneous tensor product can respectively degenerate into \(\text {T}_1\)-product, \(\text {T}_2\)-product, and \(\text {T}_3\)-product.

(i) The heterogeneous tensor product can degenerate into the \(\text {T}_1\)-product:

Consider a third-order tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times R_1} \) and a third-order tensor \(\mathcal {Y} \in \mathbb {R}^{I_1 \times R_1 \times I_3}\) with two common modes (i.e., \(d=2\)). Let \(\varvec{m} = (1,3,2)\), \(\varvec{n}=(1,2,3)\), and let \(\Phi (\varvec{x},\varvec{y})\) denote the \(\text {T}\)-product of vectors \(\varvec{x}\) and \(\varvec{y}\). Then the heterogeneous tensor product between \(\mathcal {X}\) and \(\mathcal {Y}\) is

(ii) The heterogeneous tensor product can degenerate into the \(\text {T}_2\)-product:

Consider a third-order tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times R_2} \) and a third-order tensor \(\mathcal {Y} \in \mathbb {R}^{R_2 \times I_2 \times I_3}\) with two common modes (i.e., \(d=2\)). Let \(\varvec{m} = (2,3,1)\), \(\varvec{n}=(2,1,3)\), and let \(\Phi (\varvec{x},\varvec{y})\) denote the \(\text {T}\)-product of vectors \(\varvec{x}\) and \(\varvec{y}\). Then the heterogeneous tensor product between \(\mathcal {X}\) and \(\mathcal {Y}\) is

(iii) The heterogeneous tensor product can degenerate into the \(\text {T}_3\)-product:

Consider a third-order tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times R_3 \times I_3} \) and a third-order tensor \(\mathcal {Y} \in \mathbb {R}^{R_3 \times I_2 \times I_3}\) with two common modes (i.e., \(d=2\)). Let \(\varvec{m} = (3,2,1)\), \(\varvec{n}=(3,1,2)\), and let \(\Phi (\varvec{x},\varvec{y})\) denote the \(\text {T}\)-product of vectors \(\varvec{x}\) and \(\varvec{y}\). Then the heterogeneous tensor product between \(\mathcal {X}\) and \(\mathcal {Y}\) is

Equations (12)–(14) verify that the heterogeneous tensor product and the \(\text {T}_k\)-product are consistent when \(\Phi (\varvec{x},\varvec{y})\) is the T-product of vectors \(\varvec{x}\) and \(\varvec{y}\). \(\square \)

Now, we restate the relationship between the \(\text {T}_k\)-product and the mode product [40], which helps us better understand the relationship between tensor decompositions within the proposed GTD framework, as discussed in the subsequent section.

Lemma 3

The \(\text {T}_k\)-product can degenerate into the mode product.

Proof

Suppose that \(\mathcal {C}\in \mathbb {R}^{R_1 \times R_2 \times R_3}\), \(\varvec{F} \in \mathbb {R}^{I_1 \times R_1}\), \(\mathcal {F}\in \mathbb {R}^{I_1 \times R_2 \times R_1}\), \(\hat{\mathcal {F}}\in \mathbb {R}^{I_1 \times R_1 \times R_3}\), \(\varvec{G} \in \mathbb {R}^{I_2 \times R_2}\), \(\mathcal {G}\in \mathbb {R}^{R_2 \times I_2 \times R_3}\), \(\hat{\mathcal {G}}\in \mathbb {R}^{R_1 \times I_2 \times R_2}\), \(\varvec{H} \in \mathbb {R}^{I_3 \times R_3}\), \(\mathcal {H}\in \mathbb {R}^{R_1 \times R_3 \times I_3}\), \(\hat{\mathcal {H}}\in \mathbb {R}^{R_3 \times R_2 \times I_3}\), if the following constraints

are satisfied, the \(\text {T}_k\)-product can degenerate into the mode product

where \(\varvec{F}_1^{(i)}\), \(\varvec{F}_2^{(i)}\), and \(\varvec{F}_3^{(i)}\) denote the ith horizontal slice, lateral slice, and frontal slice of tensor \(\mathcal {F}\), respectively. \(\square \)

These lemmas are significant in three respects: (i) They can inspire new forms of tensor decomposition. For instance, in HOSVD (i.e., \(\mathcal {C} \times _1 \varvec{F} \times _2 \varvec{G} \times _3 \varvec{H}\)), we can replace \(\mathcal {C} \times _1 \varvec{F}\) with \(\mathcal {F}*_2 \mathcal {C}\), resulting in a novel tensor decomposition \(\mathcal {F}*_2 \mathcal {C}\times _2 \varvec{G} \times _3 \varvec{H}\); (ii) By establishing the connections among these tensor products, we can explore the relationships between tensor decompositions based on different tensor products; (iii) By inserting the inner product or the T-product of vectors, the heterogeneous tensor product degenerates into the mode product and the \(\text {T}_k\)-product, which inspires us to consider other suitable vector products to design new tensor products.

4 The GTD Framework

With the heterogeneous tensor product, we develop a GTD framework. For easier analysis, the GTD framework is mainly established on third-order tensors. This framework not only encompasses the classic tensor decompositions as special cases but also induces numerous novel tensor decompositions. Furthermore, this framework helps us to understand the relationships among these tensor decompositions, including the classic and novel tensor decompositions.

Definition 8

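(GTD framework) A tensor \(\mathcal {X} \in \mathbb {R}^{I_1 \times I_2 \times I_3}\) can be factored as

$$\begin{aligned} \mathcal {X} = \mathcal {G}_1 \boxtimes \mathcal {G}_2 \boxtimes \cdots \boxtimes \mathcal {G}_K, \end{aligned}$$(19)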

where \(\mathcal {G}_k\) \((k=1,2,\ldots ,K)\) are the factors. These factors can be third-order tensors or matrices with matched dimensions.

Remark 1

We can further impose constraints on certain factors, such as requiring the factors to be orthogonal, sparse, or diagonal.

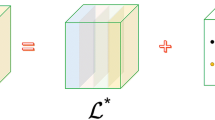

For simplicity, we focus on the GTD framework containing four factors, i.e., \(\mathcal {X} = \mathcal {F} \boxtimes \mathcal {C} \boxtimes \mathcal {G} \boxtimes \mathcal {H}\). Among these factors, \(\mathcal {C} \in \mathbb {R}^{R_1 \times R_2 \times R_3}\) is referred to as the core factor. The GTD framework with four factors is illustrated in Fig. 2.

Figure 2 shows how the GTD framework degenerates into the classic tensor decompositions. For example, when the four factors are all third-order tensors, all heterogeneous tensor products degenerate into the \(\text {T}_3\)-product, and \(\mathcal {C}\) and \(\mathcal {H}\) are an f-diagonal tensor and an identity tensor, respectively, the decomposition \(\mathcal {X} = \mathcal {F} \boxtimes \mathcal {C} \boxtimes \mathcal {G} \boxtimes \mathcal {H}\) degenerates into T-SVD.

Furthermore, this GTD framework allows us to explore many new tensor decompositions. We systematically consider the new tensor decompositions from three aspects according to the relationships between the heterogeneous tensor product, the \(\text {T}_k\)-product, and the mode product revealed by Lemmas 2 and 3.

(i) All heterogeneous tensor products in the GTD framework \(\mathcal {X} = \mathcal {F} \boxtimes \mathcal {C} \boxtimes \mathcal {G} \boxtimes \mathcal {H}\) degenerate into the \(\text {T}_k\)-product. In this context, there are some implicit constraints, such as the requirement that the two tensors involved in the \(\text {T}_k\)-product must have two common dimensions and the size requirement of the core factor (i.e., \(\mathcal {C} \in \mathbb {R}^{R_1 \times R_2 \times R_3}\)). Therefore, it is difficult to enumerate these tensor decompositions directly. Fortunately, by applying the inverse process of Lemma 3, which extends the mode product in the Tucker decomposition into the \(\text {T}_k\)-product, we can quickly derive these new tensor decompositions:

We see that there are only eight forms of tensor decomposition rather than twenty-seven (\(3^3\)).

(ii) Based on (i), some but not all \(\text {T}_k\)-products degenerate into mode products. According to Lemma 3, each decomposition in Eq. (20) can induce many degenerated forms. For instance, \(\mathcal {X}=\mathcal {F}*_3 \mathcal {C} *_3 \mathcal {G} *_1 \mathcal {H}\) can degenerate into:

By applying Lemma 3 once more, the decompositions in Eq. (21) then degenerate into:

(iii) Based on (ii), all \(\text {T}_k\)-products degenerate into mode products. The decomposition then becomes the classic Tucker decomposition.

Under the GTD framework, we can obtain many new tensor decompositions with three factors by simply multiplying the first two factors of the tensor decompositions containing four factors. For example, \(\mathcal {X}=\mathcal {F}*_3 \mathcal {C} *_1 \mathcal {G} *_3 \mathcal {H}\) can be rewritten as \(\mathcal {X}=\hat{\mathcal {F}} *_1 \mathcal {G} *_3 \mathcal {H}\) with \(\hat{\mathcal {F}} = \mathcal {F}*_3 \mathcal {C}\); \(\mathcal {X}=\mathcal {F}*_3 \mathcal {C} *_3 \mathcal {G} \times _3 \varvec{H}\) can be rewritten as \(\mathcal {X}=\hat{\mathcal {F}} *_3 \mathcal {G} \times _3 \varvec{H}\) with \(\hat{\mathcal {F}} = \mathcal {F}*_3 \mathcal {C}\); and \(\mathcal {X}=\mathcal {C} \times _1 \varvec{F}\times _2 \varvec{G} \times _3 \varvec{H}\) can be rewritten as \(\mathcal {X}=\hat{\mathcal {C}} \times _2 \varvec{G} \times _3 \varvec{H}\) with \(\hat{\mathcal {C}} = \mathcal {C} \times _1 \varvec{F}\).

Remark 2

For real data, it is crucial to find a suitable or optimal GTD within the GTD framework. Such a GTD can be determined using heuristic algorithms (e.g., the genetic algorithm [2, 22]). For example, with a genetic algorithm, we can encode the different tensor products as binary strings and search the resulting Hamming space for a GTD that achieves the highest compression ratio or the highest PSNR of the recovered data.

5 Representative Application

This GTD framework not only helps us to better understand the interconnection between the separated tensor decompositions but also delivers new tensor decompositions for different tasks (e.g., data compression and recovery).

Here, we consider the multi-dimensional data compression task as an example. Multi-dimensional data, such as multispectral images, light field images, and videos, exhibit significant low-rankness along the third mode, and we mainly exploit this feature to compress the data. As shown in Fig. 1, multi-dimensional data typically exhibit multiple types of correlations along the third mode, where the correlation shown in Fig. 1a can be characterized by the mode-3 product, i.e., \(\mathcal {X}=\mathcal {Y} \times _3 \varvec{H}\), and the correlation shown in Fig. 1b can be characterized by the \(\text {T}_3\)-product, i.e., \(\mathcal {Y}=\mathcal {F} *_3 \mathcal {G}\). Therefore, this work empirically utilizes the GTD \(\mathcal {X}=\mathcal {F} *_3 \mathcal {G} \times _3 \varvec{H}\) within the GTD framework to compress multi-dimensional data, especially data with obvious low-rankness in the third mode. To compute this decomposition, we design a corresponding algorithm consisting of two steps: the first step decomposes the data as \(\mathcal {X} \approx \hat{\mathcal {X}} \times _3 \varvec{H}\); the second step performs T-SVD on \(\hat{\mathcal {X}}\). The accompanying data compression algorithm is summarized in Algorithm 1.
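The two steps described above can be sketched as follows. This is a simplified illustration under several assumptions: \(\varvec{H}\) is taken from a truncated SVD of the mode-3 unfolding, the T-SVD uses the DFT, the ranks `R` and `r` are fixed by hand instead of being chosen from a prescribed relative error, and the factors are kept in the DFT domain for brevity. The function names are hypothetical and the code is not Algorithm 1 itself.

```python
import numpy as np

def compress(X, R, r):
    """Two-step sketch: X ~ Xhat x_3 H, then a truncated DFT-based T-SVD of Xhat."""
    I1, I2, I3 = X.shape
    # Step 1: H holds the R leading left singular vectors of the mode-3 unfolding.
    U, _, _ = np.linalg.svd(np.moveaxis(X, 2, 0).reshape(I3, -1), full_matrices=False)
    H = U[:, :R]                                # I3 x R, orthonormal columns
    Xhat = np.einsum("abk,kr->abr", X, H)       # Xhat = X x_3 H^T
    # Step 2: truncated SVD of every frontal slice in the DFT domain (tubal rank r).
    Xf = np.fft.fft(Xhat, axis=2)
    Uf = np.zeros((I1, r, R), dtype=complex)
    Sf = np.zeros((r, R))
    Vf = np.zeros((I2, r, R), dtype=complex)
    for k in range(R):
        u, s, vh = np.linalg.svd(Xf[:, :, k], full_matrices=False)
        Uf[:, :, k], Sf[:, k], Vf[:, :, k] = u[:, :r], s[:r], vh[:r].conj().T
    return H, Uf, Sf, Vf

def decompress(H, Uf, Sf, Vf):
    Xf = np.einsum("irk,rk,jrk->ijk", Uf, Sf, Vf.conj())
    Xhat = np.fft.ifft(Xf, axis=2).real
    return np.einsum("abr,kr->abk", Xhat, H)    # Xhat x_3 H

rng = np.random.default_rng(0)
# Synthetic data with mode-3 rank 4 (an assumption for the demo).
X = np.einsum("abr,kr->abk", rng.standard_normal((8, 9, 4)), rng.standard_normal((12, 4)))
norm_X = np.linalg.norm(X)
err_full = np.linalg.norm(decompress(*compress(X, 4, 8)) - X) / norm_X
err_low = np.linalg.norm(decompress(*compress(X, 4, 2)) - X) / norm_X
print(err_full < 1e-10, err_full < err_low < 1)
```

With `R` equal to the true mode-3 rank and full tubal rank the data are recovered exactly; lowering `r` trades accuracy for fewer stored entries.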

Fig. 4 Reconstructed results of multispectral images by different methods under compression ratio 0.8. From top to bottom: the odd-numbered rows are the visual results of \(toy \), \(cloth \), and \(feather \), respectively; the even-numbered rows are the corresponding residual images averaged over three color channels

6 Numerical Experiment

In this section, we conduct extensive numerical experiments to verify the effectiveness of the proposed data compression algorithm. To objectively evaluate the compression performance of the algorithm, we consider four classic tensor decomposition-based compression algorithms for comparison: HOSVD (Section 3 in [9]), T-SVD (Algorithm T-Compress in [18]), TT-SVD (Algorithm 2 in [27]), and TR-SVD (Algorithm 1 in [44]). Note that neither the compared algorithms nor the proposed algorithm has tunable parameters: given the prescribed relative error \(\epsilon \), each algorithm yields its final compression ratio. The peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM) [39] are employed to measure the quality of reconstruction. In addition, the compression ratio is defined as

where numel(\(\cdot \)) denotes the number of elements. Moreover, we theoretically analyze the compression ratio and computational complexity of different decomposition algorithms in Table 2 for comparison.
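For reference, the relative error and the PSNR can be computed as in the sketch below. It assumes the common convention for data scaled to [0, 1] (peak value 1 and a single mean squared error over the whole tensor); the text does not state whether PSNR is averaged band by band, so this convention is an assumption.

```python
import numpy as np

def relative_error(X, X_rec):
    """Frobenius-norm relative error ||X_rec - X||_F / ||X||_F."""
    return np.linalg.norm(X_rec - X) / np.linalg.norm(X)

def psnr(X, X_rec, peak=1.0):
    """PSNR in dB from the mean squared error over all entries."""
    mse = np.mean((X - X_rec) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

X = np.full((4, 4, 3), 0.5)
X_rec = X + 0.1  # constant error of 0.1, so the MSE is 0.01
print(round(psnr(X, X_rec), 6), round(relative_error(X, X_rec), 6))
```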

The experiments for HOSVD and T-SVD are based on the MATLAB Tensor Toolbox, version 3.0 [20]. Additionally, all data are normalized to [0, 1] band by band. All computations are performed in MATLAB (R2019a) on a computer with 64 GB RAM and an Intel(R) Core(TM) i9-10900KF CPU @ 3.60 GHz.
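Band-by-band normalization can be sketched as below, assuming min-max scaling of each frontal slice (the exact normalization formula is not given in the text, so this is one plausible reading). The experiments themselves use MATLAB; Python is used here only for illustration.

```python
import numpy as np

def normalize_bands(X):
    """Min-max scale every frontal slice (band) of X to [0, 1]."""
    lo = X.min(axis=(0, 1), keepdims=True)
    hi = X.max(axis=(0, 1), keepdims=True)
    span = np.where(hi > lo, hi - lo, 1.0)  # guard against constant bands
    return (X - lo) / span

rng = np.random.default_rng(0)
X = 50.0 * rng.standard_normal((8, 8, 5)) + 3.0
Xn = normalize_bands(X)
print(Xn.min(axis=(0, 1)), Xn.max(axis=(0, 1)))
```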

6.1 Multispectral Images Compression

We conduct experiments on three multispectral images, each with a size of \(256 \times 256 \times 31\), to evaluate the performance of the proposed method.

Figure 3 shows the compression ratio with respect to the relative error of the reconstructed results for different methods. The proposed compression algorithm outperforms the compared methods over the whole range of relative errors. The T-SVD method achieves a higher compression ratio than the other compared methods when the relative error is low, and a lower compression ratio than them when the relative error is high. T-SVD utilizes the T-product to characterize the correlation in the third mode, while HOSVD and TT decomposition use the mode product. However, none of these decompositions can faithfully capture the multiple types of correlations in the third mode. The tensor decomposition \(\mathcal {X}=\mathcal {F}*_3 \mathcal {C} *_3 \mathcal {G} \times _3 \varvec{H}\) can compensate for this defect, significantly improving the compression ratio.

Furthermore, Fig. 4 shows the reconstructed results (the first, third, and fifth rows), their corresponding residual images (the second, fourth, and sixth rows), and their corresponding PSNR and SSIM values of the multispectral images \(toy \), \(cloth \), and \(feather \). From Fig. 4, especially for the residual images, it is evident that the proposed method yields better reconstructed results compared to the other methods.

6.2 Light Field Images Compression

We then consider three light field images, each with a size of \(128 \times 128 \times 243\), to verify the effectiveness of the proposed method.

Fig. 6 Reconstructed results of light field images by different methods under compression ratio 0.9. From top to bottom: the odd-numbered rows are the visual results of \(greek \), \(dishes \), and \(table \), respectively; the even-numbered rows are the corresponding residual images averaged over three color channels

Figure 5 shows the compression ratio with respect to the relative error of the reconstructed results for different methods. Over the whole range of relative errors, the proposed algorithm performs better than the compared methods. Additionally, compared to the other three methods (HOSVD, T-SVD, and TR-SVD), the TT-SVD method typically achieves a higher compression ratio at equal relative error. The reason is that it fully utilizes the low-rankness of the spatial and spectral dimensions. Although the HOSVD and TR-SVD methods also take advantage of the correlation of all dimensions, truncating different dimensions with the same relative error (e.g., the HOSVD method truncates singular values in each dimension using the same relative error \(\epsilon /\sqrt{3}\)) cannot achieve satisfactory compression performance. Since the TT-SVD method already produces positive results, the proposed tensor decomposition \(\mathcal {X}=\mathcal {F}*_3 \mathcal {C} *_3 \mathcal {G} \times _3 \varvec{H}\) can significantly benefit from it.
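The per-mode truncation rule mentioned above can be sketched as follows: each mode keeps the smallest rank whose discarded singular values have energy at most \((\epsilon /\sqrt{3})\Vert \mathcal {X}\Vert _F\), which bounds the total relative error of the truncated HOSVD by \(\epsilon \). The helper names and the synthetic data are illustrative assumptions.

```python
import numpy as np

def unfold(T, mode):
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

def mode_product(T, A, mode):
    return np.moveaxis(np.tensordot(T, A, axes=(mode, 1)), -1, mode)

def rank_for_tolerance(s, tol):
    """Smallest r >= 1 whose discarded tail sqrt(sum_{i>r} s_i^2) is at most tol."""
    tail = np.sqrt(np.cumsum(s[::-1] ** 2))[::-1]  # tail[r]: error when keeping r values
    tail = np.append(tail, 0.0)
    return max(1, int(np.argmax(tail <= tol)))

def hosvd_by_error(X, eps):
    tol = eps * np.linalg.norm(X) / np.sqrt(X.ndim)  # eps / sqrt(3) for third order
    factors = []
    for n in range(X.ndim):
        U, s, _ = np.linalg.svd(unfold(X, n), full_matrices=False)
        factors.append(U[:, :rank_for_tolerance(s, tol)])
    core = X
    for n, U in enumerate(factors):
        core = mode_product(core, U.T, n)
    return core, factors

rng = np.random.default_rng(0)
# Low multilinear rank signal plus small noise (synthetic).
X = rng.standard_normal((2, 2, 2))
for n, size in enumerate((10, 11, 12)):
    X = mode_product(X, rng.standard_normal((size, 2)), n)
X = X + 0.01 * rng.standard_normal(X.shape)

eps = 0.1
core, factors = hosvd_by_error(X, eps)
X_rec = core
for n, U in enumerate(factors):
    X_rec = mode_product(X_rec, U, n)
rel_err = np.linalg.norm(X_rec - X) / np.linalg.norm(X)
print(core.shape, rel_err <= eps)
```

Because every mode receives the same error budget, a mode with slowly decaying singular values keeps a high rank, which is consistent with the limitation discussed above.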

Additionally, Fig. 6 shows the reconstructed results (the odd-numbered rows), their corresponding residual images (the even-numbered rows), and their corresponding PSNR and SSIM values of the light field images \(greek \), \(dishes \), and \(table \). As observed, the results obtained by the proposed method are significantly superior to those of the compared ones, especially for the recovery of objects in the images, such as statues in \(greek \), bowls and spoons in \(dishes \), cabinets and plants in \(table \).

6.3 Videos Compression

We finally evaluate the performance of the proposed method on three widely used color video sequences, each with a size of \(144 \times 176 \times 150\).

Fig. 8 Reconstructed results of videos by different methods under compression ratio 0.95. From top to bottom: the odd-numbered rows are the visual results of \(bunny \), \(carphone \), and \(news \), respectively; the even-numbered rows are the corresponding residual images averaged over three color channels

Figure 7 shows the compression ratio with respect to the relative error of the reconstructed results for different methods. We observe that the proposed data compression algorithm outperforms the compared methods over the whole range of relative errors. Notice that videos frequently feature moving objects and people, such as a flowing river in \(bunny \) and a moving person in \(carphone \) and \(news \), which weaken the correlation of videos along the spatial and temporal modes. Similar to light field image compression, the HOSVD and TT-SVD methods still achieve positive results, while the T-SVD and TR-SVD methods do not deliver satisfactory results in video compression.

Additionally, Fig. 8 shows the reconstructed results (the odd-numbered rows), their corresponding residual images (the even-numbered rows), and their corresponding PSNR and SSIM values for the videos \(bunny \), \(carphone \), and \(news \). It is evident that the results obtained by the proposed method are notably superior to those of the compared methods, particularly in the recovery of moving objects in the images, such as the river in \(bunny \) and the person in \(carphone \) and \(news \).

6.4 Discussion

To comprehensively evaluate the potential of our GTD, we investigate its performance on another representative task: tensor completion (TC). We conduct TC experiments on three multispectral images (i.e., Toy, Cloth, and Feather). All data are normalized to [0, 1]. Incomplete data are generated by randomly sampling elements of the data with three sampling rates (SRs): 1\(\%\), 5\(\%\), and 10\(\%\).
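The sampling step described above can be sketched as follows. This is only an illustrative assumption about how such a mask might be drawn (uniformly at random, without replacement, keeping exactly the requested fraction of entries); the function name `sample_mask` and the `seed` argument are hypothetical and not taken from the paper.

```python
import numpy as np

def sample_mask(shape, sr, seed=0):
    # Boolean mask marking the observed entries of a tensor.
    # Assumption: exactly round(sr * number_of_entries) entries are kept,
    # chosen uniformly at random without replacement.
    rng = np.random.default_rng(seed)
    total = int(np.prod(shape))
    kept = int(round(sr * total))
    idx = rng.choice(total, size=kept, replace=False)
    mask = np.zeros(total, dtype=bool)
    mask[idx] = True
    return mask.reshape(shape)

def observe(x, mask):
    # Incomplete data: observed entries are kept, missing ones set to zero.
    return np.where(mask, x, 0.0)
```

For example, `sample_mask(x.shape, 0.05)` keeps 5% of the entries, which corresponds to the middle sampling rate used in the experiments.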

Four methods are selected for comparison: the Tucker decomposition-based method HaLRTC [26], the tensor singular value decomposition-based method TF-TC [48], the TT decomposition-based method TT-TC [27], and the TR decomposition-based method TR-TC [44]. For fairness, the TF-TC, TT-TC, TR-TC, and our GTD-TC models are all solved using the proximal alternating minimization (PAM) algorithm [1]. The algorithm parameters are set as follows: proximal parameter \(\rho =0.1\), maximum iteration number \(t^{\text {max}}=1000\), and stopping criterion \(\Vert \mathcal {X}^{t+1}-\mathcal {X}^t\Vert _F/ \Vert \mathcal {X}^t\Vert _F \le 10^{-5}\). Additionally, the parameters of HaLRTC are set to \((\frac{1}{3},\frac{1}{3},\frac{1}{3})\). The parameters of TF-TC, TT-TC, TR-TC, and GTD-TC are the tubal rank (i.e., \(R^{\text {Tub}}\)), the TT rank (i.e., (\(R_1^{\text {TT}}, R_2^{\text {TT}}\))), the TR rank (i.e., (\(R_1^{\text {TR}}, R_2^{\text {TR}},R_3^{\text {TR}}\))), and the GTD rank (i.e., (\(R_1^{\text {GTD}}, R_2^{\text {GTD}}\))), respectively, which are manually adjusted to achieve the best performance. Specifically, the adjusted tensor ranks for the three multispectral images under different SRs are provided in Table 3.
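The stopping rule and iteration cap stated above can be written as a small generic driver. The sketch below is not the PAM solver itself: `step` is a hypothetical placeholder for one full sweep of whatever update is being iterated, and only the relative-change test and the iteration limit follow the settings given in the text.

```python
import numpy as np

def iterate_until_converged(step, x0, tol=1e-5, max_iter=1000):
    # Repeat x <- step(x) until the relative change
    # ||x_new - x||_F / ||x||_F drops to tol or below,
    # or until max_iter iterations have been performed.
    x = np.asarray(x0, dtype=float)
    iterations = 0
    for iterations in range(1, max_iter + 1):
        x_new = step(x)
        # Guard against division by zero when the iterate is all zeros.
        denom = max(np.linalg.norm(x), np.finfo(float).tiny)
        change = np.linalg.norm(x_new - x) / denom
        x = x_new
        if change <= tol:
            break
    return x, iterations
```

As a toy usage example, `iterate_until_converged(lambda x: 0.5 * x + 1.0, np.ones(3))` converges to the fixed point 2 in well under 1000 iterations.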

Table 4 reports the PSNR and SSIM values of the multispectral images recovered by the different methods under different SRs. From Table 4, we can observe that our decomposition outperforms the classical tensor decompositions in most cases, which validates the superiority of the proposed tensor decomposition on the TC task.
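For reference, PSNR for data normalized to [0, 1] can be computed as in the sketch below. It assumes a peak value of 1 and a single mean squared error over the whole array; whether the reported values are instead averaged band by band is not stated here, so this should be read as one common convention, and the function name `psnr` is illustrative.

```python
import numpy as np

def psnr(reference, estimate, peak=1.0):
    # Peak signal-to-noise ratio in dB.
    # Assumption: intensities lie in [0, peak] and the mean squared error
    # is taken over all entries of the array at once.
    reference = np.asarray(reference, dtype=float)
    estimate = np.asarray(estimate, dtype=float)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))
```

A uniform error of 0.1 on data in [0, 1] gives a mean squared error of 0.01 and therefore a PSNR of 20 dB.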

7 Conclusion

In this paper, we first define a heterogeneous tensor product that enables us to better characterize the heterogeneous correlation along the mode of the real-world data. In addition, this heterogeneous tensor product can degenerate into the mode product and the \(\text {T}_k\)-product. Based on this heterogeneous tensor product, we propose a GTD framework, which helps to discover numerous novel tensor decompositions. Subsequently, for the data compression problem, we flexibly choose a new tensor decomposition within the GTD framework and design the corresponding compression algorithm. Extensive experimental results verify the effectiveness of the developed tensor decomposition in comparison to other existing tensor decompositions.

We believe that our framework still offers significant potential for exploration. In the future, we will explore simple and efficient methods that can find a suitable GTD under the GTD framework for different types of data and applications.

Data Availability

The datasets generated during and analyzed during the current study are available from the corresponding author on reasonable request.

Notes

If \(d=1\), \(\overrightarrow{\mathcal {Z}}^{\varvec{m}_{d-1}}\) is assumed to be \(\overrightarrow{\mathcal {Z}}^{\varvec{m}_1}\).

The data is available at https://www.cs.columbia.edu/CAVE/databases/multispectral/.

The data is available at http://hci-lightfield.iwr.uni-heidelberg.de.

The data is available at http://trace.eas.asu.edu/yuv/.

References

Attouch, H., Bolte, J., Svaiter, B.F.: Convergence of descent methods for semi-algebraic and tame problems: proximal algorithms, forward–backward splitting, and regularized Gauss–Seidel methods. Math. Program. 137(1), 91–129 (2013)

Bies, R.R., Muldoon, M.F., Pollock, B.G., Manuck, S., Smith, G., Sale, M.E.: A genetic algorithm-based, hybrid machine learning approach to model selection. J. Pharmacokinet. Pharmacodyn. 33(2), 195 (2006)

Bigoni, D., Engsig-Karup, A.P., Marzouk, Y.M.: Spectral tensor-train decomposition. SIAM J. Sci. Comput. 38(4), A2405–A2439 (2016)

Brachat, J., Comon, P., Mourrain, B., Tsigaridas, E.: Symmetric tensor decomposition. Linear Algebra Appl. 433(11–12), 1851–1872 (2010)

Carroll, J.D., Chang, J.J.: Analysis of individual differences in multidimensional scaling via an N-way generalization of “Eckart-Young” decomposition. Psychometrika 35(3), 283–319 (1970)

Che, M., Wei, Y.: An efficient algorithm for computing the approximate t-URV and its applications. J. Sci. Comput. 92(3), 93 (2022)

Cyganek, B., Gruszczyński, S.: Hybrid computer vision system for drivers’ eye recognition and fatigue monitoring. Neurocomputing 126, 78–94 (2014)

De Lathauwer, L.: Signal Processing Based on Multilinear Algebra. Katholieke Universiteit Leuven, Leuven (1997)

De Lathauwer, L., De Moor, B., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21(4), 1253–1278 (2000)

Dong, W., Yu, G., Qi, L., Cai, X.: Practical sketching algorithms for low-rank Tucker approximation of large tensors. J. Sci. Comput. 95(2), 52 (2023)

Franz, T., Schultz, A., Sizov, S., Staab, S.: Triplerank: ranking semantic web data by tensor decomposition. In: International Semantic Web Conference, pp. 213–228 (2009)

Govindu, V.M.: A tensor decomposition for geometric grouping and segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, vol. 1, pp. 1150–1157 (2005)

Harshman, R.A.: Foundations of the PARAFAC procedure: models and conditions for an “explanatory” multimodal factor analysis. In: UCLA Working Papers in Phonetics (1970)

He, H., Ling, C., Xie, W.: Tensor completion via a generalized transformed tensor t-product decomposition without t-svd. J. Sci. Comput. 93(2), 47 (2022)

Jiang, B., Ding, C., Tang, J., Luo, B.: Image representation and learning with graph-Laplacian tucker tensor decomposition. IEEE Trans. Cybern. 49(4), 1417–1426 (2018)

Jiang, Q., Zhao, X.L., Lin, J., Yang, J.H., Peng, J., Jiang, T.X.: Superpixel-oriented thick cloud removal method for multitemporal remote sensing images. IEEE Geosci. Remote Sens. Lett. 21, 1–5 (2024)

Kernfeld, E., Kilmer, M., Aeron, S.: Tensor-tensor products with invertible linear transforms. Linear Algebra Appl. 485, 545–570 (2015)

Kilmer, M.E., Martin, C.D.: Factorization strategies for third-order tensors. Linear Algebra Appl. 435(3), 641–658 (2011)

Kilmer, M.E., Martin, C.D., Perrone, L.: A third-order generalization of the matrix SVD as a product of third-order tensors. Tufts University, Department of Computer Science, Tech. Rep. TR-2008-4 (2008)

Kolda, T., Bader, B.W., Acar Ataman, E.N., Dunlavy, D., Bassett, R., et al.: Matlab tensor toolbox, version 3.0. https://www.osti.gov//servlets/purl/1349514 (2017)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009)

Li, C., Sun, Z.: Evolutionary topology search for tensor network decomposition. In: Proceedings of the International Conference on Machine Learning (ICML), pp. 5947–5957 (2020)

Li, M., Li, W., Chen, Y., Xiao, M.: The nonconvex tensor robust principal component analysis approximation model via the weighted \(l_p\)-norm regularization. J. Sci. Comput. 89(3), 67 (2021)

Li, N., Kindermann, S., Navasca, C.: Some convergence results on the regularized alternating least-squares method for tensor decomposition. Linear Algebra Appl. 438(2), 796–812 (2013)

Lin, J., Huang, T.Z., Zhao, X.L., Ji, T.Y., Zhao, Q.: Tensor robust kernel PCA for multidimensional data. IEEE Trans. Neural Netw. Learn. Syst. (2024). https://doi.org/10.1109/TNNLS.2024.3356228

Liu, J., Musialski, P., Wonka, P., Ye, J.: Tensor completion for estimating missing values in visual data. IEEE Trans. Pattern Anal. Mach. Intell. 35(1), 208–220 (2012)

Oseledets, I.V.: Tensor-train decomposition. SIAM J. Sci. Comput. 33(5), 2295–2317 (2011)

Popa, J., Lou, Y., Minkoff, S.E.: Low-rank tensor data reconstruction and denoising via ADMM: algorithm and convergence analysis. J. Sci. Comput. 97(2), 49 (2023)

Qi, L., Chen, Y., Bakshi, M., Zhang, X.: Triple decomposition and tensor recovery of third order tensors. SIAM J. Matrix Anal. Appl. 42(1), 299–329 (2021)

Qi, L., Sun, W., Wang, Y.: Numerical multilinear algebra and its applications. Front. Math. China 2(4), 501–526 (2007)

Qiu, D., Bai, M., Ng, M.K., Zhang, X.: Robust low transformed multi-rank tensor methods for image alignment. J. Sci. Comput. 87(1), 24 (2021)

Reichel, L., Ugwu, U.O.: Tensor Arnoldi–Tikhonov and GMRES-type methods for ill-posed problems with a t-product structure. J. Sci. Comput. 90, 1–39 (2022)

Schollwöck, U.: The density-matrix renormalization group in the age of matrix product states. Ann. Phys. 326(1), 96–192 (2011)

Sobral, A., Javed, S., Ki Jung, S., Bouwmans, T., Zahzah, E.h.: Online stochastic tensor decomposition for background subtraction in multispectral video sequences. In: Proceedings of the IEEE International Conference on Computer Vision Workshops, pp. 106–113 (2015)

Song, G., Ng, M.K., Zhang, X.: Robust tensor completion using transformed tensor singular value decomposition. Numer. Linear Algebra Appl. 27(3), e2299 (2020)

Tucker, L.R.: The extension of factor analysis to three-dimensional matrices. Contrib. Math. Psychol. 110119, 110–182 (1964)

Tucker, L.R.: Some mathematical notes on three-mode factor analysis. Psychometrika 31(3), 279–311 (1966)

Verstraete, F., Cirac, J.I.: Matrix product states represent ground states faithfully. Phys. Rev. B 73(9), 094423 (2006)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Yu, Q., Zhang, X., Huang, Z.H.: Multi-tubal rank of third order tensor and related low rank tensor completion problem. arXiv preprint arXiv:2012.05065 (2020)

Zeng, C.: Rank properties and computational methods for orthogonal tensor decompositions. J. Sci. Comput. 94(1), 6 (2023)

Zhang, X., Ng, M.K., Bai, M.: A fast algorithm for deconvolution and Poisson noise removal. J. Sci. Comput. 75, 1535–1554 (2018)

Zhang, Z., Yang, X., Oseledets, I.V., Karniadakis, G.E., Daniel, L.: Enabling high-dimensional hierarchical uncertainty quantification by ANOVA and tensor-train decomposition. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 34(1), 63–76 (2014)

Zhao, Q., Zhou, G., Xie, S., Zhang, L., Cichocki, A.: Tensor ring decomposition. arXiv preprint arXiv:1606.05535 (2016)

Zhao, X., Bai, M., Ng, M.K.: Nonconvex optimization for robust tensor completion from grossly sparse observations. J. Sci. Comput. 85(2), 46 (2020)

Zheng, Y.B., Huang, T.Z., Zhao, X.L., Jiang, T.X., Ma, T.H., Ji, T.Y.: Mixed noise removal in hyperspectral image via low-fibered-rank regularization. IEEE Trans. Geosci. Remote Sens. 58(1), 734–749 (2019)

Zheng, Y.B., Huang, T.Z., Zhao, X.L., Zhao, Q., Jiang, T.X.: Fully-connected tensor network decomposition and its application to higher-order tensor completion. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35(12), pp. 11071–11078 (2021)

Zhou, P., Lu, C., Lin, Z., Zhang, C.: Tensor factorization for low-rank tensor completion. IEEE Trans. Image Process. 27(3), 1152–1163 (2017)

Funding

This research is supported by the National Natural Science Foundation of China (Nos. 12371456, 12171072, 12201522, and 62131005), the Sichuan Science and Technology Program (Nos. 2024NSFJQ0038 and 2023ZYD0007), the National Key Research and Development Program of China (No. 2020YFA0714001), the Science Foundation of Sichuan Province of China (No. 2024NSFSC1389), and the Fundamental Research Funds for the Central Universities (No. 2682023CX069).

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

About this article

Cite this article

Liu, YY., Zhao, XL., Ding, M. et al. The Generalized Tensor Decomposition with Heterogeneous Tensor Product for Third-Order Tensors. J Sci Comput 100, 78 (2024). https://doi.org/10.1007/s10915-024-02637-8

DOI: https://doi.org/10.1007/s10915-024-02637-8