Abstract

The orthogonal decomposition factorizes a tensor into a sum of an orthogonal list of rank-one tensors. The corresponding rank is called orthogonal rank. We present several properties of orthogonal rank, which are different from those of tensor rank in many aspects. For instance, a subtensor may have a larger orthogonal rank than the whole tensor. To fit the orthogonal decomposition, we propose an algorithm based on the augmented Lagrangian method. The gradient of the objective function has a nice structure, inspiring us to use gradient-based optimization methods to solve it. We guarantee the orthogonality by a novel orthogonalization process. Numerical experiments show that the proposed method has a great advantage over the existing methods for strongly orthogonal decompositions in terms of the approximation error.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

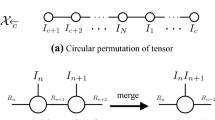

Given a tensor \(\mathcal {A}\in \mathbb {R}^{I_1\times \cdots \times I_N}\), the CANDECOMP/PARAFAC (CP) decomposition factorizes it into a sum of rank-one tensors:

where \(\textbf{v}^{(n)}_k\in \mathbb {R}^{I_n},k=1,\ldots ,K,n=1,\ldots ,N\). Usually, it is difficult to determine the number K for expressing \(\mathcal {A}\) exactly [15, 16]. Hence, the following approximate CP decomposition is more meaningful in practical applications:

where \(\Vert \cdot \Vert \) is the Frobenius norm and R is a prescribed number. This problem is just to find a best rank-R approximation to \(\mathcal {A}\). Unfortunately, this problem has no solution in general [9, 20].

As mentioned in Ref. [9], the major open question in tensor approximation is how to overcome the ill-posedness of the low rank approximation problem. One natural strategy is to impose orthogonality constraints, because the orthogonality is an inherent property of second-order tensor rank decompositions, i.e., matrix singular value decompositions (SVD). The orthogonal tensor decomposition can be traced back to [6] for the symmetric case, and then is studied in [17] for the general case:

where \(\langle \cdot ,\cdot \rangle \) is the inner product that induces the Frobenius norm; see Sect. 2.1 for details. In [23], the orthogonality constraint is extended to general angular constraints, where several properties including the existence, uniqueness and exact recoverability are discussed. As a special case of decompositions with angular constraints, the orthogonal tensor decomposition also has these properties.

The greedy approach presented in Ref. [17] is the earliest method for computing a low orthogonal rank approximation to a given tensor, where one rank-one component is updated in one iteration. Specifically, suppose we have obtained k rank-one components. The \((k+1)\)st rank-one component is updated by

This method is reasonable only if the Eckart-Young theorem [11] can be extended to the orthogonal decomposition, i.e., the best low orthogonal rank approximation can be obtained by truncating the orthogonal rank decomposition (see Sect. 3 for the definition). Refer to [17, Section 5] for details. However, a counterexample presented in Ref. [18] shows that such an extension is not possible. Suppose \(\mathcal {T}_r=\otimes _{n=1}^N \textbf{v}_r^{(n)}\) in (1). The orthogonality constraint has the following form

This means that there exists at least one \(m \in \{1,\ldots ,N\}\) such that \(\left\langle \textbf{v}_s^{(m)},\textbf{v}_t^{(m)}\right\rangle =0\). However, we cannot determine the number m for different pairs of s, t. This is the main difficulty in fitting orthogonal decompositions. Practical existing algorithms are proposed by fixing the number m; see [5, 13, 31, 34, 35]. Actually, these algorithms are aimed at strongly orthogonal decompositions, whose one or more factor matrices are orthogonal. All these algorithms follow a similar framework, by combining the alternating minimization method and the polar decomposition. For factor matrices with general angular constraints, a proximal gradient algorithm is proposed in Ref. [26]. In [24], the Jacobi SVD algorithm is extended to reduce a tensor to a form with the \(\ell _2\) norm of the diagonal vector being maximized. The resulting form is not diagonal and hence this is not an algorithm for orthogonal decompositions discussed in this work.

In this paper, we first study orthogonal rank. We find that there are many differences between orthogonal rank and tensor rank. Orthogonal rank may be variant under the invertible n-mode product, a subtensor may have a larger orthogonal rank than the whole tensor, and orthogonal rank is lower semicontinuous. A refined upper bound of orthogonal rank [22] is presented. As for the algorithm, we employ the augmented Lagrangian method to convert (1) into an unconstrained problem. We find that the gradient of the objective function has a good structure. Therefore, we use gradient-based optimization methods to solve each subproblem. To guarantee the orthogonality of the final result, we develop an orthogonalization process. Numerical experiments show that our method has a great advantage over the existing methods for strongly orthogonal decompositions in terms of the approximation error.

The rest of this paper is organized as follows. Section 2 recalls some preliminary materials. In Sect. 3, we present several properties of orthogonal rank. The algorithm is proposed in Sect. 4. Experimental results are given in Sect. 5. Conclusions are presented in Sect. 6.

1.1 Notation

We use bold-face lowercase letters (\(\textbf{a}, \textbf{b}, \ldots \)) to denote vectors, bold-face capitals (\(\textbf{A}, \textbf{B}, \ldots \)) to denote matrices and calligraphic letters (\(\mathcal {A}, \mathcal {B}, \ldots \)) to denote tensors. The notations \(\textbf{I}\) and \(\textbf{0}\) denote the identity matrix and the zero matrix of suitable dimensions, respectively. The \((i_1,i_2,\cdots ,i_N)\)th element of \(\mathcal {A}\) is denoted by \(a_{i_1i_2\cdots i_N}\). The n-mode product of a tensor \(\mathcal {A}\) by a matrix \(\textbf{M}\) is denoted by \(\textbf{M}\cdot _n \mathcal {A}\). Following [9], we write \(\textbf{M}_1\cdot _1 \cdots \textbf{M}_N\cdot _N \mathcal {A}\) more concisely as \((\textbf{M}_1,\cdots ,\textbf{M}_N)\cdot \mathcal {A}\). The mode-n unfolding matrix is denoted by \(\textbf{A}_{(n)}\), whose columns are all mode-n fibers of \(\mathcal {A}\), \(n=1,\ldots ,N\).

2 Preliminaries

2.1 Inner Product, Angle and Orthogonality

Let \(\mathcal {A},\mathcal {B} \in \mathbb {R}^{I_1\times \cdots \times I_N}\). The inner product of \(\mathcal {A}, \mathcal {B}\) is defined by

and the Frobenius norm of \(\mathcal {A}\) induced by this inner product is \(\Vert \mathcal {A}\Vert = \sqrt{\langle \mathcal {A},\mathcal {A}\rangle }\). Let \(\mathcal {U}=\textbf{u}^{(1)}\otimes \cdots \otimes \textbf{u}^{(N)}\) and \(\mathcal {V}=\textbf{v}^{(1)}\otimes \cdots \otimes \textbf{v}^{(N)}\). Then

We say that \(\mathcal {A}\) is a unit tensor if \(\Vert \mathcal {A}\Vert =1\).

The angle between \(\mathcal {A},\mathcal {B}\) is defined by

Two tensors \(\mathcal {A},\mathcal {B}\) are orthogonal (\(\mathcal {A} \bot \mathcal {B}\)) if \(\langle \mathcal {A},\mathcal {B}\rangle =0\), i.e., \(\angle (\mathcal {A},\mathcal {B})= \frac{\pi }{2}\). In (2), \(\mathcal {U}\) and \(\mathcal {V}\) are orthogonal if \(\prod _{n=1}^{N}\langle \textbf{u}^{(n)},\textbf{v}^{(n)}\rangle =0\). This leads to other options for defining orthogonality of two rank-one tensors. Given \(1 \le i_1< \cdots < i_M \le N\), we say that \(\mathcal {U}\) and \(\mathcal {V}\) are \((i_1,\cdots ,i_M)\)-orthogonal if

If \(M=N\), we say that \(\mathcal {U}\) and \(\mathcal {V}\) are completely orthogonal.

A list of tensors \(\mathcal {T}_1,\cdots ,\mathcal {T}_m\) is said to be orthogonal if \(\langle \mathcal {T}_i,\mathcal {T}_j\rangle = 0\) for all distinct \(i,j\in \{1,\ldots ,m\}\). An orthogonal list of tensors is an orthonormal list if each of its elements is a unit tensor. Similarly, we can define an \((i_1,\cdots ,i_M)\)-orthogonal list of rank-one tensors.

2.2 CP Decompositions and Tensor Rank

The CP decomposition factorizes a tensor into a sum of rank-one tensors:

where the nth factor matrix is

An interesting property of tensors is that their CP decompositions are often unique. Refer to [19, Section 3.2] for detailed introductions. The most famous results [21, 30] on the uniqueness condition depend on the concept of k-rank. The k-rank of a matrix \(\textbf{M}\), denoted by \(k_{\textbf{M}}\), is the largest integer such that every set containing \(k_{\textbf{M}}\) columns of \(\textbf{M}\) is linearly independent. For the CP decomposition (4), its uniqueness condition presented in [30] is

Clearly, if (4) is unique, we must have \(R=\textrm{rank}(\mathcal {A})\).

The rank of \(\mathcal {A}\) is defined by \(\textrm{rank}(\mathcal {A}):=\min \left\{ R: \mathcal {A} = \sum _{r=1}^{R}\textbf{v}_r^{(1)} \otimes \cdots \otimes \textbf{v}_r^{(N)}\right\} \). Given \(R>0\), the following problem

aims to find the best rank-R approximation of \(\mathcal {A}\). However, (6) has no solution in general [9, 20]. This is due to the following feature of tensor rank.

Proposition 2.1

( [9]) Let \(R\ge 2\). The set \(\{\mathcal {A}\in \mathbb {R}^{I_1\times \cdots \times I_N}: \textrm{rank}(\mathcal {A})\le R\}\) is not closed in the normed space \(\mathbb {R}^{I_1\times \cdots \times I_N}\). That is, the function \(\textrm{rank}(\mathcal {A})\) is not lower semicontinuous.

2.3 Orthogonal Decompositions

The orthogonal decomposition factorizes a tensor into a sum of an orthogonal list of rank-one tensors:

The following lemma can be obtained by a direct calculation based on (2).

Lemma 2.2

The decomposition (4) is an orthogonal decomposition if and only if \(\textbf{V}^{(1)^\top }\textbf{V}^{(1)}\circledast \cdots \circledast \textbf{V}^{(N)^\top }\textbf{V}^{(N)}\) is diagonal, where “\(\circledast \)” is the Hadamard product.

The \((i_1,\cdots ,i_M)\)-orthogonal decomposition factorizes a tensor into a sum of an \((i_1,\cdots ,i_M)\)-orthogonal list of rank-one tensors. Any type of \((i_1,\cdots ,i_M)\)-orthogonal decomposition is called strongly orthogonal decompositionFootnote 1. Clearly, a strongly orthogonal decomposition is also an orthogonal decomposition. However, we are not in general guaranteed that a strongly orthogonal decomposition exists. Simple examples include the tensors with \(\textrm{rank}(\mathcal {A})>\max \{I_1,\ldots ,I_N\}\)Footnote 2. This is because an \((i_1,\cdots ,i_M)\)-orthogonal list consists of at most \(\min \{I_{i_1},\ldots ,I_{i_M}\}\) elements. Related discussions can be found in Ref. [5, 17].

There is a lot of research on strongly orthogonal decompositions. The \((1,\cdots ,N)\)-orthogonal decomposition, also called the completely orthogonal decomposition, is discussed in Ref. [5]. The (n)-orthogonality, where \(1\le n \le N\), is considered in Ref. [31, 34]. General strongly orthogonal decompositions are considered in Ref. [13, 35].

3 Properties of Orthogonal Rank

The orthogonal rank of \(\mathcal {A}\) is defined by

If \(R=\textrm{rank}_{\bot }(\mathcal {A})\) in (7), then (7) is called an orthogonal rank decomposition.

Clearly, \(\textrm{rank}_{\bot }(\mathcal {A})\ge \textrm{rank}(\mathcal {A})\). The following lemma gives a necessary and sufficient condition for \(\textrm{rank}_{\bot }(\mathcal {A})=\textrm{rank}(\mathcal {A})\) under some assumptions.

Lemma 3.1

Let \(R\ge 2,\textbf{V}^{(n)} \in \mathbb {R}^{I_n \times R}\) for \(n=1,\ldots N\) and \(\mathcal {A} = [\![ \textbf{V}^{(1)},\cdots ,\textbf{V}^{(N)}]\!]\). Suppose \(\textrm{rank}(\textbf{V}^{(n)})=R\) for all \(n=1,\ldots N\). Then \(\textrm{rank}(\mathcal {A})=\textrm{rank}_{\bot }(\mathcal {A})=R\) if and only if \(\textbf{V}^{(1)^\top }\textbf{V}^{(1)}\circledast \cdots \circledast \textbf{V}^{(N)^\top }\textbf{V}^{(N)}\) is diagonal.

Proof

Since \(\textrm{rank}(\textbf{V}^{(n)})=R\) and \(R\ge 2\), we have

By (5), this decomposition is unique and \(\textrm{rank}(\mathcal {A})=R\).

By Lemma 2.2, if \(\textbf{V}^{(1)^\top }\textbf{V}^{(1)}\circledast \cdots \circledast \textbf{V}^{(N)^\top }\textbf{V}^{(N)}\) is diagonal, then this decomposition is an orthogonal decomposition. Hence,

Also by Lemma 2.2, if \(\textbf{V}^{(1)^\top }\textbf{V}^{(1)}\circledast \cdots \circledast \textbf{V}^{(N)^\top }\textbf{V}^{(N)}\) is not diagonal, then this decomposition is not an orthogonal decomposition. Due to the uniqueness, there does not exist an orthogonal decomposition with R terms, i.e., \(\textrm{rank}_{\bot }(\mathcal {A})>R\). \(\square \)

Suppose \(\mathcal {A}\) is a subtensor of \(\mathcal {B}\), then \(\textrm{rank}(\mathcal {A}) \le \textrm{rank}(\mathcal {B})\). It comes as a surprise that the analog does not hold for orthogonal rank. See the next proposition.

Proposition 3.2

Let \(R\ge 2,\textbf{V}^{(n)} \in \mathbb {R}^{I_n \times R}\) for \(n=1,\ldots N\) and \(\mathcal {A} = [\![ \textbf{V}^{(1)},\cdots ,\textbf{V}^{(N)}]\!]\). Suppose \(\textrm{rank}(\textbf{V}^{(n)})=R\) for all \(n=1,\ldots N\). If \(\textbf{V}^{(1)^\top }\textbf{V}^{(1)}\circledast \cdots \circledast \textbf{V}^{(N)^\top }\textbf{V}^{(N)}\) is not diagonal, then there exists a tensor \(\mathcal {B}\) such that

Proof

We can find a sufficiently large t such that \(t\textbf{I}-\textbf{V}^{(1)^\top }\textbf{V}^{(1)}\) is positive semidefinite. Then there exists a matrix \(\textbf{M}\) with R columns such that

Define \(\textbf{V}=\begin{bmatrix} \textbf{V}^{(1)} \\ \textbf{M} \end{bmatrix}\). Then \(\mathcal {B}=[\![ \textbf{V},\textbf{V}^{(2)},\cdots ,\textbf{V}^{(N)}]\!]\) is an orthogonal decomposition. By Lemma 3.1, we have \(\textrm{rank}_{\bot }(\mathcal {B})=R<\textrm{rank}_{\bot }(\mathcal {A})\). \(\square \)

Remark 3.3

If we regard a matrix as a two-dimensional dataset, the value of rank represents the real quantity of information that the matrix embodies. That is, a low-rank matrix embodies a lot of redundant information. Therefore, it makes sense that the rank of a submatrix is smaller than that of the whole matrix. There are various ways to decompose a tensor into a sum of rank-one tensors, and each of these decompositions leads naturally to a concept of tensor rank. Proposition 3.2 shows the flaw of orthogonal rank in representing the real quantity of information that a tensor embodies.

A basic property of tensor rank is its invariance under the invertible n-mode product. If \(\textbf{M}_n\) is invertible for \(n=1,\dots ,N\), [9, Lemma 2.3] tells us that

However, the analog does not hold for orthogonal rank. Counterexamples can be constructed based on Lemma 3.1. Due to the fact that \(\textrm{rank}(\textbf{V}^{(1)})=R\), there exists an invertible matrix \(\textbf{M} \in \mathbb {R}^{I_1\times I_1}\) satisfying \(\textbf{M}(:,1:R)=\textbf{V}^{(1)}\). Then \(\textbf{M}^{-1}\textbf{V}^{(1)}=\begin{bmatrix} \textbf{I} \\ \textbf{0} \end{bmatrix}\) and \(\textbf{M}^{-1}\cdot _1 \mathcal {A} = [\![ \textbf{M}^{-1}\textbf{V}^{(1)},\textbf{V}^{(2)},\cdots ,\textbf{V}^{(N)}]\!]\) is an orthogonal decomposition. Therefore,

If the n-mode product is orthogonal, we have the following lemma.

Lemma 3.4

Let \(\mathcal {A}\in \mathbb {R}^{I_1\times \cdots \times I_N}\) and \(\textbf{M}_n \in \mathbb {R}^{I_n \times I_n}\) be orthogonal for \(n=1,\ldots ,N\). Then

Proof

Suppose \(\mathcal {A}=[\![ \textbf{V}^{(1)},\cdots ,\textbf{V}^{(N)}]\!]\) is an orthogonal decomposition. Then \((\textbf{M}_1,\cdots ,\textbf{M}_N)\cdot \mathcal {A}= [\![ \textbf{M}_1\textbf{V}^{(1)},\cdots ,\textbf{M}_N\textbf{V}^{(N)}]\!]\) and \((\textbf{M}_1\textbf{V}^{(1)})^\top \textbf{M}_1\textbf{V}^{(1)}\circledast \cdots \circledast (\textbf{M}_N\textbf{V}^{(N)})^\top \textbf{M}_N\textbf{V}^{(N)}=\textbf{V}^{(1)^\top }\textbf{V}^{(1)}\circledast \cdots \circledast \textbf{V}^{(N)^\top }\textbf{V}^{(N)}\) is diagonal. Hence, \(\textrm{rank}_{\bot }((\textbf{M}_1,\cdots ,\textbf{M}_N)\cdot \mathcal {A}) \le \textrm{rank}_{\bot }(\mathcal {A})\).

On the other hand, we have

and hence \(\textrm{rank}_{\bot }(\mathcal {A})\le \textrm{rank}_{\bot }((\textbf{M}_1,\cdots ,\textbf{M}_N)\cdot \mathcal {A})\). Combining these two parts yields the result. \(\square \)

In [22, (2.8)], an upper bound of \(\textrm{rank}_{\bot }(\mathcal {A})\) is given as

We refine this result in terms of the multilinear rank. The n-rank of \(\mathcal {A}\), denoted by \(\textrm{rank}_{n}(\mathcal {A})\), is the dimension of the vector space spanned by all n-mode fibers, i.e., \(\textrm{rank}_{n}(\mathcal {A})=\textrm{rank}(\textbf{A}_{(n)})\). The N-tuple (\(\textrm{rank}_{1}(\mathcal {A}),\ldots ,\textrm{rank}_{N}(\mathcal {A})\)) is called the multilinear rank of \(\mathcal {A}\).

Proposition 3.5

Let \(\mathcal {A}\in \mathbb {R}^{I_1\times \cdots \times I_N}\). Then

Proof

Suppose \(\mathcal {A}\) has the following higher-order singular value decomposition (HOSVD) [8]:

where \(\textbf{U}_n \in \mathbb {R}^{I_n\times I_n}\) is orthogonal and \(s_{i_1i_2\cdots i_N}=0\) if there exists at least one \(n \in \{1,\ldots ,N\}\) such that \(i_n>\textrm{rank}_n(\mathcal {A})\). It follows from Lemma 3.4 that \(\textrm{rank}_{\bot }(\mathcal {A})=\textrm{rank}_{\bot }(\mathcal {S})\). Note that

is an orthogonal decomposition, where \(\textbf{e}_{i_k}\in \mathbb {R}^{I_k}\) is the standard basis vector and \(\mathcal {S}(i_1,\ldots ,i_{m-1},:,i_{m+1},\ldots ,i_N)\) is a mode-m fiber. Hence \(\textrm{rank}_{\bot }(\mathcal {S})\) is less than the number of non-zero mode-m fibers, which is at most \(\prod _{n\ne m}\textrm{rank}_n(\mathcal {A})\). \(\square \)

In contrast to Proposition 2.1, we have the following proposition for orthogonal rank.

Proposition 3.6

For any \(R>0\), the set \(\{\mathcal {A}\in \mathbb {R}^{I_1\times \cdots \times I_N}: \textrm{rank}_{\bot }(\mathcal {A})\le R\}\) is closed in the normed space \(\mathbb {R}^{I_1\times \cdots \times I_N}\). That is, the function \(\textrm{rank}_{\bot }(\mathcal {A})\) is lower semicontinuous.

Proof

Suppose \(\lim _{m\rightarrow \infty }\mathcal {A}_m=\mathcal {A}\), where \(\textrm{rank}_{\bot }(\mathcal {A}_m ) \le R\). It suffices to prove that \(\textrm{rank}_{\bot }(\mathcal {A})\le R\). Since \(\textrm{rank}_{\bot }(\mathcal {A}_m ) \le R\), we can write

where \(\left\langle \mathcal {U}_{m,s}, \mathcal {U}_{m,t}\right\rangle =0\) for all \(s\ne t\) and \(\Vert \textbf{u}^{(n)}_{m,r}\Vert =1\) for all \(n=1,\ldots ,N\) and \(r=1,\ldots ,R\). (If \(\textrm{rank}_{\bot }(\mathcal {A}_m ) < R\), we just need to set \(\sigma _{m,r}=0\) for \(r=\textrm{rank}_{\bot }(\mathcal {A}_m )+1,\ldots ,R\).) Then

Since \(\lim _{m\rightarrow \infty }\Vert \mathcal {A}_m\Vert =\Vert \mathcal {A}\Vert \), \(\sigma _{m,r}\) are uniformly bounded. Thus we can find a subsequence with convergence \(\lim _{k\rightarrow \infty }\sigma _{m_k,r}= \sigma _{r}, \lim _{k\rightarrow \infty }\textbf{u}^{(n)}_{m_k,r}= \textbf{u}^{(n)}_{r}\) for all r and n. Note that \(\lim _{k\rightarrow \infty } \mathcal {A}_{m_k} = \mathcal {A}\), i.e.,

Moreover,

Then (8) is an orthogonal decomposition and hence \(\textrm{rank}_{\bot }(\mathcal {A})\le R\). \(\square \)

Given \(R>0\), finding the best orthogonal rank-R approximation of \(\mathcal {A}\) is

By Proposition 3.6, we know that the solution of (9) always exists.

4 Algorithms for Low Orthogonal Rank Approximation

Problem (9) can be formulated as

where \(\textbf{v}:=\left[ \textbf{v}_{1}^{(1)^\top }\cdots \textbf{v}_{R}^{(1)^\top }\cdots \textbf{v}_{1}^{(N)^\top }\cdots \textbf{v}_{R}^{(N)^\top } \right] ^\top \) and \(P=R\sum _{n=1}^{N}I_n\). Define \(\mathcal {T}_r = \otimes _{n=1}^N \textbf{v}_r^{(n)}, r=1,\ldots ,R\). Then (10) can be rewritten as

The main difficulty in solving (10) is how to handle the 2N-degree polynomial constraints. The augmented Lagrangian method is a powerful method for solving constrained problems. This method is suitable for (10), because the subproblems can be solved easily by gradient-based optimization methods, which will be shown later. Using the augmented Lagrangian method means that the orthogonality constraint is not met exactly in each step. Our target is to make \(\angle (\mathcal {T}_s,\mathcal {T}_t)\) close to \(\pi /2\). By (3), we have

To avoid the influence of the norms \(\Vert \mathcal {T}_r\Vert \), an ideal strategy is to consider the following augmented Lagrangian function:

However, this would make the subproblem rather difficult to solve. We can realize this idea by setting different penalty parameters for the augmented Lagrangian function:

where \(\lambda _{st}=\lambda _{ts}\) are Lagrange multipliers, \(c_{st}=c_{ts}>0\) are penalty parameters and \(\varvec{\lambda }=\{\lambda _{st}\},\textbf{c}=\{c_{st}\}\). How to set penalty parameters will be elaborated in Sect. 4.1. Using different penalty parameters can reflect different features of different constraints, and is very common for the augmented Lagrangian method; see [3, p. 124], [33, Chapter 10.4] and [7, (1.5)].

For each iteration of the augmented Lagrangian method, we need to solve the following problem

with \(\varvec{\lambda },\textbf{c}\) given. If \(\varvec{\lambda }=\{0\},\textbf{c}=\{0\}\), (14) is just (6). Since (6) has no solution in general, the first issue that we need to make sure is whether (14) has a solution. We have the following proposition.

Proposition 4.1

If \(c_{st}>0\) for all \(s\ne t\), then (14) always has a solution.

Proof

For convenience, define \(\mathscr {E}(\textbf{v})=\mathscr {L}(\textbf{v},\varvec{\lambda };\textbf{c})\). It follows from (11) and (13) that

Note that

We can scale each \(\textbf{v}_r^{(n)}\) such that \(\Vert \textbf{v}_r^{(n)}\Vert = \Vert \mathcal {T}_r\Vert ^{1/N}, n=1,\ldots ,N\). Define the following set

The continuity of \(\Vert \cdot \Vert \) implies that W is closed. We have

Hence, it suffices to show that (14) has a solution on W.

Define \(\alpha =\frac{1}{4}\sum _{s=1}^{R}\sum _{t=1,t\ne s}^{R}\frac{\lambda _{st}^2}{c_{st}}, \beta =\min \{c_{st}\},\gamma =\sum _{s=1}^{R}\sum _{t=1,t\ne s}^{R}\frac{|\lambda _{st}|}{c_{st}}\). For any \(\xi \ge \inf \mathscr {E} \ge 0\), if \(\mathscr {E} \le \xi \), then \(\left\| \mathcal {A} - \sum _{r=1}^{R}\mathcal {T}_r\right\| \le \sqrt{2(\xi +\alpha )}\) and

Hence \(\Vert \sum _{r=1}^{R}\mathcal {T}_r\Vert \le \Vert \mathcal {A} - \sum _{r=1}^{R}\mathcal {T}_r\Vert + \Vert \mathcal {A}\Vert \le \sqrt{2(\xi +\alpha )} + \Vert \mathcal {A}\Vert \). For any \(\textbf{v}\in W\), it follows that

That is, the level set \(\{\textbf{v}\in W: \mathscr {E}(\textbf{v}) \le \xi ,\xi \ge \inf \mathscr {E} \}\) is bounded. Combining with the fact that \(\mathscr {E}(\textbf{v})\) is continuous and W is closed, it follows from [28, Theorem 1.9] that \(\mathscr {E}\) can attain its minimum on W. \(\square \)

We will employ gradient-based optimization methods to solve each subproblem. Gradient-based optimization methods have been used in fitting CP decompositions; see [1, 12]. To use such methods, we need to compute the gradient of the objective function. Define

where “\(\odot \)” is the Khatri-Rao product. With the relationship introduced in [19, Section 2.6], we have

The gradient of the first term of \(\mathscr {L}\), i.e., \(\mathscr {F}(\textbf{v})\) in (10), can be found in [1, 12]. Here we provide a calculation based on unfolding matrices as follows.

Lemma 4.2

The gradient of \(\mathscr {F}(\textbf{v})\) in (10) is given by

for \(n=1,\ldots ,N\), where \(\textbf{V}^{(-n)}\) and \(\varvec{\Gamma }^{(n)}\) are defined in (16) and (17), respectively.

Proof

Let \(\mathcal {B}=\sum _{r=1}^{R}\otimes _{n=1}^N \textbf{v}_r^{(n)}\). Then we have \(\textbf{B}_{(n)}=\textbf{V}^{(n)}\textbf{V}^{(-n)^\top }\) (see [19, Sect. 3]) and

Then,

\(\square \)

Denote the sum of the last two terms of \(\mathscr {L}\) by \(\mathscr {G}\), i.e.,

and define \(\gamma _{sr}^{(n)}=\prod _{m=1,m\ne n}^{N}\left\langle \textbf{v}_s^{(m)}, \textbf{v}_r^{(m)}\right\rangle \). Direct calculation gives that

Note that \(\gamma _{st}^{(n)}= \varvec{\Gamma }^{(n)}(s,t)\), where \(\varvec{\Gamma }^{(n)}\) is defined in (17). Define matrices \(\varvec{\Lambda },\textbf{C} \in \mathbb {R}^{R \times R}\) by

Combining Lemma 4.2 and (18), we can write the gradient of \(\mathscr {L}\) in matrix form, as the following corollary shows.

Corollary 4.3

The gradient of the objective function \(\mathscr {L}\) in (13) is given by

for \(n=1,\ldots ,N\), where related matrices are defined in (16), (17) and (19).

4.1 Algorithm: OD-ALM

Suppose we have obtained the solution \(\textbf{v}_{[k]}\) for the kth iteration. We introduce how to solve \(\textbf{v}_{[k+1]}\) for the \((k+1)\)st iteration.

We use \(\textbf{v}_{[k]}\) as the initialization of the \((k+1)\)st iteration. By (15), we scale the initialization such that \(\Vert \textbf{v}^{(m)}_{r,[k]}\Vert = \left( \prod _{n=1}^{N}\Vert \textbf{v}^{(n)}_{r,[k]}\Vert \right) ^{1/N},m=1,\ldots ,N\). This scaling can avoid the situation that some \(\Vert \textbf{v}^{(n_1)}_{r,[k]}\Vert \) is too big and some \(\Vert \textbf{v}^{(n_2)}_{r,[k]}\Vert \) is too small, where \(1\le n_1,n_2\le N\). The idea of (12) can be realized by setting different penalty parameters for (13):

where \(\mu _{[k]}>0\). In the matrix form (19), the non-diagonal entries of \(\textbf{C}_{[k]}\) are the same as those of \(\mu _{[k]} \textbf{h}_{[k]}^\top \textbf{h}_{[k]}\), where

Then \(\textbf{v}_{[k+1]}\) can be obtained by solving \(\min _{\textbf{v} \in \mathbb {R}^{P}} \mathscr {L}(\textbf{v},\varvec{\lambda }_{[k]};\textbf{c}_{[k]})\). At last, the Lagrange multiplier \(\lambda _{st,[k+1]}\) is updated by \(\lambda _{st,[k+1]}= \lambda _{st,[k]}+ c_{st,[k]}\prod _{n=1}^{N}\left\langle \textbf{v}_{s,[k+1]}^{(n)},\textbf{v}_{t,[k+1]}^{(n)}\right\rangle \), whose matrix form is

Now we introduce how to develop a systematic scheme for the augmented Lagrangian method. The standard procedure of the augmented Lagrangian method tells us that we need to increase the penalty parameters gradually to a sufficiently large value. This procedure is rather important for (14), because \(\mathscr {L}\) is nonconvex. The later subproblems corresponding to larger penalty parameters can be solved relatively efficiently by warm starting point from the previous solutions. By (20), we need to set \(\mu _{[k+1]}\) sufficiently large such that \(c_{st,[k+1]} > c_{st,[k]}\) for all \(s\ne t\). We set

where \(\beta >1\). Usually, we can simply set a sufficiently large \(\beta \), for instance \(\beta = 10\), and the condition \(c_{st,[k+1]} > c_{st,[k]}\) will be satisfied naturally. The whole procedure of the augmented Lagrangian method is presented in Algorithm 1.

The convergence analysis of augmented Lagrangian methods can be found in many textbooks. See [3, 27, 33] for reference. Here we extend [33, Theorem 10.4.2], which is useful for designing the termination criteria.

Proposition 4.4

For Algorithm 1, we have

Proof

We have

For any feasible point \(\bar{\textbf{v}}\) of (10), by noting that \(\mathscr {L}(\textbf{v}_{[k+1]},\varvec{\lambda }_{[k]};\textbf{c}_{[k]})\le \mathscr {L}(\bar{\textbf{v}},\varvec{\lambda }_{[k]};\textbf{c}_{[k]})=\mathscr {F}(\bar{\textbf{v}})\), we have

This suggests that there exists \(\delta >0\) such that \(\sum _{s\ne t} \frac{\lambda ^2_{st,[k]}}{c_{st,[k]}}\le \delta k\). Define

It follows from (20) that \(\sum _{s\ne t} \frac{d^2_{st,[k]}}{\mu _{[k]}} = \sum _{s\ne t} \frac{\lambda ^2_{st,[k]}}{c_{st,[k]}}\le \delta k\). By the algorithm, \(\mu _{[k]}\ge \beta ^k\), where \(\beta >1\). Hence, \(\frac{d_{st,[k]}}{\mu _{[k]}}=o(1)\).

For any feasible point \(\bar{\textbf{v}}\) of (10), we have

By noting that \(\lim _{k\rightarrow \infty }\mu _{[k]}=\infty \) and \(\mathscr {F}(\bar{\textbf{v}})\) is bounded, we obtain the result. \(\square \)

Corollary 4.5

For Algorithm 1, suppose \(\prod _{n=1}^N\frac{\Vert \textbf{v}_{r,[k]}^{(n)}\Vert }{\Vert \textbf{v}_{r,[k+1]}^{(n)}\Vert }\) is bounded for all r and k. Then we have

4.2 Orthogonalization of Rank-One Tensors

OD-ALM can only obtain an approximate solution of (10). We need to develop an orthogonalization process to make the orthogonality constraint exact for the final result.

Suppose we have obtained a decomposition by OD-ALM:

First, we normalize each \(\textbf{v}_r^{(n)}\) to \(\textbf{u}_r^{(n)}\), i.e., \(\textbf{u}_r^{(n)}=\textbf{v}_r^{(n)}/\Vert \textbf{v}^{(n)}_{r}\Vert \). Assume that we have orthogonalizated the first \(\ell -1\) rank-one components:

We start to handle the \(\ell \)th rank-one component. Define

Compute the absolute value of the inner product \(\left| \left\langle \textbf{u}_r^{(n)}, \textbf{u}_{\ell }^{(n)}\right\rangle \right| \) for \(n=1,\ldots ,N\) and \(r=1\ldots , \ell -1\), and stack the results as a matrix:

where \(|\cdot |\) denotes the entrywise absolute value. Let \(\textbf{P}(m_r,r)= \min \{\textbf{P}(1,r),\ldots ,\textbf{P}(N,r)\}\). Then for each \(r\in \{1,\ldots ,\ell -1\}\), \(\left\{ \textbf{u}_{r}^{(m_r)},\textbf{u}_{\ell }^{(m_r)}\right\} \) is a pair of vectors that is the closest to orthogonality among all pairs \(\left\{ \textbf{u}_{r}^{(n)},\textbf{u}_{\ell }^{(n)}\right\} , n=1,\ldots ,N\). Suppose \(\{r: m_r = n\}=\{r_1\ldots , r_{\rho (n)}\}\). For each \(n\in \{1,\ldots ,N\}\), We will modify \(\textbf{u}_{\ell }^{(n)}\) to \(\textbf{u}_{\ell }^{(n)} - \sum _{j=1}^{\rho (n)}x_j \textbf{u}_{r_j}^{(n)}\) such that

whose matrix form is

We present the whole process of the orthogonalization in Algorithm 2. This process can also be used for generating general orthonormal lists of rank-one tensors.

The final orthogonal rank-R approximation is the orthogonal projection of \(\mathcal {A}\) onto the space spanned by the orthonormal list \(\{\otimes _{n=1}^{N} \textbf{u}_1^{(n)},\ldots , \otimes _{n=1}^{N} \textbf{u}_R^{(n)}\}\):

where the coefficient \(\sigma _r = \left\langle \mathcal {A}, \otimes _{n=1}^{N} \textbf{u}_r^{(n)}\right\rangle \).

5 Numerical Experiments

We will show the performance of OD-ALM combined with the orthogonalization process in this section. All experiments are performed on MATLAB R2016a with Tensor Toolbox, version 3.0 [2] on a laptop (Intel Core i5-6300HQ CPU @ 2.30GHz, 8.00G RAM). The test data include both synthetic and real-world tensors. The synthetic tensors are generated from known ground truth and thus make the evaluation reliable. Choosing real-world tensors is to assess the approximation ability of orthogonal decompositions in practice.

The experiments are designed based on those of [5, 13]. The test tensors are shown in Table 1, where \(\mathcal {A}_1,\ldots ,\mathcal {A}_4\) are synthetic tensors and \(\mathcal {A}_5,\ldots ,\mathcal {A}_8\) are real-world tensors. The tensor \(\mathcal {A}_1\) is a randomly generated tensor, \(\mathcal {A}_2\) is a randomly generated rank-5 tensor, and \(\mathcal {A}_3\) is a Hilbert tensor also used in [13]. For \(\mathcal {A}_4\), we generate an orthonormal list of rank-one tensors by Algorithm 2 and then use this list to generate an orthogonal rank-5 tensor \(\mathcal {B}_1\). The final tensor \(\mathcal {A}_4\) is

where \(\mathcal {B}_2\) is a noise tensor with entries drawn from a standard normal distribution, and \(\rho = 0.1 \Vert \mathcal {B}_1\Vert /\Vert \mathcal {B}_2\Vert \). The tensors \(\mathcal {A}_5,\mathcal {A}_6\) are hyperspectral imagesFootnote 3, and \(\mathcal {A}_7,\mathcal {A}_8\) are video tensorsFootnote 4. We will factorize each tensor into R terms by different methods. Different R’s would result in different approximation errors and running time. We concern the approximation abilities of different methods. For simplicity, we fix R for each test tensor, prescribed in Table 1. Using other R’s would show the same comparison results.

Suppose \(\mathcal {B}\) is an approximation of \(\mathcal {A}\) obtained by any method. We use the relative error (RErr) to evaluate the result:

5.1 Implementation Details of OD-ALM

The initialization is crucial for OD-ALM. We adopt the result of the alternating least squares algorithm (CP-ALS) [4, 14, 19] for (6) as the initialization, because this result is just the numerical solution of (14) with Lagrange multipliers and penalty parameters equal to zero, which is relatively near to the solution of the first subproblem of OD-ALM. The CP-ALS is with the truncated HOSVD initialization, and terminates if the relative change in the function value is less than \(10^{-6}\) or the number of iterations exceeds 500. As for (22), we set \(\beta =10\) for all tests.

Commonly used gradient-based optimization methods include the steepest descent method, the conjugate gradient method, the Broyden-Fletcher-Goldfarb-Shanno (BFGS) method and the limited-memory BFGS (L-BFGS) method. We have tried all these methods to solve the subproblems (14) and find that the L-BFGS method outperforms the other three ones. Hence, we use the L-BFGS method with \(m=20\) levels of memory in all tests. We stop the procedure of the L-BFGS method if the relative change between successive iterates is less than \(10^{-8}\), or the \(\ell _2\) norm of the gradient divided by the number of entries is less than \(\epsilon _{\text {inner}}\), which will be specified later. The maximum number of inner iterations is set to be 500. We adopt the Moré-Thuente line search [25] from MINPACKFootnote 5. For all experiments, Moré-Thuente line search parameters used are as follows: \(10^{-4}\) for the function value tolerance, \(10^{-2}\) for the gradient norm tolerance, a starting search step length of 1 and a maximum of 20 iterations.

For the solution \(\textbf{v}_{[k]}\) of the kth subproblem, define

By Corollary 4.5, we can terminate the outer iteration when \(\theta _{[k]}< \epsilon _{\text {outer}}\), which will be specified later. The maximum number of outer iterations is set to be 25.

5.2 Influence of Stopping Tolerances

We test different settings of tolerances: \(\epsilon _{\text {inner}}=10^{-3},10^{-4},10^{-5}\) and \(\epsilon _{\text {outer}}=10^{-3},10^{-4},10^{-5}\). The results are shown in Table 2, which are averaged over 10 trials for each case.

From Table 2, we can find that OD-ALM has a good performance on convergence: the outer iteration numbers are at most 12 on average for all cases. The running time would increase if we choose a smaller tolerance, but there is no improvement on the relative error for almost all cases. Therefore, we do not recommend using a too small tolerance in practical applications. We will use \(\epsilon _{\text {inner}}=10^{-4},\epsilon _{\text {outer}}=10^{-4}\) for synthetic tensors and \(\epsilon _{\text {inner}}=10^{-3},\epsilon _{\text {outer}}=10^{-3}\) for real-world tensors in all remaining tests.

5.3 Convergence Behaviour

We show the value of \(\theta _{[k]}\) defined in (23), the relative change between successive outer iterates \(\Vert \textbf{v}_{[k]} - \textbf{v}_{[k-1]}\Vert /\Vert \textbf{v}_{[k-1]}\Vert \) and the number of inner iterations corresponding to each outer iteration in Figure 1 and Figure 2.

The convergence behaviour of OD-ALM on \(\mathcal {A}_1,\ldots ,\mathcal {A}_4\). The first column is about \(\theta _{[k]}\), the second column is about \(\Vert \textbf{v}_{[k]} - \textbf{v}_{[k-1]}\Vert /\Vert \textbf{v}_{[k-1]}\Vert \), and the last column is about the number of inner iterations. All values are shown as functions of the number of outer iterations

The convergence behaviour of OD-ALM on \(\mathcal {A}_5,\ldots ,\mathcal {A}_8\). The three columns have the same meaning as in Figure 1

The value of \(\theta _{[k]}\) is decreasing as k increases, but the situations differ greatly for different tensors. For example, \(\theta _{[k]}\) of \(\mathcal {A}_7\) is almost unchanged for the first five outer iterations, while \(\theta _{[k]}\) of \(\mathcal {A}_6\) decreases from more than 0.6 to less than 0.1 in the first five outer iterations. Usually, a big number of inner iterations brings a relatively big change of \(\theta _{[k]}\). For example, for \(\mathcal {A}_3\), the number of inner iterations corresponding to \(k=2\) is more than 250, resulting in the difference between \(\theta _{[1]}\) and \(\theta _{[2]}\) being more than 0.4.

The relative change between successive outer iterates can be relatively big for some tensors even when k is big, e.g., \(\mathcal {A}_6\) and \(\mathcal {A}_7\). For all cases, the relative change is relatively small between the last two outer iterates. The number of inner iterations reflects the relative change: A big number of inner iterations often results in a big relative change between successive outer iterates.

5.4 Comparison with Other Methods

We compare our method with CP-ALS, the low rank orthogonal approximation of tensors (LROAT) [5] and the high-order power method for orthogonal low rank decomposition (OLRD-HOP) [34]. The method CP-ALS fits a CP decomposition (6). The method LROAT fits an \((1,\cdots ,N)\)-orthogonal decomposition, and OLRD-HOP fits an (N)-orthogonal decomposition. CP-ALS, LROAT and OLRD-HOP are all with the truncated HOSVD initialization. CP-ALS terminates if the relative change in the function value is less than \(10^{-8}\). LROAT and OLRD-HOP terminate if the relative change between successive iterates is less than \(10^{-8}\). The maximum number of iterations is set to be 500 for all these three methods. The results of the running time and the relative error are shown in Table 3, which are averaged over 10 trials for each case.

We can see that our method is much slower than the other methods. As discussed in [1], the time cost of one outer iteration of OD-ALM is of the same order of magnitude with CP-ALS. OD-ALM needs several outer iterations, resulting in a much longer time cost than CP-ALS. The running time of LROAT and OLRD-HOP is close to that of CP-ALS.

As for the relative error, CP-ALS is the best, OD-ALM is the second best, and OLRD-HOP outperforms LROAT. This is not surprising because of the relationships among the decompositions fitted by different methods. For \(\mathcal {A}_4\) whose ground truth is an orthogonal rank-5 tensor, the OD-ALM RErr is less than the noise level 0.1, which demonstrates the effectiveness of our method. In addition, we can find that the difference between the CP-ALS RErr and the OD-ALM RErr is very small for real-world tensors. For \(\mathcal {A}_8\), the results of these two methods are even the same. This suggests the potential of orthogonal decompositions in fitting real-world tensors. The small gap between the CP-ALS RErr and the OD-ALM RErr also indicates the effectiveness of our method in some sense.

Suppose \(\textbf{U}_j^{(n)}\) is the nth normalized factor matrix corresponding to the final result for \(\mathcal {A}_{j}\) obtained by our method. We record the results of \(\textbf{U}_j^{(n)^\top }\textbf{U}_j^{(n)}\) for \(j=3,5\) in one running:

We also compute \(\textbf{U}_j^{(n)^\top }\textbf{U}_j^{(n)}\) for other tensors and find that the appearance of zeros in \(\textbf{U}_j^{(n)^\top }\textbf{U}_j^{(n)}\) is not regular. Therefore, strongly orthogonal decompositions cannot replace orthogonal decompositions in practical

6 Conclusion

We present several properties of orthogonal rank. Orthogonal rank is different from tensor rank in many aspects. For example, a subtensor may have a larger orthogonal rank than the whole tensor, and orthogonal rank is lower semicontinuous.

To tackle the complicated orthogonality constraints, we employ the augmented Lagrangian method to convert the original problem into an unconstrained problem. The gradient of the objective function has a good structure, inspiring us to use gradient-based optimization methods to solve each subproblem. A novel orthogonalization process is developed to make the final result satisfy the orthogonality condition exactly. Numerical experiments show that the proposed method has a great advantage over the existing methods for strongly orthogonal decompositions in terms of the approximation error.

The main drawback of our method is the time cost. This is because the time cost of one outer iteration of OD-ALM is of the same order of magnitude with that of CP-ALS, which is not very short, and we need several outer iterations to obtain the final result. Although the ill-conditioning is not so severe for the augmented Lagrangian method compared to the penalty method, preconditioning is a possible way to speed up. For preconditioning of optimization methods for CP decompositions, one can refer to [10, 32]. Preconditioning for OD-ALM can be studied as future work. A better strategy is to design an algorithm with a framework different from the augmented Lagrangian method. This may need further exploration of orthogonal decompositions.

Data Availability

The datasets analysed during the current study are public available and we have provided the URLs when using them.

Notes

Strongly orthogonal decomposition has a different definition in Ref. [17].

Such tensors exist. See [9, Lemma 4.7] for an example.

The hyperspectral image data have been used in [36] and available at https://rslab.ut.ac.ir/data.

The video data are from the video trace library [29] and available at http://trace.eas.asu.edu/yuv/.

A Matlab implementation, adapted by Dianne P. O’Leary, is available at http://www.cs.umd.edu/users/oleary/software/.

References

Acar, E., Dunlavy, D.M., Kolda, T.G.: A scalable optimization approach for fitting canonical tensor decompositions. J. Chemom. 25(2), 67–86 (2011)

Bader, B.W., Kolda, T.G. et al.: MATLAB Tensor Toolbox Version 3.0-dev. Available online, Oct. (2017)

Bertsekas, D.P.: Constrained Optimization and Lagrange Multiplier Methods. Academic press, Cambridge (1982)

Carroll, J.D., Chang, J.-J.: Analysis of individual differences in multidimensional scaling via an N-way generalization of “Eckart-Young’’ decomposition. Psychometrika 35(3), 283–319 (1970)

Chen, J., Saad, Y.: On the tensor SVD and the optimal low rank orthogonal approximation of tensors. SIAM J. Matrix Anal. Appl. 30(4), 1709–1734 (2008)

Comon, P.: Independent component analysis, A new concept? Signal Process. 36(3), 287–314 (1994)

Conn, A.R., Gould, N., Sartenaer, A., Toint, P.L.: Convergence properties of an augmented Lagrangian algorithm for optimization with a combination of general equality and linear constraints. SIAM J. Optim. 6(3), 674–703 (1996)

De Lathauwer, L., De Moor, B., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21(4), 1253–1278 (2000)

De Silva, V., Lim, L.-H.: Tensor rank and the ill-posedness of the best low-rank approximation problem. SIAM J. Matrix Anal. Appl. 30(3), 1084–1127 (2008)

De Sterck, H., Howse, A.J.: Nonlinearly preconditioned L-BFGS as an acceleration mechanism for alternating least squares with application to tensor decomposition. Num. Linear Algebra Appl. 25(6), e2202 (2018)

Eckart, C., Young, G.: The approximation of one matrix by another of lower rank. Psychometrika 1(3), 211–218 (1936)

Espig, M., Hackbusch, W.: A regularized Newton method for the efficient approximation of tensors represented in the canonical tensor format. Numer. Math. 122(3), 489–525 (2012)

Guan, Y., Chu, D.: Numerical computation for orthogonal low-rank approximation of tensors. SIAM J. Matrix Anal. Appl. 40(3), 1047–1065 (2019)

Harshman, R.A. et al.: Foundations of the PARAFAC procedure: models and conditions for an “explanatory” multimodal factor analysis. (1970)

Håstad, J.: Tensor rank is NP-complete. J. Algorithms 11(4), 644–654 (1990)

Hillar, C.J., Lim, L.-H.: Most tensor problems are NP-hard. J. ACM (JACM) 60(6), 45 (2013)

Kolda, T.G.: Orthogonal tensor decompositions. SIAM J. Matrix Anal. Appl. 23(1), 243–255 (2001)

Kolda, T.G.: A counterexample to the possibility of an extension of the Eckart-Young low-rank approximation theorem for the orthogonal rank tensor decomposition. SIAM J. Matrix Anal. Appl. 24(3), 762–767 (2003)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009)

Krijnen, W.P., Dijkstra, T.K., Stegeman, A.: On the non-existence of optimal solutions and the occurrence of “degeneracy’’ in the CANDECOMP/PARAFAC model. Psychometrika 73(3), 431–439 (2008)

Kruskal, J.B.: Three-way arrays: rank and uniqueness of trilinear decompositions, with application to arithmetic complexity and statistics. Linear Algebra Appl. 18(2), 95–138 (1977)

Li, Z., Nakatsukasa, Y., Soma, T., Uschmajew, A.: On orthogonal tensors and best rank-one approximation ratio. SIAM J. Matrix Anal. Appl. 39(1), 400–425 (2018)

Lim, L.-H., Comon, P.: Blind multilinear identification. IEEE Trans. Inf. Theory 60(2), 1260–1280 (2013)

Martin, C.D.M., Van Loan, C.F.: A Jacobi-type method for computing orthogonal tensor decompositions. SIAM J. Matrix Anal. Appl. 30(3), 1219–1232 (2008)

More, J.J., Thuente, D.J.: Line search algorithms with guaranteed sufficient decrease. ACM Trans. Math. Softw. 20(3), 286–307 (1994)

Nazih, M., Minaoui, K., Comon, P.: Using the proximal gradient and the accelerated proximal gradient as a canonical polyadic tensor decomposition algorithms in difficult situations. Signal Process. 171, 107472 (2020)

Nocedal, J., Wright, S.: Numerical Optimization. Springer Science & Business Media, New York (2006)

Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Springer Science & Business Media, New York (2009)

Seeling, P., Reisslein, M.: Video transport evaluation with H. 264 video traces. IEEE Commun. Surv. Tutor. 14(4), 1142–1165 (2011)

Sidiropoulos, N.D., Bro, R.: On the uniqueness of multilinear decomposition of N-way arrays. J. Chemometr. J. Chemometr. Soc. 14(3), 229–239 (2000)

Sørensen, M., De Lathauwer, L., Comon, P., Icart, S., Deneire, L.: Canonical polyadic decomposition with a columnwise orthonormal factor matrix. SIAM J. Matrix Anal. Appl. 33(4), 1190–1213 (2012)

Sterck, H.D.: A nonlinear GMRES optimization algorithm for canonical tensor decomposition. SIAM J. Sci. Comput. 34(3), A1351–A1379 (2012)

Sun, W., Yuan, Y.-X.: Optimization Theory and Methods: Nonlinear Programming. Springer Optimization and Its Applications. Springer Science & Business Media, New York (2010)

Wang, L., Chu, M.T., Yu, B.: Orthogonal low rank tensor approximation: alternating least squares method and its global convergence. SIAM J. Matrix Anal. Appl. 36(1), 1–19 (2015)

Yang, Y.: The epsilon-alternating least squares for orthogonal low-rank tensor approximation and its global convergence. SIAM J. Matrix Anal. Appl. 41(4), 1797–1825 (2020)

Zhu, F., Wang, Y., Fan, B., Xiang, S., Meng, G., Pan, C.: Spectral unmixing via data-guided sparsity. IEEE Trans. Image Process. 23(12), 5412–5427 (2014)

Acknowledgements

The author is extremely grateful to the two anonymous referees for their valuable feedback, which improved this paper significantly. This work was partially supported by the National Natural Science Foundation of China (12201319).

Funding

This work was partially supported by the National Natural Science Foundation of China (12201319).

Author information

Authors and Affiliations

Contributions

CZ is the single author of the manuscript and responsible for this work.

Corresponding author

Ethics declarations

Conflict of interest

The author declares he/she has no financial interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zeng, C. Rank Properties and Computational Methods for Orthogonal Tensor Decompositions. J Sci Comput 94, 6 (2023). https://doi.org/10.1007/s10915-022-02054-9

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10915-022-02054-9