Abstract

Low-rank approximation of tensors has been widely used in high-dimensional data analysis. It usually involves singular value decomposition (SVD) of large-scale matrices with high computational complexity. Sketching is an effective data compression and dimensionality reduction technique applied to the low-rank approximation of large matrices. This paper presents two practical randomized algorithms for low-rank Tucker approximation of large tensors based on sketching and power scheme, with a rigorous error-bound analysis. Numerical experiments on synthetic and real-world tensor data demonstrate the competitive performance of the proposed algorithms.

1 Introduction

In practical applications, high-dimensional data, such as color images, hyperspectral images and videos, often exhibit a low-rank structure. Low-rank approximation of tensors has become a general tool for compressing and approximating high-dimensional data and has been widely used in scientific computing, machine learning, signal/image processing, data mining, and many other fields [1]. The classical low-rank tensor factorization models include, e.g., Canonical Polyadic decomposition (CP) [2, 3], Tucker decomposition [4, 5, 6], Hierarchical Tucker (HT) [7, 8], and Tensor Train decomposition (TT) [9]. This paper focuses on low-rank Tucker decomposition, also known as the low multilinear rank approximation of tensors. When the target rank of the Tucker decomposition is much smaller than the original dimensions, the decomposition achieves good compression. For a given Nth-order tensor \({\varvec{\mathcal {X}}}\in \mathbb {R}^{I_1\times I_2\times \ldots \times I_N}\), the low-rank Tucker decomposition can be formulated as the following optimization problem, i.e.,

\[\min _{{\varvec{\mathcal {Y}}}}\ \Vert {\varvec{\mathcal {X}}}-{\varvec{\mathcal {Y}}}\Vert _F^2, \tag{1}\]

where \({\varvec{\mathcal {Y}}}\in \mathbb {R}^{I_1\times I_2\times \ldots \times I_N}\), with \(\texttt{rank}(\textbf{Y}_{(n)})\le r_n\) for \(n=1,2,\ldots ,N\), \(\textbf{Y}_{(n)}\) is the mode-n unfolding matrix of \({\varvec{\mathcal {Y}}}\), and \(r_n\) is the rank of the mode-n unfolding matrix of \({\varvec{\mathcal {X}}}\).

For the Tucker approximation of higher-order tensors, the most frequently used non-iterative algorithms are improved variants of the higher-order singular value decomposition (HOSVD) [5], namely the truncated higher-order SVD (THOSVD) [10] and the sequentially truncated higher-order SVD (STHOSVD) [11]. Although the results of THOSVD and STHOSVD are usually sub-optimal, they can be used as reasonable initial solutions for iterative methods such as higher-order orthogonal iteration (HOOI) [10]. However, both algorithms rely directly on the SVD when computing the singular vectors of intermediate matrices, which requires large memory and high computational cost when the tensors are large.

Randomized algorithms, by contrast, can reduce the communication among different levels of memory and are parallelizable. In recent years, there has been growing interest in randomized algorithms for computing approximate Tucker decompositions of large-scale data tensors [12, 13, 14, 15, 16, 17, 19, 20]. For example, Zhou et al. [12] proposed a randomized version of the HOOI algorithm for Tucker decomposition. Che and Wei [13] proposed an adaptive randomized algorithm to determine the multilinear rank of tensors. Minster et al. [14] designed randomized versions of the THOSVD and STHOSVD algorithms, i.e., R-THOSVD and R-STHOSVD. Sun et al. [17] presented a single-pass randomized algorithm to compute the low-rank Tucker approximation of tensors based on a practical matrix sketching algorithm for streaming data; see also [18] for more details. For a detailed review of recent randomized algorithms for Tucker decomposition, involving, e.g., random projection, sampling, count-sketch, random least-squares, single-pass, and multi-pass algorithms, we refer to [15, 16, 19, 20].

This paper presents two efficient randomized algorithms for finding the low-rank Tucker approximation of tensors, i.e., Sketch-STHOSVD and sub-Sketch-STHOSVD, summarized in Algorithms 6 and 8, respectively. The main contributions of this paper are threefold. Firstly, we propose a new one-pass sketching algorithm (i.e., Algorithm 6) for low-rank Tucker approximation, which can significantly improve the computational efficiency of STHOSVD. Secondly, we present a new matrix sketching algorithm (i.e., Algorithm 7) by combining the two-sided sketching algorithm proposed by Tropp et al. [18] with subspace power iteration. Algorithm 7 can accurately and efficiently compute the low-rank approximation of large-scale matrices. Thirdly, by incorporating subspace power iteration, the proposed Algorithm 8 can deliver a more accurate Tucker approximation than simpler randomized algorithms. More importantly, sub-Sketch-STHOSVD can converge quickly for any data tensor, independently of singular value gaps.

The rest of this paper is organized as follows. Section 2 briefly introduces some basic notations, definitions, and tensor-matrix operations used in this paper and recalls some classical algorithms, including THOSVD, STHOSVD, and R-STHOSVD, for low-rank Tucker approximation. Our proposed two-sided sketching algorithm for STHOSVD is given in Sect. 3. In Sect. 4, we present an improved algorithm with subspace power iteration. The effectiveness of the proposed algorithms is validated thoroughly in Sect. 5 by numerical experiments on synthetic and real-world data tensors. We conclude in Sect. 6.

2 Preliminary

2.1 Notations and Basic Operations

Some common symbols used in this paper are shown in the following Table 1.

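We denote an Nth-order tensor by \({\varvec{\mathcal {X}}}\in \mathbb {R}^{I_1\times I_2\times \ldots \times I_N}\), with entries \(\textbf{x}_{i_1,i_2,\ldots ,i_N}\), \(1\le i_n\le I_n\), \(n=1,2,\ldots ,N\). The Frobenius norm of \({\varvec{\mathcal {X}}}\) is defined as

\[\Vert {\varvec{\mathcal {X}}}\Vert _F=\sqrt{\sum _{i_1=1}^{I_1}\sum _{i_2=1}^{I_2}\cdots \sum _{i_N=1}^{I_N}\textbf{x}_{i_1,i_2,\ldots ,i_N}^{2}}.\]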

The mode-n tensor-matrix multiplication is a frequently encountered operation in tensor computation. The mode-n product of a tensor \({\varvec{\mathcal {X}}}\in \mathbb {R}^{I_1\times I_2\times \ldots \times I_N}\) by a matrix \(\textbf{A}\in \mathbb {R}^{K\times I_n}\) (with entries \(\textbf{a}_{k,i_n}\)) is denoted as \({\varvec{\mathcal {Y}}}={\varvec{\mathcal {X}}}\times _{n}\textbf{A}\in \mathbb {R}^{I_1\times ...\times I_{n-1}\times K \times I_{n+1}\times ...\times I_N}\), with entries:

\[\textbf{y}_{i_1,\ldots ,i_{n-1},k,i_{n+1},\ldots ,i_N}=\sum _{i_n=1}^{I_n}\textbf{x}_{i_1,i_2,\ldots ,i_N}\,\textbf{a}_{k,i_n}.\]

The mode-n matricization of a higher-order tensor is the reordering of the tensor elements into a matrix. The columns of the mode-n unfolding matrix \(\textbf{X}_{(n)}\in \mathbb {R}^{I_n\times (\prod _{m\ne n}I_m)}\) are the mode-n fibers of tensor \({\varvec{\mathcal {X}}}\). More specifically, an element \((i_1,i_2,\ldots ,i_N)\) of \({\varvec{\mathcal {X}}}\) is mapped to the element \((i_n,j)\) of \(\textbf{X}_{(n)}\), where

\[j=1+\sum _{k=1,\,k\ne n}^{N}(i_k-1)J_k,\qquad J_k=\prod _{m=1,\,m\ne n}^{k-1}I_m.\]

Let \(r_n\) be the rank of the mode-n unfolding matrix \(\textbf{X}_{(n)}\). The integer array \((r_1,r_2,\ldots ,r_N)\) is the Tucker rank of the Nth-order tensor \({\varvec{\mathcal {X}}}\), also known as its multilinear rank. The Tucker decomposition of \({\varvec{\mathcal {X}}}\) with rank \((r_1,r_2,\ldots ,r_N)\) is expressed as

\[{\varvec{\mathcal {X}}}={\varvec{\mathcal {G}}}\times _1\textbf{U}^{(1)}\times _2\textbf{U}^{(2)}\cdots \times _N\textbf{U}^{(N)}, \tag{5}\]

where \({\varvec{\mathcal {G}}}\in \mathbb {R}^{r_1\times r_2\times \ldots \times r_N}\) is the core tensor, and \(\{\textbf{U}^{(n)}\}_{n=1}^{N}\) with \(\textbf{U}^{(n)}\in \mathbb {R}^{I_n\times r_n}\) are the mode-n factor matrices. We denote an optimal rank-\((r_1,r_2,\ldots ,r_N)\) approximation of a tensor \({\varvec{\mathcal {X}}}\) as \(\hat{{\varvec{\mathcal {X}}}}_\textrm{opt}\), which is the optimal Tucker approximation obtained by solving the minimization problem in (1).
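The operations introduced in this subsection (mode-n unfolding, mode-n product, and evaluation of a Tucker form) can be sketched in NumPy as follows. This is only an illustration: the helper names `unfold`, `fold`, `mode_n_product` and `tucker_to_tensor` are hypothetical, modes are 0-based in the code, and the unfolding is assumed to order its columns so that the earliest remaining index varies fastest.

```python
import numpy as np

def unfold(X, n):
    """Mode-n unfolding: rows indexed by i_n, columns by all remaining indices."""
    # Fortran order makes the earliest remaining index vary fastest (assumed convention).
    return np.reshape(np.moveaxis(X, n, 0), (X.shape[n], -1), order="F")

def fold(M, n, shape):
    """Inverse of unfold: rebuild a tensor of the given shape from its mode-n unfolding."""
    rest = [s for i, s in enumerate(shape) if i != n]
    return np.moveaxis(np.reshape(M, (shape[n], *rest), order="F"), 0, n)

def mode_n_product(X, A, n):
    """Mode-n product of X with A: the tensor whose mode-n unfolding is A @ X_(n)."""
    shape = list(X.shape)
    shape[n] = A.shape[0]
    return fold(A @ unfold(X, n), n, shape)

def tucker_to_tensor(G, factors):
    """Evaluate the Tucker form: core G multiplied by U^(n) along every mode n."""
    X = G
    for n, U in enumerate(factors):
        X = mode_n_product(X, U, n)
    return X

# Small example: a 4 x 5 x 6 tensor built from a 2 x 3 x 2 core.
rng = np.random.default_rng(0)
G = rng.standard_normal((2, 3, 2))
factors = [rng.standard_normal((I, r)) for I, r in zip((4, 5, 6), G.shape)]
X = tucker_to_tensor(G, factors)

# The multilinear rank is the tuple of ranks of the unfoldings.
multilinear_rank = [np.linalg.matrix_rank(unfold(X, n)) for n in range(X.ndim)]
```

For generic random factors, the ranks of the unfoldings of `X` coincide with the core dimensions, which is the sense in which the core size determines the multilinear rank.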

Below we present the definitions of some concepts used in this paper.

Definition 1

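(Kronecker products) The Kronecker product of matrices \(\textbf{A}\in \mathbb {R}^{m\times n}\) (with entries \(a_{ij}\)) and \(\textbf{B}\in \mathbb {R}^{k\times l}\) is defined as

\[\textbf{A}\otimes \textbf{B}=\begin{bmatrix}a_{11}\textbf{B}&a_{12}\textbf{B}&\cdots &a_{1n}\textbf{B}\\ a_{21}\textbf{B}&a_{22}\textbf{B}&\cdots &a_{2n}\textbf{B}\\ \vdots &\vdots &\ddots &\vdots \\ a_{m1}\textbf{B}&a_{m2}\textbf{B}&\cdots &a_{mn}\textbf{B}\end{bmatrix}\in \mathbb {R}^{mk\times nl}.\]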

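The Kronecker product helps express the Tucker decomposition in matrix form. The Tucker decomposition in (5) implies:

\[\textbf{X}_{(n)}=\textbf{U}^{(n)}\textbf{G}_{(n)}\left( \textbf{U}^{(N)}\otimes \cdots \otimes \textbf{U}^{(n+1)}\otimes \textbf{U}^{(n-1)}\otimes \cdots \otimes \textbf{U}^{(1)}\right) ^{\top }.\]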

Definition 2

(Standard normal matrix) The elements of a standard normal matrix follow the real standard normal distribution (i.e., Gaussian with mean zero and variance one) and form an independent family of standard normal random variables.

Definition 3

(Standard Gaussian tensor) The elements of a standard Gaussian tensor follow the standard Gaussian distribution.

Definition 4

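(Tail energy) The jth tail energy of a matrix \(\textbf{X}\) is defined as

\[\tau _j^2(\textbf{X}):=\min _{\texttt{rank}(\textbf{Y})<j}\Vert \textbf{X}-\textbf{Y}\Vert _F^2=\sum _{i\ge j}\sigma _i^2(\textbf{X}),\]

where \(\sigma _i(\textbf{X})\) denotes the ith largest singular value of \(\textbf{X}\).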

2.2 Truncated Higher-Order SVD

Since the actual Tucker rank of a large-scale higher-order tensor is hard to compute, the truncated Tucker decomposition with a pre-determined truncation \((r_1,r_2,\ldots ,r_N)\) is widely used in practice. THOSVD is a popular approach to computing the truncated Tucker approximation, also known as the best low multilinear rank-\((r_1,r_2,\ldots ,r_N)\) approximation, which reads:

Algorithm 1 summarizes the THOSVD approach. Each mode is processed individually in Algorithm 1. The low-rank factor matrices of mode-n unfolding matrix \(\textbf{X}_{(n)}\) are computed through the truncated SVD, i.e.,

where \(\textbf{U}^{(n)} \textbf{S}^{(n)} \textbf{V}^{(n)\top }\) is a rank-\(r_n\) approximation of \(\textbf{X}_{(n)}\), the orthogonal matrix \(\textbf{U}^{(n)}\in \mathbb {R}^{I_n\times r_n}\) is the mode-n factor matrix of \({\varvec{\mathcal {X}}}\) in Tucker decomposition, \( \textbf{S}^{(n)}\in \mathbb {R}^{r_n\times r_n}\) and \(\textbf{V}^{(n)}\in \mathbb {R}^{ I_1...I_{n-1}I_{n+1}...I_N\times r_n}\). Once all factor matrices have been computed, the core tensor \({\varvec{\mathcal {G}}}\) can be computed as

\[{\varvec{\mathcal {G}}}={\varvec{\mathcal {X}}}\times _1\textbf{U}^{(1)\top }\times _2\textbf{U}^{(2)\top }\cdots \times _N\textbf{U}^{(N)\top }.\]

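Then, the Tucker approximation \(\hat{{\varvec{\mathcal {X}}}}\) of \({\varvec{\mathcal {X}}}\) can be computed as

\[\hat{{\varvec{\mathcal {X}}}}={\varvec{\mathcal {G}}}\times _1\textbf{U}^{(1)}\times _2\textbf{U}^{(2)}\cdots \times _N\textbf{U}^{(N)}.\]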

With the notation \(\Delta _{n}^{2}({\varvec{\mathcal {X}}}) \triangleq \sum _{i=r_{n}+1}^{I_{n}}\sigma _{i}^{2}(\textbf{X}_{(n)})\) and \(\Delta _{n}^2{({\varvec{\mathcal {X}}})\le \Vert {\varvec{\mathcal {X}}}-\hat{{\varvec{\mathcal {X}}}}_\textrm{opt}\Vert _F^2}\) [14], the error-bound for Algorithm 1 can be stated in the following Theorem 1.

Theorem 1

([11], Theorem 5.1) Let \(\hat{{\varvec{\mathcal {X}}}}={\varvec{\mathcal {G}}}\times _1\textbf{U}^{(1)}\times _2\textbf{U}^{(2)}\cdots \times _N\textbf{U}^{(N)}\) be the low multilinear rank-\((r_1,r_2,\ldots ,r_N)\) approximation of a tensor \({\varvec{\mathcal {X}}}\in \mathbb {R}^{I_1\times I_2\times \ldots \times I_N}\) by THOSVD. Then

2.3 Sequentially Truncated Higher-Order SVD

Vannieuwenhoven et al. [11] proposed a more efficient and less computationally expensive approach for computing an approximate Tucker decomposition of tensors, called STHOSVD. Unlike the THOSVD algorithm, STHOSVD updates the core tensor as soon as each factor matrix has been computed.

Given the target rank \((r_1,r_2,\ldots ,r_N)\) and a processing order \(s_p: \{1,2,\ldots ,N\}\), the minimization problem (1) can be formulated as the following optimization problem:

where \(\hat{{\varvec{\mathcal {X}}}}^{(n)}={\varvec{\mathcal {X}}}\times _1(\textbf{U}^{(1)}{} \textbf{U}^{(1)\top })\times _2(\textbf{U}^{(2)}\textbf{U}^{(2)\top })\cdots \times _n(\textbf{U}^{(n)}{} \textbf{U}^{(n)\top }), n=1,2,\ldots ,N-1\), denote the intermediate approximation tensors.

In Algorithm 2, the solution \(\textbf{U}^{(n)}\) of problem (14) can be obtained via \( \texttt{truncatedSVD}(\textbf{G}_{(n)},r_n)\), where \(\textbf{G}_{(n)}\) is the mode-n unfolding matrix of the \((n-1)\)-th intermediate core tensor \({\varvec{\mathcal {G}}}={\varvec{\mathcal {X}}}\times _{i=1}^{n-1}\textbf{U}^{(i)\top }\in \mathbb {R}^{r_1\times r_2\times ...\times r_{n-1}\times I_{n}\times ...\times I_{N} }\), i.e.,

where the orthogonal matrix \(\textbf{U}^{(n)}\) is the mode-n factor matrix, and \(\textbf{S}^{(n)}{} \textbf{V}^{(n)\top }\in \mathbb {R}^{r_n\times r_1...r_{n-1}I_{n+1}...I_N}\) is used to update the n-th intermediate core tensor \({\varvec{\mathcal {G}}}\). The function \(\mathtt{fold_{n}}(\textbf{S}^{(n)}{} \textbf{V}^{(n)\top })\) tensorizes the matrix \(\textbf{S}^{(n)}{} \textbf{V}^{(n)\top }\) into the tensor \({\varvec{\mathcal {G}}}\in \mathbb {R}^{r_1\times r_2\times ...\times r_{n}\times I_{n+1}\times ...\times I_{N} }\). When the target rank \(r_n\) is much smaller than \(I_n\), the updated intermediate core tensor \({\varvec{\mathcal {G}}}\) is much smaller than the original tensor. This can significantly improve computational performance. The STHOSVD algorithm possesses the following error-bound.

Theorem 2

([11], Theorem 6.5) Let \(\hat{{\varvec{\mathcal {X}}}}={\varvec{\mathcal {G}}}\times _1\textbf{U}^{(1)}\times _2\textbf{U}^{(2)}\ldots \times _N\textbf{U}^{(N)}\) be the low multilinear rank-\((r_1,r_2,\ldots ,r_N)\) approximation of a tensor \({\varvec{\mathcal {X}}}\in \mathbb {R}^{I_1\times I_2\times \ldots \times I_N}\) by STHOSVD with processing order \(s_p:\{1,2,\ldots ,N\}\). Then

Although STHOSVD has the same error-bound as THOSVD, it is less computationally complex and requires less storage. As shown by the numerical experiments in Sect. 5, the running (CPU) time of the STHOSVD algorithm is significantly reduced, and its approximation error is slightly better than that of THOSVD in some cases.
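The difference between the two deterministic schemes can be illustrated with a short NumPy sketch. Algorithms 1 and 2 are not reproduced in this text, so the code below follows only the description above: THOSVD takes a truncated SVD of every unfolding of the original tensor, whereas STHOSVD replaces the working tensor by the folded \(\textbf{S}^{(n)}\textbf{V}^{(n)\top }\) after each mode. The function names are hypothetical, modes are 0-based, and the processing order is assumed to be \(1,2,\ldots ,N\).

```python
import numpy as np

def unfold(X, n):
    return np.reshape(np.moveaxis(X, n, 0), (X.shape[n], -1), order="F")

def fold(M, n, shape):
    rest = [s for i, s in enumerate(shape) if i != n]
    return np.moveaxis(np.reshape(M, (shape[n], *rest), order="F"), 0, n)

def mode_n_product(X, A, n):
    shape = list(X.shape)
    shape[n] = A.shape[0]
    return fold(A @ unfold(X, n), n, shape)

def reconstruct(G, factors):
    """Multiply the core by every factor matrix to get the approximation."""
    X = G
    for n, U in enumerate(factors):
        X = mode_n_product(X, U, n)
    return X

def thosvd(X, ranks):
    """THOSVD sketch: every factor comes from an unfolding of the ORIGINAL tensor."""
    factors = []
    for n, r in enumerate(ranks):
        U, _, _ = np.linalg.svd(unfold(X, n), full_matrices=False)
        factors.append(U[:, :r])
    G = X
    for n, U in enumerate(factors):
        G = mode_n_product(G, U.T, n)        # core = X multiplied by U^(n)^T in each mode
    return G, factors

def sthosvd(X, ranks):
    """STHOSVD sketch: the core shrinks right after each factor is computed."""
    G, factors = X, []
    for n, r in enumerate(ranks):
        U, s, Vt = np.linalg.svd(unfold(G, n), full_matrices=False)
        factors.append(U[:, :r])
        shape = list(G.shape)
        shape[n] = r
        G = fold(s[:r, None] * Vt[:r], n, shape)   # fold_n(S V^T)
    return G, factors

# Example on a random tensor (hypothetical sizes and ranks).
rng = np.random.default_rng(0)
X = rng.standard_normal((8, 9, 10))
for algo in (thosvd, sthosvd):
    G, Us = algo(X, (3, 4, 5))
    rel_err = np.linalg.norm(X - reconstruct(G, Us)) / np.linalg.norm(X)
```

In `sthosvd` every SVD after the first acts on an already compressed tensor, which is where the savings in time and storage come from.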

2.4 Randomized STHOSVD

When the dimensions of data tensors are enormous, the computational cost of the classical deterministic truncated SVD (TSVD) for finding a low-rank approximation of the mode-n unfolding matrix can be expensive. Randomized low-rank matrix algorithms replace the original large-scale matrix with a new one through a preprocessing step. The new matrix contains as much information as possible about the rows or columns of the original data matrix. It is smaller than the original matrix, so the data can be processed efficiently, which reduces the memory required to compute a low-rank approximation of a large matrix.

Halko et al. [21] proposed a randomized SVD (R-SVD) for matrices. The preprocessing stage of the algorithm right-multiplies the original data matrix \(\textbf{A}\in \mathbb {R}^{m\times n}\) by a random Gaussian matrix \({\varvec{\Omega }}\in \mathbb {R}^{n\times r}\). Each column of the resulting matrix \(\textbf{Y}=\textbf{A}{\varvec{\Omega }}\in \mathbb {R}^{m\times r}\) is a linear combination of the columns of the original data matrix. When \(r<n\), the matrix \(\textbf{Y}\) is smaller than \(\textbf{A}\). The oversampling technique can improve the accuracy of the solution. Subsequent computations are summarised in Algorithm 3, where randn generates a Gaussian random matrix, thinQR produces an economy-size QR decomposition, and thinSVD computes the thin SVD. When \(\textbf{A}\) is dense, the arithmetic cost of Algorithm 3 is \(\mathcal {O}(2(r+p)mn+r^2(m+n))\) flops, where \(p>0\) is the oversampling parameter satisfying \(r+p\le \min \{m,n\}\).

Algorithm 3 is an efficient randomized algorithm for computing rank-r approximations to matrices. Minster et al. [14] applied Algorithm 3 directly to the STHOSVD algorithm and then presented a randomized version of STHOSVD (i.e., R-STHOSVD), see Algorithm 4.
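A minimal NumPy sketch of the R-SVD scheme described above is given below. Algorithm 3 itself is not reproduced in this text, so the steps follow the standard scheme of Halko et al. [21] (Gaussian test matrix with oversampling, thin QR, projection, thin SVD); the function name `rsvd` and its signature are hypothetical.

```python
import numpy as np

def rsvd(A, r, p=5, rng=None):
    """Randomized rank-r SVD sketch with oversampling parameter p."""
    rng = np.random.default_rng(rng)
    m, n = A.shape
    Omega = rng.standard_normal((n, r + p))   # Gaussian test matrix (randn)
    Y = A @ Omega                             # sample the range of A
    Q, _ = np.linalg.qr(Y)                    # thin QR: orthonormal basis of the samples
    B = Q.T @ A                               # small (r + p) x n projected matrix
    U_B, s, Vt = np.linalg.svd(B, full_matrices=False)   # thin SVD of the small matrix
    U = Q @ U_B                               # lift the left singular vectors back
    return U[:, :r], s[:r], Vt[:r]            # truncate to the target rank

# Example: a 60 x 40 matrix of exact rank 5 (hypothetical sizes).
rng = np.random.default_rng(0)
A = rng.standard_normal((60, 5)) @ rng.standard_normal((5, 40))
U, s, Vt = rsvd(A, r=5, p=5, rng=1)
A_hat = (U * s) @ Vt
```

Within STHOSVD, replacing the deterministic truncated SVD of each unfolding by a call of this kind gives the R-STHOSVD idea.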

3 Sketching Algorithm for STHOSVD

A drawback of the R-SVD algorithm is that when both dimensions of the intermediate matrices are enormous, the computational cost can still be high. To resolve this problem, we resort to the two-sided sketching algorithm for low-rank matrix approximation proposed by Tropp et al. [22]. The preprocessing step of the sketching algorithm needs two sketch matrices to capture information about the rows and columns of the input matrix \(\textbf{A}\in \mathbb {R}^{m\times n}\). Thus we choose two sketch size parameters k and l such that \(r\le k\le \min \{l, n\}\) and \(0< l\le m\). The random test matrices \({\varvec{\Omega }}\in \mathbb {R}^{n\times k}\) and \({\varvec{\Psi }} \in \mathbb {R}^{l\times m}\) are fixed independent standard normal matrices. Multiplying \(\textbf{A}\) on the right by \({\varvec{\Omega }}\) and on the left by \({\varvec{\Psi }}\) gives the random sketch matrices \(\textbf{Y}=\textbf{A}{\varvec{\Omega }}\in \mathbb {R}^{m\times k}\) and \(\textbf{W}={\varvec{\Psi }}\textbf{A}\in \mathbb {R}^{l\times n}\), which collect sufficient data about the input matrix to compute the low-rank approximation. The dimensionality and distribution of the random sketch matrices determine the potential accuracy of the approximation: larger values of k and l give better approximations but require more storage and computation.

The sketching algorithm for low-rank approximation is given in Algorithm 5. The function \(\texttt{orth}(\textbf{A})\) in Step 2 produces an orthonormal basis for the range of \(\textbf{A}\). Using orthogonalized matrices achieves smaller errors and better numerical stability than directly using the randomly generated Gaussian matrices. In particular, when \(\textbf{A}\) is dense, the arithmetic cost of Algorithm 5 is \(\mathcal {O}((k+l)mn+kl(m+n))\) flops. Algorithm 5 is simple and practical, and it possesses the sub-optimal error-bound stated in the following Theorem 3.

Theorem 3

([22], Theorem 4.3) Assume that the sketch size parameters satisfy \(l>k+1\), and draw random test matrices \({\varvec{\Omega }}\in \mathbb {R}^{n\times k}\) and \({\varvec{\Psi }}{\in \mathbb {R}}^{l\times m}\) independently from the standard normal distribution. Then the rank-k approximation \(\hat{\textbf{A}}\) obtained from Algorithm 5 satisfies:

\[\mathbb {E}\Vert \textbf{A}-\hat{\textbf{A}}\Vert _F^2\le (1+f(k,l))\cdot \min _{\varrho <k-1}(1+f(\varrho ,k))\cdot \tau _{\varrho +1}^2(\textbf{A}).\]

In Theorem 3, the function f is defined by \(f(s,t):=s/(t-s-1)\) for \(t>s+1>1\). The minimum in Theorem 3 reveals that the low-rank approximation of a given matrix \(\textbf{A}\) automatically exploits the decay of the tail energy.
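The two-sided sketch described above can be illustrated as follows. This is a sketch under assumptions rather than a transcription of Algorithm 5: it follows the scheme of Tropp et al. [22], in which the range sketch \(\textbf{Y}\) is orthonormalized to \(\textbf{Q}\) and the approximation \(\hat{\textbf{A}}=\textbf{Q}\textbf{X}\) is obtained from a small least-squares problem with \({\varvec{\Psi }}\textbf{Q}\) and \(\textbf{W}\). Orthonormalizing the test matrices beforehand is included as an assumed option, and the name `sketch_lowrank` is hypothetical.

```python
import numpy as np

def sketch_lowrank(A, k, l, rng=None):
    """Two-sided sketch: returns Q (m x k) and X (k x n) with A approximately Q @ X."""
    rng = np.random.default_rng(rng)
    m, n = A.shape
    if not (1 <= k <= min(l, n) and l <= m):
        raise ValueError("need k <= min(l, n) and l <= m")
    Omega = rng.standard_normal((n, k))          # right test matrix
    Psi = rng.standard_normal((l, m))            # left test matrix
    # Assumed option: orthonormalize the test matrices for numerical stability.
    Omega, _ = np.linalg.qr(Omega)               # orthonormal columns
    Psi = np.linalg.qr(Psi.T)[0].T               # orthonormal rows
    Y = A @ Omega                                # m x k sketch of the column space
    W = Psi @ A                                  # l x n sketch of the row space
    Q, _ = np.linalg.qr(Y)                       # orth(Y)
    # X solves min || (Psi Q) X - W ||_F, i.e. X = pinv(Psi Q) @ W.
    X, *_ = np.linalg.lstsq(Psi @ Q, W, rcond=None)
    return Q, X

# Example with l = k + 2 on an 80 x 60 matrix of exact rank 5 (hypothetical sizes).
rng = np.random.default_rng(0)
A = rng.standard_normal((80, 5)) @ rng.standard_normal((5, 60))
Q, X = sketch_lowrank(A, k=5, l=7, rng=1)
A_hat = Q @ X
```

Once the two sketches are formed, the remaining steps touch only the small matrices \(\textbf{Y}\), \(\textbf{W}\) and \({\varvec{\Psi }}\textbf{Q}\), so \(\textbf{A}\) is accessed only through the two products \(\textbf{A}{\varvec{\Omega }}\) and \({\varvec{\Psi }}\textbf{A}\).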

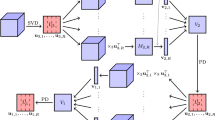

Applying the two-sided sketching algorithm within the STHOSVD algorithm, we propose a practical sketching algorithm for STHOSVD named Sketch-STHOSVD. We summarize the procedure of the Sketch-STHOSVD algorithm in Algorithm 6, with its error analysis stated in Theorem 4.

Theorem 4

Let \(\hat{{\varvec{\mathcal {X}}}}={\varvec{\mathcal {G}}}\times _1\textbf{U}^{(1)}\times _2\textbf{U}^{(2)}\ldots \times _N\textbf{U}^{(N)}\) be the low multilinear rank-\((r_1,r_2,\ldots ,r_N)\) approximation of a tensor \({\varvec{\mathcal {X}}}\in \mathbb {R}^{I_1\times I_2\times \ldots \times I_N}\) by the Sketch-STHOSVD algorithm (i.e., Algorithm 6) with processing order \(s_p:\{1,2,\ldots ,N\}\) and sketch size parameters \(\{l_1,l_2,\ldots ,l_N\}\). Then

Proof

Combining Theorems 2 and 3, we have

\(\square \)

We assume the processing order for STHOSVD, R-STHOSVD, and Sketch-STHOSVD algorithms is \(s_p:\{1,2,\ldots ,N\}\). Table 2 summarises the arithmetic cost of different algorithms for the cases related to the general higher-order tensor \({\varvec{\mathcal {X}}}\in \mathbb {R}^{I_1\times I_{2}\times ...\times I_N}\) with target rank \((r_1,r_2,\ldots ,r_N)\) and the special cubic tensor \({\varvec{\mathcal {X}}}\in \mathbb {R}^{I\times I\times ...\times I}\) with target rank \((r,r,\ldots ,r)\). Here the tensors are dense and the target ranks \(r_j\ll I_j, j = 1, 2, \ldots , N\).

4 Sketching Algorithm with Subspace Power Iteration

When the original matrix is very large or its singular spectrum decays slowly, Algorithm 5 may produce a poor basis in many applications. Inspired by [23], we suggest using the power iteration technique to enhance the sketching algorithm by replacing \(\textbf{A}\) with \((\textbf{A}{} \textbf{A}^\top )^{q}{} \textbf{A}\), where q is a positive integer. According to the SVD of matrix \(\textbf{A}\), i.e., \(\textbf{A}=\textbf{U}{} \textbf{S}{} \textbf{V}^\top \), we know that \((\textbf{A}{} \textbf{A}^\top )^{q}{} \textbf{A}=\textbf{U}{} \textbf{S}^{2q+1}{} \textbf{V}^\top \). It can be seen that \(\textbf{A}\) and \((\textbf{A}{} \textbf{A}^\top )^{q}{} \textbf{A}\) have the same left and right singular vectors, but the latter has a faster decay rate of singular values, making its tail energy much smaller.
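The effect of the power scheme on the singular spectrum can be checked numerically. The snippet below builds a matrix with prescribed, slowly decaying singular values \(\sigma _i=1/i\) (an arbitrary choice for illustration) and compares them with those of \((\textbf{A}\textbf{A}^\top )^{q}\textbf{A}\); the helper `tail_fraction` is a hypothetical name for the share of the squared Frobenius norm carried by the trailing singular values.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, q = 30, 20, 2

# Build A = U diag(sigma) V^T with known singular values sigma_i = 1 / i.
U, _ = np.linalg.qr(rng.standard_normal((m, n)))
V, _ = np.linalg.qr(rng.standard_normal((n, n)))
sigma = 1.0 / np.arange(1, n + 1)
A = (U * sigma) @ V.T

# Power scheme: (A A^T)^q A has the same singular vectors and values sigma^(2q+1).
B = np.linalg.matrix_power(A @ A.T, q) @ A
sigma_B = np.linalg.svd(B, compute_uv=False)

def tail_fraction(s, j):
    """Fraction of the squared Frobenius norm carried by all but the j largest singular values."""
    return np.sum(s[j:] ** 2) / np.sum(s ** 2)

before = tail_fraction(sigma, 5)      # relative tail of A
after = tail_fraction(sigma_B, 5)     # relative tail of (A A^T)^q A
```

Forming \((\textbf{A}\textbf{A}^\top )^{q}\textbf{A}\) explicitly, as done here, is only meant to expose the spectrum; a practical implementation applies \(\textbf{A}\) and \(\textbf{A}^\top \) alternately to the sketch instead.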

Although power iteration can improve the accuracy of Algorithm 5 to some extent, it still suffers from a problem: during the execution with power iteration, rounding errors eliminate all information about the singular modes associated with the small singular values. To address this issue, we propose an improved sketching algorithm that orthonormalizes the columns of the sample matrix between each application of \(\textbf{A}\) and \(\textbf{A}^\top \), see Algorithm 7. When \(\textbf{A}\) is dense, the arithmetic cost of Algorithm 7 is \(\mathcal {O}((q+1)(k+l)mn+kl(m+n))\) flops. Numerical experiments show that a good approximation can be achieved with a value of 1 or 2 for the subspace power iteration parameter [21].

Using Algorithm 7 to compute the low-rank approximations of intermediate matrices, we can obtain an improved sketching algorithm for STHOSVD, called sub-Sketch-STHOSVD, see Algorithm 8.
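How the pieces fit together can be sketched in NumPy as below. Algorithms 7 and 8 are not reproduced in this text, so this is an illustration under assumptions: the matrix routine orthonormalizes the sample matrix between applications of \(\textbf{A}^\top \) and \(\textbf{A}\), the tensor routine takes \(\textbf{U}^{(n)}=\textbf{Q}\) and folds \(\textbf{X}\) into the new core, and the sketch sizes are \(k=r_n\) and \(l_n=r_n+2\). All function and parameter names are hypothetical; with `q=0` the loop is skipped and the routine reduces to a plain two-sided sketch.

```python
import numpy as np

def unfold(X, n):
    return np.reshape(np.moveaxis(X, n, 0), (X.shape[n], -1), order="F")

def fold(M, n, shape):
    rest = [s for i, s in enumerate(shape) if i != n]
    return np.moveaxis(np.reshape(M, (shape[n], *rest), order="F"), 0, n)

def reconstruct(G, factors):
    """Multiply the core by every factor matrix along its mode."""
    X = G
    for n, U in enumerate(factors):
        shape = list(X.shape)
        shape[n] = U.shape[0]
        X = fold(U @ unfold(X, n), n, shape)
    return X

def sketch_power(A, k, l, q, rng):
    """Two-sided sketch of A with q steps of subspace power iteration."""
    m, n = A.shape
    Omega = rng.standard_normal((n, k))
    Psi = rng.standard_normal((l, m))
    W = Psi @ A
    Q, _ = np.linalg.qr(A @ Omega)
    for _ in range(q):
        Z, _ = np.linalg.qr(A.T @ Q)   # re-orthonormalize after applying A^T
        Q, _ = np.linalg.qr(A @ Z)     # re-orthonormalize after applying A
    X, *_ = np.linalg.lstsq(Psi @ Q, W, rcond=None)   # X = pinv(Psi Q) @ W
    return Q, X

def sub_sketch_sthosvd(X, ranks, oversample=2, q=1, seed=None):
    """Sequentially sketch each unfolding of the shrinking core."""
    rng = np.random.default_rng(seed)
    G, factors = X, []
    for n, r in enumerate(ranks):
        Gn = unfold(G, n)
        l = min(r + oversample, Gn.shape[0])   # assumed rule l_n = r_n + 2
        Q, C = sketch_power(Gn, r, l, q, rng)
        factors.append(Q)                      # mode-n factor matrix
        shape = list(G.shape)
        shape[n] = r
        G = fold(C, n, shape)                  # updated intermediate core
    return G, factors

# Example: a 12 x 13 x 14 tensor of multilinear rank (3, 3, 3).
rng = np.random.default_rng(0)
core = rng.standard_normal((3, 3, 3))
X = reconstruct(core, [rng.standard_normal((I, 3)) for I in (12, 13, 14)])
G, Us = sub_sketch_sthosvd(X, (3, 3, 3), oversample=2, q=1, seed=1)
rel_err = np.linalg.norm(X - reconstruct(G, Us)) / np.linalg.norm(X)
```

Each power step costs two extra passes over the unfolding, which is consistent with the factor \((q+1)\) in the arithmetic cost quoted for Algorithm 7.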

The error-bound for Algorithm 8 is stated in the following Theorem 5. Its proof is deferred to the Appendix.

Theorem 5

Let \(\hat{{\varvec{\mathcal {X}}}}={\varvec{\mathcal {G}}}\times _1\textbf{U}^{(1)}\times _2\textbf{U}^{(2)}\ldots \times _N\textbf{U}^{(N)}\) be the low multilinear rank-\((r_1,r_2,\ldots ,r_N)\) approximation of a tensor \({\varvec{\mathcal {X}}}\in \mathbb {R}^{I_1\times I_2\times \ldots \times I_N}\) by the sub-Sketch-STHOSVD algorithm (i.e., Algorithm 8) with processing order \(s_p:\{1,2,\ldots ,N\}\) and sketch size parameters \(\{l_1,l_2,\ldots ,l_N\}\). Then

Proof

See Appendix. \(\square \)

5 Numerical Experiments

This section conducts numerical experiments with synthetic data and real-world data, including comparisons between the traditional THOSVD algorithm, STHOSVD algorithm, the R-STHOSVD algorithm proposed in [14], and our proposed algorithms Sketch-STHOSVD and sub-Sketch-STHOSVD. Regarding the numerical settings, the oversampling parameter \(p=5\) is used in Algorithm 3, the sketch parameters \(l_n = r_n+2, n = 1, 2, \ldots , N\), are used in Algorithms 6 and 8, and the power iteration parameter \(q=1\) is used in Algorithm 8.

5.1 Hilbert Tensor

Hilbert tensor is a synthetic and supersymmetric tensor, with each entry defined as

In the first experiment, we set \(N=5\) and \(I_n = 25, n=1,2,\ldots ,N\). The target rank is chosen as (r, r, r, r, r), where \(r\in [1,25]\). Due to the supersymmetry of the Hilbert tensor, the processing order in the algorithms does not affect the final experimental results, and thus the processing order can be directly chosen as \(s_p:\{1,2,3,4,5\}\). The results of different algorithms are given in Fig. 1. It shows that our proposed algorithms (i.e., Sketch-STHOSVD and sub-Sketch-STHOSVD) and algorithm R-STHOSVD outperform the algorithms THOSVD and STHOSVD. In particular, the error of the proposed algorithms Sketch-STHOSVD and sub-Sketch-STHOSVD is comparable to R-STHOSVD (see the left plot in Fig. 1), while they both use less CPU time than R-STHOSVD (see the right plot in Fig. 1).
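A Hilbert tensor can be generated with a few lines of NumPy. The sketch below assumes the usual definition, in which the entry at 1-based position \((i_1,i_2,\ldots ,i_N)\) equals \(1/(i_1+i_2+\cdots +i_N-N+1)\); the function name `hilbert_tensor` is hypothetical.

```python
import numpy as np

def hilbert_tensor(shape):
    """Hilbert tensor: entries 1 / (i_1 + ... + i_N - N + 1) for 1-based indices."""
    total = np.zeros(shape)
    for n, size in enumerate(shape):
        view = [1] * len(shape)
        view[n] = size
        # Add the 0-based index of mode n by broadcasting along that mode.
        total = total + np.arange(size, dtype=float).reshape(view)
    # With 0-based indices j_k = i_k - 1 the denominator is j_1 + ... + j_N + 1.
    return 1.0 / (total + 1.0)

# Small example; the first experiment uses N = 5 and I_n = 25 instead.
H = hilbert_tensor((10, 10, 10, 10))
```

Because the entries depend only on the sum of the indices, the tensor is invariant under any permutation of its modes, which is why the processing order does not matter in this experiment.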

This result demonstrates the excellent performance of the proposed algorithms and indicates that the two-sided sketching method and the subspace power iteration used in our algorithms can indeed improve the performance of STHOSVD algorithm.

For a large-scale test, we use a Hilbert tensor with a size of \(500\times 500\times 500\) and conduct experiments using ten different approximate multilinear ranks. We perform the tests ten times and report the algorithms’ average running time and relative error in Tables 3 and 4, respectively. The results show that the randomized algorithms can achieve higher accuracy than the deterministic algorithms. The proposed Sketch-STHOSVD algorithm is the fastest, and the sub-Sketch-STHOSVD algorithm achieves the highest accuracy efficiently.

5.2 Sparse Tensor

In this experiment, we test the performance of different algorithms on a sparse tensor \( {\varvec{\mathcal {X}}} \in \mathbb {R}^{200 \times 200 \times 200}\), i.e.,

where \(\textbf{x}_i,\textbf{y}_i,\textbf{z}_i\in \mathbb {R}^n\) are sparse vectors all generated using the sprand command in MATLAB with \(5\%\) nonzeros each, and \(\gamma \) is a user-defined parameter which determines the strength of the gap between the first ten terms and the remaining terms. The target rank is chosen as (r, r, r), where \(r\in [20,100]\).

The experimental results are shown in Fig. 2, in which three different values \(\gamma = 2,10,200\) are tested. An increase of the gap means that the tail energy is reduced, so the accuracy of the algorithms improves. Our numerical experiments also verify this. Figure 2 demonstrates the superiority of the proposed sketching algorithms. In particular, we see that the proposed Sketch-STHOSVD is the fastest algorithm, with an error comparable to that of R-STHOSVD; the proposed sub-Sketch-STHOSVD can reach the same accuracy as the STHOSVD algorithm but in much less CPU time; and the proposed sub-Sketch-STHOSVD achieves a much better low-rank approximation than R-STHOSVD with similar CPU time.

Now we consider the influence of noise on algorithms’ performance. Specifically, the sparse tensor \({\varvec{\mathcal {X}}}\) with noise is designed in the same manner as in [24], i.e.,

where \({\varvec{\mathcal {K}}}\) is a standard Gaussian tensor and \(\delta \) is used to control the noise level. Let \(\delta =10^{-3}\) and keep the remaining parameters the same as in the previous experiment. The relative error and running time of different algorithms are shown in Fig. 3. In Fig. 3, we see that noise indeed affects the accuracy of the low-rank approximation, especially when the gap is small. However, the influence of noise does not change the conclusion obtained in the noise-free case. The accuracy of our sub-Sketch-STHOSVD algorithm is the highest among the randomized algorithms. As \(\gamma \) increases, sub-Sketch-STHOSVD can achieve almost the same accuracy as THOSVD and STHOSVD in a CPU time comparable to that of R-STHOSVD.

5.3 Real-World Data Tensor

In this experiment, we test the performance of different algorithms on a color image, called the HDU picture, with a size of \(1200\times 1800\times 3\). We also evaluate the proposed sketching algorithms on the widely used YUV Video Sequences. Taking the ‘hall monitor’ video as an example and using the first 30 frames, a third-order tensor with a size of \(144\times 176\times 30\) is then formed for this test.

Firstly, we conduct an experiment on the HDU picture with target rank (500, 500, 3), and compare the PSNR and CPU time of different algorithms.

The experimental result is shown in Fig. 4, which shows that the PSNR of sub-Sketch-STHOSVD, THOSVD and STHOSVD is very similar (i.e., \(\sim \) 40) and that sub-Sketch-STHOSVD is more efficient in terms of CPU time. R-STHOSVD and Sketch-STHOSVD are also very efficient compared to sub-Sketch-STHOSVD; however, the PSNR they achieve is 5 dB less than sub-Sketch-STHOSVD.
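The two quality measures used in these comparisons can be computed as in the sketch below. The text does not spell out its PSNR normalization, so the code assumes the common definition \(10\log _{10}(\texttt{peak}^2/\texttt{MSE})\) with a peak value of 255 for 8-bit images; the function names and the `peak` parameter are hypothetical.

```python
import numpy as np

def relative_error(X, X_hat):
    """Relative error in the Frobenius norm."""
    return np.linalg.norm(X - X_hat) / np.linalg.norm(X)

def psnr(X, X_hat, peak=255.0):
    """Peak signal-to-noise ratio in dB (assumed definition: 10 log10(peak^2 / MSE))."""
    diff = np.asarray(X, dtype=float) - np.asarray(X_hat, dtype=float)
    mse = np.mean(diff ** 2)
    return np.inf if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)

# Tiny example: a constant image and a copy that is off by one gray level everywhere.
X = np.full((4, 4, 3), 100.0)
X_hat = X + 1.0
err = relative_error(X, X_hat)
quality = psnr(X, X_hat)
```

Under this definition a gap of 5 dB corresponds to a mean squared error that is larger by a factor of about 3.16.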

Then we conduct separate numerical experiments on the HDU picture and the ‘hall monitor’ video clip as the target rank increases, and compare these algorithms in terms of the relative error, CPU time and PSNR, see Figs. 5 and 6. These experimental results again demonstrate the superiority (i.e., low error and good approximation with high efficiency) of the proposed sub-Sketch-STHOSVD algorithm in computing the Tucker decomposition approximation.

In the last experiment, larger-scale real-world tensor data is used. We choose a color image (called the LONDON picture) with a size of \(4775\times 7155\times 3\) as the test image and consider the influence of noise. The LONDON picture with white Gaussian noise is generated using the awgn(X,SNR) built-in function in MATLAB. We set the target rank as (50,50,3) and SNR to 20. The results without and with white Gaussian noise are compared in Figs. 7 and 8, respectively, in terms of the CPU time and PSNR.

Moreover, we also test the algorithms on the LONDON picture as the target rank increases. The results regarding the relative error, the CPU time and the PSNR are reported in Tables 5, 6 and 7, respectively. On the whole, the results again show the consistent performance of the proposed methods.

In summary, the numerical results show the superiority of the sub-Sketch-STHOSVD algorithm for large-scale tensors with or without noise. We can see that sub-Sketch-STHOSVD achieves approximations close to those of the deterministic algorithms in a time similar to that of the other randomized algorithms.

6 Conclusion

In this paper we proposed efficient sketching algorithms, i.e., Sketch-STHOSVD and sub-Sketch-STHOSVD, to calculate the low-rank Tucker approximation of tensors by combining the two-sided sketching technique with the STHOSVD algorithm and using the subspace power iteration. Detailed error analysis is also conducted. Numerical results on both synthetic and real-world data tensors demonstrate the competitive performance of the proposed algorithms in comparison to the state-of-the-art algorithms.

Data Availability

Enquiries about data availability should be directed to the authors.

References

[1] Comon, P.: Tensors: a brief introduction. IEEE Signal Process. Mag. 31(3), 44–53 (2014)

[2] Hitchcock, F.L.: Multiple invariants and generalized rank of a P-Way matrix or tensor. J. Math. Phys. 7(1–4), 39–79 (1928)

[3] Kiers, H.A.L.: Towards a standardized notation and terminology in multiway analysis. J. Chemom Soc. 14(3), 105–122 (2000)

[4] Tucker, L.R.: Implications of factor analysis of three-way matrices for measurement of change. Probl. Meas. Change 15, 122–137 (1963)

[5] Tucker, L.R.: Some mathematical notes on three-mode factor analysis. Psychometrika 31(3), 279–311 (1966)

[6] De Lathauwer, L., De Moor, B., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21(4), 1253–1278 (2000)

[7] Hackbusch, W., Kühn, S.: A new scheme for the tensor representation. J. Fourier Anal. Appl. 15(5), 706–722 (2009)

[8] Grasedyck, L.: Hierarchical singular value decomposition of tensors. SIAM J. Matrix Anal. Appl. 31(4), 2029–2054 (2010)

[9] Oseledets, I.V.: Tensor-train decomposition. SIAM J. Sci. Comput. 33(5), 2295–2317 (2011)

[10] De Lathauwer, L., De Moor, B., Vandewalle, J.: On the best rank-1 and rank-(r1, r2,...,rn) approximation of higher-order tensors. SIAM J. Matrix Anal. Appl. 21(4), 1324–1342 (2000)

[11] Vannieuwenhoven, N., Vandebril, R., Meerbergen, K.: A new truncation strategy for the higher-order singular value decomposition. SIAM J. Sci. Comput. 34(2), A1027–A1052 (2012)

[12] Zhou, G., Cichocki, A., Xie, S.: Decomposition of big tensors with low multilinear rank. arXiv preprint, arXiv:1412.1885 (2014)

[13] Che, M., Wei, Y.: Randomized algorithms for the approximations of Tucker and the tensor train decompositions. Adv. Comput. Math. 45(1), 395–428 (2019)

[14] Minster, R., Saibaba, A.K., Kilmer, M.E.: Randomized algorithms for low-rank tensor decompositions in the Tucker format. SIAM J. Math. Data Sci. 2(1), 189–215 (2020)

[15] Che, M., Wei, Y., Yan, H.: The computation of low multilinear rank approximations of tensors via power scheme and random projection. SIAM J. Matrix Anal. Appl. 41(2), 605–636 (2020)

[16] Che, M., Wei, Y., Yan, H.: Randomized algorithms for the low multilinear rank approximations of tensors. J. Comput. Appl. Math. 390(2), 113380 (2021)

[17] Sun, Y., Guo, Y., Luo, C., Tropp, J., Udell, M.: Low-rank Tucker approximation of a tensor from streaming data. SIAM J. Math. Data Sci. 2(4), 1123–1150 (2020)

[18] Tropp, J.A., Yurtsever, A., Udell, M., Cevher, V.: Streaming low-rank matrix approximation with an application to scientific simulation. SIAM J. Sci. Comput. 41(4), A2430–A2463 (2019)

[19] Malik, O.A., Becker, S.: Low-rank Tucker decomposition of large tensors using Tensorsketch. Adv. Neural. Inf. Process. Syst. 31, 10116–10126 (2018)

[20] Ahmadi-Asl, S., Abukhovich, S., Asante-Mensah, M.G., Cichocki, A., Phan, A.H., Tanaka, T.: Randomized algorithms for computation of Tucker decomposition and higher order SVD (HOSVD). IEEE Access. 9, 28684–28706 (2021)

[21] Halko, N., Martinsson, P.-G., Tropp, J.A.: Finding structure with randomness: probabilistic algorithms for constructing approximate matrix decompositions. SIAM Rev. 53(2), 217–288 (2011)

[22] Tropp, J.A., Yurtsever, A., Udell, M., Cevher, V.: Practical sketching algorithms for low-rank matrix approximation. SIAM J. Matrix Anal. Appl. 38(4), 1454–1485 (2017)

[23] Rokhlin, V., Szlam, A., Tygert, M.: A randomized algorithm for principal component analysis. SIAM J. Matrix Anal. Appl. 31(3), 1100–1124 (2009)

[24] Xiao, C., Yang, C., Li, M.: Efficient alternating least squares algorithms for low multilinear rank approximation of tensors. J. Sci. Comput. 87(3), 1–25 (2021)

[25] Zhang, J., Saibaba, A.K., Kilmer, M.E., Aeron, S.: A randomized tensor singular value decomposition based on the t-product. Numer. Linear Algebra Appl. 25(5), e2179 (2018)

Acknowledgements

We would like to thank the anonymous referees for their comments and suggestions on our paper, which led to great improvements in the presentation.

Funding

G. Yu’s work was supported in part by the National Natural Science Foundation of China (No. 12071104) and the Natural Science Foundation of Zhejiang Province (No. LD19A010002).

Ethics declarations

Conflict of interest

The authors have not disclosed any competing interests.

Appendix

Lemma 1

([25], Theorem 2) Let \(\varrho < k-1\) be a positive natural number and \({\varvec{\Omega }}\in \mathbb {R}^{k\times n}\) be a Gaussian random matrix. Suppose \(\textbf{Q}\) is obtained from Algorithm 7. Then \(\forall \textbf{A}\in \mathbb {R}^{m\times n}\), we have

Lemma 2

([22], Lemma A.3) Let \(\textbf{A}\in \mathbb {R}^{m\times n}\) be an input matrix and \(\hat{\textbf{A}}=\textbf{QX}\) be the approximation obtained from Algorithm 7. The approximation error can be decomposed as

Lemma 3

([22], Lemma A.5) Assume \({\varvec{\Psi }}\in \mathbb {R}^{l\times n}\) is a standard normal matrix independent from \({\varvec{\Omega }}\). Then

The error-bound for Algorithm 7 is given in Lemma 4 below.

Lemma 4

Assume the sketch size parameter satisfies \(l>k+1\). Draw random test matrices \(\varvec{\Omega }\in \mathbb {R}^{n\times k}\) and \(\varvec{\Psi }{\in \mathbb {R}}^{l\times m}\) independently from the standard normal distribution. Then the rank-k approximation \(\hat{\textbf{A}}\) obtained from Algorithm 7 satisfies

Proof

Using Eqs. (23), (24) and (25), we have

After minimizing over eligible index \(\varrho <k-1\), the proof is completed. \(\square \)

We are now in a position to prove Theorem 5. Combining Theorem 2 and Lemma 4, we have

which completes the proof of Theorem 5.

About this article

Cite this article

Dong, W., Yu, G., Qi, L. et al. Practical Sketching Algorithms for Low-Rank Tucker Approximation of Large Tensors. J Sci Comput 95, 52 (2023). https://doi.org/10.1007/s10915-023-02172-y

DOI: https://doi.org/10.1007/s10915-023-02172-y

Keywords

- Tensor sketching

- Randomized algorithm

- Tucker decomposition

- Subspace power iteration

- High-dimensional data