Abstract

A neuron receives input from other neurons via electrical pulses, so-called spikes. The pulse-like nature of the input is frequently neglected in analytical studies; instead, the input is usually approximated to be Gaussian. Recent experimental studies have shown, however, that an assumption underlying this approximation is often not met: Individual presynaptic spikes can have a significant effect on a neuron’s dynamics. It is thus desirable to explicitly account for the pulse-like nature of neural input, i.e. consider neurons driven by a shot noise – a long-standing problem that is mathematically challenging. In this work, we exploit the fact that excitatory shot noise with exponentially distributed weights can be obtained as a limit case of dichotomous noise, a Markovian two-state process. This allows us to obtain novel exact expressions for the stationary voltage density and the moments of the interspike-interval density of general integrate-and-fire neurons driven by such an input. For the special case of leaky integrate-and-fire neurons, we also give expressions for the power spectrum and the linear response to a signal. We verify and illustrate our expressions by comparison to simulations of leaky-, quadratic- and exponential integrate-and-fire neurons.

1 Introduction

Two synaptically connected neurons communicate via spikes or action potentials (APs). Neglecting the underlying conductance dynamics, one can model the effect of a presynaptic AP as a jump in the voltage of the postsynaptic neuron. The height of this jump then depends on the strength of the synapse. Cortical neurons typically have thousands of presynaptic partners (Braitenberg and Schüz 1998) that often fire asynchronously. Thus, a popular assumption in theoretical work has been that the overall rate of input is high, while each individual presynaptic spike only causes a very small jump in voltage, so that the input can be modeled as a Gaussian process. If one additionally assumes that it is temporally uncorrelated, one obtains the so-called diffusion approximation (DA). Modeling neural input as Gaussian white noise has allowed theoretical insights, ranging from the statistics of single neurons to the dynamics of whole networks (Gerstein and Mandelbrot 1964; Ricciardi and Sacerdote 1979; Lindner and Schimansky-Geier 2001; Brunel 2000). This kind of theory has been extended to correlated Gaussian noise (Brunel and Sergi 1998; Moreno et al. 2002; Fourcaud and Brunel 2002; Moreno-Bote et al. 2008; Schwalger et al. 2015).

The assumption that individual spikes have only a weak effect on postsynaptic voltage, however, is not always justified: In recent experiments, the mean peak height of evoked postsynaptic potentials (EPSPs) has been reported to lie in the range of 1–2 mV (Thomson et al. 1993; Markram et al. 1997; Song et al. 2005; Lefort et al. 2009). Further, the distributions of EPSPs can be highly skewed (Song et al. 2005; Lefort et al. 2009), so that individual EPSPs can range up to 8–10 mV (Thomson et al. 1993; Lefort et al. 2009; Loebel et al. 2009). For pyramidal neurons, the distance from resting potential to threshold lies between 10 and 20 mV (Badel et al. 2008; Lefort et al. 2009). Thus, between 5 and 20 EPSPs – and in some cases even a single strong EPSP – can be sufficient to make the neuron fire. If such large EPSPs dominate the input to the neuron, the DA cannot be expected to yield good results (Nykamp and Tranchina 2000), and one should rather model the input as a shot noise (SN), in which individual events have weights that are drawn from a skewed distribution. In contrast to these expectations, some approximations, such as the moment-closure method for multidimensional integrate-and-fire (IF) models, suggest that there is no difference between neurons driven by Gaussian or shot noise, at least with respect to simple firing statistics (this is critically evaluated by Ly and Tranchina (2007)). Hence, it is important to test the effect of the pulsatile nature of SN input on the firing statistics of IF neurons and to compare it with the case of Gaussian noise.

Integrate-and-fire neurons driven by SN have been analytically studied for a long time (Stein 1965; Holden 1976; Tuckwell 1988), with most studies focusing on the voltage distribution, either in spiking, current-based leaky IF (LIF) neurons (Sirovich et al. 2000; Sirovich 2003; Richardson 2004; Helias et al. 2010a, 2010b, 2011) or in conductance-based neurons far below threshold (Richardson 2004; Richardson and Gerstner 2005, 2006; Wolf and Lindner 2008, 2010). With respect to neural signal transmission, it was found that LIF neurons can faithfully transmit signals even at high frequencies if they are encoded in an SN process, rather than as a current modulation (Helias et al. 2010b, 2011; Richardson and Swarbrick 2010).

Most of the work concerning SN-driven spiking neurons has relied on simulations or approximation; exact analytical results are rare. For perfect IF (PIF) neurons driven by excitatory Poisson shot noise with constant weights, the density of interspike intervals and the power spectrum have been calculated early on by Stein et al. (1972). Some results have been derived without explicit reference to IF neurons but apply directly to their interspike-interval (ISI) density; these comprise the Laplace-transformed first-passage-time density of linear systems driven by excitatory (Tsurui and Osaki 1976; Novikov et al. 2005) or both excitatory and inhibitory (Jacobsen and Jensen 2007) SN with exponentially distributed weights, as well as the mean first-passage time for potentially nonlinear systems driven by excitatory SN with either exponentially distributed or constant weights (Masoliver 1987). Recently, Richardson and Swarbrick (2010) have considered LIF neurons driven by excitatory and inhibitory SN with exponentially distributed weights and given expressions for the firing rate, the susceptibility with respect to a modulation of the input rate, and the power spectrum.

The aim of this paper is methodological: to draw attention to the shot-noise limit of dichotomous noise, which has been used in statistical physics for a long time (van den Broeck 1983) but, as far as we know, has never been applied to the description of neural input. We present exact expressions, derived using this limit, in the hope that others may find them useful. Thus, rather than systematically exploring particular effects (or even functional consequences) of SN input, we demonstrate the theory for a few illustrative examples. We provide the complete source code implementing the analytics (in PYTHON and C++) and the simulation (in C++) at http://modeldb.yale.edu/228604.

The paper is organized as follows: We first describe the model in Section 2, before introducing the shot-noise limit of dichotomous noise in Section 3 and discussing the treatment of fixed points in the drift dynamics in Section 4. For general IF neurons, we give expressions for the stationary voltage distribution (Section 5), and the moments of the ISI density (Section 6). For the special case of LIF neurons, we derive the power spectrum in Section 7 and the susceptibility with respect to a current-modulating signal in Section 8. We close with a brief discussion in Section 9.

2 Model

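We consider the dynamics

\[ \tau_{\mathrm{m}} \dot{v} = f(v) + X_{\text{in}}(t) + \epsilon s(t), \tag{1} \]

where \(\tau_{\mathrm{m}}\) is the membrane time constant, f(v) is a potentially nonlinear function, \(\epsilon \ll 1\), and s(t) is some time-dependent signal. Note that we set \(\epsilon = 0\) except for the calculation of the susceptibility. Whenever the voltage v crosses a threshold \(v_T\), a (postsynaptic) spike is emitted, v is reset to \(v_R\) and clamped there for an absolute refractory period \(\tau_{\text{ref}}\) (see the voltage trace in Fig. 1a). The input \(X_{\text{in}}(t)\) is a weighted shot noise,

\[ X_{\text{in}}(t) = \tau_{\mathrm{m}} \sum_i a_i\, \delta(t - t_i). \tag{2} \]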

Here, \(a_i\) is the weight and \(t_i\) the time of the \(i\)th presynaptic spike. The \(a_i\) are drawn from an exponential distribution with mean a; they are all statistically independent of each other and of the spike times \(t_i\). The spikes occur according to a homogeneous Poisson process with rate \(r_{\text{in}}\),

\[ \Big\langle \sum_i \delta(t - t_i) \Big\rangle = r_{\text{in}}. \tag{3} \]

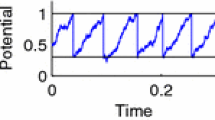

Fig. 1 (a) Voltage trace of an exponential integrate-and-fire (EIF) neuron driven by excitatory shot noise with exponentially distributed weights. (b) The shot-noise limit of dichotomous noise: increasing the noise value in the + state, \(\sigma_+\), while decreasing its mean duration \(1/k_+\) such that a, the mean area under an excursion, remains constant, yields a shot noise with exponentially distributed weights of mean a.

Common choices for f(v) are f(v) = μ, yielding the perfect integrate-and-fire (PIF) neuron; f(v) = μ − v, the leaky integrate-and-fire (LIF) neuron; \(f(v) = \mu + v^2\), the quadratic integrate-and-fire (QIF) neuron; or \(f(v) = \mu - v + {\Delta} \exp[(v-\bar{v}_{T})/{\Delta}]\), the exponential integrate-and-fire (EIF) neuron. Here, μ quantifies a constant input to the neuron; in the EIF, \(\bar{v}_{T}\) sets the position of the (soft) threshold and the slope parameter Δ controls the sharpness of postsynaptic spike onset. In the QIF neuron, one typically chooses reset and threshold at infinity, \(v_R \to -\infty\), \(v_T \to \infty\). For the EIF, the hard threshold can also be set to infinity, \(v_T \to \infty\). In practice, it is sufficient to take large but finite values. For a systematic comparison of the different IF models in the Gaussian noise case, see Fourcaud-Trocmé et al. (2003), Vilela and Lindner (2009b), and Vilela and Lindner (2009a).
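To make the model concrete, here is a minimal simulation sketch; it is not the authors' C++ code from ModelDB. It assumes a fixed-step Euler scheme with step `dt`, times in ms and rates in kHz, an input normalized so that each input spike makes v jump by its weight \(a_i\), and that input spikes arriving during the refractory period are discarded. The function name `simulate_if` and its defaults are hypothetical.

```python
import math
import random


def simulate_if(f, a, r_in, tau_m=20.0, v_r=0.0, v_t=1.0, tau_ref=2.0,
                dt=0.01, t_max=1000.0, seed=1):
    """Euler sketch of tau_m dv/dt = f(v) + X_in(t) with excitatory shot noise.

    Input spikes form a Poisson process (rate r_in, kHz); each one makes v jump
    by an exponentially distributed weight with mean a. Times are in ms.
    Returns the list of output spike times.
    """
    rng = random.Random(seed)
    v, t = v_r, 0.0
    ref_end = -math.inf                    # end of the current refractory period
    next_in = rng.expovariate(r_in) if r_in > 0 else math.inf
    spikes = []
    while t < t_max:
        t += dt
        # apply all input spikes that arrived in (t - dt, t]
        while next_in <= t:
            if t > ref_end:                # inputs are lost while v is clamped
                v += rng.expovariate(1.0 / a)
            next_in += rng.expovariate(r_in)
        if t <= ref_end:
            continue                       # v stays clamped at v_r
        v += dt * f(v) / tau_m             # Euler step of the drift
        if v >= v_t:                       # spike, reset, start refractoriness
            spikes.append(t)
            v = v_r
            ref_end = t + tau_ref
    return spikes
```

For example, `simulate_if(lambda v: 0.5 - v, a=0.2, r_in=0.28)` simulates an LIF neuron with μ = 0.5; any of the f(v) above can be passed in the same way. With a fixed step, threshold crossings by drift are only resolved to within `dt`.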

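A common modeling assumption in theoretical studies is that the \(a_i\) are sufficiently small and \(r_{\text{in}}\) sufficiently large that one can approximate \(X_{\text{in}}(t)\) as a Gaussian white noise. Throughout this paper, we will contrast our results with this so-called diffusion approximation (DA). For the DA, the shot noise input is replaced by a Gaussian white noise with the intensity

\[ D_{\text{eff}} = a^{2} \tau_{\mathrm{m}}^{2} r_{\text{in}}. \tag{4} \]

Its mean value can be lumped into the function f(v), in which the parameter μ is replaced by a new effective parameter

\[ \mu_{\text{eff}} = \mu + a\, \tau_{\mathrm{m}} r_{\text{in}}. \tag{5} \]

Note that \(D_{\text{eff}}\) differs from the case with fixed synaptic weights, in which it would be \(a^{2} \tau_{\mathrm{m}}^{2} r_{\text{in}}/2\); the factor of two arises because exponentially distributed weights have the second moment \(\langle a_i^2 \rangle = 2a^2\).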
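The DA parameters can be computed directly. This sketch assumes that the input enters as \(X_{\text{in}}(t) = \tau_{\mathrm{m}}\sum_i a_i\delta(t-t_i)\), so that a spike of weight \(a_i\) makes v jump by \(a_i\), and that the noise intensity D is defined through \(\langle\xi(t)\xi(t')\rangle = 2D\delta(t-t')\); the function name is hypothetical.

```python
def diffusion_approximation(mu, a, r_in, tau_m=20.0, fixed_weights=False):
    """Return (mu_eff, D_eff) of the Gaussian white noise replacing the shot noise.

    Assumes X_in(t) = tau_m * sum_i a_i delta(t - t_i) and the convention
    <xi(t) xi(t')> = 2 D delta(t - t'). Exponential weights have <a_i^2> = 2 a^2,
    fixed weights have <a_i^2> = a^2.
    """
    second_moment = a * a if fixed_weights else 2.0 * a * a
    mu_eff = mu + tau_m * a * r_in                  # mean input lumped into f(v)
    d_eff = 0.5 * tau_m ** 2 * r_in * second_moment
    return mu_eff, d_eff
```

For a = 0.2, \(r_{\text{in}}\) = 0.28 kHz, μ = −0.2 and \(\tau_{\mathrm{m}}\) = 20 ms, this gives \(\mu_{\text{eff}} = 0.92\) and \(D_{\text{eff}} = 4.48\), and half that intensity for fixed weights.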

As is common, we rescale the voltage axis such that \(v_R = 0\) and \(v_T = 1\). Thus, for a distance between resting potential and threshold of approximately 10–20 mV, the spike weights a = 0.1 and a = 0.2 typically used in the figures shown below correspond to mean EPSP peak heights of 1–2 mV and 2–4 mV, respectively.

For the DA, we use the known results for the firing rate (Siegert 1951; Ricciardi and Sacerdote 1979; Brunel and Latham 2003; Lindner et al. 2003, for general IF, LIF, or QIF neurons, respectively), the CV (Siegert 1951; Lindner et al. 2003), the power spectrum (Lindner et al. 2002), and the susceptibility with respect to current modulation (Brunel et al. 2001; Lindner and Schimansky-Geier 2001).

3 Dichotomous noise and its shot-noise limit

All our results rely on the fact that a dichotomous Markov process (DMP) can be taken to a shot-noise limit (van den Broeck 1983). A DMP (Gardiner 1985; Bena 2006) is a two-state process that jumps between two values, \(\sigma_+\) and \(\sigma_-\). Jumps occur at constant rates, \(k_+\) and \(k_-\), where \(k_+\) is the rate at which transitions from \(\sigma_+\) to \(\sigma_-\) occur (and vice versa). The residence times within one state are exponentially distributed (with mean \(1/k_\pm\)).

One obtains the shot-noise limit by taking \(\sigma_+ \to \infty\) and \(k_+ \to \infty\) while keeping \(a = \sigma_+/k_+\) constant. This is illustrated in Fig. 1b: as the limit is taken, the excursions to the plus state get shorter and higher, such that a, the mean area under the excursions, is conserved. Because each excursion has height \(\sigma_+\) and an exponentially distributed duration, the area under an individual excursion is an exponentially distributed random number; in the SN limit, this corresponds to exponentially distributed weights of the δ functions.
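The statement about excursion areas can be checked by sampling. In this sketch (hypothetical function name, \(\sigma_- = 0\) as in the SN limit), the residence time T in the plus state is drawn from an exponential distribution with mean \(1/k_+\), and the area is \(\sigma_+ T\) with \(\sigma_+ = a k_+\). Since \(\sigma_+ T\) is then exponential with mean a for any \(k_+\), increasing \(k_+\) only shortens the excursions, which is what makes the δ-pulse limit possible.

```python
import math
import random


def excursion_areas(a, k_plus, n=20000, seed=2):
    """Sample areas sigma_+ * T of n excursions of a DMP to its plus state.

    Residence times T are exponential with mean 1 / k_plus; sigma_+ = a * k_plus
    keeps the mean area equal to a, and sigma_- = 0 as in the SN limit.
    Returns (areas, mean residence time).
    """
    rng = random.Random(seed)
    sigma_plus = a * k_plus
    durations = [rng.expovariate(k_plus) for _ in range(n)]
    areas = [sigma_plus * T for T in durations]
    return areas, math.fsum(durations) / n
```

For any \(k_+\), the sample mean and standard deviation of the areas both stay close to a (as expected for an exponential distribution), while the mean duration shrinks like \(1/k_+\).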

The recipe for calculating the statistics of shot-noise-driven IF neurons is thus the following: One solves the associated problem for an IF neuron driven by asymmetric dichotomous noise with appropriate boundary conditions and then takes the shot-noise limit on the resulting expression. We have derived expressions for statistical properties of IF neurons driven by dichotomous noise elsewhere (Droste and Lindner 2014, 2017).

In most cases, taking the shot-noise limit is rather straightforward: In the expressions for dichotomous noise, one replaces f(v) by \(f(v) + (\sigma_+ + \sigma_-)/2\) and σ by \((\sigma_+ - \sigma_-)/2\). Then, one renames \(k_-\) to \(r_{\text{in}}\) (the rate of leaving the minus state corresponds to the rate at which input spikes occur), sets \(\sigma_- = 0\), and replaces \(\sigma_+\) by \(a k_+\), before performing the limit \(k_+ \to \infty\). We have previously expressed power spectrum and susceptibility of dichotomous-noise-driven neurons in terms of hypergeometric functions \({}_2F_1(a,b,c;z)\) (Abramowitz and Stegun 1972), in which \(k_+\) appears both in the parameter b as well as, inversely, in the argument z. It can be shown that the limit \(k_+ \to \infty\) turns them into confluent hypergeometric functions \({}_1F_1(a,b;z)\). For example, for parameters p and q that do not depend on \(k_+\),

\[ \lim_{k_+ \to \infty} {}_2F_1\!\left(p, k_+, q; \frac{z}{k_+}\right) = {}_1F_1(p, q; z). \tag{6} \]

Note that if one wants to consider also the limit of vanishing refractory period, it is important to take the shot noise limit first to get consistent results. In the remainder of this paper, we just give the resulting expressions in the shot-noise limit.
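The hypergeometric limit used above can be illustrated numerically with truncated power series: the Gauss function \({}_2F_1(p, b, q; z/b)\) approaches Kummer's function \({}_1F_1(p, q; z)\) as \(b \to \infty\), with an error that decreases roughly like 1/b. This sketch uses plain truncated series (hypothetical helper names, a fixed number of terms, and \(|z/b| < 1\) required for the Gauss series), not a production-grade evaluation such as those in SciPy or mpmath.

```python
def hyp2f1_series(a, b, c, z, terms=200):
    """Truncated Gauss series 2F1(a, b; c; z), valid for |z| < 1."""
    total, term = 1.0, 1.0
    for n in range(terms):
        # ratio of consecutive terms: (a+n)(b+n) z / ((c+n)(n+1))
        term *= (a + n) * (b + n) / ((c + n) * (n + 1)) * z
        total += term
    return total


def hyp1f1_series(a, b, z, terms=200):
    """Truncated Kummer series 1F1(a; b; z)."""
    total, term = 1.0, 1.0
    for n in range(terms):
        # ratio of consecutive terms: (a+n) z / ((b+n)(n+1))
        term *= (a + n) / ((b + n) * (n + 1)) * z
        total += term
    return total
```

Evaluating `hyp2f1_series(0.5, b, 1.5, 2.0 / b)` for b = 10, 100, 1000 gives values that approach `hyp1f1_series(0.5, 1.5, 2.0)` monotonically, mirroring the role of \(k_+\) in the text.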

4 Importance of fixed points of the drift dynamics

Systems driven by dichotomous noise follow deterministic dynamics within each of the two noise states, and these deterministic flows can contain fixed points (FPs). It has been pointed out that these FPs call for special attention when such systems are treated analytically (Bena 2006; Droste and Lindner 2014). In particular, when solving differential equations to obtain the probability density, the integration constants need to be chosen separately in \(N_{\text{int}}\) intervals on the voltage axis, delimited by the threshold \(v_T\), the lowest attainable voltage \(v_-\), and FPs of the drift dynamics, i.e. points \(v_F\) for which \(f(v_F) = 0\). An FP is stable if \(f'(v_F) < 0\) and unstable if \(f'(v_F) > 0\).

The LIF neuron with \(\mu < v_T\), for example, has a stable FP at \(v_S = \mu\). If \(\mu < v_R\), this is also the lowest attainable voltage (\(v_- = v_S = \mu\)), because the excitatory shot noise can kick the neuron only to higher voltages; in this case, \(N_{\text{int}} = 1\). Otherwise, the lowest attainable voltage is \(v_- = v_R\) because of the reset rule; here, we have \(N_{\text{int}} = 2\) and need to consider the intervals \([v_R, \mu]\) and \([\mu, v_T]\). Nonlinear neuron models such as QIF or EIF neurons have in general also an unstable FP, \(v_U\), and thus \(N_{\text{int}} = 3\) intervals need to be distinguished.
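Determining this interval structure is easy to automate. The sketch below (hypothetical helper names) locates zeros of f on a grid and refines them by bisection, classifies them by the sign of \(f'\), and sets \(v_-\) according to the rule above: if \(f(v_R) < 0\), reset trajectories drift down to the nearest FP below \(v_R\); otherwise \(v_- = v_R\). It assumes that f is continuous, that all relevant FPs lie above the lower search bound `lo`, and that no two FPs are closer than the grid spacing.

```python
import math


def fixed_points(f, lo, hi, n=20000, tol=1e-12):
    """Zeros of f on [lo, hi]: grid scan for sign changes, refined by bisection."""
    xs = [lo + (hi - lo) * k / n for k in range(n + 1)]
    roots = []
    for x0, x1 in zip(xs, xs[1:]):
        f0, f1 = f(x0), f(x1)
        if f0 == 0.0:
            roots.append(x0)
        elif f0 * f1 < 0.0:
            a, b = x0, x1
            while b - a > tol:
                m = 0.5 * (a + b)
                if f(a) * f(m) <= 0.0:
                    b = m
                else:
                    a = m
            roots.append(0.5 * (a + b))
    return roots


def is_stable(f, x, h=1e-6):
    """A fixed point is stable if f'(x) < 0 (central difference)."""
    return (f(x + h) - f(x - h)) / (2.0 * h) < 0.0


def voltage_intervals(f, v_r, v_t, lo=-10.0):
    """Return (v_minus, interior fixed points, N_int) for excitatory shot noise."""
    fps = fixed_points(f, lo, v_t)
    if f(v_r) < 0.0:
        # reset trajectories drift down to the nearest fixed point below v_r
        below = [x for x in fps if x < v_r]
        v_minus = max(below) if below else -math.inf
    else:
        v_minus = v_r
    interior = [x for x in fps if v_minus < x < v_t]
    return v_minus, interior, len(interior) + 1
```

For the EIF parameters of Fig. 2 with a (hypothetical) hard threshold at \(v_T = 3\), it finds \(v_- \approx -0.099\) (the stable FP just below \(v_R = 0\)) and an unstable FP near 1.403, i.e. \(N_{\text{int}} = 2\); for the QIF with \(v_R = -20\), `lo` must be lowered accordingly.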

5 Voltage distribution

The stationary probability density for the voltage of IF neurons driven by a dichotomous noise has been derived elsewhere (Droste and Lindner 2014). Taking the shot-noise limit yields, for the \(i\)th interval (\(i = 1, \dots, N_{\text{int}}\)),

where \(r_0\) is the firing rate, the constant \(c_i\) depends on the interval,

(here \(x^+\) (\(x^-\)) indicates that the value is to be taken infinitesimally above (below) x), and the function in the exponent is defined as

For PIF, LIF, and QIF neurons, closed-form expressions for ϕ(v) are given in Appendix A. For the EIF, the integral has to be evaluated numerically.

Note that Eq. (7) gives, strictly speaking, only the continuous part of the probability density; if one considers the voltage during the refractory period to be clamped at \(v_R\), then \(P_0(v)\) should also contain a δ peak with weight \(r_0 \tau_{\text{ref}}\), centered at \(v_R\). To make comparison between theory and simulation somewhat easier, we discard this trivial contribution also in the simulation by building the histogram of voltage values only when the neuron is not refractory. We emphasize that in higher dimensional models or when using colored noise as an input, it becomes important to take the time evolution of neurons in the refractory state into account.

The firing rate \(r_0\), which appears in Eq. (7), can either be determined from the requirement that the probability density needs to be normalized,

\[ \int_{v_-}^{v_T} dv\, P_0(v) + r_0 \tau_{\text{ref}} = 1, \tag{10} \]

or using the recursive relations for the ISI moments given in the following section.
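Because the stationary density is proportional to \(r_0\) (the reset flux is its only source), one can evaluate Eq. (7) with \(r_0 = 1\) and fix \(r_0\) afterwards from the normalization. The sketch assumes the normalization that includes the refractory mass \(r_0\tau_{\text{ref}}\), a bounded shape function, and a simple trapezoidal rule; densities with integrable singularities (for instance at FPs) would need an adapted quadrature. Function and argument names are hypothetical.

```python
def rate_from_normalization(shape, v_lo, v_t, tau_ref, n=10000):
    """r0 from int_{v_lo}^{v_t} r0 * shape(v) dv + r0 * tau_ref = 1.

    shape(v) is P0(v) evaluated with r0 = 1; it must be bounded on [v_lo, v_t]
    for the trapezoidal rule used here to be accurate.
    """
    h = (v_t - v_lo) / n
    inner = sum(shape(v_lo + k * h) for k in range(1, n))
    integral = h * (0.5 * shape(v_lo) + inner + 0.5 * shape(v_t))
    return 1.0 / (integral + tau_ref)
```

As a noise-free sanity check, a PIF neuron with μ = 0.5 and \(\tau_{\mathrm{m}} = 20\) ms has the flat density \(P_0 = r_0\tau_{\mathrm{m}}/\mu\) on [0, 1], and the sketch returns \(r_0 = 1/42\) kHz, the inverse of the deterministic period of 40 ms plus \(\tau_{\text{ref}} = 2\) ms.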

In Fig. 2, we compare Eq. (7) for an EIF neuron to simulation results and the diffusion approximation. The theory matches the histogram obtained in the simulation. For the parameter regime shown, on average only eight presynaptic spikes occurring in short succession are needed to take the neuron from the resting potential to the unstable fixed point of f(v) (here slightly above 1.4). Because of the relative strength of the single presynaptic spikes, large qualitative differences from the diffusion approximation can be observed. In particular, while the diffusion approximation predicts a rather symmetric and approximately Gaussian distribution for the voltage, the sparse, excitatory shot noise input leads to an asymmetric, clearly non-Gaussian profile.

Fig. 2 Voltage distribution in a shot-noise-driven EIF neuron. We compare our theory (Eq. (7), red solid line) to a histogram obtained in a simulation (gray bars) and the diffusion approximation (green dashed line). The discontinuity in the density’s derivative, typical for the DA, is in this example particularly small and hence not visible. Parameters: \(\tau _{\mathrm {m}} = 20 \text { ms}, \mu =-0.1, a=0.2, r_{\text {in}}=0.12\text { kHz}, v_{R} = 0, \bar {v}_{T}=1, {\Delta }=0.2, \tau _{\text {ref}}=2\text { ms}\)

It is also interesting to consider the voltage distribution for neuron models with a sharp threshold. In Fig. 3, we plot \(P_0(v)\) of an LIF neuron for three different values of μ. Focusing on the value at the threshold, it is apparent that \(P_{0}(v_{T}^{-})\) is nonzero only for \(\mu > v_T\). This is reflected in the analytical formula Eq. (7), which yields

where

It is apparent from Eq. (8) that α = 1 if \(f(v_T) < 0\) and α < 1 for \(f(v_T) > 0\). There is a direct interpretation of α that will become clearer in the next section: it is the fraction of trajectories that cross the threshold because of a kick (rather than by drifting). The probability density can thus only be non-vanishing at the threshold if it is possible to drift across it in the absence of inputs.
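The interpretation of α can be checked in a simulation that records why each threshold crossing happened. The sketch below uses an event-driven scheme, which is a swapped-in technique and not necessarily how the simulations in this paper were done: for the LIF neuron, the voltage between input spikes relaxes exactly as \(v(t) = \mu + (v_0 - \mu)e^{-t/\tau_{\mathrm{m}}}\), so a drift crossing, possible only for \(\mu > v_T\), can be detected without time discretization. The refractory period is omitted because, with memoryless Poisson input, it does not change the sequence of reset-to-threshold passages and hence not α. Function and parameter names are hypothetical.

```python
import math
import random


def kick_fraction(mu, a, r_in, tau_m=20.0, v_r=0.0, v_t=1.0,
                  n_spikes=2000, seed=3):
    """Fraction alpha of threshold crossings caused by a kick (LIF, f(v) = mu - v).

    Event-driven: between input spikes v relaxes exactly towards mu.
    Requires r_in > 0 and v_r < v_t.
    """
    rng = random.Random(seed)
    v, by_kick, total = v_r, 0, 0
    while total < n_spikes:
        wait = rng.expovariate(r_in)                  # time to next input spike
        if mu > v_t and tau_m * math.log((mu - v) / (mu - v_t)) < wait:
            total += 1                                # drift reaches v_t first
            v = v_r                                   # reset; Poisson input is memoryless
            continue
        v = mu + (v - mu) * math.exp(-wait / tau_m)   # exact relaxation over `wait`
        v += rng.expovariate(1.0 / a)                 # exponentially distributed kick
        if v >= v_t:
            total += 1
            by_kick += 1
            v = v_r
    return by_kick / total
```

With μ = 0.5 < \(v_T\), every crossing is caused by a kick and the estimate is exactly 1; for μ > \(v_T\), it lies strictly between 0 and 1.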

Fig. 3 Voltage distribution in a shot-noise-driven leaky integrate-and-fire (LIF) neuron for three values of μ. Our theory (Eq. (7), red solid lines) is compared to histograms obtained in simulations (gray bars) and the diffusion approximation (green dashed lines). Remaining parameters: \(\tau_{\mathrm{m}} = 20\) ms, μ = −0.2, a = 0.2, \(r_{\text{in}} = 0.28\) kHz, \(v_R = 0\), \(v_T = 1\), \(\tau_{\text{ref}} = 2\) ms

If \(\mu < v_R\) (third panel in Fig. 3), the density \(P_0(v)\) jumps to a higher value at \(v_R\) when \(v_R\) is crossed from above. This is caused by reset trajectories which, until the next incoming spike occurs, drift to lower voltages.

6 ISI moments of shot-noise-driven IF neurons

We have previously derived recursive relations that allow one to obtain the moments of the ISI density of IF neurons driven by dichotomous noise (Droste and Lindner 2014). In the shot-noise limit, they read

where i(v) denotes the interval that contains v, \(r_i\) (\(l_i\)) is the upper (lower) boundary of the \(i\)th interval, \(c_i\) is defined as in Eq. (8), and where

Here, J(v,t) is the total probability current and \(J_-(v,t)\) is the probability current due to drift only. The \(n\)th ISI moment is then given by

In Eq. (11), we have introduced α, the fraction of trajectories that cross the threshold directly due to an incoming spike (and not by drifting over the threshold). Expressed via integrated fluxes, it should be given by \(\alpha = \mathcal {J}_{0}(v_{T}) - \mathcal {M}_{0}(v_{T})\). Indeed, evaluating Eqs. (13) and (14) for n = 0 and v = v T recovers the expression given in Eq. (12).

6.1 Firing rate

Using Eqs. (13), (14), the following formula for the firing rate can be obtained:

where \(\bar{c}_{i}\) is the interval boundary opposite to \(c_i\). For the special case of an LIF neuron with \(\tau_{\text{ref}} = 0\) and μ → 0, this can be shown to reduce exactly to the formula given by Richardson and Swarbrick (2010) if one sets the inhibitory weights to zero in their expression (Droste 2015, App. B5).

We plot the firing rate of an EIF neuron as a function of the input rate in Fig. 4 for two values of the slope factor Δ. Depending on the input rate, the actual firing rate can be either lower or higher than the DA predicts. For a larger slope factor, the deviations are less pronounced. This can be traced to an effective reduction in the weight of presynaptic spikes: As shown in the inset of Fig. 4, a larger slope factor pushes the effective threshold – the unstable fixed point – to higher voltages. Relative to the distance from resting potential to threshold, the spike weight is thus reduced by increasing Δ, bringing the system closer to the range of validity of the DA.

Fig. 4 Firing rate of an EIF neuron as a function of the input rate for two values of the slope factor Δ. Our theory (Eq. (17), solid lines) is compared to simulations (symbols) and the diffusion approximation (dashed lines). Inset: the nonlinearity f(v) for the two values of Δ. The white dot marks the unstable fixed point. Parameters: \(\tau _{\mathrm {m}} = 20 \text { ms}, \mu =-0.1, a=0.1, v_{R} = 0, \bar {v}_{T}= 1, \tau _{\text {ref}}=2\text { ms}\)

A particularly pronounced deviation between the SN case and the DA can be observed for very low input rates (shown in Fig. 5 for an LIF neuron). This is mainly due to the distributed weights in the SN case: no matter how sparsely input arrives, each input spike can potentially cause an output spike if its weight \(a_i > v_T - \mu\), where we have assumed that input is so sparse that the neuron relaxes to μ between input spikes. Since an exponentially distributed weight exceeds \(v_T - \mu\) with probability \(\exp[-(v_T - \mu)/a]\), for \(r_{\text{in}} \tau_{\mathrm{m}} \ll 1\) the output firing rate grows linearly with the input rate,

\[ r_0 \approx r_{\text{in}} \exp\left(-\frac{v_T - \mu}{a}\right). \tag{18} \]

In contrast, the firing rate predicted by the diffusion approximation (and also the one obtained for SN input with weights fixed to a, black squares in Fig. 5) falls off much more rapidly with decreasing input rate.

Fig. 5 Firing rate of an LIF neuron as a function of the input rate. Our theory (Eq. (17), red solid lines) is compared to simulations (gray symbols) and the diffusion approximation (green dashed line). Also shown are simulations using spike weights that were either distributed but cut off at \(a_{\max} = 0.4\) (green triangles) or fixed (at a = 0.1) (black squares), the maximally achievable firing rate \(\min(r_{\text{in}}, 1/\tau_{\text{ref}})\), and the asymptotic rate for SN input with exponentially distributed weights (Eq. (18)). Parameters: \(\tau_{\mathrm{m}} = 20\) ms, μ = 0.5, a = 0.1, \(v_R = 0\), \(v_T = 1\), \(\tau_{\text{ref}} = 2\) ms

The fact that individual incoming spikes can have a weight larger than the distance from reset to threshold is not necessarily realistic. However, even if we set the weight of all incoming spikes with \(a_i > a_{\max}\) to \(a_{\max}\) (green triangles in Fig. 5), the decay of the firing rate with decreasing \(r_{\text{in}}\) is still much slower than predicted by the DA.
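The low-rate asymptote \(r_0 \approx r_{\text{in}}\exp[-(v_T-\mu)/a]\) and the effect of a weight cutoff both reduce to a tail probability of the weight distribution. The sketch below (hypothetical names) assumes, as in the text, that the neuron has relaxed to μ < \(v_T\) before each input spike and counts only output spikes triggered by a single kick; with weights clipped at \(a_{\max} \le v_T - \mu\), this single-kick contribution vanishes, so any remaining output must come from several kicks in quick succession.

```python
import math
import random


def single_kick_rate(r_in, mu, a, v_t=1.0, a_max=math.inf):
    """Low-input-rate estimate r0 ~ r_in * P(min(a_i, a_max) > v_t - mu).

    Assumes mu < v_t and that the neuron has relaxed to mu before each input.
    """
    gap = v_t - mu
    if a_max <= gap:
        return 0.0                     # clipped weights can never bridge the gap
    return r_in * math.exp(-gap / a)   # tail of the exponential weight density


def exceedance_mc(mu, a, v_t=1.0, n=200000, seed=4):
    """Monte Carlo estimate of P(a_i > v_t - mu) for exponential weights."""
    rng = random.Random(seed)
    gap = v_t - mu
    hits = sum(1 for _ in range(n) if rng.expovariate(1.0 / a) > gap)
    return hits / n
```

For the parameters of Fig. 5 (μ = 0.5, a = 0.1), a fraction \(e^{-5} \approx 0.0067\) of input spikes is strong enough on its own, whereas the cutoff \(a_{\max} = 0.4\) used for the green triangles removes all of them.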

6.2 Coefficient of variation

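The recursive relations, Eqs. (13), (14), can also be used to calculate the coefficient of variation (CV),

\[ \mathrm{CV} = \frac{\sqrt{\langle T^2 \rangle - \langle T \rangle^2}}{\langle T \rangle}, \tag{19} \]

where \(\langle T^n \rangle\) denotes the \(n\)th ISI moment. The CV quantifies the regularity of the spiking. For a Poisson process, CV = 1; a lower CV indicates that firing is more regular than for a Poisson process.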
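In simulations, the CV is estimated from the sample moments of the ISIs instead of the recursion. A minimal sketch (hypothetical name), using the population variance:

```python
import math


def isi_cv(spike_times):
    """Coefficient of variation sqrt(<T^2> - <T>^2) / <T> of the ISIs."""
    isis = [t1 - t0 for t0, t1 in zip(spike_times, spike_times[1:])]
    if len(isis) < 2:
        raise ValueError("need at least three spikes")
    m1 = math.fsum(isis) / len(isis)                 # first ISI moment
    m2 = math.fsum(T * T for T in isis) / len(isis)  # second ISI moment
    return math.sqrt(max(m2 - m1 * m1, 0.0)) / m1
```

A perfectly periodic spike train gives CV = 0, and a Poisson spike train gives a CV close to 1.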

In Fig. 6, we plot the CV of a shot-noise-driven QIF neuron as a function of the mean spike weight a. In order to demonstrate the plausibility of limit cases, we deliberately show an unphysiologically large range for a. For small a, where the assumptions underlying the diffusion approximation are approximately fulfilled, the CV predicted by the diffusion approximation is still a good match. For very low spike weights, the input is so weak that spiking becomes a rare event, and the output spike train thus becomes Poisson-like with a CV of 1. At (unreasonably) high spike weights, the CV also approaches 1, which is a plausible limit: when a becomes very large, each incoming spike causes an output spike (note that in Fig. 6, \(\tau_{\text{ref}} = 0\)) and the CV approaches that of the Poissonian input. This is in contrast to the DA, which, for a QIF with \(v_R \to -\infty\), \(v_T \to \infty\), predicts a CV of \(1/\sqrt{3}\) (dotted line in Fig. 6) as the noise intensity tends to infinity (Lindner et al. 2003). However, even up to unphysiological mean spike weights of a = 1 (which here corresponds to an average of only two presynaptic spikes needed to reach the threshold), the CV calculated using the DA is not qualitatively different from the exact one.

Fig. 6 Coefficient of variation of a quadratic integrate-and-fire (QIF) neuron as a function of the mean spike weight a. Our theory (solid red line) is compared to simulations (gray symbols) and the diffusion approximation (green dashed line). The dashed line marks the value 1, expected for a Poisson process; the dotted line marks \(1/\sqrt{3}\), the asymptotic value expected for the DA with \(v_R \to -\infty\), \(v_T \to \infty\). Parameters: \(\tau_{\mathrm{m}} = 20\) ms, μ = −1, \(r_{\text{in}} = 0.675\) kHz, \(v_R = -20\), \(v_T = 20\), \(\tau_{\text{ref}} = 0\) ms

7 Power spectrum of shot-noise-driven LIF neurons

For leaky integrate-and-fire neurons driven by dichotomous noise, we have previously derived expressions for the power spectrum for the parameter regime \(\mu < v_T\) (Droste 2015; Droste and Lindner 2017). Their shot-noise limit reads

where \({\mathcal {F}}(v,f)\) and \({\mathcal {G}}(v,f)\) are given in terms of confluent hypergeometric functions (Abramowitz and Stegun 1972),

In Fig. 7, Eq. (20) is compared to simulation results and the diffusion approximation. The SN theory matches the simulation results well, whereas the DA does not match them even qualitatively. Somewhat surprisingly, in the SN-driven case, the low value of the power spectrum at zero frequency (proportional to the squared CV of the interspike interval) does not translate into a pronounced peak around the firing rate. Hence, although the single interspike interval for this parameter set has a rather low variability (close to the value in the DA), the resulting spike sequence does not show a pronounced periodicity.
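To compare Eq. (20) with simulations, the spectrum of a spike train \(x(t) = \sum_j \delta(t - t_j)\) can be estimated by averaging periodograms over windows. This sketch (hypothetical name; a direct evaluation of the Fourier sum rather than an FFT of a binned train) assumes sorted spike times in ms, so frequencies are in kHz, and should be evaluated at f > 0, ideally at multiples of 1/T, to avoid the bias from the mean rate. Two general checks apply: \(S(f) \to r_0\) at high frequencies, and for a renewal process \(S(0) = r_0\,\mathrm{CV}^2\), which is the zero-frequency relation mentioned above.

```python
import bisect
import cmath
import math


def spike_train_spectrum(spike_times, t_max, freqs, n_windows=1):
    """Windowed periodogram S(f) = <|sum_j exp(2 pi i f t_j)|^2> / T.

    spike_times must be sorted; the record [0, t_max) is split into n_windows
    windows of length T, and the squared Fourier sums are averaged over windows.
    """
    T = t_max / n_windows
    spectrum = []
    for f in freqs:
        acc = 0.0
        for w in range(n_windows):
            lo = w * T
            i0 = bisect.bisect_left(spike_times, lo)       # first spike in window
            i1 = bisect.bisect_left(spike_times, lo + T)   # one past the last
            amp = sum(cmath.exp(2j * math.pi * f * (t - lo))
                      for t in spike_times[i0:i1])
            acc += abs(amp) ** 2 / T
        spectrum.append(acc / n_windows)
    return spectrum
```

For a Poisson spike train, the estimate fluctuates around the rate at all f > 0, which makes a convenient test of the estimator before applying it to simulated LIF output.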

Fig. 7 Power spectrum of an LIF neuron. Theory (Eq. (20), solid red line), compared to simulation results (gray line) and the diffusion approximation (green dashed line). Parameters: \(\tau_{\mathrm{m}} = 20\) ms, μ = 0.5, a = 0.2, \(r_{\text{in}} = 1\) kHz, \(v_R = 0\), \(v_T = 1\), \(\tau_{\text{ref}} = 2\) ms

8 Linear response of the firing rate

The susceptibility with respect to a current modulation of LIF neurons driven by dichotomous noise has been derived elsewhere (Droste and Lindner 2017). Its shot-noise limit reads

Here, the derivatives of Eqs. (21), (22) with respect to v are given by (Abramowitz and Stegun 1972)
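The basic identity behind such derivatives is the standard relation \(\frac{d}{dz}{}_1F_1(a;b;z) = \frac{a}{b}\,{}_1F_1(a+1;b+1;z)\) (Abramowitz and Stegun 1972), where a and b denote the parameters of the hypergeometric function, not the spike weight. The sketch below (hypothetical helper names, truncated Kummer series, b not a non-positive integer) implements it and also works for complex parameters; any v-dependence of the argument would enter through the chain rule.

```python
def hyp1f1(a, b, z, terms=200):
    """Truncated Kummer series 1F1(a; b; z); a, b, z may be complex."""
    total, term = 1.0, 1.0
    for n in range(terms):
        term *= (a + n) / ((b + n) * (n + 1)) * z
        total += term
    return total


def d_hyp1f1(a, b, z):
    """d/dz 1F1(a; b; z) = (a / b) 1F1(a + 1; b + 1; z)."""
    return a / b * hyp1f1(a + 1, b + 1, z)
```

A central finite difference of `hyp1f1` agrees with `d_hyp1f1` to high accuracy, both for real and for complex parameters.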

In Fig. 8, we compare the absolute value and the phase of the susceptibility to simulations and the DA. Especially for high frequencies, a marked deviation from the DA can be observed. This high-frequency behavior of the susceptibility is of particular interest to understand how well neurons can track fast signals; it has thus attracted both experimental and theoretical attention (Fourcaud-Trocmé et al. 2003; Boucsein et al. 2009; Tchumatchenko et al. 2011; Ilin et al. 2013; Ostojic et al. 2015; Doose et al. 2016). With the properties of confluent hypergeometric functions (Abramowitz and Stegun 1972), we find from Eq. (23) for f ≫ 1,

This is in contrast to LIF neurons driven by Gaussian white noise (i.e. the DA), for which the absolute value of the susceptibility decays like \(1/\sqrt {f}\) while its phase approaches \(\frac {\pi }{4}\) (see Fourcaud and Brunel 2002, for a comparison of different neuron/noise combinations).

Fig. 8 Susceptibility of an LIF neuron with respect to a current modulation. Theory (Eq. (23), solid red line) compared to simulations (gray symbols) and the diffusion approximation (green dashed line). Also shown is the high-frequency behavior of the theory (blue dotted line). Parameters: \(\tau_{\mathrm{m}} = 20\) ms, μ = 0.5, a = 0.2, \(r_{\text{in}} = 0.28\) kHz, \(v_R = 0\), \(v_T = 1\), \(\tau_{\text{ref}} = 2\) ms

Note that the shot-noise limit of a dichotomous-noise-driven LIF neuron can also be used to study the susceptibility with respect to a modulation of the input rate (Droste 2015), a quantity previously studied by Richardson and Swarbrick (2010).

9 Discussion

We have used the shot-noise limit of dichotomous noise to obtain novel analytical expressions for statistical properties of integrate-and-fire neurons driven by excitatory shot noise with exponentially distributed weights. We have derived exact expressions for the stationary distribution of voltages and the moments of the ISI density for general IF neurons as well as, for LIF neurons, the power spectrum and the susceptibility to a current signal.

Our approach is complementary to others that have previously been employed. We obtain, for instance, the same expression for the Laplace transform of the first-passage-time density of LIF neurons as Novikov et al. (2005) (not shown), or the same expression for the firing rate as Richardson and Swarbrick (2010) (if one considers only excitatory input). Our expression for the power spectrum is an alternative to the one given by Richardson and Swarbrick (2010); it is formulated in terms of confluent hypergeometric functions, which can be evaluated quickly. Exact results for the coefficient of variation (CV), the voltage distribution, or the susceptibility with respect to a current-modulating signal have, to our knowledge, not previously been obtained for SN-driven IF neurons.

For low input rates, the firing rate of the SN-driven neuron was found to be much higher than predicted by the DA. As we have argued, this is mainly due to the exponential distribution of spike weights, which implies that individual presynaptic spikes can be strong enough to make the neuron fire. While arbitrarily high spike weights are not realistic, this effect survives at least partially when we introduce a weight cutoff, i.e. when we no longer allow individual spikes to push the neuron across the threshold. This may be related to the finding that few strong synapses can stabilize self-sustained spiking activity in recurrent networks (Ikegaya et al. 2013).

An obvious limitation of our approach is that it does not incorporate inhibitory SN input to the neuron. Many of the qualitative results in this paper can be expected to persist also with additional inhibitory input: the probability density at the threshold, for instance, should still only be nonzero if the threshold can be crossed by drifting, the output firing rate should still be proportional to the (excitatory) input rate when input is sparse, and the CV should still approach 1 in the \(a \to \infty\) limit. Nevertheless, it would be valuable to have quantitatively exact expressions also for this more realistic case. A possible approach could be to derive statistics for neurons driven by a trichotomous noise (Mankin et al. 1999) and then take a shot-noise limit.

Another interesting extension is to consider multiple neurons with shot noise in order to understand the emergence of correlations among neurons in recurrent neural networks. The simplest problem of this kind is the calculation of the count correlations of two uncoupled neurons which are driven by a common noise (de la Rocha et al. 2007). Recent work indicates that a common shot noise has a decisively different effect than a Gaussian noise (Rosenbaum and Josic 2011). Some of the methods developed here may be also useful for tackling this more involved problem.

References

Abramowitz, M., & Stegun, I.A. (1972). Handbook of mathematical functions with formulas, graphs and mathematical tables. New York: Dover.

Badel, L., Lefort, S., Brette, R., Petersen, C.C., Gerstner, W., & Richardson, M.J. (2008). Dynamic IV curves are reliable predictors of naturalistic pyramidal-neuron voltage traces. Journal of Neurophysiology, 99(2), 656–666.

Bena, I. (2006). Dichotomous Markov noise: exact results for out-of-equilibrium systems. International Journal of Modern Physics B, 20(20), 2825–2888.

Boucsein, C., Tetzlaff, T., Meier, R., Aertsen, A., & Naundorf, B. (2009). Dynamical response properties of neocortical neuron ensembles: multiplicative versus additive noise. Journal of Neuroscience, 29(4), 1006–1010.

Braitenberg, V., & Schüz, A. (1998). Cortex: statistics and geometry of neuronal connectivity. Heidelberg, Berlin: Springer.

van den Broeck, C. (1983). On the relation between white shot noise, Gaussian white noise, and the dichotomic Markov process. Journal of Statistical Physics, 31(3), 467–483.

Brunel, N. (2000). Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. Journal of Computational Neuroscience, 8(3), 183–208.

Brunel, N., & Latham, P.E. (2003). Firing rate of the noisy quadratic integrate-and-fire neuron. Neural Computation, 15(10), 2281–2306.

Brunel, N., & Sergi, S. (1998). Firing frequency of leaky intergrate-and-fire neurons with synaptic current dynamics. Journal of Theoretical Biology, 195(1), 87–95.

Brunel, N., Chance, F.S., Fourcaud, N., & Abbott, L.F. (2001). Effects of synaptic noise and filtering on the frequency response of spiking neurons. Physical Review Letters, 86, 2186–2189.

Doose, J., Doron, G., Brecht, M., & Lindner, B. (2016). Noisy juxtacellular stimulation in vivo leads to reliable spiking and reveals high-frequency coding in single neurons. The Journal of Neuroscience, 36(43), 11120–11132.

Droste, F. (2015). Signal transmission in stochastic neuron models with non-white or non-Gaussian noise. PhD thesis, Humboldt-Universität zu Berlin.

Droste, F., & Lindner, B. (2014). Integrate-and-fire neurons driven by asymmetric dichotomous noise. Biological Cybernetics, 108(6), 825–843.

Droste, F., & Lindner, B. (2017). Exact results for power spectrum and susceptibility of a leaky integrate-and-fire neuron with two-state noise. Physical Review E, 95, 012411.

Fourcaud, N., & Brunel, N. (2002). Dynamics of the firing probability of noisy integrate-and-fire neurons. Neural Computation, 14(9), 2057–2110.

Fourcaud-Trocmé, N., Hansel, D., Van Vreeswijk, C., & Brunel, N. (2003). How spike generation mechanisms determine the neuronal response to fluctuating inputs. The Journal of Neuroscience, 23(37), 11628–11640.

Gardiner, C.W. (1985). Handbook of stochastic methods. Heidelberg: Springer.

Gerstein, G.L., & Mandelbrot, B. (1964). Random walk models for the spike activity of a single neuron. Biophysical Journal, 4, 41.

Helias, M., Deger, M., Diesmann, M., & Rotter, S. (2010a). Equilibrium and response properties of the integrate-and-fire neuron in discrete time. Frontiers in Computational Neuroscience, 3, 29.

Helias, M., Deger, M., Rotter, S., & Diesmann, M. (2010b). Instantaneous non-linear processing by pulse-coupled threshold units. PLoS Computational Biology, 6(9), e1000929.

Helias, M., Deger, M., Rotter, S., & Diesmann, M. (2011). Finite post synaptic potentials cause a fast neuronal response. Frontiers in Neuroscience, 5, 19.

Holden, A.V. (1976). Models of the stochastic activity of neurones. Heidelberg: Springer.

Ikegaya, Y., Sasaki, T., Ishikawa, D., Honma, N., Tao, K., Takahashi, N., Minamisawa, G., Ujita, S., & Matsuki, N. (2013). Interpyramid spike transmission stabilizes the sparseness of recurrent network activity. Cerebral Cortex, 23(2), 293–304.

Ilin, V., Malyshev, A., Wolf, F., & Volgushev, M. (2013). Fast computations in cortical ensembles require rapid initiation of action potentials. Journal of Neuroscience, 33, 2281.

Jacobsen, M., & Jensen, A.T. (2007). Exit times for a class of piecewise exponential Markov processes with two-sided jumps. Stochastic Processes and their Applications, 117(9), 1330–1356.

Lefort, S., Tomm, C., Sarria, J.C.F., & Petersen, C.C. (2009). The excitatory neuronal network of the C2 barrel column in mouse primary somatosensory cortex. Neuron, 61(2), 301–316.

Lindner, B., & Schimansky-Geier, L. (2001). Transmission of noise coded versus additive signals through a neuronal ensemble. Physical Review Letters, 86, 2934–2937.

Lindner, B., Schimansky-Geier, L., & Longtin, A. (2002). Maximizing spike train coherence or incoherence in the leaky integrate-and-fire model. Physical Review E, 66, 031916.

Lindner, B., Longtin, A., & Bulsara, A. (2003). Analytic expressions for rate and CV of a type I neuron driven by white Gaussian noise. Neural Computation, 15(8), 1761–1788.

Loebel, A., Silberberg, G., Helbig, D., Markram, H., Tsodyks, M., & Richardson, M.J. (2009). Multiquantal release underlies the distribution of synaptic efficacies in the neocortex. Frontiers in Computational Neuroscience, 3.

Ly, C., & Tranchina, D. (2007). Critical analysis of dimension reduction by a moment closure method in a population density approach to neural network modeling. Neural Computation, 19, 2032.

Mankin, R., Ainsaar, A., & Reiter, E. (1999). Trichotomous noise-induced transitions. Physical Review E, 60, 1374–1380.

Markram, H., Lübke, J., Frotscher, M., Roth, A., & Sakmann, B. (1997). Physiology and anatomy of synaptic connections between thick tufted pyramidal neurones in the developing rat neocortex. Journal of Physiology, 500(Pt 2), 409.

Masoliver, J. (1987). First-passage times for non-Markovian processes: Shot noise. Physical Review A, 35(9), 3918.

Moreno, R., de La Rocha, J., Renart, A., & Parga, N. (2002). Response of spiking neurons to correlated inputs. Physical Review Letters, 89(28), 288101.

Moreno-Bote, R., Renart, A., & Parga, N. (2008). Theory of input spike auto-and cross-correlations and their effect on the response of spiking neurons. Neural Computation, 20(7), 1651–1705.

Novikov, A., Melchers, R., Shinjikashvili, E., & Kordzakhia, N. (2005). First passage time of filtered Poisson process with exponential shape function. Probabilistic Engineering Mechanics, 20(1), 57–65.

Nykamp, D.Q., & Tranchina, D. (2000). A population density approach that facilitates large-scale modeling of neural networks: Analysis and an application to orientation tuning. Journal of Computational Neuroscience, 8, 19.

Ostojic, S., Szapiro, G., Schwartz, E., Barbour, B., Brunel, N., & Hakim, V. (2015). Neuronal morphology generates high-frequency firing resonance. Journal of Neuroscience, 35(18), 7056–7068.

Ricciardi, L.M., & Sacerdote, L. (1979). The Ornstein-Uhlenbeck process as a model for neuronal activity. Biological Cybernetics, 35, 1.

Richardson, M.J., & Gerstner, W. (2005). Synaptic shot noise and conductance fluctuations affect the membrane voltage with equal significance. Neural Computation, 17(4), 923–947.

Richardson, M.J., & Swarbrick, R. (2010). Firing-rate response of a neuron receiving excitatory and inhibitory synaptic shot noise. Physical Review Letters, 105(17), 178102.

Richardson, M.J.E. (2004). Effects of synaptic conductance on the voltage distribution and firing rate of spiking neurons. Physical Review E, 69(5 Pt 1), 051918.

Richardson, M.J.E., & Gerstner, W. (2006). Statistics of subthreshold neuronal voltage fluctuations due to conductance-based synaptic shot noise. Chaos, 16(2), 026106.

de la Rocha, J., Doiron, B., Shea-Brown, E., Josic, K., & Reyes, A. (2007). Correlation between neural spike trains increases with firing rate. Nature, 448, 802.

Rosenbaum, R., & Josic, K. (2011). Mechanisms that modulate the transfer of spiking correlations. Neural Computation, 23, 1261.

Schwalger, T., Droste, F., & Lindner, B. (2015). Statistical structure of neural spiking under non-Poissonian or other non-white stimulation. Journal of Computational Neuroscience, 39, 29–51.

Siegert, A.J.F. (1951). On the first passage time probability problem. Physical Review, 81, 617–623.

Sirovich, L. (2003). Dynamics of neuronal populations: eigenfunction theory; some solvable cases. Network, 14(2), 249–272.

Sirovich, L., Omurtag, A., & Knight, B. (2000). Dynamics of neuronal populations: The equilibrium solution. SIAM Journal on Applied Mathematics, 60(6), 2009–2028.

Song, S., Sjöström, P.J., Reigl, M., Nelson, S., & Chklovskii, D.B. (2005). Highly nonrandom features of synaptic connectivity in local cortical circuits. PLoS Biology, 3(3), e68.

Stein, R.B. (1965). A theoretical analysis of neuronal variability. Biophysical Journal, 5, 173.

Stein, R.B., French, A.S., & Holden, A.V. (1972). The frequency response, coherence, and information capacity of two neuronal models. Biophysical Journal, 12, 295.

Tchumatchenko, T., Malyshev, A., Wolf, F., & Volgushev, M. (2011). Ultrafast population encoding by cortical neurons. The Journal of Neuroscience, 31, 12171.

Thomson, A.M., Deuchars, J., & West, D.C. (1993). Large, deep layer pyramid-pyramid single axon EPSPs in slices of rat motor cortex display paired pulse and frequency-dependent depression, mediated presynaptically and self-facilitation, mediated postsynaptically. Journal of Neurophysiology, 70(6), 2354–2369.

Tsurui, A., & Osaki, S. (1976). On a first-passage problem for a cumulative process with exponential decay. Stochastic Processes and their Applications, 4(1), 79–88.

Tuckwell, H.C. (1988). Introduction to theoretical neurobiology (Vol. 2): Nonlinear and stochastic theories (Vol. 8). Cambridge: Cambridge University Press.

Vilela, R.D., & Lindner, B. (2009a). Are the input parameters of white noise driven integrate and fire neurons uniquely determined by rate and CV? Journal of Theoretical Biology, 257(1), 90–99.

Vilela, R.D., & Lindner, B. (2009b). Comparative study of different integrate-and-fire neurons: Spontaneous activity, dynamical response, and stimulus-induced correlation. Physical Review E, 80, 031909.

Wolff, L., & Lindner, B. (2008). Method to calculate the moments of the membrane voltage in a model neuron driven by multiplicative filtered shot noise. Physical Review E, 77, 041913.

Wolff, L., & Lindner, B. (2010). Mean, variance, and autocorrelation of subthreshold potential fluctuations driven by filtered conductance shot noise. Neural Computation, 22(1), 94–120.

Acknowledgements

This work was funded by the BMBF (FKZ:01GQ1001A), the DFG research training group GRK1589/1, and a DFG research grant (LI 1046/2-1).

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Additional information

Action Editor: Brent Doiron

Appendix A: Expressions for ϕ(v) for various neuron models

Here, we list explicit expressions for

$$ \phi(v) = \frac{v}{a} + \tau_{\mathrm{m}} r_{\text{in}} \int^{v} \frac{\mathrm{d}x}{f(x)} $$

for PIF, LIF and QIF neurons.

- PIF (f(v) = μ):

  $$ \phi(v) = v \left( \frac{1}{a} + \frac{\tau_{\mathrm{m}} r_{\text{in}}}{\mu} \right). \tag{28} $$

- LIF (f(v) = μ − v):

  $$ \phi(v) = \frac{v}{a} - \tau_{\mathrm{m}} r_{\text{in}} \ln(|\mu - v|). \tag{29} $$

- QIF (f(v) = μ + v²):

  $$ \phi(v) = \frac{v}{a} + \frac{\tau_{\mathrm{m}} r_{\text{in}}}{\sqrt{|\mu|}} \begin{cases} \arctan\left( \frac{v}{\sqrt{\mu}} \right), & \mu > 0, \\ -\operatorname{arctanh}\left( \frac{v}{\sqrt{-\mu}} \right), & -\sqrt{-\mu} < v < \sqrt{-\mu}, \\ -\operatorname{arccoth}\left( \frac{v}{\sqrt{-\mu}} \right), & \text{otherwise}. \end{cases} \tag{30} $$

About this article

Cite this article

Droste, F., Lindner, B. Exact analytical results for integrate-and-fire neurons driven by excitatory shot noise. J Comput Neurosci 43, 81–91 (2017). https://doi.org/10.1007/s10827-017-0649-5

DOI: https://doi.org/10.1007/s10827-017-0649-5