Abstract

We consider a general integrate-and-fire (IF) neuron driven by asymmetric dichotomous noise. In contrast to the Gaussian white noise usually used in the so-called diffusion approximation, this noise is colored, i.e., it exhibits temporal correlations. We give an analytical expression for the stationary voltage distribution of a neuron receiving such noise and derive recursive relations for the moments of the first passage time density, which allow us to calculate the firing rate and the coefficient of variation of interspike intervals. We study how correlations in the input affect the rate and regularity of firing under variation of the model’s parameters for leaky and quadratic IF neurons. Further, we consider the limit of small correlation times and find that the lowest-order corrections to the first passage time moments are proportional to the square root of the correlation time. We show analytically that to this lowest order, correlations always lead to a decrease in firing rate for a leaky IF neuron. All theoretical expressions are compared to simulations of leaky and quadratic IF neurons.



1 Introduction

Models of the integrate-and-fire (IF) type have been widely used in the study of neural systems (reviewed in Burkitt 2006a, b). Usually, they describe neuronal dynamics via a single variable, the membrane voltage \(v\), complemented by a fire-and-reset rule that is applied once a voltage threshold is crossed. In numerical as well as analytical studies, this minimal description has allowed insights, for instance, into neuronal information transmission properties (Brunel et al. 2001; Lindner and Schimansky-Geier 2001), the effect of input correlations (De La Rocha et al. 2007; Salinas and Sejnowski 2002; Moreno et al. 2002), or the dynamics of whole networks (Brunel 2000; Softky and Koch 1993; Shadlen and Newsome 1998). Further, it can be readily extended to include more complex behavior, such as spike-frequency adaptation (Liu and Wang 2001; Schwalger et al. 2010), which can then be studied in a well-understood setting. Last but not least, exponential IF models have been shown to be powerful predictors of the dynamics of real pyramidal cells (Badel et al. 2008).

The synaptic input to the neuron is commonly thought of as a sequence of stereotypical spikes with stochastic arrival times; mathematically speaking, it is a point process where each event is a delta function (shot noise). As such discrete input with a potentially rich correlation structure is notoriously difficult to treat analytically, many studies have employed the so-called diffusion approximation, modeling the massive synaptic bombardment as Gaussian white noise. This is usually justified by arguing that the overall input is a superposition of a large number of nearly uncorrelated spike trains and that each individual synaptic event only has a small weight.

However, there are various physiologically observed input characteristics that cannot be accounted for by uncorrelated Gaussian fluctuations. Deviations from Gaussian white noise occur both with respect to the stationary distribution, which is often non-Gaussian, and temporal correlations, which can be pronounced. In other words, a more faithful description of the input is a non-Gaussian colored noise process.

In certain brain states, for example, the input switches between two well distinguishable levels, so-called up and down states of the network (Cowan and Wilson 1994; Shu et al. 2003). Such input is not Gaussian but follows a bimodal distribution; on a coarse level, it can be considered two-valued. Further, in some cells, it has been shown (Markram et al. 1997) that the assumption of many small superposed pulses underlying the Gaussian approximation is violated and that thus, the shot-noise character of synaptic input should be taken into account (Richardson and Gerstner 2005).

Deviations from the assumption of white input (i.e., lack of temporal correlations) are even more severe in many neural systems. The activity of presynaptic cells may be correlated, both among neurons (De La Rocha et al. 2007) and in time (Bair et al. 1994), and superposition of spike trains does not remove temporal correlations (Lindner 2006). This, along with synaptic filtering (Brunel and Sergi 1998; Moreno et al. 2002) and short-term synaptic plasticity (Lindner et al. 2009), leads to an overall input that is colored instead of white.

A simple non-Gaussian colored noise process is dichotomous noise, a Markovian two-valued stochastic process. In physics and biophysics, dichotomous noise has been popular as a modeling tool for a long time (Horsthemke and Lefever 1984; Hänggi and Jung 1995; Bena 2006), due both to its analytical tractability and to its applicability to “on-off” situations. Dichotomous noise has been used to model a vast number of phenomena, ranging from the movement of molecular motors (Astumian and Bier 1994) to the switching of single spins (Rugar et al. 2004). Some further applications arise because dichotomous noise converges to Gaussian white noise or Poissonian shot noise when taken to appropriate limits (van den Broeck 1983). In contrast to the (likewise exponentially correlated) Gaussian Ornstein–Uhlenbeck process, dichotomous noise is a discrete process, possessing infinitely many non-vanishing cumulant correlation functions.

In neuroscience, dichotomous noise has been used to model the opening and closing of ion channels (Horsthemke and Lefever 1981; Fitzhugh 1983; Goychuk and Hänggi 2000); its use as a model for neural input has been relatively sparse (Salinas and Sejnowski 2002; Lindner 2004a). However, dichotomous noise is interesting in several of the situations mentioned above. Specifically, it can be used to model input (i) from a presynaptic population that undergoes transitions between up and down states, (ii) in the form of finite pulses, and (iii) from a strong single presynaptic bursting cell. More generally, dichotomous noise allows one to build a tractable model to explore the effect of temporal correlations and non-Gaussianity of input currents on the firing statistics of neurons.

Here, we apply the well-developed techniques for the study of dichotomous flows (Horsthemke and Lefever 1984; Bena 2006) to neuron models of the IF type. In doing so, the main difference from the problems previously considered in the statistical physics literature lies in the initial and boundary conditions that are imposed by the fire-and-reset rule. In the first part, we derive exact expressions for the stationary distribution of membrane voltages as well as the moments of the first passage time density for a general IF neuron driven by dichotomous noise with asymmetric switching rates. We emphasize that this constitutes one of the rare cases where the firing statistics of a stochastic neural dynamics can be exactly calculated. As a first application, we then use these expressions to study the effect of temporal input correlations on neural firing. In the interest of conciseness, we restrict ourselves to symmetric rates in this case; the general asymmetric case is especially relevant for taking the shot-noise limit and for modeling input from a presynaptic population that switches between up and down states, both of which will be dealt with elsewhere.

The impact of temporal correlations in the input on the firing statistics of a neuron has been studied by a number of authors, mostly using Ornstein–Uhlenbeck (OU) processes (Brunel and Sergi 1998; Fourcaud and Brunel 2002; Salinas and Sejnowski 2002; Brunel and Latham 2003; Middleton et al. 2003; Lindner 2004a; Moreno et al. 2002; Schwalger and Schimansky-Geier 2008). Specifically, one can ask whether the firing rate increases or decreases with increasing correlation time and whether firing becomes more or less regular. Salinas and Sejnowski (2002) have investigated these questions in a setup of perfect and leaky IF neurons receiving symmetric dichotomous noise. They give analytical expressions for firing rate and CV in closed form for the perfect IF and as a series expansion for the leaky IF. For both, they report that both firing rate and CV increase with increasing correlation time \(\tau _c\). When varying \(\tau _c\), Salinas and Sejnowski (2002) keep the noise variance fixed. As we argue below, this is the proper choice for input with large \(\tau _c\), while for small \(\tau _c\), fixing the noise intensity allows for a more meaningful comparison between white and correlated input, and leads to different results (most importantly, we find a decrease instead of an increase in firing rate).

The outline of this paper is as follows: After describing the model and the governing equations (Sect. 2), we calculate exact expressions for the stationary probability density (Sect. 3) of a general IF neuron driven by dichotomous noise. We then give exact recursive relations for the moments of the first passage time density (Sect. 4) and derive a simple approximation for rate and CV that is valid in the limit of long correlation times (Sect. 5). In Sect. 6, we apply these results and study the effect of correlations on firing rate and CV. Specifically, we plot firing rate and CV as a function of the correlation time, the base current, and the noise intensity both for leaky and quadratic IF neurons and compare them with the white-noise-driven case. In all cases, we find the firing rate to be lower for correlated input, while the CV may be either higher or lower. In Sect. 7, we consider the limit of small correlation times and show analytically that to the lowest order in \(\tau _c\), the firing rate of leaky IF neurons always decreases with the correlation time. We conclude with a brief discussion of our results in Sect. 8.

2 Model and governing equations

We consider a general IF neuron. Between spikes, its voltage dynamics is described by

$$\dot{v} = f(v) + \eta (t),$$(1)

where \(\eta (t)\) is dichotomous noise and \(f(v)\) is a continuous, potentially nonlinear function. Whenever \(v\) crosses the threshold \(v_T\), it is reset to \(v_R\) and a spike is registered. Common choices for \(f(v)\) are \(f(v) = \mu \), yielding the perfect IF neuron (PIF), \(f(v) = \mu - v\), the leaky IF neuron (LIF), and \(f(v) = \mu + v^2\), the quadratic IF neuron (QIF), where the parameter \(\mu \) quantifies a base current to the neuron. We will use the latter two models for numerical verification of the expressions we derive, but stress that our theory is valid for arbitrary nonlinearities. Note that for ease of notation, we consider non-dimensionalized dynamics where time is measured in units of the membrane time constant.

The dichotomous Markovian process (DMP) \(\eta (t)\) is a two-state process; it jumps between the values \(\sigma _+\) and \(\sigma _-\) (see Fig. 1). Jumps happen at constant rates \(k_+\) and \(k_-\), where \(k_+\) denotes the rate of hopping from \(\sigma _+\) to \(\sigma _-\) (and vice versa for \(k_-\)). When \(k_+ = k_- = k\) and \(\sigma _+ = -\sigma _- = \sigma ,\,\eta (t)\) is called symmetric. Note that asymmetry in the values \(\sigma _\pm \) can always be transformed away: a system driven by such noise is equivalent to a system with an additional bias \((\sigma _+ + \sigma _-)/2\), driven by noise with symmetric values \(\pm \sigma \), where \(\sigma = (\sigma _+ - \sigma _-)/2\). We assume \(\sigma _+ > \sigma _-\) and restrict all further analysis to the case \(f(v) + \sigma _+ > 0 \) for all \(v \in [v_R, v_T]\) (thereby excluding scenarios where the neuron would never be able to reach the threshold).

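The time evolution of dichotomous noise is described by the master equation

$$\begin{aligned} \dot{P}_+(t)&= -k_+ P_+(t) + k_- P_-(t), \end{aligned}$$(2)

$$\begin{aligned} \dot{P}_-(t)&= k_+ P_+(t) - k_- P_-(t), \end{aligned}$$(3)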

where \(P_{\pm }(t)\) is the probability that the DMP takes the value \(\sigma _{\pm }\) at time \(t\). The solution of this equation is straightforward and well known (Fitzhugh 1983; Horsthemke and Lefever 1984); here, we list important statistics it allows one to calculate. The (stationary) expectation of \(\eta (t)\) is

$$\left\langle \eta \right\rangle = \frac{k_- \sigma _+ + k_+ \sigma _-}{k_+ + k_-}.$$(4)

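It is apparent that in general, asymmetric dichotomous noise has nonzero mean. The residence times in each state are exponentially distributed with expectation \(1/k_\pm \); the variance of the process is

$$\left\langle \Delta \eta ^2 \right\rangle = \frac{k_+ k_- (\sigma _+ - \sigma _-)^2}{(k_+ + k_-)^2}.$$(5)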

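Dichotomous noise is exponentially correlated, \(\left\langle \Delta \eta (t) \Delta \eta (t + \tau ) \right\rangle = \left\langle \Delta \eta ^2 \right\rangle e^{-|\tau |/\tau _c}\), with the correlation time \(\tau _c\) given by

$$\tau _c = \frac{1}{k_+ + k_-}.$$(6)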

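Another quantity useful for the comparison to other noise processes is the noise intensity

$$D = \int _0^\infty d\tau \; \left\langle \Delta \eta (t) \Delta \eta (t + \tau ) \right\rangle = \frac{k_+ k_- (\sigma _+ - \sigma _-)^2}{(k_+ + k_-)^3}.$$(7)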
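To make the noise statistics above concrete, the following Python sketch evaluates the mean, variance, correlation time, and intensity of an asymmetric DMP and checks the mean against a Monte Carlo time average built from exponentially distributed residence times. The function names are illustrative assumptions, not code from this work; the intensity is taken as \(D = \left\langle \Delta \eta ^2 \right\rangle \tau _c\), i.e., the integral of the exponential correlation function over positive lags.

```python
import math
import random


def dmp_statistics(k_plus, k_minus, s_plus, s_minus):
    """Stationary mean, variance, tau_c and intensity D of a dichotomous process.

    k_plus is the rate of jumping sigma_+ -> sigma_-, k_minus the reverse rate.
    """
    k_sum = k_plus + k_minus
    mean = (k_minus * s_plus + k_plus * s_minus) / k_sum
    var = k_plus * k_minus * (s_plus - s_minus) ** 2 / k_sum ** 2
    tau_c = 1.0 / k_sum
    return mean, var, tau_c, var * tau_c  # D = variance * tau_c


def symmetric_params(D, tau_c):
    """Values +/-sigma and common rate k of a symmetric DMP with given D, tau_c."""
    return math.sqrt(D / tau_c), 1.0 / (2.0 * tau_c)


def time_average(k_plus, k_minus, s_plus, s_minus, t_total, seed=0):
    """Monte Carlo time average of eta(t) over [0, t_total]."""
    rng = random.Random(seed)
    in_plus = rng.random() < k_minus / (k_plus + k_minus)  # stationary initial state
    t, integral = 0.0, 0.0
    while True:
        value = s_plus if in_plus else s_minus
        # residence time in the current state is exponential with the leaving rate
        dwell = rng.expovariate(k_plus if in_plus else k_minus)
        if t + dwell >= t_total:
            integral += value * (t_total - t)
            return integral / t_total
        integral += value * dwell
        t += dwell
        in_plus = not in_plus
```

For the symmetric parameters used in Fig. 2 (\(D = 0.4\), \(\tau _c = 0.15\)), `symmetric_params` gives \(\sigma \approx 1.63\) and \(k \approx 3.33\).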

In accordance with the standard approach for dynamical systems driven by dichotomous noise (Horsthemke and Lefever 1981; Bena 2006), we extend the master equation to the full system (noise process and neuronal dynamics) by considering \(P_{\pm }(v,t) dv\), i.e., the probability that the DMP takes the value \(\sigma _{\pm }\) and the neuron’s membrane voltage is in the interval \((v, v + dv)\) at time \(t\). To this end, we combine the continuity equations that link the change in \(P_{\pm }(v,t)\) to the fluxes \(J_{\pm }(v,t) = (f(v) + \sigma _{\pm }) P_{\pm }(v,t)\) with Eqs. (2) and (3). Additionally, we need to incorporate the fire-and-reset rule: Trajectories are removed at \(v_T\) and reinserted at \(v_R\). If \(f(v_T) + \sigma _- > 0\), the threshold can be crossed at both noise values. For the moment, we call the respective fluxes over the threshold \(r_+(t) {:=} J_+(v_T,t)\) and \(r_-(t) {:=} J_-(v_T,t)\); they sum to the instantaneous firing rate, \(r(t) = r_+(t) + r_-(t)\). The probability density is thus governed by

$$\begin{aligned} \partial _t P_+(v,t)&= -\partial _v \left[ (f(v) + \sigma _+) P_+(v,t)\right] - k_+ P_+(v,t) + k_- P_-(v,t) + r_+(t) \left[ \delta (v - v_R) - \delta (v - v_T)\right] , \end{aligned}$$(8)

$$\begin{aligned} \partial _t P_-(v,t)&= -\partial _v \left[ (f(v) + \sigma _-) P_-(v,t)\right] + k_+ P_+(v,t) - k_- P_-(v,t) + r_-(t) \left[ \delta (v - v_R) - \delta (v - v_T)\right] . \end{aligned}$$(9)

This describes the stochastic switching between two deterministic flows, the “+” dynamics and the “-” dynamics. The source and sink terms \(r_\pm (t) \delta (v - v_R)\) and \(r_\pm (t) \delta (v - v_T)\) can be seen as a formal way to prescribe boundary conditions (cf. Richardson and Swarbrick 2010). They implement the fire-and-reset rule and mark the most profound difference from dichotomous noise problems previously treated in the statistical physics literature.
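The stochastic dynamics \(\dot{v} = f(v) + \eta (t)\) with fire-and-reset can also be simulated directly, which is the usual way of checking such theory curves. The sketch below is a plain Euler scheme and not the simulation code used for the figures; it assumes a fixed step `dt`, switching probabilities \(k_\pm \,dt\) per step (accurate only to first order in `dt`), and a first interval that starts at \(v_R\) with the noise drawn from its stationary distribution. All names are hypothetical.

```python
import math
import random


def simulate_if_dmp(f, v_r, v_t, k_plus, k_minus, s_plus, s_minus,
                    dt=1e-3, t_total=200.0, seed=1):
    """Euler sketch of dv/dt = f(v) + eta(t) with fire-and-reset; returns the ISIs."""
    rng = random.Random(seed)
    in_plus = rng.random() < k_minus / (k_plus + k_minus)  # stationary noise state
    v, t, last_spike = v_r, 0.0, 0.0
    isis = []
    for _ in range(int(t_total / dt)):
        # leave the current noise state with probability (rate * dt)
        if rng.random() < (k_plus if in_plus else k_minus) * dt:
            in_plus = not in_plus
        v += (f(v) + (s_plus if in_plus else s_minus)) * dt
        t += dt
        if v >= v_t:                      # threshold crossed: record spike, reset
            isis.append(t - last_spike)
            last_spike = t
            v = v_r
    return isis


def isi_statistics(isis):
    """Firing rate (inverse mean ISI) and coefficient of variation of the ISIs."""
    mean = sum(isis) / len(isis)
    var = sum((x - mean) ** 2 for x in isis) / len(isis)
    return 1.0 / mean, math.sqrt(var) / mean
```

Two simple checks: with \(\sigma _+ = \sigma _- = 0\), a LIF with \(\mu = 1.5\), \(v_R = 0\), \(v_T = 1\) fires periodically with ISI \(\ln 3 \approx 1.10\); for a PIF driven by zero-mean noise, the long-run rate approaches \(\mu /(v_T - v_R)\), because the noise averages out over many intervals.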

3 Stationary distribution

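We first want to calculate the stationary probability distribution of voltages. To this end, we start from the stationarity condition for Eqs. (8) and (9),

$$\begin{aligned} 0&= -\partial _v \left[ (\tilde{f}(v) + \sigma ) P_+(v)\right] - k_+ P_+(v) + k_- P_-(v) + \alpha r_0 \left[ \delta (v - v_R) - \delta (v - v_T)\right] , \end{aligned}$$(10)

$$\begin{aligned} 0&= -\partial _v \left[ (\tilde{f}(v) - \sigma ) P_-(v)\right] + k_+ P_+(v) - k_- P_-(v) + (1 - \alpha ) r_0 \left[ \delta (v - v_R) - \delta (v - v_T)\right] , \end{aligned}$$(11)

where we have symmetrized the DMP values by introducing

$$\tilde{f}(v) {:=} f(v) + \frac{\sigma _+ + \sigma _-}{2}$$(12)

(to unburden notation, we return to calling this new function \(f(v)\) in the following) and where we have expressed the fluxes over the threshold by the stationary firing rate \(r_0\) and the ratio

$$\alpha {:=} \frac{r_+}{r_0} = \frac{r_+}{r_+ + r_-},$$(13)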

which denotes the fraction of trajectories that cross the threshold in the “+” dynamics.

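Without solving the equations, we can already assess how the probability density behaves at threshold and reset voltage due to the fire-and-reset rule. To this end, we integrate Eq. (10) from \(v_R - \epsilon \) to \(v_R + \epsilon \) and let \(\epsilon \rightarrow 0\), which yields

$$P_+(v_R^+) - P_+(v_R^-) = \frac{\alpha r_0}{f(v_R) + \sigma },$$(14)

where \(P_+(v_R^{\pm }) = \lim _{\epsilon \rightarrow 0}P_+(v_R\pm \epsilon )\). Similarly, integrating from \(v_T - \epsilon \) to \(v_T + \epsilon \) and taking into account that there is no probability above threshold yields

$$P_+(v_T^-) = \frac{\alpha r_0}{f(v_T) + \sigma }.$$(15)

Analogously, we obtain

$$\begin{aligned} P_-(v_R^+) - P_-(v_R^-)&= \frac{(1 - \alpha ) r_0}{f(v_R) - \sigma }, \end{aligned}$$(16)

$$\begin{aligned} P_-(v_T^-)&= \frac{(1 - \alpha ) r_0}{f(v_T) - \sigma }. \end{aligned}$$(17)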

Here, we already observe an important difference to the case of IF neurons driven by white noise: The probability density is no longer continuous, but exhibits jumps at \(v_R\) and \(v_T\) (cf. Fig. 2). This is typical for colored noise and has also been observed for neurons driven by an OU process (Fourcaud and Brunel 2002).

Fig. 2. Stationary probability density compared to the diffusion approximation. Input in both cases is symmetric dichotomous noise with intensity \(D = 0.4\) and correlation time \(\tau _c = 0.15\). a LIF with \(\mu = 0.8, v_R = 0, v_T = 1\). b QIF with \(\mu =-0.2, v_R=-\infty , v_T=\infty \). We plot theory (thin lines) and simulation results (circles) and compare them to the analytical results for white noise input with the same \(D\) (thick lines, see e.g., Vilela and Lindner 2009). The most prominent qualitative difference lies in the discontinuities that correlated input induces at the (finite) reset and threshold points of the LIF.

In the following, we will solve Eqs. (10) and (11) separately below \(v_R\) and between \(v_R\) and \(v_T\), i.e., excluding the source and sink terms. The jump conditions can then be satisfied by choosing the respective integration constants appropriately.

Ultimately, we are interested in the probability that the voltage is in an infinitesimal interval around \(v\), independent of the state of the noise. The corresponding probability density is given by

$$p(v) = P_+(v) + P_-(v).$$(18)

We define also

$$q(v) = P_+(v) - P_-(v).$$(19)

Writing Eqs. (10) and (11) in terms of \(p(v)\) and \(q(v)\) and adding one equation to the other leads to a stationarity condition for the total flux which can be directly integrated, yielding

$$f(v)\, p(v) + \sigma \, q(v) = J_0,$$(20)

where \(J_0\) is piecewise constant:

$$J_0 = \begin{cases} 0, & v < v_R, \\ r_0, & v_R < v < v_T. \end{cases}$$(21)
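The total-flux relation can be read off directly from the definitions \(p = P_+ + P_-\) and \(q = P_+ - P_-\): when the two stationary equations are added, the switching terms \(\mp k_+ P_+ \pm k_- P_-\) cancel, leaving only the flux divergence and the reset and threshold terms,

$$\begin{aligned} J(v)&= (f(v) + \sigma ) P_+(v) + (f(v) - \sigma ) P_-(v) = f(v)\, p(v) + \sigma \, q(v), \\ 0&= -\partial _v J(v) + r_0 \left[ \delta (v - v_R) - \delta (v - v_T)\right] . \end{aligned}$$

Integrating the second line upward from below the support shows that \(J(v)\) vanishes below \(v_R\), jumps by \(r_0\) at the reset, and falls back to zero at the threshold, which is the piecewise constant \(J_0\) of Eq. (21).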

Subtracting Eq. (11) from Eq. (10) yields one remaining ordinary differential equation (ODE),

where

We can eliminate \(q(v)\) using Eq. (20) and define \(g(v) {:=} f(v) J_0 - (f^2(v)- \sigma ^2) p(v)\); the ODE then reads

Note that we have divided by \(f^2(v)- \sigma ^2\) here, which can lead to singular behavior at points where the “-” dynamics has a fixed point (FP); this is treated in detail below.

Solving the ODE through variation of constants and integrating by parts to simplify the result yields

where

In Eq. (25), \(d\) can still be chosen freely, as long as \(c\) has not been fixed. Equation (25) represents two solutions (one with \(J_0 = 0\) for \(v < v_R\) and one with \(J_0 = r_0\) for \(v > v_R\)), each with its own integration constant \(c\). In order to fully appreciate how these integration constants as well as \(r_0\) and \(\alpha \) are determined, we first need to discuss how fixed points of the “-” dynamics need to be dealt with.

3.1 Dealing with fixed points in the “-” dynamics

As mentioned above and noted first by Bena et al. (2002), problems may arise at fixed points of the deterministic flows. We only need to consider fixed points in the “-” dynamics, \(f(v_F) - \sigma = 0\), as fixed points in the “+” dynamics would be impossible for the system to overcome; such a neuron would never fire. To see how \(p(v)\) behaves in the vicinity of a fixed point \(v_F\), we approximate \(f(v) \approx f(v_F) + f'(v_F) (v - v_F) = \sigma + f'(v_F) (v - v_F)\). This means \(f'(v) \approx f'(v_F)\), \(f^2(v)-\sigma ^2 \approx 2 \sigma f'(v_F) (v - v_F)\),

and thus

Further discussion of this formula depends on whether we are dealing with a stable or an unstable fixed point.

3.1.1 Unstable fixed points

If \(v_U\) is an unstable fixed point, \(f'(v_U) > 0\) and \(p(v)\) diverges,

This is “clearly unphysical and mathematically improper in view of the requirement of normalization” (Bena et al. 2002). Specifically, one would expect the probability to find the system near an unstable fixed point to be low and not high, and more generally, divergences in the probability density are only acceptable if they can be integrated.

As pointed out by Bena et al. (2002), one thus needs to consider separate solutions above and below such fixed points and then choose their integration constants such that divergent terms vanish at \(v_U\). In the case considered here, this corresponds to setting \(c = 0\) and \(d = v_U\), both above and below \(v_U\). We can then apply l’Hôpital’s rule to calculate the limit

To see that this does indeed make sense, it is instructive to take one step back and ask: If \(p(v)\) must not diverge at \(v_U\), which value should it take? At a fixed point of the “-” dynamics, \(J_- = (f(v_U) - \sigma ) P_-(v_U) = 0\), so that the whole flux \(r_0\) has to be mediated by the “+” dynamics, which fixes

$$P_+(v_U) = \frac{r_0}{f(v_U) + \sigma } = \frac{r_0}{2 \sigma }.$$

This allows us to calculate \(P_-(v_U)\) and thus \(p(v_U)\) as follows: At \(v_U\), Eq. (11) becomes

$$0 = -\left[ f'(v_U) + k_-\right] P_-(v_U) + k_+ P_+(v_U),$$

which can be solved for \(P_-(v_U)\), yielding indeed the limit calculated above.
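Carrying out this last step explicitly, under the assumption that \(P_-(v)\) is differentiable at \(v_U\) (so that the term \((f(v) - \sigma )\partial _v P_-(v)\) in Eq. (11) vanishes there) and using \(P_+(v_U) = r_0/(2\sigma )\), one obtains

$$P_-(v_U) = \frac{k_+ r_0}{2\sigma \left[ k_- + f'(v_U)\right] }, \qquad p(v_U) = \frac{r_0}{2\sigma }\left[ 1 + \frac{k_+}{k_- + f'(v_U)}\right] ,$$

which is finite and positive because \(f'(v_U) > 0\) at an unstable fixed point.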

3.1.2 Stable fixed points

For stable fixed points, \(f'(v_S) = -|f'(v_S)|\) and one sees from Eq. (28) that the previously problematic term becomes

For \(k_- > |f'(v_S)|\), this does not diverge (trajectories leave toward the “+” state faster than new ones are coming in); for \(k_- < |f'(v_S)|\), it diverges but can still be integrated (cf. Fig. 3). Stable fixed points thus pose no fundamental problem; however, we still have to make sure that they lie outside the integration boundaries of the integral in Eq. (25), where they would cause a divergence.
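The classification of Sects. 3.1.1 and 3.1.2 is easy to automate. The sketch below assumes that \(f\) is a smooth Python callable and that all zeros of \(f(v) - \sigma \) on the search interval are simple sign changes; it locates them by a grid scan plus bisection, estimates \(f'(v_F)\) by a central difference, and applies the criterion \(k_- > |f'(v_S)|\) for a finite density at stable fixed points. The function name and its return format are hypothetical.

```python
def classify_minus_fixed_points(f, sigma, k_minus, v_lo, v_hi, n_grid=20000, eps=1e-6):
    """Fixed points of the '-' dynamics f(v) - sigma = 0 on [v_lo, v_hi].

    Returns tuples (v_F, f'(v_F), stability, behaviour of p(v) at v_F).
    """
    g = lambda v: f(v) - sigma
    h = (v_hi - v_lo) / n_grid
    found = []
    for i in range(n_grid):
        lo, hi = v_lo + i * h, v_lo + (i + 1) * h
        if (g(lo) < 0) == (g(hi) < 0):
            continue                          # no sign change in this grid cell
        lo_negative = g(lo) < 0
        for _ in range(60):                   # bisection on the bracketing cell
            mid = 0.5 * (lo + hi)
            if (g(mid) < 0) == lo_negative:
                lo = mid
            else:
                hi = mid
        v_f = 0.5 * (lo + hi)
        slope = (f(v_f + eps) - f(v_f - eps)) / (2.0 * eps)
        if slope > 0:
            # unstable: divergence must be removed by the choice c = 0, d = v_U
            found.append((v_f, slope, "unstable", "requires c = 0, d = v_U"))
        elif k_minus > abs(slope):
            found.append((v_f, slope, "stable", "finite"))
        else:
            found.append((v_f, slope, "stable", "integrable divergence"))
    return found
```

For the parameters of Fig. 3, this reproduces the distinction shown there: for the LIF (\(\mu = 0.8\), \(\sigma = 0.4\)) the stable fixed point at \(v = 0.4\) gives a finite density for \(k_- = 1.2\) and an integrable divergence for \(k_- = 0.8\); for the QIF (\(\mu = -0.2\), \(\sigma = 3\)) the fixed points at \(v = \mp \sqrt{3.2}\) are stable and unstable, respectively.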

Fig. 3. Behavior of the stationary probability density at fixed points of the “-” dynamics. We plot probability densities (thin lines: theory, circles: simulation results) as well as the nonlinearity \(f(v) \pm \sigma \), where stable FPs are marked by black dots and unstable FPs by white dots. a LIF with \(\mu = 0.8, v_R = 0, v_T = 1, \sigma = 0.4\) and \(k_+ = 1.5\). Depending on the value of \(k_-\), \(p(v)\) can either be continuous at a stable FP (\(k_- = 1.2\)) or exhibit an integrable divergence (\(k_- = 0.8\)). b QIF with \(\mu =-0.2, v_R=-\infty , v_T=\infty , \sigma = 3\) and \(k_+ = 5\). Again, \(p(v)\) is either continuous (\(k_- = 4\)) or exhibits an integrable divergence (\(k_- = 3\)) at the stable FP. Note that due to a proper choice of integration constants, it is smooth and continuous at the unstable FP.

3.2 Boundary conditions and full solution

In contrast to the case of white-noise-driven IF neurons, the stationary probability density is in general not defined for arbitrarily negative values of \(v\). The support of \(p(v)\) is the interval \([v_-, v_T]\), where \(v_-\) is either the first fixed point smaller than \(v_R\), if \(f(v_R) - \sigma < 0\), or \(v_R\) itself, if \(f(v_R) - \sigma > 0\) (in both cases, trajectories cannot cross this point toward smaller values of \(v\)). It extends to negative infinity only if \(f(v_R) - \sigma < 0\) and no fixed point exists below \(v_R\) (such as, e.g., for a PIF with \(\mu - \sigma < 0\)).
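The rule for the lower edge \(v_-\) of the support can be sketched numerically. The version below assumes a finite reset \(v_R\) and, as a purely practical assumption, treats "no fixed point below \(v_R\)" as "no sign change of \(f(v) - \sigma \) within a hypothetical search window of width `search_width`"; the function name is illustrative.

```python
import math


def support_lower_bound(f, sigma, v_r, search_width=100.0, n_grid=100000):
    """Lower edge v_- of the support of p(v), following the rule stated above."""
    g = lambda v: f(v) - sigma
    if g(v_r) > 0:
        return v_r                        # '-' dynamics also moves right at v_R
    h = search_width / n_grid
    upper = v_r
    for i in range(1, n_grid + 1):
        lower = v_r - i * h
        if g(lower) > 0:                  # sign change of f - sigma in [lower, upper]
            for _ in range(60):           # bisection
                mid = 0.5 * (lower + upper)
                if g(mid) > 0:
                    lower = mid
                else:
                    upper = mid
            return 0.5 * (lower + upper)  # first (stable) fixed point below v_R
        upper = lower
    return -math.inf                      # no fixed point found within the window
```

For a LIF with \(\mu = 0.8\) and \(v_R = 0\), this returns \(v_- = v_R = 0\) for \(\sigma = 0.4\) and \(v_- = -0.2\) for \(\sigma = 1\), while a PIF with \(\mu - \sigma < 0\) yields \(-\infty \).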

As pointed out above, solutions have to be given separately for intervals that are delimited by \(v_R\), \(v_T\), and fixed points of the “-” dynamics. The integration constants are either determined by the jump conditions at reset voltage and threshold or, if the interval in question neighbors an unstable fixed point, fixed by the requirement of avoiding divergence (see Fig. 4 for a schematic depiction). If the lower and upper interval boundaries are denoted by \(a\) and \(b\), the full solution is given by

where using the abbreviation \(h(v) {:=} (\sigma (2\alpha -1)-f(v))e^{\phi (v)}\), the \(\varGamma _i(v)\) are given by

The above expressions for \(p(v)\) still include \(r_0\), the stationary firing rate, and \(\alpha \), the fraction of trajectories that cross \(v_T\) during “+” dynamics. The latter can be calculated as follows: If \(f(v_T) - \sigma < 0\), threshold crossings happen only during “+” dynamics, which entails \(\alpha = 1\). Otherwise, the integration constant of the rightmost interval is always determined by either an unstable FP or the jump condition at \(v_R\), which allows us to use the remaining jump condition at the threshold to calculate \(\alpha \) (see Fig. 4). This yields

if there is no unstable FP, or

if the unstable FP next to the threshold is at \(v_U\). Finally, the stationary firing rate \(r_0\) can be determined by requiring \(p(v)\) to be normalized.

Fig. 4. Schematic depiction of how the integration constants for the probability density are determined in different regions (delimited by dashed lines). Integration constants in regions next to unstable fixed points are always chosen to avoid a divergence; the remaining ones are determined by the jump conditions at reset and threshold. If \(f(v_T) - \sigma < 0\), threshold crossing happens only via the “+” dynamics, so \(\alpha = 1\). If \(f(v_T) - \sigma > 0\), the integration constant in the rightmost region is either determined by ensuring non-divergence at the nearest fixed point (necessarily unstable) or the jump condition at the reset, if no such fixed point exists. This allows \(\alpha \) to be determined via the jump condition at the threshold. Roman numerals denote which of the solutions \(p_\mathrm{I}(v) \cdots p_\mathrm{V}(v)\) applies. Note that commonly used IF models have at most one stable and one unstable fixed point.

4 Moments of the inter-spike interval density

The expressions derived in the previous section allow us to calculate the stationary distribution of voltages and the firing rate of an IF neuron driven by dichotomous noise. The firing rate is the inverse of the mean interspike interval (ISI), i.e., of the first moment of the ISI density. Higher moments of the ISI density are also of interest; the second moment, for example, appears in the definition of the coefficient of variation (CV), which is often used to characterize the regularity of neural firing. The ISI density is equivalent to the density of trajectories that, starting at the reset voltage, reach the threshold for the first time after a time \(T\), i.e., the first passage time (FPT) density.

In this section, we derive expressions for the moments of the FPT density. To this end, we adopt and adapt the approach outlined by Lindner (2004b) for Gaussian white noise. The central argument of our derivation is the following: The FPT density \(\rho (T)\) corresponds exactly to the (time-dependent) flux of probability across the threshold, \(j(v_T, T) = J_+(v_T, T) + J_-(v_T, T)\), provided that all trajectories start at \(v_R\) at \(t = 0\) and that no probability can flow back from above the threshold (otherwise, we would count re-entering trajectories multiple times). Note that we do not need to directly consider the fire-and-reset rule in this case; it only enters through its impact on the initial conditions.

For colored noise that can take continuous values, the “no-backflow” condition is notoriously difficult to implement and often only fulfilled approximately. Here, it amounts to the simple condition \(J_-(v_T, t) \ge 0\). For \(f(v_T) - \sigma > 0\), this is automatically fulfilled, while for \(f(v_T) - \sigma < 0\), it will have to be enforced through the boundary condition \(J_-(v_T, t) = 0\). The \(n\)-th moment of the FPT density is then given by

$$\left\langle T^n \right\rangle = \int _0^\infty dT\; T^n\, j(v_T, T).$$

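We start by rewriting the master equation Eqs. (8) and (9) in terms of fluxes. We introduce

$$j(v,t) = J_+(v,t) + J_-(v,t)$$

and

$$w(v,t) = J_+(v,t) - J_-(v,t),$$

which allows us to write

$$\begin{aligned} \partial _t \frac{f(v) j(v,t) - \sigma w(v,t)}{f^2(v)- \sigma ^2}&= -\partial _v j(v,t), \\ \partial _t \frac{f(v) w(v,t) - \sigma j(v,t)}{f^2(v)- \sigma ^2}&= -\partial _v w(v,t) - \gamma _1(v) w(v,t) - \gamma _2(v) j(v,t), \end{aligned}$$

where

$$\gamma _1(v) = \frac{(k_+ + k_-) f(v) - (k_+ - k_-) \sigma }{f^2(v)- \sigma ^2}, \qquad \gamma _2(v) = \frac{(k_+ - k_-) f(v) - (k_+ + k_-) \sigma }{f^2(v)- \sigma ^2}.$$

We then multiply both sides by \(t^n\) and integrate over \(t\) from 0 to \(\infty \) (the l.h.s. by parts); introducing the abbreviations

$$J_n(v) = \int _0^\infty dt\; t^n j(v,t), \qquad Q_n(v) = \int _0^\infty dt\; t^n w(v,t),$$

we obtain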

For \(n = 0\):

$$\begin{aligned} \partial _v J_0(v)&= \frac{f(v)j(v,0) - \sigma w(v,0)}{f^2(v)- \sigma ^2}, \end{aligned}$$(50)

$$\begin{aligned} \partial _v Q_0(v)&= \frac{f(v)w(v,0) - \sigma j(v,0)}{f^2(v)- \sigma ^2} -\gamma _1(v) Q_0(v) - \gamma _2(v) J_0(v), \end{aligned}$$(51)

For \(n > 0\):

$$\begin{aligned} \partial _v J_n(v)&= n \frac{f(v)J_{n-1}(v) - \sigma Q_{n-1}(v)}{f^2(v)- \sigma ^2}, \end{aligned}$$(52)

$$\begin{aligned} \partial _v Q_n(v)&= n \frac{f(v)Q_{n-1}(v) - \sigma J_{n-1}(v)}{f^2(v)- \sigma ^2} -\gamma _1(v) Q_n(v) - \gamma _2(v) J_n(v), \end{aligned}$$(53)

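where we have used that

$$\lim _{t \rightarrow \infty } t^n j(v,t) = \lim _{t \rightarrow \infty } t^n w(v,t) = 0$$(54)

(eventually, every trajectory will have crossed the threshold). Given suitable boundary and initial conditions (ICs), we can recursively solve these ODEs for \(J_n(v)\). Evaluated at the threshold, this is exactly the \(n\)-th FPT moment,

$$\left\langle T^n \right\rangle = J_n(v_T).$$(55)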
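The integration by parts behind Eqs. (50) and (52) is short enough to spell out. With \(j = J_+ + J_-\), \(w = J_+ - J_-\), \(J_n = \int _0^\infty dt\, t^n j\), \(Q_n = \int _0^\infty dt\, t^n w\), and \(P_\pm = J_\pm /(f \pm \sigma )\), the total density is \(p(v,t) = [f(v) j(v,t) - \sigma w(v,t)]/(f^2(v) - \sigma ^2)\), and it obeys the continuity equation \(\partial _t p = -\partial _v j\) (no reinsertion in the first-passage problem). Multiplying by \(t^n\) and integrating over time gives

$$\begin{aligned} -\partial _v J_n(v)&= \int _0^\infty dt\; t^n\, \partial _t p(v,t) = -n \int _0^\infty dt\; t^{n-1} p(v,t) = -n\,\frac{f(v)J_{n-1}(v) - \sigma Q_{n-1}(v)}{f^2(v)- \sigma ^2}, \qquad n > 0, \\ -\partial _v J_0(v)&= \int _0^\infty dt\; \partial _t p(v,t) = -p(v,0) = -\frac{f(v)j(v,0) - \sigma w(v,0)}{f^2(v)- \sigma ^2}, \end{aligned}$$

where the boundary terms vanish because \(t^n p(v,t) \rightarrow 0\) for \(t \rightarrow \infty \) and, for \(n > 0\), at \(t = 0\). The same steps applied to \(q = P_+ - P_- = [f(v) w - \sigma j]/(f^2(v) - \sigma ^2)\) produce the remaining two equations.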

All trajectories start at the reset voltage \(v_R\) at \(t = 0\), so for \(\eta (0) = \sigma \), we have the ICs

$$P_+(v, 0) = \delta (v - v_R), \qquad P_-(v, 0) = 0$$(56)

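(and vice versa for \(\eta (0) = -\sigma \)). Thus, we actually need to consider two conditional FPT densities

$$\rho (T \mid \eta (0) = \sigma ) = j(v_T, T)\big |_{\eta (0) = \sigma }$$(57)

and

$$\rho (T \mid \eta (0) = -\sigma ) = j(v_T, T)\big |_{\eta (0) = -\sigma }.$$(58)

The stationary FPT density is then

$$\rho (T) = p(\eta (0) = \sigma )\, \rho (T \mid \eta (0) = \sigma ) + p(\eta (0) = -\sigma )\, \rho (T \mid \eta (0) = -\sigma ),$$(59)

where \(p(\eta (0) = \sigma ) = \alpha \) is the probability that the last threshold crossing happened in “+” dynamics. Due to the linearity of the problem, we can replace this averaging over the noise upon firing by preparing a “mixed” initial state, \(P_+(v, 0) = \alpha \delta (v - v_R)\), \(P_-(v, 0) = (1 - \alpha ) \delta (v - v_R)\). We thus have the ICs

$$\begin{aligned} j(v,0)&= \left[ f(v) + (2\alpha - 1)\sigma \right] \delta (v - v_R), \end{aligned}$$(60)

$$\begin{aligned} w(v,0)&= \left[ (2\alpha - 1) f(v) + \sigma \right] \delta (v - v_R). \end{aligned}$$(61)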

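Equations (50) and (52) can directly be integrated,

$$J_0(v) = \theta (v - v_R),$$(62)

$$J_n(v) = J_n(v_-) + n \int _{v_-}^{v} dx\; \frac{f(x)J_{n-1}(x) - \sigma Q_{n-1}(x)}{f^2(x)- \sigma ^2}, \qquad n > 0,$$(63)

where \(\theta (v)\) is the Heaviside step function and the integration constant in Eq. (62) is fixed by requiring the FPT density to be normalized (\(J_0(v_T) = 1\)). The other ODEs can be solved by variation of constants, yielding the general solution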
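The form of \(J_0\) and the jump of \(Q_0\) at the reset follow directly from the mixed initial state \(P_+(v,0) = \alpha \delta (v - v_R)\), \(P_-(v,0) = (1 - \alpha )\delta (v - v_R)\). Since \(J_\pm = (f \pm \sigma ) P_\pm \), the initial fluxes are \(j(v,0) = [f + (2\alpha - 1)\sigma ]\delta (v - v_R)\) and \(w(v,0) = [(2\alpha - 1) f + \sigma ]\delta (v - v_R)\), so that the source terms in Eqs. (50) and (51) become

$$\begin{aligned} f(v)j(v,0) - \sigma w(v,0)&= \big \{ f(v)\left[ f(v) + (2\alpha -1)\sigma \right] - \sigma \left[ (2\alpha -1) f(v) + \sigma \right] \big \} \delta (v - v_R) = \left[ f^2(v) - \sigma ^2\right] \delta (v - v_R), \\ f(v)w(v,0) - \sigma j(v,0)&= (2\alpha - 1)\left[ f^2(v) - \sigma ^2\right] \delta (v - v_R). \end{aligned}$$

Hence \(\partial _v J_0 = \delta (v - v_R)\), whose solution with \(J_0(v_T) = 1\) is \(\theta (v - v_R)\), and \(Q_0\) jumps by \(2\alpha - 1\) at \(v_R\); this is the origin of the boundary value \(Q_0(v_R) = 2\alpha - 1\) used for the leftmost interval when \(f(v_R) - \sigma > 0\).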

where we have used \(\phi (v) = \int ^v dx\; \gamma _1(x)\) and where \(E_n\) can be freely chosen as long as \(D_n\) is not fixed.

Unfortunately, it is evident from Eqs. (64) and (65) that the presence of fixed points of the “-” dynamics remains the nuisance that it was in the calculation of the stationary probability density (see Sect. 3.1). Again, we thus need to split the range of possible voltages \([v_-, v_T]\) at fixed points of the “-” dynamics [note that, in contrast to the previous section, we do not need to split at \(v_R\), as the jump condition at \(v_R\) is already incorporated through the initial conditions Eqs. (60) and (61)].

If an interval borders on an unstable FP, we can avoid a divergence by setting the integration constants \(D_n= 0\) and \(E_n = v_U\). To see that this makes sense, one can apply l’Hôpital’s rule to calculate the limits of \(Q_0(v)\) and \(Q_{n>0}(v)\) for \(v \rightarrow v_U\) and this choice of values,

At an FP of the “-” dynamics, all flux occurs through the “+” state, so there is no difference between \(J_{n}(v_U)\) and \(Q_{n}(v_U)\). This is reflected in the two limits: According to Eq. (62), \(J_0(v_U) = 1\) (unstable FPs occur only above the reset voltage), so we have indeed \(J_0(v_U) = Q_0(v_U)\). Eq. (67) extends this to all \(n\) by induction, since \(Q_{n - 1}(v_U) = J_{n - 1}(v_U)\) implies \(Q_n(v_U) = J_n(v_U)\). This also means that no special treatment of Eq. (63) is necessary at unstable FPs, as the divergence of the integrand cancels exactly for \(Q_n(v_U) = J_n(v_U)\).

Boundary conditions for \(Q_n(v)\) are well defined in all intervals:

- Leftmost interval. If \(f(v_R)-\sigma > 0\), the lower boundary is the reset voltage \(v_R\), which all trajectories leave toward the right. For \(t > 0\), \(P(v_R, t)=0\) and consequently \(J_-(v_R, t) = 0\), fixing \(Q_0(v_R) = 2 \alpha - 1\) and \(Q_{n>0}(v_R) = 0\). Otherwise, if \(f(v_R)-\sigma < 0\), the upper boundary of the interval is either an unstable fixed point (see inner intervals) or \(v_T\) (with \(f(v_T)-\sigma < 0\), see rightmost interval).
- Inner intervals. Inner intervals necessarily have an unstable FP as upper or lower boundary, at which \(Q_n(v_U) = J_n(v_U)\).
- Rightmost interval. If \(f(v_T)-\sigma < 0\), we need to make sure that there is no flux of probability back across the threshold. This amounts to imposing \(J_-(v_T, t) = 0\), implying \(Q_n(v_T) = J_n(v_T)\). If \(f(v_T)-\sigma > 0\), the “no-backflow” condition is always fulfilled. In this case, we have a condition at the lower boundary of the interval, which is either an unstable FP (demanding \(Q_n(v_U) = J_n(v_U)\)) or the reset voltage \(v_R\) (with \(f(v_R)-\sigma > 0\), see leftmost interval).

The integration constant of \(J_{n>0}(v)\) is determined as follows: If \(f(v_-) - \sigma > 0\), all trajectories leave \(v_- = v_R\) instantaneously toward higher values of \(v\). Thus, \(p(v_-,t) = 0\) for \(t > 0\) and consequently \(J_{n>0}(v_-) = 0\). If \(f(v_-) - \sigma < 0\), \(v_-\) is at a stable FP, so that \(J_n(v_-) = Q_n(v_-)\). Because \(Q_n(v)\) goes to zero as \(v\) approaches a stable FP, we again have the condition \(J_{n>0}(v_-) = 0\).

The fraction of trajectories that cross the threshold in “+” state, \(\alpha \), can be calculated using the expression given in the previous section. An alternative derivation goes as follows: \(\alpha \) corresponds to the time-integrated flux in “+” state, \(\int _{0}^{\infty } dt\;J_+(v_T,t)\). Since \(J_+ = (j + w)/2\), it can be determined from the relation

$$\alpha = \frac{J_0(v_T) + Q_0(v_T)}{2} = \frac{1 + Q_0(v_T)}{2},$$(68)

in which \(Q_0(v_T)\) on the r.h.s. in general also depends on \(\alpha \).

If there are no FPs other than a stable FP at \(v_-\), we obtain the following recursive relations

This case is relevant if \(\sigma \) is sufficiently large, e.g., when considering a limit close to white noise. A general solution that is further transformed to ease numerical integration is given in Appendix 9.
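As a minimal illustration of how the recursion is evaluated in practice, the sketch below integrates Eqs. (50)–(53) up to \(n = 2\) with a fixed-step Runge–Kutta scheme. It covers only the simplest configuration, assuming \(f(v) - \sigma > 0\) on all of \([v_R, v_T]\) (no fixed points of the “-” dynamics, so \(v_- = v_R\)); then \(J_0 = 1\) above \(v_R\), the boundary values are \(Q_0(v_R) = 2\alpha - 1\) and \(J_n(v_R) = Q_{n>0}(v_R) = 0\), and \(\alpha \) must satisfy \(\alpha = (1 + Q_0(v_T))/2\). The code uses \(\gamma _1 = [(k_+ + k_-)f - (k_+ - k_-)\sigma ]/(f^2 - \sigma ^2)\) and \(\gamma _2 = [(k_+ - k_-)f - (k_+ + k_-)\sigma ]/(f^2 - \sigma ^2)\), as obtained from the master equation written in terms of \(j = J_+ + J_-\) and \(w = J_+ - J_-\). It is not the transformed general solution of the Appendix, and the function name is hypothetical.

```python
import math


def fpt_moments_no_fixed_points(f, sigma, k_plus, k_minus, v_r, v_t, n_steps=2000):
    """Return (alpha, <T>, <T^2>), assuming f(v) - sigma > 0 on [v_r, v_t]."""
    k_sum, k_diff = k_plus + k_minus, k_plus - k_minus

    def rhs(v, y):
        q0, j1, q1, j2 = y
        fv = f(v)
        den = fv * fv - sigma * sigma
        g1 = (k_sum * fv - k_diff * sigma) / den      # gamma_1(v)
        g2 = (k_diff * fv - k_sum * sigma) / den      # gamma_2(v)
        j0 = 1.0                                      # J_0(v) = theta(v - v_R)
        return [
            -g1 * q0 - g2 * j0,                                 # Q_0, Eq. (51), v > v_R
            (fv * j0 - sigma * q0) / den,                       # J_1, Eq. (52)
            (fv * q0 - sigma * j0) / den - g1 * q1 - g2 * j1,   # Q_1, Eq. (53)
            2.0 * (fv * j1 - sigma * q1) / den,                 # J_2, Eq. (52)
        ]

    def integrate(q0_start):
        """Classical RK4 from v_r to v_t for y = (Q_0, J_1, Q_1, J_2)."""
        h = (v_t - v_r) / n_steps
        v, y = v_r, [q0_start, 0.0, 0.0, 0.0]
        for _ in range(n_steps):
            k1 = rhs(v, y)
            k2 = rhs(v + h / 2, [a + h / 2 * b for a, b in zip(y, k1)])
            k3 = rhs(v + h / 2, [a + h / 2 * b for a, b in zip(y, k2)])
            k4 = rhs(v + h, [a + h * b for a, b in zip(y, k3)])
            y = [a + h / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
                 for a, b1, b2, b3, b4 in zip(y, k1, k2, k3, k4)]
            v += h
        return y

    # Q_0(v_T) is affine in x = Q_0(v_R) = 2*alpha - 1, and alpha = (1 + Q_0(v_T))/2
    # requires x = Q_0(v_T); solve this linear self-consistency condition.
    base = integrate(0.0)[0]
    slope = integrate(1.0)[0] - base
    x = base / (1.0 - slope)
    _, t1, _, t2 = integrate(x)
    return (1.0 + x) / 2.0, t1, t2


if __name__ == "__main__":
    # Illustrative LIF, f(v) = 3 - v, with symmetric noise sigma = 1, k = 1
    alpha, t1, t2 = fpt_moments_no_fixed_points(lambda v: 3.0 - v, 1.0, 1.0, 1.0, 0.0, 1.0)
    print(f"alpha = {alpha:.4f}, rate = {1 / t1:.4f}, CV = {math.sqrt(t2 - t1 ** 2) / t1:.4f}")
```

A useful check is the PIF, \(f(v) = \mu \): since the noise averages out over many intervals, the sketch must return \(\left\langle T \right\rangle = (v_T - v_R)/(\mu + \left\langle \eta \right\rangle )\) with \(\left\langle \eta \right\rangle = \sigma (k_- - k_+)/(k_+ + k_-)\), up to discretization error.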

Evaluating Eqs. (69)–(72) recursively, we can now obtain an exact expression for the \(n\)-th moment of the FPT density,

This allows us to calculate, for instance, the stationary firing rate (given here for the case that there is no FP above \(v_R\)),

Similarly, one may use \(J_1(v_T)\) and \(J_2(v_T)\) to obtain a lengthy expression for the coefficient of variation \(CV = \sqrt{\left\langle T^2 \right\rangle -\left\langle T \right\rangle ^2}/\left\langle T \right\rangle \) (which we do not reproduce here). These exact expressions for firing rate and CV of a general IF neuron driven by asymmetric dichotomous noise are a central result of this work.
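The final step, from moments to firing statistics, can be sketched as follows. This is a minimal illustration, not the authors' code. It assumes that the first two FPT moments \(\left\langle T \right\rangle \) and \(\left\langle T^2 \right\rangle \) have already been obtained, e.g., by evaluating the recursive relations, and it uses \(r_0 = 1/\left\langle T \right\rangle \) together with the CV definition given above. The function name `rate_and_cv` is hypothetical.

```python
import math


def rate_and_cv(m1, m2):
    """Stationary firing rate and CV from the first two FPT (ISI) moments.

    m1 = <T>, m2 = <T^2>; r0 = 1/<T> and CV = sqrt(<T^2> - <T>^2) / <T>.
    """
    if m1 <= 0:
        raise ValueError("mean ISI must be positive")
    variance = m2 - m1 ** 2
    if variance < 0:
        raise ValueError("inconsistent moments: <T^2> < <T>^2")
    return 1.0 / m1, math.sqrt(variance) / m1


# Sanity check with exponentially distributed ISIs (Poisson spiking at rate 10):
# <T> = 0.1 and <T^2> = 2 * 0.1**2 = 0.02, so the CV should be 1 up to rounding.
r0, cv = rate_and_cv(0.1, 0.02)
print(r0, cv)
```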

5 Approximation for firing rate and CV at large correlation times

When the correlation time of the DMP is large, one can derive a simple quasi-static approximation for the firing rate and CV. Although the exact expressions derived in the previous section are valid over the whole range of correlation times, this approximation offers immediate insight into neuronal firing at large \(\tau _c\), \(\mu \), or \(\sigma \) without the need for numerical integration. The approach outlined below shares similarities with those used to study PIF (Middleton et al. 2003) and LIF neurons (Schwalger and Schimansky-Geier 2008) driven by an OU process; however, the two-state nature of the DMP makes the derivation considerably simpler.

Let us first make the notion of a “large” correlation time more precise. If we fix the DMP \(\eta (t)\) in the “+” state, we are dealing with a deterministic system \(\dot{v} = f(v) + \sigma \). We denote the time that this system takes to go from \(v_R\) to \(v_T\) by \(T_d^+\). If \(f(v) - \sigma > 0\) for all \(v\) (the neuron also fires if the noise is fixed in the “-” state), we can analogously define \(T_d^-\). We call the correlation time large if \(\tau _c \gg T_d^+\) and, in case \(T_d^-\) is defined, also \(\tau _c \gg T_d^-\). Because \(\tau _c = 1/(k_+ + k_-) < 1/k_\pm \), this implies the same for the mean residence times in both states, i.e., \(1/k_\pm \gg T_d^+\) and \(1/k_\pm \gg T_d^-\).

We start with the case where the neuron only fires in “+” state (\(f(v) - \sigma < 0\) for some \(v\)). On average, it emits \(1/(k_+ T_d^+) \gg 1\) spikes while in “+” state, followed by one long ISI in the quiescent “-” state. Neglecting “boundary effects” at switching events of the noise, the probability that a randomly picked ISI is a short interval of length \(T_d^+\) is thus

while the probability that it is a long interval in “-” state is

The mean ISI in this limit is given by

where we have used that \(1/(k_+ T_d^+) \gg 1\). Similarly, the second moment is given by

Firing rate and CV thus read

For the case where threshold crossings also happen in the “-” state (\(f(v) - \sigma > 0\) for all \(v\)), expressions can be derived using similar reasoning; here, we obtain

For symmetric dichotomous noise, the expressions simplify to

if \(f(v) - \sigma < 0\) for some \(v \in [v_R, v_T]\), and

if \(f(v) - \sigma > 0\) for all \(v \in [v_R, v_T]\).
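The counting argument above translates into a few lines of code. The sketch below is our own reading of that argument, not a transcription of the displayed expressions. It neglects boundary effects at switching events, treats the long interval in the sub-threshold case as one exponentially distributed residence time in the “-” state (mean \(1/k_-\)), and keeps only the leading order in \(k_+ T_d^+\). The function name and the convention that `Td_minus=None` encodes “no firing in the ‘-’ state” are hypothetical.

```python
import math


def quasi_static_rate_cv(Td_plus, k_plus, k_minus, Td_minus=None):
    """Quasi-static estimate of firing rate and CV for tau_c >> T_d^+ (and T_d^-).

    k_plus (k_minus): rate of leaving the "+" ("-") state.
    Td_minus=None: the neuron fires only in the "+" state (stable FP in "-").
    """
    if Td_minus is None:
        # Most ISIs are short (length Td_plus); a fraction ~ k_plus*Td_plus << 1
        # are long and last about one residence time in "-" (mean 1/k_minus,
        # exponentially distributed, so its second moment is 2/k_minus**2).
        m1 = Td_plus * (1.0 + k_plus / k_minus)
        m2 = Td_plus ** 2 + 2.0 * k_plus * Td_plus / k_minus ** 2
    else:
        # Tonic firing in both states: per visit, about 1/(k*Td) spikes are
        # emitted, so each ISI length is weighted by the spike count in that state.
        n_plus = 1.0 / (k_plus * Td_plus)
        n_minus = 1.0 / (k_minus * Td_minus)
        p_plus = n_plus / (n_plus + n_minus)
        m1 = p_plus * Td_plus + (1.0 - p_plus) * Td_minus
        m2 = p_plus * Td_plus ** 2 + (1.0 - p_plus) * Td_minus ** 2
    return 1.0 / m1, math.sqrt(max(m2 - m1 ** 2, 0.0)) / m1


# Symmetric noise, k = 1/(2 tau_c) with tau_c = 100, and T_d^+ = 1 (sub-threshold case).
print(quasi_static_rate_cv(1.0, 0.005, 0.005))
```

For symmetric noise with \(k = 1/(2\tau _c)\), the sub-threshold branch of this sketch gives \(r_0 = 1/(2T_d^+)\) and \(CV^2 = \tau _c/T_d^+ - 3/4\), i.e., a CV growing like \(\sqrt{\tau _c}\), while the supra-threshold branch gives the \(\tau _c\)-independent value \(CV = |T_d^- - T_d^+|/(2\sqrt{T_d^+ T_d^-})\).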

It remains to calculate

For the LIF, it reads

and for the QIF

We will discuss the implications of these formulas in different limits and scalings in the next section.
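The passage times \(T_d^\pm \), i.e., the deterministic times from \(v_R\) to \(v_T\) under \(\dot{v} = f(v) \pm \sigma \), have closed forms for both models. The sketch below assumes \(f(v) = \mu - v\) for the LIF and \(f(v) = \mu + v^2\) for the QIF. These forms are not spelled out in this part of the text and are an assumption on our part (they are consistent with the large-\(\mu \) scaling \((v_T-v_R)/\mu \) quoted later). Passing `s=0` gives \(T_d = \int _{v_R}^{v_T} dv/f(v)\); the function names are hypothetical.

```python
import math


def td_lif(mu, s, v_r, v_t):
    """Time from v_r to v_t under dv/dt = mu - v + s (assumed LIF form, s = +/-sigma or 0)."""
    a = mu + s  # fixed point of the frozen dynamics
    if a <= v_t:
        return math.inf  # trajectory saturates at a without reaching threshold
    return math.log((a - v_r) / (a - v_t))


def td_qif(mu, s, v_r, v_t):
    """Time from v_r to v_t under dv/dt = mu + v**2 + s (assumed QIF form).

    Only mu + s > 0 (no fixed points) is integrated; otherwise math.inf is
    returned, which is correct whenever the reset lies below the upper fixed
    point. Infinite reset/threshold values are allowed.
    """
    a = mu + s
    if a <= 0:
        return math.inf
    root = math.sqrt(a)
    return (math.atan(v_t / root) - math.atan(v_r / root)) / root


print(td_lif(1.6, 0.0, 0.0, 1.0))              # T_d of a supra-threshold LIF
print(td_qif(1.0, 0.0, -math.inf, math.inf))  # equals pi / sqrt(mu) for v_R, v_T -> -/+ inf
```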

6 Application: impact of correlations on firing rate and CV

Can we make general statements about the influence that temporal correlations in the input exert on the firing statistics of a neuron? Does the firing rate increase or decrease when we replace white input by correlated input? Does firing become more or less regular? In order to address such questions, we first need to clarify how to choose parameters to allow for a meaningful comparison between white noise and dichotomous noise. Here and in the following, we consider symmetric dichotomous noise (\(k_+ = k_- = k\)), but the discussion also applies to the asymmetric case.

When varying the correlation time \(\tau _c\), one faces the question of how to parameterize the noise process. One choice is to keep its variance \(\sigma ^2\) constant, which in turn means that the noise intensity \(D\) varies proportionally to \(\tau _c\), as \(D = \sigma ^2 \tau _c\).

Alternatively, one may choose \(\tau _c\) and \(D\) to parameterize the noise, with the consequence that \(\sigma ^2 = D/\tau _c\) varies inversely with \(\tau _c\). Choosing one or the other can have a decisive impact on the response of the system and raises questions about how to interpret the observed behavior: Is a change in the response really a manifestation of changed correlations in the input? Or is it rather a consequence of the simultaneous change in \(\sigma \) or \(D\), i.e., one that would also occur if \(\tau _c\) were fixed and only \(\sigma \) or \(D\) were varied?

The fact that white noise has infinite variance but finite intensity suggests that, in the limit of small correlation times, the appropriate choice is to fix the noise intensity \(D\). This is indeed a common parameterization in the literature when considering the white noise limit of colored noise (van den Broeck 1983; Broeck and Hänggi 1984; Bena 2006; Fourcaud and Brunel 2002; Brunel and Latham 2003). On the other hand, when \(\tau _c\) is large, a more sensible choice is to fix \(\sigma ^2\) (Schwalger and Schimansky-Geier 2008). This is also the parameterization chosen by Salinas and Sejnowski (2002). We note that an alternative parameterization that interpolates between these two extremes has been used by Alijani and Richardson (2011).
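The two parameterizations can be made concrete with a small conversion helper. This sketch assumes symmetric dichotomous noise with switching rate \(k\), correlation time \(\tau _c = 1/(2k)\) and intensity \(D = \sigma ^2 \tau _c\), as used in Fig. 5; the function name `dmp_params` is hypothetical.

```python
import math


def dmp_params(tau_c, D=None, sigma=None):
    """Return (k, sigma, D) for symmetric dichotomous noise with correlation time tau_c.

    Exactly one of D (noise intensity) or sigma (amplitude, variance sigma**2)
    must be given. Uses tau_c = 1/(2k) and D = sigma**2 * tau_c.
    """
    if (D is None) == (sigma is None):
        raise ValueError("specify exactly one of D or sigma")
    k = 1.0 / (2.0 * tau_c)
    if D is not None:
        sigma = math.sqrt(D / tau_c)  # fixed intensity: variance ~ 1/tau_c
    else:
        D = sigma ** 2 * tau_c        # fixed variance: intensity ~ tau_c
    return k, sigma, D


# Fixed intensity D = 1: the amplitude sigma shrinks as tau_c grows.
for tau_c in (0.01, 1.0, 100.0):
    k, sigma, D = dmp_params(tau_c, D=1.0)
    print(f"tau_c={tau_c:g}  k={k:g}  sigma={sigma:g}  D={D:g}")
```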

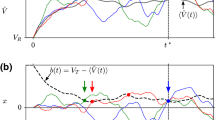

In Fig. 5A, B, we reproduce Fig. 12a of Salinas and Sejnowski (2002), which shows firing rate and CV of an LIF as a function of \(\tau _c\) for different values of \(\sigma \) (kept fixed along each curve). Additionally, we plot the same curves as a function of \(D\) and include results for a white-noise-driven LIF (Fig. 5C, D). Exact expressions for the white noise case have been known for a long time (Siegert 1951; for a summary of results for LIF and QIF, see, e.g., Vilela and Lindner 2009). First, we note that for the parameter values for which it converges, the series expansion for the LIF given by Salinas and Sejnowski (2002) is in excellent agreement with our quadrature expressions (the resulting curves are indistinguishable). It can be seen, however, that for small correlation times, much of the observed effect can already be explained by the increase in noise intensity; it is present even if the noise contains no correlations at all but is purely white (thick line in Fig. 5C, D). Put differently, the increase in firing rate and CV with \(\tau _c\) can be attributed to the increase of the noise intensity \(D=\sigma ^2 \tau _c\) at fixed variance. If we instead fix \(D\), the firing rate drops with increasing correlation time (illustrated by the symbols in Fig. 5A, C).

Firing rate (a, c) and CV (b, d) of an LIF at different correlation times/noise intensities. All parameters were chosen as in Fig. 12a of Salinas and Sejnowski (2002) (\(v_R = 1/3, v_T=1, \mu = 0.5\)). For each of the thin lines, only \(\tau _c\) was varied, while \(\sigma \) was kept constant. Both columns show curves for the same parameters, plotted as a function of \(\tau _c = 1/(2k)\) in a, b (reproducing Fig. 12a of Salinas and Sejnowski 2002) and as a function of the noise intensity \(D = \sigma ^2/(2k)\) in c, d. Four points in parameter space are marked by symbols to ease comparison of the two columns. The thick lines are analytical results for an LIF driven by Gaussian white noise with the same \(D\). The insets in the right column show the same plots over a wider range of noise intensities. It can be seen that for small correlation times, much of the change in both mean firing rate and CV can already be explained by the increase in noise intensity. As illustrated by the symbols, the firing rate drops with correlation time if the noise intensity is kept fixed. Note that to compare our non-dimensionalized model quantitatively to the plots in Salinas and Sejnowski (2002), time needs to be multiplied and rate divided by the membrane time constant \(\tau _m =\) 10 ms

The analysis in Salinas and Sejnowski (2002) thus applies to input with large correlation times; to make statements about input with small \(\tau _c\), one should instead keep the noise intensity \(D\) fixed. This is the parameterization we use in the rest of this section. To fully describe the range of neuronal responses one may observe in this parameterization, we also show and interpret results for large correlation times.
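The white-noise reference curves (thick lines) rest on the classical quadrature for the LIF (Siegert 1951). A minimal sketch, assuming the LIF form \(\dot{v} = \mu - v + \sqrt{2D}\,\xi (t)\) (so that the white-noise intensity matches \(D = \sigma ^2\tau _c\) of the DMP), evaluates \(1/r_0 = \sqrt{\pi }\int _{(\mu -v_T)/\sqrt{2D}}^{(\mu -v_R)/\sqrt{2D}} dz\, e^{z^2}\mathrm{erfc}(z)\) with plain Simpson integration. The function name is hypothetical, and the direct evaluation of \(e^{z^2}\mathrm{erfc}(z)\) limits it to moderate \(|z|\).

```python
import math


def lif_white_noise_rate(mu, D, v_r, v_t, n=2000):
    """Stationary rate of dv/dt = mu - v + sqrt(2D) xi(t) with reset v_r, threshold v_t.

    1/r0 = sqrt(pi) * int_{(mu - v_t)/sqrt(2D)}^{(mu - v_r)/sqrt(2D)} exp(z^2) erfc(z) dz,
    evaluated with composite Simpson's rule (n even). exp(z^2)*erfc(z) is computed
    directly, so |z| should stay below roughly 25 to avoid overflow.
    """
    if D <= 0 or n % 2:
        raise ValueError("need D > 0 and an even number of Simpson intervals")
    lo = (mu - v_t) / math.sqrt(2.0 * D)
    hi = (mu - v_r) / math.sqrt(2.0 * D)
    h = (hi - lo) / n

    def g(z):
        return math.exp(z * z) * math.erfc(z)

    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)  # Simpson weights 4, 2, 4, ...
    mean_isi = math.sqrt(math.pi) * total * h / 3.0
    return 1.0 / mean_isi


# Illustrative D with the Fig. 5 values v_R = 1/3, v_T = 1, mu = 0.5.
print(lif_white_noise_rate(mu=0.5, D=0.1, v_r=1.0 / 3.0, v_t=1.0))
```

In the weak-noise, supra-threshold limit the result approaches the deterministic rate \(1/\ln [(\mu -v_R)/(\mu -v_T)]\), which serves as a quick consistency check.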

The bounded support of the DMP has consequences that one needs to keep in mind when doing parameter scans: It must be possible for the neuron to fire in the “+” dynamics, which corresponds to demanding \(f(v) + \sigma > 0\) for all accessible \(v\). If the neuron is in a sub-threshold regime (\(f(v) < 0\) for at least some \(v\)), this imposes a constraint on the possible parameter values. For an LIF, they must fulfill the inequality

and for a QIF the inequality

If \(f(v)\) alone is already positive for all \(v\), the neuron is in a supra-threshold regime and there is no such constraint; however, for

the stable fixed point in the “-” dynamics disappears (see Fig. 6). This means that the neuron may fire even if the noise never leaves the “-” state, which has a strong qualitative effect on the rate and, especially, the regularity of firing.
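The constraints above amount to comparing the fixed points of the frozen “+” and “-” dynamics with the threshold. Assuming \(f(v) = \mu - v\) for the LIF and \(f(v) = \mu + v^2\) for the QIF (our assumption; for the QIF we further assume the reset lies below the upper fixed point, as for \(v_R \rightarrow -\infty \)), a minimal classifier of the three cases could look as follows. The labels and function names are hypothetical.

```python
def lif_regime(mu, sigma, v_t):
    """Classify an LIF with assumed f(v) = mu - v driven by dichotomous noise +/-sigma."""
    if mu + sigma <= v_t:
        return "silent"       # the "+" dynamics saturates at mu + sigma below threshold
    if mu - sigma <= v_t:
        return "plus only"    # stable FP of the "-" dynamics at v = mu - sigma
    return "both states"      # the "-" dynamics reaches threshold as well


def qif_regime(mu, sigma):
    """Same for a QIF with assumed f(v) = mu + v**2 and a reset below the upper FP."""
    if mu + sigma <= 0:
        return "silent"       # fixed points of the "+" dynamics block firing
    if mu - sigma <= 0:
        return "plus only"    # "-" dynamics has a stable FP at -sqrt(sigma - mu)
    return "both states"


print(lif_regime(0.8, 0.5, 1.0), qif_regime(1.0, 0.5))
```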

In Figs. 7 and 8, we plot the firing rate (A) and the CV (B) of an LIF and a QIF, respectively, when \(\tau _c\) is varied while \(D\) is kept constant. First of all, it is apparent that fixing \(D\) results in a vastly different picture compared to Salinas and Sejnowski (2002). In particular, for small \(\tau _c\) (where this is the appropriate parameterization), we find that the firing rate actually decreases with increasing \(\tau _c\); for both LIF and QIF, it is always lower than for white noise input with the same intensity (thick bars). At short correlation times, the CV of the QIF is always larger than for white noise input, while for the LIF in a moderately sub-threshold regime it can also be smaller. For larger \(\tau _c\), effects of our parameterization become clearly visible: In the supra-threshold case, the decrease in \(\sigma ^2\) eventually leads to the disappearance of the stable FP in the “-” dynamics (cf. Fig. 6), producing a kink in the curves. More dramatically, in the sub-threshold case, firing is no longer possible when the variance becomes too small (\(f(v)+\sigma < 0\) for at least some \(v\)); consequently, the curves end at such points.
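The text does not specify how the simulations (circles) were carried out. As an illustration only, and not the authors' simulation code, an LIF driven by dichotomous noise can even be simulated exactly, because the input is piecewise constant: between switches, \(v\) relaxes exponentially toward \(\mu \pm \sigma \), and threshold-crossing times follow in closed form. The sketch assumes \(f(v) = \mu - v\), noise values \(\pm \sigma \), and that \(k_+\) (\(k_-\)) is the rate of leaving the “+” (“-”) state, so that the mean residence times are \(1/k_\pm \); the noise keeps its state through a reset. The function name is hypothetical.

```python
import math
import random


def simulate_lif_dmp(mu, sigma, k_plus, k_minus, v_r, v_t, n_isi, seed=0):
    """Event-driven simulation of dv/dt = mu - v + eta(t), with eta = +/-sigma.

    k_plus (k_minus) is the rate of leaving the "+" ("-") state. Returns the
    firing rate and CV estimated from n_isi interspike intervals.
    """
    rng = random.Random(seed)
    # Draw the initial noise state from its stationary distribution.
    state = 1 if rng.random() < k_minus / (k_plus + k_minus) else -1
    v, t = v_r, 0.0
    spikes = []
    while len(spikes) < n_isi + 1:
        # Remaining residence time; redrawing it after a spike is exact
        # because the residence times are exponential (memoryless).
        dwell = rng.expovariate(k_plus if state == 1 else k_minus)
        a = mu + state * sigma  # fixed point of the current frozen dynamics
        t_hit = max(0.0, math.log((a - v) / (a - v_t))) if a > v_t else math.inf
        if t_hit <= dwell:
            t += t_hit
            spikes.append(t)
            v = v_r  # fire and reset; the noise keeps its state
        else:
            t += dwell
            v = a + (v - a) * math.exp(-dwell)  # exact relaxation toward a
            state = -state
    isis = [t2 - t1 for t1, t2 in zip(spikes, spikes[1:])]
    mean = sum(isis) / len(isis)
    var = sum((x - mean) ** 2 for x in isis) / len(isis)
    return 1.0 / mean, math.sqrt(var) / mean


rate, cv = simulate_lif_dmp(mu=0.8, sigma=0.5, k_plus=1.0, k_minus=1.0,
                            v_r=0.0, v_t=1.0, n_isi=2000, seed=1)
print(rate, cv)
```

Avoiding a fixed time step removes discretization error at threshold crossings; for the QIF, whose trajectories under constant input are also known in closed form, the same scheme could be adapted.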

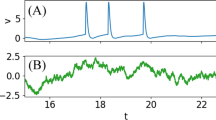

Two regimes of firing (here in an LIF). Shown are nonlinearities and FPs (a) and an example time course of input and voltage (b). A correlation time that is much larger than the deterministic time from reset to threshold leads to burst-like behavior if there is a stable FP in the “-” dynamics (left column). However, if the base current is high enough or \(\sigma \) is small enough, the neuron may fire even if the DMP \(\eta (t)\) is in the “-” state, leading to a much more regular spike train (right column)

Dependence of the firing rate (a) and the CV (b) of an LIF on the correlation time \(\tau _c\). We vary the correlation time while adjusting \(\sigma \) to keep \(D = 1\) constant. Different curves correspond to different base currents: far sub-threshold (\(\mu = -0.8\)), slightly sub-threshold (\(\mu = 0.8\)), and supra-threshold (\(\mu = 1.6\)). Thin lines: theory (quadrature results), circles: simulation results, dotted lines: quasi-static approximation (for \(\mu = 0.8\) and \(\mu =1.6\)). Thick bars: theory for white-noise-driven LIF. The firing rate can be seen to be always lower for correlated input compared to the white noise case, while the CV can either be lower or higher

Dependence of the firing rate (a) and the CV (b) of a QIF on the correlation time \(\tau _c\) (\(D = 1\)). Different curves correspond to different base currents \(\mu \) as indicated. Thin lines: theory (quadrature results), circles: simulation results, dotted lines: quasi-static approximation (for \(\mu = -0.1\) and \(\mu =1\)). Thick bars: theory for white-noise-driven QIF. Again, the firing rate can be seen to be always lower for correlated input compared to the white noise case, while the CV can either be lower or higher

The behavior for \(\tau _c \rightarrow \infty \) in the different parameterizations can also be read off directly from the quasi-static approximation, Eqs. (85)–(88): For fixed \(D\), the amplitude \(\sigma = \sqrt{D/\tau _c}\) vanishes as \(\tau _c\) grows. For a supra-threshold \(\mu \), both \(T_d^+\) and \(T_d^-\) then converge to \(T_d {:=} \int _{v_R}^{v_T} dv/f(v)\), so for \(\tau _c \rightarrow \infty \), the firing rate saturates (\(r_0 \rightarrow 1/T_d\)) and the CV tends to zero. In contrast, when \(\sigma ^2\) is fixed, \(T_d^+\) and \(T_d^-\) are independent of \(\tau _c\), and one directly sees that the firing rate saturates, while the CV either diverges \(\sim \sqrt{\tau _c}\) (sub-threshold) or saturates (supra-threshold). This is indeed the behavior described by Salinas and Sejnowski (2002). The divergence of the CV \(\sim \sqrt{\tau _c}\) was also reported for OU noise with fixed variance (Schwalger and Schimansky-Geier 2008).

In Figs. 9 and 10, we plot the firing rate (A) and the CV (B) of an LIF and a QIF, respectively, as the mean input \(\mu \) is varied for three different values of \(\tau _c\). The firing rate shows qualitatively the same behavior for LIF and QIF. It can be seen to be lower for correlated than for white noise input, and unsurprisingly, it increases monotonically with \(\mu \). The kinks in the curves for \(\tau _c= 1\) and \(\tau _c= 10\) occur where the stable FP in the “-” dynamics disappears (cf. Fig. 6). For the LIF, the CV may be slightly smaller than for the white noise case even where this FP still exists, whereas for the QIF, it is always larger than for the white noise case for small \(\mu \). In the limit \(\mu \rightarrow \infty \), both \(T_d^+\) and \(T_d^-\) decay \(\sim (v_T-v_R)/\mu \). Consequently, \(r_0\) diverges while the CV tends to zero (this is true for both parameterizations).

Firing rate (a) and CV (b) of an LIF as a function of the base current \(\mu \). Different curves correspond to different correlation times as indicated; \(D = 1\). Thin lines: theory (quadrature results), circles: simulation results, dotted lines: quasi-static approximation. Thick lines: theory for white noise case. No simulation results were plotted in the top panel to avoid an overly cluttered presentation. Again, the firing rate can be seen to be always lower for correlated input compared to the white noise case, while the CV may be either higher or lower. The kinks in the curves for both rate and CV occur where \(\mu \) becomes large enough that the neuron starts to fire tonically both in the “+” and the “-” state and are qualitatively well captured by the quasi-static approximation

Firing rate (a) and CV (b) of a QIF as a function of the base current \(\mu \). Different curves correspond to different correlation times as indicated; \(D = 1\). Thin lines: theory (quadrature results), circles: simulation results, dotted lines: quasi-static approximation. Thick lines: theory for white noise. No simulation results were plotted in the top panel to avoid an overly cluttered presentation. We see qualitatively the same behavior as for the LIF (Fig. 9)

Finally, in Figs. 11 and 12, we plot the firing rate (A) and the CV (B) of an LIF and a QIF, respectively, as a function of the noise intensity. The firing rate can again be seen to decrease with increasing \(\tau _c\). For the LIF, the CV shows a minimum at a finite noise intensity, both for white noise input as well as correlated input, if the correlation time is not too large. This is a signature of coherence resonance (Pikovsky and Kurths 1997). In contrast, the QIF is known not to exhibit coherence resonance (in the sense that the CV is minimal at a finite \(D\)) for white noise input; here, the CV monotonically decreases and, independent of parameters, approaches the value \(1/\sqrt{3}\) for large \(D\) (Lindner et al. 2003). It can be seen that this changes with correlated input, for which the CV diverges with increasing \(D\), leading again to a (potentially very broad) minimum. This is true for arbitrarily small correlation times: For any value of \(\tau _c\), there is a value of \(D\) at which \(T_d^+\) becomes small enough that the quasi-static approximation may be applied. From looking at Eq. (86), it is then apparent that any non-vanishing value of \(\tau _c\) eventually leads to a divergence of the CV.

Firing rate (a) and CV (b) of an LIF as a function of the noise intensity \(D\). Different curves correspond to different correlation times (as indicated) and share the same base current \(\mu = 0.8\). Thin lines: theory (quadrature results), circles: simulation results, dotted lines: quasi-static approximation. Thick lines: theory for white noise. The firing rate can be seen to be always lower than for the white noise case, while for this particular choice of \(\mu \), the CV is always higher

Firing rate (a) and CV (b) of a QIF as a function of the noise intensity \(D\). Different curves correspond to different correlation times (as indicated); \(\mu = -0.2\). Thin lines: theory (quadrature results), circles: simulation results, dotted lines: quasi-static approximation. Thick lines: theory for white noise. For a white noise driven QIF, the CV does not diverge in the high-noise limit but monotonically approaches a value of \(1/\sqrt{3}\), independent of parameters. Correlations in the input make this universal behavior disappear, leading to a (potentially very broad) minimum in the CV

7 Application: the limit of small correlation times

While our analytical expressions for firing rate and CV show excellent agreement with simulations, it is hard to derive general statements from them. For instance, evaluating our expressions for specific parameters has shown the firing rate to be lower for correlated than for white input for every parameter set we tried (see Figs. 7, 8, 9, 10, 11, 12), raising the question of whether this is always the case. However, this question cannot be settled by merely inspecting the recursive relations Eqs. (69)–(72).

In order to make the difference to white-noise input explicit, we thus expand the recursive relations for the moments of the FPT density for small values of \(\tau _c\), similar to what has been done for the case of Gaussian colored noise (Brunel and Sergi 1998; Fourcaud and Brunel 2002; Moreno et al. 2002; Brunel and Latham 2003). In this limit, \(\sigma = \sqrt{D/\tau _c}\) is large, so it is sufficient to consider the case with no unstable FP and a stable FP left of \(v_R\) (for the QIF, we will discuss the limit \(v_R \rightarrow -\infty , v_T \rightarrow \infty \) after doing the expansion in \(\tau _c\)). We thus start from Eqs. (69)–(72). The function appearing in the exponents, \(\phi (v)\), is readily expanded,

where we have used the potential

Consequently, we have

As \(\gamma _2(v)\) diverges for \(\tau _c\rightarrow 0 \), it is advantageous to rewrite the recursive relations in terms of \(\hat{Q}_n(v) {:=} Q_n(v)/\sigma \); after replacing \(\sigma \) by \(\sqrt{D/\tau _c}\), they read

Looking at the above equations, it is apparent that the lowest order contribution due to correlations will in general be of order \(\sqrt{\tau _c}\). Expanding in powers of \(\sqrt{\tau _c}\) and letting \(v_- \rightarrow -\infty \), we obtain

where

Equations (104) and (105) give the recursive relations for the FPT moments under Gaussian white noise input (Siegert 1951; Lindner 2004b). When the neuron is driven by dichotomous noise, these FPT moments undergo a correction [Eqs. (106) and (107)] that, to lowest order, is in general proportional to \(\sqrt{\tau _c}\). Interestingly, this \(\sqrt{\tau _c}\) behavior was also found for LIFs driven by an Ornstein–Uhlenbeck (OU) process, a Gaussian colored noise (Brunel and Sergi 1998; Moreno et al. 2002).

In Fig. 13, we compare the first-order approximation to firing rate (A) and CV (B) of an LIF to numerical simulations. It is apparent that for small \(\tau _c\), the correction indeed scales with the square root of the correlation time. For this particular choice of parameters, the first-order correction provides a decent description over the whole range of admissible \(\tau _c\) values (for small \(\tau _c\), we always find it in excellent agreement with simulations).
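The log–log check in the insets of Fig. 13 amounts to estimating the exponent of the deviation from the white-noise result. A small least-squares helper (hypothetical, not from the paper) that could be applied to pairs \((\tau _c, |r_0 - r_0^{\mathrm{white}}|)\), e.g., obtained from simulations, is sketched below; an exponent close to 1/2 indicates the \(\sqrt{\tau _c}\) scaling.

```python
import math


def loglog_slope(xs, ys):
    """Least-squares slope of log|y| versus log(x), i.e. the exponent b in |y| ~ c * x**b."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    lx = [math.log(x) for x in xs]
    ly = [math.log(abs(y)) for y in ys]
    mx = sum(lx) / len(lx)
    my = sum(ly) / len(ly)
    sxy = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    sxx = sum((a - mx) ** 2 for a in lx)
    return sxy / sxx


# Synthetic check: a (negative) deviation exactly proportional to sqrt(tau_c).
taus = [1e-4, 1e-3, 1e-2, 1e-1]
print(loglog_slope(taus, [-0.3 * math.sqrt(tc) for tc in taus]))
```

Fitting only the smallest \(\tau _c\) values keeps higher-order terms from biasing the estimated exponent.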

For a QIF driven by an OU process, the correction to the firing rate has been reported to be linear in \(\tau _c\), owing to the placement of reset and threshold at \(v_R \rightarrow -\infty \) and \(v_T \rightarrow \infty \) (Brunel and Latham 2003). Indeed, in this limit the \(\sqrt{\tau _c}\) corrections vanish also for dichotomous noise, as we show in Appendix 10.

Firing rate (a) and CV (b) of an LIF in the limit of small correlation times (\(\mu = 0.5, D = 0.15\)). Circles: Simulation results, thick lines: theory for white noise, thin lines: first-order (\(\sqrt{\tau _c}\)) approximation for dichotomous noise. The insets show the absolute difference between a white-noise-driven LIF and simulation results (circles) as well as the first-order correction (lines) in a log–log plot, demonstrating that, to the lowest order, the correction is indeed \(\propto \sqrt{\tau _c}\)

Finally, we can now address the question under which conditions the correction to the firing rate is negative. Consider the correction to the mean FPT:

This correction is positive if

where

This is certainly the case if \(\vartheta (v)\) is monotonically increasing, i.e., \(\vartheta '(v) > 0\) or

If \(U'(v) > 0\) this is always true, so let us focus on \(U'(v) < 0\). In this case, if \(U'(x)\) is monotonically increasing up to \(v\), then \(-U'(x) \ge -U'(v) \quad \forall \quad x < v\) and

Thus, for potential shapes with positive curvature, such as the quadratic potential of the LIF, the correction to the mean FPT is indeed always positive, meaning that to lowest order in \(\tau _c\), the firing rate of an LIF always decreases with increasing correlation time.

8 Summary and discussion

In this paper, we have theoretically studied IF neurons driven by asymmetric dichotomous noise. We have derived exact analytical expressions for the stationary probability distribution of voltages as well as for the moments of the ISI density. In doing this, we have taken care to ensure the proper treatment of fixed points in the “-” dynamics, which has allowed us to obtain valid expressions in all parameter regimes.

As a first application, we have used our theory to study the impact of temporally correlated input on neural firing, using symmetric dichotomous noise. We have argued that it is advantageous to keep the noise intensity fixed when exploring the effect of input with short correlation times, as opposed to keeping the variance fixed (Salinas and Sejnowski 2002), which is the more appropriate choice for long correlation times. We have then studied the firing rate and CV of LIF and QIF neurons when varying either the correlation time \(\tau _c\), the base current \(\mu \), or the noise intensity \(D\). We have found that, compared to neurons driven by Gaussian white noise with the same \(D\), the firing rate always decreases when the input is correlated, while the CV can be either higher or lower.

When varying the base current \(\mu \), we find that CVs change abruptly at a certain value of \(\mu \) when \(\tau _c\) is large, but not for small or vanishing \(\tau _c\). This could in principle be used to infer properties of presynaptic activity from single-cell recordings in vivo. By measuring the spiking activity of a cell at different values of an injected current, an experimenter could replicate Figs. 9B and 10B. According to our theory, input that can be described by a two-state process with a long correlation time would manifest itself in a sudden drop of the CV as \(\mu \) is increased. Such input could, for example, arise from up and down states of a presynaptic population. Conversely, input with short or vanishing correlation times would lead to a smoother and weaker dependence of the CV on \(\mu \).

Finally, varying \(D\), we found that under correlated input, the CV of a QIF no longer converges to the universal value of \(1/\sqrt{3}\) for large \(D\), as found for white noise (Lindner et al. 2003), but instead diverges. This means that with correlated input, also QIF neurons may exhibit a weak form of coherence resonance (in the sense that the CV is minimal at a finite value of \(D\)).

We have studied the recursive relations for the ISI moments in the limit of small correlation times and found that, in general, the first-order correction with respect to the diffusion approximation is proportional to \(\sqrt{\tau _c}\). The same had previously been observed for LIF neurons driven by an OU process, a different colored noise (Brunel and Sergi 1998; Moreno et al. 2002). For QIF neurons driven by OU processes, the firing rate correction has been shown to be of order \(\tau _c\) (Brunel and Latham 2003), which is also recovered in our case, as for \(v_R \rightarrow -\infty , v_T \rightarrow \infty \), the corrections proportional to \(\sqrt{\tau _c}\) can be shown to vanish. We have also used the expansion in small \(\tau _c\) to prove that for potentials with positive curvature (as is the case for LIF neurons), corrections to the firing rate are always negative (to the lowest order in \(\tau _c\)).

In addition to the qualitative similarities between neurons driven by dichotomous and OU processes at small correlation times, we have found that they agree well even quantitatively (results not shown). We thus expect our conclusions for small correlation times to be relevant also for other noise processes and think that they may help to clarify the effect of input correlations on neural firing, as well as its dependence on the specific choice of neuron model. Beyond the study of input correlations, our theory allows for the exploration of excitatory shot-noise input, as well as the effect of network up and down states. These applications will be pursued elsewhere.

9 Appendix: FPT recursive relations in the general case, transformed to ease numerical integration

Here, we transform the recursive relations for the moments of the FPT density in order to facilitate stable numerical integration near fixed points. We start by considering how Eqs. (62)–(65) behave at unstable FPs. As pointed out, setting \(D_n=0\) and \(E_n = v_U\) ensures that \(Q_0(v)\) and \(Q_n(v)\) do not diverge at an unstable FP \(v_U\). Also, this choice entails \(Q_n(v_U) = J_n(v_U)\), which means that no divergence occurs in the integrand of Eq. (63),

Numerically, however, relying on this cancellation turns out to be problematic. It is thus advisable to rewrite the recursive relations, as shown in the following.

We define

With this, \(J_{n>0}(v)\) becomes

where we have already satisfied the boundary condition for \(J_{n>0}(v)\) by setting the lower integration limit to \(v_-\). This means that, going from \(\{J_n(v), Q_n(v)\}\) to \(\{J_n(v), H_n(v)\}\), we now only need to pay attention to unstable FPs in the calculation of \(H_n(v)\), rather than in both calculations. For the calculation of \(H_n(v)\), we again need to split the voltage range from \(v_-\) to \(v_T\) into intervals at fixed points of the “-” dynamics.

Expressions for the solution in the \(i\)th interval, \(H_n^i(v)\), can be obtained by inserting Eqs. (62)–(65) into Eq. (116). Exploiting that

one may rewrite the integrand to obtain

As for \(Q_n(v)\), the solutions in different intervals differ only in their integration constant \(c_i\).

It is easily verified that the boundary conditions for \(Q_n(v)\) (see Sect. 4) are satisfied by the following choice for \(c_i\):

Denoting the index of the rightmost interval by \(N\) and the left and right boundaries of the \(i\)th interval by \(l_i\) and \(r_i\), we have

These equations, together with Eqs. (121) and (122), allow for a recursive calculation of the \(n\)-th ISI moment in the general case.

10 Proof that the \(\sqrt{\tau _c}\) correction vanishes for a QIF

Consider first the correction to the mean FPT:

If \(U(v) \rightarrow \infty \) as \(v \rightarrow -\infty \) (as is the case for a QIF), l’Hôpital’s rule can be used to show that the second term goes to zero as \(v_R\) goes to \(-\infty \). It then remains to show that

If the potential additionally has the properties

1. \(U(v) \rightarrow -\infty \) for \(v \rightarrow \infty \),
2. there is an \(a\) such that \(U'(v) < -D v \quad \forall \quad v > a\),

as is the case for the cubic potential of a QIF, then

Thus, \(\lim _{v_T \rightarrow \infty } \vartheta (v_T)\) is smaller than any finite value \(c\), because we can always choose an \(a > 1/c\). The same reasoning can be used to show that the \(\sqrt{\tau _c}\) contribution to the \(n\)-th FPT moment vanishes.

References

Alijani AK, Richardson MJ (2011) Rate response of neurons subject to fast or frozen noise: from stochastic and homogeneous to deterministic and heterogeneous populations. Phys Rev E 84(1):011919

Astumian RD, Bier M (1994) Fluctuation driven ratchets: molecular motors. Phys Rev Lett 72(11):1766

Badel L, Lefort S, Brette R, Petersen CC, Gerstner W, Richardson MJ (2008) Dynamic IV curves are reliable predictors of naturalistic pyramidal-neuron voltage traces. J Neurophysiol 99(2):656–666

Bair W, Koch C, Newsome W, Britten K (1994) Power spectrum analysis of bursting cells in area MT in the behaving monkey. J Neurosci 14:2870

Bena I (2006) Dichotomous Markov noise: exact results for out-of-equilibrium systems. Int J Mod Phys B 20(20):2825–2888

Bena I, van den Broeck C, Kawai R, Lindenberg K (2002) Nonlinear response with dichotomous noise. Phys Rev E 66(4):045603

Brunel N (2000) Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons. J Comput Neurosci 8(3):183–208

Brunel N, Latham PE (2003) Firing rate of the noisy quadratic integrate-and-fire neuron. Neural Comput 15(10):2281–2306

Brunel N, Sergi S (1998) Firing frequency of leaky intergrate-and-fire neurons with synaptic current dynamics. J Theor Biol 195(1):87–95

Brunel N, Chance FS, Fourcaud N, Abbott LF (2001) Effects of synaptic noise and filtering on the frequency response of spiking neurons. Phys Rev Lett 86:2186–2189

Burkitt AN (2006a) A review of the integrate-and-fire neuron model: I. Homogeneous synaptic input. Biol Cybern 95(1):1–19

Burkitt AN (2006b) A review of the integrate-and-fire neuron model: II. Inhomogeneous synaptic input and network properties. Biol Cybern 95(2):97–112

Cowan RL, Wilson CJ (1994) Spontaneous firing patterns and axonal projections of single corticostriatal neurons in the rat medial agranular cortex. J Neurophysiol 71(1):17–32

De La Rocha J, Doiron B, Shea-Brown E, Josic K, Reyes A (2007) Correlation between neural spike trains increases with firing rate. Nature 448(7155):802–806

Fitzhugh R (1983) Statistical properties of the asymmetric random telegraph signal, with applications to single-channel analysis. Math Biosci 64(1):75–89

Fourcaud N, Brunel N (2002) Dynamics of the firing probability of noisy integrate-and-fire neurons. Neural Comput 14(9):2057–2110

Goychuk I, Hänggi P (2000) Stochastic resonance in ion channels characterized by information theory. Phys Rev E 61:4272–4280

Hänggi P, Jung P (1995) Colored noise in dynamical systems. Adv Chem Phys 89:239–326

Horsthemke W, Lefever R (1981) Voltage-noise-induced transitions in electrically excitable membranes. Biophys J 35(2):415–432

Horsthemke W, Lefever R (1984) Noise induced transitions. Springer, Berlin

Lindner B (2004a) Interspike interval statistics of neurons driven by colored noise. Phys Rev E 69(2):022901

Lindner B (2004b) Moments of the first passage time under external driving. J Stat Phys 117(3–4):703–737

Lindner B (2006) Superposition of many independent spike trains is generally not a Poisson process. Phys Rev E 73(2):022901

Lindner B, Schimansky-Geier L (2001) Transmission of noise coded versus additive signals through a neuronal ensemble. Phys Rev Lett 86:2934–2937

Lindner B, Longtin A, Bulsara A (2003) Analytic expressions for rate and CV of a type I neuron driven by white Gaussian noise. Neural Comput 15(8):1761–1788

Lindner B, Gangloff D, Longtin A, Lewis J (2009) Broadband coding with dynamic synapses. J Neurosci 29(7):2076–2087

Liu YH, Wang XJ (2001) Spike-frequency adaptation of a generalized leaky integrate-and-fire model neuron. J Comput Neurosci 10(1):25–45

Markram H, Lübke J, Frotscher M, Roth A, Sakmann B (1997) Physiology and anatomy of synaptic connections between thick tufted pyramidal neurones in the developing rat neocortex. J Physiol 500(Pt 2):409

Middleton J, Chacron M, Lindner B, Longtin A (2003) Firing statistics of a neuron model driven by long-range correlated noise. Phys Rev E 68(2):021920

Moreno R, de La Rocha J, Renart A, Parga N (2002) Response of spiking neurons to correlated inputs. Phys Rev Lett 89(28):288101

Pikovsky AS, Kurths J (1997) Coherence resonance in a noise-driven excitable system. Phys Rev Lett 78:775–778

Richardson MJ, Gerstner W (2005) Synaptic shot noise and conductance fluctuations affect the membrane voltage with equal significance. Neural Comput 17(4):923–947

Richardson MJ, Swarbrick R (2010) Firing-rate response of a neuron receiving excitatory and inhibitory synaptic shot noise. Phys Rev Lett 105(17):178102

Rugar D, Budakian R, Mamin H, Chui B (2004) Single spin detection by magnetic resonance force microscopy. Nature 430(6997):329–332

Salinas E, Sejnowski TJ (2002) Integrate-and-fire neurons driven by correlated stochastic input. Neural Comput 14(9):2111–2155

Schwalger T, Schimansky-Geier L (2008) Interspike interval statistics of a leaky integrate-and-fire neuron driven by Gaussian noise with large correlation times. Phys Rev E 77(3):031914

Schwalger T, Fisch K, Benda J, Lindner B (2010) How noisy adaptation of neurons shapes interspike interval histograms and correlations. PLoS Comput Biol 6(12):e1001026

Shadlen M, Newsome W (1998) The variable discharge of cortical neurons: implications for connectivity, computation, and information coding. J Neurosci 18(10):3870–3896

Shu Y, Hasenstaub A, McCormick DA (2003) Turning on and off recurrent balanced cortical activity. Nature 423(6937):288–293

Siegert AJF (1951) On the first passage time probability problem. Phys Rev 81:617–623

Softky W, Koch C (1993) The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPs. J Neurosci 13(1):334–350

van den Broeck C (1983) On the relation between white shot noise, Gaussian white noise, and the dichotomic Markov process. J Stat Phys 31(3):467–483

van den Broeck C, Hänggi P (1984) Activation rates for nonlinear stochastic flows driven by non-Gaussian noise. Phys Rev A 30(5):2730

Vilela RD, Lindner B (2009) Are the input parameters of white noise driven integrate and fire neurons uniquely determined by rate and CV? J Theor Biol 257(1):90–99

Acknowledgments

We thank Tilo Schwalger and Finn Müller-Hansen for discussions and helpful comments on an earlier version of the manuscript. This work was supported by a Bundesministerium für Bildung und Forschung grant (FKZ: 01GQ1001A) and the research training group GRK1589/1.

About this article

Cite this article

Droste, F., Lindner, B. Integrate-and-fire neurons driven by asymmetric dichotomous noise. Biol Cybern 108, 825–843 (2014). https://doi.org/10.1007/s00422-014-0621-7

DOI: https://doi.org/10.1007/s00422-014-0621-7