Abstract

The SAMPL Challenges aim to focus the biomolecular and physical modeling community on issues that limit the accuracy of predictive modeling of protein-ligand binding for rational drug design. In the SAMPL5 log D Challenge, designed to benchmark the accuracy of methods for predicting drug-like small molecule transfer free energies from aqueous to nonpolar phases, participants found it difficult to make accurate predictions due to the complexity of protonation state issues. In the SAMPL6 log P Challenge, we asked participants to make blind predictions of the octanol–water partition coefficients of neutral species of 11 compounds and assessed how well these methods performed absent the complication of protonation state effects. This challenge builds on the SAMPL6 p\({K}_{{\rm a}}\) Challenge, which asked participants to predict p\({K}_{{\rm a}}\) values of a superset of the compounds considered in this log P challenge. Blind prediction sets of 91 prediction methods were collected from 27 research groups, spanning a variety of quantum mechanics (QM) or molecular mechanics (MM)-based physical methods, knowledge-based empirical methods, and mixed approaches. There was a 50% increase in the number of participating groups and a 20% increase in the number of submissions compared to the SAMPL5 log D Challenge. Overall, the accuracy of octanol–water log P predictions in SAMPL6 Challenge was higher than cyclohexane–water log D predictions in SAMPL5, likely because modeling only the neutral species was necessary for log P and several categories of method benefited from the vast amounts of experimental octanol–water log P data. There were many highly accurate methods: 10 diverse methods achieved RMSE less than 0.5 log P units. These included QM-based methods, empirical methods, and mixed methods with physical modeling supported with empirical corrections. A comparison of physical modeling methods showed that QM-based methods outperformed MM-based methods. The average RMSE of the most accurate five MM-based, QM-based, empirical, and mixed approach methods based on RMSE were 0.92 ± 0.13, 0.48 ± 0.06, 0.47 ± 0.05, and 0.50 ± 0.06, respectively.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The development of computational biomolecular modeling methodologies is motivated by the goal of enabling quantitative molecular design, prediction of properties and biomolecular interactions and achieving a detailed understanding of mechanisms (chemical and biological) via computational predictions. While many approaches are available for making such predictions, methods often suffer from poor or unpredictable performance, ultimately limiting their predictive power. It is often difficult to know which method would give the most accurate predictions for a target system without extensive evaluation of methods. However, such extensive comparative evaluations are infrequent and difficult to perform, partly because no single group has expertise in or access to all relevant methods and also because of the scarcity of blind experimental data sets that would allow prospective evaluations. In addition, many publications which report method comparisons for a target system constructs these studies with the intention of highlighting the success of a method being developed.

The Statistical Assessment of the Modeling of Proteins and Ligands (SAMPL) Challenges [http://samplchallenges.github.io] provide a forum to test and compare methods with the following goals:

- 1.

Determine prospective predictive power rather than accuracy in retrospective tests.

- 2.

Allow a head to head comparison of a wide variety of methods on the same data.

Regular SAMPL challenges focus attention on modeling areas that need improvement, and sometimes revisit key test systems, providing a crowdsourcing mechanism to drive progress. Systems are carefully selected to create challenges of gradually increasing complexity spanning between prediction objectives that are tractable and that are understood to be slightly beyond the capabilities of contemporary methods. So far, most frequent SAMPL challenges have been on solvation and binding systems. Iterated blind prediction challenges have played a key role in driving innovations in the prediction of physical properties and binding. Here we report on a SAMPL6 log P Challenge on octanol-water partition coefficients, treating molecules resembling fragments of kinase inhibitors. This is a follow-on to the earlier SAMPL6 p\({K}_{{\rm a}}\) Challenge which included the same compounds.

The partition coefficient describes the equilibrium concentration ratio of the neutral state of a substance between two phases:

The log P challenge examines how well we model transfer free energy of molecules between different solvent environments in the absence of any complications coming from predicting protonation states and p\({K}_{{\rm a}}\) values. Assessing log P prediction accuracy also allows evaluating methods for modeling protein-ligand affinities in terms of how well they capture solvation effects.

History and motivation of the SAMPL challenges

The SAMPL blind challenges aim to focus the field of quantitative biomolecular modeling on major issues that limit the accuracy of protein-ligand binding prediction. Companion exercises such as the Drug Design Data Resource (D3R) blind challenges aim to assess the current accuracy of biomolecular modeling methods in predicting bound ligand poses and affinities on real drug discovery project data. D3R blind challenges serve as an accurate barometer for accuracy. However, due to the conflation of multiple accuracy-limiting problems in these complex test systems, it is difficult to derive clear insights into how to make further progress towards better accuracy.

Instead, SAMPL seeks to isolate and focus attention on individual accuracy-limiting issues. We aim to field blind challenges just at the limit of tractability in order to identify underlying sources of error and help overcome these challenges. Working on similar model systems or the same target with new blinded datasets in multiple iterations of prediction challenges maximize our ability to learn from successes and failures. Often, these challenges focus on physical properties of high relevance to drug discovery in their own right, such as partition or distribution coefficients critical to the development of potent, selective, and bioavailable compounds (Fig. 1).

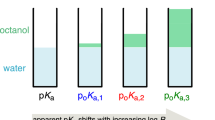

The desire to deconvolute the distinct sources of error contributing to the large errors observed in the SAMPL5 log D Challenge motivated the separation of p\({K}_{{\rm a}}\) and log P challenges in SAMPL6. The SAMPL6 p\({K}_{{\rm a}}\) and log P Challenges aim to evaluate protonation state predictions of small molecules in water and transfer free energy predictions between two solvents, isolating these prediction problems

The partition coefficient (log P) and the distribution coefficient (log D) are driven by the free energy of transfer from an aqueous to a nonpolar phase. Transfer free energy of only neutral species is considered for log P, whereas both neutral and ionized species contribute to log D. Such solute partitioning models are a simple proxy for the transfer free energy of a drug-like molecule to a relatively hydrophobic receptor binding pocket, in the absence of specific interactions. Protein-ligand binding equilibrium is analogous to the partitioning of a small molecule between two environments: a protein binding site and an aqueous phase. Methods that employ thermodynamic cycles—such as free energy calculations—can, therefore, use similar strategies for calculating binding affinities and partition coefficients, and given the similarity in technique and environment, we might expect the accuracy on log P and log D may be related to the accuracy expected from binding calculations, or at least a lower bound for the error these techniques might make in more complex protein-ligand binding phenomena. Evaluating log P or log D predictions makes it far easier to probe the accuracy of computational tools used to model protein-ligand interactions and to identify sources of error to be corrected. For physical modeling approaches, evaluation of partition coefficient predictions comes with the additional advantage of separating force field accuracy from protonation state modeling challenges (Fig. 1).

The SAMPL5 log D Challenge uncovered surprisingly large modeling errors

Hydration free energies formed the basis of several previous SAMPL challenges, but distribution coefficients (log D) capture many of the same physical effects—namely, solvation in the respective solvents—and thus replaced hydration free energies in SAMPL5 [1, 2]. This choice was also driven by a lack of ongoing experimental work with the potential to generate new hydration free energy data for blind challenges. Octanol–water log D is also a property relevant to drug discovery, often used as a surrogate for lipophilicity, further justifying its choice for a SAMPL challenge. The SAMPL5 log D Challenge allowed decoupled evaluation of small molecule solvation models (captured by the transfer free energy between environments) from other issues, such as the sampling of slow receptor conformational degrees of freedom. This blind challenge generated considerable insight into the importance of various physical effects [1, 2]; see the SAMPL5 special issue (https://springerlink.bibliotecabuap.elogim.com/journal/10822/30/11/page/1) for more details.

The SAMPL5 log D Challenge used cyclohexane as an apolar solvent, partly to further simplify this challenge by avoiding some complexities of octanol. In particular, log D is typically measured using water-saturated octanol for the nonaqueous phase, which can give rise to several challenges in modeling accuracy such as a heterogeneous environment with potentially micelle-like bubbles [3,4,5,6], resulting in relatively slow solute transitions between environments [4, 7]. The precise water content of wet octanol is unknown, as it is affected by environmental conditions such as temperature as well as the presence of solutes, the organic molecule of interest, and salts (added to control pH and ionic strength). Inverse micelles transiently formed in wet octanol create spatial heterogeneity and can have long correlation times in molecular dynamics simulations, potentially presenting a challenge to modern simulation methods [3,4,5,6], resulting in relatively slow solute transitions between environments [4, 7].

Performance in the SAMPL5 log D Challenge was much poorer than the organizers initially expected—and than would have been predicted based on past accuracy in hydration free energy predictions—and highlighted the difficulty of accurately accounting for protonation state effects [2]. In many SAMPL5 submissions, participants treated distribution coefficients (log D) as if they were asked to predict partition coefficients (log P). The difference between log D (which reflects the transfer free energy at a given pH including the effects of accessing all equilibrium protonation states of the solute in each phase) and log P (which reflects aqueous-to-apolar phase transfer free energies of the neutral species only) proved particularly important. In some cases, other effects like the presence of a small amount of water in cyclohexane may have also played a role.

Because the SAMPL5 log D Challenge highlighted the difficulty in correctly predicting transfer free energies involving protonation states (the best methods obtained an RMSE of 2.5 log units [2]), the SAMPL6 Challenge aimed to further subdivide the assessment of modeling accuracy into two challenges: A small-molecule p\({K}_{{\rm a}}\) prediction challenge [8] and a log P challenge. The SAMPL6 p\({K}_{{\rm a}}\) Challenge asked participants to predict microscopic and macroscopic acid dissociation constants (p\({K}_{{\rm a}}\)s) of 24 small organic molecules and concluded in early 2018. Details of the challenge are maintained on the GitHub repository (https://github.com/samplchallenges/SAMPL6/). p\({K}_{{\rm a}}\) prediction proved to be difficult; a large number of methods showed RMSE in the range of 1–2 p\({K}_{{\rm a}}\) units, with only a handful achieving less than 1 p\({K}_{{\rm a}}\) unit. While these results were in line with findings from the SAMPL5 Challenge that protonation state predictions were one of the major sources of error in computed log D values, the present challenge allows us to delve deeper into modeling the solvation of neutral species by focusing on log P.

The SAMPL6 log P Challenge focused on small molecules resembling kinase inhibitor fragments

By measuring the log P of a series of compounds resembling fragments of kinase inhibitors—a subset of those used in the SAMPL6 p\({K}_{{\rm a}}\) Prediction Challenge—we sought to assess the limitations of force field accuracy in modeling transfer free energies of drug-like molecules in binding-like processes. This time, the challenge featured octanol as the apolar medium, partly to assess whether wet octanol presented as significant a problem as previously suspected. Participants were asked to predict the partition coefficient (log P) of the neutral species between octanol and water phases. Here, we focus on several key aspects of the design and analysis of this challenge, particularly the staging, analysis, results, and lessons learned. Experimental details of the collection of the log P values are reported elsewhere [9]. One of the goals of this challenge is to encourage prediction of model uncertainties (an estimate of the inaccuracy with which your model predicts the physical property) since the ability to tell when methods are unreliable would be very useful for increasing the application potential and impact of computational methods.

The SAMPL challenges aim to advance predictive quantitative models

The SAMPL challenges have a key focus on lessons learned. In principle, they are a challenge or competition, but we see it as far more important to learn how to improve accuracy than to announce the top-performing methods. To aid in learning as much as possible, this overview paper provides an overall assessment of performance and some analysis of the relative performance of different methods in different categories and provides some insights into lessons we have learned (and/or other participants have learned). Additionally, this work presents our own reference calculations which provide points of comparison for participants (some relatively standard and some more recent, especially in the physical category) and also allow us to provide some additional lessons learned. The data, from all participants and all reference calculations, is made freely available (see “Code and data availability”) to allow others to compare methods retrospectively and plumb the data for additional lessons.

Common computational approaches for predicting log P

Many methods have been developed to predict octanol–water log P values of small organic molecules, including physical modeling (QM and MM-based methods) and knowledge-based empirical prediction approaches (atom-contribution approaches and QSPR). There are also log P prediction methods that combine the strengths of physical and empirical approaches. Here, we briefly highlight some of the major ideas and background behind physical and empirical log P prediction methods.

Physical modeling approaches for predicting log P

Physical approaches begin with a detailed atomistic model of the solute and its conformation and attempt to estimate partitioning behavior directly from that. Details depend on the approach employed.

Quantum mechanical (QM) approaches for predicting log P

QM approaches to solvation modeling utilize a numerical solution of the Schrödinger equation to estimate solvation free energies (and thereby partitioning) directly from first principles. There are several approaches for these calculations, and discussing them is outside the scope of this work. However, it is important to note that direct solution of the underlying equations, especially when coupled with dynamics, becomes impractical for large systems such as molecules in solution. Several approximations must be made before such approaches can be applied to estimating phase transfer free energies. These typical approximations include assuming the solute has one or a small number of dominant conformations in each phase being considered and using an implicit solvent model to represent the solvent. The basis set and level of theory can be important choices and can significantly affect the accuracy of calculated values. Additionally, the protonation or tautomerization state(s) selected as an input can also introduce errors. With QM approaches possible protonation states and tautomers can be evaluated to find the lowest energy state in each solvent. However, if these estimates are erroneous, any errors will propagate into the final transfer free energy and log P predictions.

Implicit solvent models can be used, in the context of the present SAMPL, to represent both water and octanol. Such models are often parameterized—sometimes highly so—based on experimental solvation free energy data. This means that such models perform well for solvents (and solute chemistries) where solvation free energy data is abundant (as in the present challenge) but are often less successful when far less training data is available. In this respect, QM methods, by virtue of the solvent model, have some degree of overlap with the empirical methods discussed further below.

Several solvent models are particularly common, and in the present challenge, two were employed by multiple submissions. One was Marenich, Cramer, and Truhlar’s SMD solvation model [10], which derives its electrostatics from the widely used IEF-PCM model and was empirically trained on various solutes/solvents utilizing a total of some 2821 different solvation measurements. This model has been employed in various SAMPL challenges in the past in the context of calculation of hydration free energies, including the earliest SAMPL challenges [11, 12]. Others in the Cramer–Truhlar series of solvent models were also used, including the 2012 SM12 solvation model, which is based on the generalized born (GB) approximation [13]. Another set of submissions also used the reference interaction site model (RISM) integral equation approach, discussed further below.

The COSMO-RS solvation model is another method utilized in this context which covers a particularly broad range of solvents, typically quite well [14,15,16,17,18]. In the present challenge, a “Cosmoquick” variant was also applied and falls into the “Mixed” method category, as it utilizes additional empirical adjustments. The COSMO-RS implementation of COSMOtherm takes into account conformational effects to some extent; the chemical potential in each phase is computed using the Boltzmann weights of a fixed set of conformers.

In general, while choice of solvation model can be a major factor impacting QM approaches, the neglect of conformational changes means these approaches typically (though not always) neglect any possibility of significant change of conformation on transfer between phases and they simply estimate solvation by the difference in (estimated) solvation free energies for each phase of a fixed conformation. Additionally, solute entropy is often neglected, assuming the single-conformation solvation free energy plays the primary role in driving partitioning between phases. In addition to directly estimating solvation, QM approaches can also be used to drive the selection of the gas- or solution-phase tautomer, and thus can be used to drive the choice of inputs for MM approaches discussed further below.

Integral equation-based approaches Integral equation approaches provide an alternate approach to solvation modeling (for both water and non-water solvents) and have been applied in SAMPL challenges within both the MM and QM frameworks [19,20,21]. In this particular challenge, however, the employed approaches were entirely QM and utilized the reference interaction site model (RISM) approach [22,23,24]. Additionally, as noted above, the IEF-PCM model used by the SMD solvation model (discussed above) is also an integral equation approach. Practical implementation details mean that RISM approaches typically have one to a few adjustable parameters (e.g. four [25]) which are empirically tuned to experimental solvation free energies, in contrast to the SMD and SM-n series of solvation models which tend to have a larger number of adjustable parameters and thus require larger training sets. In this particular SAMPL challenge, RISM participation was limited to embedded cluster EC-RISM methods [19, 22, 26], which combine RISM with a quantum mechanical treatment of the solute.

Molecular mechanics (MM) approaches for predicting log P

MM approaches to computing solvation and partition free energies (and thus log P values), as typically applied in SAMPL, use a force field or energy model which gives the energy (and, usually, forces) of a system as a function of the atomic positions. These models include all-atom fixed charge additive force fields, as well as polarizable force fields. Such approaches typically (though not always) are applied in a dynamical framework, integrating the equations of motion to solve for the time evolution of the system, though Monte Carlo approaches are also possible.

MM-based methods are typically coupled with free energy calculations to estimate partitioning. Often, these are so-called alchemical methods that utilize a non-physical thermodynamic cycle to estimate transfer between phases, though pulling-based techniques that directly model phase transfer are in principle possible [27, 28]. Such free energy methods allow detailed all-atom modeling of each phase, and compute the full free energy of the system, in principle (in the limit of adequate sampling) providing the correct free energy difference given the choice of energy model (“force field”). However, adequate sampling can sometimes prove difficult to achieve.

Key additional limitations facing MM approaches are the accuracy of the force field, the fact that a single protonation state/tautomer is generally selected as an input and held fixed (meaning that incorrect assignment or multiple relevant states can introduce significant errors), and timescale—simulations only capture motions that are faster than simulation timescale. However, these approaches do capture conformational changes upon phase transfer, as long as such changes occur on timescales faster than the simulation timescale.

Empirical log P predictions

Due to the importance of accurate log P predictions, ranging from pharmaceutical sciences to environmental hazard assessment, a large number of empirical models to predict this property have been developed and reviewed [29,30,31]. An important characteristic of many of these methods is that they are very fast, so even large virtual libraries of molecules can be characterized.

In general, two main methodologies can be distinguished: group- or atom-contribution approaches, also called additive group methods, and quantitative structure-property relationship (QSPR) methods.

Atom- and group-contribution approaches

Atom-contribution methods, pioneered by Crippen in the late 1980s [32, 33], are the easiest to understand conceptually. These assume that each atom contributes a specific amount to the solvation free energy and that these contributions to log P are additive. Using a potentially large number of different atom types (typically in the order of 50–100), the log P is the sum of the individual atom types times the number of their occurrences in the molecule:

A number of log P calculation programs are based on this philosophy, including AlogP [34], AlogP98 [34], and moe_SlogP [35].

The assumption of independent atomic contributions fails for compounds with complex aromatic systems or stronger electronic effects. Thus correction factors and contributions from neighboring atoms were introduced to account for these shortcomings (e.g. in XlogP [36,37,38], and SlogP [35]).

In contrast, in group contribution approaches, log P is calculated as a sum of group contributions, usually augmented by correction terms that take into account intramolecular interactions. Thus, the basic equation is

where the first term describes the contribution of the fragments \(f_i\) (each occurring \(a_i\) times), the second term gives the contributions of the correction factors \(F_j\) occurring \(b_j\) times in the compound. Group contribution approaches assume that the details of the electronic or intermolecular structure can be better modeled with whole fragments. However, this breaks down when molecular features are not covered in the training set. Prominent examples of group contribution approaches include clogP [39,40,41,42], KlogP [43], ACD/logP [44], and KowWIN [45].

clogP is probably one of the most widely used log P calculation programs [39,40,41]. clogP relies on fragment values derived from measured data of simple molecules, e.g., carbon and hydrogen fragment constants were derived from measured values for hydrogen, methane, and ethane. For more complex hydrocarbons, correction factors were defined to minimize the difference to the experimental values. These can be structural correction factors taking into account bond order, bond topology (ring/chain/branched) or interaction factors taking into account topological proximity of certain functional groups, electronic effects through \(\pi\)-bonds, or special ortho-effects.

QSPR approaches

Quantitative structure-property relationships (QSPR) provide an entirely different category of approaches. In QSPR methods, a property of a compound is calculated from molecular features that are encoded by so-called molecular descriptors. Often, these theoretical molecular descriptors are classified as 0D-descriptors (constitutional descriptors, only based on the structural formula), 1D-descriptors (i.e. list of structural fragments, fingerprints), 2D-descriptors (based on the connection table, topological descriptors), and 3D-descriptors (based on the three-dimensional structure of the compound, thus conformation-dependent). Sometimes, this classification is extended to 4D-descriptors, which are derived from molecular interaction fields (e.g., GRID, CoMFA fields).

Over the years, a large number of descriptors have been suggested, with varying degrees of interpretability. Following the selection of descriptors, a regression model that relates the descriptors to the molecular property is derived by fitting the individual contributions of the descriptors to a dataset of experimental data; both linear and nonlinear fitting is possible. Various machine learning approaches such as random forest models, artificial neural network models, etc. also belong to this category. Consequently, a large number of estimators of this type have been proposed; some of the more well-known ones include MlogP [46] and VlogP [47].

Expectations from different prediction approaches

Octanol–water log P literature data abounds, impacting our expectations. Given this abundance of data, in contrast to the limited availability of cyclohexane–water log D data to train models for the SAMPL5 log D Challenge, we expected higher accuracy here in the SAMPL6 Challenge. Some sources of public octanol–water log P values include DrugBank [48], ChemSpider [49], PubChem, the NCI CACTUS databases [50, 51], and SRC’s PHYSPROP Database [52].

Our expectation was that empirical knowledge-based and other trained methods (implicit solvent QM, mixed methods) would outperform other methods in the present challenge as they are impacted directly by the availability of octanol-water data. Methods well trained to experimental octanol-water partitioning data should typically result in higher accuracy if the fitting is done well. The abundance of octanol-water data may also provide empirical and mixed approaches with an advantage over physical modeling methods. Current molecular mechanics-based methods and other methods not trained to experimental log P data ought to do worse in this challenge. Performance of strictly physical modeling-based prediction methods might generalize better across other solvent types where training data is scarce, but that will not be tested by this challenge. In principle, molecular mechanics-based methods could also be fitted using octanol-water data as one of the targets for force field optimization, but present force fields have not made broad use of this data in the fitting. Thus, top methods are expected to be from empirical knowledge-based, QM-based approaches and combinations of QM-based and empirical approaches because of training data availability. These categories are broken out separately for analysis.

Challenge design and evaluation

Challenge structure

The SAMPL6 Part II log P Challenge was conducted as a blind prediction challenge on predicting octanol-water partition coefficients of 11 small molecules that resemble fragments of kinase inhibitors (Fig. 2). The challenge molecule set was composed of small molecules with limited flexibility (less than 5 non-terminal rotatable bonds) and covers limited chemical diversity. There are six 4-aminoquinazolines, two benzimidazoles, one pyrazolo[3,4-d]pyrimidine, one pyridine, one 2-oxoquinoline substructure containing compounds with log P values in the range of 1.95–4.09. Information on experimental data collection is presented elsewhere [9].

Structures of the 11 protein kinase inhibitor fragments used for the SAMPL6 log P Blind Prediction Challenge. These compounds are a subset of the SAMPL6 p\({K}_{{\rm a}}\) Challenge compound set [8] which were found to be tractable potentiometric measurements with sufficient solubility and p\({K}_{{\rm a}}\) values far from pH titration limits. Chemical identifiers of these molecules are available in Table S2 and experimental log P values are published [9]. Molecular structures in the figure were generated using OEDepict Toolkit [53]

The dataset composition was announced several months before the challenge including details of the measurement technique (potentiometric log P measurement, at room temperature, using water-saturated octanol phase, and ionic strength-adjusted water with 0.15 M KCl [9]), but not the identity of the small molecules. The instructions and the molecule set were released at the challenge start date (November 1, 2018), and then submissions were accepted until March 22, 2019.

Following the conclusion of the blind challenge, the experimental data was made public on March 25, 2019, and results are first discussed in a virtual workshop (on May 16, 2019) [54] then later in an in-person workshop (Joint D3R/SAMPL Workshop, San Diego, August 22–23, 2019). The purpose of the virtual workshop was to go over a preliminary evaluation of results, begin considering analysis and lessons learned, and nucleate opportunities for follow up and additional discussion. Part of the goal was to facilitate discussion so that participants can work together to maximize lessons learned in the lead up to an in-person workshop and special issue of a journal. The SAMPL6 log P Virtual Workshop video [54] and presentation slides [55] are available, as are organizer presentation slides from the joint D3R/SAMPL Workshop 2019 [56, 57] on the SAMPL Community Zenodo page (https://zenodo.org/communities/sampl/).

A machine-readable submission file format was specified for blind submissions. Participants were asked to report SAMPL6 molecule IDs, predicted octanol–water log P values, the log P standard error of the mean (SEM), and model uncertainty. It was mandatory to submit predictions for all these values, including the estimates of uncertainty. The log P SEM captures the statistical uncertainty of the predicted method, and the model uncertainty is an estimate of how well prediction and experimental values will agree. Molecule IDs assigned in SAMPL6 p\({K}_{{\rm a}}\) Challenge were conserved in the log P challenge for the ease of reference.

Participants were asked to categorize their methods as belonging to one of four method categories—physical, empirical, mixed, or other. The following are definitions provided to participants for selecting a method category: empirical models are prediction methods that are trained on experimental data, such as QSPR, machine learning models, artificial neural networks, etc. Physical models are prediction methods that rely on the physical principles of the system such as molecular mechanics or quantum mechanics based methods to predict molecular properties. Methods taking advantage of both kinds of approaches were asked to be reported as “mixed”. The “other” category was for methods which do not match the previous ones. At the analysis stage, some categories were further refined, as discussed in the “Evaluation approach” section.

The submission files also included fields for naming the method, listing the software utilized, and a free text method section for the detailed documentation of each method. Only one log P value for each molecule per submission and only full prediction sets were allowed. Incomplete submissions—such as for a subset of compounds—were not accepted. We highlighted various factors for participants to consider in their log P predictions. These included:

- 1.

There is a significant partitioning of water into the octanol phase. The mole fraction of water in octanol was previously measured as \(0.271 \pm 0.003\) at \(25^{\circ}\,{\hbox{C}}\) [58].

- 2.

The solutes can impact the distribution of water and octanol. Dimerization or oligomerization of solute molecules in one or more of the phases may also impact results [59].

- 3.

log P measurements capture partition of neutral species which may consist of multiple tautomers with significant populations or the major tautomer may not be the one given in the input file.

- 4.

Shifts in tautomeric state populations on transfer between phases are also possible.

Research groups were allowed to participate with multiple submissions, which allowed them to submit prediction sets to compare multiple methods or to investigate the effect of varying parameters of a single method. All blind submissions were assigned a 5-digit alphanumeric submission ID, which will be used throughout this paper and also in the evaluation papers of participants. These abbreviations are defined in Table 3.

Evaluation approach

A variety of error metrics were considered when analyzing predictions submitted to the SAMPL6 log P Challenge. Summary statistics were calculated for each submission for method comparison, as well as error metrics of predictions of each method. Both summary statistics and individual error analysis of predictions were provided to participants before the virtual workshop. Details of the analysis and scripts are maintained on the SAMPL6 Github Repository (described in the “Code and data availability” section).

There are six error metrics reported: the root-mean-squared error (RMSE), mean absolute error (MAE), mean (signed) error (ME), coefficient of determination (R2), linear regression slope (m), and Kendall’s rank correlation coefficient (\(\tau\)). In addition to calculating these performance metrics, 95% confidence intervals were computed for these values using a bootstrapping-over-molecules procedure (with 10,000 bootstrap samples) as described elsewhere in a previous SAMPL overview article [60]. Due to the small dynamic range of experimental log P values of the SAMPL6 set, it is more appropriate to use accuracy based performance metrics, such as RMSE and MAE, to evaluate methods than correlation-based statistics. This observation is also typically reflected in the confidence intervals on these metrics. Calculated error statistics of all methods can be found in Tables S4 and S5.

Submissions were originally assigned to four method categories (physical, empirical, mixed, and other) by participants. However, when we evaluated the set of participating methods it became clear that it was going to be more informative to group them using the following categories: physical (MM), physical (QM), empirical, and mixed. Methods from the “other” group were reassigned to empirical or physical (QM) categories as appropriate. Methods submitted as “physical” by participants included quantum mechanical (QM), molecular mechanics-based (MM) and, to a lesser extent, integral equation-based approaches (EC-RISM). We subdivided these submissions into “physical (MM)” and “physical (QM)” categories. Integral equation-based approaches were also evaluated under the Physical (QM) category. The “mixed” category includes methods that physical and empirical approaches are used in combination. Table 3 indicates the final category assignments in the “Category” column.

We created a shortlist of consistently well-performing methods that were ranked in the top 20 consistently according to two error and two correlation metrics: RMSE, MAE, R\(^{2}\), and Kendall’s Tau. These are shown in Table 4.

We included null and reference method prediction sets in the analysis to provide perspective for performance evaluations of blind predictions. Null models or null predictions employ a model which is not expected to be useful and can provide a simple point of comparison for more sophisticated methods, as ideally, such methods should improve on predictions from a null model. We created a null prediction set (submission ID NULL0) by predicting a constant log P value for every compound, based on a plausible log P value for drug-like compounds. We also provide reference calculations using several physical (alchemical) and empirical approaches as a point of comparison. The analysis is presented with and without the inclusion of reference calculations in the SAMPL6 GitHub repository. All figures and statistics tables in this manuscript include reference calculations. As the reference calculations were not formal submissions, these were omitted from formal ranking in the challenge, but we present plots in this article which show them for easy comparison. These are labeled with submission IDs of the form REF## to allow easy recognition of non-blind reference calculations.

In addition to the comparison of methods we also evaluated the relative difficulty of predicting log P of each molecule in the set. For this purpose, we plotted prediction error distributions of each molecule considering all prediction methods. We also calculated MAE for each molecule’s overall predictions as well as for predictions from each category as a whole.

Methods for reference calculations

Here we highlight the null prediction method and reference methods. We have included several widely-used physical and empirical methods as reference calculations in the comparative evaluation of log P prediction methods, in addition to the blind submissions of the SAMPL6 log P Challenge. These reference calculations are not formally part of the challenge but are provided as comparison methods. They were collected after the blind challenge deadline when the experimental data was released to the public. For a more detailed description of the methods used in the reference calculations, please refer to Sect. 12.1 in Supplementary Information.

Physical reference calculations

Physical reference calculations were carried out using YANK [61], an alchemical free energy calculation toolkit [62, 63]. YANK implements Hamiltonian replica exchange molecular dynamics (H-REMD) simulations to sample multiple alchemical states and is able to explore a number of different alchemical intermediate functional forms using the OpenMM toolkit for molecular simulation [64,65,66].

The GAFF 1.81 [67] and smirnoff99Frosst 1.0.7 (SMIRNOFF) [68] force fields were combined with three different water models. Water models are important for accuracy in modeling efforts in molecular modeling and simulation. The majority of modeling packages make use of rigid and fixed charge models due to their computational efficiency. To test how different water models can impact predictions, we combined three explicit water models TIP3P [69], TIP3P Force Balance (TIP3P-FB) [70], and the Optimal Point Charge (OPC) model [71] with the GAFF and SMIRNOFF force fields. The TIP3P and TIP3P-FB models are a part of the three-site water model class where each atom has partial atomic charges and a Lennard–Jones interaction site centered at the oxygen atom. The OPC model is a rigid 4-site, 3-charge water model that has the same molecular geometry as TIP3P, but the negative charge on the oxygen atom is located on a massless virtual site at the HOH angle bisector. This arrangement is meant to improve the water molecule’s electrostatic distribution. While TIP3P is one of the older and more common models used, OPC and TIP3P-FB are newer models that were parameterized to more accurately reproduce more of the physical properties of liquid bulk water.

Reference calculations also included wet and dry conditions for the octanol phase using the GAFF and SMIRNOFF force field with TIP3P water. The wet octanol phase was 27% water by mole fraction [58]. The methods used for physical reference calculations are summarized in Table 1.

Physical reference calculations (submission IDs: REF01–REF08) were done using a previously untested direct transfer free energy calculation protocol (DFE) which involved calculating the transfer free energy between water and octanol phases (explained in detail in Sect. 12.1.1 in Supplementary Information), rather than a more typical protocol involving calculating a gas-to-solvent transfer free energy for each phase—an indirect solvation-based transfer free energy (IFE) protocol. In order to check for problems caused by the new DFE protocol, we included additional calculations performed by the more typical IFE protocol. Method details for the IFE protocol are presented in Sect. 12.1.2 in Supplementary Information and results are discussed in “Lessons learned from physical reference calculations”. However, only reference calculations performed with DFE protocol were included in the overall evaluation of the SAMPL6 Challenge presented in the “Overview of challenge results” section because only these spanned the full range of force fields and solvent models we sought to explore.

Empirical reference calculations

As empirical reference models, we used a number of commercial calculation programs, with the permission of the respective vendors, who agreed to have the results included in the SAMPL6 comparison. The programs are summarized in Table 2 and cover several of the different methodologies described in the “Empirical log P predictions” section and 12.1.3 in the Supplementary Information.

Our null prediction method

This submission set is designed as a null model which predicts the log P of all molecules to be equal to the mean clogP of FDA approved oral new chemical entities (NCEs) between the years 1998 and 2017 based on the analysis of Shultz [72]. We show this null model with submission ID NULL0. The mean clogP of FDA approved oral NCEs approved between 1900–1997, 1998–2007, and 2008–2017 were reported 2.1, 2.4, and 2.9, respectively, using StarDrop clogP calculations (https://www.optibrium.com/). We calculated the mean of NCEs approved between 1998 and 2017, which is 2.66, to represent the average log P of contemporary drug-like molecules. We excluded the years 1900–1997 from this calculation as the early drugs tend to be much smaller and much more hydrophilic than the ones being developed at present.

Results and discussion

Overview of challenge results

A large variety of methods were represented in the SAMPL6 log P Challenge. There were 91 blind submissions collected from 27 participating groups in the log P challenge (Tables of participants and the predictions they submitted are presented in SAMPL6 GitHub Repository and its archived copy in the Supporting Information.). This represented an increase in interest over the previous SAMPL challenges. In the SAMPL5 cyclohexane–water log D Challenge, there were 76 submissions from 18 participating groups [2], so participation was even higher this iteration.

Out of blind submissions of the SAMPL6 log P Challenge, there were 31 in the physical (MM) category, 25 in the physical (QM) category, 18 in the empirical category, and 17 in the mixed method category (Table 3). We also provided additional reference calculations—five in the empirical category, and eight in the physical (MM) category.

The following sections present a detailed performance evaluation of blind submissions and reference prediction methods. Performance statistics of all the methods can be found in Supplementary Table S4. Methods are referred to by their submission ID’s which are provided in Table 3.

Performance statistics for method comparison

Many methods in the SAMPL6 Challenge achieved good predictive accuracy for octanol–water log P values. Figure 3 shows the performance comparison of methods based on accuracy with RMSE and MAE. 10 methods achieved an RMSE \(\le\) 0.5 log P units. These methods were QM-based, empirical, and mixed approaches (submission IDs: hmz0n, gmoq5, 3vqbi, sq07q, j8nwc, xxh4i, hdpuj, dqxk4, vzgyt, ypmr0). Many of the methods had an RMSE \(\le\) 1.0 log P units. These 40 methods include 34 blind predictions, 5 reference calculations, and the null prediction method.

Correlation-based statistics methods only provide a rough comparison of methods of the SAMPL6 Challenge, given the small dynamic range of the experimental log P dataset. Figure 4 shows R2 and Kendall’s Tau values calculated for each method, sorted from high to low performance. However, the uncertainty of each correlation statistic is quite high, not allowing a true ranking based on correlation. Methods with R2 and Kendall’s Tau higher than 0.5 constitute around 50% of the methods and can be considered as the better half. However, the performance of individual methods is statistically indistinguishable. Nevertheless, it is worth noting that QM-based methods appeared marginally better at capturing the correlation and ranking of experimental log P values. These methods comprised the top four based on R2 (\(\ge\) 0.75; submission IDs: 2tzb0, rdsnw, hmz0n, mm0jf), and the top six based on Kendall’s Tau, (\(\ge\) 0.70; submission IDs: j8nwc, qyzjx, 2tzb0, rdsnw, mm0jf, and 6fyg5). However, due to the small dynamic range and the number of experimental log P values of the SAMPL6 set, correlation-based statistics are less informative than accuracy-based performance metrics such as RMSE and MAE.

Overall accuracy assessment for all methods participating in the SAMPL6 log P Challenge. Both root-mean-square error (RMSE) and mean absolute error (MAE) are shown, with error bars denoting 95% confidence intervals obtained by bootstrapping over challenge molecules. Submission IDs are summarized in Table 3. Submission IDs of the form REF## refer to non-blinded reference methods computed after the blind challenge submission deadline, and NULL0 is the null prediction method; all others refer to blind, prospective predictions. Evaluation statistics calculated for all methods are also presented in Table S4 of Supplementary Information

Overall correlation assessment for all methods participating SAMPL6 log P Challenge. Pearson’s R2 and Kendall’s Rank Correlation Coefficient Tau (\(\tau\)) are shown, with error bars denoting 95% confidence intervals obtained by bootstrapping over challenge molecules. Submission IDs are summarized in Table 3. Submission IDs of the form REF## refer to non-blinded reference methods computed after the blind challenge submission deadline, and NULL0 is the null prediction method; all others refer to blind, prospective predictions. Overall, a large number and wide variety of methods have a statistically indistinguishable performance on ranking, in part because of the relatively small dynamic range of this set and because of the small size of the set. Roughly the top half of methods with Kendall’s Tau > 0.5 fall into this category. Evaluation statistics calculated for all methods are also presented in Table S4 of Supplementary Information

Results from physical methods

One of the aims of the SAMPL6 log P Challenge was to assess the accuracy of physical approaches in order to potentially provide direction for improvements which could later impact accuracy in downstream applications like protein-ligand binding. Some MM-based methods used for log P predictions use the same technology applied to protein-ligand binding predictions, so improvements made to the modeling of partition may in principle carry over. However, prediction of partition between two solvent phases is a far simpler test only capturing some aspects of affinity prediction—specifically, small molecule and solvation modeling—in the absence of protein-ligand interactions and protonation state prediction problems.

Figure 5 shows a comparison of the performance of MM- and QM-based methods in terms of RMSE and Kendall’s Tau. Both in terms of accuracy and ranking ability, QM methods resulted in better results, on average. QM methods using implicit solvation models outperformed MM-based methods with explicit solvent methods that were expected to capture the heterogeneous structure of the wet octanol phase better. Only 3 MM-based methods and 8 QM-based methods achieved RMSE less than 1 log P unit. Five of these QM-based methods showed very high accuracy (RMSE \(\le\) 0.5 log P units). The three MM-based methods with the lowest RMSE were:

Molecular-Dynamics-Expanded-Ensembles (nh6c0): This submission used an AMBER/OPLS-based force field with manually adjusted parameters (following rules from the participant’s article [86]), modified Toukan–Rahman water model with Non-zero Lennard–Jones parameters [84], and modified Expanded Ensembles (EEMD) method [87] for free energy estimations.

Alchemical-CGenFF (ujsgv, 2mi5w, [83]): These two submissions used Multi-Phase Boltzmann Weighting with the CHARMM Generalized Force Field (CGenFF) [88], and the TIP3P water model [69]. From the brief method descriptions submitted to the challenge, we could not identify the difference between these prediction sets.

RMSE values for predictions made with MM-based methods ranged from 0.74 to 4.00 log P units, with the average of the better half being 1.44 log P units.

Submissions included diverse molecular simulation-based log P predictions made using alchemical approaches. These included Free Energy Perturbation (FEP) [89] and BAR estimation [90], Thermodynamic Integration (TI) [91], and Non-Equilibrium Switching (NES) [92, 93]. Predictions using YANK [61] Hamiltonian replica exchange molecular dynamics and MBAR [94] were provided as reference calculations.

A variety of combinations of force fields and water models were represented in the challenge. These included CGenFF with TIP3P or OPC3 [95] water models; OPLS-AA [96] with OPC3 and TIP4P [69] water models; GAFF [67] with TIP3P, TIP3P Force Balance [70], OPC [71], and OPC3 water models; GAFF2 [97] with the OPC3 water model; GAFF with Hirshfeld-I [98] and Minimal Basis Set Iterative Stockholder (MBIS) [99] partial charges and the TIP3P or SPCE water models [100]; the SMIRNOFF force field [68] with the TIP3P, TIP3P Force Balance, and OPC water models; and submissions using Drude [101] and ARROW [102] polarizable force fields.

Predictions that used polarizable force fields did not show an advantage over fixed-charged force fields in this challenge. RMSEs for polarizable force field submissions range from 1.85 to 2.86 (submissions with the Drude Force Field were fyx45, pnc4j, and those with the ARROW Force Field were odex0, padym,fcspk, and 6cm6a).

Predictions using both dry and wet octanol phases were submitted to the log P challenge. When submissions from the same participants were compared, we find that including water in the octanol phase only slightly lowers the RMSE (0.05–0.10 log P units), as seen in Alchemical-CGenFF predictions ( wet: ujshv, 2mi5w, ttzb5; dry: 3wvyh), YANK-GAFF-TIP3P predictions (wet: REF02, dry: REF07), MD-LigParGen predictions with OPLS and TIP4P (wet: mwuua, dry: eufcy), and MD-OPLSAA predictions with TIP4P (wet: 623c0, dry: cp8kv). However, this improvement in performance with wet octanol phase was not found to be a significant effect on overall prediction accuracy. Methodological differences and choice of force field have a greater impact on prediction accuracy than the composition of the octanol phase.

Refer to Table S1 for a summary of force fields and water models used in MM-based submissions. For additional analysis, we refer the interested reader to the work of Piero Procacci and Guido Guarnieri, who provide a detailed comparison of MM-based alchemical equilibrium and non-equilibrium approaches in SAMPL6 log P Challenge in their paper [73]. Specifically, in the section “Overview on MD-based SAMPL6 submissions” of their paper, they provide comparisons subdividing submissions based force field (for CGenFF, GAFF1/2, and OPLS-AA).

Performance statistics of physical methods. Physical methods are further classified into quantum chemical (QM) methods and molecular mechanics (MM) methods. RMSE and Kendall’s Rank Correlation Coefficient Tau are shown, with error bars denoting 95% confidence intervals obtained by bootstrapping over challenge molecules. Submission IDs are summarized in Table 3. Submission IDs of the form REF## refer to non-blinded reference methods computed after the blind challenge submission deadline; all others refer to blind, prospective predictions. Method details of log P predictions with MM-based physical methods are presented in Table S1 of Supplementary Information

A shortlist of consistently well-performing methods

Although there was not any single method that performed significantly better than others in the log P challenge, we identified a group of consistently well-performing methods. There were many methods with good performance when judged based on RMSE, but not many methods consistently showed up at the top according to all metrics. When individual error metrics are considered, many submissions were not different from one another in a statistically significant way, and ranking typically depends on the metric chosen due to overlapping confidence intervals. Instead, we identified several consistently well-performing methods by looking at several different metrics—two assessing accuracy (RMSE and MAE) and two assessing correlation (Kendall’s Tau and R2). We determined those methods which are in the top 20 by each of these metrics. This resulted in a list of eight methods that are consistently well-performing. The shortlist of consistently well-performing methods are presented in Table 4.

The resulting eight consistently well-performing methods were QM-based physical models and empirical methods. These eight methods were fairly diverse. Traditional QM-based physical methods included log P predictions with the COSMO-RS method as implemented in COSMOtherm v19 at the BP//TZVPD//FINE Single Point level (hmz0n, [16,17,18]) and the SMD solvation model with the M06 density functional family (dqxk4, [79]). Additionally, two other top QM-based methods seen in this shortlist used EC-RISM theory with wet or dry octanol (j8nwc and qyzjx) [22]. Several empirical submissions also were among these well-performing methods—specifically, the Global XGBoost-Based QSPR LogP Predictor (gmoq5), the RayLogP-II (hdpuj) approach, and rfs-logp (vzgyt). Among reference calculations, SlogP calculated by MOE software (REF13) was the only method that was consistently well-performing.

Figure 6 compares log P predictions with experimental values for these 8 well-performing methods, as well as one additional method which has an average level of performance. This representative average method (rdsnw, [22]) is the method with the highest RMSE below the median of all methods (including reference methods).

Predicted vs. experimental value correlation plots of 8 best-performing methods and one representative average method. Dark and light green shaded areas indicate 0.5 and 1.0 units of error. Error bars indicate standard error of the mean of predicted and experimental values. Experimental log P SEM values are too small to be seen under the data points. EC_RISM_wet_P1w+1o method (rdsnw) was selected as the representative average method, as it is the method with the highest RMSE below the median

Difficult chemical properties for log P predictions

In addition to comparing method performance, we analyzed the prediction errors for each compound in the challenge set to assess whether particular compounds or chemistries are especially challenging (Fig. 7). For this analysis, MAE is a more appropriate statistical measure for assessing global trends, as its value is less affected by outliers than is RMSE.

Performance on individual molecules shows relatively uniform MAE across the challenge set (Fig. 7A). Predictions of SM14 and SM16 were slightly more accurate than the rest of the molecules when averaged across all methods. The prediction accuracy on each molecule, however, is highly variable depending on the method category (Fig. 7B). Predictions of SM08, SM13, SM09, and SM12 were significantly less accurate with physical (MM) methods than the other method categories by 2 log P units in terms of MAE calculated over all methods in each category. These molecules were not challenging for QM-based methods. Discrepancies in predictions of SM08 and SM13 are discussed in “Lessons learned from physical reference calculations”. For QM-based methods, SM04 and SM02 were most challenging. The largest MAE for empirical methods was observed for SM11 and SM15.

Figure 7C shows the error distribution for each SAMPL6 molecule over all prediction methods. It is interesting to note that most distributions are peaked near an error of zero, suggesting that perhaps a consensus model might outperform most individual models. However, SM15 is more significantly shifted away from zero than any other compound (ME calculated across all molecules is \(-0.88\pm 1.49\) for SM15). SM08 had the most spread in log P prediction error.

This challenge focused on log P of neutral species, rather than log D as studied in SAMPL5, which meant that we do not see the same trends where performance is significantly worse for compounds with multiple protonation states/tautomers or where p\({K}_{{\rm a}}\) values are uncertain. However, in principle, tautomerization can still influence log P values. Multiple neutral tautomers can be present at significant populations in either solvent phase, or the major tautomer can be different in each solvent phase. However, this was not expected to be the case for any of the 11 compounds in this SAMPL6 challenge. We do not have experimental data on the identity or ratio of tautomers, but tautomers other than those depicted in Fig. 2 would be much higher in energy according to QM predictions [22] and, thus, very unlikely to play a significant role. Still, for most log P prediction methods, it was at least necessary for participants to select the major neutral tautomer. We do not observe statistically worse error for compounds with potential tautomer uncertainties here, suggesting it was not a major factor in overall accuracy, some participants did chose to run calculations on tautomers that were not provided in the challenge input files (Fig. 11 and Table 5), as we discuss in the “Lessons learned from physical reference calculations” section.

Molecule-wise prediction error distribution plots show how variable the prediction accuracy was for individual molecules across all prediction methods. A MAE calculated for each molecule as an average of all methods shows relatively uniform MAE across the challenge set. SM14 and SM16 predictions were slightly more accurate than the rest. B MAE of each molecule broken out by method category shows that for each method category the most challenging molecules were different. Predictions of SM08, SM13, SM09, and SM12 log P values were significantly less accurate with Physical (MM) methods than the other method categories. For QM-based methods, SM04 and SM02 were most challenging. The largest MAE values for Empirical methods were observed for SM11 and SM15. C Error distribution for each SAMPL6 molecule overall prediction methods. It is interesting to note that most distributions are peaked near an error of zero, suggesting that perhaps a consensus model might outperform most individual models. However, SM15 is more significantly shifted away from zero than any other compound. SM08 has a significant tail showing the probability of overestimated log P predictions by some methods. D Error distribution for each molecule calculated for only 7 methods from blind submissions that were determined to be consistently well-performing (hmz0n, gmoq5, j8nwc, hdpuj, dqxk4, vzgyt, qyzjx)

Comparison to the past SAMPL challenges

Overall, SAMPL6 log P predictions were more accurate than log D predictions in the SAMPL5 Cyclohexane–Water log D Challenge (Fig. 3). In the log D challenge, only five submissions had an RMSE \(\le\) 2.5 log units, with the best having an RMSE of 2.1 log units. A rough estimate of the expected error for log P and log D is 1.54 log units. This comes from taking the mean RMSE of the top half of submissions in SAMPL4 Hydration Free Energy Prediction Challenge (1.5 kcal/mol) [60] and assuming the error in each phase is independent and equal to this value, yielding an expected error of 1.54 log units [2]. Here, 64 log P challenge methods performed better than this threshold (58 blind predictions, 5 reference calculations, and the null prediction). However, only 10 of them were MM-based methods, with the lowest RMSE of 0.74 observed for the method named Molecular-Dynamics-Expanded-Ensembles (nh6c0).

Challenge construction and experimental data availability are factors that contributed to the higher prediction accuracy observed in SAMPL6 compared to prior years. The log P challenge benefited from having a well-defined protonation state, especially for physical methods. Empirical methods benefited from the wealth of octanol-water training data. Accordingly, empirical methods were among the best performers here. But also, the chemical diversity represented by 11 compounds of the SAMPL6 log P Challenge is very restricted and lower than the 53 small molecules in the SAMPL5 log D Challenge set. This was somewhat consistent with our expectations (discussed in “Expectations from different prediction approaches” section)—that empirical, QM (with trained implicit solvation models), and mixed methods would outperform MM methods given their more extensive use of abundant octanol-water data in training (Fig. 3).

Lessons learned from physical reference calculations

Comparison of reference calculations did not indicate a single force field or water model with dramatically better performance

As in previous SAMPL challenges, we conducted a number of reference calculations with established methods to provide a point of comparison. These included calculations with alchemical physical methods. Particularly, to see how the choice of water model affects accuracy we included three explicit solvent water models—TIP3P, TIP3P-FB, and the OPC model—with the GAFF and SMIRNOFF force fields in our physical reference calculations. Deviations from the experiment were significant (RMSE values ranged from 2.3 [1.1, 3.5] to 4.0 [2.7, 5.3] log units) across all the conditions used in the physical reference predictions (Fig. 3A). In general, all the water models tend to overestimate the log P, especially for the carboxylic acid in the challenge set, SM08, though our calculations on this molecule had some specific difficulties we discuss further below. Relative to the TIP3P-FB and OPC water models, predictions that used TIP3P showed improvement in some of the error metrics, such as lower deviation from the experimental values with an RMSE range of 2.3 [1.1, 3.5] to 2.34 [1.0, 3.7] log units. The OPC and TIP3P-FB containing combinations had a higher RMSE range of 3.2 [2.0, 4.5] to 4.0 [2.7, 5.3] log units.

Physical reference calculations also included wet and dry conditions for the octanol phase using the GAFF and smirnoff99Frosst (SMIRNOFF) force fields with TIP3P water. The wet octanol phase was composed of 27% water and dry octanol was modeled as pure octanol (0% water content). For reference calculations with the TIP3P water model the GAFF, and SMIRNOFF force fields using wet or dry octanol phases resulted in statistically indistinguishable performance. With GAFF, the dry octanol (REF07) RMSE was 2.4 [1.0, 3.7]. The wet octanol (REF02) RMSE was 2.3 [1.1, 3.5]. With SMIRNOFF, the dry octanol REF08 RMSE was 2.4 [1.0, 3.7], with wet octanol (REF05) RMSE of 2.3 [1.2, 3.5] (Tables S4 and S5 of Supplementary Information).

While water model and force field may have significantly impacted differences in performance across methods in some cases in this challenge, we have very few cases—aside from these reference calculations—where submitted protocols differed only by force field or water model, making it difficult to know the origin of performance differences for certain.

Comparison of independent predictions that use seemingly identical methods (free energy calculations using GAFF and TIP3P water) shows significant systematic deviations between predictions for many compounds. Comparison of the calculated and experimental values for submissions v2q0t (InterX_GAFF_WET_OCTANOL), 6nmtt (MD-AMBER-wetoct), sqosi (MD-AMBER-dryoct) and physical reference calculations REF02 (YANK-GAFF-TIP3P-wet-oct) and REF07 (YANK-GAFF-TIP3P-dry-oct). A Compares calculations that used wet octanol, and B compares those that used dry octanol. C–F The methods compared to one another. The dark and light-shaded region indicates 0.5 and 1.0 log P units of error, respectively

Different simulation protocols lead to different results between “equivalent” methods that use the same force field and water model

Several participants submitted predictions from physical methods that are equivalent to those used in our reference calculations and use the same force field and water model, which in principle ought to give identical results given adequate simulation time. There were three submissions that used the GAFF force field, TIP3P water model, and wet octanol phase: 6nmtt (MD-AMBER-wetoct), v2q0t (InterX_GAFF_ WET_OCTANOL), and REF02 (YANK-GAFF-TIP3P-dry-oct). As can be seen in Fig. 3A, v2q0t (InterX_GAFF_WET_OCTANOL) showed the best accuracy with an RMSE of 1.31 [0.94, 1.65]. 6nmtt (MD-AMBER-wetoct) and REF02 (YANK-GAFF-TIP3P-dry-oct) had higher RMSE values of 1.87 [1.33, 2.45] and 2.29 [1.07, 3.53], respectively. Two methods that used GAFF force field, TIP3P water model, and wet octanol phase are sqosi (MD-AMBER-dryoct) and REF07 (YANK-GAFF-TIP3P-dry-oct). These two also have an RMSE difference of 0.7 log P units. Although in terms of overall accuracy there are differences, Fig. 8 shows that in terms of individual predictions, submissions using the same force field and water model largely agree for most compounds.

Some discrepancies are observed for molecules SM13 and SM07, but are largest for SM08. For SM13 and SM07, method v2q0t (InterX_GAFF_WET_OCTANOL) performs over 1 log P unit better than 6nmtt (MD-AMBER-wetoct). The rest of the predictions for these two methods differ by no more than about 1 log P unit, with the majority of the molecules differing by about 0.5 log P units or less from each other. Comparing 6nmtt (MD-AMBER-wetoct) vs REF02 (YANK-GAFF-TIP3P-wet-oct) (Fig. 8A), there is a substantial difference in the predicted values for molecules SM08 (4.6 log unit difference), SM13 (1.4 log unit difference), and SM07 (1.2 log unit difference). Method v2q0t (InterX_GAFF_WET_OCTANOL) and 6nmtt (MD-AMBER-wetoct) perform about 5 log P units better than REF02 (YANK-GAFF-TIP3P-wet-oct) for molecule SM08. Besides SM08, predictions from v2q0t (InterX_GAFF_ WET_OCTANOL) and REF02 (YANK-GAFF-TIP3P-wet-oct) differ by 0.5 log P units or less from each other. In dry octanol, REF07 (YANK-GAFF-TIP3P-dry-oct) performs about 4 log P units worse than sqosi (MD-AMBER-dryoct) for SM08 (Fig. 8B).

Submissions 6nmtt (MD-AMBER-wetoct), sqosi (MD-AMBER-dryoct) and v2q0t (InterX_GAFF_WET_OCTANOL) used GAFF version 1.4 and the reference calculations used version 1.81, though GAFF differences are not expected to play a significant role here (i.e. only the valence parameters differ).

Selected small-molecule state differences may have caused divergence between otherwise equivalent methods

In several of these approaches, users selected their own starting conformation, protonation state, and tautomer, rather than those provided in the SAMPL6 Challenge, so the differences here could possibly be attributed to differences in tautomer or resonance structures. Submissions 6nmtt (MD-AMBER-wetoct) and sqosi (MD-AMBER-dryoct) used different tautomers for SM08 and different resonance structures for SM11 and SM14 (microstates SM08_micro010, SM11_micro005, SM14_micro001 from the previous SAMPL6 p\({{K}}_{{\rm a}}\) Challenge). We will discuss possible differences due to tautomer choice below in “Choice of the tautomer, resonance state, and assignment of partial charges impact log P predictions appreciably” section. The majority of the calculated log P values in 6nmtt (MD-AMBER-wetoct), sqosi (MD-AMBER-dryoct), v2q0t (InterX_GAFF_WET_OCTANOL), REF02 (YANK-GAFF-TIP3P-wet-oct), and REF07 (YANK-GAFF-TIP3P-dry-oct) show the molecules having a greater preference for octanol over water than the experimental measurements (Fig. 8A, B). Methods 6nmtt (MD-AMBER-wetoct) and REF02 (YANK-GAFF-TIP3P-wet-oct) overestimate log P more than v2q0t (InterX_GAFF_WET_OCTANOL) (Fig. 8A). Method REF07 (YANK-GAFF-TIP3P-dry-oct) overestimates log P slightly more than sqosi (MD-AMBER-dryoct) (Fig. 8B).

Three equivalent wet octanol methods and 2 equivalent dry octanol methods gave dissimilar results, and specific molecules were identified that show the major differences in predicted values (Fig. 8C–F). GAFF and the TIP3P water model were used in all of these cases, but different simulation setups and codes were used, as well as different equilibration protocols and production methods. Submissions 6nmtt (MD-AMBER-wetoct) and sqosi (MD-AMBER-dryoct), which come from the same group, used 10 ps NPT, 15 ns additional equilibration with MD, and Thermodynamic Integration for production in their setup. Submission v2q0t (InterX_GAFF_WET_OCTANOL) used 200 ns of molecular dynamics to pre-equilibrate octanol systems, 10 ns of temperature replica exchange in equilibration, and Isothermal-isobaric ensemble—based molecular dynamics simulations in production. The reference calculations (REF02 and REF07) were equilibrated for about 500 ns and used Hamiltonian replica exchange in production. Reference calculations performed with the IFE protocol and MD-AMBER-dryoct (sqosi) method used shorter equilibration times than the DFE protocol (REF07).

Comparison of predictions that use free energy calculations using GAFF and TIP3P water show deviations between predictions for the challenge molecules and several alternative tautomers and resonance structures. Deviations seem to largely stem from differences in equilibration amount and choice of tautomer. A compares reference direct transfer free energy (DFE, REF07) and indirect solvation-based transfer free energy (IFE) protocols to experiment for the challenge provided resonance states of molecules and a couple of extra resonance states for SM14 and SM11, and extra tautomers for SM08. B compares the same exact tautomers for submission sqosi (MD-AMBER-dryoct) and the two reference protocols to experiment. Submission sqosi (MD-AMBER-dryoct) used different tautomers than the ones provided in the challenge. C–E compares the calculated log P between different methods using the same tautomers. All of the predicted values can be found in Table 5

DFE and IFE protocols led to indistinguishable performance, except for SM08 and SM02

The direct transfer free energy (DFE) protocol was used for the physical reference calculations (REF01-REF08). Because the DFE protocol implemented in YANK [61] (which was also used in our reference calculations, REF01-REF08) was relatively untested (see Sect. 12.1.1 in Supplementary Information for more details), we wanted to ensure it had not dramatically affected performance, so we compared it to the indirect solvation-based transfer free energy protocol (IFE) [103] protocol. The DFE protocol directly computed the transfer free energy between solvents without any gas phase calculation, whereas the IFE protocol (used in the blind submissions and some additional reference calculations labeled IFE) computed gas-to-solution solvation free energies in water and octanol separately and then subtracted to obtain the transfer free energy. The IFE protocol calculates the transfer free energy as the difference between the solvation free energy of the solute going from the gas to the octanol phase, and the hydration free energy going from the gas to the water phase. These protocols ought to yield equivalent results in the limit of sufficient sampling, but may have different convergence behavior.

Figure 9A shows calculations from our two different reference protocols using the DFE and IFE methods. We find that the two protocols yield similar results, with the exception of two molecules. Molecule SM08 is not substantially overestimated using the IFE protocol, where it is with the DFE protocol, and SM02 is largely overestimated by IFE, but not DFE (Fig. 9A). The DFE (REF07) and IFE protocol both tend to overestimate the molecules’ preference for octanol over water than in the experiment, with the DFE protocol overestimating it slightly more. Figure 9D shows a comparison of predicted log P values of the same tautomers by the DFE (REF07) and IFE protocols. The DFE and IFE protocols are almost within the statistical error of one another, with the largest discrepancies coming from SM02 and SM08. The DFE and IFE protocols are in better agreement for some tautomers of SM08 more than others. They agree better on the predicted values for SM08_micro008 and SM08_micro010 than for SM08_micro011.

In the SAMPL6 blind submissions, there was a third putatively equivalent method to our reference predictions with the DFE protocol (REF07) and IFE protocol: sqosi (MD-AMBER-dryoct). It is identical in the chosen force field, water model, and the composition of octanol phase, however, different tautomers and resonance states for some molecules were used. All three predictions used free energy calculations with GAFF, TIP3P water, and a dry octanol phase. Additionally, sqosi (MD-AMBER-dryoct) also used the more traditional indirect solvation free energy protocol. We chose to investigate the differences in these equivalent approaches by comparing predictions using matching tautomers and resonance structures (Fig. 9). Figure 9B shows comparison of these three methods using predictions made with DFE and IFE protocols using identical tautomer and resonance input states as sqosi (MD-AMBER-dryoct): SM08_micro010, SM11_micro005, and SM14_micro001 (structures can be found in Fig. 11). Except for SM02, there is a general agreement between these predictions. In Fig. 9C, other than the SM08_micro010 tautomer, predictions of DFE (REF07) and sqosi (MD-AMBER-dryoct) largely agree. Figure 9E highlights SM02 and SM08_micro010 predictions as the major differences between our predictions with IFE protocol and sqosi (MD-AMBER-dryoct).

Only results from the DFE protocol were assigned submission numbers (of the form REF##) and presented in the overall method analysis in the “Overview of challenge results” section. More details of the solvation and transfer free energy protocol can be found in Sect. 12.1 in Supplementary Information.

The prediction errors per molecule indicate some compounds were more difficult to predict than others for the reference calculations category. A MAE of each SAMPL6 molecule broken out by physical and empirical reference method category. B Error distribution for each molecule calculated for the reference methods. SM08 was the most difficult to predict for the physical reference calculations, due to our partial charge assignment procedure

SM08 and SM13 were the most challenging for physical reference calculations

For the physical reference calculations category, some of the challenge molecules were harder to predict than others (Fig. 10). Overall, the chemical diversity in the SAMPL6 Challenge dataset was limited. This set has 6 molecules with 4-amino quinazoline groups and 2 molecules with a benzimidazole group. The experimental values have a narrow dynamic range from 1.95 to 4.09 and the number of heavy atoms ranges from 16 to 22 (with the average being 19), and the number of rotatable bonds ranges from 1 to 4 (with most having 3 rotatable bonds). SM13 had the highest number of rotatable bonds and the number of heavy atoms. This molecule was overestimated in the reference calculations. As noted earlier, molecule SM08, a carboxylic acid, was predicted poorly across all reference calculations. The origin of problems with molecule SM08 are discussed below in the “Choice of tautomer, resonance state, and assignment of partial charges impact log P predictions appreciably” section.

SM08 is a carboxylic acid and can potentially form an internal hydrogen bond. This molecule was greatly overestimated in the physical reference calculations. When this one molecule in the set is omitted from the analysis, log P prediction accuracy improves. For example, the average RMSE and R2 values across all of the physical reference calculations when the carboxylic acid is included are 2.9 (2.3–4.0 RMSE range) and 0.2 (0.1–0.2 R2 range), respectively. Excluding this molecule gives an average RMSE of 2.1 (1.3–3.3 RMSE range) and R2 of 0.57 (0.3–0.7 R2 range), which is still considerably worse than the best-performing methods.

Choice of tautomer, resonance state, and assignment of partial charges impact log P predictions appreciably

Some physical submissions selected alternate tautomers or resonance structures for some compounds. Figure 11 shows three tautomers of SM08, and two alternative resonance structures of SM11 and SM14, all of which were considered by some participants. The leftmost structure of the alternate structure group of each molecule depicts the structure provided to participants.