Abstract

The Soil and Water Assessment Tool (SWAT) is a computational hydrological model extensively utilised for developing sustainable strategies and viable approaches for prudent management of water resources. The central emphasis of this study is on the utilisation of SWAT model along with SWAT-CUP (SWAT calibration toolbox) to simulate streamflow in the upper Jhelum basin, the North West Himalayas, for a period of 20 years from 2000 to 2019. The global sensitivity analysis algorithm, Sequential Uncertainty Fitting 2 (SUFI-2) of SWAT-CUP, is used for sensitivity and uncertainty analysis. The optimised parameter set estimated by SUFI-2 constitutes 11 parameters that are found to be sensitive with soil conservation service (SCS) curve number (CN) being the most influential parameter followed by snowmelt base temperature. Autocorrelation analysis using the autocorrelation function was conducted on the temperature and precipitation time series data, followed by a pre-whitening procedure to remove any autocorrelation effects. Subsequently, the modified Mann–Kendall (MMK) test was applied to examine trends in the annual temperature and precipitation data. The results indicated statistically significant positive trends in both datasets on an annual scale. The results for the calibration period (2003–2014) for monthly simulation displayed good model performance at three gauging stations, Rambiara, Sangam and Ram Munshi Bagh with R2 values of 0.83, 0.847, 0.829, P factor values of 0.73, 0.76, 0.75 and R factor values of 0.61, 0.58, 0.63, respectively. The validation results for monthly simulation for the 2015–2019 period showed good model agreement with R2 values of 0.817, 0.853, and 0.836, P factor values of 0.76, 0.8, and 0.75 and R factor values of 0.62, 0.53, and 0.65, respectively. The study concludes that the SWAT hydrological model can perform satisfactorily in high mountainous catchments and can be employed to analyse the impact of land use-land cover changes and the effect of climate variation on streamflow dynamics.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Hydrological modelling simulates hydrological processes across a wide spectrum of geomorphic terrains. It has emerged as an astute tool to deal with the highly random and dynamic phenomena involving the interaction between various hydrological parameters present in the ecosystem. The modelling, coupled with the latest innovative and highly sensitive techniques employing the principles of geographic information system, has turned hydrological modelling into an important tool for development and management, enhancing the understanding of multiple hydrological phenomena. The predominant focus of the majority of hydrological models has been in formulating the simulation process that involves hydrological activities occurring in both small and large watersheds. The Soil and Water Assessment Tool (SWAT) (Arnold et al., 1998) is recognised as the hydrological model employed to investigate the climate change scenarios, the impact of heterogeneous land use-land cover and diverse management processes on watershed hydrology (Abbas et al., 2019; Bashir et al., 2018; Hosseini & Khaleghi, 2020; Malik et al., 2021).

The SWAT is a parameter-oriented model utilised for simulating the hydrological phenomena like surface runoff and the groundwater flow in catchments with intricate topography. The model is further used to simulate water quality and quantity, streamflow, and sediment transport in a typical watershed (Andrianaki et al., 2019; Arnold et al., 2012; Dagnew et al., 2016; Ghaith & Li, 2020; Hallouz et al., 2018; Iudicello & Chin, 2013). The major driving force behind all the hydrological phenomena occurring within the SWAT model is the water balance approach (Mengistu et al., 2019). The model’s efficacy, efficiency and prediction capability depend on the appropriate calibration and validation techniques employed in the process. The calibration procedure is applied to input parameters that are either highly sensitive or cannot be measured directly. The input parameter values are adjusted so that the minimum difference and maximum correlation are achieved between the observed and simulated output. The SWAT Calibration and Uncertainty Procedures (SWAT-CUP) is one of the promising and now extensively used calibration toolboxes incorporated within the parent model interface (Arnold et al., 2012). This toolbox has various techniques to systematically perform uncertainty analysis. The SUFI-2 (Sequential Uncertainty Fitting 2) algorithm is a widely employed approach and is an automated system that calibrates and validates the hydrological parameters and evaluates the sensitivity and uncertainty analysis and parameterization (Abbaspour et al., 2007). This algorithm-based technique eliminates the manual calibration complexities, which are computationally more expensive (Mehan et al., 2017), and under time restrictions, the calibration process is achieved at faster rates (Sloboda & Swayne, 2011). Gull and Shah (2022) investigated sediment yield, soil loss, and runoff in the Sindh watershed utilising SWAT-CUP’s SUFI-2 algorithm and reported good correlation during both the calibration and validation periods among the observed and simulated streamflow and sediment yield values.

To develop a reliable and effective predictive hydrological model, the main step involved is sensitivity analysis, which helps in identifying and prioritising the parameters that have a major impact on the output results of the model (Saltelli et al., 2000). The SUFI-2 algorithm employs the OAT (one-at-a time, local analysis) and GSA (global sensitivity analysis) techniques for sensitivity analysis. The value of each input parameter is adjusted one by one while the other parameters are left unchanged in the OAT technique (Holvoet et al., 2005). This method does not take into consideration the interactions taking place between the different system components and instead focusses on individual components, which can later on generate different types of errors. The GSA calculates all the model parameters and categorises the interactions between them. Both methods yield different results, and therefore, it is essential to thoroughly assess parameter sensitivity (Arnold et al., 2012; Cibin et al., 2010; Mehan et al., 2017). The model predictions are unable to attain specific values and be interpreted within a certain confidence interval range due to uncertainties that accumulate from the model input parameters and model morphology (Beven, 2001; Van Griensven et al., 2008). In streamflow modelling from high-altitude terrains, the high spatial variability and a large number of input factors induce uncertainties and errors into the flow output results (Xuan et al., 2009). These uncertainties result in the overestimation and underestimation of the hydrological processes that result in suboptimal decision-making strategies (Van Griensven et al., 2006).

To reduce the uncertainties in hydrological modelling, appropriate and rigorous calibration and uncertainty analysis must be performed employing various modelling methodologies and calibration techniques. The multisite or single-site techniques can be used to calibrate the SWAT model in the case of high-altitude catchments. In the single-site calibration, parameterization is executed using a single set of optimised variables (Neupane et al., 2015), while multisite calibration technique involves multiple sites with a single set of calibrated variables used for single-site calibration (Bai et al., 2017; Odusanya et al., 2019).

In mountainous regions, snowmelt hydrology is a critical component as the streamflow is primarily generated from snow melting and is estimated by the degree-day factor method present in the SWAT model (Duan et al., 2018; Kumar et al., 2017). It employs two fundamental techniques for snowmelt runoff modelling: the temperature index and energy balance approaches, with the temperature index technique being the widely employed and reliable method (Debele et al., 2010; Neitsch et al., 2011). The SWAT model’s snow package and elevation band approach are valuable for evaluating temperature and precipitation distribution profiles and for incorporating mountainous effects, particularly in high-altitude topographies (Fontaine et al., 2002). Kang and Lee (2014) studied the impacts of temperature and snowmelt in the American River basin (North Fork) employing the snowfall-snowmelt routine of the SWAT model, yielding good results as analysed by statistical coefficients. Raazia and Rasool (2017) calculated the area covered by snow in an ungauged and data-scarce mountainous region (Himalaya) employing the temperature index technique of the SWAT model. They observed that the accumulated snow depth for all elevation bands conformed to the results obtained from the image classification approach. Abbas et al. (2019) contended that the model outcomes showed a significant improvement with the use of elevation bands, and the mathematical quantification of the uncertainty involved by the SUFI-2 algorithm indicated positive outcomes for the calibration and validation stages. Malik et al. (2021) used the SUFI-2 technique in the SWAT-CUP program for multisite calibration and streamflow simulation in the North Western Himalayas and concluded that the SWAT model performs well in snow-dominated areas, with the model’s statistical coefficients showing good agreement with observed values.

Hydrological processes in the mountainous catchments are challenging to understand due to the diversity in land use-land cover, heterogeneity in elevation, and the dynamic snow processes. SWAT model calibration must consider these parameters for better simulation results including the number of iterations, elevation bands, the number of simulations, parameter sensitivity, snowmelt processes, and parameter uncertainty estimations. The study aims to establish and configure the SWAT model to execute multisite calibration and hydrological evaluation of the North West Himalayas and simulate streamflow in the snow-dominated mountainous areas employing the SUFI-2 algorithm incorporated in SWAT-CUP.

Study area

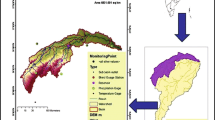

The upper Jhelum basin, with an elevation range of 1567 to 5283 m AMSL, is situated in the North West Himalayas spatially between 33° 22ʹ 4ʹʹ N to 34° 15ʹ 40ʹʹ N latitude and 74° 30ʹ 35ʹʹ E to 75° 32ʹ 46ʹʹ E longitude. This basin comprises various subbasins: Arpal, Bringi, Kuthar, Lidder, Romshi, Rambiarra, Sandran and Vishav. All these subbasins flow into the Jhelum river at various locations. The origin of the river Jhelum emanates from the Verinag spring and traverses the entire geographic area. Jhelum is the main and important tributary of the larger Indus river system. For the study region, four metrological stations: Kokernag, Pahalgam, Qazigund and Srinagar, along with three discharge stations: Rambiara, Sangam and Ram Munshi Bagh (Fig. 1), were considered. The high elevation of the region is about 42%. A substantial contribution to the total runoff comes from snowmelt and is one of the prominent features in the region.

Description of SWAT model and snow routine

The SWAT model, a comprehensive semi-distributed and widely used hydrological model, employed to perform hydrological and environmental modelling, was developed by the Agricultural Research Services of the United States Department of Agriculture (USDA) (Arnold & Fohrer, 2005; Arnold et al., 1998; Williams et al., 2008). The model takes a host of inputs and other datasets pertaining to the given watershed: DEM (digital elevation model), slope, soil data, land use, and daily meteorological parameters such as windspeed, rainfall, relative humidity, temperature and sunshine hours to simulate hydrological processes like streamflow, soil water content, infiltration, evapotranspiration and transportation of nutrients and sediments (Gassman et al., 2014; Bashir & Kumar, 2017; Hasan & Pradhanang, 2017; Choudhary & Athira, 2021) (Fig. 2). The model uses the heterogeneity of the watersheds and accordingly bifurcates them into numerous subwatersheds which are further discretized into spatial elements called HRUs (hydrological response units). These HRUs are spatially homogenous with idiosyncratic and exclusive land management, slope, soil and land type combinations characterised by the same hydrological and geomorphologic properties (Kumar et al., 2013). The soil conservation service (SCS) curve number (CN) method integrated into the SWAT model calculates the runoff. The water balance is estimated separately for each HRU using four reservoirs: soil, snow, shallow and deep aquifer, and then runoff from all the HRUs is added at the subbasin level. The total runoff is determined at the outlet and is governed by the following SWAT water balance equation:

\(SW_{t}\) and \(SW_{0}\) are the soil water content at final and initial stages (mm), respectively, i represents the iteration or index number in the summation, \(n\) is the time (days), \(P_{day}\) is the total amount of precipitation (mm), \(R_{sur}\) is the surface runoff (mm) in the region, \(ET_{a}\) is the evapotranspiration (mm), \(W_{seep}\) is the water penetrating vadose region from soil profile (mm), and \(Q_{gw}\) is the return flow (mm).

The SWAT model differentiates liquid and solid precipitation based on the near-surface air temperature to evaluate the governing water balance equation. The degree-day factor technique is implemented to determine the snowmelt (Jost et al., 2012; Kumar et al., 2013). The snowmelt process in the hydrologic model is regulated by the snowpack, the air temperature, and the daylight hours. The volume of water generated due to the snowmelt process is generally governed by the extent of snow cover in a watershed. The mass balance of the snowpack is given by the equation

\(SNO_{i}\) and \(SNO_{i-1}\) are the water content of snow present in snowpack during day i (mm water) and on previous day, respectively, \(P_{day_{i}}\) is the precipitation occurring as snowfall during day \(i\) (mm water), \(E_{sub_{i}}\) is the sublimation of snow happening during day \(i\) (mm water), and \(SNO_{mlt_{i}}\) is the snowmelt during day \(i\) (mm water).

To describe precipitation as either snowfall or rainfall, the determining threshold parameter is the snowfall temperature (SFTMP). At the subbasin scale, a comparison is established between the mean daily temperature and the SFTMP. If the value of the SFTMP is higher than the mean daily temperature, the precipitation is termed solid and added to the snowpack. As the snowpack temperature becomes greater than the snowmelt base temperature (SMTMP), the accumulated snow will start to melt. For the present day, the snowpack temperature is calculated as

\(T_{snowd_{i}}\) is the temperature of snowpack on present day \(i\) (°C), \(T_{snowd_{i-1}}\) is the temperature of snowpack on previous day \(i-1\) (°C), \(TIMP_{sno}\) is the lag factor for snow temperature, \(T_{av_{i}}\) is the mean temperature on present day \(i\) (°C), and \(\left(1-TIMP_{sno}\right)\) represents the complement of the parameter \(TIMP_{sno}\). It is equal to 1 minus the value of \(TIMP_{sno}\).

The snowpack starts to melt as the temperature of the snowpack exceeds the SMTMP. Two factors influence the rate of snowmelt: the first is the maximum melt rate, which occurs on 21st June (SMFMX), and the second is the minimum melt rate which occurs on 21st December (SMFMN). The snowmelt is calculated as

\(b_{mlt_{i}}\) is the melt factor during day \(i\) (mm of water/°C/d), \(SNO_{cov_{i}}\) is the HRU area fraction covered with snow, and \(T_{maxd_{i}}\) is the maximum temperature (°C) on current day \(i\). \(\left(\frac{T_{snowd_{i}}+T_{maxd_{i}} }{2}\right)\) calculates the average temperature for a specific day \(i\) by adding the snowpack temperature (\(T_{snowd_{i}}\)) and the maximum daily temperature (\(T_{maxd_{i}}\)) and then dividing the sum by 2. This average temperature is used in snowmelt models to estimate the temperature conditions that influence the rate of snowmelt.

\(b_{mlt,max}\) is the maximum melt factor and represents the highest rate at which snow can melt under certain conditions, typically during the warmest part of the year (June 21), and \(b_{mlt,min}\) is the minimum melt factor. It represents the lowest rate at which snow can melt, typically during the coldest part of the year (December 21). Maximum and minimum melt factor is measured in mm of water/°C/d. \(\mathrm{sin}\left[\frac{2\pi }{365}(d_{i}-81)\right]\) is a sine function that introduces seasonal variation into the melt factor depending on \(d_{i}\), where \(d_{i}\) represents the day of the year and \(81\) is the value subtracted from \(d_{i}\) to align the function with the middle of the year.

\(\mathrm{SNOCOVMX}\) is the snow threshold depth at full coverage (mm water), and \(cov_{1}\) and \(cov_{2}\) are shape-defining coefficients of curve.

The snowfall, snowpack, and snowmelt processes, spatially defined as the function of the elevation, are computed by the SWAT model when the value of snowfall temperature is reduced below the defined threshold value. The snow processes are evaluated efficiently with the help of elevation bands, also known as snow bands. Every subbasin in the catchment considers a maximum of ten snow bands as appropriate for estimating snowmelt processes. Each individual elevation band takes into account the two lapse rates: (i) the temperature lapse rate (TLAPS in C/km) and (ii) the precipitation lapse rate (PLAPS in mm of water/km/yr).

Input data for model

DEM, land use-land cover and soil data

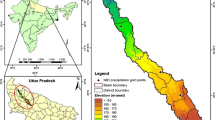

The United States Geological Survey’s (USGS) Shutter Radar Topography Mission (SRTM) provides 30 × 30 m resolution DEM (Fig. 3). The data was employed for creating and analysing the stream network, watershed delineation process, and computation of geomorphological parameters, including slope for HRU definition. The slope map was generated from the DEM for the present study for geomorphometric analysis and hydrological modelling (Fig. 4). The area is marked with rugged terrain as the slope values exhibit high variation.

The LANDSAT 8 OLI Satellite imagery downloaded from the USGS, having a resolution of 30 × 30 m, was processed in ERDAS IMAGINE 2014 to produce the map pertaining to land use-land cover for the given study region, employing the supervised classification technique (Fig. 5). The SWAT model utilised its land use classification scheme and classified the land use into 13 major classes: barren, evergreen forest, agriculture land, orchards, shrubland, urban industrial, urban high, medium and low density, urban water, transportation and wetland. The areal extent and description of the land use-land cover for the region under study are presented in Table 1. The primary use of the land use-land cover data is that it assists in creating HRUs, which then assign varied CNs to the heterogeneous land use-land cover and soil combinations to estimate runoff and employ the hydrological analysis in the area (Neitsch et al., 2011).

The soil map was obtained from the FAO soil survey for the region of study (Fig. 6). Eleven soil types were identified in the present study. The description of all the soil classes involved, along with the areal coverage, is mentioned in Table 2.

Meteorological and discharge data

The model uses meteorological data on a daily time scale, comprising the minimum and maximum air temperature, precipitation, solar radiation, relative humidity and wind speed from the meteorological stations. These parameters were obtained from the regional centre, the Indian Meteorological Centre (IMC) Ram Bagh from 2000 to 2019 at a spatial resolution 0.25° × 0.25°. The climate data in the model is generated using the WXGEN weather generator model. The monthly gauge and streamflow data were obtained from the Irrigation and Flood Control Department, Kashmir, from 2000 to 2019 for the gauging stations.

Autocorrelation (AC) and autocorrelation function (ACF)

Autocorrelation (AC) and autocorrelation function (ACF) are the core elements in time series analysis that aid in assessing the correlations between observations at different time lags. AC is the correlation of a variable within itself at different time points (Box et al., 2015), while ACF is a plot or representation of the AC values at various time lags (Brockwell & Davis, 2002; Shumway & Stoffer, 2017). In this study, the ACF plots were created for the temperature and precipitation time series data (Fig. 7a–d).

The temporal data series underwent a two-tailed test to determine the existence of autocorrelation coefficients at the 5% significance level. Mathematically, the ACF for a time series {\({X}_{t}\)} at lag K can be defined as

where ACF (K) denotes the autocorrelation coefficient or serial correlation at lag K, n denotes the total observation numbers in the data, \({X}_{t}\) and \({X}_{t-k}\) represent the observations at time t and t-k, respectively, the summation (∑) is performed over all valid time points in the series.

The ACF value was evaluated against the null hypothesis using a two-tailed test at a 95% confidence interval is given as

The indication of the data being serially correlated is when the values of ACF (K) fall within the confidence interval’s upper and lower bounds. In such cases, the pre-whitening method, variance correlation (Hamed & Rao, 1998) and TFPW (trend-free pre-whitening) test (Yue & Wang, 2002) are commonly suggested as an approach to address the serial correlation.

The ACF (K) quantifies the linear association between the observations at present time and those at a lag of K time periods. The values of ACF (K) range from − 1 to 1, suggesting negative values show negative correlation, zero value suggests no correlation and the positive values suggest positive correlation.

Pre-whitening and modified Mann–Kendall (MMK)

Pre-whitening is the procedure that involves the removal of serial correlation from the time-dependent data. The method transforms the correlated time series data into uncorrelated data structure (Brockwell & Davis, 2002). The primary step involved in pre-whitening process is to identify the model that appropriately detects the existence of serial correlation or AC in data using moving average model (MA) or autoregressive (AR) model or autoregressive integrated moving average (ARIMA) model. Maximum likelihood estimation method or least squares method is used for parameter estimation, and the residuals are obtained by deducting the fitted values from the observed values. The obtained residuals undergo appropriate transformations to reduce the residual AC from the data. In the present study, the pre-whitening test was implemented after checking for the existence of AC in the datasets and by visualising the ACF plots.

The modified Mann–Kendall (MMK) is a non-parametric statistical test that has been utilised in the study area for analysing the precipitation and temperature trends in the data across different time scales which includes monthly, seasonal (winter as DJF, spring as MAM, summer as JJAS and autumn as ON) and annual periods. This non-parametric test is a modification over the Mann–Kendall test that identifies the monotonic trends present in time series data. The MMK test accounts for potential serial correlation in the data, appropriate for analysing the time series data with autocorrelations. The pre-whitening phenomenon is the robust method to eliminate the serial correlations from the data by applying transformation to data that reduces the autocorrelation structure. The implementation of MMK test involves analysing the residuals obtained from pre-whitening procedure at different temporal scales encompassing shorter time intervals like monthly as well as longer intervals including seasonal and yearly scales. The incorporation of both the tests on the time series data takes into account the serial correlation of the data efficiently and aims to provide the more reliable test results with independent and uncorrelated datasets (Yue & Wang, 2002). The MK test statistic, corrected Z statistic and the corrected variance in MMK test are represented by the following formulas:

where sign represents signum function.

The trend test is employed on a ranked time series, \({X}_{l}\), ranging from l = 1 to n − 1 and another time series, \({X}_{k}\), that ranges from k = l + 1 to n, each data point in \({X}_{l}\) serves as a reference point with comparative analysis with the remaining data points in \({X}_{k}\) as a part of analysis and is mathematically represented as follows:

where \({X}_{l}\),\({X}_{k}\) are the successive data points with n as total number of data points.

There is corrected variance term associated with MMK test and is calculated as follows:

where VARcorr is the new variance or variance after correction, VAR(S) is the variance before correction, tk is the number of ties of extent k, m is the total number of values tied, and N/N* is the correction factor called effective sample size. When the sample size n exceeds 10, Zcorr statistic is calculated using VARcorr and MK statistic. The following formula is used to estimate Zcorr statistic:

The statistically increasing trend is denoted by the positive values attained by the \({Z}_{\mathrm{corr}}\) statistic while as negative values for the same depict decreasing trend in the time series data. When the value of standard normal deviate for \({Z}_{\mathrm{corr}}\) given as \({Z1}_{\mathrm{corr}(1-\frac{\alpha }{2})}\)) is greater than the value of \({Z}_{\mathrm{corr}}\) statistic, it demonstrates that the trend is statistically significant or vice versa and “α” is the significance value, considered 5% in the present study.

The other statistical variables calculated by MMK test are the Sen’s slope estimator and tau. The Sen’s slope, employed in the MMK test, is a non-parametric estimator that represents the median of all possible slopes between adjacent data points in a time series. It provides a robust measure of trend magnitude without relying on distributional assumptions. Sen’s slope is computed by calculating the pairwise differences in data values and their corresponding time intervals. It offers valuable insights into the direction and magnitude of the trend observed in the data and is represented by the following equation:

where M denotes the slope, \({X}_{k}\) and \({X}_{l}\) are the data points at values at k and l positions, respectively, and \(k-l\) represents time interval between the data points.

The above equation finds the slope between two data points in the time series data (X). Given a time series X with n observations, the number of possible values of M, denoted as N, can be calculated as N = n(n − 1)/2. Among different approaches, Sen’s method is widely employed to determine the overall estimator of the slope (M). This method calculates M as the median of the N values of M. Thus, the overall estimator of the slope (M) using Sen’s method is obtained by taking the median of the N values of M.

In the MMK test, the tau statistic represents a quantitative measure of trend strength or the magnitude of the monotonic trend observed in a time series. It quantifies the level of concordance or discordance between the ranks assigned to the data points. The tau value ranges from − 1 to 1, where positive values signify an increasing trend, negative values indicate a decreasing trend, and values close to zero suggest the absence of a statistically significant trend. The mathematical formula for calculating tau (τ) for the data pairs having the same direction of trend is given as

where \({n}_{c}\) is the number of concordant pairs, \({n}_{d}\) is the number of discordant pairs, \({n}_{t}\) is the number of tied data pairs with the same value, and \({n}_{u}\) is the number of unique data pairs.

Modelling framework

SWAT model setup

The ArcGIS software with the ARCSWAT interface was employed to generate the required datasets, including spatial and weather-related data. It was further used to delineate the watershed, subwatersheds and drainage networks. The threshold value of 8000 ha was marked to delineate the outlet points and streams to execute the hydrologic analysis and the model simulation for defining the subwatersheds. The upper Jhelum was bifurcated into 33 subbasins with minimal uncertainty in spatial variability.

The hydrological system analysis of the SWAT model was performed on a daily time step at the HRU level. All spatial datasets, DEM, land use-land cover, and soil map, were overlaid, and each parameter was assigned threshold values. The soil type, slope map and land type were assigned threshold values of 5%, 5%, and 1%, respectively. While delineating the HRUs, the areas below the threshold value were ignored. The SWAT model calculated the surface runoff and channel routing using the SCS CN method and the Muskingum method, respectively (Arnold et al., 1998). Potential evapotranspiration (PET) was calculated using the Penman–Monteith estimation in conjunction with the simplified plant growth model. The implementation of the snow package with the inclusion of elevation bands was used to examine the impact of snow cover and snowmelt simulations in the SWAT model. A maximum of five elevation bands were used in snow-dominated areas of the basin. The elevation band method was applied in 33 subbasins to account for snowmelt hydrology, and the elevation band distribution is shown in Table 3. However, the elevation band method was not applied to subbasins 7, 9, 12, 19, 20 and 22 as the difference between the minimum and maximum elevation was less than 500 m, implying no significant effect on the hydrological phenomena pertaining to the study region (Abbaspour et al., 2015).

SWAT model calibration, validation and sensitivity analysis

The simulation process for the hydrological model was executed from the year 2000 to 2019. The initial three years (2000–2002) were set up as the warm-up phase for the model to attain satisfactory initial parameter values for the 33 subbasins. The streamflow data from the year 2003 to 2014 were used for calibrating the hydrological and snow parameters. The validation period was set for five years from 2015 to 2019. The SWAT-CUP program determined the sensitivity analysis of hydrological and snow parameters using the Latin hypercube-OAT (LH-OAT) sampling technique and the SUFI-2 global sensitivity analysis algorithm (Abbaspour et al., 2015) to establish the most sensitive parameter affecting the streamflow conditions (Winchell et al., 2010). Sensitivity analysis employs various methods to identify parameters with a strong influence on the model output. Nineteen flow parameters were found sensitive in the basin. Two different statistical measurements that ascertain the parameter sensitivity are (i) t-stat and (ii) p value. The t-stat is a statistical measure that defines the relationship between the coefficient of regression of a parameter and its standard error (SE). When the coefficient of regression exceeds the SE, the parameter under consideration behaves sensitively. The p value associated with the t-stat of a parameter is estimated using the t-distribution table with degrees of freedom equal to n − 1. The higher p value suggests lower parameter sensitivity and vice versa (Abbaspour, 2011).

Model performance evaluation

Model statistical evaluation criteria

The model performance metrics used in the calibration and evaluation of hydrological models are utilised by various researchers to express uniquely the similarity between the simulated and observed discharge estimates (Gupta et al., 2009). Several studies have suggested the standard statistical model evaluation criteria. The calibration results obtained from the model are considered acceptable only when the values of the Nash–Sutcliffe model efficiency (NSE), the coefficient of determination (R2), and the Kling-Gupta efficiency (KGE) exceed 0.50, 0.60, and 0.3, respectively (Santhi et al., 2001). Several researchers have used R2 and NSE as metrics to evaluate the effectiveness of the SWAT model (Rahman et al., 2013, Rahman et al., 2014, Troin & Caya, 2014). The NSE and KGE are used to differentiate between behavioural and non-behavioural models. The KGE metric utilises a decomposition method to break down NSE into its correlation, variability bias, and mean bias components to overcome its limitations (Gupta et al., 2009). The model performance indicators comprise R2, NSE and KGE. The errors generated in the model are studied by mean absolute bias (MAE), percent bias (P.B), and root mean square error (RMSE) and are referred to as model error evaluation metrics, with an optimum value of zero (Moriasi et al., 2007). The P.B indicates overestimation of flow when the values are negative and underestimation when the values are positive (Gupta et al., 1999). The statistical metrics are calculated using the following equations:

\(Q_{obs}\), \(\overline{Q}_{obs }\) is the observed and mean observed value, respectively, \(Q_{sim}\), \(\overline{Q}_{sim }\) is the simulated and mean simulated value, respectively, \(N\) represents the total number of data points or observations being compared, \(r\) is the correlation between values, \(\propto\) is the error due to flow variability, and \(\beta\) is the bias.

Model uncertainty prediction criteria

The process of calibration performed on the hydrological model should first be properly evaluated before further use. The results predicted by the model do not attain certain values because of the presence of uncertainties that creep in due to input parameters, the structure of the model, and output. To evaluate the statistical uncertainty, the two prediction measures, P factor and R factor, are used to determine the accuracy of model. The P factor refers to the percentage of measured data that falls within the 95PPU, i.e., 95% prediction boundary, and its value ranges from 0 to 1. The R factor is a dimensionless ratio between the average thickness of the 95PPU (95% prediction boundary) and the standard deviation (SD), and its value ranges from 0 to infinity. When the P factor value is equal to 1 (100%) and the R factor approaches zero, it implies that simulated values and observed values are in absolutely good agreement (Abbaspour, 2011). The equations for calculating the P and R factor are given as

\(ny_{ti}\) is the measured values bracketed by 95PPU, \(N\) is the total measured values, \(\overline{dx }\) is the average of the x variable, and \(\sigma_{x}\) is the standard deviation of measured variable.

Results and discussion

The upper Jhelum river basin covers an expansive area of approximately 5417.06 km2, with the highest elevation and lowest elevation of 5308 m and 1561 m, respectively. It has a total length of 88.9 km, a perimeter of 368.6 km, and a mean width of 60.9 km. The basin serves as the trunk river and is the seventh order stream, consisting of five subbasins from the great Himalayan range. The basin features a trellis, dendritic, and parallel/subparallel drainage pattern. All the basin parameters are illustrated in Table 4.

The SWAT model was configured using ARCSWAT, an ArcGIS interface, using the datasets prepared for the basin. The SWAT model generated 33 subbasins after the watershed delineation process. The modelling of hydrological processes was influenced by soil, slope data, and the overlaying land, which led to the creation of HRUs. A three-year period was set as the initialization period for the model, along with the integration of meteorological datasets and a user table for the weather generator for simulation.

Upper Jhelum basin hydroclimatic conditions

Temperature

The temperature data were analysed from 2000 to 2019 (20 years). The average highest temperature (Tmax) and average lowest temperature (Tmin) were calculated by averaging the values from the four meteorological stations (Fig. 8). The mean annual Tmax ranged from 17.01 to 20.5 °C (average, 18.91 °C), and the mean annual Tmin varied from 3.46 to 7.79 °C (average, 6.13 °C) in the basin. On a monthly basis, the highest temperature was recorded in July and varied from 16.11 to 27.71 °C. The subzero temperatures were witnessed in the month of January, which also onsets the severe winter period, with temperature ranging from − 3.29 and 6.91 °C. The spring season marks the rise in temperature again and reaches its maximum in the summer season, followed by the autumn season when the temperature again starts to decline. The spatial variability of temperature is shown in Fig. 9. The spatial heterogeneity in temperature distribution is primarily modulated by variations in elevation across the study area. Alterations in elevation induce corresponding fluctuations in atmospheric pressure, which, in turn, exert a notable influence on temperature patterns. Higher elevations typically exhibit lower temperatures due to the reduced atmospheric pressure, while lower elevations tend to experience relatively higher temperatures. The meteorological stations are located within a topographical landscape characterised by mountainous regions, with lower elevation areas situated at the centre. This topography leads to temperature variations, with higher temperatures observed at the central region and lower temperatures observed at the peripheries (Rashid et al., 2015; Zaz et al., 2019; Dad et al., 2021; Nabi et al., 2022).

Precipitation

The annual average rainfall from the 2000–2019 period was recorded to be 1684.18 mm across four weather stations (Fig. 10). The minimum and maximum yearly mean rainfall values were 654.35 mm for the year 2000 and 1477.85 mm for the year 2014, respectively. On a monthly basis, the maximum precipitation was recorded for April (130.30 mm) and the lowest for November (44.51 mm). The maximum precipitation in the basin is received from October to May, accounting for 70% of the total annual precipitation. The spatial variation in the total amount of annual precipitation received has been depicted in Fig. 11. The orographic effect, which results from the interaction between topographic features and atmospheric conditions, is the prominent mechanism affecting the spatial dispersion of rainfall across the region. This phenomenon exerts a substantial influence on the spatial pattern of rainfall. The atmospheric circulation patterns, monsoon dynamics, heterogenous landscape, and local microclimate also contribute to the spatial variation of rainfall. The maximum amount of yearly precipitation is received by the areas surrounded by the Zanskar range, and the lowest annual precipitation receiving areas are the central low elevation regions. The elevation has a significant impact on precipitation patterns due to its influence on atmospheric processes and moisture availability. Changes in elevation can lead to variations in temperature, air pressure, and topographic features, which, in turn, affect precipitation. The high elevation regions receive the maximum amount of precipitation, and lower elevation regions receive minimal precipitation.

Trend analysis of time series data

The MMK test was implemented on the time series data after checking the ACF plots and applying pre-whitening procedures. The outputs of the MMK test along with other statistics for the temperature time series data for the four meteorological stations are summarised in Table 5. The analysis of the results revealed statistically significant trends in the data at 5% significance level. Specifically, a notable decreasing trend was observed in the months of November, December, January, and March across all stations. In contrast, significant increasing trends were found in the months of June, July, and August for all stations. When examining the seasonal patterns, a noteworthy declining trend was observed during the winter season (DJF). Additionally, the analysis of the temperature time series data indicated a significant upward trend in temperatures throughout the year.

The trend analysis of precipitation time series data is summarised in Table 6. The results obtained from the MMK test statistics reveal significant increasing trends in precipitation for the months of January, February, April, June, and August across all four meteorological stations. Conversely, a significant decreasing trend was observed for the month of July at all stations. In terms of temperature data series, a significant positive trend was identified for the month of July, indicating that it is the warmest month in the study region. Furthermore, the analysis suggests that July receives the least amount of precipitation, as evidenced by the significant negative trend observed during this month. The examination of seasonal patterns indicates a statistically significant positive trend in precipitation during the spring season (MAM). Similarly, the annual analysis of the precipitation data confirms a significant positive increasing trend. The increase in precipitation can be attributed to the western disturbances occurring in the basin. The western disturbances exert their primary influence on the western portions of Kashmir, including the Kashmir Valley and the vicinity of the Pir Panjal Range. The four meteorological stations are frequently impacted by these atmospheric systems. These areas exhibit an elevated frequency of precipitation events, encompassing both rainfall and snowfall, which are associated with the passage of western disturbances. The intricate interaction between these disturbances and the rugged topography of the Pir Panjal Range contributes to an augmented precipitation regime within these regions. The prevailing findings of this study are consistent with the outcomes of prior investigations conducted within the upper Jhelum basin (Dad et al., 2021; Nabi et al., 2022; Romshoo et al., 2018; Shafiq et al., 2019).

The MMK test played a critical role by assessing statistically significant trends in temperature and precipitation datasets from 2000 to 2019. These test results were essential in shaping the data pre-processing methodology. Before conducting trend analysis, rigorous implementation of a pre-whitening procedure was carried out, a crucial step in hydrological modelling. This process is vital as it removes any inherent serial correlation in the data, ensuring statistical independence of residuals generated in subsequent modelling stages.

The MMK test was primarily used to maintain high standards of data integrity and quality assurance for temperature and precipitation time series data in the study area. The main aim was to examine the temporal temperature and precipitation data series for discernible trends or non-stationary characteristics. This methodological approach is significant because it can greatly impact the precision of the hydrological modelling efforts and, consequently, the accuracy of the discharge simulations. Identifying persistent trends or non-stationary attributes in the dataset could substantially influence the overall findings and conclusions of the study.

Streamflow

The three gauging stations were subjected to streamflow analysis, and the maximum peak discharge was recorded at Sangam (3465.37 cumecs), followed by Ram-Munshi Bagh (2634.64 cumecs), and then by Rambiara (1716.35 cumecs), respectively (Fig. 12). The lowest values of peak discharge for Sangam, Ram-Munshi Bagh, and Rambiara were 2079.93 cumecs, 1160.40 cumecs and 600.99 cumecs, respectively. The year 2014 witnessed flood at all gauging stations and marked the highest annual maximum flow. The spring and summer seasons contribute the maximum streamflow (71%) of the yearly streamflow.

Sensitivity analysis

During the calibration process, the first step is to ascertain the most influential input parameter that regulates the streamflow. The GSA was used, considering nineteen (19) flow parameters, and the autocalibration process was executed by employing the SUFI-2 global sensitivity algorithm incorporated in SWAT-CUP.

To analyse the influence of each parameter on the selected objective function, a large number of iterations were required. The values for hydrological parameters were extracted after running SWAT-CUP for 1000 iterations, taking into consideration the experience and complete hydrological knowledge of the basin. The model input parameters were chosen based on the previous studies (Table 7). Out of the 19 flow parameters, some snowmelt parameters greatly influenced the basin hydrology, particularly the runoff in the basin.

The first step was to fit the values for snowmelt parameters using SUFI-2. As per Abbaspour et al. (2017), there may be identification problems if the parameters pertaining to the snowmelt (SFTMP, SMTMP, TIMP, SMFMX, SMFMN, TLAPS) are simultaneously calibrated with hydrological parameters. To overcome this constraint, separate and rigorous calibrations of snow parameters were executed to attain the best fit values. Subsequently, the snowmelt parameters were eliminated while calibrating the rainfall parameters that influence the surface runoff (El Harraki et al., 2021; Malik et al., 2021).

The results of the sensitivity analysis demonstrated how different model parameters impact the uncertainties in the output, and the relevant calibration ranges are provided for examination (Table 8). From the sensitivity analysis, it was revealed that CN was the most influential hydrological parameter (t = 9.3667; p = 0.0000) in the basin. The CN is a rainfall runoff conversion coefficient and is a dominant parameter, hence regulating the runoff generation. Depending on the values of the land use-land cover, soil type, and the corresponding slope, the CN value varies from HRU to HRU and subbasin to subbasin. A large change was registered in the model output corresponding to the relative variation in the CN value. It was increased to 10% from its default value, thus increasing the runoff generation. The CN parameter helps to maintain spatial consistency; hence its value is changed relatively. The SMTMP and SFTMP exhibited strong sensitivity, followed by TLAPS. The SMTMP was the second most sensitive parameter regulating the peak and shape of the simulated hydrograph. The TLAPS is also an influential parameter as it is directly linked with the process of snowmelt. The available soil water capacity (SOL_AWC) significantly regulates the infiltration process occurring in the basin. To enhance the water movement into the soil matrix, the SOL_AWC value was reduced by 12% proportionally. The two influential and interdependent parameters that significantly influenced the basin hydrology were TIMP and SMFMX. The TIMP parameter takes into account the preceding day’s snowmelt and determines the influence of temperature variation on snowpack temperature on a daily basis. The base flow alpha factor (ALPHA_BF) and the ground water delay (GW_DELAY) exhibited sensitivity in relation to base flow and determined the total water that infiltered into the soil matrix and then recontributes this water to the streamflow in the form of groundwater passing through different soil profiles. These parameters also take into consideration the time traversed by the base flow to reach the outlet. The overall ranking of the parameters and the values associated with them give insight into the importance of optimisation for parameter estimation. The SUFI-2 algorithm efficiently reflects the ranges of parameters considering the uncertainties creeping through the input data, the structure of the model, parameter estimation, and observation data. The contribution of all parameters in model output uncertainties is depicted in the sensitivity analysis. The sensitive parameters induce the maximum uncertainty in the model output; hence, their values should be sufficiently changed during the calibration process so that the best model output is obtained.

SWAT calibration and validation

After determining the optimal parameter datasets with their valid values, the model streamflow simulation was performed for a period of 20 years (2000–2019) with initializing the model for three years. The model calibration for the calculation of monthly runoff was performed for the years 2003 to 2014, and the validation was performed from years 2015 to 2019. The study area presents unique hydroclimatic challenges due to its complex terrain and meteorological variability. The hydrological patterns in the study region are characterised by localised and significantly variable behaviours, frequently influenced by monsoon dynamics and snowmelt. Therefore, the modelling periods within this time span aim to present a more precise depiction of the unique hydrological behaviour. The effectiveness of the model was illustrated by the statistical values obtained for the calibration and validation phases. The values of R2, KGE, and NSE for Rambiara were 0.838, 0.813 and 0.775, respectively, and for Sangam the values were 0.847, 0.91 and 0.832, respectively; and for Ram Munshi Bagh, the values were 0.829, 0.82, and 0.767, respectively, for the calibration period (Table 9). In terms of the errors in model simulation (P.B, MAE and RMSE) for Ram-Munshi Bagh, the estimated errors were − 6.5%, 33.957, and 46.472, respectively. For Sangam, the estimated errors were 2.3%, 39.544, and 49.195, respectively, and for Rambiara, the estimated errors were − 5.3%, 20.571, and 27.455, respectively.

The R2 values for the validation phase were 0.817, 0.853 and 0.836 for Rambiara, Sangam and Ram Munshi Bagh, respectively. The NSE figures for Rambiara, Sangam and Ram Munshi Bagh were reported as 0.75, 0.802, and 0.794, respectively, while the KGE values were 0.812, 0.834, and 0.855, respectively (Table 10). The RMSE, P.B and MAE were 46.361, − 6.4%, and 32.978 for Rambiara, 58.972, 1.5%, and 49.131 for Sangam and 52.75, − 7.7%, and 40.181 for Ram Munshi Bagh.

The results showed that the model performance indicators were close to the suggested value of 1; and the model error evaluators were close to zero (0). The positive values of P.B for Sangam imply underestimated flow, and the negative value of P.B at Ram Munshi Bagh and Rambiara indicates overestimation of the flow (Gupta et al., 1999). The reason for this behaviour could be due the fact that SWAT does not satisfactorily model the watershed streamflow that is predominantly produced from snowmelt (Fontaine et al., 2002). The values as obtained from the objective functions show that the model exhibits minimum bias towards both the calibration and validation periods, confirming that a modest agreement exists between the simulated and observed discharge. This also illustrates that our model has been adequately calibrated for the basin.

The performance evaluation of the model was further assessed trough graphical comparison between simulated and observed values of streamflow. The hydrograph representing the runoff in the basin and the scatter plots at discharge stations for the calibration and validation periods are shown in Figs. 13a–f and 14a–f, respectively. The observed discharge was reasonably simulated by the model, and the peak values in the hydrograph were well defined by the model. The observed and simulated peak flows are almost similar for all three gauging stations. The year 2014, considered as the flood year, recorded the maximum peak flow that was efficiently recognised by the model, implying that the SWAT hydrological model has the capability to produce satisfactory results for any simulation period and can precisely and approximately regenerate high flows. The primary objective of incorporating the flood year 2014 into the calibration period was because significant events such as floods have profound consequences for the study area, and this selection aimed to capture the specific dynamics of such occurrences within the modelling framework.

a, b Scatter plot of simulated against observed for the calibration (2003–2014) and validation (2015–2019) periods at Ram Munshi Bagh; c, d scatter plot of simulated against observed for the calibration (2003–2014) and validation (2015–2019) periods at Sangam; e, f scatter plot of simulated against observed for calibration (2003–2014) and validation (2015–2019) at Rambiara

Uncertainty analysis

The statistical indices for uncertainty analysis, the P and R factors, reported acceptable values for the calibration and validation periods as 0.7 and 1, respectively (Abbaspour et al., 2015). The P and R factors for all gauging stations are illustrated in Tables 9 and 10. The 95PPU bands revealed that certain low flow events were not properly represented by the SWAT model and could potentially be attributed to the model’s inadequacy in recognising the groundwater flow efficiently (Rostamian et al., 2008; Abbas et al., 2019). As analysed and documented by many researchers, the correct representation of low flow events has always been a limitation for the concerned model (Abbas et al., 2019; Sudheer et al., 2007). This may be due to the inability of CN to effectively justify abstractions because of the antecedent soil moisture conditions. The resolution of DEM can have a major impact on runoff generation, as it influences drainage areas, stream networks, elevation slope distribution, which can significantly affect hydrograph shape and characteristics.

Conclusion

The primary aim of this study was to set up and implement the calibration and validation procedures on the SWAT model in the upper Jhelum basin, where runoff is mainly due to rain and snowmelt processes. The semi-automatic calibration program, SWAT-CUP, was used to perform the calibration and validation in the snowy basin. The application starts with the set of input parameters and their initial ranges and finds the best fit values for the chosen objective functions by iteration. The introduction of elevation bands into the SWAT model, especially in snow-dominated regions, aimed to analyse the effects of snow cover and snowmelt in 33 subbasins for hydrological assessment. The temperature and precipitation time series data were thoroughly analysed to detect any potential serial correlation. Autocorrelation plots were created using the autocorrelation function to assess the existence of autocorrelation patterns. To mitigate the impact of autocorrelation, a systematic pre-whitening procedure was applied, resulting in the derivation of residuals. Subsequently, the modified Mann–Kendall test was utilised to carefully examine the trend patterns evident in the obtained residuals. This comprehensive scientific approach facilitated a robust assessment of trends in both the temperature and precipitation datasets. The analysis of the results revealed statistically significant trends in the data at a 5% significance level. For temperature time series data, there was a pronounced decreasing trend observed in November, December, January, and March and significant increasing trends in June, July, and August for all stations. A notable declining trend was evident during the winter season (DJF), and a significant upward trend was noticed throughout the year. The findings for precipitation time series data reveal significant upward trends during January, February, April, June, and August and substantial decreasing trend was noted for July across all stations. Additionally, the analysis indicates that July experiences the lowest precipitation, supported by the significant negative trend observed during this period. The exploration of seasonal patterns highlights a statistically meaningful increase in precipitation during the spring season (MAM). Similarly, the annual analysis of the precipitation data affirms a substantial and significant positive increasing trend. The sensitivity analysis conducted in SWAT-CUP using the SUFI-2 algorithm resulted in the optimisation of a parameter set consisting of 11 parameters, with the soil conservation service curve number identified as the most influential parameter, followed by the snowmelt base temperature. The model was calibrated (2003–2014) and validated (2015–2019) for the three gauging stations. The statistical analysis revealed that the results were in good agreement as both the NSE and R2 were > 0.5. The uncertainty associated with the model was quantified using the SUFI-2 global sensitivity algorithm, and the P and R factor values for the calibration–validation periods indicated good results encompassing more than 70% of the observed data. The current study further concludes that in mountainous catchments, the SWAT hydrological model can simulate flows generated from the snowmelt processes that form an important component of the hydrological system. The calibrated model provides a framework to analyse the effect of the spatial variability of land use-land cover and its impacts on biodiversity and climate change. Furthermore, it facilitates in assessing the impact of various management and conservation strategies on the streamflow, water quality, nutrient and sediment yield for a specific watershed.

Data availability

On request.

References

Abbas, T., Hussain, F., Nabi, G., Boota, M. W., Wu, R., & S. (2019). Uncertainty evaluation of SWAT model for snowmelt runoff in a Himalayan watershed. Terrestrial, Atmospheric and Oceanic Sciences, 30(2), 265–279. https://doi.org/10.3319/TAO.2018.10.08.01

Abbaspour, K. C. (2011). SWAT-CUP: SWAT calibration and uncertainty programs—a user manual. Swiss Federal Institute of Aquatic Science and Technology.

Abbaspour, K. C., Vaghefi, S. A., & Srinivasan, R. (2017). A guideline for successful calibration and uncertainty analysis for soil and water assessment: A review of papers from the 2016 international SWAT conference. Water, 10(1), 6.

Abbaspour, K.C., Vejdani, M., & Haghighat, S. (2007). SWAT-CUP calibration and uncertainty programs for SWAT. MODSIM 2007 International Congress on Modelling and Simulation, Modelling and Simulation Society of Australia and New Zealand 1596–1602. https://doi.org/10.3390/w10010006.

Abbaspour, K. C., Rouholahnejad, E., Vaghefi, S., Srinivasan, R., Yang, H., & Kløve, B. (2015). A continental scale hydrology and water quality model for Europe: Calibration and uncertainty of a high-resolution largescale SWAT model. Journal of Hydrology, 52(4), 733–752. https://doi.org/10.1016/j.jhydrol.2015.03.027

Andrianaki, M., Shrestha, J., Kobierska, F., Nikolaidis, N. P., & Bernasconi, S. M. (2019). Assessment of SWAT spatial and temporal transferability for a high-altitude glacierized catchment. Hydrology and Earth System Science, 23(8), 3219–3232. https://doi.org/10.5194/hess-23-3219-2019

Arnold, J.G., Srinivasan, R., Muttiah, R.S., & Williams, J.R. (1998). Large area hydrologic modelling and assessment. Part I: model development. The Journal of the American Water Resources Association, 34(1), 73–89. https://doi.org/10.1111/j.1752-1688.1998.tb05961.x.

Arnold, J.G., Moriasi, D.N., Gassman, P.W., Abbaspour, K.C., White, M.J., Srinivasan, R., Santhi, C., Harmel, R.D., Van Griensven, A., Van Liew, M.V., Kannan, N., & Jha, M.K. (2012). SWAT: model use, calibration, and validation. Transactions of the ASABE, 55(4), 1491-1508. https://doi.org/10.13031/2013.42256.

Arnold, J. G., & Fohrer, N. (2005). SWAT2000: Current capabilities and research opportunities in applied watershed modelling. Hydrological Processes, 19(3), 563–572. https://doi.org/10.1002/hyp.5611

Bai, J., Shen, Z., & Yan, T. (2017). A comparison of single- and multi-site calibration and validation: a case study of SWAT in the Miyun Reservoir watershed, China. Frontiers in Earth Science, 11, 592–600. https://doi.org/10.1007/s11707-017-0656-x

Bashir, T., & Kumar, R. (2017). Simulation of modelling of water ecohydrologic dynamics in a multilayer root zone under protected conditions in the temperate region of India. Journal of Hydrologic Engineering, 22(10), 05017020.

Bashir, T., Romshoo, S. H., & Sabha, I. (2018). Monitoring land use/cover change using remote sensing and GIS techniques in upper Jhelum basin. International Journal of Advance Research in Science and Engineering, 7(4), 1060–1077.

Beven, K. (2001). How far can we go in distributed hydrological modelling? Hydrology and Earth System Science, 5(1), 1-12. https://doi.org/10.5194/hess-5-1-2001.

Box, G. E., Jenkins, G. M., Reinsel, G. C., & Ljung, G. M. (2015). Time series analysis: Forecasting and control. John Wiley & Sons.

Brockwell, P. J., & Davis, R. A. (Eds.). (2002). Introduction to time series and forecasting. New York, NY: Springer New York.

Choudhary, R., & Athira, P. (2021). Effect of root zone soil moisture on the SWAT model simulation of surface and subsurface hydrological fluxes. Environmental Earth Sciences, 80, 1–16. https://doi.org/10.1007/s12665-021-09912-z

Cibin, R., Sudheer, K., & Chaubey, I. (2010). Sensitivity and identifiability of streamflow generation parameters of the SWAT model. Hydrological Processes, 24(9), 1133–1148. https://doi.org/10.1002/hyp.7568

Dad, J. M., Muslim, M., Rashid, I., & Reshi, Z. A. (2021). Time series analysis of climate variability and trends in Kashmir Himalaya. Ecological Indicators, 126, 107690. https://doi.org/10.1016/J.ECOLIND.2021.107690

Dagnew, A., Philip, T., & Silvia, W. G. (2016). Modelling agricultural watersheds with the soil and water assessment tool (SWAT): calibration and validation with a novel procedure for spatially explicit HRUs. Environmental Management, 57, 894–911. https://doi.org/10.1007/s00267-015-0636-4

Debele, B., Srinivasan, R., & Gosain, A. K. (2010). Comparison of process-based and temperature-index snowmelt modeling in SWAT. Water Resources Management, 24, 1065–1088. https://doi.org/10.1007/s11269-009-9486-2

Duan, Y., Liu, T., Meng, F., Luo, M., Frankl, A., De Maeyer, P., & Feng, X. (2018). Inclusion of modified snow melting and flood processes in the SWAT model. Water, 10(12), 1715. https://doi.org/10.3390/w10121715

El Harraki, W., Ouazar, D., Bouziane, A., El Harraki, I., & Hasnoui, D. (2021). Streamflow prediction upstream of a dam using SWAT and assessment of the impact of land use spatial resolution on model performance. Environmental Processes, 8, 1165–1186. https://doi.org/10.1007/s40710-021-00532-0

Fontaine, T. A., Cruickshank, T. S., Arnold, J. S., & Hotchkiss, R. H. (2002). Development of a snowfall–snowmelt routine for mountainous terrain for the soil water assessment tool (SWAT). Journal of Hydrology, 262(1–4), 209–223. https://doi.org/10.1016/S0022-1694(02)00029-X

Gassman, P. W., Sadeghi, A. M., & Srinivasan, R. (2014). Applications of the SWAT model special section: overview and insights. Journal of Environmental Quality, 43(1), 1–8. https://doi.org/10.2134/jeq2013.11.0466

Ghaith, M., & Li, Z. (2020). Propagation of parameter uncertainty in SWAT: A probabilistic forecasting method based on polynomial chaos expansion and machine learning. Journal of Hydrology, 586, 124854.

Gull, S., & Shah, S.R. (2022). Modelling streamflow and sediment yield from two small watersheds of Kashmir Himalayas, India. ISH Journal of Hydraulic Engineering, 1–10. https://doi.org/10.1080/09715010.2021.2015463.

Gupta, H. V., Kling, H., Yilmaz, K. K., & Martinez, G. F. (2009). Decomposition of the mean squared error and NSE performance criteria: Implications for improving hydrological modelling. Journal of Hydrology, 377(1–2), 80–91.

Gupta, H. V., Sorooshian, S., & Yapo, P. O. (1999). Status of automatic calibration for hydrologic models: comparison with multilevel expert calibration. Journal of Hydrologic Engineering, 4(2), 135–143. https://doi.org/10.1061/(ASCE)1084-0699(1999)4:2(135)

Hallouz, F., Meddi, M., Mahé, G., & Alirahmani, S. (2018). Modeling of discharge and sediment transport through the SWAT model in the basin of Harraza (Northwest of Algeria). Water Science, 32(1), 79–88. https://doi.org/10.1016/j.wsj.2017.12.004

Hamed, K. H., & Rao, A. R. (1998). A modified Mann-Kendall trend test for autocorrelated data. Journal of Hydrology, 204, 182–196. https://doi.org/10.1016/S0022-1694(97)00125-X

Hasan, M. A., & Pradhanang, S. M. (2017). Estimation of flow regime for a spatially varied Himalayan watershed using the improved multisite calibration of the Soil and Water Assessment Tool (SWAT) model. Environmental Earth Sciences, 76, 1–13. https://doi.org/10.1007/s12665-017-7134-3

Holvoet, K., Van Griensven, A., Seuntjens, P., & Vanrolleghem, P. (2005). Sensitivity analysis for hydrology and pesticide supply towards the river in SWAT. Physics and Chemistry of the Earth, Parts A/B/C, 30(8–10), 518–526. https://doi.org/10.1016/j.pce.2005.07.006

Hosseini, S. H., & Khaleghi, M. R. (2020). Application of SWAT model and SWAT-CUP software in simulation and analysis of sediment uncertainty in arid and semi-arid watersheds (case study: the Zoshk-Abardeh watershed). Modeling Earth Systems and Environment, 6(4), 2003–2013. https://doi.org/10.1007/s40808-020-00846-2

Iudicello, J. J., & Chin, D. A. (2013). Multimodel, multiple watershed examination of in-stream bacteria modelling. Journal of Environmental Engineering, 139(5), 719–727. https://doi.org/10.1061/(ASCE)EE.1943-7870.0000670

Jost, G., Moore, R. D., Smith, R., & Gluns, D. R. (2012). Distributed temperature-index snowmelt modelling for forested catchments. Journal of Hydrology, 420, 87–101. https://doi.org/10.1016/j.jhydrol.2011.11.045

Kang, K., & Lee, J. H. (2014). Hydrologic modelling of the effect of snowmelt and temperature on a mountainous watershed. Journal of Earth System Science, 123, 705–713. https://doi.org/10.1007/s12040-014-0423-2

Kumar, M., Marks, D., Dozier, J., Reba, M., & Winstral, A. (2013). Evaluation of distributed hydrologic impacts of temperature-index and energy-based snow models. Advances in Water Resources, 56, 77–89. https://doi.org/10.1016/j.advwatres.2013.03.006

Kumar, N., Singh, S. K., Srivastava, P. K., & Narsimlu, B. (2017). SWAT model calibration and uncertainty analysis for streamflow prediction of the Tons River Basin, India, using Sequential Uncertainty Fitting (SUFI-2) algorithm. Modeling Earth Systems and Environment, 3, 1–13. https://doi.org/10.1007/s40808-017-0306-z

Malik, M. A., Dar, A. Q., & Jain, M. K. (2021). Modelling streamflow using the SWAT model and multi-site calibration utilizing SUFI-2 of SWAT-CUP model for high altitude catchments, NW Himalaya’s. Modeling Earth Systems and Environment, 8(1), 1203–1213. https://doi.org/10.1007/s40808-021-01145-0

Mehan, S., Neupane, R.P., & Kumar, S. (2017). Coupling of SUFI 2 and SWAT for improving the simulation of streamflow in an agricultural watershed of South Dakota. Hydrology: Current Research, 8(3). https://doi.org/10.4172/2157-7587.1000280

Mengistu, A. G., van Rensburg, L. D., & Woyessa, Y. E. (2019). Techniques for calibration and validation of SWAT model in data scarce arid and semi-arid catchments in South Africa. Journal of Hydrology: Regional Studies, 25, 100621.

Moriasi, D.N., Arnold, J.G., Van Liew, M.W., Bingner, R.L., Harmel, R.D., & Veith, T.L. (2007). Model evaluation guidelines for systematic quantification of accuracy in watershed simulations. Transactions of the ASABE, 50(3), 885–900. https://doi.org/10.13031/2013.23153

Nabi, S., Ahanger, M. A., & Dar, A. Q. (2022). Employing sensitivity analysis to catchments having scanty data. Environmental Science and Pollution Research, 29(45), 68118–68131. https://doi.org/10.1007/s11356-022-20514-5

Naqash, T. B., Ahanger, M. A., & Maity, R. (2022). Multi-site hydrometeorological simulation of streamflow for upper Jhelum basin in northwest Himalayas using HEC–HMS soil moisture accounting algorithm. Modeling Earth Systems and Environment, 9(1), 431–455. https://doi.org/10.1007/s40808-022-01510-7

Neitsch, S.L., Arnold, J.G., Kiniry, J.R., & Williams, J.R. (2011). Soil and water assessment tool: theoretical documentation version 2009. Texas Water Resources Institute

Neupane, R. P., Yao, J., White, J. D., & Alexander, S. E. (2015). Projected hydrologic changes in monsoon-dominated Himalaya Mountain basins with changing climate and deforestation. Journal of Hydrology, 525, 216–230. https://doi.org/10.1016/j.jhydrol.2015.03.048

Odusanya, A. E., Mehdi, B., Schürz, C., Oke, A. O., Awokola, O. S., Awomeso, J. A., & Schulz, K. (2019). Multi-site calibration and validation of SWAT with satellite-based evapotranspiration in a data-sparse catchment in southwestern Nigeria. Hydrology and Earth System Sciences, 23(2), 1113–1144. https://doi.org/10.5194/hess-23-1113-2019

Raazia, S., & Rasool, S. (2017). Modelling of areal coverage of snow of an ungauged catchment with ArcSWAT. British Journal of Environment and Climate Change, 7(3), 182–194.

Rahman, K., Maringanti, C., Beniston, M., Widmer, F., Abbaspour, K., & Lehmann, A. (2013). Streamflow modeling in a highly managed mountainous glacier watershed using SWAT: the upper Rhone River watershed case in Switzerland. Water Resource Management, 27, 323–339. https://doi.org/10.1007/s11269-012-0188-9

Rashid, I., Romshoo, S. A., Chaturvedi, R. K., Ravindranath, N. H., Sukumar, R., Jayaraman, M., Lakshmi, T. V., & Sharma, J. (2015). Projected climate change impacts on vegetation distribution over Kashmir Himalayas. Climatic Change, 132, 601–613. https://doi.org/10.1007/S10584-015-1456-5/TABLES/3

Romshoo, S. A., Rafiq, M., & Rashid, I. (2018). Spatio-temporal variation of land surface temperature and temperature lapse rate over mountainous Kashmir Himalaya. Journal of Mountain Science, 15(3), 563–576.

Rostamian, R., Jaleh, A., Afyuni, M., Mousavi, S. F., Heidarpour, M., Jalalian, A., & Abbaspour, K. C. (2008). Application of a SWAT model for estimating runoff and sediment in two mountainous basins in central Iran. Hydrological Sciences Journal, 53, 977–988. https://doi.org/10.1623/hysj.53.5.977

Saltelli, A., Chan, K., & Marian, S. (2000). Sensitivity analysis. Wiley.

Santhi, C., Arnold, J. G., Williams, J. R., Hauck, L. M., & Dugas, W. A. (2001). Application of a watershed model to evaluate management effects on point and nonpoint source pollution. Transactions of the ASABE, 44(6), 1559. https://doi.org/10.13031/2013.7041

Shafiq, M. U., Rasool, R., Ahmed, P., & Dimri, A. P. (2019). Temperature and precipitation trends in Kashmir valley, North Western Himalayas. Theoretical and Applied Climatology, 135(1–2), 293–304. https://doi.org/10.1007/S00704-018-2377-9

Shumway, R. H., & Stoffer, D. S. (2017). ARIMA models. In: Time series analysis and its applications: Springer Texts in Statistics. Springer, Cham, pp 75–163. https://doi.org/10.1007/978-3-319-52452-8_3

Sloboda, M., & Swayne, D. (2011). Autocalibration of environmental process models using a PAC learning hypothesis. In Environmental software systems. Frameworks of environment; IFIP Advances in information and communication technology, vol 359. Springer, Berlin/Heidelberg, pp 528–534. https://doi.org/10.1007/978-3-642-22285-6_57

Sudheer, K. P., Chaubey, I., Garg, V., & Migliaccio, K. W. (2007). Impact of time-scale of the calibration objective function on the performance of watershed models. Hydrological Process, 21(25), 3409–3419. https://doi.org/10.1002/hyp.6555

Troin, M., & Caya, D. (2014). Evaluating the SWAT’s snow hydrology over a Northern Quebec watershed. Hydrological Process, 28(4), 1858–1873. https://doi.org/10.1002/hyp.9730

Van Griensven, A., Meixner, T., Grunwald, S., Bishop, T., Diluzio, M., & Srinivasan, R. (2006). A global sensitivity analysis tool for the parameters of multi-variable catchment models. Journal of Hydrology, 324(1–4), 10–23. https://doi.org/10.1016/j.jhydrol.2005.09.008

Van Griensven, A., Meixner, T., Srinivasan, R., & Grunwald, S. (2008). Fit-for-purpose analysis of uncertainty using split-sampling evaluations. Hydrological Sciences Journal, 53(5), 1090–1103. https://doi.org/10.1623/hysj.53.5.1090

Williams, J. R., Arnold, J. G., Kiniry, J. R., Gassman, P. W., & Green, C. H. (2008). History of model development at Temple. Texas. Hydrological Sciences Journal, 53(5), 948–960. https://doi.org/10.1623/hysj.53.5.948

Winchell, M., Srinivasan, R., Luzio, D.M., & Arnold, J. (2010). ArcSWAT interface for SWAT 2009. User’s Guide, Texas Agricultural Experiment Station (Texas) and USDA Agricultural Research Service (Texas), Temple (Texas), 102–122

Xuan, Y., Cluckie, I., & Wang, Y. (2009). Uncertainty analysis of hydrological ensemble forecasts in a distributed model utilizing short-range rainfall prediction. Hydrology and Earth System Sciences, 13(3), 293–303. https://doi.org/10.5194/hess-13-293-2009

Yue, S., & Wang, C. Y. (2002). Applicability of prewhitening to eliminate the influence of serial correlation on the Mann-Kendall test. Water Resources Research, 38(6), 4–1. https://doi.org/10.1029/2001WR000861

Zaz, S. N., Romshoo, S. A., Krishnamoorthy, R. T., & Viswanadhapalli, Y. (2019). Analyses of temperature and precipitation in the Indian Jammu and Kashmir region for the 1980–2016 period: Implications for remote influence and extreme events. Atmospheric Chemistry and Physics, 19(1), 15–37. https://doi.org/10.5194/ACP-19-15-2019

Acknowledgements

The authors highly acknowledge the help and support rendered by the Department of Ecology, Environment & Remote Sensing, Kashmir, Jammu and Kashmir, and Department of Civil Engineering, National Institute of Technology, Srinagar, Jammu and Kashmir.

Author information

Authors and Affiliations

Contributions

Taroob Bashir Naqash, Manzoor Ahmad Ahanger and Rajib Maity conceived the idea, Taroob Bashir Naqash collected all data, applied the model and statistics, Taroob Bashir Naqash drafted the manuscript and Manzoor Ahmad Ahanger and Rajib Maity did the proofreading.

Corresponding author

Ethics declarations

Ethics approval

All authors have read, understood, and complied as applicable with the statement on “Ethical responsibilities of Authors” as found in the Instructions for Authors and are aware that with minor exceptions no changes can be made to authorship once the paper is submitted.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Naqash, T.B., Ahanger, M.A. & Maity, R. Impacts of hydrometeorological factors on discharge simulation in the North West Himalayas: a SUFI-2 algorithm-driven investigation using the SWAT model. Environ Monit Assess 195, 1366 (2023). https://doi.org/10.1007/s10661-023-11916-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10661-023-11916-0